- Preface

- I File systems and mounting

- II Logical volumes (LVM)

- 5 LVM configuration

- 5.1 Understanding the logical volume manager

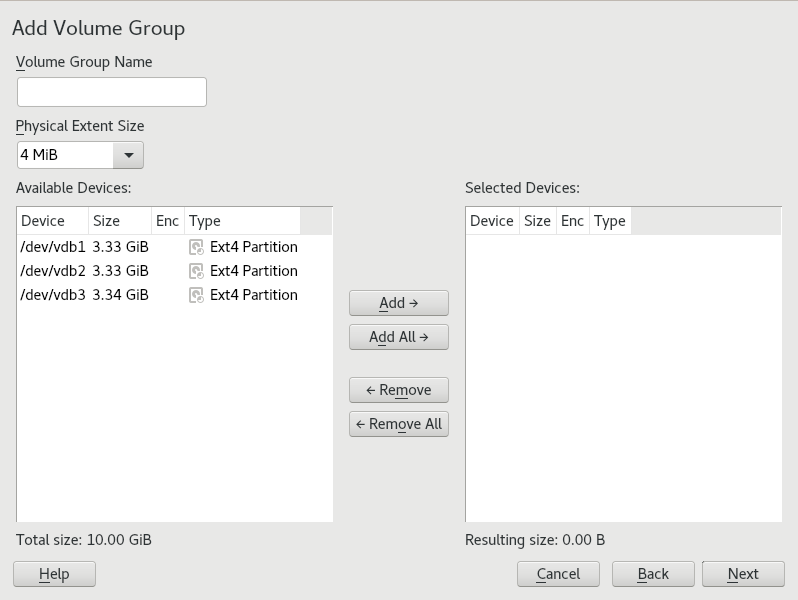

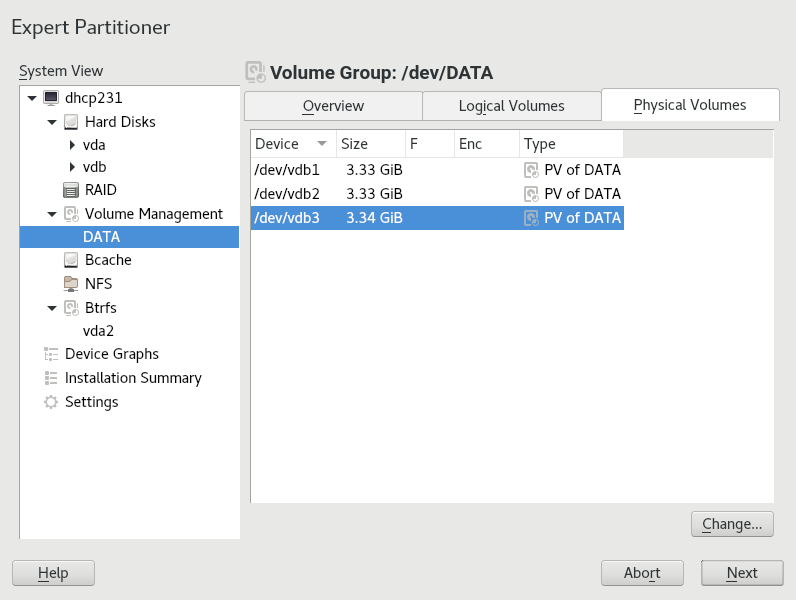

- 5.2 Creating volume groups

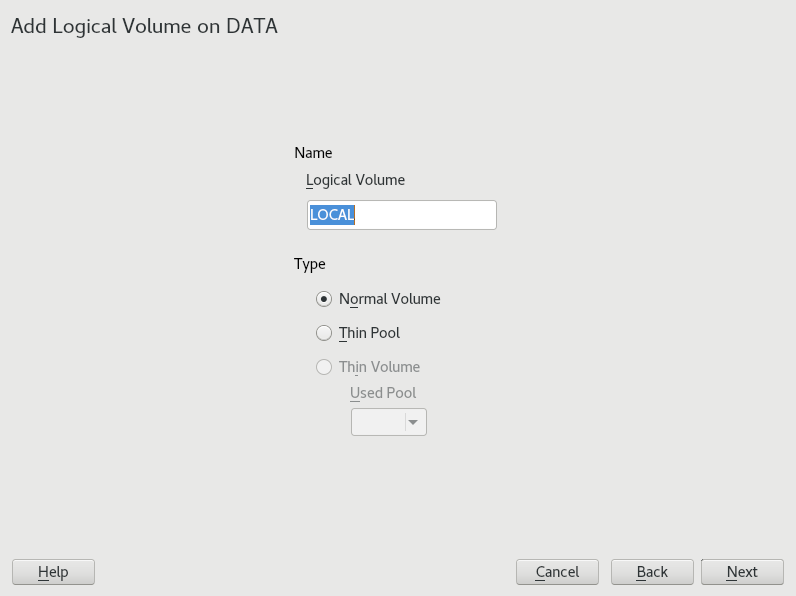

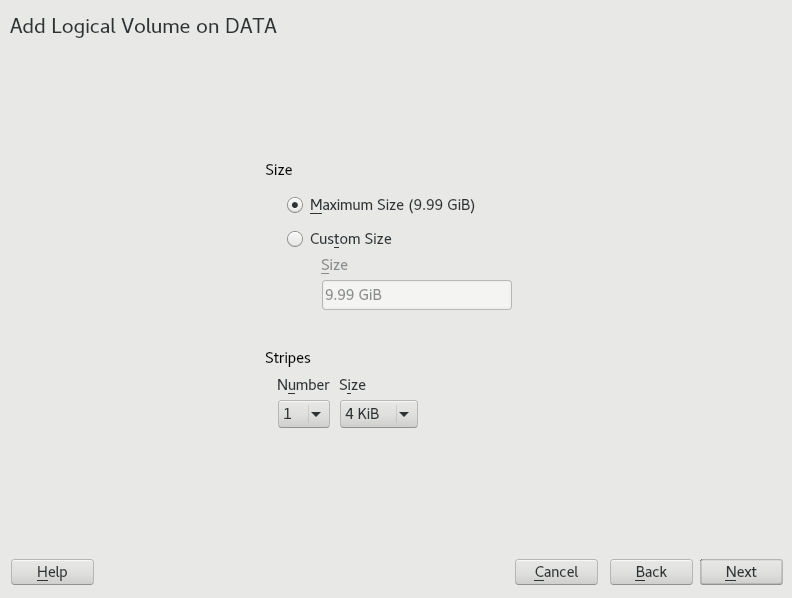

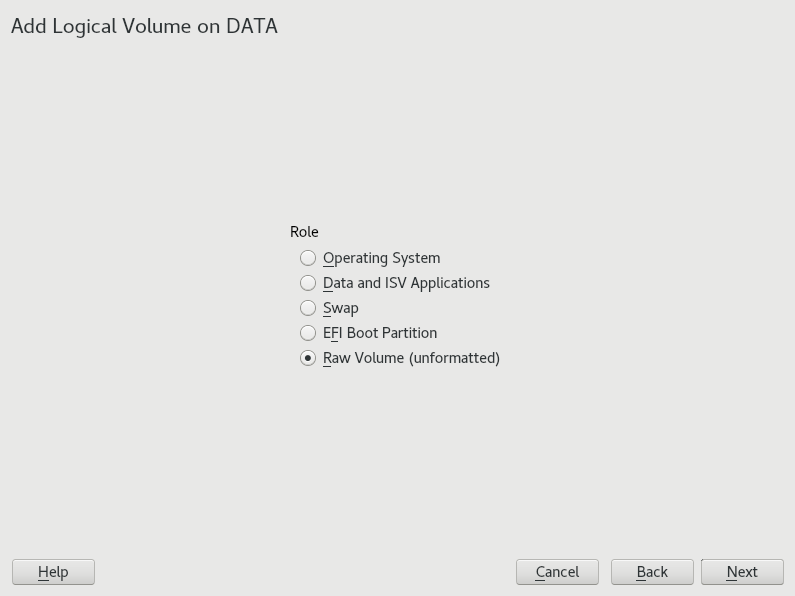

- 5.3 Creating logical volumes

- 5.4 Automatically activating non-root LVM volume groups

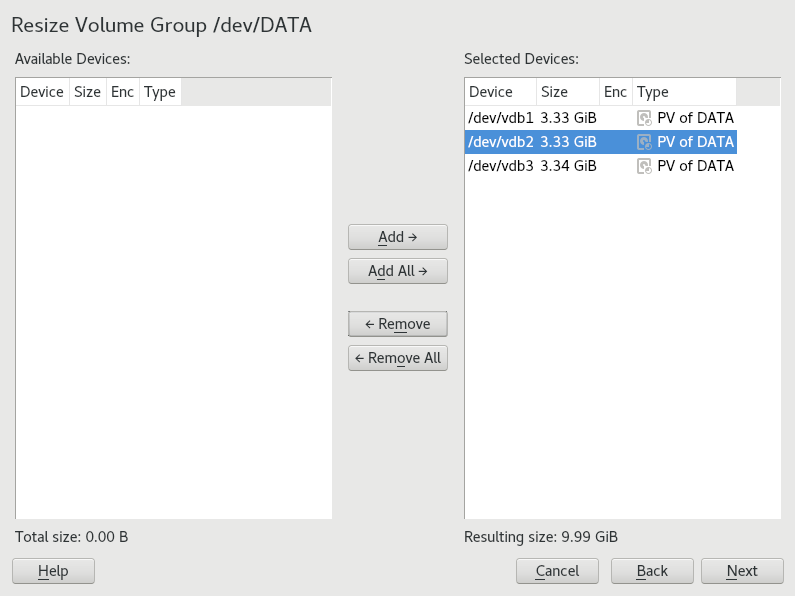

- 5.5 Resizing an existing volume group

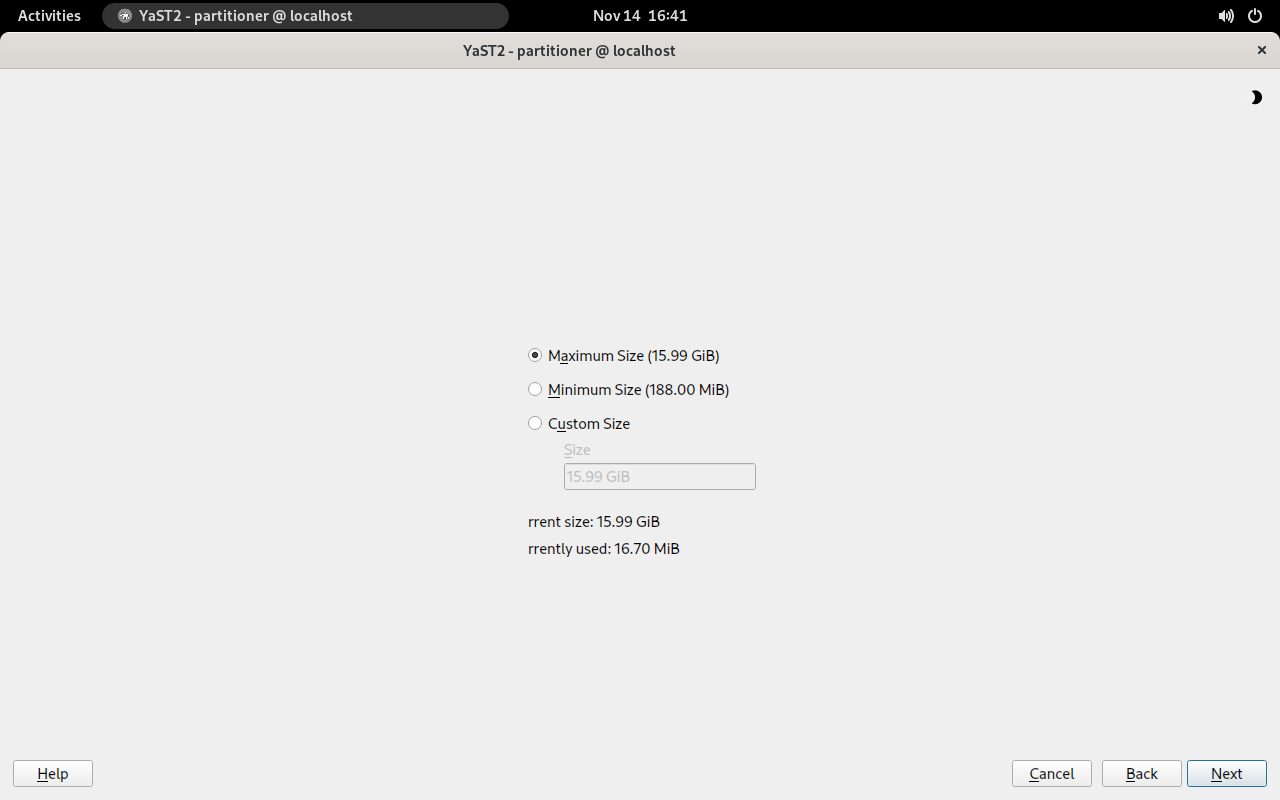

- 5.6 Resizing a logical volume

- 5.7 Deleting a volume group or a logical volume

- 5.8 Disabling LVM on boot

- 5.9 Using LVM commands

- 5.10 Tagging LVM2 storage objects

- 6 LVM volume snapshots

- 5 LVM configuration

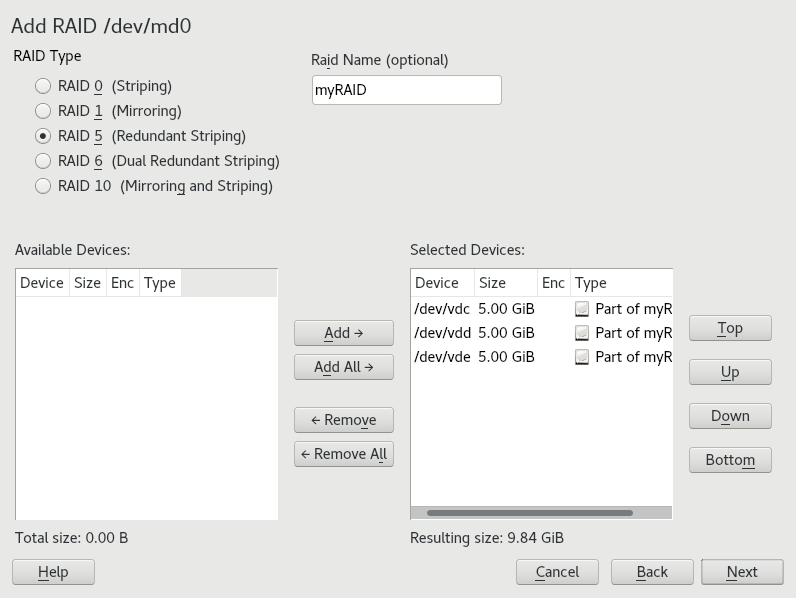

- III Software RAID

- IV Network storage

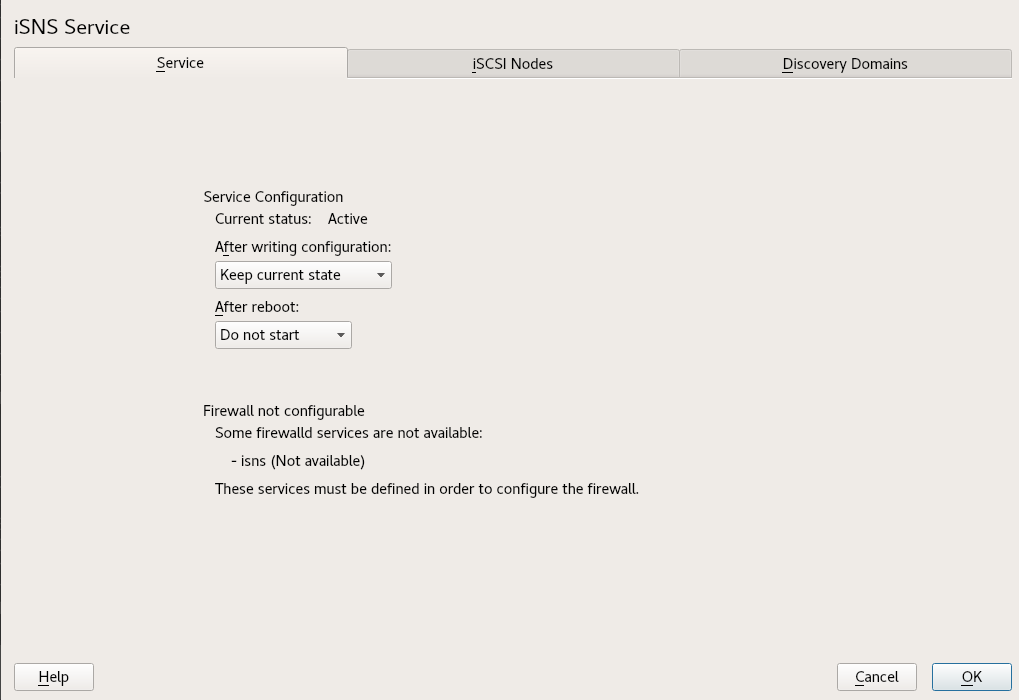

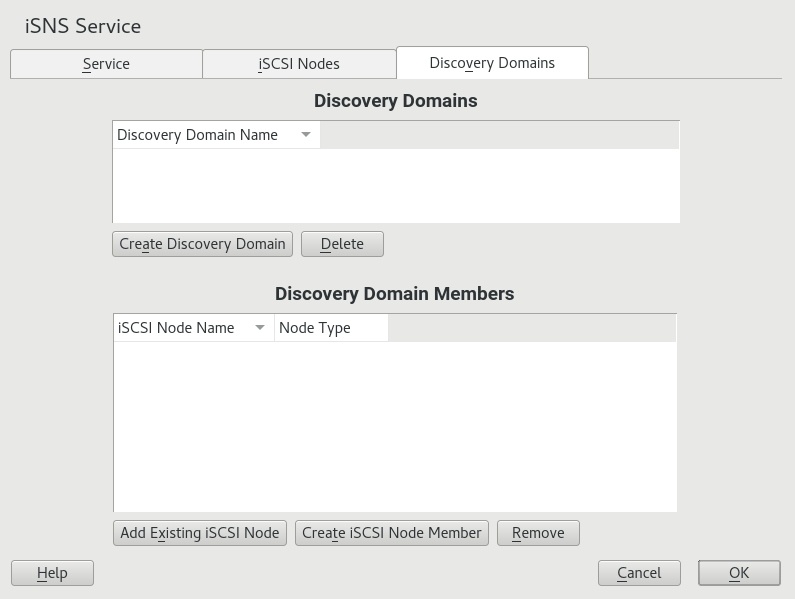

- 14 iSNS for Linux

- 15 Mass storage over IP networks: iSCSI

- 15.1 Installing the iSCSI LIO target server and iSCSI initiator

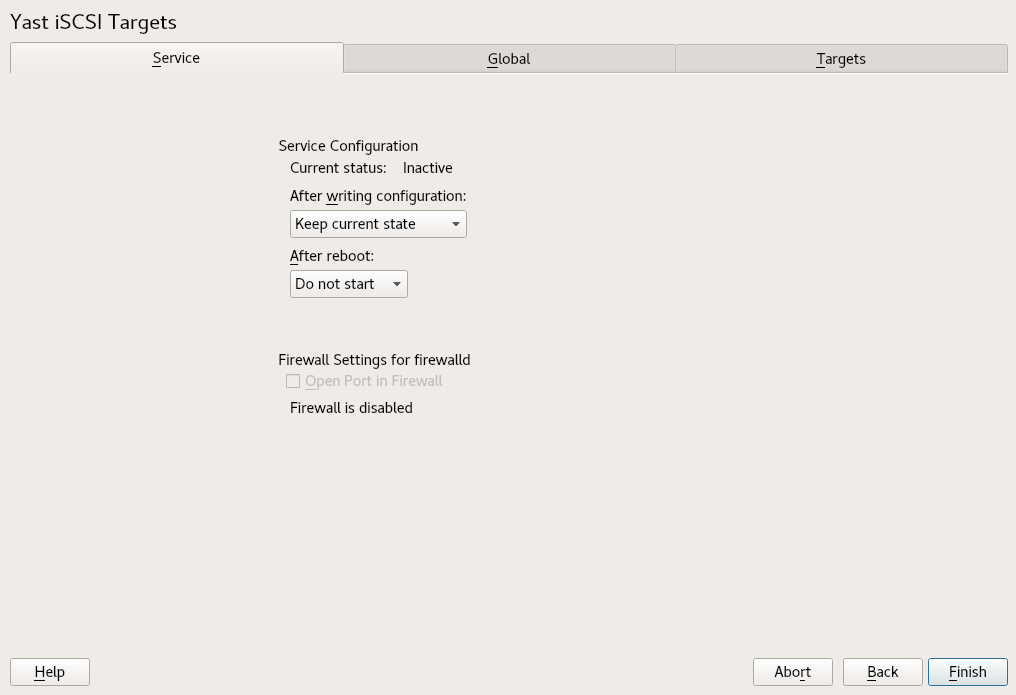

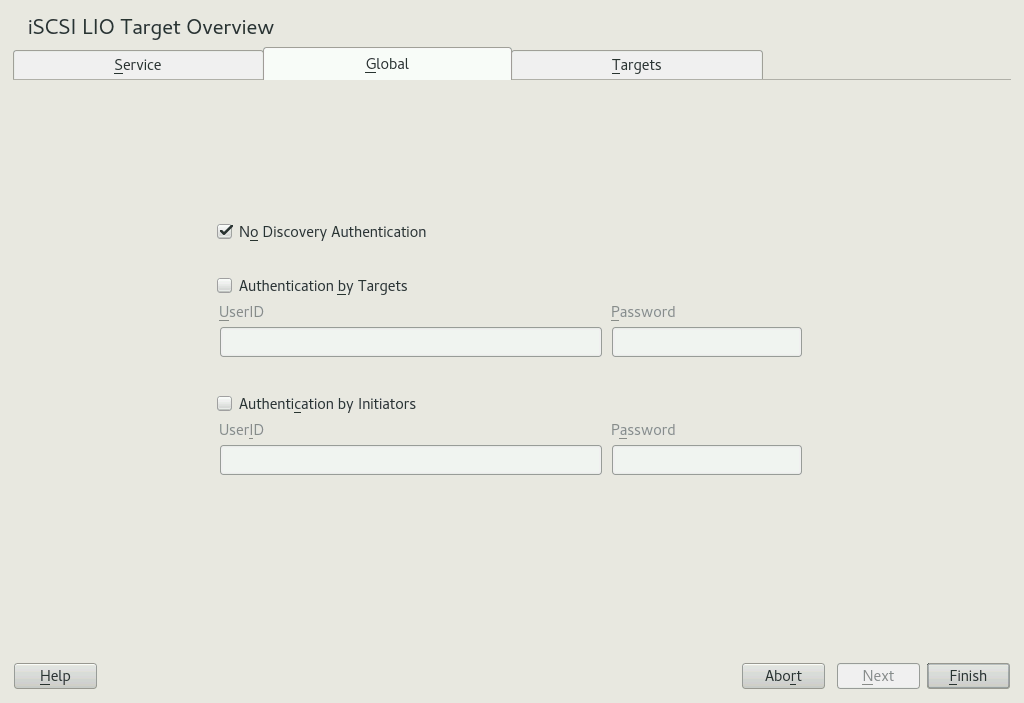

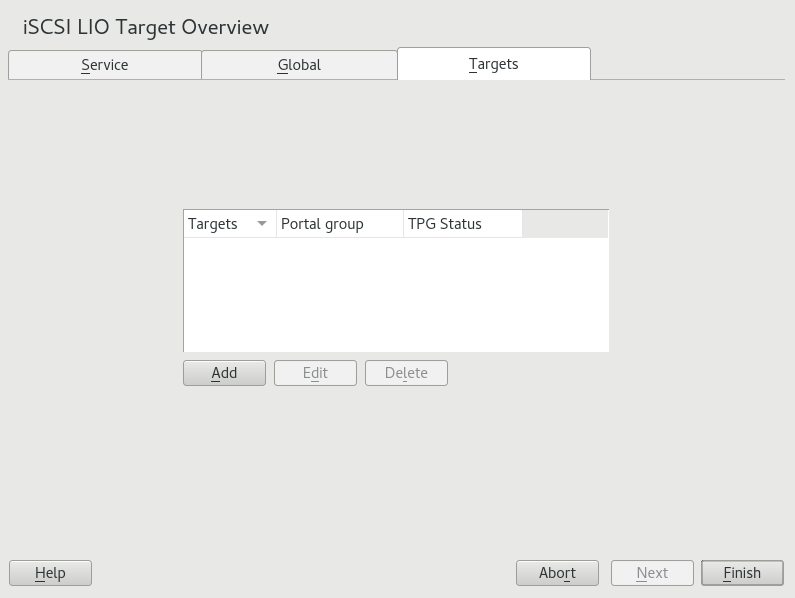

- 15.2 Setting up an iSCSI LIO target server

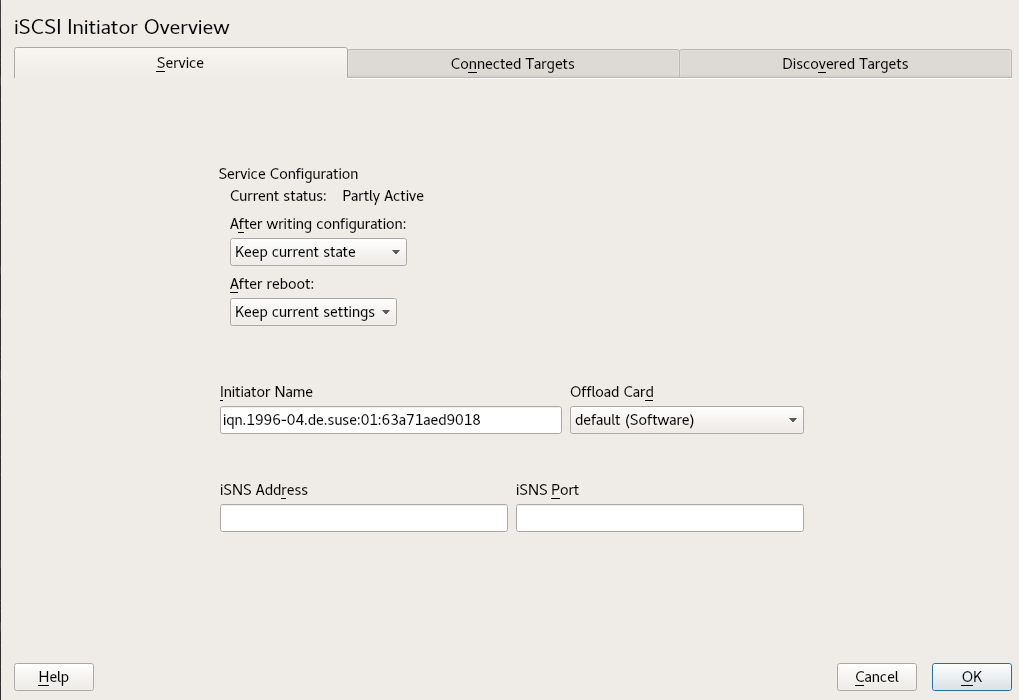

- 15.3 Configuring iSCSI initiator

- 15.4 Setting up software targets using targetcli-fb

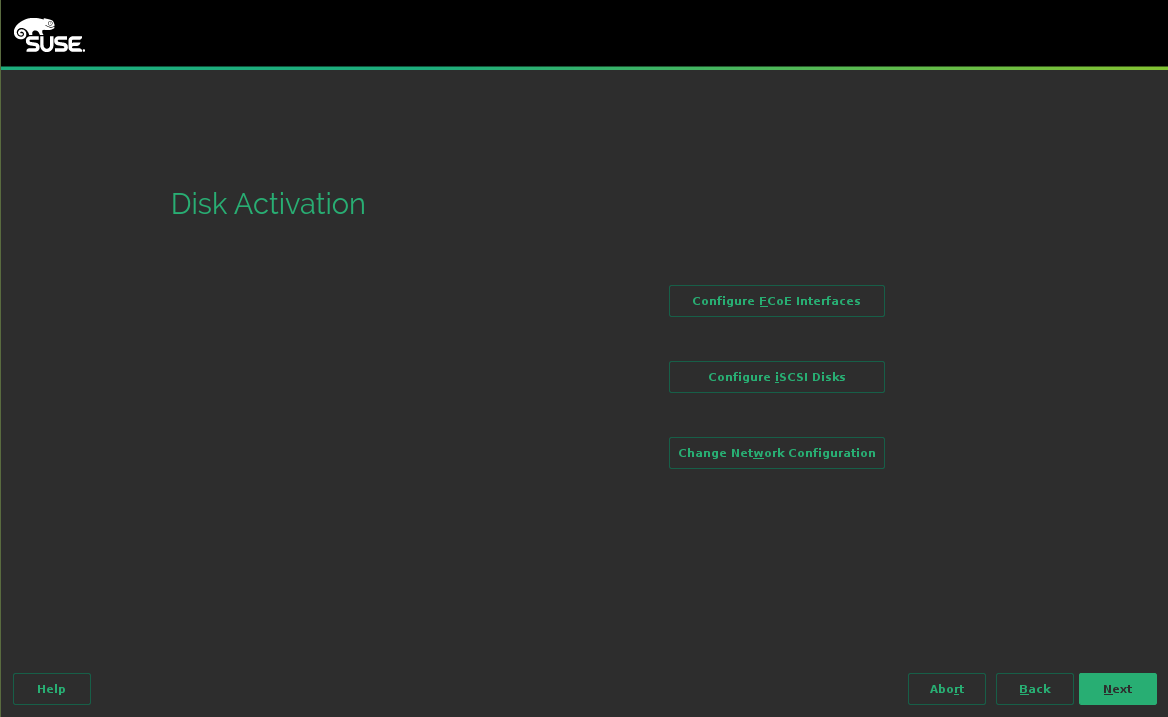

- 15.5 Using iSCSI disks when installing

- 15.6 Troubleshooting iSCSI

- 15.7 iSCSI LIO target terminology

- 15.8 More information

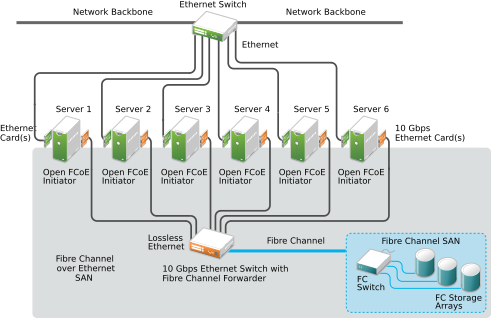

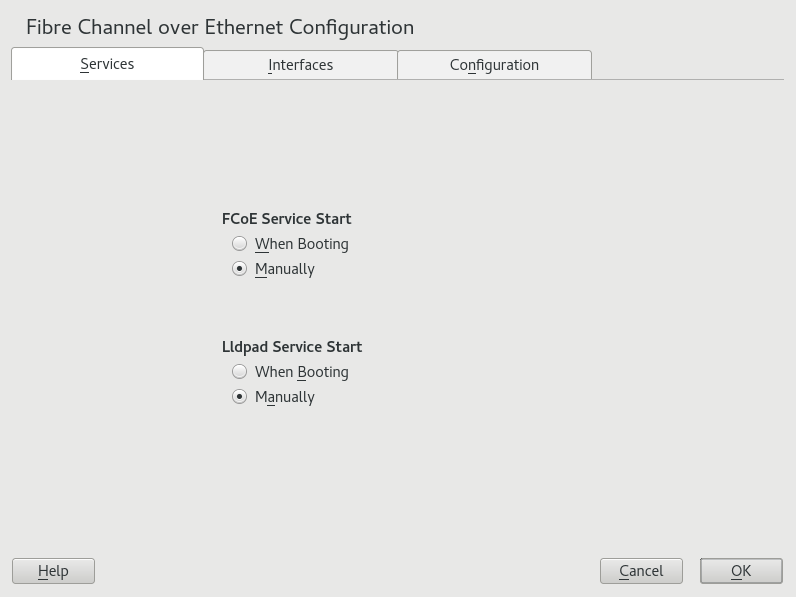

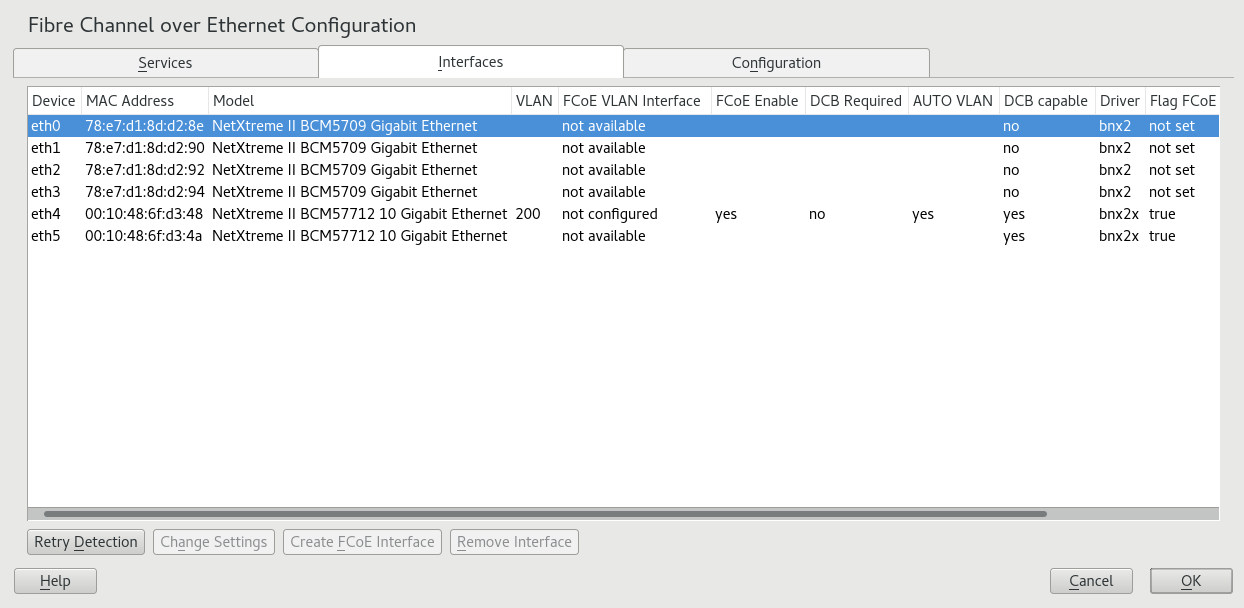

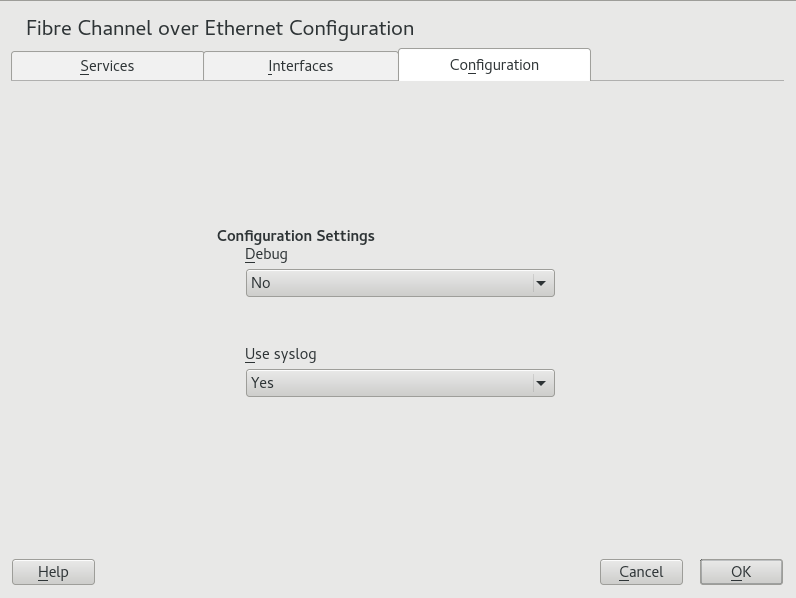

- 16 Fibre Channel storage over Ethernet networks: FCoE

- 17 NVMe-oF

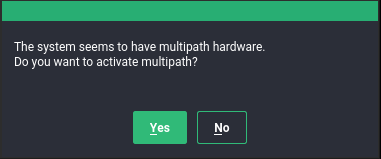

- 18 Managing multipath I/O for devices

- 18.1 Understanding multipath I/O

- 18.2 Hardware support

- 18.3 Planning for multipathing

- 18.4 Installing SUSE Linux Enterprise Server on multipath systems

- 18.5 Updating SLE on multipath systems

- 18.6 Multipath management tools

- 18.7 Configuring the system for multipathing

- 18.8 Multipath configuration

- 18.9 Configuring policies for failover, queuing, and failback

- 18.10 Configuring path grouping and priorities

- 18.11 Selecting devices for multipathing

- 18.12 Multipath device names and WWIDs

- 18.13 Miscellaneous options

- 18.14 Best practice

- 18.15 Troubleshooting MPIO

- 19 Sharing file systems with NFS

- 20 Samba

- 21 On-demand mounting with autofs

- A GNU licenses

- 1.1 Enabling Btrfs quotas

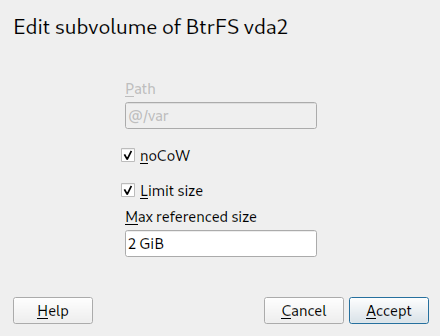

- 1.2 Setting quota for a subvolume

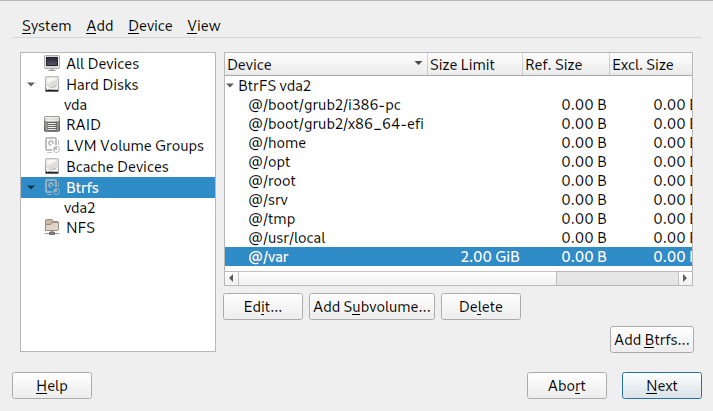

- 1.3 List of subvolumes for a device

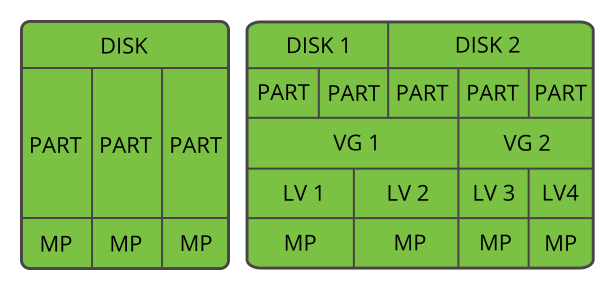

- 5.1 Physical partitioning versus LVM

- 5.2 Physical volumes in the volume group named DATA

- 7.1 Example RAID 5 configuration

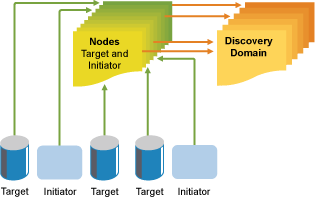

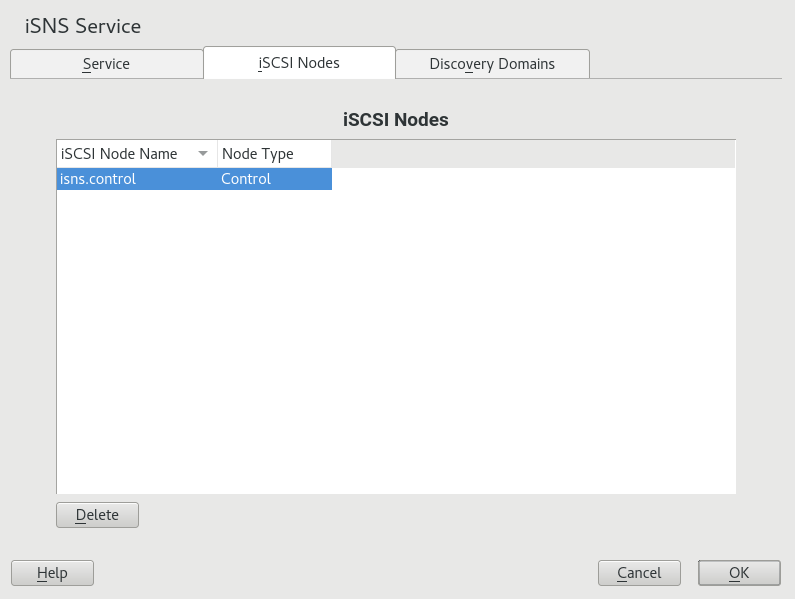

- 14.1 iSNS discovery domains

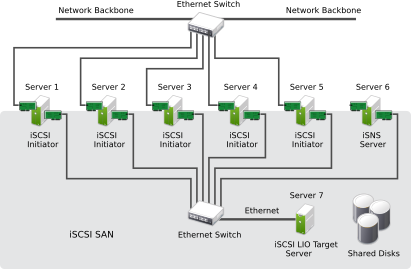

- 15.1 iSCSI SAN with an iSNS server

- 16.1 Open Fibre channel over ethernet SAN

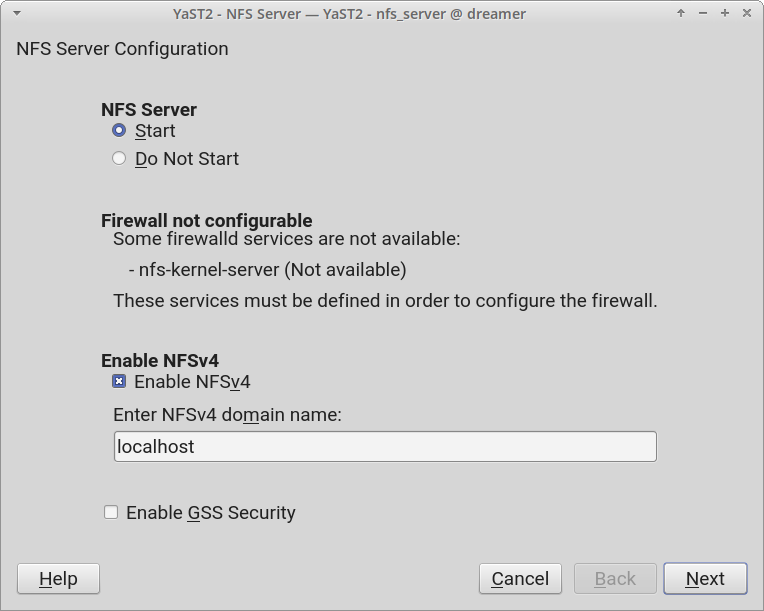

- 19.1 NFS server configuration tool

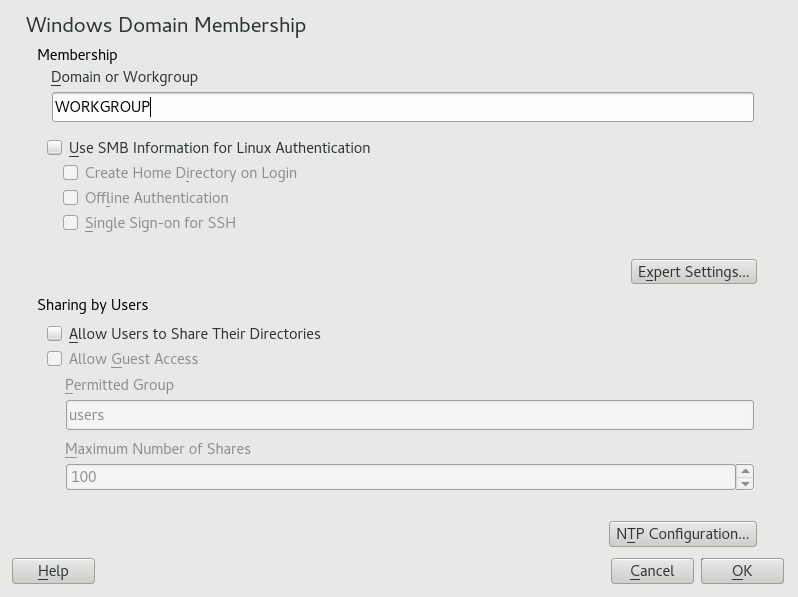

- 20.1 Determining Windows domain membership

- 20.2 Windows Explorer dialog

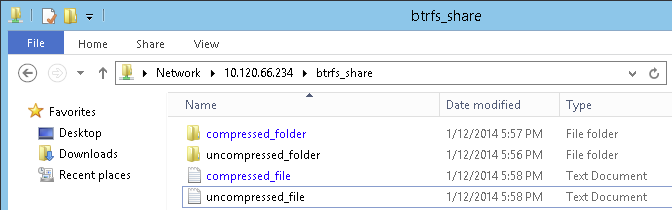

- 20.3 Windows Explorer directory listing with compressed files

- 20.4 Adding a new Samba share with snapshots enabled

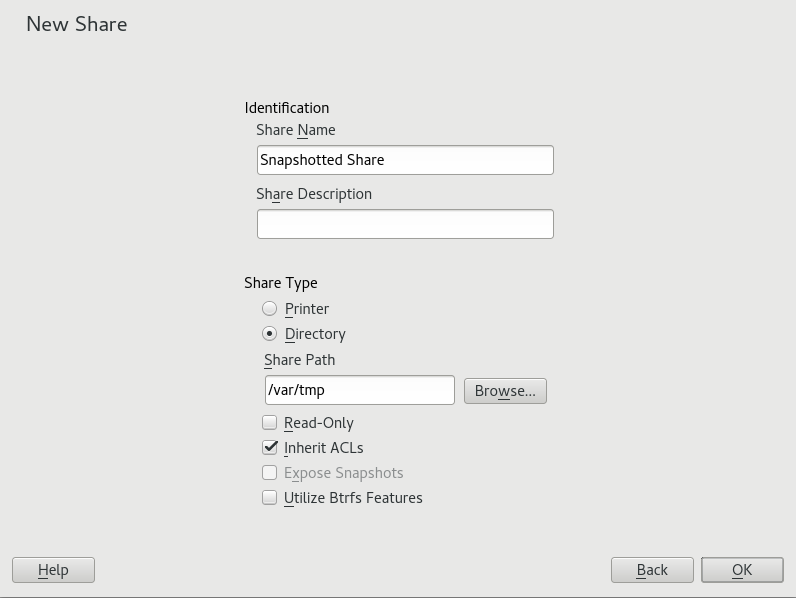

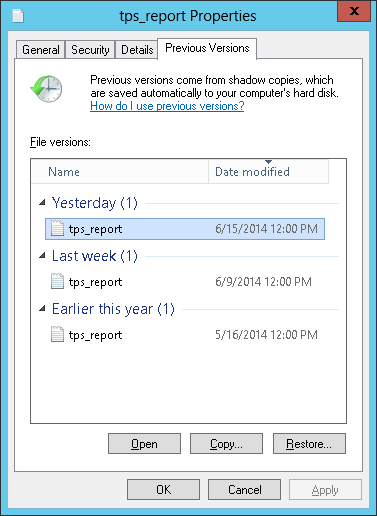

- 20.5 The tab in Windows explorer

- 1.1 File system types in Linux

- 1.2 Maximum sizes of files and file systems (on-disk format, 4 KiB block size)

- 1.3 Storage limitations

- 2.1 File system support for resizing

- 7.1 Comparison of RAID 5 and RAID 6

- 9.1 Nested RAID levels

- 9.2 Scenario for creating a RAID 10 (1+0) by nesting

- 9.3 Scenario for creating a RAID 10 (0+1) by nesting

- 9.4 Complex RAID 10 compared to nested RAID 10

- 9.5 Scenario for creating a RAID 10 using mdadm

- 11.1 Tasks involved in resizing a RAID

- 11.2 Scenario for increasing the size of component partitions

- 12.1 Translation between non-SES-2 patterns and SES-2 patterns

Copyright © 2006–2024 SUSE LLC and contributors. All rights reserved.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”.

For SUSE trademarks, see https://www.suse.com/company/legal/. All third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks.

All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof.

Preface #

1 Available documentation #

- Online documentation

Our documentation is available online at https://documentation.suse.com. Browse or download the documentation in various formats.

Note: Latest updatesThe latest updates are usually available in the English-language version of this documentation.

- SUSE Knowledgebase

If you have run into an issue, also check out the Technical Information Documents (TIDs) that are available online at https://www.suse.com/support/kb/. Search the SUSE Knowledgebase for known solutions driven by customer need.

- Release notes

For release notes, see https://www.suse.com/releasenotes/.

- In your system

For offline use, the release notes are also available under

/usr/share/doc/release-noteson your system. The documentation for individual packages is available at/usr/share/doc/packages.Many commands are also described in their manual pages. To view them, run

man, followed by a specific command name. If themancommand is not installed on your system, install it withsudo zypper install man.

2 Improving the documentation #

Your feedback and contributions to this documentation are welcome. The following channels for giving feedback are available:

- Service requests and support

For services and support options available for your product, see https://www.suse.com/support/.

To open a service request, you need a SUSE subscription registered at SUSE Customer Center. Go to https://scc.suse.com/support/requests, log in, and click .

- Bug reports

Report issues with the documentation at https://bugzilla.suse.com/.

To simplify this process, click the icon next to a headline in the HTML version of this document. This preselects the right product and category in Bugzilla and adds a link to the current section. You can start typing your bug report right away.

A Bugzilla account is required.

- Contributions

To contribute to this documentation, click the icon next to a headline in the HTML version of this document. This will take you to the source code on GitHub, where you can open a pull request.

A GitHub account is required.

Note: only available for EnglishThe icons are only available for the English version of each document. For all other languages, use the icons instead.

For more information about the documentation environment used for this documentation, see the repository's README.

You can also report errors and send feedback concerning the documentation to <doc-team@suse.com>. Include the document title, the product version, and the publication date of the document. Additionally, include the relevant section number and title (or provide the URL) and provide a concise description of the problem.

3 Documentation conventions #

The following notices and typographic conventions are used in this document:

/etc/passwd: Directory names and file namesPLACEHOLDER: Replace PLACEHOLDER with the actual value

PATH: An environment variablels,--help: Commands, options, and parametersuser: The name of a user or grouppackage_name: The name of a software package

Alt, Alt–F1: A key to press or a key combination. Keys are shown in uppercase as on a keyboard.

, › : menu items, buttons

AMD/Intel This paragraph is only relevant for the AMD64/Intel 64 architectures. The arrows mark the beginning and the end of the text block.

IBM Z, POWER This paragraph is only relevant for the architectures

IBM ZandPOWER. The arrows mark the beginning and the end of the text block.Chapter 1, “Example chapter”: A cross-reference to another chapter in this guide.

Commands that must be run with

rootprivileges. You can also prefix these commands with thesudocommand to run them as a non-privileged user:#command>sudocommandCommands that can be run by non-privileged users:

>commandCommands can be split into two or multiple lines by a backslash character (

\) at the end of a line. The backslash informs the shell that the command invocation will continue after the line's end:>echoa b \ c dA code block that shows both the command (preceded by a prompt) and the respective output returned by the shell:

>commandoutputNotices

Warning: Warning noticeVital information you must be aware of before proceeding. Warns you about security issues, potential loss of data, damage to hardware, or physical hazards.

Important: Important noticeImportant information you should be aware of before proceeding.

Note: Note noticeAdditional information, for example about differences in software versions.

Tip: Tip noticeHelpful information, like a guideline or a piece of practical advice.

Compact Notices

Additional information, for example about differences in software versions.

Helpful information, like a guideline or a piece of practical advice.

4 Support #

Find the support statement for SUSE Linux Enterprise Server and general information about technology previews below. For details about the product lifecycle, see https://www.suse.com/lifecycle.

If you are entitled to support, find details on how to collect information for a support ticket at https://documentation.suse.com/sles-15/html/SLES-all/cha-adm-support.html.

4.1 Support statement for SUSE Linux Enterprise Server #

To receive support, you need an appropriate subscription with SUSE. To view the specific support offers available to you, go to https://www.suse.com/support/ and select your product.

The support levels are defined as follows:

- L1

Problem determination, which means technical support designed to provide compatibility information, usage support, ongoing maintenance, information gathering and basic troubleshooting using available documentation.

- L2

Problem isolation, which means technical support designed to analyze data, reproduce customer problems, isolate a problem area and provide a resolution for problems not resolved by Level 1 or prepare for Level 3.

- L3

Problem resolution, which means technical support designed to resolve problems by engaging engineering to resolve product defects which have been identified by Level 2 Support.

For contracted customers and partners, SUSE Linux Enterprise Server is delivered with L3 support for all packages, except for the following:

Technology previews.

Sound, graphics, fonts, and artwork.

Packages that require an additional customer contract.

Some packages shipped as part of the module Workstation Extension are L2-supported only.

Packages with names ending in -devel (containing header files and similar developer resources) will only be supported together with their main packages.

SUSE will only support the usage of original packages. That is, packages that are unchanged and not recompiled.

4.2 Technology previews #

Technology previews are packages, stacks, or features delivered by SUSE to provide glimpses into upcoming innovations. Technology previews are included for your convenience to give you a chance to test new technologies within your environment. We would appreciate your feedback. If you test a technology preview, please contact your SUSE representative and let them know about your experience and use cases. Your input is helpful for future development.

Technology previews have the following limitations:

Technology previews are still in development. Therefore, they may be functionally incomplete, unstable, or otherwise not suitable for production use.

Technology previews are not supported.

Technology previews may only be available for specific hardware architectures.

Details and functionality of technology previews are subject to change. As a result, upgrading to subsequent releases of a technology preview may be impossible and require a fresh installation.

SUSE may discover that a preview does not meet customer or market needs, or does not comply with enterprise standards. Technology previews can be removed from a product at any time. SUSE does not commit to providing a supported version of such technologies in the future.

For an overview of technology previews shipped with your product, see the release notes at https://www.suse.com/releasenotes.

Part I File systems and mounting #

- 1 Overview of file systems in Linux

SUSE Linux Enterprise Server ships with different file systems from which to choose, including Btrfs, Ext4, Ext3, Ext2 and XFS. Each file system has its own advantages and disadvantages. For a side-by-side feature comparison of the major file systems in SUSE Linux Enterprise Server, see https://www.suse.com/releasenotes/x86_64/SUSE-SLES/15-SP3/#file-system-comparison (Comparison of supported file systems). This chapter contains an overview of how these file systems work and what advantages they offer.

- 2 Resizing file systems

Resizing file systems—not to be confused with resizing partitions or volumes—can be used to make space available on physical volumes or to use additional space available on a physical volume.

- 3 Mounting storage devices

This section gives an overview which device identificators are used during mounting of devices, and provides details about mounting network storages.

- 4 Multi-tier caching for block device operations

A multi-tier cache is a replicated/distributed cache that consists of at least two tiers: one is represented by slower but cheaper rotational block devices (hard disks), while the other is more expensive but performs faster data operations (for example SSD flash disks).

1 Overview of file systems in Linux #

SUSE Linux Enterprise Server ships with different file systems from which to choose, including Btrfs, Ext4, Ext3, Ext2 and XFS. Each file system has its own advantages and disadvantages. For a side-by-side feature comparison of the major file systems in SUSE Linux Enterprise Server, see https://www.suse.com/releasenotes/x86_64/SUSE-SLES/15-SP3/#file-system-comparison (Comparison of supported file systems). This chapter contains an overview of how these file systems work and what advantages they offer.

With SUSE Linux Enterprise 12, Btrfs is the default file system for the operating system and XFS is the default for all other use cases. SUSE also continues to support the Ext family of file systems and OCFS2. By default, the Btrfs file system will be set up with subvolumes. Snapshots will be automatically enabled for the root file system using the snapper infrastructure. For more information about snapper, refer to Book “Administration Guide”, Chapter 7 “System recovery and snapshot management with Snapper”.

Professional high-performance setups might require a highly available storage system. To meet the requirements of high-performance clustering scenarios, SUSE Linux Enterprise Server includes OCFS2 (Oracle Cluster File System 2) and the Distributed Replicated Block Device (DRBD) in the High Availability add-on. These advanced storage systems are not covered in this guide. For information, see the Administration Guide for SUSE Linux Enterprise High Availability.

It is very important to remember that no file system best suits all kinds of applications. Each file system has its particular strengths and weaknesses, which must be taken into account. In addition, even the most sophisticated file system cannot replace a reasonable backup strategy.

The terms data integrity and data consistency, when used in this section, do not refer to the consistency of the user space data (the data your application writes to its files). Whether this data is consistent must be controlled by the application itself.

Unless stated otherwise in this section, all the steps required to set up or change partitions and file systems can be performed by using the YaST Partitioner (which is also strongly recommended). For information, see Book “Deployment Guide”, Chapter 10 “”.

1.1 Terminology #

- metadata

A data structure that is internal to the file system. It ensures that all of the on-disk data is properly organized and accessible. Almost every file system has its own structure of metadata, which is one reason the file systems show different performance characteristics. It is extremely important to maintain metadata intact, because otherwise all data on the file system could become inaccessible.

- inode

A data structure on a file system that contains a variety of information about a file, including size, number of links, pointers to the disk blocks where the file contents are actually stored, and date and time of creation, modification, and access.

- journal

In the context of a file system, a journal is an on-disk structure containing a type of log in which the file system stores what it is about to change in the file system’s metadata. Journaling greatly reduces the recovery time of a file system because it has no need for the lengthy search process that checks the entire file system at system start-up. Instead, only the journal is replayed.

1.2 Btrfs #

Btrfs is a copy-on-write (COW) file system developed by Chris Mason. It is based on COW-friendly B-trees developed by Ohad Rodeh. Btrfs is a logging-style file system. Instead of journaling the block changes, it writes them in a new location, then links the change in. Until the last write, the new changes are not committed.

1.2.1 Key features #

Btrfs provides fault tolerance, repair, and easy management features, such as the following:

Writable snapshots that allow you to easily roll back your system if needed after applying updates, or to back up files.

Subvolume support: Btrfs creates a default subvolume in its assigned pool of space. It allows you to create additional subvolumes that act as individual file systems within the same pool of space. The number of subvolumes is limited only by the space allocated to the pool.

The online check and repair functionality

scrubis available as part of the Btrfs command line tools. It verifies the integrity of data and metadata, assuming the tree structure is fine. You can run scrub periodically on a mounted file system; it runs as a background process during normal operation.Different RAID levels for metadata and user data.

Different checksums for metadata and user data to improve error detection.

Integration with Linux Logical Volume Manager (LVM) storage objects.

Integration with the YaST Partitioner and AutoYaST on SUSE Linux Enterprise Server. This also includes creating a Btrfs file system on Multiple Devices (MD) and Device Mapper (DM) storage configurations.

Offline migration from existing Ext2, Ext3, and Ext4 file systems.

Boot loader support for

/boot, allowing to boot from a Btrfs partition.Multivolume Btrfs is supported in RAID0, RAID1, and RAID10 profiles in SUSE Linux Enterprise Server 15 SP3. Higher RAID levels are not supported yet, but might be enabled with a future service pack.

Use Btrfs commands to set up transparent compression.

1.2.2 The root file system setup on SUSE Linux Enterprise Server #

By default, SUSE Linux Enterprise Server is set up using Btrfs and snapshots for the root partition. Snapshots allow you to easily roll back your system if needed after applying updates, or to back up files. Snapshots can easily be managed with the SUSE Snapper infrastructure as explained in Book “Administration Guide”, Chapter 7 “System recovery and snapshot management with Snapper”. For general information about the SUSE Snapper project, see the Snapper Portal wiki at OpenSUSE.org (http://snapper.io).

When using a snapshot to roll back the system, it must be ensured that data such as user's home directories, Web and FTP server contents or log files do not get lost or overwritten during a roll back. This is achieved by using Btrfs subvolumes on the root file system. Subvolumes can be excluded from snapshots. The default root file system setup on SUSE Linux Enterprise Server as proposed by YaST during the installation contains the following subvolumes. They are excluded from snapshots for the reasons given below.

/boot/grub2/i386-pc,/boot/grub2/x86_64-efi,/boot/grub2/powerpc-ieee1275,/boot/grub2/s390x-emuA rollback of the boot loader configuration is not supported. The directories listed above are architecture-specific. The first two directories are present on AMD64/Intel 64 machines, the latter two on IBM POWER and on IBM Z, respectively.

/homeIf

/homedoes not reside on a separate partition, it is excluded to avoid data loss on rollbacks./optThird-party products usually get installed to

/opt. It is excluded to avoid uninstalling these applications on rollbacks./srvContains data for Web and FTP servers. It is excluded to avoid data loss on rollbacks.

/tmpAll directories containing temporary files and caches are excluded from snapshots.

/usr/localThis directory is used when manually installing software. It is excluded to avoid uninstalling these installations on rollbacks.

/varThis directory contains many variable files, including logs, temporary caches, third party products in

/var/opt, and is the default location for virtual machine images and databases. Therefore this subvolume is created to exclude all of this variable data from snapshots and has Copy-On-Write disabled.

Rollbacks are only supported by SUSE if you do not remove any of the preconfigured subvolumes. You may, however, add subvolumes using the YaST Partitioner.

1.2.2.1 Mounting compressed Btrfs file systems #

GRUB 2 cannot boot from lzo or zstd compressed root file systems. Use

zlib compression, or create a separate /boot

partition if you wish to use lzo or zstd compression for root.

The Btrfs file system supports transparent compression. While enabled, Btrfs compresses file data when written and uncompresses file data when read.

Use the compress or compress-force mount

option and select the compression algorithm, zstd,

lzo, or zlib (the default). zlib

compression has a higher compression ratio while lzo is faster and takes

less CPU load. The zstd algorithm offers a modern compromise, with

performance close to lzo and compression ratios similar to zlib.

For example:

# mount -o compress=zstd /dev/sdx /mnt

In case you create a file, write to it, and the compressed result is

greater or equal to the uncompressed size, Btrfs will skip compression for

future write operations forever for this file. If you do not like this

behavior, use the compress-force option. This can be

useful for files that have some initial non-compressible data.

Note, compression takes effect for new files only. Files that were written

without compression are not compressed when the file system is mounted

with the compress or compress-force

option. Furthermore, files with the nodatacow attribute

never get their extents compressed:

#chattr+C FILE#mount-o nodatacow /dev/sdx /mnt

In regard to encryption, this is independent from any compression. After you have written some data to this partition, print the details:

# btrfs filesystem show /mnt

btrfs filesystem show /mnt

Label: 'Test-Btrfs' uuid: 62f0c378-e93e-4aa1-9532-93c6b780749d

Total devices 1 FS bytes used 3.22MiB

devid 1 size 2.00GiB used 240.62MiB path /dev/sdb1

If you want this to be permanent, add the compress or

compress-force option into the

/etc/fstab configuration file. For example:

UUID=1a2b3c4d /home btrfs subvol=@/home,compress 0 01.2.2.2 Mounting subvolumes #

A system rollback from a snapshot on SUSE Linux Enterprise Server is performed by booting from the snapshot first. This allows you to check the snapshot while running before doing the rollback. Being able to boot from snapshots is achieved by mounting the subvolumes (which would normally not be necessary).

In addition to the subvolumes listed in

Section 1.2.2, “The root file system setup on SUSE Linux Enterprise Server” a volume named

@ exists. This is the default subvolume that will be

mounted as the root partition (/). The other

subvolumes will be mounted into this volume.

When booting from a snapshot, not the @ subvolume will

be used, but rather the snapshot. The parts of the file system included in

the snapshot will be mounted read-only as /. The

other subvolumes will be mounted writable into the snapshot. This state is

temporary by default: the previous configuration will be restored with the

next reboot. To make it permanent, execute the snapper

rollback command. This will make the snapshot that is currently

booted the new default subvolume, which will be used

after a reboot.

1.2.2.3 Checking for free space #

File system usage is usually checked by running the df

command. On a Btrfs file system, the output of df can

be misleading, because in addition to the space the raw data allocates, a

Btrfs file system also allocates and uses space for metadata.

Consequently a Btrfs file system may report being out of space even though it seems that plenty of space is still available. In that case, all space allocated for the metadata is used up. Use the following commands to check for used and available space on a Btrfs file system:

btrfs filesystem show>sudobtrfs filesystem show / Label: 'ROOT' uuid: 52011c5e-5711-42d8-8c50-718a005ec4b3 Total devices 1 FS bytes used 10.02GiB devid 1 size 20.02GiB used 13.78GiB path /dev/sda3Shows the total size of the file system and its usage. If these two values in the last line match, all space on the file system has been allocated.

btrfs filesystem df>sudobtrfs filesystem df / Data, single: total=13.00GiB, used=9.61GiB System, single: total=32.00MiB, used=16.00KiB Metadata, single: total=768.00MiB, used=421.36MiB GlobalReserve, single: total=144.00MiB, used=0.00BShows values for allocated (

total) and used space of the file system. If the values fortotalandusedfor the metadata are almost equal, all space for metadata has been allocated.btrfs filesystem usage>sudobtrfs filesystem usage / Overall: Device size: 20.02GiB Device allocated: 13.78GiB Device unallocated: 6.24GiB Device missing: 0.00B Used: 10.02GiB Free (estimated): 9.63GiB (min: 9.63GiB) Data ratio: 1.00 Metadata ratio: 1.00 Global reserve: 144.00MiB (used: 0.00B) Data Metadata System Id Path single single single Unallocated -- --------- -------- --------- -------- ----------- 1 /dev/sda3 13.00GiB 768.00MiB 32.00MiB 6.24GiB -- --------- -------- --------- -------- ----------- Total 13.00GiB 768.00MiB 32.00MiB 6.24GiB Used 9.61GiB 421.36MiB 16.00KiBShows data similar to that of the two previous commands combined.

For more information refer to man 8 btrfs-filesystem

and https://btrfs.wiki.kernel.org/index.php/FAQ.

1.2.3 Migration from ReiserFS and ext file systems to Btrfs #

You can migrate data volumes from existing ReiserFS or Ext (Ext2, Ext3, or

Ext4) to the Btrfs file system using the btrfs-convert

tool. This allows you to do an in-place conversion of unmounted (offline)

file systems, which may require a bootable install media with the

btrfs-convert tool. The tool constructs a Btrfs file

system within the free space of the original file system, directly linking

to the data contained in it. There must be enough free space on the device

to create the metadata or the conversion will fail. The original file

system will be intact and no free space will be occupied by the Btrfs file

system. The amount of space required is dependent on the content of the

file system and can vary based on the number of file system objects (such

as files, directories, extended attributes) contained in it. Since the data

is directly referenced, the amount of data on the file system does not

impact the space required for conversion, except for files that use tail

packing and are larger than about 2 KiB in size.

Converting the root file system to Btrfs is not supported and not

recommended. Automating such a conversion is not possible due to various

steps that need to be tailored to your specific setup—the process

requires a complex configuration to provide a correct rollback,

/boot must be on the root file system, and the system

must have specific subvolumes, etc. Either keep the existing file system

or re-install the whole system from scratch.

To convert the original file system to the Btrfs file system, run:

# btrfs-convert /path/to/device/etc/fstab

After the conversion, you need to ensure that any references to the

original file system in /etc/fstab have been adjusted

to indicate that the device contains a Btrfs file system.

When converted, the contents of the Btrfs file system will reflect the

contents of the source file system. The source file system will be

preserved until you remove the related read-only image created at

fs_root/reiserfs_saved/image.

The image file is effectively a 'snapshot' of the ReiserFS file system

prior to conversion and will not be modified as the Btrfs file system is

modified. To remove the image file, remove the

reiserfs_saved subvolume:

# btrfs subvolume delete fs_root/reiserfs_savedTo revert the file system back to the original one, use the following command:

# btrfs-convert -r /path/to/deviceAny changes you made to the file system while it was mounted as a Btrfs file system will be lost. A balance operation must not have been performed in the interim, or the file system will not be restored correctly.

1.2.4 Btrfs administration #

Btrfs is integrated in the YaST Partitioner and AutoYaST. It is available during the installation to allow you to set up a solution for the root file system. You can use the YaST Partitioner after the installation to view and manage Btrfs volumes.

Btrfs administration tools are provided in the

btrfsprogs package. For information about using Btrfs

commands, see the man 8 btrfs, man 8

btrfsck, and man 8 mkfs.btrfs commands. For

information about Btrfs features, see the Btrfs wiki

at http://btrfs.wiki.kernel.org.

1.2.5 Btrfs quota support for subvolumes #

The Btrfs root file system subvolumes (for example,

/var/log, /var/crash, or

/var/cache) can use all the available disk space

during normal operation, and cause a system malfunction. To help avoid this

situation, SUSE Linux Enterprise Server offers quota support for Btrfs subvolumes. If you

set up the root file system from a YaST proposal, you are ready to enable

and set subvolume quotas.

1.2.5.1 Setting Btrfs quotas using YaST #

To set a quota for a subvolume of the root file system by using YaST, proceed as follows:

Start YaST and select › and confirm the warning with .

In the left pane, click .

In the main window, select the device for which you want to enable subvolume quotas and click at the bottom.

In the window, activate the check box and confirm with .

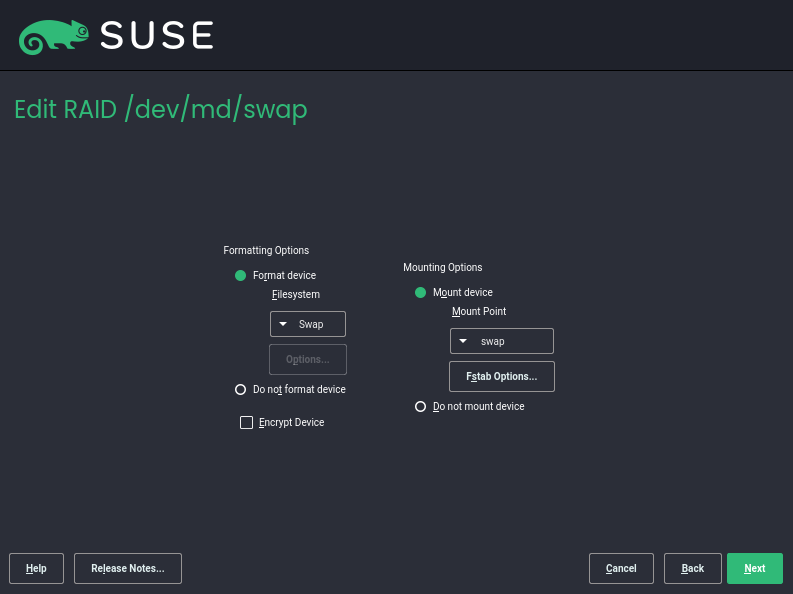

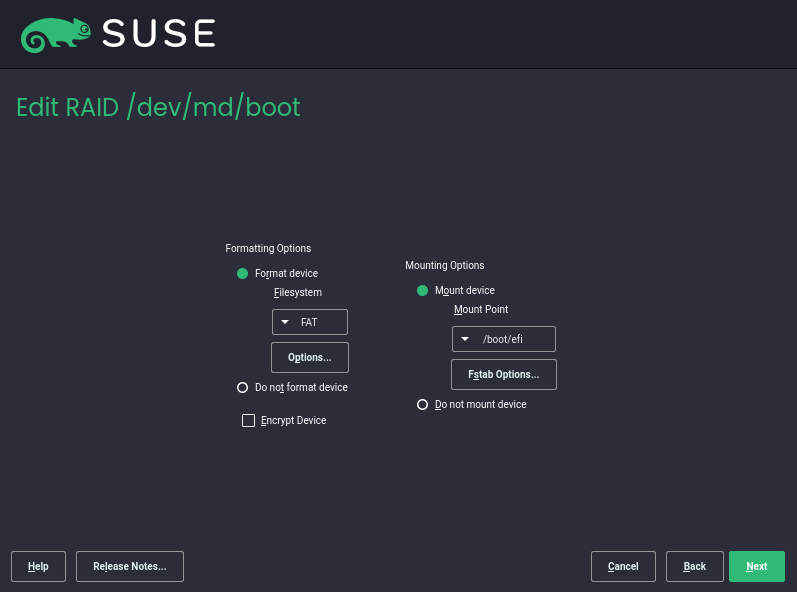

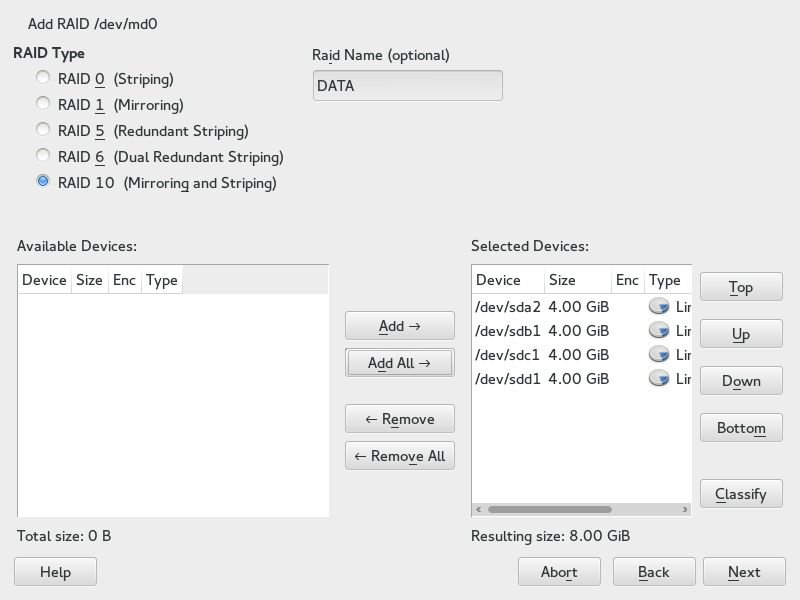

Figure 1.1: Enabling Btrfs quotas #From the list of existing subvolumes, click the subvolume whose size you intend to limit by quota and click at the bottom.

In the window, activate and specify the maximum referenced size. Confirm with .

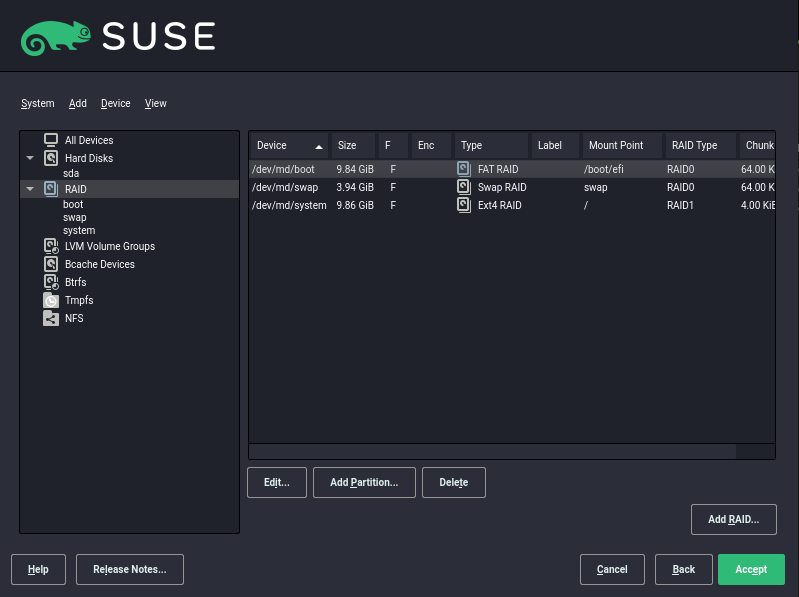

Figure 1.2: Setting quota for a subvolume #The new size limit will be displayed next to the subvolume name:

Figure 1.3: List of subvolumes for a device #Apply changes with .

1.2.5.2 Setting Btrfs quotas on the command line #

To set a quota for a subvolume of the root file system on the command line, proceed as follows:

Enable quota support:

>sudobtrfs quota enable /Get a list of subvolumes:

>sudobtrfs subvolume list /Quotas can only be set for existing subvolumes.

Set a quota for one of the subvolumes that was listed in the previous step. A subvolume can either be identified by path (for example

/var/tmp) or by0/SUBVOLUME ID(for example0/272). The following example sets a quota of 5 GB for/var/tmp.>sudobtrfs qgroup limit 5G /var/tmpThe size can either be specified in bytes (5000000000), kilobytes (5000000K), megabytes (5000M), or gigabytes (5G). The resulting values in bytes slightly differ, since 1024 Bytes = 1 KiB, 1024 KiB = 1 MiB, etc.

To list the existing quotas, use the following command. The column

max_rfershows the quota in bytes.>sudobtrfs qgroup show -r /

In case you want to nullify an existing quota, set a quota size of

none:

>sudobtrfs qgroup limit none /var/tmp

To disable quota support for a partition and all its subvolumes, use

btrfs quota disable:

>sudobtrfs quota disable /

1.2.5.3 More information #

See the man 8 btrfs-qgroup and man 8

btrfs-quota for more details. The

UseCases page on the Btrfs wiki

(https://btrfs.wiki.kernel.org/index.php/UseCases)

also provides more information.

1.2.6 Swapping on Btrfs #

You will not be able to create a snapshot if the source subvolume contains any enabled swap files.

SLES supports swapping to a file on the Btrfs file system if the following criteria relating to the resulting swap file are fulfilled:

The swap file must have the

NODATACOWandNODATASUMmount options.The swap file can not be compressed—you can ensure this by setting the

NODATACOWandNODATASUMmount options. Both options disable swap file compression.The swap file cannot be activated while exclusive operations are running—such as device resizing, adding, removing, or replacing, or when a balancing operation is running.

The swap file cannot be sparse.

The swap file can not be an inline file.

The swap file must be on a

singleallocation profile file system.

1.2.7 Btrfs send/receive #

Btrfs allows to make snapshots to capture the state of the file system. Snapper, for example, uses this feature to create snapshots before and after system changes, allowing a rollback. However, together with the send/receive feature, snapshots can also be used to create and maintain copies of a file system in a remote location. This feature can, for example, be used to do incremental backups.

A btrfs send operation calculates the difference between

two read-only snapshots from the same subvolume and sends it to a file or

to STDOUT. A btrfs receive operation takes the result of

the send command and applies it to a snapshot.

1.2.7.1 Prerequisites #

To use the send/receive feature, the following requirements need to be met:

A Btrfs file system is required on the source side (

send) and on the target side (receive).Btrfs send/receive operates on snapshots, therefore the respective data needs to reside in a Btrfs subvolume.

Snapshots on the source side need to be read-only.

SUSE Linux Enterprise 12 SP2 or better. Earlier versions of SUSE Linux Enterprise do not support send/receive.

1.2.7.2 Incremental backups #

The following procedure shows the basic usage of Btrfs send/receive using

the example of creating incremental backups of /data

(source side) in /backup/data (target side).

/data needs to be a subvolume.

Create the initial snapshot (called

snapshot_0in this example) on the source side and make sure it is written to the disk:>sudobtrfs subvolume snapshot -r /data /data/bkp_data syncA new subvolume

/data/bkp_datais created. It will be used as the basis for the next incremental backup and should be kept as a reference.Send the initial snapshot to the target side. Since this is the initial send/receive operation, the complete snapshot needs to be sent:

>sudobash -c 'btrfs send /data/bkp_data | btrfs receive /backup'A new subvolume

/backup/bkp_datais created on the target side.

When the initial setup has been finished, you can create incremental backups and send the differences between the current and previous snapshots to the target side. The procedure is always the same:

Create a new snapshot on the source side.

Send the differences to the target side.

Optional: Rename and/or clean up snapshots on both sides.

Create a new snapshot on the source side and make sure it is written to the disk. In the following example the snapshot is named bkp_data_CURRENT_DATE:

>sudobtrfs subvolume snapshot -r /data /data/bkp_data_$(date +%F) syncA new subvolume, for example

/data/bkp_data_2016-07-07, is created.Send the difference between the previous snapshot and the one you have created to the target side. This is achieved by specifying the previous snapshot with the option

-p SNAPSHOT.>sudobash -c 'btrfs send -p /data/bkp_data /data/bkp_data_2016-07-07 \ | btrfs receive /backup'A new subvolume

/backup/bkp_data_2016-07-07is created.As a result four snapshots, two on each side, exist:

/data/bkp_data/data/bkp_data_2016-07-07/backup/bkp_data/backup/bkp_data_2016-07-07Now you have three options for how to proceed:

Keep all snapshots on both sides. With this option you can roll back to any snapshot on both sides while having all data duplicated at the same time. No further action is required. When doing the next incremental backup, keep in mind to use the next-to-last snapshot as parent for the send operation.

Only keep the last snapshot on the source side and all snapshots on the target side. Also allows to roll back to any snapshot on both sides—to do a rollback to a specific snapshot on the source side, perform a send/receive operation of a complete snapshot from the target side to the source side. Do a delete/move operation on the source side.

Only keep the last snapshot on both sides. This way you have a backup on the target side that represents the state of the last snapshot made on the source side. It is not possible to roll back to other snapshots. Do a delete/move operation on the source and the target side.

To only keep the last snapshot on the source side, perform the following commands:

>sudobtrfs subvolume delete /data/bkp_data>sudomv /data/bkp_data_2016-07-07 /data/bkp_dataThe first command will delete the previous snapshot, the second command renames the current snapshot to

/data/bkp_data. This ensures that the last snapshot that was backed up is always named/data/bkp_data. As a consequence, you can also always use this subvolume name as a parent for the incremental send operation.To only keep the last snapshot on the target side, perform the following commands:

>sudobtrfs subvolume delete /backup/bkp_data>sudomv /backup/bkp_data_2016-07-07 /backup/bkp_dataThe first command will delete the previous backup snapshot, the second command renames the current backup snapshot to

/backup/bkp_data. This ensures that the latest backup snapshot is always named/backup/bkp_data.

To send the snapshots to a remote machine, use SSH:

> btrfs send /data/bkp_data | ssh root@jupiter.example.com 'btrfs receive /backup'1.2.8 Data deduplication support #

Btrfs supports data deduplication by replacing identical blocks in the file

system with logical links to a single copy of the block in a common storage

location. SUSE Linux Enterprise Server provides the tool duperemove for

scanning the file system for identical blocks. When used on a Btrfs file

system, it can also be used to deduplicate these blocks and thus save space

on the file system. duperemove is not installed by

default. To make it available, install the package

duperemove .

If you intend to deduplicate a large amount of files, use the

--hashfile option:

>sudoduperemove--hashfile HASH_FILEfile1 file2 file3

The --hashfile option stores hashes of all specified

files into the HASH_FILE instead of RAM and

prevents it from being exhausted. HASH_FILE is

reusable—you can deduplicate changes to large datasets very quickly

after an initial run that generated a baseline hash file.

duperemove can either operate on a list of files or

recursively scan a directory:

>sudoduperemove OPTIONS file1 file2 file3>sudoduperemove -r OPTIONS directory

It operates in two modes: read-only and de-duping. When run in read-only

mode (that is without the -d switch), it scans the given

files or directories for duplicated blocks and prints them. This works on

any file system.

Running duperemove in de-duping mode is only supported

on Btrfs file systems. After having scanned the given files or directories,

the duplicated blocks will be submitted for deduplication.

For more information see man 8 duperemove.

1.2.9 Deleting subvolumes from the root file system #

You may need to delete one of the default Btrfs subvolumes from the root

file system for specific purposes. One of them is transforming a

subvolume—for example @/home or

@/srv—into a file system on a separate device.

The following procedure illustrates how to delete a Btrfs subvolume:

Identify the subvolume you need to delete (for example

@/opt). Notice that the root path has always subvolume ID '5'.>sudobtrfs subvolume list / ID 256 gen 30 top level 5 path @ ID 258 gen 887 top level 256 path @/var ID 259 gen 872 top level 256 path @/usr/local ID 260 gen 886 top level 256 path @/tmp ID 261 gen 60 top level 256 path @/srv ID 262 gen 886 top level 256 path @/root ID 263 gen 39 top level 256 path @/opt [...]Find the device name that hosts the root partition:

>sudobtrfs device usage / /dev/sda1, ID: 1 Device size: 23.00GiB Device slack: 0.00B Data,single: 7.01GiB Metadata,DUP: 1.00GiB System,DUP: 16.00MiB Unallocated: 14.98GiBMount the root file system (subvolume with ID 5) on a separate mount point (for example

/mnt):>sudomount -o subvolid=5 /dev/sda1 /mntDelete the

@/optpartition from the mounted root file system:>sudobtrfs subvolume delete /mnt/@/optUnmount the previously mounted root file system:

>sudoumount /mnt

1.3 XFS #

Originally intended as the file system for their IRIX OS, SGI started XFS development in the early 1990s. The idea behind XFS was to create a high-performance 64-bit journaling file system to meet extreme computing challenges. XFS is very good at manipulating large files and performs well on high-end hardware. XFS is the default file system for data partitions in SUSE Linux Enterprise Server.

A quick review of XFS’s key features explains why it might prove to be a strong competitor for other journaling file systems in high-end computing.

- High scalability

XFS offers high scalability by using allocation groups

At the creation time of an XFS file system, the block device underlying the file system is divided into eight or more linear regions of equal size. Those are called allocation groups. Each allocation group manages its own inodes and free disk space. Practically, allocation groups can be seen as file systems in a file system. Because allocation groups are rather independent of each other, more than one of them can be addressed by the kernel simultaneously. This feature is the key to XFS’s great scalability. Naturally, the concept of independent allocation groups suits the needs of multiprocessor systems.

- High performance

XFS offers high performance through efficient management of disk space

Free space and inodes are handled by B+ trees inside the allocation groups. The use of B+ trees greatly contributes to XFS’s performance and scalability. XFS uses delayed allocation, which handles allocation by breaking the process into two pieces. A pending transaction is stored in RAM and the appropriate amount of space is reserved. XFS still does not decide where exactly (in file system blocks) the data should be stored. This decision is delayed until the last possible moment. Some short-lived temporary data might never make its way to disk, because it is obsolete by the time XFS decides where to save it. In this way, XFS increases write performance and reduces file system fragmentation. Because delayed allocation results in less frequent write events than in other file systems, it is likely that data loss after a crash during a write is more severe.

- Preallocation to avoid file system fragmentation

Before writing the data to the file system, XFS reserves (preallocates) the free space needed for a file. Thus, file system fragmentation is greatly reduced. Performance is increased because the contents of a file are not distributed all over the file system.

1.3.1 XFS formats #

SUSE Linux Enterprise Server supports the “on-disk format” (v5) of the XFS file system. The main advantages of this format are automatic checksums of all XFS metadata, file type support, and support for a larger number of access control lists for a file.

Note that this format is not supported by SUSE Linux Enterprise kernels older than version

3.12, by xfsprogs older than version 3.2.0, and GRUB 2

versions released before SUSE Linux Enterprise 12.

XFS is deprecating file systems with the V4 format. This file system format was created by the command:

mkfs.xfs -m crc=0 DEVICE

The format was used in SLE 11 and older releases and currently it creates

a warning message by dmesg:

Deprecated V4 format (crc=0) will not be supported after September 2030

If you see the message above in the output of the dmesg

command, it is recommended that you update your file system to the V5

format:

Back up your data to another device.

Create the file system on the device.

mkfs.xfs -m crc=1 DEVICE

Restore the data from the backup on the updated device.

1.4 Ext2 #

The origins of Ext2 go back to the early days of Linux history. Its predecessor, the Extended File System, was implemented in April 1992 and integrated in Linux 0.96c. The Extended File System underwent several modifications and, as Ext2, became the most popular Linux file system for years. With the creation of journaling file systems and their short recovery times, Ext2 became less important.

A brief summary of Ext2’s strengths might help understand why it was—and in some areas still is—the favorite Linux file system of many Linux users.

- Solidity and speed

Being an “old-timer”, Ext2 underwent many improvements and was heavily tested. This might be the reason people often refer to it as rock-solid. After a system outage when the file system could not be cleanly unmounted, e2fsck starts to analyze the file system data. Metadata is brought into a consistent state and pending files or data blocks are written to a designated directory (called

lost+found). In contrast to journaling file systems, e2fsck analyzes the entire file system and not only the recently modified bits of metadata. This takes significantly longer than checking the log data of a journaling file system. Depending on file system size, this procedure can take half an hour or more. Therefore, it is not desirable to choose Ext2 for any server that needs high availability. However, because Ext2 does not maintain a journal and uses less memory, it is sometimes faster than other file systems.- Easy upgradability

Because Ext3 is based on the Ext2 code and shares its on-disk format and its metadata format, upgrades from Ext2 to Ext3 are very easy.

1.5 Ext3 #

Ext3 was designed by Stephen Tweedie. Unlike all other next-generation file systems, Ext3 does not follow a completely new design principle. It is based on Ext2. These two file systems are very closely related to each other. An Ext3 file system can be easily built on top of an Ext2 file system. The most important difference between Ext2 and Ext3 is that Ext3 supports journaling. In summary, Ext3 has three major advantages to offer:

1.5.1 Easy and highly reliable upgrades from ext2 #

The code for Ext2 is the strong foundation on which Ext3 could become a highly acclaimed next-generation file system. Its reliability and solidity are elegantly combined in Ext3 with the advantages of a journaling file system. Unlike transitions to other journaling file systems, such as XFS, which can be quite tedious (making backups of the entire file system and re-creating it from scratch), a transition to Ext3 is a matter of minutes. It is also very safe, because re-creating an entire file system from scratch might not work flawlessly. Considering the number of existing Ext2 systems that await an upgrade to a journaling file system, you can easily see why Ext3 might be of some importance to many system administrators. Downgrading from Ext3 to Ext2 is as easy as the upgrade. Perform a clean unmount of the Ext3 file system and remount it as an Ext2 file system.

1.5.2 Converting an ext2 file system into ext3 #

To convert an Ext2 file system to Ext3:

Create an Ext3 journal by running

tune2fs -jas therootuser.This creates an Ext3 journal with the default parameters.

To specify how large the journal should be and on which device it should reside, run

tune2fs-Jinstead together with the desired journal optionssize=anddevice=. More information about thetune2fsprogram is available in thetune2fsman page.Edit the file

/etc/fstabas therootuser to change the file system type specified for the corresponding partition fromext2toext3, then save the changes.This ensures that the Ext3 file system is recognized as such. The change takes effect after the next reboot.

To boot a root file system that is set up as an Ext3 partition, add the modules

ext3andjbdin theinitrd. Do so byopening or creating

/etc/dracut.conf.d/filesystem.confand adding the following line (mind the leading blank space):force_drivers+=" ext3 jbd"

and running the

dracut-fcommand.

Reboot the system.

1.6 Ext4 #

In 2006, Ext4 started as a fork from Ext3. It is the latest file system in the extended file system version. Ext4 was originally designed to increase storage size by supporting volumes with a size of up to 1 exbibyte, files with a size of up to 16 tebibytes and an unlimited number of subdirectories. Ext4 uses extents, instead of the traditional direct and indirect block pointers, to map the file contents. Usage of extents improves both storage and retrieval of data from disks.

Ext4 also introduces several performance enhancements such as delayed block allocation and a much faster file system checking routine. Ext4 is also more reliable by supporting journal checksums and by providing time stamps measured in nanoseconds. Ext4 is fully backward compatible to Ext2 and Ext3—both file systems can be mounted as Ext4.

The Ext3 funcionality is fully supported by the Ext4 driver in the Ext4 kernel module.

1.6.1 Reliability and performance #

Some other journaling file systems follow the “metadata-only”

journaling approach. This means your metadata is always kept in a

consistent state, but this cannot be automatically guaranteed for the file

system data itself. Ext4 is designed to take care of both metadata and

data. The degree of “care” can be customized. Mounting Ext4 in

the data=journal mode offers maximum security (data

integrity), but can slow down the system because both metadata and data are

journaled. Another approach is to use the data=ordered

mode, which ensures both data and metadata integrity, but uses journaling

only for metadata. The file system driver collects all data blocks that

correspond to one metadata update. These data blocks are written to disk

before the metadata is updated. As a result, consistency is achieved for

metadata and data without sacrificing performance. A third mount option to

use is data=writeback, which allows data to be written to

the main file system after its metadata has been committed to the journal.

This option is often considered the best in performance. It can, however,

allow old data to reappear in files after crash and recovery while internal

file system integrity is maintained. Ext4 uses the

data=ordered option as the default.

1.6.2 Ext4 file system inode size and number of inodes #

An inode stores information about the file and its block location in the file system. To allow space in the inode for extended attributes and ACLs, the default inode size was increased to 256 bytes.

When you create a new Ext4 file system, the space in the inode table is preallocated for the total number of inodes that can be created. The bytes-per-inode ratio and the size of the file system determine how many inodes are possible. When the file system is made, an inode is created for every bytes-per-inode bytes of space:

number of inodes = total size of the file system divided by the number of bytes per inode

The number of inodes controls the number of files you can have in the file system: one inode for each file.

After the inodes are allocated, you cannot change the settings for the inode size or bytes-per-inode ratio. No new inodes are possible without re-creating the file system with different settings, or unless the file system gets extended. When you exceed the maximum number of inodes, no new files can be created on the file system until some files are deleted.

When you make a new Ext4 file system, you can specify the inode size and

bytes-per-inode ratio to control inode space usage and the number of files

possible on the file system. If the blocks size, inode size, and

bytes-per-inode ratio values are not specified, the default values in the

/etc/mked2fs.conf file are applied. For information,

see the mke2fs.conf(5) man page.

Use the following guidelines:

Inode size: The default inode size is 256 bytes. Specify a value in bytes that is a power of 2 and equal to 128 or larger in bytes and up to the block size, such as 128, 256, 512, and so on. Use 128 bytes only if you do not use extended attributes or ACLs on your Ext4 file systems.

Bytes-per-inode ratio: The default bytes-per-inode ratio is 16384 bytes. Valid bytes-per-inode ratio values must be a power of 2 equal to 1024 or greater in bytes, such as 1024, 2048, 4096, 8192, 16384, 32768, and so on. This value should not be smaller than the block size of the file system, because the block size is the smallest chunk of space used to store data. The default block size for the Ext4 file system is 4 KiB.

In addition, consider the number of files and the size of files you need to store. For example, if your file system will have many small files, you can specify a smaller bytes-per-inode ratio, which increases the number of inodes. If your file system will have very large files, you can specify a larger bytes-per-inode ratio, which reduces the number of possible inodes.

Generally, it is better to have too many inodes than to run out of them. If you have too few inodes and very small files, you could reach the maximum number of files on a disk that is practically empty. If you have too many inodes and very large files, you might have free space reported but be unable to use it because you cannot create new files in space reserved for inodes.

Use any of the following methods to set the inode size and bytes-per-inode ratio:

Modifying the default settings for all new ext4 file systems: In a text editor, modify the

defaultssection of the/etc/mke2fs.conffile to set theinode_sizeandinode_ratioto the desired default values. The values apply to all new Ext4 file systems. For example:blocksize = 4096 inode_size = 128 inode_ratio = 8192

At the command line: Pass the inode size (

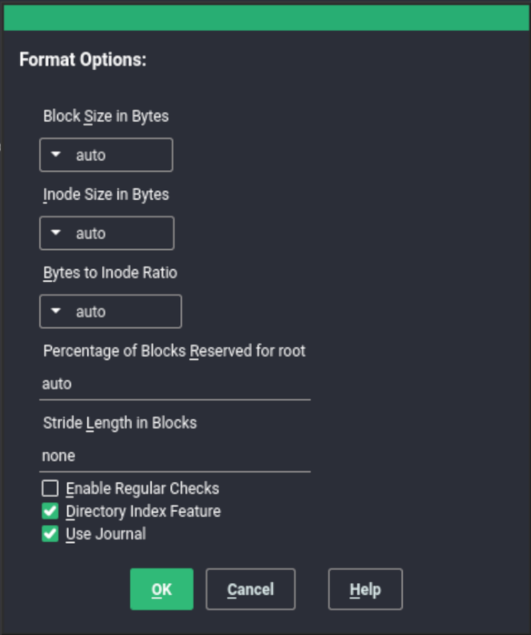

-I 128) and the bytes-per-inode ratio (-i 8192) to themkfs.ext4(8)command or themke2fs(8)command when you create a new ext4 file system. For example, use either of the following commands:>sudomkfs.ext4 -b 4096 -i 8092 -I 128 /dev/sda2>sudomke2fs -t ext4 -b 4096 -i 8192 -I 128 /dev/sda2During installation with YaST: Pass the inode size and bytes-per-inode ratio values when you create a new Ext4 file system during the installation. In the , select the partition, click . Under , select , then click . In the dialog, select the desired values from the , , and drop-down box.

For example, select 4096 for the drop-down box, select 8192 from the drop-down box, select 128 from the drop-down box, then click .

1.6.3 Upgrading to Ext4 #

Back up all data on the file system before performing any update of your file system.

To upgrade from Ext2 or Ext3, you must enable the following:

Features required by Ext4 #- extents

contiguous blocks on the hard disk that are used to keep files close together and prevent fragmentation

- unint_bg

lazy inode table initialization

- dir_index

hashed b-tree lookups for large directores

- on Ext2:

as_journal enable journalling on your Ext2 file system.

To enable the features, run:

on Ext3:

#tune2fs -O extents,uninit_bg,dir_index DEVICE_NAMEon Ext2:

#tune2fs -O extents,uninit_bg,dir_index,has_journal DEVICE_NAME

As

rootedit the/etc/fstabfile: change theext3orext2record toext4. The change takes effect after the next reboot.To boot a file system that is setup on an ext4 parition, add the modules:

ext4andjbdin theinitramfs. Open or create/etc/dracut.conf.d/filesystem.confand add the following line:force_drivers+=" ext4 jbd"

You need to overwrite the existing dracut

initramfsby running:dracut -f

Reboot your system.

1.7 ReiserFS #

ReiserFS support was completely removed with SUSE Linux Enterprise Server 15. To migrate your existing partitions to Btrfs, refer to Section 1.2.3, “Migration from ReiserFS and ext file systems to Btrfs”.

1.8 Other supported file systems #

Table 1.1, “File system types in Linux” summarizes some other file systems supported by Linux. They are supported mainly to ensure compatibility and interchange of data with different kinds of media or foreign operating systems.

|

File System Type |

Description |

|---|---|

|

|

Standard file system on CD-ROMs. |

|

|

|

|

|

Network File System: Here, data can be stored on any machine in a network and access might be granted via a network. |

|

|

Windows NT file system; read-only. |

|

|

File system optimized for use with flash memory, such as USB flash drives and SD cards. |

|

|

Server Message Block is used by products such as Windows to enable file access over a network. |

|

|

Used by BSD, SunOS, and NextStep. Only supported in read-only mode. |

|

|

Unix on MS-DOS: Applied on top of a standard |

|

|

Virtual FAT: Extension of the |

1.9 Blacklisted file systems #

Due to security reasons, some file systems have been blacklisted. These file systems are usually not maintained anymore and are not in common use. However, the kernel module for a blacklisted file system can be loaded, because the in-kernel API is still compatible. A combination of user-mountable file systems and the automatic mounting of file systems on removable devices could result in the situation where unprivileged users might trigger the automatic loading of kernel modules, and the removable devices could store potentially malicious data.

To get a list of currently blacklisted file systems, run the following command:

>sudorpm -ql suse-module-tools | sed -nE 's/.*blacklist_fs-(.*)\.conf/\1/p'

If you try to mount a device with a blacklisted file system using the

mountcommand, the command outputs an error message, for

example:

mount: /mnt/mx: unknown filesystem type 'minix' (hint: possibly blacklisted, see mount(8)).

To enable the mounting of the file system, you need to remove the particular

file system from the blacklist. Each blacklisted file system has its own configuration file, for example,

for efs it is

/etc/modules.d/60-blacklist_fs-efs.conf. To remove the file system

from the blacklist, you have the following options:

Create a symbolic link to

/dev/null, for example, for the efs file system:>sudoln -s /dev/null /etc/modules.d/60-blacklist_fs-efs.confComment the following statement in the configuration file

/etc/modules.d/60-blacklist_fs-omfs.conf:# blacklist omfs

Even though a file system is blacklisted, you can load the corresponding

kernel module for the file system directly using

modprobe:

>sudomodprobe FILESYSTEM

For example, for the cramfs file system, the output

looks as follows:

unblacklist: loading cramfs file system module unblacklist: Do you want to un-blacklist cramfs permanently (<y>es/<n>o/n<e>ver)? y unblacklist: cramfs un-blacklisted by creating /etc/modprobe.d/60-blacklist_fs-cramfs.conf

If you select yes, the modprobe

command calls a script that creates a symbolic link from the configuration

file of the provided file system to /dev/null. Thus,

the file system is removed from the blacklist.

1.10 Large file support in Linux #

Originally, Linux supported a maximum file size of 2 GiB (231 bytes). Unless a file system comes with large file support, the maximum file size on a 32-bit system is 2 GiB.

Currently, all our standard file systems have LFS (large file support), which gives a maximum file size of 263 bytes in theory. Table 1.2, “Maximum sizes of files and file systems (on-disk format, 4 KiB block size)” offers an overview of the current on-disk format limitations of Linux files and file systems. The numbers in the table assume that the file systems are using 4 KiB block size, which is a common standard. When using different block sizes, the results are different. The maximum file sizes in Table 1.2, “Maximum sizes of files and file systems (on-disk format, 4 KiB block size)” can be larger than the file system's actual size when using sparse blocks.

In this document: 1024 Bytes = 1 KiB; 1024 KiB = 1 MiB; 1024 MiB = 1 GiB; 1024 GiB = 1 TiB; 1024 TiB = 1 PiB; 1024 PiB = 1 EiB (see also NIST: Prefixes for Binary Multiples.

|

File System (4 KiB Block Size) |

Maximum File System Size |

Maximum File Size |

|---|---|---|

|

Btrfs |

16 EiB |

16 EiB |

|

Ext3 |

16 TiB |

2 TiB |

|

Ext4 |

1 EiB |

16 TiB |

|

OCFS2 (a cluster-aware file system available in SLE HA) |

16 TiB |

1 EiB |

|

XFS |

16 EiB |

8 EiB |

|

NFSv2 (client side) |

8 EiB |

2 GiB |

|

NFSv3/NFSv4 (client side) |

8 EiB |

8 EiB |

Table 1.2, “Maximum sizes of files and file systems (on-disk format, 4 KiB block size)” describes the limitations regarding the on-disk format. The Linux kernel imposes its own limits on the size of files and file systems handled by it. These are as follows:

- File size

On 32-bit systems, files cannot exceed 2 TiB (241 bytes).

- File system size

File systems can be up to 273 bytes in size. However, this limit is still out of reach for the currently available hardware.

1.11 Linux kernel storage limitations #

Table 1.3, “Storage limitations” summarizes the kernel limits for storage associated with SUSE Linux Enterprise Server.

|

Storage Feature |

Limitation |

|---|---|

|

Maximum number of LUNs supported |

16384 LUNs per target. |

|

Maximum number of paths per single LUN |

No limit by default. Each path is treated as a normal LUN. The actual limit is given by the number of LUNs per target and the number of targets per HBA (16777215 for a Fibre Channel HBA). |

|

Maximum number of HBAs |

Unlimited. The actual limit is determined by the amount of PCI slots of the system. |

|

Maximum number of paths with device-mapper-multipath (in total) per operating system |

Approximately 1024. The actual number depends on the length of the device number strings for each multipath device. It is a compile-time variable within multipath-tools, which can be raised if this limit poses a problem. |

|

Maximum size per block device |

Up to 8 EiB. |

1.12 Freeing unused file system blocks #

On solid-state drives (SSDs) and thinly provisioned volumes, it is useful to

trim blocks that are not in use by the file system. SUSE Linux Enterprise Server fully

supports unmap and TRIM operations on

all file systems supporting them.

There are two types of commonly used TRIM—online

TRIM and periodic TRIM. The most

suitable way of trimming devices depends on your use case. In general, it is

recommended to use periodic TRIM, especially if the device has enough free

blocks. If the device is often near its full capacity, online TRIM is

preferable.

TRIM support on devices

Always verify that your device supports the TRIM

operation before you attempt to use it. Otherwise, you might lose your data

on that device. To verify the TRIM support, run the

command:

>sudolsblk --discard

The command outputs information about all available block devices. If the

values of the columns DISC-GRAN and

DISC-MAX are non-zero, the device supports the

TRIM operation.

1.12.1 Periodic TRIM #

Periodic TRIM is handled by the fstrim command invoked

by systemd on a regular basis. You can also run the command manually.

To schedule periodic TRIM, enable the fstrim.timer as

follows:

>sudosystemctl enable fstrim.timer

systemd creates a unit file in

/usr/lib/systemd/system. By default, the service runs

once a week, which is usually sufficient. However, you can change the

frequency by configuring the OnCalendar option to a

required value.

The default behaviour of fstrim is to discard all blocks

in the file system. You can use options when invoking the command to modify

this behaviour. For example, you can pass the offset

option to define the place where to start the trimming procedure. For

details, see man fstrim.

The fstrim command can perform trimming on all devices

stored in the /etc/fstab file, which support the

TRIM operation—use the -A

option when invoking the command for this purpose.

1.12.2 Online TRIM #

Online TRIM of a device is performed each time data is written to the device.

To enable online TRIM on a device, add the discard

option to the /etc/fstab file as follows:

UID=83df497d-bd6d-48a3-9275-37c0e3c8dc74 / btrfs defaults,discard

Alternatively, on the Ext4 file system you can use the

tune2fs command to set the discard

option in /etc/fstab:

>sudotune2fs -o discard DEVICE

The discard option is also added to

/etc/fstab in case the device was mounted by

mount with the discard option:

>sudomount -o discard DEVICE

Using the discard option may decrease life time of some

lower-quality SSD devices. Online TRIM can also impact the performance of

the device, for example, if a larger amount of data is deleted. In this

situation, an erase block might be reallocated, and shortly afterwards,

the same erase block might be marked as unused again.

1.13 Troubleshooting file systems #

This section describes some known issues and possible solutions for file systems.

1.13.1 Btrfs error: no space is left on device #

The root (/) partition using the Btrfs file system

stops accepting data. You receive the error “No space left

on device”.

See the following sections for information about possible causes and prevention of this issue.

1.13.1.1 Disk space consumed by Snapper snapshots #

If Snapper is running for the Btrfs file system, the “No

space left on device” problem is typically caused by

having too much data stored as snapshots on your system.

You can remove some snapshots from Snapper, however, the snapshots are not deleted immediately and might not free up as much space as you need.

To delete files from Snapper:

Open a terminal.

At the command prompt, enter

btrfs filesystem show, for example:>sudobtrfs filesystem show Label: none uuid: 40123456-cb2c-4678-8b3d-d014d1c78c78 Total devices 1 FS bytes used 20.00GB devid 1 size 20.00GB used 20.00GB path /dev/sda3Enter

>sudobtrfs fi balance start MOUNTPOINT -dusage=5This command attempts to relocate data in empty or near-empty data chunks, allowing the space to be reclaimed and reassigned to metadata. This can take a while (many hours for 1 TB) although the system is otherwise usable during this time.

List the snapshots in Snapper. Enter

>sudosnapper -c root listDelete one or more snapshots from Snapper. Enter

>sudosnapper -c root delete SNAPSHOT_NUMBER(S)Ensure that you delete the oldest snapshots first. The older a snapshot is, the more disk space it occupies.

To help prevent this problem, you can change the Snapper cleanup

algorithms. See Book “Administration Guide”, Chapter 7 “System recovery and snapshot management with Snapper”, Section 7.6.1.2 “Cleanup algorithms” for

details. The configuration values controlling snapshot cleanup are

EMPTY_*, NUMBER_*, and

TIMELINE_*.

If you use Snapper with Btrfs on the file system disk, it is advisable to reserve twice the amount of disk space than the standard storage proposal. The YaST Partitioner automatically proposes twice the standard disk space in the Btrfs storage proposal for the root file system.

1.13.1.2 Disk space consumed by log, crash, and cache files #

If the system disk is filling up with data, you can try deleting files

from /var/log, /var/crash,

/var/lib/systemd/coredump and

/var/cache.

The Btrfs root file system subvolumes /var/log,

/var/crash and /var/cache can

use all of the available disk space during normal operation, and cause a

system malfunction. To help avoid this situation, SUSE Linux Enterprise Server offers

Btrfs quota support for subvolumes. See

Section 1.2.5, “Btrfs quota support for subvolumes” for details.

On test and development machines, especially if you have frequent crashes

of applications, you may also want to have a look at

/var/lib/systemd/coredump where the core dumps are

stored.

1.13.2 Btrfs: balancing data across devices #

The btrfs balance command is part of the

btrfs-progs package. It balances block groups on Btrfs

file systems in the following example situations:

Assume you have a 1 TB drive with 600 GB used by data and you add another 1 TB drive. The balancing will theoretically result in having 300 GB of used space on each drive.

You have a lot of near-empty chunks on a device. Their space will not be available until the balancing has cleared those chunks.

You need to compact half-empty block group based on the percentage of their usage. The following command will balance block groups whose usage is 5% or less:

>sudobtrfs balance start -dusage=5 /TipThe

/usr/lib/systemd/system/btrfs-balance.timertimer takes care of cleaning up unused block groups on a monthly basis.You need to clear out non-full portions of block devices and spread data more evenly.

You need to migrate data between different RAID types. For example, to convert data on a set of disks from RAID1 to RAID5, run the following command:

>sudobtrfs balance start -dprofiles=raid1,convert=raid5 /

To fine-tune the default behavior of balancing data on Btrfs file

systems—for example, how frequently or which mount points to

balance— inspect and customize

/etc/sysconfig/btrfsmaintenance. The relevant options

start with BTRFS_BALANCE_.

For details about the btrfs balance command usage, see

its manual pages (man 8 btrfs-balance).

1.13.3 No defragmentation on SSDs #

Linux file systems contain mechanisms to avoid data fragmentation and usually it is not necessary to defragment. However, there are use cases, where data fragmentation cannot be avoided and where defragmenting the hard disk significantly improves the performance.

This only applies to conventional hard disks. On solid state disks (SSDs) which use flash memory to store data, the firmware provides an algorithm that determines to which chips the data is written. Data is usually spread all over the device. Therefore defragmenting an SSD does not have the desired effect and will reduce the lifespan of an SSD by writing unnecessary data.

For the reasons mentioned above, SUSE explicitly recommends not to defragment SSDs. Some vendors also warn about defragmenting solid state disks. This includes, but it is not limited to the following:

HPE 3PAR StoreServ All-Flash

HPE 3PAR StoreServ Converged Flash

1.14 More information #

Each of the file system projects described above maintains its own home page on which to find mailing list information, further documentation, and FAQs:

The Btrfs Wiki on Kernel.org: https://btrfs.wiki.kernel.org/

E2fsprogs: Ext2/3/4 File System Utilities: http://e2fsprogs.sourceforge.net/

The OCFS2 Project: https://oss.oracle.com/projects/ocfs2/

An in-depth comparison of file systems (not only Linux file systems) is available from the Wikipedia project in Comparison of File Systems (http://en.wikipedia.org/wiki/Comparison_of_file_systems#Comparison).

2 Resizing file systems #

Resizing file systems—not to be confused with resizing partitions or volumes—can be used to make space available on physical volumes or to use additional space available on a physical volume.

2.1 Use cases #

It is strongly recommended to use the YaST Partitioner to resize partitions or logical volumes. When doing so, the file system will automatically be adjusted to the new size of the partition or volume. However, there are some cases where you need to resize the file system manually, because they are not supported by YaST:

After having resized a virtual disk of a VM Guest.

After having resized a volume from a network-attached storage.

After having manually resized partitions (for example by using

fdiskorparted) or logical volumes (for example by usinglvresize).When wanting to shrink Btrfs file systems (as of SUSE Linux Enterprise Server 12, YaST only supports growing Btrfs file systems).

2.2 Guidelines for resizing #

Resizing any file system involves some risks that can potentially result in losing data.

To avoid data loss, ensure that you back up your data before you begin any resizing task.

Consider the following guidelines when planning to resize a file system.

2.2.1 File systems that support resizing #

The file system must support resizing to take advantage of increases in available space for the volume. In SUSE Linux Enterprise Server, file system resizing utilities are available for file systems Ext2, Ext3, and Ext4. The utilities support increasing and decreasing the size as follows:

|

File System |

Utility |

Increase Size (Grow) |

Decrease Size (Shrink) |

|---|---|---|---|

|

Btrfs |

|

Online |

Online |

|

XFS |

|

Online |

Not supported |

|

Ext2 |

|

Online or offline |

Offline only |

|

Ext3 |

|

Online or offline |

Offline only |

|

Ext4 |

|

Online or offline |

Offline only |

2.2.2 Increasing the size of a file system #

You can grow a file system to the maximum space available on the device, or specify an exact size. Ensure that you grow the size of the device or logical volume before you attempt to increase the size of the file system.

When specifying an exact size for the file system, ensure that the new size satisfies the following conditions:

The new size must be greater than the size of the existing data; otherwise, data loss occurs.

The new size must be equal to or less than the current device size because the file system size cannot extend beyond the space available.

2.2.3 Decreasing the size of a file system #

When decreasing the size of the file system on a device, ensure that the new size satisfies the following conditions:

The new size must be greater than the size of the existing data; otherwise, data loss occurs.

The new size must be equal to or less than the current device size because the file system size cannot extend beyond the space available.

If you plan to also decrease the size of the logical volume that holds the file system, ensure that you decrease the size of the file system before you attempt to decrease the size of the device or logical volume.

Decreasing the size of a file system formatted with XFS is not possible, since such a feature is not supported by XFS.

2.3 Changing the size of a Btrfs file system #

The size of a Btrfs file system can be changed by using the btrfs

filesystem resize command when the file system is mounted.

Increasing and decreasing the size are both supported while the file system

is mounted.

Open a terminal.

Make sure the file system you want to change is mounted.

Change the size of the file system using the

btrfs filesystem resizecommand with one of the following methods:To extend the file system size to the maximum available size of the device, enter

>sudobtrfs filesystem resize max /mntTo extend the file system to a specific size, enter

>sudobtrfs filesystem resize SIZE /mntReplace SIZE with the desired size in bytes. You can also specify units on the value, such as 50000K (kilobytes), 250M (megabytes), or 2G (gigabytes). Alternatively, you can specify an increase or decrease to the current size by prefixing the value with a plus (

+) or a minus (-) sign, respectively:>sudobtrfs filesystem resize +SIZE /mnt sudo btrfs filesystem resize -SIZE /mnt

Check the effect of the resize on the mounted file system by entering

>df -hThe Disk Free (