Operations Guide CLM #

At the time of the SUSE OpenStack Cloud 9 release, this guide contains information pertaining to the operation, administration, and user functions of SUSE OpenStack Cloud. The audience is the admin-level operator of the cloud.

- 1 Operations Overview

- 2 Tutorials

- 3 Cloud Lifecycle Manager Admin UI User Guide

- 4 Third-Party Integrations

- 5 Managing Identity

- 5.1 The Identity Service

- 5.2 Supported Upstream Keystone Features

- 5.3 Understanding Domains, Projects, Users, Groups, and Roles

- 5.4 Identity Service Token Validation Example

- 5.5 Configuring the Identity Service

- 5.6 Retrieving the Admin Password

- 5.7 Changing Service Passwords

- 5.8 Reconfiguring the Identity service

- 5.9 Integrating LDAP with the Identity Service

- 5.10 keystone-to-keystone Federation

- 5.11 Configuring Web Single Sign-On

- 5.12 Identity Service Notes and Limitations

- 6 Managing Compute

- 6.1 Managing Compute Hosts using Aggregates and Scheduler Filters

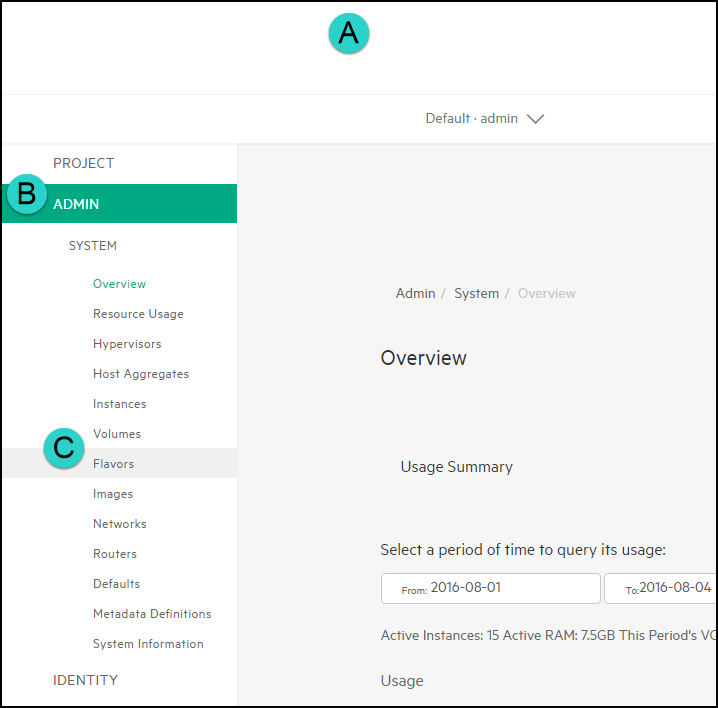

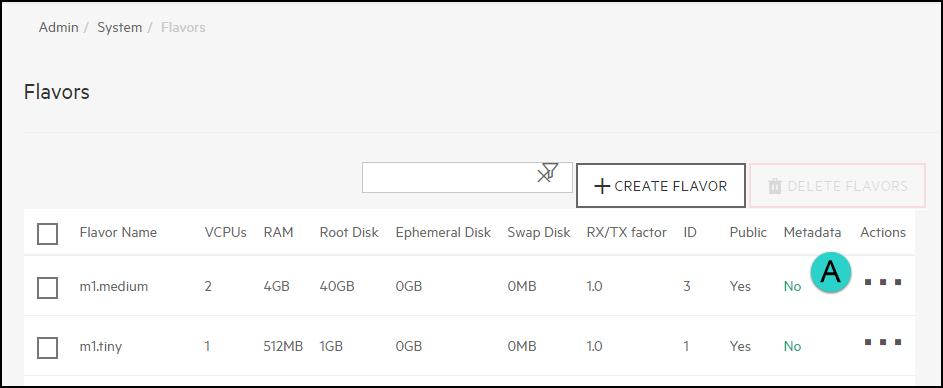

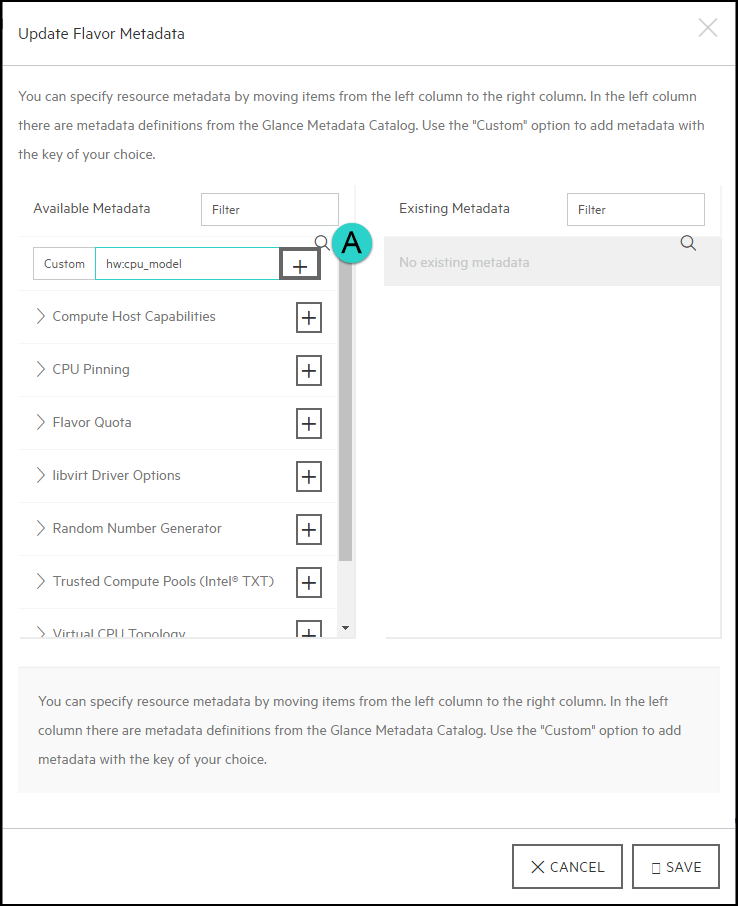

- 6.2 Using Flavor Metadata to Specify CPU Model

- 6.3 Forcing CPU and RAM Overcommit Settings

- 6.4 Enabling the Nova Resize and Migrate Features

- 6.5 Enabling ESX Compute Instance(s) Resize Feature

- 6.6 GPU passthrough

- 6.7 Configuring the Image Service

- 7 Managing ESX

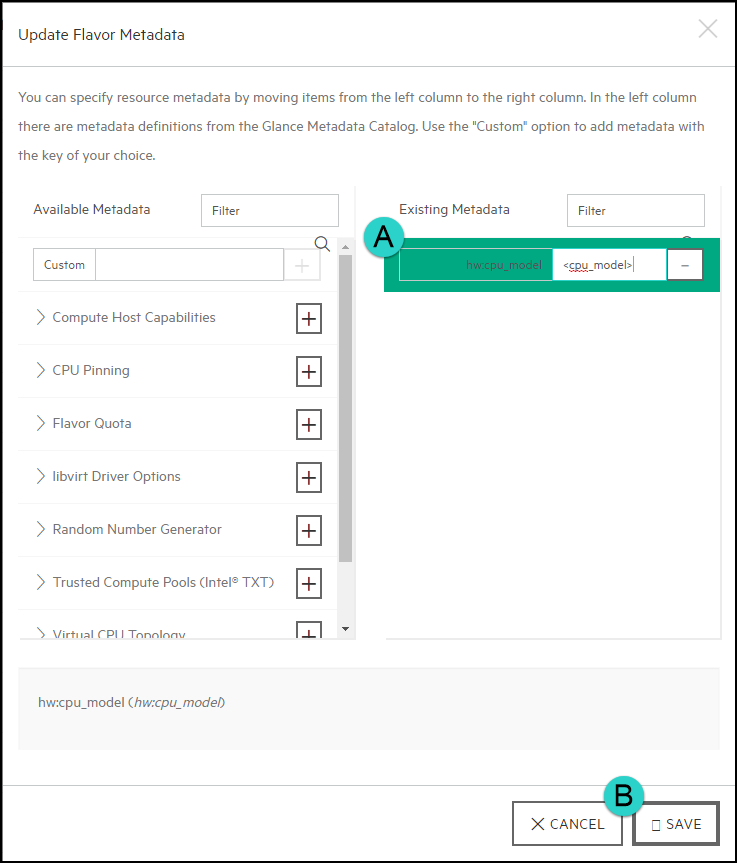

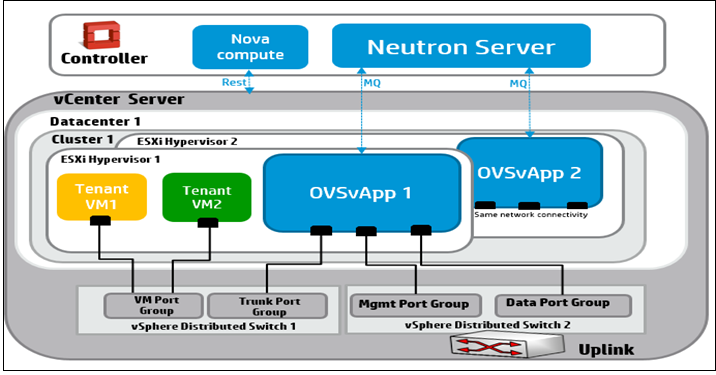

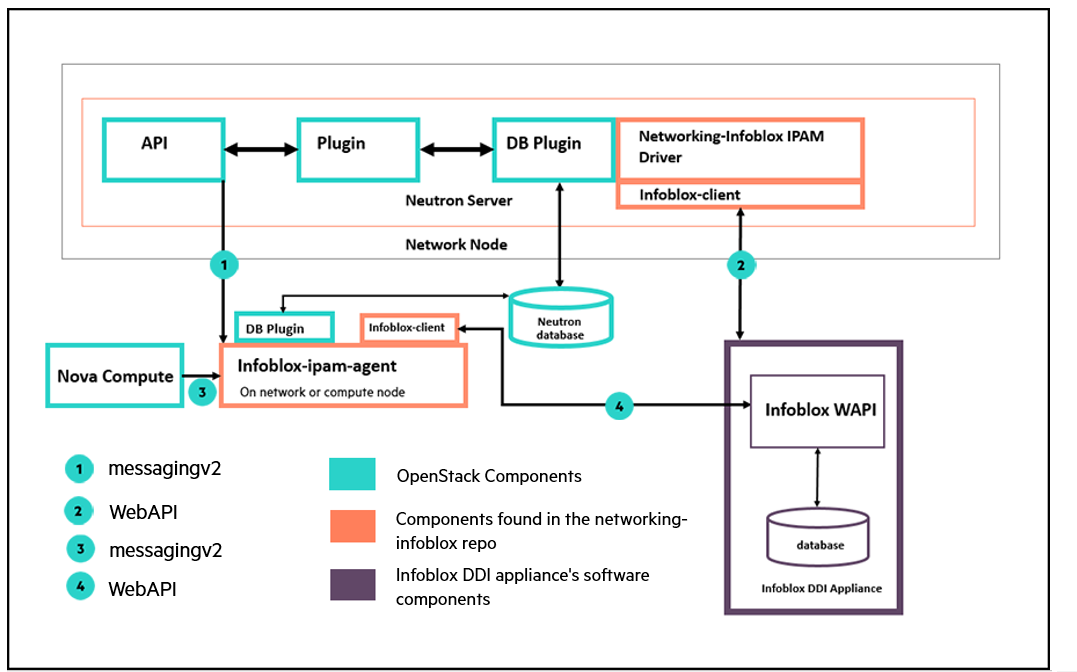

- 7.1 Networking for ESXi Hypervisor (OVSvApp)

- 7.2 Validating the neutron Installation

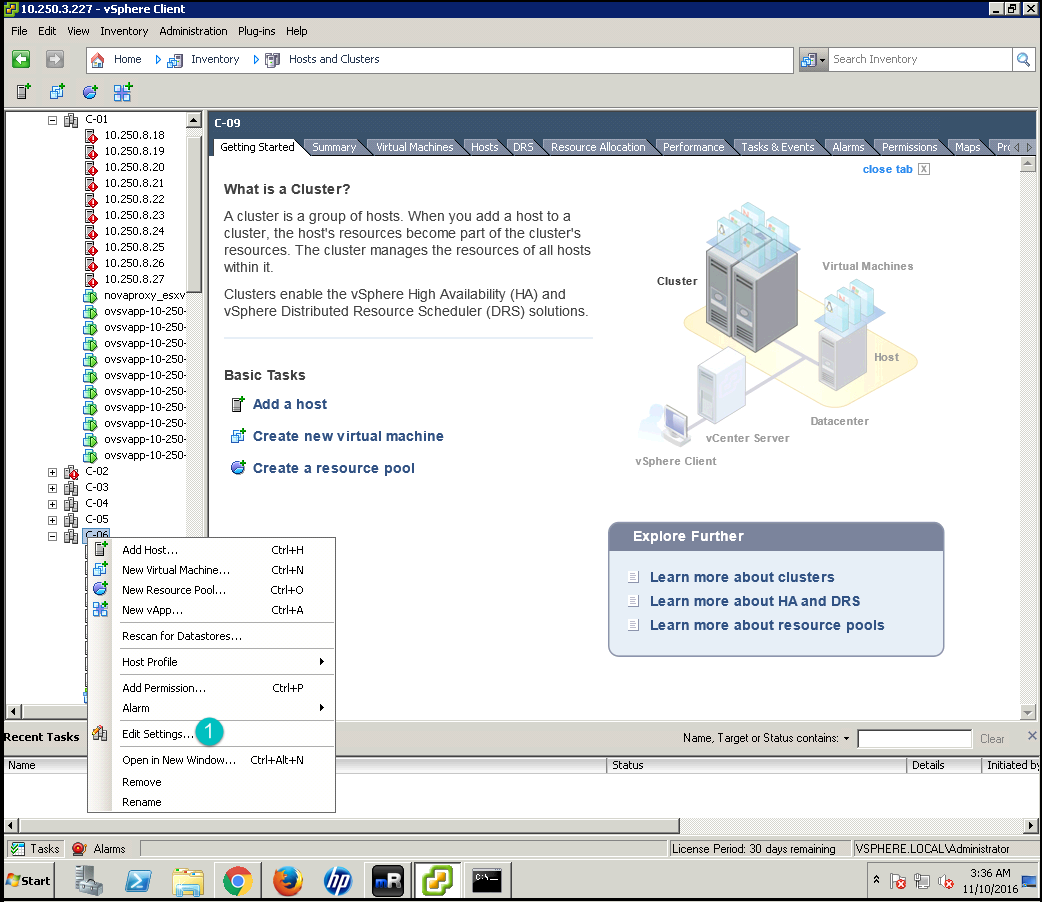

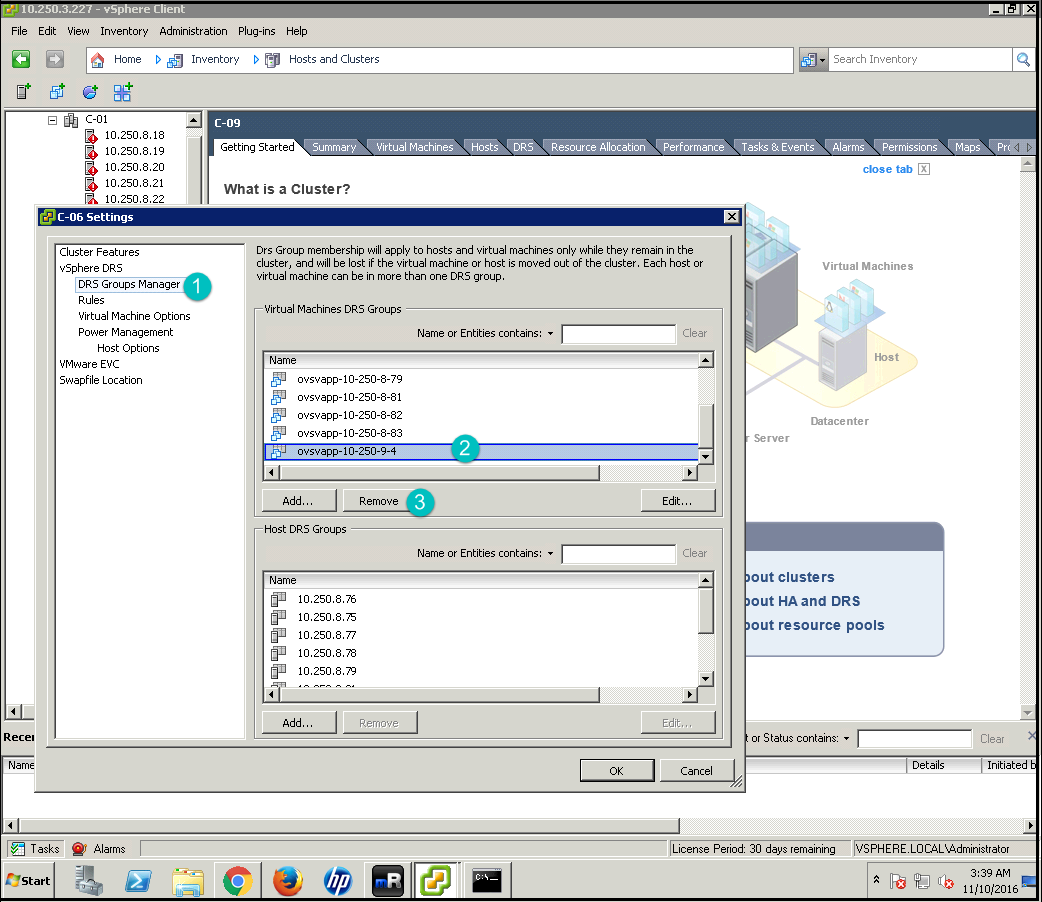

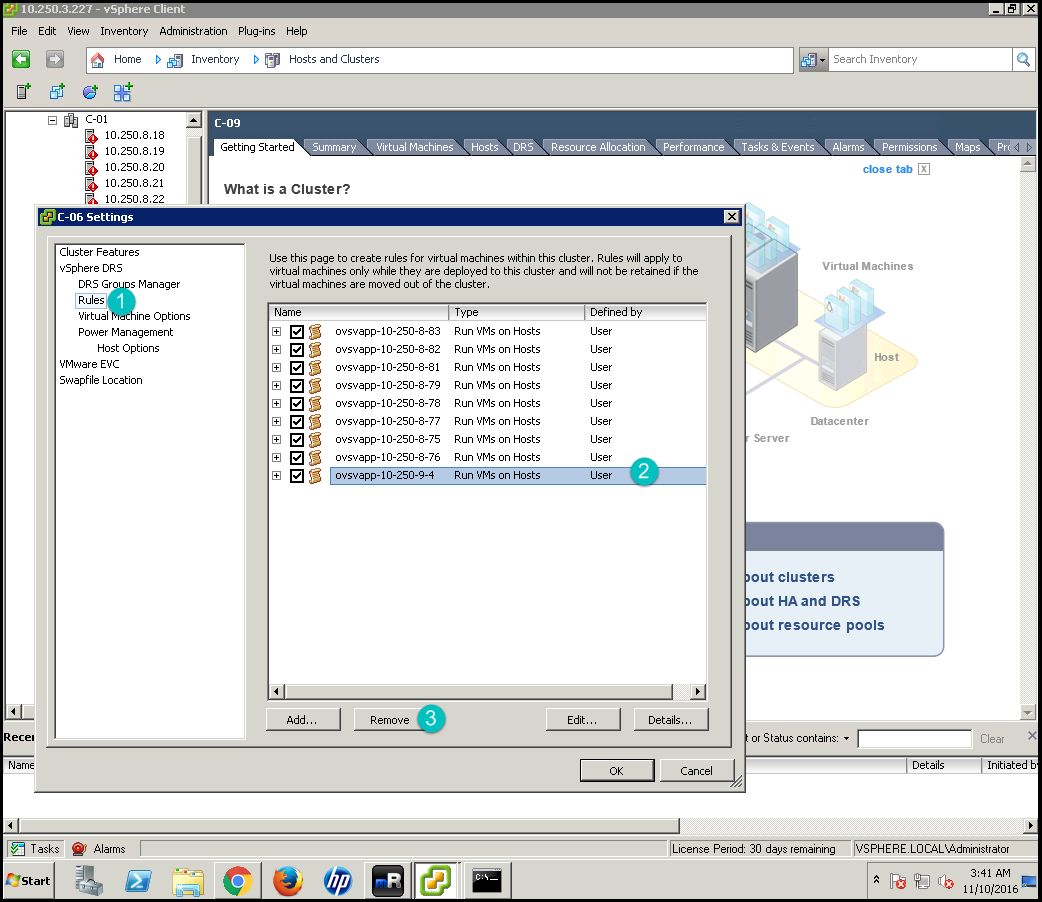

- 7.3 Removing a Cluster from the Compute Resource Pool

- 7.4 Removing an ESXi Host from a Cluster

- 7.5 Configuring Debug Logging

- 7.6 Making Scale Configuration Changes

- 7.7 Monitoring vCenter Clusters

- 7.8 Monitoring Integration with OVSvApp Appliance

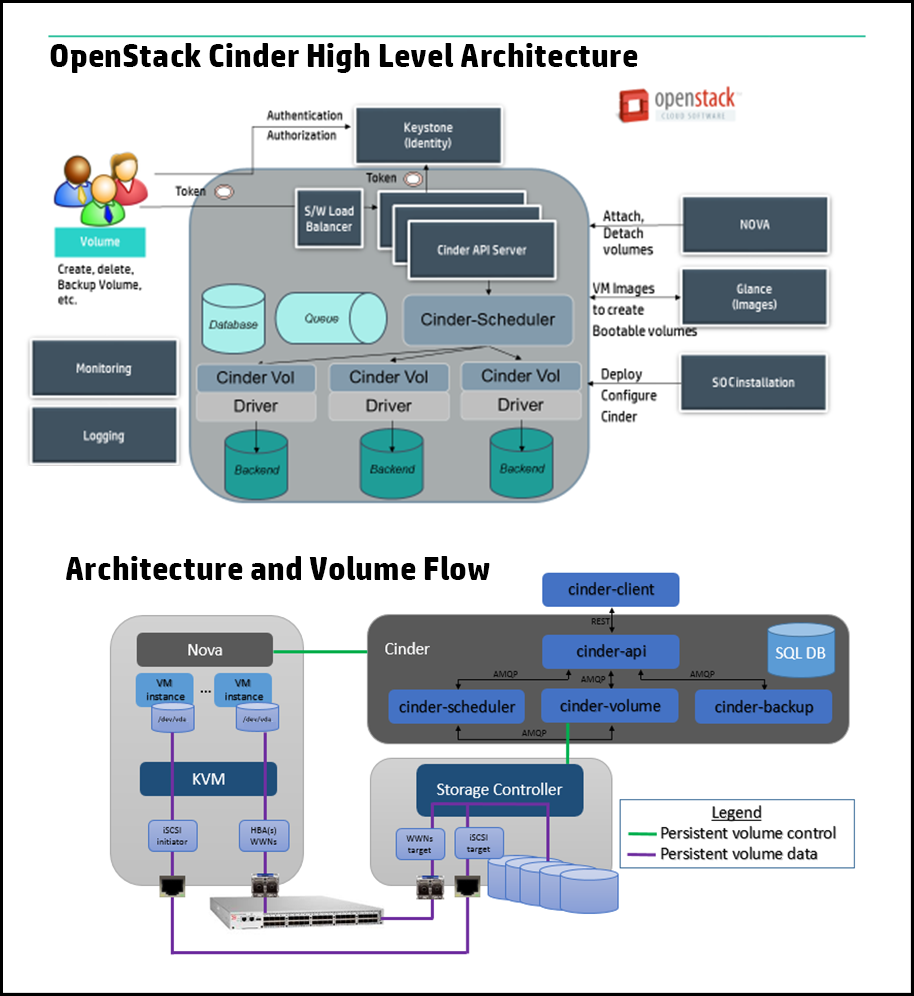

- 8 Managing Block Storage

- 9 Managing Object Storage

- 10 Managing Networking

- 11 Managing the Dashboard

- 12 Managing Orchestration

- 13 Managing Monitoring, Logging, and Usage Reporting

- 14 Managing Container as a Service (Magnum)

- 15 System Maintenance

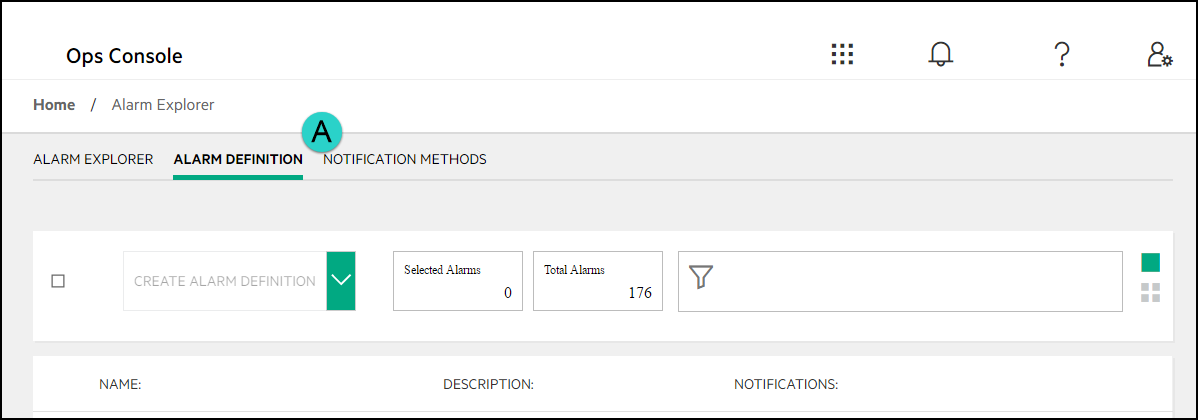

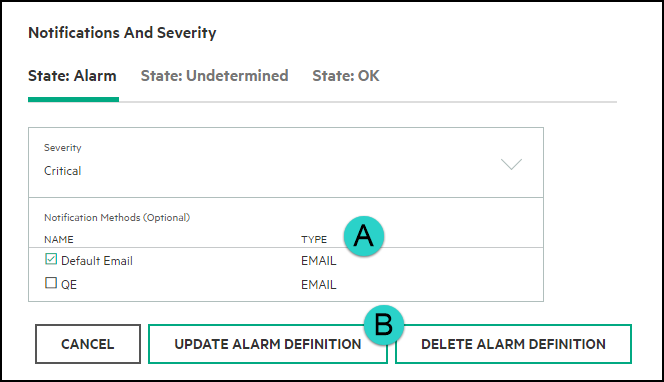

- 16 Operations Console

- 17 Backup and Restore

- 18 Troubleshooting Issues

- 18.1 General Troubleshooting

- 18.2 Control Plane Troubleshooting

- 18.3 Troubleshooting Compute service

- 18.4 Network Service Troubleshooting

- 18.5 Troubleshooting the Image (glance) Service

- 18.6 Storage Troubleshooting

- 18.7 Monitoring, Logging, and Usage Reporting Troubleshooting

- 18.8 Orchestration Troubleshooting

- 18.9 Troubleshooting Tools

- 3.1 Cloud Lifecycle Manager Admin UI Login Page

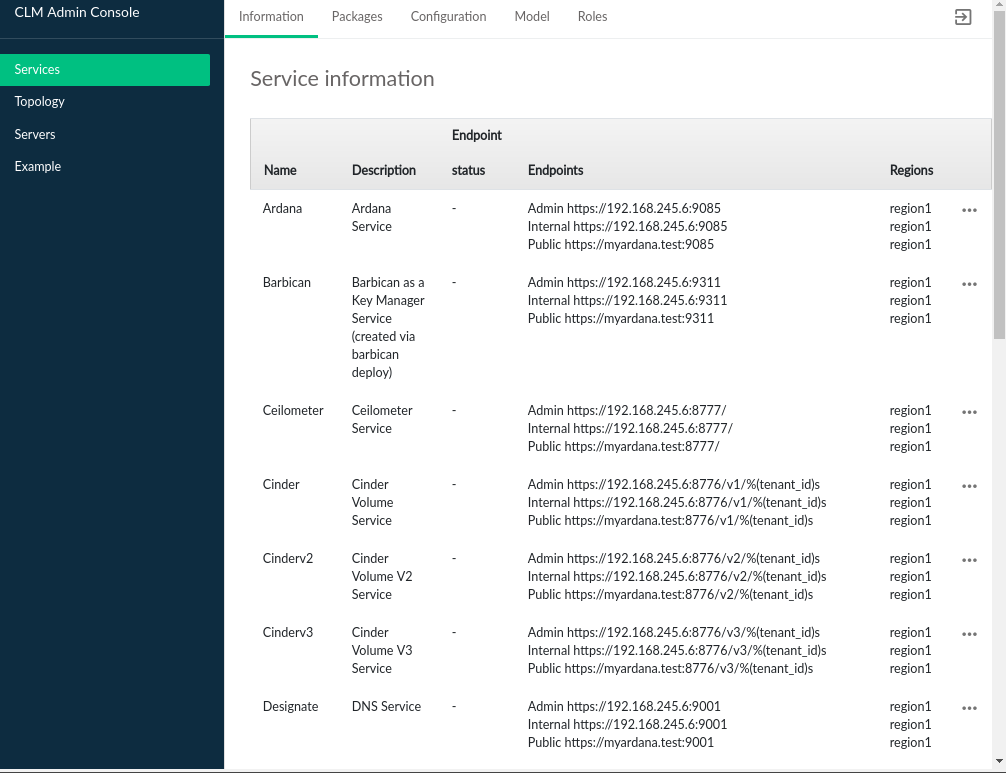

- 3.2 Cloud Lifecycle Manager Admin UI Service Information

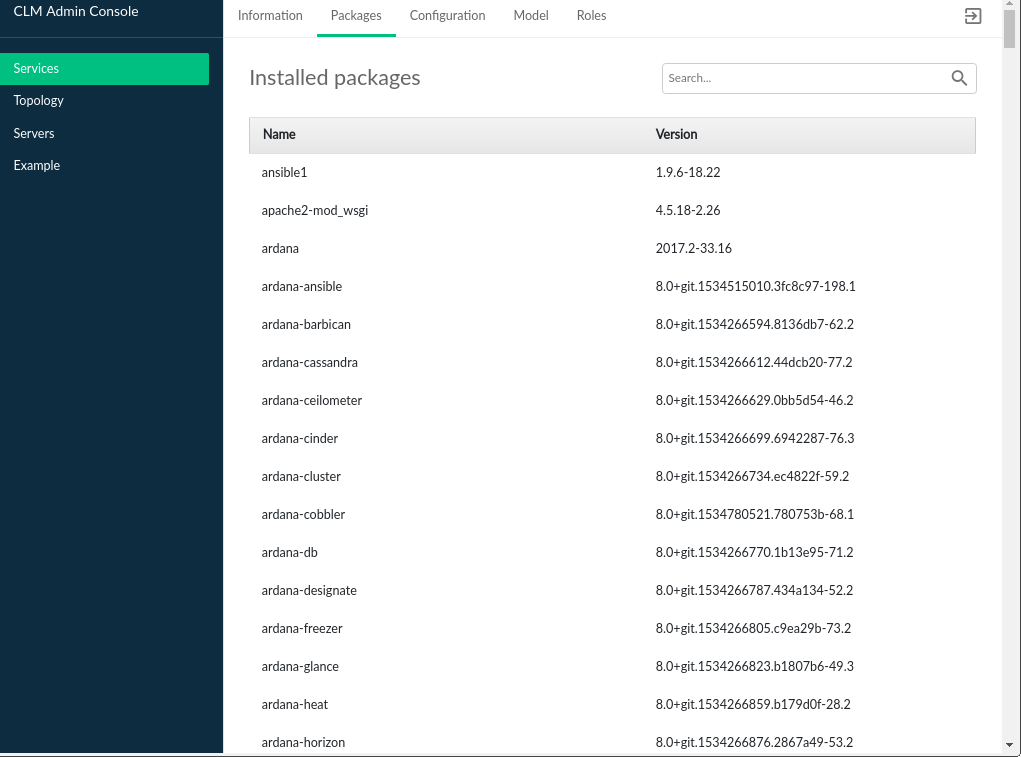

- 3.3 Cloud Lifecycle Manager Admin UI SUSE Cloud Package

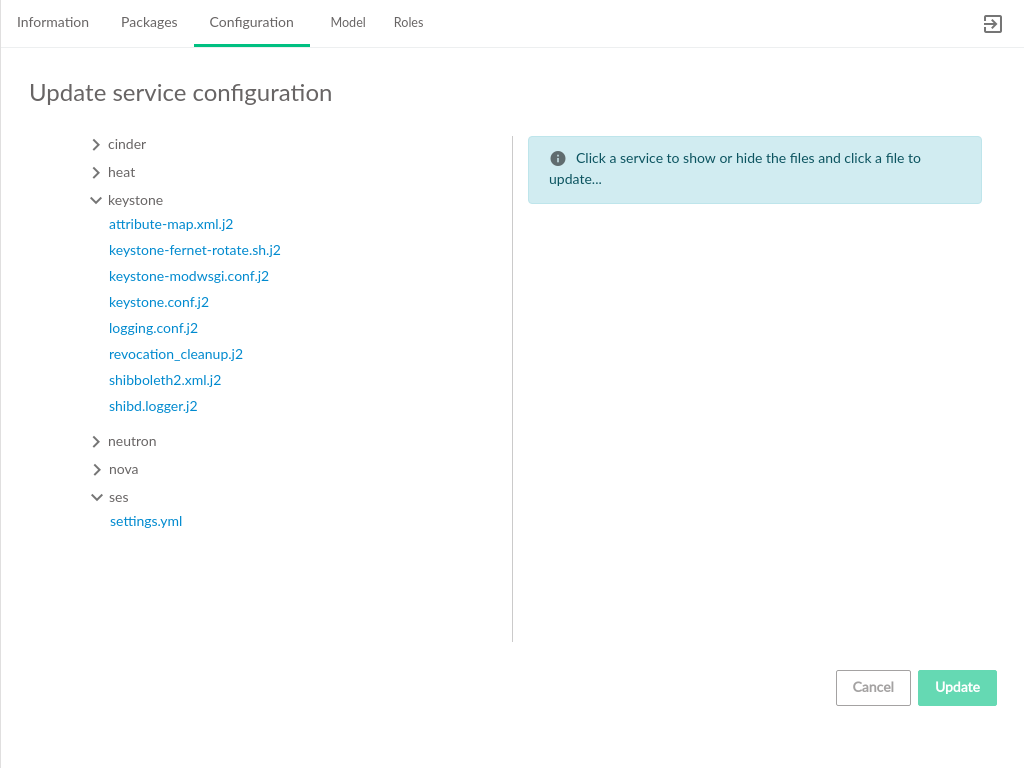

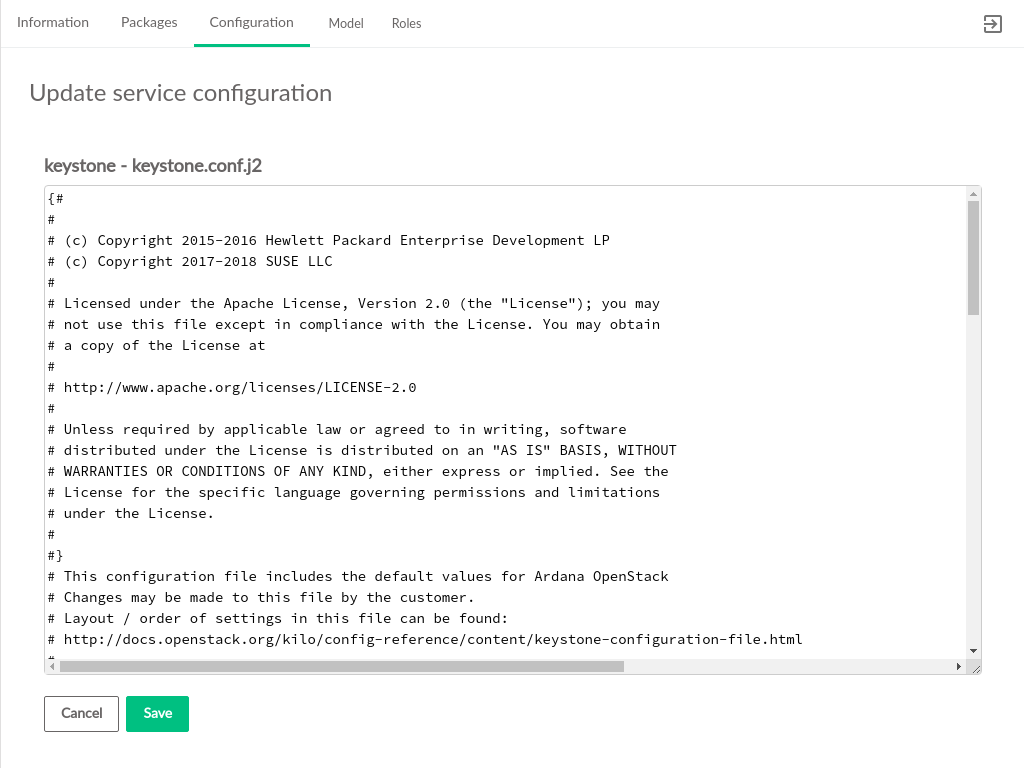

- 3.4 Cloud Lifecycle Manager Admin UI SUSE Service Configuration

- 3.5 Cloud Lifecycle Manager Admin UI SUSE Service Configuration Editor

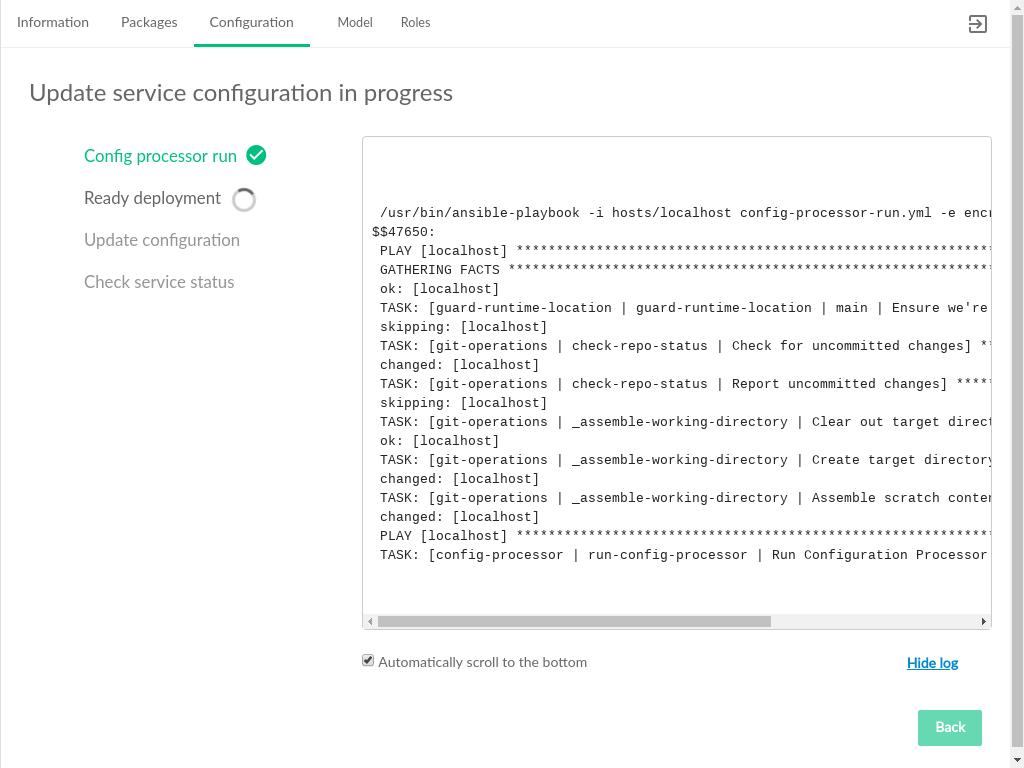

- 3.6 Cloud Lifecycle Manager Admin UI SUSE Service Configuration Update

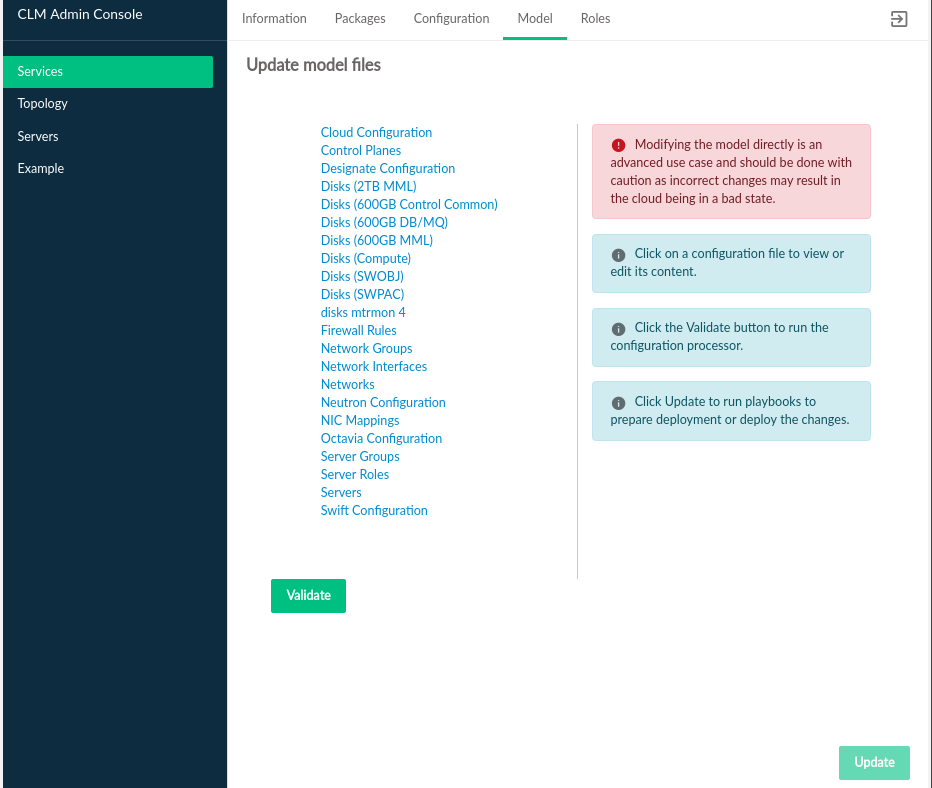

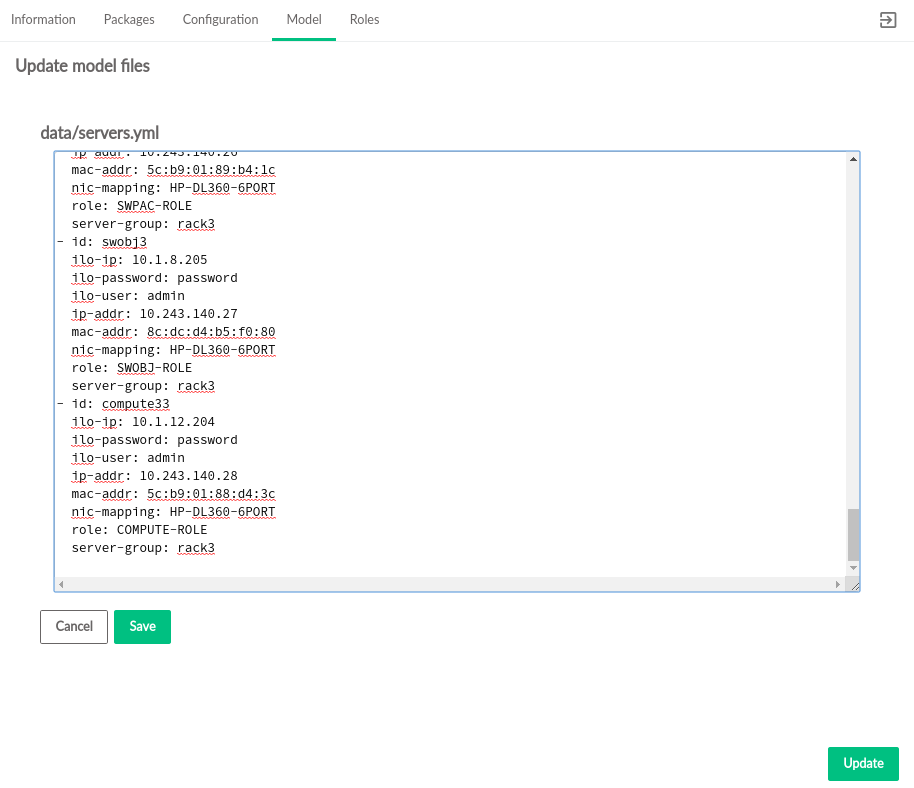

- 3.7 Cloud Lifecycle Manager Admin UI SUSE Service Model

- 3.8 Cloud Lifecycle Manager Admin UI SUSE Service Model Editor

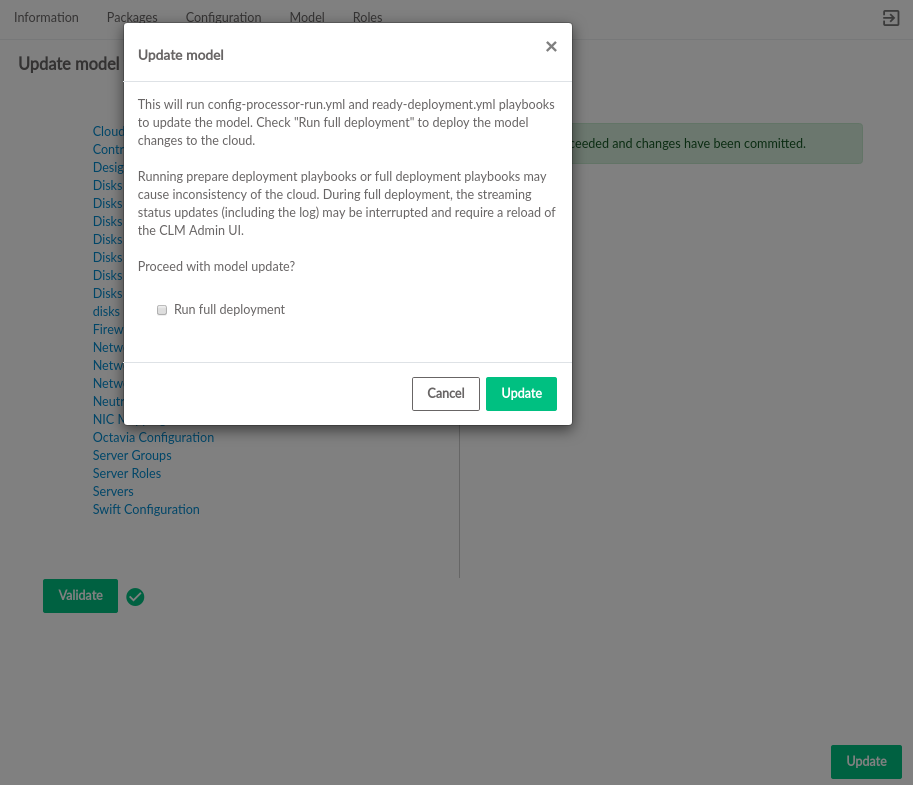

- 3.9 Cloud Lifecycle Manager Admin UI SUSE Service Model Confirmation

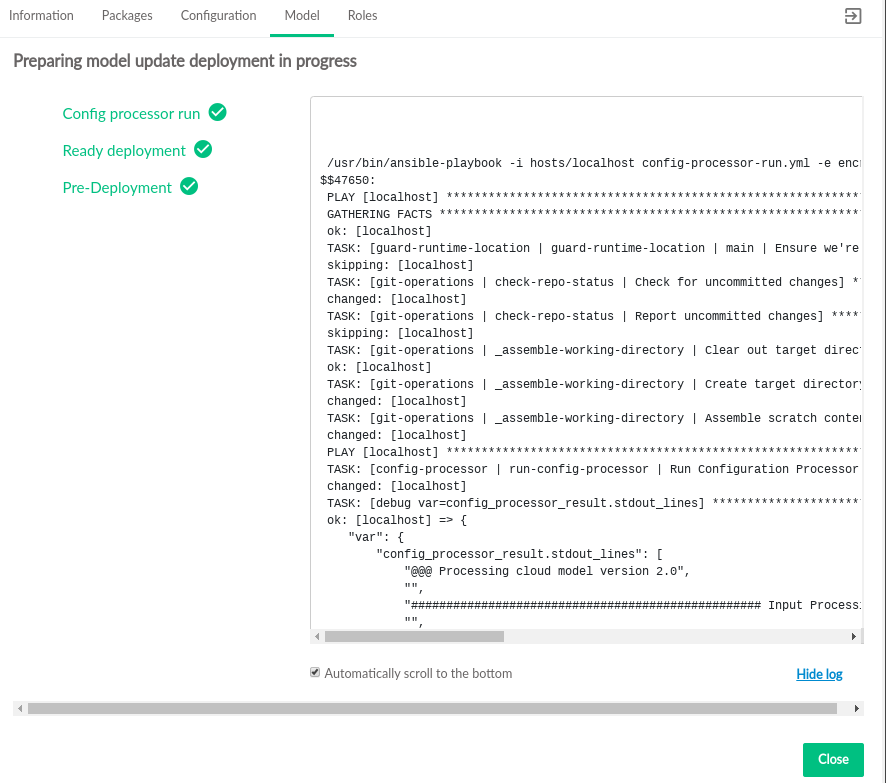

- 3.10 Cloud Lifecycle Manager Admin UI SUSE Service Model Update

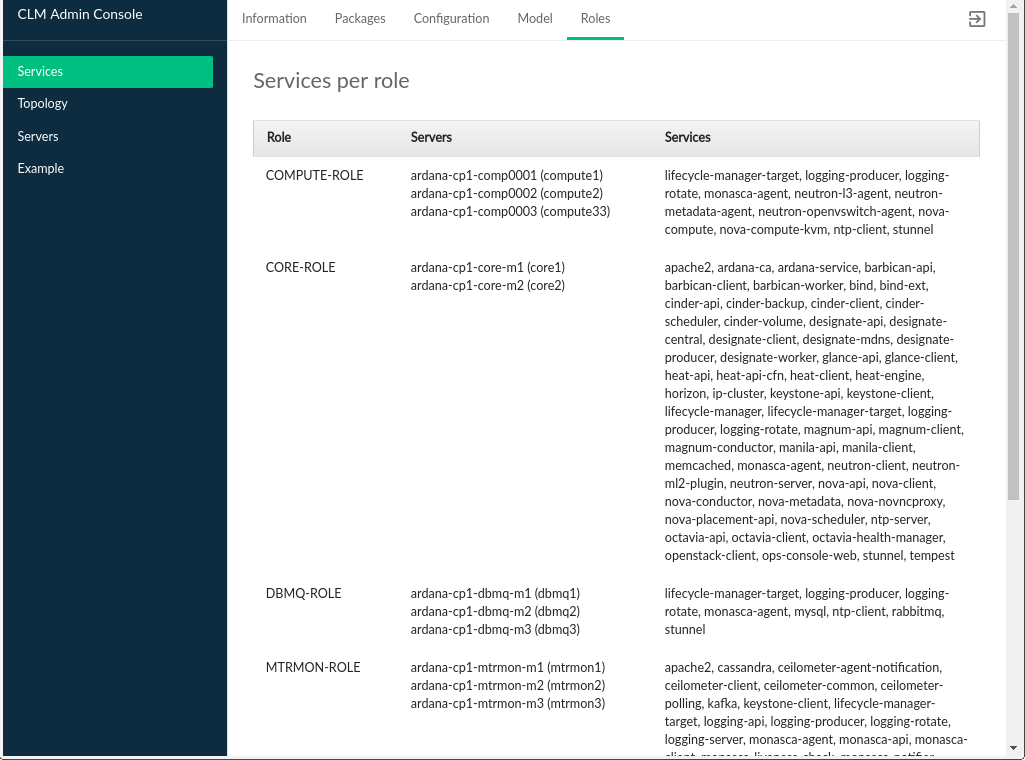

- 3.11 Cloud Lifecycle Manager Admin UI Services Per Role

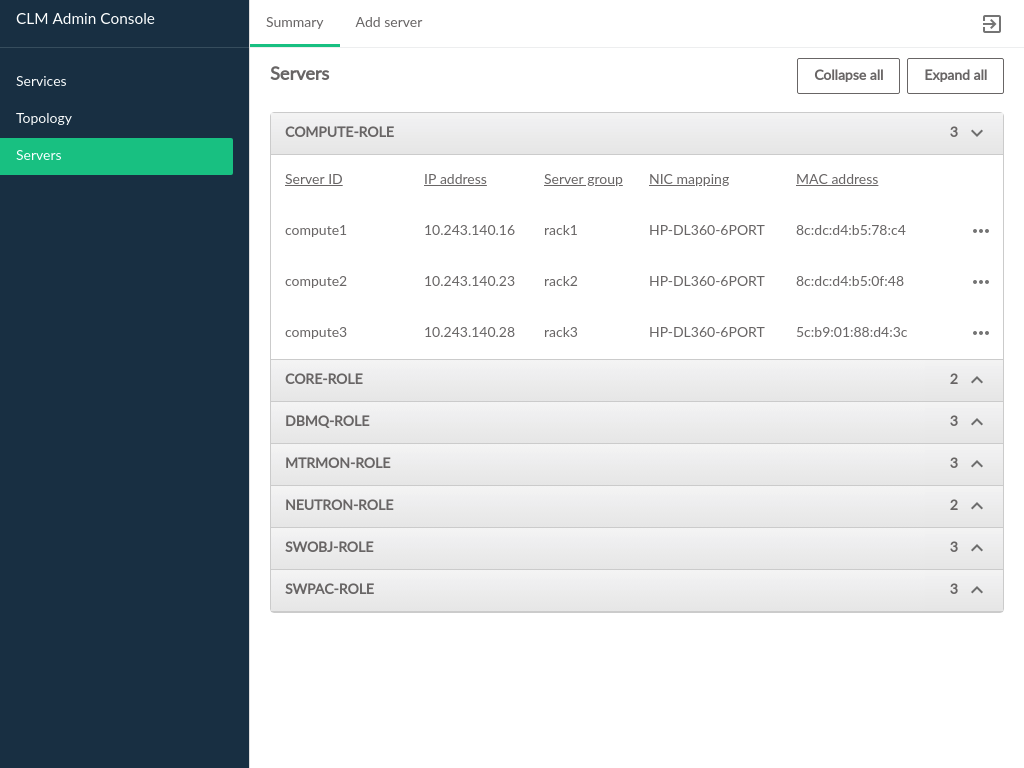

- 3.12 Cloud Lifecycle Manager Admin UI Server Summary

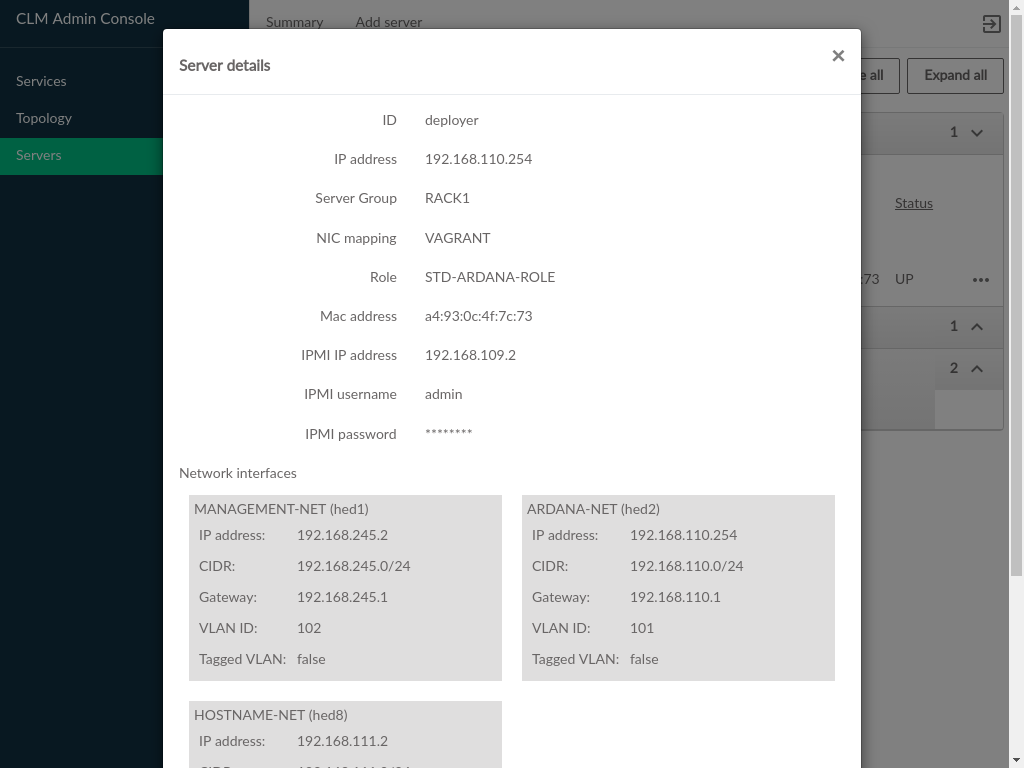

- 3.13 Server Details (1/2)

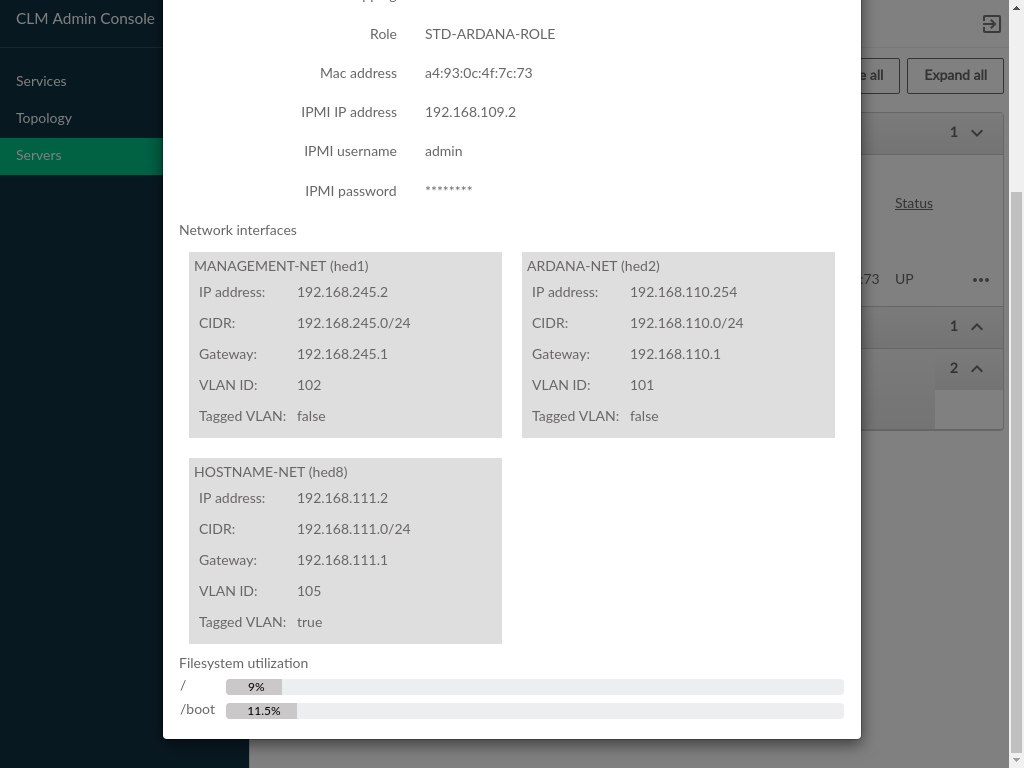

- 3.14 Server Details (2/2)

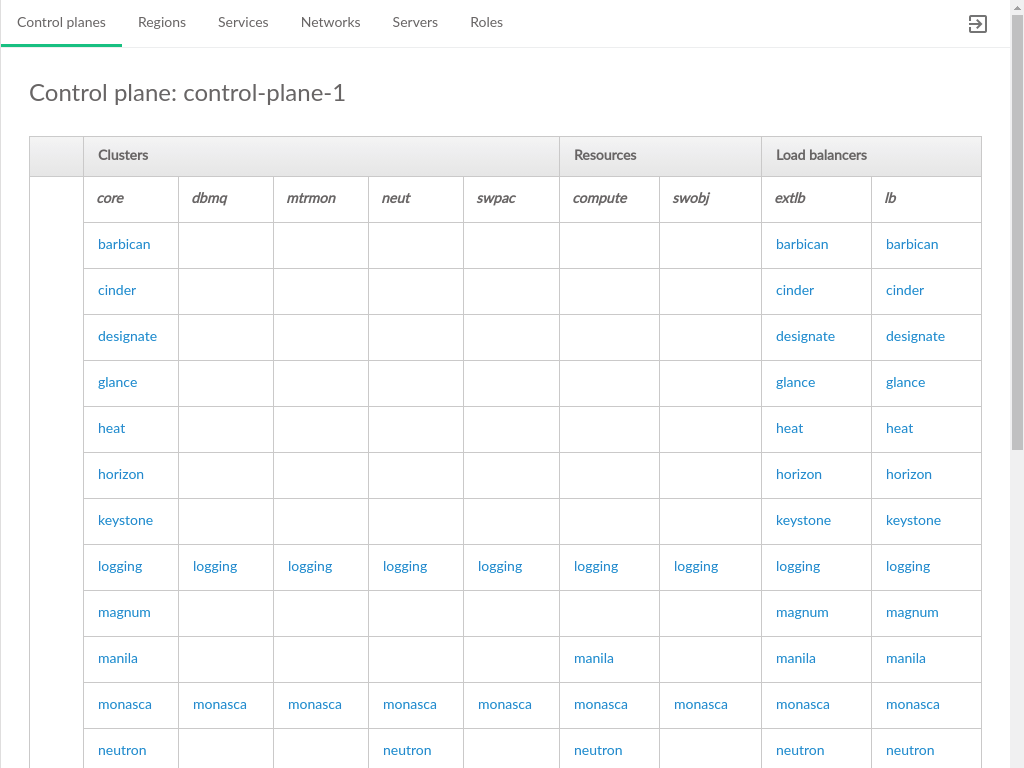

- 3.15 Control Plane Topology

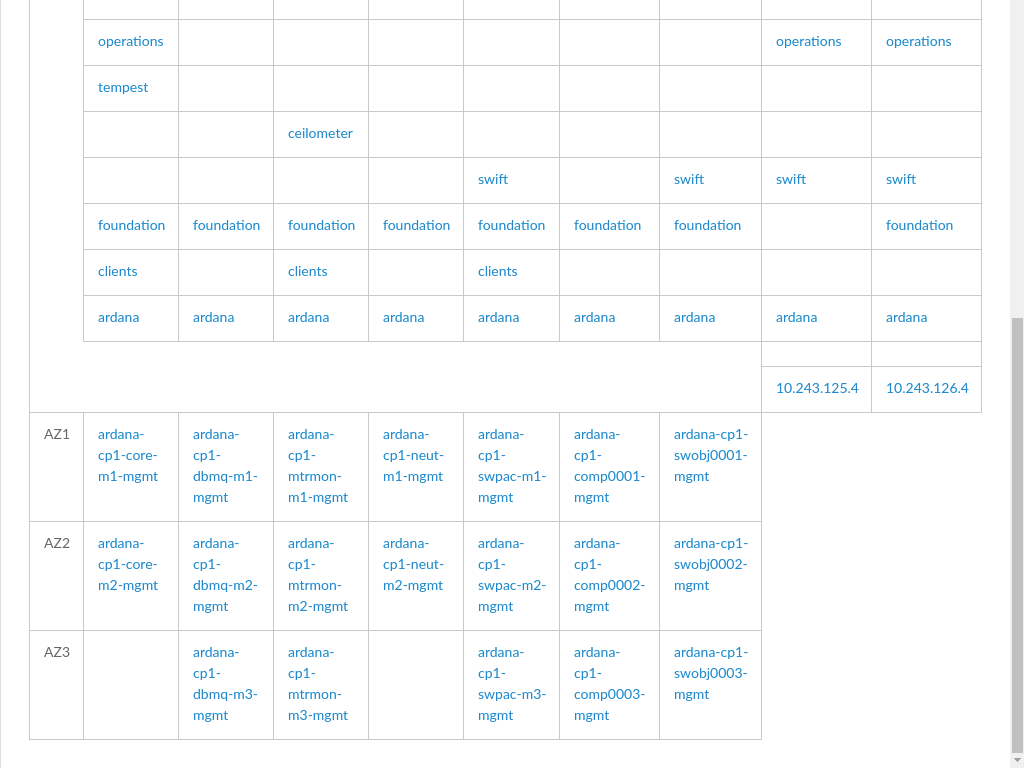

- 3.16 Control Plane Topology - Availability Zones

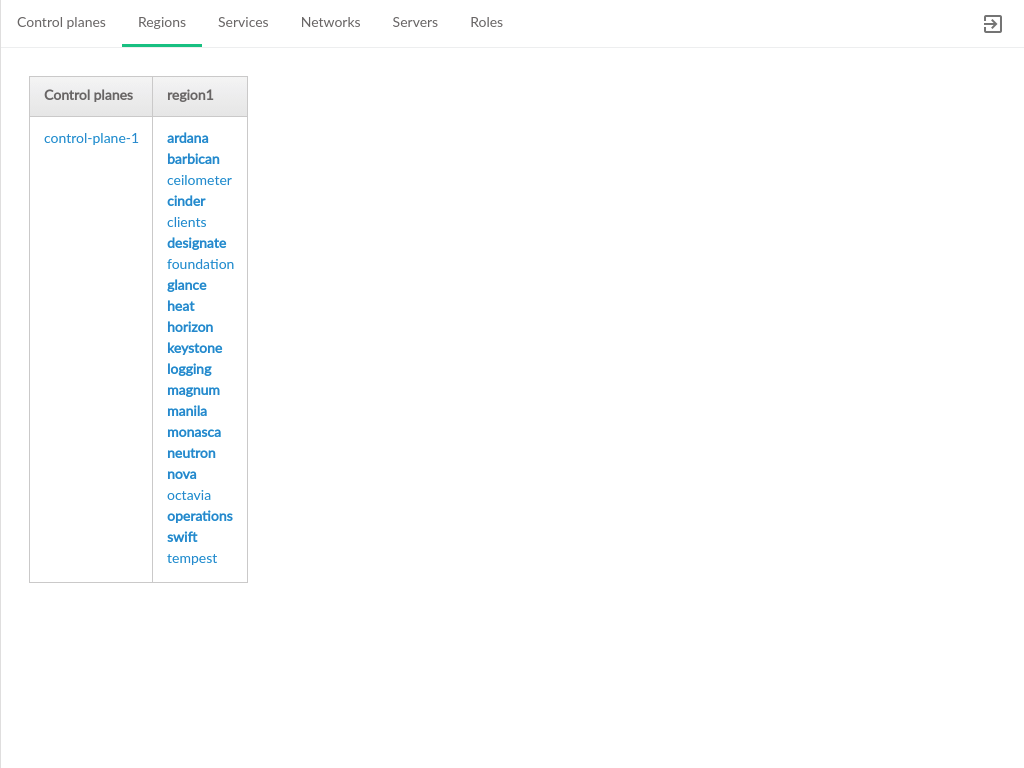

- 3.17 Regions Topology

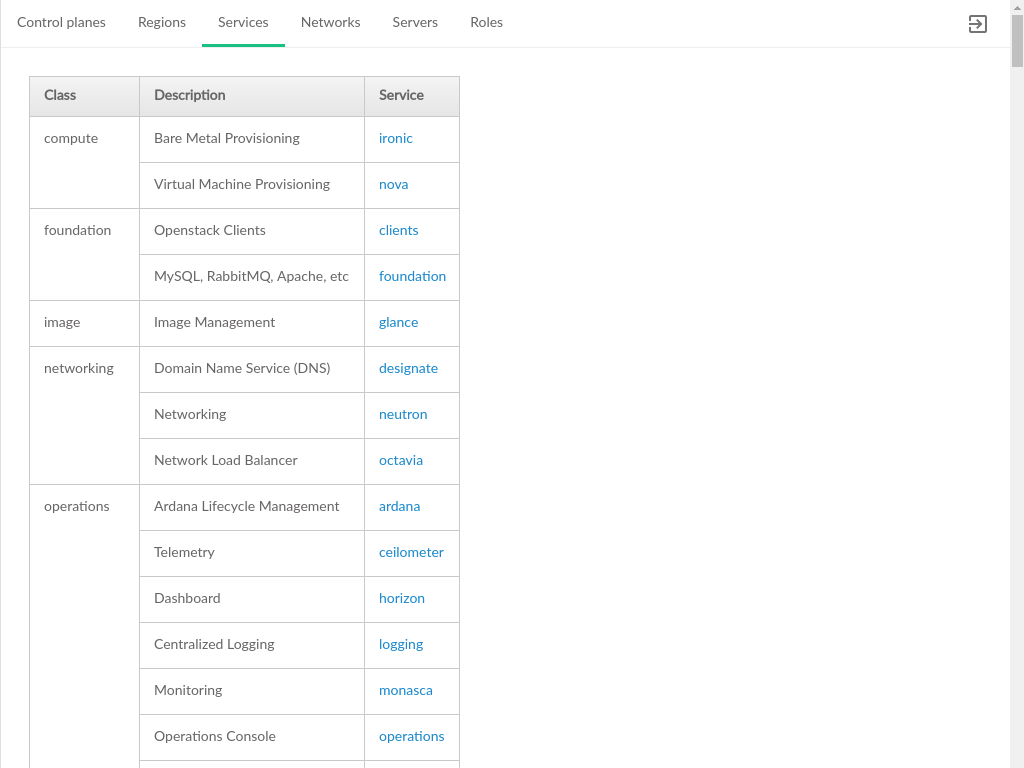

- 3.18 Services Topology

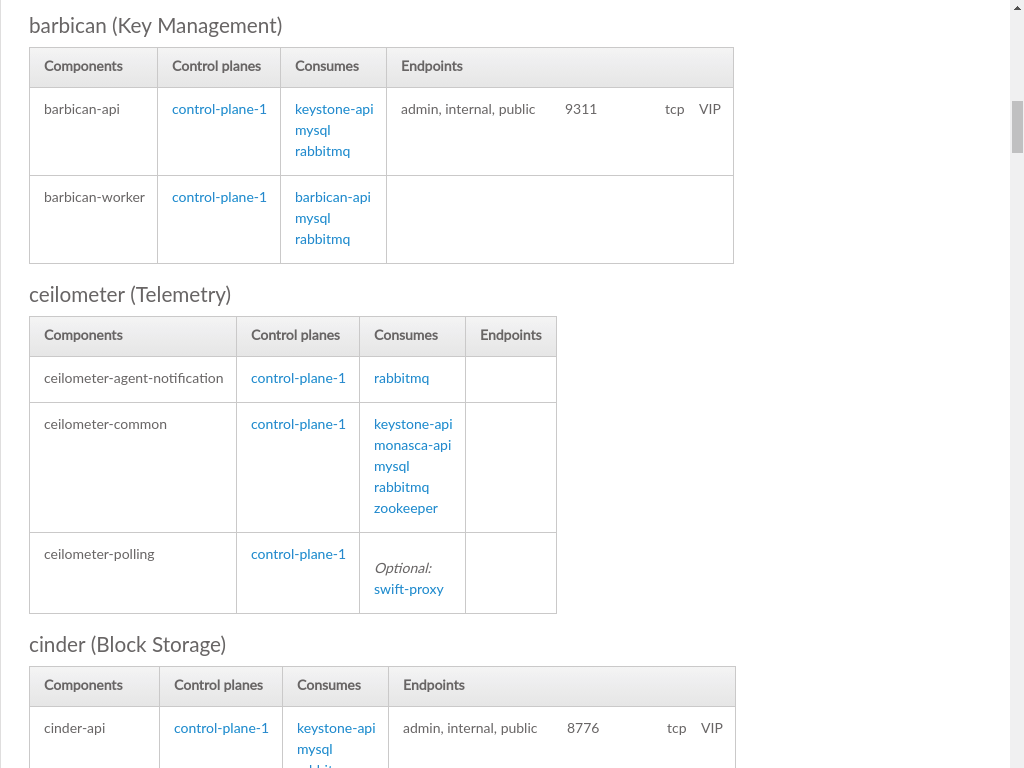

- 3.19 Service Details Topology

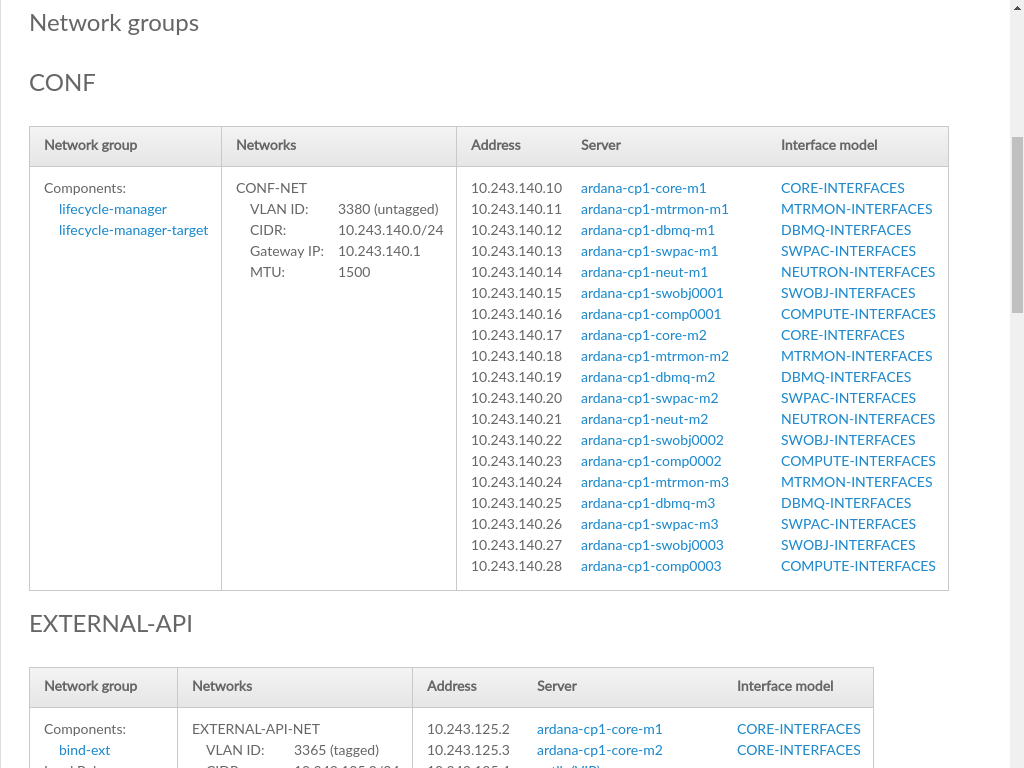

- 3.20 Networks Topology

- 3.21 Network Groups Topology

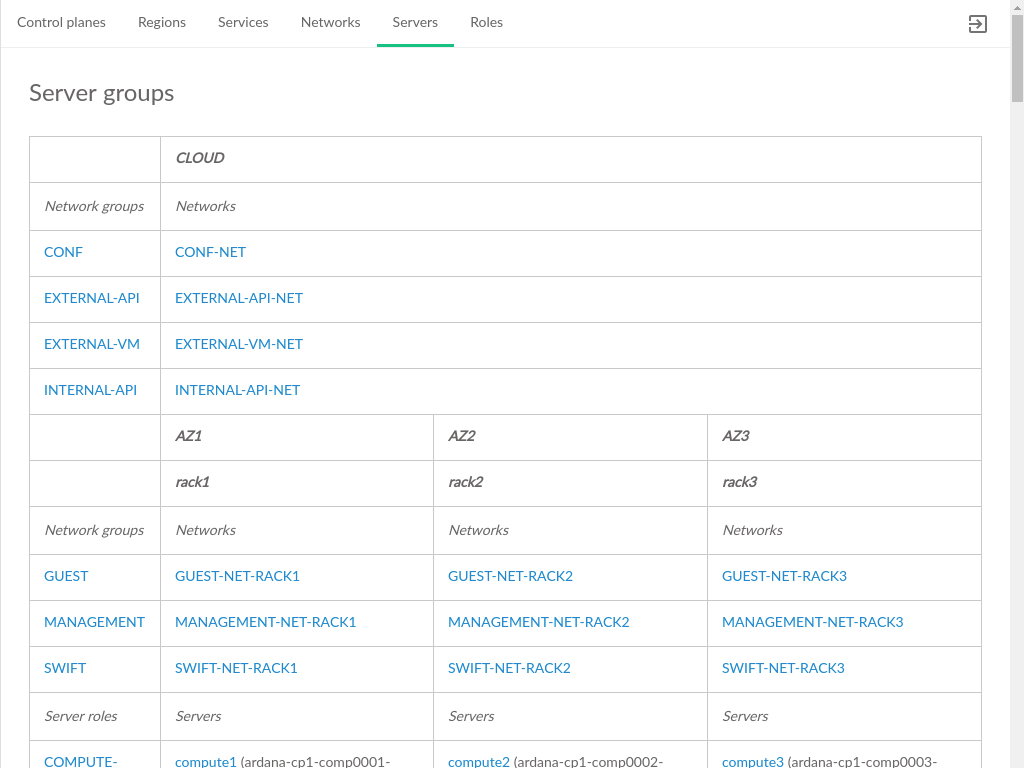

- 3.22 Server Groups Topology

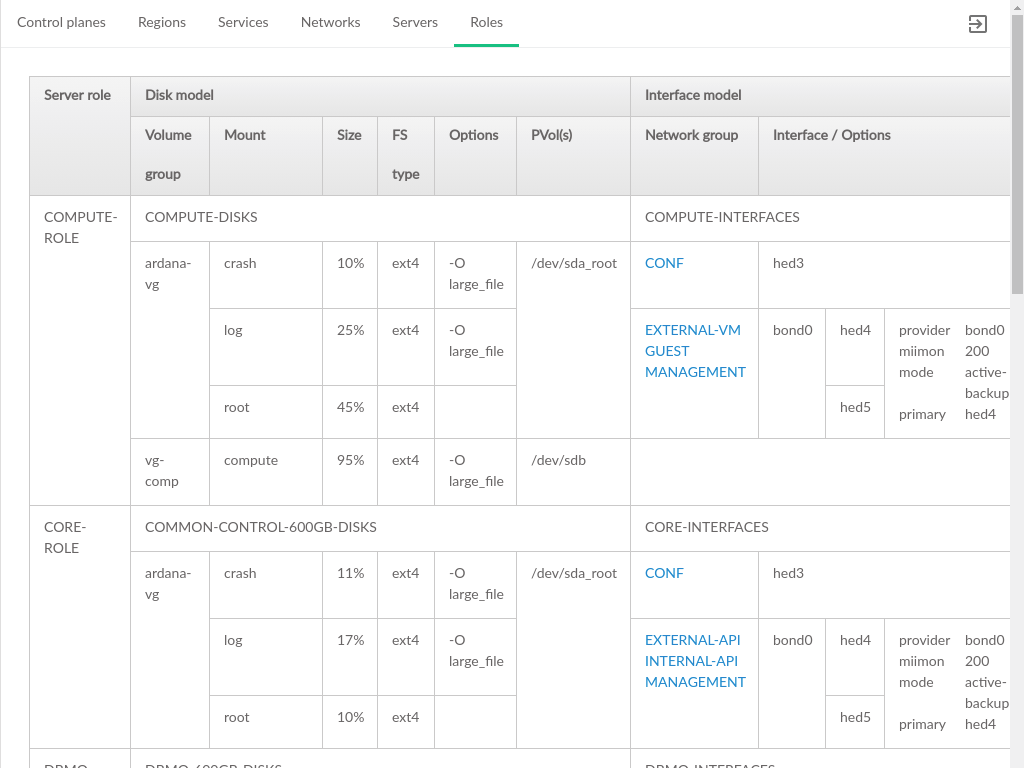

- 3.23 Roles Topology

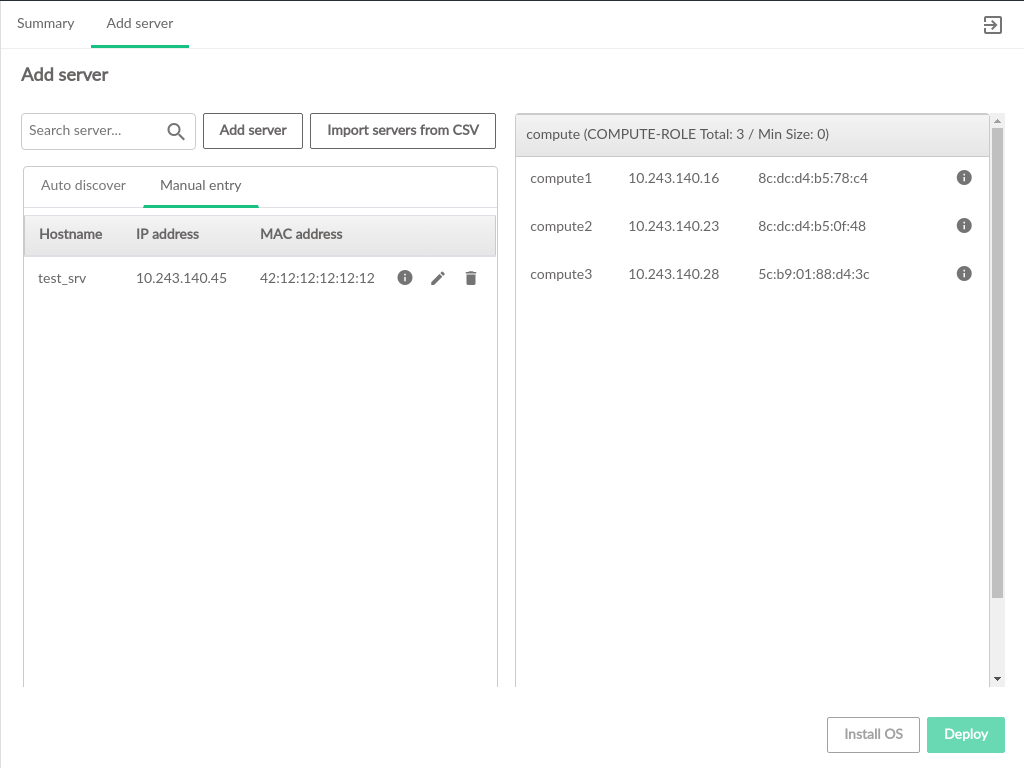

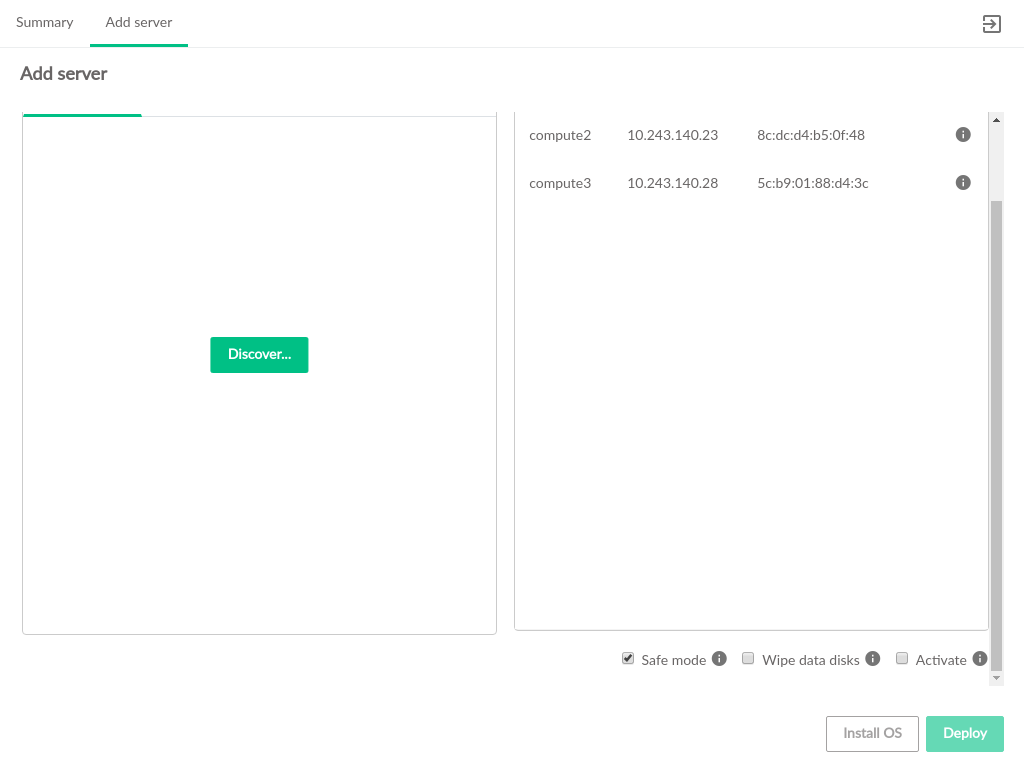

- 3.24 Add Server Overview

- 3.25 Manually Add Server

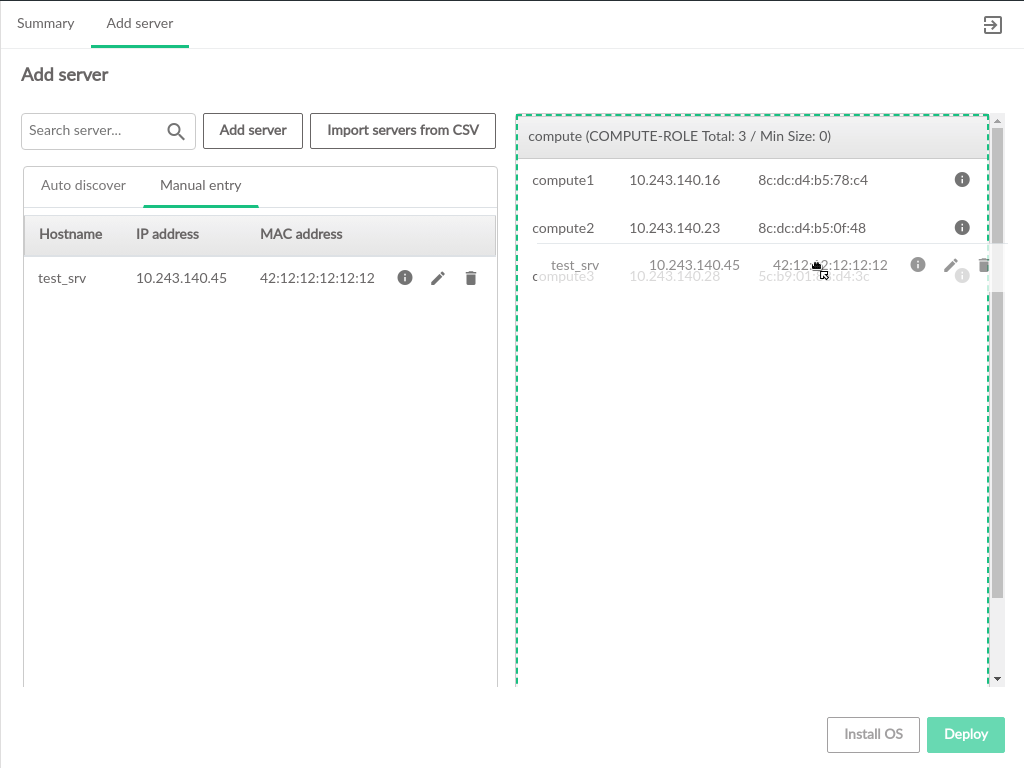

- 3.26 Manually Add Server

- 3.27 Add Server Settings options

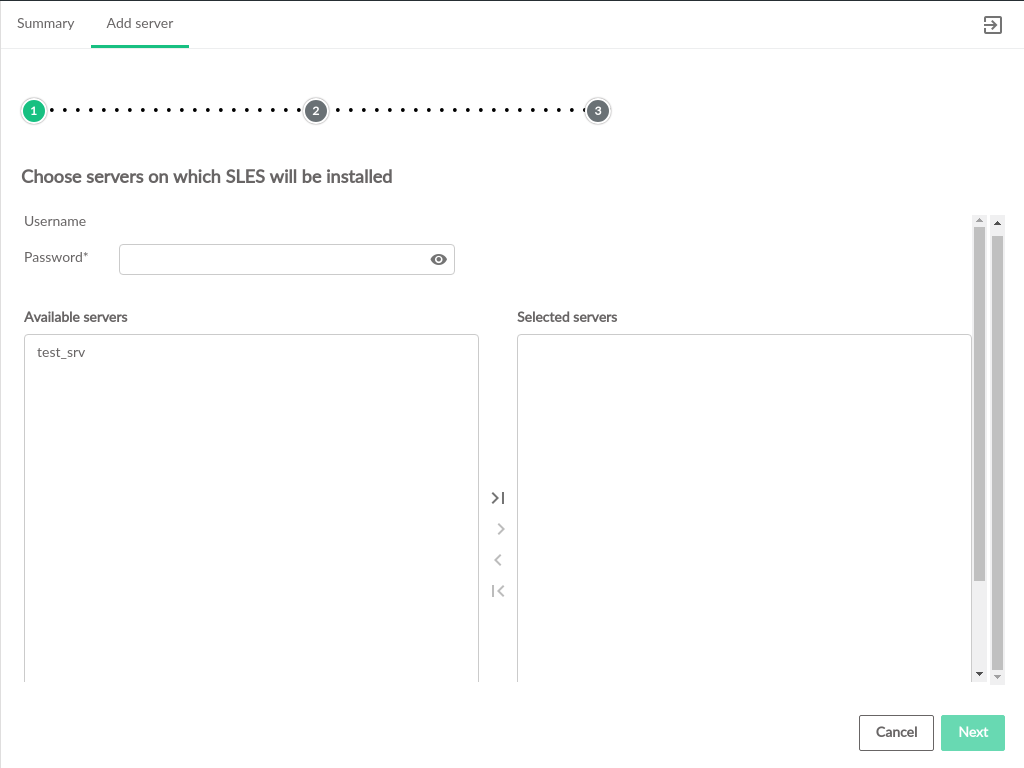

- 3.28 Select Servers to Provision OS

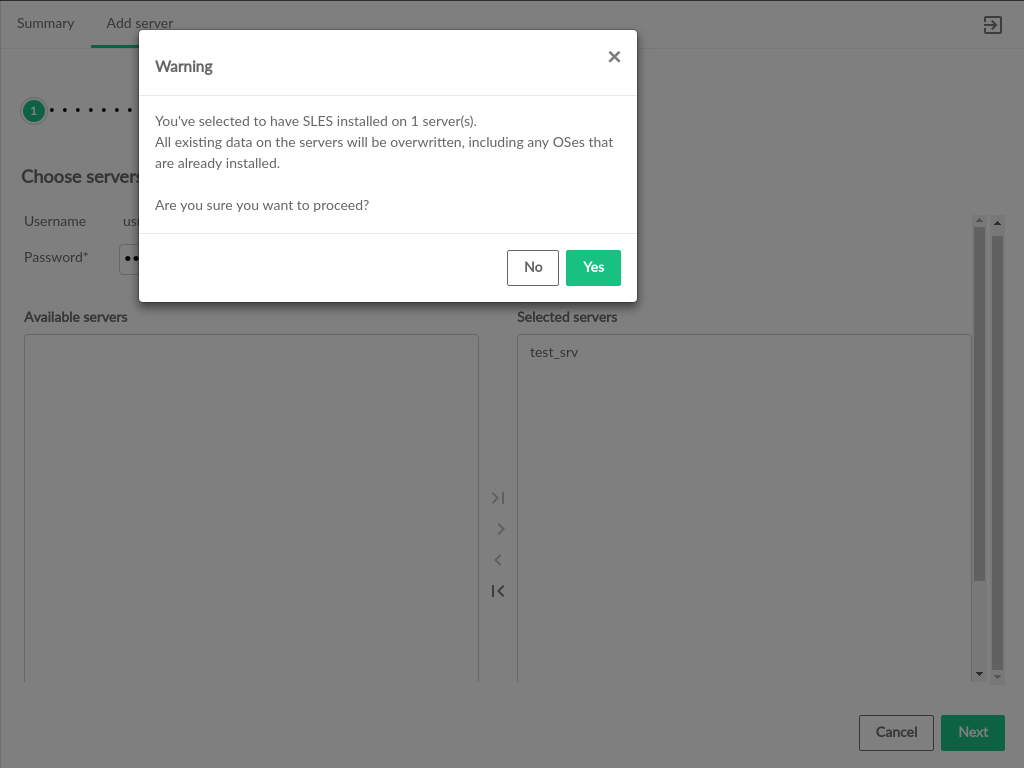

- 3.29 Confirm Provision OS

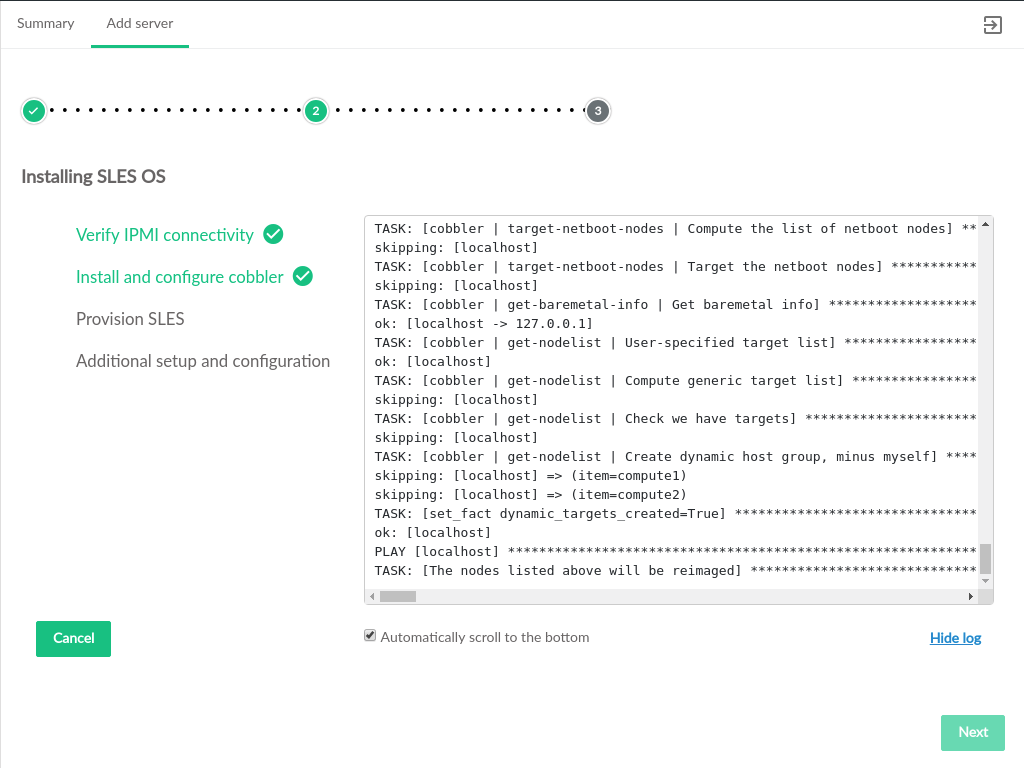

- 3.30 OS Install Progress

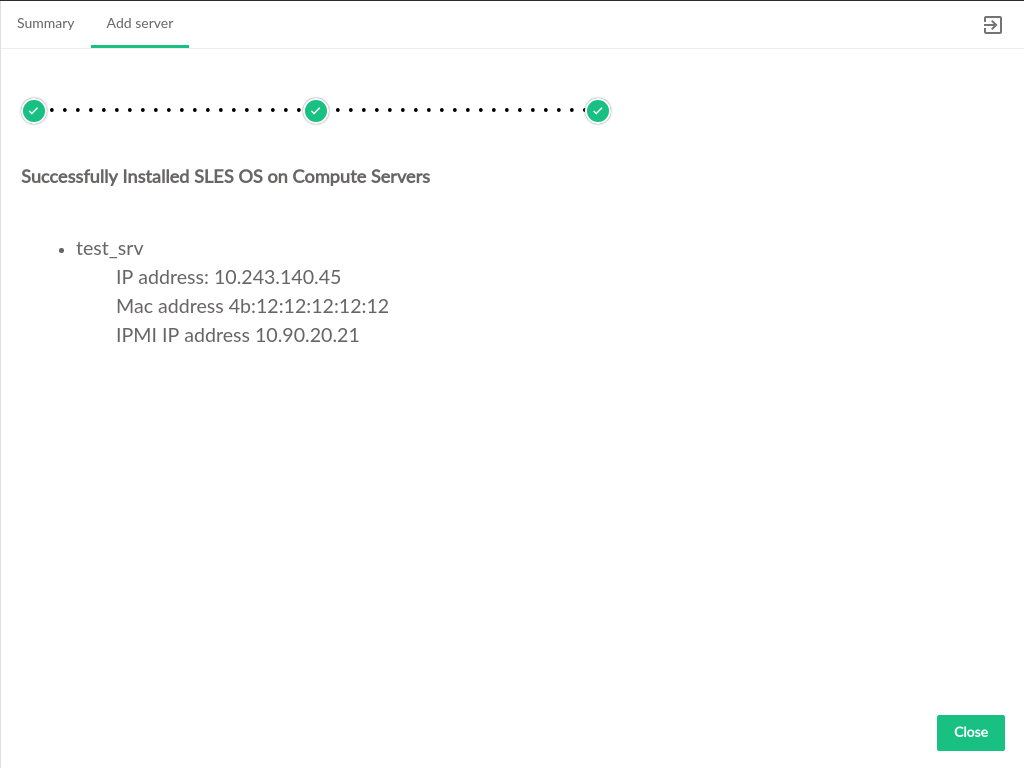

- 3.31 OS Install Summary

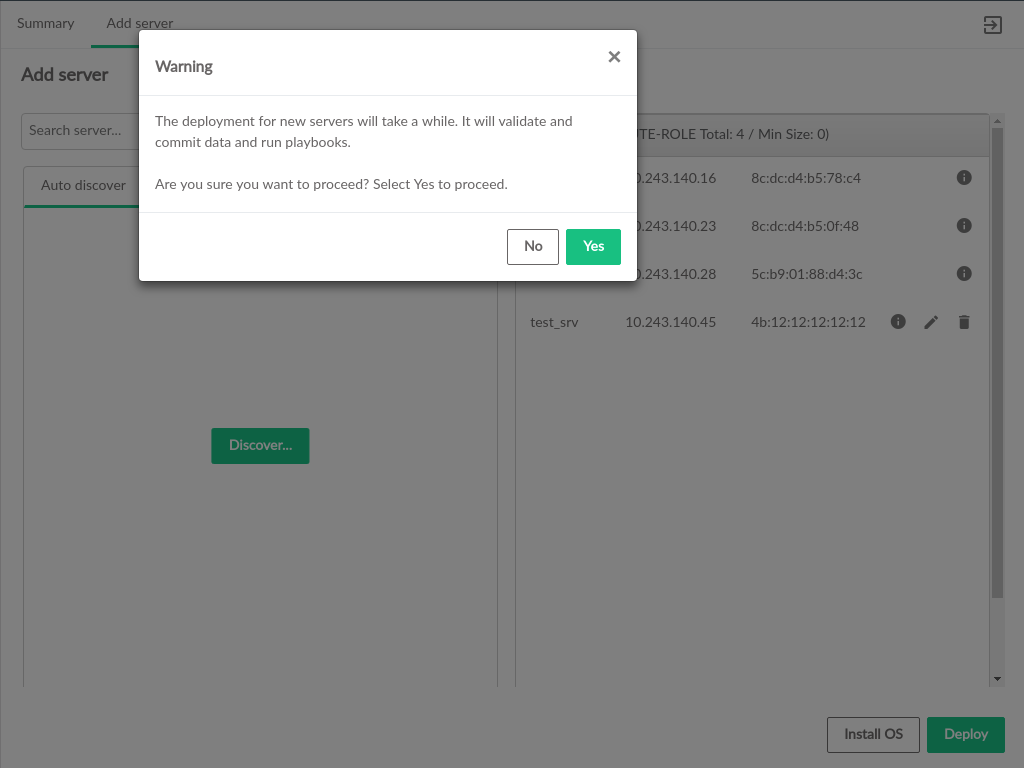

- 3.32 Confirm Deploy Servers

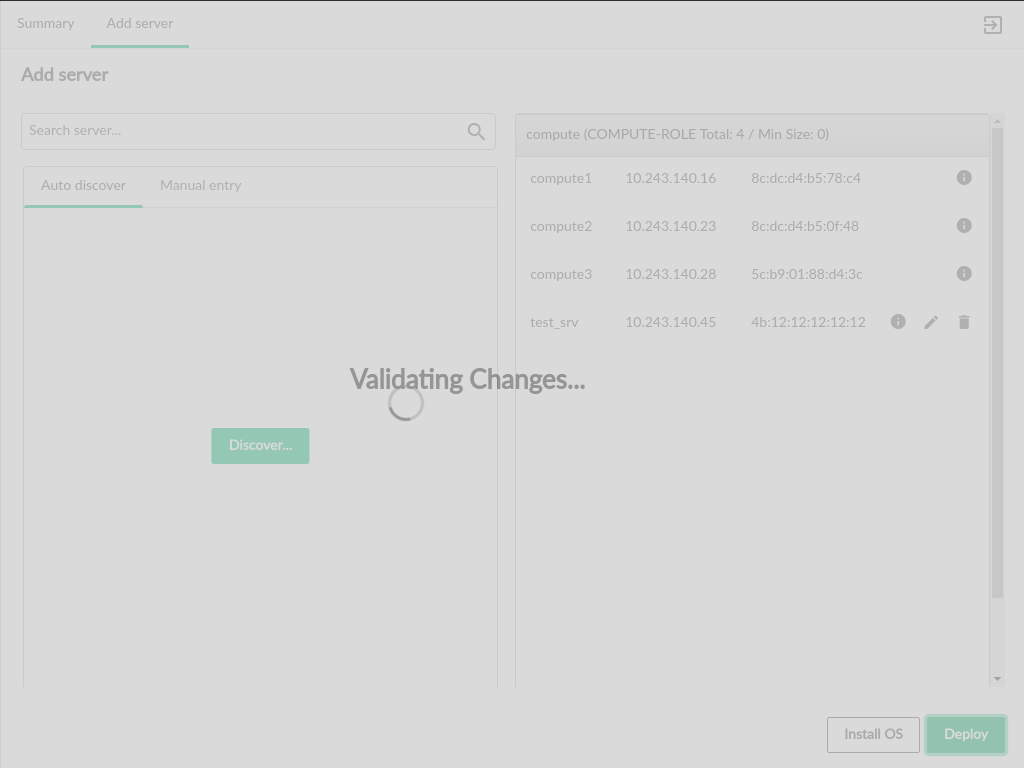

- 3.33 Validate Server Changes

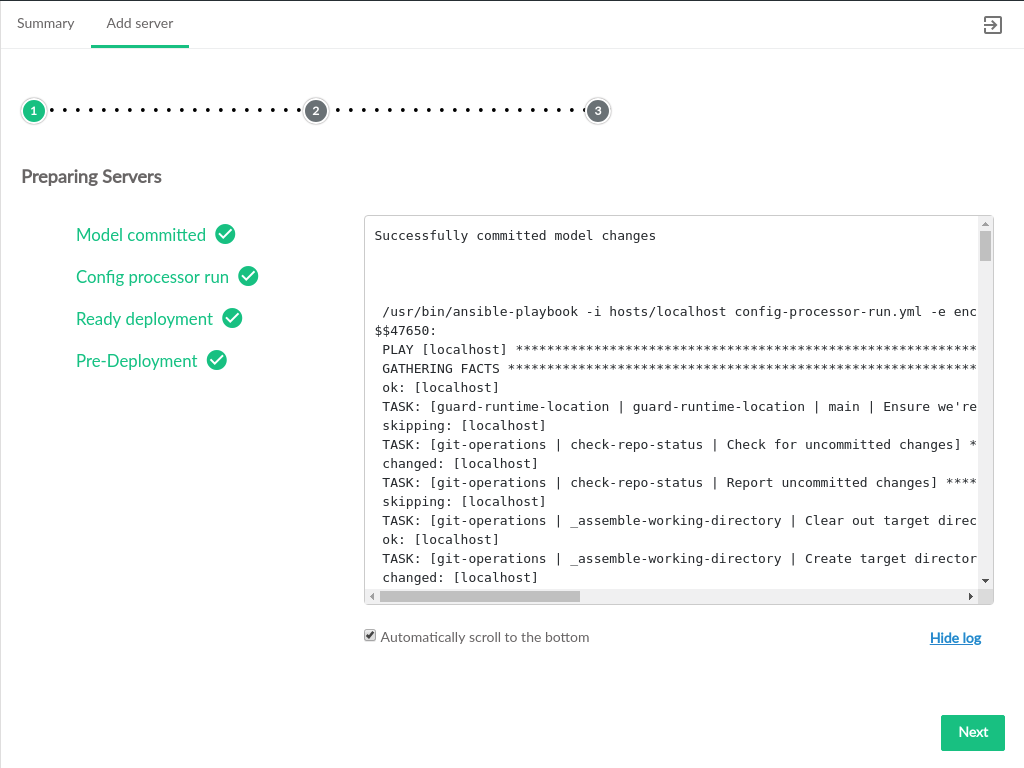

- 3.34 Prepare Servers

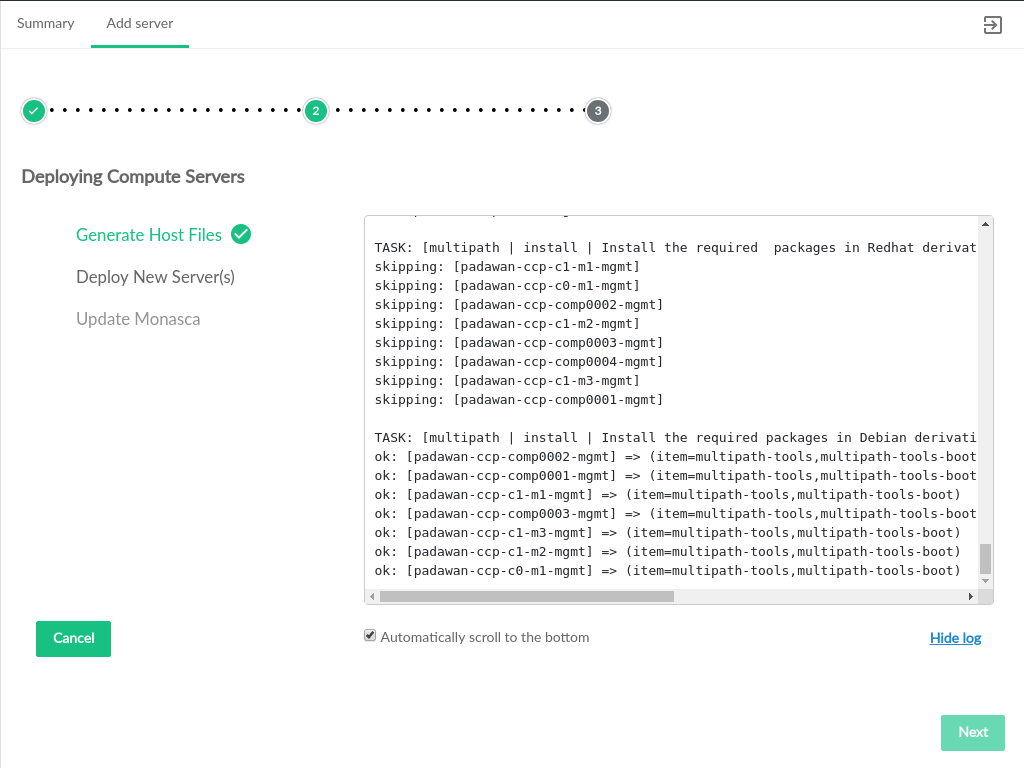

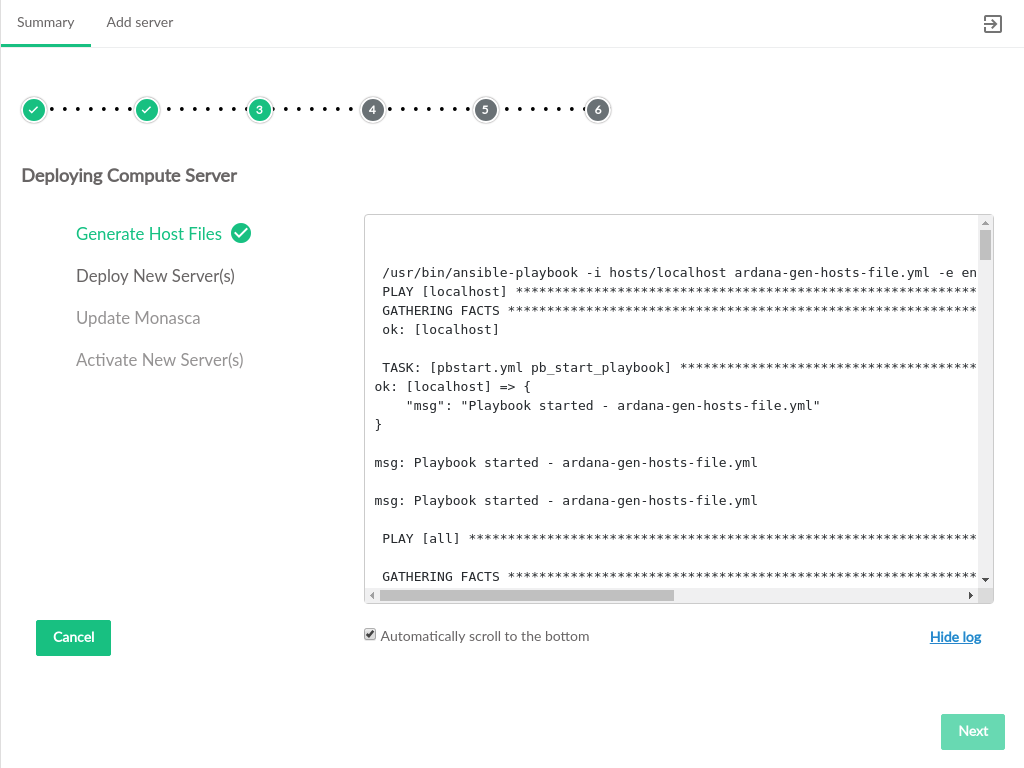

- 3.35 Deploy Servers

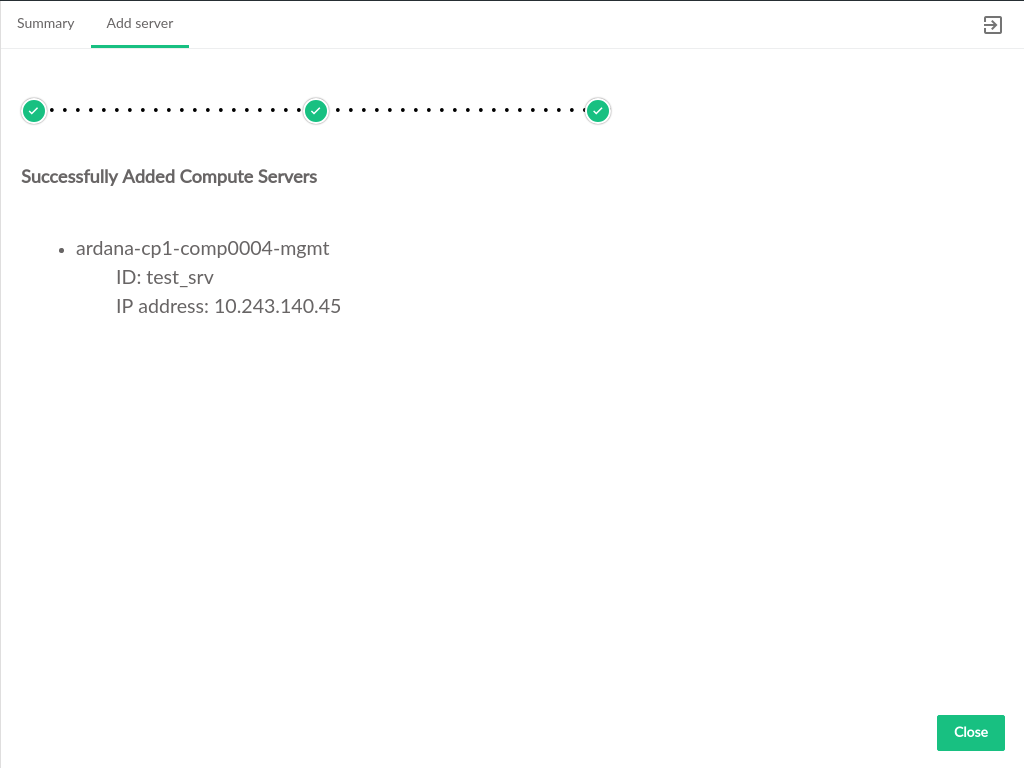

- 3.36 Deploy Summary

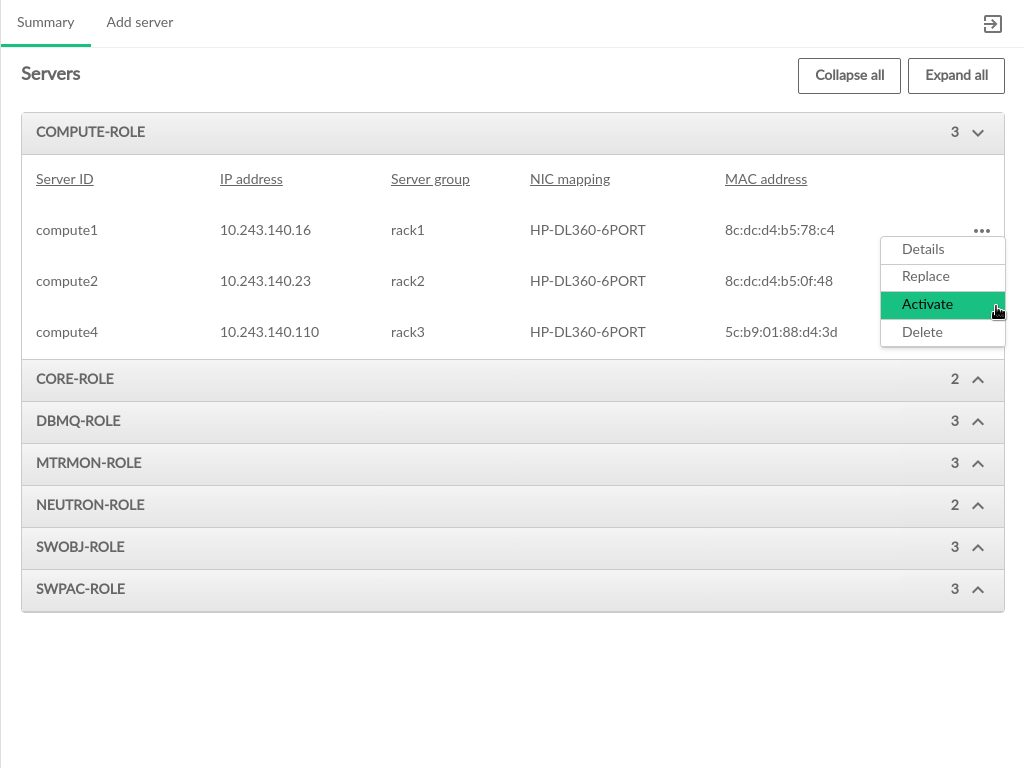

- 3.37 Activate Server

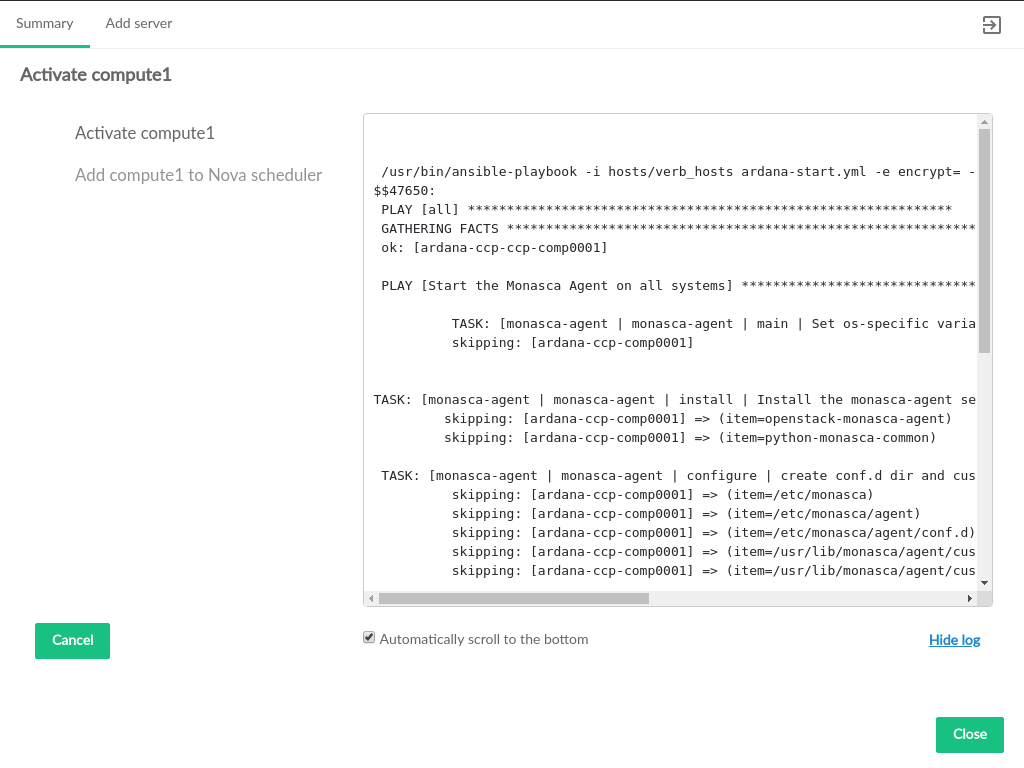

- 3.38 Activate Server Progress

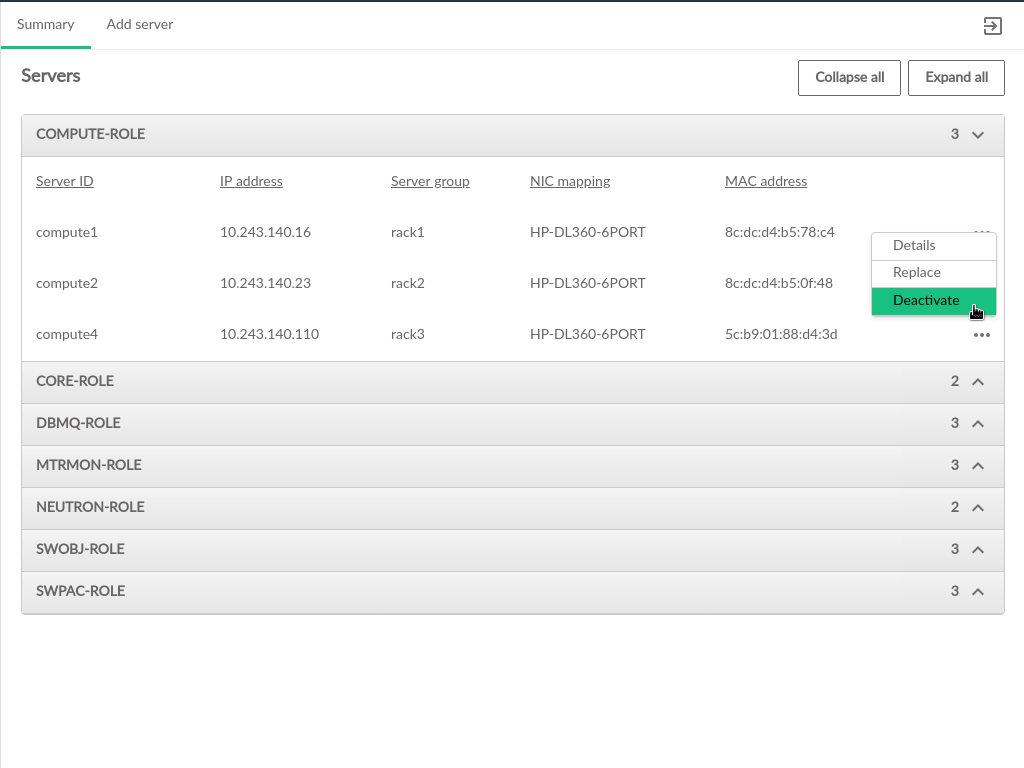

- 3.39 Deactivate Server

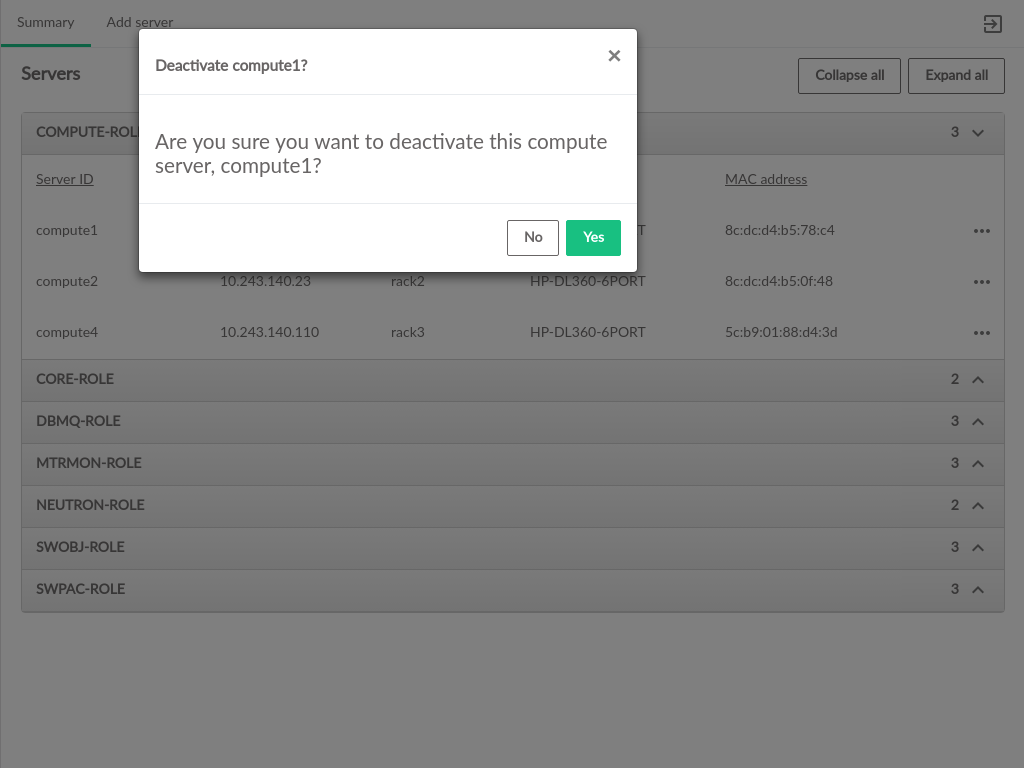

- 3.40 Deactivate Server Confirmation

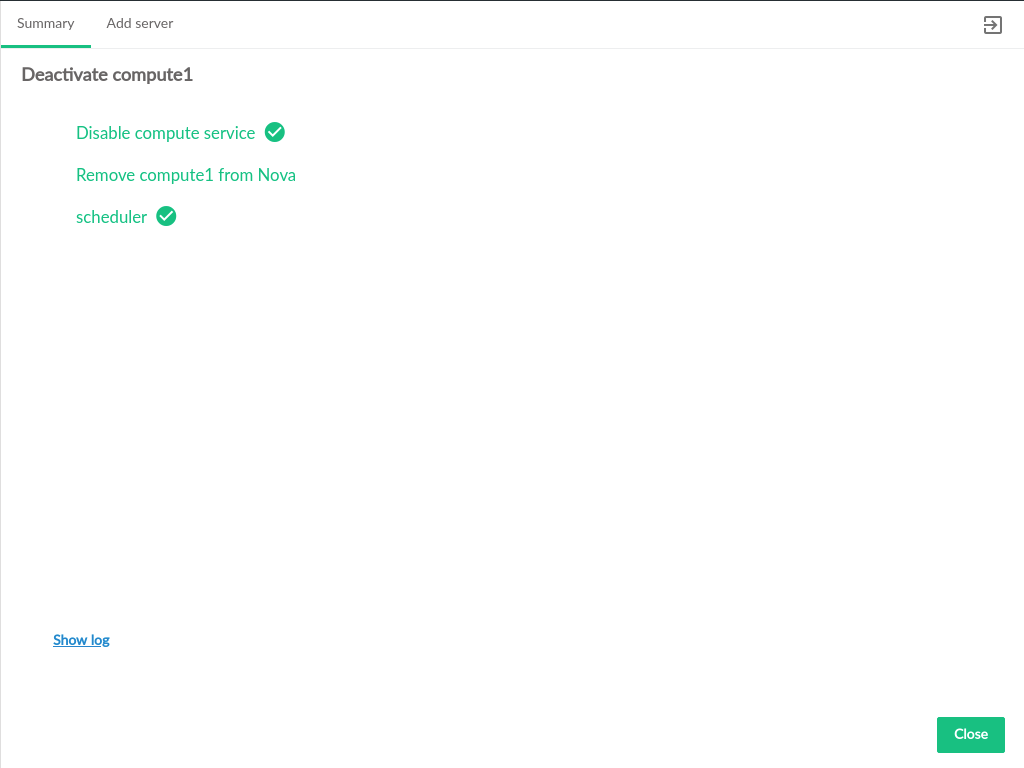

- 3.41 Deactivate Server Progress

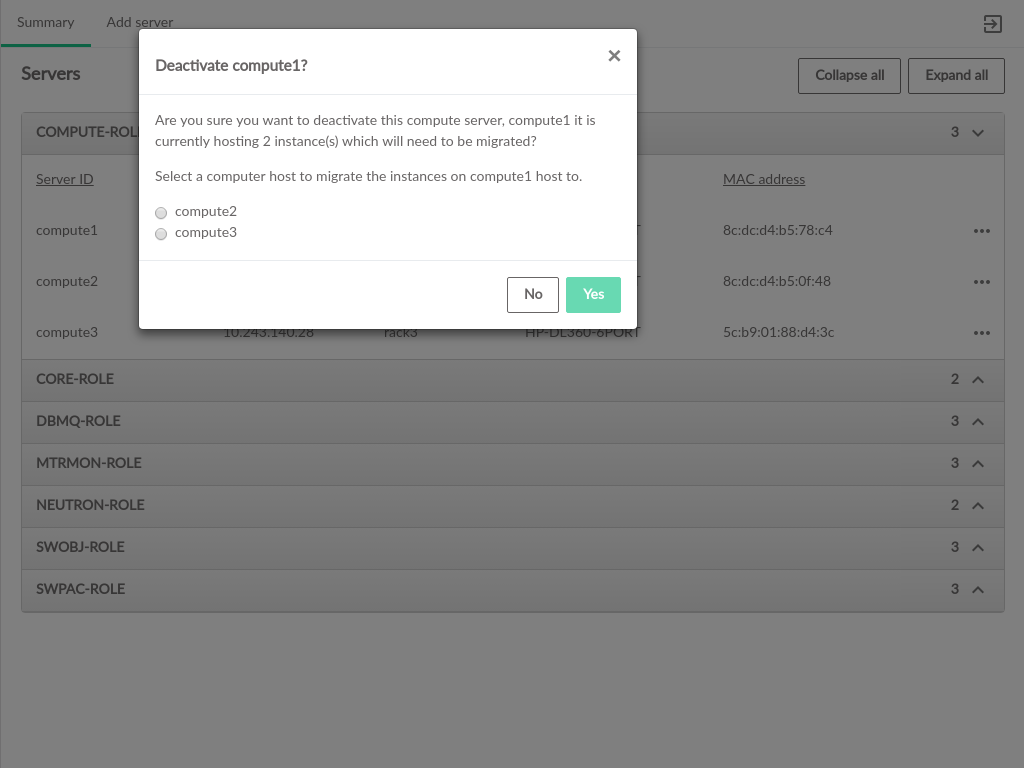

- 3.42 Select Migration Target

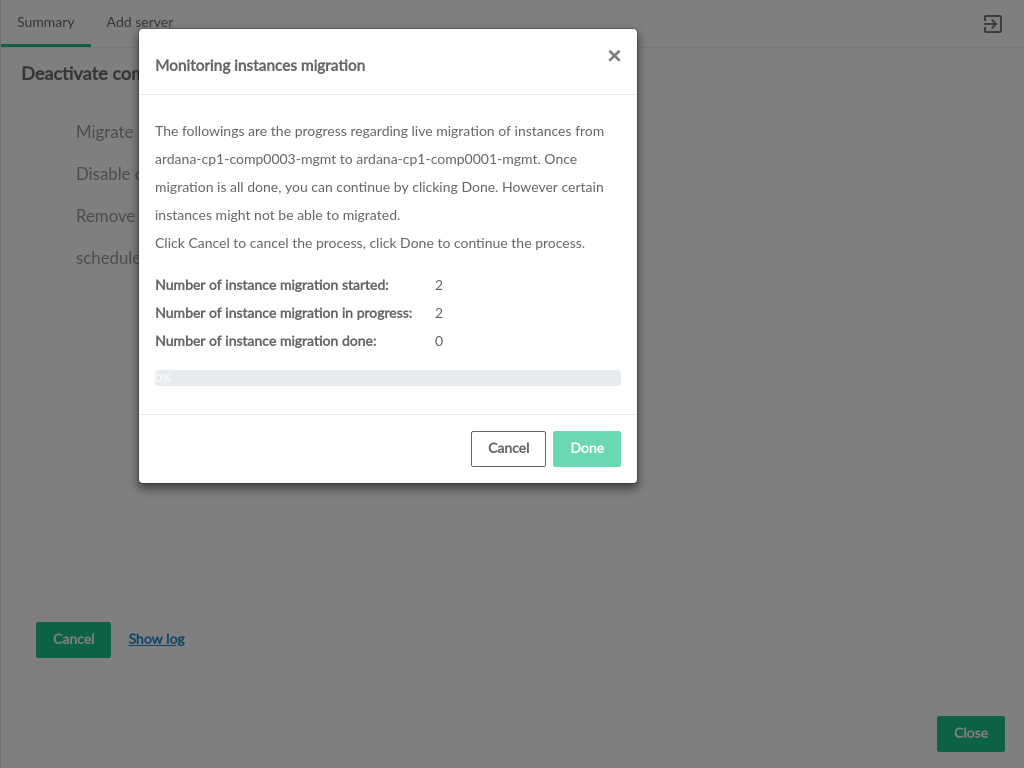

- 3.43 Deactivate Migration Progress

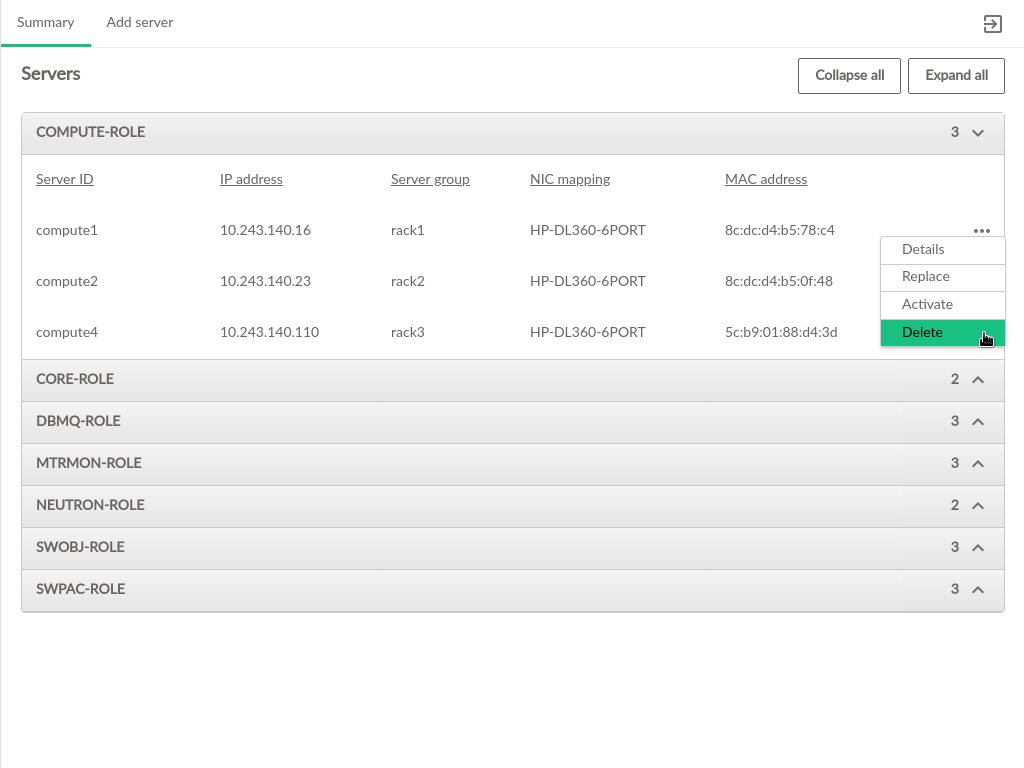

- 3.44 Delete Server

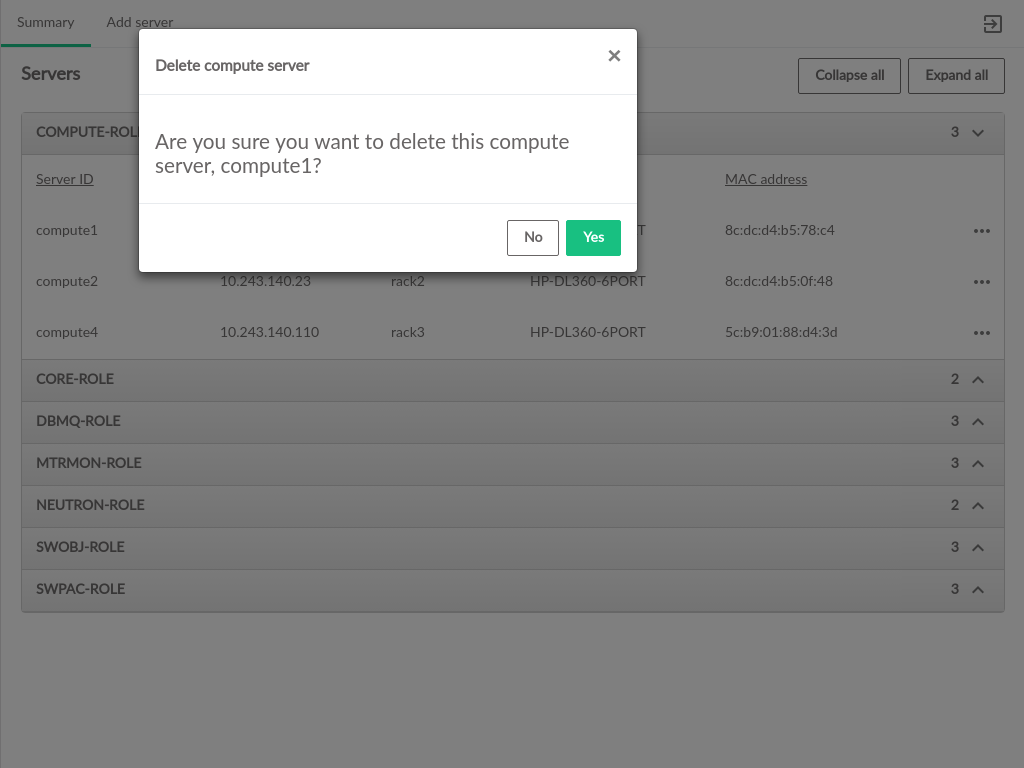

- 3.45 Delete Server Confirmation

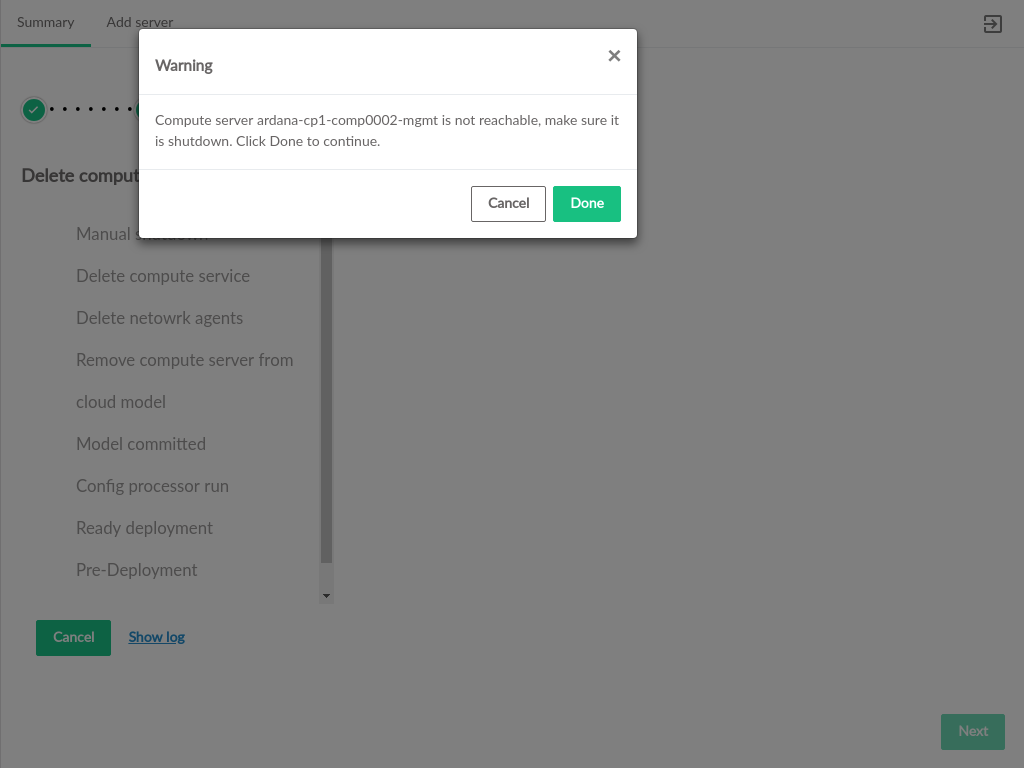

- 3.46 Unreachable Delete Confirmation

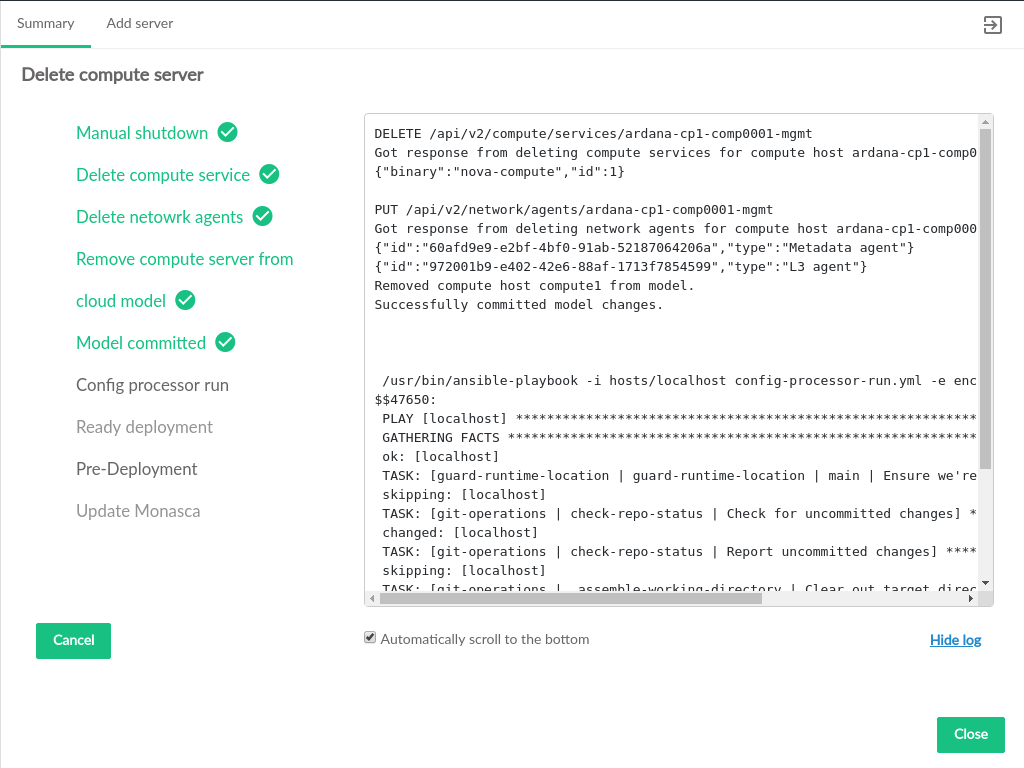

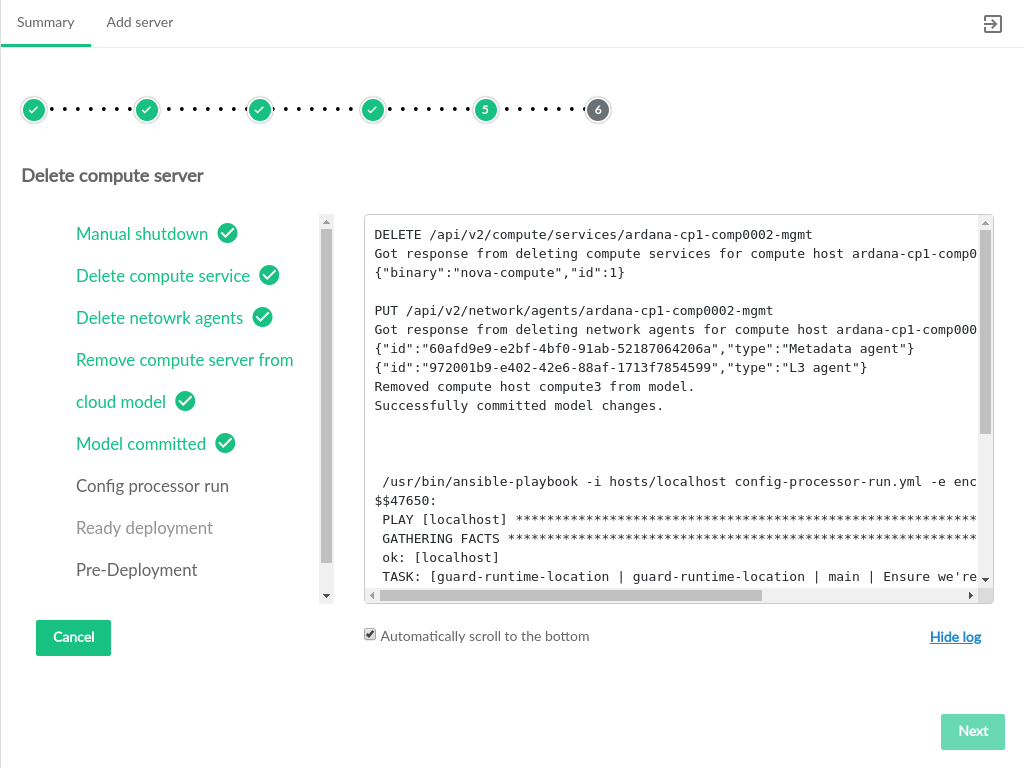

- 3.47 Delete Server Progress

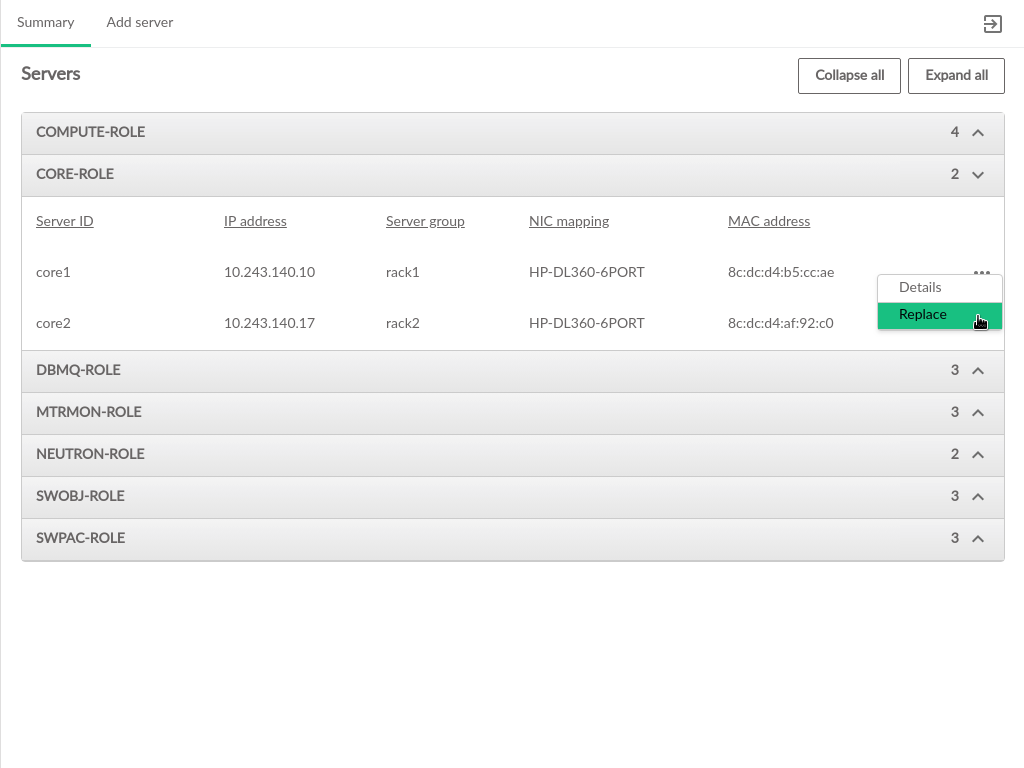

- 3.48 Replace Server Menu

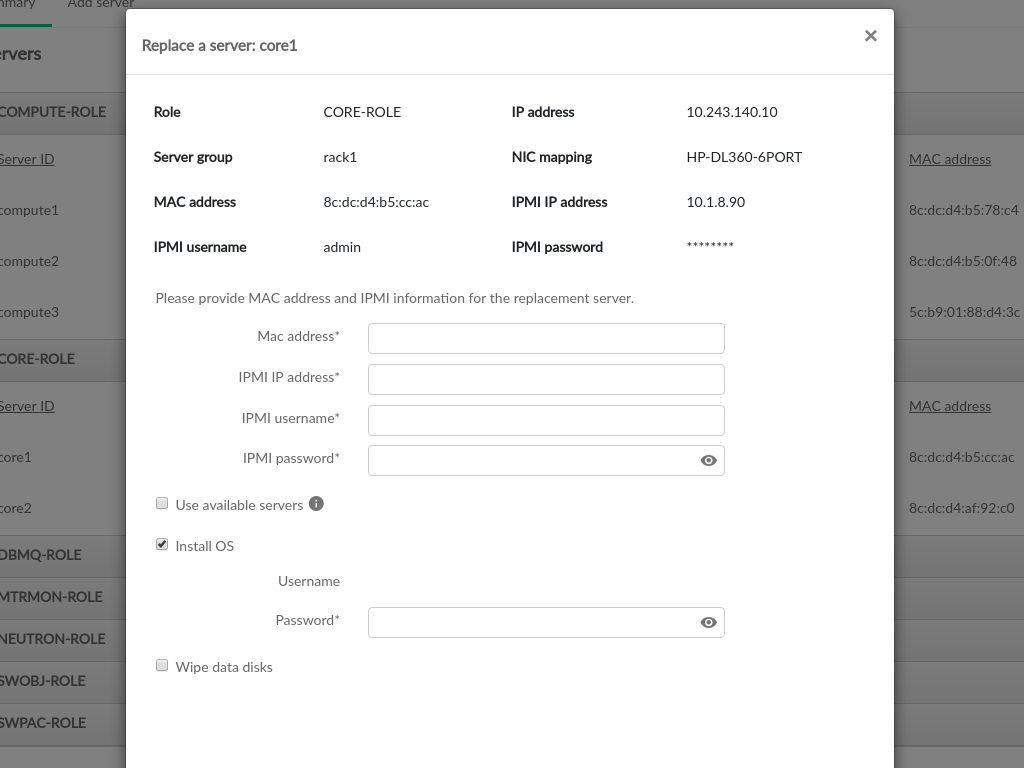

- 3.49 Replace Controller Form

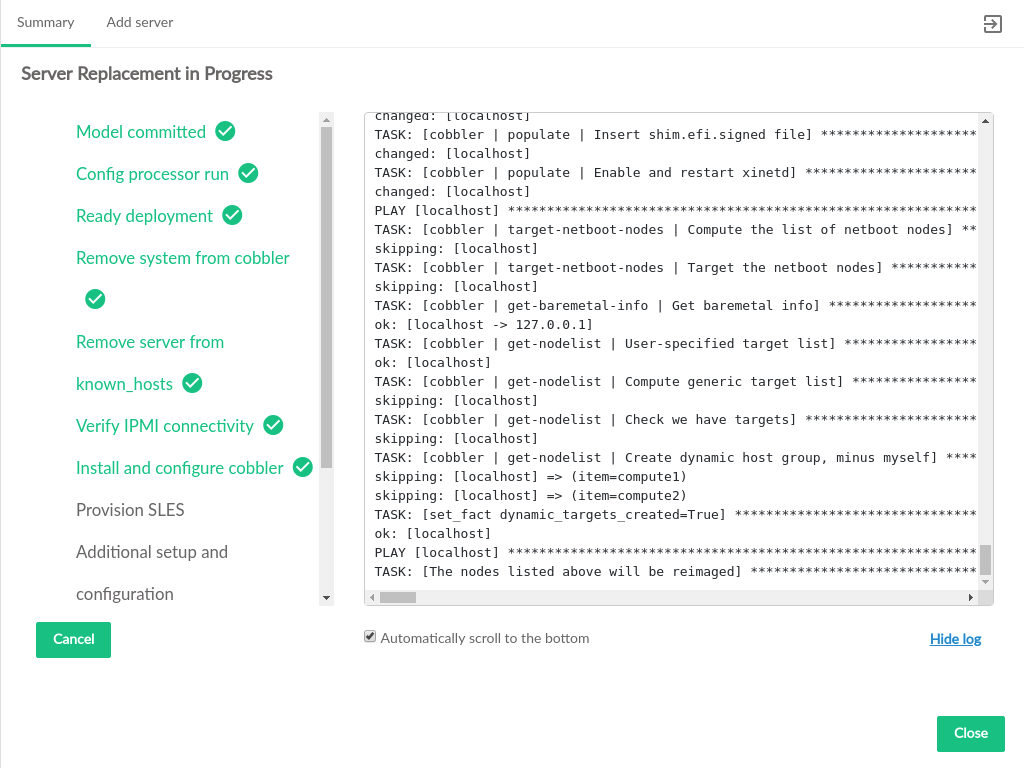

- 3.50 Replace Controller Progress

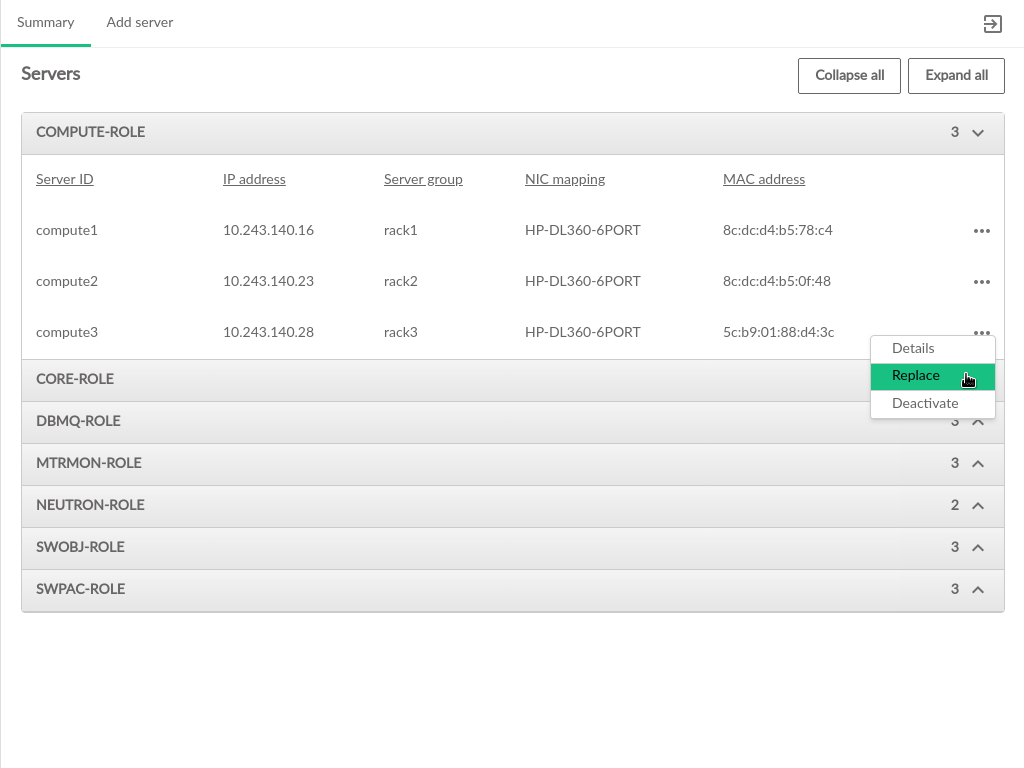

- 3.51 Replace Compute Menu

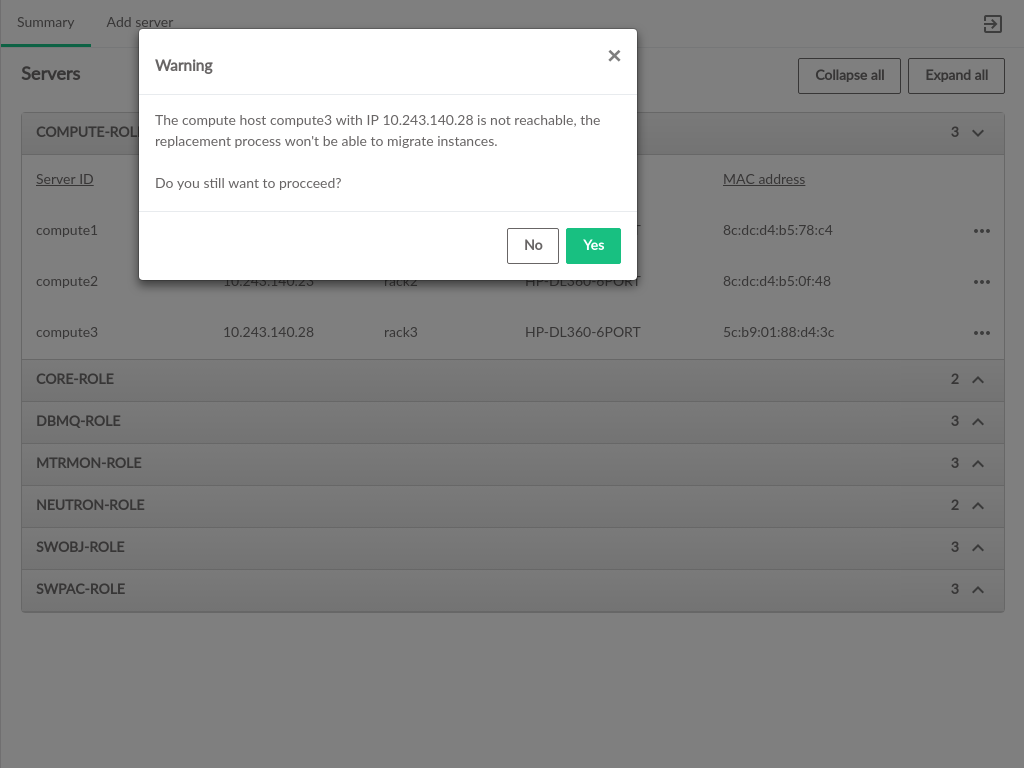

- 3.52 Unreachable Compute Node Warning

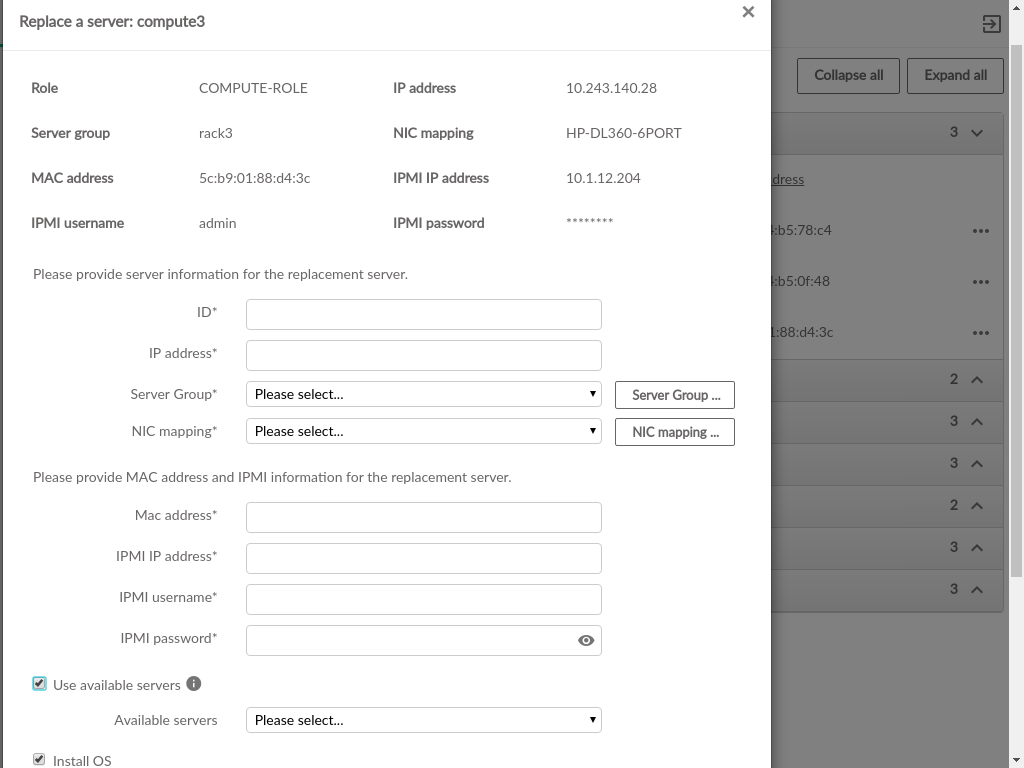

- 3.53 Replace Compute Form

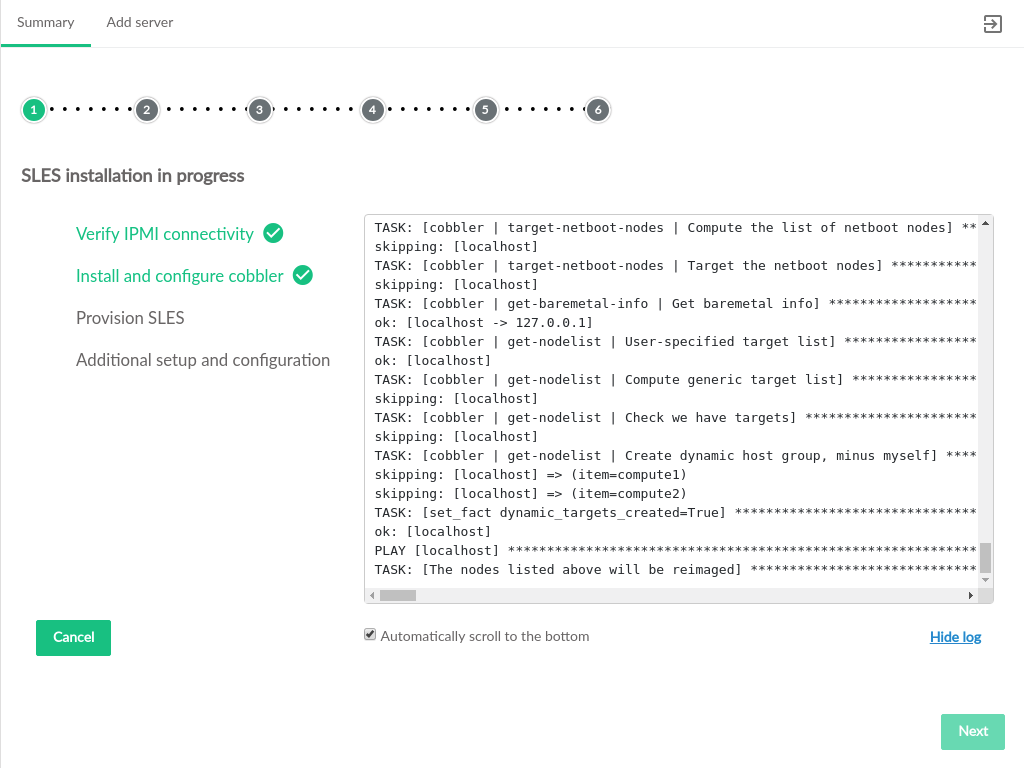

- 3.54 Install SLES on New Compute

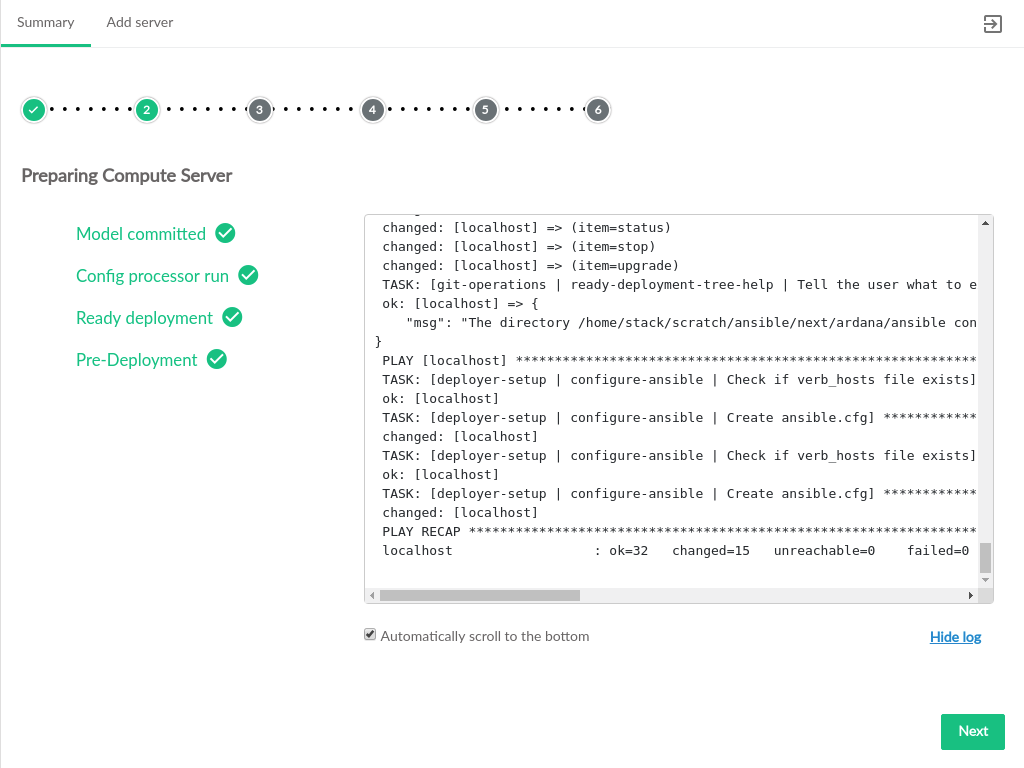

- 3.55 Prepare Compute Server

- 3.56 Deploy New Compute Server

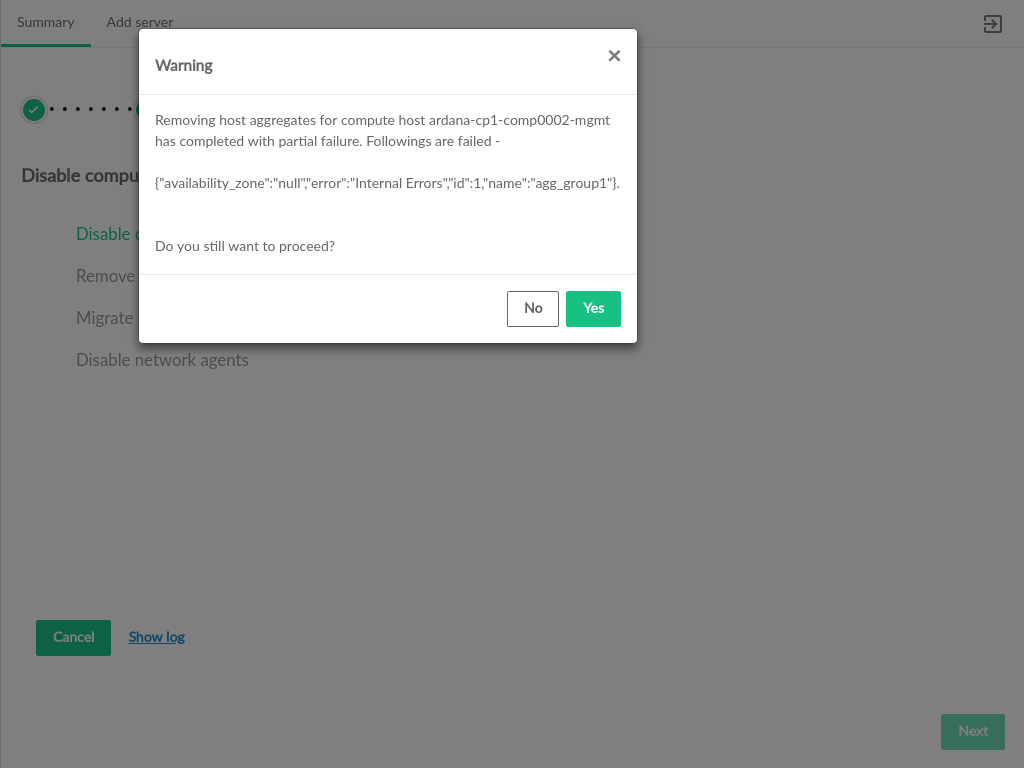

- 3.57 Host Aggregate Removal Warning

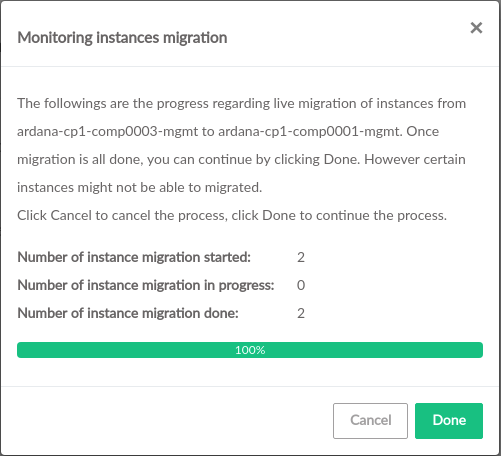

- 3.58 Migrate Instances from Existing Compute Server

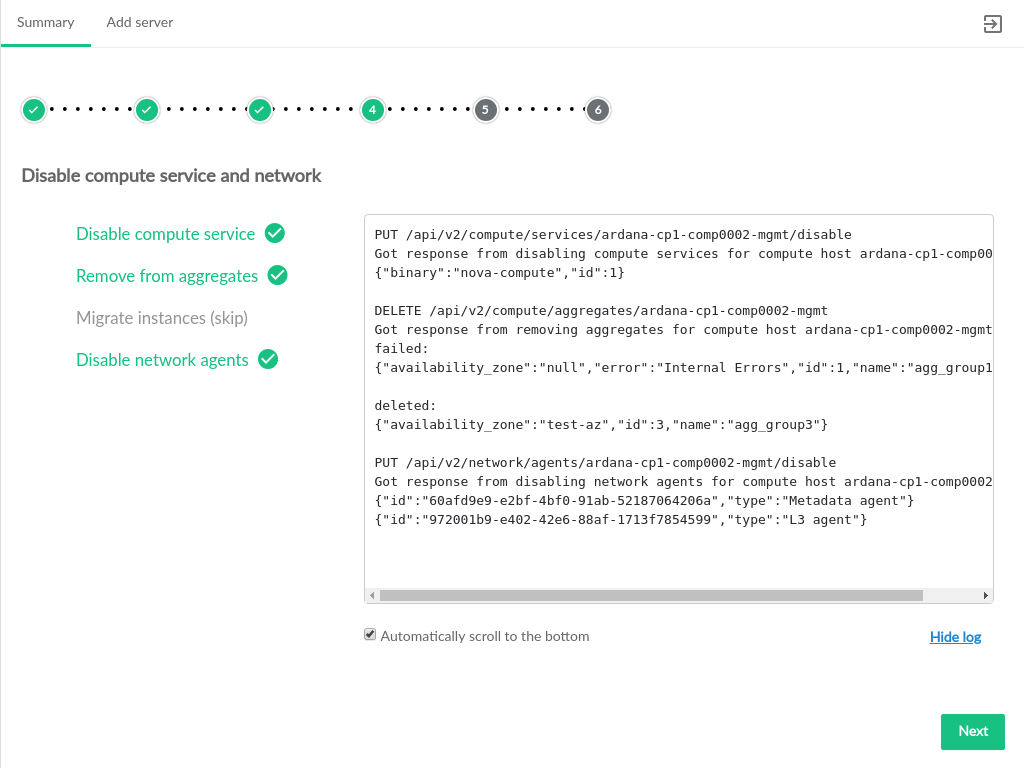

- 3.59 Disable Existing Compute Server

- 3.60 Existing Server Shutdown Check

- 3.61 Existing Server Delete

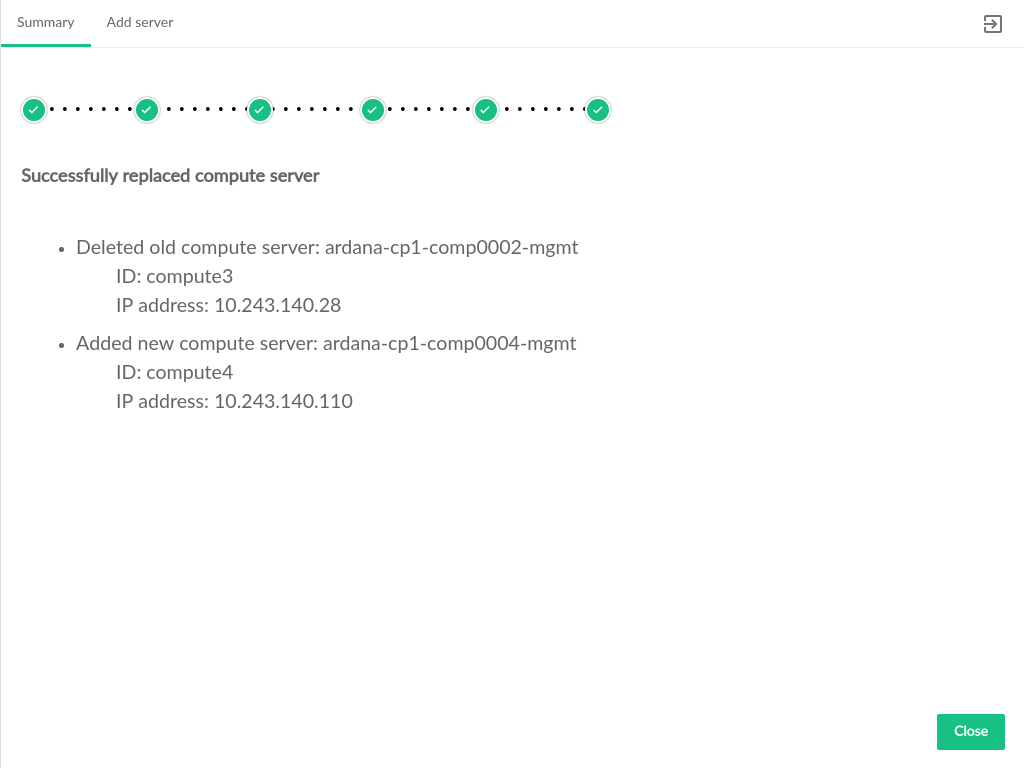

- 3.62 Compute Replacement Summary

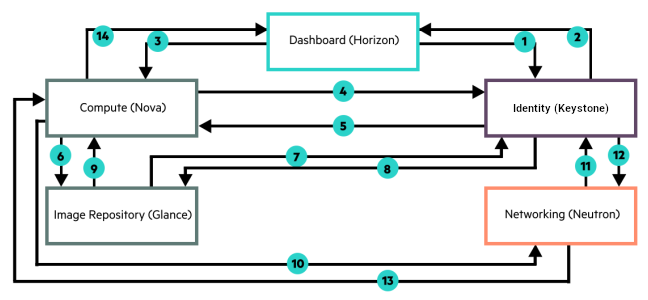

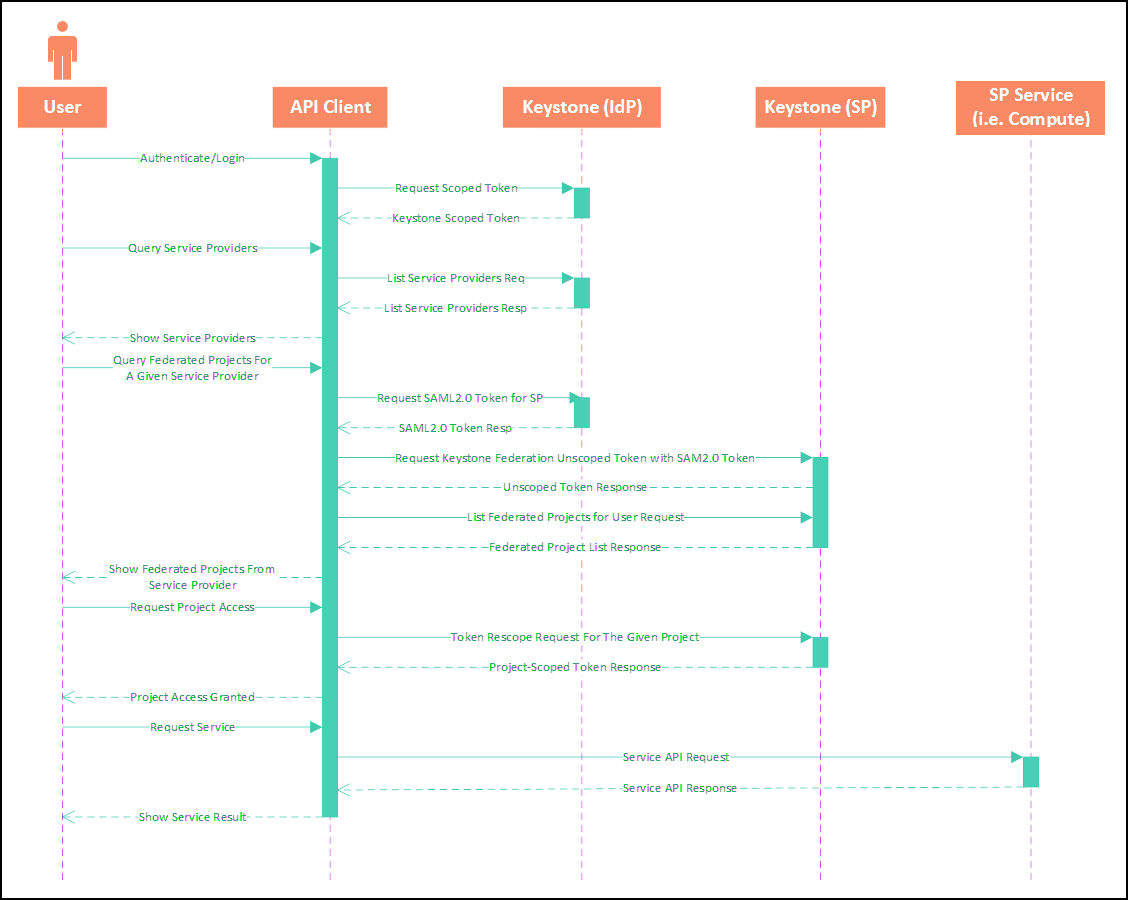

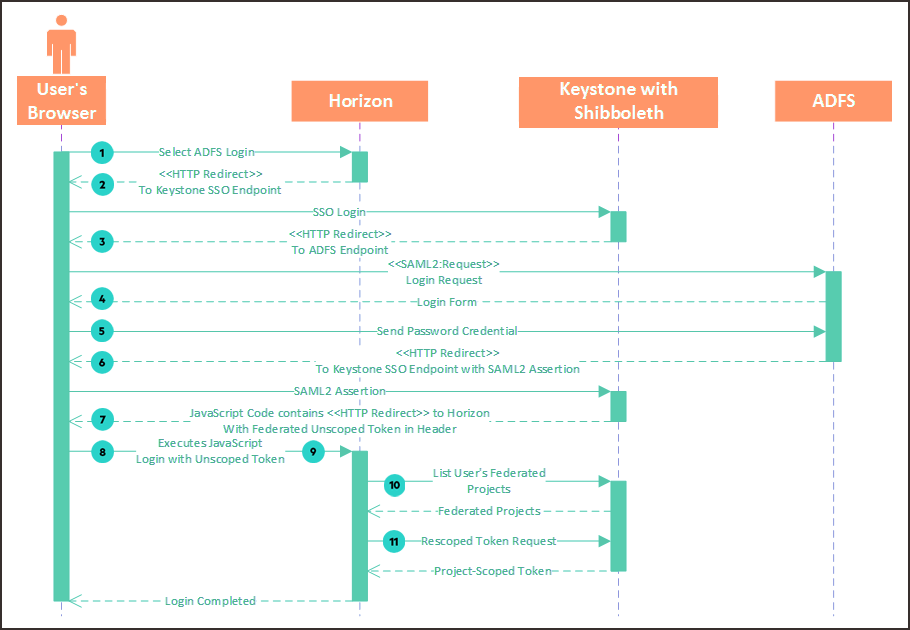

- 5.1 Keystone Authentication Flow

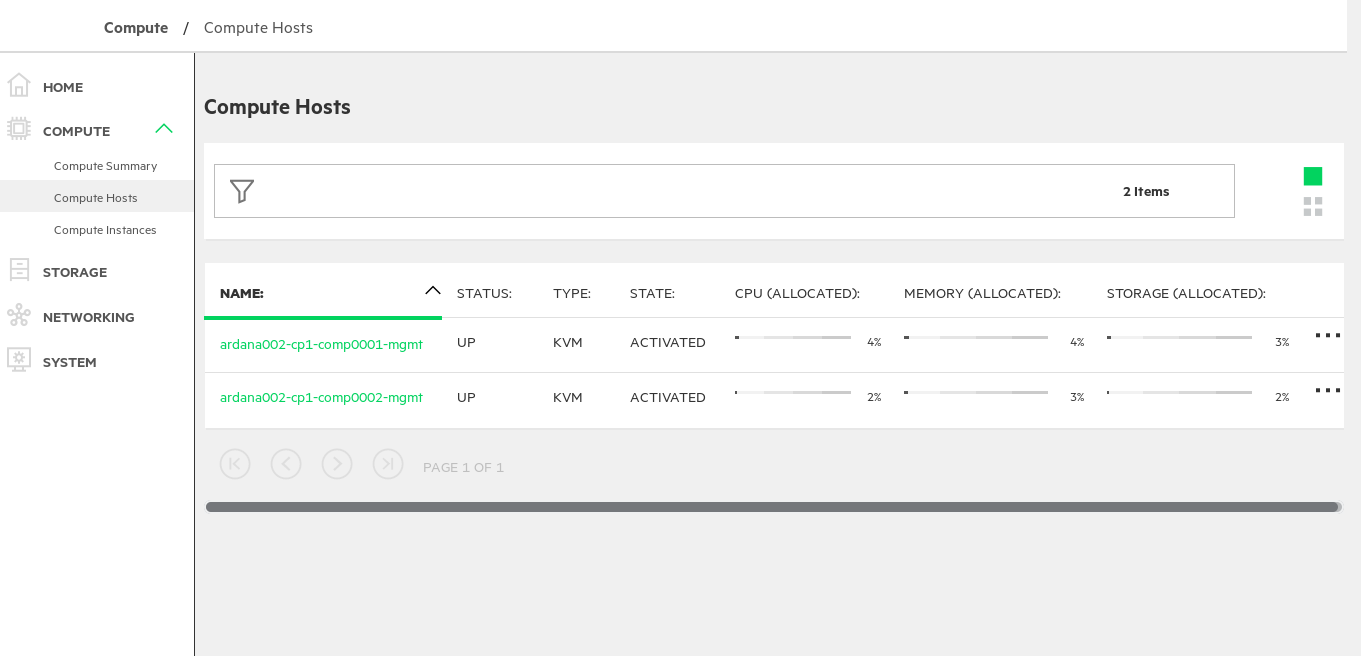

- 16.1 Compute Hosts

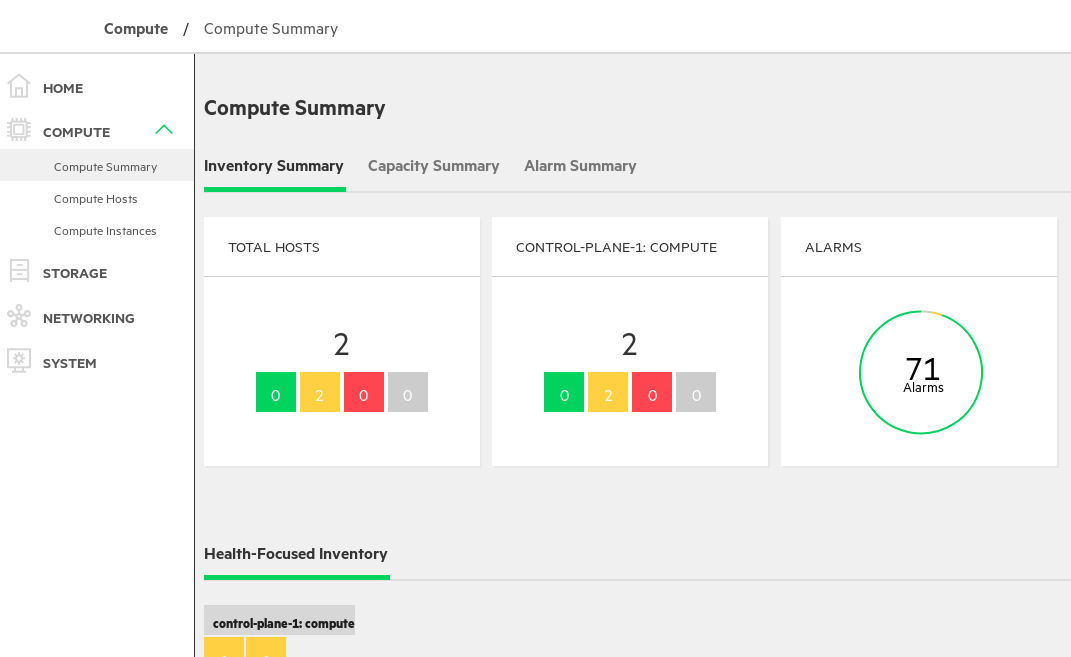

- 16.2 Compute Summary

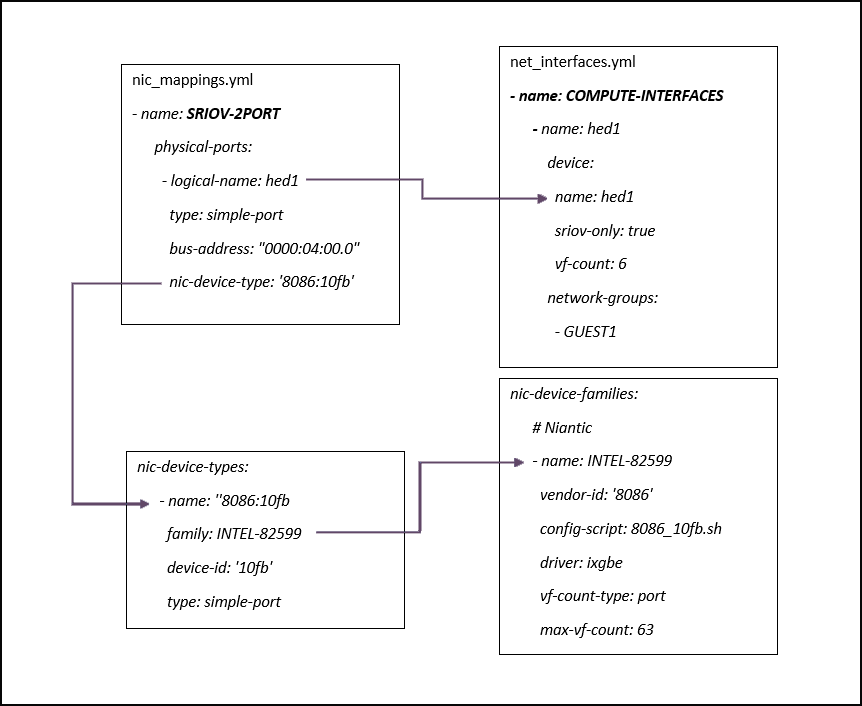

- 10.1 Intel 82599 devices supported with SRIOV and PCIPT

- 13.1 Aggregated Metrics

- 13.2 HTTP Check Metrics

- 13.3 HTTP Metric Components

- 13.4 Tunable Libvirt Metrics

- 13.5 Untunable Libvirt Metrics

- 13.6 Per-router metrics

- 13.7 Per-DHCP port and rate metrics

- 13.8 CPU Metrics

- 13.9 Disk Metrics

- 13.10 Load Metrics

- 13.11 Memory Metrics

- 13.12 Network Metrics

- 17.1 Cloud Lifecycle Manager Backup Paths

Copyright © 2006–2024 SUSE LLC and contributors. All rights reserved.

Except where otherwise noted, this document is licensed under Creative Commons Attribution 3.0 License: https://creativecommons.org/licenses/by/3.0/legalcode.

For SUSE trademarks, see https://www.suse.com/company/legal/. All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks.

All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof.

1 Operations Overview #

A high-level overview of the processes related to operating a SUSE OpenStack Cloud 9 cloud.

1.1 What is a cloud operator? #

SUSE OpenStack Cloud defines a cloud operator as the person or group of people who administer the cloud infrastructure. It is important to understand the scope of the tasks and responsibilities this covers, which include:

- Monitoring the cloud infrastructure and resolving issues as they arise.

- Managing hardware resources, adding or removing hardware as capacity needs change.

- Repairing hardware issues and recovering from them when needed.

- Performing domain administration tasks, which involve creating and managing projects, users, and groups, as well as setting and managing resource quotas.

1.2 Tools provided to operate your cloud #

SUSE OpenStack Cloud provides the following tools which are available to operate your cloud:

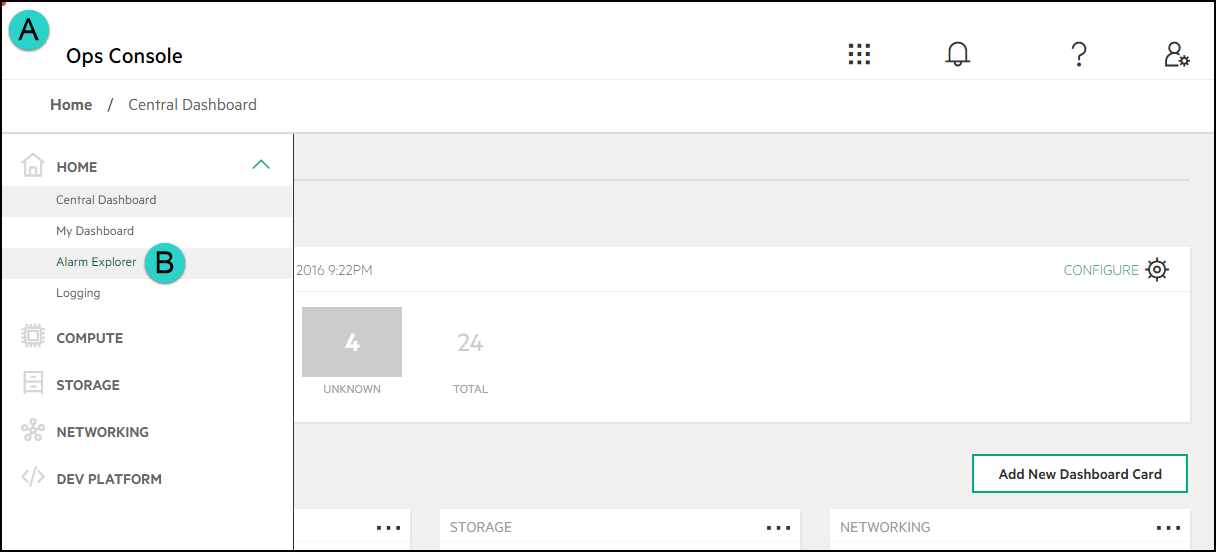

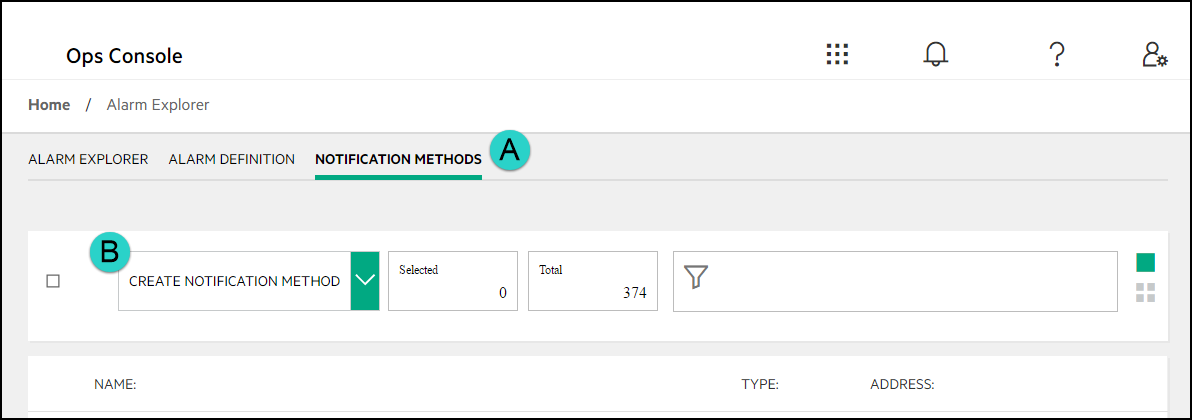

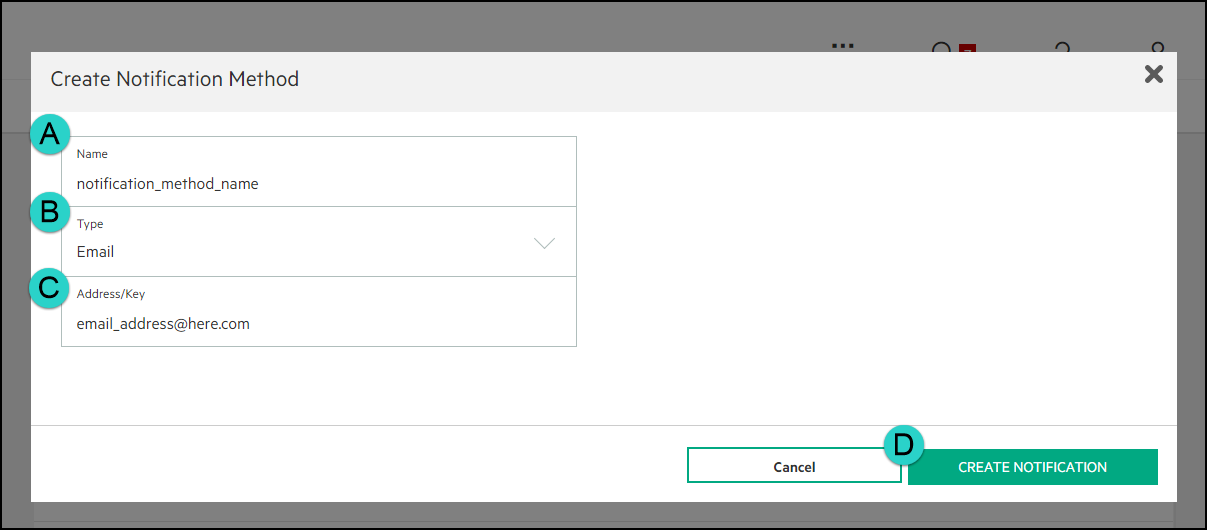

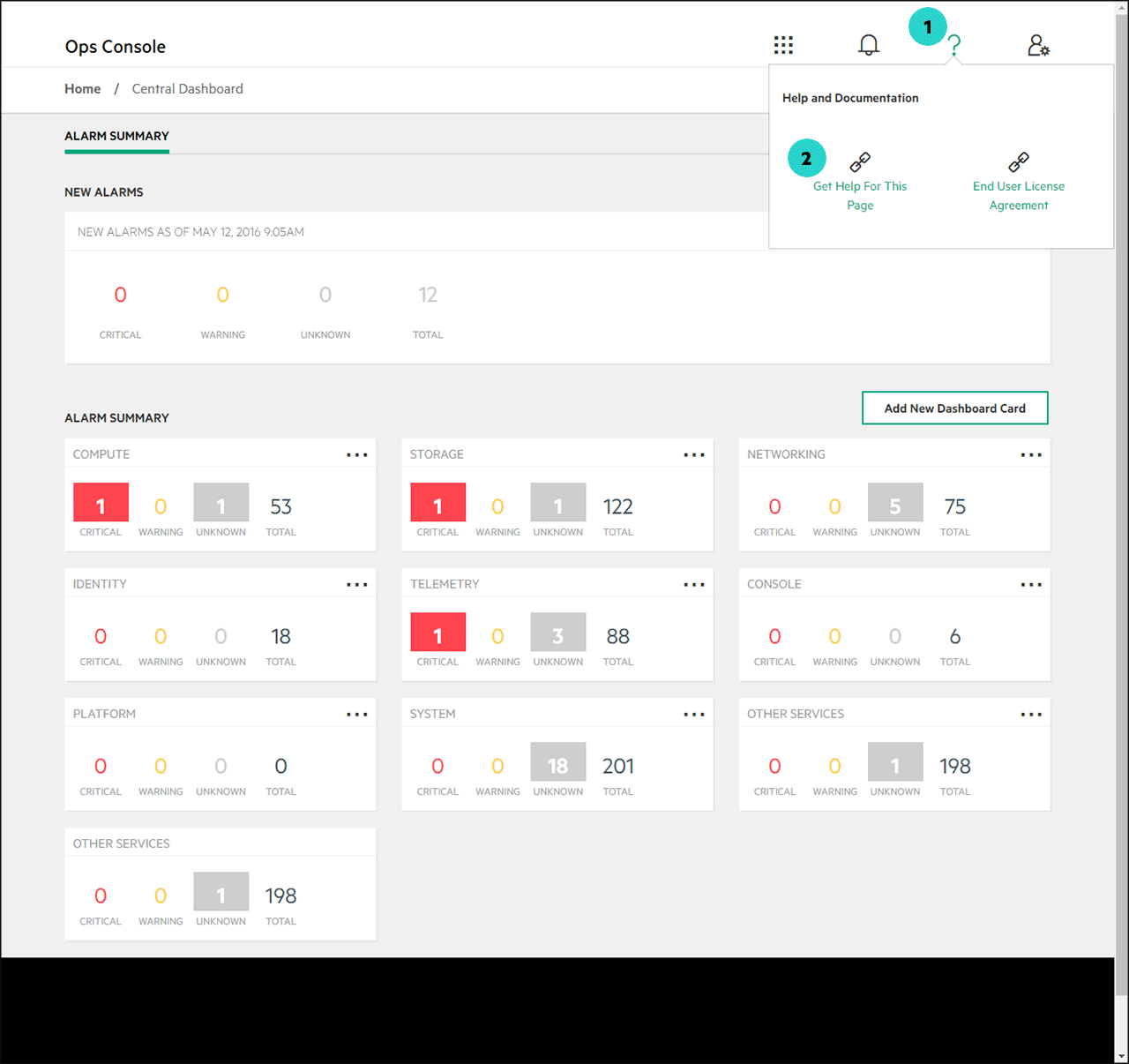

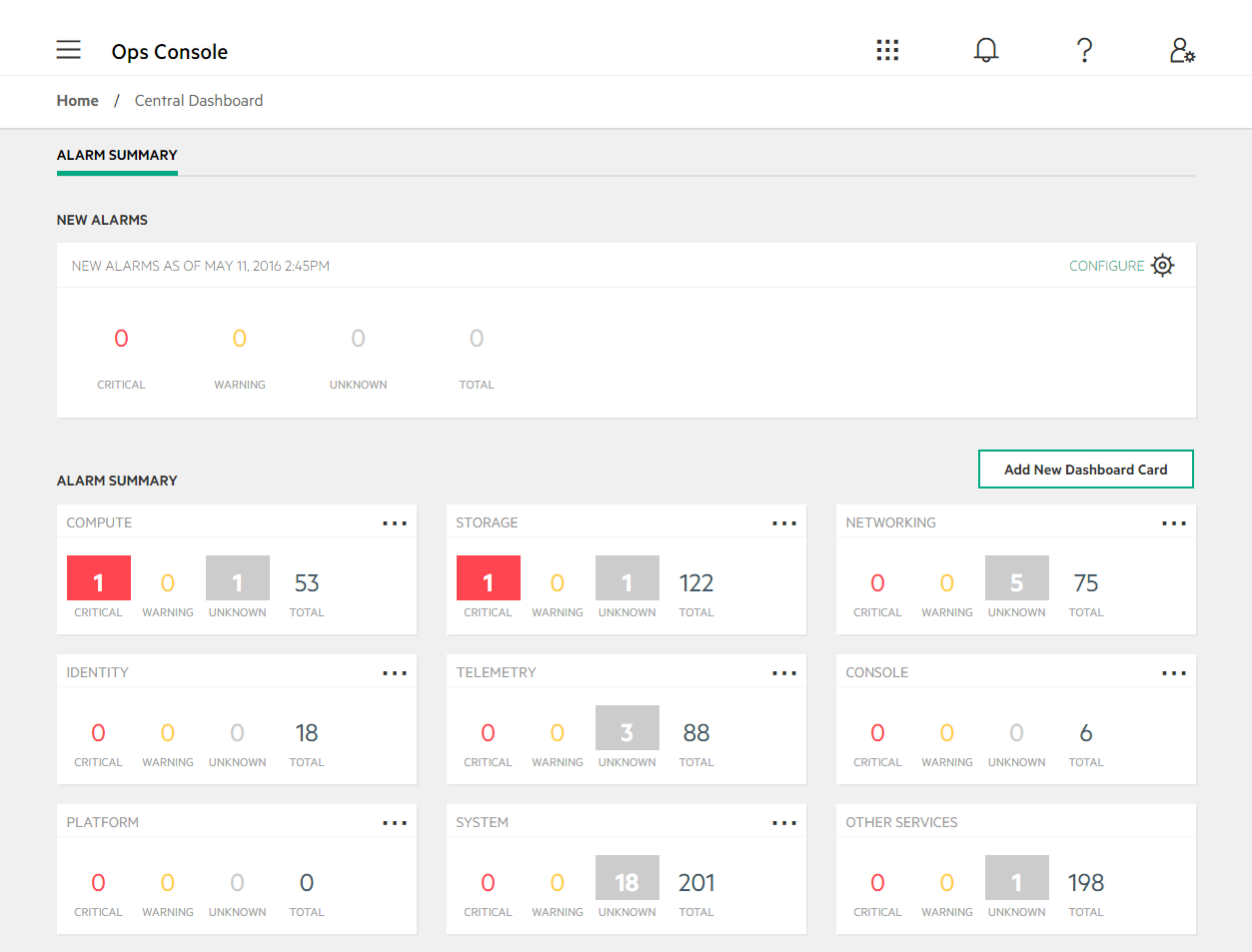

Operations Console

Often referred to as the Ops Console, this web-based graphical user interface (GUI) lets you view data about your cloud infrastructure to make sure your cloud is operating correctly. By logging on to the console, SUSE OpenStack Cloud administrators can manage data in the following ways:

- Triage alarm notifications in the central dashboard

- Monitor the environment by giving priority to alarms that take precedence

- Manage compute nodes and easily use a form to create a new host

- Refine the monitoring environment by creating new alarms to specify a combination of metrics, services, and hosts that match the triggers unique to an environment

- Plan for future storage by tracking capacity over time to predict with some degree of reliability the amount of additional storage needed

Dashboard

Often referred to as horizon or the horizon dashboard, this web-based graphical user interface (GUI) lets you manage resources on a domain and project level. The following are some of the typical operational tasks that you may perform using the dashboard:

- Creating and managing projects, users, and groups within your domain.

- Assigning roles to users and groups to manage access to resources.

- Setting and updating resource quotas for the projects.

For more details, see the following page: Section 5.3, “Understanding Domains, Projects, Users, Groups, and Roles”

Command-line interface (CLI)

The OpenStack community has created a unified client, called the openstackclient (OSC), which combines the available commands in the various service-specific clients into one tool. Some service-specific commands do not have OSC equivalents.

You will find processes defined in our documentation that use these command-line tools. We have also outlined a list of common cloud administration tasks that you can perform with the command-line tools.

There are references throughout the SUSE OpenStack Cloud documentation to the HPE Smart Storage Administrator (HPE SSA) CLI. HPE-specific binaries that are not based on open source are distributed directly from and supported by HPE. To download and install the SSACLI utility, please refer to: https://support.hpe.com/hpsc/swd/public/detail?swItemId=MTX_3d16386b418a443388c18da82f

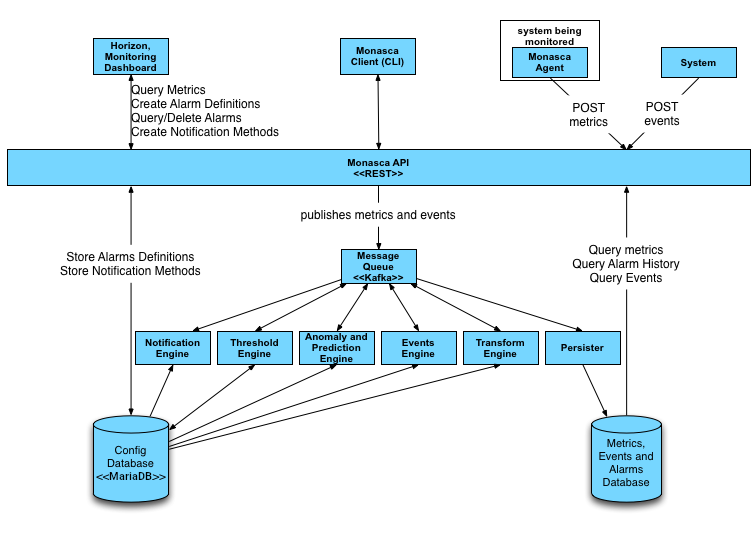

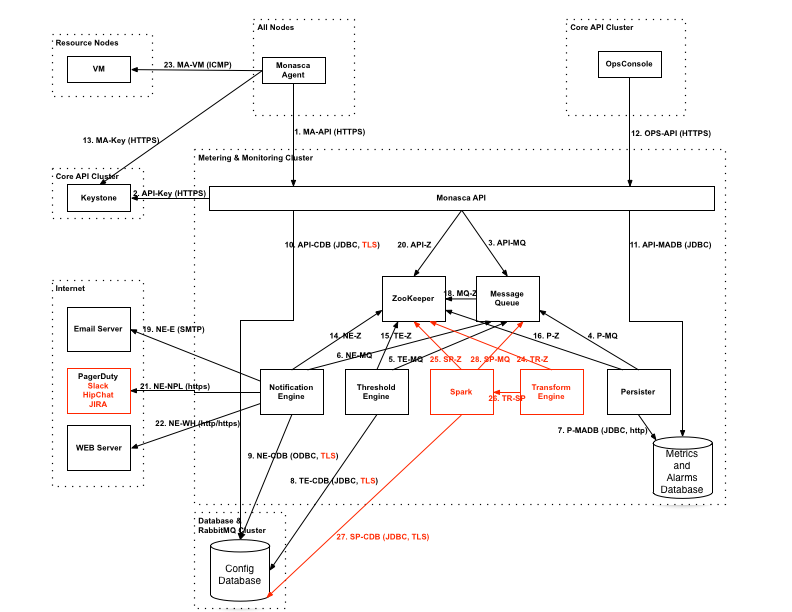

1.3 Daily tasks #

Ensure your cloud is running correctly: SUSE OpenStack Cloud is deployed as a set of highly available services to minimize the impact of failures. That said, hardware and software systems can fail. Detection of failures early in the process will enable you to address issues before they affect the broader system. SUSE OpenStack Cloud provides a monitoring solution, based on OpenStack’s monasca, which provides monitoring and metrics for all OpenStack components and much of the underlying system, including service status, performance metrics, compute node, and virtual machine status. Failures are exposed via the Operations Console and/or alarm notifications. In the case where more detailed diagnostics are required, you can use a centralized logging system based on the Elasticsearch, Logstash, and Kibana (ELK) stack. This provides the ability to search service logs to get detailed information on behavior and errors.

Perform critical maintenance: To ensure your OpenStack installation is running correctly, provides the right access and functionality, and is secure, you should make ongoing adjustments to the environment. Examples of daily maintenance tasks include:

- Add/remove projects and users. The frequency of this task depends on your policy.

- Apply security patches (if released).

- Run daily backups.

1.4 Weekly or monthly tasks #

Do regular capacity planning: Your initial deployment will likely reflect the known near to mid-term scale requirements, but at some point your needs will outgrow your initial deployment’s capacity. You can expand SUSE OpenStack Cloud in a variety of ways, such as by adding compute and storage capacity.

To manage your cloud’s capacity, begin by determining the load on the existing system. OpenStack is a set of relatively independent components and services, so there are multiple subsystems that can affect capacity. These include control plane nodes, compute nodes, object storage nodes, block storage nodes, and an image management system. At the most basic level, you should look at the CPU used, RAM used, I/O load, and the disk space used relative to the amounts available. For compute nodes, you can also evaluate the allocation of resources to hosted virtual machines. This information can be viewed in the Operations Console. You can pull historical information from the monitoring service (OpenStack’s monasca) by using its client or API. OpenStack also gives you some ability to manage the hosted resource utilization by using quotas for projects. You can track this usage over time to get your growth trend so that you can project when you will need to add capacity.
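The growth-trend projection described above can be sketched with a simple least-squares fit. This is only an illustration: the function name `days_until_full` and the two-column "DAY USED" input format are assumptions made for this example, not something the monitoring service produces in this form, and a straight-line fit is just one possible model of growth.

```shell
# days_until_full CAPACITY [FILE]
# Reads "DAY USED" pairs (one per line, hypothetical export format), fits a
# least-squares line through them, and prints the whole number of days
# between the last sample and the day the fitted line reaches CAPACITY.
# Returns a non-zero status if there are too few samples or no growth.
days_until_full() {
  awk -v cap="$1" '
    NF >= 2 { n++; sx += $1; sy += $2; sxx += $1 * $1; sxy += $1 * $2; last = $1 }
    END {
      denom = n * sxx - sx * sx
      if (n < 2 || denom == 0) exit 1
      slope = (n * sxy - sx * sy) / denom
      if (slope <= 0) exit 1
      intercept = (sy - slope * sx) / n
      printf "%d\n", (cap - intercept) / slope - last
    }
  ' "${2:--}"
}
```

For instance, with samples of 100, 120, and 140 units used on days 0, 10, and 20, and a capacity of 200 units, the fitted growth is 2 units per day, so the function reports 30 days of headroom after the last sample.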

1.5 Semi-annual tasks #

Perform upgrades: OpenStack releases new versions on a six-month cycle. In general, SUSE OpenStack Cloud will release new major versions annually with minor versions and maintenance updates more often. Each new release consists of both new functionality and services, as well as bug fixes for existing functionality.

If you are planning to upgrade, this is also an excellent time to evaluate your existing capabilities, especially in terms of capacity (see Capacity Planning above).

1.6 Troubleshooting #

As part of managing your cloud, you should be ready to troubleshoot issues, as needed. The following are some common troubleshooting scenarios and solutions:

How do I determine if my cloud is operating correctly now?: SUSE OpenStack Cloud provides a monitoring solution based on OpenStack’s monasca service. This service provides monitoring and metrics for all OpenStack components, as well as much of the underlying system. By default, SUSE OpenStack Cloud comes with a set of alarms that provide coverage of the primary systems. In addition, you can define alarms based on threshold values for any metrics defined in the system. You can view alarm information in the Operations Console. You can also receive or deliver this information to others by configuring email or other mechanisms. Alarms provide information about whether a component failed and is affecting the system, and also what condition triggered the alarm.

How do I troubleshoot and resolve performance issues for my cloud?: There are a variety of factors that can affect the performance of a cloud system, such as the following:

- Health of the control plane

- Health of the hosting compute node and virtualization layer

- Resource allocation on the compute node

If your cloud users are experiencing performance issues on your cloud, use the following approach:

- View the compute summary page on the Operations Console to determine if any alarms have been triggered.

- Determine the hosting node of the virtual machine that is having issues.

- On the compute hosts page, view the status and resource utilization of the compute node to determine if it has errors or is over-allocated.

- On the compute instances page you can view the status of the VM along with its metrics.

How do I troubleshoot and resolve availability issues for my cloud?: If your cloud users are experiencing availability issues, determine what your users are experiencing that indicates to them the cloud is down. For example, are they unable to access the Dashboard service (horizon) console or APIs, or are they having trouble accessing resources? Console or API issues indicate a problem with the control plane; use the Operations Console to view the status of services to see if there is an issue. If the issue is access to a virtual machine, also search the consolidated logs that are available in the ELK stack for errors related to the virtual machine and supporting networking.

1.7 Common Questions #

What skills do my cloud administrators need?

Your administrators should be experienced Linux admins. They should have experience in application management, as well as experience with Ansible. It is a plus if they have experience with Bash shell scripting and Python programming skills.

In addition, you will need skilled networking engineering staff to administer the cloud network environment.

2 Tutorials #

This section contains tutorials for common tasks for your SUSE OpenStack Cloud 9 cloud.

2.1 SUSE OpenStack Cloud Quickstart Guide #

2.1.1 Introduction #

This document provides simplified instructions for installing and setting up a SUSE OpenStack Cloud. Use this quickstart guide to build testing, demonstration, and lab-type environments, rather than production installations. When you complete this quickstart process, you will have a fully functioning SUSE OpenStack Cloud demo environment.

These simplified instructions are intended for testing or demonstration. Instructions for production installations are in Book “Deployment Guide using Cloud Lifecycle Manager”.

2.1.2 Overview of components #

The following are short descriptions of the components that SUSE OpenStack Cloud employs when installing and deploying your cloud.

Ansible. Ansible is a powerful configuration management tool used by SUSE OpenStack Cloud to manage nearly all aspects of your cloud infrastructure. Most commands in this quickstart guide execute Ansible scripts, known as playbooks. You will run playbooks that install packages, edit configuration files, manage network settings, and take care of the general administration tasks required to get your cloud up and running.

Get more information on Ansible at https://www.ansible.com/.

Cobbler. Cobbler is another third-party tool used by SUSE OpenStack Cloud to deploy operating systems across the physical servers that make up your cloud. Find more info at http://cobbler.github.io/.

Git. Git is the version control system used to manage the configuration files that define your cloud. Any changes made to your cloud configuration files must be committed to the locally hosted git repository to take effect. Read more information on Git at https://git-scm.com/.

2.1.3 Preparation #

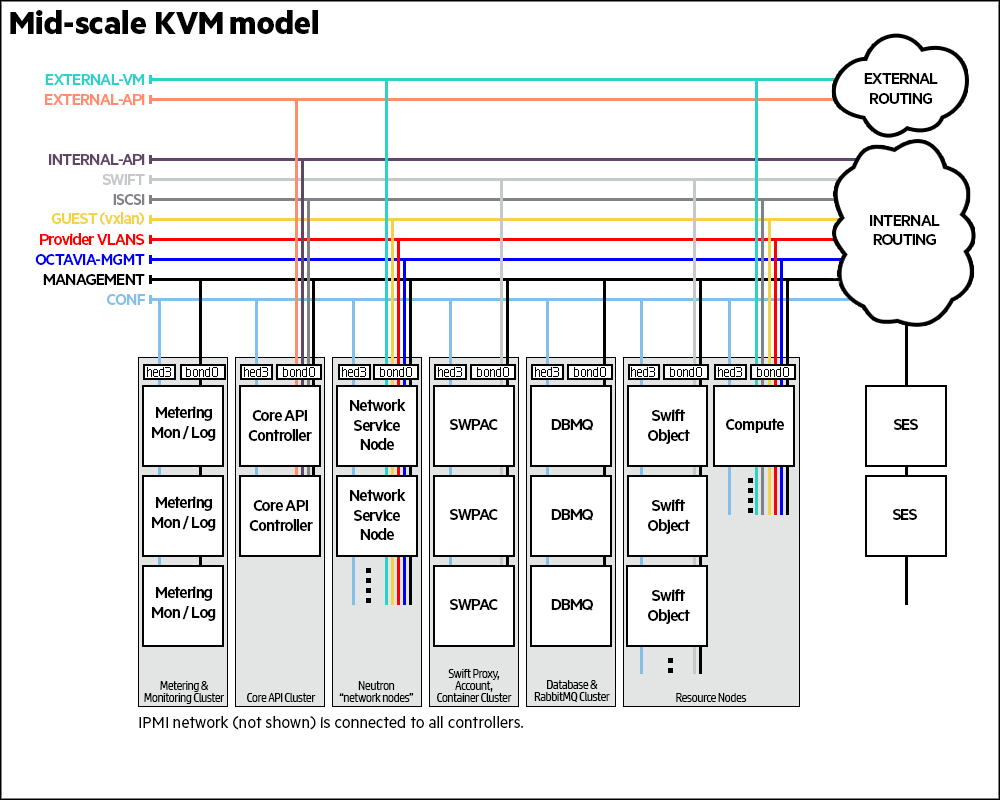

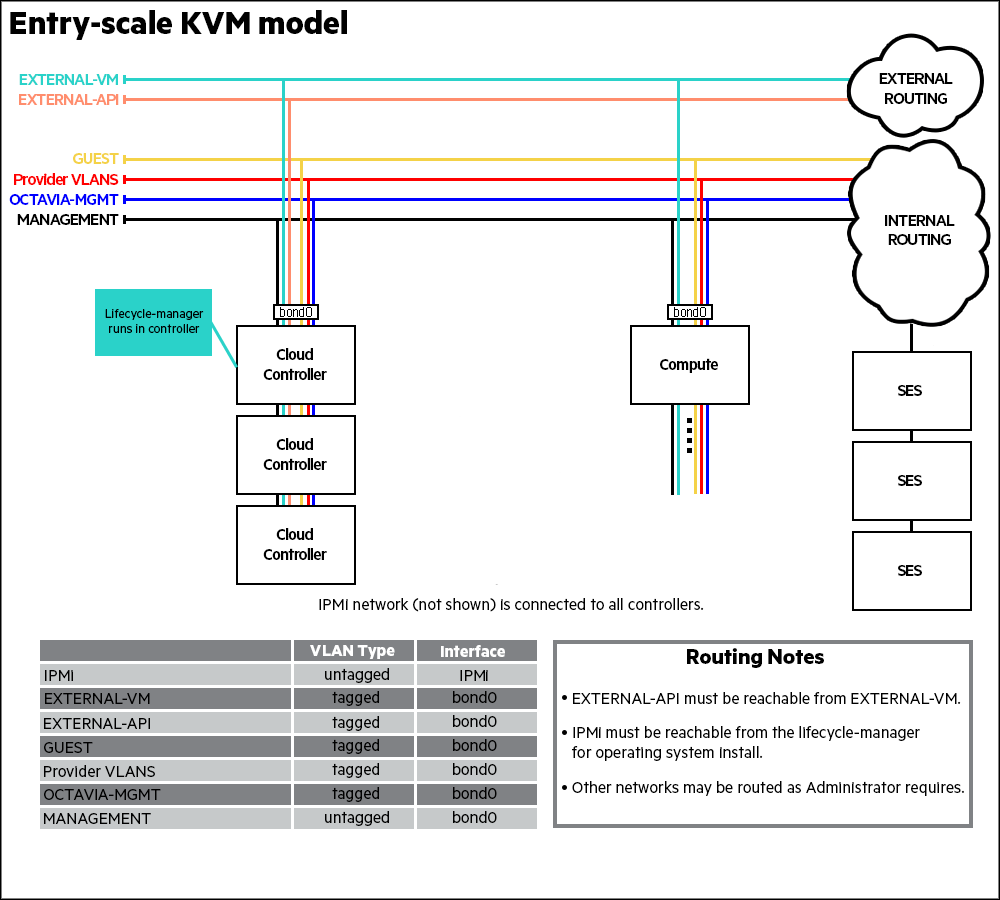

Successfully deploying a SUSE OpenStack Cloud environment is a large endeavor, but it is not complicated. For a successful deployment, you must put a number of components in place before rolling out your cloud. Most importantly, a basic SUSE OpenStack Cloud requires the proper network infrastructure. Because SUSE OpenStack Cloud segregates the network traffic of many of its elements, if the necessary networks, routes, and firewall access rules are not in place, communication required for a successful deployment will not occur.

2.1.4 Getting Started #

When your network infrastructure is in place, go ahead and set up the Cloud Lifecycle Manager. This is the server that will orchestrate the deployment of the rest of your cloud. It is also the server you will run most of your deployment and management commands on.

Set up the Cloud Lifecycle Manager

Download the installation media

Obtain a copy of the SUSE OpenStack Cloud installation media, and make sure that it is accessible by the server that you are installing it on. Your method of doing this may vary. For instance, some may choose to load the installation ISO on a USB drive and physically attach it to the server, while others may run the IPMI Remote Console and attach the ISO to a virtual disc drive.

Install the operating system

Boot your server, using the installation media as the boot source.

Choose "install" from the list of options and choose your preferred keyboard layout, location, language, and other settings.

Set the address, netmask, and gateway for the primary network interface.

Create a root user account.

Proceed with the OS installation. After the installation is complete and the server has rebooted into the new OS, log in with the user account you created.

Configure the new server

SSH to your new server, and set a valid DNS nameserver in the /etc/resolv.conf file.

Set the environment variable LC_ALL:

export LC_ALL=C

You now have a server running SUSE Linux Enterprise Server (SLES). The next step is to configure this machine as a Cloud Lifecycle Manager.

Configure the Cloud Lifecycle Manager

The installation media you used to install the OS on the server also has the files that will configure your cloud. You need to mount this installation media on your new server in order to use these files.

Using the URL that you obtained the SUSE OpenStack Cloud installation media from, run wget to download the ISO file to your server:

wget INSTALLATION_ISO_URL

Now mount the ISO in the /media/cdrom/ directory:

sudo mount INSTALLATION_ISO /media/cdrom/

Unpack the tar file found in the /media/cdrom/ardana/ directory where you just mounted the ISO:

tar xvf /media/cdrom/ardana/ardana-x.x.x-x.tar

Now you will install and configure all the components needed to turn this server into a Cloud Lifecycle Manager. Run the ardana-init.bash script from the uncompressed tar file:

~/ardana-x.x.x/ardana-init.bash

The ardana-init.bash script prompts you to enter an optional SSH passphrase. This passphrase protects the RSA key used to SSH to the other cloud nodes. This is an optional passphrase, and you can skip it by pressing Enter at the prompt.

The ardana-init.bash script automatically installs and configures everything needed to set up this server as the lifecycle manager for your cloud.

When the script has finished running, you can proceed to the next step, editing your input files.

Edit your input files

Your SUSE OpenStack Cloud input files are where you define your cloud infrastructure and how it runs. The input files define options such as which servers are included in your cloud, the type of disks the servers use, and their network configuration. The input files also define which services your cloud will provide and use, the network architecture, and the storage backends for your cloud.

There are several example configurations, which you can find on your Cloud Lifecycle Manager in the ~/openstack/examples/ directory.

The simplest way to set up your cloud is to copy the contents of one of these example configurations to your ~/openstack/my_cloud/definition/ directory. You can then edit the copied files and define your cloud.

cp -r ~/openstack/examples/CHOSEN_EXAMPLE/* ~/openstack/my_cloud/definition/

Edit the files in your ~/openstack/my_cloud/definition/ directory to define your cloud.
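As a small safeguard around the copy step, the sketch below wraps it in a function that refuses to run when the chosen example directory does not exist. The function name `copy_example` is hypothetical. Unlike the `cp -r .../*` form shown above, it copies with `SOURCE/.`, which also picks up hidden files, and it creates the destination directory if it is missing.

```shell
# copy_example EXAMPLE_DIR DEFINITION_DIR
# Copies the contents of one example configuration into the definition
# directory. Fails with a message if the example directory does not exist.
copy_example() {
  if [ ! -d "$1" ]; then
    echo "no such example directory: $1" >&2
    return 1
  fi
  mkdir -p "$2" && cp -r "$1"/. "$2"/
}

# Example call (CHOSEN_EXAMPLE is a placeholder, as in the text above):
# copy_example ~/openstack/examples/CHOSEN_EXAMPLE ~/openstack/my_cloud/definition
```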

Commit your changes

When you finish editing the necessary input files, stage them, and then commit the changes to the local Git repository:

cd ~/openstack/ardana/ansible
git add -A
git commit -m "My commit message"

Image your servers

Now that you have finished editing your input files, you can deploy the configuration to the servers that will comprise your cloud.

Image the servers. You will install the SLES operating system across all the servers in your cloud, using Ansible playbooks to trigger the process.

The following playbook confirms that your servers are accessible over their IPMI ports, which is a prerequisite for the imaging process:

ansible-playbook -i hosts/localhost bm-power-status.yml

Now validate that your cloud configuration files have proper YAML syntax by running the config-processor-run.yml playbook:

ansible-playbook -i hosts/localhost config-processor-run.yml

If you receive an error when running the preceding playbook, one or more of your configuration files has an issue. Refer to the output of the Ansible playbook, and look for clues in the Ansible log file, found at ~/.ansible/ansible.log.

The next step is to prepare your imaging system, Cobbler, to deploy operating systems to all your cloud nodes:

ansible-playbook -i hosts/localhost cobbler-deploy.yml

Now you can image your cloud nodes. You will use an Ansible playbook to trigger Cobbler to deploy operating systems to all the nodes you specified in your input files:

ansible-playbook -i hosts/localhost bm-reimage.yml

The bm-reimage.yml playbook performs the following operations:

- Powers down the servers.

- Sets the servers to boot from a network interface.

- Powers on the servers and performs a PXE OS installation.

- Waits for the servers to power themselves down as part of a successful OS installation. This can take some time.

- Sets the servers to boot from their local hard disks and powers on the servers.

- Waits for the SSH service to start on the servers and verifies that they have the expected host-key signature.
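The four imaging playbooks in this step are run one after another, and a failure in any of them should stop the sequence. A minimal sketch of that pattern follows. The function name and the `PLAYBOOK_RUNNER` variable are hypothetical conveniences for this example, and it assumes you run it from the `~/openstack/ardana/ansible` directory used in the commit step.

```shell
# PLAYBOOK_RUNNER is a hypothetical override hook: it defaults to the real
# ansible-playbook command and can be set to "echo" for a dry run.
PLAYBOOK_RUNNER="${PLAYBOOK_RUNNER:-ansible-playbook}"

# run_imaging_playbooks
# Runs the imaging playbooks in the order given in this section and stops
# at the first one that fails.
run_imaging_playbooks() {
  for playbook in bm-power-status.yml config-processor-run.yml \
                  cobbler-deploy.yml bm-reimage.yml; do
    "$PLAYBOOK_RUNNER" -i hosts/localhost "$playbook" || {
      echo "playbook failed: $playbook" >&2
      return 1
    }
  done
}
```

Setting `PLAYBOOK_RUNNER=echo` before calling the function prints the arguments each playbook would be run with instead of running it, which is a cheap way to check the order.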

Deploy your cloud

Now that your servers are running the SLES operating system, it is time to configure them for the roles they will play in your new cloud.

Prepare the Cloud Lifecycle Manager to deploy your cloud configuration to all the nodes:

ansible-playbook -i hosts/localhost ready-deployment.yml

NOTE: The preceding playbook creates a new directory, ~/scratch/ansible/next/ardana/ansible/, from which you will run many of the following commands.

(Optional) If you are reusing servers or disks to run your cloud, you can wipe the disks of your newly imaged servers by running the wipe_disks.yml playbook:

cd ~/scratch/ansible/next/ardana/ansible/
ansible-playbook -i hosts/verb_hosts wipe_disks.yml

The wipe_disks.yml playbook removes any existing data from the drives on your new servers. This can be helpful if you are reusing servers or disks. This action will not affect the OS partitions on the servers.

NOTE: The wipe_disks.yml playbook is only meant to be run on systems immediately after running bm-reimage.yml. If used for any other case, it may not wipe all of the expected partitions. For example, if site.yml fails, you cannot start fresh by running wipe_disks.yml. You must bm-reimage the node first and then run wipe_disks.

Now it is time to deploy your cloud. Do this by running the site.yml playbook, which pushes the configuration you defined in the input files out to all the servers that will host your cloud.

cd ~/scratch/ansible/next/ardana/ansible/
ansible-playbook -i hosts/verb_hosts site.yml

The site.yml playbook installs packages, starts services, configures network interface settings, sets iptables firewall rules, and more. Upon successful completion of this playbook, your SUSE OpenStack Cloud will be in place and in a running state. This playbook can take up to six hours to complete.

SSH to your nodes

Now that you have successfully run site.yml, your cloud will be up and running. You can verify connectivity to your nodes by connecting to each one by using SSH. You can find the IP addresses of your nodes by viewing the /etc/hosts file.

For security reasons, you can only SSH to your nodes from the Cloud Lifecycle Manager. SSH connections from any machine other than the Cloud Lifecycle Manager will be refused by the nodes.

From the Cloud Lifecycle Manager, SSH to your nodes:

ssh <management IP address of node>

Also note that SSH is limited to your cloud's management network. Each node has an address on the management network, and you can find this address by reading the /etc/hosts or server_info.yml file.
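To find the management addresses without reading the hosts file by eye, a sketch like the following may help. It assumes that management hostnames end in `-mgmt`, as in the `ardana-cp1-comp0001-mgmt` style of name used elsewhere in this guide; adjust the pattern if your naming differs. The function name `list_mgmt_hosts` is hypothetical.

```shell
# list_mgmt_hosts [HOSTS_FILE]
# Prints "ADDRESS NAME" for every hosts-file entry whose name ends in
# "-mgmt" (an assumed naming convention). Defaults to /etc/hosts.
list_mgmt_hosts() {
  awk '
    /^[[:space:]]*#/ { next }            # skip comment lines
    { for (i = 2; i <= NF; i++)
        if ($i ~ /-mgmt$/) print $1, $i }
  ' "${1:-/etc/hosts}"
}

# Possible use from the Cloud Lifecycle Manager, one quick SSH probe per node:
# for host in $(list_mgmt_hosts | awk '{ print $2 }'); do
#   ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true && echo "$host ok"
# done
```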

2.2 Installing the Command-Line Clients #

During the installation, by default, the suite of OpenStack command-line tools is installed on the Cloud Lifecycle Manager and the control plane in your environment. You can learn more about these in the OpenStack documentation here: OpenStackClient.

If you wish to install the command-line interfaces on other nodes in your environment, you can use either of the two methods described below.

2.2.1 Installing the CLI tools using the input model #

During the initial install phase of your cloud you can edit your input model to request that the command-line clients be installed on any of the node clusters in your environment. To do so, follow these steps:

Log in to the Cloud Lifecycle Manager.

Edit your control_plane.yml file. Full path:

~/openstack/my_cloud/definition/data/control_plane.yml

In this file you will see a list of service-components to be installed on each of your clusters. These clusters will be divided per role, with your controller node cluster likely coming at the beginning. Here you will see a list of each of the clients that can be installed. These include:

keystone-client
glance-client
cinder-client
nova-client
neutron-client
swift-client
heat-client
openstack-client
monasca-client
barbican-client
designate-client

For each client you want to install, specify the name under the service-components section for the cluster you want to install it on.

So, for example, if you would like to install the nova and neutron clients on your Compute node cluster, you can do so by adding the nova-client and neutron-client services, like this:

resources:
  - name: compute
    resource-prefix: comp
    server-role: COMPUTE-ROLE
    allocation-policy: any
    min-count: 0
    service-components:
      - ntp-client
      - nova-compute
      - nova-compute-kvm
      - neutron-l3-agent
      - neutron-metadata-agent
      - neutron-openvswitch-agent
      - nova-client
      - neutron-client

NOTE: This example uses the entry-scale-kvm sample file. Your model may be different so use this as a guide but do not copy and paste the contents of this example into your input model.

Commit your configuration to the local git repo, as follows:

cd ~/openstack/ardana/ansible
git add -A
git commit -m "My config or other commit message"

Continue with the rest of your installation.
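Before you commit, it can be useful to confirm that the client entries really made it into control_plane.yml. The sketch below is a plain text match on `- NAME` list items, not a YAML parse, so it cannot tell you which cluster or resource group an entry belongs to. The function name `has_service_component` is hypothetical.

```shell
# has_service_component FILE NAME
# Succeeds if NAME appears as a YAML list item ("- NAME") anywhere in FILE.
# Text match only: it does not check which cluster the item is listed under.
has_service_component() {
  grep -Eq "^[[:space:]]*-[[:space:]]+$2[[:space:]]*\$" "$1"
}

# Example call:
# has_service_component ~/openstack/my_cloud/definition/data/control_plane.yml nova-client \
#   && echo "nova-client is listed"
```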

2.2.2 Installing the CLI tools using Ansible #

At any point after your initial installation you can install the command-line clients on any of the nodes in your environment. To do so, follow these steps:

Log in to the Cloud Lifecycle Manager.

Obtain the hostname for the nodes you want to install the clients on by looking in your hosts file:

cat /etc/hosts

Install the clients using this playbook, separating multiple hostnames with commas:

cd ~/scratch/ansible/next/ardana/ansible
ansible-playbook -i hosts/verb_hosts -e "install_package=<client_name>" client-deploy.yml -e "install_hosts=<hostname>"

So, for example, if you would like to install the nova client on two of your Compute nodes with hostnames ardana-cp1-comp0001-mgmt and ardana-cp1-comp0002-mgmt, you can use this syntax:

cd ~/scratch/ansible/next/ardana/ansible
ansible-playbook -i hosts/verb_hosts -e "install_package=novaclient" client-deploy.yml -e "install_hosts=ardana-cp1-comp0001-mgmt,ardana-cp1-comp0002-mgmt"

Once the playbook completes successfully, you should be able to SSH to those nodes and, using the proper credentials, authenticate and use the command-line interfaces you have installed.
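When the host list is long, assembling the comma-separated `install_hosts` value by hand is error-prone. The following sketch builds the command line shown in this section from a package name and a list of hostnames. It only prints the command rather than running it, and the function name `client_deploy_command` is hypothetical.

```shell
# client_deploy_command PACKAGE HOST [HOST...]
# Prints the client-deploy.yml command line with the hosts joined by commas.
client_deploy_command() {
  package="$1"
  shift
  hosts=""
  for host in "$@"; do
    hosts="${hosts:+$hosts,}$host"     # add a comma only between entries
  done
  printf 'ansible-playbook -i hosts/verb_hosts -e "install_package=%s" client-deploy.yml -e "install_hosts=%s"\n' \
    "$package" "$hosts"
}
```

Calling it with `novaclient` and the two hostnames from the example reproduces the command given in this section, which you can then review and run from `~/scratch/ansible/next/ardana/ansible`.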

2.3 Cloud Admin Actions with the Command Line #

Cloud admins can use the command line tools to perform domain admin tasks such as user and project administration.

2.3.1 Creating Additional Cloud Admins #

You can create additional Cloud Admins to help with the administration of your cloud.

keystone identity service query and administration tasks can be performed using the OpenStack command line utility. The utility is installed by the Cloud Lifecycle Manager onto the Cloud Lifecycle Manager.

keystone administration tasks should be performed by an admin user with a token scoped to the default domain via the keystone v3 identity API. These settings are preconfigured in the file ~/keystone.osrc. By default, keystone.osrc is configured with the admin endpoint of keystone. If the admin endpoint is not accessible from your network, change OS_AUTH_URL to point to the public endpoint.

2.3.2 Command Line Examples #

For a full list of OpenStackClient commands, see OpenStackClient Command List.

Sourcing the keystone Administration Credentials

You can set the environment variables needed for identity administration by sourcing the keystone.osrc file created by the Cloud Lifecycle Manager:

source ~/keystone.osrc

List users in the default domain

These users are created by the Cloud Lifecycle Manager in the MySQL back end:

openstack user list

Example output:

$ openstack user list
+----------------------------------+------------------+
| ID                               | Name             |
+----------------------------------+------------------+
| 155b68eda9634725a1d32c5025b91919 | heat             |
| 303375d5e44d48f298685db7e6a4efce | octavia          |
| 40099e245a394e7f8bb2aa91243168ee | logging          |
| 452596adbf4d49a28cb3768d20a56e38 | admin            |
| 76971c3ad2274820ad5347d46d7560ec | designate        |
| 7b2dc0b5bb8e4ffb92fc338f3fa02bf3 | hlm_backup       |
| 86d345c960e34c9189519548fe13a594 | barbican         |
| 8e7027ab438c4920b5853d52f1e08a22 | nova_monasca     |
| 9c57dfff57e2400190ab04955e7d82a0 | barbican_service |
| a3f99bcc71b242a1bf79dbc9024eec77 | nova             |
| aeeb56fc4c4f40e0a6a938761f7b154a | glance-check     |
| af1ef292a8bb46d9a1167db4da48ac65 | cinder           |
| af3000158c6d4d3d9257462c9cc68dda | demo             |
| b41a7d0cb1264d949614dc66f6449870 | swift            |
| b78a2b17336b43368fb15fea5ed089e9 | cinderinternal   |
| bae1718dee2d47e6a75cd6196fb940bd | monasca          |
| d4b9b32f660943668c9f5963f1ff43f9 | ceilometer       |
| d7bef811fb7e4d8282f19fb3ee5089e9 | swift-monitor    |
| e22bbb2be91342fd9afa20baad4cd490 | neutron          |
| ec0ad2418a644e6b995d8af3eb5ff195 | glance           |
| ef16c37ec7a648338eaf53c029d6e904 | swift-dispersion |
| ef1a6daccb6f4694a27a1c41cc5e7a31 | glance-swift     |
| fed3a599b0864f5b80420c9e387b4901 | monasca-agent    |
+----------------------------------+------------------+

List domains created by the installation process:

openstack domain list

Example output:

$ openstack domain list
+----------------------------------+---------+---------+----------------------------------------------------------------------+
| ID                               | Name    | Enabled | Description                                                          |
+----------------------------------+---------+---------+----------------------------------------------------------------------+
| 6740dbf7465a4108a36d6476fc967dbd | heat    | True    | Owns users and projects created by heat                              |
| default                          | Default | True    | Owns users and tenants (i.e. projects) available on Identity API v2. |
+----------------------------------+---------+---------+----------------------------------------------------------------------+

List the roles:

openstack role list

Example output:

$ openstack role list
+----------------------------------+---------------------------+
| ID                               | Name                      |
+----------------------------------+---------------------------+
| 0be3da26cd3f4cd38d490b4f1a8b0c03 | designate_admin           |
| 13ce16e4e714473285824df8188ee7c0 | monasca-agent             |
| 160f25204add485890bc95a6065b9954 | key-manager:service-admin |
| 27755430b38c411c9ef07f1b78b5ebd7 | monitor                   |
| 2b8eb0a261344fbb8b6b3d5934745fe1 | key-manager:observer      |
| 345f1ec5ab3b4206a7bffdeb5318bd32 | admin                     |
| 49ba3b42696841cea5da8398d0a5d68e | nova_admin                |
| 5129400d4f934d4fbfc2c3dd608b41d9 | ResellerAdmin             |
| 60bc2c44f8c7460a9786232a444b56a5 | neutron_admin             |
| 654bf409c3c94aab8f929e9e82048612 | cinder_admin              |
| 854e542baa144240bfc761cdb5fe0c07 | monitoring-delegate       |
| 8946dbdfa3d346b2aa36fa5941b43643 | key-manager:auditor       |
| 901453d9a4934610ad0d56434d9276b4 | key-manager:admin         |
| 9bc90d1121544e60a39adbfe624a46bc | monasca-user              |
| 9fe2a84a3e7443ae868d1009d6ab4521 | service                   |
| 9fe2ff9ee4384b1894a90878d3e92bab | member                    |
| a24d4e0a5de14bffbe166bfd68b36e6a | swiftoperator             |
| ae088fcbf579425580ee4593bfa680e5 | heat_stack_user           |
| bfba56b2562942e5a2e09b7ed939f01b | keystoneAdmin             |
| c05f54cf4bb34c7cb3a4b2b46c2a448b | glance_admin              |
| fe010be5c57240db8f559e0114a380c1 | key-manager:creator       |
+----------------------------------+---------------------------+

List admin user role assignment within default domain:

openstack role assignment list --user admin --domain default

Example output:

# This indicates that the admin user is assigned the admin role within the default domain
ardana > openstack role assignment list --user admin --domain default
+----------------------------------+----------------------------------+-------+---------+---------+
| Role                             | User                             | Group | Project | Domain  |
+----------------------------------+----------------------------------+-------+---------+---------+
| b398322103504546a070d607d02618ad | fed1c038d9e64392890b6b44c38f5bbb |       |         | default |
+----------------------------------+----------------------------------+-------+---------+---------+

Create a new user in default domain:

openstack user create --domain default --password-prompt --email <email_address> --description <description> --enable <username>

Example output showing the creation of a user named testuser with email address test@example.com and a description of Test User:

ardana > openstack user create --domain default --password-prompt --email test@example.com --description "Test User" --enable testuser
User Password:
Repeat User Password:
+-------------+----------------------------------+
| Field       | Value                            |
+-------------+----------------------------------+
| description | Test User                        |
| domain_id   | default                          |
| email       | test@example.com                 |
| enabled     | True                             |
| id          | 8aad69acacf0457e9690abf8c557754b |
| name        | testuser                         |
+-------------+----------------------------------+

Assign admin role for testuser within the default domain:

openstack role add admin --user <username> --domain default
openstack role assignment list --user <username> --domain default

Example output:

# Just for demonstration purposes - do not do this in a production environment!
ardana > openstack role add admin --user testuser --domain default
ardana > openstack role assignment list --user testuser --domain default
+----------------------------------+----------------------------------+-------+---------+---------+
| Role                             | User                             | Group | Project | Domain  |
+----------------------------------+----------------------------------+-------+---------+---------+
| b398322103504546a070d607d02618ad | 8aad69acacf0457e9690abf8c557754b |       |         | default |
+----------------------------------+----------------------------------+-------+---------+---------+

2.3.3 Assigning the default service admin roles #

The following examples illustrate how you can assign each of the new service admin roles to a user.

Assigning the glance_admin role

A user must have the role of admin in order to assign the glance_admin role. To assign the role, you will set the environment variables needed for the identity service administrator.

First, source the identity service credentials:

source ~/keystone.osrc

You can add the glance_admin role to a user on a project with this command:

openstack role add --user <username> --project <project_name> glance_admin

Example, showing a user named testuser being granted the glance_admin role in the test_project project:

openstack role add --user testuser --project test_project glance_admin

You can confirm the role assignment by listing out the roles:

openstack role assignment list --user <username>

Example output:

ardana > openstack role assignment list --user testuser
+----------------------------------+----------------------------------+-------+----------------------------------+--------+-----------+
| Role                             | User                             | Group | Project                          | Domain | Inherited |
+----------------------------------+----------------------------------+-------+----------------------------------+--------+-----------+
| 46ba80078bc64853b051c964db918816 | 8bcfe10101964e0c8ebc4de391f3e345 |       | 0ebbf7640d7948d2a17ac08bbbf0ca5b |        | False     |
+----------------------------------+----------------------------------+-------+----------------------------------+--------+-----------+

Note that only the role ID is displayed. To get the role name, execute the following:

openstack role show <role_id>

Example output:

ardana > openstack role show 46ba80078bc64853b051c964db918816
+-------+----------------------------------+
| Field | Value                            |
+-------+----------------------------------+
| id    | 46ba80078bc64853b051c964db918816 |
| name  | glance_admin                     |
+-------+----------------------------------+

To demonstrate that the user has glance admin privileges, authenticate with that user's credentials and then upload and publish an image. Only a user with the admin or glance_admin role can publish an image.

The easiest way to do this is to make a copy of the service.osrc file and edit it with your user credentials. You can do that with this command:

cp ~/service.osrc ~/user.osrc

Using your preferred editor, edit the user.osrc file and replace the values for the following entries to match your user credentials:

export OS_USERNAME=<username>
export OS_PASSWORD=<password>

You will also need to edit the following lines for your environment:

## Change these values from 'unset' to 'export'
export OS_PROJECT_NAME=<project_name>
export OS_PROJECT_DOMAIN_NAME=Default

Here is an example of the edited file:

unset OS_DOMAIN_NAME
export OS_IDENTITY_API_VERSION=3
export OS_AUTH_VERSION=3
export OS_PROJECT_NAME=test_project
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USERNAME=testuser
export OS_USER_DOMAIN_NAME=Default
export OS_PASSWORD=testuser
export OS_AUTH_URL=http://192.168.245.9:35357/v3
export OS_ENDPOINT_TYPE=internalURL
# OpenstackClient uses OS_INTERFACE instead of OS_ENDPOINT
export OS_INTERFACE=internal
export OS_CACERT=/etc/ssl/certs/ca-certificates.crt

Source the environment variables for your user:

source ~/user.osrc

Upload an image and make it public:

openstack image create --public --container-format bare --disk-format qcow2 --file uploadme.txt "upload me"

Example output:

+------------------+--------------------------------------+
| Property         | Value                                |
+------------------+--------------------------------------+
| checksum         | dd75c3b840a16570088ef12f6415dd15     |
| container_format | bare                                 |
| created_at       | 2016-01-06T23:31:27Z                 |
| disk_format      | qcow2                                |
| id               | cf1490f4-1eb1-477c-92e8-15ebbe91da03 |
| min_disk         | 0                                    |
| min_ram          | 0                                    |
| name             | upload me                            |
| owner            | bd24897932074780a20b780c4dde34c7     |
| protected        | False                                |
| size             | 10                                   |
| status           | active                               |
| tags             | []                                   |
| updated_at       | 2016-01-06T23:31:31Z                 |
| virtual_size     | None                                 |
| visibility       | public                               |
+------------------+--------------------------------------+

Note: You can use the command openstack help image create to get the full syntax for this command.

Assigning the nova_admin role

A user must have the role of admin in order to assign the nova_admin role. To assign the role, you will set the environment variables needed for the identity service administrator.

First, source the identity service credentials:

source ~/keystone.osrc

You can add the nova_admin role to a user on a project with this command:

openstack role add --user <username> --project <project_name> nova_admin

Example, showing a user named testuser being granted the nova_admin role in the test_project project:

openstack role add --user testuser --project test_project nova_admin

You can confirm the role assignment by listing out the roles:

openstack role assignment list --user <username>

Example output:

ardana > openstack role assignment list --user testuser
+----------------------------------+----------------------------------+-------+----------------------------------+--------+-----------+
| Role                             | User                             | Group | Project                          | Domain | Inherited |
+----------------------------------+----------------------------------+-------+----------------------------------+--------+-----------+
| 8cdb02bab38347f3b65753099f3ab73c | 8bcfe10101964e0c8ebc4de391f3e345 |       | 0ebbf7640d7948d2a17ac08bbbf0ca5b |        | False     |
+----------------------------------+----------------------------------+-------+----------------------------------+--------+-----------+

Note that only the role ID is displayed. To get the role name, execute the following:

openstack role show <role_id>

Example output:

ardana > openstack role show 8cdb02bab38347f3b65753099f3ab73c
+-------+----------------------------------+
| Field | Value                            |
+-------+----------------------------------+
| id    | 8cdb02bab38347f3b65753099f3ab73c |
| name  | nova_admin                       |
+-------+----------------------------------+

To demonstrate that the user has nova admin privileges, authenticate with that user's credentials and then list the virtual machines in all projects. Only a user with the admin or nova_admin role can view virtual machines that belong to other projects.

The easiest way to do this is to make a copy of the service.osrc file and edit it with your user credentials. You can do that with this command:

cp ~/service.osrc ~/user.osrc

Using your preferred editor, edit the user.osrc file and replace the values for the following entries to match your user credentials:

export OS_USERNAME=<username>
export OS_PASSWORD=<password>

You will also need to edit the following lines for your environment:

## Change these values from 'unset' to 'export'
export OS_PROJECT_NAME=<project_name>
export OS_PROJECT_DOMAIN_NAME=Default

Here is an example of the edited file:

unset OS_DOMAIN_NAME
export OS_IDENTITY_API_VERSION=3
export OS_AUTH_VERSION=3
export OS_PROJECT_NAME=test_project
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USERNAME=testuser
export OS_USER_DOMAIN_NAME=Default
export OS_PASSWORD=testuser
export OS_AUTH_URL=http://192.168.245.9:35357/v3
export OS_ENDPOINT_TYPE=internalURL
# OpenstackClient uses OS_INTERFACE instead of OS_ENDPOINT
export OS_INTERFACE=internal
export OS_CACERT=/etc/ssl/certs/ca-certificates.crt

Source the environment variables for your user:

source ~/user.osrc

List all of the virtual machines in the project specified in user.osrc:

openstack server list

Example output showing no virtual machines, because there are no virtual machines created on the project specified in the user.osrc file:

+--------------------------------------+-------------------------------------------------------+--------+-----------------------------------------------------------------+
| ID                                   | Name                                                  | Status | Networks                                                        |
+--------------------------------------+-------------------------------------------------------+--------+-----------------------------------------------------------------+
+--------------------------------------+-------------------------------------------------------+--------+-----------------------------------------------------------------+

For this demonstration, we do have a virtual machine associated with a different project and because your user has nova_admin permissions, you can view those virtual machines using a slightly different command:

openstack server list --all-projects

Example output, now showing a virtual machine:

ardana > openstack server list --all-projects
+--------------------------------------+-------------------------------------------------------+--------+-----------------------------------------------------------------+
| ID                                   | Name                                                  | Status | Networks                                                        |
+--------------------------------------+-------------------------------------------------------+--------+-----------------------------------------------------------------+
| da4f46e2-4432-411b-82f7-71ab546f91f3 | testvml                                               | ACTIVE |                                                                 |
+--------------------------------------+-------------------------------------------------------+--------+-----------------------------------------------------------------+

You can also now delete virtual machines in other projects by using the --all-projects switch:

openstack server delete --all-projects <instance_id>

Example, showing us deleting the instance in the previous step:

openstack server delete --all-projects da4f46e2-4432-411b-82f7-71ab546f91f3

You can get a full list of available commands by using this:

openstack -h

You can perform the same steps as above for the neutron and cinder service admin roles:

neutron_admin
cinder_admin
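When you verify several service admin roles, looking up each role ID with openstack role show becomes repetitive. The following Python sketch resolves role IDs to names in one pass. It assumes you captured the output of openstack role list -f json and openstack role assignment list --user <username> -f json, and that the JSON keys match the column headers shown in the tables above (ID, Name, Role, Project). The function name and the sample data layout are illustrative.

```python
import json

# Sample data shaped like the tables in this section (assumed JSON layout).
SAMPLE_ROLES = json.dumps([
    {"ID": "46ba80078bc64853b051c964db918816", "Name": "glance_admin"},
    {"ID": "8cdb02bab38347f3b65753099f3ab73c", "Name": "nova_admin"},
])
SAMPLE_ASSIGNMENTS = json.dumps([
    {
        "Role": "8cdb02bab38347f3b65753099f3ab73c",
        "User": "8bcfe10101964e0c8ebc4de391f3e345",
        "Group": "",
        "Project": "0ebbf7640d7948d2a17ac08bbbf0ca5b",
        "Domain": "",
        "Inherited": False,
    },
])


def role_names_for_assignments(roles_json, assignments_json):
    """Return (role_name, project_id) pairs for each role assignment."""
    names_by_id = {role["ID"]: role["Name"] for role in json.loads(roles_json)}
    pairs = []
    for assignment in json.loads(assignments_json):
        role_id = assignment["Role"]
        # Fall back to the raw ID if the role is missing from the role list.
        name = names_by_id.get(role_id, role_id)
        pairs.append((name, assignment.get("Project", "")))
    return pairs


if __name__ == "__main__":
    for name, project in role_names_for_assignments(SAMPLE_ROLES, SAMPLE_ASSIGNMENTS):
        print(f"{name} on project {project}")
```

Check the key names against the JSON produced by your installed client version before relying on the result.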

2.3.4 Customize policy.json on the Cloud Lifecycle Manager #

One way to deploy policy.json for a service is by going to each of the target nodes and making changes there. This is not necessary anymore. This process has been streamlined and policy.json files can be edited on the Cloud Lifecycle Manager and then deployed to nodes. Please exercise caution when modifying policy.json files. It is best to validate the changes in a non-production environment before rolling out policy.json changes into production. It is not recommended that you make policy.json changes without a way to validate the desired policy behavior. Updated policy.json files can be deployed using the appropriate <service_name>-reconfigure.yml playbook.

2.3.5 Roles #

Service roles represent the functionality used to implement the OpenStack role based access control (RBAC) model. This is used to manage access to each OpenStack service. Roles are named and assigned per user or group for each project by the identity service. Role definition and policy enforcement are defined outside of the identity service independently by each OpenStack service.

The token generated by the identity service for each user authentication contains the role(s) assigned to that user for a particular project. When a user attempts to access a specific OpenStack service, the service parses the role from the token and compares it to the service-specific policy file. The user is then granted the resource access that the policy file defines for that role.

Each service has its own service policy file with the /etc/[SERVICE_CODENAME]/policy.json file name format where [SERVICE_CODENAME] represents a specific OpenStack service name. For example, the OpenStack nova service would have a policy file called /etc/nova/policy.json.
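The role check described above can be pictured with a small Python sketch. This is a deliberately simplified model, not the policy engine the services actually use: real policy files support a richer rule language, and the sample rules below are hypothetical. The sketch only handles rules that are empty (always allowed) or a list of role:NAME clauses joined by "or".

```python
import json

# Hypothetical, heavily simplified policy excerpt for illustration only.
SAMPLE_POLICY = json.loads("""
{
    "publicize_image": "role:admin or role:glance_admin",
    "get_image": ""
}
""")


def is_allowed(policy, action, token_roles):
    """Return True if any role from the token satisfies the rule for action."""
    rule = policy.get(action)
    if rule is None:
        # Assumption of this sketch: actions without a rule are denied.
        return False
    if rule.strip() == "":
        # An empty rule places no restriction on the caller.
        return True
    allowed_roles = set()
    for clause in rule.split(" or "):
        clause = clause.strip()
        if clause.startswith("role:"):
            allowed_roles.add(clause[len("role:"):])
    return not allowed_roles.isdisjoint(token_roles)


if __name__ == "__main__":
    print(is_allowed(SAMPLE_POLICY, "publicize_image", ["glance_admin"]))
    print(is_allowed(SAMPLE_POLICY, "publicize_image", ["member"]))
```

The point of the model is that the identity service only supplies the role names; each service decides what those names permit.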

Service policy files can be modified and deployed to control nodes from the Cloud Lifecycle Manager. Administrators are advised to validate policy changes before checking them in to the site branch of the local git repository and before rolling them into production. Do not make changes to policy files without having a way to validate them.

The policy files are located at the following site branch directory on the Cloud Lifecycle Manager.

~/openstack/ardana/ansible/roles/

For test and validation, policy files can be modified in a non-production environment from the ~/scratch/ directory. For a specific policy file, run a search for policy.json. To deploy policy changes for a service, run the service specific reconfiguration playbook (for example, nova-reconfigure.yml). For a complete list of reconfiguration playbooks, change directories to ~/scratch/ansible/next/ardana/ansible and run this command:

ls -l | grep reconfigure

Comments added to any *.j2 files (including templates) must follow proper comment syntax. Otherwise you may see errors when running the config-processor or any of the service playbooks.
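Before running a reconfiguration playbook, a quick syntax check of an edited policy file can catch mistakes such as a trailing comma. The following Python sketch is one possible pre-check, not a product tool. It assumes the file is plain JSON; a template that still contains Jinja2 expressions will not parse and needs to be rendered first. The function name is illustrative.

```python
import json
import sys


def check_policy_text(text):
    """Return (ok, message) describing whether text is a JSON object of rules."""
    try:
        rules = json.loads(text)
    except json.JSONDecodeError as exc:
        return False, f"line {exc.lineno}, column {exc.colno}: {exc.msg}"
    if not isinstance(rules, dict):
        return False, "top-level value must be a JSON object"
    return True, f"{len(rules)} rules parsed"


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python check_policy.py /path/to/policy.json
    with open(sys.argv[1], encoding="utf-8") as handle:
        ok, message = check_policy_text(handle.read())
    print(f"{sys.argv[1]}: {message}")
    sys.exit(0 if ok else 1)
```

A passing check only shows that the file is well-formed JSON. It does not show that the rules produce the access behavior you intend, so validation in a non-production environment is still needed.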

2.4 Log Management and Integration #

2.4.1 Overview #

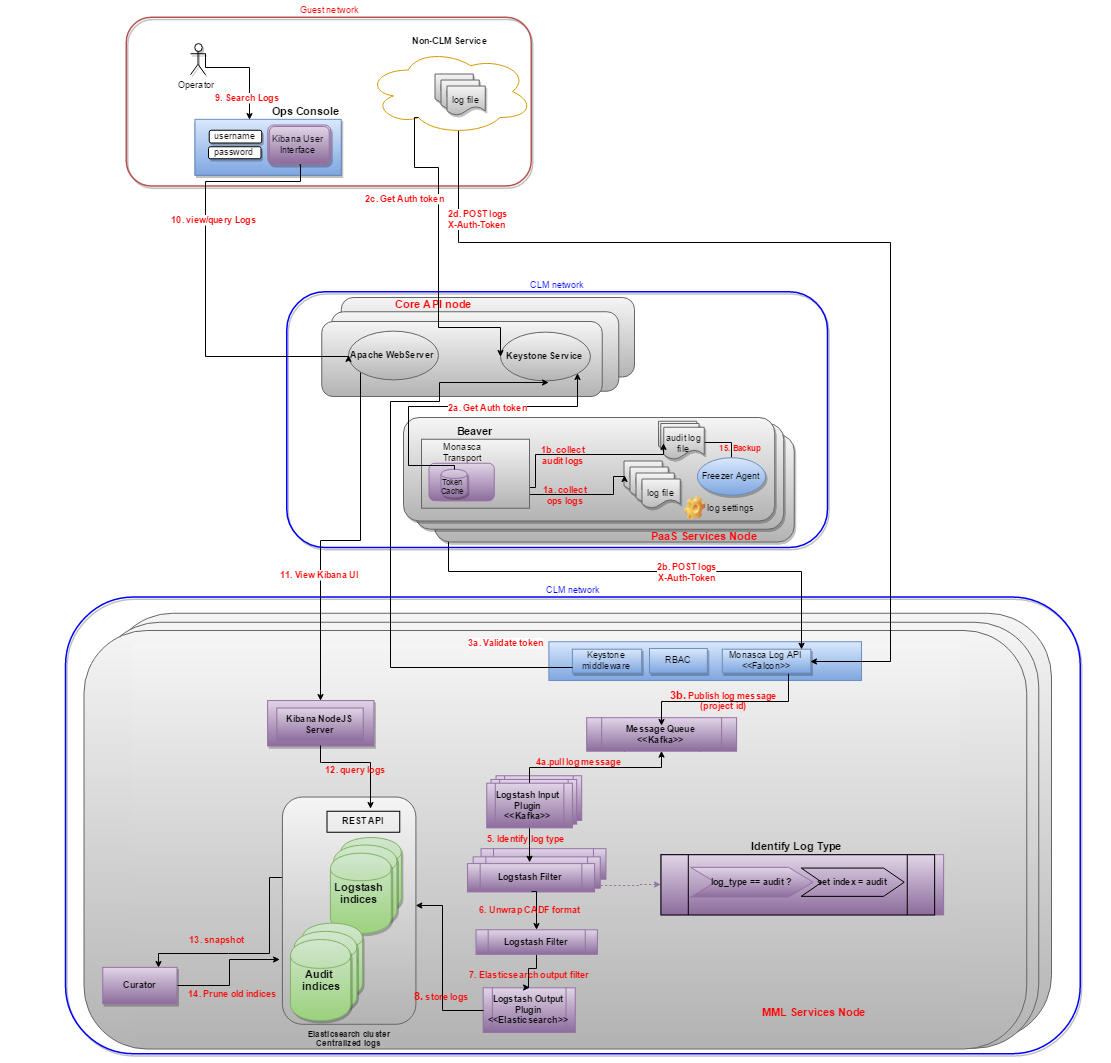

SUSE OpenStack Cloud uses the ELK (Elasticsearch, Logstash, Kibana) stack for log management across the entire cloud infrastructure. This configuration facilitates simple administration as well as integration with third-party tools. This tutorial covers how to forward your logs to a third-party tool or service, and how to access and search the Elasticsearch log stores through API endpoints.

2.4.2 The ELK stack #

The ELK logging stack consists of the Elasticsearch, Logstash, and Kibana elements.

Elasticsearch. Elasticsearch is the storage and indexing component of the ELK stack. It stores and indexes the data received from Logstash. Indexing makes your log data searchable by tools designed for querying and analyzing massive sets of data. You can query the Elasticsearch datasets from the built-in Kibana console, a third-party data analysis tool, or through the Elasticsearch API (covered later).

Logstash. Logstash reads the log data from the services running on your servers, and then aggregates and ships that data to a storage location. By default, Logstash sends the data to the Elasticsearch indexes, but it can also be configured to send data to other storage and indexing tools such as Splunk.

Kibana. Kibana provides a simple and easy-to-use method for searching, analyzing, and visualizing the log data stored in the Elasticsearch indexes. You can customize the Kibana console to provide graphs, charts, and other visualizations of your log data.

2.4.3 Using the Elasticsearch API #

You can query the Elasticsearch indexes through various language-specific APIs, as well as directly over the IP address and port that Elasticsearch exposes on your implementation. By default, Elasticsearch listens on localhost, port 9200. You can run queries directly from a terminal using curl. For example:

ardana > curl -XGET 'http://localhost:9200/_search?q=tag:yourSearchTag'

The preceding command searches all indexes for all data with the "yourSearchTag" tag.

You can also use the Elasticsearch API from outside the logging node. This method connects over the Kibana VIP address, port 5601, using basic HTTP authentication. For example, you can use the following command to perform the same search as the preceding one:

curl -u kibana:<password> kibana_vip:5601/_search?q=tag:yourSearchTag

You can further refine your search to a specific index of data, in this case the "elasticsearch" index:

ardana > curl -XGET 'http://localhost:9200/elasticsearch/_search?q=tag:yourSearchTag'

The search API is RESTful, so responses are provided in JSON format. Here's a sample (though empty) response:

{
    "took":13,
    "timed_out":false,
    "_shards":{
        "total":45,
        "successful":45,
        "failed":0
    },
    "hits":{
        "total":0,
        "max_score":null,
        "hits":[]
    }
}

2.4.4 For More Information #

You can find more detailed Elasticsearch API documentation at https://www.elastic.co/guide/en/elasticsearch/reference/current/search.html.

Review the Elasticsearch Python API documentation at http://elasticsearch-py.readthedocs.io/en/master/api.html.

Read the Elasticsearch Java API documentation at https://www.elastic.co/guide/en/elasticsearch/client/java-api/current/index.html.
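If you script against the search endpoint instead of using curl, you usually need only two things: the query URL and a few fields from the JSON response. The following Python sketch uses only the standard library and does not contact a server. The function names are illustrative, the base URL is the default described in Section 2.4.3, and the sample response is the empty one shown there. Some Elasticsearch versions report the hit total as an object instead of a number, so the sketch accepts both forms.

```python
import json
from urllib.parse import quote, urlencode

SAMPLE_RESPONSE = (
    '{"took":13,"timed_out":false,'
    '"_shards":{"total":45,"successful":45,"failed":0},'
    '"hits":{"total":0,"max_score":null,"hits":[]}}'
)


def search_url(tag, index=None, base="http://localhost:9200"):
    """Build a URI search URL, optionally limited to one index."""
    path = f"/{quote(index)}/_search" if index else "/_search"
    query = urlencode({"q": f"tag:{tag}"}, safe=":")
    return f"{base}{path}?{query}"


def summarize(response_text):
    """Extract the fields an operator typically checks first."""
    body = json.loads(response_text)
    total = body["hits"]["total"]
    if isinstance(total, dict):
        # Newer Elasticsearch releases wrap the count in an object.
        total = total["value"]
    return {
        "total_hits": total,
        "failed_shards": body["_shards"]["failed"],
        "timed_out": body["timed_out"],
    }


if __name__ == "__main__":
    print(search_url("yourSearchTag", index="elasticsearch"))
    print(summarize(SAMPLE_RESPONSE))
```

A nonzero failed_shards value or timed_out set to true means the result set may be incomplete even when hits are returned.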

2.4.5 Forwarding your logs #

You can configure Logstash to ship your logs to an outside storage and indexing system, such as Splunk. Setting up this configuration is as simple as editing a few configuration files, and then running the Ansible playbooks that implement the changes. Here are the steps.

Begin by logging in to the Cloud Lifecycle Manager.

Verify that the logging system is up and running:

ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts logging-status.yml

When the preceding playbook completes without error, proceed to the next step.

Edit the Logstash configuration file, found at the following location:

~/openstack/ardana/ansible/roles/logging-server/templates/logstash.conf.j2

Near the end of the Logstash configuration file, you will find a section for configuring Logstash output destinations. The following example demonstrates the change necessary to forward your logs to an outside server: the tcp block added at the end of the output section. The configuration block sets up a TCP connection to the destination server's IP address over port 5514.

# Logstash outputs
output {
  # Configure Elasticsearch output
  # http://www.elastic.co/guide/en/logstash/current/plugins-outputs-elasticsearch.html
  elasticsearch {
    index => "${[@metadata][es_index]"}
    hosts => ["{{ elasticsearch_http_host }}:{{ elasticsearch_http_port }}"]
    flush_size => {{ logstash_flush_size }}
    idle_flush_time => 5
    workers => {{ logstash_threads }}
  }
  # Forward Logs to Splunk on TCP port 5514 which matches the one specified in Splunk Web UI.
  tcp {
    mode => "client"
    host => "<Enter Destination listener IP address>"
    port => 5514
  }
}

Logstash can forward log data to multiple destinations, so there is no need to remove or alter the Elasticsearch section in the preceding file. However, if you choose to stop forwarding your log data to Elasticsearch, you can do so by removing the related section in this file, and then continue with the following steps.

Commit your changes to the local git repository:

ardana > cd ~/openstack/ardana/ansible
ardana > git add -A
ardana > git commit -m "Your commit message"

Run the configuration processor to check the status of all configuration files:

ardana > ansible-playbook -i hosts/localhost config-processor-run.yml

Run the ready-deployment playbook:

ardana > ansible-playbook -i hosts/localhost ready-deployment.yml

Implement the changes to the Logstash configuration file:

ardana > cd ~/scratch/ansible/next/ardana/ansible
ardana > ansible-playbook -i hosts/verb_hosts kronos-server-configure.yml

Configuring the receiving service will vary from product to product. Consult the documentation for your particular product for instructions on how to set it up to receive log files from Logstash.
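Before pointing Logstash at a production receiver, you may want to confirm that events actually arrive on the destination port. The following Python sketch starts a throwaway TCP listener that records each line it receives. It is a test aid, not a replacement for the receiving product. It assumes newline-delimited events; the actual framing depends on the codec configured for the Logstash tcp output. All function and class names are illustrative, and port 5514 is taken from the example above.

```python
import socket
import socketserver
import threading
import time


class LineCollector(socketserver.StreamRequestHandler):
    """Append every received line to the owning server's 'received' list."""

    def handle(self):
        for raw in self.rfile:
            line = raw.decode("utf-8", errors="replace").rstrip("\r\n")
            self.server.received.append(line)


def start_listener(host="0.0.0.0", port=5514):
    """Start the listener in a background thread and return the server."""
    server = socketserver.ThreadingTCPServer((host, port), LineCollector)
    server.daemon_threads = True
    server.received = []
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def send_test_event(host, port, message):
    """Send one newline-terminated line, as a stand-in for a forwarded event."""
    with socket.create_connection((host, port), timeout=5) as conn:
        conn.sendall(message.encode("utf-8") + b"\n")


def wait_for_events(server, count, timeout=10.0):
    """Wait until at least count lines arrived or the timeout expires."""
    deadline = time.monotonic() + timeout
    while len(server.received) < count and time.monotonic() < deadline:
        time.sleep(0.05)
    return list(server.received)
```

To try it locally, call start_listener("127.0.0.1", 5514), send a line with send_test_event, and inspect the list returned by wait_for_events. Call shutdown() and server_close() on the server when you are done.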

2.5 Integrating Your Logs with Splunk #

2.5.1 Integrating with Splunk #

The SUSE OpenStack Cloud 9 logging solution provides a flexible and extensible framework to centralize the collection and processing of logs from all nodes in your cloud. The logs are shipped to a highly available and fault-tolerant cluster where they are transformed and stored for better searching and reporting. The SUSE OpenStack Cloud 9 logging solution uses the ELK stack (Elasticsearch, Logstash and Kibana) as a production-grade implementation and can support other storage and indexing technologies.

You can configure Logstash, the service that aggregates and forwards the logs to a searchable index, to send the logs to a third-party target, such as Splunk.

For how to integrate the SUSE OpenStack Cloud 9 centralized logging solution with Splunk, including the steps to set up and forward logs, please refer to Section 4.1, “Splunk Integration”.

2.6 Integrating SUSE OpenStack Cloud with an LDAP System #

You can configure your SUSE OpenStack Cloud deployment to work with an outside user authentication source such as Active Directory or OpenLDAP. keystone, the SUSE OpenStack Cloud identity service, functions as the first stop for any user authorization/authentication requests. keystone can also function as a proxy for user account authentication, passing along authentication and authorization requests to any LDAP-enabled system that has been configured as an outside source. This type of integration lets you use an existing user-management system such as Active Directory and its powerful group-based organization features as a source for permissions in SUSE OpenStack Cloud.

Upon successful completion of this tutorial, your cloud will refer user authentication requests to an outside LDAP-enabled directory system, such as Microsoft Active Directory or OpenLDAP.

2.6.1 Configure your LDAP source #

To configure your SUSE OpenStack Cloud cloud to use an outside user-management source, perform the following steps:

Make sure that the LDAP-enabled system you plan to integrate with is up and running and accessible over the necessary ports from your cloud management network.

Edit the /var/lib/ardana/openstack/my_cloud/config/keystone/keystone.conf.j2 file and set the following options:

domain_specific_drivers_enabled = True
domain_configurations_from_database = False

Create a YAML file in the /var/lib/ardana/openstack/my_cloud/config/keystone/ directory that defines your LDAP connection. You can make a copy of the sample keystone-LDAP configuration file, and then edit that file with the details of your LDAP connection.

The following example copies the keystone_configure_ldap_sample.yml file and names the new file keystone_configure_ldap_my.yml:

ardana > cp /var/lib/ardana/openstack/my_cloud/config/keystone/keystone_configure_ldap_sample.yml \
  /var/lib/ardana/openstack/my_cloud/config/keystone/keystone_configure_ldap_my.yml

Edit the new file to define the connection to your LDAP source. This guide does not provide comprehensive information on all aspects of the keystone_configure_ldap.yml file. Find a complete list of keystone/LDAP configuration file options at: https://github.com/openstack/keystone/tree/stable/rocky/etc

The following file illustrates an example keystone configuration that is customized for an Active Directory connection.

keystone_domainldap_conf:

    # CA certificates file content.
    # Certificates are stored in Base64 PEM format. This may be entire LDAP server
    # certificate (in case of self-signed certificates), certificate of authority
    # which issued LDAP server certificate, or a full certificate chain (Root CA
    # certificate, intermediate CA certificate(s), issuer certificate).
    #
    cert_settings:
      cacert: |
        -----BEGIN CERTIFICATE-----

        certificate appears here

        -----END CERTIFICATE-----

    # A domain will be created in MariaDB with this name, and associated with ldap back end.
    # Installer will also generate a config file named /etc/keystone/domains/keystone.<domain_name>.conf
    #
    domain_settings:
      name: ad
      description: Dedicated domain for ad users

    conf_settings:
      identity:
        driver: ldap

      # For a full list and description of ldap configuration options, please refer to
      # http://docs.openstack.org/liberty/config-reference/content/keystone-configuration-file.html.
      #
      # Please note:
      #  1. LDAP configuration is read-only. Configuration which performs write operations (i.e. creates users, groups, etc)
      #     is not supported at the moment.
      #  2. LDAP is only supported for identity operations (reading users and groups from LDAP). Assignment
      #     operations with LDAP (i.e. managing roles, projects) are not supported.
      #  3. LDAP is configured as non-default domain. Configuring LDAP as a default domain is not supported.
      #
      ldap:
        url: ldap://YOUR_COMPANY_AD_URL
        suffix: YOUR_COMPANY_DC
        query_scope: sub
        user_tree_dn: CN=Users,YOUR_COMPANY_DC
        user: CN=admin,CN=Users,YOUR_COMPANY_DC
        password: REDACTED
        user_objectclass: user
        user_id_attribute: cn
        user_name_attribute: cn
        group_tree_dn: CN=Users,YOUR_COMPANY_DC
        group_objectclass: group
        group_id_attribute: cn
        group_name_attribute: cn
        use_pool: True
        user_enabled_attribute: userAccountControl
        user_enabled_mask: 2
        user_enabled_default: 512
        use_tls: True
        tls_req_cert: demand
        # if you are configuring multiple LDAP domains, and LDAP server certificates are issued
        # by different authorities, make sure that you place certs for all the LDAP backend domains in the
        # cacert parameter as seen in this sample yml file so that all the certs are combined in a single CA file
        # and every LDAP domain configuration points to the combined CA file.
        # Note:
        # 1. Please be advised that every time a new ldap domain is configured, the single CA file gets overwritten
        #    and hence ensure that you place certs for all the LDAP backend domains in the cacert parameter.
        # 2. There is a known issue on one cert per CA file per domain when the system processes
        #    concurrent requests to multiple LDAP domains. Using the single CA file with all certs combined
        #    shall get the system working properly.
        tls_cacertfile: /etc/keystone/ssl/certs/all_ldapdomains_ca.pem

Add your new file to the local Git repository and commit the changes.

ardana >cd ~/openstackardana >git checkout siteardana >git add -Aardana >git commit -m "Adding LDAP server integration config"Run the configuration processor and deployment preparation playbooks to validate the YAML files and prepare the environment for configuration.

    ardana > cd ~/openstack/ardana/ansible
    ardana > ansible-playbook -i hosts/localhost config-processor-run.yml
    ardana > ansible-playbook -i hosts/localhost ready-deployment.yml

Run the keystone reconfiguration playbook to implement your changes, passing the newly created YAML file as an argument to the -e@FILE_PATH parameter:

    ardana > cd ~/scratch/ansible/next/ardana/ansible
    ardana > ansible-playbook -i hosts/verb_hosts keystone-reconfigure.yml \
      -e@/var/lib/ardana/openstack/my_cloud/config/keystone/keystone_configure_ldap_my.yml

To integrate your SUSE OpenStack Cloud deployment with multiple domains, repeat these steps starting from Step 3 for each domain.
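The user_enabled_mask: 2 and user_enabled_default: 512 values in the sample configuration follow the way Active Directory stores account state in the userAccountControl bit field, where bit value 2 marks a disabled account and 512 is a normal, enabled account. The Python sketch below illustrates that bit test. It is a simplified illustration under those assumptions, not keystone's own code, and the helper name is_enabled is hypothetical.

```python
# Illustrative sketch: derive a user's enabled state from the
# userAccountControl attribute with a bit mask.
# is_enabled() is a hypothetical helper, not part of keystone.

USER_ENABLED_MASK = 2       # bit that marks an account as disabled
USER_ENABLED_DEFAULT = 512  # value assumed when the attribute is missing


def is_enabled(user_account_control=None,
               mask=USER_ENABLED_MASK,
               default=USER_ENABLED_DEFAULT):
    """Return True when the 'disabled' bit selected by mask is not set."""
    if user_account_control is None:
        value = default
    else:
        value = int(user_account_control)  # LDAP returns the value as text
    return (value & mask) != mask


if __name__ == "__main__":
    for sample in (512, 514, None):
        print(sample, is_enabled(sample))
```

In this sketch a value of 512 is treated as enabled and 514 (512 plus the disabled bit) as disabled.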

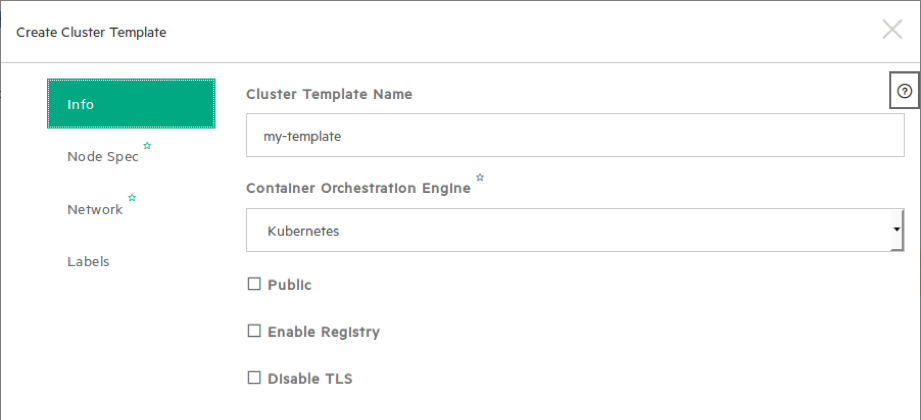

3 Cloud Lifecycle Manager Admin UI User Guide #

The Cloud Lifecycle Manager Admin UI is a web-based GUI for viewing and managing the configuration of an installed cloud. After successfully deploying the cloud with the Install UI, the final screen displays a link to the CLM Admin UI. (For example, see Book “Deployment Guide using Cloud Lifecycle Manager”, Chapter 21 “Installing with the Install UI”, Section 21.5 “Running the Install UI”, Cloud Deployment Successful). Usually the URL associated with this link is https://DEPLOYER_MGMT_NET_IP:9085, although it may be different depending on the cloud configuration and the installed version of SUSE OpenStack Cloud.

3.1 Accessing the Admin UI #

In a browser, go to https://DEPLOYER_MGMT_NET_IP:9085. The DEPLOYER_MGMT_NET_IP:PORT_NUMBER is not necessarily the same for all installations, and can be displayed with the following command:

    ardana > openstack endpoint list --service ardana --interface admin -c URL

Accessing and logging in to the Cloud Lifecycle Manager Admin UI requires access to the MANAGEMENT network that was configured when the Cloud was deployed. Depending on the network setup, it may be necessary to use an SSH tunnel similar to what is recommended in Book “Deployment Guide using Cloud Lifecycle Manager”, Chapter 21 “Installing with the Install UI”, Section 21.5 “Running the Install UI”. The Admin UI requires keystone and HAProxy to be running and accessible. If keystone or HAProxy is not running, cloud reconfiguration is limited to the command line.
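Where the MANAGEMENT network is not directly reachable, the SSH tunnel can be thought of as a local port forward to the host and port reported by the endpoint command. The sketch below splits an endpoint URL into host and port and builds a matching ssh -L command line. The sample address, the jump host, and the function name tunnel_command are placeholders chosen for illustration, not values from this guide.

```python
from urllib.parse import urlsplit


def tunnel_command(endpoint_url, jump_host, local_port=None):
    """Build an 'ssh -L' argument list forwarding a local port to the Admin UI."""
    parts = urlsplit(endpoint_url)
    host = parts.hostname
    # Fall back to the scheme's default port if the URL does not name one.
    port = parts.port or (443 if parts.scheme == "https" else 80)
    local = local_port or port
    # -N: do not run a remote command, only forward the port.
    return ["ssh", "-N", "-L", f"{local}:{host}:{port}", jump_host]


if __name__ == "__main__":
    # Placeholder values: replace with the URL printed by the endpoint
    # command and a host that can reach the MANAGEMENT network.
    cmd = tunnel_command("https://192.0.2.10:9085", "ardana@jumphost.example.com")
    print(" ".join(cmd))
```

With such a tunnel running, the browser would be pointed at the local port (for example https://localhost:9085) instead of the management address.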

Logging in requires a keystone user. If the user is not an admin on the default domain and one or more projects, the Cloud Lifecycle Manager Admin UI will not display information about the Cloud and may present errors.

3.2 Admin UI Pages #

3.2.1 Services #

Services pages relay information about the various OpenStack and other services that have been deployed as part of the cloud. Service information displays the list of services registered with keystone and the endpoints associated with those services. The information is equivalent to running the command openstack endpoint list.

The Service Information table contains the following information, based on how the service is registered with keystone:

- Name

The name of the service. This may be an OpenStack code name.

- Description

The description of the service. For some services, this repeats the name.

- Endpoints

Services typically have one or more endpoints that are accessible to make API calls. The most common configuration is for a service to have Admin, Public, and Internal endpoints, with each intended for access by consumers corresponding to the type of endpoint.

- Region

Service endpoints are part of a region. In multi-region clouds, some services will have endpoints in multiple regions.
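As a rough illustration of how the Service Information table relates to the endpoint list, the following sketch groups endpoint records by service and region and collects the URL for each interface. The records are made-up samples shaped like the JSON output of openstack endpoint list -f json. The IDs, URLs, and the function name service_table are assumptions for illustration.

```python
import json
from collections import defaultdict

# Hypothetical sample data; IDs and URLs are invented for this example.
SAMPLE_JSON = """
[
  {"ID": "a1", "Region": "region1", "Service Name": "keystone",
   "Service Type": "identity", "Enabled": true, "Interface": "admin",
   "URL": "https://admin.example.com:5000/v3"},
  {"ID": "a2", "Region": "region1", "Service Name": "keystone",
   "Service Type": "identity", "Enabled": true, "Interface": "public",
   "URL": "https://public.example.com:5000/v3"},
  {"ID": "a3", "Region": "region1", "Service Name": "keystone",
   "Service Type": "identity", "Enabled": true, "Interface": "internal",
   "URL": "https://internal.example.com:5000/v3"},
  {"ID": "b1", "Region": "region1", "Service Name": "nova",
   "Service Type": "compute", "Enabled": true, "Interface": "public",
   "URL": "https://public.example.com:8774/v2.1"}
]
"""


def service_table(records):
    """Group endpoint records into {(service, region): {interface: url}}."""
    table = defaultdict(dict)
    for record in records:
        key = (record["Service Name"], record["Region"])
        table[key][record["Interface"]] = record["URL"]
    return dict(table)


if __name__ == "__main__":
    table = service_table(json.loads(SAMPLE_JSON))
    for (name, region), endpoints in sorted(table.items()):
        print(name, region, sorted(endpoints))
```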

3.2.2 Packages #

The Packages tab displays packages that are part of the SUSE OpenStack Cloud product.

The SUSE Cloud Packages table contains the following:

- Name

The name of the SUSE Cloud package

- Version

The version of the package which is installed in the Cloud

Packages with the venv- prefix denote the version of the specific OpenStack package that is deployed. The release name can be determined from the OpenStack Releases page.

3.2.3 Configuration #

The Configuration tab displays services that are deployed in the cloud and the configuration files associated with those services. Services may be reconfigured by editing the .j2 files listed and clicking the button.

This page also provides the ability to set up SUSE Enterprise Storage Integration after initial deployment.

Clicking one of the listed configuration files opens the file editor where changes can be made. Asterisks identify files that have been edited but have not had their updates applied to the cloud.

After editing the service configuration, click the button to begin deploying configuration changes to the cloud. The status of those changes will be streamed to the UI.

Configure SUSE Enterprise Storage After Initial Deployment

A link to the settings.yml file is available under the ses selection on the Configuration tab.

To set up SUSE Enterprise Storage Integration:

1. Click on the link to edit the settings.yml file.

2. Uncomment the ses_config_path parameter, specify the location on the deployer host containing the ses_config.yml file, and save the settings.yml file.

3. If the ses_config.yml file does not yet exist in that location on the deployer host, a new link will appear for uploading a file from your local workstation.

4. When ses_config.yml is present on the deployer host, it will appear in the ses section of the tab and can be edited directly there.
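The edit to settings.yml described in these steps amounts to uncommenting one line and giving it a value. The sketch below shows that text transformation on a made-up file excerpt. The excerpt, the directory path, and the helper name set_yaml_param are hypothetical, and the real settings.yml may be laid out differently.

```python
import re


def set_yaml_param(text, key, value):
    """Uncomment (if needed) and set a top-level 'key: value' line in YAML text."""
    # Match the key at the start of a line, optionally commented out with '#'.
    pattern = re.compile(rf"^#?[ \t]*{re.escape(key)}[ \t]*:.*$", re.MULTILINE)
    replacement = f"{key}: {value}"
    new_text, count = pattern.subn(lambda _match: replacement, text, count=1)
    if count == 0:
        # Key not present at all: append it at the end of the file.
        new_text = text.rstrip("\n") + f"\n{replacement}\n"
    return new_text


if __name__ == "__main__":
    # Hypothetical excerpt; the path is a placeholder, not a documented default.
    excerpt = "# SES integration\n# ses_config_path: /path/to/dir/\n"
    print(set_yaml_param(excerpt, "ses_config_path", "/var/lib/ardana/ses/"))
```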

If the cloud is configured using self-signed certificates, the streaming status updates (including the log) may be interrupted and require a reload of the CLM Admin UI. See Book “Security Guide”, Chapter 8 “Transport Layer Security (TLS) Overview”, Section 8.2 “TLS Configuration” for details on using signed certificates.

3.2.4 Model #

The Model tab displays input models that are deployed in the cloud and the associated model files. The model files listed can be modified.

Clicking one of the listed model files opens the file editor where changes can be made. Asterisks identify files that have been edited but have not had their updates applied to the cloud.

After editing the model file, click the button to validate changes. If validation is successful, the button for deploying the changes to the cloud is enabled. Click it to deploy the changes. Before deployment starts, a confirmation dialog offers a choice between running only the config-processor-run.yml and ready-deployment.yml playbooks and running a full deployment. It also indicates the risk of updating the deployed cloud.

Confirm the dialog to start deployment. The status of the changes will be streamed to the UI.

If the cloud is configured using self-signed certificates, the streaming status updates (including the log) may be interrupted. The CLM Admin UI must be reloaded. See Book “Security Guide”, Chapter 8 “Transport Layer Security (TLS) Overview”, Section 8.2 “TLS Configuration” for details on using signed certificates.
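The two choices in the confirmation dialog correspond roughly to the command-line sequences sketched below. The two preparation playbooks and the directories are the ones used elsewhere in this guide. The use of site.yml for a full deployment is an assumption, not something this section states about the Admin UI, and the function name deployment_commands is hypothetical.

```python
# Directories as used in the command-line procedures of this guide.
PREPARE_DIR = "~/openstack/ardana/ansible"
DEPLOY_DIR = "~/scratch/ansible/next/ardana/ansible"


def deployment_commands(full_deploy=False):
    """Return (working_directory, argv) pairs for the chosen deployment scope."""
    commands = [
        (PREPARE_DIR, ["ansible-playbook", "-i", "hosts/localhost",
                       "config-processor-run.yml"]),
        (PREPARE_DIR, ["ansible-playbook", "-i", "hosts/localhost",
                       "ready-deployment.yml"]),
    ]
    if full_deploy:
        # Assumption: a full deployment runs site.yml against verb_hosts.
        commands.append(
            (DEPLOY_DIR, ["ansible-playbook", "-i", "hosts/verb_hosts",
                          "site.yml"])
        )
    return commands


if __name__ == "__main__":
    for directory, argv in deployment_commands(full_deploy=True):
        print(f"cd {directory} && {' '.join(argv)}")
```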

3.2.5 Roles #

The Roles tab displays the list of all roles that have been defined in the Cloud Lifecycle Manager input model, the list of servers assigned to each role, and the services installed on those servers.

The Services Per Role table contains the following:

- Role

The name of the role in the data model. In the included data model templates, these names are descriptive, such as MTRMON-ROLE for a metering and monitoring server. There is no strict constraint on role names and they may have been altered at install time.

- Servers

The model IDs for the servers that have been assigned this role. This does not necessarily correspond to any DNS or other naming labels a host has, unless the host ID was set that way during install.

- Services

A list of OpenStack and other Cloud related services that comprise this role. Servers that have been assigned this role will have these services installed and enabled.
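The relationship shown in the Services Per Role table can be pictured as a grouping of servers by their role, joined with the services each role provides. The sketch below builds such rows from made-up data. Apart from MTRMON-ROLE, the role names, server IDs, service lists, and the function name services_per_role are illustrative assumptions.

```python
from collections import defaultdict

# Hypothetical server entries: model ID plus assigned role.
SERVERS = [
    {"id": "controller1", "role": "CONTROLLER-ROLE"},
    {"id": "controller2", "role": "CONTROLLER-ROLE"},
    {"id": "mtrmon1", "role": "MTRMON-ROLE"},
    {"id": "compute1", "role": "COMPUTE-ROLE"},
]

# Hypothetical mapping of roles to the services they comprise.
ROLE_SERVICES = {
    "CONTROLLER-ROLE": ["keystone", "nova-api", "glance-api"],
    "MTRMON-ROLE": ["monasca-api", "ceilometer"],
    "COMPUTE-ROLE": ["nova-compute"],
}


def services_per_role(servers, role_services):
    """Return one row per role with its servers and services, sorted by role."""
    servers_by_role = defaultdict(list)
    for server in servers:
        servers_by_role[server["role"]].append(server["id"])
    return [
        {
            "role": role,
            "servers": sorted(ids),
            "services": sorted(role_services.get(role, [])),
        }
        for role, ids in sorted(servers_by_role.items())
    ]


if __name__ == "__main__":
    for row in services_per_role(SERVERS, ROLE_SERVICES):
        print(row["role"], row["servers"], row["services"])
```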

3.2.6 Servers #

The Servers pages contain information about the hardware that comprises the cloud, including the configuration of the servers, and provide the ability to add new compute nodes to the cloud.

The Servers table contains the following information:

- ID

This is the ID of the server in the data model. This does not necessarily correspond to any DNS or other naming labels a host has, unless the host ID was set that way during install.

- IP Address

The management network IP address of the server

- Server Group

The server group which this server is assigned to

- NIC Mapping

The NIC mapping that describes the PCI slot addresses for the server's Ethernet adapters

- Mac Address

The hardware address of the server's primary physical Ethernet adapter

3.2.7 Admin UI Server Details #

Server Details can be viewed by clicking the menu at the right side of each row in the Servers table. The server details dialog contains the information from the Servers table and the following additional fields:

- IPMI IP Address

The IPMI network address. This may be empty if the server was provisioned prior to being added to the Cloud

- IPMI Username

The username that was specified for IPMI access

- IPMI Password

This is obscured in the read-only dialog, but is editable when adding a new server

- Network Interfaces

The network interfaces configured on the server

- Filesystem Utilization

Filesystem usage (percentage of filesystem in use). Only available if monasca is in use

3.3 Topology #

The topology section of the Cloud Lifecycle Manager Admin UI displays an overview of how the Cloud is configured. Each section of the topology represents some facet of the Cloud configuration and provides a visual layout of the way components are associated with each other. Many of the components in the topology are linked to each other, and can be navigated between by clicking on any component that appears as a hyperlink.

3.3.1 Control Planes #

The Control Planes tab displays control planes and availability zones within the Cloud.

Each control plane is shown as a table of clusters, resources, and load balancers (represented by vertical columns in the table).

- Control Plane

A set of servers dedicated to running the infrastructure of the Cloud. Many Cloud configurations will have only a single control plane.

- Clusters

A set of one or more servers hosting a particular set of services, tied to the role that has been assigned to that server. Clusters are generally differentiated from Resources in that they are fixed-size groups of servers that do not grow as the Cloud grows.

- Resources

Servers hosting the scalable parts of the Cloud, such as Compute Hosts that host VMs, or swift servers for object storage. These will vary in number with the size and scale of the Cloud and can generally be increased after the initial Cloud deployment.

- Load Balancers

Servers that distribute API calls across servers hosting the called services.

- Availability Zones

Listed beneath the running services. Each row groups the hosts in a particular availability zone for a particular cluster or resource type (the rows are availability zones, the columns are clusters or resources).
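The row and column layout of the availability zone listing can be sketched as a small grid: one row per availability zone, one column per cluster or resource group, and the matching hosts in each cell. The host names, zone names, group names, and the function name az_grid below are invented for illustration.

```python
# Hypothetical hosts with their availability zone and cluster/resource group.
HOSTS = [
    {"name": "ctrl-1", "az": "AZ1", "group": "core-cluster"},
    {"name": "ctrl-2", "az": "AZ2", "group": "core-cluster"},
    {"name": "comp-1", "az": "AZ1", "group": "compute"},
    {"name": "comp-2", "az": "AZ1", "group": "compute"},
    {"name": "comp-3", "az": "AZ2", "group": "compute"},
]


def az_grid(hosts):
    """Return {az: {group: [host names]}} with an entry for every az/group pair."""
    groups = sorted({host["group"] for host in hosts})
    zones = sorted({host["az"] for host in hosts})
    # Pre-fill every cell so empty intersections show up as empty lists.
    grid = {zone: {group: [] for group in groups} for zone in zones}
    for host in hosts:
        grid[host["az"]][host["group"]].append(host["name"])
    return grid


if __name__ == "__main__":
    for zone, row in az_grid(HOSTS).items():
        print(zone, row)
```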

3.3.2 Regions #

Displays the distribution of control plane services across regions. Clouds that have only a single region will list all services in the same cell.

- Control Planes

The group of services that run the Cloud infrastructure

- Region

Each region will be represented by a column with the region name as the column header. The list of services that are running in that region will be in that column, with each row corresponding to a particular control plane.

3.3.3 Services #

A list of services running in the Cloud, organized by the type (class) of service. Each service is then listed along with the control planes that the service is part of, the other services that each particular service consumes (requires), and the endpoints of the service, if the service exposes an API.

- Class

A category of like services, such as "security" or "operations". Multiple services may belong to the same category.

- Description

A short description of the service, typically sourced from the service itself

- Service

The name of the service. For OpenStack services, this is the project codename, such as nova for virtual machine provisioning. Clicking a service will navigate to the section of this page with details for that particular service.

The detail data about a service provides additional insight into the service, such as what other services are required to run a service, and what network protocols can be used to access the service.

- Components

Each service is made up of one or more components, which are listed separately here. The components of a service may represent pieces of the service that run on different hosts, provide distinct functionality, or modularize business logic.

- Control Planes

A service may be running in multiple control planes. Each control plane that a service is running in will be listed here.

- Consumes

Other services required for this service to operate correctly.

- Endpoints

How a service can be accessed, typically a REST API, though other network protocols may be listed here. Services that do not expose an API or have any sort of external access will not list any entries here.
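Because each service lists the services it consumes, the full set of services something depends on can be found by following those links transitively. The sketch below does this over a made-up consumes map. The dependency data and the function name required_services are illustrative assumptions, not the actual relationships shown in the Admin UI.

```python
# Hypothetical "consumes" relationships between services.
CONSUMES = {
    "nova": ["keystone", "glance", "neutron", "rabbitmq"],
    "glance": ["keystone", "mysql"],
    "neutron": ["keystone", "rabbitmq", "mysql"],
    "keystone": ["mysql"],
}


def required_services(service, consumes):
    """Return every service reachable from 'service' through consumes links."""
    seen = set()
    pending = list(consumes.get(service, ()))
    while pending:
        dependency = pending.pop()
        if dependency not in seen:
            seen.add(dependency)
            # Follow the dependency's own consumes list as well.
            pending.extend(consumes.get(dependency, ()))
    return seen


if __name__ == "__main__":
    print(sorted(required_services("nova", CONSUMES)))
```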

3.3.4 Networks #

Lists the networks and network groups that comprise the Cloud. Each network group is represented by a row in the table, with columns identifying which networks are used by the intersection of the group (row) and cluster/resource (column).

- Group

The network group

- Clusters

A set of one or more servers hosting a particular set of services, tied to the role that has been assigned to that server. Clusters are generally differentiated from Resources in that they are fixed-size groups of servers that do not grow as the Cloud grows.

- Resources

Servers hosting the scalable parts of the Cloud, such as Compute Hosts that host VMs, or swift servers for object storage. These will vary in number with the size and scale of the Cloud and can generally be increased after the initial Cloud deployment.

Cells in the middle of the table represent the network that is running on the resource/cluster represented by that column and is part of the network group identified in the leftmost column of the same row.

Each network group is listed along with the servers and interfaces that comprise the network group.

- Network Group

The elements that make up the network group, whose name is listed above the table

- Networks

Networks that are part of the specified network group

- Address

IP address of the corresponding server

- Server

Server name of the server that is part of this network. Clicking on a server will load the server topology details.

- Interface Model

The particular combination of hardware address and bonding that tie this server to the specified network group. Clicking on an