8 Deploying the remaining core services using cephadm #

After deploying the basic Ceph cluster, deploy core services to more cluster nodes. To make the cluster data accessible to clients, deploy additional services as well.

Currently, we support deployment of Ceph services on the command line by

using the Ceph orchestrator (ceph orch subcommands).

8.1 The ceph orch command #

The Ceph orchestrator command ceph orch—which is

an interface to the cephadm module—will take care of listing cluster

components and deploying Ceph services on new cluster nodes.

8.1.1 Displaying the orchestrator status #

The following command shows the current mode and status of the Ceph orchestrator.

cephuser@adm > ceph orch status

8.1.2 Listing devices, services, and daemons #

To list all disk devices, run the following:

cephuser@adm > ceph orch device ls

Hostname Path Type Serial Size Health Ident Fault Available

ses-main /dev/vdb hdd 0d8a... 10.7G Unknown N/A N/A No

ses-node1 /dev/vdc hdd 8304... 10.7G Unknown N/A N/A No

ses-node1 /dev/vdd hdd 7b81... 10.7G Unknown N/A N/A No

[...]

Service is a general term for a Ceph service of a specific type, for example Ceph Manager.

Daemon is a specific instance of a service, for

example, a process mgr.ses-node1.gdlcik running on a

node called ses-node1.

To list all services known to cephadm, run:

cephuser@adm > ceph orch ls

NAME RUNNING REFRESHED AGE PLACEMENT IMAGE NAME IMAGE ID

mgr 1/0 5m ago - <no spec> registry.example.com/[...] 5bf12403d0bd

mon 1/0 5m ago - <no spec> registry.example.com/[...] 5bf12403d0bd

You can limit the list to services on a particular node with the optional

--host parameter, and services of a particular type with

the optional --service-type parameter. Acceptable types

are mon, osd,

mgr, mds, and

rgw.

To list all running daemons deployed by cephadm, run:

cephuser@adm > ceph orch ps

NAME HOST STATUS REFRESHED AGE VERSION IMAGE ID CONTAINER ID

mgr.ses-node1.gd ses-node1 running 8m ago 12d 15.2.0.108 5bf12403d0bd b8104e09814c

mon.ses-node1 ses-node1 running 8m ago 12d 15.2.0.108 5bf12403d0bd a719e0087369

To query the status of a particular daemon, use

--daemon_type and --daemon_id. For OSDs,

the ID is the numeric OSD ID. For MDS, the ID is the file system name:

cephuser@adm > ceph orch ps --daemon_type osd --daemon_id 0

cephuser@adm > ceph orch ps --daemon_type mds --daemon_id my_cephfs

8.2 Service and placement specification #

The recommended way to specify the deployment of Ceph services is to create a YAML-formatted file with the specification of the services that you intend to deploy.

8.2.1 Creating service specifications #

You can create a separate specification file for each type of service, for example:

root@master # cat nfs.yml

service_type: nfs
service_id: EXAMPLE_NFS
placement:
  hosts:
  - ses-node1
  - ses-node2
spec:
  pool: EXAMPLE_POOL
  namespace: EXAMPLE_NAMESPACE

Alternatively, you can specify multiple (or all) service types in one

file—for example, cluster.yml—that

describes which nodes will run specific services. Remember to separate

individual service types with three dashes (---):

cephuser@adm > cat cluster.yml

service_type: nfs
service_id: EXAMPLE_NFS
placement:
  hosts:
  - ses-node1
  - ses-node2
spec:
  pool: EXAMPLE_POOL
  namespace: EXAMPLE_NAMESPACE
---
service_type: rgw
service_id: REALM_NAME.ZONE_NAME
placement:
  hosts:
  - ses-node1
  - ses-node2
  - ses-node3
---

[...]

The aforementioned properties have the following meaning:

service_type
The type of the service. It can be either a Ceph service (mon, mgr, mds, crash, osd, or rbd-mirror), a gateway (nfs or rgw), or part of the monitoring stack (alertmanager, grafana, node-exporter, or prometheus).

service_id
The name of the service. Specifications of type mon, mgr, alertmanager, grafana, node-exporter, and prometheus do not require the service_id property.

placement
Specifies which nodes will be running the service. Refer to Section 8.2.2, “Creating placement specification” for more details.

spec
Additional specification relevant for the service type.

Ceph cluster services usually have a number of properties specific to them. For examples and details of individual services' specifications, refer to Section 8.3, “Deploy Ceph services”.

8.2.2 Creating placement specification #

To deploy Ceph services, cephadm needs to know on which nodes to deploy

them. Use the placement property and list the short host

names of the nodes that the service applies to:

cephuser@adm > cat cluster.yml

[...]

placement:
  hosts:
  - host1
  - host2
  - host3
[...]

8.2.2.1 Placement by labels #

You can limit the placement of Ceph services to hosts that match a

specific label. In the following example, we create a

label prometheus, assign it to specific hosts, and

apply the service Prometheus to the group of hosts with the

prometheus label.

Add the prometheus label to host1, host2, and host3:

cephuser@adm > ceph orch host label add host1 prometheus
cephuser@adm > ceph orch host label add host2 prometheus
cephuser@adm > ceph orch host label add host3 prometheus

Verify that the labels were correctly assigned:

cephuser@adm > ceph orch host ls
HOST   ADDR   LABELS      STATUS
host1         prometheus
host2         prometheus
host3         prometheus
[...]

Create a placement specification using the label property:

cephuser@adm > cat cluster.yml
[...]
placement:
  label: "prometheus"
[...]
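When many hosts need the same label, the label commands from the first step can be generated in a loop. The following is a minimal sketch, assuming the host names from the example above. The function name label_cmds is hypothetical, and the commands are only printed so that you can review them before running them.

```shell
# Print one 'ceph orch host label add' command per host.
# Usage: label_cmds LABEL HOST...
label_cmds() {
  label="$1"
  shift
  for host in "$@"; do
    printf 'ceph orch host label add %s %s\n' "$host" "$label"
  done
}

# Review the generated commands first.
label_cmds prometheus host1 host2 host3
```

To execute the commands instead of printing them, pipe the output to sh on the admin node.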

8.2.3 Applying cluster specification #

After you have created a full cluster.yml file with

specifications of all services and their placement, you can apply the

specification to the cluster by running the following command:

cephuser@adm > ceph orch apply -i cluster.yml
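Before applying a large specification, it can help to preview what the orchestrator would do. The following is a minimal sketch, assuming that your Ceph release supports the --dry-run option of ceph orch apply; the function name preview_spec is hypothetical.

```shell
# Preview the effect of a specification file without changing the cluster.
# Usage: preview_spec SPEC_FILE
# Assumption: 'ceph orch apply' accepts --dry-run in the installed release.
preview_spec() {
  spec="$1"
  if [ ! -r "$spec" ]; then
    echo "Specification file not readable: $spec" >&2
    return 1
  fi
  ceph orch apply -i "$spec" --dry-run
}

# Example (run on the admin node):
#   preview_spec cluster.yml && ceph orch apply -i cluster.yml
```

The check for a readable file catches a mistyped path before the orchestrator is contacted.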

To view the status of the cluster, run the ceph orch

status command. For more details, see

Section 8.1.1, “Displaying the orchestrator status”.

8.2.4 Exporting the specification of a running cluster #

Although you deployed services to the Ceph cluster by using the specification files as described in Section 8.2, “Service and placement specification”, the configuration of the cluster may diverge from the original specification during its operation. Also, you may have removed the specification files accidentally.

To retrieve a complete specification of a running cluster, run:

cephuser@adm > ceph orch ls --export

placement:
  hosts:
  - hostname: ses-node1
    name: ''
    network: ''
service_id: my_cephfs
service_name: mds.my_cephfs
service_type: mds
---
placement:
  count: 2
service_name: mgr
service_type: mgr
---
[...]

You can append the --format option to change the default

yaml output format. You can select from

json, json-pretty, or

yaml. For example:

ceph orch ls --export --format json
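Because specification files can be lost, it may be useful to save the exported specification to a dated file from time to time. The following is a minimal sketch. The BACKUP_DIR location and the function names backup_name and export_spec are hypothetical.

```shell
# Directory for saved specifications (hypothetical default).
BACKUP_DIR="${BACKUP_DIR:-$HOME/ceph-spec-backups}"

# Build a file name of the form DIR/cluster-spec-YYYY-MM-DD.yml.
backup_name() {
  printf '%s/cluster-spec-%s.yml\n' "$1" "$(date +%Y-%m-%d)"
}

# Export the running cluster specification into BACKUP_DIR.
export_spec() {
  mkdir -p "$BACKUP_DIR" || return 1
  target="$(backup_name "$BACKUP_DIR")"
  # Write to a temporary file first so a failed export leaves no partial file.
  if ceph orch ls --export > "$target.tmp"; then
    mv "$target.tmp" "$target" && echo "$target"
  else
    rm -f "$target.tmp"
    return 1
  fi
}
```

Run export_spec on the admin node. The saved file can later be passed to ceph orch apply -i.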

8.3 Deploy Ceph services #

After the basic cluster is running, you can deploy Ceph services to additional nodes.

8.3.1 Deploying Ceph Monitors and Ceph Managers #

A Ceph cluster has three or five MONs deployed across different nodes. If there are five or more nodes in the cluster, we recommend deploying five MONs. A good practice is to have MGRs deployed on the same nodes as MONs.

When deploying MONs and MGRs, remember to include the first MON that you added when configuring the basic cluster in Section 7.2.5, “Specifying first MON/MGR node”.

To deploy MONs, apply the following specification:

service_type: mon
placement:
  hosts:
  - ses-node1
  - ses-node2
  - ses-node3

If you need to add another node, append the host name to the same YAML list. For example:

service_type: mon
placement:
  hosts:
  - ses-node1
  - ses-node2
  - ses-node3
  - ses-node4

Similarly, to deploy MGRs, apply the following specification:

Ensure that each deployment has at least three Ceph Managers.

service_type: mgr
placement:
  hosts:
  - ses-node1
  - ses-node2
  - ses-node3

If MONs or MGRs are not on the same subnet, you need to append the subnet addresses. For example:

service_type: mon
placement:
  hosts:
  - ses-node1:10.1.2.0/24
  - ses-node2:10.1.5.0/24
  - ses-node3:10.1.10.0/24
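The MON specifications above differ only in their host list, so they can be generated from a list of host names. The following is a minimal sketch; the function name mon_spec is hypothetical. Each argument is written as one host entry without changes, so an entry may include a subnet suffix such as ses-node1:10.1.2.0/24.

```shell
# Print a MON service specification for the given hosts.
# Usage: mon_spec HOST...
mon_spec() {
  printf 'service_type: mon\n'
  printf 'placement:\n'
  printf '  hosts:\n'
  for host in "$@"; do
    printf '  - %s\n' "$host"
  done
}

mon_spec ses-node1 ses-node2 ses-node3 ses-node4
```

Redirect the output to a file, for example mon.yml, and apply it with ceph orch apply -i mon.yml.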

8.3.2 Deploying Ceph OSDs #

A storage device is considered available if all of the following conditions are met:

The device has no partitions.

The device does not have any LVM state.

The device is not mounted.

The device does not contain a file system.

The device does not contain a BlueStore OSD.

The device is larger than 5 GB.

If the above conditions are not met, Ceph refuses to provision such OSDs.
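To see which devices currently qualify, you can filter the device listing. The following is a minimal sketch that assumes the column layout of the sample ceph orch device ls output shown in Section 8.1.2, where Available is the last column. The layout may differ between releases, and the function name available_devices is hypothetical.

```shell
# Read 'ceph orch device ls' output on standard input and print the
# host name and device path of every row whose last column is "Yes".
# Assumption: the first line is a header and "Available" is the last column.
available_devices() {
  awk 'NR > 1 && $NF == "Yes" { print $1, $2 }'
}

# Example (run on the admin node):
#   ceph orch device ls | available_devices
```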

There are two ways you can deploy OSDs:

Tell Ceph to consume all available and unused storage devices:

cephuser@adm > ceph orch apply osd --all-available-devices

Use DriveGroups (see Section 13.4.3, “Adding OSDs using DriveGroups specification”) to create an OSD specification describing devices that will be deployed based on their properties, such as device type (SSD or HDD), device model names, size, or the nodes on which the devices exist. Then apply the specification by running the following command:

cephuser@adm > ceph orch apply osd -i drive_groups.yml

8.3.3 Deploying Metadata Servers #

CephFS requires one or more Metadata Server (MDS) services. To create a CephFS, first create MDS servers by applying the following specification:

Ensure you have at least two pools, one for CephFS data and one for CephFS metadata, created before applying the following specification.

service_type: mds
service_id: CEPHFS_NAME
placement:
  hosts:
  - ses-node1
  - ses-node2
  - ses-node3

After MDSs are functional, create the CephFS:

ceph fs new CEPHFS_NAME metadata_pool data_pool
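The whole sequence (create two pools, apply the MDS specification, create the file system) can be written down as a short list of commands. The following is a minimal sketch that only prints the commands. The pool naming scheme, the file name mds.yml, and the function name cephfs_cmds are assumptions made for illustration.

```shell
# Print the commands for creating a CephFS in the order described above.
# Usage: cephfs_cmds CEPHFS_NAME
# Assumptions: pools are named after the file system, and the MDS
# specification is stored in a file called mds.yml.
cephfs_cmds() {
  fs="$1"
  printf 'ceph osd pool create %s_metadata\n' "$fs"
  printf 'ceph osd pool create %s_data\n' "$fs"
  printf 'ceph orch apply -i mds.yml\n'
  printf 'ceph fs new %s %s_metadata %s_data\n' "$fs" "$fs" "$fs"
}

cephfs_cmds my_cephfs
```

Wait until the MDS daemons are running before you execute the final ceph fs new command.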

8.3.4 Deploying Object Gateways #

cephadm deploys an Object Gateway as a collection of daemons that manage a particular realm and zone.

You can either relate an Object Gateway service to an already existing realm and zone (refer to Section 21.13, “Multisite Object Gateways” for more details), or you can specify a non-existing REALM_NAME and ZONE_NAME, which will be created automatically after you apply the following configuration:

service_type: rgw
service_id: REALM_NAME.ZONE_NAME
placement:
  hosts:
  - ses-node1
  - ses-node2
  - ses-node3
spec:
  rgw_realm: RGW_REALM
  rgw_zone: RGW_ZONE

8.3.4.1 Using secure SSL access #

To use a secure SSL connection to the Object Gateway, you need a file containing both the valid SSL certificate and the key (see Section 21.7, “Enable HTTPS/SSL for Object Gateways” for more details). You need to enable SSL, specify a port number for SSL connections, and specify the SSL certificate file.
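If the certificate and the key are stored in two separate PEM files, they can be concatenated into the single file mentioned above. The following is a minimal sketch; the function name combine_pem and the file names in the usage example are hypothetical.

```shell
# Concatenate a certificate and its private key into one PEM file,
# certificate first.
# Usage: combine_pem CERT_FILE KEY_FILE OUTPUT_FILE
combine_pem() {
  cert="$1"
  key="$2"
  out="$3"
  for f in "$cert" "$key"; do
    [ -r "$f" ] || { echo "Cannot read $f" >&2; return 1; }
  done
  # A newly created output file gets owner-only permissions,
  # because it contains the private key.
  ( umask 077 && cat "$cert" "$key" > "$out" )
}

# Example:
#   combine_pem rgw.crt rgw.key rgw.pem
```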

To enable SSL and specify the port number, include the following in your specification:

spec:
  ssl: true
  rgw_frontend_port: 443

To specify the SSL certificate and key, you can paste their contents

directly into the YAML specification file. The pipe sign

(|) at the end of line tells the parser to expect a

multi-line string as a value. For example:

spec:
  ssl: true
  rgw_frontend_port: 443
  rgw_frontend_ssl_certificate: |
    -----BEGIN CERTIFICATE-----
    MIIFmjCCA4KgAwIBAgIJAIZ2n35bmwXTMA0GCSqGSIb3DQEBCwUAMGIxCzAJBgNV
    BAYTAkFVMQwwCgYDVQQIDANOU1cxHTAbBgNVBAoMFEV4YW1wbGUgUkdXIFNTTCBp
    [...]
    -----END CERTIFICATE-----
    -----BEGIN PRIVATE KEY-----
    MIIJRAIBADANBgkqhkiG9w0BAQEFAASCCS4wggkqAgEAAoICAQDLtFwg6LLl2j4Z
    BDV+iL4AO7VZ9KbmWIt37Ml2W6y2YeKX3Qwf+3eBz7TVHR1dm6iPpCpqpQjXUsT9
    [...]
    -----END PRIVATE KEY-----

Instead of pasting the content of an SSL certificate file, you can omit

the rgw_frontend_ssl_certificate: directive and upload

the certificate file to the configuration database:

cephuser@adm > ceph config-key set rgw/cert/REALM_NAME/ZONE_NAME.crt \
 -i SSL_CERT_FILE

8.3.4.1.1 Configure the Object Gateway to listen on both ports 443 and 80 #

To configure the Object Gateway to listen on both ports 443 (HTTPS) and 80 (HTTP), follow these steps:

The commands in the procedure use realm and zone

default.

Deploy the Object Gateway by supplying a specification file. Refer to Section 8.3.4, “Deploying Object Gateways” for more details on the Object Gateway specification. Use the following command:

cephuser@adm > ceph orch apply -i SPEC_FILE

If SSL certificates are not supplied in the specification file, add them by using the following commands:

cephuser@adm > ceph config-key set rgw/cert/default/default.crt -i certificate.pem
cephuser@adm > ceph config-key set rgw/cert/default/default.key -i key.pem

Change the default value of the rgw_frontends option:

cephuser@adm > ceph config set client.rgw.default.default rgw_frontends \
 "beast port=80 ssl_port=443"

Remove the specific configuration created by cephadm. Identify for which target the rgw_frontends option was configured by running the command:

cephuser@adm > ceph config dump | grep rgw

For example, the target is client.rgw.default.default.node4.yiewdu. Remove the current specific rgw_frontends value:

cephuser@adm > ceph config rm client.rgw.default.default.node4.yiewdu rgw_frontends

Tip: Instead of removing a value for rgw_frontends, you can specify it. For example:

cephuser@adm > ceph config set client.rgw.default.default.node4.yiewdu \
 rgw_frontends "beast port=80 ssl_port=443"

Restart Object Gateways:

cephuser@adm > ceph orch restart rgw.default.default

8.3.4.2 Deploying with a subcluster #

Subclusters help you organize the nodes in your clusters to isolate workloads and make elastic scaling easier. If you are deploying with a subcluster, apply the following configuration:

service_type: rgw
service_id: REALM_NAME.ZONE_NAME.SUBCLUSTER
placement:
  hosts:
  - ses-node1
  - ses-node2
  - ses-node3
spec:
  rgw_realm: RGW_REALM
  rgw_zone: RGW_ZONE
  subcluster: SUBCLUSTER

8.3.4.3 Deploying High Availability for the Object Gateway #

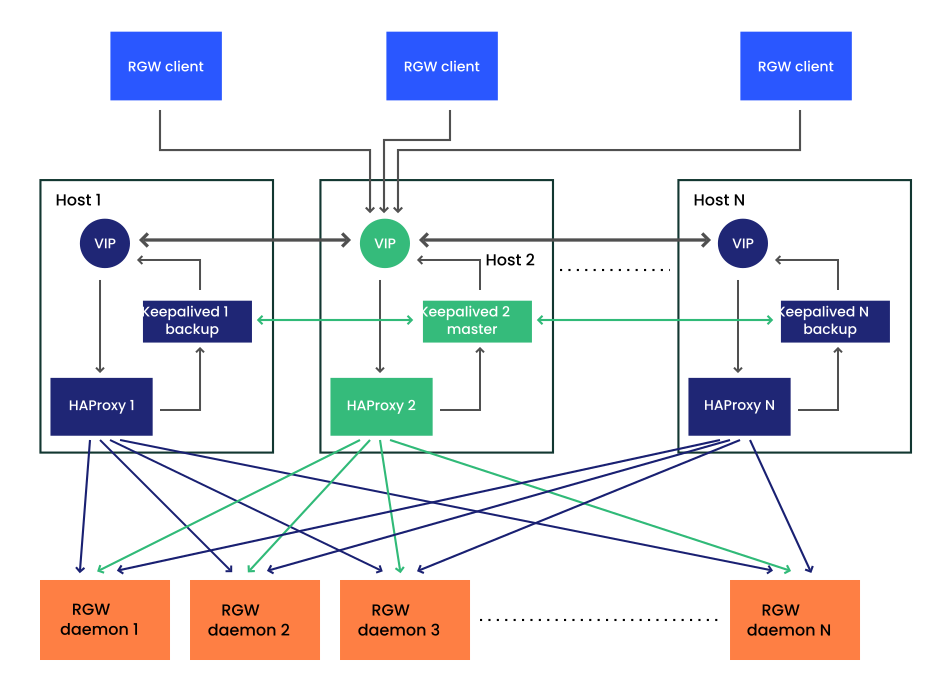

You can create high availability (HA) endpoints for the Object Gateway service by deploying Ingress. Ingress includes a combination of HAProxy and Keepalived to provide load balancing on a floating virtual IP address. The virtual IP address is automatically configured on only one of the Ingress hosts at a time.

Each Keepalived daemon checks every few seconds whether the HAProxy daemon on the same host is responding. Keepalived also checks whether the master Keepalived daemon is running without problems. If the master Keepalived or the active HAProxy daemon is not responding, one of the remaining Keepalived daemons running in backup mode is elected as the master daemon, and the virtual IP address will be assigned to that node.

If your Object Gateway deployment uses an SSL connection, disable it and configure Ingress to use the SSL certificate instead of the Object Gateway node.

Create specifications for the Object Gateway and Ingress services, for example:

cephuser@adm > cat rgw.yaml
service_type: rgw
service_id: myrealm.myzone
placement:
  hosts:
  - ses-node1
  - ses-node2
  - ses-node3
[...]

cephuser@adm > cat ingress.yaml
service_type: ingress
service_id: rgw.myrealm.myzone
placement:
  hosts:
  - ingress-host1
  - ingress-host2
  - ingress-host3
spec:
  backend_service: rgw.myrealm.myzone
  virtual_ip: 192.168.20.1/24
  frontend_port: 8080
  monitor_port: 1967 # used by HAproxy for load balancer status
  ssl_cert: |
    -----BEGIN CERTIFICATE-----
    ...
    -----END CERTIFICATE-----
    -----BEGIN PRIVATE KEY-----
    ...
    -----END PRIVATE KEY-----

Apply the specifications:

cephuser@adm > ceph orch apply -i rgw.yaml
cephuser@adm > ceph orch apply -i ingress.yaml

8.3.5 Deploying iSCSI Gateways #

cephadm deploys an iSCSI Gateway. iSCSI is a storage area network (SAN) protocol that allows clients (called initiators) to send SCSI commands to SCSI storage devices (targets) on remote servers.

Apply the following configuration to deploy. Ensure

trusted_ip_list contains the IP addresses of all iSCSI Gateway

and Ceph Manager nodes (see the example output below).

Ensure the pool is created before applying the following specification.

service_type: iscsi
service_id: EXAMPLE_ISCSI
placement:
  hosts:
  - ses-node1
  - ses-node2
  - ses-node3
spec:
  pool: EXAMPLE_POOL
  api_user: EXAMPLE_USER
  api_password: EXAMPLE_PASSWORD
  trusted_ip_list: "IP_ADDRESS_1,IP_ADDRESS_2"

Ensure the IPs listed for trusted_ip_list do

not have a space after the comma separation.
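A value for trusted_ip_list can be built from individual addresses so that no spaces slip in. The following is a minimal sketch; the function names join_ips and check_ip_list and the IP addresses are hypothetical.

```shell
# Join the given IP addresses with commas and without spaces.
# Usage: join_ips IP...
join_ips() {
  (
    IFS=,
    printf '%s\n' "$*"
  )
}

# Return 1 if a list contains a space, 0 otherwise.
check_ip_list() {
  case "$1" in
    *" "*) return 1 ;;
  esac
  return 0
}

join_ips 192.168.20.11 192.168.20.12 192.168.20.13
```

The subshell keeps the changed IFS value from affecting the rest of the script.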

8.3.5.1 Secure SSL configuration #

To use a secure SSL connection between the Ceph Dashboard and the iSCSI

target API, you need a pair of valid SSL certificate and key files. These

can be either CA-issued or self-signed (see

Section 10.1.1, “Creating self-signed certificates”). To enable SSL, include

the api_secure: true setting in your specification

file:

spec:
  api_secure: true

To specify the SSL certificate and key, you can paste the content directly

into the YAML specification file. The pipe sign (|) at

the end of line tells the parser to expect a multi-line string as a value.

For example:

spec:
  pool: EXAMPLE_POOL
  api_user: EXAMPLE_USER
  api_password: EXAMPLE_PASSWORD
  trusted_ip_list: "IP_ADDRESS_1,IP_ADDRESS_2"
  api_secure: true
  ssl_cert: |
    -----BEGIN CERTIFICATE-----
    MIIDtTCCAp2gAwIBAgIYMC4xNzc1NDQxNjEzMzc2MjMyXzxvQ7EcMA0GCSqGSIb3
    DQEBCwUAMG0xCzAJBgNVBAYTAlVTMQ0wCwYDVQQIDARVdGFoMRcwFQYDVQQHDA5T
    [...]
    -----END CERTIFICATE-----
  ssl_key: |
    -----BEGIN PRIVATE KEY-----
    MIIEvQIBADANBgkqhkiG9w0BAQEFAASCBKcwggSjAgEAAoIBAQC5jdYbjtNTAKW4
    /CwQr/7wOiLGzVxChn3mmCIF3DwbL/qvTFTX2d8bDf6LjGwLYloXHscRfxszX/4h
    [...]
    -----END PRIVATE KEY-----

8.3.6 Deploying NFS Ganesha #

NFS Ganesha supports NFS version 4.1 and newer. It does not support NFS version 3.

cephadm deploys NFS Ganesha using a standard pool .nfs

instead of a user-predefined pool. To deploy NFS Ganesha, apply the following

specification:

service_type: nfs
service_id: EXAMPLE_NFS
placement:
  hosts:
  - ses-node1
  - ses-node2

EXAMPLE_NFS should be replaced with an arbitrary string that identifies the NFS export.

Enable the nfs module in the Ceph Manager daemon. This allows

defining NFS exports through the NFS section in the Ceph Dashboard:

cephuser@adm > ceph mgr module enable nfs

8.3.6.1 Deploying High Availability for the NFS Ganesha #

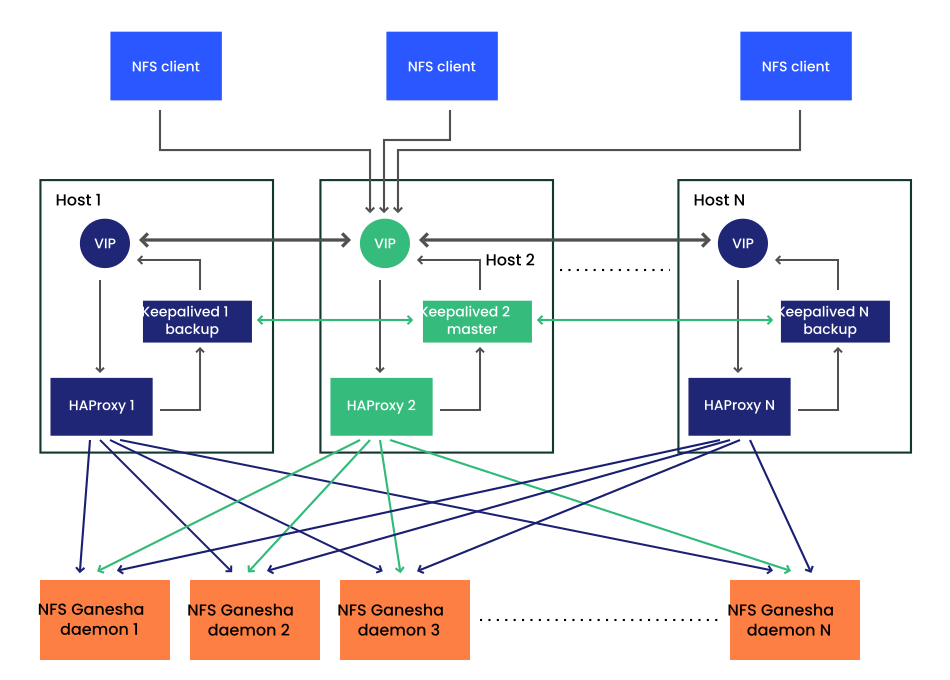

You can create high availability (HA) endpoints for the NFS Ganesha service by deploying Ingress. Ingress includes a combination of HAProxy and Keepalived to provide load balancing on a floating virtual IP address. The virtual IP address is automatically configured on only one of the Ingress hosts at a time.

Each Keepalived daemon checks every few seconds whether the HAProxy daemon on the same host is responding. Keepalived also checks whether the master Keepalived daemon is running without problems. If the master Keepalived or the active HAProxy daemon is not responding, one of the remaining Keepalived daemons running in backup mode is elected as the master daemon, and the virtual IP address will be assigned to that node.

Ensure that the following sysctl variables are set on each Ingress host:

net.ipv4.ip_nonlocal_bind=1
net.ipv4.ip_forward=1
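One way to make these two settings persistent is to write them to a drop-in file under /etc/sysctl.d. The following is a minimal sketch; the function name write_ingress_sysctl is hypothetical, and writing to /etc requires root privileges.

```shell
# Write the two Ingress sysctl settings to a drop-in file.
# Usage: write_ingress_sysctl [FILE]
# Without an argument, the file /etc/sysctl.d/90-ingress.conf is written.
write_ingress_sysctl() {
  conf="${1:-/etc/sysctl.d/90-ingress.conf}"
  cat > "$conf" <<'EOF'
net.ipv4.ip_nonlocal_bind=1
net.ipv4.ip_forward=1
EOF
}

# Example (as root on each Ingress host):
#   write_ingress_sysctl && sysctl --system
```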

Tip: You can persistently set the sysctl variables by creating a file /etc/sysctl.d/90-ingress.conf and including the above variables. To apply the changes, run sysctl --system or reboot the host.

Create specifications for the Ingress and NFS Ganesha services, for example:

cephuser@adm > cat nfs.yaml
service_type: nfs
service_id: nfs-ha
placement:
  hosts:
  - nfs-node1
  - nfs-node2
  - nfs-node3
spec:
  port: 12049

cephuser@adm > cat ingress.yaml
service_type: ingress
service_id: nfs.nfs-ha
placement:
  hosts:
  - ingress-node1
  - ingress-node2
  - ingress-node3
spec:
  backend_service: nfs.nfs-ha
  frontend_port: 2049
  monitor_port: 9049
  virtual_ip: 10.20.27.111

Warning: We recommend running at least three NFS Ganesha daemons. Otherwise, mounted clients may experience excessively long hangs during failovers.

Apply the customized service specifications:

cephuser@adm > ceph orch apply -i nfs.yaml
cephuser@adm > ceph orch apply -i ingress.yaml

Create an NFS Ganesha export. Find more details in Section 7.1, “Creating NFS exports”. Remember to select the High Availability NFS Ganesha cluster and an appropriate storage back-end and pseudo path.

Alternatively, create the export from the command line on the MGR node:

cephuser@adm > ceph nfs export create \
 STORAGE_BACKEND \
 NFS_HA_CLUSTER \
 NFS_PSEUDO_PATH \
 STORAGE_BACKEND_INSTANCE \
 EXPORT_PATH

Mount the NFS Ganesha share on your clients:

# mount -t nfs \
 VIRTUAL_IP:PORT/NFS_PSEUDO_PATH \
 /mnt/nfs

8.3.7 Deploying rbd-mirror #

The rbd-mirror service takes care of synchronizing RADOS Block Device images between

two Ceph clusters (for more details, see

Section 20.4, “RBD image mirrors”). To deploy rbd-mirror, use the

following specification:

service_type: rbd-mirror
service_id: EXAMPLE_RBD_MIRROR
placement:
  hosts:
  - ses-node3

8.3.8 Deploying the monitoring stack #

The monitoring stack consists of Prometheus, Prometheus exporters, Prometheus Alertmanager, and Grafana. Ceph Dashboard makes use of these components to store and visualize detailed metrics on cluster usage and performance.

If your deployment requires custom or locally served container images of the monitoring stack services, refer to Section 16.1, “Configuring custom or local images”.

To deploy the monitoring stack, follow these steps:

Enable the prometheus module in the Ceph Manager daemon. This exposes the internal Ceph metrics so that Prometheus can read them:

cephuser@adm > ceph mgr module enable prometheus

Note: Ensure this command is run before Prometheus is deployed. If the command was not run before the deployment, you must redeploy Prometheus to update Prometheus' configuration:

cephuser@adm > ceph orch redeploy prometheus

Create a specification file (for example monitoring.yaml) with content similar to the following:

service_type: prometheus
placement:
  hosts:
  - ses-node2
---
service_type: node-exporter
---
service_type: alertmanager
placement:
  hosts:
  - ses-node4
---
service_type: grafana
placement:
  hosts:
  - ses-node3

Apply monitoring services by running:

cephuser@adm > ceph orch apply -i monitoring.yaml

It may take a minute or two for the monitoring services to be deployed.

Prometheus, Grafana, and the Ceph Dashboard are all automatically configured to talk to each other, resulting in a fully functional Grafana integration in the Ceph Dashboard when deployed as described above.

The only exception to this rule is monitoring with RBD images. See Section 16.5.4, “Enabling RBD-image monitoring” for more information.