2 Hardware requirements and recommendations #

The hardware requirements of Ceph are heavily dependent on the IO workload. The following hardware requirements and recommendations should be considered as a starting point for detailed planning.

In general, the recommendations given in this section are on a per-process basis. If several processes are located on the same machine, the CPU, RAM, disk and network requirements need to be added up.

2.1 Network overview #

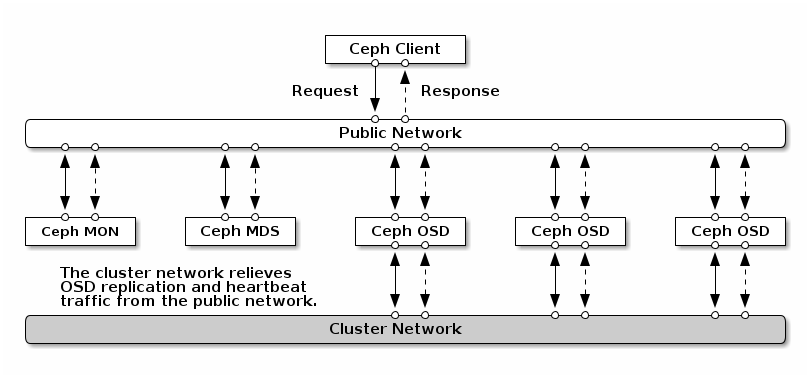

Ceph has several logical networks:

A front-end network called the public network.

A trusted internal network, the back-end network, called the cluster network. This is optional.

One or more client networks for gateways. This is optional and beyond the scope of this chapter.

The public network is the network over which Ceph daemons communicate with each other and with their clients. This means that all Ceph cluster traffic goes over this network except in the case when a cluster network is configured.

The cluster network is the back-end network between the OSD nodes, for replication, re-balancing, and recovery. If configured, this optional network would ideally provide twice the bandwidth of the public network with default three-way replication, since the primary OSD sends two copies to other OSDs via this network. The public network is between clients and gateways on the one side to talk to monitors, managers, MDS nodes, OSD nodes. It is also used by monitors, managers, and MDS nodes to talk with OSD nodes.
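The bandwidth reasoning for the cluster network can be illustrated with a small calculation. The following is a minimal sketch, assuming replicated pools where each client write reaches the primary OSD once over the public network; the function name is hypothetical and not part of any Ceph tooling.

```python
def cluster_copies_per_write(replica_count: int) -> int:
    """Return how many copies the primary OSD forwards over the cluster
    network for each client write received on the public network.

    Assumption: a replicated pool, where the primary OSD keeps one copy
    and sends the remaining copies to other OSDs.
    """
    if replica_count < 1:
        raise ValueError("replica_count must be at least 1")
    return replica_count - 1


# With three-way replication, each client write produces two replica writes
# on the cluster network, hence the suggested 2:1 bandwidth ratio.
print(cluster_copies_per_write(3))
```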

2.1.1 Network recommendations #

We recommend a single fault-tolerant network with enough bandwidth to fulfil your requirements. For the Ceph public network environment, we recommend two 25 GbE (or faster) network interfaces bonded using 802.3ad (LACP). This is considered the minimal setup for Ceph. If you are also using a cluster network, we recommend four bonded 25 GbE network interfaces. Bonding two or more network interfaces provides better throughput via link aggregation and, given redundant links and switches, improved fault tolerance and maintainability.

You can also create VLANs to isolate different types of traffic over a bond. For example, you can create a bond to provide two VLAN interfaces, one for the public network, and the second for the cluster network. However, this is not required when setting up Ceph networking. Details on bonding the interfaces can be found in https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-network.html#sec-network-iface-bonding.

Fault tolerance can be enhanced through isolating the components into failure domains. To improve fault tolerance of the network, bonding one interface from two separate Network Interface Cards (NIC) offers protection against failure of a single NIC. Similarly, creating a bond across two switches protects against failure of a switch. We recommend consulting with the network equipment vendor in order to architect the level of fault tolerance required.

An additional administration network setup (for example, one that separates SSH, Salt, or DNS networking) is neither tested nor supported.

If your storage nodes are configured via DHCP, the default timeouts may not be sufficient for the network to be configured correctly before the various Ceph daemons start. If this happens, the Ceph MONs and OSDs will not start correctly (running systemctl status ceph\* will result in "unable to bind" errors). To avoid this issue, we recommend increasing the DHCP client timeout to at least 30 seconds on each node in your storage cluster. This can be done by changing the following settings on each node:

In /etc/sysconfig/network/dhcp, set

DHCLIENT_WAIT_AT_BOOT="30"

In /etc/sysconfig/network/config, set

WAIT_FOR_INTERFACES="60"
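When many nodes need to be audited, the two settings can be checked programmatically. The following is a minimal sketch that parses sysconfig-style KEY="value" text and compares the values against the thresholds given here; the helper names are hypothetical, and the sample strings stand in for the contents of the two files.

```python
import shlex


def parse_sysconfig(text: str) -> dict:
    """Parse KEY="value" lines, skipping blank lines and comments."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, raw = line.partition("=")
        parts = shlex.split(raw)  # strips shell-style quoting
        settings[key.strip()] = parts[0] if parts else ""
    return settings


def meets_minimum(settings: dict, key: str, minimum: int) -> bool:
    """Return True if the setting exists and its integer value is >= minimum."""
    try:
        return int(settings.get(key, "")) >= minimum
    except ValueError:
        return False


# Sample contents standing in for the two configuration files.
dhcp = parse_sysconfig('# DHCP client settings\nDHCLIENT_WAIT_AT_BOOT="30"\n')
config = parse_sysconfig('WAIT_FOR_INTERFACES="60"\n')

print(meets_minimum(dhcp, "DHCLIENT_WAIT_AT_BOOT", 30))
print(meets_minimum(config, "WAIT_FOR_INTERFACES", 60))
```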

2.1.1.1 Adding a private network to a running cluster #

If you do not specify a cluster network during Ceph deployment, Ceph assumes a single public network environment. While Ceph operates fine with only a public network, its performance and security improve when you set up a second, private cluster network. To support two networks, each Ceph node needs to have at least two network cards.

You need to apply the following changes to each Ceph node. It is relatively quick to do for a small cluster, but can be very time consuming if you have a cluster consisting of hundreds or thousands of nodes.

Set the cluster network using the following command:

# ceph config set global cluster_network MY_NETWORK

Restart the OSDs to bind to the specified cluster network:

# systemctl restart ceph-*@osd.*.service

Check that the private cluster network works as expected on the OS level.

2.1.1.2 Monitoring nodes on different subnets #

If the monitor nodes are on multiple subnets, for example they are located in different rooms and served by different switches, you need to specify their public network address in CIDR notation:

cephuser@adm > ceph config set mon public_network "MON_NETWORK_1, MON_NETWORK_2, MON_NETWORK_N"

For example:

cephuser@adm > ceph config set mon public_network "192.168.1.0/24, 10.10.0.0/16"

If you do specify more than one network segment for the public (or cluster) network as described in this section, each of these subnets must be capable of routing to all the others. Otherwise, the MONs and other Ceph daemons on different network segments will not be able to communicate and a split cluster will ensue. Additionally, if you are using a firewall, make sure you include each IP address or subnet in your iptables and open ports for them on all nodes as necessary.
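Before applying a multi-subnet value, it can help to confirm that every entry is valid CIDR notation. The following is a minimal sketch using Python's standard ipaddress module; the function name is hypothetical, and the check covers syntax only, not whether the subnets can actually route to each other.

```python
import ipaddress


def parse_public_network(value: str) -> list:
    """Split a comma-separated public_network value into network objects.

    Raises ValueError if an entry is not a valid CIDR network, for example
    if host bits are set (192.168.1.5/24).
    """
    entries = [item.strip() for item in value.split(",") if item.strip()]
    return [ipaddress.ip_network(entry) for entry in entries]


networks = parse_public_network("192.168.1.0/24, 10.10.0.0/16")
for net in networks:
    print(net, net.num_addresses)
```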

2.2 Multiple architecture configurations #

SUSE Enterprise Storage supports both x86 and Arm architectures. When considering each architecture, it is important to note that from a cores per OSD, frequency, and RAM perspective, there is no real difference between CPU architectures for sizing.

As with smaller x86 processors (non-server), lower-performance Arm-based cores may not provide an optimal experience, especially when used for erasure coded pools.

Throughout the documentation, SYSTEM-ARCH is used in place of x86 or Arm.

2.3 Hardware configuration #

For the best product experience, we recommend starting with the recommended cluster configuration. For a test cluster or a cluster with lower performance requirements, we document a minimal supported cluster configuration.

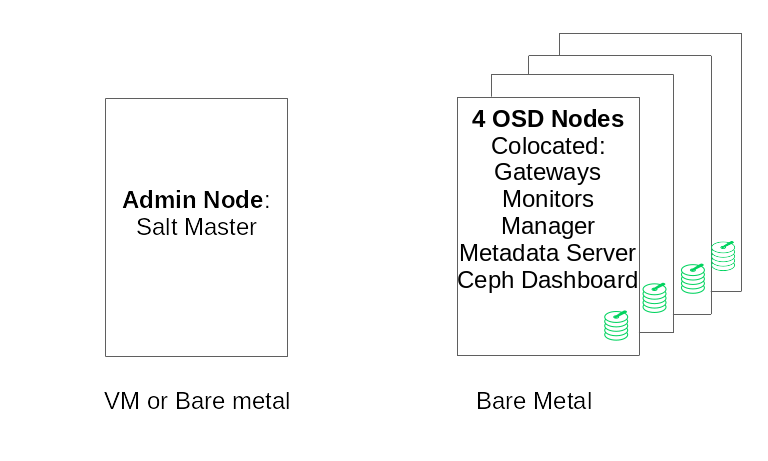

2.3.1 Minimum cluster configuration #

A minimal product cluster configuration consists of:

At least four physical nodes (OSD nodes) with co-location of services

Dual-10 Gb Ethernet as a bonded network

A separate Admin Node (can be virtualized on an external node)

A detailed configuration is:

Separate Admin Node with 4 GB RAM, four cores, 1 TB storage capacity. This is typically the Salt Master node. Ceph services and gateways, such as Ceph Monitor, Metadata Server, Ceph OSD, Object Gateway, or NFS Ganesha are not supported on the Admin Node as it needs to orchestrate the cluster update and upgrade processes independently.

At least four physical OSD nodes, with eight OSD disks each, see Section 2.4.1, “Minimum requirements” for requirements.

The total capacity of the cluster should be sized so that even with one node unavailable, the total used capacity (including redundancy) does not exceed 80%.

Three Ceph Monitor instances. Monitors need to be run from SSD/NVMe storage, not HDDs, for latency reasons.

Monitors, Metadata Server, and gateways can be co-located on the OSD nodes, see Section 2.12, “OSD and monitor sharing one server” for monitor co-location. If you co-locate services, the memory and CPU requirements need to be added up.

iSCSI Gateway, Object Gateway, and Metadata Server each require at least an additional 4 GB of RAM and four cores.

If you are using CephFS, S3/Swift, or iSCSI, at least two instances of the respective roles (Metadata Server, Object Gateway, iSCSI) are required for redundancy and availability.

The nodes are to be dedicated to SUSE Enterprise Storage and must not be used for any other physical, containerized, or virtualized workload.

If any of the gateways (iSCSI, Object Gateway, NFS Ganesha, Metadata Server, ...) are deployed within VMs, these VMs must not be hosted on the physical machines serving other cluster roles. (This is unnecessary, as they are supported as collocated services.)

When deploying services as VMs on hypervisors outside the core physical cluster, failure domains must be respected to ensure redundancy.

For example, do not deploy multiple roles of the same type on the same hypervisor, such as multiple MON or MDS instances.

When deploying inside VMs, it is particularly crucial to ensure that the nodes have strong network connectivity and reliable time synchronization.

The hypervisor nodes must be adequately sized to avoid interference by other workloads consuming CPU, RAM, network, and storage resources.

2.3.2 Recommended production cluster configuration #

Once you grow your cluster, we recommend relocating Ceph Monitors, Metadata Servers, and Gateways to separate nodes for better fault tolerance.

Seven Object Storage Nodes

No single node exceeds ~15% of total storage.

The total capacity of the cluster should be sized so that even with one node unavailable, the total used capacity (including redundancy) does not exceed 80%.

25 Gb Ethernet or better, bonded for internal cluster and external public network each.

56+ OSDs per storage cluster.

See Section 2.4.1, “Minimum requirements” for further recommendation.

Dedicated physical infrastructure nodes.

Three Ceph Monitor nodes: 4 GB RAM, 4 core processor, RAID 1 SSDs for disk.

See Section 2.5, “Monitor nodes” for further recommendation.

Object Gateway nodes: 32 GB RAM, 8 core processor, RAID 1 SSDs for disk.

See Section 2.6, “Object Gateway nodes” for further recommendation.

iSCSI Gateway nodes: 16 GB RAM, 8 core processor, RAID 1 SSDs for disk.

See Section 2.9, “iSCSI Gateway nodes” for further recommendation.

Metadata Server nodes (one active/one hot standby): 32 GB RAM, 8 core processor, RAID 1 SSDs for disk.

See Section 2.7, “Metadata Server nodes” for further recommendation.

One SES Admin Node: 4 GB RAM, 4 core processor, RAID 1 SSDs for disk.
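The two capacity rules above (no node above roughly 15% of total storage, and at most 80% used with one node unavailable) can be checked with a short calculation. The following is a minimal sketch; the function name is hypothetical, and it assumes the worst case where the unavailable node is the largest one and that used capacity is given as raw usage including redundancy.

```python
def check_capacity(node_capacities_tb, used_raw_tb,
                   max_node_share=0.15, max_fill=0.80):
    """Evaluate the per-node share and the fill level after losing one node.

    node_capacities_tb: raw capacity of each OSD node in TB.
    used_raw_tb: raw used capacity, including redundancy, in TB.
    """
    if len(node_capacities_tb) < 2:
        raise ValueError("at least two nodes are needed for this check")
    total = sum(node_capacities_tb)
    largest = max(node_capacities_tb)
    share = largest / total
    # Assumption: the largest node is the one that becomes unavailable.
    fill_after_loss = used_raw_tb / (total - largest)
    return {
        "largest_node_share": share,
        "share_ok": share <= max_node_share,
        "fill_after_loss": fill_after_loss,
        "fill_ok": fill_after_loss <= max_fill,
    }


# Seven equal nodes of 100 TB each with 450 TB of raw data stored.
result = check_capacity([100] * 7, used_raw_tb=450)
print(result)
```

With seven equal nodes, each node holds one seventh (about 14.3%) of the total, which is why seven nodes is the smallest count that satisfies the 15% guideline.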

2.3.3 Multipath configuration #

If you want to use multipath hardware, ensure that LVM sees multipath_component_detection = 1 in the configuration file under the devices section. This can be checked via the lvm config command.

Alternatively, ensure that LVM filters a device's mpath components via the LVM filter configuration. This will be host specific.

This is not recommended and should only ever be considered if multipath_component_detection = 1 cannot be set.

For more information on multipath configuration, see https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-multipath.html#sec-multipath-lvm.

2.4 Object Storage Nodes #

2.4.1 Minimum requirements #

The following CPU recommendations account for devices independent of usage by Ceph:

1x 2GHz CPU Thread per spinner.

2x 2GHz CPU Thread per SSD.

4x 2GHz CPU Thread per NVMe.

Separate 10 GbE networks (public/client and internal), required 4x 10 GbE, recommended 2x 25 GbE.

Total RAM required = number of OSDs x (1 GB + osd_memory_target) + 16 GB

Refer to Section 28.4.1, “Configuring automatic cache sizing” for more details on osd_memory_target.

OSD disks in JBOD configurations or individual RAID-0 configurations.

OSD journal can reside on OSD disk.

OSD disks should be exclusively used by SUSE Enterprise Storage.

Dedicated disk and SSD for the operating system, preferably in a RAID 1 configuration.

Allocate at least an additional 4 GB of RAM if this OSD host will host part of a cache pool used for cache tiering.

Ceph Monitors, gateway and Metadata Servers can reside on Object Storage Nodes.

For disk performance reasons, OSD nodes are bare metal nodes. No other workloads should run on an OSD node unless it is a minimal setup of Ceph Monitors and Ceph Managers.

SSDs for Journal with 6:1 ratio SSD journal to OSD.

Ensure that OSD nodes do not have any networked block devices mapped, such as iSCSI or RADOS Block Device images.
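The CPU and RAM rules in this list can be combined into a quick sizing estimate. The following is a minimal sketch; the function names and device labels are hypothetical, and the default osd_memory_target of 4 GB is taken from the value mentioned elsewhere in this chapter.

```python
# 2 GHz CPU threads recommended per device type, as listed above.
THREADS_PER_DEVICE = {"hdd": 1, "ssd": 2, "nvme": 4}


def osd_node_cpu_threads(devices: dict) -> int:
    """Sum the recommended 2 GHz threads for a mapping of device type to count."""
    return sum(THREADS_PER_DEVICE[kind] * count for kind, count in devices.items())


def osd_node_ram_gb(num_osds: int, osd_memory_target_gb: float = 4.0) -> float:
    """Total RAM = number of OSDs x (1 GB + osd_memory_target) + 16 GB."""
    return num_osds * (1 + osd_memory_target_gb) + 16


# Example node: eight spinning disks and two NVMe devices, all used as OSDs.
devices = {"hdd": 8, "nvme": 2}
print(osd_node_cpu_threads(devices))        # 8 * 1 + 2 * 4 = 16 threads
print(osd_node_ram_gb(sum(devices.values())))  # 10 * (1 + 4) + 16 = 66 GB
```

Co-located services, such as monitors or gateways, add their own requirements on top of these figures.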

2.4.2 Minimum disk size #

There are two types of disk space needed to run an OSD: the space for the WAL/DB device, and the primary space for the stored data. The minimum (and default) value for the WAL/DB is 6 GB. The minimum space for data is 5 GB, as partitions smaller than 5 GB are automatically assigned the weight of 0.

So although the minimum disk space for an OSD is 11 GB, we do not recommend a disk smaller than 20 GB, even for testing purposes.

2.4.3 Recommended size for the BlueStore's WAL and DB device #

We recommend reserving 4 GB for the WAL device. While the minimal DB size is 64 GB for RBD-only workloads, the recommended DB size for Object Gateway and CephFS workloads is 2% of the main device capacity (but at least 196 GB).

Important: We recommend larger DB volumes for high-load deployments, especially if there is high RGW or CephFS usage. Reserve some capacity (slots) to install more hardware for more DB space if required.
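The DB sizing guidance can be expressed as a small helper. The following is a minimal sketch, assuming sizes in GB and the workload labels "rbd", "rgw", and "cephfs", which are hypothetical names chosen for this example.

```python
def recommended_db_size_gb(main_device_gb: float, workload: str) -> float:
    """Return the DB device size suggested by the guidance above.

    RBD-only workloads: the minimal DB size of 64 GB.
    Object Gateway and CephFS workloads: 2% of the main device, at least 196 GB.
    """
    if workload == "rbd":
        return 64.0
    if workload in ("rgw", "cephfs"):
        return max(main_device_gb * 2 / 100, 196.0)
    raise ValueError(f"unknown workload: {workload!r}")


print(recommended_db_size_gb(12000, "rgw"))     # 2% of 12 TB exceeds the floor
print(recommended_db_size_gb(4000, "cephfs"))   # 2% of 4 TB is below the floor
print(recommended_db_size_gb(4000, "rbd"))
```

These values are starting points; as noted above, high-load deployments may need larger DB volumes.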

If you intend to put the WAL and DB device on the same disk, then we recommend using a single partition for both devices, rather than having a separate partition for each. This allows Ceph to use the DB device for the WAL operation as well. Management of the disk space is therefore more effective as Ceph uses the DB partition for the WAL only if there is a need for it. Another advantage is that the probability that the WAL partition gets full is very small, and when it is not used fully then its space is not wasted but used for DB operation.

To share the DB device with the WAL, do not specify the WAL device, and specify only the DB device.

Find more information about specifying an OSD layout in Section 13.4.3, “Adding OSDs using DriveGroups specification”.

2.4.5 Maximum recommended number of disks #

You can have as many disks in one server as it allows. There are a few things to consider when planning the number of disks per server:

Network bandwidth. The more disks you have in a server, the more data must be transferred via the network card(s) for the disk write operations.

Memory. RAM above 2 GB is used for the BlueStore cache. With the default osd_memory_target of 4 GB, the system has a reasonable starting cache size for spinning media. If using SSD or NVMe, consider increasing the cache size and RAM allocation per OSD to maximize performance.

Fault tolerance. If the complete server fails, the more disks it has, the more OSDs the cluster temporarily loses. Moreover, to keep the replication rules running, you need to copy all the data from the failed server among the other nodes in the cluster.

2.5 Monitor nodes #

At least three MON nodes are required. The number of monitors should always be odd (1+2n).

4 GB of RAM.

Processor with four logical cores.

An SSD or other sufficiently fast storage type is highly recommended for monitors, specifically for the /var/lib/ceph path on each monitor node, as quorum may be unstable with high disk latencies. Two disks in a RAID 1 configuration are recommended for redundancy. It is recommended that separate disks or at least separate disk partitions are used for the monitor processes to protect the monitor's available disk space from things like log file creep.

There must only be one monitor process per node.

Mixing OSD, MON, or Object Gateway nodes is only supported if sufficient hardware resources are available. That means that the requirements for all services need to be added up.

Two network interfaces bonded to multiple switches.
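The recommendation for an odd number of monitors follows from majority-based quorum: an even count adds a node without tolerating any additional failure. The following is a minimal sketch of that arithmetic; the function names are hypothetical.

```python
def quorum_size(monitor_count: int) -> int:
    """Smallest strict majority of the monitors."""
    return monitor_count // 2 + 1


def tolerated_failures(monitor_count: int) -> int:
    """How many monitors can fail while a majority remains."""
    return monitor_count - quorum_size(monitor_count)


for count in range(1, 8):
    print(count, quorum_size(count), tolerated_failures(count))
```

Three and four monitors both tolerate a single failure, while five tolerate two, so the even counts add cost without improving availability.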

2.6 Object Gateway nodes #

Object Gateway nodes should have at least six CPU cores and 32 GB of RAM. When other processes are co-located on the same machine, their requirements need to be added up.

2.7 Metadata Server nodes #

Proper sizing of the Metadata Server nodes depends on the specific use case. Generally, the more open files the Metadata Server is to handle, the more CPU and RAM it needs. The following are the minimum requirements:

4 GB of RAM for each Metadata Server daemon.

Bonded network interface.

2.5 GHz CPU with at least 2 cores.

2.8 Admin Node #

At least 4 GB of RAM and a quad-core CPU are required. This includes running the Salt Master on the Admin Node. For large clusters with hundreds of nodes, 6 GB of RAM is suggested.

2.9 iSCSI Gateway nodes #

iSCSI Gateway nodes should have at least six CPU cores and 16 GB of RAM.

2.10 SES and other SUSE products #

This section contains important information about integrating SES with other SUSE products.

2.10.1 SUSE Manager #

SUSE Manager and SUSE Enterprise Storage are not integrated, therefore SUSE Manager cannot currently manage an SES cluster.

2.11 Name limitations #

Ceph does not generally support non-ASCII characters in configuration files, pool names, user names and so forth. When configuring a Ceph cluster we recommend using only simple alphanumeric characters (A-Z, a-z, 0-9) and minimal punctuation ('.', '-', '_') in all Ceph object/configuration names.
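A naming check along these lines can be automated. The following is a minimal sketch that accepts only the characters recommended above; the function name is hypothetical, and this reflects the recommendation in this section rather than a rule enforced by Ceph itself.

```python
import re

# Alphanumeric ASCII characters plus '.', '-' and '_'.
SAFE_NAME = re.compile(r"[A-Za-z0-9._-]+")


def is_recommended_name(name: str) -> bool:
    """Return True if the name uses only the recommended characters."""
    return SAFE_NAME.fullmatch(name) is not None


for candidate in ["rbd_pool-1", "my pool", "pöol", ""]:
    print(repr(candidate), is_recommended_name(candidate))
```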