- About this guide

- I Introducing SUSE Enterprise Storage (SES)

- 1 SES and Ceph

- 2 Hardware requirements and recommendations

- 2.1 Network overview

- 2.2 Multiple architecture configurations

- 2.3 Hardware configuration

- 2.4 Object Storage Nodes

- 2.5 Monitor nodes

- 2.6 Object Gateway nodes

- 2.7 Metadata Server nodes

- 2.8 Admin Node

- 2.9 iSCSI Gateway nodes

- 2.10 SES and other SUSE products

- 2.11 Name limitations

- 2.12 OSD and monitor sharing one server

- 3 Admin Node HA setup

- II Deploying Ceph Cluster

- III Upgrading from Previous Releases

- A Ceph maintenance updates based on upstream 'Pacific' point releases

- Glossary

- 1.1 Interfaces to the Ceph object store

- 1.2 Small scale Ceph example

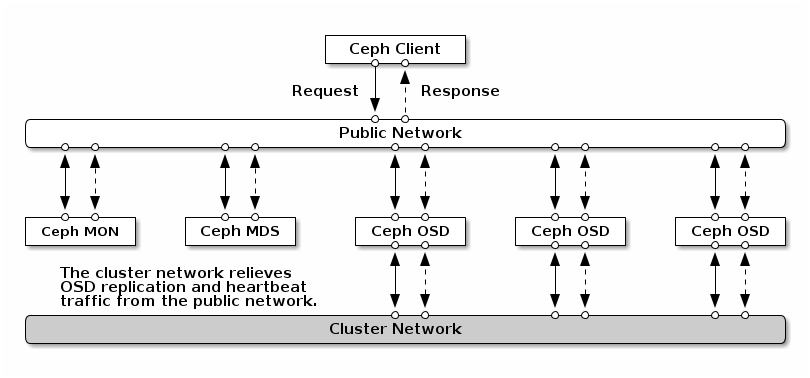

- 2.1 Network overview

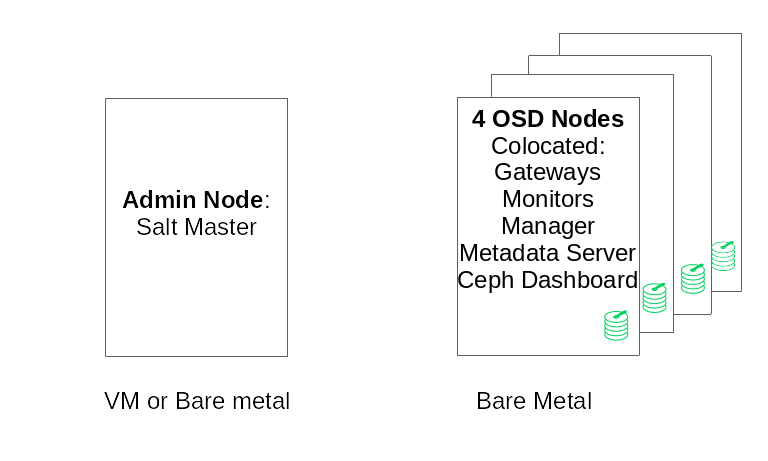

- 2.2 Minimum cluster configuration

- 3.1 2-Node HA cluster for Admin Node

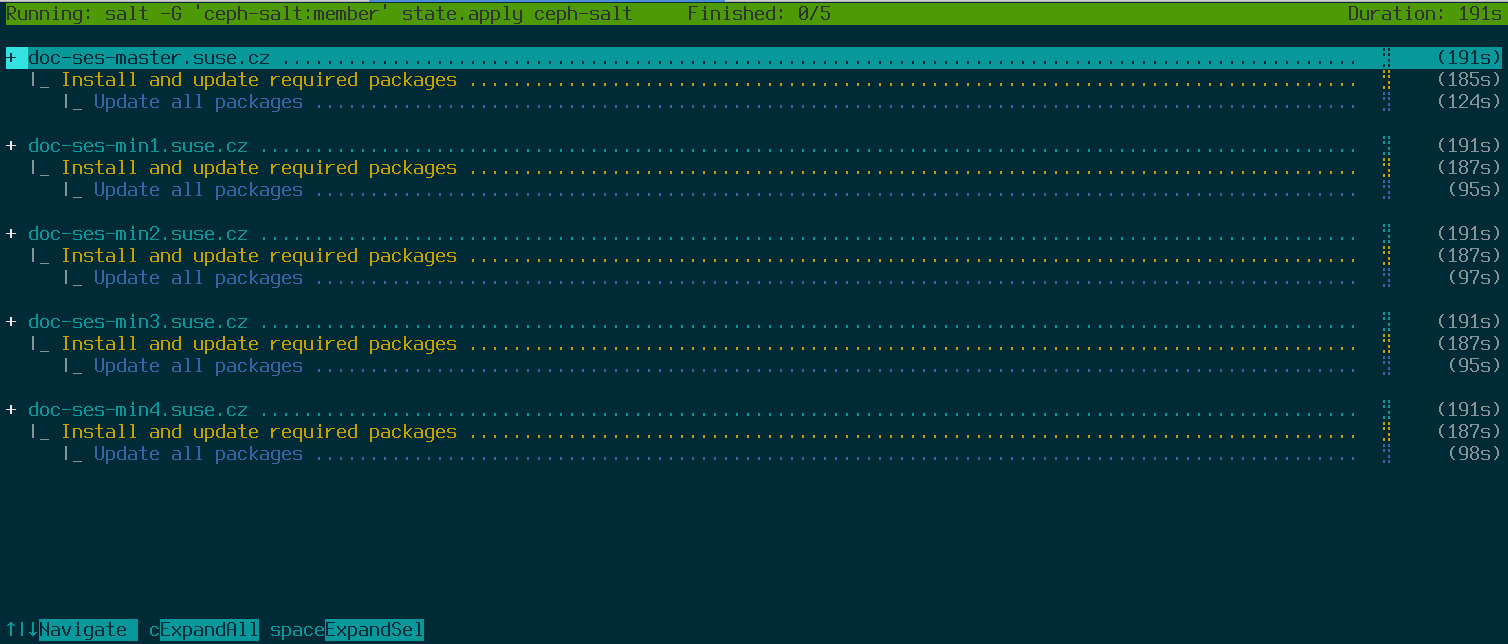

- 7.1 Deployment of a minimal cluster

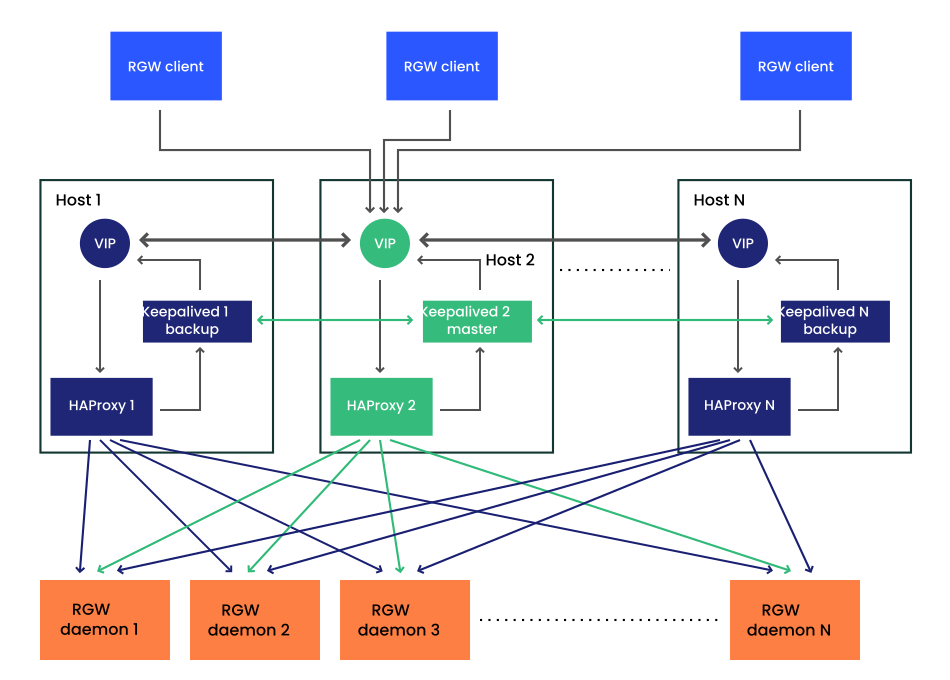

- 8.1 High Availability setup for Object Gateway

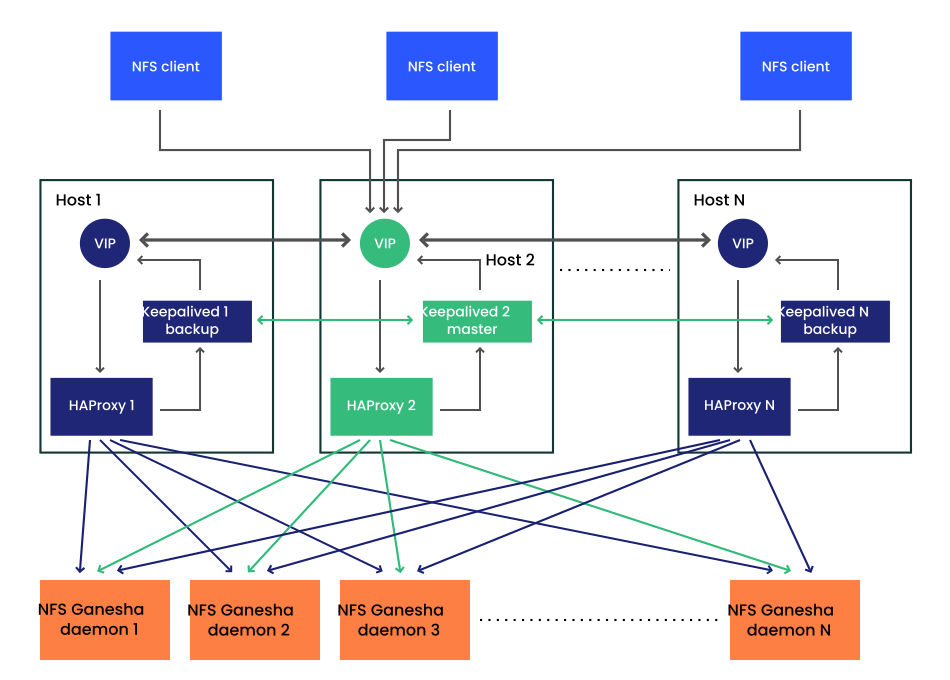

- 8.2 High Availability setup for NFS Ganesha

- 9.1 Ceph Cluster with a Single iSCSI Gateway

- 9.2 Ceph cluster with multiple iSCSI gateways

Copyright © 2020–2025 SUSE LLC and contributors. All rights reserved.

Except where otherwise noted, this document is licensed under Creative Commons Attribution-ShareAlike 4.0 International (CC-BY-SA 4.0): https://creativecommons.org/licenses/by-sa/4.0/legalcode.

For SUSE trademarks, see http://www.suse.com/company/legal/. All third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks.

All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof.

About this guide #

This guide focuses on deploying a basic Ceph cluster, and how to deploy additional services. It also covers the steps for upgrading to SUSE Enterprise Storage 7.1 from the previous product version.

SUSE Enterprise Storage 7.1 is an extension to SUSE Linux Enterprise Server 15 SP3. It combines the capabilities of the Ceph (http://ceph.com/) storage project with the enterprise engineering and support of SUSE. SUSE Enterprise Storage 7.1 provides IT organizations with the ability to deploy a distributed storage architecture that can support a number of use cases using commodity hardware platforms.

1 Available documentation #

Documentation for our products is available at https://documentation.suse.com, where you can also find the latest updates, and browse or download the documentation in various formats. The latest documentation updates can be found in the English language version.

In addition, the product documentation is available in your installed system under /usr/share/doc/manual. It is included in an RPM package named ses-manual_LANG_CODE. Install it if it is not already on your system, for example:

# zypper install ses-manual_en

The following documentation is available for this product:

- Deployment Guide

This guide focuses on deploying a basic Ceph cluster, and how to deploy additional services. It also covers the steps for upgrading to SUSE Enterprise Storage 7.1 from the previous product version.

- Administration and Operations Guide

This guide focuses on routine tasks that you as an administrator need to take care of after the basic Ceph cluster has been deployed (day 2 operations). It also describes all the supported ways to access data stored in a Ceph cluster.

- Security Hardening Guide

This guide focuses on how to ensure your cluster is secure.

- Troubleshooting Guide

This guide takes you through various common problems when running SUSE Enterprise Storage 7.1 and other issues related to relevant components such as Ceph or Object Gateway.

- SUSE Enterprise Storage for Windows Guide

This guide describes the integration, installation, and configuration of Microsoft Windows environments and SUSE Enterprise Storage using the Windows Driver.

2 Improving the documentation #

Your feedback and contributions to this documentation are welcome. The following channels for giving feedback are available:

- Service requests and support

For services and support options available for your product, see http://www.suse.com/support/.

To open a service request, you need a SUSE subscription registered at SUSE Customer Center. Go to https://scc.suse.com/support/requests, log in, and create a new request.

- Bug reports

Report issues with the documentation at https://bugzilla.suse.com/. A Bugzilla account is required.

To simplify this process, you can use the links next to headlines in the HTML version of this document. These preselect the right product and category in Bugzilla and add a link to the current section. You can start typing your bug report right away.

- Contributions

To contribute to this documentation, use the links next to headlines in the HTML version of this document. They take you to the source code on GitHub, where you can open a pull request. A GitHub account is required.

Note: only available for English

The contribution links are only available for the English version of each document. For all other languages, use the bug report links instead.

For more information about the documentation environment used for this documentation, see the repository's README at https://github.com/SUSE/doc-ses.

You can also report errors and send feedback concerning the documentation to <doc-team@suse.com>. Include the document title, the product version, and the publication date of the document. Additionally, include the relevant section number and title (or provide the URL) and provide a concise description of the problem.

3 Documentation conventions #

The following notices and typographic conventions are used in this document:

- /etc/passwd: Directory names and file names

- PLACEHOLDER: Replace PLACEHOLDER with the actual value

- PATH: An environment variable

- ls, --help: Commands, options, and parameters

- user: The name of user or group

- package_name: The name of a software package

- Alt, Alt–F1: A key to press or a key combination. Keys are shown in uppercase as on a keyboard.

- Menu items and buttons; › separates the levels of a menu path

- AMD/Intel: This paragraph is only relevant for the AMD64/Intel 64 architectures. The arrows mark the beginning and the end of the text block.

- IBM Z, POWER: This paragraph is only relevant for the architectures IBM Z and POWER. The arrows mark the beginning and the end of the text block.

- Chapter 1, “Example chapter”: A cross-reference to another chapter in this guide.

- Commands that must be run with root privileges. Often you can also prefix these commands with the sudo command to run them as non-privileged user.

# command

> sudo command

- Commands that can be run by non-privileged users.

> command

- Notices

Warning: Warning notice

Vital information you must be aware of before proceeding. Warns you about security issues, potential loss of data, damage to hardware, or physical hazards.

Important: Important notice

Important information you should be aware of before proceeding.

Note: Note notice

Additional information, for example about differences in software versions.

Tip: Tip notice

Helpful information, like a guideline or a piece of practical advice.

Compact Notices

Additional information, for example about differences in software versions.

Helpful information, like a guideline or a piece of practical advice.

4 Support #

Find the support statement for SUSE Enterprise Storage and general information about technology previews below. For details about the product lifecycle, see https://www.suse.com/lifecycle.

If you are entitled to support, find details on how to collect information for a support ticket at https://documentation.suse.com/sles-15/html/SLES-all/cha-adm-support.html.

4.1 Support statement for SUSE Enterprise Storage #

To receive support, you need an appropriate subscription with SUSE. To view the specific support offerings available to you, go to https://www.suse.com/support/ and select your product.

The support levels are defined as follows:

- L1

Problem determination, which means technical support designed to provide compatibility information, usage support, ongoing maintenance, information gathering and basic troubleshooting using available documentation.

- L2

Problem isolation, which means technical support designed to analyze data, reproduce customer problems, isolate problem area and provide a resolution for problems not resolved by Level 1 or prepare for Level 3.

- L3

Problem resolution, which means technical support designed to resolve problems by engaging engineering to resolve product defects which have been identified by Level 2 Support.

For contracted customers and partners, SUSE Enterprise Storage is delivered with L3 support for all packages, except for the following:

Technology previews.

Sound, graphics, fonts, and artwork.

Packages that require an additional customer contract.

Some packages shipped as part of the module Workstation Extension are L2-supported only.

Packages with names ending in -devel (containing header files and similar developer resources) will only be supported together with their main packages.

SUSE will only support the usage of original packages. That is, packages that are unchanged and not recompiled.

4.2 Technology previews #

Technology previews are packages, stacks, or features delivered by SUSE to provide glimpses into upcoming innovations. Technology previews are included for your convenience to give you a chance to test new technologies within your environment. We would appreciate your feedback! If you test a technology preview, please contact your SUSE representative and let them know about your experience and use cases. Your input is helpful for future development.

Technology previews have the following limitations:

Technology previews are still in development. Therefore, they may be functionally incomplete, unstable, or in other ways not suitable for production use.

Technology previews are not supported.

Technology previews may only be available for specific hardware architectures.

Details and functionality of technology previews are subject to change. As a result, upgrading to subsequent releases of a technology preview may be impossible and require a fresh installation.

SUSE may discover that a preview does not meet customer or market needs, or does not comply with enterprise standards. Technology previews can be removed from a product at any time. SUSE does not commit to providing a supported version of such technologies in the future.

For an overview of technology previews shipped with your product, see the release notes at https://www.suse.com/releasenotes/x86_64/SUSE-Enterprise-Storage/7.1.

5 Ceph contributors #

The Ceph project and its documentation are the result of the work of hundreds of contributors and organizations. See https://ceph.com/contributors/ for more details.

6 Commands and command prompts used in this guide #

As a Ceph cluster administrator, you will be configuring and adjusting the cluster behavior by running specific commands. There are several types of commands you will need:

6.1 Salt-related commands #

These commands help you to deploy Ceph cluster nodes, run commands on several (or all) cluster nodes at the same time, or assist you when adding or removing cluster nodes. The most frequently used commands are ceph-salt and ceph-salt config. You need to run Salt commands on the Salt Master node as root. These commands are introduced with the following prompt:

root@master #

For example:

root@master # ceph-salt config ls

6.2 Ceph related commands #

These are lower-level commands to configure and fine tune all aspects of the cluster and its gateways on the command line, for example ceph, cephadm, rbd, or radosgw-admin.

To run Ceph related commands, you need to have read access to a Ceph key. The key's capabilities then define your privileges within the Ceph environment. One option is to run Ceph commands as root (or via sudo) and use the unrestricted default keyring 'ceph.client.admin.key'.

The safer and recommended option is to create a more restrictive individual key for each administrator user and put it in a directory where the users can read it, for example:

~/.ceph/ceph.client.USERNAME.keyring

To use a custom admin user and keyring, you need to specify the user name and path to the key each time you run the ceph command using the -n client.USER_NAME and --keyring PATH/TO/KEYRING options.

To avoid this, include these options in the CEPH_ARGS variable in the individual users' ~/.bashrc files.

Although you can run Ceph-related commands on any cluster node, we recommend running them on the Admin Node. This documentation uses the cephuser user to run the commands, therefore they are introduced with the following prompt:

cephuser@adm >

For example:

cephuser@adm > ceph auth list

If the documentation instructs you to run a command on a cluster node with a specific role, it will be addressed by the prompt. For example:

cephuser@mon >

6.2.1 Running ceph-volume #

Starting with SUSE Enterprise Storage 7, Ceph services are running containerized. If you need to run ceph-volume on an OSD node, you need to prepend it with the cephadm command, for example:

cephuser@adm > cephadm ceph-volume simple scan

6.3 General Linux commands #

Linux commands not related to Ceph, such as mount, cat, or openssl, are introduced either with the cephuser@adm > or # prompts, depending on which privileges the related command requires.

6.4 Additional information #

For more information on Ceph key management, refer to Book “Administration and Operations Guide”, Chapter 30 “Authentication with cephx”, Section 30.2 “Key management”.

Part I Introducing SUSE Enterprise Storage (SES) #

- 1 SES and Ceph

SUSE Enterprise Storage is a distributed storage system designed for scalability, reliability and performance which is based on the Ceph technology. A Ceph cluster can be run on commodity servers in a common network like Ethernet. The cluster scales up well to thousands of servers (later on referred…

- 2 Hardware requirements and recommendations

The hardware requirements of Ceph are heavily dependent on the IO workload. The following hardware requirements and recommendations should be considered as a starting point for detailed planning.

- 3 Admin Node HA setup

The Admin Node is a Ceph cluster node where the Salt Master service runs. It manages the rest of the cluster nodes by querying and instructing their Salt Minion services. It usually includes other services as well, for example the Grafana dashboard backed by the Prometheus monitoring toolkit.

1 SES and Ceph #

SUSE Enterprise Storage is a distributed storage system designed for scalability, reliability and performance which is based on the Ceph technology. A Ceph cluster can be run on commodity servers in a common network like Ethernet. The cluster scales up well to thousands of servers (later on referred to as nodes) and into the petabyte range. As opposed to conventional systems which have allocation tables to store and fetch data, Ceph uses a deterministic algorithm to allocate storage for data and has no centralized information structure. Ceph assumes that in storage clusters the addition or removal of hardware is the rule, not the exception. The Ceph cluster automates management tasks such as data distribution and redistribution, data replication, failure detection and recovery. Ceph is both self-healing and self-managing which results in a reduction of administrative and budget overhead.

This chapter provides a high level overview of SUSE Enterprise Storage 7.1 and briefly describes the most important components.

1.1 Ceph features #

The Ceph environment has the following features:

- Scalability

Ceph can scale to thousands of nodes and manage storage in the range of petabytes.

- Commodity Hardware

No special hardware is required to run a Ceph cluster. For details, see Chapter 2, Hardware requirements and recommendations.

- Self-managing

The Ceph cluster is self-managing. When nodes are added, removed or fail, the cluster automatically redistributes the data. It is also aware of overloaded disks.

- No Single Point of Failure

No node in a cluster stores important information alone. The number of redundancies can be configured.

- Open Source Software

Ceph is an open source software solution and independent of specific hardware or vendors.

1.2 Ceph core components #

To make full use of Ceph's power, it is necessary to understand some of the basic components and concepts. This section introduces some parts of Ceph that are often referenced in other chapters.

1.2.1 RADOS #

The basic component of Ceph is called RADOS (Reliable Autonomic Distributed Object Store). It is responsible for managing the data stored in the cluster. Data in Ceph is usually stored as objects. Each object consists of an identifier and the data.

RADOS provides the following access methods to the stored objects that cover many use cases:

- Object Gateway

Object Gateway is an HTTP REST gateway for the RADOS object store. It enables direct access to objects stored in the Ceph cluster.

- RADOS Block Device

RADOS Block Device (RBD) can be accessed like any other block device. These can be used for example in combination with libvirt for virtualization purposes.

- CephFS

The Ceph File System is a POSIX-compliant file system.

- librados

librados is a library that can be used with many programming languages to create an application capable of directly interacting with the storage cluster.

librados is used by Object Gateway and RBD while CephFS directly interfaces with RADOS (see Figure 1.1, “Interfaces to the Ceph object store”).

1.2.2 CRUSH #

At the core of a Ceph cluster is the CRUSH algorithm. CRUSH is the acronym for Controlled Replication Under Scalable Hashing. CRUSH is a function that handles the storage allocation and needs comparably few parameters. That means only a small amount of information is necessary to calculate the storage position of an object. The parameters are a current map of the cluster including the health state, some administrator-defined placement rules and the name of the object that needs to be stored or retrieved. With this information, all nodes in the Ceph cluster are able to calculate where an object and its replicas are stored. This makes writing or reading data very efficient. CRUSH tries to evenly distribute data over all nodes in the cluster.

The CRUSH Map contains all storage nodes and administrator-defined placement rules for storing objects in the cluster. It defines a hierarchical structure that usually corresponds to the physical structure of the cluster. For example, the data-containing disks are in hosts, hosts are in racks, racks in rows and rows in data centers. This structure can be used to define failure domains. Ceph then ensures that replications are stored on different branches of a specific failure domain.

If the failure domain is set to rack, replications of objects are distributed over different racks. This can mitigate outages caused by a failed switch in a rack. If one power distribution unit supplies a row of racks, the failure domain can be set to row. When the power distribution unit fails, the replicated data is still available on other rows.
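The idea that every node can compute an object's location from the cluster map, the placement rules and the object name can be illustrated with a toy sketch. The code below is not the CRUSH algorithm: it uses rendezvous (highest-random-weight) hashing as a stand-in, and the names `place` and `cluster_map` are hypothetical. It only shows that placement is deterministic and that replicas land in different failure domains.

```python
import hashlib

def _score(obj_name: str, bucket: str) -> int:
    """Deterministic pseudo-random score for an (object, bucket) pair."""
    digest = hashlib.sha256(f"{obj_name}/{bucket}".encode()).digest()
    return int.from_bytes(digest[:8], "big")

def place(obj_name, cluster_map, replicas):
    """Pick `replicas` OSDs, each from a different failure domain.

    cluster_map maps a failure-domain name (for example a rack) to the
    list of OSD names it contains. Rendezvous hashing is used here as a
    stand-in; this is NOT the real CRUSH algorithm.
    """
    if replicas > len(cluster_map):
        raise ValueError("not enough failure domains for the replica count")
    # Rank failure domains by score and keep the best ones.
    domains = sorted(cluster_map, key=lambda d: _score(obj_name, d), reverse=True)
    chosen = []
    for domain in domains[:replicas]:
        # Within each chosen domain, pick the highest-scoring OSD.
        osd = max(cluster_map[domain], key=lambda o: _score(obj_name, o))
        chosen.append((domain, osd))
    return chosen

# Hypothetical three-rack layout with the failure domain set to rack.
cluster_map = {
    "rack1": ["osd.1", "osd.2", "osd.3"],
    "rack2": ["osd.4", "osd.5", "osd.6"],
    "rack3": ["osd.7", "osd.8", "osd.9"],
}
placement = place("my-object", cluster_map, replicas=2)
print(placement)
```

Because the result depends only on the map and the object name, any client or daemon that holds the same map arrives at the same answer without consulting a central lookup table.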

1.2.3 Ceph nodes and daemons #

In Ceph, nodes are servers working for the cluster. They can run several different types of daemons. We recommend running only one type of daemon on each node, except for Ceph Manager daemons which can be co-located with Ceph Monitors. Each cluster requires at least Ceph Monitor, Ceph Manager, and Ceph OSD daemons:

- Admin Node

The Admin Node is a Ceph cluster node from which you run commands to manage the cluster. The Admin Node is a central point of the Ceph cluster because it manages the rest of the cluster nodes by querying and instructing their Salt Minion services.

- Ceph Monitor

Ceph Monitor (often abbreviated as MON) nodes maintain information about the cluster health state, a map of all nodes and data distribution rules (see Section 1.2.2, “CRUSH”).

If failures or conflicts occur, the Ceph Monitor nodes in the cluster decide by majority which information is correct. To form a qualified majority, it is recommended to have an odd number of Ceph Monitor nodes, and at least three of them.

If more than one site is used, the Ceph Monitor nodes should be distributed over an odd number of sites. The number of Ceph Monitor nodes per site should be such that more than 50% of the Ceph Monitor nodes remain functional if one site fails.

- Ceph Manager

The Ceph Manager collects the state information from the whole cluster. The Ceph Manager daemon runs alongside the Ceph Monitor daemons. It provides additional monitoring, and interfaces with external monitoring and management systems. It includes other services as well. For example, the Ceph Dashboard Web UI runs on the same node as the Ceph Manager.

The Ceph Manager requires no additional configuration, beyond ensuring it is running.

- Ceph OSD

A Ceph OSD is a daemon that handles Object Storage Devices, which are physical or logical storage units: physical disks, partitions, or logical volumes. The daemon additionally takes care of data replication and rebalancing when nodes are added or removed.

Ceph OSD daemons communicate with monitor daemons and provide them with the state of the other OSD daemons.
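The multi-site rule for Ceph Monitors given in the list above (more than 50% of the monitors must remain functional if one site fails) can be checked with simple arithmetic. The following is a minimal sketch; the function name and the site names are hypothetical.

```python
def survives_single_site_failure(mons_per_site):
    """Return True if losing any one site still leaves a strict majority.

    mons_per_site maps a site name to the number of Ceph Monitors there.
    """
    total = sum(mons_per_site.values())
    for site, count in mons_per_site.items():
        remaining = total - count
        # A majority needs strictly more than half of ALL monitors.
        if remaining * 2 <= total:
            return False
    return True

# Five monitors over three sites: any single site can fail.
print(survives_single_site_failure({"site-a": 2, "site-b": 2, "site-c": 1}))  # True
# Five monitors over two sites: losing site-a leaves only 2 of 5.
print(survives_single_site_failure({"site-a": 3, "site-b": 2}))               # False
```

The second case shows why two sites are not enough: whichever site holds the larger share of monitors becomes a single point of failure for the majority.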

To use CephFS, Object Gateway, NFS Ganesha, or iSCSI Gateway, additional nodes are required:

- Metadata Server (MDS)

CephFS metadata is stored in its own RADOS pool (see Section 1.3.1, “Pools”). The Metadata Servers act as a smart caching layer for the metadata and serialize access when needed. This allows concurrent access from many clients without explicit synchronization.

- Object Gateway

The Object Gateway is an HTTP REST gateway for the RADOS object store. It is compatible with OpenStack Swift and Amazon S3 and has its own user management.

- NFS Ganesha

NFS Ganesha provides NFS access to either the Object Gateway or CephFS. It runs in user space instead of kernel space and directly interacts with the Object Gateway or CephFS.

- iSCSI Gateway

iSCSI is a storage network protocol that allows clients to send SCSI commands to SCSI storage devices (targets) on remote servers.

- Samba Gateway

The Samba Gateway provides Samba access to data stored on CephFS.

1.3 Ceph storage structure #

1.3.1 Pools #

Objects that are stored in a Ceph cluster are put into pools. Pools represent logical partitions of the cluster to the outside world. For each pool a set of rules can be defined, for example, how many replications of each object must exist. The standard configuration of pools is called replicated pool.

Pools usually contain whole objects but can also be configured to act similarly to RAID 5. In this configuration, objects are stored in chunks along with additional coding chunks. The coding chunks contain the redundant information. The number of data and coding chunks can be defined by the administrator. In this configuration, pools are referred to as erasure coded pools or EC pools.
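To make the RAID 5 comparison concrete, the sketch below splits data into k data chunks plus a single XOR coding chunk and rebuilds one lost chunk from the rest. This is an assumption-laden illustration: Ceph's erasure code plug-ins use more general codes that support several coding chunks, and the function names here are hypothetical.

```python
from functools import reduce

def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))

def encode(data: bytes, k: int):
    """Split data into k equal-size chunks plus one XOR parity chunk."""
    size = -(-len(data) // k)              # ceiling division
    padded = data.ljust(size * k, b"\0")   # pad so all chunks have equal length
    chunks = [padded[i * size:(i + 1) * size] for i in range(k)]
    parity = reduce(xor_bytes, chunks)
    return chunks, parity

def recover(chunks, parity, lost_index):
    """Rebuild one lost data chunk from the surviving chunks and the parity."""
    survivors = [c for i, c in enumerate(chunks) if i != lost_index]
    return reduce(xor_bytes, survivors, parity)

chunks, parity = encode(b"hello ceph!!", k=3)
assert recover(chunks, parity, lost_index=1) == chunks[1]

# Raw space consumed per byte stored is (k + m) / k; here k=3, m=1.
print((3 + 1) / 3)
```

Compared with a replicated pool that keeps three full copies, this layout tolerates the loss of one chunk while using far less raw capacity, at the cost of extra computation on writes and recovery.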

1.3.2 Placement groups #

Placement Groups (PGs) are used for the distribution of data within a pool. When creating a pool, a certain number of placement groups is set. The placement groups are used internally to group objects and are an important factor for the performance of a Ceph cluster. The PG for an object is determined by the object's name.
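The mapping from object name to placement group can be pictured as a hash of the name reduced to the number of PGs in the pool. The sketch below uses CRC32 and a plain modulo purely as stand-ins; Ceph's actual hash function and reduction scheme differ, and `pg_for_object` is a hypothetical helper.

```python
import zlib

def pg_for_object(pool_id: int, obj_name: str, pg_num: int) -> str:
    """Map an object name to a placement group ID within a pool.

    CRC32 and a plain modulo are illustrative stand-ins only.
    """
    pg_index = zlib.crc32(obj_name.encode()) % pg_num
    # PG IDs are written as <pool id>.<hexadecimal PG index>.
    return f"{pool_id}.{pg_index:x}"

# The same name always maps to the same PG of the same pool.
print(pg_for_object(1, "my-object", pg_num=4))
print(pg_for_object(1, "my-object", pg_num=4))
```

Since only the name and the pool's PG count are needed, a client can find the PG for any object without asking the cluster where that object lives.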

1.3.3 Example #

This section provides a simplified example of how Ceph manages data (see Figure 1.2, “Small scale Ceph example”). This example does not represent a recommended configuration for a Ceph cluster. The hardware setup consists of three storage nodes or Ceph OSDs (Host 1, Host 2, Host 3). Each node has three hard disks which are used as OSDs (osd.1 to osd.9). The Ceph Monitor nodes are omitted in this example.

While Ceph OSD or Ceph OSD daemon refers to a daemon that is run on a node, the word OSD refers to the logical disk that the daemon interacts with.

The cluster has two pools, Pool A and Pool B. While Pool A replicates objects only two times, resilience for Pool B is more important and it has three replications for each object.

When an application puts an object into a pool, for example via the REST API, a Placement Group (PG1 to PG4) is selected based on the pool and the object name. The CRUSH algorithm then calculates on which OSDs the object is stored, based on the Placement Group that contains the object.

In this example the failure domain is set to host. This ensures that replications of objects are stored on different hosts. Depending on the replication level set for a pool, the object is stored on two or three OSDs that are used by the Placement Group.

An application that writes an object only interacts with one Ceph OSD, the primary Ceph OSD. The primary Ceph OSD takes care of replication and confirms the completion of the write process after all other OSDs have stored the object.

If osd.5 fails, all objects in PG1 are still available on osd.1. As soon as the cluster recognizes that an OSD has failed, another OSD takes over. In this example osd.4 is used as a replacement for osd.5. The objects stored on osd.1 are then replicated to osd.4 to restore the replication level.

If a new node with new OSDs is added to the cluster, the cluster map is going to change. The CRUSH function then returns different locations for objects. Objects that receive new locations will be relocated. This process results in a balanced usage of all OSDs.

1.4 BlueStore #

BlueStore has been the default storage back-end for Ceph since SES 5. It has better performance than FileStore, full data check-summing, and built-in compression.

BlueStore manages either one, two, or three storage devices. In the simplest case, BlueStore consumes a single primary storage device. The storage device is normally partitioned into two parts:

A small partition named BlueFS that implements file system-like functionalities required by RocksDB.

The rest of the device is normally a single large partition. It is managed directly by BlueStore and contains all of the actual data. This primary device is normally identified by a block symbolic link in the data directory.

It is also possible to deploy BlueStore across two additional devices:

A WAL device can be used for BlueStore’s internal journal or write-ahead log. It is identified by the block.wal symbolic link in the data directory. It is only useful to use a separate WAL device if the device is faster than the primary device or the DB device, for example when:

- The WAL device is an NVMe, and the DB device is an SSD, and the data device is either SSD or HDD.

- Both the WAL and DB devices are separate SSDs, and the data device is an SSD or HDD.

A DB device can be used for storing BlueStore’s internal metadata. BlueStore (or rather, the embedded RocksDB) will put as much metadata as it can on the DB device to improve performance. Again, it is only helpful to provision a shared DB device if it is faster than the primary device.

Plan thoroughly to ensure sufficient size of the DB device. If the DB device fills up, metadata will spill over to the primary device, which badly degrades the OSD's performance.
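Spillover can be detected from the BlueFS counters that an OSD reports in the bluefs section of its perf dump output. The sketch below assumes those counters are available as a JSON object and evaluates them; `spillover_report` is a hypothetical helper, and the sample values are the ones used in this section.

```python
import json

def spillover_report(bluefs: dict) -> dict:
    """Summarize DB device usage and spillover from BlueFS counters."""
    db_total = bluefs.get("db_total_bytes", 0)
    db_used = bluefs.get("db_used_bytes", 0)
    slow_used = bluefs.get("slow_used_bytes", 0)
    return {
        # Fraction of the DB device that is in use.
        "db_used_ratio": db_used / db_total if db_total else 0.0,
        # Metadata bytes that ended up on the slower primary device.
        "spilled_bytes": slow_used,
        "spilling": slow_used > 0,
    }

sample = json.loads("""
{
  "db_total_bytes": 1073741824,
  "db_used_bytes": 33554432,
  "wal_total_bytes": 0,
  "wal_used_bytes": 0,
  "slow_total_bytes": 554432,
  "slow_used_bytes": 554432
}
""")
report = spillover_report(sample)
print(report)
```

Any non-zero slow_used_bytes value means metadata already lives on the primary device, so it is worth checking this before the DB device itself looks full.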

You can check if a WAL/DB partition is getting full and spilling over with the ceph daemon osd.ID perf dump command. The slow_used_bytes value shows the amount of data being spilled out:

cephuser@adm > ceph daemon osd.ID perf dump | jq '.bluefs'

"db_total_bytes": 1073741824,

"db_used_bytes": 33554432,

"wal_total_bytes": 0,

"wal_used_bytes": 0,

"slow_total_bytes": 554432,

"slow_used_bytes": 554432,

1.5 Additional information #

Ceph as a community project has its own extensive online documentation. For topics not found in this manual, refer to https://docs.ceph.com/en/pacific/.

The original publication CRUSH: Controlled, Scalable, Decentralized Placement of Replicated Data by S.A. Weil, S.A. Brandt, E.L. Miller, C. Maltzahn provides helpful insight into the inner workings of Ceph. It is recommended reading, especially when deploying large scale clusters. The publication can be found at http://www.ssrc.ucsc.edu/papers/weil-sc06.pdf.

SUSE Enterprise Storage can be used with non-SUSE OpenStack distributions. The Ceph clients need to be at a level that is compatible with SUSE Enterprise Storage.

Note: SUSE supports the server component of the Ceph deployment and the client is supported by the OpenStack distribution vendor.

2 Hardware requirements and recommendations #

The hardware requirements of Ceph are heavily dependent on the IO workload. The following hardware requirements and recommendations should be considered as a starting point for detailed planning.

In general, the recommendations given in this section are on a per-process basis. If several processes are located on the same machine, the CPU, RAM, disk and network requirements need to be added up.

2.1 Network overview #

Ceph has several logical networks:

- A front-end network called the public network.

- A trusted internal network, the back-end network, called the cluster network. This is optional.

- One or more client networks for gateways. This is optional and beyond the scope of this chapter.

The public network is the network over which Ceph daemons communicate with each other and with their clients. This means that all Ceph cluster traffic goes over this network except in the case when a cluster network is configured.

The cluster network is the back-end network between the OSD nodes, for replication, re-balancing, and recovery. If configured, this optional network would ideally provide twice the bandwidth of the public network with default three-way replication, since the primary OSD sends two copies to other OSDs via this network. The public network connects clients and gateways on one side with monitors, managers, MDS nodes, and OSD nodes on the other. It is also used by monitors, managers, and MDS nodes to talk with OSD nodes.
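The "twice the bandwidth" figure follows from the replica count: for every write received over the public network, the primary OSD forwards one copy per additional replica over the cluster network. A minimal sketch of that arithmetic follows; the function name is hypothetical, and recovery and re-balancing traffic are deliberately ignored.

```python
def cluster_traffic_gbps(client_write_gbps: float, replicas: int = 3) -> float:
    """Replication traffic on the cluster network caused by client writes."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    # The primary OSD already holds one copy; it forwards the others.
    return client_write_gbps * (replicas - 1)

# 10 Gbit/s of client writes with three-way replication.
print(cluster_traffic_gbps(10.0))               # 20.0
# Two-way replication needs only as much as the client traffic itself.
print(cluster_traffic_gbps(10.0, replicas=2))   # 10.0
```

Treat the result as a lower bound for planning, because recovery after a failure adds traffic on top of steady-state replication.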

2.1.1 Network recommendations #

We recommend a single fault-tolerant network with enough bandwidth to fulfil your requirements. For the Ceph public network environment, we recommend two 25 GbE (or faster) network interfaces bonded using 802.3ad (LACP). This is considered the minimal setup for Ceph. If you are also using a cluster network, we recommend four bonded 25 GbE network interfaces. Bonding two or more network interfaces provides better throughput via link aggregation and, given redundant links and switches, improved fault tolerance and maintainability.

You can also create VLANs to isolate different types of traffic over a bond. For example, you can create a bond to provide two VLAN interfaces, one for the public network, and the second for the cluster network. However, this is not required when setting up Ceph networking. Details on bonding the interfaces can be found in https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-network.html#sec-network-iface-bonding.

Fault tolerance can be enhanced through isolating the components into failure domains. To improve fault tolerance of the network, bonding one interface from each of two separate Network Interface Cards (NICs) offers protection against failure of a single NIC. Similarly, creating a bond across two switches protects against failure of a switch. We recommend consulting with the network equipment vendor in order to architect the level of fault tolerance required.

An additional administration network setup (for example, one that separates SSH, Salt, or DNS networking) is neither tested nor supported.

If your storage nodes are configured via DHCP, the default timeouts may not be sufficient for the network to be configured correctly before the various Ceph daemons start. If this happens, the Ceph MONs and OSDs will not start correctly (running systemctl status ceph\* will result in "unable to bind" errors). To avoid this issue, we recommend increasing the DHCP client timeout to at least 30 seconds on each node in your storage cluster. This can be done by changing the following settings on each node:

In /etc/sysconfig/network/dhcp, set

DHCLIENT_WAIT_AT_BOOT="30"

In /etc/sysconfig/network/config, set

WAIT_FOR_INTERFACES="60"
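The two settings above can be checked on a node with a short script. The following is a sketch only: the helper names are hypothetical, and treating 60 as the lower bound for WAIT_FOR_INTERFACES is an assumption based on the value shown above.

```python
import shlex


def read_sysconfig(text):
    """Parse KEY="value" lines of a sysconfig-style file into a dict."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, raw = line.partition("=")
        parts = shlex.split(raw)  # strips the surrounding quotes
        values[key.strip()] = parts[0] if parts else ""
    return values


def dhcp_timeouts_ok(dhcp_text, config_text):
    """Return True if both timeouts meet the values recommended above."""
    dhcp = read_sysconfig(dhcp_text)
    config = read_sysconfig(config_text)
    wait_at_boot = int(dhcp.get("DHCLIENT_WAIT_AT_BOOT") or 0)
    wait_for_ifaces = int(config.get("WAIT_FOR_INTERFACES") or 0)
    return wait_at_boot >= 30 and wait_for_ifaces >= 60
```

To use it, pass the contents of /etc/sysconfig/network/dhcp and /etc/sysconfig/network/config as the two arguments.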

2.1.1.1 Adding a private network to a running cluster #

If you do not specify a cluster network during Ceph deployment, it assumes a single public network environment. While Ceph operates fine with a public network only, its performance and security improve when you set up a second, private cluster network. To support two networks, each Ceph node needs to have at least two network cards.

You need to apply the following changes to each Ceph node. It is relatively quick to do for a small cluster, but can be very time consuming if you have a cluster consisting of hundreds or thousands of nodes.

Set the cluster network using the following command:

# ceph config set global cluster_network MY_NETWORK

Restart the OSDs to bind to the specified cluster network:

# systemctl restart ceph-*@osd.*.service

Check that the private cluster network works as expected on the OS level.

2.1.1.2 Monitoring nodes on different subnets #

If the monitor nodes are on multiple subnets, for example they are located in different rooms and served by different switches, you need to specify their public network address in CIDR notation:

cephuser@adm > ceph config set mon public_network "MON_NETWORK_1, MON_NETWORK_2, MON_NETWORK_N"

For example:

cephuser@adm > ceph config set mon public_network "192.168.1.0/24, 10.10.0.0/16"

If you do specify more than one network segment for the public (or cluster) network as described in this section, each of these subnets must be capable of routing to all the others. Otherwise, the MONs and other Ceph daemons on different network segments will not be able to communicate and a split cluster will ensue. Additionally, if you are using a firewall, make sure you include each IP address or subnet in your iptables and open ports for them on all nodes as necessary.
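Before setting a multi-subnet public_network value, it can help to confirm that every monitor address actually falls into one of the listed subnets. The sketch below uses Python's standard ipaddress module; the helper names and the sample addresses are hypothetical, and the check says nothing about whether the subnets can route to each other.

```python
import ipaddress


def parse_networks(value):
    """Split a comma-separated public_network value into network objects."""
    return [ipaddress.ip_network(part.strip())
            for part in value.split(",") if part.strip()]


def network_for(address, networks):
    """Return the first network that contains the address, or None."""
    ip = ipaddress.ip_address(address)
    for net in networks:
        if ip.version == net.version and ip in net:
            return net
    return None


nets = parse_networks("192.168.1.0/24, 10.10.0.0/16")
print(network_for("10.10.3.7", nets))   # 10.10.0.0/16
print(network_for("172.16.0.5", nets))  # None
```

A monitor address that returns None is not covered by any configured subnet and would need either a corrected address or an additional network entry.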

2.2 Multiple architecture configurations #

SUSE Enterprise Storage supports both x86 and Arm architectures. When considering each architecture, it is important to note that from a cores per OSD, frequency, and RAM perspective, there is no real difference between CPU architectures for sizing.

As with smaller x86 processors (non-server), lower-performance Arm-based cores may not provide an optimal experience, especially when used for erasure coded pools.

Throughout the documentation, SYSTEM-ARCH is used in place of x86 or Arm.

2.3 Hardware configuration #

For the best product experience, we recommend starting with the recommended cluster configuration. For a test cluster or a cluster with lower performance requirements, we document a minimal supported cluster configuration.

2.3.1 Minimum cluster configuration #

A minimal product cluster configuration consists of:

At least four physical nodes (OSD nodes) with co-location of services

Dual-10 Gb Ethernet as a bonded network

A separate Admin Node (can be virtualized on an external node)

A detailed configuration is:

Separate Admin Node with 4 GB RAM, four cores, 1 TB storage capacity. This is typically the Salt Master node. Ceph services and gateways, such as Ceph Monitor, Metadata Server, Ceph OSD, Object Gateway, or NFS Ganesha are not supported on the Admin Node as it needs to orchestrate the cluster update and upgrade processes independently.

At least four physical OSD nodes, with eight OSD disks each, see Section 2.4.1, “Minimum requirements” for requirements.

The total capacity of the cluster should be sized so that even with one node unavailable, the total used capacity (including redundancy) does not exceed 80%.

Three Ceph Monitor instances. Monitors need to be run from SSD/NVMe storage, not HDDs, for latency reasons.

Monitors, Metadata Server, and gateways can be co-located on the OSD nodes, see Section 2.12, “OSD and monitor sharing one server” for monitor co-location. If you co-locate services, the memory and CPU requirements need to be added up.

iSCSI Gateway, Object Gateway, and Metadata Server require at least incremental 4 GB RAM and four cores.

If you are using CephFS, S3/Swift, iSCSI, at least two instances of the respective roles (Metadata Server, Object Gateway, iSCSI) are required for redundancy and availability.

The nodes are to be dedicated to SUSE Enterprise Storage and must not be used for any other physical, containerized, or virtualized workload.

If any of the gateways (iSCSI, Object Gateway, NFS Ganesha, Metadata Server, ...) are deployed within VMs, these VMs must not be hosted on the physical machines serving other cluster roles. (This is unnecessary, as they are supported as collocated services.)

When deploying services as VMs on hypervisors outside the core physical cluster, failure domains must be respected to ensure redundancy.

For example, do not deploy multiple roles of the same type on the same hypervisor, such as multiple MON or MDS instances.

When deploying inside VMs, it is particularly crucial to ensure that the nodes have strong network connectivity and well working time synchronization.

The hypervisor nodes must be adequately sized to avoid interference by other workloads consuming CPU, RAM, network, and storage resources.

2.3.2 Recommended production cluster configuration #

Once you grow your cluster, we recommend relocating Ceph Monitors, Metadata Servers, and Gateways to separate nodes for better fault tolerance.

Seven Object Storage Nodes

No single node exceeds ~15% of total storage.

The total capacity of the cluster should be sized so that even with one node unavailable, the total used capacity (including redundancy) does not exceed 80%.

25 Gb Ethernet or better, bonded for internal cluster and external public network each.

56+ OSDs per storage cluster.

See Section 2.4.1, “Minimum requirements” for further recommendation.

Dedicated physical infrastructure nodes.

Three Ceph Monitor nodes: 4 GB RAM, 4 core processor, RAID 1 SSDs for disk.

See Section 2.5, “Monitor nodes” for further recommendation.

Object Gateway nodes: 32 GB RAM, 8 core processor, RAID 1 SSDs for disk.

See Section 2.6, “Object Gateway nodes” for further recommendation.

iSCSI Gateway nodes: 16 GB RAM, 8 core processor, RAID 1 SSDs for disk.

See Section 2.9, “iSCSI Gateway nodes” for further recommendation.

Metadata Server nodes (one active/one hot standby): 32 GB RAM, 8 core processor, RAID 1 SSDs for disk.

See Section 2.7, “Metadata Server nodes” for further recommendation.

One SES Admin Node: 4 GB RAM, 4 core processor, RAID 1 SSDs for disk.
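Two of the sizing rules in the list above (no single node holding more than roughly 15% of total storage, and at most 80% used capacity with one node unavailable) can be checked with simple arithmetic. The following is a minimal sketch; the function name is hypothetical, and taking the largest node as the unavailable one is a worst-case assumption.

```python
def check_capacity(node_capacities_tb, used_tb, max_node_share=0.15, max_fill=0.80):
    """Check the node-share and fill-level rules for a list of OSD nodes.

    node_capacities_tb: raw capacity of each OSD node
    used_tb: raw used capacity, including redundancy
    Returns a (share_ok, fill_ok) tuple.
    """
    if len(node_capacities_tb) < 2:
        raise ValueError("at least two nodes are needed for this check")
    total = sum(node_capacities_tb)
    largest = max(node_capacities_tb)
    share_ok = largest / total <= max_node_share
    # Worst case: the largest node is the one that becomes unavailable.
    fill_ok = used_tb / (total - largest) <= max_fill
    return share_ok, fill_ok


# Seven equal nodes of 100 TB each, 450 TB used including redundancy.
print(check_capacity([100] * 7, 450))  # (True, True)
```

With seven equal nodes each node holds about 14.3% of the total, which is why seven nodes satisfy the ~15% rule while six equal nodes would not.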

2.3.3 Multipath configuration #

If you want to use multipath hardware, ensure that LVM sees multipath_component_detection = 1 in the configuration file under the devices section. This can be checked via the lvm config command.

Alternatively, ensure that LVM filters a device's mpath components via the LVM filter configuration. This will be host specific.

This is not recommended and should only ever be considered if multipath_component_detection = 1 cannot be set.

For more information on multipath configuration, see https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-multipath.html#sec-multipath-lvm.

2.4 Object Storage Nodes #

2.4.1 Minimum requirements #

The following CPU recommendations account for devices independent of usage by Ceph:

1x 2GHz CPU Thread per spinner.

2x 2GHz CPU Thread per SSD.

4x 2GHz CPU Thread per NVMe.

Separate 10 GbE networks (public/client and internal), required 4x 10 GbE, recommended 2x 25 GbE.

Total RAM required = number of OSDs x (1 GB + osd_memory_target) + 16 GB

Refer to Book “Administration and Operations Guide”, Chapter 28 “Ceph cluster configuration”, Section 28.4.1 “Configuring automatic cache sizing” for more details on osd_memory_target.

OSD disks in JBOD configurations or individual RAID-0 configurations.

OSD journal can reside on OSD disk.

OSD disks should be exclusively used by SUSE Enterprise Storage.

Dedicated disk and SSD for the operating system, preferably in a RAID 1 configuration.

Allocate at least an additional 4 GB of RAM if this OSD host will host part of a cache pool used for cache tiering.

Ceph Monitors, gateway and Metadata Servers can reside on Object Storage Nodes.

For disk performance reasons, OSD nodes are bare metal nodes. No other workloads should run on an OSD node unless it is a minimal setup of Ceph Monitors and Ceph Managers.

SSDs for Journal with 6:1 ratio SSD journal to OSD.

Ensure that OSD nodes do not have any networked block devices mapped, such as iSCSI or RADOS Block Device images.
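The CPU and RAM rules in the list above translate directly into a small calculator. This is a sketch under stated assumptions: the function names are hypothetical, "spinner" is mapped to the key "hdd", and the default osd_memory_target of 4 GB is the default mentioned elsewhere in this chapter.

```python
# 2 GHz CPU threads per device type, as listed above.
THREADS_PER_DEVICE = {"hdd": 1, "ssd": 2, "nvme": 4}


def osd_node_ram_gb(num_osds, osd_memory_target_gb=4):
    """Total RAM = number of OSDs x (1 GB + osd_memory_target) + 16 GB."""
    return num_osds * (1 + osd_memory_target_gb) + 16


def osd_node_cpu_threads(devices):
    """Sum the CPU threads for a dict such as {"hdd": 8, "nvme": 1}."""
    return sum(THREADS_PER_DEVICE[kind] * count for kind, count in devices.items())


# Example: a node with eight spinning disks and one NVMe device, nine OSDs in total.
print(osd_node_ram_gb(9))                            # 61
print(osd_node_cpu_threads({"hdd": 8, "nvme": 1}))   # 12
```

If services are co-located on the node, their own memory and CPU requirements need to be added on top of these figures.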

2.4.2 Minimum disk size #

There are two types of disk space needed to run an OSD: the space for the WAL/DB device, and the primary space for the stored data. The minimum (and default) value for the WAL/DB is 6 GB. The minimum space for data is 5 GB, as partitions smaller than 5 GB are automatically assigned the weight of 0.

So although the minimum disk space for an OSD is 11 GB, we do not recommend a disk smaller than 20 GB, even for testing purposes.

2.4.3 Recommended size for the BlueStore's WAL and DB device #

We recommend reserving 4 GB for the WAL device. While the minimal DB size is 64 GB for RBD-only workloads, the recommended DB size for Object Gateway and CephFS workloads is 2% of the main device capacity (but at least 196 GB).
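The DB sizing guidance above can be expressed as a short function. This is only an illustration of the stated numbers; the function name and the workload labels ("rbd", "rgw", "cephfs") are hypothetical.

```python
def db_size_gb(main_device_gb, workload):
    """Return the DB size in GB suggested by the guidance above.

    workload: "rbd" for RBD-only, "rgw" or "cephfs" for Object Gateway or CephFS.
    """
    if workload == "rbd":
        return 64  # minimal DB size for RBD-only workloads
    if workload in ("rgw", "cephfs"):
        # 2% of the main device capacity, but at least 196 GB.
        return max(196, main_device_gb * 2 / 100)
    raise ValueError("unknown workload: " + workload)


print(db_size_gb(16000, "cephfs"))  # 320.0
print(db_size_gb(4000, "rgw"))      # 196
```

For main devices smaller than 9.8 TB the 196 GB floor applies, because 2% of the capacity would be lower than that.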

Important: We recommend larger DB volumes for high-load deployments, especially if there is high RGW or CephFS usage. Reserve some capacity (slots) to install more hardware for more DB space if required.

If you intend to put the WAL and DB device on the same disk, then we recommend using a single partition for both devices, rather than having a separate partition for each. This allows Ceph to use the DB device for the WAL operation as well. Management of the disk space is therefore more effective as Ceph uses the DB partition for the WAL only if there is a need for it. Another advantage is that the probability that the WAL partition gets full is very small, and when it is not used fully then its space is not wasted but used for DB operation.

To share the DB device with the WAL, do not specify the WAL device, and specify only the DB device.

Find more information about specifying an OSD layout in Book “Administration and Operations Guide”, Chapter 13 “Operational tasks”, Section 13.4.3 “Adding OSDs using DriveGroups specification”.

2.4.5 Maximum recommended number of disks #

You can have as many disks in one server as it allows. There are a few things to consider when planning the number of disks per server:

Network bandwidth. The more disks you have in a server, the more data must be transferred via the network card(s) for the disk write operations.

Memory. RAM above 2 GB is used for the BlueStore cache. With the default osd_memory_target of 4 GB, the system has a reasonable starting cache size for spinning media. If using SSD or NVMe, consider increasing the cache size and RAM allocation per OSD to maximize performance.

Fault tolerance. If the complete server fails, the more disks it has, the more OSDs the cluster temporarily loses. Moreover, to keep the replication rules running, you need to copy all the data from the failed server among the other nodes in the cluster.

2.5 Monitor nodes #

At least three MON nodes are required. The number of monitors should always be odd (1+2n).

4 GB of RAM.

Processor with four logical cores.

An SSD or other sufficiently fast storage type is highly recommended for monitors, specifically for the /var/lib/ceph path on each monitor node, as quorum may be unstable with high disk latencies. Two disks in RAID 1 configuration is recommended for redundancy. It is recommended that separate disks or at least separate disk partitions are used for the monitor processes to protect the monitor's available disk space from things like log file creep.

There must only be one monitor process per node.

Mixing OSD, MON, or Object Gateway nodes is only supported if sufficient hardware resources are available. That means that the requirements for all services need to be added up.

Two network interfaces bonded to multiple switches.

2.6 Object Gateway nodes #

Object Gateway nodes should have at least six CPU cores and 32 GB of RAM. When other processes are co-located on the same machine, their requirements need to be added up.

2.7 Metadata Server nodes #

Proper sizing of the Metadata Server nodes depends on the specific use case. Generally, the more open files the Metadata Server is to handle, the more CPU and RAM it needs. The following are the minimum requirements:

4 GB of RAM for each Metadata Server daemon.

Bonded network interface.

2.5 GHz CPU with at least 2 cores.

2.8 Admin Node #

At least 4 GB of RAM and a quad-core CPU are required. This includes running the Salt Master on the Admin Node. For large clusters with hundreds of nodes, 6 GB of RAM is suggested.

2.9 iSCSI Gateway nodes #

iSCSI Gateway nodes should have at least six CPU cores and 16 GB of RAM.

2.10 SES and other SUSE products #

This section contains important information about integrating SES with other SUSE products.

2.10.1 SUSE Manager #

SUSE Manager and SUSE Enterprise Storage are not integrated, therefore SUSE Manager cannot currently manage an SES cluster.

2.11 Name limitations #

Ceph does not generally support non-ASCII characters in configuration files, pool names, user names and so forth. When configuring a Ceph cluster we recommend using only simple alphanumeric characters (A-Z, a-z, 0-9) and minimal punctuation ('.', '-', '_') in all Ceph object/configuration names.

3 Admin Node HA setup #

The Admin Node is a Ceph cluster node where the Salt Master service runs. It manages the rest of the cluster nodes by querying and instructing their Salt Minion services. It usually includes other services as well, for example the Grafana dashboard backed by the Prometheus monitoring toolkit.

In case of Admin Node failure, you usually need to provide new working hardware for the node and restore the complete cluster configuration stack from a recent backup. Such a method is time consuming and causes cluster outage.

To prevent Ceph cluster downtime caused by an Admin Node failure, we recommend making use of a High Availability (HA) cluster for the Ceph Admin Node.

3.1 Outline of the HA cluster for Admin Node #

The idea of an HA cluster is that in case of one cluster node failing, the other node automatically takes over its role, including the virtualized Admin Node. This way, other Ceph cluster nodes do not notice that the Admin Node failed.

The minimal HA solution for the Admin Node requires the following hardware:

Two bare metal servers able to run SUSE Linux Enterprise with the High Availability extension and virtualize the Admin Node.

Two or more redundant network communication paths, for example via Network Device Bonding.

Shared storage to host the disk image(s) of the Admin Node virtual machine. The shared storage needs to be accessible from both servers. It can be, for example, an NFS export, a Samba share, or iSCSI target.

Find more details on the cluster requirements at https://documentation.suse.com/sle-ha/15-SP3/html/SLE-HA-all/art-sleha-install-quick.html#sec-ha-inst-quick-req.

3.2 Building an HA cluster with the Admin Node #

The following procedure summarizes the most important steps of building the HA cluster for virtualizing the Admin Node. For details, refer to the indicated links.

Set up a basic 2-node HA cluster with shared storage as described in https://documentation.suse.com/sle-ha/15-SP3/html/SLE-HA-all/art-sleha-install-quick.html.

On both cluster nodes, install all packages required for running the KVM hypervisor and the libvirt toolkit as described in https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-vt-installation.html#sec-vt-installation-kvm.

On the first cluster node, create a new KVM virtual machine (VM) making use of libvirt as described in https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-kvm-inst.html#sec-libvirt-inst-virt-install. Use the preconfigured shared storage to store the disk images of the VM.

After the VM setup is complete, export its configuration to an XML file on the shared storage. Use the following syntax:

# virsh dumpxml VM_NAME > /path/to/shared/vm_name.xml

Create a resource for the Admin Node VM. Refer to https://documentation.suse.com/sle-ha/15-SP3/html/SLE-HA-all/cha-conf-hawk2.html for general info on creating HA resources. Detailed info on creating resources for a KVM virtual machine is described in http://www.linux-ha.org/wiki/VirtualDomain_%28resource_agent%29.

On the newly-created VM guest, deploy the Admin Node including the additional services you need there. Follow the relevant steps in Chapter 6, Deploying Salt. At the same time, deploy the remaining Ceph cluster nodes on the non-HA cluster servers.

Part II Deploying Ceph Cluster #

- 4 Introduction and common tasks

Since SUSE Enterprise Storage 7, Ceph services are deployed as containers instead of RPM packages. The deployment process has two basic steps:

- 5 Installing and configuring SUSE Linux Enterprise Server

Install and register SUSE Linux Enterprise Server 15 SP3 on each cluster node. During installation of SUSE Enterprise Storage, access to the update repositories is required, therefore registration is mandatory. Include at least the following modules:

- 6 Deploying Salt

SUSE Enterprise Storage uses Salt and ceph-salt for the initial cluster preparation. Salt helps you configure and run commands on multiple cluster nodes simultaneously from one dedicated host called the Salt Master. Before deploying Salt, consider the following important points:

- 7 Deploying the bootstrap cluster using ceph-salt

This section guides you through the process of deploying a basic Ceph cluster. Read the following subsections carefully and execute the included commands in the given order.

- 8 Deploying the remaining core services using cephadm

After deploying the basic Ceph cluster, deploy core services to more cluster nodes. To make the cluster data accessible to clients, deploy additional services as well.

- 9 Deployment of additional services

iSCSI is a storage area network (SAN) protocol that allows clients (called initiators) to send SCSI commands to SCSI storage devices (targets) on remote servers. SUSE Enterprise Storage 7.1 includes a facility that opens Ceph storage management to heterogeneous clients, such as Microsoft Windows* an…

4 Introduction and common tasks #

Since SUSE Enterprise Storage 7, Ceph services are deployed as containers instead of RPM packages. The deployment process has two basic steps:

- Deploying bootstrap cluster

This phase is called Day 1 deployment. It includes installing the underlying operating system, configuring the Salt infrastructure, and deploying the minimal cluster that consists of one MON and one MGR service. It consists of the following tasks:

Install and do basic configuration of the underlying operating system—SUSE Linux Enterprise Server 15 SP3—on all cluster nodes.

Deploy the Salt infrastructure on all cluster nodes for performing the initial deployment preparations via ceph-salt.

Configure the basic properties of the cluster via ceph-salt and deploy it.

- Deploying additional services

During Day 2 deployment, additional core and non-core Ceph services, for example gateways and monitoring stack, are deployed.

Note that the Ceph community documentation uses the cephadm bootstrap command during initial deployment. ceph-salt calls the cephadm bootstrap command automatically. The cephadm bootstrap command should not be run directly. Any Ceph cluster deployed manually using cephadm bootstrap will be unsupported.

Ceph does not support dual stack: running Ceph simultaneously on IPv4 and IPv6 is not possible. The validation process will reject a mismatch between public_network and cluster_network, or within either variable. The following example will fail the validation:

public_network: "192.168.10.0/24 fd00:10::/64"
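The kind of mismatch described above can be detected ahead of time with Python's standard ipaddress module. This sketch is not the validation code used by ceph-salt; the function names are hypothetical, and it assumes the values are CIDR lists separated by spaces or commas.

```python
import ipaddress


def ip_versions(value):
    """Return the set of IP versions (4 and/or 6) found in a list of CIDRs."""
    parts = value.replace(",", " ").split()
    return {ipaddress.ip_network(part).version for part in parts}


def is_single_stack(public_network, cluster_network=""):
    """True if all networks in both variables use the same IP version."""
    versions = ip_versions(public_network) | ip_versions(cluster_network)
    return len(versions) == 1


# The failing example from above mixes IPv4 and IPv6 within public_network.
print(is_single_stack("192.168.10.0/24 fd00:10::/64"))       # False
print(is_single_stack("192.168.10.0/24", "192.168.20.0/24"))  # True
```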

4.1 Read the release notes #

In the release notes you can find additional information on changes since the previous release of SUSE Enterprise Storage. Check the release notes to see whether:

your hardware needs special considerations.

any used software packages have changed significantly.

special precautions are necessary for your installation.

The release notes also provide information that could not make it into the manual on time. They also contain notes about known issues.

After having installed the package release-notes-ses, find the release notes locally in the directory /usr/share/doc/release-notes or online at https://www.suse.com/releasenotes/.

5 Installing and configuring SUSE Linux Enterprise Server #

Install and register SUSE Linux Enterprise Server 15 SP3 on each cluster node. During installation of SUSE Enterprise Storage, access to the update repositories is required, therefore registration is mandatory. Include at least the following modules:

Basesystem Module

Server Applications Module

Find more details on how to install SUSE Linux Enterprise Server in https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-install.html.

Install the SUSE Enterprise Storage 7.1 extension on each cluster node.

Tip: Install SUSE Enterprise Storage together with SUSE Linux Enterprise Server

You can either install the SUSE Enterprise Storage 7.1 extension separately after you have installed SUSE Linux Enterprise Server 15 SP3, or you can add it during the SUSE Linux Enterprise Server 15 SP3 installation procedure.

Find more details on how to install extensions in https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-register-sle.html.

Configure network settings including proper DNS name resolution on each node. For more information on configuring a network, see https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-network.html#sec-network-yast. For more information on configuring a DNS server, see https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-dns.html.

6 Deploying Salt #

SUSE Enterprise Storage uses Salt and ceph-salt for the initial cluster preparation. Salt helps you configure and run commands on multiple cluster nodes simultaneously from one dedicated host called the Salt Master. Before deploying Salt, consider the following important points:

Salt Minions are the nodes controlled by a dedicated node called Salt Master.

If the Salt Master host should be part of the Ceph cluster, it needs to run its own Salt Minion, but this is not a requirement.

Tip: Sharing multiple roles per server

You will get the best performance from your Ceph cluster when each role is deployed on a separate node. But real deployments sometimes require sharing one node for multiple roles. To avoid trouble with performance and the upgrade procedure, do not deploy the Ceph OSD, Metadata Server, or Ceph Monitor role to the Admin Node.

Salt Minions need to correctly resolve the Salt Master's host name over the network. By default, they look for the salt host name, but you can specify any other network-reachable host name in the /etc/salt/minion file.

Install the salt-master on the Salt Master node:

root@master # zypper in salt-master

Check that the salt-master service is enabled and started, and enable and start it if needed:

root@master # systemctl enable salt-master.service

root@master # systemctl start salt-master.service

If you intend to use the firewall, verify that the Salt Master node has ports 4505 and 4506 open to all Salt Minion nodes. If the ports are closed, you can open them using the yast2 firewall command by allowing the service for the appropriate zone. For example, public.

Install the package salt-minion on all minion nodes.

root@minion > zypper in salt-minion

Edit /etc/salt/minion and uncomment the following line:

#log_level_logfile: warning

Change the warning log level to info.

Note: log_level_logfile and log_level

While log_level controls which log messages will be displayed on the screen, log_level_logfile controls which log messages will be written to /var/log/salt/minion.

Note: Ensure you change the log level on all cluster (minion) nodes.

Make sure that the fully qualified domain name of each node can be resolved to an IP address on the public cluster network by all the other nodes.

Configure all minions to connect to the master. If your Salt Master is not reachable by the host name salt, edit the file /etc/salt/minion or create a new file /etc/salt/minion.d/master.conf with the following content:

master: host_name_of_salt_master

If you performed any changes to the configuration files mentioned above, restart the Salt service on all related Salt Minions:

root@minion > systemctl restart salt-minion.service

Check that the salt-minion service is enabled and started on all nodes. Enable and start it if needed:

# systemctl enable salt-minion.service

# systemctl start salt-minion.service

Verify each Salt Minion's fingerprint and accept all salt keys on the Salt Master if the fingerprints match.

Note: If the Salt Minion fingerprint comes back empty, make sure the Salt Minion has a Salt Master configuration and that it can communicate with the Salt Master.

View each minion's fingerprint:

root@minion > salt-call --local key.finger
local:
3f:a3:2f:3f:b4:d3:d9:24:49:ca:6b:2c:e1:6c:3f:c3:83:37:f0:aa:87:42:e8:ff...

After gathering fingerprints of all the Salt Minions, list fingerprints of all unaccepted minion keys on the Salt Master:

root@master # salt-key -F
[...]
Unaccepted Keys:
minion1: 3f:a3:2f:3f:b4:d3:d9:24:49:ca:6b:2c:e1:6c:3f:c3:83:37:f0:aa:87:42:e8:ff...

If the minions' fingerprints match, accept them:

root@master # salt-key --accept-all

Verify that the keys have been accepted:

root@master # salt-key --list-all

Test whether all Salt Minions respond:

root@master # salt-run manage.status
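When there are many minions, comparing fingerprints by eye is error-prone. The following sketch compares two dictionaries that you fill in yourself from the salt-call and salt-key output shown above; the function name and the sample fingerprints are hypothetical, and the script does not call Salt.

```python
def mismatched_minions(minion_fingerprints, master_fingerprints):
    """Return the sorted minion IDs whose fingerprint is missing or differs on the master.

    Both arguments map a minion ID to its fingerprint string.
    """
    return sorted(
        name
        for name, fingerprint in minion_fingerprints.items()
        if master_fingerprints.get(name) != fingerprint
    )


# Shortened, made-up fingerprints for illustration only.
seen_on_minions = {"minion1": "3f:a3:2f", "minion2": "aa:bb:cc"}
seen_on_master = {"minion1": "3f:a3:2f", "minion2": "aa:bb:00"}
print(mismatched_minions(seen_on_minions, seen_on_master))  # ['minion2']
```

An empty result means every fingerprint gathered on the minions matches what the Salt Master reports, so the keys can be accepted.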

7 Deploying the bootstrap cluster using ceph-salt #

This section guides you through the process of deploying a basic Ceph cluster. Read the following subsections carefully and execute the included commands in the given order.

7.1 Installing ceph-salt #

ceph-salt provides tools for deploying Ceph clusters managed by cephadm. ceph-salt uses the Salt infrastructure to perform OS management (for example, software updates or time synchronization) and to define roles for Salt Minions.

On the Salt Master, install the ceph-salt package:

root@master # zypper install ceph-salt

The above command installed ceph-salt-formula as a dependency which modified the Salt Master configuration by inserting additional files in the /etc/salt/master.d directory. To apply the changes, restart salt-master.service and synchronize Salt modules:

root@master # systemctl restart salt-master.service

root@master # salt \* saltutil.sync_all

7.2 Configuring cluster properties #

Use the ceph-salt config command to configure the basic properties of the cluster.

The /etc/ceph/ceph.conf file is managed by cephadm and users should not edit it. Ceph configuration parameters should be set using the new ceph config command. See Book “Administration and Operations Guide”, Chapter 28 “Ceph cluster configuration”, Section 28.2 “Configuration database” for more information.

7.2.1 Using the ceph-salt shell #

If you run ceph-salt config without any path or subcommand, you will enter an interactive ceph-salt shell. The shell is convenient if you need to configure multiple properties in one batch and do not want to type the full command syntax.

root@master # ceph-salt config
/> ls
o- / ............................................................... [...]
  o- ceph_cluster .................................................. [...]
  | o- minions .............................................. [no minions]
  | o- roles ....................................................... [...]
  |   o- admin .............................................. [no minions]
  |   o- bootstrap ........................................... [no minion]
  |   o- cephadm ............................................ [no minions]
  |   o- tuned ..................................................... [...]
  |     o- latency .......................................... [no minions]
  |     o- throughput ....................................... [no minions]
  o- cephadm_bootstrap ............................................. [...]
  | o- advanced .................................................... [...]
  | o- ceph_conf ................................................... [...]
  | o- ceph_image_path .................................. [ no image path]
  | o- dashboard ................................................... [...]
  | | o- force_password_update ................................. [enabled]
  | | o- password ................................................ [admin]
  | | o- ssl_certificate ....................................... [not set]
  | | o- ssl_certificate_key ................................... [not set]
  | | o- username ................................................ [admin]
  | o- mon_ip .................................................. [not set]
  o- containers .................................................... [...]
  | o- registries_conf ......................................... [enabled]
  | | o- registries .............................................. [empty]
  | o- registry_auth ............................................... [...]
  |   o- password .............................................. [not set]
  |   o- registry .............................................. [not set]
  |   o- username .............................................. [not set]
  o- ssh ............................................... [no key pair set]
  | o- private_key .................................. [no private key set]
  | o- public_key .................................... [no public key set]
  o- time_server ........................... [enabled, no server host set]
    o- external_servers .......................................... [empty]
    o- servers ................................................... [empty]
    o- subnet .................................................. [not set]

As you can see from the output of ceph-salt's ls command, the cluster configuration is organized in a tree structure. To configure a specific property of the cluster in the ceph-salt shell, you have two options:

Run the command from the current position and enter the absolute path to the property as the first argument:

/> /cephadm_bootstrap/dashboard ls
o- dashboard ....................................................... [...]
  o- force_password_update ..................................... [enabled]
  o- password .................................................... [admin]
  o- ssl_certificate ........................................... [not set]
  o- ssl_certificate_key ....................................... [not set]
  o- username .................................................... [admin]
/> /cephadm_bootstrap/dashboard/username set ceph-admin
Value set.

Change to the path whose property you need to configure and run the command:

/> cd /cephadm_bootstrap/dashboard/
/ceph_cluster/minions> ls
o- dashboard ....................................................... [...]
  o- force_password_update ..................................... [enabled]
  o- password .................................................... [admin]
  o- ssl_certificate ........................................... [not set]
  o- ssl_certificate_key ....................................... [not set]
  o- username ................................................[ceph-admin]

While in the ceph-salt shell, you can use autocompletion, which works similarly to autocompletion in a normal Linux shell (Bash). It completes configuration paths, subcommands, or Salt Minion names. When autocompleting a configuration path, you have two options:

To let the shell finish a path relative to your current position, press the TAB key →| twice.

To let the shell finish an absolute path, enter / and press the TAB key →| twice.

If you enter cd from the ceph-salt shell without any path, the command prints a tree structure of the cluster configuration with the line of the current path active. You can use the up and down cursor keys to navigate through individual lines. After you confirm with Enter, the configuration path changes to the last active one.

To keep the documentation consistent, we will use a single command syntax without entering the ceph-salt shell. For example, you can list the cluster configuration tree by using the following command:

root@master # ceph-salt config ls

7.2.2 Adding Salt Minions #

Include all or a subset of the Salt Minions that we deployed and accepted in Chapter 6, “Deploying Salt” in the Ceph cluster configuration. You can either specify the Salt Minions by their full names, or use the glob expressions '*' and '?' to include multiple Salt Minions at once. Use the add subcommand under the /ceph_cluster/minions path. The following command includes all accepted Salt Minions:

root@master # ceph-salt config /ceph_cluster/minions add '*'

Verify that the specified Salt Minions were added:

root@master # ceph-salt config /ceph_cluster/minions ls
o- minions ............................................... [Minions: 5]
  o- ses-main.example.com .................................. [no roles]
  o- ses-node1.example.com ................................. [no roles]
  o- ses-node2.example.com ................................. [no roles]
  o- ses-node3.example.com ................................. [no roles]
  o- ses-node4.example.com ................................. [no roles]

7.2.3 Specifying Salt Minions managed by cephadm #

Specify which nodes will belong to the Ceph cluster and will be managed by cephadm. Include all nodes that will run Ceph services as well as the Admin Node:

root@master # ceph-salt config /ceph_cluster/roles/cephadm add '*'

7.2.4 Specifying Admin Node #

The Admin Node is the node where the ceph.conf configuration file and the Ceph admin keyring are installed. You usually run Ceph-related commands on the Admin Node.

In a homogeneous environment where all or most hosts belong to SUSE Enterprise Storage, we recommend having the Admin Node on the same host as the Salt Master.

In a heterogeneous environment where one Salt infrastructure hosts more than one cluster, for example, SUSE Enterprise Storage together with SUSE Manager, do not place the Admin Node on the same host as the Salt Master.

To specify the Admin Node, run the following command:

root@master # ceph-salt config /ceph_cluster/roles/admin add ses-main.example.com
1 minion added.
root@master # ceph-salt config /ceph_cluster/roles/admin ls
o- admin ................................................... [Minions: 1]
  o- ses-main.example.com ...................... [Other roles: cephadm]

ceph.conf and the admin keyring on multiple nodes

You can install the Ceph configuration file and admin keyring on multiple nodes if your deployment requires it. For security reasons, avoid installing them on all the cluster's nodes.

7.2.5 Specifying first MON/MGR node #

You need to specify which of the cluster's Salt Minions will bootstrap the cluster. This minion will become the first one running Ceph Monitor and Ceph Manager services.

root@master # ceph-salt config /ceph_cluster/roles/bootstrap set ses-node1.example.com
Value set.
root@master # ceph-salt config /ceph_cluster/roles/bootstrap ls
o- bootstrap ..................................... [ses-node1.example.com]

Additionally, you need to specify the bootstrap MON's IP address on the public network to ensure that the public_network parameter is set correctly, for example:

root@master # ceph-salt config /cephadm_bootstrap/mon_ip set 192.168.10.20

7.2.6 Specifying tuned profiles #

You need to specify which of the cluster's minions have actively tuned profiles. One minion cannot have both the latency and throughput roles. To assign the roles, add them explicitly with the following commands:

root@master # ceph-salt config /ceph_cluster/roles/tuned/latency add ses-node1.example.com
Adding ses-node1.example.com...
1 minion added.
root@master # ceph-salt config /ceph_cluster/roles/tuned/throughput add ses-node2.example.com
Adding ses-node2.example.com...
1 minion added.
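Because one minion cannot hold both tuned roles, it can help to compare the two planned host lists before running the add commands. The following is a minimal sketch only: the find_tuned_overlap function and the two list variables are hypothetical helpers introduced for illustration and are not part of ceph-salt.

```shell
#!/bin/sh
# Hypothetical helper (not part of ceph-salt): print every host name that
# appears in both the latency list ($1) and the throughput list ($2).
# Both arguments are space-separated lists of minion names.
find_tuned_overlap() {
    for latency_host in $1; do
        for throughput_host in $2; do
            if [ "$latency_host" = "$throughput_host" ]; then
                echo "$latency_host"
            fi
        done
    done
}

# Example host lists (assumed values matching the commands above).
LATENCY_HOSTS="ses-node1.example.com"
THROUGHPUT_HOSTS="ses-node2.example.com"

overlap=$(find_tuned_overlap "$LATENCY_HOSTS" "$THROUGHPUT_HOSTS")
if [ -n "$overlap" ]; then
    echo "Minions assigned to both tuned roles: $overlap" >&2
else
    echo "No overlap between latency and throughput roles."
fi
```

If the check reports an overlap, remove the host from one of the two lists before assigning the roles.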

7.2.7 Generating an SSH key pair #

cephadm uses the SSH protocol to communicate with cluster nodes. A user account named cephadm is automatically created and used for SSH communication.

You need to generate the private and public part of the SSH key pair:

root@master # ceph-salt config /ssh generate
Key pair generated.
root@master # ceph-salt config /ssh ls
o- ssh .................................................. [Key Pair set]
  o- private_key ..... [53:b1:eb:65:d2:3a:ff:51:6c:e2:1b:ca:84:8e:0e:83]
  o- public_key ...... [53:b1:eb:65:d2:3a:ff:51:6c:e2:1b:ca:84:8e:0e:83]

7.2.8 Configuring the time server #

All cluster nodes need to have their time synchronized with a reliable time source. There are several scenarios to approach time synchronization:

If all cluster nodes are already configured to synchronize their time using an NTP service of choice, disable time server handling completely:

root@master # ceph-salt config /time_server disable

If your site already has a single source of time, specify the host name of the time source:

root@master # ceph-salt config /time_server/servers add time-server.example.com

Alternatively, ceph-salt can configure one of the Salt Minions to serve as the time server for the rest of the cluster. This is sometimes referred to as an "internal time server". In this scenario, ceph-salt configures the internal time server (which should be one of the Salt Minions) to synchronize its time with an external time server, such as pool.ntp.org, and configures all the other minions to get their time from the internal time server. This can be achieved as follows:

root@master # ceph-salt config /time_server/servers add ses-main.example.com
root@master # ceph-salt config /time_server/external_servers add pool.ntp.org

The /time_server/subnet option specifies the subnet from which NTP clients are allowed to access the NTP server. It is automatically set when you specify /time_server/servers. If you need to change it or specify it manually, run:

root@master # ceph-salt config /time_server/subnet set 10.20.6.0/24
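The internal time server scenario can be collected into one short script. This is a sketch under stated assumptions: the host names and subnet are the example values used above, and the run helper with its DRY_RUN variable is a hypothetical addition so that the commands are printed for review by default instead of being executed.

```shell
#!/bin/sh
# Example values taken from the commands above; adjust them for your site.
INTERNAL_TIME_SERVER="ses-main.example.com"
EXTERNAL_TIME_SERVER="pool.ntp.org"
NTP_SUBNET="10.20.6.0/24"

# Hypothetical helper: print the command when DRY_RUN is 1 (the default),
# execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# One Salt Minion becomes the internal time server ...
run ceph-salt config /time_server/servers add "$INTERNAL_TIME_SERVER"
# ... and synchronizes its own time with the external source.
run ceph-salt config /time_server/external_servers add "$EXTERNAL_TIME_SERVER"
# Optional: the subnet is set automatically; override it only if needed.
run ceph-salt config /time_server/subnet set "$NTP_SUBNET"
# Review the resulting settings.
run ceph-salt config /time_server ls
```

Run the script with DRY_RUN=0 on the Salt Master to apply the settings once the printed commands look correct.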

Check the time server settings:

root@master # ceph-salt config /time_server ls
o- time_server ................................................ [enabled]
  o- external_servers ............................................... [1]
  | o- pool.ntp.org ............................................... [...]
  o- servers ........................................................ [1]
  | o- ses-main.example.com ..................................... [...]
  o- subnet .............................................. [10.20.6.0/24]

Find more information on setting up time synchronization in https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-ntp.html#sec-ntp-yast.

7.2.9 Configuring the Ceph Dashboard login credentials #

Ceph Dashboard will be available after the basic cluster is deployed. To access it, you need to set a valid user name and password, for example:

root@master # ceph-salt config /cephadm_bootstrap/dashboard/username set admin
root@master # ceph-salt config /cephadm_bootstrap/dashboard/password set PWD

By default, the first dashboard user will be forced to change their password on first login to the dashboard. To disable this feature, run the following command:

root@master # ceph-salt config /cephadm_bootstrap/dashboard/force_password_update disable

7.2.10 Using the container registry #

These instructions assume the private registry will reside on the SES Admin Node. In cases where the private registry will reside on another host, the instructions will need to be adjusted to meet the new environment.

Also, when migrating from registry.suse.com to a private registry, migrate the current version of container images being used. After the current configuration has been migrated and validated, pull new images from registry.suse.com and upgrade to the new images.

7.2.10.1 Creating the local registry #

To create the local registry, follow these steps:

Verify that the Containers Module extension is enabled:

# SUSEConnect --list-extensions | grep -A2 "Containers Module"
Containers Module 15 SP3 x86_64 (Activated)

Verify that the following packages are installed: apache2-utils (if enabling a secure registry), cni, cni-plugins, podman, podman-cni-config, and skopeo:

# zypper in apache2-utils cni cni-plugins podman podman-cni-config skopeo

Gather the following information:

Fully qualified domain name of the registry host (REG_HOST_FQDN).

An available port number used to map to the registry container port of 5000 (REG_HOST_PORT):

> ss -tulpn | grep :5000

Whether the registry will be secure or insecure (the insecure=[true|false] option).

If the registry will be a secure private registry, determine a username and password (REG_USERNAME, REG_PASSWORD).

If the registry will be a secure private registry, a self-signed certificate can be created, or a root certificate and key will need to be provided (REG_CERTIFICATE.CRT, REG_PRIVATE-KEY.KEY).

Record the current ceph-salt containers configuration:

cephuser@adm > ceph-salt config ls /containers
o- containers .......................................................... [...]
  o- registries_conf ................................................. [enabled]
  | o- registries ...................................................... [empty]
  o- registry_auth ....................................................... [...]
    o- password ........................................................ [not set]
    o- registry ........................................................ [not set]
    o- username ........................................................ [not set]
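The values gathered in the list above can be kept in shell variables so that later commands use them consistently. The sketch below assumes example values for the host name and port, and the is_valid_port helper is hypothetical. It only checks that the port is a number in the valid TCP range, not whether the port is free; use the ss command shown above for that.

```shell
#!/bin/sh
# Example values (assumptions); replace them with the data gathered for your site.
REG_HOST_FQDN="ses-main.example.com"
REG_HOST_PORT="5000"

# Hypothetical helper: succeed only if $1 is an integer between 1 and 65535.
is_valid_port() {
    case "$1" in
        ''|*[!0-9]*) return 1 ;;   # empty or contains a non-digit
    esac
    [ "$1" -ge 1 ] && [ "$1" -le 65535 ]
}

if is_valid_port "$REG_HOST_PORT"; then
    echo "Registry endpoint: ${REG_HOST_FQDN}:${REG_HOST_PORT}"
else
    echo "Invalid port: ${REG_HOST_PORT}" >&2
fi
```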

7.2.10.1.1 Starting an insecure private registry (without SSL encryption) #

Configure ceph-salt for the insecure registry:

cephuser@adm > ceph-salt config containers/registries_conf enable
cephuser@adm > ceph-salt config containers/registries_conf/registries \
 add prefix=REG_HOST_FQDN insecure=true \
 location=REG_HOST_PORT:5000
cephuser@adm > ceph-salt apply --non-interactive

Start the insecure registry by creating the necessary directory, for example, /var/lib/registry, and starting the registry with the podman command:

# mkdir -p /var/lib/registry
# podman run --privileged -d --name registry \
 -p REG_HOST_PORT:5000 -v /var/lib/registry:/var/lib/registry \
 --restart=always registry:2

Tip: The --net=host parameter allows the Podman service to be reachable from other nodes.

To have the registry start after a reboot, create a systemd unit file for it and enable it:

# podman generate systemd --files --name registry
# mv container-registry.service /etc/systemd/system/
# systemctl enable container-registry.service

Test if the registry is running on port 5000:

> ss -tulpn | grep :5000

Test to see if the registry port (REG_HOST_PORT) is in an open state using the nmap command. A filtered state indicates that the service will not be reachable from other nodes.

> nmap REG_HOST_FQDN

The configuration is complete now. Continue by following Section 7.2.10.1.3, “Populating the secure local registry”.
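As an additional reachability check for the insecure registry started above, the registry's HTTP API can be queried directly. This is a sketch only: it assumes that curl is installed, uses example values for the host and port, and introduces a hypothetical registry_catalog_url helper. It builds the URL of the /v2/_catalog endpoint, which is part of the Docker Registry HTTP API V2 served by the registry:2 image, and prints the curl command to run from another node.

```shell
#!/bin/sh
# Example values (assumptions); use the REG_HOST_FQDN and REG_HOST_PORT
# gathered for your site.
REG_HOST_FQDN="ses-main.example.com"
REG_HOST_PORT="5000"

# Hypothetical helper: build the catalog URL for an insecure (plain HTTP)
# registry from a host name ($1) and a port ($2).
registry_catalog_url() {
    echo "http://$1:$2/v2/_catalog"
}

url=$(registry_catalog_url "$REG_HOST_FQDN" "$REG_HOST_PORT")
echo "Run from another cluster node:"
echo "curl --silent --fail $url"
```

A registry that has not been populated yet is expected to answer with an empty repository list.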

7.2.10.1.2 Starting a secure private registry (with SSL encryption) #

If an insecure registry was previously configured on the host, you need to remove the insecure registry configuration before proceeding with the secure private registry configuration.

Create the necessary directories:

# mkdir -p /var/lib/registry/{auth,certs}

Generate an SSL certificate:

> openssl req -newkey rsa:4096 -nodes -sha256 \
 -keyout /var/lib/registry/certs/REG_PRIVATE-KEY.KEY -x509 -days 365 \
 -out /var/lib/registry/certs/REG_CERTIFICATE.CRT

Tip: In this example and throughout the instructions, the key and certificate files are named REG_PRIVATE-KEY.KEY and REG_CERTIFICATE.CRT.

Note: Set the CN=[value] value to the fully qualified domain name of the host (REG_HOST_FQDN).

Tip: If a certificate signed by a certificate authority is provided, copy the *.key, *.crt, and intermediate certificates to /var/lib/registry/certs/.

Copy the certificate to all cluster nodes and refresh the certificate cache: