This guide describes the administration of SUSE Linux Enterprise Micro.

Copyright © 2006–2025 SUSE LLC and contributors. All rights reserved.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”.

For SUSE trademarks, see https://www.suse.com/company/legal/. All third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks.

All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof.

Preface #

1 Available documentation #

- Online documentation

Our documentation is available online at https://documentation.suse.com. Browse or download the documentation in various formats.

Note: Latest updates

The latest updates are usually available in the English-language version of this documentation.

- SUSE Knowledgebase

If you run into an issue, check out the Technical Information Documents (TIDs) that are available online at https://www.suse.com/support/kb/. Search the SUSE Knowledgebase for known solutions driven by customer need.

- Release notes

For release notes, see https://www.suse.com/releasenotes/.

- In your system

For offline use, the release notes are also available under /usr/share/doc/release-notes on your system. The documentation for individual packages is available at /usr/share/doc/packages.

Many commands are also described in their manual pages. To view them, run man, followed by a specific command name. If the man command is not installed on your system, install it with sudo zypper install man.

2 Improving the documentation #

Your feedback and contributions to this documentation are welcome. The following channels for giving feedback are available:

- Service requests and support

For services and support options available for your product, see https://www.suse.com/support/.

To open a service request, you need a SUSE subscription registered at SUSE Customer Center. Go to https://scc.suse.com/support/requests, log in, and click .

- Bug reports

Report issues with the documentation at https://bugzilla.suse.com/.

To simplify this process, click the icon next to a headline in the HTML version of this document. This preselects the right product and category in Bugzilla and adds a link to the current section. You can start typing your bug report right away.

A Bugzilla account is required.

- Contributions

To contribute to this documentation, click the icon next to a headline in the HTML version of this document. This will take you to the source code on GitHub, where you can open a pull request.

A GitHub account is required.

Note: only available for English

The icons are only available for the English version of each document. For all other languages, use the icons instead.

For more information about the documentation environment used for this documentation, see the repository's README.

You can also report errors and send feedback concerning the documentation to <doc-team@suse.com>. Include the document title, the product version, and the publication date of the document. Additionally, include the relevant section number and title (or provide the URL) and provide a concise description of the problem.

3 Documentation conventions #

The following notices and typographic conventions are used in this document:

/etc/passwd: Directory names and file names

PLACEHOLDER: Replace PLACEHOLDER with the actual value

PATH: An environment variable

ls, --help: Commands, options, and parameters

user: The name of a user or group

package_name: The name of a software package

Alt, Alt–F1: A key to press or a key combination. Keys are shown in uppercase as on a keyboard.

, › : menu items, buttons

AMD/Intel This paragraph is only relevant for the AMD64/Intel 64 architectures. The arrows mark the beginning and the end of the text block.

IBM Z, POWER This paragraph is only relevant for the architectures IBM Z and POWER. The arrows mark the beginning and the end of the text block.

Chapter 1, “Example chapter”: A cross-reference to another chapter in this guide.

Commands that must be run with root privileges. You can also prefix these commands with the sudo command to run them as a non-privileged user:

# command

> sudo command

Commands that can be run by non-privileged users:

> command

Commands can be split into two or multiple lines by a backslash character (\) at the end of a line. The backslash informs the shell that the command invocation will continue after the end of the line:

> echo a b \
c d

A code block that shows both the command (preceded by a prompt) and the respective output returned by the shell:

> command

output

Notices

Warning: Warning notice

Vital information you must be aware of before proceeding. Warns you about security issues, potential loss of data, damage to hardware, or physical hazards.

Important: Important notice

Important information you should be aware of before proceeding.

Note: Note notice

Additional information, for example about differences in software versions.

Tip: Tip notice

Helpful information, like a guideline or a piece of practical advice.

Compact Notices

Additional information, for example about differences in software versions.

Helpful information, like a guideline or a piece of practical advice.

4 Support #

Find the support statement for SUSE Linux Enterprise Micro and general information about technology previews below. For details about the product lifecycle, see https://www.suse.com/lifecycle.

If you are entitled to support, find details on how to collect information for a support ticket at https://documentation.suse.com/sles-15/html/SLES-all/cha-adm-support.html.

4.1 Support statement for SUSE Linux Enterprise Micro #

To receive support, you need an appropriate subscription with SUSE. To view the specific support offers available to you, go to https://www.suse.com/support/ and select your product.

The support levels are defined as follows:

- L1

Problem determination, which means technical support designed to provide compatibility information, usage support, ongoing maintenance, information gathering and basic troubleshooting using available documentation.

- L2

Problem isolation, which means technical support designed to analyze data, reproduce customer problems, isolate a problem area and provide a resolution for problems not resolved by Level 1 or prepare for Level 3.

- L3

Problem resolution, which means technical support designed to resolve problems by engaging engineering to resolve product defects which have been identified by Level 2 Support.

For contracted customers and partners, SUSE Linux Enterprise Micro is delivered with L3 support for all packages, except for the following:

Technology previews.

Sound, graphics, fonts, and artwork.

Packages that require an additional customer contract.

Packages with names ending in -devel (containing header files and similar developer resources) will only be supported together with their main packages.

SUSE will only support the usage of original packages. That is, packages that are unchanged and not recompiled.

4.2 Technology previews #

Technology previews are packages, stacks, or features delivered by SUSE to provide glimpses into upcoming innovations. Technology previews are included for your convenience to give you a chance to test new technologies within your environment. We would appreciate your feedback. If you test a technology preview, please contact your SUSE representative and let them know about your experience and use cases. Your input is helpful for future development.

Technology previews have the following limitations:

Technology previews are still in development. Therefore, they may be functionally incomplete, unstable, or otherwise not suitable for production use.

Technology previews are not supported.

Technology previews may only be available for specific hardware architectures.

Details and functionality of technology previews are subject to change. As a result, upgrading to subsequent releases of a technology preview may be impossible and require a fresh installation.

SUSE may discover that a preview does not meet customer or market needs, or does not comply with enterprise standards. Technology previews can be removed from a product at any time. SUSE does not commit to providing a supported version of such technologies in the future.

For an overview of technology previews shipped with your product, see the release notes at https://www.suse.com/releasenotes.

Part I Common tasks #

- 1 Read-only file system

This chapter focuses on the characteristics of the read-only file system that is used by SLE Micro.

- 2 Snapshots

This chapter describes managing snapshots and gives details about directories included in snapshots.

- 3 Administration using transactional updates

This chapter describes the usage of the transactional-update command.

- 4 User space live patching

This chapter describes the basic principles and usage of user space live patching.

1 Read-only file system #

This chapter focuses on the characteristics of the read-only file system that is used by SLE Micro.

SLE Micro was designed to use a read-only root file system. This means that after the deployment is complete, you are not able to perform direct modifications to the root file system, for example, by using zypper. Instead, SUSE Linux Enterprise Micro introduces the concept of transactional updates, which enables you to modify your system and keep it up to date.

The key features of transactional updates are the following:

They are atomic - the update is applied only if it completes successfully.

Changes are applied in a separate snapshot and so do not influence the running system.

Changes can easily be rolled back.

Each time you call the transactional-update command to change your system (to install a package, perform an update, or apply a patch), the following actions take place:

A new read-write snapshot is created from your current root file system, or from a snapshot that you specified.

All changes are applied (updates, patches or package installation).

The snapshot is switched back to read-only mode.

If the changes were applied successfully, the new root file system snapshot is set as default.

After rebooting, the system boots into the new snapshot.

Note: Bear in mind that without rebooting your system, the changes will not be applied.

In case you do not reboot your machine before performing further changes, the transactional-update command will create a new snapshot from the current root file system. This means that you will end up with several parallel snapshots, each including that particular change but not changes from the other invocations of the command. After reboot, the most recently created snapshot will be used as your new root file system, and it will not include changes done in the previous snapshots.

1.1 /etc on a read-only file system #

Even though /etc is part of the read-only file system, using an OverlayFS layer on this directory enables you to write to this directory. All modifications that you perform on the content of /etc are written to /var/lib/overlay/SNAPSHOT_NUMBER/etc. Each snapshot has one associated OverlayFS directory.

Whenever a new snapshot is created (for example, as a result of a system update), the content of /etc is synchronized and used as a base in the new snapshot. In OverlayFS terminology, the current snapshot's /etc is mounted as lowerdir. The new snapshot's /etc is mounted as upperdir. If there were no changes in the upperdir /etc, any changes performed to the lowerdir are visible to the upperdir. Therefore, the new snapshot also contains the changes from the current snapshot's /etc.

lowerdir and upperdir

If /etc in both snapshots is modified, only the changes in the new snapshot (upperdir) persist. Changes made to the current snapshot (lowerdir) are not synchronized to the new snapshot. Therefore, we do not recommend changing /etc after a new snapshot has been created and the system has not been rebooted. However, you can still find the changes in the /var/lib/overlay/ directory for the snapshot in which the changes were performed.

--continue option of the transactional-update command

If you use the --continue option of the transactional-update command when performing changes to the file system, all /etc directory layers created by each separate run of transactional-update, except for the one in the newest snapshot, are synchronized to the lowerdir (the lowerdir can have several mount points).
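The path layout described in this section can be sketched as a small shell helper. This is only an illustration: the function name overlay_etc_dir is hypothetical and not part of SLE Micro, and only the /var/lib/overlay/SNAPSHOT_NUMBER/etc layout is taken from the text above.

```shell
#!/bin/sh
# overlay_etc_dir is a hypothetical helper, not a SLE Micro tool.
# It prints the OverlayFS directory that holds the /etc changes of a snapshot.
overlay_etc_dir() {
    snapshot_number="$1"
    # Accept only a non-empty string of digits.
    case "$snapshot_number" in
        ''|*[!0-9]*)
            echo "usage: overlay_etc_dir SNAPSHOT_NUMBER" >&2
            return 1
            ;;
    esac
    printf '/var/lib/overlay/%s/etc\n' "$snapshot_number"
}
```

For example, ls -R "$(overlay_etc_dir 13)" would list the /etc files that were written while snapshot 13 was in use, assuming a snapshot with that number exists on your system.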

2 Snapshots #

This chapter describes managing snapshots and gives details about directories included in snapshots.

As snapshots are crucial for the correct functioning of SLE Micro, do not disable the feature, and ensure that the root partition is big enough to store the snapshots.

When a snapshot is created, both the snapshot and the original point to the same blocks in the file system. So, initially a snapshot does not occupy additional disk space. If data in the original file system is modified, changed data blocks are copied while the old data blocks are kept for the snapshot.

Snapshots always reside on the same partition or subvolume on which the snapshot has been taken. It is not possible to store snapshots on a different partition or subvolume. As a result, partitions containing snapshots need to be larger than partitions which do not contain snapshots. The exact amount depends strongly on the number of snapshots you keep and the amount of data modifications. As a rule of thumb, give partitions twice as much space as you normally would. To prevent disks from running out of space, old snapshots are automatically cleaned up.

Snapshots that are known to be working properly are marked as important.

2.1 Directories excluded from snapshots #

As some directories store user-specific or volatile data, these directories are excluded from snapshots:

- /home

Contains users' data. Excluded so that the data will not be included in snapshots and thus potentially overwritten by a rollback operation.

- /root

Contains root's data. Excluded so that the data will not be included in snapshots and thus potentially overwritten by a rollback operation.

- /opt

Third-party products usually get installed to /opt. Excluded so that these applications are not uninstalled during rollbacks.

- /srv

Contains data for Web and FTP servers. Excluded in order to avoid data loss on rollbacks.

- /usr/local

This directory is used when manually installing software. It is excluded to avoid uninstalling these installations on rollbacks.

- /var

This directory contains many variable files, including logs, temporary caches, third-party products in /var/opt, and is the default location for virtual machine images and databases. Therefore, a separate subvolume is created with Copy-On-Write disabled, so as to exclude all of this variable data from snapshots.

- /tmp

The directory contains temporary data.

- the architecture-specific /boot/grub2 directory

Rollback of the boot loader binaries is not supported.

2.2 Showing exclusive disk space used by snapshots #

Snapshots share data for efficient use of storage space, so ordinary commands like du and df do not measure used disk space accurately. When you want to free up disk space on Btrfs with quotas enabled, you need to know how much exclusive disk space is used by each snapshot, rather than shared space. The btrfs command provides a view of space used by snapshots:

# btrfs qgroup show -p /

qgroupid rfer excl parent

-------- ---- ---- ------

0/5 16.00KiB 16.00KiB ---

[...]

0/272 3.09GiB 14.23MiB 1/0

0/273 3.11GiB 144.00KiB 1/0

0/274 3.11GiB 112.00KiB 1/0

0/275 3.11GiB 128.00KiB 1/0

0/276 3.11GiB 80.00KiB 1/0

0/277 3.11GiB 256.00KiB 1/0

0/278 3.11GiB 112.00KiB 1/0

0/279 3.12GiB 64.00KiB 1/0

0/280 3.12GiB 16.00KiB 1/0

1/0 3.33GiB 222.95MiB ---

The qgroupid column displays the identification number for each subvolume, assigning a qgroup level/ID combination.

The rfer column displays the total amount of data referred to in the subvolume.

The excl column displays the exclusive data in each subvolume.

The parent column shows the parent qgroup of the subvolumes.
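To find the snapshots that would free the most space, the excl column can be ranked. The following is a minimal sketch, assuming the four-column layout shown in the example output above (other btrfs versions may print different columns). The function name rank_by_excl is hypothetical.

```shell
#!/bin/sh
# rank_by_excl is a hypothetical helper. It reads output in the layout of
# "btrfs qgroup show -p /" on stdin and prints "qgroupid excl" pairs,
# largest exclusive size first.
rank_by_excl() {
    awk '
        # Convert a size such as "14.23MiB" to bytes.
        function to_bytes(s,    n, u) {
            n = s + 0                       # leading numeric part
            u = s
            gsub(/[0-9.]/, "", u)           # remaining unit suffix
            if (u == "KiB") return n * 1024
            if (u == "MiB") return n * 1024 * 1024
            if (u == "GiB") return n * 1024 * 1024 * 1024
            if (u == "TiB") return n * 1024 * 1024 * 1024 * 1024
            return n
        }
        # Keep level-0 qgroups (one per subvolume) that have a parent qgroup.
        $1 ~ /^0\// && $4 != "---" {
            printf "%.0f %s %s\n", to_bytes($3), $1, $3
        }
    ' | sort -rn | awk '{ print $2, $3 }'
}
```

A possible invocation, run as root, is btrfs qgroup show -p / | rank_by_excl.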

The final item, 1/0, shows the totals for the parent qgroup. In the above example, 222.95 MiB will be freed if all subvolumes are removed. Run the following command to see which snapshots are associated with each subvolume:

# btrfs subvolume list -st /

3 Administration using transactional updates #

This chapter describes the usage of the transactional-update command.

In case you do not reboot your machine before performing further changes, the transactional-update command will create a new snapshot from the current root file system. This means that you will end up with several parallel snapshots, each including that particular change but not changes from the other invocations of the command. After reboot, the most recently created snapshot will be used as your new root file system, and it will not include changes done in the previous snapshots.

3.1 transactional-update usage #

The transactional-update command enables the atomic installation or removal of updates; updates are applied only if all of them can be successfully installed. transactional-update creates a snapshot of your system and uses it to update the system. Later you can restore this snapshot. All changes become active only after reboot.

The transactional-update command syntax is as follows:

transactional-update [option] [general_command] [package_command] standalone_command

transactional-update without arguments

If you do not specify any command or option while running the transactional-update command, the system updates itself.

Possible command parameters are described further.

transactional-update options #

- --interactive, -i

Can be used along with a package command to turn on interactive mode.

- --non-interactive, -n

Can be used along with a package command to turn on non-interactive mode.

- --continue [number], -c

The --continue option is for making multiple changes to an existing snapshot without rebooting.

The default transactional-update behavior is to create a new snapshot from the current root file system. If you forget something, such as installing a new package, you have to reboot to apply your previous changes, run transactional-update again to install the forgotten package, and reboot again. You cannot run the transactional-update command multiple times without rebooting to add more changes to the snapshot, because this will create separate independent snapshots that do not include changes from the previous snapshots.

Use the --continue option to make as many changes as you want without rebooting. A separate snapshot is made each time, and each snapshot contains all the changes you made in the previous snapshots, plus your new changes. Repeat this process as many times as you want, and when the final snapshot includes everything you want, reboot the system, and your final snapshot becomes the new root file system.

Another useful feature of the --continue option is that you may select any existing snapshot as the base for your new snapshot. The following example demonstrates running transactional-update to install a new package in a snapshot based on snapshot 13, and then running it again to install another package:

# transactional-update pkg install package_1

# transactional-update --continue 13 pkg install package_2

- --no-selfupdate

Disables self-updating of transactional-update.

- --drop-if-no-change, -d

Discards the snapshot created by transactional-update if there were no changes to the root file system. If there are some changes to the /etc directory, those changes are merged back to the current file system.

- --quiet

The transactional-update command will not output to stdout.

- --help, -h

Prints help for the transactional-update command.

- --version

Displays the version of the transactional-update command.
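The --continue workflow described above can be sketched as a short script. This is an illustration under stated assumptions, not a SUSE-provided tool: the names run and install_incrementally and the DRY_RUN variable are hypothetical. By default the script only prints the commands it would run; executing them requires root privileges on SLE Micro.

```shell
#!/bin/sh
# DRY_RUN, run and install_incrementally are hypothetical names.
# With DRY_RUN=1 (the default here) commands are printed, not executed.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Install the first package into a new snapshot, then add every further
# package with --continue so that each new snapshot includes the previous
# changes.
install_incrementally() {
    first=1
    for pkg in "$@"; do
        if [ "$first" = 1 ]; then
            run transactional-update pkg install "$pkg"
            first=0
        else
            run transactional-update --continue pkg install "$pkg"
        fi
    done
}
```

Calling install_incrementally package_1 package_2 prints the two commands in order. After a real run, a single reboot activates the final snapshot.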

The general commands are the following:

- cleanup-snapshots

The command marks all unused snapshots that are intended to be removed.

- cleanup-overlays

The command removes all unused overlay layers of /etc.

- cleanup

The command combines the cleanup-snapshots and cleanup-overlays commands. For more details, refer to Section 3.2, “Snapshots cleanup”.

- grub.cfg

Use this command to rebuild the GRUB boot loader configuration file.

- bootloader

The command reinstalls the boot loader.

- initrd

Use the command to rebuild initrd.

- kdump

If you perform changes to your hardware or storage, you may need to rebuild the kdump initrd.

- shell

Opens a read-write shell in the new snapshot before exiting. The command is typically used for debugging purposes.

- reboot

The system reboots after the transactional-update is complete.

- run <command>

Runs the provided command in a new snapshot.

- setup-selinux

Installs and enables the targeted SELinux policy.

The package commands are the following:

The installation of packages from repositories other than the official ones (for example, the SUSE Linux Enterprise Server repositories) is not supported and not recommended. To use the tools available for SUSE Linux Enterprise Server, run the toolbox container and install the tools inside the container. For details about the toolbox container, refer to Chapter 9, toolbox for SLE Micro debugging.

- dup

Performs an upgrade of your system. The default option for this command is --non-interactive.

- migration

The command migrates your system to a selected target. Typically, it is used to upgrade your system if it has been registered via SUSE Customer Center.

- patch

Checks for available patches and installs them. The default option for this command is --non-interactive.

- pkg install

Installs individual packages from the available channels using the zypper install command. This command can also be used to install Program Temporary Fix (PTF) RPM files. The default option for this command is --interactive.

# transactional-update pkg install package_name

or

# transactional-update pkg install rpm1 rpm2

- pkg remove

Removes individual packages from the active snapshot using the zypper remove command. This command can also be used to remove PTF RPM files. The default option for this command is --interactive.

# transactional-update pkg remove package_name

- pkg update

Updates individual packages from the active snapshot using the zypper update command. Only packages that are part of the snapshot of the base file system can be updated. The default option for this command is --interactive.

# transactional-update pkg update package_name

- register

The register command enables you to register/deregister your system. For a complete usage description, refer to Section 3.1.1, “The register command”.

- up

Updates installed packages to newer versions. The default option for this command is --non-interactive.

The standalone commands are the following:

- rollback <snapshot number>

This sets the default subvolume. The current system is set as the new default root file system. If you specify a number, that snapshot is used as the default root file system. On a read-only file system, it does not create any additional snapshots.

# transactional-update rollback snapshot_number

- rollback last

This command sets the last snapshot known to be working as the default.

3.1.1 The register command #

The register command enables you to handle all tasks regarding registration and subscription management. You can supply the following options:

- --list-extensions

With this option, the command will list available extensions for your system. You can use the output to find a product identifier for product activation.

- -p, --product

Use this option to specify a product for activation. The product identifier has the following format: <name>/<version>/<architecture>, for example, sle-module-live-patching/15.3/x86_64. The appropriate command will then be the following:

# transactional-update register -p sle-module-live-patching/15.3/x86_64

- -r, --regcode

Register your system with the provided registration code. The command will register the subscription and enable software repositories.

- -d, --de-register

The option deregisters the system, or when used along with the -p option, deregisters an extension.

- -e, --email

Specify an email address that will be used in SUSE Customer Center for registration.

- --url

Specify the URL of your registration server. The URL is stored in the configuration and will be used in subsequent command invocations. For example:

# transactional-update register --url https://scc.suse.com

- -s, --status

Displays the current registration status in JSON format.

- --write-config

Writes the provided options value to the /etc/SUSEConnect configuration file.

- --cleanup

Removes old system credentials.

- --version

Prints the version.

- --help

Displays the usage of the command.

3.2 Snapshots cleanup #

If you run the command transactional-update cleanup, all old snapshots without a cleanup algorithm will have one set. All important snapshots are also marked. The command also removes all unreferenced (and thus unused) /etc overlay directories in /var/lib/overlay.

Snapshots that have the number cleanup algorithm set will be deleted according to the rules configured in /etc/snapper/configs/root by the following parameters:

- NUMBER_MIN_AGE

Defines the minimum age of a snapshot (in seconds) that can be automatically removed.

- NUMBER_LIMIT/NUMBER_LIMIT_IMPORTANT

Defines the maximum count of stored snapshots. The cleaning algorithms delete snapshots above the specified maximum value, without taking into account the snapshot and file system space. The algorithms also delete snapshots above the minimum value until the limits for the snapshot and file system are reached.
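To review the values currently in effect, the three parameters can be read from the configuration file. The following is a minimal sketch. The function name number_cleanup_settings is hypothetical, and it assumes that the file uses plain KEY="value" lines.

```shell
#!/bin/sh
# number_cleanup_settings is a hypothetical helper. It prints the number
# cleanup parameters from a snapper configuration file, which defaults to
# the root configuration named in this section.
number_cleanup_settings() {
    config="${1:-/etc/snapper/configs/root}"
    grep -E '^(NUMBER_MIN_AGE|NUMBER_LIMIT|NUMBER_LIMIT_IMPORTANT)=' "$config"
}
```

Run number_cleanup_settings without arguments to inspect the root configuration, or pass the path of another configuration file as the first argument.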

The snapshot cleanup is also regularly performed by systemd.

3.3 System rollback #

GRUB 2 enables booting from btrfs snapshots and thus allows you to use any older functional snapshot in case the new snapshot does not work correctly.

When booting a snapshot, the parts of the file system included in the snapshot are mounted read-only; all other file systems and parts that are excluded from snapshots are mounted read-write and can be modified.

An initial bootable snapshot is created at the end of the initial system installation. You can go back to that state at any time by booting this snapshot. The snapshot can be identified by the description after installation.

There are two methods to perform a system rollback.

From a running system, you can set the default snapshot, see more in Procedure 3.1, “Rollback from a running system”.

Especially in cases where the current snapshot is broken, you can boot into the new snapshot and set it to default. For details, refer to Procedure 3.2, “Rollback to a working snapshot”.

If your current snapshot is functional, you can use the following procedure for a system rollback.

Choose the snapshot that should be set as default. Run:

# snapper list

to get a list of available snapshots. Note the number of the snapshot to be set as default.

Set the snapshot as default by running:

# transactional-update rollback snapshot_number

If you omit the snapshot number, the current snapshot will be set as default.

Reboot your system to boot into the new default snapshot.

The following procedure is used in case the current snapshot is broken and you are not able to boot into it.

Reboot your system and select Start bootloader from a read-only snapshot.

Choose a snapshot to boot. The snapshots are sorted according to the date of creation, with the latest one at the top.

Log in to your system and check whether everything works as expected. The data written to directories excluded from the snapshots will stay untouched.

If the snapshot you booted into is not suitable for the rollback, reboot your system and choose another one.

If the snapshot works as expected, you can perform the rollback by running the following command:

# transactional-update rollback

And reboot afterwards.

3.4 Managing automatic transactional updates #

Automatic updates are controlled by a systemd.timer that runs once per day. This applies all updates and informs rebootmgrd that the machine should be rebooted. You may adjust the time when the update runs; see the systemd.timer(5) documentation.

You can disable automatic transactional updates with this command:

# systemctl --now disable transactional-update.timer
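One way to adjust the time when the update runs is a systemd drop-in file that overrides OnCalendar. The following is a sketch, not the officially documented procedure: the function name write_timer_override is hypothetical, and the drop-in directory and the time used in the usage note are example values.

```shell
#!/bin/sh
# write_timer_override is a hypothetical helper. It writes a drop-in file
# that replaces the timer schedule with the given OnCalendar expression.
write_timer_override() {
    dropin_dir="$1"
    on_calendar="$2"
    mkdir -p "$dropin_dir" || return 1
    cat > "$dropin_dir/override.conf" <<EOF
[Timer]
# An empty assignment clears the schedule inherited from the shipped unit.
OnCalendar=
OnCalendar=$on_calendar
EOF
}
```

For example, as root: write_timer_override /etc/systemd/system/transactional-update.timer.d '*-*-* 03:00:00', followed by systemctl daemon-reload. You can then check the next scheduled run with systemctl list-timers transactional-update.timer.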

4 User space live patching #

This chapter describes the basic principles and usage of user space live patching.

4.1 About user space live patching #

On SLE Micro, ULP is a technology preview only.

Only the currently running processes are affected by the live patches. As the libraries are changed in the new snapshot and not in the current one, new processes started in the current snapshot still use the non-patched libraries until you reboot. After the reboot, the system switches to the new snapshot and all started processes will use the patched libraries.

User space live patching (ULP) refers to the process of applying patches to the libraries used by a running process without interrupting them. Every time a security fix is available as a live patch, customer services will be secured after applying the live patch without restarting the processes.

Live patching operations are performed using the ulp tool that is part of libpulp. libpulp is a framework that consists of the libpulp.so library and the ulp binary that makes libraries live patchable and applies live patches.

You can run the ulp command either as a normal user or as a privileged user via the sudo mechanism. The difference is that running ulp via sudo lets you view information about processes, or patch processes, that are run by root.

4.1.1 Prerequisites #

For ULP to work, two requirements must be met.

Install the ULP on your system by running:

> transactional-update pkg in libpulp0 libpulp-tools

After successful installation, reboot your system.

Applications with desired live patch support must be launched preloading the libpulp.so.0 library. See Section 4.1.3, “Using libpulp” for more details.

4.1.2 Supported libraries #

Currently, only glibc and openssl (openssl1_1) are supported. Additional packages will be available after they are prepared for live patching. To receive glibc and openssl live patches, install both glibc-livepatches and openssl-1_1-livepatches packages:

> transactional-update pkg in glibc-livepatches openssl-1_1-livepatches

After successful installation, reboot your system.

4.1.3 Using libpulp #

To enable live patching on an application, you need to preload the libpulp.so.0 library when starting the application:

> LD_PRELOAD=/usr/lib64/libpulp.so.0 APPLICATION_CMD

4.1.3.1 Checking if a library is live patchable #

To check whether a library is live patchable, use the following command:

> ulp livepatchable PATH_TO_LIBRARY

4.1.3.2 Checking if a .so file is a live patch container #

A shared object (.so) is a live patch container if it contains the ULP patch description embedded into it. You can verify it with the following command:

> readelf -S SHARED_OBJECT | grep .ulp

If the output shows that there are both .ulp and .ulp.rev sections in the shared object, then it is a live patch container.
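The check for both sections can be automated. The following is a minimal sketch, assuming that readelf is installed and prints each section name surrounded by whitespace. The function name is_livepatch_container is hypothetical and not part of libpulp.

```shell
#!/bin/sh
# is_livepatch_container is a hypothetical helper. It succeeds only if the
# section headers of the given shared object list both .ulp and .ulp.rev.
is_livepatch_container() {
    sections="$(readelf -S "$1" 2>/dev/null)" || return 1
    # Match the section names as whole, whitespace-delimited words so that
    # .ulp.rev alone is not mistaken for .ulp.
    printf '%s\n' "$sections" | grep -q '[[:space:]]\.ulp[[:space:]]' &&
        printf '%s\n' "$sections" | grep -q '[[:space:]]\.ulp\.rev[[:space:]]'
}
```

For example: is_livepatch_container LIVEPATCH.so && echo "live patch container".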

4.1.3.3 Applying live patches #

Live patches are applied using the ulp trigger command, for example:

> ulp trigger -p PID LIVEPATCH.so

Replace PID with the process ID of the running process that uses the library to be patched and LIVEPATCH.so with the actual live patch file. The command returns one of the following status messages:

- SUCCESS

The live patching operation was successful.

- SKIPPED

The patch was skipped because it was not designed for any library that is loaded in the process.

- ERROR

An error occurred. You can retrieve more information by inspecting the libpulp internal message buffer. See Section 4.1.3.6, “View internal message queue” for more information.

It is also possible to apply multiple live patches by using wildcards, for example:

> ulp trigger '*.so'

The command tries to apply every patch in the current folder to every

process that has the libpulp library

loaded. If the patch is not suitable for the process, it is

automatically skipped. In the end, the tool shows how many patches it

successfully applied to how many processes.

4.1.3.4 Reverting live patches #

You can use the ulp trigger command to revert live

patches. There are two ways to revert live patches. You can revert a

live patch by using the --revert switch and passing

the live patch container:

> ulp trigger -p PID --revert LIVEPATCH.so

Alternatively, it is possible to remove all patches associated with a particular library, for example:

> ulp trigger -p PID --revert-all=LIBRARY

In the example, LIBRARY refers to the

actual library, such as libcrypto.so.1.1.

The latter approach can be useful when the source code of the original live patch is not available, or when you want to remove a specific old patch and apply a new one while the target application keeps running secure code, for example:

> ulp trigger -p PID --revert-all=libcrypto.so.1.1 new_livepatch2.so

4.1.3.5 View applied patches #

It is possible to verify which applications have live patches applied by running:

> ulp patches

The output shows which libraries are live patchable and which patches are loaded in programs, as well as which bugs each patch addresses:

PID: 10636, name: test

Livepatchable libraries:

in /lib64/libc.so.6:

livepatch: libc_livepatch1.so

bug labels: jsc#SLE-0000

in /usr/lib64/libpulp.so.0:

It is also possible to see which functions are patched by the live patch:

> ulp dump LIVEPATCH.so

4.1.3.6 View internal message queue #

Log messages from libpulp.so are stored in a

buffer inside the library and are not displayed unless requested by

the user. To show these messages, run:

> ulp messages -p PID

4.2 More information #

Further information about libpulp is available

in the project's Git

repository.

Part II Networking #

- 5 Basic networking

Linux offers the necessary networking tools and features for integration into all types of network structures. Network access using a network card can be configured with YaST. Manual configuration is also possible. In this chapter, only the fundamental mechanisms and the relevant network configuration files are covered.

- 6 NetworkManager and wicked

This chapter focuses on the difference between NetworkManager and wicked and describes how to switch from wicked to NetworkManager.

- 7 NetworkManager configuration and usage

NetworkManager is shipped so it can run out of the box, but you might need to reconfigure or restart the tool. This chapter focuses on these tasks.

5 Basic networking #

Linux offers the necessary networking tools and features for integration into all types of network structures. Network access using a network card can be configured with YaST. Manual configuration is also possible. In this chapter, only the fundamental mechanisms and the relevant network configuration files are covered.

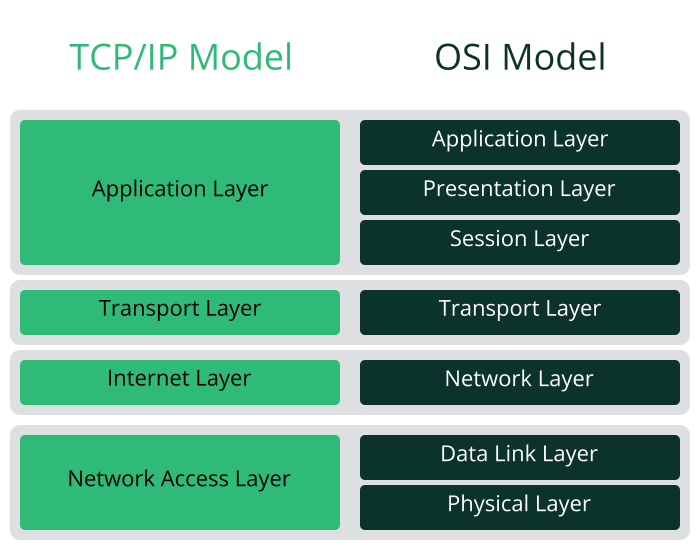

Linux and other Unix operating systems use the TCP/IP protocol. It is not a single network protocol, but a family of network protocols that offer various services. The protocols listed in “Several protocols in the TCP/IP protocol family” are provided for exchanging data between two machines via TCP/IP. Networks combined by TCP/IP, comprising a worldwide network, are also called “the Internet.”

RFC stands for Request for Comments. RFCs are documents that describe various Internet protocols and implementation procedures for the operating system and its applications. The RFC documents describe the setup of Internet protocols. For more information about RFCs, see https://datatracker.ietf.org/.

- TCP

Transmission Control Protocol: a connection-oriented, reliable protocol. The data to transmit is first sent by the application as a stream of data and converted into the appropriate format by the operating system. The data arrives at the respective application on the destination host in the same data stream format in which it was sent. TCP determines whether any data has been lost or jumbled during the transmission. TCP is used wherever the data sequence matters.

- UDP

User Datagram Protocol: a connectionless, unreliable protocol. The data to transmit is sent in the form of packets generated by the application. The order in which the data arrives at the recipient is not guaranteed and data loss is possible. UDP is suitable for record-oriented applications. It features lower latency than TCP.

- ICMP

Internet Control Message Protocol: This is not a protocol for the end user, but a special control protocol that issues error reports and can control the behavior of machines participating in TCP/IP data transfer. In addition, it provides a special echo mode that can be viewed using the program ping.

- IGMP

Internet Group Management Protocol: This protocol controls machine behavior when implementing IP multicast.

As shown in Figure 5.1, “Simplified layer model for TCP/IP”, data exchange takes place in different layers. The actual network layer is the unreliable data transfer via IP (Internet Protocol). On top of IP, TCP (Transmission Control Protocol) guarantees, to a certain extent, reliability of the data transfer. The IP layer is supported by the underlying hardware-dependent protocol, such as Ethernet.

The diagram provides one or two examples for each layer. The layers are ordered according to abstraction levels. The lowest layer is very close to the hardware. The uppermost layer, however, is almost a complete abstraction from the hardware. Every layer has its own special function. The special functions of each layer are mostly implicit in their description. The data link and physical layers represent the physical network used, such as Ethernet.

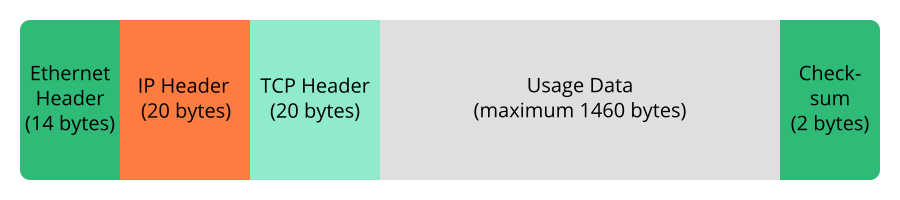

Almost all hardware protocols work on a packet-oriented basis. The data to transmit is collected into packets (it cannot be sent all at once). The maximum size of a TCP/IP packet is approximately 64 KB. Packets are normally quite small, as the network hardware can be a limiting factor. The maximum size of a data packet on Ethernet is about fifteen hundred bytes. The size of a TCP/IP packet is limited to this amount when the data is sent over Ethernet. If more data is transferred, more data packets need to be sent by the operating system.

For the layers to serve their designated functions, additional information regarding each layer must be saved in the data packet. This takes place in the header of the packet. Every layer attaches a small block of data, called the protocol header, to the front of each emerging packet. A sample TCP/IP data packet traveling over an Ethernet cable is illustrated in Figure 5.2, “TCP/IP Ethernet packet”. The checksum is located at the end of the packet, not at the beginning. This simplifies things for the network hardware.

When an application sends data over the network, the data passes through each layer, all implemented in the Linux kernel except the physical layer. Each layer is responsible for preparing the data so it can be passed to the next layer. The lowest layer is ultimately responsible for sending the data. The entire procedure is reversed when data is received. Like the layers of an onion, in each layer the protocol headers are removed from the transported data. Finally, the transport layer is responsible for making the data available for use by the applications at the destination. In this manner, one layer only communicates with the layer directly above or below it. For applications, it is irrelevant whether data is transmitted via a wireless or wired connection. Likewise, it is irrelevant for the data line which kind of data is transmitted, as long as packets are in the correct format.

5.1 IP addresses and routing #

The discussion in this section is limited to IPv4 networks. For information about IPv6 protocol, the successor to IPv4, refer to Section 5.2, “IPv6—the next generation Internet”.

5.1.1 IP addresses #

Every computer on the Internet has a unique 32-bit address. These 32 bits (or 4 bytes) are normally written as illustrated in the second row in Example 5.1, “Writing IP addresses”.

IP Address (binary): 11000000 10101000 00000000 00010100

IP Address (decimal): 192. 168. 0. 20

In decimal form, the four bytes are written in the decimal number system, separated by periods. The IP address is assigned to a host or a network interface. It can be used only once throughout the world. There are exceptions to this rule, but these are not relevant to the following passages.

The periods in IP addresses indicate the hierarchical system. Until the 1990s, IP addresses were strictly categorized in classes. However, this system proved too inflexible and was discontinued. Now, classless routing (CIDR, classless interdomain routing) is used.

5.1.2 Netmasks and routing #

Netmasks are used to define the address range of a subnet. If two hosts are in the same subnet, they can reach each other directly. If they are not in the same subnet, they need the address of a gateway that handles all the traffic for the subnet. To check if two IP addresses are in the same subnet, simply “AND” both addresses with the netmask. If the result is identical, both IP addresses are in the same local network. If there are differences, the remote IP address, and thus the remote interface, can only be reached over a gateway.

To understand how the netmask works, look at

Example 5.2, “Linking IP addresses to the netmask”. The netmask consists of 32 bits

that identify how much of an IP address belongs to the network. All those

bits that are 1 mark the corresponding bit in the IP

address as belonging to the network. All bits that are 0

mark bits inside the subnet. This means that the more bits are

1, the smaller the subnet is. Because the netmask always

consists of several successive 1 bits, it is also

possible to count the number of bits in the netmask. In

Example 5.2, “Linking IP addresses to the netmask” the first net with 24 bits could

also be written as 192.168.0.0/24.

IP address (192.168.0.20): 11000000 10101000 00000000 00010100

Netmask (255.255.255.0): 11111111 11111111 11111111 00000000

---------------------------------------------------------------

Result of the link: 11000000 10101000 00000000 00000000

In the decimal system: 192. 168. 0. 0

IP address (213.95.15.200): 11010101 01011111 00001111 11001000

Netmask (255.255.255.0): 11111111 11111111 11111111 00000000

---------------------------------------------------------------

Result of the link: 11010101 01011111 00001111 00000000

In the decimal system: 213. 95. 15. 0
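The AND operation from Example 5.2 can be reproduced with a few lines of Python. This is a minimal sketch that uses only the standard `ipaddress` module; the helper names `network_part` and `same_subnet` are illustrative and not part of any SUSE tool.

```python
import ipaddress

def network_part(address: str, netmask: str) -> str:
    """Return the bitwise AND of an IPv4 address and a netmask in dotted form."""
    addr = int(ipaddress.IPv4Address(address))
    mask = int(ipaddress.IPv4Address(netmask))
    return str(ipaddress.IPv4Address(addr & mask))

def same_subnet(first: str, second: str, netmask: str) -> bool:
    """Two hosts share a subnet when their network parts are identical."""
    return network_part(first, netmask) == network_part(second, netmask)

print(network_part("192.168.0.20", "255.255.255.0"))
print(network_part("213.95.15.200", "255.255.255.0"))
print(same_subnet("192.168.0.20", "192.168.0.99", "255.255.255.0"))
print(same_subnet("192.168.0.20", "213.95.15.200", "255.255.255.0"))
```

In the last call the network parts differ, so the second host would only be reachable through a gateway.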

To give another example: all machines connected with the same Ethernet cable are usually located in the same subnet and are directly accessible. Even when the subnet is physically divided by switches or bridges, these hosts can still be reached directly.

IP addresses outside the local subnet can only be reached if a gateway is configured for the target network. In the most common case, there is only one gateway that handles all traffic that is external. However, it is also possible to configure several gateways for different subnets.

If a gateway has been configured, all external IP packets are sent to the appropriate gateway. This gateway then attempts to forward the packets in the same manner—from host to host—until it reaches the destination host or the packet's TTL (time to live) expires.

- Base Network Address

This is the netmask AND any address in the network, as shown in Example 5.2, “Linking IP addresses to the netmask” under Result. This address cannot be assigned to any hosts.

- Broadcast Address

This could be paraphrased as: “Access all hosts in this subnet.” To generate this, the netmask is inverted in binary form and linked to the base network address with a logical OR. The above example therefore results in 192.168.0.255. This address cannot be assigned to any hosts.

- Local Host

The address 127.0.0.1 is assigned to the “loopback device” on each host. A connection can be set up to your own machine with this address and with all addresses from the complete 127.0.0.0/8 loopback network as defined with IPv4. With IPv6 there is only one loopback address (::1).
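The base network address and the broadcast address described in this list can be computed as follows. This is a sketch using Python's standard `ipaddress` module; the function name `base_and_broadcast` is an assumption made for illustration.

```python
import ipaddress

def base_and_broadcast(address: str, netmask: str) -> tuple:
    """Return (base network address, broadcast address) as dotted strings."""
    addr = int(ipaddress.IPv4Address(address))
    mask = int(ipaddress.IPv4Address(netmask))
    base = addr & mask                    # netmask AND any address in the network
    inverted_mask = ~mask & 0xFFFFFFFF    # invert the netmask, keep 32 bits
    broadcast = base | inverted_mask      # OR with the base network address
    return (str(ipaddress.IPv4Address(base)),
            str(ipaddress.IPv4Address(broadcast)))

print(base_and_broadcast("192.168.0.20", "255.255.255.0"))

# Every address in 127.0.0.0/8 is a loopback address, not only 127.0.0.1.
print(ipaddress.ip_address("127.0.0.1").is_loopback)
print(ipaddress.ip_address("127.45.6.7").is_loopback)
```

The same values are available directly from `ipaddress.ip_network("192.168.0.20/255.255.255.0", strict=False)` through its `network_address` and `broadcast_address` attributes.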

Because IP addresses must be unique all over the world, you cannot select random addresses. There are three address domains to use if you want to set up a private IP-based network. These cannot get any connection from the rest of the Internet, because they are not routed over the Internet. These address domains are specified in RFC 1597 (since superseded by RFC 1918) and listed in Table 5.1, “Private IP address domains”.

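| Network/Netmask | Domain |
|---|---|
| 10.0.0.0/255.0.0.0 | 10.x.x.x |
| 172.16.0.0/255.240.0.0 | 172.16.x.x – 172.31.x.x |
| 192.168.0.0/255.255.0.0 | 192.168.x.x |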
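To check whether a given address falls into one of the three private address domains, a short test like the following can help. It is a sketch that assumes the ranges 10.0.0.0/8, 172.16.0.0/12 and 192.168.0.0/16 from RFC 1918; the name `in_private_domain` is hypothetical.

```python
import ipaddress

# The three private IPv4 address domains (RFC 1918), in prefix-length notation.
PRIVATE_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]

def in_private_domain(address: str) -> bool:
    """Return True if the address belongs to one of the private domains."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in PRIVATE_NETWORKS)

for candidate in ("10.1.2.3", "172.31.255.255", "172.32.0.1", "192.168.0.20"):
    print(candidate, in_private_domain(candidate))
```

The explicit list is used instead of the `is_private` attribute because that attribute also covers other reserved ranges, such as the loopback network.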

5.2 IPv6—the next generation Internet #

Because of the emergence of the World Wide Web (WWW), the Internet has experienced explosive growth, with an increasing number of computers communicating via TCP/IP in the past fifteen years. Since Tim Berners-Lee at CERN (http://public.web.cern.ch) invented the WWW in 1990, the number of Internet hosts has grown from a few thousand to about a hundred million.

As mentioned, an IPv4 address consists of only 32 bits. Also, quite a few IP addresses are lost: they cannot be used because of the way in which networks are organized. The number of addresses available in your subnet is two to the power of the number of host bits, minus two. A subnet has, for example, 2, 6, or 14 addresses available. To connect 128 hosts to the Internet, for example, you need a subnet with 256 IP addresses, from which only 254 are usable, because two IP addresses are needed for the structure of the subnet itself: the broadcast and the base network address.
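The arithmetic behind these numbers is easy to verify. The following sketch treats the "number of bits" as the number of host bits, that is, the bits that are 0 in the netmask; both function names are illustrative.

```python
def usable_hosts(host_bits: int) -> int:
    """Addresses left for hosts: 2^host_bits minus base and broadcast address."""
    return 2 ** host_bits - 2

def host_bits_needed(hosts: int) -> int:
    """Smallest number of host bits whose subnet can hold the given hosts."""
    bits = 2
    while usable_hosts(bits) < hosts:
        bits += 1
    return bits

print([usable_hosts(bits) for bits in (2, 3, 4)])

bits = host_bits_needed(128)
print(bits, 2 ** bits, usable_hosts(bits))
```

Seven host bits yield only 126 usable addresses, which is why 128 hosts require eight host bits and therefore a subnet with 256 addresses.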

Under the current IPv4 protocol, DHCP or NAT (network address translation) are the typical mechanisms used to circumvent the potential address shortage. Combined with the convention to keep private and public address spaces separate, these methods can certainly mitigate the shortage. To set up a host in an IPv4 network, you need several address items, such as the host's own IP address, the subnet mask, the gateway address, and maybe a name server address. All these items need to be known and cannot be derived from somewhere else.

With IPv6, both the address shortage and the complicated configuration should be a thing of the past. The following sections tell more about the improvements and benefits brought by IPv6 and about the transition from the old protocol to the new one.

5.2.1 Advantages #

The most important and most visible improvement brought by the IPv6 protocol is the enormous expansion of the available address space. An IPv6 address is made up of 128 bits instead of the traditional 32 bits. This provides for 2^128, or approximately 3.4 × 10^38, IP addresses.

However, IPv6 addresses are not only different from their predecessors with regard to their length. They also have a different internal structure that may contain more specific information about the systems and the networks to which they belong. More details about this are found in Section 5.2.2, “Address types and structure”.

The following is a list of other advantages of the IPv6 protocol:

- Autoconfiguration

IPv6 makes the network “plug and play” capable, which means that a newly configured system integrates into the (local) network without any manual configuration. The new host uses its automatic configuration mechanism to derive its own address from the information made available by the neighboring routers, relying on a protocol called the neighbor discovery (ND) protocol. This method does not require any intervention on the administrator's part and there is no need to maintain a central server for address allocation—an additional advantage over IPv4, where automatic address allocation requires a DHCP server.

Nevertheless, if a router is connected to a switch, the router should send periodic advertisements with flags telling the hosts of a network how they should interact with each other. For more information, see RFC 2462, RFC 3315, and the radvd.conf(5) man page.

- Mobility

IPv6 makes it possible to assign several addresses to one network interface at the same time. This allows users to access several networks easily, something that could be compared with the international roaming services offered by mobile phone companies. When you take your mobile phone abroad, the phone automatically logs in to a foreign service when it enters the corresponding area, so you can be reached under the same number everywhere and can place an outgoing call, as you would in your home area.

- Secure communication

With IPv4, network security is an add-on function. IPv6 includes IPsec as one of its core features, allowing systems to communicate over a secure tunnel to avoid eavesdropping by outsiders on the Internet.

- Backward compatibility

Realistically, it would be impossible to switch the entire Internet from IPv4 to IPv6 at one time. Therefore, it is crucial that both protocols can coexist not only on the Internet, but also on one system. This is ensured by compatible addresses (IPv4 addresses can easily be translated into IPv6 addresses) and by using several tunnels. See Section 5.2.3, “Coexistence of IPv4 and IPv6”. Also, systems can rely on a dual stack IP technique to support both protocols at the same time, meaning that they have two network stacks that are completely separate, such that there is no interference between the two protocol versions.

5.2.2 Address types and structure #

As mentioned, the current IP protocol has two major limitations: there is an increasing shortage of IP addresses and configuring the network and maintaining the routing tables is becoming a more complex and burdensome task. IPv6 solves the first problem by expanding the address space to 128 bits. The second one is mitigated by introducing a hierarchical address structure combined with sophisticated techniques to allocate network addresses, and multihoming (the ability to assign several addresses to one device, giving access to several networks).

When dealing with IPv6, it is useful to know about three different types of addresses:

- Unicast

Addresses of this type are associated with exactly one network interface. Packets with such an address are delivered to only one destination. Accordingly, unicast addresses are used to transfer packets to individual hosts on the local network or the Internet.

- Multicast

Addresses of this type relate to a group of network interfaces. Packets with such an address are delivered to all destinations that belong to the group. Multicast addresses are mainly used by certain network services to communicate with certain groups of hosts in a well-directed manner.

- Anycast

Addresses of this type are related to a group of interfaces. Packets with such an address are delivered to the member of the group that is closest to the sender, according to the principles of the underlying routing protocol. Anycast addresses are used to make it easier for hosts to find out about servers offering certain services in the given network area. All servers of the same type have the same anycast address. Whenever a host requests a service, it receives a reply from the server with the closest location, as determined by the routing protocol. If this server should fail for some reason, the protocol automatically selects the second closest server, then the third one, and so forth.

An IPv6 address is made up of eight four-digit fields, each representing 16

bits, written in hexadecimal notation. They are separated by colons

(:). Any leading zeros within a given field may be

dropped, but zeros within the field or at its end may not. Another

convention is that a run of consecutive all-zero fields may be collapsed

into a double colon. However, only one such :: is

allowed per address. This kind of shorthand notation is shown in

Example 5.3, “Sample IPv6 address”, where all three lines represent the

same address.

fe80:0000:0000:0000:0000:10:1000:1a4

fe80:0:0:0:0:10:1000:1a4

fe80::10:1000:1a4
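That the three notations describe the same address can be checked with Python's standard `ipaddress` module. This is a small sketch for illustration only.

```python
import ipaddress

forms = [
    "fe80:0000:0000:0000:0000:10:1000:1a4",
    "fe80:0:0:0:0:10:1000:1a4",
    "fe80::10:1000:1a4",
]

# Parse every notation into the same 128-bit value.
addresses = [ipaddress.IPv6Address(form) for form in forms]
print(addresses[0] == addresses[1] == addresses[2])

# The module can also produce the shortest and the fully written form.
print(addresses[0].compressed)
print(addresses[0].exploded)
```

The `compressed` attribute returns the shorthand with the double colon, while `exploded` restores all leading zeros in every field.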

Each part of an IPv6 address has a defined function. The first bytes form

the prefix and specify the type of address. The center part is the network

portion of the address, but it may be unused. The end of the address forms

the host part. With IPv6, the netmask is defined by indicating the length

of the prefix after a slash at the end of the address. An address, as shown

in Example 5.4, “IPv6 address specifying the prefix length”, contains the information that the

first 64 bits form the network part of the address and the last 64 form its

host part. In other words, the 64 means that the netmask

is filled with 64 1-bit values from the left. As with IPv4, the IP address

is combined with AND with the values from the netmask to determine whether

the host is located in the same subnet or in another one.

fe80::10:1000:1a4/64
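The split into network part and host part can be made visible with the standard `ipaddress` module. The sketch below assumes nothing beyond the address from the example; the second and third addresses are made up to show the subnet test.

```python
import ipaddress

iface = ipaddress.IPv6Interface("fe80::10:1000:1a4/64")

# The prefix length 64 corresponds to a netmask with 64 leading 1 bits.
print(iface.network)
print(iface.network.netmask)

# AND with the host mask (the inverted netmask) leaves the host part.
host_part = int(iface.ip) & int(iface.network.hostmask)
print(ipaddress.IPv6Address(host_part))

# Addresses with the same first 64 bits are in the same subnet.
print(ipaddress.IPv6Address("fe80::1") in iface.network)
print(ipaddress.IPv6Address("fe80:0:0:1::1") in iface.network)
```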

IPv6 knows about several predefined types of prefixes. Some are shown in Various IPv6 prefixes.

- 00

IPv4 addresses and IPv4 over IPv6 compatibility addresses. These are used to maintain compatibility with IPv4. Their use still requires a router able to translate IPv6 packets into IPv4 packets. Several special addresses, such as the one for the loopback device, have this prefix as well.

- 2 or 3 as the first digit

Aggregatable global unicast addresses. As is the case with IPv4, an interface can be assigned to form part of a certain subnet. Currently, there are the following address spaces: 2001::/16 (production quality address space) and 2002::/16 (6to4 address space).

- fe80::/10

Link-local addresses. Addresses with this prefix should not be routed and should therefore only be reachable from within the same subnet.

- fec0::/10

Site-local addresses. These may be routed, but only within the network of the organization to which they belong. In effect, they are the IPv6 equivalent of the current private network address space, such as 10.x.x.x.

- ff

These are multicast addresses.

A unicast address consists of three basic components:

- Public topology

The first part (which also contains one of the prefixes mentioned above) is used to route packets through the public Internet. It includes information about the company or institution that provides the Internet access.

- Site topology

The second part contains routing information about the subnet to which to deliver the packet.

- Interface ID

The third part identifies the interface to which to deliver the packet. This also allows for the MAC to form part of the address. Given that the MAC is a globally unique, fixed identifier coded into the device by the hardware maker, the configuration procedure is substantially simplified. In fact, the last 64 address bits are consolidated to form the EUI-64 token, with 48 bits taken from the MAC, and the remaining 16 bits containing special information about the token type. This also makes it possible to assign an EUI-64 token to interfaces that do not have a MAC, such as those based on point-to-point protocol (PPP).
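How a MAC can end up in the interface ID is shown in the following sketch. It assumes the modified EUI-64 scheme from RFC 4291 (insert `ff:fe` in the middle of the 48-bit MAC and flip the universal/local bit) and places the result in the lower 64 bits of a link-local address. The MAC used here is made up, the function names are illustrative, and real systems may be configured to generate interface IDs differently.

```python
import ipaddress

def eui64_interface_id(mac: str) -> int:
    """Derive a 64-bit modified EUI-64 interface ID from a 48-bit MAC."""
    octets = bytearray(int(part, 16) for part in mac.split(":"))
    if len(octets) != 6:
        raise ValueError("expected a MAC with six octets")
    octets[0] ^= 0x02  # flip the universal/local bit
    eui64 = bytes(octets[:3]) + b"\xff\xfe" + bytes(octets[3:])
    return int.from_bytes(eui64, "big")

def link_local_from_mac(mac: str) -> ipaddress.IPv6Address:
    """Combine the fe80:: prefix with the interface ID in the lower 64 bits."""
    prefix = int(ipaddress.IPv6Address("fe80::"))
    return ipaddress.IPv6Address(prefix | eui64_interface_id(mac))

print(link_local_from_mac("00:11:22:33:44:55"))
```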

On top of this basic structure, IPv6 distinguishes between five different types of unicast addresses:

- :: (unspecified)

This address is used by the host as its source address when the interface is initialized for the first time (at which point, the address cannot yet be determined by other means).

- ::1 (loopback)

The address of the loopback device.

- IPv4 compatible addresses

The IPv6 address is formed by the IPv4 address and a prefix consisting of 96 zero bits. This type of compatibility address is used for tunneling (see Section 5.2.3, “Coexistence of IPv4 and IPv6”) to allow IPv4 and IPv6 hosts to communicate with others operating in a pure IPv4 environment.

- IPv4 addresses mapped to IPv6

This type of address specifies a pure IPv4 address in IPv6 notation.

- Local addresses

There are two address types for local use:

- link-local

This type of address can only be used in the local subnet. Packets with a source or target address of this type should not be routed to the Internet or other subnets. These addresses contain a special prefix (fe80::/10) and the interface ID of the network card, with the middle part consisting of zero bytes. Addresses of this type are used during automatic configuration to communicate with other hosts belonging to the same subnet.

- site-local

Packets with this type of address may be routed to other subnets, but not to the wider Internet; they must remain inside the organization's own network. Such addresses are used for intranets and are an equivalent of the private address space defined by IPv4. They contain a special prefix (fec0::/10), the interface ID, and a 16-bit field specifying the subnet ID. Again, the rest is filled with zero bytes.
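Several of the unicast address types listed above can be recognized with attributes of Python's standard `ipaddress` module. The following is a sketch; the function name `describe` and the sample addresses are chosen for illustration, and IPv4 compatible addresses are not covered.

```python
import ipaddress

def describe(address: str) -> str:
    """Name the unicast address type of an IPv6 address."""
    ip = ipaddress.IPv6Address(address)
    if ip.is_unspecified:
        return "unspecified"
    if ip.is_loopback:
        return "loopback"
    if ip.ipv4_mapped is not None:
        return "IPv4-mapped ({})".format(ip.ipv4_mapped)
    if ip.is_link_local:
        return "link-local"
    if ip.is_site_local:
        return "site-local"
    return "other"

for sample in ("::", "::1", "::ffff:192.168.0.20", "fe80::10:1000:1a4", "fec0::1"):
    print(sample, "->", describe(sample))
```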

As a completely new feature introduced with IPv6, each network interface normally gets several IP addresses, with the advantage that several networks can be accessed through the same interface. One of these networks can be configured completely automatically using the MAC and a known prefix with the result that all hosts on the local network can be reached when IPv6 is enabled (using the link-local address). With the MAC forming part of it, any IP address used in the world is unique. The only variable parts of the address are those specifying the site topology and the public topology, depending on the actual network in which the host is currently operating.

For a host to go back and forth between different networks, it needs at least two addresses. One of them, the home address, not only contains the interface ID but also an identifier of the home network to which it normally belongs (and the corresponding prefix). The home address is a static address and, as such, it does not normally change. Still, all packets destined to the mobile host can be delivered to it, regardless of whether it operates in the home network or somewhere outside. This is made possible by the completely new features introduced with IPv6, such as stateless autoconfiguration and neighbor discovery. In addition to its home address, a mobile host gets one or more additional addresses that belong to the foreign networks where it is roaming. These are called care-of addresses. The home network has a facility that forwards any packets destined to the host when it is roaming outside. In an IPv6 environment, this task is performed by the home agent, which takes all packets destined to the home address and relays them through a tunnel. On the other hand, those packets destined to the care-of address are directly transferred to the mobile host without any special detours.

5.2.3 Coexistence of IPv4 and IPv6 #

The migration of all hosts connected to the Internet from IPv4 to IPv6 is a gradual process. Both protocols will coexist for some time to come. The coexistence on one system is guaranteed where there is a dual stack implementation of both protocols. That still leaves the question of how an IPv6 enabled host should communicate with an IPv4 host and how IPv6 packets should be transported by the current networks, which are predominantly IPv4-based. The best solutions are tunneling and compatibility addresses (see Section 5.2.2, “Address types and structure”).

IPv6 hosts that are more or less isolated in the (worldwide) IPv4 network can communicate through tunnels: IPv6 packets are encapsulated as IPv4 packets to move them across an IPv4 network. Such a connection between two IPv4 hosts is called a tunnel. To achieve this, packets must include the IPv6 destination address (or the corresponding prefix) and the IPv4 address of the remote host at the receiving end of the tunnel. A basic tunnel can be configured manually according to an agreement between the hosts' administrators. This is also called static tunneling.

However, the configuration and maintenance of static tunnels is often too labor-intensive to use them for daily communication needs. Therefore, IPv6 provides for three different methods of dynamic tunneling:

- 6over4

IPv6 packets are automatically encapsulated as IPv4 packets and sent over an IPv4 network capable of multicasting. IPv6 is tricked into seeing the whole network (Internet) as a huge local area network (LAN). This makes it possible to determine the receiving end of the IPv4 tunnel automatically. However, this method does not scale very well and is also hampered because IP multicasting is far from widespread on the Internet. Therefore, it only provides a solution for smaller corporate or institutional networks where multicasting can be enabled. The specifications for this method are laid down in RFC 2529.

- 6to4

With this method, IPv4 addresses are automatically generated from IPv6 addresses, enabling isolated IPv6 hosts to communicate over an IPv4 network. However, several problems have been reported regarding the communication between those isolated IPv6 hosts and the Internet. The method is described in RFC 3056.

- IPv6 tunnel broker

This method relies on special servers that provide dedicated tunnels for IPv6 hosts. It is described in RFC 3053.
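For the 6to4 method, the relationship between the two address families can be illustrated as follows. The sketch assumes the 2002:V4ADDR::/48 prefix format from RFC 3056 and uses the documentation address 192.0.2.1 as a stand-in for a public IPv4 address; the function name `sixtofour_prefix` is hypothetical.

```python
import ipaddress

def sixtofour_prefix(ipv4: str) -> ipaddress.IPv6Network:
    """Build the 6to4 prefix 2002:V4ADDR::/48 for a public IPv4 address."""
    v4 = int(ipaddress.IPv4Address(ipv4))
    # 0x2002 fills the top 16 bits, the IPv4 address the following 32 bits.
    prefix = (0x2002 << 112) | (v4 << 80)
    return ipaddress.IPv6Network((prefix, 48))

network = sixtofour_prefix("192.0.2.1")
print(network)

# The embedded IPv4 address can be read back from any address in the prefix.
host = ipaddress.IPv6Address("2002:c000:201::1")
print(host in network, host.sixtofour)
```

The `sixtofour` attribute returns `None` for addresses outside 2002::/16.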

5.2.4 Configuring IPv6 #

To configure IPv6, you normally do not need to make any changes on the

individual workstations. IPv6 is enabled by default. To disable or enable

IPv6 on an installed system, use the YaST module. On the tab,

select or deselect the option as necessary.

To enable it temporarily until the next reboot, enter

modprobe -i ipv6 as

root. It is impossible to unload

the IPv6 module after it has been loaded.

Because of the autoconfiguration concept of IPv6, the network card is assigned an address in the link-local network. Normally, no routing table management takes place on a workstation. The network routers can be queried by the workstation, using the router advertisement protocol, for what prefix and gateways should be implemented. The radvd program can be used to set up an IPv6 router. This program informs the workstations which prefix to use for the IPv6 addresses and which routers. Alternatively, use zebra/quagga for automatic configuration of both addresses and routing.

5.3 Name resolution #

DNS assists in assigning an IP address to one or more names and assigning a name to an IP address. In Linux, this conversion is usually carried out by a special type of software known as BIND. The machine that takes care of this conversion is called a name server. The names make up a hierarchical system in which each name component is separated by a period. The name hierarchy is, however, independent of the IP address hierarchy described above.

Consider a complete name, such as

jupiter.example.com, written in the

format hostname.domain. A full

name, called a fully qualified domain name (FQDN),

consists of a host name and a domain name

(example.com). The latter

also includes the top level domain or TLD

(com).

TLD assignment has become quite confusing for historical reasons.

Traditionally, three-letter domain names are used in the USA. In the rest of

the world, the two-letter ISO national codes are the standard. In addition

to that, longer TLDs were introduced in 2000 that represent certain spheres

of activity (for example, .info,

.name,

.museum).

In the early days of the Internet (before 1990), the file /etc/hosts was used to store the names of all the machines represented over the Internet. This quickly proved to be impractical in the face of the rapidly growing number of computers connected to the Internet. For this reason, a decentralized database was developed to store the host names in a widely distributed manner. This database, similar to the name server, does not have the data pertaining to all hosts in the Internet readily available, but can dispatch requests to other name servers.

The top of the hierarchy is occupied by root name servers. These root name servers manage the top level domains and are run by the Network Information Center (NIC). Each root name server knows about the name servers responsible for a given top level domain. Information about top level domain NICs is available at http://www.internic.net.

DNS can do more than resolve host names. The name server also knows which host receives e-mail for an entire domain: the mail exchanger (MX).

For your machine to resolve a host name to an IP address, it must know the IP address of at least one name server.
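To see which name servers a machine knows about, you can inspect the resolver configuration. The sketch below assumes the conventional /etc/resolv.conf file, which this section does not name explicitly. The helper list_nameservers is hypothetical, and the sample addresses come from documentation ranges.

```shell
# list_nameservers is a hypothetical helper, not a system command.
# It prints the address from every "nameserver" line of a
# resolv.conf-style file (default: /etc/resolv.conf).
list_nameservers() {
    awk '$1 == "nameserver" { print $2 }' "${1:-/etc/resolv.conf}"
}

# Demonstrate on a throwaway sample file so the sketch runs anywhere.
sample=$(mktemp)
printf '%s\n' 'search example.com' \
    'nameserver 192.0.2.53' \
    'nameserver 2001:db8::53' > "$sample"
list_nameservers "$sample"
rm -f "$sample"
```

Calling list_nameservers without an argument reads the real file on the running system.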

The .local top level domain is treated as a link-local domain by the resolver. DNS requests for it are sent as multicast DNS requests instead of normal DNS requests. If you already use the .local domain in your name server configuration, you must switch this option off in /etc/host.conf. For more information, see the host.conf manual page.

To switch off multicast DNS (mDNS) during installation, use nomdns=1 as a boot parameter.

For more information on multicast DNS, see http://www.multicastdns.org.

6 NetworkManager and wicked #

This chapter focuses on the difference between NetworkManager and wicked and describes how to switch from wicked to NetworkManager.

NetworkManager is a program that manages the primary network connection and other connection interfaces. NetworkManager has been designed to be fully automatic by default. NetworkManager is handled by systemd and is shipped with all necessary service unit files.

wicked is a network management tool that provides network configuration as a service and enables changing the network configuration dynamically.

NetworkManager and wicked provide similar functionality; however, they differ in the following points:

- root privileges

If you use NetworkManager for network setup, you can easily switch, stop, or start your network connection at any time. NetworkManager also makes it possible to change and configure wireless card connections without requiring root privileges.

wicked also provides some ways to switch, stop, or start the connection with or without user intervention, like user-managed devices. However, this always requires root privileges to change or configure a network device.

- Types of network connections

Both wicked and NetworkManager can handle network connections with a wireless network (with WEP, WPA-PSK, and WPA-Enterprise access) and wired networks using DHCP and static configuration. They also support connection through dial-up and VPN. With NetworkManager, you can also connect a mobile broadband (3G) modem or set up a DSL connection, which is not possible with the traditional configuration.

NetworkManager tries to keep your computer connected at all times using the best connection available. If the network cable is accidentally disconnected, it tries to reconnect. NetworkManager can find the network with the best signal strength from the list of your wireless connections and automatically use it to connect. To get the same functionality with wicked, more configuration effort is required.

- k8s integration

Some k8s plugins require NetworkManager to run and are not compatible with wicked.

6.1 Switching from wicked to NetworkManager #

Even though NetworkManager and wicked offer similar functionality, we cannot guarantee full feature parity. Neither conversion of the wicked configuration nor automated switching to NetworkManager is supported.

wicked configuration compatibility with NetworkManager

The /etc/sysconfig/network/ifcfg-* files are usually compatible except for some rare cases. But when you use the wicked configuration located in /etc/wicked/*.xml, you need to migrate the configuration manually.

To change your network management service from wicked to NetworkManager, proceed as follows:

1. Run the following command to create a new snapshot where you perform all other changes to the system:

# transactional-update shell

2. Install NetworkManager:

# zypper in NetworkManager

3. Remove wicked from the system:

# zypper rm wicked wicked-service

4. Enable the NetworkManager service:

# systemctl enable NetworkManager

5. If needed, configure the service according to your needs.

6. Close the transactional-update shell:

# exit

7. Reboot your system to switch to the new snapshot.
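After the reboot, it can be useful to confirm which of the two services is actually running. The following is a minimal sketch and not part of the documented procedure: active_network_service is a hypothetical helper name, and the check relies on systemctl is-active.

```shell
# active_network_service is a hypothetical helper name.
# It prints the first of the two services that systemd reports as active,
# or "none" if neither is active (or systemctl is unavailable).
active_network_service() {
    for svc in NetworkManager wicked; do
        if systemctl is-active --quiet "$svc" 2>/dev/null; then
            echo "$svc"
            return 0
        fi
    done
    echo "none"
}

active_network_service
```

If the switch succeeded, the function is expected to print NetworkManager. Any other result suggests that the system did not boot into the new snapshot or that the service was not enabled.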

7 NetworkManager configuration and usage #

NetworkManager is shipped so it can run out of the box, but you might need to reconfigure or restart the tool. This chapter focuses on these tasks.

NetworkManager stores all network configuration as a connection, which is a collection of data that describes how to create or connect to a network. These connections are stored as files in the /etc/NetworkManager/system-connections/ directory.
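To make the idea of a connection file more concrete, the sketch below writes a sample profile in NetworkManager's keyfile format to a temporary directory instead of the real one. The profile name ethernet1, the interface eth0, and the .nmconnection file name are illustrative assumptions. On a real system, such files are expected to be owned by root and accessible only to root.

```shell
# Write an illustrative keyfile profile to a scratch directory.
# Nothing here touches /etc/NetworkManager/system-connections/.
profile_dir=$(mktemp -d)
profile="$profile_dir/ethernet1.nmconnection"

cat > "$profile" <<'EOF'
[connection]
id=ethernet1
type=ethernet
interface-name=eth0
autoconnect=false

[ipv4]
method=auto
EOF

# Profiles are expected to be readable and writable by their owner only.
chmod 600 "$profile"

# Show the result, then clean up the scratch directory.
profile_text=$(cat "$profile")
printf '%s\n' "$profile_text"
rm -rf "$profile_dir"
```

Each bracketed group corresponds to a setting, and each key to a property, which mirrors the SETTING.PROPERTY notation used by nmcli.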

A connection is active when a particular device uses the connection. A device can have more than one connection configured, but only one can be active at a given time. The other connections can be used to switch quickly from one connection to another. For example, if the active connection is not available, NetworkManager tries to connect the device to another configured connection.

To manage connections, use the nmcli command, described in Section 7.2, “The nmcli command”.

To change how NetworkManager behaves, change or add values to the configuration file described in Section 7.3, “The NetworkManager.conf configuration file”.

7.1 Starting and stopping NetworkManager #

As NetworkManager is a systemd service, you can use common systemd commands to start, stop, or restart NetworkManager.

To start NetworkManager:

# systemctl start network

To restart NetworkManager:

# systemctl restart network

To stop NetworkManager:

# systemctl stop network

7.2 The nmcli command #

NetworkManager provides a command-line interface to manage your connections. By using the nmcli command, you can connect to a particular network, edit a connection, edit a device, etc. The generic syntax of nmcli is as follows:

# nmcli OPTIONS SUBCOMMAND SUBCOMMAND_ARGUMENTS

where OPTIONS are described in Section 7.2.1, “The nmcli command options” and SUBCOMMAND can be any of the following:

- connection

enables you to configure your network connection. For details, refer to Section 7.2.2, “The connection subcommand”.

- device

enables you to show and manage network interfaces. For details, refer to Section 7.2.3, “The device subcommand”.

- general

shows status and permissions. For details, refer to Section 7.2.4, “The general subcommand”.

- monitor

monitors activity of NetworkManager and watches for changes in the state of connectivity and devices. This subcommand does not take any arguments.

- networking

queries the networking status. For details, refer to Section 7.2.5, “The networking subcommand”.

7.2.1 The nmcli command options #

Besides the subcommands and their arguments, the nmcli command can take the following options:

- -a|--ask

the command stops to ask for any missing arguments, for example, for a password to connect to a network.

- -c|--colors {yes|no|auto}

controls the color output: yes enables the colors, no disables them, and auto creates color output only when the standard output is directed to a terminal.

- -m|--mode {tabular|multiline}

switches between tabular (each line describes a single entry, columns define particular properties of the entry) and multiline (each entry comprises more lines, each property is on its own line). tabular is the default value.

- -h|--help

prints help.

- -w|--wait seconds

sets a time-out period for which to wait for NetworkManager to finish operations. Using this option is recommended for commands that might take longer to complete, for example, connection activation.
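In scripts, it can be convenient to fix a set of options once and reuse them. The following is a minimal sketch under stated assumptions: nm_script and the NM_WAIT variable are hypothetical names, and the NMCLI variable defaults to "echo nmcli" so that the sketch only prints the command line instead of changing the system.

```shell
# NMCLI defaults to "echo nmcli", so the sketch only prints the command line.
# Set NMCLI=nmcli in the environment to execute the command for real.
NMCLI="${NMCLI:-echo nmcli}"

# nm_script is a hypothetical wrapper: multiline output and a bounded wait.
# $NMCLI is intentionally unquoted so that "echo nmcli" splits into two words.
nm_script() {
    $NMCLI --mode multiline --wait "${NM_WAIT:-30}" "$@"
}

nm_script connection show
```

The options are placed before the subcommand, which matches the generic syntax nmcli OPTIONS SUBCOMMAND SUBCOMMAND_ARGUMENTS.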

7.2.2 The connection subcommand #

The connection subcommand enables you to manage connections or view any information about particular connections. The nmcli connection command provides the following commands to manage your network connections:

- show

lists connections:

# nmcli connection show [--active]

When --active is specified, only the active profiles are displayed. The default is to display all configured profiles, whether they are active or not.

You can also use this command to show details about a specified connection:

# nmcli connection show CONNECTION_ID

where CONNECTION_ID is any of the identifiers: a connection name, UUID, or a path.

- up

activates the provided connection. Use the command to reload a connection. Also run this command after you perform any change to the connection.

# nmcli connection up CONNECTION_ID

- down

deactivates a connection.

# nmcli connection down CONNECTION_ID

where CONNECTION_ID is any of the identifiers: a connection name, UUID, or a path.

If you deactivate the connection, it will not reconnect later even if it has the autoconnect flag.

- modify

changes or deletes a property of a connection.

# nmcli connection modify CONNECTION_ID SETTING.PROPERTY PROPERTY_VALUE

where:

CONNECTION_ID is any of the identifiers: a connection name, UUID, or a path

SETTING.PROPERTY is the name of the property, for example, ipv4.addresses

PROPERTY_VALUE is the desired value of SETTING.PROPERTY

The following example deactivates the autoconnect option on the ethernet1 connection:

# nmcli connection modify ethernet1 connection.autoconnect no

- add

adds a connection with the provided details. The command syntax is similar to the modify command:

# nmcli connection add [save YES|NO] SETTING.PROPERTY PROPERTY_VALUE

You should at least specify a connection.type or use type. The following example adds an Ethernet connection tied to the eth0 interface with DHCP, and disables the connection's autoconnect flag:

# nmcli connection add type ethernet autoconnect no ifname eth0

- edit

edits an existing connection using an interactive editor.

# nmcli connection edit CONNECTION_ID

- clone

clones an already existing connection. The minimal syntax follows:

# nmcli connection clone CONNECTION_ID NEW_NAME

where CONNECTION_ID is the connection to be cloned.

- delete

deletes an existing connection:

# nmcli connection delete CONNECTION_ID

- monitor

monitors the provided connection. Each time the connection changes, NetworkManager prints a line.

# nmcli connection monitor CONNECTION_ID

- reload

reloads all connection files from the disk. As NetworkManager does not monitor changes performed to the connection files, you need to use this command whenever you make changes to the files. This command does not take any further subcommands.

- load

loads or reloads a particular connection file:

# nmcli connection load CONNECTION_FILE
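The commands above are typically combined into a short workflow: add a profile, adjust it, activate it, and inspect the result. The following sketch illustrates this under stated assumptions: the profile name office-static, the interface eth0, and all addresses (taken from the 192.0.2.0/24 documentation range) are placeholders, and static_profile_demo is a hypothetical function name. By default the sketch only prints the commands, because NMCLI defaults to "echo nmcli".

```shell
# NMCLI defaults to "echo nmcli", so the commands are printed, not executed.
# Set NMCLI=nmcli (and run as root) to apply them for real.
NMCLI="${NMCLI:-echo nmcli}"

static_profile_demo() {
    con="office-static"   # hypothetical profile name
    dev="eth0"            # interface name; adjust to your system

    # 1. Create a profile with a static IPv4 address.
    $NMCLI connection add type ethernet con-name "$con" ifname "$dev" \
        ipv4.method manual ipv4.addresses 192.0.2.10/24 ipv4.gateway 192.0.2.1

    # 2. Change a property afterwards, here the DNS server.
    $NMCLI connection modify "$con" ipv4.dns 192.0.2.53

    # 3. Activate the profile so that the changes take effect.
    $NMCLI connection up "$con"

    # 4. Inspect the result.
    $NMCLI connection show "$con"
}

static_profile_demo
```

Running connection up after modify follows the advice given for the up command: changes to a profile take effect when the connection is activated again.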

7.2.3 The device subcommand #

The device subcommand enables you to show and manage network interfaces. The nmcli device command recognizes the following commands:

- status

prints the status of all devices.

# nmcli device status

- show

shows detailed information about a device. If no device is specified, all devices are displayed.

# nmcli device show [DEVICE_NAME]

- connect

connects a device. NetworkManager tries to find a suitable connection that will be activated. If there is no compatible connection, a new profile is created.

# nmcli device connect DEVICE_NAME

- modify

performs temporary changes to the configuration that is active on the particular device. The changes are not stored in the connection profile.

# nmcli device modify DEVICE_NAME [+|-] SETTING.PROPERTY VALUE

For possible SETTING.PROPERTY values, refer to nm-settings-nmcli(5).

The example below enables IPv4 connection sharing on the device con1.

# nmcli dev modify con1 ipv4.method shared

- disconnect

disconnects a device and prevents the device from automatically activating further connections without manual intervention.

# nmcli device disconnect DEVICE_NAME

- delete

deletes the interface from the system. You can use the command to delete only software devices like bonds and bridges. You cannot delete hardware devices with this command.

# nmcli device delete DEVICE_NAME

- wifi

lists all available access points.

# nmcli device wifi

- wifi connect

connects to a Wi-Fi network specified by its SSID or BSSID. The command takes the following options:

password - password for secured networks

ifname - interface that will be used for activation

name - you can give the connection a name