- About this guide

- I Ceph Dashboard

- II Cluster Operation

- 12 Determine the cluster state

- 13 Operational tasks

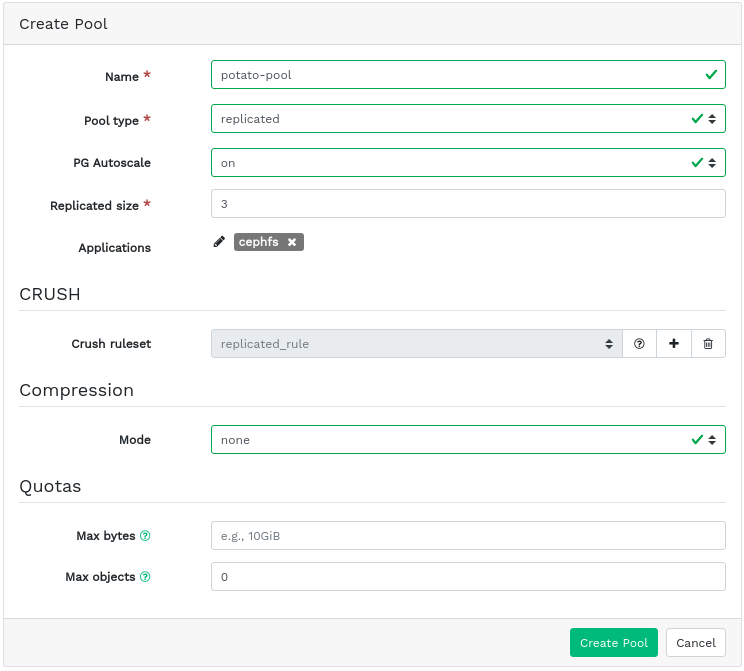

- 13.1 Modifying the cluster configuration

- 13.2 Adding nodes

- 13.3 Removing nodes

- 13.4 OSD management

- 13.5 Moving the Salt Master to a new node

- 13.6 Updating the cluster nodes

- 13.7 Updating Ceph

- 13.8 Halting or rebooting cluster

- 13.9 Removing an entire Ceph cluster

- 13.10 Offline container management

- 13.11 Refreshing expired SSL certificates

- 14 Operation of Ceph services

- 15 Backup and restore

- 16 Monitoring and alerting

- III Storing Data in a Cluster

- IV Accessing Cluster Data

- 21 Ceph Object Gateway

- 21.1 Object Gateway restrictions and naming limitations

- 21.2 Deploying the Object Gateway

- 21.3 Operating the Object Gateway service

- 21.4 Configuration options

- 21.5 Managing Object Gateway access

- 21.6 HTTP front-ends

- 21.7 Enable HTTPS/SSL for Object Gateways

- 21.8 Synchronization modules

- 21.9 LDAP authentication

- 21.10 Bucket index sharding

- 21.11 OpenStack Keystone integration

- 21.12 Pool placement and storage classes

- 21.13 Multisite Object Gateways

- 22 Ceph iSCSI gateway

- 23 Clustered file system

- 24 Export Ceph data via Samba

- 25 NFS Ganesha

- 21 Ceph Object Gateway

- V Integration with Virtualization Tools

- VI Configuring a Cluster

- A Ceph maintenance updates based on upstream 'Pacific' point releases

- Glossary

- 2.1 Ceph Dashboard login screen

- 2.2 Notification about a new SUSE Enterprise Storage release

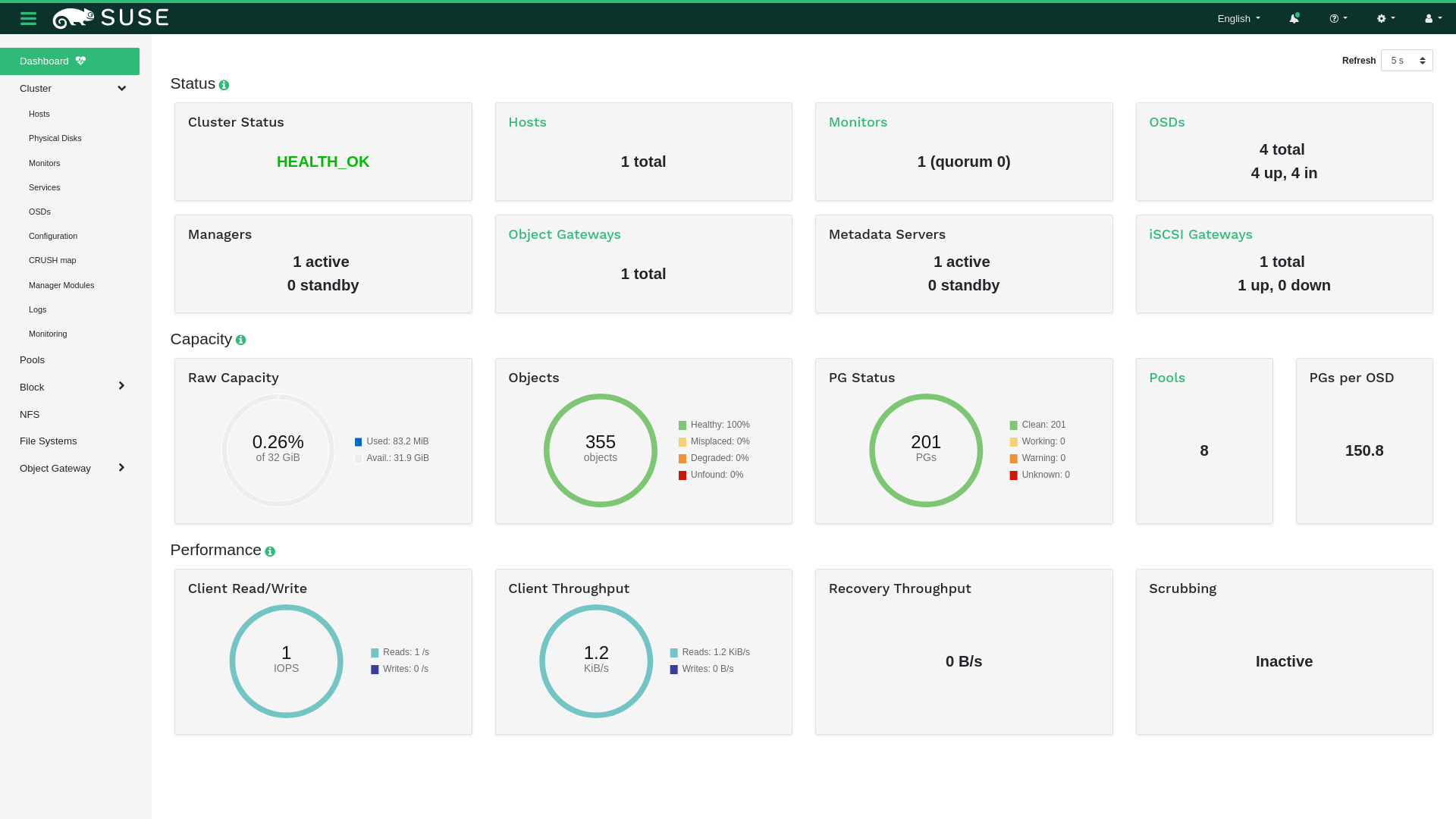

- 2.3 Ceph Dashboard home page

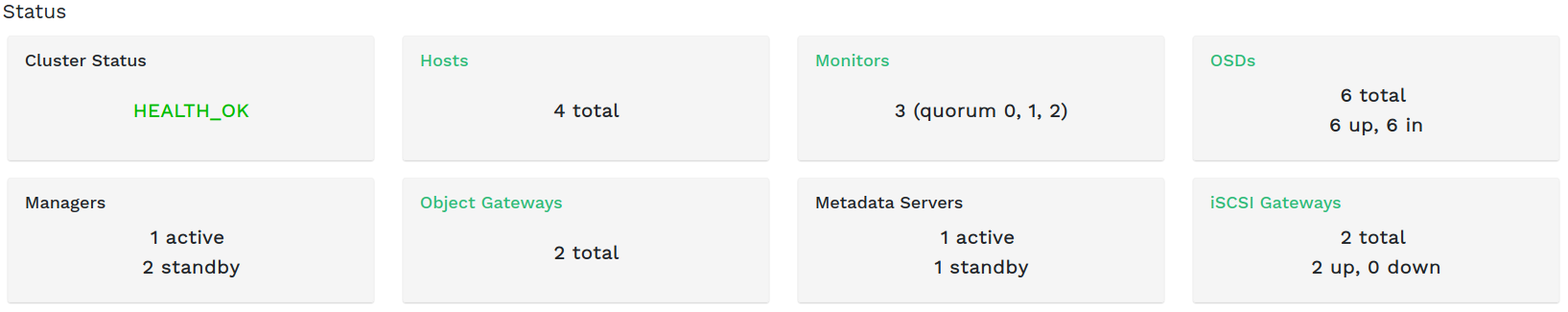

- 2.4 Status widgets

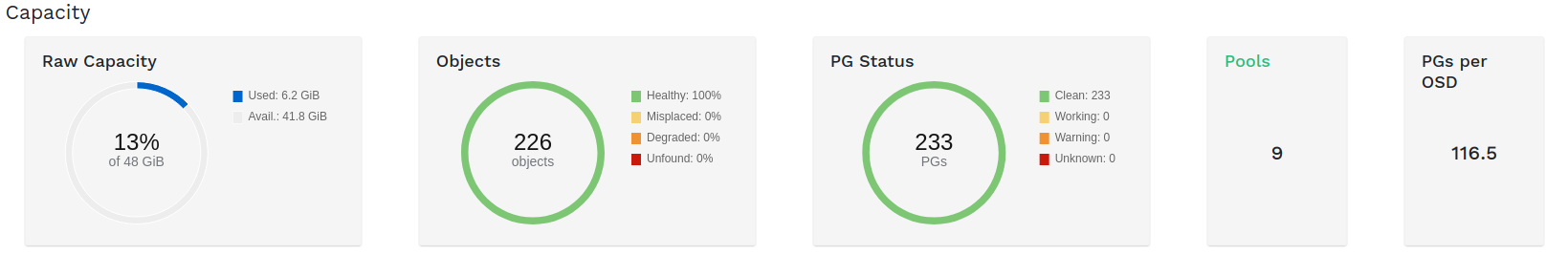

- 2.5 Capacity widgets

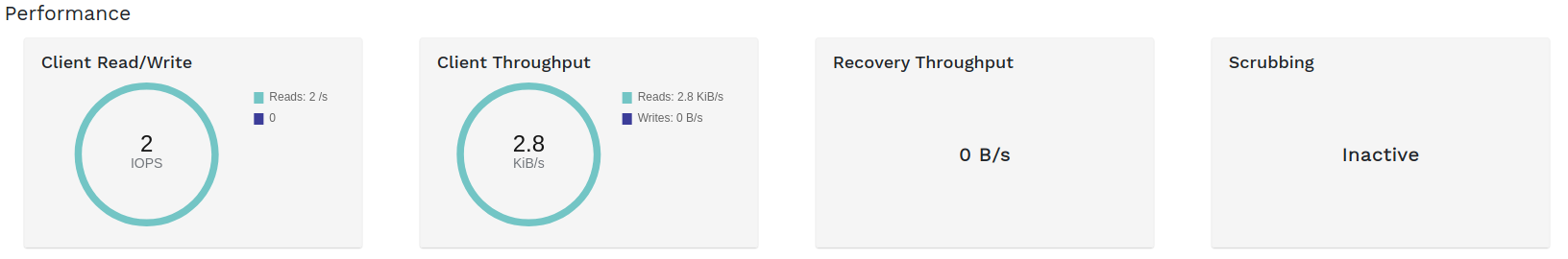

- 2.6 performance widgets

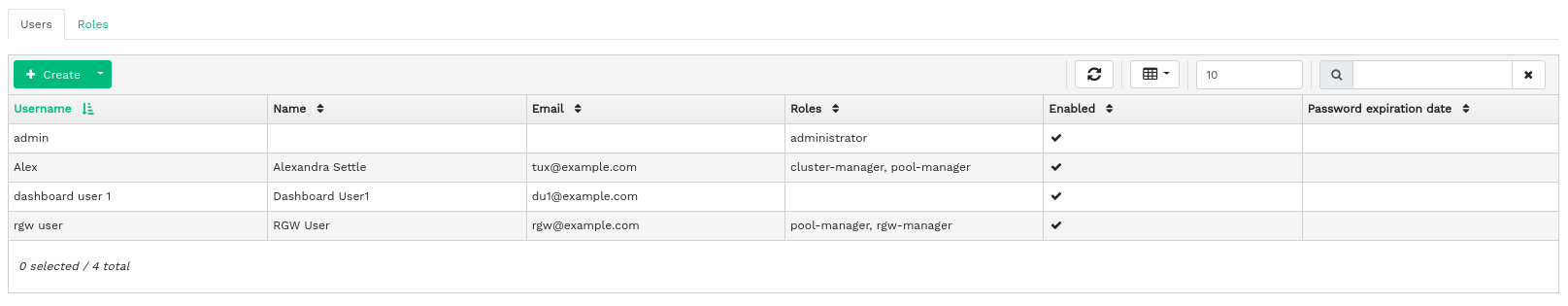

- 3.1 User management

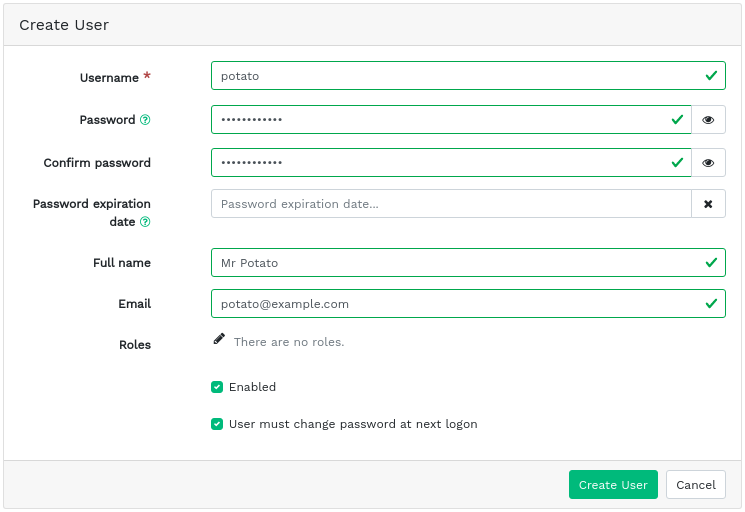

- 3.2 Adding a user

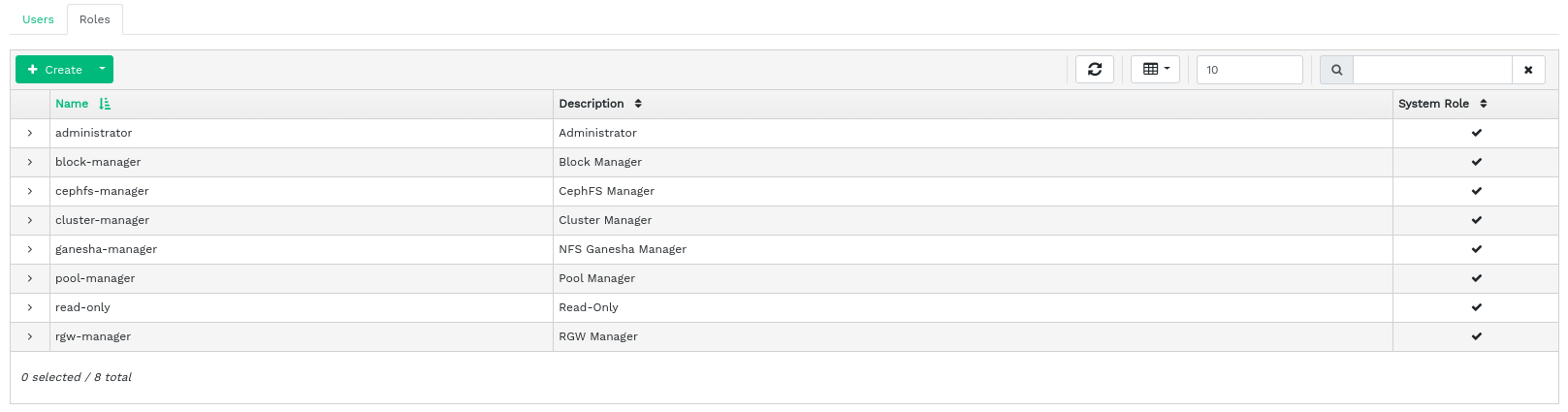

- 3.3 User roles

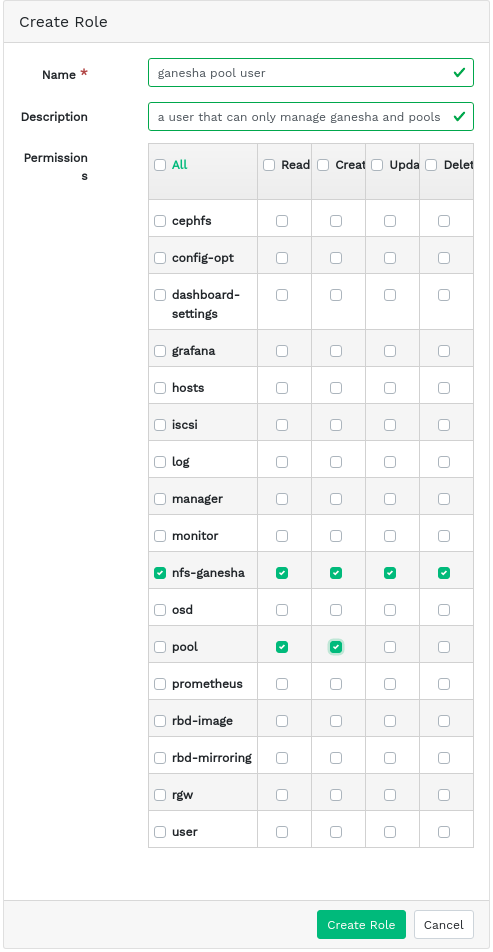

- 3.4 Adding a role

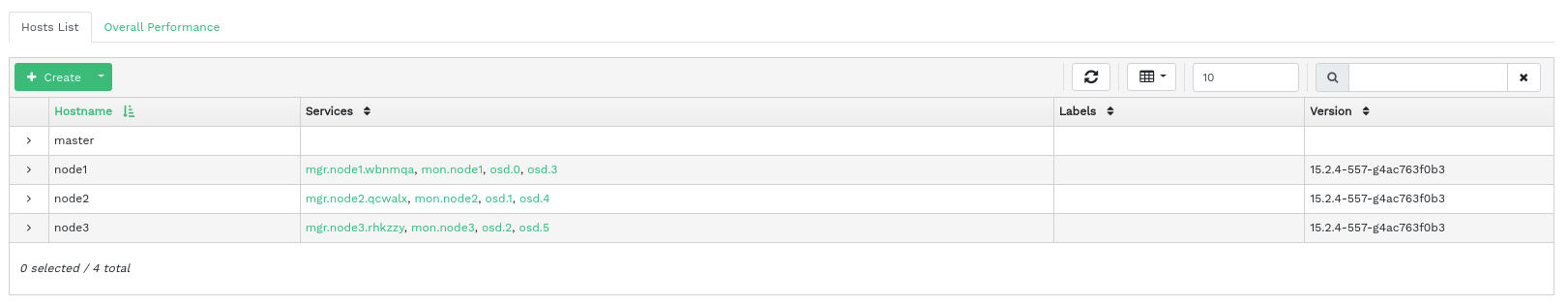

- 4.1 Hosts

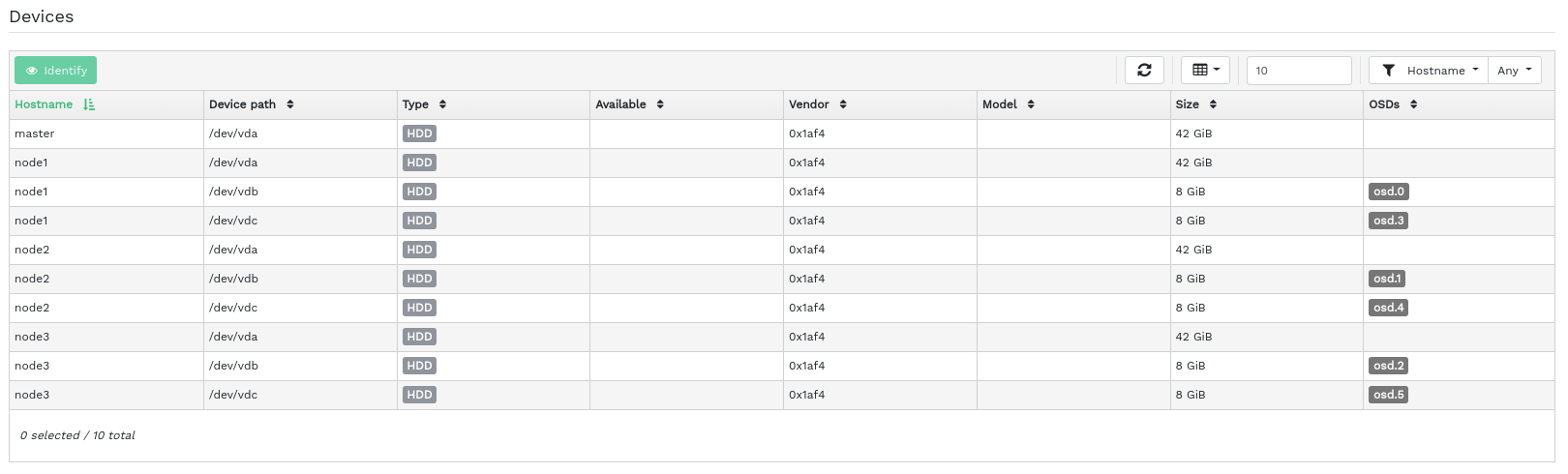

- 4.2 Physical disks

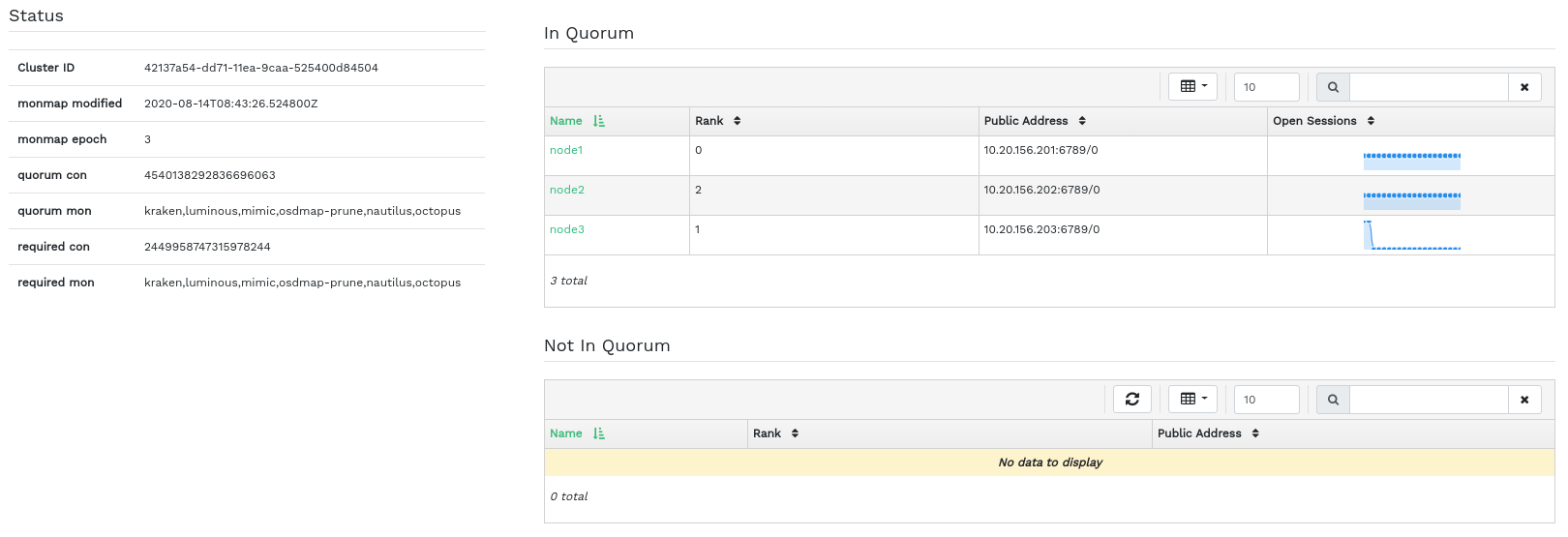

- 4.3 Ceph Monitors

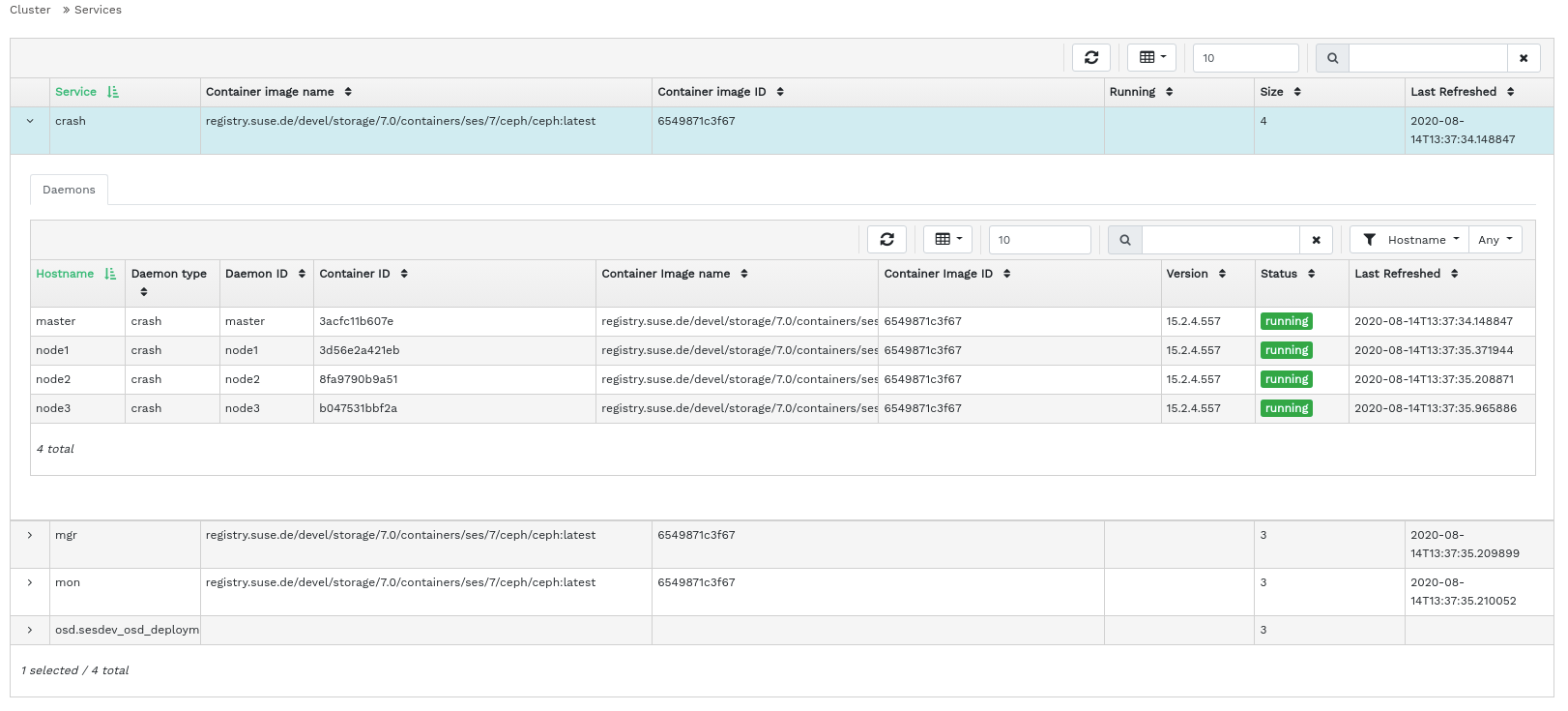

- 4.4 Services

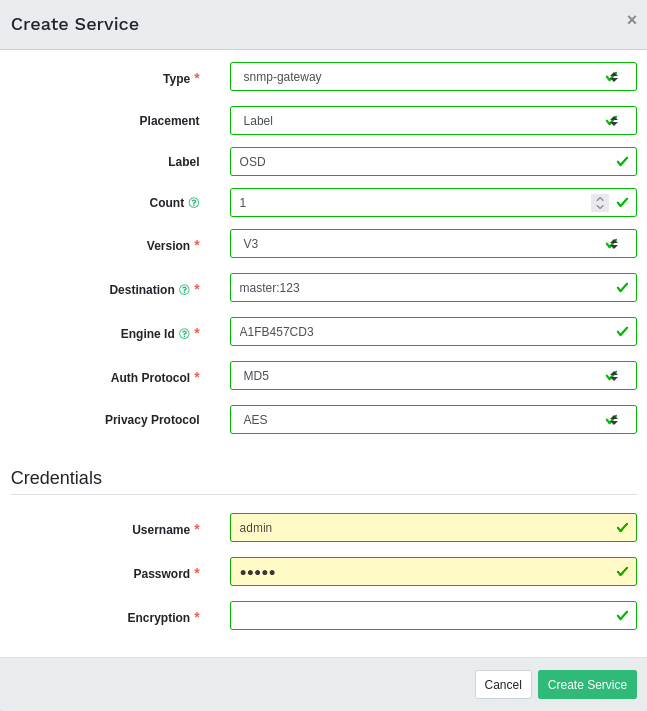

- 4.5 Creating a new cluster service

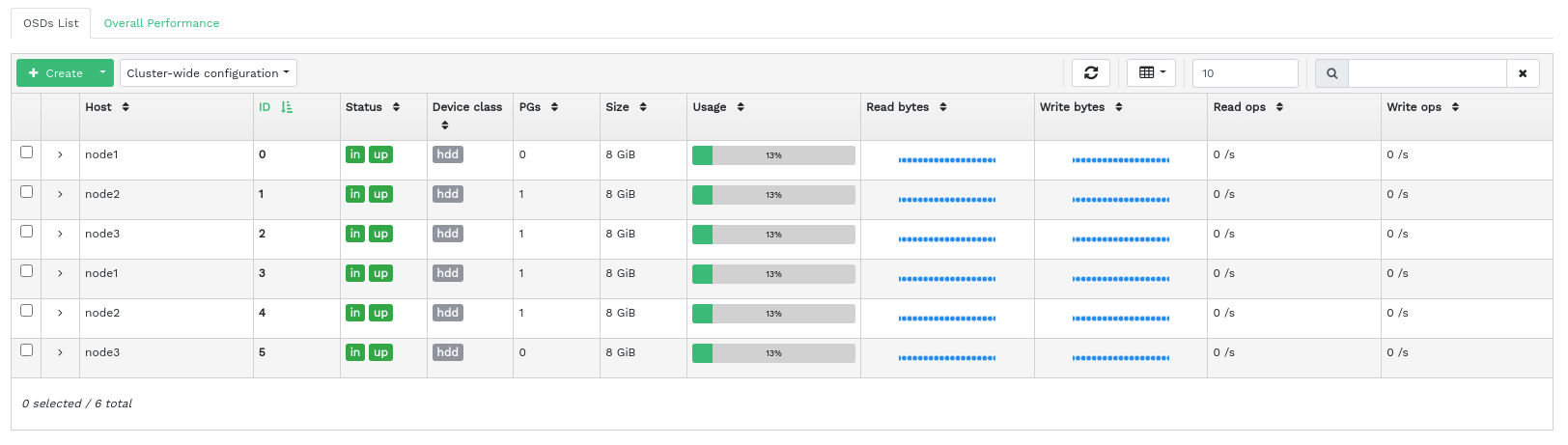

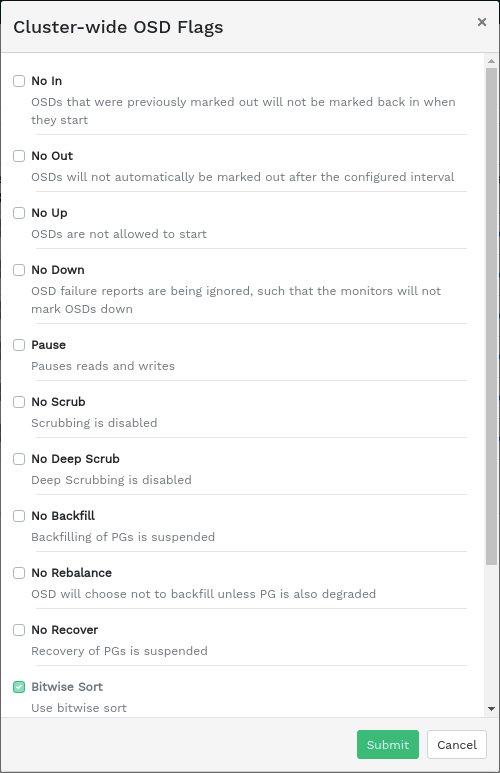

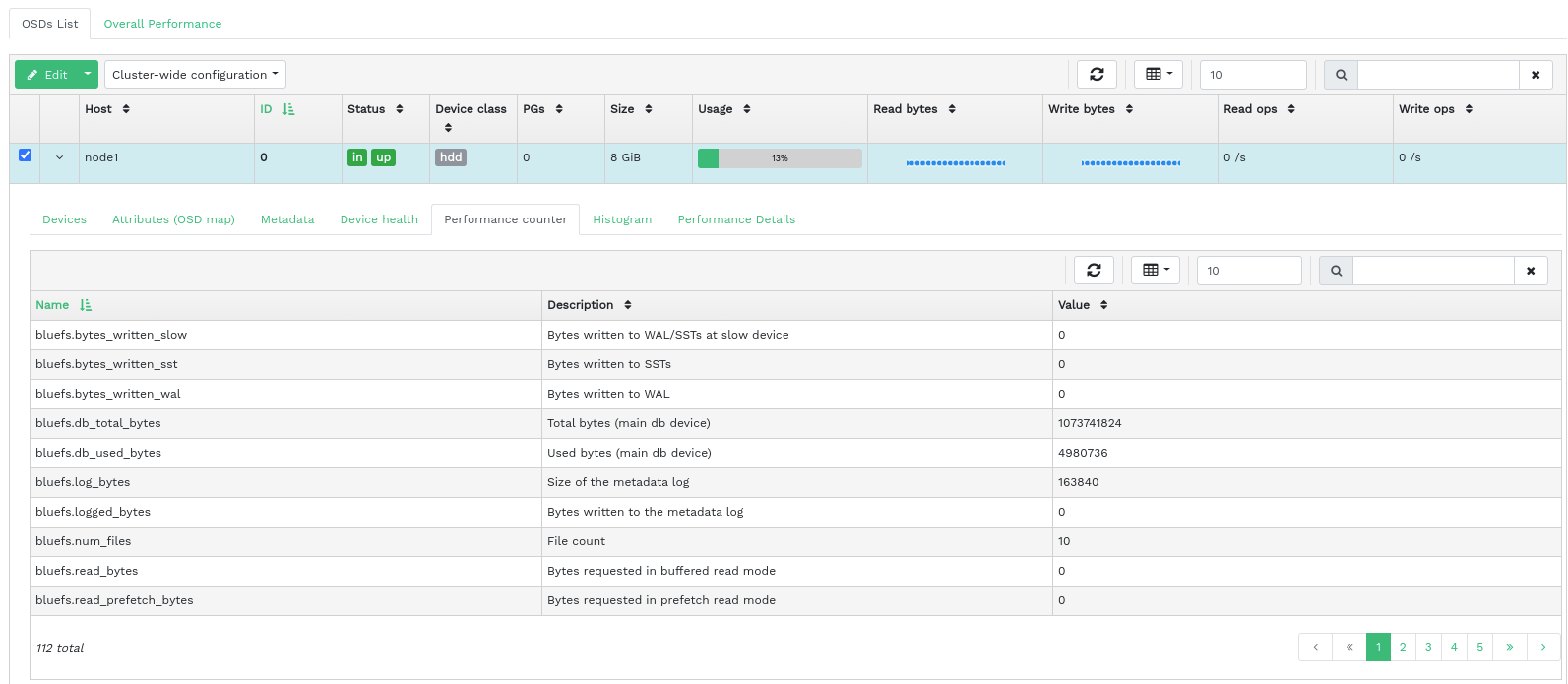

- 4.6 Ceph OSDs

- 4.7 OSD flags

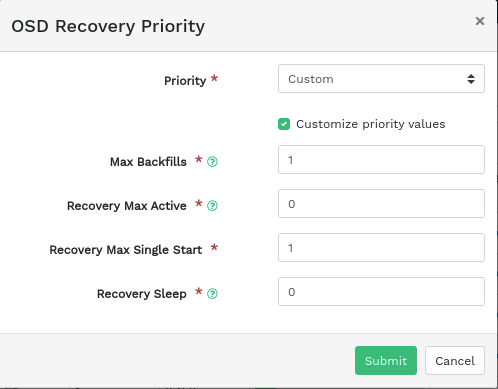

- 4.8 OSD recovery priority

- 4.9 OSD details

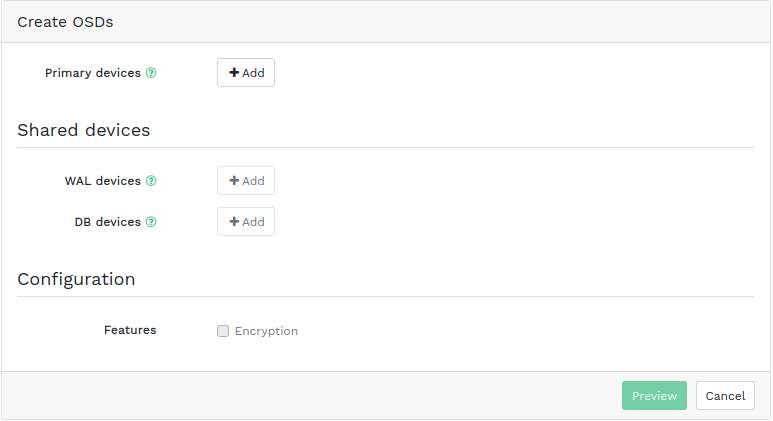

- 4.10 Create OSDs

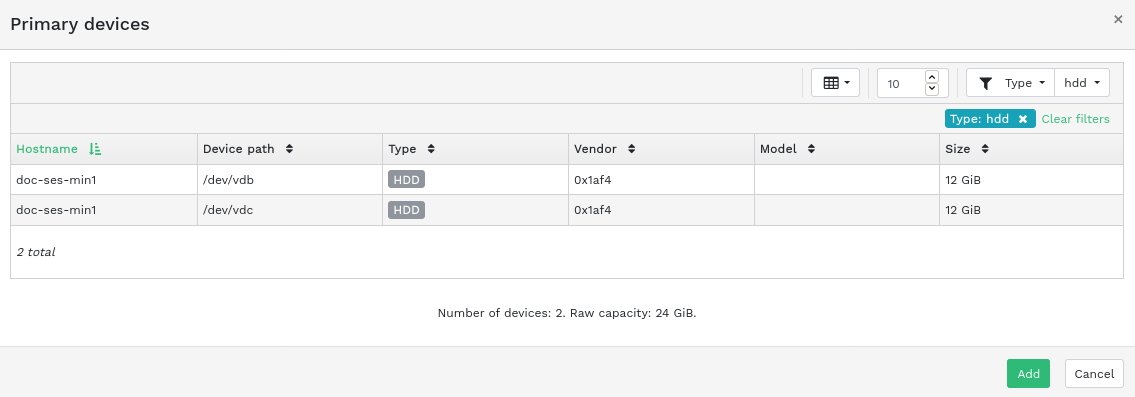

- 4.11 Adding primary devices

- 4.12 Create OSDs with primary devices added

- 4.13

- 4.14 Newly added OSDs

- 4.15 Cluster configuration

- 4.16 CRUSH Map

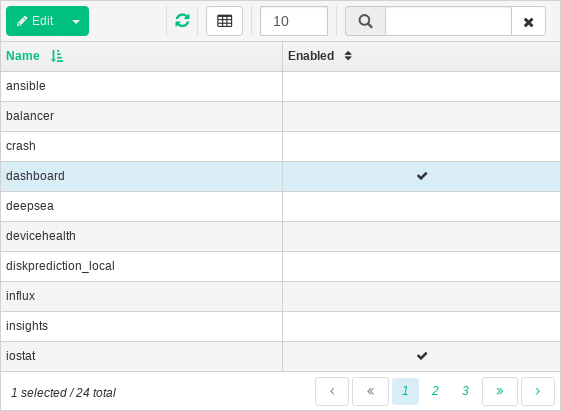

- 4.17 Manager modules

- 4.18 Logs

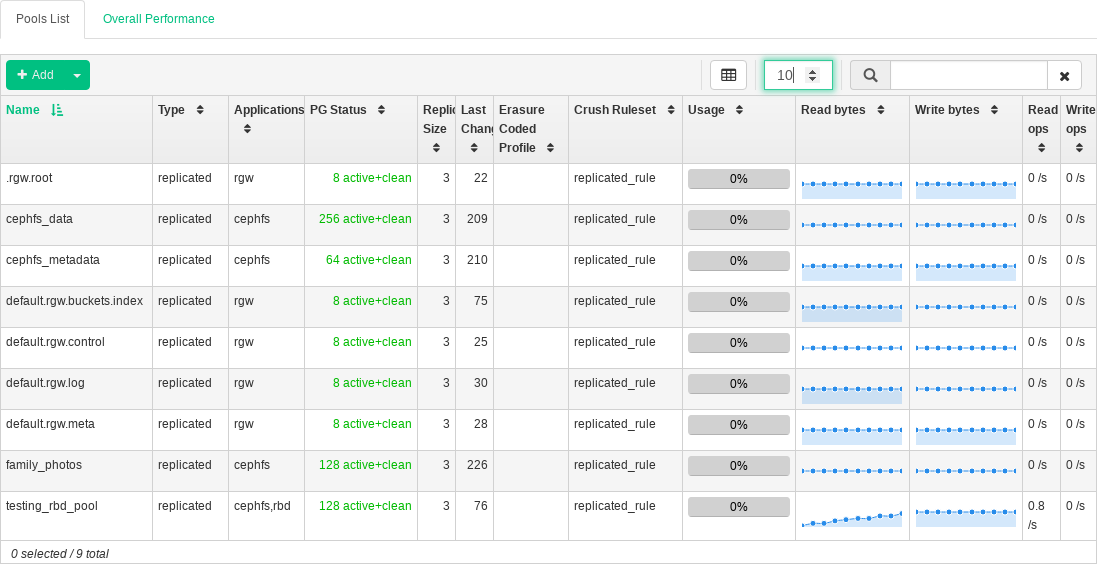

- 5.1 List of pools

- 5.2 Adding a new pool

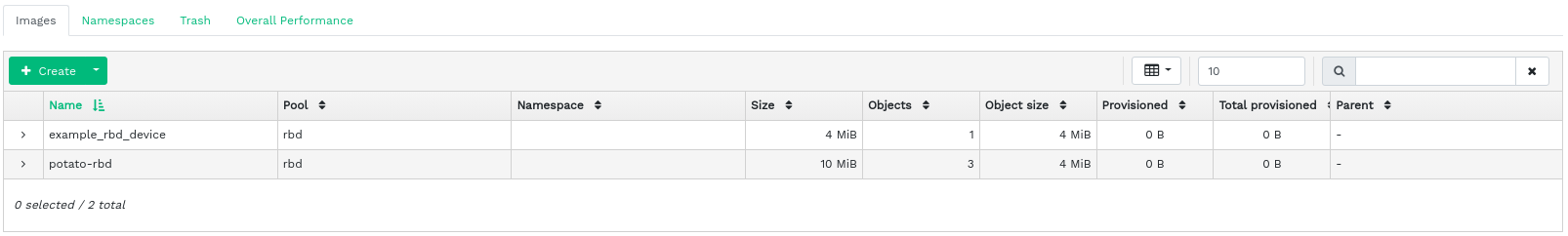

- 6.1 List of RBD images

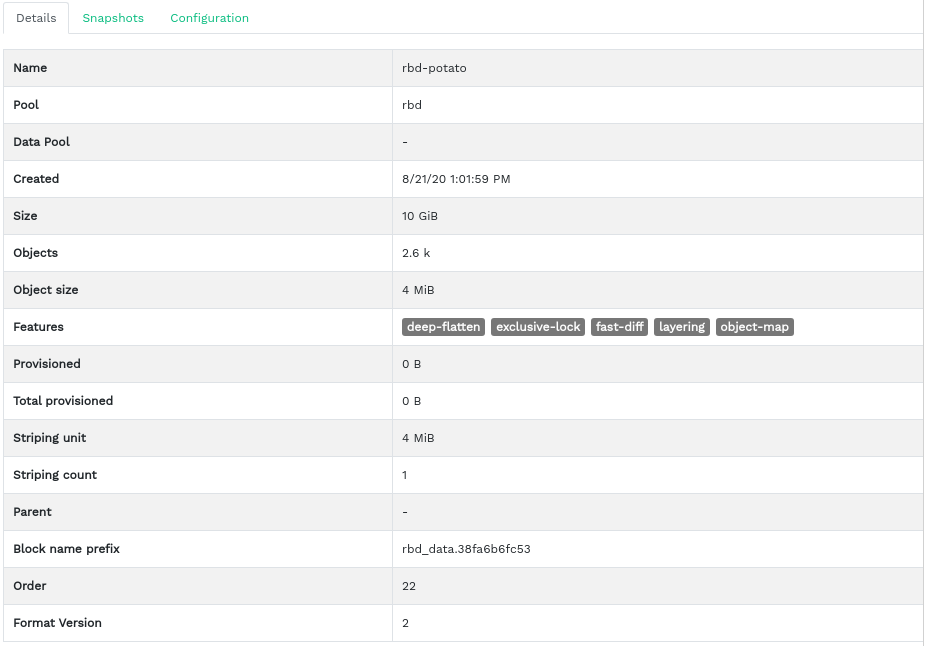

- 6.2 RBD details

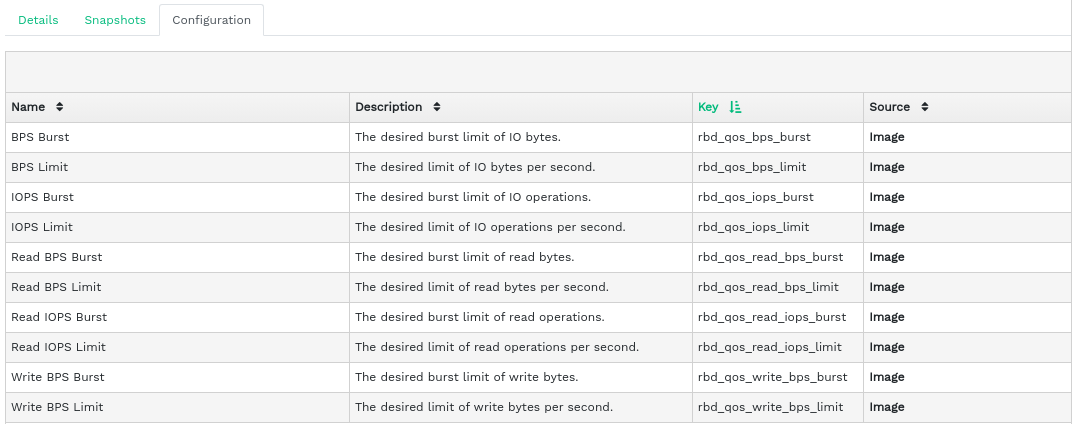

- 6.3 RBD configuration

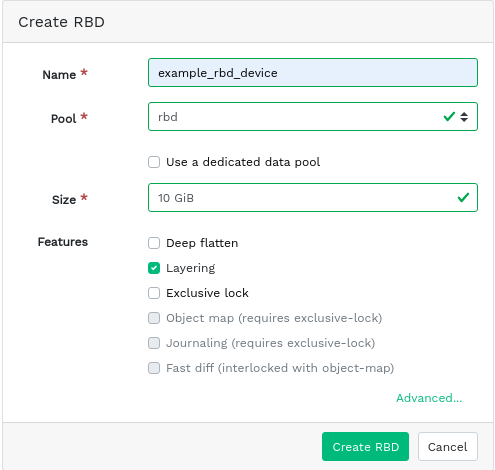

- 6.4 Adding a new RBD

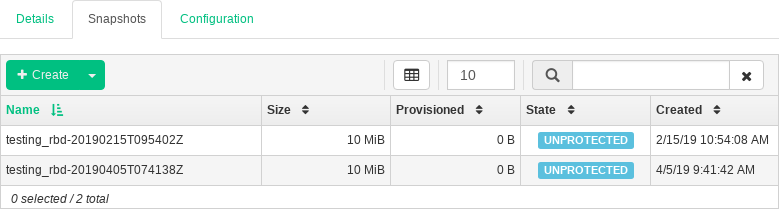

- 6.5 RBD snapshots

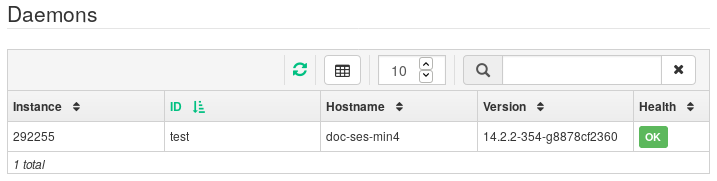

- 6.6 Running

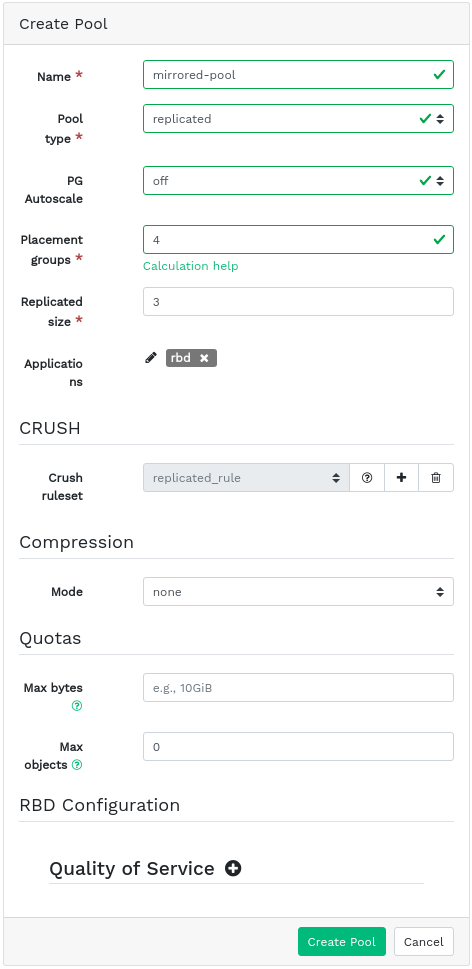

rbd-mirrordaemons - 6.7 Creating a pool with RBD application

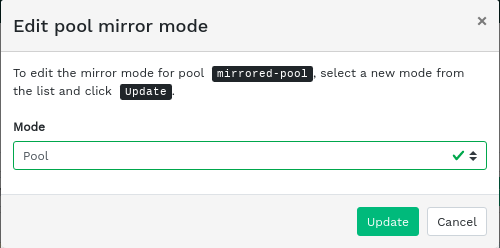

- 6.8 Configuring the replication mode

- 6.9 Adding peer credentials

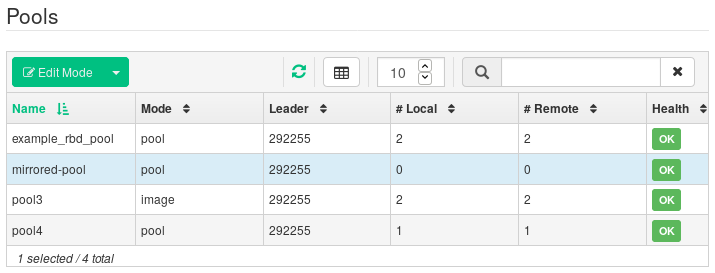

- 6.10 List of replicated pools

- 6.11 New RBD image

- 6.12 New RBD image synchronized

- 6.13 RBD images' replication status

- 6.14 List of iSCSI targets

- 6.15 iSCSI target details

- 6.16 Adding a new target

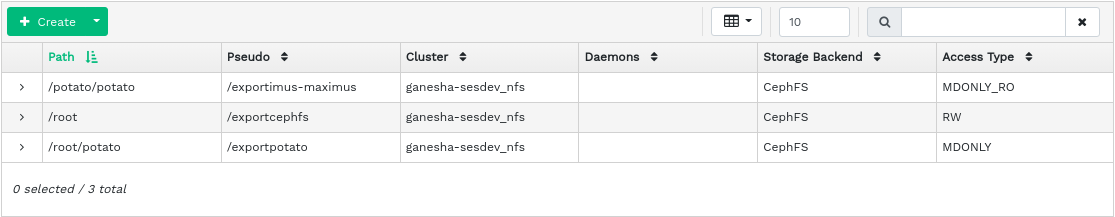

- 7.1 List of NFS exports

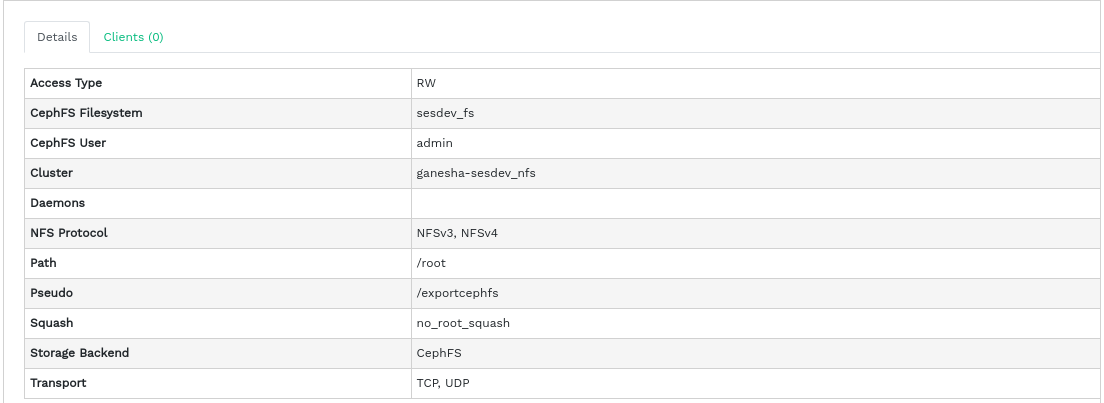

- 7.2 NFS export details

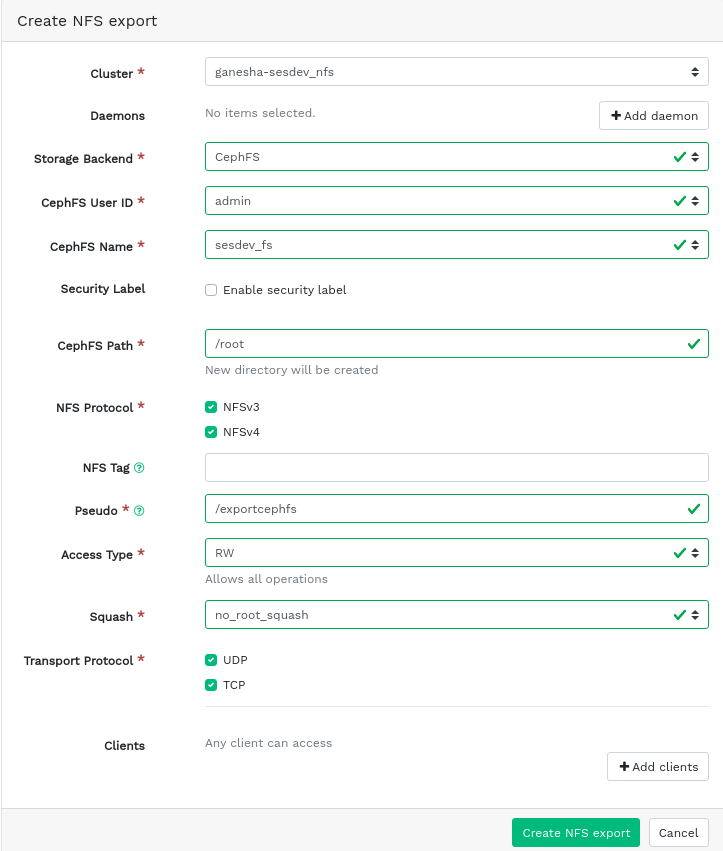

- 7.3 Adding a new NFS export

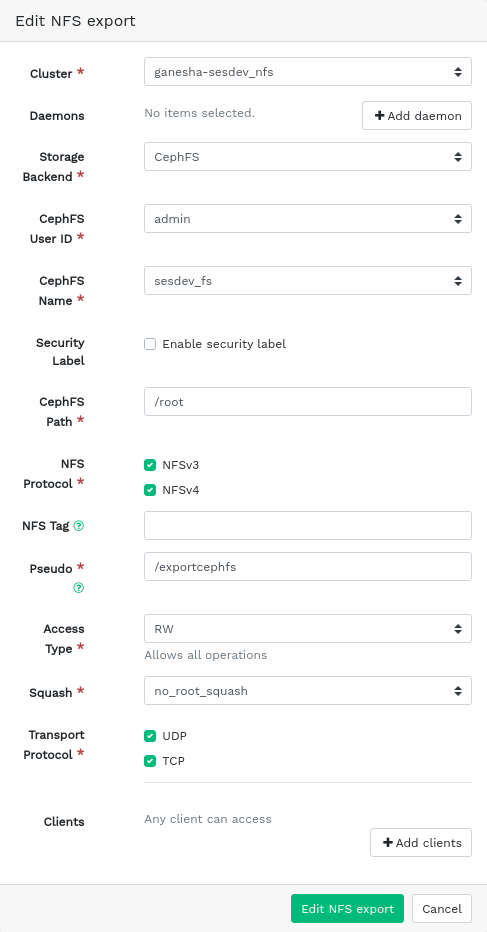

- 7.4 Editing an NFS export

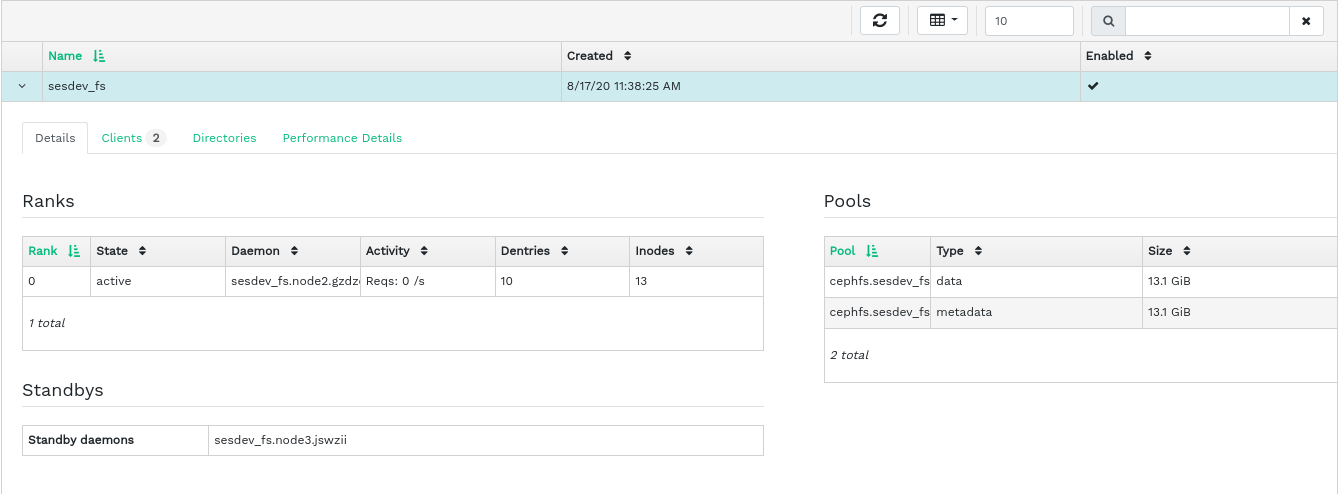

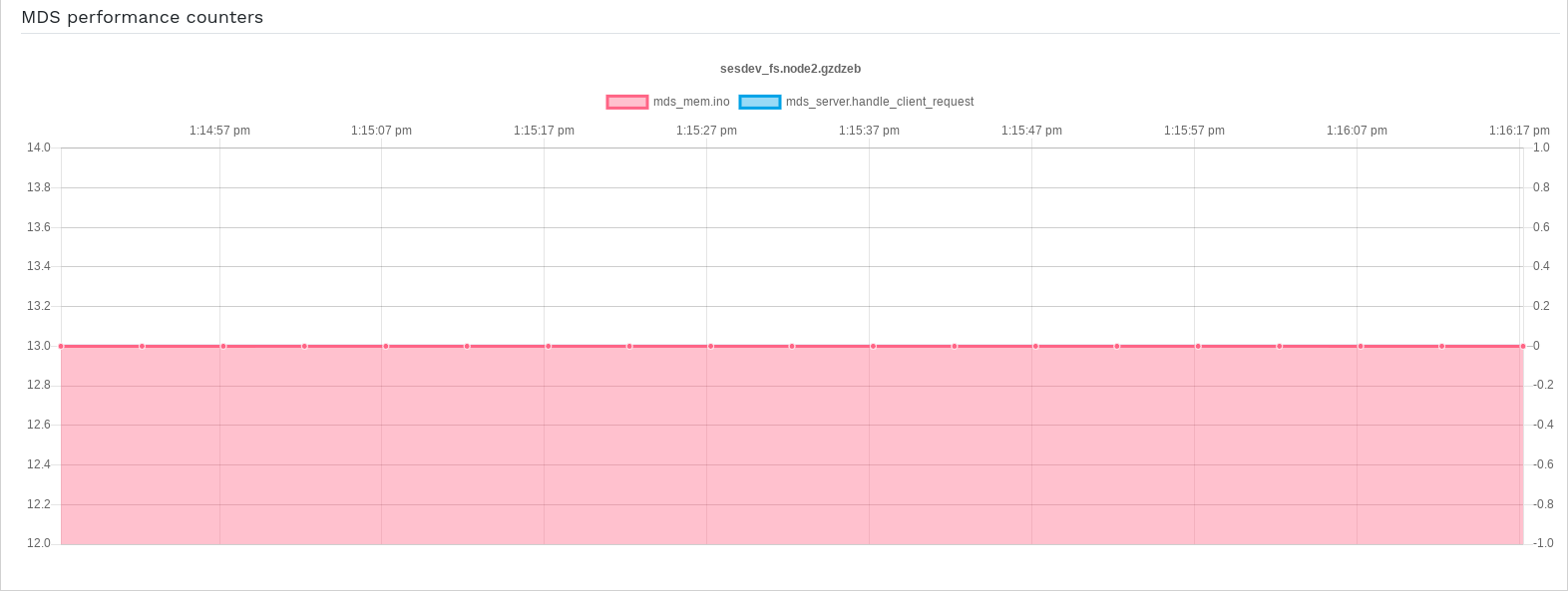

- 8.1 CephFS details

- 8.2 CephFS details

- 9.1 Gateway's details

- 9.2 Gateway users

- 9.3 Adding a new gateway user

- 9.4 Gateway bucket details

- 9.5 Editing the bucket details

- 12.1 Ceph cluster

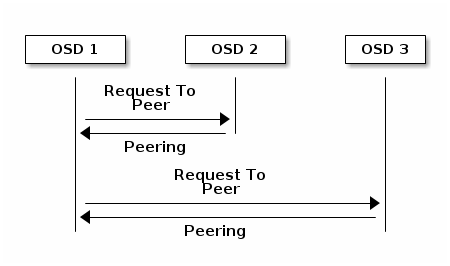

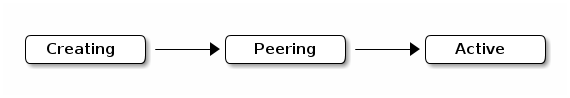

- 12.2 Peering schema

- 12.3 Placement groups status

- 17.1 OSDs with mixed device classes

- 17.2 Example tree

- 17.3 Node replacement methods

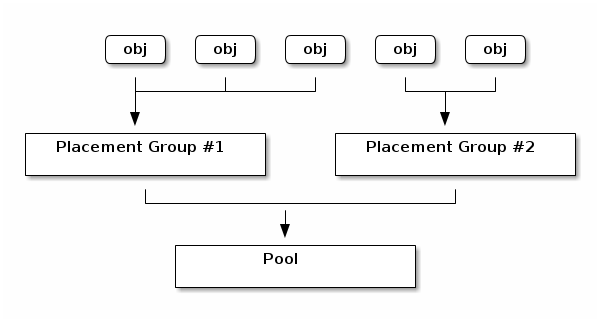

- 17.4 Placement groups in a pool

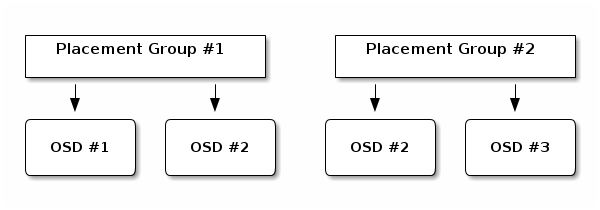

- 17.5 Placement groups and OSDs

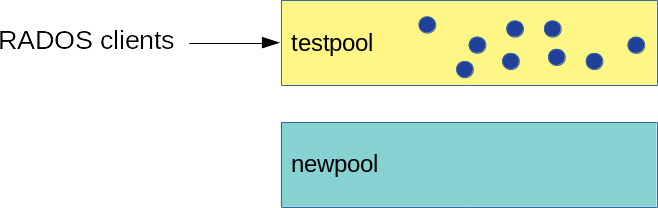

- 18.1 Pools before migration

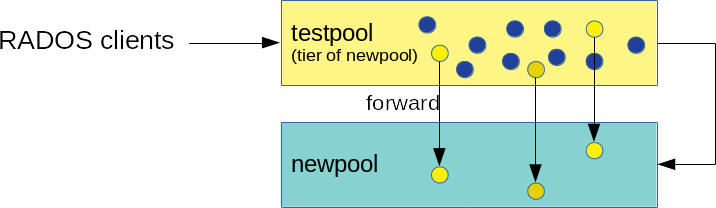

- 18.2 Cache tier setup

- 18.3 Data flushing

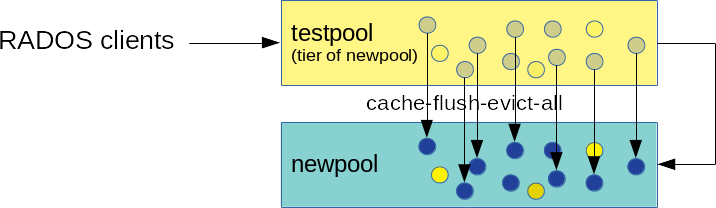

- 18.4 Setting overlay

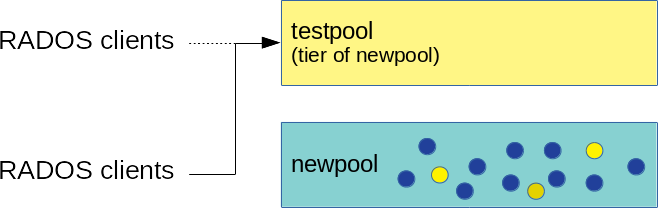

- 18.5 Migration complete

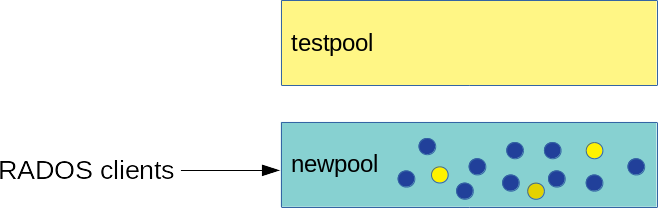

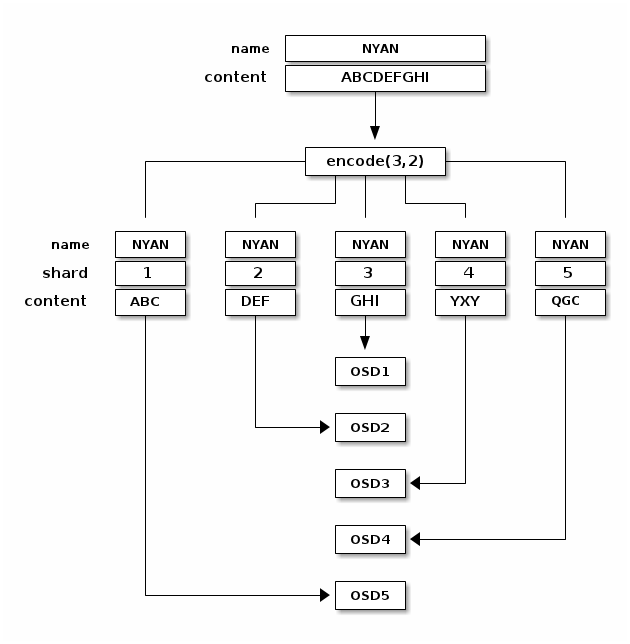

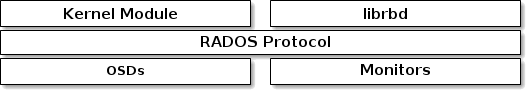

- 20.1 RADOS protocol

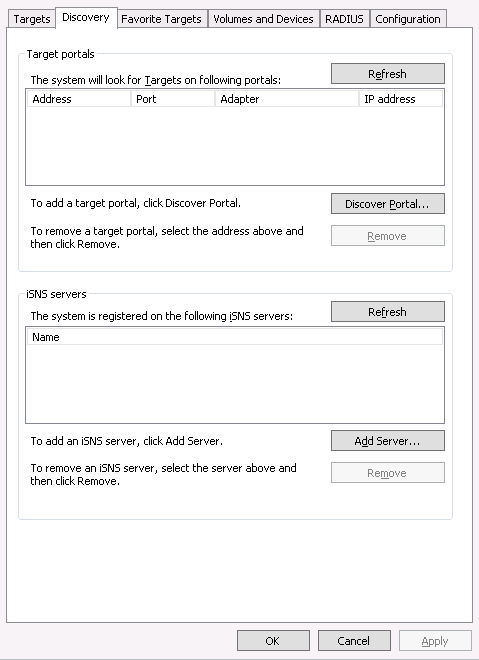

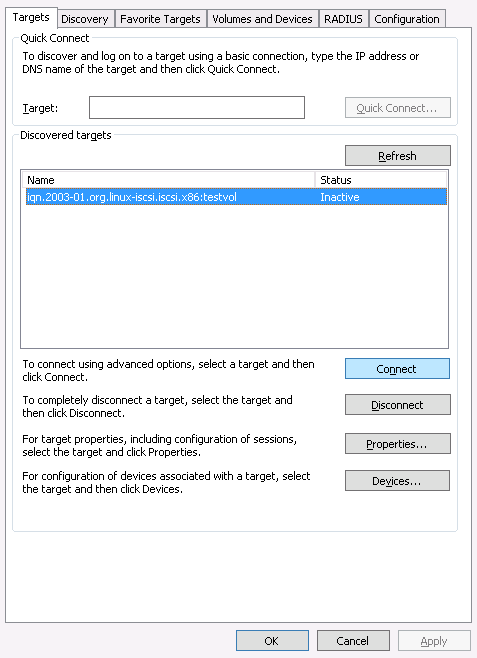

- 22.1 iSCSI initiator properties

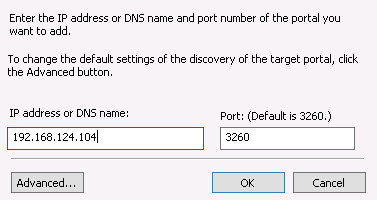

- 22.2 Discover target portal

- 22.3 Target portals

- 22.4 Targets

- 22.5 iSCSI target properties

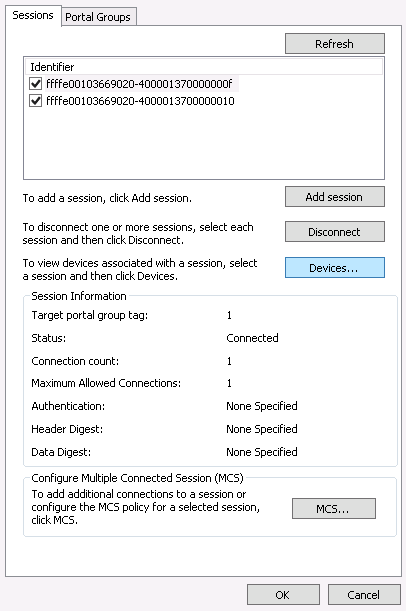

- 22.6 Device details

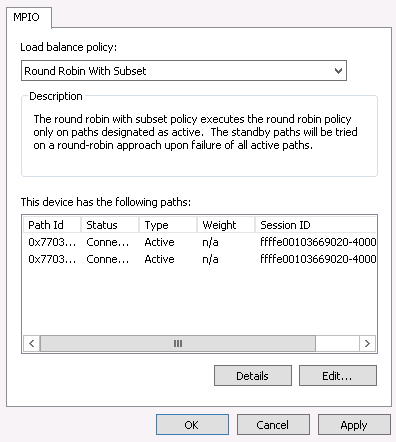

- 22.7 New volume wizard

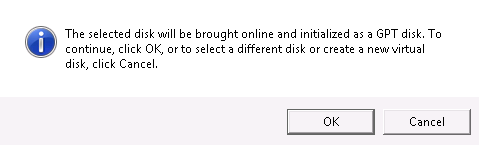

- 22.8 Offline disk prompt

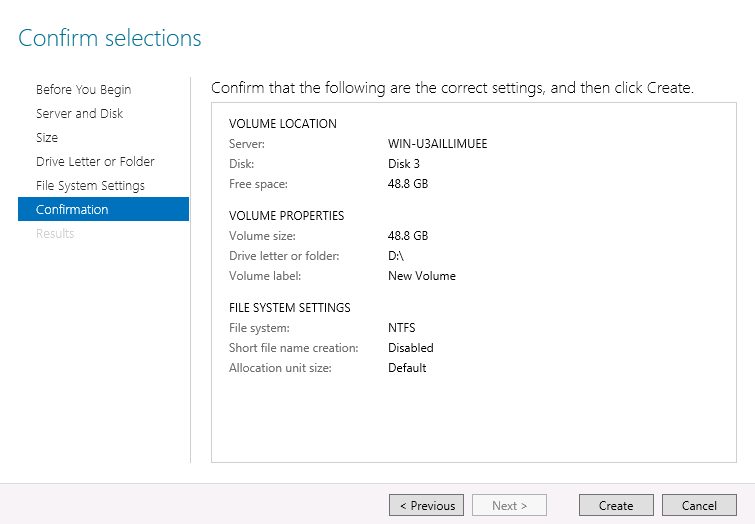

- 22.9 Confirm volume selections

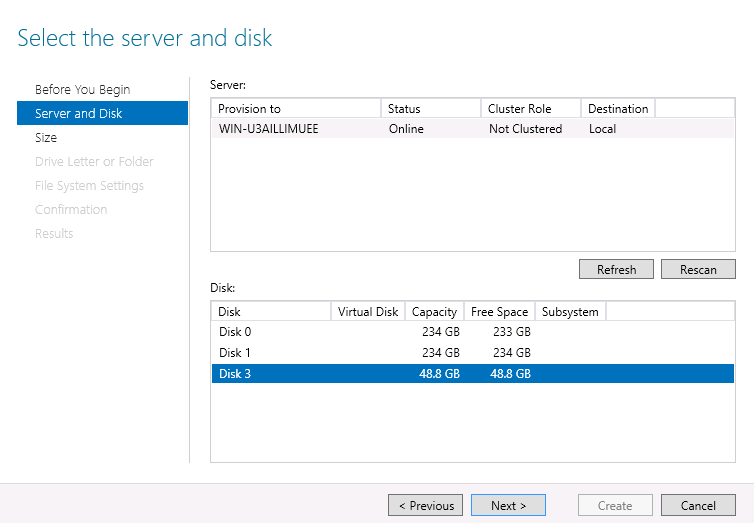

- 22.10 iSCSI initiator properties

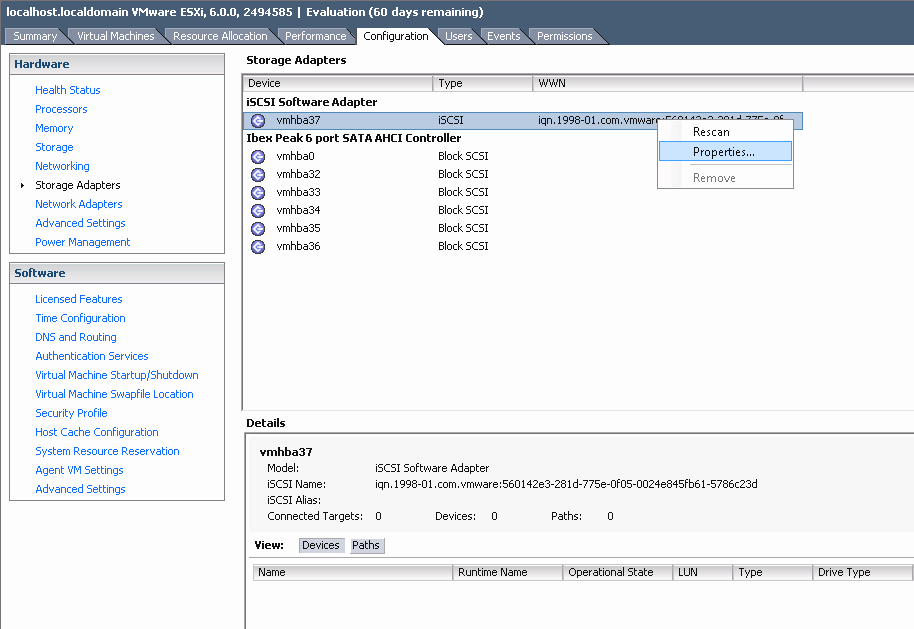

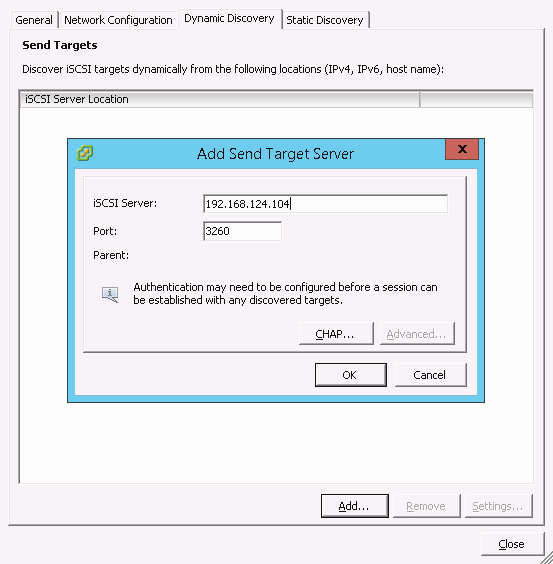

- 22.11 Add target server

- 22.12 Manage multipath devices

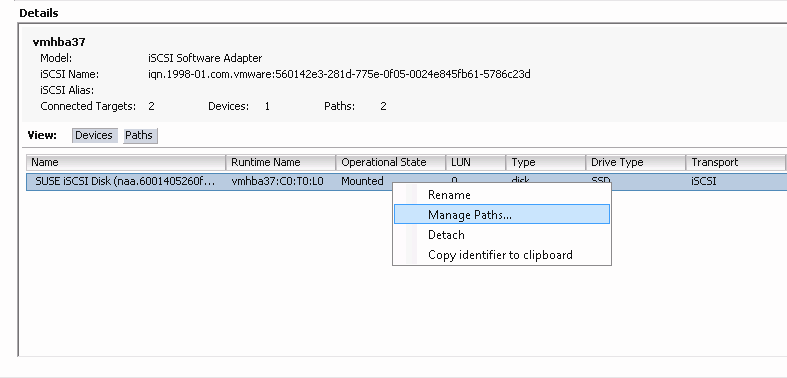

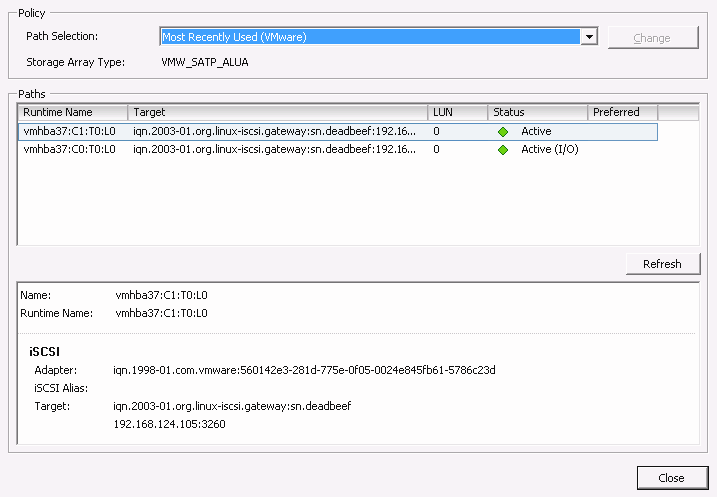

- 22.13 Paths listing for multipath

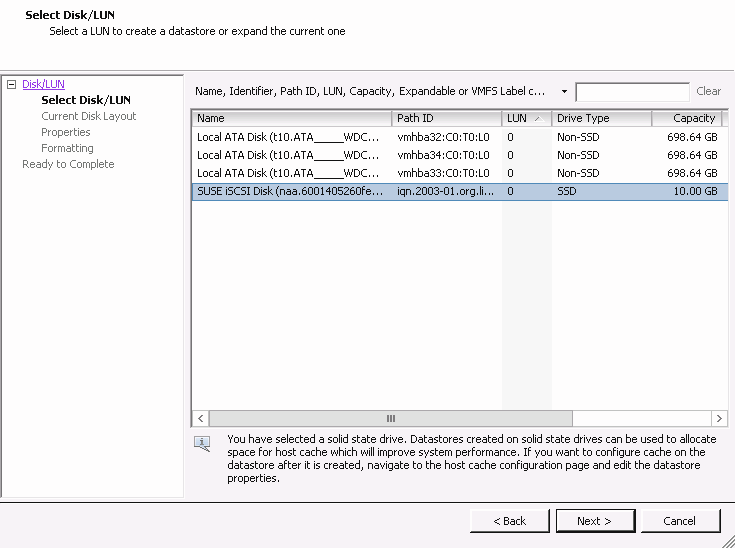

- 22.14 Add storage dialog

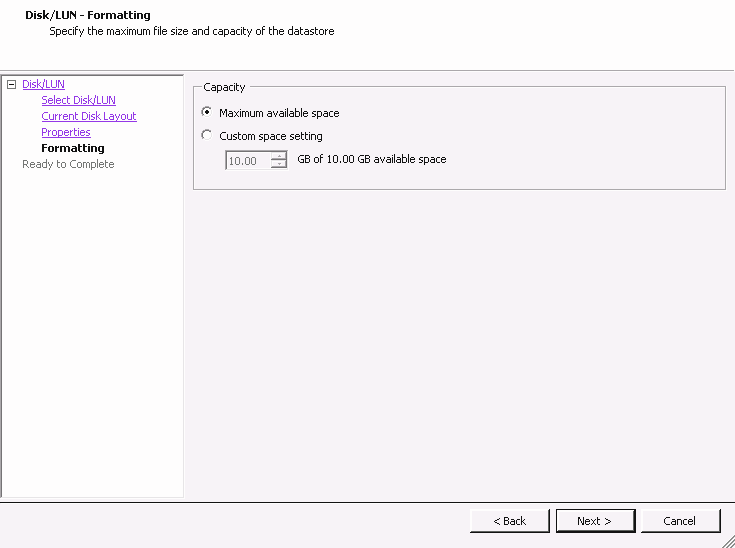

- 22.15 Custom space setting

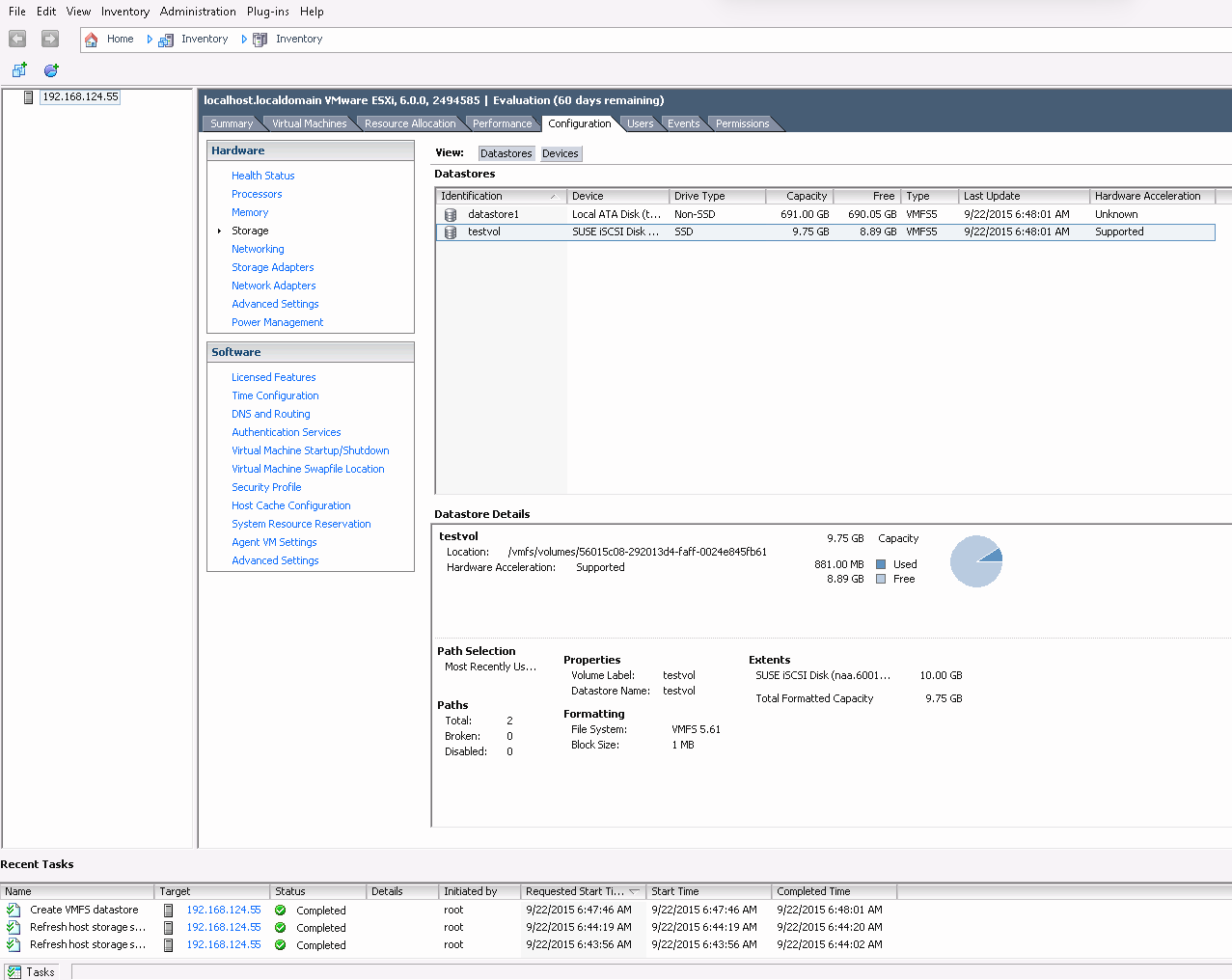

- 22.16 iSCSI datastore overview

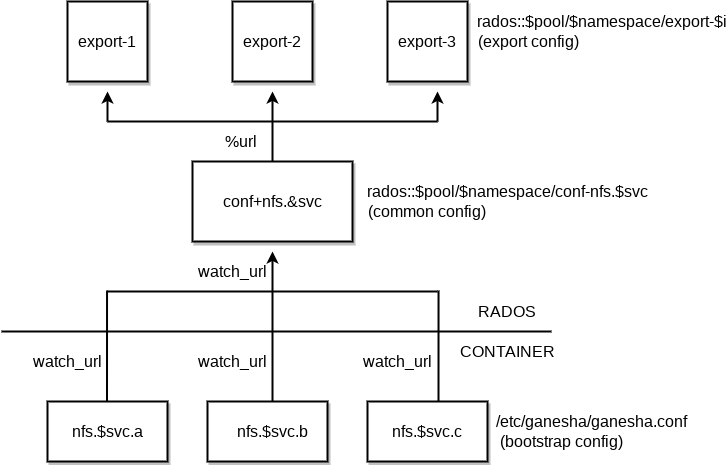

- 25.1 NFS Ganesha structure

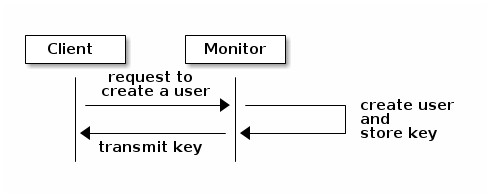

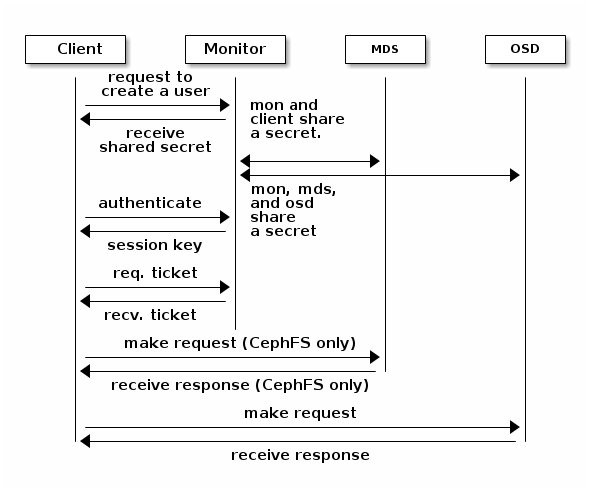

- 30.1 Basic

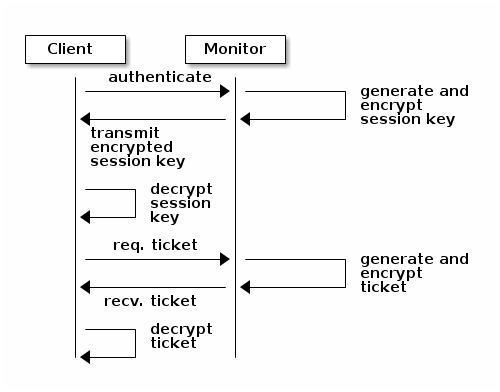

cephxauthentication - 30.2

cephxauthentication - 30.3

cephxauthentication - MDS and OSD

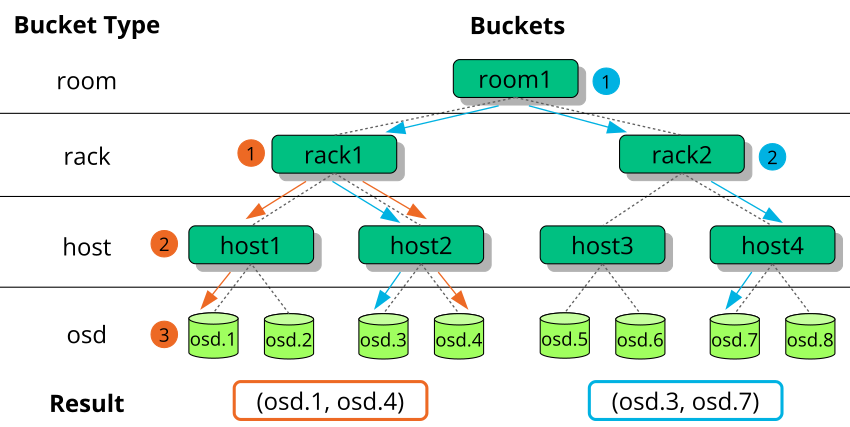

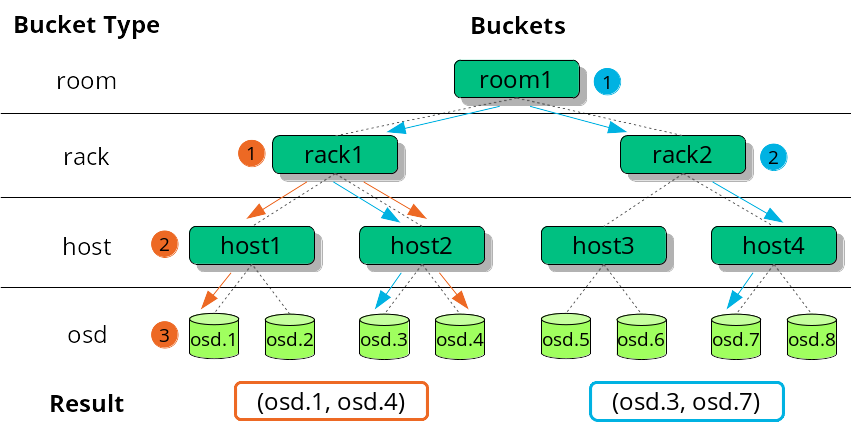

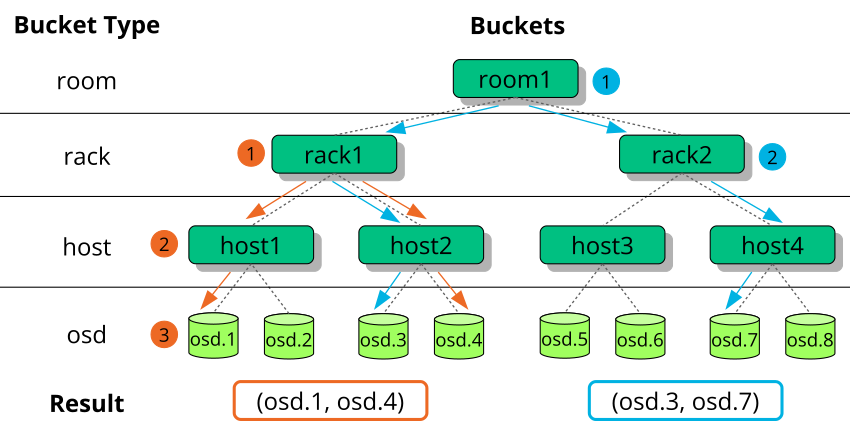

- 12.1 Locating an object

- 13.1 Matching by disk size

- 13.2 Simple setup

- 13.3 Advanced setup

- 13.4 Advanced setup with non-uniform nodes

- 13.5 Expert setup

- 13.6 Complex (and unlikely) setup

- 17.1

crushtool --reclassify-root - 17.2

crushtool --reclassify-bucket - 21.1 Trivial configuration

- 21.2 Non-trivial configuration

- 28.1 Example Beast Configuration

- 28.2 Example Civetweb Configuration in

/etc/ceph/ceph.conf

Copyright © 2020–2025 SUSE LLC and contributors. All rights reserved.

Except where otherwise noted, this document is licensed under Creative Commons Attribution-ShareAlike 4.0 International (CC-BY-SA 4.0): https://creativecommons.org/licenses/by-sa/4.0/legalcode.

For SUSE trademarks, see http://www.suse.com/company/legal/. All third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks.

All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof.

About this guide #

This guide focuses on routine tasks that you as an administrator need to take care of after the basic Ceph cluster has been deployed (day 2 operations). It also describes all the supported ways to access data stored in a Ceph cluster.

SUSE Enterprise Storage 7.1 is an extension to SUSE Linux Enterprise Server 15 SP3. It combines the capabilities of the Ceph (http://ceph.com/) storage project with the enterprise engineering and support of SUSE. SUSE Enterprise Storage 7.1 provides IT organizations with the ability to deploy a distributed storage architecture that can support a number of use cases using commodity hardware platforms.

1 Available documentation #

Documentation for our products is available at https://documentation.suse.com, where you can also find the latest updates, and browse or download the documentation in various formats. The latest documentation updates can be found in the English language version.

In addition, the product documentation is available in your installed system

under /usr/share/doc/manual. It is included in an RPM

package named

ses-manual_LANG_CODE. Install

it if it is not already on your system, for example:

# zypper install ses-manual_enThe following documentation is available for this product:

- Deployment Guide

This guide focuses on deploying a basic Ceph cluster, and how to deploy additional services. It also cover the steps for upgrading to SUSE Enterprise Storage 7.1 from the previous product version.

- Administration and Operations Guide

This guide focuses on routine tasks that you as an administrator need to take care of after the basic Ceph cluster has been deployed (day 2 operations). It also describes all the supported ways to access data stored in a Ceph cluster.

- Security Hardening Guide

This guide focuses on how to ensure your cluster is secure.

- Troubleshooting Guide

This guide takes you through various common problems when running SUSE Enterprise Storage 7.1 and other related issues to relevant components such as Ceph or Object Gateway.

- SUSE Enterprise Storage for Windows Guide

This guide describes the integration, installation, and configuration of Microsoft Windows environments and SUSE Enterprise Storage using the Windows Driver.

2 Improving the documentation #

Your feedback and contributions to this documentation are welcome. The following channels for giving feedback are available:

- Service requests and support

For services and support options available for your product, see http://www.suse.com/support/.

To open a service request, you need a SUSE subscription registered at SUSE Customer Center. Go to https://scc.suse.com/support/requests, log in, and click .

- Bug reports

Report issues with the documentation at https://bugzilla.suse.com/. A Bugzilla account is required.

To simplify this process, you can use the links next to headlines in the HTML version of this document. These preselect the right product and category in Bugzilla and add a link to the current section. You can start typing your bug report right away.

- Contributions

To contribute to this documentation, use the links next to headlines in the HTML version of this document. They take you to the source code on GitHub, where you can open a pull request. A GitHub account is required.

Note: only available for EnglishThe links are only available for the English version of each document. For all other languages, use the links instead.

For more information about the documentation environment used for this documentation, see the repository's README at https://github.com/SUSE/doc-ses.

You can also report errors and send feedback concerning the documentation to <doc-team@suse.com>. Include the document title, the product version, and the publication date of the document. Additionally, include the relevant section number and title (or provide the URL) and provide a concise description of the problem.

3 Documentation conventions #

The following notices and typographic conventions are used in this document:

/etc/passwd: Directory names and file namesPLACEHOLDER: Replace PLACEHOLDER with the actual value

PATH: An environment variablels,--help: Commands, options, and parametersuser: The name of user or grouppackage_name: The name of a software package

Alt, Alt–F1: A key to press or a key combination. Keys are shown in uppercase as on a keyboard.

, › : menu items, buttons

AMD/Intel This paragraph is only relevant for the AMD64/Intel 64 architectures. The arrows mark the beginning and the end of the text block.

IBM Z, POWER This paragraph is only relevant for the architectures

IBM ZandPOWER. The arrows mark the beginning and the end of the text block.Chapter 1, “Example chapter”: A cross-reference to another chapter in this guide.

Commands that must be run with

rootprivileges. Often you can also prefix these commands with thesudocommand to run them as non-privileged user.#command>sudocommandCommands that can be run by non-privileged users.

>commandNotices

Warning: Warning noticeVital information you must be aware of before proceeding. Warns you about security issues, potential loss of data, damage to hardware, or physical hazards.

Important: Important noticeImportant information you should be aware of before proceeding.

Note: Note noticeAdditional information, for example about differences in software versions.

Tip: Tip noticeHelpful information, like a guideline or a piece of practical advice.

Compact Notices

Additional information, for example about differences in software versions.

Helpful information, like a guideline or a piece of practical advice.

4 Support #

Find the support statement for SUSE Enterprise Storage and general information about technology previews below. For details about the product lifecycle, see https://www.suse.com/lifecycle.

If you are entitled to support, find details on how to collect information for a support ticket at https://documentation.suse.com/sles-15/html/SLES-all/cha-adm-support.html.

4.1 Support statement for SUSE Enterprise Storage #

To receive support, you need an appropriate subscription with SUSE. To view the specific support offerings available to you, go to https://www.suse.com/support/ and select your product.

The support levels are defined as follows:

- L1

Problem determination, which means technical support designed to provide compatibility information, usage support, ongoing maintenance, information gathering and basic troubleshooting using available documentation.

- L2

Problem isolation, which means technical support designed to analyze data, reproduce customer problems, isolate problem area and provide a resolution for problems not resolved by Level 1 or prepare for Level 3.

- L3

Problem resolution, which means technical support designed to resolve problems by engaging engineering to resolve product defects which have been identified by Level 2 Support.

For contracted customers and partners, SUSE Enterprise Storage is delivered with L3 support for all packages, except for the following:

Technology previews.

Sound, graphics, fonts, and artwork.

Packages that require an additional customer contract.

Some packages shipped as part of the module Workstation Extension are L2-supported only.

Packages with names ending in -devel (containing header files and similar developer resources) will only be supported together with their main packages.

SUSE will only support the usage of original packages. That is, packages that are unchanged and not recompiled.

4.2 Technology previews #

Technology previews are packages, stacks, or features delivered by SUSE to provide glimpses into upcoming innovations. Technology previews are included for your convenience to give you a chance to test new technologies within your environment. We would appreciate your feedback! If you test a technology preview, please contact your SUSE representative and let them know about your experience and use cases. Your input is helpful for future development.

Technology previews have the following limitations:

Technology previews are still in development. Therefore, they may be functionally incomplete, unstable, or in other ways not suitable for production use.

Technology previews are not supported.

Technology previews may only be available for specific hardware architectures.

Details and functionality of technology previews are subject to change. As a result, upgrading to subsequent releases of a technology preview may be impossible and require a fresh installation.

SUSE may discover that a preview does not meet customer or market needs, or does not comply with enterprise standards. Technology previews can be removed from a product at any time. SUSE does not commit to providing a supported version of such technologies in the future.

For an overview of technology previews shipped with your product, see the release notes at https://www.suse.com/releasenotes/x86_64/SUSE-Enterprise-Storage/7.1.

5 Ceph contributors #

The Ceph project and its documentation is a result of the work of hundreds of contributors and organizations. See https://ceph.com/contributors/ for more details.

6 Commands and command prompts used in this guide #

As a Ceph cluster administrator, you will be configuring and adjusting the cluster behavior by running specific commands. There are several types of commands you will need:

6.1 Salt-related commands #

These commands help you to deploy Ceph cluster nodes, run commands on

several (or all) cluster nodes at the same time, or assist you when adding

or removing cluster nodes. The most frequently used commands are

ceph-salt and ceph-salt config. You

need to run Salt commands on the Salt Master node as root. These

commands are introduced with the following prompt:

root@master # For example:

root@master # ceph-salt config ls6.2 Ceph related commands #

These are lower-level commands to configure and fine tune all aspects of the

cluster and its gateways on the command line, for example

ceph, cephadm, rbd,

or radosgw-admin.

To run Ceph related commands, you need to have read access to a Ceph

key. The key's capabilities then define your privileges within the Ceph

environment. One option is to run Ceph commands as root (or via

sudo) and use the unrestricted default keyring

'ceph.client.admin.key'.

The safer and recommended option is to create a more restrictive individual key for each administrator user and put it in a directory where the users can read it, for example:

~/.ceph/ceph.client.USERNAME.keyring

To use a custom admin user and keyring, you need to specify the user name

and path to the key each time you run the ceph command

using the -n client.USER_NAME

and --keyring PATH/TO/KEYRING

options.

To avoid this, include these options in the CEPH_ARGS

variable in the individual users' ~/.bashrc files.

Although you can run Ceph-related commands on any cluster node, we

recommend running them on the Admin Node. This documentation uses the cephuser

user to run the commands, therefore they are introduced with the following

prompt:

cephuser@adm > For example:

cephuser@adm > ceph auth listIf the documentation instructs you to run a command on a cluster node with a specific role, it will be addressed by the prompt. For example:

cephuser@mon > 6.2.1 Running ceph-volume #

Starting with SUSE Enterprise Storage 7, Ceph services are running containerized.

If you need to run ceph-volume on an OSD node, you need

to prepend it with the cephadm command, for example:

cephuser@adm > cephadm ceph-volume simple scan6.3 General Linux commands #

Linux commands not related to Ceph, such as mount,

cat, or openssl, are introduced either

with the cephuser@adm > or # prompts, depending on which

privileges the related command requires.

6.4 Additional information #

For more information on Ceph key management, refer to Section 30.2, “Key management”.

Part I Ceph Dashboard #

- 1 About the Ceph Dashboard

The Ceph Dashboard is a built-in Web-based Ceph management and monitoring application that administers various aspects and objects of the cluster. The dashboard is automatically enabled after the basic cluster is deployed in Book “Deployment Guide”, Chapter 7 “Deploying the bootstrap cluster using c…

- 2 Dashboard's Web user interface

To log in to the Ceph Dashboard, point your browser to its URL including the port number. Run the following command to find the address:

- 3 Manage Ceph Dashboard users and roles

Dashboard user management performed by Ceph commands on the command line was already introduced in Chapter 11, Manage users and roles on the command line.

- 4 View cluster internals

The menu item lets you view detailed information about Ceph cluster hosts, inventory, Ceph Monitors, services, OSDs, configuration, CRUSH Map, Ceph Manager, logs, and monitoring files.

- 5 Manage pools

For more general information about Ceph pools, refer to Chapter 18, Manage storage pools. For information specific to erasure code pools, refer to Chapter 19, Erasure coded pools.

- 6 Manage RADOS Block Device

To list all available RADOS Block Devices (RBDs), click › from the main menu.

- 7 Manage NFS Ganesha

NFS Ganesha supports NFS version 4.1 and newer. It does not support NFS version 3.

- 8 Manage CephFS

To find detailed information about CephFS, refer to Chapter 23, Clustered file system.

- 9 Manage the Object Gateway

Before you begin, you may encounter the following notification when trying to access the Object Gateway front-end on the Ceph Dashboard:

- 10 Manual configuration

This section introduces advanced information for users that prefer configuring dashboard settings manually on the command line.

- 11 Manage users and roles on the command line

This section describes how to manage user accounts used by the Ceph Dashboard. It helps you create or modify user accounts, as well as set proper user roles and permissions.

1 About the Ceph Dashboard #

The Ceph Dashboard is a built-in Web-based Ceph management and monitoring

application that administers various aspects and objects of the cluster. The

dashboard is automatically enabled after the basic cluster is deployed in

Book “Deployment Guide”, Chapter 7 “Deploying the bootstrap cluster using ceph-salt”.

The Ceph Dashboard for SUSE Enterprise Storage 7.1 has added more Web-based management capabilities to make it easier to administer Ceph, including monitoring and application administration to the Ceph Manager. You no longer need to know complex Ceph-related commands to manage and monitor your Ceph cluster. You can either use the Ceph Dashboard's intuitive interface, or its built-in REST API.

The Ceph Dashboard module visualizes information and statistics about the Ceph

cluster using a Web server hosted by ceph-mgr. See

Book “Deployment Guide”, Chapter 1 “SES and Ceph”, Section 1.2.3 “Ceph nodes and daemons” for more details on Ceph Manager.

2 Dashboard's Web user interface #

2.1 Logging in #

To log in to the Ceph Dashboard, point your browser to its URL including the port number. Run the following command to find the address:

cephuser@adm > ceph mgr services | grep dashboard

"dashboard": "https://host:port/",The command returns the URL where the Ceph Dashboard is located. If you are having issues with this command, see Book “Troubleshooting Guide”, Chapter 10 “Troubleshooting the Ceph Dashboard”, Section 10.1 “Locating the Ceph Dashboard”.

Log in by using the credentials that you created during cluster deployment

(see Book “Deployment Guide”, Chapter 7 “Deploying the bootstrap cluster using ceph-salt”, Section 7.2.9 “Configuring the Ceph Dashboard login credentials”).

If you do not want to use the default admin account to access the Ceph Dashboard, create a custom user account with administrator privileges. Refer to Chapter 11, Manage users and roles on the command line for more details.

As soon as an upgrade to a new Ceph major release (code name: Pacific) is available, the Ceph Dashboard will display a relevant message in the top notification area. To perform the upgrade, follow instructions in Book “Deployment Guide”, Chapter 11 “Upgrade from SUSE Enterprise Storage 7 to 7.1”.

The dashboard user interface is graphically divided into several blocks: the utility menu in the top right-hand side of the screen, the main menu on the left-hand side, and the main content pane.

2.4 Content pane #

The content pane occupies the main part of the dashboard's screen. The dashboard home page shows plenty of helpful widgets to inform you briefly about the current status of the cluster, capacity, and performance information.

2.5 Common Web UI features #

In Ceph Dashboard, you often work with lists—for example, lists of pools, OSD nodes, or RBD devices. All lists will automatically refresh themselves by default every five seconds. The following common widgets help you manage or adjust these list:

Click ![]() to trigger a manual refresh of the list.

to trigger a manual refresh of the list.

Click ![]() to display or hide individual table columns.

to display or hide individual table columns.

Click ![]() and enter (or select) how many rows to display on a

single page.

and enter (or select) how many rows to display on a

single page.

Click inside  and filter the rows by typing the string to search for.

and filter the rows by typing the string to search for.

Use  to change the currently displayed page if the list

spans across multiple pages.

to change the currently displayed page if the list

spans across multiple pages.

2.6 Dashboard widgets #

Each dashboard widget shows specific status information related to a specific aspect of a running Ceph cluster. Some widgets are active links and after clicking them, they will redirect you to a related detailed page of the topic they represent.

Some graphical widgets show you more detail when you move the mouse over them.

2.6.1 Status widgets #

widgets give you a brief overview about the cluster's current status.

Presents basic information about the cluster's health.

Shows the total number of cluster nodes.

Shows the number of running monitors and their quorum.

Shows the total number of OSDs, as well as the number of up and in OSDs.

Shows the number of active and standby Ceph Manager daemons.

Shows the number of running Object Gateways.

Shows the number of Metadata Servers.

Shows the number of configured iSCSI gateways.

2.6.2 Capacity widgets #

widgets show brief information about the storage capacity.

Shows the ratio of used and available raw storage capacity.

Shows the number of data objects stored in the cluster.

Displays a chart of the placement groups according to their status.

Shows the number of pools in the cluster.

Shows the average number of placement groups per OSD.

2.6.3 Performance widgets #

widgets refer to basic performance data of Ceph clients.

The amount of clients' read and write operations per second.

The amount of data transferred to and from Ceph clients in bytes per second.

The throughput of data recovered per second.

Shows the scrubbing (see Section 17.4.9, “Scrubbing a placement group”) status. It is either

inactive,enabled, oractive.

3 Manage Ceph Dashboard users and roles #

Dashboard user management performed by Ceph commands on the command line was already introduced in Chapter 11, Manage users and roles on the command line.

This section describes how to manage user accounts by using the Dashboard Web user interface.

3.1 Listing users #

Click ![]() in the utility menu and select .

in the utility menu and select .

The list contains each user's user name, full name, e-mail, a list of assigned roles, whether the role is enabled, and the password expiration date.

3.2 Adding new users #

Click in the top left of the table heading to add a new user. Enter their user name, password, and optionally a full name and an e-mail.

Click the little pen icon to assign predefined roles to the user. Confirm with .

3.3 Editing users #

Click a user's table row to highlight the selection Select to edit details about the user. Confirm with .

3.4 Deleting users #

Click a user's table row to highlight the selection Select the drop-down box next to and select from the list to delete the user account. Activate the check box and confirm with .

3.5 Listing user roles #

Click ![]() in the utility menu and select . Then click the tab.

in the utility menu and select . Then click the tab.

The list contains each role's name, description, and whether it is a system role.

3.6 Adding custom roles #

Click in the top left of the table heading to add a new custom role. Enter the and and next to , select the appropriate permissions.

If you create custom user roles and intend to remove the Ceph cluster

with the ceph-salt purge command later on, you need to

purge the custom roles first. Find more details in

Section 13.9, “Removing an entire Ceph cluster”.

By activating the check box that precedes the topic name, you activate all permissions for that topic. By activating the check box, you activate all permissions for all the topics.

Confirm with .

3.7 Editing custom roles #

Click a user's table row to highlight the selection Select in the top left of the table heading to edit a description and permissions of the custom role. Confirm with .

3.8 Deleting custom roles #

Click a role's table row to highlight the selection Select the drop-down box next to and select from the list to delete the role. Activate the check box and confirm with .

4 View cluster internals #

The menu item lets you view detailed information about Ceph cluster hosts, inventory, Ceph Monitors, services, OSDs, configuration, CRUSH Map, Ceph Manager, logs, and monitoring files.

4.1 Viewing cluster nodes #

Click › to view a list of cluster nodes.

Click the drop-down arrow next to a node name in the column to view the performance details of the node.

The column lists all daemons that are running on each related node. Click a daemon name to view its detailed configuration.

4.2 Listing physical disks #

Click › to view a list of physical disks. The list includes the device path, type, availability, vendor, model, size, and the OSDs.

Click to select a node name in the column. When selected, click to identify the device the host is running on. This tells the device to blink its LEDs. Select the duration of this action between 1, 2, 5, 10, or 15 minutes. Click .

4.3 Viewing Ceph Monitors #

Click

›

to view a list of cluster nodes with running Ceph monitors. The content

pane is split into two views: Status, and In

Quorum or Not In Quorum.

The table shows general statistics about the running Ceph Monitors, including the following:

Cluster ID

monmap modified

monmap epoch

quorum con

quorum mon

required con

required mon

The In Quorum and Not In Quorum panes

include each monitor's name, rank number, public IP address, and number of

open sessions.

Click a node name in the column to view the related Ceph Monitor configuration.

4.4 Displaying services #

Click

›

to view details on each of the available services: crash,

Ceph Manager, and Ceph Monitors. The list includes the container image name, container

image ID, status of what is running, size, and when it was last refreshed.

Click the drop-down arrow next to a service name in the column to view details of the daemon. The detail list includes the host name, daemon type, daemon ID, container ID, container image name, container image ID, version number, status, and when it was last refreshed.

4.4.1 Adding new cluster services #

To add a new service to a cluster, click the button in the top left corner of the table.

In the window, specify the type of the service and then fill the required options that are relevant for the service you previously selected. Confirm with .

4.5 Displaying Ceph OSDs #

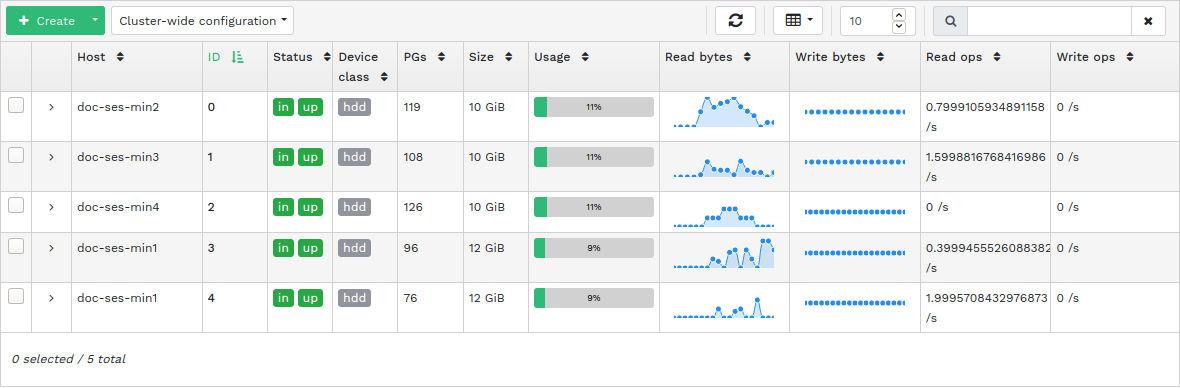

Click › to view a list of nodes with running OSD daemons. The list includes each node's name, ID, status, device class, number of placement groups, size, usage, reads/writes chart in time, and the rate of read/write operations per second.

Select from the drop-down menu in the table heading to open a pop-up window. This has a list of flags that apply to the whole cluster. You can activate or deactivate individual flags, and confirm with .

Select from the drop-down menu in the table heading to open a pop-up window. This has a list of OSD recovery priorities that apply to the whole cluster. You can activate the preferred priority profile and fine-tune the individual values below. Confirm with .

Click the drop-down arrow next to a node name in the column to view an extended table with details about the device settings and performance. Browsing through several tabs, you can see lists of , , , , a graphical of reads and writes, and .

After you click an OSD node name, the table row is highlighted. This means that you can now perform a task on the node. You can choose to perform any of the following actions: , , , , , , , , , , , or .

Click the down arrow in the top left of the table heading next to the button and select the task you want to perform.

4.5.1 Adding OSDs #

To add new OSDs, follow these steps:

Verify that some cluster nodes have storage devices whose status is

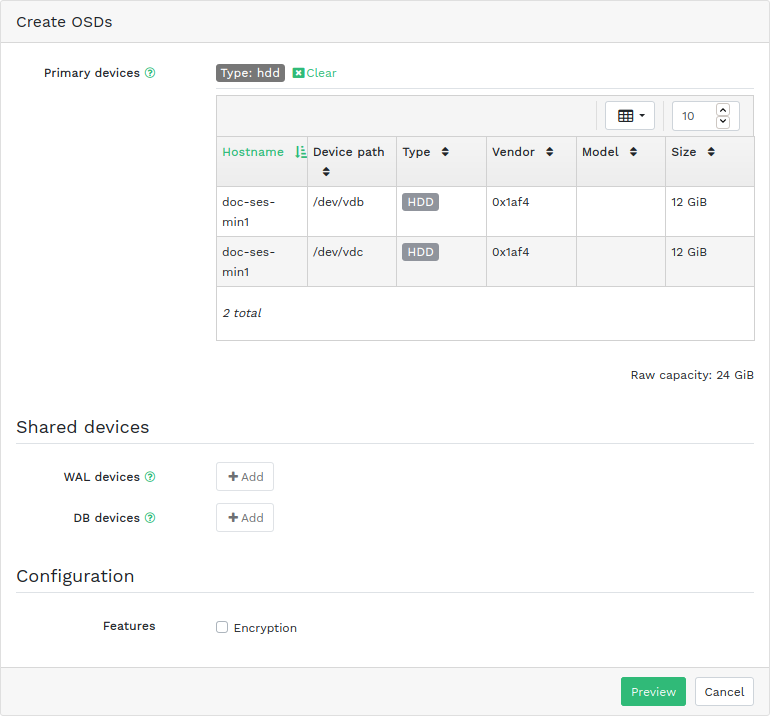

available. Then click the down arrow in the top left of the table heading and select . This opens the window.Figure 4.10: Create OSDs #To add primary storage devices for OSDs, click . Before you can add storage devices, you need to specify filtering criteria in the top right of the table—for example . Confirm with .

Figure 4.11: Adding primary devices #In the updated window, optionally add shared WAL and BD devices, or enable device encryption.

Figure 4.12: Create OSDs with primary devices added #Click to view the preview of DriveGroups specification for the devices you previously added. Confirm with .

Figure 4.13: #New devices will be added to the list of OSDs.

Figure 4.14: Newly added OSDs #NoteThere is no progress visualization of the OSD creation process. It takes some time before they are actually created. The OSDs will appear in the list when they have been deployed. If you want to check the deployment status, view the logs by clicking › .

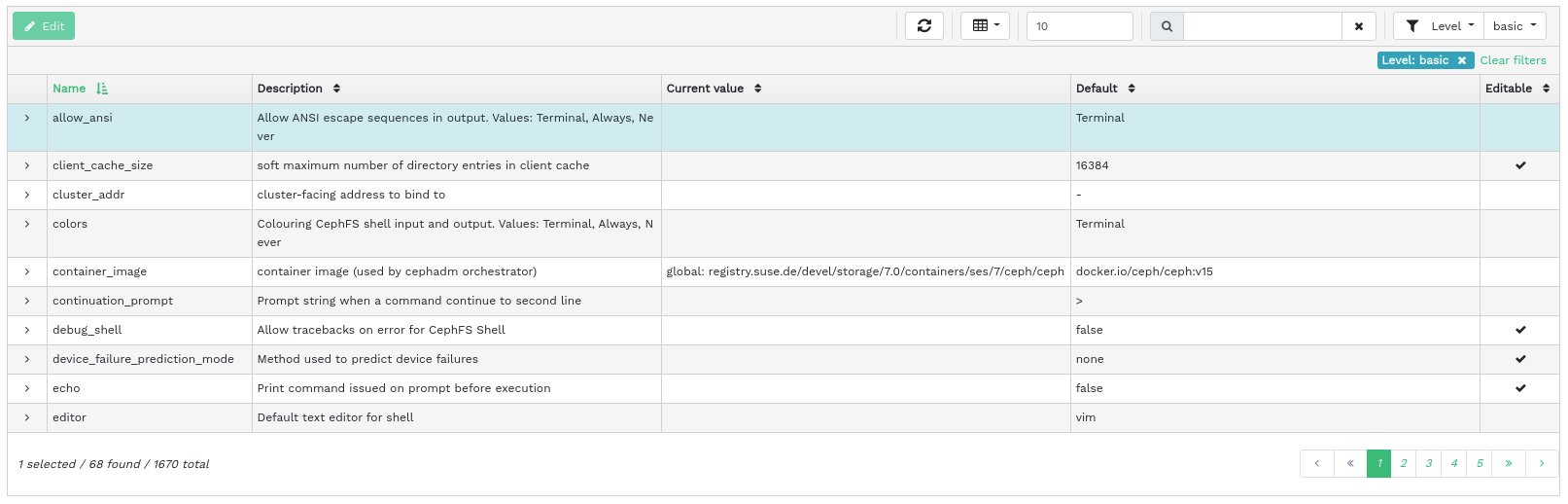

4.6 Viewing cluster configuration #

Click › to view a complete list of Ceph cluster configuration options. The list contains the name of the option, its short description, and its current and default values, and whether the option is editable.

Click the drop-down arrow next to a configuration option in the column to view an extended table with detailed information about the option, such as its type of value, minimum and maximum permitted values, whether it can be updated at runtime, and many more.

After highlighting a specific option, you can edit its value(s) by clicking the button in the top left of the table heading. Confirm changes with .

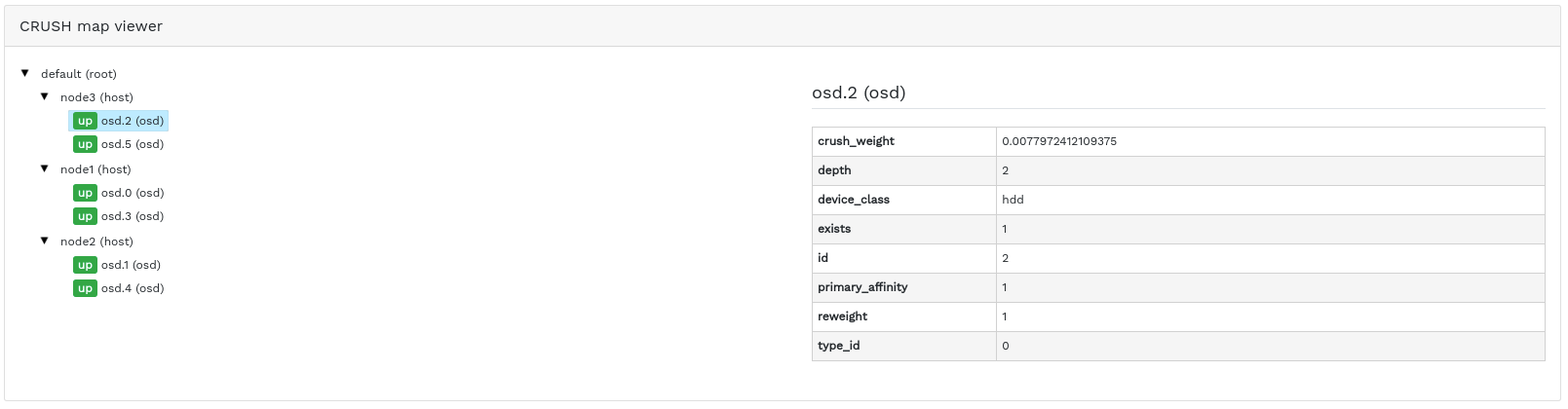

4.7 Viewing the CRUSH Map #

Click › to view a CRUSH Map of the cluster. For more general information on CRUSH Maps, refer to Section 17.5, “CRUSH Map manipulation”.

Click the root, nodes, or individual OSDs to view more detailed information, such as crush weight, depth in the map tree, device class of the OSD, and many more.

4.8 Viewing manager modules #

Click › to view a list of available Ceph Manager modules. Each line consists of a module name and information on whether it is currently enabled or not.

Click the drop-down arrow next to a module in the column to view an extended table with detailed settings in the table below. Edit them by clicking the button in the top left of the table heading. Confirm changes with .

Click the drop-down arrow next to the button in the top left of the table heading to or a module.

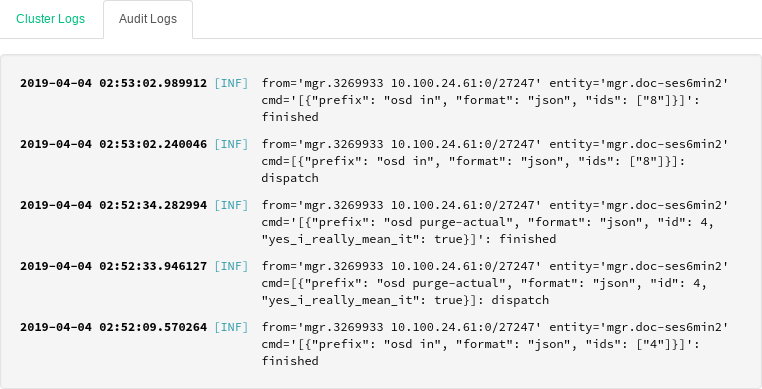

4.9 Viewing logs #

Click › to view a list of cluster's recent log entries. Each line consists of a time stamp, the type of the log entry, and the logged message itself.

Click the tab to view log entries of the auditing subsystem. Refer to Section 11.5, “Auditing API requests” for commands to enable or disable auditing.

4.10 Viewing monitoring #

Click › to manage and view details on Prometheus alerts.

If you have Prometheus active, in this content pane you can view detailed information on , , or .

If you do not have Prometheus deployed, an information banner will appear and link to relevant documentation.

5 Manage pools #

For more general information about Ceph pools, refer to Chapter 18, Manage storage pools. For information specific to erasure code pools, refer to Chapter 19, Erasure coded pools.

To list all available pools, click from the main menu.

The list shows each pool's name, type, related application, placement group status, replica size, last change, erasure coded profile, crush ruleset, usage, and read/write statistics.

Click the drop-down arrow next to a pool name in the column to view an extended table with detailed information on the pool, such as the general details, performance details, and configuration.

5.1 Adding a new pool #

To add a new pool, click in the top left of the pools table. In the pool form you can enter the pool's name, type, its applications, compression mode, and quotas including maximum byes and maximum objects. The pool form itself pre-calculates the number of placement groups that best suited to this specific pool. The calculation is based on the amount of OSDs in the cluster and the selected pool type with its specific settings. As soon as a placement groups number is set manually, it will be replaced by a calculated number. Confirm with .

5.2 Deleting pools #

To delete a pool, select the pool in the table row. Click the drop-down arrow next to the button and click .

5.3 Editing a pool's options #

To edit a pool's options, select the pool in the table row and click in the top left of the pools table.

You can change the name of the pool, increase the number of placement groups, change the list of the pool's applications and compression settings. Confirm with .

6 Manage RADOS Block Device #

To list all available RADOS Block Devices (RBDs), click › from the main menu.

The list shows brief information about the device, such as the device's name, the related pool name, namespace, size of the device, number and size of objects on the device, details on the provisioning of the details, and the parent.

6.1 Viewing details about RBDs #

To view more detailed information about a device, click its row in the table:

6.2 Viewing RBD's configuration #

To view detailed configuration of a device, click its row in the table and then the tab in the lower table:

6.3 Creating RBDs #

To add a new device, click in the top left of the table heading and do the following on the screen:

Enter the name of the new device. Refer to Book “Deployment Guide”, Chapter 2 “Hardware requirements and recommendations”, Section 2.11 “Name limitations” for naming limitations.

Select the pool with the

rbdapplication assigned from which the new RBD device will be created.Specify the size of the new device.

Specify additional options for the device. To fine-tune the device parameters, click and enter values for object size, stripe unit, or stripe count. To enter Quality of Service (QoS) limits, click and enter them.

Confirm with .

6.4 Deleting RBDs #

To delete a device, select the device in the table row. Click the drop-down arrow next to the button and click . Confirm the deletion with .

Deleting an RBD is an irreversible action. If you instead, you can restore the device later on by selecting it on the tab of the main table and clicking in the top left of the table heading.

6.5 Creating RADOS Block Device snapshots #

To create a RADOS Block Device snapshot, select the device in the table row and the detailed configuration content pane appears. Select the tab and click in the top left of the table heading. Enter the snapshot's name and confirm with .

After selecting a snapshot, you can perform additional actions on the device, such as rename, protect, clone, copy, or delete. restores the device's state from the current snapshot.

6.6 RBD mirroring #

RADOS Block Device images can be asynchronously mirrored between two Ceph clusters. You can use the Ceph Dashboard to configure replication of RBD images between two or more clusters. This capability is available in two modes:

- Journal-based

This mode uses the RBD journaling image feature to ensure point-in-time, crash-consistent replication between clusters.

- Snapshot-based

This mode uses periodically scheduled or manually created RBD image mirror-snapshots to replicate crash-consistent RBD images between clusters.

Mirroring is configured on a per-pool basis within peer clusters and can be configured on a specific subset of images within the pool or configured to automatically mirror all images within a pool when using journal-based mirroring only.

Mirroring is configured using the rbd command, which is

installed by default in SUSE Enterprise Storage 7.1. The

rbd-mirror daemon is responsible for

pulling image updates from the remote, peer cluster and applying them to the

image within the local cluster. See

Section 6.6.2, “Enabling the rbd-mirror daemon” for more information on enabling

the rbd-mirror daemon.

Depending on the need for replication, RADOS Block Device mirroring can be configured for either one- or two-way replication:

- One-way Replication

When data is only mirrored from a primary cluster to a secondary cluster, the

rbd-mirrordaemon runs only on the secondary cluster.- Two-way Replication

When data is mirrored from primary images on one cluster to non-primary images on another cluster (and vice-versa), the

rbd-mirrordaemon runs on both clusters.

Each instance of the rbd-mirror

daemon must be able to connect to both the local and remote Ceph clusters

simultaneously, for example all monitor and OSD hosts. Additionally, the

network must have sufficient bandwidth between the two data centers to

handle mirroring workload.

For general information and the command line approach to RADOS Block Device mirroring, refer to Section 20.4, “RBD image mirrors”.

6.6.1 Configuring primary and secondary clusters #

A primary cluster is where the original pool with images is created. A secondary cluster is where the pool or images are replicated from the primary cluster.

The primary and secondary terms can be relative in the context of replication because they relate more to individual pools than to clusters. For example, in two-way replication, one pool can be mirrored from the primary cluster to the secondary one, while another pool can be mirrored from the secondary cluster to the primary one.

6.6.2 Enabling the rbd-mirror daemon #

The following procedures demonstrate how to perform the basic

administrative tasks to configure mirroring using the

rbd command. Mirroring is configured on a per-pool basis

within the Ceph clusters.

The pool configuration steps should be performed on both peer clusters. These procedures assume two clusters, named “primary” and “secondary”, are accessible from a single host for clarity.

The rbd-mirror daemon performs the

actual cluster data replication.

Rename

ceph.confand keyring files and copy them from the primary host to the secondary host:cephuser@secondary >cp /etc/ceph/ceph.conf /etc/ceph/primary.confcephuser@secondary >cp /etc/ceph/ceph.admin.client.keyring \ /etc/ceph/primary.client.admin.keyringcephuser@secondary >scp PRIMARY_HOST:/etc/ceph/ceph.conf \ /etc/ceph/secondary.confcephuser@secondary >scp PRIMARY_HOST:/etc/ceph/ceph.client.admin.keyring \ /etc/ceph/secondary.client.admin.keyringTo enable mirroring on a pool with

rbd, specify themirror pool enable, the pool name, and the mirroring mode:cephuser@adm >rbd mirror pool enable POOL_NAME MODENoteThe mirroring mode can either be

imageorpool. For example:cephuser@secondary >rbd --cluster primary mirror pool enable image-pool imagecephuser@secondary >rbd --cluster secondary mirror pool enable image-pool imageOn the Ceph Dashboard, navigate to › . The table to the left shows actively running

rbd-mirrordaemons and their health.Figure 6.6: Runningrbd-mirrordaemons #

6.6.3 Disabling mirroring #

To disable mirroring on a pool with rbd, specify the

mirror pool disable command and the pool name:

cephuser@adm > rbd mirror pool disable POOL_NAMEWhen mirroring is disabled on a pool in this way, mirroring will also be disabled on any images (within the pool) for which mirroring was enabled explicitly.

6.6.4 Bootstrapping peers #

In order for the rbd-mirror to

discover its peer cluster, the peer needs to be registered to the pool and

a user account needs to be created. This process can be automated with

rbd by using the mirror pool peer bootstrap

create and mirror pool peer bootstrap import

commands.

To manually create a new bootstrap token with rbd,

specify the mirror pool peer bootstrap create command, a

pool name, along with an optional site name to describe the local cluster:

cephuser@adm > rbd mirror pool peer bootstrap create [--site-name local-site-name] pool-name

The output of mirror pool peer bootstrap create will be

a token that should be provided to the mirror pool peer bootstrap

import command. For example, on the primary cluster:

cephuser@adm > rbd --cluster primary mirror pool peer bootstrap create --site-name primary

image-pool eyJmc2lkIjoiOWY1MjgyZGItYjg5OS00NTk2LTgwOTgtMzIwYzFmYzM5NmYzIiwiY2xpZW50X2lkIjoicmJkL \

W1pcnJvci1wZWVyIiwia2V5IjoiQVFBUnczOWQwdkhvQmhBQVlMM1I4RmR5dHNJQU50bkFTZ0lOTVE9PSIsIm1vbl9ob3N0I \

joiW3YyOjE5Mi4xNjguMS4zOjY4MjAsdjE6MTkyLjE2OC4xLjM6NjgyMV0ifQ==

To manually import the bootstrap token created by another cluster with the

rbd command, specify the mirror pool peer

bootstrap import command, the pool name, a file path to the

created token (or ‘-‘ to read from standard input), along with an

optional site name to describe the local cluster and a mirroring direction

(defaults to rx-tx for bidirectional mirroring, but can

also be set to rx-only for unidirectional mirroring):

cephuser@adm > rbd mirror pool peer bootstrap import [--site-name local-site-name] \

[--direction rx-only or rx-tx] pool-name token-pathFor example, on the secondary cluster:

cephuser@adm >cat >>EOF < token eyJmc2lkIjoiOWY1MjgyZGItYjg5OS00NTk2LTgwOTgtMzIwYzFmYzM5NmYzIiwiY2xpZW50X2lkIjoicmJkLW1pcn \ Jvci1wZWVyIiwia2V5IjoiQVFBUnczOWQwdkhvQmhBQVlMM1I4RmR5dHNJQU50bkFTZ0lOTVE9PSIsIm1vbl9ob3N0I \ joiW3YyOjE5Mi4xNjguMS4zOjY4MjAsdjE6MTkyLjE2OC4xLjM6NjgyMV0ifQ== EOFcephuser@adm >rbd --cluster secondary mirror pool peer bootstrap import --site-name secondary image-pool token

6.6.5 Removing cluster peer #

To remove a mirroring peer Ceph cluster with the rbd

command, specify the mirror pool peer remove command,

the pool name, and the peer UUID (available from the rbd mirror

pool info command):

cephuser@adm > rbd mirror pool peer remove pool-name peer-uuid6.6.6 Configuring pool replication in the Ceph Dashboard #

The rbd-mirror daemon needs to have

access to the primary cluster to be able to mirror RBD images. Ensure you

have followed the steps in Section 6.6.4, “Bootstrapping peers”

before continuing.

On both the primary and secondary cluster, create pools with an identical name and assign the

rbdapplication to them. Refer to Section 5.1, “Adding a new pool” for more details on creating a new pool.Figure 6.7: Creating a pool with RBD application #On both the primary and secondary cluster's dashboards, navigate to › . In the table on the right, click the name of the pool to replicate, and after clicking , select the replication mode. In this example, we will work with a pool replication mode, which means that all images within a given pool will be replicated. Confirm with .

Figure 6.8: Configuring the replication mode #Important: Error or warning on the primary clusterAfter updating the replication mode, an error or warning flag will appear in the corresponding right column. That is because the pool has no peer user for replication assigned yet. Ignore this flag for the primary cluster as we assign a peer user to the secondary cluster only.

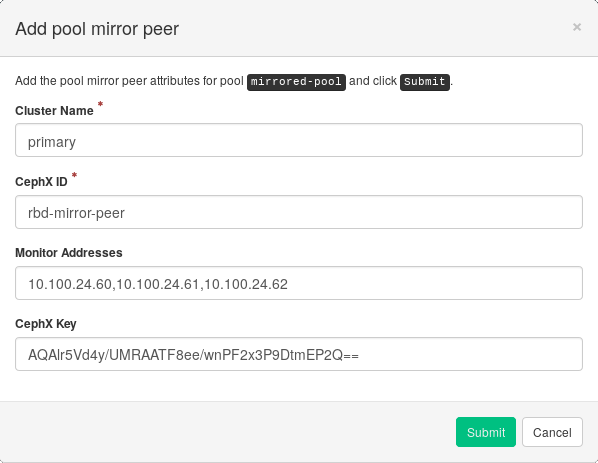

On the secondary cluster's Dashboard, navigate to › . Add the pool mirror peer by selecting . Provide the primary cluster's details:

Figure 6.9: Adding peer credentials #An arbitrary unique string that identifies the primary cluster, such as 'primary'. The cluster name needs to be different from the real secondary cluster's name.

The Ceph user ID that you created as a mirroring peer. In this example it is 'rbd-mirror-peer'.

Comma-separated list of IP addresses of the primary cluster's Ceph Monitor nodes.

The key related to the peer user ID. You can retrieve it by running the following example command on the primary cluster:

cephuser@adm >ceph auth print_key pool-mirror-peer-name

Confirm with .

Figure 6.10: List of replicated pools #

6.6.7 Verifying that RBD image replication works #

When the rbd-mirror daemon is

running and RBD image replication is configured on the Ceph Dashboard, it is

time to verify whether the replication actually works:

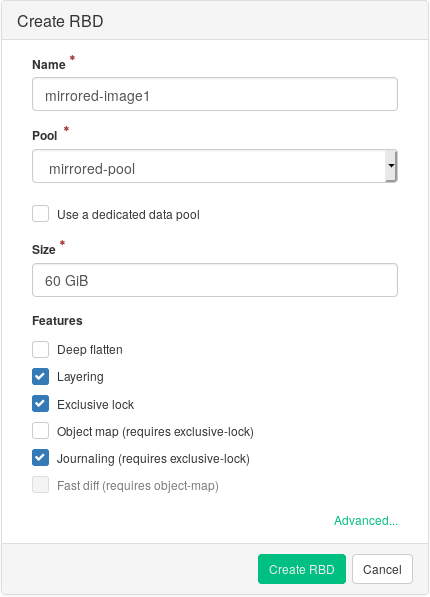

On the primary cluster's Ceph Dashboard, create an RBD image so that its parent pool is the pool that you already created for replication purposes. Enable the

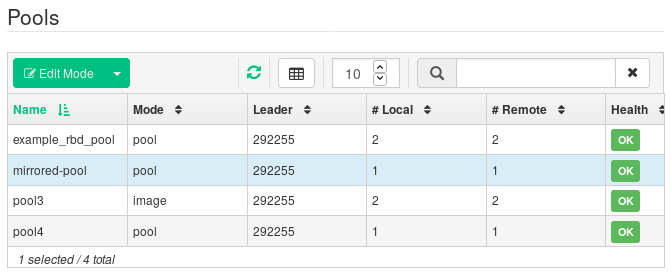

Exclusive lockandJournalingfeatures for the image. Refer to Section 6.3, “Creating RBDs” for details on how to create RBD images.Figure 6.11: New RBD image #After you create the image that you want to replicate, open the secondary cluster's Ceph Dashboard and navigate to › . The table on the right will reflect the change in the number of images and synchronize the number of images.

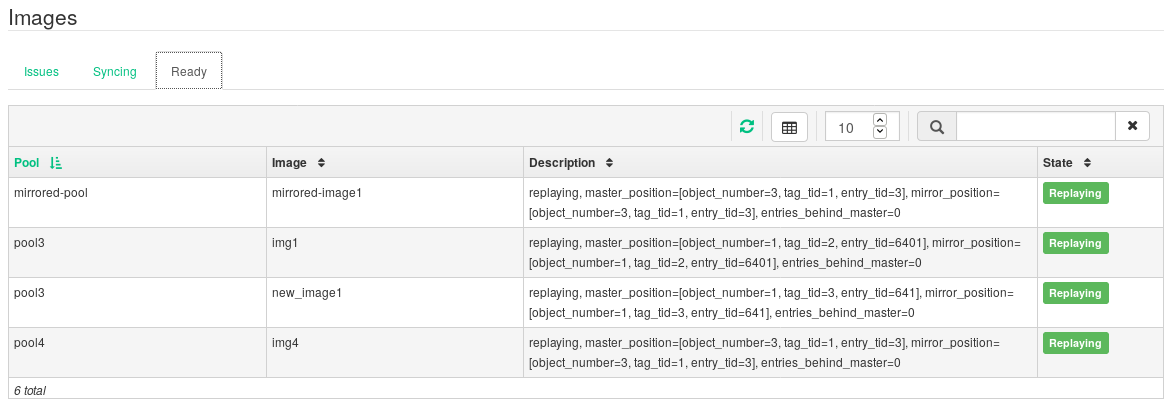

Figure 6.12: New RBD image synchronized #Tip: Replication progressThe table at the bottom of the page shows the status of replication of RBD images. The tab includes possible problems, the tab displays the progress of image replication, and the tab lists all images with successful replication.

Figure 6.13: RBD images' replication status #On the primary cluster, write data to the RBD image. On the secondary cluster's Ceph Dashboard, navigate to › and monitor whether the corresponding image's size is growing as the data on the primary cluster is written.

6.7 Managing iSCSI Gateways #

For more general information about iSCSI Gateways, refer to Chapter 22, Ceph iSCSI gateway.

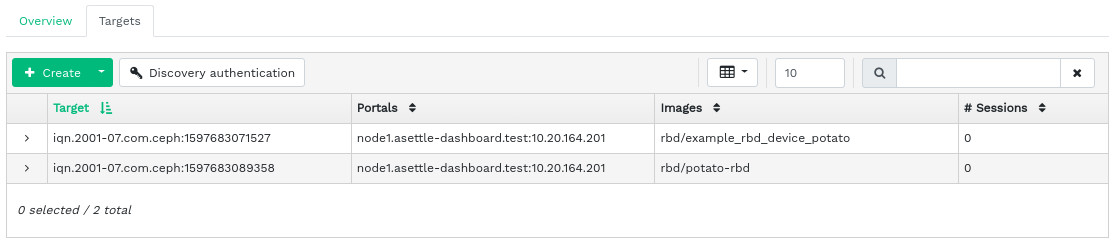

To list all available gateways and mapped images, click › from the main menu. An tab opens, listing currently configured iSCSI Gateways and mapped RBD images.

The table lists each gateway's state, number of iSCSI targets, and number of sessions. The table lists each mapped image's name, related pool name backstore type, and other statistical details.

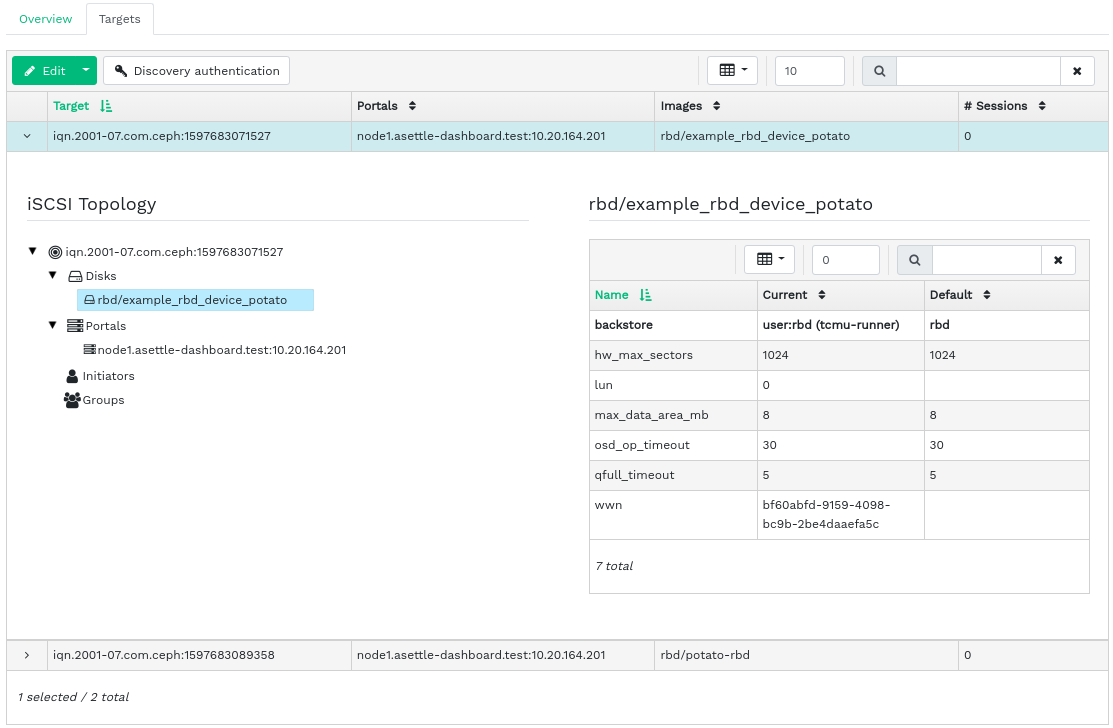

The tab lists currently configured iSCSI targets.

To view more detailed information about a target, click the drop-down arrow on the target table row. A tree-structured schema opens, listing disks, portals, initiators, and groups. Click an item to expand it and view its detailed contents, optionally with a related configuration in the table on the right.

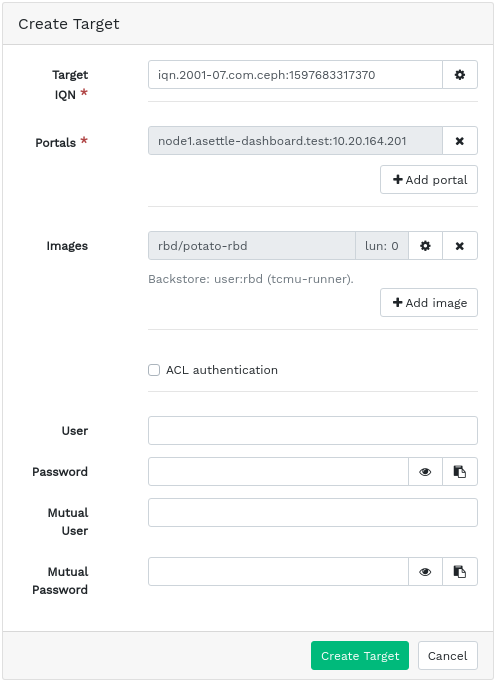

6.7.1 Adding iSCSI targets #

To add a new iSCSI target, click in the top left of the table and enter the required information.

Enter the target address of the new gateway.

Click and select one or multiple iSCSI portals from the list.

Click and select one or multiple RBD images for the gateway.

If you need to use authentication to access the gateway, activate the check box and enter the credentials. You can find more advanced authentication options after activating and .

Confirm with .

6.7.2 Editing iSCSI targets #

To edit an existing iSCSI target, click its row in the table and click in the top left of the table.

You can then modify the iSCSI target, add or delete portals, and add or delete related RBD images. You can also adjust authentication information for the gateway.

6.7.3 Deleting iSCSI targets #

To delete an iSCSI target, select the table row and click the drop-down arrow next to the button and select . Activate and confirm with .

6.8 RBD Quality of Service (QoS) #

For more general information and a description of RBD QoS configuration options, refer to Section 20.6, “QoS settings”.

The QoS options can be configured at different levels.

Globally

On a per-pool basis

On a per-image basis

The global configuration is at the top of the list and will be used for all newly created RBD images and for those images that do not override these values on the pool or RBD image layer. An option value specified globally can be overridden on a per-pool or per-image basis. Options specified on a pool will be applied to all RBD images of that pool unless overridden by a configuration option set on an image. Options specified on an image will override options specified on a pool and will override options specified globally.

This way it is possible to define defaults globally, adapt them for all RBD images of a specific pool, and override the pool configuration for individual RBD images.

6.8.1 Configuring options globally #

To configure the RADOS Block Device options globally, select › from the main menu.

To list all available global configuration options, next to , choose from the drop-down menu.

Filter the results of the table by filtering for

rbd_qosin the search field. This lists all available configuration options for QoS.To change a value, click the row in the table, then select at the top left of the table. The dialog contains six different fields for specifying values. The RBD configuration option values are required in the text box.

NoteUnlike the other dialogs, this one does not allow you to specify the value in convenient units. You need to set these values in either bytes or IOPS, depending on the option you are editing.

6.8.2 Configuring options on a new pool #

To create a new pool and configure RBD configuration options on it, click

› . Select

as pool type. You will then need to add the

rbd application tag to the pool to be able to configure

the RBD QoS options.

It is not possible to configure RBD QoS configuration options on an erasure coded pool. To configure the RBD QoS options for erasure coded pools, you need to edit the replicated metadata pool of an RBD image. The configuration will then be applied to the erasure coded data pool of that image.

6.8.3 Configuring options on an existing pool #

To configure RBD QoS options on an existing pool, click , then click the pool's table row and select at the top left of the table.

You should see the section in the dialog, followed by a section.

If you see neither the nor the section, you are likely either editing an erasure coded pool, which cannot be used to set RBD configuration options, or the pool is not configured to be used by RBD images. In the latter case, assign the application tag to the pool and the corresponding configuration sections will show up.

6.8.4 Configuration options #

Click to expand the configuration options. A list of all available options will show up. The units of the configuration options are already shown in the text boxes. In case of any bytes per second (BPS) option, you are free to use shortcuts such as '1M' or '5G'. They will be automatically converted to '1 MB/s' and '5 GB/s' respectively.

By clicking the reset button to the right of each text box, any value set on the pool will be removed. This does not remove configuration values of options configured globally or on an RBD image.

6.8.5 Creating RBD QoS options with a new RBD image #

To create an RBD image with RBD QoS options set on that image, select › and then click . Click to expand the advanced configuration section. Click to open all available configuration options.

6.8.6 Editing RBD QoS options on existing images #

To edit RBD QoS options on an existing image, select › , then click the pool's table row, and lastly click . The edit dialog will show up. Click to expand the advanced configuration section. Click to open all available configuration options.

6.8.7 Changing configuration options when copying or cloning images #

If an RBD image is cloned or copied, the values set on that particular image will be copied too, by default. If you want to change them while copying or cloning, you can do so by specifying the updated configuration values in the copy/clone dialog, the same way as when creating or editing an RBD image. Doing so will only set (or reset) the values for the RBD image that is copied or cloned. This operation changes neither the source RBD image configuration, nor the global configuration.

If you choose to reset the option value on copying/cloning, no value for that option will be set on that image. This means that any value of that option specified for the parent pool will be used if the parent pool has the value configured. Otherwise, the global default will be used.

7 Manage NFS Ganesha #

NFS Ganesha supports NFS version 4.1 and newer. It does not support NFS version 3.

For more general information about NFS Ganesha, refer to Chapter 25, NFS Ganesha.

To list all available NFS exports, click from the main menu.

The list shows each export's directory, daemon host name, type of storage back-end, and access type.

To view more detailed information about an NFS export, click its table row.

7.1 Creating NFS exports #

To add a new NFS export, click in the top left of the exports table and enter the required information.

Select one or more NFS Ganesha daemons that will run the export.

Select a storage back-end.

ImportantAt this time, only NFS exports backed by CephFS are supported.

Select a user ID and other back-end related options.

Enter the directory path for the NFS export. If the directory does not exist on the server, it will be created.

Specify other NFS related options, such as supported NFS protocol version, pseudo, access type, squashing, or transport protocol.

If you need to limit access to specific clients only, click and add their IP addresses together with access type and squashing options.

Confirm with .

7.2 Deleting NFS exports #

To delete an export, select and highlight the export in the table row. Click the drop-down arrow next to the button and select . Activate the check box and confirm with .

7.3 Editing NFS exports #

To edit an existing export, select and highlight the export in the table row and click in the top left of the exports table.

You can then adjust all the details of the NFS export.

8 Manage CephFS #

To find detailed information about CephFS, refer to Chapter 23, Clustered file system.

8.1 Viewing CephFS overview #

Click from the main menu to view the overview of configured file systems. The main table shows each file system's name, date of creation, and whether it is enabled or not.

By clicking a file system's table row, you reveal details about its rank and pools added to the file system.

At the bottom of the screen, you can see statistics counting the number of related MDS inodes and client requests, collected in real time.

9 Manage the Object Gateway #

Before you begin, you may encounter the following notification when trying to access the Object Gateway front-end on the Ceph Dashboard:

Information No RGW credentials found, please consult the documentation on how to enable RGW for the dashboard. Please consult the documentation on how to configure and enable the Object Gateway management functionality.

This is because the Object Gateway has not been automatically configured by cephadm for the Ceph Dashboard. If you encounter this notification, follow the instructions at Section 10.4, “Enabling the Object Gateway management front-end” to manually enable the Object Gateway front-end for the Ceph Dashboard.

For more general information about Object Gateway, refer to Chapter 21, Ceph Object Gateway.

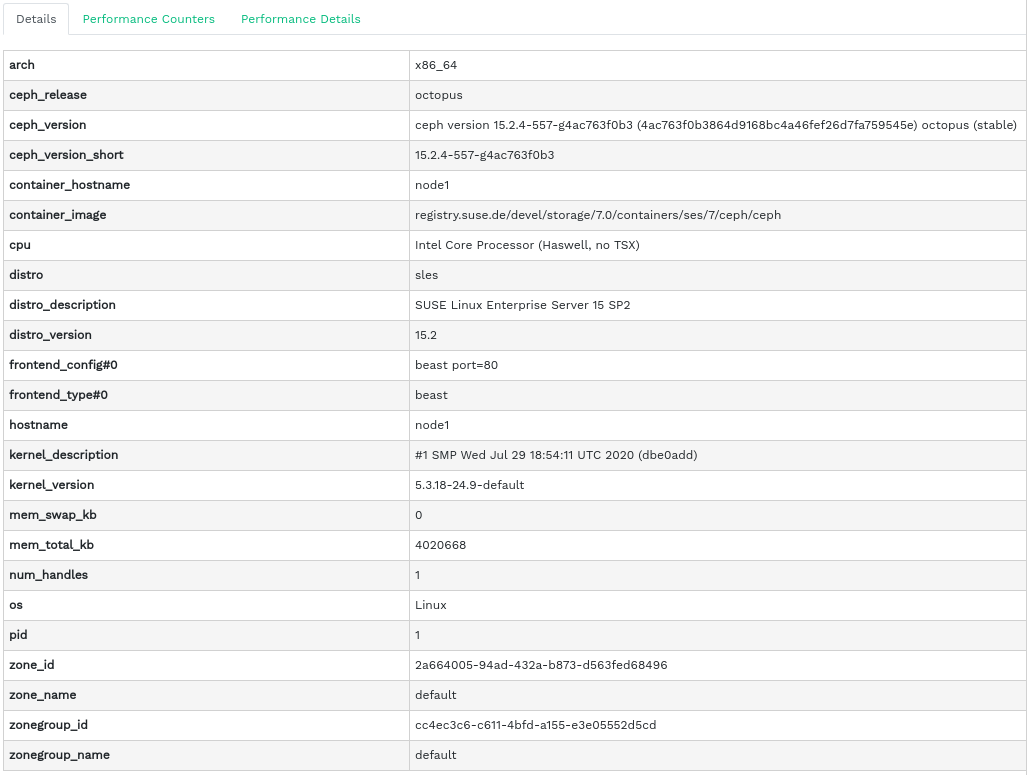

9.1 Viewing Object Gateways #

To view a list of configured Object Gateways, click › . The list includes the ID of the gateway, host name of the cluster node where the gateway daemon is running, and the gateway's version number.

Click the drop-down arrow next to the gateway's name to view detailed information about the gateway. The tab shows details about read/write operations and cache statistics.

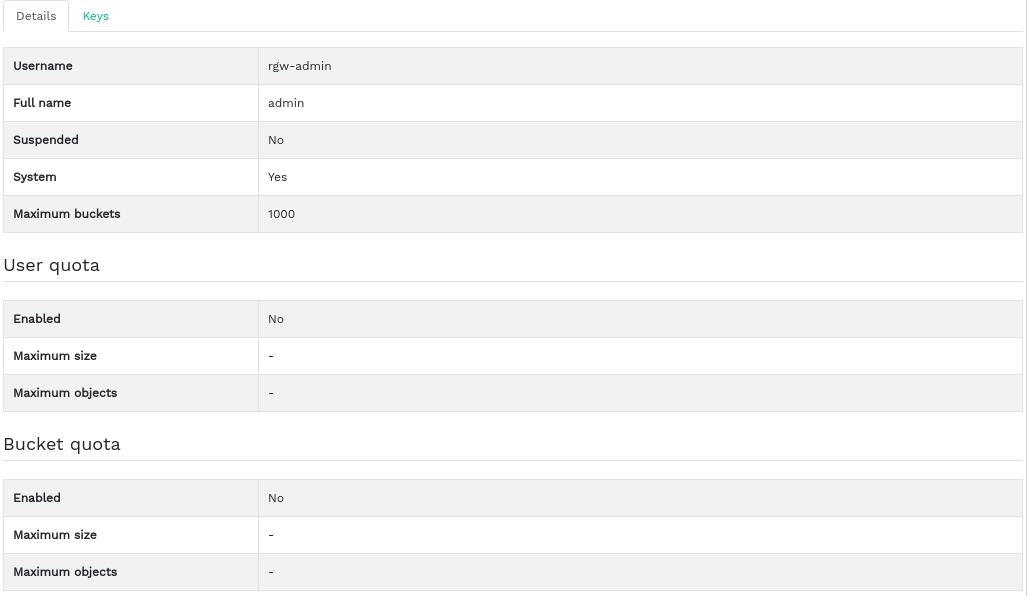

9.2 Managing Object Gateway users #

Click › to view a list of existing Object Gateway users.

Click the drop-down arrow next to the user name to view details about the user account, such as status information or the user and bucket quota details.

9.2.1 Adding a new gateway user #

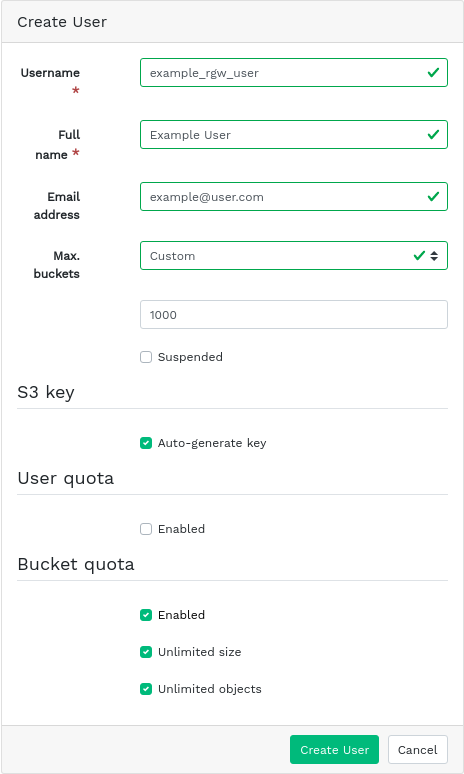

To add a new gateway user, click in the top left of the table heading. Fill in their credentials, details about the S3 key and user and bucket quotas, then confirm with .

9.2.2 Deleting gateway users #

To delete a gateway user, select and highlight the user. Click the drop-down button next to and select from the list to delete the user account. Activate the check box and confirm with .

9.2.3 Editing gateway user details #

To change gateway user details, select and highlight the user. Click in the top left of the table heading.

Modify basic or additional user information, such as their capabilities, keys, sub-users, and quota information. Confirm with .

The tab includes a read-only list of the gateway's users and their access and secret keys. To view the keys, click a user name in the list and then select in the top left of the table heading. In the dialog, click the 'eye' icon to unveil the keys, or click the clipboard icon to copy the related key to the clipboard.

9.3 Managing the Object Gateway buckets #

Object Gateway (OGW) buckets implement the functionality of OpenStack Swift containers. Object Gateway buckets serve as containers for storing data objects.

Click › to view a list of Object Gateway buckets.

9.3.1 Adding a new bucket #

To add a new Object Gateway bucket, click in the top left of the table heading. Enter the bucket's name, select the owner, and set the placement target. Confirm with .

At this stage you can also enable locking by selecting ; however, this is configurable after creation. See Section 9.3.3, “Editing the bucket” for more information.

9.3.2 Viewing bucket details #

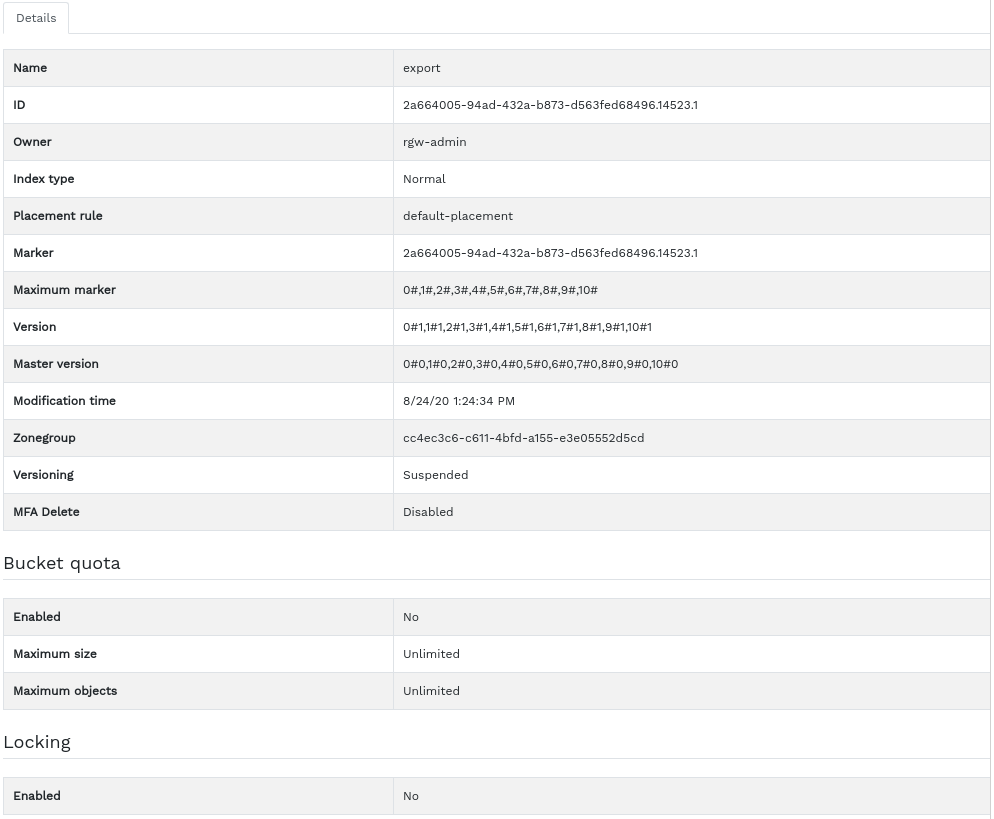

To view detailed information about an Object Gateway bucket, click the drop-down arrow next to the bucket name.

Below the table, you can find details about the bucket quota and locking settings.

9.3.3 Editing the bucket #

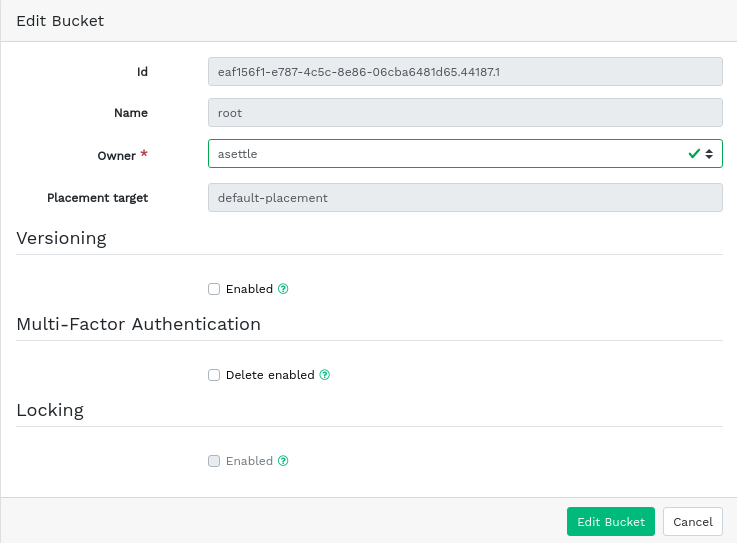

Select and highlight a bucket, then click in the top left of the table heading.

You can update the owner of the bucket or enable versioning, multi-factor authentication or locking. Confirm any changes with .

9.3.4 Deleting a bucket #

To delete an Object Gateway bucket, select and highlight the bucket. Click the drop-down button next to and select from the list to delete the bucket. Activate the check box and confirm with .

10 Manual configuration #

This section introduces advanced information for users that prefer configuring dashboard settings manually on the command line.

10.1 Configuring TLS/SSL support #

All HTTP connections to the dashboard are secured with TLS/SSL by default. A secure connection requires an SSL certificate. You can either use a self-signed certificate, or generate a certificate and have a well known certificate authority (CA) sign it.

You may want to disable the SSL support for a specific reason. For example, if the dashboard is running behind a proxy that does not support SSL.

Use caution when disabling SSL as user names and passwords will be sent to the dashboard unencrypted.

To disable SSL, run:

cephuser@adm > ceph config set mgr mgr/dashboard/ssl falseYou need to restart the Ceph Manager processes manually after changing the SSL certificate and key. You can do so by either running

cephuser@adm > ceph mgr fail ACTIVE-MANAGER-NAMEor by disabling and re-enabling the dashboard module, which also triggers the manager to respawn itself:

cephuser@adm >ceph mgr module disable dashboardcephuser@adm >ceph mgr module enable dashboard

10.1.1 Creating self-signed certificates #

Creating a self-signed certificate for secure communication is simple. This way you can get the dashboard running quickly.

Most Web browsers will complain about a self-signed certificate and require explicit confirmation before establishing a secure connection to the dashboard.

To generate and install a self-signed certificate, use the following built-in command:

cephuser@adm > ceph dashboard create-self-signed-cert10.1.2 Using certificates signed by CA #

To properly secure the connection to the dashboard and to eliminate Web browser complaints about a self-signed certificate, we recommend using a certificate that is signed by a CA.

You can generate a certificate key pair with a command similar to the following:

# openssl req -new -nodes -x509 \

-subj "/O=IT/CN=ceph-mgr-dashboard" -days 3650 \

-keyout dashboard.key -out dashboard.crt -extensions v3_ca

The above command outputs dashboard.key and

dashboard.crt files. After you get the

dashboard.crt file signed by a CA, enable it for all

Ceph Manager instances by running the following commands:

cephuser@adm >ceph dashboard set-ssl-certificate -i dashboard.crtcephuser@adm >ceph dashboard set-ssl-certificate-key -i dashboard.key

If you require different certificates for each Ceph Manager instance, modify the commands and include the name of the instance as follows. Replace NAME with the name of the Ceph Manager instance (usually the related host name):

cephuser@adm >ceph dashboard set-ssl-certificate NAME -i dashboard.crtcephuser@adm >ceph dashboard set-ssl-certificate-key NAME -i dashboard.key

10.2 Changing host name and port number #

The Ceph Dashboard binds to a specific TCP/IP address and TCP port. By default, the currently active Ceph Manager that hosts the dashboard binds to TCP port 8443 (or 8080 when SSL is disabled).

If a firewall is enabled on the hosts running Ceph Manager (and thus the Ceph Dashboard), you may need to change the configuration to enable access to these ports. For more information on firewall settings for Ceph, see Book “Troubleshooting Guide”, Chapter 13 “Hints and tips”, Section 13.7 “Firewall settings for Ceph”.

The Ceph Dashboard binds to "::" by default, which corresponds to all available IPv4 and IPv6 addresses. You can change the IP address and port number of the Web application so that they apply to all Ceph Manager instances by using the following commands:

cephuser@adm >ceph config set mgr mgr/dashboard/server_addr IP_ADDRESScephuser@adm >ceph config set mgr mgr/dashboard/server_port PORT_NUMBER

Since each ceph-mgr daemon hosts

its own instance of the dashboard, you may need to configure them

separately. Change the IP address and port number for a specific manager

instance by using the following commands (replace

NAME with the ID of the

ceph-mgr instance):

cephuser@adm >ceph config set mgr mgr/dashboard/NAME/server_addr IP_ADDRESScephuser@adm >ceph config set mgr mgr/dashboard/NAME/server_port PORT_NUMBER

The ceph mgr services command displays all endpoints

that are currently configured. Look for the dashboard

key to obtain the URL for accessing the dashboard.

10.3 Adjusting user names and passwords #

If you do not want to use the default administrator account, create a different user account and associate it with at least one role. We provide a set of predefined system roles that you can use. For more details refer to Chapter 11, Manage users and roles on the command line.

To create a user with administrator privileges, use the following command:

cephuser@adm > ceph dashboard ac-user-create USER_NAME PASSWORD administrator10.4 Enabling the Object Gateway management front-end #

To use the Object Gateway management functionality of the dashboard, you need to

provide the login credentials of a user with the system

flag enabled:

If you do not have a user with the

systemflag, create one:cephuser@adm >radosgw-admin user create --uid=USER_ID --display-name=DISPLAY_NAME --systemTake note of the access_key and secret_key keys in the output of the command.

You can also obtain the credentials of an existing user by using the

radosgw-admincommand:cephuser@adm >radosgw-admin user info --uid=USER_IDProvide the received credentials to the dashboard in separate files:

cephuser@adm >ceph dashboard set-rgw-api-access-key ACCESS_KEY_FILEcephuser@adm >ceph dashboard set-rgw-api-secret-key SECRET_KEY_FILE

By default the firewall is enabled in SUSE Linux Enterprise Server 15 SP3. For more information on firewall configuration, see Book “Troubleshooting Guide”, Chapter 13 “Hints and tips”, Section 13.7 “Firewall settings for Ceph”.

There are several points to consider:

The host name and port number of the Object Gateway are determined automatically.

If multiple zones are used, it will automatically determine the host within the master zonegroup and master zone. This is sufficient for most setups, but in some circumstances you may want to set the host name and port manually:

cephuser@adm >ceph dashboard set-rgw-api-host HOSTcephuser@adm >ceph dashboard set-rgw-api-port PORTThese are additional settings that you may need:

cephuser@adm >ceph dashboard set-rgw-api-scheme SCHEME # http or httpscephuser@adm >ceph dashboard set-rgw-api-admin-resource ADMIN_RESOURCEcephuser@adm >ceph dashboard set-rgw-api-user-id USER_IDIf you are using a self-signed certificate (Section 10.1, “Configuring TLS/SSL support”) in your Object Gateway setup, disable certificate verification in the dashboard to avoid refused connections caused by certificates signed by an unknown CA or not matching the host name:

cephuser@adm >ceph dashboard set-rgw-api-ssl-verify FalseIf the Object Gateway takes too long to process requests and the dashboard runs into timeouts, the timeout value can be adjusted (default is 45 seconds):

cephuser@adm >ceph dashboard set-rest-requests-timeout SECONDS

10.5 Enabling iSCSI management #

The Ceph Dashboard manages iSCSI targets using the REST API provided by the

rbd-target-api service of the

Ceph iSCSI gateway. Ensure it is installed and enabled on iSCSI

gateways.

The iSCSI management functionality of the Ceph Dashboard depends on the

latest version 3 of the ceph-iscsi project. Ensure that

your operating system provides the correct version, otherwise the

Ceph Dashboard will not enable the management features.

If the ceph-iscsi REST API is configured in HTTPS mode

and it is using a self-signed certificate, configure the dashboard to avoid

SSL certificate verification when accessing ceph-iscsi API.

Disable API SSL verification:

cephuser@adm > ceph dashboard set-iscsi-api-ssl-verification falseDefine the available iSCSI gateways:

cephuser@adm >ceph dashboard iscsi-gateway-listcephuser@adm >ceph dashboard iscsi-gateway-add scheme://username:password@host[:port]cephuser@adm >ceph dashboard iscsi-gateway-rm gateway_name

10.6 Enabling Single Sign-On #

Single Sign-On (SSO) is an access control method that enables users to log in with a single ID and password to multiple applications simultaneously.

The Ceph Dashboard supports external authentication of users via the SAML 2.0 protocol. Because authorization is still performed by the dashboard, you first need to create user accounts and associate them with the desired roles. However, the authentication process can be performed by an existing Identity Provider (IdP).

To configure Single Sign-On, use the following command:

cephuser@adm > ceph dashboard sso setup saml2 CEPH_DASHBOARD_BASE_URL \

IDP_METADATA IDP_USERNAME_ATTRIBUTE \

IDP_ENTITY_ID SP_X_509_CERT \

SP_PRIVATE_KEYParameters:

- CEPH_DASHBOARD_BASE_URL

Base URL where Ceph Dashboard is accessible (for example, 'https://cephdashboard.local').

- IDP_METADATA

URL, file path, or content of the IdP metadata XML (for example, 'https://myidp/metadata').

- IDP_USERNAME_ATTRIBUTE

Optional. Attribute that will be used to get the user name from the authentication response. Defaults to 'uid'.

- IDP_ENTITY_ID

Optional. Use when more than one entity ID exists on the IdP metadata.

- SP_X_509_CERT / SP_PRIVATE_KEY

Optional. File path or content of the certificate that will be used by Ceph Dashboard (Service Provider) for signing and encryption. These file paths need to be accessible from the active Ceph Manager instance.

The issuer value of SAML requests will follow this pattern:

CEPH_DASHBOARD_BASE_URL/auth/saml2/metadata

To display the current SAML 2.0 configuration, run:

cephuser@adm > ceph dashboard sso show saml2To disable Single Sign-On, run:

cephuser@adm > ceph dashboard sso disableTo check if SSO is enabled, run:

cephuser@adm > ceph dashboard sso statusTo enable SSO, run:

cephuser@adm > ceph dashboard sso enable saml211 Manage users and roles on the command line #

This section describes how to manage user accounts used by the Ceph Dashboard. It helps you create or modify user accounts, as well as set proper user roles and permissions.

11.1 Managing the password policy #

By default the password policy feature is enabled including the following checks:

Is the password longer than N characters?

Are the old and new password the same?

The password policy feature can be switched on or off completely:

cephuser@adm > ceph dashboard set-pwd-policy-enabled true|falseThe following individual checks can be switched on or off:

cephuser@adm >ceph dashboard set-pwd-policy-check-length-enabled true|falsecephuser@adm >ceph dashboard set-pwd-policy-check-oldpwd-enabled true|falsecephuser@adm >ceph dashboard set-pwd-policy-check-username-enabled true|falsecephuser@adm >ceph dashboard set-pwd-policy-check-exclusion-list-enabled true|falsecephuser@adm >ceph dashboard set-pwd-policy-check-complexity-enabled true|falsecephuser@adm >ceph dashboard set-pwd-policy-check-sequential-chars-enabled true|falsecephuser@adm >ceph dashboard set-pwd-policy-check-repetitive-chars-enabled true|false

In addition, the following options are available to configure the password policy behaviour.

The minimum password length (defaults to 8):

cephuser@adm >ceph dashboard set-pwd-policy-min-length NThe minimum password complexity (defaults to 10):

cephuser@adm >ceph dashboard set-pwd-policy-min-complexity NThe password complexity is calculated by classifying each character in the password.

A list of comma-separated words that are not allowed to be used in a password:

cephuser@adm >ceph dashboard set-pwd-policy-exclusion-list word[,...]

11.2 Managing user accounts #

The Ceph Dashboard supports managing multiple user accounts. Each user account

consists of a user name, a password (stored in encrypted form using

bcrypt), an optional name, and an optional e-mail

address.

User accounts are stored in Ceph Monitor's configuration database and are shared globally across all Ceph Manager instances.

Use the following commands to manage user accounts:

- Show existing users:

cephuser@adm >ceph dashboard ac-user-show [USERNAME]- Create a new user:

cephuser@adm >ceph dashboard ac-user-create USERNAME -i [PASSWORD_FILE] [ROLENAME] [NAME] [EMAIL]- Delete a user:

cephuser@adm >ceph dashboard ac-user-delete USERNAME- Change a user's password:

cephuser@adm >ceph dashboard ac-user-set-password USERNAME -i PASSWORD_FILE- Modify a user's name and email:

cephuser@adm >ceph dashboard ac-user-set-info USERNAME NAME EMAIL- Disable user

cephuser@adm >ceph dashboard ac-user-disable USERNAME- Enable User

cephuser@adm >ceph dashboard ac-user-enable USERNAME

11.3 User roles and permissions #

This section describes what security scopes you can assign to a user role, how to manage user roles and assign them to user accounts.

11.3.1 Defining security scopes #

User accounts are associated with a set of roles that define which parts of the dashboard can be accessed by the user. The dashboard parts are grouped within a security scope. Security scopes are predefined and static. The following security scopes are currently available:

- hosts

Includes all features related to the menu entry.

- config-opt

Includes all features related to the management of Ceph configuration options.

- pool

Includes all features related to pool management.

- osd

Includes all features related to the Ceph OSD management.

- monitor

Includes all features related to the Ceph Monitor management.

- rbd-image

Includes all features related to the RADOS Block Device image management.

- rbd-mirroring

Includes all features related to the RADOS Block Device mirroring management.

- iscsi

Includes all features related to iSCSI management.

- rgw

Includes all features related to the Object Gateway management.

- cephfs

Includes all features related to CephFS management.

- manager

Includes all features related to the Ceph Manager management.

- log

Includes all features related to Ceph logs management.

- grafana

Includes all features related to the Grafana proxy.

- prometheus

Include all features related to Prometheus alert management.

- dashboard-settings

Allows changing dashboard settings.

11.3.2 Specifying user roles #

A role specifies a set of mappings between a security scope and a set of permissions. There are four types of permissions: 'read', 'create', 'update', and 'delete'.

The following example specifies a role where a user has 'read' and 'create' permissions for features related to pool management, and has full permissions for features related to RBD image management:

{

'role': 'my_new_role',

'description': 'My new role',

'scopes_permissions': {

'pool': ['read', 'create'],

'rbd-image': ['read', 'create', 'update', 'delete']

}

}The dashboard already provides a set of predefined roles that we call system roles. You can instantly use them after a fresh Ceph Dashboard installation:

- administrator

Provides full permissions for all security scopes.

- read-only

Provides read permission for all security scopes except the dashboard settings.

- block-manager

Provides full permissions for 'rbd-image', 'rbd-mirroring', and 'iscsi' scopes.

- rgw-manager

Provides full permissions for the 'rgw' scope.

- cluster-manager

Provides full permissions for the 'hosts', 'osd', 'monitor', 'manager', and 'config-opt' scopes.

- pool-manager

Provides full permissions for the 'pool' scope.

- cephfs-manager

Provides full permissions for the 'cephfs' scope.

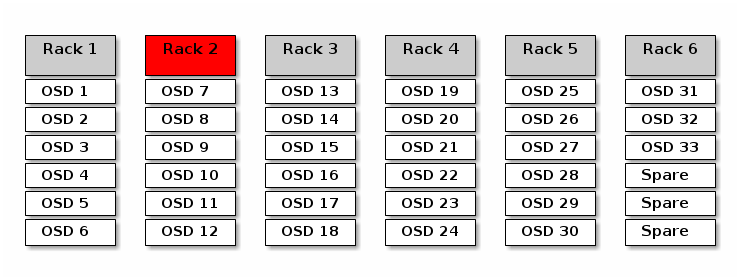

11.3.2.1 Managing custom roles #