Deployment of containerized Ceph clusters on SUSE CaaS Platform is released under limited availability for the SUSE Enterprise Storage 7.1 release.

For more details about the SUSE CaaS Platform product, see https://documentation.suse.com/suse-caasp/4.5/.

- About this guide

- I Quick Start: Deploying and Upgrading Ceph on SUSE CaaS Platform

- II Administrating Ceph on SUSE CaaS Platform

- III Troubleshooting Ceph on SUSE CaaS Platform

- A Ceph maintenance updates based on upstream 'Pacific' point releases

- Glossary

Copyright © 2020–2025 SUSE LLC and contributors. All rights reserved.

Except where otherwise noted, this document is licensed under Creative Commons Attribution-ShareAlike 4.0 International (CC-BY-SA 4.0): https://creativecommons.org/licenses/by-sa/4.0/legalcode.

For SUSE trademarks, see http://www.suse.com/company/legal/. All third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks.

All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof.

About this guide #

Part of the SUSE Enterprise Storage family is the Rook deployment tool, which runs on SUSE CaaS Platform. Rook allows you to deploy and run Ceph on top of Kubernetes, in order to provide container workloads with all their storage needs.

Deployment using Rook is currently in limited availability, meaning that it is only available to nominated and approved customers. For information on how to be nominated, contact your SUSE sales team.

Rook is a so-called storage operator: it automates many steps that you need to do manually in a "traditional" setup with cephadm. This guide explains how to install Rook after you have installed SUSE CaaS Platform, and how to administer it.

SUSE Enterprise Storage 7.1 is an extension to SUSE Linux Enterprise Server 15 SP3. It combines the capabilities of the Ceph (http://ceph.com/) storage project with the enterprise engineering and support of SUSE. SUSE Enterprise Storage 7.1 provides IT organizations with the ability to deploy a distributed storage architecture that can support a number of use cases using commodity hardware platforms.

1 Available documentation #

Documentation for our products is available at https://documentation.suse.com, where you can also find the latest updates, and browse or download the documentation in various formats. The latest documentation updates can be found in the English language version.

In addition, the product documentation is available in your installed system under /usr/share/doc/manual. It is included in an RPM package named ses-manual_LANG_CODE. Install it if it is not already on your system, for example:

# zypper install ses-manual_en

The following documentation is available for this product:

- Deployment Guide

This guide focuses on deploying a basic Ceph cluster and additional services. It also covers the steps for upgrading to SUSE Enterprise Storage 7.1 from the previous product version.

- Administration and Operations Guide

This guide focuses on routine tasks that you as an administrator need to take care of after the basic Ceph cluster has been deployed (day 2 operations). It also describes all the supported ways to access data stored in a Ceph cluster.

- Security Hardening Guide

This guide focuses on how to ensure your cluster is secure.

- Troubleshooting Guide

This guide takes you through various common problems when running SUSE Enterprise Storage 7.1, and through related issues with relevant components such as Ceph or Object Gateway.

- SUSE Enterprise Storage for Windows Guide

This guide describes the integration, installation, and configuration of Microsoft Windows environments and SUSE Enterprise Storage using the Windows Driver.

2 Improving the documentation #

Your feedback and contributions to this documentation are welcome. The following channels for giving feedback are available:

- Service requests and support

For services and support options available for your product, see http://www.suse.com/support/.

To open a service request, you need a SUSE subscription registered at SUSE Customer Center. Go to https://scc.suse.com/support/requests, log in, and open a new request.

- Bug reports

Report issues with the documentation at https://bugzilla.suse.com/. A Bugzilla account is required.

To simplify this process, you can use the links next to headlines in the HTML version of this document. These preselect the right product and category in Bugzilla and add a link to the current section. You can start typing your bug report right away.

- Contributions

To contribute to this documentation, use the links next to headlines in the HTML version of this document. They take you to the source code on GitHub, where you can open a pull request. A GitHub account is required.

Note: only available for English

The contribution links are only available for the English version of each document. For all other languages, use the bug report links instead.

For more information about the documentation environment used for this documentation, see the repository's README at https://github.com/SUSE/doc-ses.

You can also report errors and send feedback concerning the documentation to <doc-team@suse.com>. Include the document title, the product version, and the publication date of the document. Additionally, include the relevant section number and title (or provide the URL) and provide a concise description of the problem.

3 Documentation conventions #

The following notices and typographic conventions are used in this document:

- /etc/passwd: Directory names and file names

- PLACEHOLDER: Replace PLACEHOLDER with the actual value

- PATH: An environment variable

- ls, --help: Commands, options, and parameters

- user: The name of user or group

- package_name: The name of a software package

- Alt, Alt–F1: A key to press or a key combination. Keys are shown in uppercase as on a keyboard.

- Menu items and buttons; the › character separates the levels of a menu path.

- AMD/Intel: This paragraph is only relevant for the AMD64/Intel 64 architectures. The arrows mark the beginning and the end of the text block.

- IBM Z, POWER: This paragraph is only relevant for the architectures IBM Z and POWER. The arrows mark the beginning and the end of the text block.

- Chapter 1, “Example chapter”: A cross-reference to another chapter in this guide.

- Commands that must be run with root privileges. Often you can also prefix these commands with the sudo command to run them as non-privileged user.

# command
> sudo command

- Commands that can be run by non-privileged users.

> command

- Notices

Warning: Warning notice

Vital information you must be aware of before proceeding. Warns you about security issues, potential loss of data, damage to hardware, or physical hazards.

Important: Important notice

Important information you should be aware of before proceeding.

Note: Note notice

Additional information, for example about differences in software versions.

Tip: Tip notice

Helpful information, like a guideline or a piece of practical advice.

Compact Notices

Additional information, for example about differences in software versions.

Helpful information, like a guideline or a piece of practical advice.

4 Support #

Find the support statement for SUSE Enterprise Storage and general information about technology previews below. For details about the product lifecycle, see https://www.suse.com/lifecycle.

If you are entitled to support, find details on how to collect information for a support ticket at https://documentation.suse.com/sles-15/html/SLES-all/cha-adm-support.html.

4.1 Support statement for SUSE Enterprise Storage #

To receive support, you need an appropriate subscription with SUSE. To view the specific support offerings available to you, go to https://www.suse.com/support/ and select your product.

The support levels are defined as follows:

- L1

Problem determination, which means technical support designed to provide compatibility information, usage support, ongoing maintenance, information gathering and basic troubleshooting using available documentation.

- L2

Problem isolation, which means technical support designed to analyze data, reproduce customer problems, isolate problem area and provide a resolution for problems not resolved by Level 1 or prepare for Level 3.

- L3

Problem resolution, which means technical support designed to resolve problems by engaging engineering to resolve product defects which have been identified by Level 2 Support.

For contracted customers and partners, SUSE Enterprise Storage is delivered with L3 support for all packages, except for the following:

Technology previews.

Sound, graphics, fonts, and artwork.

Packages that require an additional customer contract.

Some packages shipped as part of the module Workstation Extension are L2-supported only.

Packages with names ending in -devel (containing header files and similar developer resources) will only be supported together with their main packages.

SUSE will only support the usage of original packages. That is, packages that are unchanged and not recompiled.

4.2 Technology previews #

Technology previews are packages, stacks, or features delivered by SUSE to provide glimpses into upcoming innovations. Technology previews are included for your convenience to give you a chance to test new technologies within your environment. We would appreciate your feedback! If you test a technology preview, please contact your SUSE representative and let them know about your experience and use cases. Your input is helpful for future development.

Technology previews have the following limitations:

Technology previews are still in development. Therefore, they may be functionally incomplete, unstable, or in other ways not suitable for production use.

Technology previews are not supported.

Technology previews may only be available for specific hardware architectures.

Details and functionality of technology previews are subject to change. As a result, upgrading to subsequent releases of a technology preview may be impossible and require a fresh installation.

SUSE may discover that a preview does not meet customer or market needs, or does not comply with enterprise standards. Technology previews can be removed from a product at any time. SUSE does not commit to providing a supported version of such technologies in the future.

For an overview of technology previews shipped with your product, see the release notes at https://www.suse.com/releasenotes/x86_64/SUSE-Enterprise-Storage/7.1.

5 Ceph contributors #

The Ceph project and its documentation are the result of the work of hundreds of contributors and organizations. See https://ceph.com/contributors/ for more details.

6 Commands and command prompts used in this guide #

As a Ceph cluster administrator, you will be configuring and adjusting the cluster behavior by running specific commands. There are several types of commands you will need:

6.1 Salt-related commands #

These commands help you to deploy Ceph cluster nodes, run commands on several (or all) cluster nodes at the same time, or assist you when adding or removing cluster nodes. The most frequently used commands are ceph-salt and ceph-salt config. You need to run Salt commands on the Salt Master node as root. These commands are introduced with the following prompt:

root@master #

For example:

root@master # ceph-salt config ls

6.2 Ceph related commands #

These are lower-level commands to configure and fine-tune all aspects of the cluster and its gateways on the command line, for example ceph, cephadm, rbd, or radosgw-admin.

To run Ceph related commands, you need to have read access to a Ceph key. The key's capabilities then define your privileges within the Ceph environment. One option is to run Ceph commands as root (or via sudo) and use the unrestricted default keyring 'ceph.client.admin.key'.

The safer and recommended option is to create a more restrictive individual key for each administrator user and put it in a directory where the users can read it, for example:

~/.ceph/ceph.client.USERNAME.keyring

To use a custom admin user and keyring, you need to specify the user name and path to the key each time you run the ceph command using the -n client.USER_NAME and --keyring PATH/TO/KEYRING options.

To avoid this, include these options in the CEPH_ARGS variable in the individual users' ~/.bashrc files.
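As a minimal sketch of the CEPH_ARGS approach, the following lines could be appended to a user's ~/.bashrc. The user name alice is a hypothetical placeholder, and the keyring path assumes the ~/.ceph/ceph.client.USERNAME.keyring layout suggested above.

```shell
# Hypothetical user name; replace it with your own restricted Ceph client.
ceph_user="alice"

# Pass the client name and keyring to every ceph invocation in this shell,
# using the -n and --keyring options described above.
export CEPH_ARGS="-n client.${ceph_user} --keyring ${HOME}/.ceph/ceph.client.${ceph_user}.keyring"

# Show the resulting value for review.
echo "$CEPH_ARGS"
```

With the variable exported, a plain ceph command in that shell no longer needs the two options on the command line.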

Although you can run Ceph-related commands on any cluster node, we recommend running them on the Admin Node. This documentation uses the cephuser user to run the commands, therefore they are introduced with the following prompt:

cephuser@adm >

For example:

cephuser@adm > ceph auth list

If the documentation instructs you to run a command on a cluster node with a specific role, it will be addressed by the prompt. For example:

cephuser@mon >

6.2.1 Running ceph-volume #

Starting with SUSE Enterprise Storage 7, Ceph services are running containerized. If you need to run ceph-volume on an OSD node, you need to prepend it with the cephadm command, for example:

cephuser@adm > cephadm ceph-volume simple scan

6.3 General Linux commands #

Linux commands not related to Ceph, such as mount, cat, or openssl, are introduced either with the cephuser@adm > or # prompts, depending on which privileges the related command requires.

6.4 Additional information #

For more information on Ceph key management, refer to Book “Administration and Operations Guide”, Chapter 30 “Authentication with cephx”, Section 30.2 “Key management”.

Part I Quick Start: Deploying and Upgrading Ceph on SUSE CaaS Platform #

- 1 Quick start

SUSE Enterprise Storage is a distributed storage system designed for scalability, reliability, and performance, which is based on the Ceph technology. The traditional way to run a Ceph cluster is setting up a dedicated cluster to provide block, file, and object storage to a variety of clients.

- 2 Updating Rook

This chapter describes how to update containerized SUSE Enterprise Storage 7 on top of a SUSE CaaS Platform 4.5 Kubernetes cluster.

1 Quick start #

SUSE Enterprise Storage is a distributed storage system designed for scalability, reliability, and performance, which is based on the Ceph technology. The traditional way to run a Ceph cluster is setting up a dedicated cluster to provide block, file, and object storage to a variety of clients.

Rook manages Ceph as a containerized application on Kubernetes and allows a hyper-converged setup, in which a single Kubernetes cluster runs applications and storage together. The primary purpose of SUSE Enterprise Storage deployed with Rook is to provide storage to other applications running in the Kubernetes cluster. This can be block, file, or object storage.

This chapter describes how to quickly deploy containerized SUSE Enterprise Storage 7.1 on top of a SUSE CaaS Platform 4.5 Kubernetes cluster.

1.1 Recommended hardware specifications #

For SUSE Enterprise Storage deployed with Rook, the minimal configuration is preliminary; we will update it based on real customer needs.

For the purpose of this document, consider the following minimum configuration:

A highly available Kubernetes cluster with 3 master nodes

Four physical Kubernetes worker nodes, each with two OSD disks and 5 GB of RAM per OSD disk

Allow additional 4 GB of RAM per additional daemon deployed on a node

Dual-10 Gb Ethernet as bonded network

If you are running a hyper-converged infrastructure (HCI), ensure you add any additional requirements for your workloads.
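The RAM figures above can be turned into a quick per-node estimate. The sketch below is only a worked example: the number of additional daemons is a hypothetical value, and workload memory for HCI setups still has to be added on top.

```shell
# Figures taken from the minimum configuration above.
osd_disks=2            # OSD disks per worker node
ram_per_osd_gb=5       # RAM per OSD disk
ram_per_daemon_gb=4    # RAM per additional daemon on the node

# Hypothetical assumption: one additional daemon (for example a MON) on this node.
extra_daemons=1

node_ram_gb=$(( osd_disks * ram_per_osd_gb + extra_daemons * ram_per_daemon_gb ))
echo "Minimum RAM for Ceph on this node: ${node_ram_gb} GB"
```

For the assumed values, this gives 2 x 5 GB + 1 x 4 GB = 14 GB per worker node.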

1.2 Prerequisites #

Ensure the following prerequisites are met before continuing with this quickstart guide:

Installation of SUSE CaaS Platform 4.5. See the SUSE CaaS Platform documentation for more details on how to install: https://documentation.suse.com/en-us/suse-caasp/4.5/.

Ensure ceph-csi (and required sidecars) are running in your Kubernetes cluster.

Installation of the LVM2 package on the host where the OSDs are running.

Ensure you have one of the following storage options to configure Ceph properly:

Raw devices (no partitions or formatted file systems)

Raw partitions (no formatted file system)

Ensure the SUSE CaaS Platform 4.5 repository is enabled for the installation of Helm 3.

1.3 Getting started with Rook #

The following instructions are designed for a quick start deployment only. For more information on installing Helm, see https://documentation.suse.com/en-us/suse-caasp/4.5/.

Install Helm v3:

# zypper in helm

On a node with access to the Kubernetes cluster, execute the following:

> export HELM_EXPERIMENTAL_OCI=1

Create a local copy of the Helm chart to your local registry:

> helm chart pull registry.suse.com/ses/7.1/charts/rook-ceph:latest

If you are using a version of Helm >= 3.7, you do not need to specify the subcommand chart. The protocol is also explicit and the version is presumed to be latest.

> helm pull oci://registry.suse.com/ses/7.1/charts/rook-ceph

Export the Helm charts to a Rook-Ceph sub-directory under your current working directory:

> helm chart export registry.suse.com/ses/7.1/charts/rook-ceph:latest

For Helm versions >= 3.7, you just need to extract the tarball yourself:

> tar -xzf rook-ceph-1.8.6.tar.gz

Create a file named myvalues.yaml based off the rook-ceph/values.yaml file.

Set local parameters in myvalues.yaml.

Create the namespace:

kubectl@adm > kubectl create namespace rook-ceph

Install the helm charts:

> helm install -n rook-ceph rook-ceph ./rook-ceph/ -f myvalues.yaml

Verify the rook-operator is running:

kubectl@adm > kubectl -n rook-ceph get pod -l app=rook-ceph-operator

1.4 Deploying Ceph with Rook #

You need to apply labels to your Kubernetes nodes before deploying your Ceph cluster. The key node-role.rook-ceph/cluster accepts one of the following values:

- any

- mon

- mon-mgr

- mon-mgr-osd

Run the following to get the names of your cluster's nodes:

kubectl@adm > kubectl get nodes

On the Master node, run the following:

kubectl@adm > kubectl label nodes node-name label-key=label-value

For example:

kubectl@adm > kubectl label node k8s-worker-node-1 node-role.rook-ceph/cluster=any

Verify the application of the label by re-running the following command:

kubectl@adm > kubectl get nodes --show-labels

You can also use the describe command to get the full list of labels given to the node. For example:

kubectl@adm > kubectl describe node node-name

Next, you need to apply a Ceph cluster manifest file, for example, cluster.yaml, to your Kubernetes cluster. You can apply the example cluster.yaml as is without any additional services or requirements from the Rook Helm chart.

To apply the example Ceph cluster manifest to your Kubernetes cluster, run the following command:

> kubectl create -f rook-ceph/examples/cluster.yaml
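Because the node-role.rook-ceph/cluster key accepts only a fixed set of values, a small guard can catch typos before a node is labeled. The following is a sketch only: the function name is_valid_rook_role and the variables are hypothetical helpers, and the script prints the kubectl label command for review instead of running it.

```shell
# Hypothetical helper: succeeds only for the values listed in this section.
is_valid_rook_role() {
  case "$1" in
    any|mon|mon-mgr|mon-mgr-osd) return 0 ;;
    *) return 1 ;;
  esac
}

# Example inputs; the node name is the one used in the example above.
node="k8s-worker-node-1"
role="mon-mgr"

if is_valid_rook_role "$role"; then
  # Build the command and print it so it can be checked before execution.
  label_cmd="kubectl label node ${node} node-role.rook-ceph/cluster=${role}"
  echo "$label_cmd"
else
  echo "unsupported role: ${role}" >&2
fi
```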

1.5 Configuring the Ceph cluster #

You can have two types of integration with your SUSE Enterprise Storage integrated cluster: CephFS or RADOS Block Device (RBD).

Before you start the SUSE CaaS Platform and SUSE Enterprise Storage integration, ensure you have met the following prerequisites:

The SUSE CaaS Platform cluster must have ceph-common and xfsprogs installed on all nodes. You can check this by running the rpm -q ceph-common command or the rpm -q xfsprogs command.

The SUSE Enterprise Storage cluster must have a pool with an RBD device or CephFS enabled.

1.5.1 Configure CephFS #

For steps and more information on configuring CephFS, see https://documentation.suse.com/en-us/suse-caasp/4.5/. That documentation also provides the procedure for attaching a pod to either a CephFS static or dynamic volume.

1.5.2 Configure RADOS block device #

For instructions on configuring the RADOS Block Device (RBD) in a pod, see https://documentation.suse.com/en-us/suse-caasp/4.5/. That documentation also provides the procedure for attaching a pod to either an RBD static or dynamic volume.

1.6 Updating local images #

To update your local image to the latest tag, apply the new parameters in myvalues.yaml:

image:
  prefix: rook
  repository: registry.suse.com/ses/7.1/rook/ceph
  tag: LATEST_TAG
  pullPolicy: IfNotPresent

Re-pull a new local copy of the Helm chart to your local registry:

> helm3 chart pull REGISTRY_URL

Export the Helm charts to a Rook-Ceph sub-directory under your current working directory:

> helm3 chart export REGISTRY_URL

Upgrade the Helm charts:

> helm3 upgrade -n rook-ceph rook-ceph ./rook-ceph/ -f myvalues.yaml

1.7 Uninstalling #

Delete any Kubernetes applications that are consuming Rook storage.

Delete all object, file, and block storage artifacts.

Remove the CephCluster:

kubectl@adm > kubectl delete -f cluster.yaml

Uninstall the operator:

> helm uninstall REGISTRY_URL

Or, if you are using Helm >= 3.7:

> helm uninstall -n rook-ceph rook-ceph

Delete any data on the hosts:

> rm -rf /var/lib/rook

Wipe the disks if necessary.

Delete the namespace:

> kubectl delete namespace rook-ceph

2 Updating Rook #

This chapter describes how to update containerized SUSE Enterprise Storage 7 on top of a SUSE CaaS Platform 4.5 Kubernetes cluster.

This chapter takes you through the steps to update the software in a Rook-Ceph cluster from one version to the next. This includes both the Rook-Ceph Operator software itself as well as the Ceph cluster software.


2.1 Recommended hardware specifications #

For SUSE Enterprise Storage deployed with Rook, the minimal configuration is preliminary; we will update it based on real customer needs.

For the purpose of this document, consider the following minimum configuration:

A highly available Kubernetes cluster with 3 master nodes

Four physical Kubernetes worker nodes, each with two OSD disks and 5 GB of RAM per OSD disk

Allow additional 4 GB of RAM per additional daemon deployed on a node

Dual-10 Gb ethernet as bonded network

If you are running a hyper-converged infrastructure (HCI), ensure you add any additional requirements for your workloads.

2.2 Patch release upgrades #

To update from one patch release of Rook to another, you need to update the common resources and the image of the Rook Operator.

Get the latest common resource manifests that contain the relevant changes to the latest version:

# zypper in rook-k8s-yaml
> cd /usr/share/k8s-yaml/rook/ceph/

Apply the latest changes from the next version and update the Rook Operator image:

kubectl@adm > helm upgrade -n rook-ceph rook-ceph ./rook-ceph/ -f myvalues.yaml
kubectl@adm > kubectl -n rook-ceph set image deploy/rook-ceph-operator rook-ceph-operator=registry.suse.com/ses/7.1/rook/ceph:version-number

Upgrade the Ceph version:

kubectl@adm > kubectl -n ROOK_CLUSTER_NAMESPACE patch CephCluster CLUSTER_NAME --type=merge -p "{\"spec\": {\"cephVersion\": {\"image\": \"registry.suse.com/ses/7.1/ceph/ceph:version-number\"}}}"

Mark the Ceph cluster to only support the updated version:

# from a ceph-toolbox
# ceph osd require-osd-release version-name

We recommend updating the Rook-Ceph common resources from the example manifests before any update. The common resources and CRDs might not be updated with every release, but K8s will only apply updates to the ones that changed.
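The escaped quotes in the merge patch are easy to get wrong when typing the command by hand. One possible way to avoid them is to build the JSON payload in a variable first, as in the sketch below. The variable names are hypothetical, and version-number remains the same placeholder used in the steps above.

```shell
# Placeholder image reference, exactly as in the steps above; replace
# version-number with the real tag before using the patch.
ceph_image="registry.suse.com/ses/7.1/ceph/ceph:version-number"

# Build the merge patch with printf so that no quote escaping is needed.
patch=$(printf '{"spec": {"cephVersion": {"image": "%s"}}}' "$ceph_image")

# Print the payload for review.
echo "$patch"

# It could then be passed to the command from the steps above:
#   kubectl -n ROOK_CLUSTER_NAMESPACE patch CephCluster CLUSTER_NAME --type=merge -p "$patch"
```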

2.3 Rook-Ceph Updates #

This is a general guide for updating your Rook cluster. For detailed instructions on updating to each supported version, refer to the upstream Rook upgrade documentation: https://rook.io/docs/rook/v1.8/ceph-upgrade.html.

To successfully upgrade a Rook cluster, the following prerequisites must be met:

Cluster health status is healthy with full functionality.

All pods consuming Rook storage should be created, running, and in a steady state.

Each version upgrade has specific details outlined in the Rook documentation. Use the following steps as a base guideline.

These methods should work for any number of Rook-Ceph clusters and Rook Operators as long as you parameterize the environment correctly. Merely repeat these steps for each Rook-Ceph cluster (ROOK_CLUSTER_NAMESPACE), and be sure to update the ROOK_OPERATOR_NAMESPACE parameter each time if applicable.

Update common resources and CRDs.

Update Ceph CSI versions.

Update the Rook Operator.

Wait for the upgrade to complete and verify the updated cluster.

Update CephRBDMirror and CephBlockPool configuration options.

Part II Administrating Ceph on SUSE CaaS Platform #

- 3 Rook-Ceph administration

This part of the guide focuses on routine tasks that you as an administrator need to take care of after the basic Ceph cluster has been deployed ("day two operations"). It also describes all the supported ways to access data stored in a Ceph cluster.

- 4 Ceph cluster administration

This chapter introduces tasks that are performed on the whole cluster.

- 5 Block Storage

Block Storage allows a single pod to mount storage. This guide shows how to create a simple, multi-tier web application on Kubernetes using persistent volumes enabled by Rook.

- 6 CephFS

A shared file system can be mounted with read/write permission from multiple pods. This may be useful for applications which can be clustered using a shared file system.

- 7 Ceph cluster custom resource definitions

Rook allows the creation and customization of storage clusters through Custom Resource Definitions (CRDs). There are two different methods of cluster creation, depending on whether the storage on which to base the Ceph cluster can be dynamically provisioned.

- 8 Configuration

For almost any Ceph cluster, the user will want, and may need, to change some Ceph configurations. These changes are often warranted in order to alter performance to meet SLAs, or to update default data resiliency settings.

- 9 Toolboxes

The Rook toolbox is a container with common tools used for Rook debugging and testing. The toolbox is based on SUSE Linux Enterprise Server, so more tools of your choosing can be installed with zypper.

- 10 Ceph OSD management

Ceph Object Storage Daemons (OSDs) are the heart and soul of the Ceph storage platform. Each OSD manages a local device and together they provide the distributed storage. Rook will automate creation and management of OSDs to hide the complexity based on the desired state in the CephCluster CR as muc…

- 11 Ceph examples

Configuration for Rook and Ceph can be configured in multiple ways to provide block devices, shared file system volumes, or object storage in a Kubernetes namespace. We have provided several examples to simplify storage setup, but remember there are many tunables and you will need to decide what set…

- 12 Advanced configuration

These examples show how to perform advanced configuration tasks on your Rook storage cluster.

- 13 Object Storage

Object Storage exposes an S3 API to the storage cluster for applications to put and get data.

- 14 Ceph Dashboard

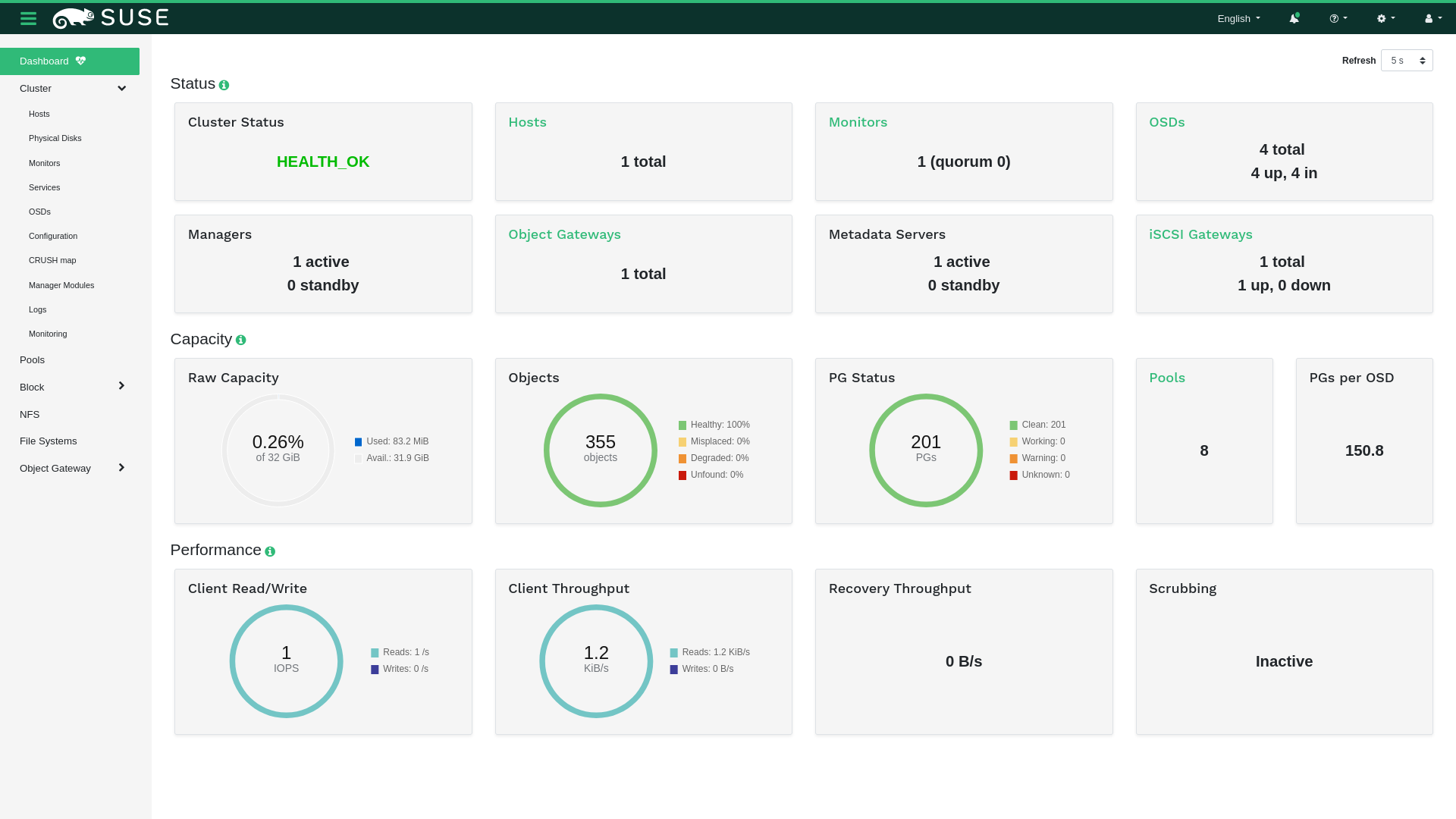

The Ceph Dashboard is a helpful tool to give you an overview of the status of your Ceph cluster, including overall health, status of the MON quorum, status of the MGR, OSD, and other Ceph daemons, view pools and PG status, show logs for the daemons, and more. Rook makes it simple to enable the dash…

3 Rook-Ceph administration #

This part of the guide focuses on routine tasks that you as an administrator need to take care of after the basic Ceph cluster has been deployed ("day two operations"). It also describes all the supported ways to access data stored in a Ceph cluster.

The chapters in this part contain links to additional documentation resources. These include additional documentation that is available on the system, as well as documentation available on the Internet.

For an overview of the documentation available for your product and the latest documentation updates, refer to https://documentation.suse.com.

4 Ceph cluster administration #

This chapter introduces tasks that are performed on the whole cluster.

4.1 Shutting down and restarting the cluster #

To shut down the whole Ceph cluster for planned maintenance tasks, follow these steps:

Stop all clients that are using the cluster.

Verify that the cluster is in a healthy state. Use the following commands:

cephuser@adm > ceph status
cephuser@adm > ceph health

Set the following OSD flags:

cephuser@adm > ceph osd set noout
cephuser@adm > ceph osd set nobackfill
cephuser@adm > ceph osd set norecover

Shut down service nodes one by one (non-storage workers).

Shut down Ceph Monitor nodes one by one (masters by default).

Shut down the Admin Node (masters).

After you finish the maintenance, you can start the cluster again by running the above procedure in reverse order.
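Running the procedure in reverse also means clearing the OSD flags again once the nodes are back up, in the opposite order to the one in which they were set. The sketch below only generates the corresponding ceph osd unset commands and prints them for review; it does not contact the cluster. The variable names are hypothetical.

```shell
# Flags in the order they were set during shutdown.
flags="noout nobackfill norecover"

# Reverse the list so that the last flag set is the first one cleared.
reversed=""
for flag in $flags; do
  reversed="${flag} ${reversed}"
done

# Build one "ceph osd unset" command per flag, one per line.
unset_cmds=""
for flag in $reversed; do
  unset_cmds="${unset_cmds}ceph osd unset ${flag}
"
done

# Print the commands; run them on the Admin Node after all nodes are up.
printf '%s' "$unset_cmds"
```

After the flags are cleared, ceph status and ceph health can be used again to confirm that the cluster has returned to a healthy state.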

5 Block Storage #

Block Storage allows a single pod to mount storage. This guide shows how to create a simple, multi-tier web application on Kubernetes using persistent volumes enabled by Rook.

5.1 Provisioning Block Storage #

Before Rook can provision storage, a StorageClass and a CephBlockPool need to be created. This will allow Kubernetes to interoperate with Rook when provisioning persistent volumes.

This sample requires at least three OSDs, each located on a different node. Each OSD must be located on a different node, because the failureDomain is set to host and the replicated.size is set to 3.

This example uses the CSI driver, which is the preferred driver going forward for Kubernetes 1.13 and newer. Examples are found in the CSI RBD directory.

Save this StorageClass definition as storageclass.yaml:

apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicapool
  namespace: rook-ceph
spec:
  failureDomain: host
  replicated:
    size: 3
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-ceph-block
# Change "rook-ceph" provisioner prefix to match the operator namespace if needed
provisioner: rook-ceph.rbd.csi.ceph.com
parameters:
  # clusterID is the namespace where the rook cluster is running
  clusterID: rook-ceph
  # Ceph pool into which the RBD image shall be created
  pool: replicapool
  # RBD image format. Defaults to "2".
  imageFormat: "2"
  # RBD image features. Available for imageFormat: "2". CSI RBD currently supports only `layering` feature.
  imageFeatures: layering
  # The secrets contain Ceph admin credentials.
  csi.storage.k8s.io/provisioner-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
  csi.storage.k8s.io/controller-expand-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/controller-expand-secret-namespace: rook-ceph
  csi.storage.k8s.io/node-stage-secret-name: rook-csi-rbd-node
  csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph
  # Specify the filesystem type of the volume. If not specified, csi-provisioner
  # will set default as `ext4`. Note that `xfs` is not recommended due to potential deadlock
  # in hyperconverged settings where the volume is mounted on the same node as the osds.
  csi.storage.k8s.io/fstype: ext4
# Delete the rbd volume when a PVC is deleted
reclaimPolicy: Delete

If you have deployed the Rook operator in a namespace other than “rook-ceph”, change the prefix in the provisioner to match the namespace you used. For example, if the Rook operator is running in the namespace “my-namespace” the provisioner value should be “my-namespace.rbd.csi.ceph.com”.

Create the storage class.

kubectl@adm > kubectl create -f cluster/examples/kubernetes/ceph/csi/rbd/storageclass.yaml

As specified by Kubernetes, when using the Retain reclaim policy, any Ceph RBD image that is backed by a PersistentVolume will continue to exist even after the PersistentVolume has been deleted. These Ceph RBD images will need to be cleaned up manually using rbd rm.
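To illustrate how an application requests a volume from this storage class, the following sketch writes a minimal PersistentVolumeClaim manifest to a file. Only the storage class name rook-ceph-block comes from the definition above; the file name demo-pvc.yaml, the claim name, and the requested size are hypothetical values chosen for illustration.

```shell
# Write a minimal claim that refers to the rook-ceph-block storage class.
# The claim name and the 1Gi size are hypothetical example values.
cat > demo-pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-pvc
spec:
  storageClassName: rook-ceph-block
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
EOF

# Show the manifest for review; it could then be applied with:
#   kubectl create -f demo-pvc.yaml
cat demo-pvc.yaml
```

The ReadWriteOnce access mode matches the way block storage is used in this chapter, where a single pod mounts the volume.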

5.2 Consuming storage: WordPress sample #

In this example, we will create a sample application to consume the block storage provisioned by Rook with the classic WordPress and MySQL apps. Both of these applications will make use of block volumes provisioned by Rook.

Start MySQL and WordPress from the cluster/examples/kubernetes folder:

kubectl@adm > kubectl create -f mysql.yaml

kubectl@adm > kubectl create -f wordpress.yaml

Both of these applications create a block volume and mount it to their respective pod. You can see the Kubernetes volume claims by running the following:

kubectl@adm > kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESSMODES AGE

mysql-pv-claim Bound pvc-95402dbc-efc0-11e6-bc9a-0cc47a3459ee 20Gi RWO 1m

wp-pv-claim Bound pvc-39e43169-efc1-11e6-bc9a-0cc47a3459ee 20Gi RWO 1m

Once the WordPress and MySQL pods are in the Running state, get the cluster IP of the WordPress app and enter it in your browser:

kubectl@adm > kubectl get svc wordpress

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

wordpress 10.3.0.155 <pending> 80:30841/TCP 2m

You should see the WordPress application running.

If you are using Minikube, the WordPress URL can be retrieved with this one-line command:

kubectl@adm > echo http://$(minikube ip):$(kubectl get service wordpress -o jsonpath='{.spec.ports[0].nodePort}')

When running in a Vagrant environment, there will be no external IP address to reach WordPress with. You will only be able to reach WordPress via the CLUSTER-IP from inside the Kubernetes cluster.

5.3 Consuming the storage: Toolbox #

With the pool that was created above, we can also create a block image and mount it directly in a pod.

5.4 Teardown #

To clean up all the artifacts created by the block-storage demonstration:

kubectl@adm > kubectl delete -f wordpress.yaml

kubectl@adm > kubectl delete -f mysql.yaml

kubectl@adm > kubectl delete -n rook-ceph cephblockpools.ceph.rook.io replicapool

kubectl@adm > kubectl delete storageclass rook-ceph-block

5.5 Advanced Example: Erasure-Coded Block Storage #

If you want to use erasure-coded pools with RBD, your OSDs must use bluestore as their storeType. Additionally, the nodes that will mount the erasure-coded RBD block storage must have Linux kernel 4.11 or above.

This example requires at least three bluestore OSDs, with each OSD located on a different node. The OSDs must be located on different nodes, because the failureDomain is set to host and the erasureCoded chunk settings require at least three different OSDs (two dataChunks plus one codingChunk).

To be able to use an erasure-coded pool, you need to create two pools (as seen below in the definitions): one erasure-coded, and one replicated.
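The two pools described above could be defined roughly as follows. This is a minimal sketch, not the shipped example file: the pool names replicated-metadata-pool and ec-data-pool and the replica size of 3 are assumptions chosen for illustration, while the chunk counts and the host failure domain follow the requirements stated earlier in this section.

```yaml
# Replicated pool (assumed name) for the RBD image metadata.
apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicated-metadata-pool
  namespace: rook-ceph
spec:
  failureDomain: host
  replicated:
    size: 3            # assumed replica count
---
# Erasure-coded pool (assumed name) for the RBD image data.
apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: ec-data-pool
  namespace: rook-ceph
spec:
  failureDomain: host
  erasureCoded:
    dataChunks: 2      # two data chunks ...
    codingChunks: 1    # ... plus one coding chunk = three OSDs on three nodes
```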

5.5.1 Erasure coded CSI driver #

The erasure-coded pool must be set as the dataPool parameter in storageclass-ec.yaml. It is used for the data of the RBD images.
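As an illustration, the relevant excerpt of the storage class parameters might look like the sketch below. The pool names are hypothetical placeholders; substitute the names of your own erasure-coded and replicated pools. All other parameters stay as in the replicated-pool storage class.

```yaml
# Excerpt of StorageClass parameters (sketch; pool names are assumptions).
parameters:
  clusterID: rook-ceph
  # Erasure-coded pool that stores the data of the RBD images.
  dataPool: ec-data-pool
  # Replicated pool that stores the metadata of the RBD images.
  pool: replicated-metadata-pool
```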

6 CephFS #

7 Ceph cluster custom resource definitions #

7.1 Ceph cluster CRD #

Rook allows the creation and customization of storage clusters through Custom Resource Definitions (CRDs). There are two different methods of cluster creation, depending on whether the storage on which to base the Ceph cluster can be dynamically provisioned.

- Specify the host paths and raw devices.

- Specify the storage class Rook should use to consume storage via PVCs.

Examples for each of these approaches follow.

7.1.1 Host-based cluster #

To get you started, here is a simple example of a CRD to configure a Ceph cluster with all nodes and all devices. In the next example, the MONs and OSDs are backed by PVCs.

In addition to your CephCluster object, you need to create the namespace, service accounts, and RBAC rules for the namespace in which you will create the CephCluster. These resources are defined in the example common.yaml file.

apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    # see the "Cluster Settings" section below for more details on which image of Ceph to run
    image: ceph/ceph:v15.2.4
  dataDirHostPath: /var/lib/rook
  mon:
    count: 3
    allowMultiplePerNode: true
  storage:
    useAllNodes: true
    useAllDevices: true

7.1.2 PVC-based cluster #

Kubernetes version 1.13.0 or greater is required to provision OSDs on PVCs.

apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    # see the "Cluster Settings" section below for more details on which image of Ceph to run
    image: ceph/ceph:v15.2.4
  dataDirHostPath: /var/lib/rook
  mon:
    count: 3
    volumeClaimTemplate:
      spec:
        storageClassName: local-storage
        resources:
          requests:
            storage: 10Gi
  storage:
    storageClassDeviceSets:
    - name: set1
      count: 3
      portable: false
      tuneDeviceClass: false
      encrypted: false
      volumeClaimTemplates:
      - metadata:
          name: data
        spec:
          resources:
            requests:
              storage: 10Gi
          # IMPORTANT: Change the storage class depending on your environment (e.g. local-storage, gp2)
          storageClassName: local-storage
          volumeMode: Block
          accessModes:
          - ReadWriteOnce

For more advanced scenarios, such as adding a dedicated device, refer to Section 7.1.4.8, “Dedicated metadata and WAL device for OSD on PVC”.

7.1.3 Settings #

Settings can be specified at the global level to apply to the cluster as a whole, while other settings can be specified at more fine-grained levels. If any setting is unspecified, a suitable default will be used automatically.

7.1.3.1 Cluster metadata #

- name: The name that will be used internally for the Ceph cluster. Most commonly, the name is the same as the namespace since multiple clusters are not supported in the same namespace.

- namespace: The Kubernetes namespace that will be created for the Rook cluster. The services, pods, and other resources created by the operator will be added to this namespace. The common scenario is to create a single Rook cluster. If multiple clusters are created, they must not have conflicting devices or host paths.

7.1.3.2 Cluster settings #

- external:

  - enable: If true, the cluster will not be managed by Rook but via an external entity. This mode is intended to connect to an existing cluster. In this case, Rook will only consume the external cluster. If this setting is enabled, all the other options will be ignored except cephVersion.image and dataDirHostPath. If an image is provided, Rook will be able to deploy various daemons in Kubernetes, such as object gateways, MDS and NFS. If cephVersion.image is left blank, Rook will refuse the creation of extra CRs such as object, file and NFS. See Section 7.1.4.9, “External cluster”.

- cephVersion: The version information for launching the Ceph daemons.

  - image: The image used for running the Ceph daemons. For example, ceph/ceph:v16.2.7 or ceph/ceph:v15.2.4. To ensure that a consistent version of the image is running across all nodes in the cluster, we recommend using a very specific image version. Tags also exist that would give the latest version, but they are only recommended for test environments. Using the v14 or similar tag is not recommended in production because it may lead to inconsistent versions of the image running across different nodes in the cluster.

- dataDirHostPath: The path on the host where config and data should be stored for each of the services. If the directory does not exist, it will be created. Because this directory persists on the host, it will remain after pods are deleted. You must not use the following paths and any of their subpaths: /etc/ceph, /rook or /var/log/ceph.

  On Minikube environments, use /data/rook. Minikube boots into a tmpfs but it provides some directories where files can persist across reboots. Using one of these directories will ensure that Rook’s data and configuration files persist and that enough storage space is available.

  Warning: For test scenarios, if you delete a cluster and start a new cluster on the same hosts, the path used by dataDirHostPath must be deleted. Otherwise, stale keys and other configuration will remain from the previous cluster and the new MONs will fail to start. If this value is empty, each pod will get an ephemeral directory to store their config files that is tied to the lifetime of the pod running on that node.

- continueUpgradeAfterChecksEvenIfNotHealthy: If set to true, Rook will continue the OSD daemon upgrade process even if the PGs are not clean, or continue with the MDS upgrade even if the file system is not healthy.

- dashboard: Settings for the Ceph Dashboard. To view the dashboard in your browser, see Book “Administration and Operations Guide”.

  - enabled: Whether to enable the dashboard to view cluster status.

  - urlPrefix: Allows serving the dashboard under a subpath (useful when you are accessing the dashboard via a reverse proxy).

  - port: Allows changing the default port where the dashboard is served.

  - ssl: Whether to serve the dashboard via SSL; ignored on Ceph versions older than 13.2.2.

- monitoring: Settings for monitoring Ceph using Prometheus. To enable monitoring on your cluster, see the Book “Administration and Operations Guide”, Chapter 16 “Monitoring and alerting”.

  - enabled: Whether to enable Prometheus-based monitoring for this cluster.

  - rulesNamespace: Namespace to deploy prometheusRule. If empty, the namespace of the cluster will be used. We recommend:

    - If you have a single Rook Ceph cluster, set the rulesNamespace to the same namespace as the cluster, or leave it empty.

    - If you have multiple Rook Ceph clusters in the same Kubernetes cluster, choose the same namespace to set rulesNamespace for all the clusters (ideally, the namespace with Prometheus deployed). Otherwise, you will get duplicate alerts with duplicate alert definitions.

- network: For the network settings for the cluster, refer to Section 7.1.3.5, “Network configuration settings”.

- mon: Contains MON-related options. See Section 7.1.3.3, “MON settings”.

- mgr: Manager top-level section.

  - modules: The list of Ceph Manager modules to enable.

- crashCollector: The settings for crash collector daemon(s).

  - disable: If set to true, the crash collector will not run on any node where a Ceph daemon runs.

- annotations: Section 7.1.3.10, “Annotations and labels”

- placement: Section 7.1.3.11, “Placement configuration settings”

- resources: Section 7.1.3.12, “Cluster-wide resources configuration settings”

- priorityClassNames: Section 7.1.3.14, “Priority class names configuration settings”

- storage: Storage selection and configuration that will be used across the cluster. Note that these settings can be overridden for specific nodes.

  - useAllNodes: true or false, indicating if all nodes in the cluster should be used for storage according to the cluster level storage selection and configuration values. If individual nodes are specified under the nodes field, then useAllNodes must be set to false.

  - nodes: Names of individual nodes in the cluster that should have their storage included in accordance with either the cluster level configuration specified above or any node-specific overrides described in the next section below. useAllNodes must be set to false to use specific nodes and their configuration. See Section 7.1.3.6, “Node settings” below.

  - config: Config settings applied to all OSDs on the node unless overridden by devices.

- disruptionManagement: The section for configuring management of daemon disruptions.

  - managePodBudgets: If true, the operator will create and manage PodDisruptionBudgets for OSD, MON, RGW, and MDS daemons. The operator will block eviction of OSDs by default and unblock them safely when drains are detected.

  - osdMaintenanceTimeout: A duration in minutes that determines how long an entire failure domain like region/zone/host will be held in noout (in addition to the default DOWN/OUT interval) when it is draining. This is only relevant when managePodBudgets is true. The default value is 30 minutes.

  - manageMachineDisruptionBudgets: If true, the operator will create and manage MachineDisruptionBudgets to ensure OSDs are only fenced when the cluster is healthy. Only available on OpenShift.

  - machineDisruptionBudgetNamespace: The namespace in which to watch the MachineDisruptionBudgets.

- removeOSDsIfOutAndSafeToRemove: If true, the operator will remove the OSDs that are down and whose data has been restored to other OSDs.

- cleanupPolicy: Section 7.1.4.10, “Cleanup policy”
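To show how several of these settings fit together, the following fragment is a minimal sketch of a CephCluster spec. The values are illustrative assumptions rather than recommendations; in particular, the urlPrefix path is a hypothetical placeholder, and monitoring should only be enabled after following the monitoring chapter referenced above.

```yaml
# Fragment of a CephCluster spec (sketch; values are assumptions).
spec:
  cephVersion:
    image: ceph/ceph:v15.2.4        # pin a specific image version
  dataDirHostPath: /var/lib/rook
  continueUpgradeAfterChecksEvenIfNotHealthy: false
  dashboard:
    enabled: true
    urlPrefix: /ceph-dashboard      # hypothetical subpath behind a reverse proxy
    ssl: true
  monitoring:
    enabled: true
    rulesNamespace: rook-ceph       # same namespace as the single cluster
  crashCollector:
    disable: false
  disruptionManagement:
    managePodBudgets: true
    osdMaintenanceTimeout: 30       # minutes
  removeOSDsIfOutAndSafeToRemove: false
```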

7.1.3.3 MON settings #

- count: Set the number of MONs to be started. This should be an odd number between one and nine. If not specified, the default is set to three, and allowMultiplePerNode is also set to true.

- allowMultiplePerNode: Enable (true) or disable (false) the placement of multiple MONs on one node. Default is false.

- volumeClaimTemplate: A PersistentVolumeSpec used by Rook to create PVCs for monitor storage. This field is optional, and when not provided, HostPath volume mounts are used. The fields of the template that are currently used are storageClassName and the storage resource request and limit. The default storage size request for new PVCs is 10Gi. Ensure that the associated storage class is configured to use volumeBindingMode: WaitForFirstConsumer. This setting only applies to new monitors that are created when the requested number of monitors increases, or when a monitor fails and is recreated.

If these settings are changed in the CRD, the operator will update the number of MONs during a periodic check of the MON health, which by default is every 45 seconds.

To change the defaults that the operator uses to determine the MON health and whether to failover a MON, refer to the Section 7.1.3.15, “Health settings”. The intervals should be small enough that you have confidence the MONs will maintain quorum, while also being long enough to ignore network blips where MONs are failed over too often.

7.1.3.4 Ceph Manager settings #

You can use the cluster CR to enable or disable any manager module. For example, this can be configured:

mgr:
  modules:
  - name: <name of the module>
    enabled: true

Some modules will have special configuration to ensure the module is fully functional after being enabled. Specifically, for the pg_autoscaler module, Rook will configure all new pools with PG autoscaling by setting:

osd_pool_default_pg_autoscale_mode = on

7.1.3.5 Network configuration settings #

If not specified, the default SDN will be used. Configure the network that will be enabled for the cluster and services.

- provider: Specifies the network provider that will be used to connect the network interface.

- selectors: Lists the network selectors that will be used, each associated with a key.

Changing networking configuration after a Ceph cluster has been deployed is not supported and will result in a non-functioning cluster.

To use host networking, set provider: host.
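For example, a cluster that uses host networking would carry a fragment like the one below in its CephCluster spec. This is only a sketch of the single setting named above; because the network configuration cannot be changed after deployment, it needs to be part of the spec when the cluster is first created.

```yaml
# Fragment of a CephCluster spec (sketch).
spec:
  network:
    provider: host
```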

7.1.3.6 Node settings #

In addition to the cluster level settings specified above, each individual node can also specify configuration to override the cluster level settings and defaults. If a node does not specify any configuration, then it will inherit the cluster level settings.

- name: The name of the node, which should match its kubernetes.io/hostname label.

- config: Configuration settings applied to all OSDs on the node unless overridden by devices.

When useAllNodes is set to true, Rook attempts to make Ceph cluster management as hands-off as possible while still maintaining reasonable data safety. If a usable node comes online, Rook will begin to use it automatically. To maintain a balance between hands-off usability and data safety, nodes are removed from Ceph as OSD hosts only (1) if the node is deleted from Kubernetes itself or (2) if the node has its taints or affinities modified in such a way that the node is no longer usable by Rook. Any changes to taints or affinities, intentional or unintentional, may affect the data reliability of the Ceph cluster. To help protect against this, removal of nodes caused by taint or affinity modifications must be confirmed by deleting the Rook-Ceph operator pod and allowing the operator deployment to restart the pod.

For production clusters, we recommend that useAllNodes is set to false to prevent the Ceph cluster from suffering reduced data reliability unintentionally due to a user mistake.

When useAllNodes is set to false, Rook relies on the user to be explicit about when nodes are added to or removed from the Ceph cluster. Nodes are only added to the Ceph cluster if the node is added to the Ceph cluster resource. Similarly, nodes are only removed if the node is removed from the Ceph cluster resource.

7.1.3.6.1 Node updates #

Nodes can be added and removed over time by updating the cluster CRD, for example, with the following command:

kubectl -n rook-ceph edit cephcluster rook-ceph

This will bring up your default text editor and allow you to add and remove storage nodes from the cluster. This feature is only available when useAllNodes has been set to false.
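In the editor, adding a storage node amounts to appending an entry to the nodes list. The fragment below is a sketch with hypothetical node names (node-a, node-b, node-c); each name must match the kubernetes.io/hostname label of the corresponding node.

```yaml
# Fragment of a CephCluster spec (sketch; node names are hypothetical).
spec:
  storage:
    useAllNodes: false
    nodes:
    - name: "node-a"
    - name: "node-b"
    - name: "node-c"    # newly added storage node
```

Removing an entry from the list has the opposite effect: the node is removed from the Ceph cluster.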

7.1.3.7 Storage selection settings #

Below are the settings available, both at the cluster and individual node level, for selecting which storage resources will be included in the cluster.

- useAllDevices: true or false, indicating whether all devices found on nodes in the cluster should be automatically consumed by OSDs. This is not recommended unless you have a very controlled environment where you will not risk formatting of devices with existing data. When true, all devices/partitions will be used. Is overridden by deviceFilter if specified.

- deviceFilter: A regular expression for short kernel names of devices (for example, sda) that allows selection of devices to be consumed by OSDs. If individual devices have been specified for a node then this filter will be ignored. For example:

  - sdb: Selects only the sdb device (if found).

  - ^sd: Selects all devices starting with sd.

  - ^sd[a-d]: Selects devices starting with sda, sdb, sdc, and sdd (if found).

  - ^s: Selects all devices that start with s.

  - ^[^r]: Selects all devices that do not start with r.

- devicePathFilter: A regular expression for device paths (for example, /dev/disk/by-path/pci-0:1:2:3-scsi-1) that allows selection of devices to be consumed by OSDs. If individual devices or deviceFilter have been specified for a node then this filter will be ignored. For example:

  - ^/dev/sd.: Selects all devices starting with sd.

  - ^/dev/disk/by-path/pci-.*: Selects all devices which are connected to PCI bus.

- devices: A list of individual device names belonging to this node to include in the storage cluster.

  - name: The name of the device (for example, sda), or full udev path (such as /dev/disk/by-id/ata-ST4000DM004-XXXX; this path will not change after reboots).

  - config: Device-specific configuration settings.

storageClassDeviceSets: Explained in Section 7.1.3.8, “Storage class device sets”.
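As a sketch of how these selection settings combine, the fragment below applies a cluster-level deviceFilter and overrides it on one node. The node names are hypothetical, and the filter patterns are taken from the examples above purely for illustration.

```yaml
# Fragment of a CephCluster spec (sketch; node names are hypothetical).
spec:
  storage:
    useAllNodes: false
    useAllDevices: false
    # Cluster level: consume sda through sdd where present.
    deviceFilter: "^sd[a-d]"
    nodes:
    - name: "node-a"            # inherits the cluster-level deviceFilter
    - name: "node-b"
      deviceFilter: "^sdb"      # node-level override: only devices starting with sdb
    - name: "node-c"
      devices:                  # explicit devices; deviceFilter is ignored here
      - name: "sdc"
```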

7.1.3.8 Storage class device sets #

The following are the settings for Storage Class Device Sets which can be configured to create OSDs that are backed by block mode PVs.

- name: A name for the set.

- count: The number of devices in the set.

- resources: The CPU and RAM requests or limits for the devices (optional).

- placement: The placement criteria for the devices (optional; default is no placement criteria).

  The syntax is the same as for Section 7.1.3.11, “Placement configuration settings”. It supports nodeAffinity, podAffinity, podAntiAffinity and tolerations keys.

  We recommend configuring the placement such that the OSDs will be as evenly spread across nodes as possible. At a minimum, anti-affinity should be added, so at least one OSD will be placed on each available node.

  However, if there are more OSDs than nodes, this anti-affinity will not be effective. Another placement scheme to consider is adding labels to the nodes in such a way that the OSDs can be grouped on those nodes, creating multiple storageClassDeviceSets, and adding node affinity to each of the device sets that will place the OSDs in those sets of nodes.

- preparePlacement: The placement criteria for the preparation of the OSD devices. Creating OSDs is a two-step process and the prepare job may require different placement than the OSD daemons. If the preparePlacement is not specified, the placement will instead be applied for consistent placement for the OSD prepare jobs and OSD deployments. The preparePlacement is only useful for portable OSDs in the device sets. OSDs that are not portable will be tied to the host where the OSD prepare job initially runs.

  For example, provisioning may require topology spread constraints across zones, but the OSD daemons may require constraints across hosts within the zones.

- portable: If true, the OSDs will be allowed to move between nodes during failover. This requires a storage class that supports portability (for example, aws-ebs, but not the local storage provisioner). If false, the OSDs will be assigned to a node permanently. Rook will configure Ceph’s CRUSH map to support the portability.

- tuneDeviceClass: If true, because the OSD can be on a slow device class, Rook will adapt to that by tuning the OSD process. This will make Ceph perform better under that slow device.

- volumeClaimTemplates: A list of PVC templates to use for provisioning the underlying storage devices.

  - resources.requests.storage: The desired capacity for the underlying storage devices.

  - storageClassName: The StorageClass to provision PVCs from. The default is to use the cluster-default StorageClass. This StorageClass should provide a raw block device, multipath device, or logical volume. Other types are not supported. If you want to use logical volumes, see the known issue of OSD on LV-backed PVC: https://github.com/rook/rook/blob/master/Documentation/ceph-common-issues.md#lvm-metadata-can-be-corrupted-with-osd-on-lv-backed-pvc

  - volumeMode: The volume mode to be set for the PVC.

  - accessModes: The access mode for the PVC to be bound by OSD.

- schedulerName: Scheduler name for OSD pod placement (optional).

- encrypted: Whether to encrypt all the OSDs in a given storageClassDeviceSet.
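The anti-affinity recommendation above can be sketched as follows. This fragment assumes that OSD and OSD prepare pods carry the label app with the values rook-ceph-osd and rook-ceph-osd-prepare, and that a storage class named local-storage exists; adapt both to your environment. The preferred (soft) form of anti-affinity is used so that scheduling still succeeds when there are more OSDs than nodes.

```yaml
# Fragment of a CephCluster spec (sketch; labels and storage class are assumptions).
spec:
  storage:
    storageClassDeviceSets:
    - name: set1
      count: 3
      portable: false
      placement:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app
                  operator: In
                  values:
                  - rook-ceph-osd
                  - rook-ceph-osd-prepare
              # Spread OSD pods across distinct hosts.
              topologyKey: kubernetes.io/hostname
      volumeClaimTemplates:
      - metadata:
          name: data
        spec:
          resources:
            requests:
              storage: 10Gi
          storageClassName: local-storage
          volumeMode: Block
          accessModes:
          - ReadWriteOnce
```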

7.1.3.9 Storage selection via Ceph DriveGroups #

Ceph DriveGroups allow for specifying highly advanced OSD layouts. Refer to Book “Administration and Operations Guide”, Chapter 13 “Operational tasks”, Section 13.4.3 “Adding OSDs using DriveGroups specification” for both general information and detailed specification of DriveGroups with useful examples.

When managing a Rook/Ceph cluster’s OSD layouts with DriveGroups, the storage configuration is mostly ignored. storageClassDeviceSets can still be used to create OSDs on PVC, but Rook will no longer use storage configurations for creating OSDs on a node's devices. To avoid confusion, we recommend using the storage configuration or DriveGroups, but never both. Because storage and DriveGroups should not be used simultaneously, Rook only supports provisioning OSDs with DriveGroups on new Rook-Ceph clusters.

DriveGroups are defined by a name, a Ceph DriveGroups spec, and a Rook placement.

- name: A name for the DriveGroups.

- spec: The Ceph DriveGroups spec. Some components of the spec are treated differently in the context of Rook as noted below.

  - Rook overrides Ceph’s definition of placement in order to use Rook’s placement below.

  - Rook overrides Ceph’s service_id field to be the same as the DriveGroups name above.

- placement: The placement criteria for nodes to provision with the DriveGroups (optional; default is no placement criteria, which matches all untainted nodes). The syntax is the same as for Section 7.1.3.11, “Placement configuration settings”.

7.1.3.10 Annotations and labels #

Annotations and Labels can be specified so that the Rook components will have those annotations or labels added to them.

You can set annotations and labels for Rook components for the list of key value pairs:

- all: Set annotations / labels for all components

- mgr: Set annotations / labels for MGRs

- mon: Set annotations / labels for MONs

- osd: Set annotations / labels for OSDs

- prepareosd: Set annotations / labels for OSD Prepare Jobs

When other keys are set, all will be merged together with the specific component.
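A sketch of such key-value pairs is shown below. The annotation and label keys and values (example.com/owner, environment, and so on) are hypothetical and only illustrate the structure: entries under all apply to every component and are merged with the entries of the specific component.

```yaml
# Fragment of a CephCluster spec (sketch; keys and values are hypothetical).
spec:
  annotations:
    all:
      example.com/owner: storage-team
    osd:
      example.com/tier: data     # OSD pods get both annotations
  labels:
    all:
      environment: test
    mon:
      role: quorum               # MON pods get both labels
```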

7.1.3.11 Placement configuration settings #

Placement configuration for the cluster services. It includes the following keys: mgr, mon, osd, cleanup, and all. Each service will have its placement configuration generated by merging the generic configuration under all with the most specific one (which will override any attributes).

Placement of OSD pods is controlled using the Section 7.1.3.8, “Storage class device sets”, not the general placement configuration.

A placement configuration is specified (according to the Kubernetes PodSpec) as:

- nodeAffinity

- podAffinity

- podAntiAffinity

- tolerations

- topologySpreadConstraints

If you use labelSelector for OSD pods, you must write two rules, one for rook-ceph-osd and one for rook-ceph-osd-prepare.

The Rook Ceph operator creates a job called rook-ceph-detect-version to detect the full Ceph version used by the given cephVersion.image. The placement from the MON section is used for the job except for the PodAntiAffinity field.

7.1.3.12 Cluster-wide resources configuration settings #

Resources should be specified so that the Rook components are handled according to the Kubernetes Pod Quality of Service classes. This helps to keep Rook components running when, for example, a node runs out of memory, because whether a Rook component is killed depends on its Quality of Service class.

You can set resource requests/limits for Rook components through the Section 7.1.3.13, “Resource requirements and limits” structure in the following keys:

- mgr: Set resource requests/limits for MGRs.

- mon: Set resource requests/limits for MONs.

- osd: Set resource requests/limits for OSDs.

- prepareosd: Set resource requests/limits for the OSD prepare job.

- crashcollector: Set resource requests/limits for the crash collector pod. This pod runs wherever there is a Ceph pod running. It scrapes for Ceph daemon core dumps and sends them to the Ceph manager crash module so that core dumps are centralized and can be easily listed/accessed.

- cleanup: Set resource requests/limits for the cleanup job, which is responsible for wiping the cluster’s data after uninstall.

In order to provide the best possible experience running Ceph in containers, Rook internally recommends minimum memory limits if resource limits are passed. If a user configures a limit or request value that is too low, Rook will still run the pod(s) and print a warning to the operator log.

- mon: 1024 MB

- mgr: 512 MB

- osd: 2048 MB

- mds: 4096 MB

- prepareosd: 50 MB

- crashcollector: 60 MB

7.1.3.13 Resource requirements and limits #

- requests: Requests for CPU or memory.

  - cpu: Request for CPU (example: one CPU core 1, 50% of one CPU core 500m).

  - memory: Request for memory (example: one gigabyte of memory 1Gi, half a gigabyte of memory 512Mi).

- limits: Limits for CPU or memory.

  - cpu: Limit for CPU (example: one CPU core 1, 50% of one CPU core 500m).

  - memory: Limit for memory (example: one gigabyte of memory 1Gi, half a gigabyte of memory 512Mi).
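A sketch of cluster-wide resource settings for two of the component keys follows. The CPU and memory figures are assumptions for illustration only; they are chosen to stay at or above the minimum memory values listed in the cluster-wide resources section and are not sizing recommendations.

```yaml
# Fragment of a CephCluster spec (sketch; figures are assumptions).
spec:
  resources:
    mgr:
      requests:
        cpu: "500m"       # 50% of one CPU core
        memory: "512Mi"
      limits:
        cpu: "1"          # one CPU core
        memory: "1Gi"
    osd:
      requests:
        cpu: "1"
        memory: "2Gi"
      limits:
        cpu: "2"
        memory: "4Gi"
```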

7.1.3.14 Priority class names configuration settings #

Priority class names can be specified so that the Rook components will have those priority class names added to them.

You can set priority class names for Rook components for the list of key value pairs:

- all: Set priority class names for MGRs, MONs, OSDs.

- mgr: Set priority class names for MGRs.

- mon: Set priority class names for MONs.

- osd: Set priority class names for OSDs.

The specific component keys will act as overrides to all.
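The following fragment sketches these key-value pairs. The priority class names are hypothetical; this sketch assumes that matching PriorityClass objects have already been created in the Kubernetes cluster.

```yaml
# Fragment of a CephCluster spec (sketch; class names are hypothetical).
spec:
  priorityClassNames:
    all: rook-ceph-default-priority-class
    mon: rook-ceph-mon-priority-class    # overrides "all" for MONs
    osd: rook-ceph-osd-priority-class    # overrides "all" for OSDs
```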

7.1.3.15 Health settings #

Rook-Ceph will monitor the state of the CephCluster on various components by default. The following CRD settings are available:

healthCheck: main Ceph cluster health monitoring section

Currently three health checks are implemented:

- mon: Health check on the Ceph monitors. Basic check as to whether monitors are members of the quorum. If after a certain timeout a given monitor has not rejoined the quorum, it will be failed over and replaced by a new monitor.

- osd: Health check on the Ceph OSDs.

- status: Ceph health status check; periodically checks the Ceph health state, and reflects it in the CephCluster CR status field.

The liveness probe of each daemon can also be controlled via livenessProbe. The setting is valid for mon, mgr and osd.

Here is a complete example for both daemonHealth and livenessProbe:

healthCheck:
  daemonHealth:
    mon:
      disabled: false
      interval: 45s
      timeout: 600s
    osd:
      disabled: false
      interval: 60s
    status:
      disabled: false
  livenessProbe:
    mon:
      disabled: false
    mgr:
      disabled: false
    osd:
      disabled: false

You can change the mgr probe by applying the following:

healthCheck:
  livenessProbe:
    mgr:
      disabled: false
      probe:
        httpGet:
          path: /
          port: 9283
        initialDelaySeconds: 3
        periodSeconds: 3

Changing the liveness probe is an advanced operation and should rarely be necessary. If you want to change these settings, start with the probe specification that Rook generates by default and then modify the desired settings.

7.1.4 Samples #

Here are several samples for configuring Ceph clusters. Each of the samples must also include the namespace and corresponding access granted for management by the Ceph operator. See the common cluster resources below.

7.1.4.1 Storage configuration: All devices #

apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    image: ceph/ceph:v15.2.4
  dataDirHostPath: /var/lib/rook
  mon:
    count: 3
    allowMultiplePerNode: true
  dashboard:
    enabled: true
  # cluster level storage configuration and selection
  storage:
    useAllNodes: true
    useAllDevices: true
    deviceFilter:
    config:
      metadataDevice:
      databaseSizeMB: "1024" # this value can be removed for environments with normal sized disks (100 GB or larger)
      journalSizeMB: "1024" # this value can be removed for environments with normal sized disks (20 GB or larger)
      osdsPerDevice: "1"

7.1.4.2 Storage configuration: Specific devices #

Individual nodes and their configurations can be specified so that only the named nodes below will be used as storage resources. Each node’s “name” field should match their “kubernetes.io/hostname” label.

apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    image: ceph/ceph:v15.2.4
  dataDirHostPath: /var/lib/rook
  mon:
    count: 3
    allowMultiplePerNode: true
  dashboard:
    enabled: true
  # cluster level storage configuration and selection
  storage:
    useAllNodes: false
    useAllDevices: false
    deviceFilter:
    config:
      metadataDevice:
      databaseSizeMB: "1024" # this value can be removed for environments with normal sized disks (100 GB or larger)
    nodes:
    - name: "172.17.4.201"
      devices: # specific devices to use for storage can be specified for each node
      - name: "sdb" # Whole storage device
      - name: "sdc1" # One specific partition. Should not have a file system on it.
      - name: "/dev/disk/by-id/ata-ST4000DM004-XXXX" # both device name and explicit udev links are supported
      config: # configuration can be specified at the node level which overrides the cluster level config
        storeType: bluestore
    - name: "172.17.4.301"
      deviceFilter: "^sd."

7.1.4.3 Node affinity #

To control where various services will be scheduled by Kubernetes, use the placement configuration sections below. The example under “all” would have all services scheduled on Kubernetes nodes labeled with “role=storage-node” and tolerate taints with a key of “storage-node”.

apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    image: ceph/ceph:v15.2.4
  dataDirHostPath: /var/lib/rook
  mon:
    count: 3
    allowMultiplePerNode: true
  # enable the Ceph dashboard for viewing cluster status
  dashboard:
    enabled: true
  placement:
    all:
      nodeAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          nodeSelectorTerms:
          - matchExpressions:
            - key: role
              operator: In
              values:
              - storage-node
      tolerations:
      - key: storage-node
        operator: Exists
    mgr:
      nodeAffinity:
      tolerations:
    mon:
      nodeAffinity:
      tolerations:
    osd:
      nodeAffinity:
      tolerations:

7.1.4.4 Resource requests and limits #

To control how many resources the Rook components can request/use, you can set requests and limits in Kubernetes for them. You can override these requests and limits for OSDs per node when using useAllNodes: false in the node item in the nodes list.

Before setting resource requests/limits, review the Ceph documentation for hardware recommendations for each component.

apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    image: ceph/ceph:v15.2.4
  dataDirHostPath: /var/lib/rook
  mon:
    count: 3
    allowMultiplePerNode: true
  # enable the Ceph dashboard for viewing cluster status
  dashboard:
    enabled: true
  # cluster level resource requests/limits configuration
  resources:
  storage:
    useAllNodes: false
    nodes:
    - name: "172.17.4.201"
      resources:
        limits:
          cpu: "2"
          memory: "4096Mi"
        requests:
          cpu: "2"
          memory: "4096Mi"

7.1.4.5 OSD topology #

The topology of the cluster is important in production environments where you want your data spread across failure domains. The topology can be controlled by adding labels to the nodes. When the labels are found on a node at first OSD deployment, Rook will add them to the desired level in the CRUSH map.

The complete list of labels in hierarchy order from highest to lowest is:

topology.kubernetes.io/region
topology.kubernetes.io/zone
topology.rook.io/datacenter
topology.rook.io/room
topology.rook.io/pod
topology.rook.io/pdu
topology.rook.io/row
topology.rook.io/rack
topology.rook.io/chassis

For example, if the following labels were added to a node:

kubectl label node mynode topology.kubernetes.io/zone=zone1
kubectl label node mynode topology.rook.io/rack=rack1

For Kubernetes versions earlier than 1.17, use the topology keys failure-domain.beta.kubernetes.io/zone or failure-domain.beta.kubernetes.io/region instead.

These labels would result in the following hierarchy for OSDs on that node (this command can be run in the Rook toolbox):

[root@mynode /]# ceph osd tree
ID  CLASS  WEIGHT   TYPE NAME                 STATUS  REWEIGHT  PRI-AFF
-1         0.01358  root default
-5         0.01358      zone zone1
-4         0.01358          rack rack1
-3         0.01358              host mynode
 0    hdd  0.00679                  osd.0         up   1.00000  1.00000
 1    hdd  0.00679                  osd.1         up   1.00000  1.00000

Ceph requires unique names at every level in the hierarchy (CRUSH map). For example, you cannot have two racks with the same name that are in different zones. Racks in different zones must be named uniquely.

Note that the host is added automatically to the hierarchy by Rook. The host cannot be specified with a topology label.

All topology labels are optional.

When you set the node labels prior to CephCluster creation, these settings take immediate effect. However, applying them to an already deployed CephCluster requires removing each node from the cluster first and then re-adding it with the new configuration. Do this node by node to keep your data safe! Check the result with ceph osd tree from the toolbox (see Chapter 9, Toolboxes). The OSD tree should display the hierarchy for the nodes that have already been re-added.

To utilize the failureDomain based on the node labels, specify the corresponding option in the CephBlockPool.

apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicapool
  namespace: rook-ceph
spec:
  failureDomain: rack # this matches the topology labels on nodes
  replicated:
    size: 3

This configuration will split the replication of volumes across unique racks in the data center setup.
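The same pattern applies to any other labeled level of the hierarchy. As a sketch, assuming that the nodes carry topology.kubernetes.io/zone labels and that at least three distinct zones hold OSDs, a pool that spreads replicas across zones rather than racks could look as follows. The pool name zonepool is hypothetical.

```yaml
# Sketch only: replicate across zones instead of racks.
apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: zonepool            # hypothetical pool name
  namespace: rook-ceph
spec:
  failureDomain: zone       # assumes nodes are labeled with topology.kubernetes.io/zone
  replicated:
    size: 3                 # assumes at least three distinct zones contain OSDs
```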

7.1.4.6 Using PVC storage for monitors #

In the CRD specification below, three monitors are created, each using a 10Gi PVC created by Rook using the local-storage storage class.
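The specification assumes that a storage class named local-storage already exists in the cluster. How that class is defined depends on the environment. One possible sketch, assuming statically provisioned local volumes (that is, the matching PersistentVolumes are created by an administrator or a separate provisioner), is:

```yaml
# Sketch only: a storage class for statically provisioned local volumes.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner   # assumption: no dynamic provisioning
volumeBindingMode: WaitForFirstConsumer     # delay binding until a pod using the claim is scheduled
```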

apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    image: ceph/ceph:v15.2.4
  dataDirHostPath: /var/lib/rook
  mon:
    count: 3
    allowMultiplePerNode: false
    volumeClaimTemplate:
      spec:
        storageClassName: local-storage
        resources:
          requests:
            storage: 10Gi
  dashboard:
    enabled: true
  storage:
    useAllNodes: true
    useAllDevices: true
    deviceFilter:
    config:
      metadataDevice:
      databaseSizeMB: "1024" # this value can be removed for environments with normal sized disks (100 GB or larger)
      journalSizeMB: "1024" # this value can be removed for environments with normal sized disks (20 GB or larger)
      osdsPerDevice: "1"

7.1.4.7 Using StorageClassDeviceSets #

In the CRD specification below, Rook creates three OSDs (with specific placement and resource values) and three MONs, each using a 10Gi PVC from the local-storage storage class.
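Because the OSD claims in this specification request volumeMode: Block, the local-storage class has to offer raw block volumes. As a sketch, assuming statically provisioned local volumes, one such PersistentVolume could be defined as shown below. The volume name, device path and host name are hypothetical and need to be adapted to the environment.

```yaml
# Sketch only: one statically provisioned raw block volume for an OSD claim.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-osd-pv-1              # hypothetical volume name
spec:
  capacity:
    storage: 10Gi
  volumeMode: Block                 # raw block device, as requested by the OSD claim
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /dev/sdb                  # hypothetical device path on the node
  nodeAffinity:                     # local volumes must be pinned to the node that holds the device
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - mynode                  # hypothetical host name
```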

apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  dataDirHostPath: /var/lib/rook
  mon:
    count: 3
    allowMultiplePerNode: false
    volumeClaimTemplate:
      spec:
        storageClassName: local-storage
        resources:
          requests:
            storage: 10Gi
  cephVersion:
    image: ceph/ceph:v15.2.4
    allowUnsupported: false
  dashboard:
    enabled: true
  network:
    hostNetwork: false
  storage:
    storageClassDeviceSets:
    - name: set1
      count: 3
      portable: false
      tuneDeviceClass: false
      resources:
        limits:
          cpu: "500m"
          memory: "4Gi"
        requests:
          cpu: "500m"
          memory: "4Gi"
      placement:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: "rook.io/cluster"
                  operator: In
                  values:
                  - cluster1
              topologyKey: "topology.kubernetes.io/zone"
      volumeClaimTemplates:
      - metadata:
          name: data
        spec:
          resources:
            requests:
              storage: 10Gi
          storageClassName: local-storage
          volumeMode: Block
          accessModes:
          - ReadWriteOnce

7.1.4.8 Dedicated metadata and WAL device for OSD on PVC #

In the simplest case, Ceph OSD BlueStore consumes a single (primary) storage device. BlueStore is the engine used by the OSD to store data.

The storage device is normally used as a whole, occupying the full device that is managed directly by BlueStore. It is also possible to deploy BlueStore across additional devices such as a DB device. This device can be used for storing BlueStore’s internal metadata. BlueStore (or rather, the embedded RocksDB) will put as much metadata as it can on the DB device to improve performance. If the DB device fills up, metadata will spill back onto the primary device (where it would have been otherwise). Again, it is only helpful to provision a DB device if it is faster than the primary device.

You can have multiple volumeClaimTemplates, where each one represents either a data device or a metadata device. Taking only the storage section, this gives something like:

storage:
  storageClassDeviceSets:
  - name: set1
    count: 3
    portable: false
    tuneDeviceClass: false
    volumeClaimTemplates:
    - metadata:
        name: data
      spec:
        resources:
          requests:
            storage: 10Gi
        # IMPORTANT: Change the storage class depending on your environment (e.g. local-storage, gp2)
        storageClassName: gp2
        volumeMode: Block
        accessModes:
        - ReadWriteOnce
    - metadata:
        name: metadata
      spec:
        resources:
          requests:
            # Find the right size https://docs.ceph.com/docs/master/rados/configuration/bluestore-config-ref/#sizing
            storage: 5Gi
        # IMPORTANT: Change the storage class depending on your environment (e.g. local-storage, io1)
        storageClassName: io1
        volumeMode: Block
        accessModes:
        - ReadWriteOnce

Rook only supports three naming conventions for a given template:

data: represents the main OSD block device, where your data is being stored.

metadata: represents the metadata (including block.db and block.wal) device used to store the Ceph BlueStore database for an OSD.

wal: represents the block.wal device used to store the Ceph BlueStore write-ahead log for an OSD. If this device is set, the metadata device refers specifically to the block.db device.

It is recommended to use a faster storage class for the metadata or wal device, with a slower device for the data. Otherwise, having a separate metadata device will not improve the performance.

The ceph-volume utility supports the following reference combinations of BlueStore devices:

A single “data” device.

storage:
  storageClassDeviceSets:
  - name: set1
    count: 3
    portable: false
    tuneDeviceClass: false
    volumeClaimTemplates:
    - metadata:
        name: data
      spec:
        resources:
          requests:
            storage: 10Gi
        # IMPORTANT: Change the storage class depending on your environment (e.g. local-storage, gp2)
        storageClassName: gp2
        volumeMode: Block
        accessModes:
        - ReadWriteOnce

A data device and a metadata device.

storage:
  storageClassDeviceSets:
  - name: set1
    count: 3
    portable: false
    tuneDeviceClass: false
    volumeClaimTemplates:
    - metadata:
        name: data
      spec:
        resources:
          requests:
            storage: 10Gi
        # IMPORTANT: Change the storage class depending on your environment (e.g. local-storage, gp2)
        storageClassName: gp2
        volumeMode: Block
        accessModes:
        - ReadWriteOnce
    - metadata:
        name: metadata
      spec:
        resources:
          requests:
            # Find the right size https://docs.ceph.com/docs/master/rados/configuration/bluestore-config-ref/#sizing
            storage: 5Gi
        # IMPORTANT: Change the storage class depending on your environment (e.g. local-storage, io1)
        storageClassName: io1
        volumeMode: Block
        accessModes:
        - ReadWriteOnce

A data device and a WAL device. A WAL device can be used for BlueStore’s internal journal or write-ahead log (block.wal). It is only useful to use a WAL device if the device is faster than the primary device (the data device). There is no separate metadata device in this case; the data of the main OSD block and block.db are located on the data device.

storage:
  storageClassDeviceSets:
  - name: set1
    count: 3
    portable: false
    tuneDeviceClass: false
    volumeClaimTemplates:
    - metadata:
        name: data
      spec:
        resources:
          requests:
            storage: 10Gi
        # IMPORTANT: Change the storage class depending on your environment (e.g. local-storage, gp2)
        storageClassName: gp2
        volumeMode: Block
        accessModes:
        - ReadWriteOnce
    - metadata:
        name: wal
      spec:
        resources:
          requests:
            # Find the right size https://docs.ceph.com/docs/master/rados/configuration/bluestore-config-ref/#sizing
            storage: 5Gi
        # IMPORTANT: Change the storage class depending on your environment (e.g. local-storage, io1)
        storageClassName: io1
        volumeMode: Block
        accessModes:
        - ReadWriteOnce

A data device, a metadata device and a wal device.

storage:
  storageClassDeviceSets:
  - name: set1
    count: 3
    portable: false
    tuneDeviceClass: false
    volumeClaimTemplates:
    - metadata:
        name: data
      spec:
        resources:
          requests:
            storage: 10Gi
        # IMPORTANT: Change the storage class depending on your environment (e.g. local-storage, gp2)
        storageClassName: gp2
        volumeMode: Block
        accessModes:
        - ReadWriteOnce
    - metadata:
        name: metadata
      spec:
        resources:
          requests:
            # Find the right size https://docs.ceph.com/docs/master/rados/configuration/bluestore-config-ref/#sizing
            storage: 5Gi
        # IMPORTANT: Change the storage class depending on your environment (e.g. local-storage, io1)
        storageClassName: io1
        volumeMode: Block
        accessModes:
        - ReadWriteOnce
    - metadata:
        name: wal
      spec:
        resources:
          requests:
            # Find the right size https://docs.ceph.com/docs/master/rados/configuration/bluestore-config-ref/#sizing
            storage: 5Gi
        # IMPORTANT: Change the storage class depending on your environment (e.g. local-storage, io1)
        storageClassName: io1
        volumeMode: Block
        accessModes:
        - ReadWriteOnce

With the present configuration, each OSD will have its main block allocated a 10 GB device as well as a 5 GB device to act as a BlueStore database.

7.1.4.9 External cluster #

The minimum supported Ceph version for the External Cluster is Luminous 12.2.x.

The features available from the external cluster will vary depending on the version of Ceph. The following table shows the minimum version of Ceph for some of the features: