Getting started with Trento Premium #

SUSE® Linux Enterprise Server for SAP Applications

Trento Premium is an open cloud native Web console that aims to help SAP Basis consultants and administrators to check the configuration, monitor and manage the entire OS stack of their SAP environments, including HA features.

Copyright © 20XX–2024 SUSE LLC and contributors. All rights reserved.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”.

For SUSE trademarks, see http://www.suse.com/company/legal/. All third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks.

All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof.

The product continues to be under active development and is available at no extra cost for any SUSE customer with a registered SUSE Linux Enterprise Server for SAP Applications 15 (SP1 or higher). Contact https://www.suse.com/support for further assistance.

1 What is Trento Premium? #

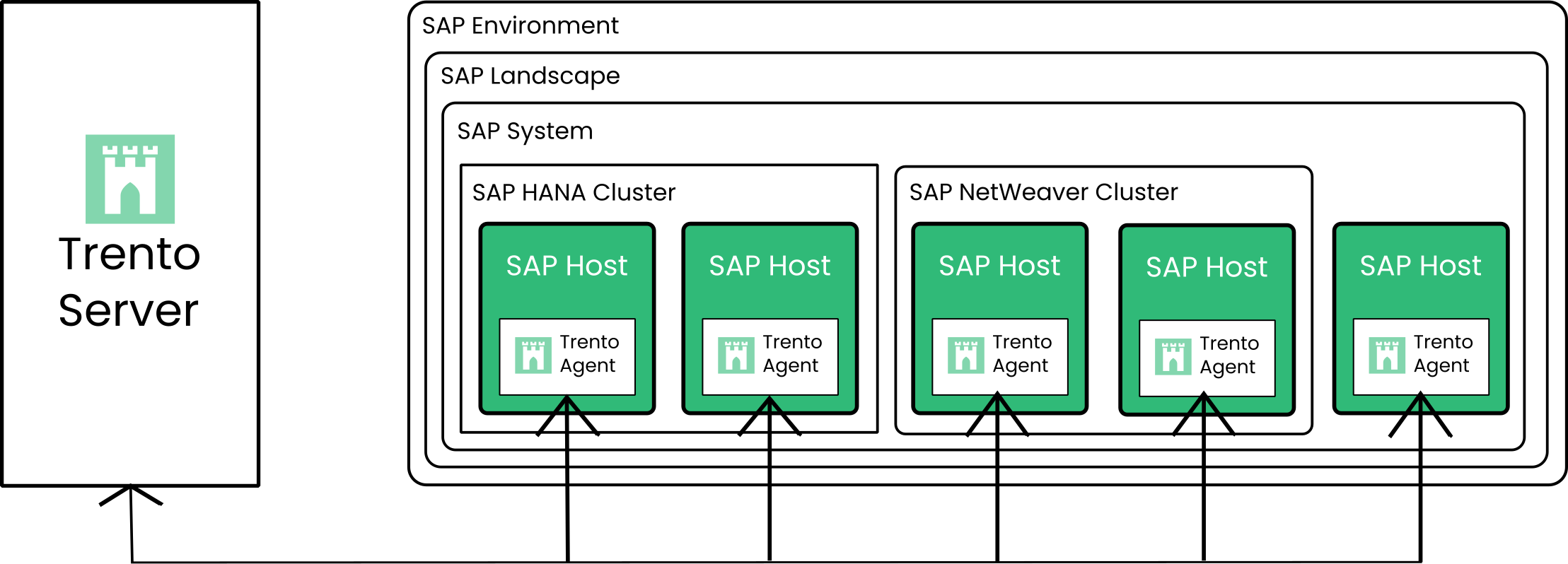

Trento Premium is the extended version of the Trento community project containing additional checks. Apart from their naming, both are comprehensive monitoring solutions consisting of two main components, the Trento Server and the Trento Agent. Both Trento variants provide the following features:

A simplified, reactive web UI targeting SAP admins

Automated discovery of SAP Systems, SAP Instances, SAP HANA clusters and ASCS/ERS clusters

Configuration validation for SAP HANA Scale-Up Performance-optimized two-node clusters deployed on Azure, AWS, GCP or on-premise bare metal platforms, including KVM and Nutanix

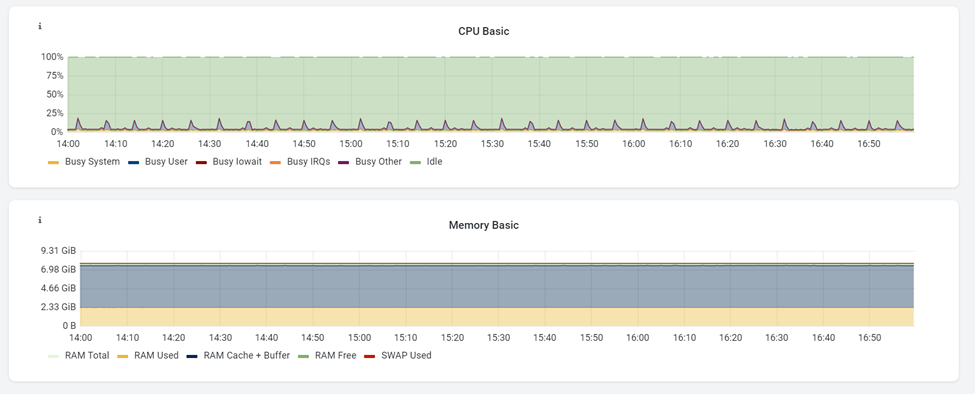

Monitoring of CPU and Memory usage at host level through basic integration with Grafana and Prometheus

Email alerting for critical events in the monitored landscape

The Trento Server is an independent, cloud native, distributed system, designed to run on a Kubernetes cluster. The Trento Server interacts with users via a Web front-end.

The Trento Agent is a single background process (trento agent) running on each host of the SAP infrastructure that is monitored.

For both Trento Premium and Trento Community, SUSE collects telemetry data relevant for further product development.

See Figure 1, “Architectural overview” for additional details.

2 Product lifecycle and update strategy #

Trento Premium is available at no extra cost for SLES for SAP subscribers. Particularly, Trento's two main components have the following product lifecycles:

- Trento Agent

Delivery mechanism: RPM package for SUSE Linux Enterprise Server for SAP Applications 15 SP1 and newer.

Supported runtime: SUSE Linux Enterprise Server for SAP Applications 15 SP1 and newer.

- Trento Server

Delivery mechanism: A set of container images from the SUSE public registry together with a Helm chart that facilitates their installation.

Kubernetes: The Trento Server runs on any current Cloud Native Computing Foundation (CNCF)-certified Kubernetes distribution based on the x86_64 architecture. Depending on your background and needs, SUSE supports several usage scenarios:

If you already use a CNCF-certified Kubernetes cluster, you can run the Trento Server in it.

If you have no Kubernetes cluster and want enterprise support, SUSE recommends SUSE Rancher Kubernetes Engine (RKE) version 1 or 2.

If you do not have a Kubernetes enterprise solution but you still want to try Trento Premium, SUSE Rancher's K3s provides you with an easy way to get started. Keep in mind that the default K3s installation process deploys a single-node Kubernetes cluster, which is not a recommended setup for a stable Trento production instance.

3 Requirements #

This section describes requirements for the Trento Server and its Trento Agents.

3.1 Trento Server requirements #

Running all the Trento Server components requires a minimum of 4 GB of RAM, two CPU cores and 64 GB of storage. When using K3s, such storage should be provided under /var/lib/rancher/k3s.

Trento is powered by event-driven technology. Registered events are stored in a PostgreSQL database with a retention period of 10 days. For each host registered with Trento, you need to allocate at least 1.5 GB of space in the PostgreSQL database.
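As a rough illustration of the per-host figure above, the following sketch computes the PostgreSQL space to reserve for a given number of registered hosts. The function name is hypothetical, and the calculation assumes that 1 GB is counted as 1024 MB.

```shell
# Hypothetical sizing helper: PostgreSQL space to reserve for Trento events.
# Assumption: 1.5 GB per registered host, with 1 GB counted as 1024 MB.
trento_db_space_mb() {
    hosts=$1
    echo $(( hosts * 1536 ))   # 1.5 GB = 1536 MB per host
}

# Example: a landscape with 20 registered hosts needs 30720 MB (30 GB)
trento_db_space_mb 20
```

Treat the result as a lower bound, since the text above states a minimum allocation.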

While the Trento Server supports various usage scenarios, depending on the existing infrastructure, it is designed to be cloud native and OS agnostic. It can be installed on the following services:

RKE1 (Rancher Kubernetes Engine version 1)

RKE2

any other CNCF-certified Kubernetes running on x86_64 architecture

A proper, production-ready installation of Trento Server requires Kubernetes knowledge. The Helm chart is meant to be used by customers lacking such knowledge or who want to get started quickly. However, the Helm chart delivers only a basic deployment of the Trento Server, with all the components running on a single node of the cluster.

3.2 Trento Agent requirements #

The resource footprint of the Trento Agent is designed to not impact the performance of the host it runs on.

The Trento Agent component needs to interact with several low-level system components that are part of the SUSE Linux Enterprise Server for SAP Applications distribution.

Trento requires unique machine identifiers (ids) on the hosts to be registered. Therefore, if any host in your environment is built as a clone of another one, ensure that you change the machine identifier as part of the cloning process before starting the Trento Agent on it.
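To spot cloned hosts before registering them, you could compare their identifiers. The following is a minimal sketch that assumes the identifier in question is the systemd machine ID stored in /etc/machine-id and that you have already collected one "HOSTNAME MACHINE_ID" pair per host. The function name and the sample values are hypothetical.

```shell
# Report machine IDs that appear on more than one host.
# Input (stdin): one "HOSTNAME MACHINE_ID" pair per line, for example
# collected by reading /etc/machine-id on every host (assumption).
find_duplicate_machine_ids() {
    awk '{ print $2 }' | sort | uniq -d
}

# Example with made-up values: hana01 and hana03 share the same ID
printf '%s\n' 'hana01 1111' 'hana02 2222' 'hana03 1111' | find_duplicate_machine_ids
```

On systemd-based hosts, a duplicated ID is commonly regenerated as root by removing /etc/machine-id and running systemd-machine-id-setup. Check the documentation of your distribution for the exact procedure.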

3.3 Network requirements #

From any Trento Agent host, the Web component of the Trento Server must be reachable via HTTP (port TCP/80) or via HTTPS (port TCP/443) if SSL is enabled.

From any Trento Agent host, the checks engine component of the Trento Server, called Wanda, must be reachable via Advanced Message Queuing Protocol or AMQP (port TCP/5672).

The Prometheus component of the Trento Server must be able to reach the Node Exporter in the Trento Agent hosts (port TCP/9100).

The SAP Basis administrator needs access to the Web component of the Trento Server via HTTP (port TCP/80) or via HTTPS (port TCP/443) if SSL is enabled.
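Before installing the agents, it can be useful to verify these connections. The following sketch defines a small helper that relies on the /dev/tcp pseudo-device of bash and on the coreutils timeout command. The function name and AGENT_HOST are hypothetical, and the three-second timeout is an arbitrary choice.

```shell
# port_open HOST PORT: succeeds if a TCP connection can be opened within 3 seconds.
# Relies on bash's /dev/tcp pseudo-device and the coreutils timeout command.
port_open() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}

# Example, run on a Trento Agent host:
#   port_open TRENTO_SERVER_HOSTNAME 80   && echo "Web component reachable"
#   port_open TRENTO_SERVER_HOSTNAME 5672 && echo "AMQP reachable"
# Example, run where the Prometheus component runs (AGENT_HOST is hypothetical):
#   port_open AGENT_HOST 9100 && echo "Node Exporter reachable"
```

A successful connection only shows that the port is open. It does not prove that the expected service is answering on it.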

3.4 Installation prerequisites #

Trento Server. Access to SUSE public registry for the deployment of Trento Server premium containers.

Trento Agents. A registered SUSE Linux Enterprise Server for SAP Applications 15 (SP1 or higher) distribution.

4 Installing Trento Server #

The product is under active development. Expect changes in the described installation procedure.

This section uses the following placeholders:

TRENTO_SERVER_HOSTNAME: the host name of the Trento Server host.

ADMIN_PASSWORD: the password that the SAP administrator will use to access the Web console. It should have at least 8 characters.

4.1 Installing Trento Server on an existing Kubernetes cluster #

Trento Server consists of a few components which are delivered as container images and meant to be deployed on a Kubernetes cluster. A manual deployment of these components in a production-ready fashion requires Kubernetes knowledge. Customers who lack such knowledge or who want to get started quickly with Trento can use the Trento Helm chart. This approach automates the deployment of all the required components on a single Kubernetes cluster node. To install Trento Server on an existing Kubernetes cluster with the Trento Helm chart, proceed as follows:

Install Helm:

curl https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 | bash

Connect Helm to the existing Kubernetes cluster.

Use Helm to install Trento Server with the Trento Helm chart:

helm upgrade \
  --install trento-server oci://registry.suse.com/trento/trento-server \
  --set trento-web.adminUser.password=ADMIN_PASSWORD

When using a Helm version lower than 3.8.0, an experimental flag must be set before the helm command:

HELM_EXPERIMENTAL_OCI=1 helm upgrade \
  --install trento-server oci://registry.suse.com/trento/trento-server \
  --set trento-web.adminUser.password=ADMIN_PASSWORD

To verify that the Trento Server installation was successful, open the URL of the Trento Web console (http://TRENTO_SERVER_HOSTNAME) from a workstation located in the SAP administrator's LAN.

4.2 Installing Trento Server on K3s #

If you do not have a Kubernetes cluster or have one but do not want to use it for Trento, SUSE Rancher's K3s provides you with an easy way to get started. All you need is a small server or VM (see Section 3.1, “Trento Server requirements” for minimum requirements). Then follow the steps in Procedure 1, “Manually installing Trento on a Trento Server host” to get Trento Server up and running.

The following procedure deploys Trento Server on a single-node K3s cluster. Such a setup is not recommended for production purposes.

Log in to the Trento Server host.

Install K3s:

Installing as user root:

# curl -sfL https://get.k3s.io | INSTALL_K3S_SKIP_SELINUX_RPM=true sh

Installing as non-root user:

> curl -sfL https://get.k3s.io | INSTALL_K3S_SKIP_SELINUX_RPM=true sh -s - --write-kubeconfig-mode 644

Install Helm as root:

# curl https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 | bash

Set the KUBECONFIG environment variable for the same user that installed K3s:

export KUBECONFIG=/etc/rancher/k3s/k3s.yaml

With the same user that installed K3s, use Helm to install Trento Server with the Helm chart:

helm upgrade \
  --install trento-server oci://registry.suse.com/trento/trento-server \
  --set trento-web.adminUser.password=ADMIN_PASSWORD

When using a Helm version lower than 3.8.0, an experimental flag must be set before the helm command:

HELM_EXPERIMENTAL_OCI=1 helm upgrade \
  --install trento-server oci://registry.suse.com/trento/trento-server \
  --set trento-web.adminUser.password=ADMIN_PASSWORD

Monitor the creation and start-up of the Trento Server pods and wait until they are all ready and running:

watch kubectl get pods

All pods should be in ready and running status.

Log out of the Trento Server host.

To verify that the Trento Server installation was successful, open the URL of the Trento Web console (http://TRENTO_SERVER_HOSTNAME) from a workstation located in the SAP administrator's LAN.

4.3 Installing Trento Server on K3s running on SUSE Linux Enterprise Server for SAP Applications 15 #

If you choose to deploy K3s on a VM with a registered SUSE Linux Enterprise Server for SAP Applications 15 distribution, you can install the package trento-server-installer and then execute the script install-trento-server. It runs Step 2 to Step 5 of Procedure 1, “Manually installing Trento on a Trento Server host” for you automatically.

4.4 Deploying Trento Server on selected nodes #

If you use a multi-node Kubernetes cluster, you can deploy Trento Server images on selected nodes by specifying the field nodeSelector in the helm upgrade command, with the following syntax:

HELM_EXPERIMENTAL_OCI=1 helm upgrade \
  --install trento-server oci://registry.suse.com/trento/trento-server \
  --set trento-web.adminUser.password=ADMIN_PASSWORD \
  --set prometheus.server.nodeSelector.LABEL=VALUE \
  --set postgresql.primary.nodeSelector.LABEL=VALUE \
  --set trento-web.nodeSelector.LABEL=VALUE \
  --set trento-runner.nodeSelector.LABEL=VALUE \
  --set grafana.nodeSelector.LABEL=VALUE

4.5 Enabling email alerts #

The email alerting feature notifies the SAP administrator about important changes in the SAP Landscape monitored by Trento.

Some of the reported events are:

Host heartbeat failed

Cluster health detected critical

Database health detected critical

SAP System health detected critical

This feature is disabled by default. It can be enabled at installation time or anytime at a later stage. In both cases, the procedure is the same and uses the following placeholders:

- SMTP_SERVER

The SMTP server designated to send alerting emails.

- SMTP_PORT

The port on the SMTP server.

- SMTP_USER

User name to access SMTP server.

- SMTP_PASSWORD

Password to access SMTP server.

- ALERTING_SENDER

Sender email for alerting notifications.

- ALERTING_RECIPIENT

Recipient email for alerting notifications.

The command to enable email alerting is the following:

HELM_EXPERIMENTAL_OCI=1 helm upgrade \
  --install trento-server oci://registry.suse.com/trento/trento-server \
  --set trento-web.adminUser.password=ADMIN_PASSWORD \
  --set trento-web.alerting.enabled=true \
  --set trento-web.alerting.smtpServer=SMTP_SERVER \
  --set trento-web.alerting.smtpPort=SMTP_PORT \
  --set trento-web.alerting.smtpUser=SMTP_USER \
  --set trento-web.alerting.smtpPassword=SMTP_PASSWORD \
  --set trento-web.alerting.sender=ALERTING_SENDER \
  --set trento-web.alerting.recipient=ALERTING_RECIPIENT

4.6 Enabling SSL #

Ingress may be used to provide SSL termination for the Web component of Trento Server. This allows encrypting the communication from the agent to the server, which is already secured by the corresponding API key. It also allows HTTPS access to the Web console with trusted certificates.

Configuration must be done in the tls section of the values.yaml file of the chart of the Trento Server Web component.

For details on the required Ingress setup and configuration, refer to: https://kubernetes.io/docs/concepts/services-networking/ingress/. Particularly, refer to section https://kubernetes.io/docs/concepts/services-networking/ingress/#tls for details on the secret format in the YAML configuration file.

Additional steps are required on the Agent side.

5 Installing Trento Agents #

The product is under active development. Expect changes in the described installation procedure.

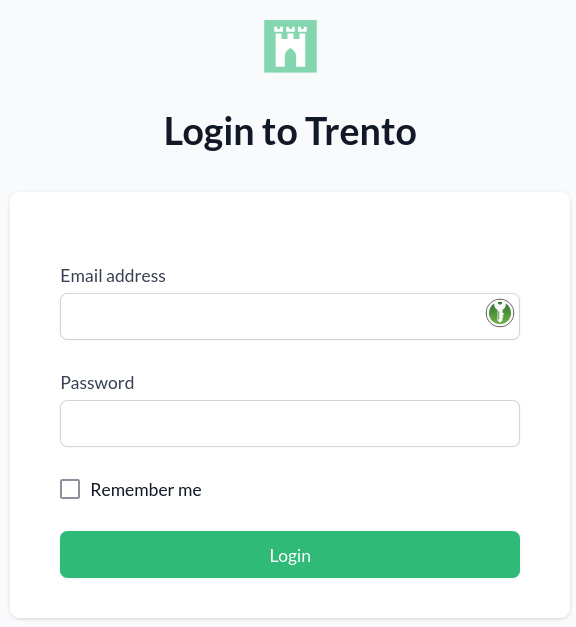

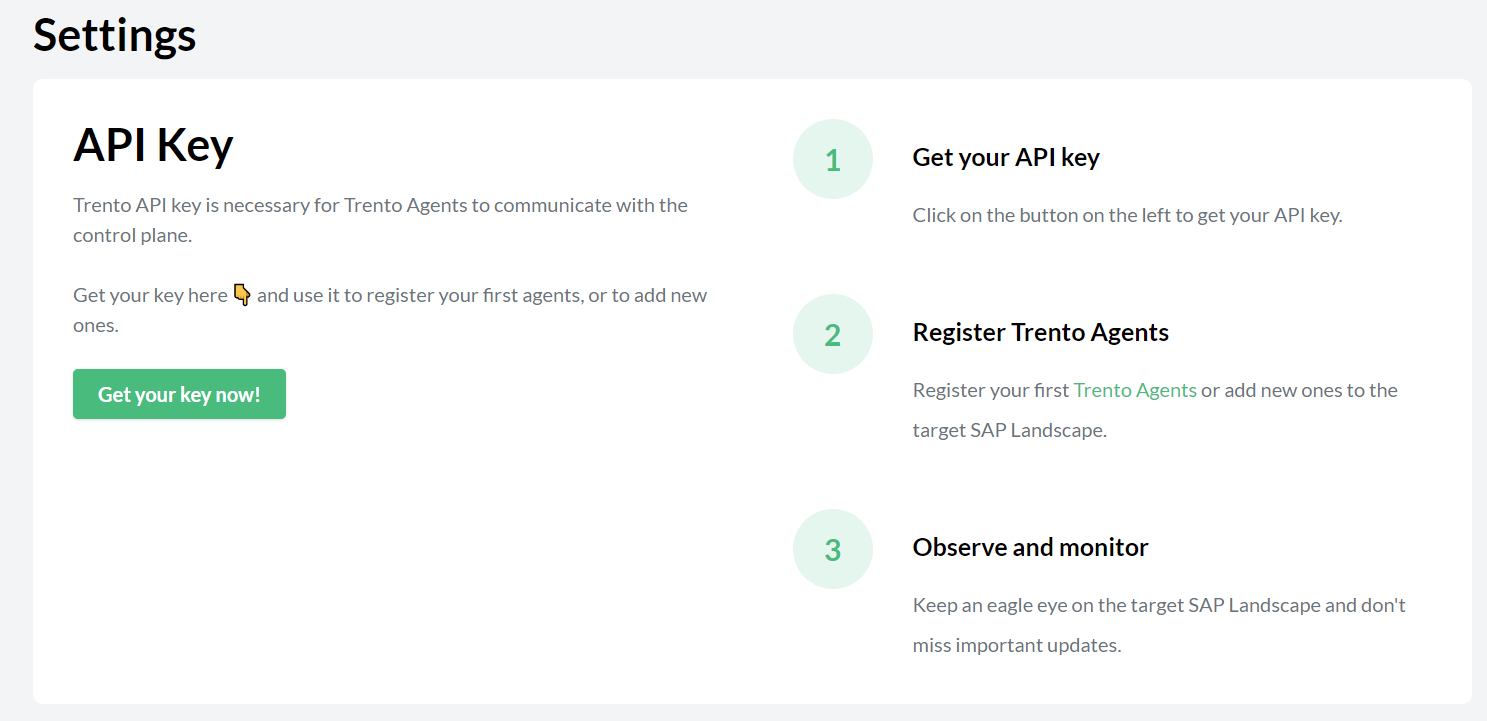

Before you can install a Trento Agent, retrieve the API key of your Trento Server. Proceed as follows:

Open the URL of the Trento Web console (http://TRENTO_SERVER_HOSTNAME). It prompts you for a user name and password.

Enter the credentials for the admin user (established when installing Trento Server) and log in. As this is the first time you access the console, you will be prompted to accept the license agreement. Accept it to continue. Otherwise, you cannot use Trento.

Once inside the console, go to Settings:

Click the button.

Write down the API key.

To install the Trento Agent on an SAP host and register it with the Trento Server, repeat the steps in Procedure 2, “Installing Trento Agents”:

Install the package:

> sudo zypper ref
> sudo zypper install trento-agent

Open the configuration file /etc/trento/agent.yml and uncomment (remove the leading #) the entries for facts-service-url, server-url and api-key. Update the values appropriately:

facts-service-url: the address of the AMQP service shared with the checks engine, where fact gathering requests are received. The right syntax is amqp://trento:trento@TRENTO_SERVER_HOSTNAME:5672.

server-url: URL for the Trento Server (http://TRENTO_SERVER_HOSTNAME)

api-key: the API key retrieved from the Web console
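The three entries described above could be rendered as follows. This is a minimal sketch: the function name is hypothetical, it only prints the three lines for review, and it does not touch /etc/trento/agent.yml. Any other entries that the shipped configuration file may contain are not covered here.

```shell
# Print the three agent settings described above.
# $1: Trento Server host name, $2: API key retrieved from the Web console
render_agent_config() {
    cat <<EOF
facts-service-url: amqp://trento:trento@$1:5672
server-url: http://$1
api-key: $2
EOF
}

# Example with the placeholders used in this guide
render_agent_config TRENTO_SERVER_HOSTNAME API_KEY
```

If SSL termination is enabled on the server side, the server-url value would use https instead of http.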

If SSL termination has been enabled on the server side (refer to Section 4.6, “Enabling SSL”), you can encrypt the communication from the agent to the server as follows:

Provide an HTTPS URL instead of an HTTP one.

Import the certificate from the CA that has issued your Trento Server SSL certificate into the Trento Agent host as follows:

Copy the CA certificate in PEM format to /etc/pki/trust/anchors/. If your CA certificate is in CRT format, convert it to PEM using the openssl command as follows:

openssl x509 -in mycert.crt -out mycert.pem -outform PEM

Run the update-ca-certificates command.

Start the Trento Agent:

> sudo systemctl enable --now trento-agent

Check the status of the Trento Agent:

> sudo systemctl status trento-agent
● trento-agent.service - Trento Agent service
   Loaded: loaded (/usr/lib/systemd/system/trento-agent.service; enabled; vendor preset: disabled)
   Active: active (running) since Wed 2021-11-24 17:37:46 UTC; 4s ago
 Main PID: 22055 (trento)
    Tasks: 10
   CGroup: /system.slice/trento-agent.service
           ├─22055 /usr/bin/trento agent start --consul-config-dir=/srv/consul/consul.d
           └─22220 /usr/bin/ruby.ruby2.5 /usr/sbin/SUSEConnect -s
[...]

Repeat this procedure in all SAP hosts that you want to monitor.

6 Performing configuration checks #

One of Trento's main features is to provide checks for the HANA scale-up performance optimized two-node clusters in your SAP Landscape. To run these checks, proceed as follows:

Log in to Trento.

In the left panel, click the entry for Pacemaker clusters.

In the list, search for an SAP HANA cluster.

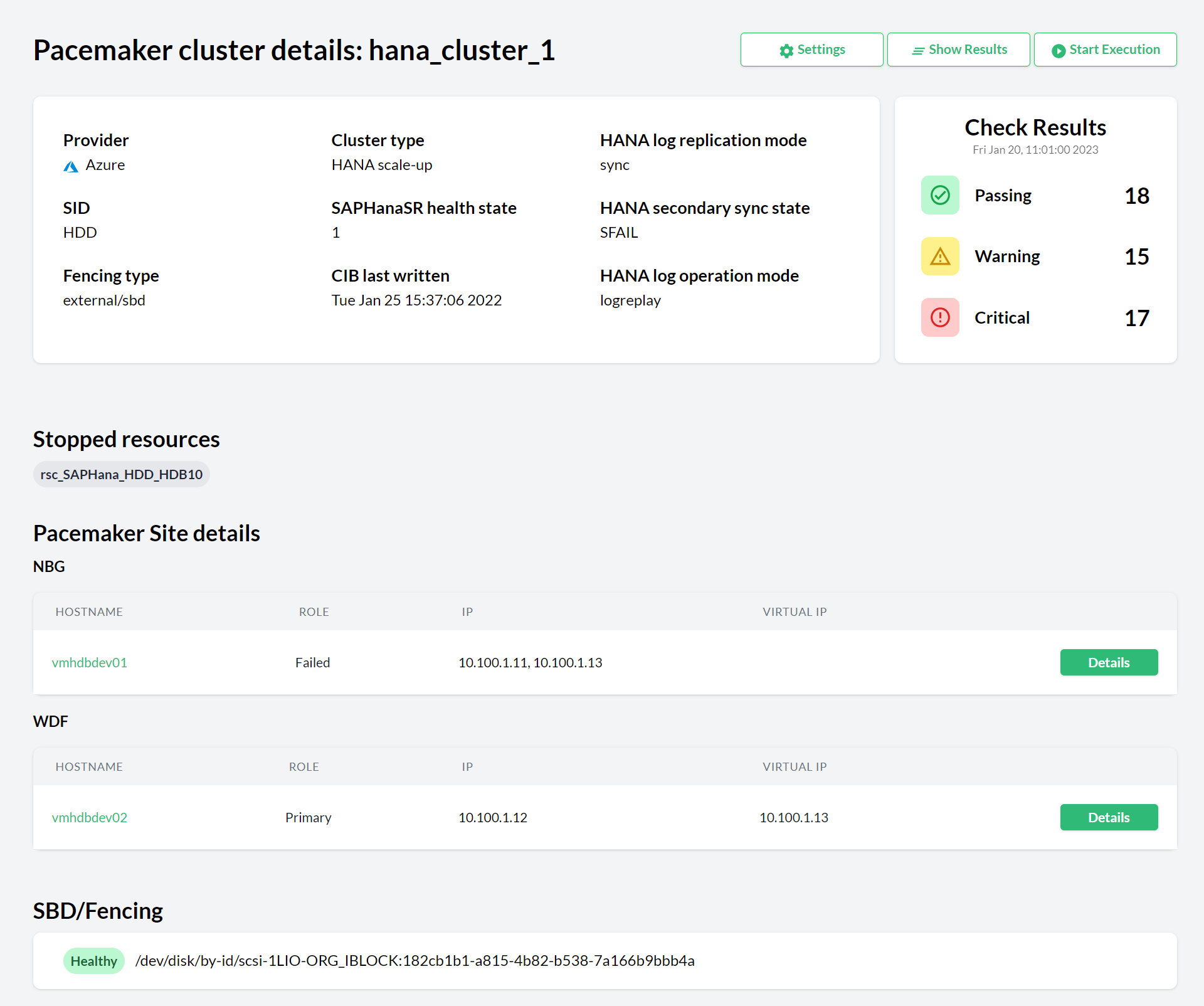

Click the respective cluster name. The cluster details view opens.

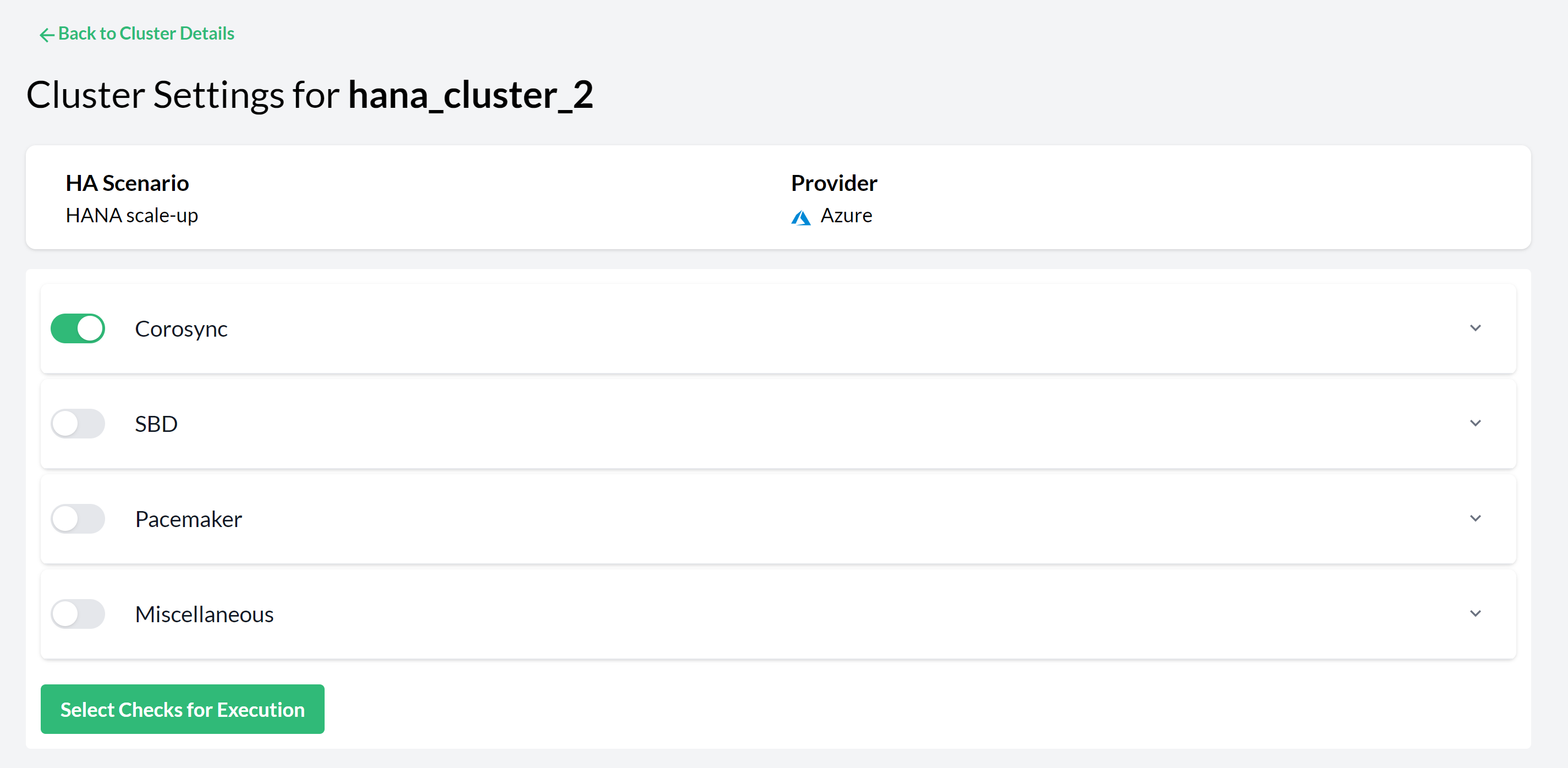

Figure 2: Pacemaker cluster details #

Click the button that changes the cluster settings of the respective cluster. For checks to be executed, a checks selection must be made. Select the checks to be executed and confirm the selection with the corresponding button. See Figure 3, “Pacemaker Cluster Settings—Checks Selection”:

Figure 3: Pacemaker Cluster Settings—Checks Selection #

At this moment, you can either wait for Trento to execute the selected checks or trigger an execution immediately by clicking the button that has appeared in the Checks Selection tab.

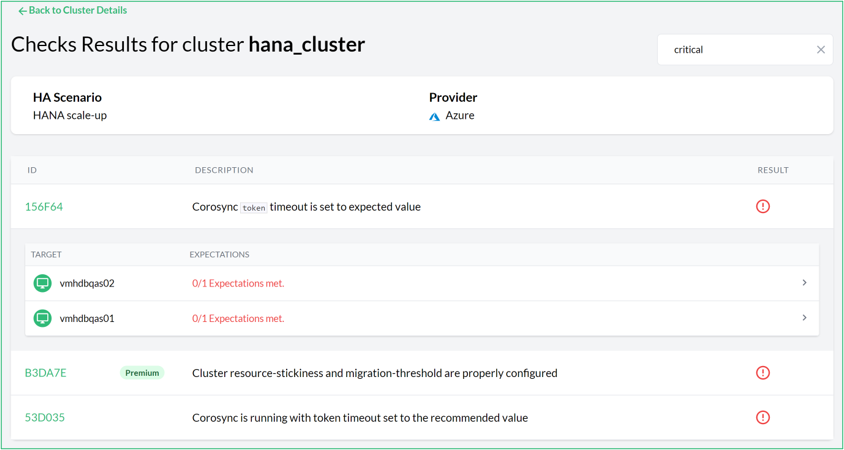

Investigate the result in the Checks Results view. Each row in this view shows you a check ID, a short description of the check and the check execution result. By clicking on a row, a section will open providing information about the execution on each node of the cluster. See Figure 4, “Check results for a cluster”:

Figure 4: Check results for a cluster #

The result of a check execution can be passing, warning or critical:

Passing means that the checked configuration meets the recommendation.

Warning means that the recommendation is not met but the configuration is not critical for the proper running of the cluster.

Critical means that either the execution itself errored (for example, a timeout) or the recommendation is not met and is critical for the well-being of the cluster.

Use the filter to reduce the list to only show, for example, critical results.

Click a check's link to open the view of this check. This shows you an abstract and how to remedy the problem. The section contains links to the documentation of the different vendors to provide more context when necessary. Close the view with the Esc key or click outside of the view.

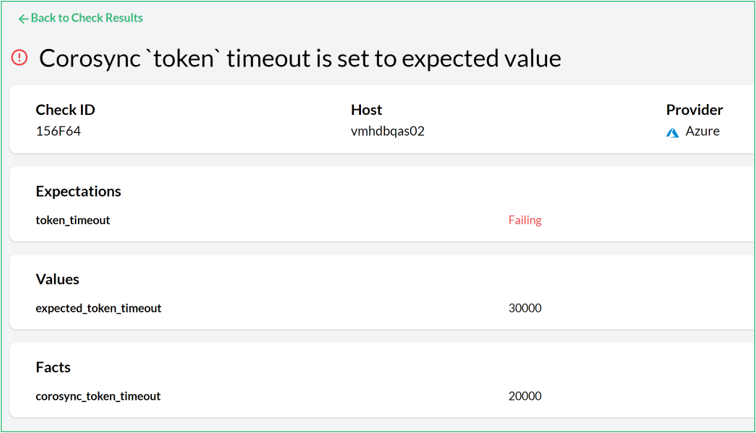

For each not-met expectation, there will be a detailed view providing information about it: what facts were gathered, what values were expected and what was the result of the evaluation. This will help users understand better why a certain configuration check is failing:

Figure 5: Non-met expectation detail view #

Once a check selection for a given cluster has been made, Trento executes the selected checks automatically every five minutes and updates the results accordingly. A spinning check execution result icon means that an execution is running.

7 Using Trento Web console #

When you access the Trento Web console for the first time, it asks you to accept the license. Accept it to continue.

After you have accepted the license, Trento can be used. The left sidebar in the Trento Web console contains the following entries:

Determine at a glance the health status of your SAP environment.

Overview of all registered hosts running the Trento Agent.

Overview of all discovered Pacemaker clusters.

Overview of all discovered SAP Systems, identified by the corresponding system IDs.

Overview of all discovered SAP HANA databases, identified by the corresponding system IDs.

Overview of the catalog of configuration checks that Trento may perform for the different cluster components (Pacemaker, Corosync, SBD, etc.) in any of the supported platforms: Azure, AWS, GCP, or on-premise bare metal (default).

Lets you retrieve the API key for this particular installation, which is required for the Trento Agent configuration.

Shows the Trento flavor, the current server version, a link to the GitHub repository of the Trento Web component, and the number of registered SUSE Linux Enterprise Server for SAP Applications subscriptions that have been discovered.

7.1 Getting the global health state #

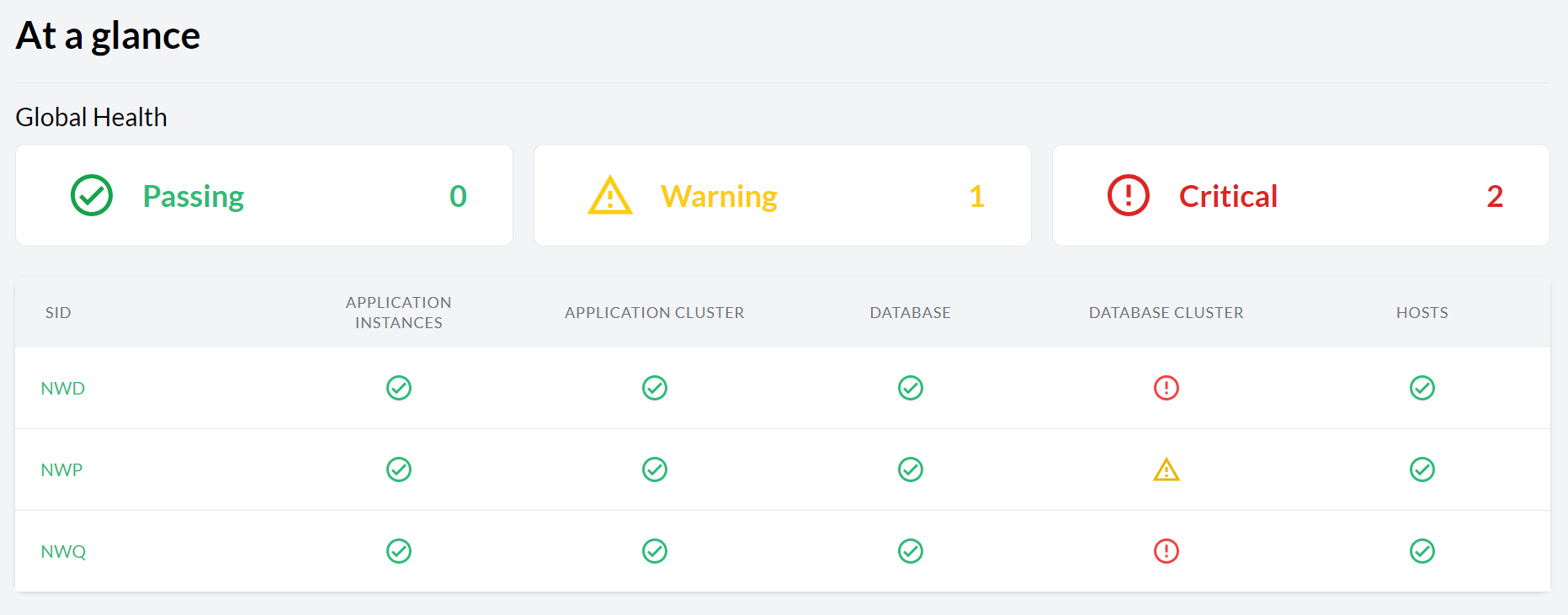

The dashboard allows you to determine at a glance the health status of your SAP environment. It is the home page of the Trento Web console, and you can go back to it any time by clicking its entry in the left sidebar.

The health status of a registered SAP system is the compound of its health status at three different layers representing the SAP architecture:

It reflects the heartbeat of the Trento Agent and, when applicable, the compliance status.

The status here is based on the running status of the cluster and the results of the configuration checks.

It collects the status of the HANA instances as returned by sapcontrol.

It summarizes the status of the application instances as returned by sapcontrol.

Complementing the operating system layer, you also have information about the health status of the HA components, whenever they exist:

Database cluster: the status here is based on the running status of the database cluster and the results of the selected configuration checks.

Application cluster: the status here is based on the running status of the ASCS/ERS cluster and, when available, the results of the selected configuration checks.

The dashboard groups systems in three different health boxes (see Figure 6, “Dashboard with the global health state”):

It shows the number of systems with all layers in passing (green) status.

It shows the number of systems with at least one layer in warning (yellow) status and the rest in passing (green) status.

It shows the number of systems with at least one layer in critical (red) status.

The health boxes in the dashboard are clickable. By clicking on one particular box, you filter the dashboard by systems with the corresponding health status. In large SAP environments, this helps the SAP administrator find out which systems are in a given status.

The icons representing the health summary of a particular layer contain links to the views in the Trento console that can help determine where an issue is coming from:

Link to the Hosts overview, filtered by SID equal to the SAPSID and the DBSID of the corresponding SAP system.

Link to the corresponding SAP HANA Cluster Details view.

Link to the corresponding HANA Database Details view.

Link to the corresponding ASCS/ERS Cluster Details view.

Link to the corresponding SAP System Details view.

A grey status is returned when either a component does not exist, or it is stopped (as returned by sapcontrol), or its status is unknown (for instance, if a command to determine the status fails).

Grey statuses are not yet counted in the calculation of the global health status.
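The grouping rules described in this section can be sketched as a small function. It is only an illustration of the rules as stated here, not the logic Trento actually uses. The function name and the status keywords are hypothetical.

```shell
# Derive the health box of a system from the status of its layers.
# Arguments: one status per layer (passing, warning, critical or grey).
system_health() {
    result=passing
    for layer in "$@"; do
        case $layer in
            critical) echo critical; return ;;   # one critical layer is enough
            warning)  result=warning ;;
            # passing and grey leave the result unchanged (grey is not counted)
        esac
    done
    echo "$result"
}

# Example: one layer in warning, the rest passing or grey
system_health passing warning grey passing
```

In this sketch, a system whose layers are all grey ends up in the passing group. The text above does not say how such a system is shown in the dashboard.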

7.2 Viewing the status #

Getting to know the status of a system is an important task for every administrator. The status allows you to see if some of your systems need to be examined further.

The following subsections give you an overview of specific parts of your SAP Landscape to show their state. Each status view shows you an overview of the health states (see details in The three different health states).

7.2.1 Viewing the status of hosts #

To display the lists of registered hosts and their details, proceed as follows:

Log in to the Trento Web console.

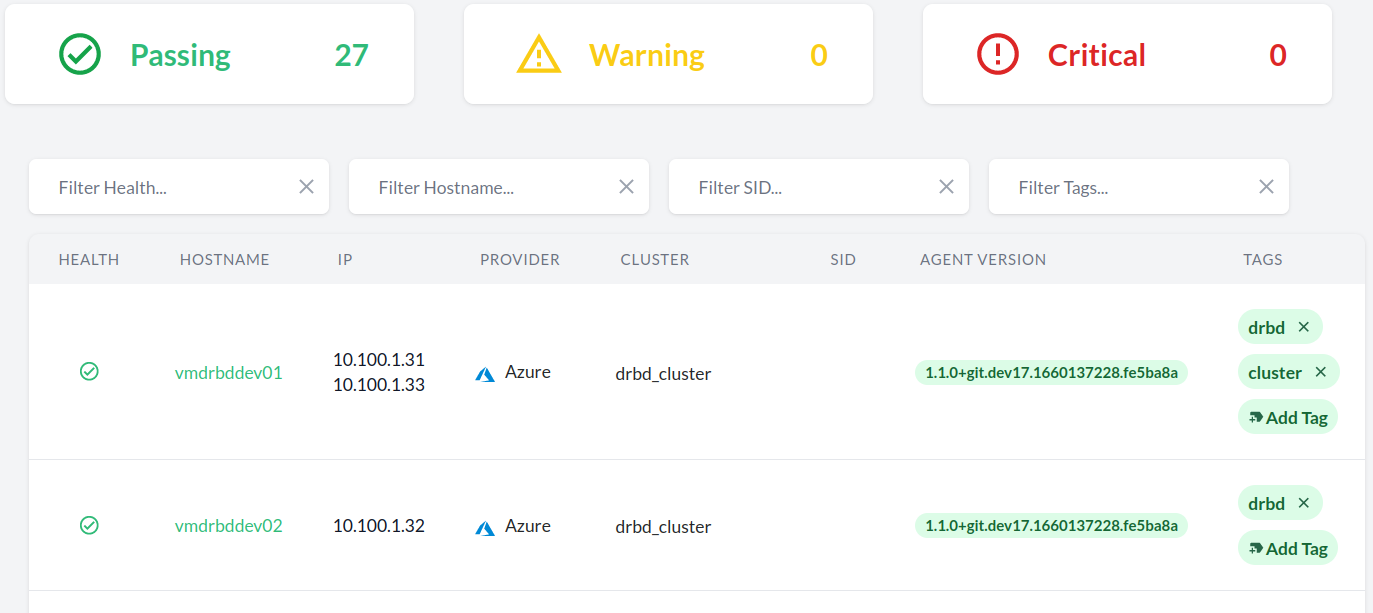

Click the hosts entry in the left sidebar to show a summary of the state for all hosts (see Figure 7, “Hosts entry”).

Figure 7: Hosts entry #

To investigate the specific host details, click the host name in the respective column to open the corresponding view. If the list is too big, reduce the list by providing filters.

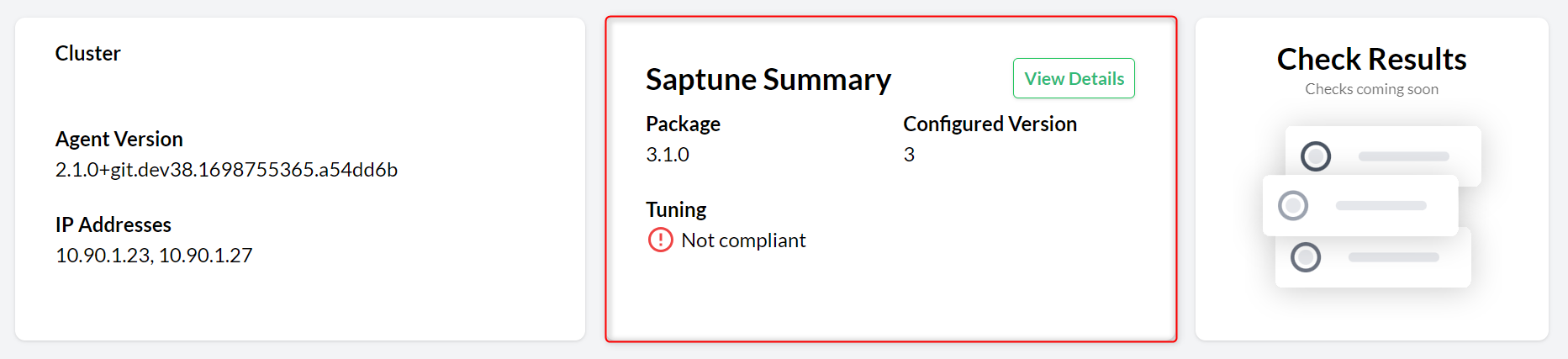

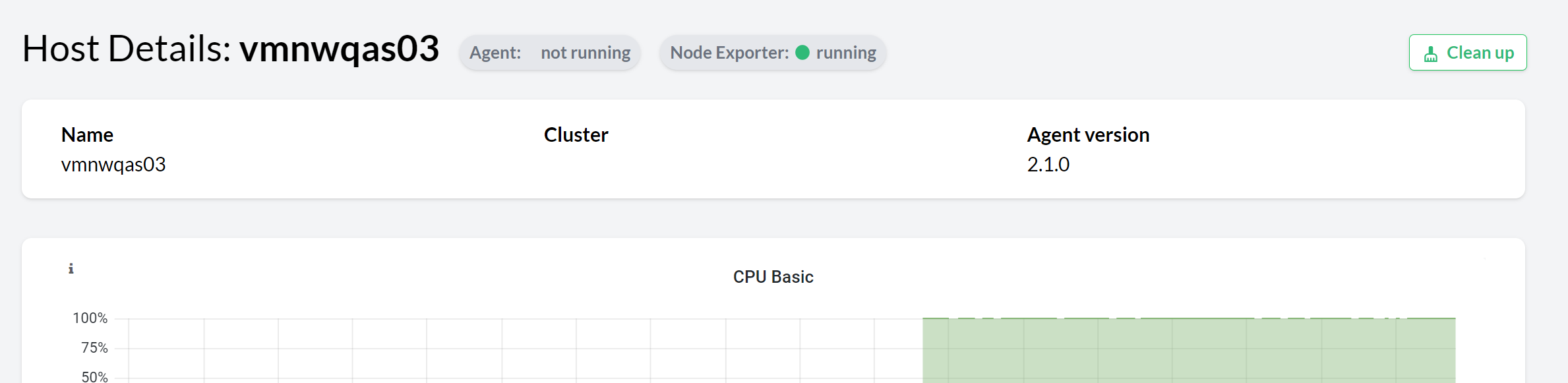

Clicking on a host name opens the corresponding view. This view provides the following:

A section that shows the status of both the Trento Agent and the Node Exporter and provides the host name, the cluster name (when applicable), the Trento Agent version and the host IP addresses.

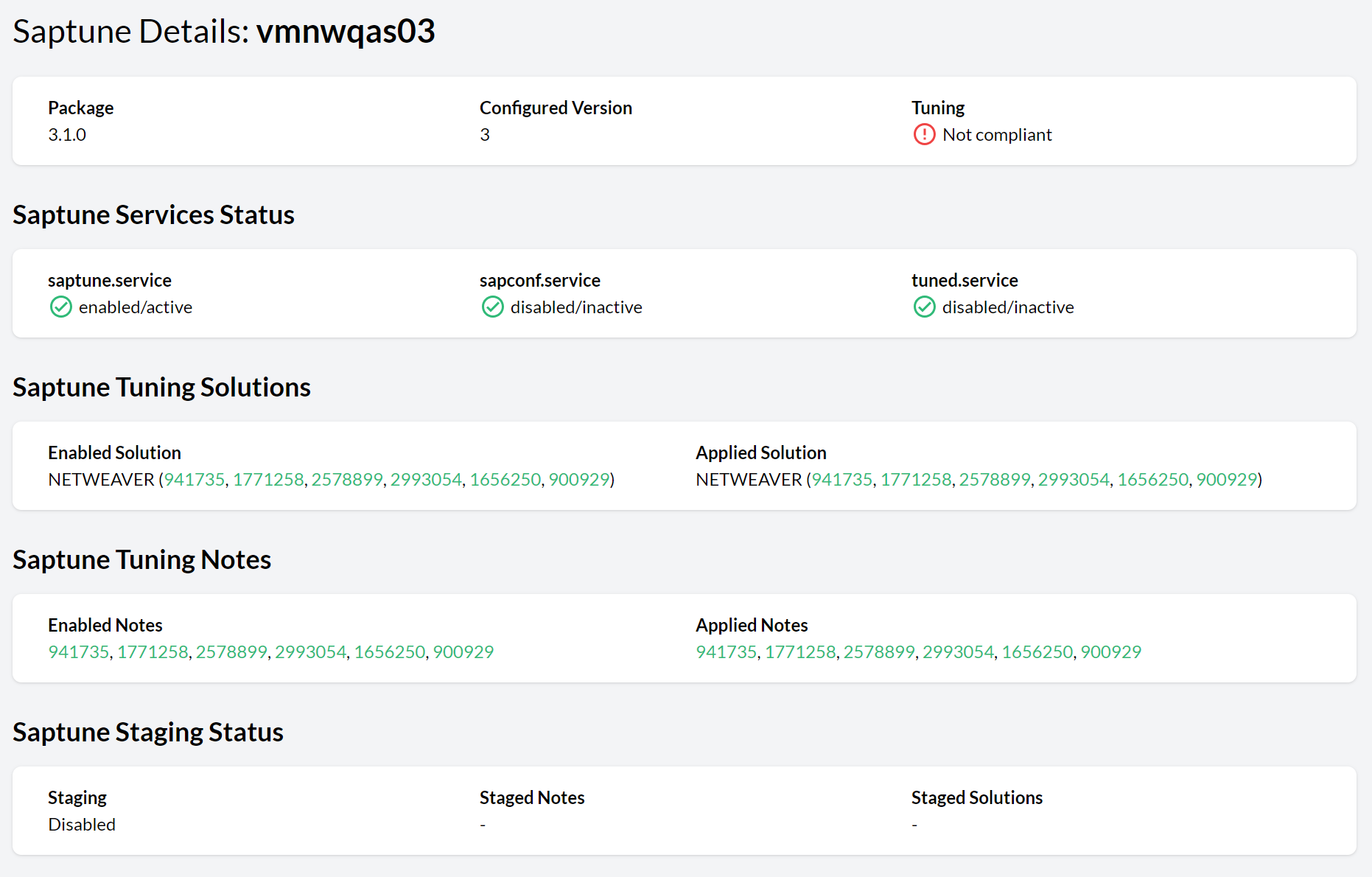

saptune summary section: saptune is a solution that comes with SUSE Linux Enterprise Server for SAP Applications and allows SAP administrators to ensure that their SAP hosts are properly configured to run the corresponding SAP workloads. The integration of saptune in the Trento console gives the SAP administrator visibility over the tool even when they are not working at operating system level. The integration supports saptune 3.1.0 and higher, and includes the addition of the host tuning status in the aggregated health status of the host.

Figure 8: saptune summary section #

If an SAP workload is running on the host but no saptune or a version lower than 3.1.0 is installed, a warning is added to the aggregated health status of the host. When saptune version 3.1.0 or higher is installed, a details view is also available showing detailed information about the saptune status:

Figure 9: saptune details view #

Checks results summary section: as soon as host-specific checks become available in the catalog, this section will show a summary of the execution results.

A section that shows the CPU and memory usage for the specific host.

A section that, when applicable, shows the name of the cloud provider, the name of the virtual machine, the name of the resource group it belongs to, the location, the size of the virtual machine, and other information.

A section that, when applicable, lists the ID, SID, type, features, and instance number of any SAP instance running on the host (SAP NetWeaver or SAP HANA).

A section that lists the different components or modules that are part of the subscription. For each one of them, it provides the architecture, the version and type, the registration and subscription status as well as the start and end dates of the subscription.

7.2.2 Viewing the Pacemaker cluster status #

To display a list of all available Pacemaker clusters and their details, proceed as follows:

Log in to the Trento Web console.

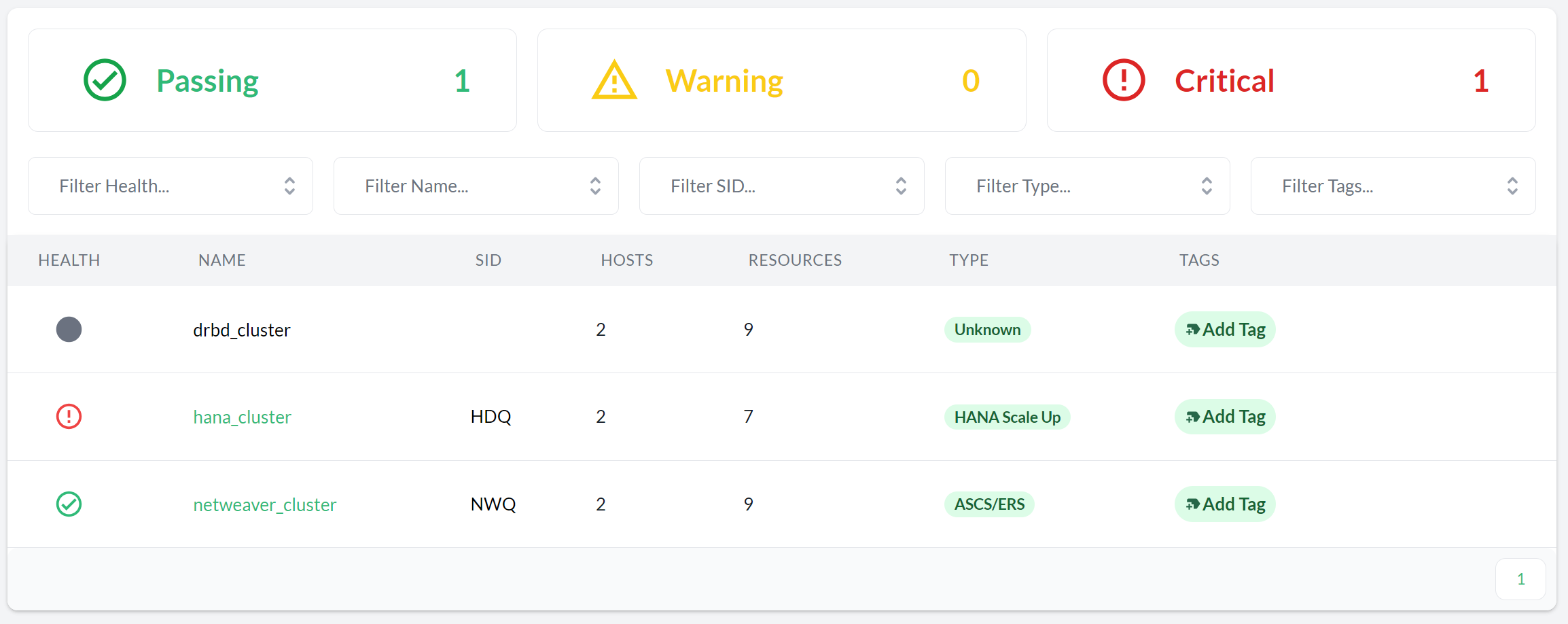

Click the Pacemaker clusters entry in the left sidebar to show a summary of the state for all Pacemaker clusters (see Figure 10, “Pacemaker clusters”).

Figure 10: Pacemaker clusters #

To investigate the specific Pacemaker cluster details, click the cluster name in the respective column to open the corresponding view. If the list is too big, reduce the list by providing filters.

The detail views of a HANA scale-up cluster and an ASCS/ERS cluster are different:

The detail view of a HANA scale-up cluster provides the following:

The buttons used to enable or disable checks and to start them. If you want to perform specific checks, proceed with Step 5 of Procedure 3, “Performing configuration checks”.

The cloud provider, the cluster type, HANA log, SID (when applicable), SAPHanaSR health state, HANA secondary sync state, fencing type, when the CIB was last written, and HANA log operation mode.

A section with a summary of the health of the clusters based on the runtime status and the selected configuration checks for each one of them (passed, warning and critical).

A section with a summary of resources which have been stopped on the cluster.

A section with a summary of the sites NBG and WDF. Both list the host name, their role, the IP and virtual IP address. You can also open a view with the attributes of the site and their resources. Close this view with the Esc key.

A section with a status and the SBD device.

The detail view of an ASCS/ERS cluster provides the following:

A section showing the cloud provider, the cluster type, fencing type and when the CIB was last written.

Another section showing the SAP SID, the Enqueue Server version, whether the ASCS and ERS are running on different hosts or not and whether the instance filesystems are resource based or not. This is a multi-tab section. When multiple systems share the same cluster, there will be a tab for each system in the cluster and you can scroll left and right to go through the different systems.

A section that becomes enabled as soon as configuration checks for this type of cluster are added to the catalog.

A section with a summary of resources which have been stopped on the cluster.

A section showing the following for each node in the cluster: the host name, the role, the virtual IP address and, in case of resource managed filesystems, the full mounting path. You can also open a view with the attributes and resources associated with that particular role. Close this view with the Esc key.

This section is system specific. It shows the information corresponding to the system selected in the multi-tab section above.

A section with a status and a list of the SBD devices.

7.2.3 Viewing the SAP Systems status #

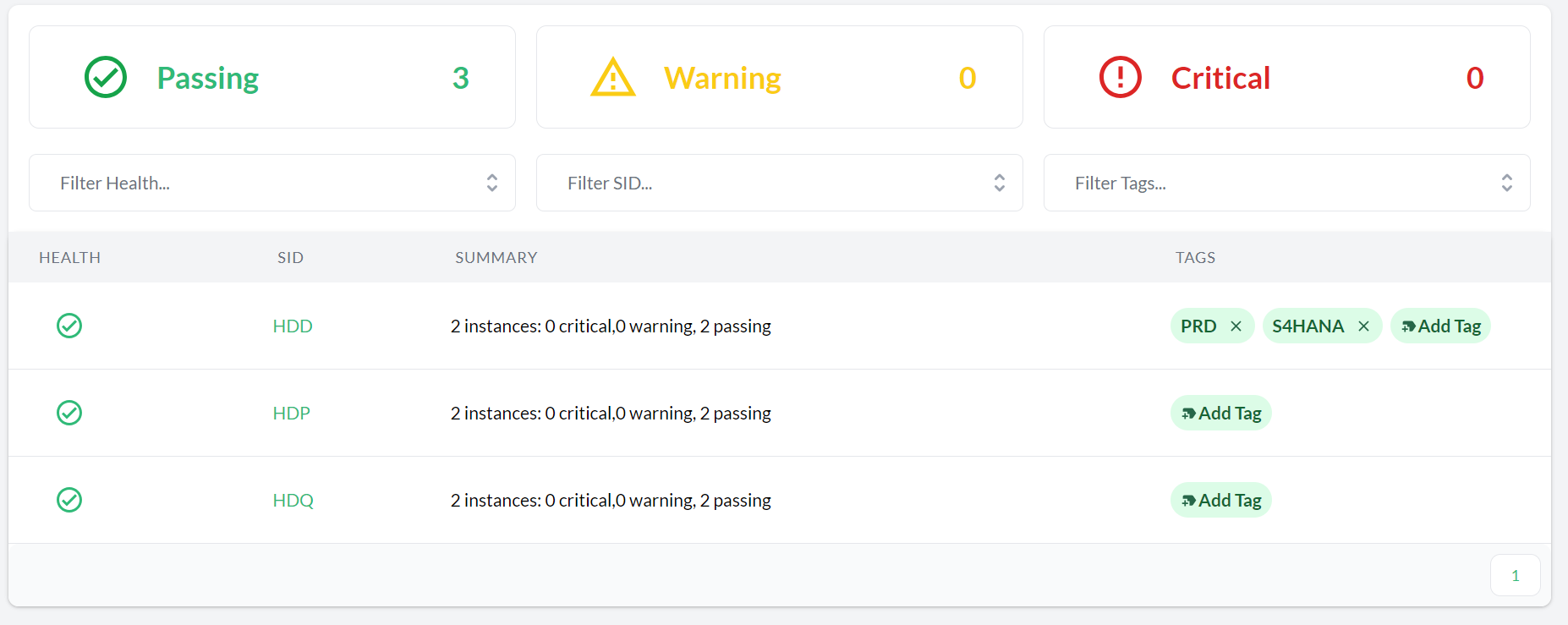

To display a list of all available SAP Systems and their details, proceed as follows:

Log in to the Trento Web console.

Click the SAP Systems entry in the left sidebar to show a summary of the state for all SAP Systems (see Figure 11, “SAP Systems”).

Figure 11: SAP Systems #

Decide which part you want to examine. You can view the details of an SAP System entry or the details of its database. If the list is too big, reduce the list by providing filters.

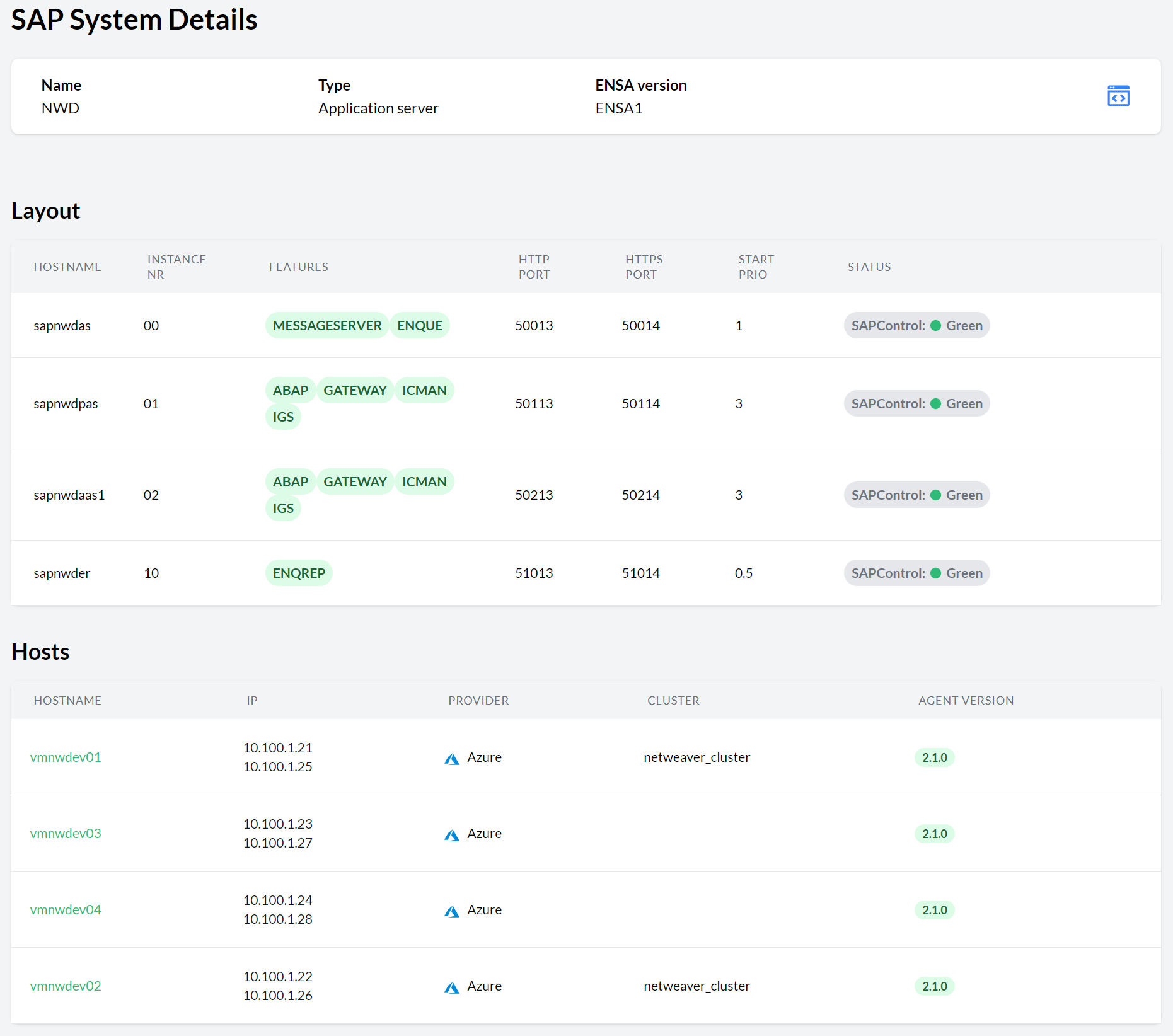

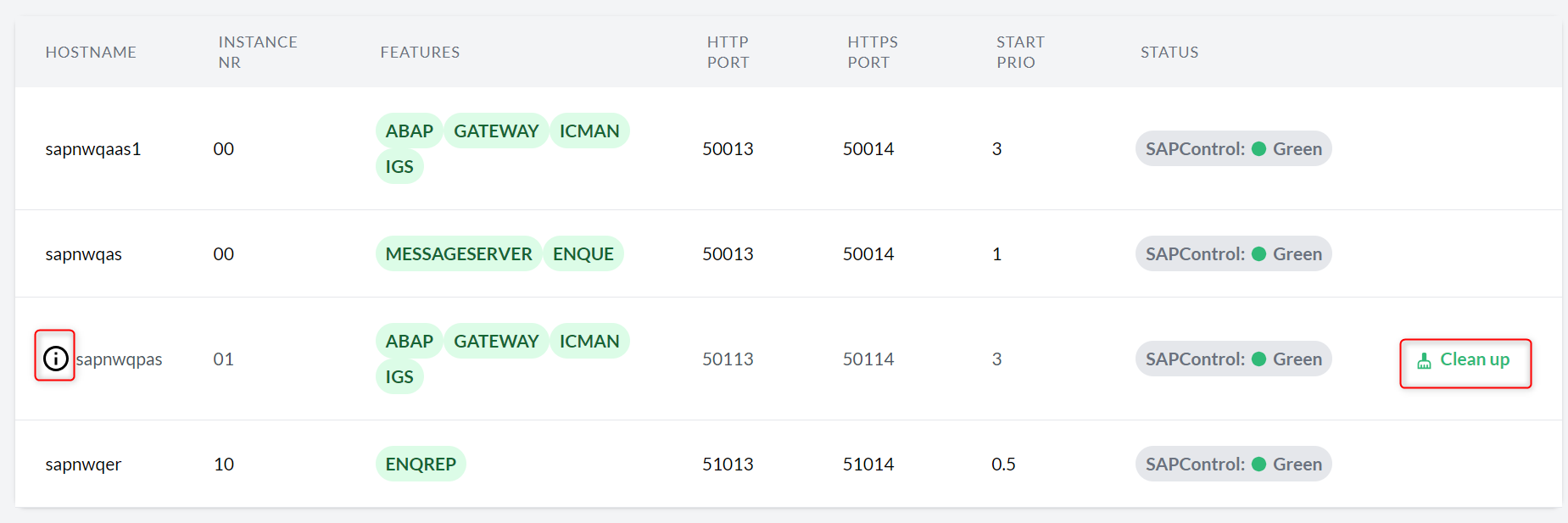

If you click an entry, the corresponding details view opens. This view provides the following:

SAP System details #The and of this SAP System.

A section that lists, for each instance, the virtual host name, instance number, features (processes), HTTP and HTTPS ports, start priority, and SAPControl status.

A section with the host name, the IP address, the cloud provider (when applicable), the cluster name (when applicable), and the Trento Agent version for each listed host. When you click the host name, it takes you to the corresponding view.

Figure 12: SAP System Details #

7.2.4 Viewing the SAP HANA database status #

To display a list of all available SAP HANA databases and their details, proceed as follows:

Log in to the Trento Web console.

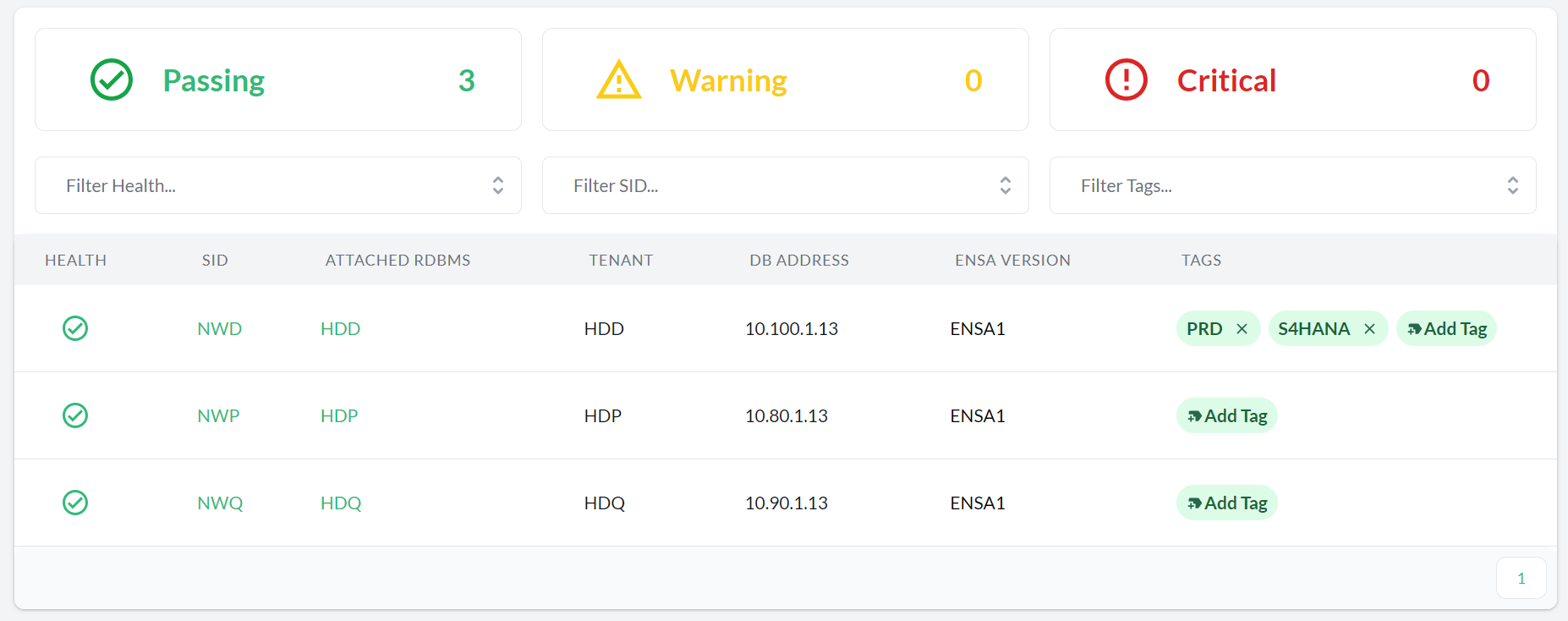

Click the HANA databases entry in the left sidebar to show a summary of the state for all SAP HANA databases (see Figure 13, “HANA databases”).

Figure 13: HANA databases #

To investigate the specific SAP HANA database details, click the respective name to open the corresponding view. If the list is too big, reduce the list by providing filters.

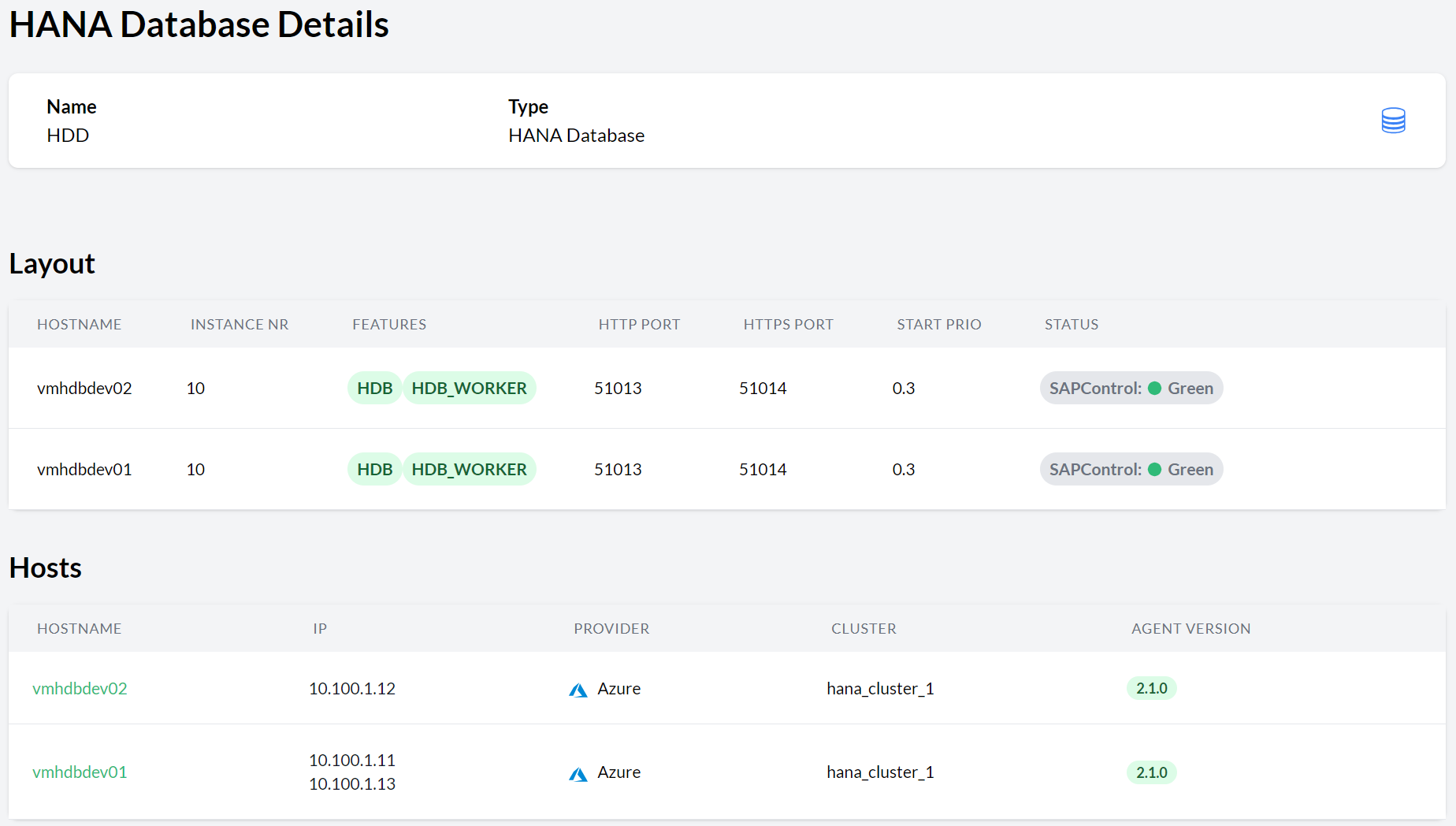

Clicking one of the entries opens the detail view. This view provides the following:

details view #The and of this SAP System.

A section that lists all related SAP HANA instances with their corresponding virtual host name, instance number, features (roles), HTTP/HTTPS ports, start priorities, and SAPControl status.

A section that lists the hosts where all related instances are running. For each host, it provides the host name, the local IP address(es), the cloud provider (when applicable), the cluster name (when applicable), the system ID, and the Trento Agent version.

Clicking on a host name takes you to the corresponding view.

Figure 14: HANA Database details #

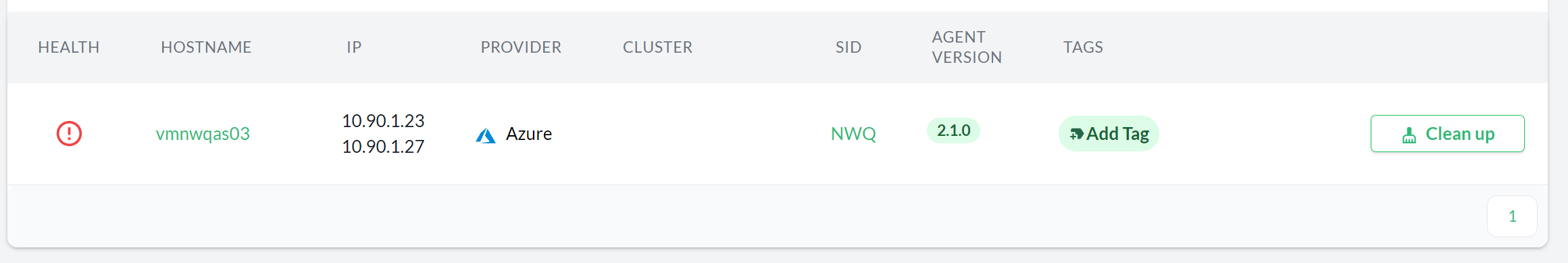

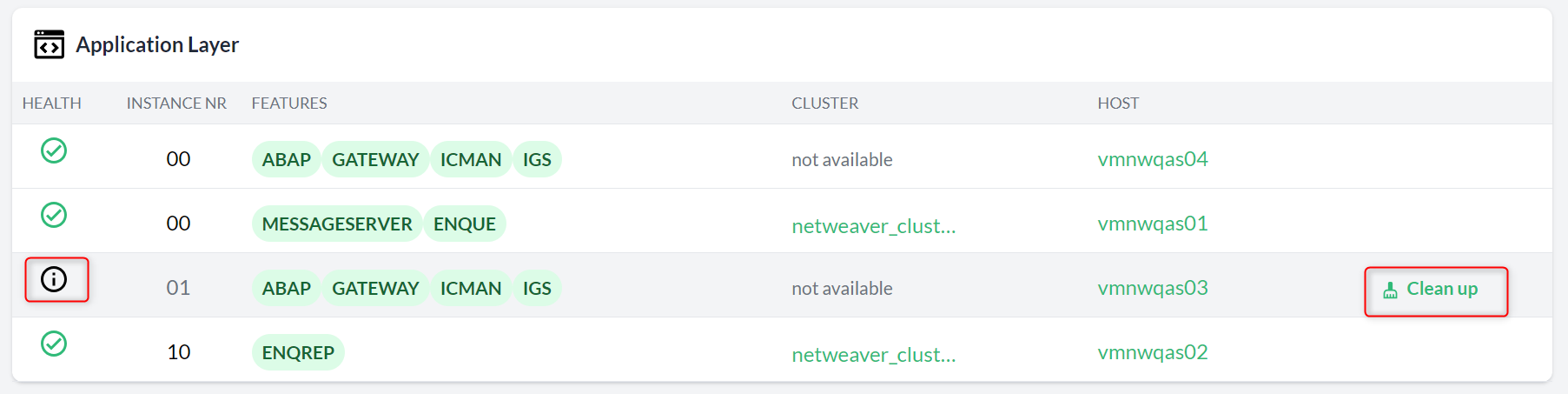

7.3 Cleaning up hosts and instances #

When the heartbeat of an agent fails, an option to clean up the corresponding host shows up both in the overview and in the corresponding details view.

When the user clicks on the cleanup button, a box will open asking for confirmation. If the user grants it, all the components discovered by the agent in that particular host, including the host itself and other components that might depend on the ones running on the host, will be removed from the console. For example, if the user cleans up the host where the primary application server of an SAP System is registered, the entire SAP System will be removed from the console.

Similarly, when a registered application or SAP HANA instance is no longer discovered, an option to clean it up will show up both in the corresponding overview and the corresponding details view.

When the user clicks the cleanup button, a box will open asking for confirmation. If the user grants it, the instance will be removed from the console along with any possible dependencies. For example, if the user cleans up the ASCS instance of an SAP system, the entire SAP system will be removed from the console.

7.4 Managing tags #

Tags are a means to label specific objects with a purpose, location, owner, or any other property you like. Such objects can be hosts, clusters, databases, or SAP systems. Tags make it easier to distinguish and show all these different objects, making your lists more readable and searchable. You can use any text you like to create your tags except blank spaces and special characters other than + - = . , _ : and @.
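The character rule above can be sketched as a small shell check, for example to pre-validate tag names before typing them into the console. This is only an illustration: `is_valid_tag` is a hypothetical helper, not part of Trento, and it assumes that "any text" means letters and digits plus the listed special characters.

```shell
# is_valid_tag TAG: hypothetical helper, not part of Trento.
# Succeeds when TAG is non-empty and contains only letters, digits,
# and the special characters + - = . , _ : @ (assumption based on the
# rule described in the text).
is_valid_tag() {
  tag=$1
  [ -n "$tag" ] || return 1
  case $tag in
    # Any character outside the allowed set makes the tag invalid.
    *[!A-Za-z0-9+=.,_:@-]*) return 1 ;;
  esac
  return 0
}
```

For example, `is_valid_tag "env:prod"` succeeds, while `is_valid_tag "my tag"` fails because of the blank space.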

The following subsection shows how you can add, remove, and filter objects based on your tags.

7.4.1 Adding tags to hosts, clusters, databases, and SAP Systems #

To add one or more tags to your objects, do the following:

Log in to Trento.

In the Trento dashboard, go to the overview corresponding to the object for which you want to create tags. For example, the hosts overview.

In the overview, search for the host you want to add a tag to.

In the column, click the entry.

Enter the respective tag and finish with Enter.

Repeat this procedure to assign as many tags as you want. It can be on the same or on a different host.

After you have finished the above procedure, the changed host contains one or more tags.

The same principle applies to the other objects in Trento, be it clusters, databases, or SAP systems.

7.4.2 Removing tags #

If you want to remove existing tags, click the respective part in the dashboard:

Log in to Trento.

In the Trento dashboard, go to the overview corresponding to the object for which you want to remove tags. For example, the hosts overview.

In the overview, search for the host you want to remove a tag from.

In the column, click the icon to remove the tag.

Repeat this procedure to remove as many tags as you want. It can be on the same or on a different host.

7.4.3 Filter tags #

With tags, you can show only those objects you are interested in. For example, if you have created a drbd tag for some hosts, you can show only those hosts that have this drbd tag assigned.

In the Trento dashboard, go to the respective overview you are interested in.

Make sure you have already assigned some tags. If not, refer to Section 7.4.1, “Adding tags to hosts, clusters, databases, and SAP Systems”.

In the second row, click the last drop-down list with the name . The drop-down list opens and shows you all of your defined tags.

Select the tag you want to filter from the drop-down list. It is possible to select more than one tag.

After you have finished the above procedure, Trento shows all respective hosts that contain one or more of your selected tags.

To remove the filter, click the icon from the same drop-down list.

8 Updating Trento Server #

The procedure to update the Trento Server depends on how it was installed. If it was installed manually, it must be updated manually using the latest versions of the container images available in the SUSE public registry. If it was installed using the Helm chart, it can be updated using the same Helm command as for the installation:

helm upgrade --install trento-server oci://registry.suse.com/trento/trento-server --set trento-web.adminUser.password=ADMIN_PASSWORD

A few things to consider:

Before updating Trento Server, ensure that all the Trento Agents in the environment are supported by the target version. See Section 14, “Compatibility matrix between Trento Server and Trento Agents” for details.

Remember to set the Helm experimental OCI flag (HELM_EXPERIMENTAL_OCI=1) if you are using a version of Helm lower than 3.8.0.

When updating from a Trento version lower than 2.0.0 to version 2.0.0 or higher, an additional flag must be set in the Helm command:

helm upgrade --install trento-server oci://registry.suse.com/trento/trento-server --set trento-web.adminUser.password=ADMIN_PASSWORD --set rabbitmq.auth.erlangCookie=$(openssl rand -hex 16)

If email alerting has been enabled, then the corresponding trento-web.alerting flags should be set in the Helm command as well.
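The version-dependent considerations above can be sketched as a small shell helper that prints the Helm command to run. This is a minimal sketch under assumptions: `version_lt` and `build_upgrade_cmd` are hypothetical helpers, the version comparison relies on `sort -V` from GNU coreutils, and the experimental flag for Helm versions lower than 3.8.0 is assumed to be the `HELM_EXPERIMENTAL_OCI=1` environment variable. Email alerting flags are not covered.

```shell
# version_lt A B: succeeds when version A is strictly lower than version B.
# Assumes GNU sort with version sorting (-V) is available.
version_lt() {
  [ "$1" != "$2" ] &&
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# build_upgrade_cmd CURRENT_TRENTO_VERSION HELM_VERSION
# Hypothetical helper: prints the upgrade command, it does not run it.
build_upgrade_cmd() {
  trento_version=$1
  helm_version=$2
  cmd='helm upgrade --install trento-server oci://registry.suse.com/trento/trento-server --set trento-web.adminUser.password=ADMIN_PASSWORD'
  # Updating from a version lower than 2.0.0 needs the additional flag.
  if version_lt "$trento_version" 2.0.0; then
    cmd="$cmd --set rabbitmq.auth.erlangCookie=\$(openssl rand -hex 16)"
  fi
  # Helm versions lower than 3.8.0 need the experimental OCI flag (assumed name).
  if version_lt "$helm_version" 3.8.0; then
    cmd="HELM_EXPERIMENTAL_OCI=1 $cmd"
  fi
  printf '%s\n' "$cmd"
}
```

For example, `build_upgrade_cmd 1.2.0 3.7.1` prints a command that includes both the `HELM_EXPERIMENTAL_OCI=1` prefix and the `rabbitmq.auth.erlangCookie` flag. Replace ADMIN_PASSWORD before running the printed command.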

9 Updating a Trento Agent #

To update the Trento Agent, do the following:

Log in to the Trento Agent host.

Stop the Trento Agent:

>sudo systemctl stop trento-agent

Install the new package:

>sudo zypper ref
>sudo zypper install trento-agent

Copy file /etc/trento/agent.yaml.rpmsave onto /etc/trento/agent.yaml and ensure that entries facts-service-url, server-url, and api-key are maintained properly in the latter:

facts-service-url: the address of the AMQP service shared with the checks engine where fact gathering requests are received. The right syntax is amqp://trento:trento@TRENTO_SERVER_HOSTNAME:5672.

server-url: HTTP URL for the Trento Server (http://TRENTO_SERVER_HOSTNAME)

api-key: the API key retrieved from the Web console

Start the Trento Agent:

>sudo systemctl start trento-agent

Check the status of the Trento Agent:

>sudo systemctl status trento-agent
● trento-agent.service - Trento Agent service
Loaded: loaded (/usr/lib/systemd/system/trento-agent.service; enabled; vendor preset: disabled)
Active: active (running) since Wed 2021-11-24 17:37:46 UTC; 4s ago
Main PID: 22055 (trento)
Tasks: 10
CGroup: /system.slice/trento-agent.service
├─22055 /usr/bin/trento agent start --consul-config-dir=/srv/consul/consul.d
└─22220 /usr/bin/ruby.ruby2.5 /usr/sbin/SUSEConnect -s
[...]

Check the version in the overview of the Trento Web console (URL http://TRENTO_SERVER_HOSTNAME).

Repeat this procedure in all Trento Agent hosts.
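Before starting the agent again, it can help to verify that the three entries mentioned in the procedure are present in the configuration file. The following is a minimal sketch: `check_agent_config` is a hypothetical helper, not shipped with Trento, and it only checks that each key exists with a non-empty value, not that the value is correct.

```shell
# check_agent_config FILE: hypothetical helper, not part of Trento.
# Reports every required entry that is missing or empty in FILE and
# returns 1 if at least one of them is.
check_agent_config() {
  file=$1
  missing=0
  for key in facts-service-url server-url api-key; do
    # The key must start a line and be followed by a non-empty value.
    if ! grep -Eq "^${key}:[[:space:]]*[^[:space:]]" "$file"; then
      echo "missing or empty: $key" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

A possible invocation is `check_agent_config /etc/trento/agent.yaml`, run with sufficient privileges to read the file.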

10 Uninstalling Trento Server #

If Trento Server was deployed manually, then you need to uninstall it manually. If Trento Server was deployed using the Helm chart, you can also use Helm to uninstall it as follows:

helm uninstall trento-server

11 Uninstalling a Trento Agent #

To uninstall a Trento Agent, perform the following steps:

Log in to the Trento Agent host.

Stop the Trento Agent:

>sudo systemctl stop trento-agent

Remove the package:

>sudo zypper remove trento-agent

12 Reporting a Problem #

Any SUSE customer with a registered SUSE Linux Enterprise Server for SAP Applications 15 (SP1 or higher) distribution who experiences an issue with Trento Premium can report the problem either directly in the SUSE Customer Center or through the corresponding vendor, depending on their licensing model. Report the problem under SUSE Linux Enterprise Server for SAP Applications 15 and component trento.

When opening a support case for Trento, provide the following information, which can be retrieved as explained in Section 13, “Problem Analysis”:

The output of the Trento support plugin.

Your scenario dump.

For issues with a particular Trento Agent, or a component discovered by a particular Trento Agent, also provide the following:

The status of the Trento Agent.

The journal of the Trento Agent.

The output of the command supportconfig in the Trento Agent host. See https://documentation.suse.com/sles/html/SLES-all/cha-adm-support.html#sec-admsupport-cli for information on how to run this command from the command line.
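The agent status and journal listed above can be gathered into files that are easy to attach to a support case. The following is a minimal sketch under assumptions: `collect_agent_info` is a hypothetical helper, not part of Trento, and it is expected to run on the Trento Agent host as root (or through sudo). The supportconfig output still needs to be generated separately.

```shell
# collect_agent_info OUTDIR: hypothetical helper, not part of Trento.
# Stores the status and the journal of the Trento Agent in OUTDIR.
collect_agent_info() {
  outdir=${1:-trento-agent-info}
  mkdir -p "$outdir" || return 1
  # systemctl status exits non-zero when the unit is not running;
  # keep going so that the journal is collected anyway.
  systemctl status trento-agent --no-pager > "$outdir/status.txt" 2>&1 || true
  journalctl -u trento-agent --no-pager > "$outdir/journal.txt" 2>&1 || true
  echo "Collected agent information in $outdir"
}
```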

13 Problem Analysis #

This section covers some common problems and how to analyze them.

13.1 Trento Server #

Two tools are available to analyze issues within the application. Both collect information that can be useful when troubleshooting the issue.

13.1.1 Trento Support Plugin #

The Trento support plugin automates the collection of logs and relevant runtime information on the server side. Using the plugin requires a host with the following setup:

The packages jq and python3-yq are installed.

Helm is installed.

The command kubectl is installed and connected to the K8s cluster where Trento Server is running.

To use it, proceed as follows:

Download the Trento support plugin script:

#wget https://raw.githubusercontent.com/trento-project/helm-charts/main/scripts/trento-support.sh

Note: Package available in SUSE Linux Enterprise Server for SAP Applications 15 SP3 and higher

Customers of SUSE Linux Enterprise Server for SAP Applications 15 SP3 and higher can install the trento-supportconfig-plugin package directly from the official SLES for SAP 15 repos using Zypper.

Make the script executable:

#chmod +x trento-support.sh

Ensure kubectl is connected to the K8s cluster where Trento Server is running and execute the script:

#./trento-support.sh --output file-tgz --collect all

Send the generated archive file to support for analysis.

The Trento support plugin accepts the following options:

-o, --output: Output type. Options: stdout|file|file-tgz

-c, --collect: Collection options: configuration|base|kubernetes|all

-r, --release-name: Release name to use for the chart installation. "trento-server" by default.

-n, --namespace: Kubernetes namespace used when installing the chart. "default" by default.

--help: Shows help messages
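When Trento Server was installed with a non-default release name or namespace, the options above need to be combined. The following sketch builds such a command line and rejects values that are not in the documented option lists. `support_plugin_cmd` is a hypothetical helper, not part of the plugin, and it only prints the command instead of running it.

```shell
# support_plugin_cmd OUTPUT COLLECT [RELEASE_NAME] [NAMESPACE]
# Hypothetical helper: prints a trento-support.sh command line built
# from the documented options, using the documented defaults.
support_plugin_cmd() {
  output=$1
  collect=$2
  release=${3:-trento-server}
  namespace=${4:-default}
  case $output in
    stdout|file|file-tgz) ;;
    *) echo "invalid output type: $output" >&2; return 1 ;;
  esac
  case $collect in
    configuration|base|kubernetes|all) ;;
    *) echo "invalid collection option: $collect" >&2; return 1 ;;
  esac
  printf './trento-support.sh --output %s --collect %s --release-name %s --namespace %s\n' \
    "$output" "$collect" "$release" "$namespace"
}
```

For example, `support_plugin_cmd file-tgz all my-release trento` prints a command for a release called my-release in the trento namespace (both names are placeholders).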

13.1.2 Scenario dump #

The scenario dump is a dump of the Trento database. It helps the Trento team to recreate the scenario to test it. Using the script that generates the dump requires a host with the following setup:

The command kubectl is installed and connected to the K8s cluster where Trento Server is running.

To generate the dump, proceed as follows:

Download the latest version of the dump script:

>wget https://raw.githubusercontent.com/trento-project/web/main/hack/dump_scenario_from_k8.sh

Make the script executable:

>chmod +x dump_scenario_from_k8.sh

Ensure kubectl is connected to the K8s cluster where Trento Server is running and execute the script as follows:

>./dump_scenario_from_k8.sh -n SCENARIO_NAME -p PATH

Go to /scenarios/, package all the JSON files and send them to support for analysis.

13.1.3 Pods descriptions and logs #

In addition to the output of the Trento Support Plugin and the Dump Scenario script, the descriptions and logs of the pods of the Trento Server can also be useful for analysis and troubleshooting purposes. These descriptions and logs can be retrieved with the kubectl command, for which you need a host where kubectl is installed and connected to the K8s cluster where Trento Server is running.

List the pods running in Kubernetes cluster and their status. Trento Server currently consists of the following six pods:

>kubectl get pods
trento-server-grafana-*
trento-server-prometheus-server-*
trento-server-postgresql-0
trento-server-web-*
trento-server-wanda-*
trento-server-rabbitmq-0

Retrieve the description of one particular pod as follows:

>kubectl describe pod POD_NAME

Retrieve the log of one particular pod as follows:

>kubectl logs POD_NAME

Monitor the log of one particular pod as follows:

>kubectl logs POD_NAME --follow
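To collect the descriptions and logs of all Trento Server pods in one go, the kubectl commands above can be wrapped in a loop. The following is a minimal sketch under assumptions: `collect_pod_diagnostics` is a hypothetical helper, the pods are expected in the current namespace, and their names are expected to start with trento-server- (the default release name).

```shell
# collect_pod_diagnostics OUTDIR: hypothetical helper, not part of Trento.
# Saves the description and the log of every trento-server-* pod in OUTDIR.
collect_pod_diagnostics() {
  outdir=${1:-trento-pod-diagnostics}
  mkdir -p "$outdir" || return 1
  # "kubectl get pods --output name" prints one "pod/NAME" entry per line.
  for pod in $(kubectl get pods --output name | grep '^pod/trento-server-'); do
    name=${pod#pod/}
    kubectl describe "$pod" > "$outdir/$name.describe.txt"
    # --all-containers covers pods that run more than one container.
    kubectl logs "$pod" --all-containers > "$outdir/$name.log" 2>&1
  done
}
```

Running `collect_pod_diagnostics ./trento-pods` leaves two files per pod in the given directory, which can then be packaged and sent to support.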

13.2 Trento Agent #

The first source to analyze issues with the Trento Agent is the Trento Agent status, which can be retrieved as follows:

>sudo systemctl status trento-agent

If further analysis is required, it helps to enable the debug log level for the agent. A detailed log can then be retrieved from the journal:

Add parameter log-level with value debug to the /etc/trento/agent.yaml configuration file.

>sudo vi /etc/trento/agent.yaml

Add the following entry:

log-level: debug

Restart the agent:

>sudo systemctl restart trento-agent

Retrieve the log from the journal:

>sudo journalctl -u trento-agent
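The manual edit of the configuration file can also be scripted. The following is a minimal sketch, not an official tool: `set_debug_log_level` is a hypothetical helper that sets or adds the log-level entry in the file passed as argument, and it assumes GNU sed (for the -i option).

```shell
# set_debug_log_level FILE: hypothetical helper, not part of Trento.
# Ensures FILE contains exactly one "log-level: debug" entry.
set_debug_log_level() {
  file=$1
  if grep -q '^log-level:' "$file"; then
    # An entry already exists: switch its value to debug.
    sed -i 's/^log-level:.*/log-level: debug/' "$file"
  else
    # Make sure the new entry starts on its own line.
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
      printf '\n' >> "$file"
    fi
    printf 'log-level: debug\n' >> "$file"
  fi
}
```

A possible invocation is `set_debug_log_level /etc/trento/agent.yaml`, run with write permission on the file and followed by a restart of the agent as described above.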

14 Compatibility matrix between Trento Server and Trento Agents #

Trento Agent compatibility matrix (rows: Trento Agent versions, columns: Trento Server versions)

| Trento Agent version | 1.0.0 | 1.1.0 | 1.2.0 | 2.0.0 | 2.1.0 | 2.2.0 |
|---|---|---|---|---|---|---|
| 1.0.0 | ✓ | | | | | |
| 1.1.0 | ✓ | ✓ | | | | |
| 1.2.0 | ✓ | ✓ | ✓ | | | |
| 2.0.0 | | | | ✓ | | |
| 2.1.0 | | | | ✓ | ✓ | |
| 2.2.0 | | | | ✓ | ✓ | ✓ |

15 Highlights of Trento versions #

The following list shows the most important user-facing features in the different versions of Trento. For more detailed information about the changes included in each new version, visit the GitHub project at https://github.com/trento-project. In particular:

For changes in the Trento Helm Chart, visit https://github.com/trento-project/helm-charts/releases.

For changes in the Trento Server Web component, visit https://github.com/trento-project/web/releases.

For changes in the Trento Server old checks engine component (runner), visit https://github.com/trento-project/runner/releases.

For changes in the Trento Server new checks engine component (wanda), visit https://github.com/trento-project/wanda/releases.

For changes in the Trento Agent, visit https://github.com/trento-project/agent/releases.

- Version 2.2.0 (released on 2023/12/04)

Consisting of Helm chart 2.2.0, Web component 2.2.0, checks engine (wanda) 1.2.0 and agent 2.2.0.

saptune Web integration.

Instance clean-up capabilities.

Ability to run host-level configuration checks.

- Version 2.1.0 (released on 2023/08/02)

Consisting of Helm chart 2.1.0, Web component 2.1.0, checks engine (wanda) 1.1.0 and agent 2.1.0.

ASCS/ERS cluster discovery, from single-sid, two-node scenarios to multi-sid, multi-node setups. The discovery covers both versions of the enqueue server, ENSA1 and ENSA2, and both scenarios with resource managed instance filesystems and simple mount setups.

Host clean-up capabilities, allowing users to get rid of hosts that are no longer part of their SAP environment.

New checks results view that leverages the potential of the new checks engine (wanda) and provides the user with insightful information about the check execution.

- Version 2.0.0 (released on 2023/04/26)

Consisting of Helm chart 2.0.0, Web component 2.0.0, checks engine (wanda) 1.0.0 and agent 2.0.0.

A brand-new safer, faster, lighter SSH-less configuration checks engine (wanda) which not only opens the door to configuration checks for other HA scenarios (ASCS/ERS, HANA scale-up cost optimized, SAP HANA scale-out) and other targets in the environment (hosts, SAP HANA databases, SAP NetWeaver instances), but will also allow customization of existing checks and addition of custom checks.

Addition of VMware to the list of known platforms.

Versioned external APIs for both the Web and the checks engine (wanda) components. The full list of available APIs can be found at https://www.trento-project.io/web/swaggerui/ and https://www.trento-project.io/wanda/swaggerui/ respectively.

- Version 1.2.0 (released on 2022/11/04)

Consisting of Helm chart 1.2.0, Web component 1.2.0, checks engine (runner) 1.1.0 and agent 1.1.0.

Configuration checks for HANA scale-up performance optimized two-node clusters on on-premise bare metal platforms, including KVM and Nutanix.

A dynamic dashboard that allows you to determine, at a glance, the overall health status of your SAP environment.

- Version 1.1.0 (released on 2022/07/14)

Consisting of Helm chart 1.1.0, Web component 1.1.0, checks engine (runner) 1.0.1 and agent 1.1.0.

Fix for a major bug in the checks engine that prevented the Web component from loading properly.

- Version 1.0.0 (general availability, released on 2022/04/29)

Consisting of Helm chart 1.0.0, Web component 1.0.0, checks engine (runner) 1.0.0 and agent 1.0.0.

Clean, simple Web console designed with SAP Basis in mind. It reacts in real time to changes happening in the backend environment thanks to the event-driven technology behind it.

Automated discovery of SAP systems, SAP instances and SAP HANA scale-up two-node clusters.

Configuration checks for SAP HANA scale-up performance optimized two-node clusters on Azure, AWS and GCP.

Basic integration with Grafana and Prometheus to provide graphic dashboards about CPU and memory utilization in each discovered host.

Basic alert emails for critical events happening in the monitored environment.

16 More information #

Homepage: https://www.trento-project.io/

Trento project: https://github.com/trento-project/trento