Trento is an open, cloud-native Web console that helps SAP Basis consultants and administrators check the configuration of, monitor, and manage the entire OS stack of their SAP environments, including HA features.

- 1 What is Trento?

- 2 Lifecycle

- 3 Requirements

- 4 Installation

- 5 Update

- 6 Uninstallation

- 7 Prometheus integration

- 8 MCP Integration

- 9 Core Features

- 10 Compliance Features

- 11 Using Trento Web

- 12 Integration with SUSE Multi-Linux Manager

- 13 Operations

- 14 Reporting an Issue

- 15 Problem Analysis

- 16 Compatibility matrix between Trento Server and Trento Agents

- 17 Highlights of Trento versions

- 18 More information

- 1.1 Architectural overview

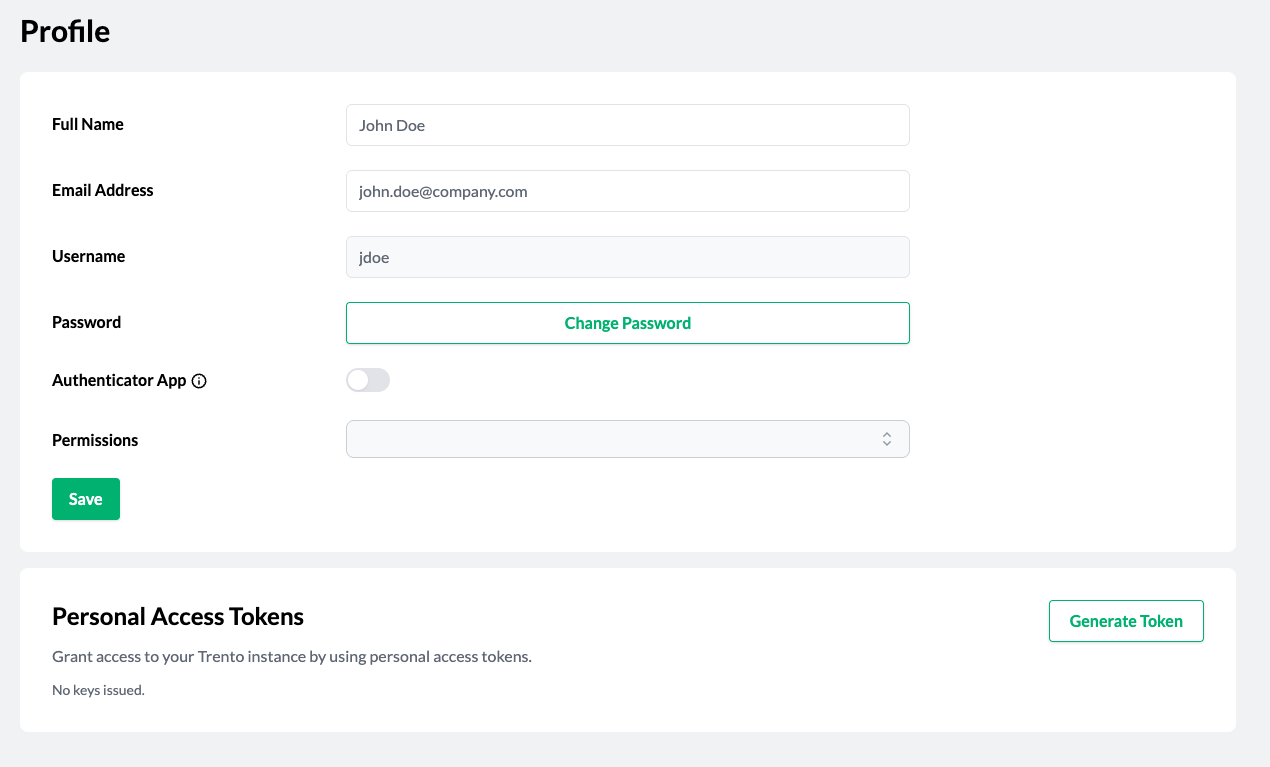

- 8.1 Generate a Personal Access Token in Trento

- 8.2 MCPHost initial screen with the Trento MCP Server connected

- 8.3 Generate a Personal Access Token in Trento

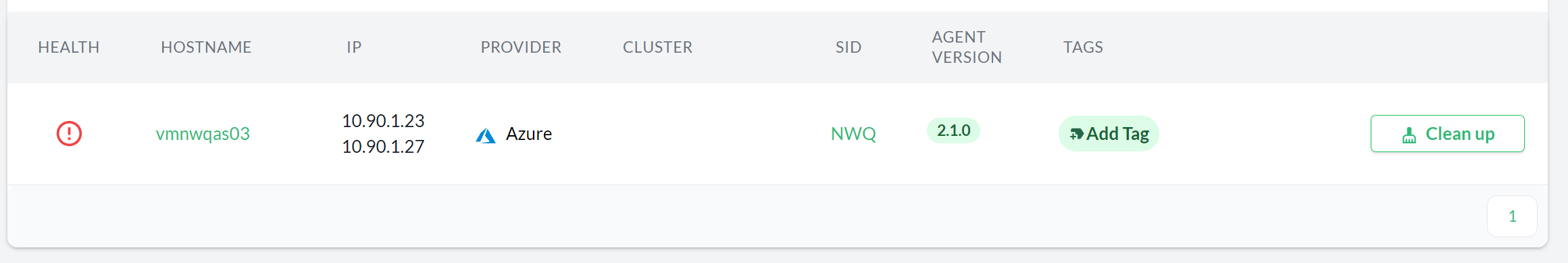

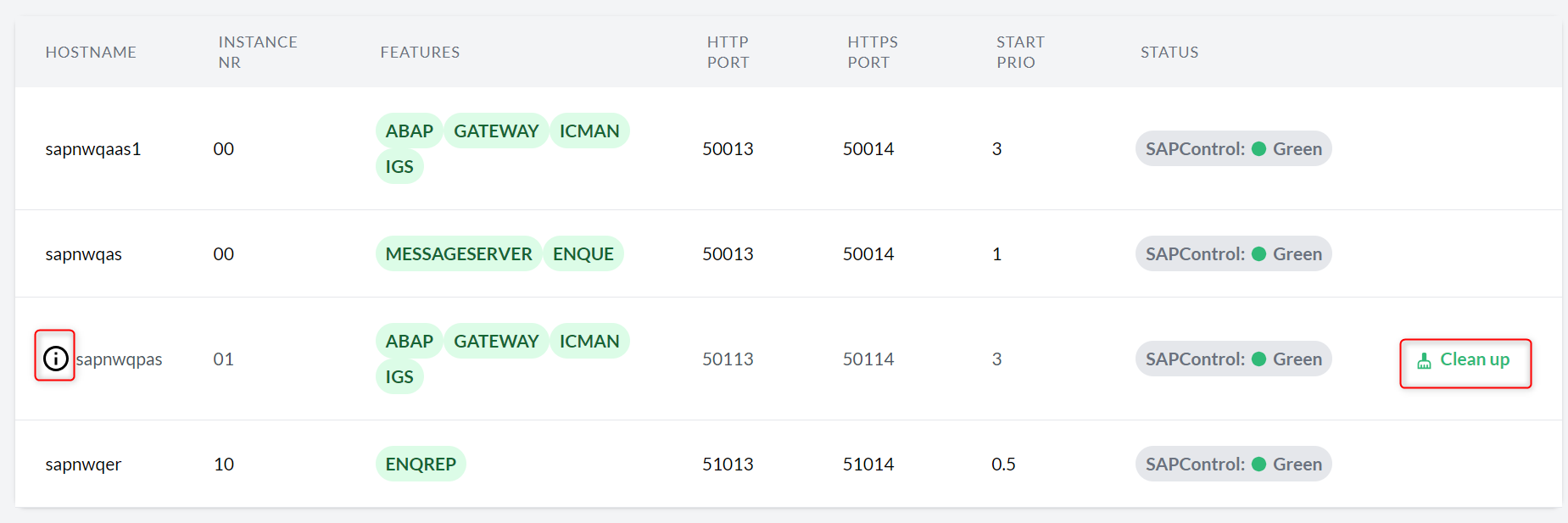

- 9.1 Clean up button in Hosts overview

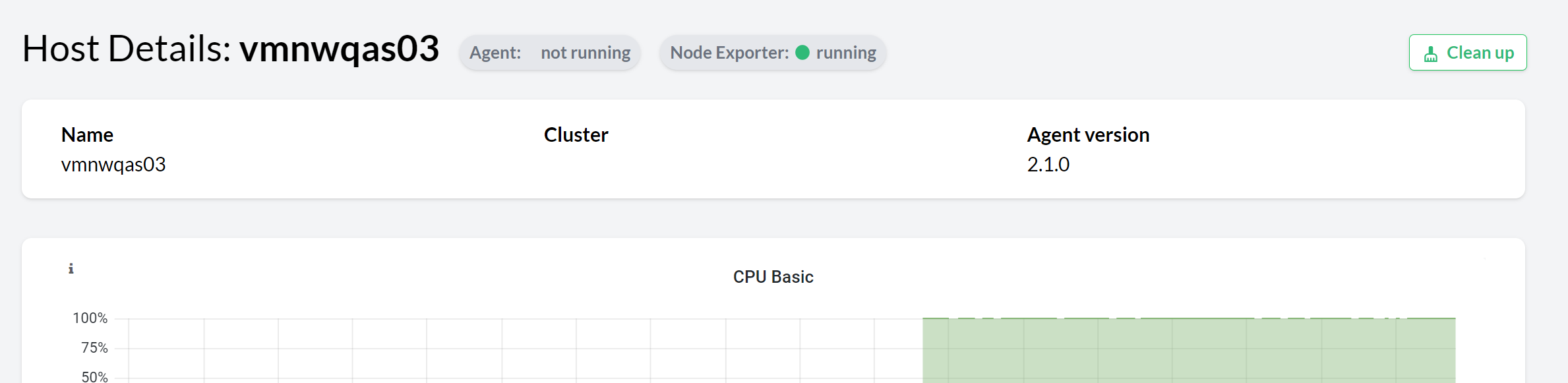

- 9.2 Clean up button in Host details view

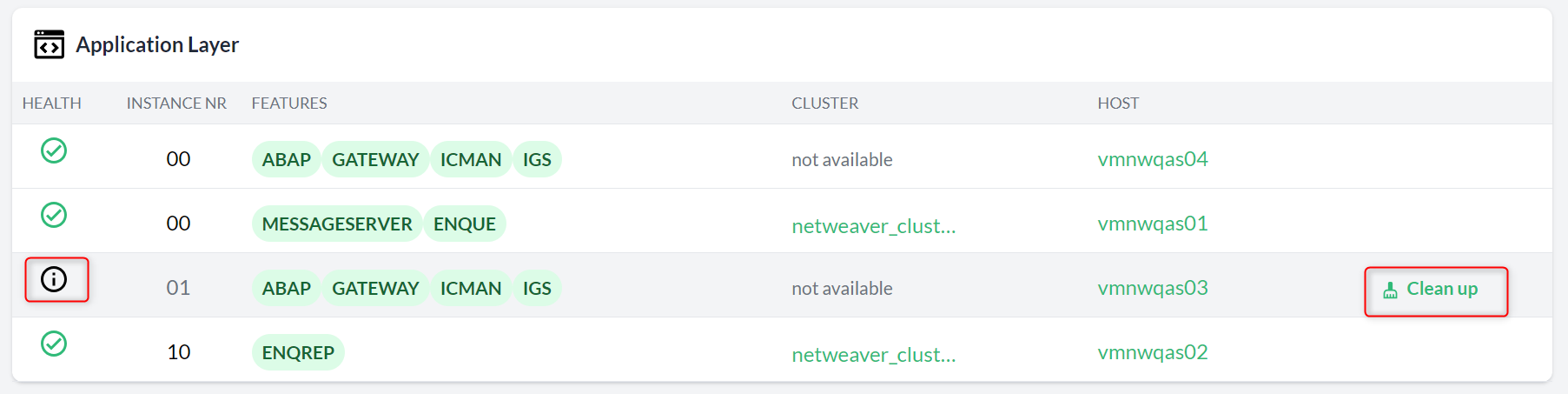

- 9.3 Clean up button SAP systems overview

- 9.4 Clean up button in SAP system details view

- 9.5 Checks catalog

- 9.6 Profile

- 9.7 Generate personal access token modal

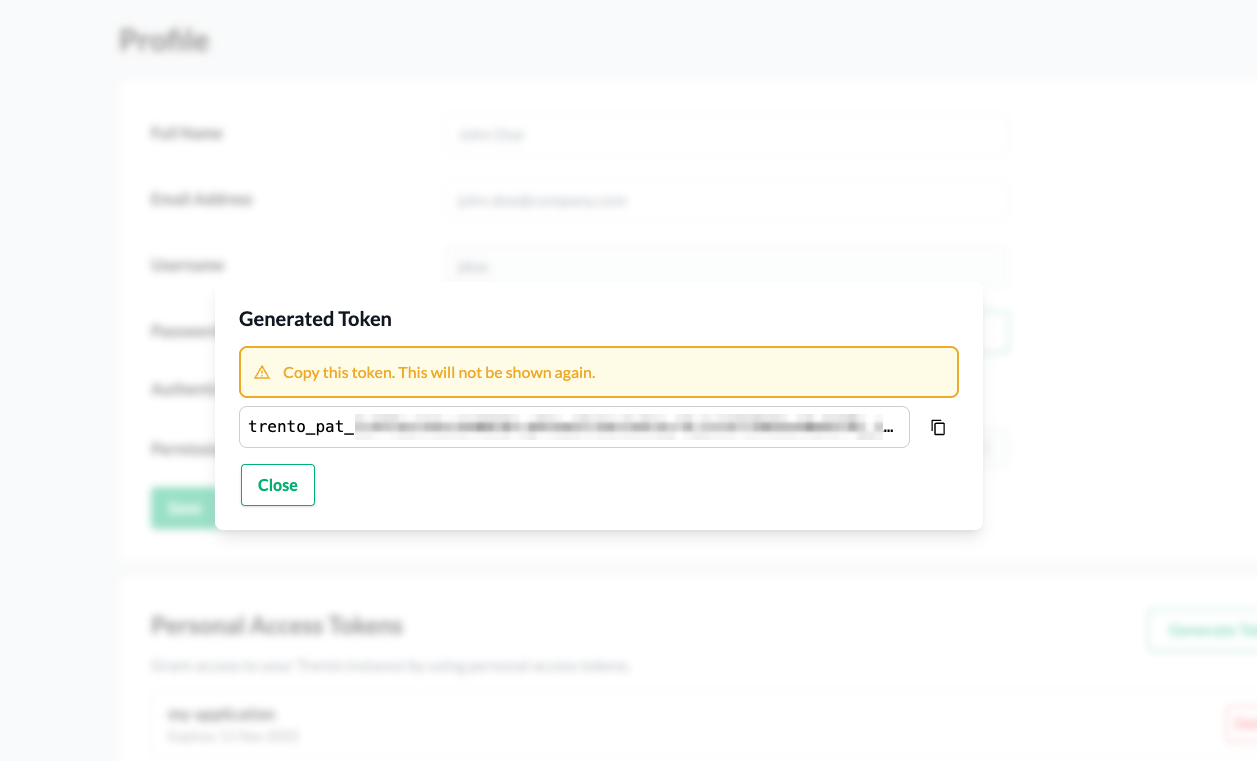

- 9.8 Generated personal access token

- 9.9 Personal access tokens section

- 9.10 Delete personal access token modal

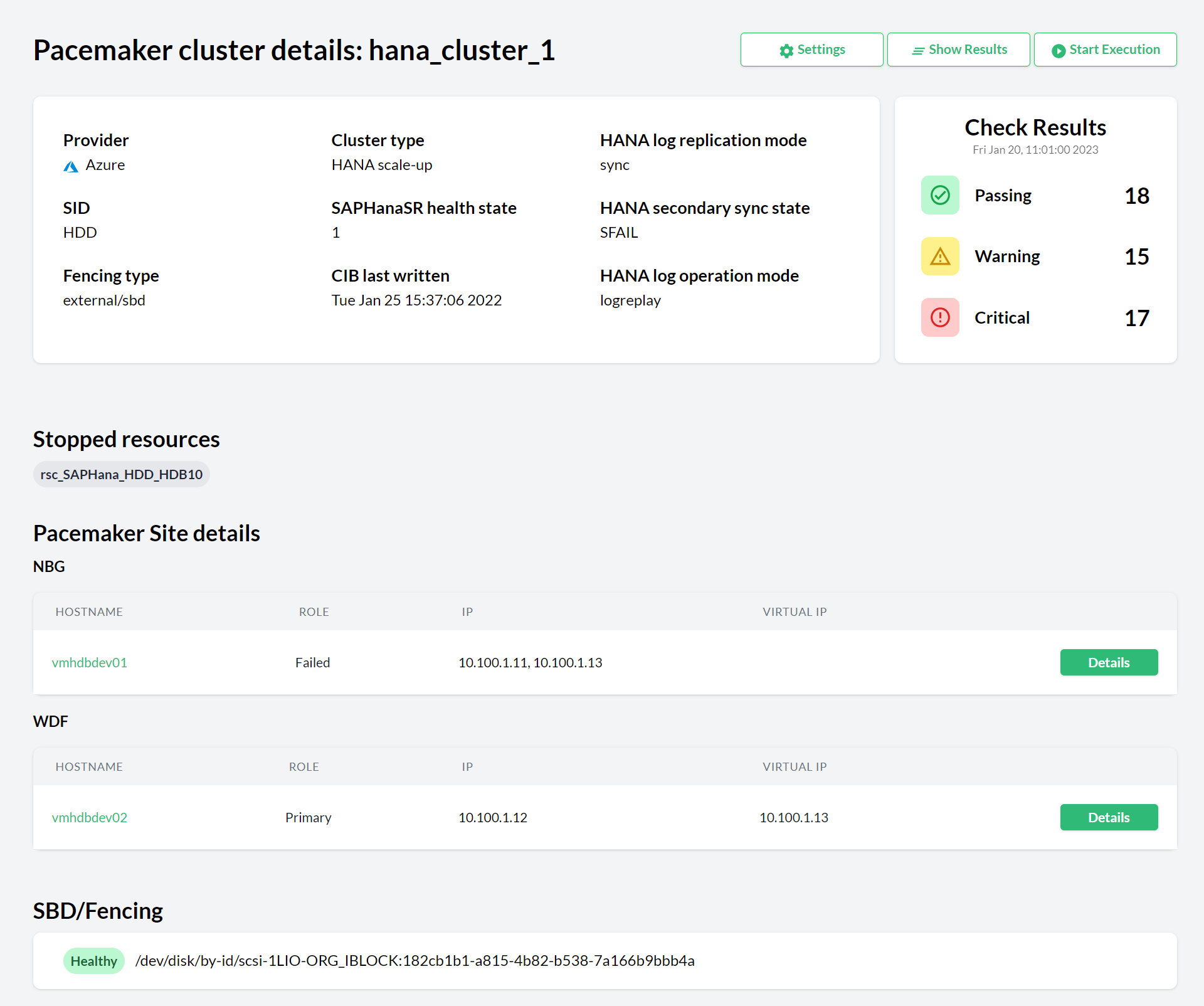

- 10.1 Pacemaker cluster details

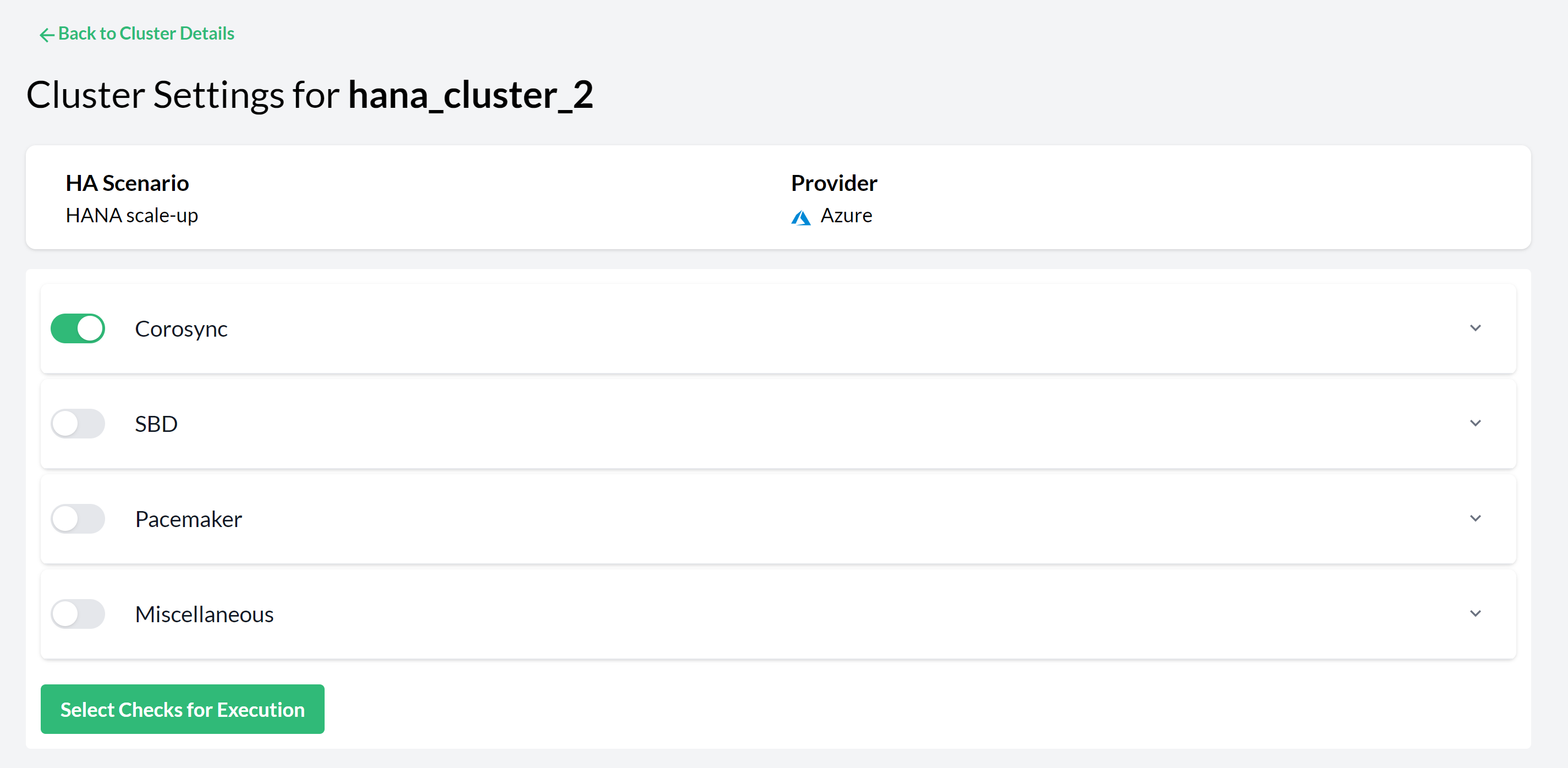

- 10.2 Pacemaker Cluster Settings—Checks Selection

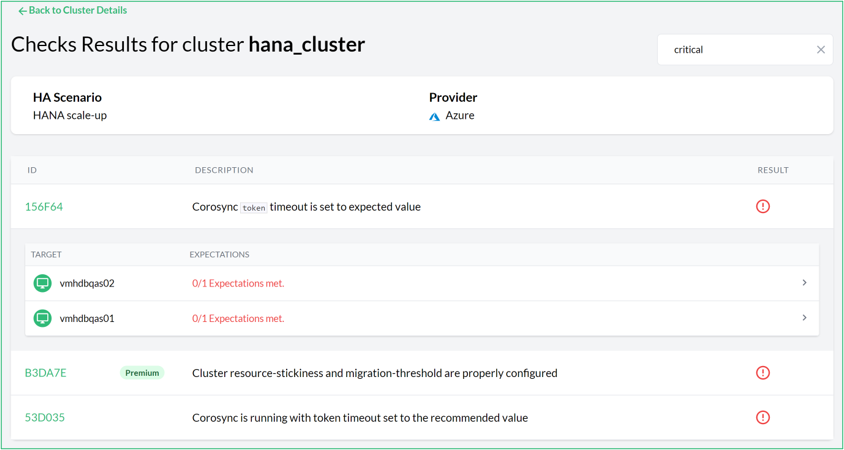

- 10.3 Check results for a cluster

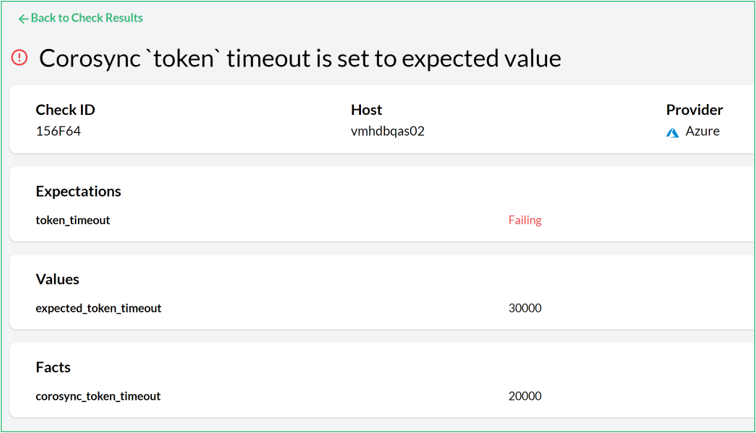

- 10.4 Unmet expected result detail view

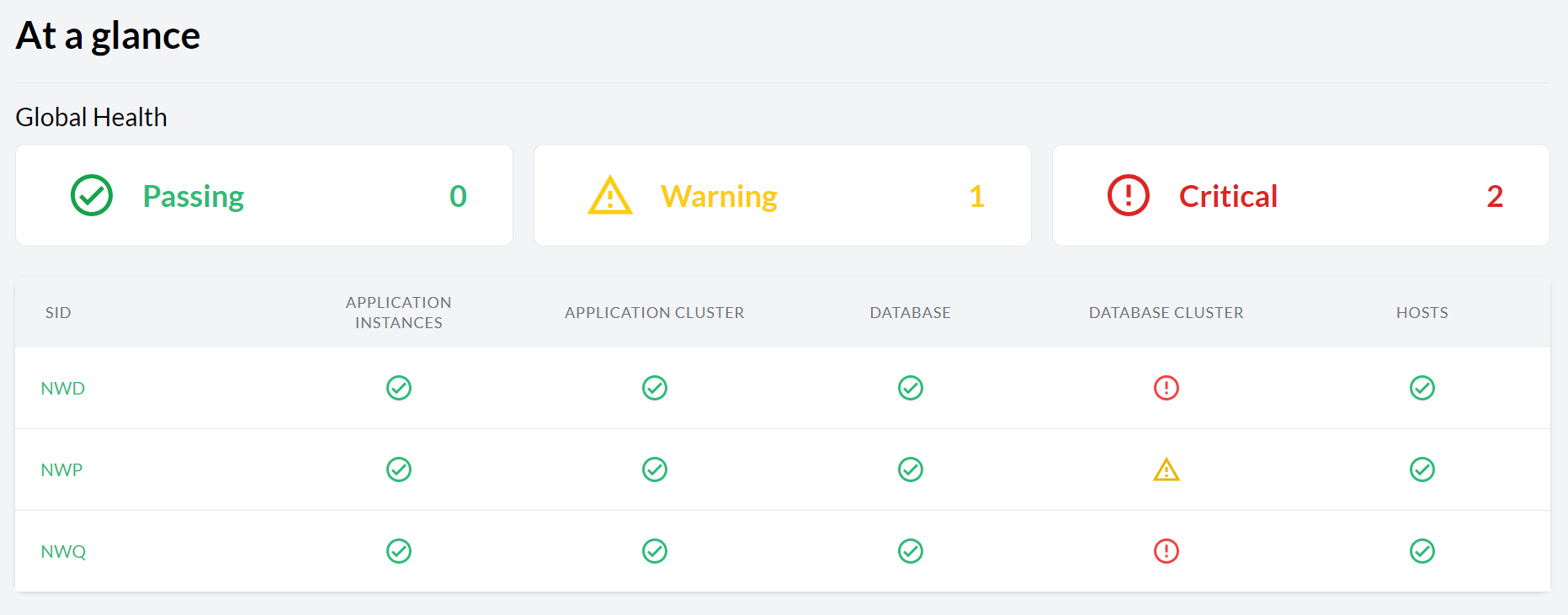

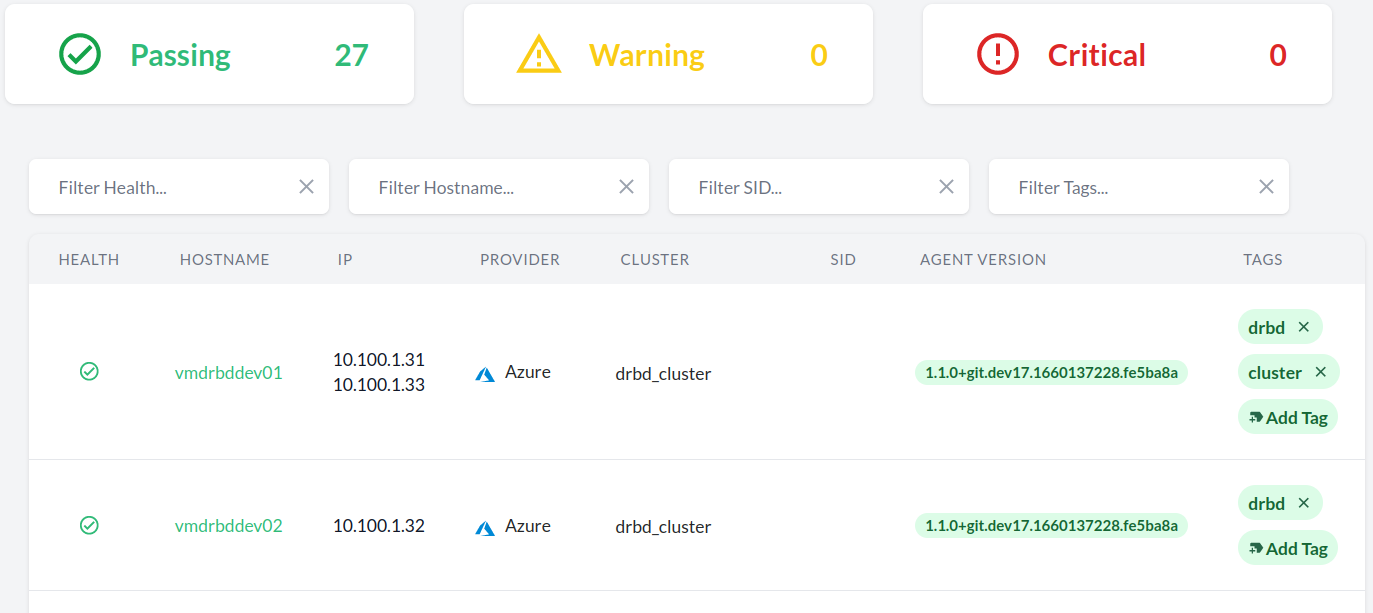

- 11.1 Dashboard with the global health state

- 11.2 Hosts entry

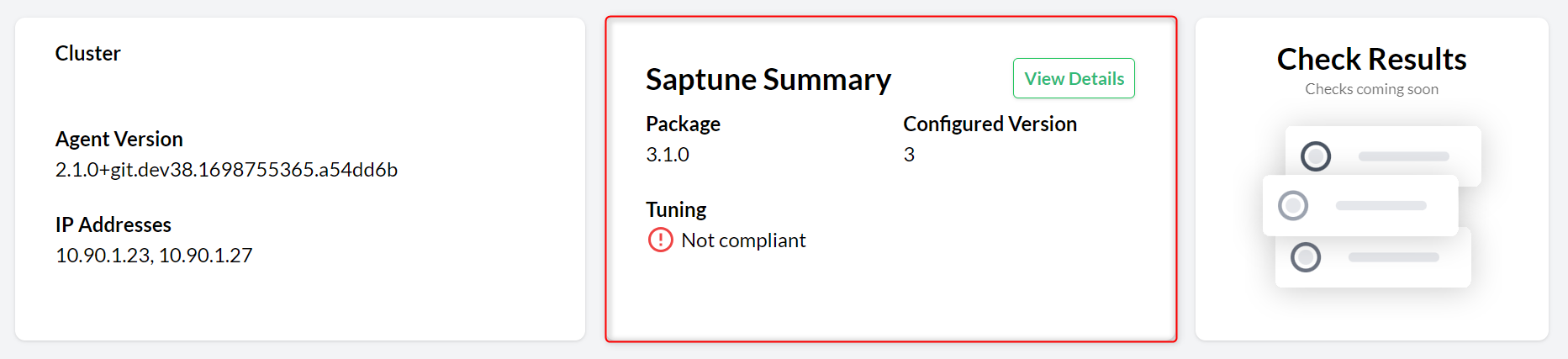

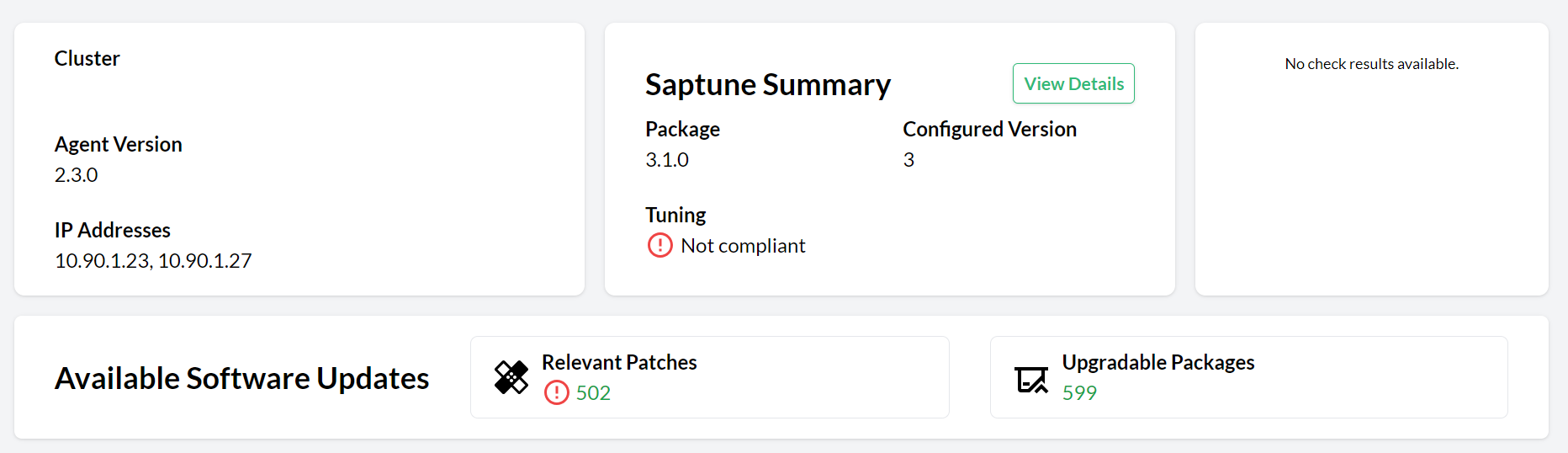

- 11.3 saptune Summary section

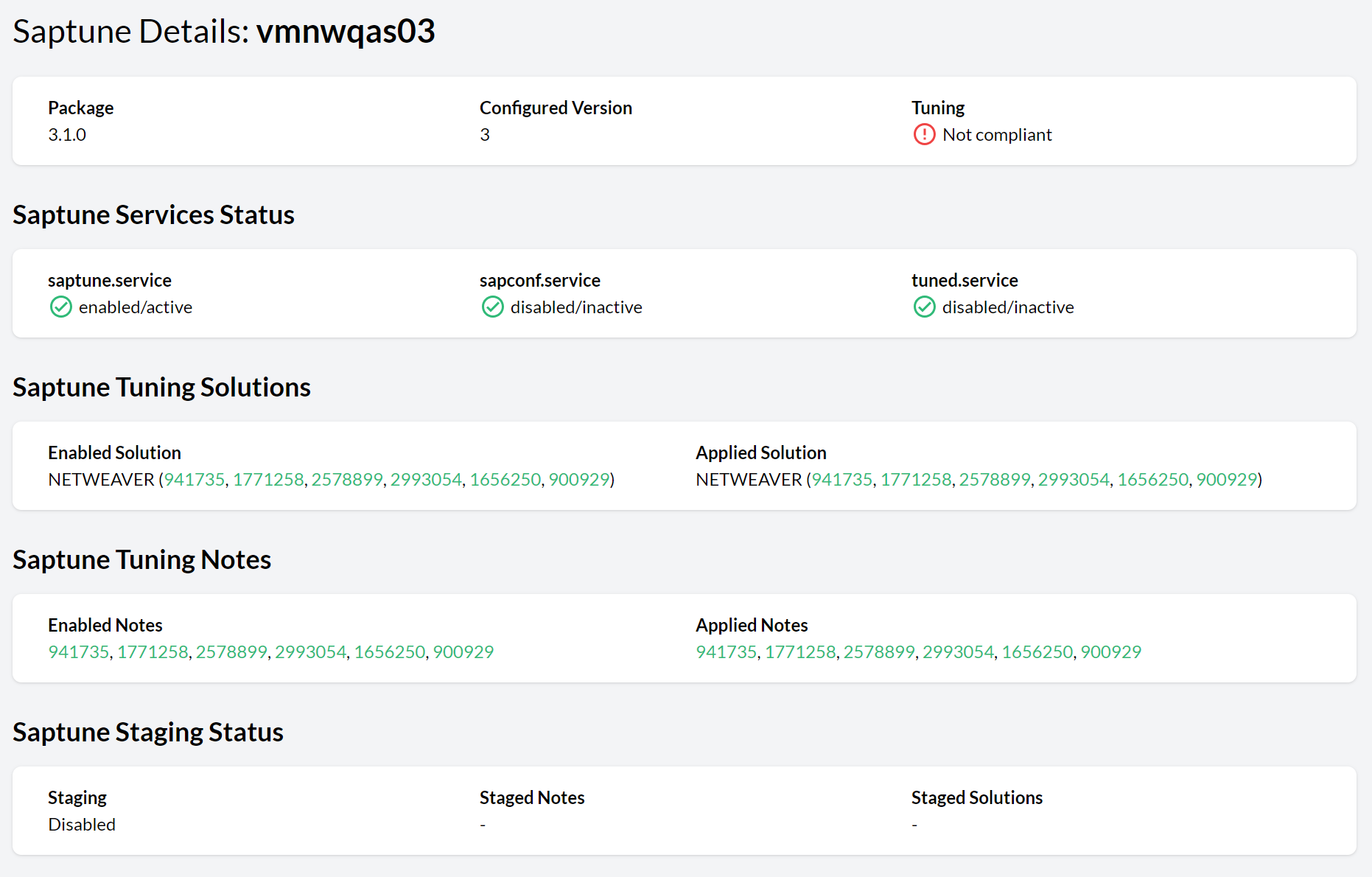

- 11.4 saptune details view

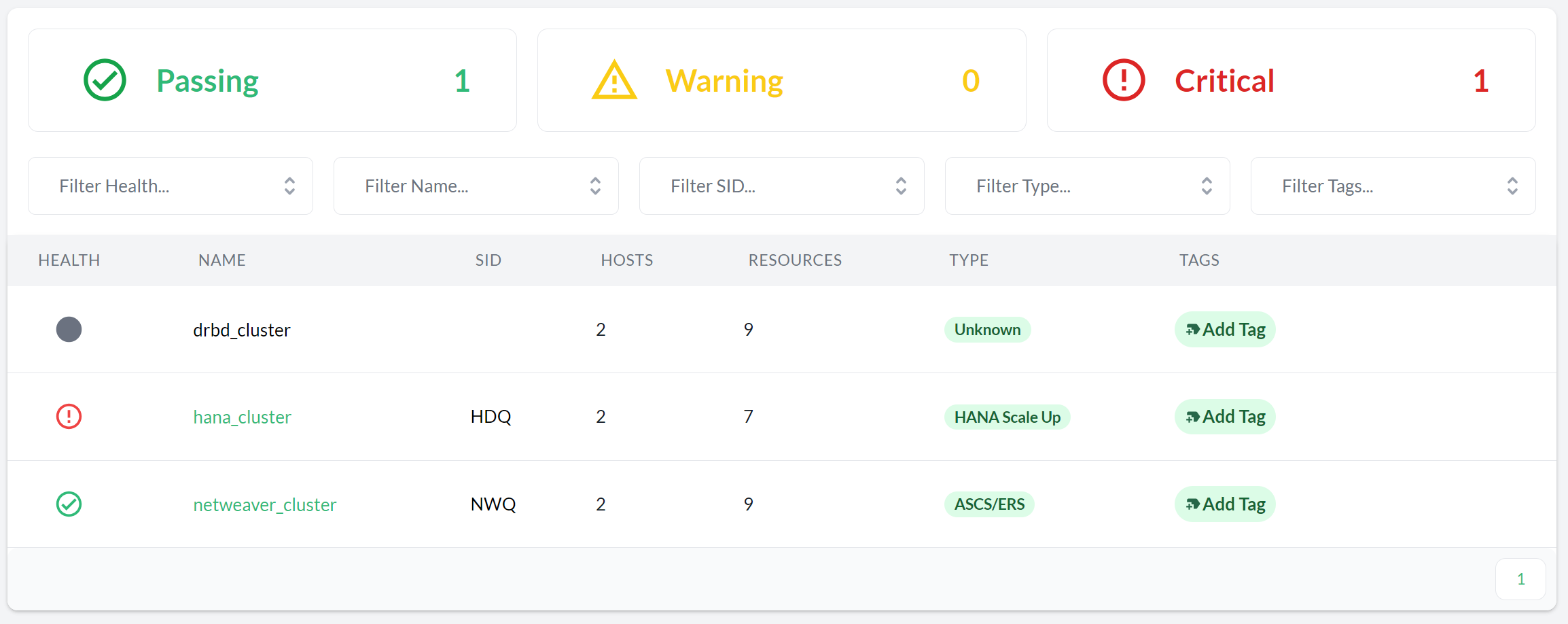

- 11.5 Pacemaker clusters

- 11.6 SAP Systems

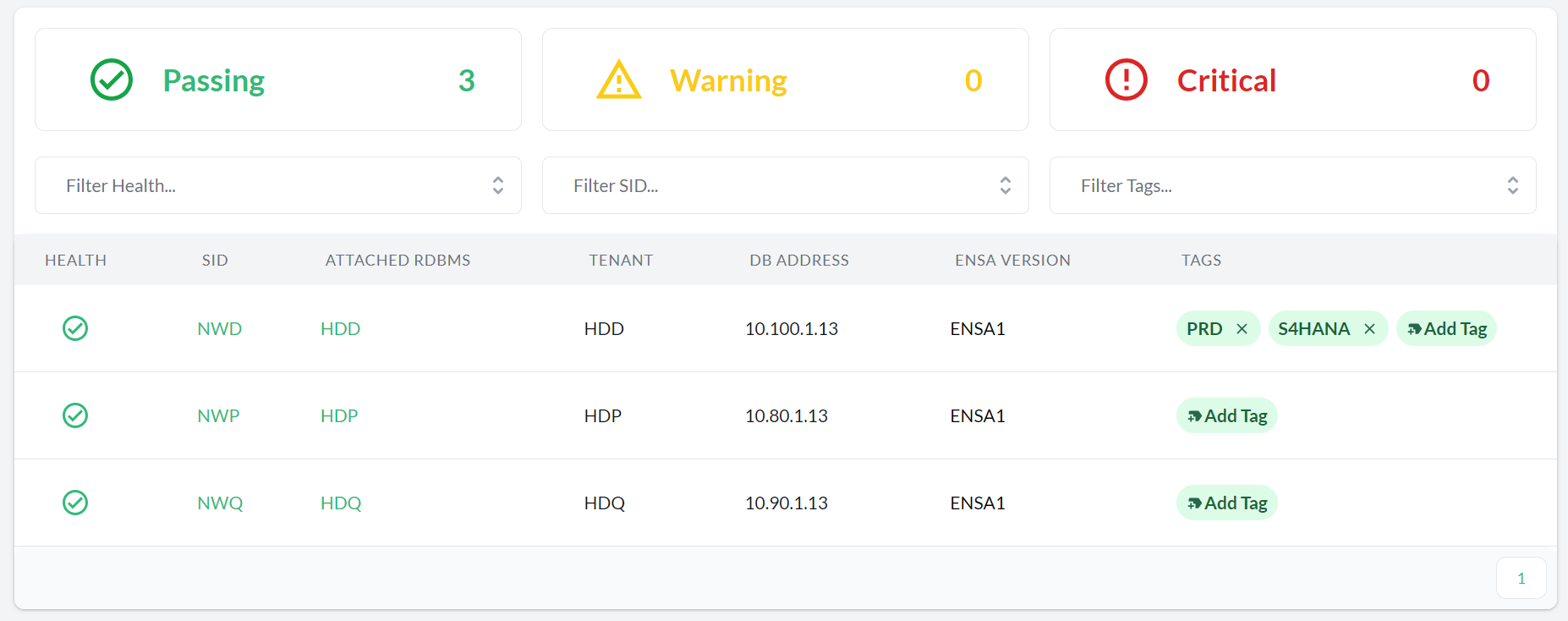

- 11.7 SAP System Details

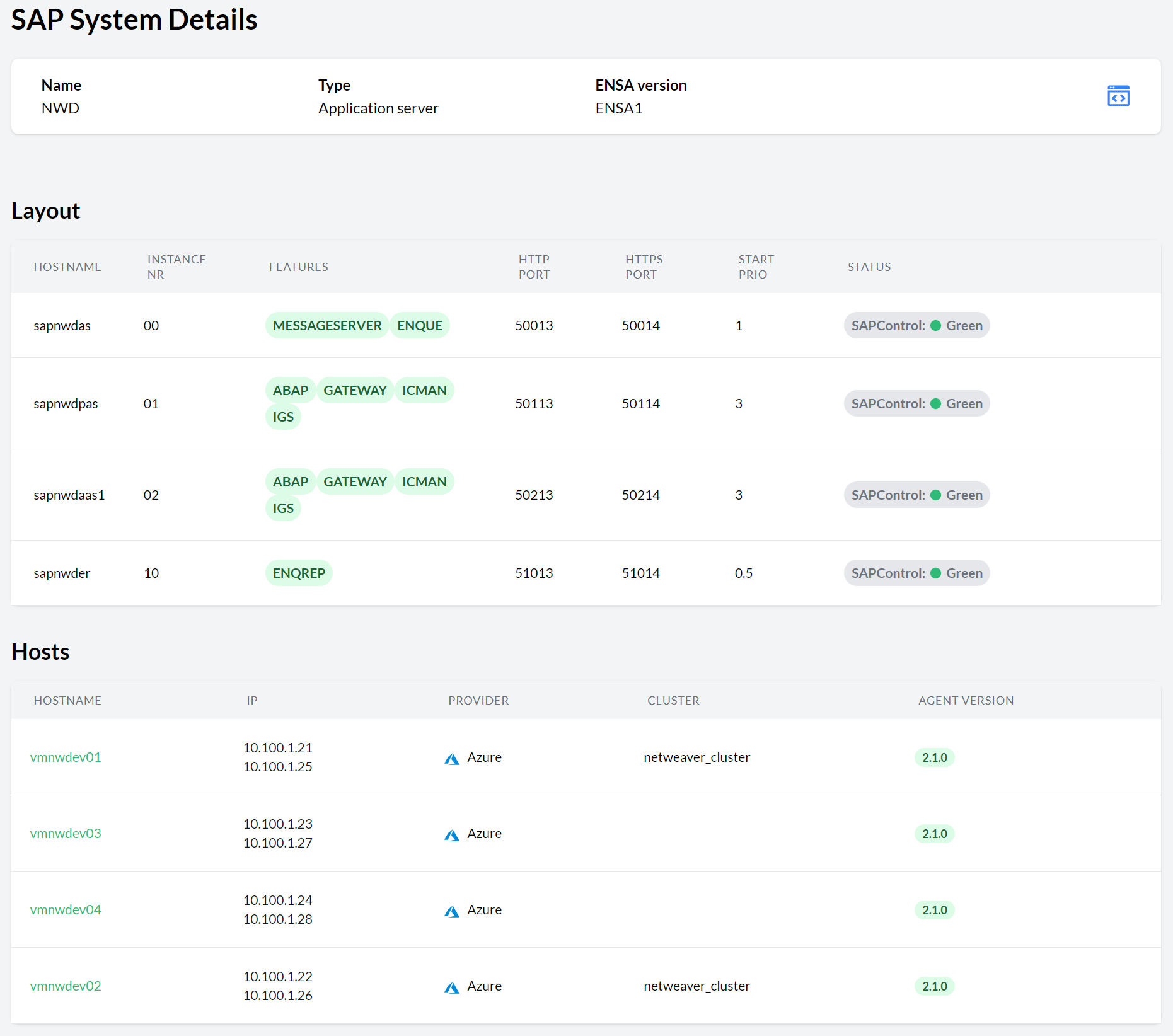

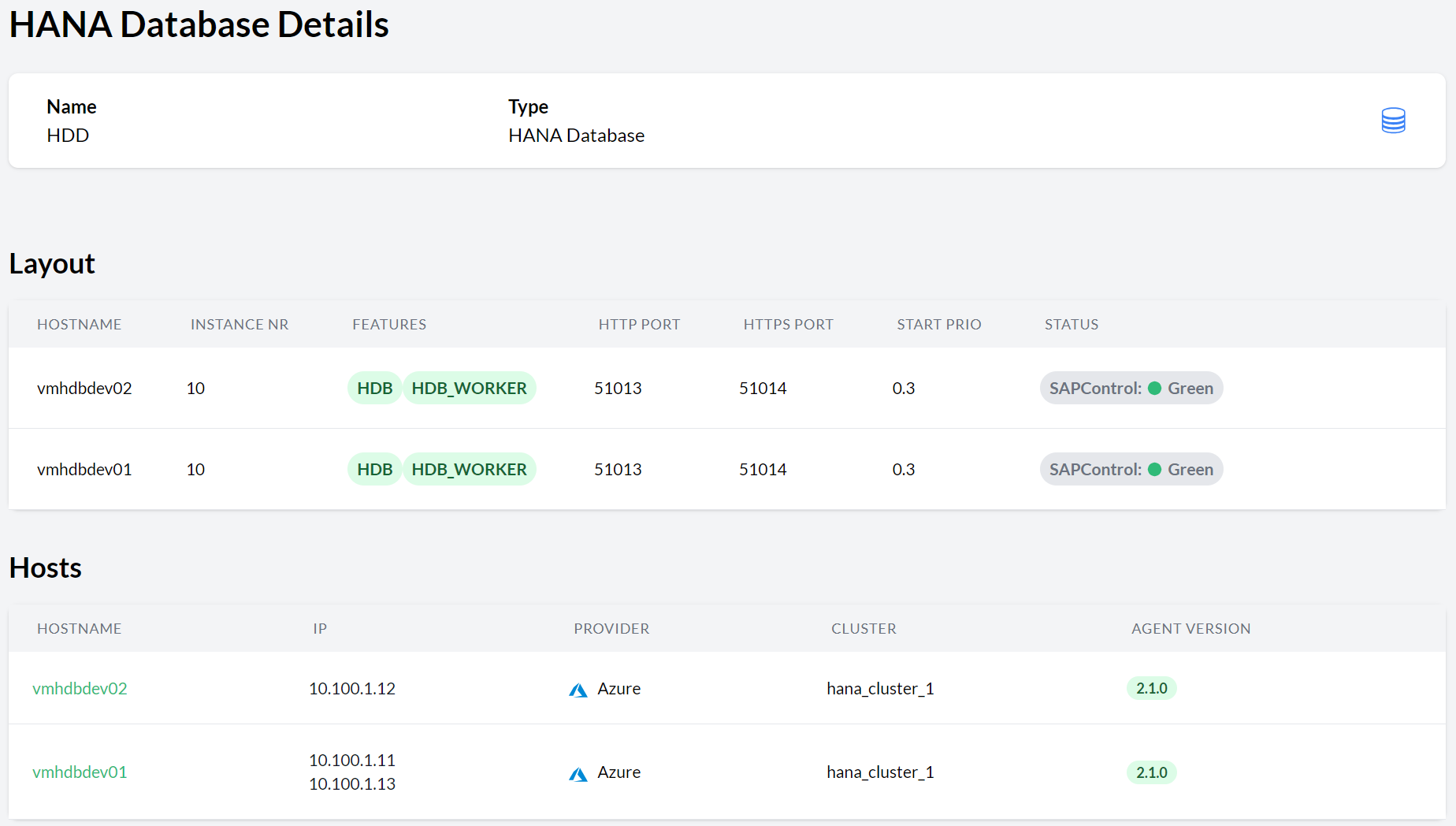

- 11.8 HANA databases

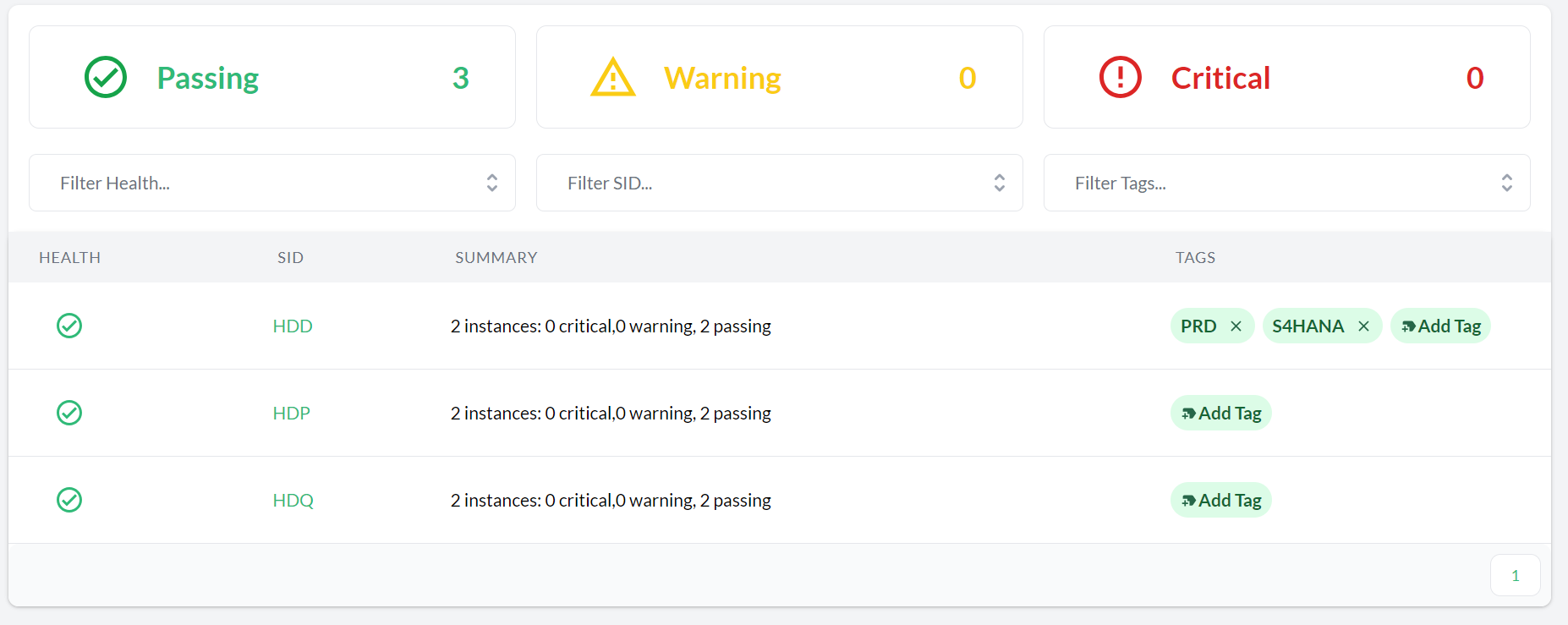

- 11.9 HANA Database details

- 12.1 SUSE Multi-Linux Manager settings

- 12.2 Available software updates in the Host Details view

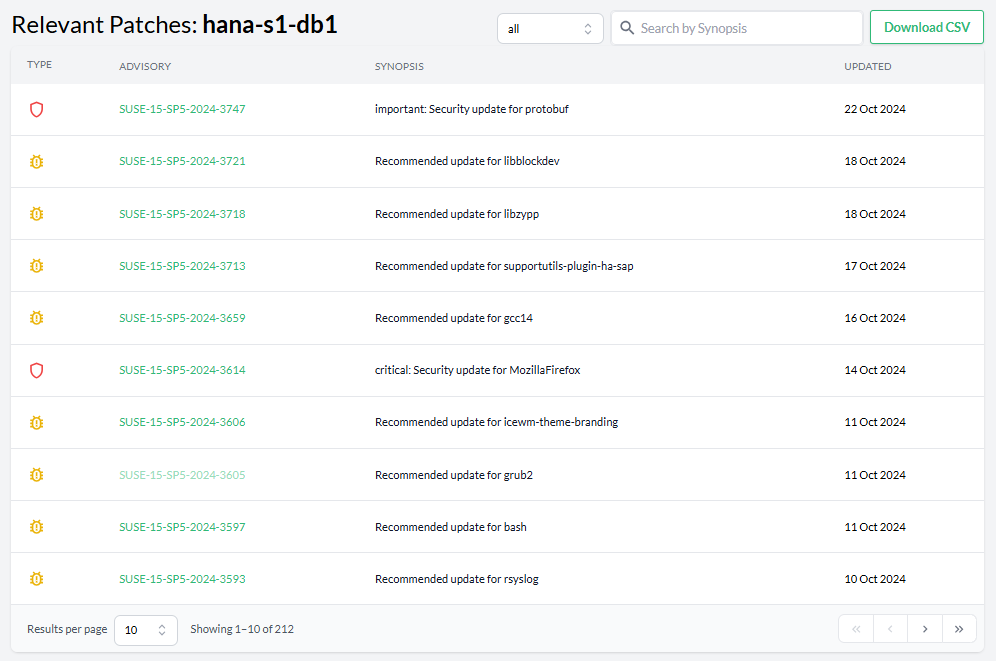

- 12.3 Available Patches overview

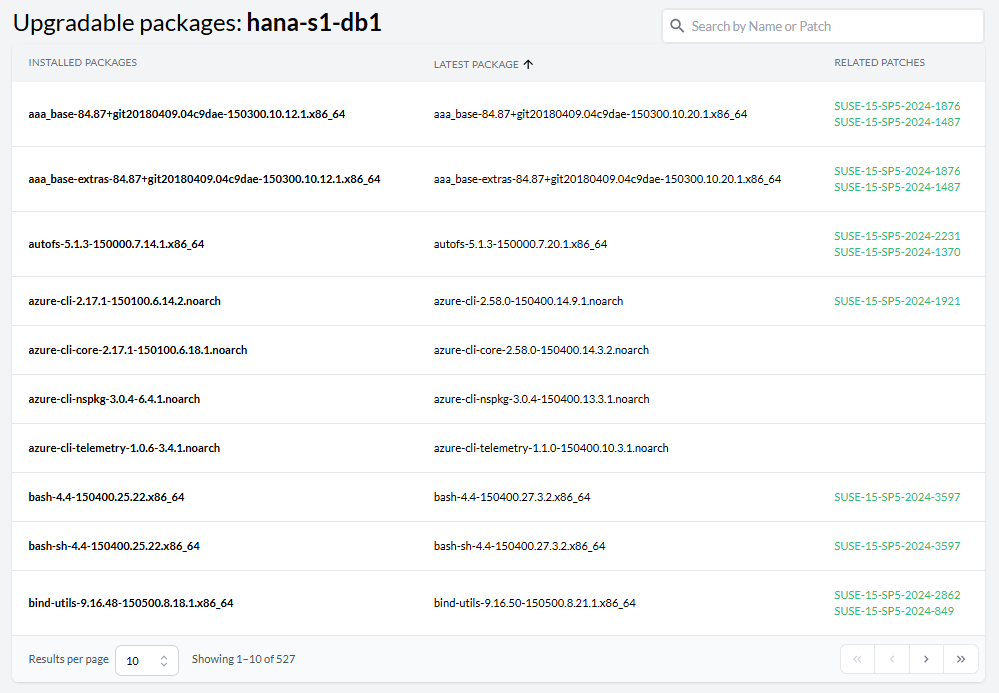

- 12.4 Upgradable Packages overview

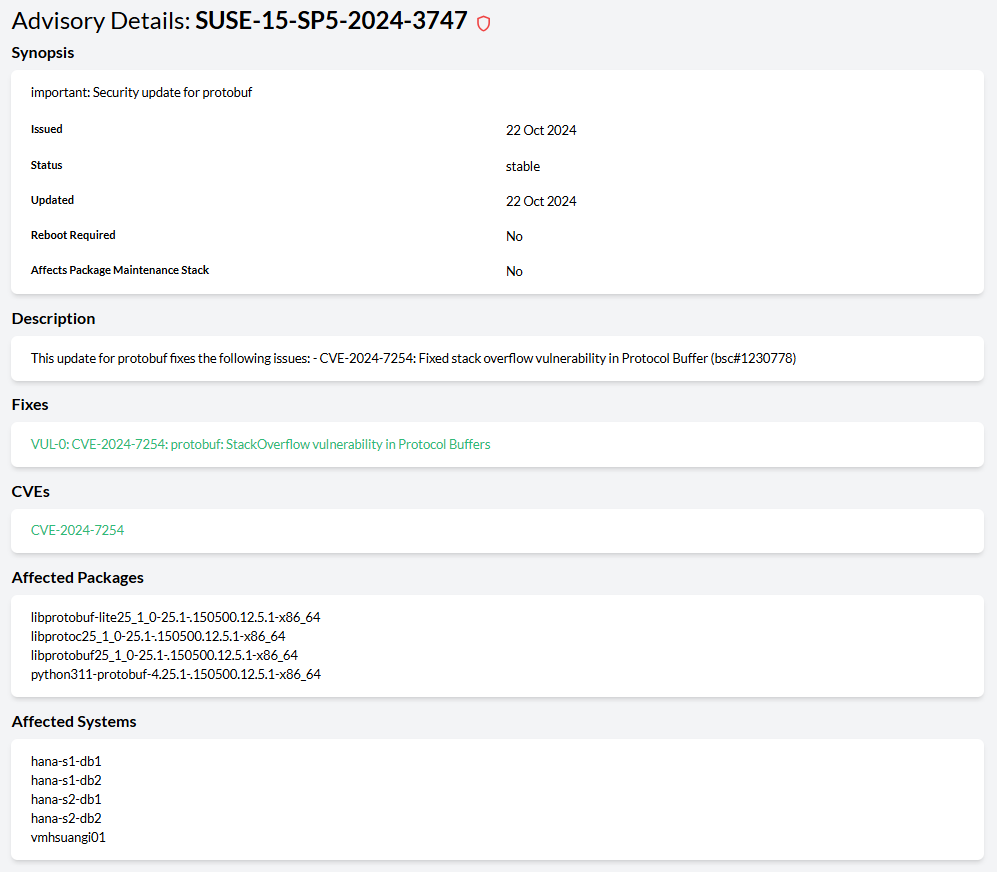

- 12.5 Advisory Details view

1 What is Trento? #

Trento is the official version of the Trento community project. It is a comprehensive monitoring solution consisting of two main components: the Trento Server and the Trento Agent. Trento provides the following functionality and features:

A user-friendly reactive Web interface for SAP Basis administrators.

Automated discovery of Pacemaker clusters using SAPHanaSR classic or angi as well as different fencing mechanisms, including diskless SBD.

Automated discovery of SAP systems running on ABAP or JAVA stacks and HANA databases.

Awareness of maintenance situations in a Pacemaker cluster at cluster, node, or resource level.

Configuration validation for SAP HANA Scale-Up Performance/Cost-optimized, SAP HANA Scale-out and ASCS/ERS clusters deployed on Azure, AWS, GCP or on-premises bare metal platforms, including KVM and Nutanix.

Insights into the execution of configuration checks.

Delivery of configuration checks decoupled from core functionality.

Email alerting for critical events in the monitored landscape.

Integration of saptune into the console and specific configuration checks at host and cluster levels.

Information about relevant patches and upgradable packages for registered hosts via integration with SUSE Multi-Linux Manager.

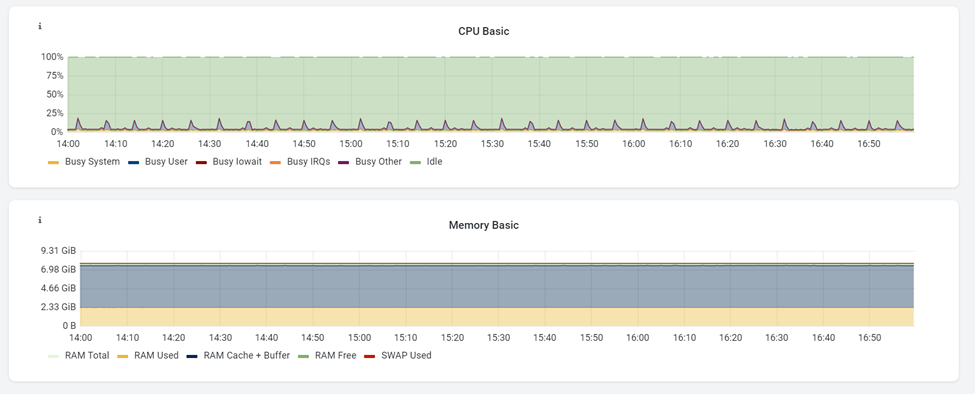

Monitoring of CPU and memory usage at the host level through basic integration with Prometheus.

API-based architecture to facilitate integration with other monitoring tools.

Rotating API key to protect communication from the Trento Agent to the Trento Server.

AI assistance via Model Context Protocol (MCP) integration.

Housekeeping capabilities.

1.1 Trento Architecture #

1.2 Trento Server #

The Trento Server is an independent, distributed system designed to run on a Kubernetes cluster or as a regular systemd stack. It provides both a Web front-end for user interaction and backend APIs for automation and integration with components such as the Trento MCP Server. Together with the optional Trento MCP Server, it enables secure, AI-assisted operations by exposing Trento Server APIs for natural-language interactions with tools like MCPHost, Copilot, Claude, and SUSE AI.

The Trento Server consists of the following components:

The Web component that acts as a control plane responsible for internal and external communications as well as rendering the UI.

The orchestration engine named Wanda that orchestrates the execution of compliance checks and operations.

The optional Trento MCP Server, which creates a secure bridge between the infrastructure data collected by Trento and your Large Language Model (LLM) of choice.

A PostgreSQL database for data persistence.

The RabbitMQ message broker for communication between the orchestration engine and the agents.

A Prometheus instance that retrieves the metrics collected by the Prometheus node exporter in the registered hosts. This Prometheus instance is optional in a systemd deployment.

1.3 Trento Agent #

The Trento Agent is a single background process (trento-agent) running on each monitored host of the SAP infrastructure.

2 Lifecycle #

Trento is part of SUSE Linux Enterprise Server for SAP applications. Trento’s two main components have the following product lifecycles:

- Trento Agent
  - Delivery mechanism: RPM package for SUSE Linux Enterprise Server for SAP applications 15 SP4 and newer, and SUSE Linux Enterprise Server for SAP applications 16.0.
  - Supported runtime: SUSE Linux Enterprise Server for SAP applications 15 SP4 and newer, and SUSE Linux Enterprise Server for SAP applications 16.0, on x86_64 and ppc64le architectures.
- Trento Server
  - Delivery mechanisms: A set of container images from the SUSE public registry together with a Helm chart that facilitates their installation, or a set of RPM packages for SUSE Linux Enterprise Server for SAP applications 15 SP4 and newer, and SUSE Linux Enterprise Server for SAP applications 16.0.
  - Kubernetes deployment: The Trento Server runs on any current Cloud Native Computing Foundation (CNCF)-certified Kubernetes distribution based on an x86_64 architecture. Depending on your scenario and needs, SUSE supports several usage scenarios:
    - If you already use a CNCF-certified Kubernetes cluster, you can run the Trento Server in it.
    - If you do not have a Kubernetes cluster and need enterprise support, SUSE recommends SUSE Rancher Prime Kubernetes Engine (RKE) version 2.
    - If you do not have a Kubernetes enterprise solution but want to try Trento, SUSE Rancher’s K3s provides an easy way to get started. Keep in mind that the default K3s installation process deploys a single-node Kubernetes cluster, which is not a recommended setup for a stable Trento production instance.
  - systemd deployments: Supported in SUSE Linux Enterprise Server for SAP applications 15 SP4 and newer, and SUSE Linux Enterprise Server for SAP applications 16.0, on x86_64 and ppc64le architectures.

3 Requirements #

This section describes requirements for the Trento Server and its Trento Agents, as well as for the SAP systems and clusters that you want to monitor in the backend environment.

3.1 Trento Server requirements #

Running all the Trento Server components requires a minimum of 4 GB of RAM, two CPU cores and 64 GB of storage. When using K3s, such storage should be provided under /var/lib/rancher/k3s.

Trento is based on event-driven technology. Registered events are stored in a PostgreSQL database with a default retention period of 10 days. For each host registered with Trento, you need to allocate at least 1.5 GB of space in the PostgreSQL database.
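As a quick worked example of the sizing rule above, the following sketch multiplies the number of registered hosts by the documented minimum of 1.5 GB. The function name `trento_db_space_gb` is hypothetical. The result is only the per-host minimum stated here, not a complete capacity plan.

```shell
#!/bin/sh
# Hypothetical helper: minimum PostgreSQL space (in GB) for N registered hosts,
# using the documented minimum of 1.5 GB per host.
trento_db_space_gb() {
  hosts="$1"
  # awk performs the decimal multiplication that POSIX shell arithmetic cannot
  awk -v n="$hosts" 'BEGIN { printf "%g\n", n * 1.5 }'
}

trento_db_space_gb 20   # prints 30
trento_db_space_gb 7    # prints 10.5
```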

Trento Server supports different deployment scenarios: Kubernetes and systemd. A Kubernetes-based deployment of Trento Server is cloud-native and OS-agnostic. It can be performed on the following services:

RKE2

a Kubernetes service in a cloud provider

any other CNCF-certified Kubernetes running on x86_64 architecture

The Helm chart and the container images required for a Kubernetes-based deployment are available in SUSE public registry.

A production-ready Kubernetes-based deployment of Trento Server requires Kubernetes knowledge. The Helm chart is intended for customers without in-house Kubernetes expertise, or as a way to try Trento with a minimum of effort. However, the Helm chart delivers only a basic deployment of the Trento Server, with all the components running on a single node of the cluster.

The packages required for a systemd deployment are available in the repositories of SUSE Linux Enterprise Server for SAP applications 15 (SP4 or higher) or SUSE Linux Enterprise Server for SAP applications 16.0.

3.2 Trento Agent requirements #

The resource footprint of the Trento Agent is designed not to impact the performance of the host it runs on.

The Trento Agent component needs to interact with several low-level system components that are part of the SUSE Linux Enterprise Server for SAP applications distribution.

The hosts must have unique machine identifiers (IDs) in order to be registered in Trento. This means that if a host in your environment is built as a clone of another one, make sure to change the machine’s identifier as part of the cloning process before starting the Trento Agent on it.

Similarly, the clusters must have unique authkeys in order to be registered in Trento.

The Trento Agent package is available in the repositories of SUSE Linux Enterprise Server for SAP applications 15 (SP4 or higher) or SUSE Linux Enterprise Server for SAP applications 16.0.
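Cloned hosts often end up sharing the same machine identifier. The following minimal sketch spots duplicates before the agents are started. It assumes you have already collected one `hostname machine-id` pair per host into a text stream, for example from `cat /etc/machine-id` on each host. The function name, the input format, and the sample IDs are all hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print every machine ID that appears on more than one host.
# Input (stdin): one "hostname machine-id" pair per line.
find_duplicate_machine_ids() {
  awk '{ print $2 }' | sort | uniq -d
}

# Made-up sample data: hana02 was cloned from hana01 without changing its ID
printf '%s\n' \
  "hana01 4c1f0e9a2b7d4f7e9f1a0c3b5d6e7f80" \
  "hana02 4c1f0e9a2b7d4f7e9f1a0c3b5d6e7f80" \
  "ascs01 9a8b7c6d5e4f30211203f4e5d6c7b8a9" | find_duplicate_machine_ids
# prints: 4c1f0e9a2b7d4f7e9f1a0c3b5d6e7f80
```

Any ID this prints belongs to hosts whose identifier must be changed, as described above, before the Trento Agent is started on them.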

3.3 Network requirements #

Required inbound connectivity:

From any Trento Agent host to Trento Server at port TCP/80 (HTTP), or TCP/443 (HTTPS) if SSL is enabled.

From any Trento Agent host to Trento Server at port TCP/5672 (Advanced Message Queuing Protocol or AMQP).

From the user network to Trento Server at port TCP/80 (HTTP), or TCP/443 (HTTPS) if SSL is enabled.

If the Trento MCP Server is installed and enabled:

For a Kubernetes deployment, from the MCP client to the Trento Server ingress at TCP/80 or TCP/443. Internally, the MCP Server Pod uses TCP/5000, and TCP/8080 if the health check is enabled.

For a systemd deployment, from the user network to Trento MCP Server at TCP/5000.

Optionally: From the user network to Trento Server at ports TCP/4000, TCP/4001 and TCP/8080 (health checks for Web, Wanda and MCP, respectively).

From Prometheus server, when it exists, to each Trento Agent host at the port used by the Node Exporter.

3.4 SAP requirements #

An SAP system must run on a HANA database to be discovered by Trento. In addition, the parameter dbs/hdb/dbname must be set in the DEFAULT profile of the SAP system to the correct database (tenant) name.

The agent must be installed on all the hosts that are part of the SAP system architecture, particularly:

the host where the ASCS instance is running

the host where the ERS instance, if it exists, is running

all the hosts where an application server instance is running

all the database hosts

3.5 Cluster requirements #

The initial discovery of a Pacemaker cluster requires the DC node to be online. Once the cluster has been discovered, all nodes can be stopped and Trento will continue to discover the cluster.

4 Installation #

4.1 Installing Trento Server #

Trento Server can be deployed in different ways depending on your infrastructure and requirements.

Supported deployment methods:

- Kubernetes deployment
- systemd deployment
- Automated deployment with Ansible

4.1.1 Kubernetes deployment #

The subsection uses the following placeholders:

TRENTO_SERVER_HOSTNAME: the host name used by the end user to access the console.

ADMIN_PASSWORD: the password of the admin user created during the installation process. The password must meet the following requirements:

minimum length of 8 characters

the password must not contain 3 identical numbers or letters in a row (for example, 111 or aaa)

the password must not contain 4 sequential numbers or letters (for example, 1234, abcd, ABCD)
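To catch a rejected ADMIN_PASSWORD before running the installation, you can pre-check it locally against the rules above. The following is a minimal sketch, not Trento's own validation code. The function name `check_admin_password` is hypothetical, and it assumes that "sequential" means ascending runs such as 1234, abcd or ABCD, as in the examples.

```shell
#!/bin/sh
# Hypothetical local pre-check of ADMIN_PASSWORD against the documented rules.
# Exit status 0: password passes; 1: at least one rule is violated (reasons on stdout).
# Assumption: "sequential" means ascending runs such as 1234, abcd or ABCD.
check_admin_password() {
  printf '%s\n' "$1" | awk '
    NR == 1 {
      p = $0
      n = length(p)
      if (n < 8) { print "too short (minimum 8 characters)"; bad = 1 }
      # "|" separators prevent matches across sequences, for example "9abc"
      seqs = "0123456789|abcdefghijklmnopqrstuvwxyz|ABCDEFGHIJKLMNOPQRSTUVWXYZ"
      for (i = 1; i <= n; i++) {
        c = substr(p, i, 1)
        # Rule: no 3 identical letters or digits in a row, for example 111 or aaa
        if (c ~ /[0-9A-Za-z]/ && substr(p, i + 1, 1) == c && substr(p, i + 2, 1) == c) {
          print "3 identical characters in a row: " c c c; bad = 1
        }
        # Rule: no 4 ascending letters or digits, for example 1234 or abcd
        s = substr(p, i, 4)
        if (length(s) == 4 && s ~ /^[0-9A-Za-z]+$/ && index(seqs, s) > 0) {
          print "4 sequential characters: " s; bad = 1
        }
      }
      exit bad
    }'
}
```

For example, `check_admin_password 'Pass1234word'` reports the run `1234` and returns a non-zero status, so the check can guard a script before `helm upgrade` is called.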

By default, the provided Helm chart uses Traefik as its ingress class.

Traefik is mainly used for:

path rewriting

endpoint protection

Search GitHub for Traefik-specific usage scenarios. If you use another ingress controller, adapt the configuration accordingly.

4.1.1.1 Installing Trento Server on an existing Kubernetes cluster #

Trento Server consists of several components delivered as container images and intended for deployment on a Kubernetes cluster. A manual production-ready deployment of these components requires Kubernetes knowledge. Customers without in-house Kubernetes expertise and those who want to try Trento with a minimum of effort can use the Trento Helm chart. This approach automates the deployment of all the required components on a single Kubernetes cluster node. You can use the Trento Helm chart to install Trento Server on an existing Kubernetes cluster as follows:

The examples in this section do not specify a Kubernetes namespace for simplicity. By default, Helm installs to the default namespace.

For production deployments, create and use a dedicated namespace.

Example:

kubectl create namespace trento

helm upgrade \
--install trento-server oci://registry.suse.com/trento/trento-server \
--namespace trento \
--set global.trentoWeb.origin=TRENTO_SERVER_HOSTNAME \
--set trento-web.adminUser.password=ADMIN_PASSWORD

Install Helm:

curl https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 | bash

Connect Helm to an existing Kubernetes cluster.

Use Helm to install Trento Server with the Trento Helm chart:

helm upgrade \
--install trento-server oci://registry.suse.com/trento/trento-server \
--set global.trentoWeb.origin=TRENTO_SERVER_HOSTNAME \
--set trento-web.adminUser.password=ADMIN_PASSWORD

When using a Helm version lower than 3.8.0, an experimental flag must be set as follows:

HELM_EXPERIMENTAL_OCI=1 helm upgrade \
--install trento-server oci://registry.suse.com/trento/trento-server \
--set global.trentoWeb.origin=TRENTO_SERVER_HOSTNAME \
--set trento-web.adminUser.password=ADMIN_PASSWORD

To verify that the Trento Server installation was successful, open the URL of the Trento Web (http://TRENTO_SERVER_HOSTNAME) from a workstation on the SAP administrator’s LAN.
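Whether `HELM_EXPERIMENTAL_OCI=1` is needed depends only on the Helm version being lower than 3.8.0. The following sketch makes that decision in a script. The function name is hypothetical, and the version comparison assumes GNU `sort -V`.

```shell
#!/bin/sh
# Hypothetical helper: succeed (exit 0) when the given Helm version is lower
# than 3.8.0, that is, when HELM_EXPERIMENTAL_OCI=1 is required.
# Assumes GNU sort with version ordering (-V).
helm_needs_oci_flag() {
  version="${1#v}"                        # accept both "v3.7.2" and "3.7.2"
  [ "$version" = "3.8.0" ] && return 1    # 3.8.0 itself does not need the flag
  # If $version sorts before 3.8.0, it is the lower of the two
  lowest="$(printf '%s\n%s\n' "$version" "3.8.0" | sort -V | head -n 1)"
  [ "$lowest" = "$version" ]
}
```

On a machine with Helm installed, it can be combined with `helm version --template '{{.Version}}'`. If `helm_needs_oci_flag` succeeds for that output, export `HELM_EXPERIMENTAL_OCI=1` before running the `helm upgrade` command above.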

4.1.1.2 Installing Trento Server on K3s #

If you do not have a Kubernetes cluster, or you have one but do not want to use it for Trento, you can use SUSE Rancher’s K3s as an alternative. To deploy Trento Server on K3s, you need a server or VM (see Section 3.1, “Trento Server requirements” for minimum requirements). Then follow the steps in Section 4.1.1.2.1, “Manually installing Trento on a Trento Server host”.

The following procedure deploys Trento Server on a single-node K3s cluster. Note that this setup is not recommended for production use.

4.1.1.2.1 Manually installing Trento on a Trento Server host #

Log in to the Trento Server host.

Install K3s either as root or as a non-root user.

Installing as user root:

curl -sfL https://get.k3s.io | INSTALL_K3S_SKIP_SELINUX_RPM=true sh

Installing as a non-root user:

curl -sfL https://get.k3s.io | INSTALL_K3S_SKIP_SELINUX_RPM=true sh -s - --write-kubeconfig-mode 644

Install Helm as root.

curl https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 | bash

Set the KUBECONFIG environment variable for the same user that installed K3s:

export KUBECONFIG=/etc/rancher/k3s/k3s.yaml

With the same user that installed K3s, install Trento Server using the Helm chart:

helm upgrade \
--install trento-server oci://registry.suse.com/trento/trento-server \
--set global.trentoWeb.origin=TRENTO_SERVER_HOSTNAME \
--set trento-web.adminUser.password=ADMIN_PASSWORD

When using a Helm version lower than 3.8.0, an experimental flag must be set as follows:

HELM_EXPERIMENTAL_OCI=1 helm upgrade \
--install trento-server oci://registry.suse.com/trento/trento-server \
--set global.trentoWeb.origin=TRENTO_SERVER_HOSTNAME \
--set trento-web.adminUser.password=ADMIN_PASSWORD

Monitor the creation and start-up of the Trento Server pods, and wait until they are ready and running:

watch kubectl get pods

All pods must be in the ready and running state.

Log out of the Trento Server host.

To verify that the Trento Server installation was successful, open the URL of the Trento Web (http://TRENTO_SERVER_HOSTNAME) from a workstation on the SAP administrator’s LAN.

4.1.1.3 Deploying Trento Server on selected nodes #

If you use a multi-node Kubernetes cluster, it is possible to deploy Trento Server images on selected nodes by specifying the field nodeSelector in the helm upgrade command as follows:

HELM_EXPERIMENTAL_OCI=1 helm upgrade \
--install trento-server oci://registry.suse.com/trento/trento-server \
--set global.trentoWeb.origin=TRENTO_SERVER_HOSTNAME \
--set trento-web.adminUser.password=ADMIN_PASSWORD \
--set prometheus.server.nodeSelector.LABEL=VALUE \
--set postgresql.primary.nodeSelector.LABEL=VALUE \
--set trento-web.nodeSelector.LABEL=VALUE \
--set trento-runner.nodeSelector.LABEL=VALUE

4.1.1.4 Configuring event pruning #

The event pruning feature allows administrators to manage how long registered events are stored in the database and how often the expired events are removed.

The following configuration options are available:

pruneEventsOlderThan: The number of days registered events are stored in the database. The default value is 10. Keep in mind that pruneEventsOlderThan can be set to 0. However, this deletes all events whenever the cron job runs, making it impossible to analyze and troubleshoot issues with the application.

pruneEventsCronjobSchedule: The frequency of the cron job that deletes expired events. The default value is "0 0 * * *", which runs daily at midnight.

To modify the default values, execute the following Helm command:

helm ... \
--set trento-web.pruneEventsOlderThan=<<EXPIRATION_IN_DAYS>> \
--set trento-web.pruneEventsCronjobSchedule="<<NEW_SCHEDULE>>"

Replace the placeholders with the desired values:

EXPIRATION_IN_DAYS: Number of days to retain events in the database before pruning.

NEW_SCHEDULE: The cron rule specifying how frequently the pruning job is performed.
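Before substituting the two placeholders, you can sanity-check them. The following minimal sketch uses a hypothetical helper, `prune_flags`. It assumes a standard five-field cron rule, validates both values, warns about the value 0, and prints the matching `--set` flags for copying into the helm command.

```shell
#!/bin/sh
# Hypothetical helper: validate EXPIRATION_IN_DAYS and NEW_SCHEDULE, then print
# the corresponding --set flags for the helm command.
# Assumption: the schedule is a standard 5-field cron rule.
prune_flags() {
  days="$1"
  schedule="$2"
  case "$days" in
    ''|*[!0-9]*) echo "EXPIRATION_IN_DAYS must be a non-negative integer" >&2; return 1 ;;
  esac
  # Count whitespace-separated fields: minute hour day-of-month month day-of-week
  fields="$(printf '%s\n' "$schedule" | awk '{ print NF }')"
  if [ "$fields" -ne 5 ]; then
    echo "NEW_SCHEDULE must have 5 cron fields, got $fields" >&2
    return 1
  fi
  if [ "$days" -eq 0 ]; then
    echo "warning: 0 deletes all events every time the job runs" >&2
  fi
  printf '%s\n' "--set trento-web.pruneEventsOlderThan=$days"
  printf '%s\n' "--set trento-web.pruneEventsCronjobSchedule=\"$schedule\""
}

prune_flags 30 "0 3 * * *"
```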

Example command to retain events for 30 days and schedule pruning daily at 3 AM:

helm upgrade \
--install trento-server oci://registry.suse.com/trento/trento-server \
--set global.trentoWeb.origin=TRENTO_SERVER_HOSTNAME \
--set trento-web.adminUser.password=ADMIN_PASSWORD \
--set trento-web.pruneEventsOlderThan=30 \
--set trento-web.pruneEventsCronjobSchedule="0 3 * * *"

4.1.1.5 Enabling email alerts #

The email alerting feature notifies the SAP Basis administrator about important changes in the SAP landscape monitored by Trento.

The reported events include the following:

Host heartbeat failed

Cluster health detected critical

Database health detected critical

SAP System health detected critical

This feature is disabled by default. It can be enabled at installation time or anytime at a later stage. In both cases, the procedure is the same and uses the following placeholders:

SMTP_SERVER: The SMTP server designated to send email alerts.

SMTP_PORT: Port on the SMTP server.

SMTP_USER: User name to access the SMTP server.

SMTP_PASSWORD: Password to access the SMTP server.

ALERTING_SENDER: Sender email address for alert notifications.

ALERTING_RECIPIENT: Email address to receive alert notifications.
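If you keep the placeholder values in shell variables of the same names, a small pre-flight check helps avoid running the command with an empty value. The following is a minimal sketch. The function name is hypothetical, and storing the values in variables named after the placeholders is an assumption, not something the Helm chart requires.

```shell
#!/bin/sh
# Hypothetical pre-flight check: every alerting placeholder must be set and
# SMTP_PORT must be numeric. Variable names mirror the placeholders above.
check_alerting_vars() {
  missing=0
  for name in SMTP_SERVER SMTP_PORT SMTP_USER SMTP_PASSWORD ALERTING_SENDER ALERTING_RECIPIENT; do
    # eval reads the value of the variable whose name is stored in $name
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  case "${SMTP_PORT:-}" in
    *[!0-9]*) echo "SMTP_PORT must be numeric" >&2; missing=1 ;;
  esac
  return "$missing"
}
```

Run it in the same shell after assigning the six variables. A non-zero exit status means at least one value is missing or invalid, and the reasons are printed on stderr.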

The command to enable email alerts is as follows:

HELM_EXPERIMENTAL_OCI=1 helm upgrade \
--install trento-server oci://registry.suse.com/trento/trento-server \
--set global.trentoWeb.origin=TRENTO_SERVER_HOSTNAME \
--set trento-web.adminUser.password=ADMIN_PASSWORD \
--set trento-web.alerting.enabled=true \
--set trento-web.alerting.smtpServer=SMTP_SERVER \
--set trento-web.alerting.smtpPort=SMTP_PORT \
--set trento-web.alerting.smtpUser=SMTP_USER \
--set trento-web.alerting.smtpPassword=SMTP_PASSWORD \
--set trento-web.alerting.sender=ALERTING_SENDER \
--set trento-web.alerting.recipient=ALERTING_RECIPIENT

4.1.1.6 Enabling SSL #

Ingress can be used to provide SSL termination for the Web component of Trento Server. This makes it possible to encrypt the communication from the agent to the server, which is already secured by the corresponding API key. It also allows HTTPS access to the Web console with trusted certificates.

Configure this in the tls section of the values.yaml file of the Trento Server Web component chart.

For details on the required Ingress setup and configuration, refer to: https://kubernetes.io/docs/concepts/services-networking/ingress/. Particularly, refer to section https://kubernetes.io/docs/concepts/services-networking/ingress/#tls for details on the secret format in the YAML configuration file.

Additional steps are required on the Agent side.

4.1.2 systemd deployment #

A systemd-based installation of the Trento Server using RPM packages can be performed manually on the latest supported versions of SUSE Linux Enterprise Server for SAP applications, from 15 SP4 up to 16. For installations on service packs other than the current one, make sure to update the repository URL as described in the relevant notes throughout this guide.

4.1.2.1 List of dependencies #

4.1.2.2 Install Trento dependencies #

4.1.2.2.1 Install PostgreSQL #

The current instructions are tested with the following PostgreSQL versions:

| SUSE Linux Enterprise Server for SAP applications | PostgreSQL Version |
|---|---|
| 15 SP4 | 14.10 |
| 15 SP5 | 15.5 |
| 15 SP6 | 16.9 |
| 15 SP7 | 17.5 |
| 16.0 | 17.6 |

Using a different version of PostgreSQL may require different steps or configurations, especially when changing the major number. For more details, refer to the official PostgreSQL documentation.

Install PostgreSQL server:

zypper in postgresql-server

Enable and start PostgreSQL server:

systemctl enable --now postgresql

4.1.2.2.2 Configure PostgreSQL #

Start psql with the postgres user to open a connection to the database:

su - postgres
psql

Initialize the databases in the psql console:

CREATE DATABASE wanda;
CREATE DATABASE trento;
CREATE DATABASE trento_event_store;

Create the users:

CREATE USER wanda_user WITH PASSWORD 'wanda_password';
CREATE USER trento_user WITH PASSWORD 'web_password';

Grant required privileges to the users and close the connection:

\c wanda
GRANT ALL ON SCHEMA public TO wanda_user;
\c trento
GRANT ALL ON SCHEMA public TO trento_user;
\c trento_event_store
GRANT ALL ON SCHEMA public TO trento_user;
\q

You can exit from the psql console and the postgres user.

Allow the PostgreSQL database to receive connections to the respective databases and users. To do this, add the following to /var/lib/pgsql/data/pg_hba.conf:

host wanda wanda_user 0.0.0.0/0 scram-sha-256
host trento,trento_event_store trento_user 0.0.0.0/0 scram-sha-256

Note: The pg_hba.conf file is evaluated sequentially, so the rules at the top take precedence over the ones below. The example above uses a permissive address range, so for it to work, these entries must be written at the top of the host entries. For further information, refer to the pg_hba.conf documentation.

Allow PostgreSQL to bind on all network interfaces in /var/lib/pgsql/data/postgresql.conf by changing the following line:

listen_addresses = '*'

Restart PostgreSQL to apply the changes:

systemctl restart postgresql
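Because pg_hba.conf is evaluated top-down, the Trento rules have to land above the existing `host` entries. The following sketch automates that placement. The function name is hypothetical. It is not idempotent, so running it twice inserts the rules twice. Back up the file first and run it as a user allowed to write it, for example `add_trento_hba_rules /var/lib/pgsql/data/pg_hba.conf`.

```shell
#!/bin/sh
# Hypothetical helper: insert the Trento rules into a pg_hba.conf-style file
# right before the first existing "host" entry (PostgreSQL uses the first
# matching rule). If there is no host entry, the rules are appended instead.
add_trento_hba_rules() {
  file="$1"
  r1='host wanda wanda_user 0.0.0.0/0 scram-sha-256'
  r2='host trento,trento_event_store trento_user 0.0.0.0/0 scram-sha-256'
  tmp="$(mktemp)" || return 1
  awk -v r1="$r1" -v r2="$r2" '
    # Emit the Trento rules once, just before the first "host" line
    !done && $1 == "host" { print r1; print r2; done = 1 }
    { print }
    # No host entry found: append the rules at the end of the file
    END { if (!done) { print r1; print r2 } }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the file owner and mode
  status=$?
  rm -f "$tmp"
  return "$status"
}
```

Writing back with `cat` rather than `mv` keeps the original ownership and permissions of the file, which PostgreSQL expects.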

4.1.2.2.3 Install RabbitMQ #

Install RabbitMQ server:

zypper install rabbitmq-server

Allow connections from external hosts by modifying /etc/rabbitmq/rabbitmq.conf, so that the Trento Agent can reach RabbitMQ:

listeners.tcp.default = 5672

If firewalld is running, add a rule to firewalld:

firewall-cmd --zone=public --add-port=5672/tcp --permanent; firewall-cmd --reload

Enable and start the RabbitMQ service:

systemctl enable --now rabbitmq-server

4.1.2.2.4 Configure RabbitMQ #

To configure RabbitMQ for a production system, follow the official suggestions in the RabbitMQ guide.

Create a new RabbitMQ user:

rabbitmqctl add_user trento_user trento_user_password

Create a virtual host:

rabbitmqctl add_vhost vhost

Set permissions for the user on the virtual host:

rabbitmqctl set_permissions -p vhost trento_user ".*" ".*" ".*"

4.1.2.3 Install Trento using RPM packages #

The trento-web and trento-wanda packages are available by default on supported SUSE Linux Enterprise Server for SAP applications distributions.

Install Trento Web, Wanda and checks:

zypper install trento-web trento-wanda

4.1.2.3.1 Create the configuration files #

Each service depends on its own configuration file. These files must be placed in /etc/trento/trento-web and /etc/trento/trento-wanda, respectively. Examples of how to modify them are available in /etc/trento/trento-web.example and /etc/trento/trento-wanda.example.

You can create the content of secret variables such as SECRET_KEY_BASE, ACCESS_TOKEN_ENC_SECRET and REFRESH_TOKEN_ENC_SECRET using openssl:

openssl rand -out /dev/stdout 48 | base64

Also ensure that a valid hostname, FQDN, or IP address is configured in TRENTO_WEB_ORIGIN when using HTTPS. Otherwise, WebSocket connections will fail, preventing real-time updates in the web interface.
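The three secrets can also be generated in one go. The following sketch prints `VARIABLE=value` lines that you can paste over the `some-secret` placeholders in /etc/trento/trento-web. It reads 48 random bytes from `/dev/urandom` and Base64-encodes them, which mirrors the openssl command above without requiring openssl. The helper name `gen_secret` is hypothetical. The same approach works for SECRET_KEY_BASE in /etc/trento/trento-wanda.

```shell
#!/bin/sh
# Hypothetical helper: 48 random bytes, Base64-encoded (64 characters).
# tr removes the trailing newline added by base64.
gen_secret() {
  head -c 48 /dev/urandom | base64 | tr -d '\n'
}

# Print one assignment per secret variable used by trento-web
for var in SECRET_KEY_BASE ACCESS_TOKEN_ENC_SECRET REFRESH_TOKEN_ENC_SECRET; do
  printf '%s=%s\n' "$var" "$(gen_secret)"
done
```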

4.1.2.3.2 trento-web configuration #

# /etc/trento/trento-web

AMQP_URL=amqp://trento_user:trento_user_password@localhost:5672/vhost

DATABASE_URL=ecto://trento_user:web_password@localhost/trento

EVENTSTORE_URL=ecto://trento_user:web_password@localhost/trento_event_store

ENABLE_ALERTING=false

CHARTS_ENABLED=false

ADMIN_USER=admin

ADMIN_PASSWORD=trentodemo

ENABLE_API_KEY=true

PORT=4000

TRENTO_WEB_ORIGIN=trento.example.com

SECRET_KEY_BASE=some-secret

ACCESS_TOKEN_ENC_SECRET=some-secret

REFRESH_TOKEN_ENC_SECRET=some-secret

CHECKS_SERVICE_BASE_URL=/wanda

OAS_SERVER_URL=https://trento.example.com

The ADMIN_PASSWORD variable must meet the following requirements:

minimum of 8 characters

the password must not contain 3 consecutive identical numbers or letters (for example, 111 or aaa)

the password must not contain 4 sequential numbers or letters (for example, 1234, abcd, ABCD)

The ENABLE_ALERTING variable enables the alerting system, which sends email notifications. To enable the feature, set ENABLE_ALERTING to true and add the following variables to /etc/trento/trento-web:

# /etc/trento/trento-web

ENABLE_ALERTING=true

ALERT_SENDER=<<SENDER_EMAIL_ADDRESS>>

ALERT_RECIPIENT=<<RECIPIENT_EMAIL_ADDRESS>>

SMTP_SERVER=<<SMTP_SERVER_ADDRESS>>

SMTP_PORT=<<SMTP_PORT>>

SMTP_USER=<<SMTP_USER>>

SMTP_PASSWORD=<<SMTP_PASSWORD>>

4.1.2.3.3 trento-wanda configuration #

# /etc/trento/trento-wanda

CORS_ORIGIN=http://localhost

AMQP_URL=amqp://trento_user:trento_user_password@localhost:5672/vhost

DATABASE_URL=ecto://wanda_user:wanda_password@localhost/wanda

PORT=4001

SECRET_KEY_BASE=some-secret

OAS_SERVER_URL=https://trento.example.com/wanda

AUTH_SERVER_URL=http://localhost:4000

4.1.2.3.4 Start the services #

In some SUSE Linux Enterprise Server for SAP applications environments, SELinux may be enabled and set to enforcing mode by default. If Trento services fail to start or show permission-related errors, check the SELinux status:

getenforce

If SELinux is set to enforcing, switch it to permissive mode either temporarily or permanently:

Temporary change (until reboot):

setenforce 0

Permanent change (persists after reboot): edit /etc/selinux/config and set:

SELINUX=permissive
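The permanent change can be scripted. The following minimal sketch rewrites only the `SELINUX=` line and leaves lines such as `SELINUXTYPE=` untouched. The function name is hypothetical. The file path is a parameter so that you can try the edit on a copy first. On the real system, the path is /etc/selinux/config.

```shell
#!/bin/sh
# Hypothetical helper: set SELINUX=permissive in an SELinux config file,
# keeping a .bak copy of the original. ^SELINUX= does not match SELINUXTYPE=.
make_selinux_permissive() {
  conf="$1"
  cp "$conf" "$conf.bak" || return 1
  sed 's/^SELINUX=.*/SELINUX=permissive/' "$conf.bak" > "$conf"
}
```

The file change takes effect at the next boot. Use `setenforce 0` to switch the running system immediately.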

Enable and start the services:

systemctl enable --now trento-web trento-wanda

4.1.2.3.5 Monitor the services #

Use journalctl to check whether the services are up and running correctly. For example:

journalctl -fu trento-web

4.1.2.4 Check the health status of Trento Web and Trento Wanda #

You can check whether the Trento Web and Trento Wanda services function correctly by accessing the healthz and readyz API endpoints.

Check Trento Web health status using curl:

curl http://localhost:4000/api/readyz
curl http://localhost:4000/api/healthz

Check Trento Wanda health status using curl:

curl http://localhost:4001/api/readyz
curl http://localhost:4001/api/healthz

If Trento Web and Wanda are ready, and the database connection is set up correctly, the output should be as follows:

{"ready":true}
{"database":"pass"}

4.1.2.5 Install and configure NGINX #

Install NGINX package:

zypper install nginx

If firewalld is running, add firewalld rules for HTTP and HTTPS:

firewall-cmd --zone=public --add-service=https --permanent
firewall-cmd --zone=public --add-service=http --permanent
firewall-cmd --reload

Start and enable NGINX:

systemctl enable --now nginx

Create a /etc/nginx/conf.d/trento.conf Trento configuration file:

map $http_upgrade $connection_upgrade {
    default upgrade;
    '' close;
}

upstream web {
    server 127.0.0.1:4000 max_fails=5 fail_timeout=60s;
}

upstream wanda {
    server 127.0.0.1:4001 max_fails=5 fail_timeout=60s;
}

server {
    # Redirect HTTP to HTTPS
    listen 80;
    server_name trento.example.com;
    return 301 https://$host$request_uri;
}

server {
    server_name trento.example.com;
    listen 443 ssl;

    ssl_certificate /etc/nginx/ssl/certs/trento.crt;
    ssl_certificate_key /etc/ssl/private/trento.key;

    ssl_protocols TLSv1.2 TLSv1.3;
    ssl_ciphers 'ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384';
    ssl_prefer_server_ciphers on;
    ssl_session_cache shared:SSL:10m;

    # Wanda rule
    location /wanda/ {
        allow all;

        # Proxy Headers
        proxy_http_version 1.1;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header Host $http_host;
        proxy_set_header X-Cluster-Client-Ip $remote_addr;

        # Important Websocket Bits!
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";

        # Add final slash to replace the location path value by the value in proxy_pass
        # https://nginx.org/en/docs/http/ngx_http_proxy_module.html#proxy_pass
        proxy_pass http://wanda/;
    }

    # Web rule
    location / {
        # this endpoint should not be accessible publicly
        # it is internally used by wanda to introspect access tokens and personal access tokens
        location /api/session/token/introspect {
            deny all;
            return 404;
        }

        allow all;

        # Proxy Headers
        proxy_http_version 1.1;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header Host $http_host;
        proxy_set_header X-Cluster-Client-Ip $remote_addr;

        # The Important Websocket Bits!
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";

        proxy_pass http://web;
    }
}

4.1.2.6 Prepare SSL certificate for NGINX #

Create or provide a certificate for NGINX to enable SSL for Trento.

4.1.2.6.1 Create a self-signed certificate #

Generate a self-signed certificate:

Note: Adjust subjectAltName = DNS:trento.example.com by replacing trento.example.com with your domain, and change the value 5 to the number of days for which the certificate must be valid. For example, use -days 365 for one year.

openssl req -newkey rsa:2048 -nodes -keyout trento.key -x509 -days 5 -out trento.crt -addext "subjectAltName = DNS:trento.example.com"

Copy the generated trento.key to a location accessible by NGINX:

cp trento.key /etc/ssl/private/trento.key

Create a directory for the generated trento.crt file. The directory must be accessible by NGINX:

mkdir -p /etc/nginx/ssl/certs/

Copy the generated trento.crt file to the created directory:

cp trento.crt /etc/nginx/ssl/certs/trento.crt

Check the NGINX configuration:

nginx -t

If the configuration is correct, the output is as follows:

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
nginx: configuration file /etc/nginx/nginx.conf test is successful

If there are issues with the configuration, the output indicates what needs to be adjusted.

Restart NGINX to load the new configuration:

systemctl restart nginx
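To confirm that the certificate NGINX now serves matches your host name and will not expire soon, you can inspect it with the openssl CLI. The following is a minimal sketch. The helper names cert_has_san and cert_valid_for are hypothetical, and the checks rely only on the standard openssl x509 options -text and -checkend.

```shell
#!/usr/bin/env bash
# Sketch: sanity checks for the self-signed certificate created above.
# Requires the openssl CLI; certificate paths are supplied by the caller.

# cert_has_san HOST CERT: succeeds if CERT lists HOST as a DNS subjectAltName.
cert_has_san() {
  local host=$1 cert=$2
  openssl x509 -in "$cert" -noout -text | grep -qwF -- "DNS:$host"
}

# cert_valid_for DAYS CERT: succeeds if CERT will still be valid DAYS days from now.
cert_valid_for() {
  local days=$1 cert=$2
  # -checkend exits non-zero if the certificate expires within the given seconds
  openssl x509 -in "$cert" -noout -checkend $(( days * 86400 )) >/dev/null
}

# Example with the paths used in this guide:
#   cert_has_san trento.example.com /etc/nginx/ssl/certs/trento.crt && echo "SAN ok"
#   cert_valid_for 30 /etc/nginx/ssl/certs/trento.crt || echo "expires within 30 days"
```

With the default value of -days 5, cert_valid_for 30 fails on purpose. This is a reminder to choose a realistic validity period.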

4.1.2.6.2 Create a signed certificate with Let’s Encrypt using PackageHub repository #

Enable the PackageHub repository (replace x.x with your OS version, for example 15.7):

SUSEConnect --product PackageHub/x.x/x86_64
zypper refresh

Install Certbot and its NGINX plugin:

Note: Service Packs include version-specific Certbot NGINX plugin packages, for example python311-certbot-nginx, python313-certbot-nginx or python3-certbot-nginx. Install the package that is available in the Service Pack you currently use.

zypper install certbot python311-certbot-nginx

Obtain a certificate and configure NGINX with Certbot:

Note: Replace trento.example.com with your domain. For more information, refer to the Certbot instructions for NGINX.

certbot --nginx -d trento.example.com

Note: Let's Encrypt certificates obtained with Certbot are valid for 90 days. Refer to the Certbot instructions for NGINX for details on how to renew them.

4.1.2.7 Accessing the trento-web UI #

Point your browser to https://trento.example.com. You should be able to log in using the credentials specified in the ADMIN_USER and ADMIN_PASSWORD environment variables.

4.1.3 Automated deployment with Ansible #

An automated installation of Trento Server using RPM packages can be performed with an Ansible playbook. For further information, refer to the Trento Ansible project.

4.2 Installing Trento Agents #

Before you can install a Trento Agent, you must obtain the API key of your Trento Server. Proceed as follows:

Open the URL of the Trento Web console. It prompts you for a user name and password.

Enter the credentials for the admin user (specified during installation of Trento Server) and click Login.

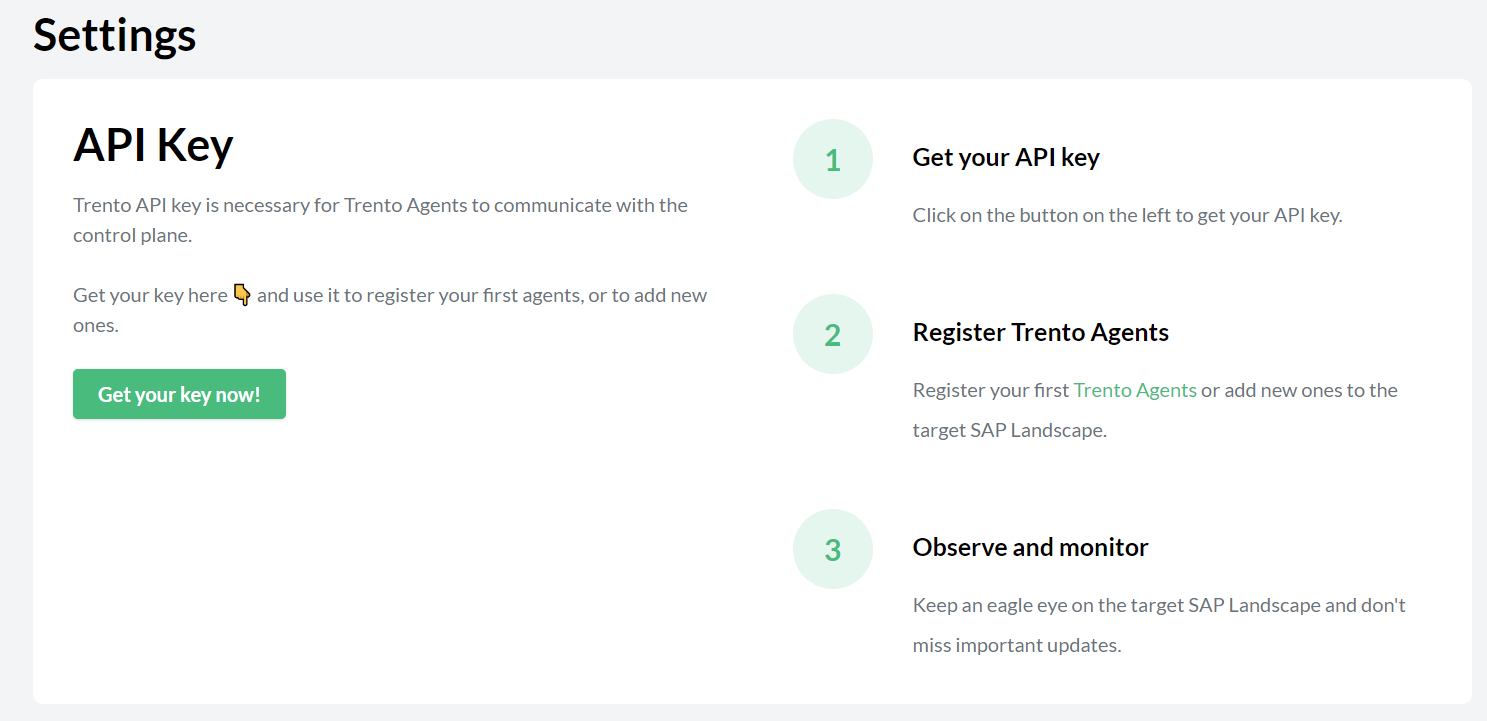

When you are logged in, go to Settings:

Click the Copy button to copy the key to the clipboard.

Install the Trento Agent on an SAP host and register it with the Trento Server as follows:

Install the package:

> sudo zypper ref
> sudo zypper install trento-agent

A configuration file named agent.yaml is created under /etc/trento/ in SUSE Linux Enterprise Server for SAP applications 15, or under /usr/etc/trento/ in SUSE Linux Enterprise Server for SAP applications 16.

Open the configuration file and uncomment (remove the # character) the entries for facts-service-url, server-url and api-key. Update the values if necessary:

- facts-service-url: the address of the AMQP RabbitMQ service used for communication with the checks engine (wanda). The correct value of this parameter depends on how Trento Server was deployed:
  - In a Kubernetes deployment, it is amqp://trento:trento@TRENTO_SERVER_HOSTNAME:5672/. If the default RabbitMQ user name and password (trento:trento) were changed using Helm, the parameter must use the user-defined values.
  - In a systemd deployment, the correct value is amqp://TRENTO_USER:TRENTO_USER_PASSWORD@TRENTO_SERVER_HOSTNAME:5672/vhost. If TRENTO_USER and TRENTO_USER_PASSWORD have been replaced with custom values, you must use them.
- server-url: the URL of the Trento Server (http://TRENTO_SERVER_HOSTNAME).
- api-key: the API key retrieved from the Web console.
- node-exporter-target: the IP address and port of the node exporter, as <ip_address>:<port>. If the host has multiple IP addresses or the exporter listens on a port other than the default one, configuring this setting enables Prometheus to connect to the correct IP address and port of the host.
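The entries above can be assembled with a small script, which helps avoid typos in the AMQP URL. This is a minimal sketch. The helper names facts_service_url and render_agent_config are hypothetical, and the script assumes that the user name and password contain no characters that require URL encoding.

```shell
#!/usr/bin/env bash
# Sketch: build the agent.yaml values described above.
# Assumes the user name and password need no URL encoding.

# facts_service_url DEPLOYMENT USER PASSWORD HOST
facts_service_url() {
  local deployment=$1 user=$2 password=$3 host=$4
  case $deployment in
    kubernetes) printf 'amqp://%s:%s@%s:5672/\n' "$user" "$password" "$host" ;;
    systemd)    printf 'amqp://%s:%s@%s:5672/vhost\n' "$user" "$password" "$host" ;;
    *) echo "unknown deployment type: $deployment" >&2; return 1 ;;
  esac
}

# render_agent_config FACTS_URL SERVER_URL API_KEY [NODE_EXPORTER_TARGET]
render_agent_config() {
  local facts_url=$1 server_url=$2 api_key=$3 exporter_target=${4:-}
  printf 'facts-service-url: %s\n' "$facts_url"
  printf 'server-url: %s\n' "$server_url"
  printf 'api-key: %s\n' "$api_key"
  # Optional: only written when an explicit <ip_address>:<port> is given
  if [[ -n $exporter_target ]]; then
    printf 'node-exporter-target: %s\n' "$exporter_target"
  fi
}

# Example:
#   render_agent_config "$(facts_service_url kubernetes trento trento TRENTO_SERVER_HOSTNAME)" \
#     http://TRENTO_SERVER_HOSTNAME API_KEY
```

The script prints the entries instead of writing the file. The packaged agent.yaml may contain other settings, so comparing the output with the file and editing by hand is the safer approach.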

If SSL termination has been enabled on the server side, you can encrypt the communication from the agent to the server as follows:

Provide an HTTPS URL instead of an HTTP one.

Import the certificate from the Certificate Authority that has issued your Trento Server SSL certificate into the Trento Agent host as follows:

Copy the CA certificate in PEM format to /etc/pki/trust/anchors/. If the CA certificate is in CRT format, convert it to PEM using the following openssl command:

openssl x509 -in mycert.crt -out mycert.pem -outform PEM

Run the update-ca-certificates command.
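The import steps above can be combined into one function that converts the certificate only when needed. This is a sketch. The names is_pem_cert and install_ca_cert, and the ANCHORS_DIR override, are hypothetical. The script assumes that a file without a PEM header is DER-encoded, which is why it passes -inform DER explicitly.

```shell
#!/usr/bin/env bash
# is_pem_cert FILE: succeeds if FILE contains a PEM certificate header.
is_pem_cert() {
  grep -q -- '-----BEGIN CERTIFICATE-----' "$1"
}

# install_ca_cert FILE: copy a CA certificate into the trust anchors
# directory as PEM, then refresh the system trust store.
# Non-PEM input is assumed to be DER-encoded.
install_ca_cert() {
  local src=$1 anchors=${ANCHORS_DIR:-/etc/pki/trust/anchors} dest
  dest="$anchors/$(basename "${src%.*}").pem"
  if is_pem_cert "$src"; then
    cp -- "$src" "$dest"
  else
    openssl x509 -inform DER -in "$src" -out "$dest" -outform PEM || return 1
  fi
  update-ca-certificates
}

# Example (run as root): install_ca_cert mycert.crt
```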

Start the Trento Agent:

> sudo systemctl enable --now trento-agent

Check the status of the Trento Agent:

> sudo systemctl status trento-agent
● trento-agent.service - Trento Agent service
     Loaded: loaded (/usr/lib/systemd/system/trento-agent.service; enabled; vendor preset: disabled)
     Active: active (running) since Wed 2021-11-24 17:37:46 UTC; 4s ago
   Main PID: 22055 (trento)
      Tasks: 10
     CGroup: /system.slice/trento-agent.service
             ├─22055 /usr/bin/trento agent start --consul-config-dir=/srv/consul/consul.d
             └─22220 /usr/bin/ruby.ruby2.5 /usr/sbin/SUSEConnect -s [...]

Repeat this procedure on all SAP hosts that you want to monitor.

5 Update #

5.1 Updating Trento Server #

The procedure to update Trento Server depends on the chosen deployment option: Kubernetes or systemd.

Consider the following when performing an update:

Before updating Trento Server, ensure that all the Trento Agents in the environment are supported by the target version. For more information, see Chapter 16, Compatibility matrix between Trento Server and Trento Agents.

When updating Trento to version 2.4 or higher, the admin password may need to be adjusted to follow the rules described in the User Management section.

In a Kubernetes deployment, you can use Helm to update Trento Server:

helm upgrade \
   --install trento-server oci://registry.suse.com/trento/trento-server \
   --set global.trentoWeb.origin=TRENTO_SERVER_HOSTNAME \
   --set trento-web.adminUser.password=ADMIN_PASSWORD

If you have configured options like email alerting, the Helm command must be adjusted accordingly. In this case, consider the following:

Remember to set the Helm experimental flag if you are using a version of Helm lower than 3.8.0.

When updating Trento to version 2.0.0 or higher, an additional flag must be set in the Helm command:

helm upgrade \
   --install trento-server oci://registry.suse.com/trento/trento-server \
   --set global.trentoWeb.origin=TRENTO_SERVER_HOSTNAME \
   --set trento-web.adminUser.password=ADMIN_PASSWORD \
   --set rabbitmq.auth.erlangCookie=$(openssl rand -hex 16)

When updating Trento to version 2.3 or higher, a new API key is generated and the configuration of all registered Trento Agents must be updated accordingly.
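The version-dependent flag above can be added automatically when you script upgrades. This is a minimal sketch. The names version_ge and build_upgrade_args are hypothetical, and version strings are assumed to be purely numeric and dot-separated. The script handles only the Erlang cookie flag required from 2.0.0. Any other options, such as email alerting, still have to be added by you.

```shell
#!/usr/bin/env bash
# version_ge A B: succeeds when dotted numeric version A >= B.
version_ge() {
  local IFS=.
  local -a a b
  a=($1)
  b=($2)
  local i x y n=${#a[@]}
  (( ${#b[@]} > n )) && n=${#b[@]}
  for (( i = 0; i < n; i++ )); do
    # Missing fields count as 0; 10# avoids octal parsing of leading zeros
    x=$(( 10#${a[i]:-0} )) y=$(( 10#${b[i]:-0} ))
    (( x > y )) && return 0
    (( x < y )) && return 1
  done
  return 0
}

# build_upgrade_args TARGET ORIGIN PASSWORD [ERLANG_COOKIE]
# Prints one helm argument per line.
build_upgrade_args() {
  local target=$1 origin=$2 password=$3 cookie=${4:-}
  local -a args=(upgrade --install trento-server
    oci://registry.suse.com/trento/trento-server
    --set "global.trentoWeb.origin=$origin"
    --set "trento-web.adminUser.password=$password")
  if version_ge "$target" 2.0.0; then
    if [[ -z $cookie ]]; then
      echo "an Erlang cookie is required for target version $target" >&2
      return 1
    fi
    args+=(--set "rabbitmq.auth.erlangCookie=$cookie")
  fi
  printf '%s\n' "${args[@]}"
}

# Usage sketch (placeholders as in this guide):
#   mapfile -t args < <(build_upgrade_args 2.4.0 TRENTO_SERVER_HOSTNAME ADMIN_PASSWORD "$(openssl rand -hex 16)")
#   (( ${#args[@]} )) && helm "${args[@]}"
```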

In a systemd deployment, you can use Zypper to update Trento Server:

zypper refresh
zypper update trento-web
zypper update trento-wanda
systemctl restart trento-web
systemctl restart trento-wanda

5.2 Updating Trento Checks #

Configuration checks are an integral part of the checks engine, but they are delivered separately. This allows customers to update the checks catalog in their setup whenever updates to existing checks and new checks are released, without waiting for a new version release cycle.

The procedure of updating the configuration checks depends on the Trento Server deployment type: Kubernetes or systemd.

In a Kubernetes deployment, checks are delivered as a container image, and you can use Helm with the following options to pull the latest image:

helm ... \
   --set trento-wanda.checks.image.tag=latest \
   --set trento-wanda.checks.image.repository=registry.suse.com/trento/trento-checks \
   --set trento-wanda.checks.image.pullPolicy=Always \
   ...

In a systemd deployment, checks are delivered as an RPM package, and you can use Zypper to update your checks catalog:

> sudo zypper ref
> sudo zypper update trento-checks

5.3 Updating a Trento Agent #

To update the Trento Agent, follow the procedure below:

Log in to the Trento Agent host.

Stop the Trento Agent:

> sudo systemctl stop trento-agent

Install the new package:

> sudo zypper ref
> sudo zypper install trento-agent

Copy the file /etc/trento/agent.yaml.rpmsave to /etc/trento/agent.yaml. Make sure that the entries facts-service-url, server-url and api-key in /etc/trento/agent.yaml are correct.

Start the Trento Agent:

> sudo systemctl start trento-agent

Check the status of the Trento Agent:

> sudo systemctl status trento-agent
● trento-agent.service - Trento Agent service
     Loaded: loaded (/usr/lib/systemd/system/trento-agent.service; enabled; vendor preset: disabled)
     Active: active (running) since Wed 2021-11-24 17:37:46 UTC; 4s ago
   Main PID: 22055 (trento)
      Tasks: 10
     CGroup: /system.slice/trento-agent.service
             ├─22055 /usr/bin/trento agent start --consul-config-dir=/srv/consul/consul.d
             └─22220 /usr/bin/ruby.ruby2.5 /usr/sbin/SUSEConnect -s [...]

Check the version in the Hosts overview of the Trento UI (URL http://TRENTO_SERVER_HOSTNAME).

Repeat this procedure on all Trento Agent hosts.
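Before you start the agent again after restoring agent.yaml, you can check that the three required entries are present and not commented out. This is a minimal sketch. missing_agent_keys is a hypothetical helper, and it assumes that each entry is a top-level YAML key starting at the beginning of a line.

```shell
#!/usr/bin/env bash
# missing_agent_keys FILE: print required keys that are absent or still
# commented out; succeed only when all of them are present.
missing_agent_keys() {
  local file=$1 key
  local -a missing=()
  for key in facts-service-url server-url api-key; do
    # An active entry starts the line with "key:" (no leading "#")
    grep -q "^${key}:" "$file" || missing+=("$key")
  done
  if (( ${#missing[@]} > 0 )); then
    printf '%s\n' "${missing[@]}"
    return 1
  fi
}

# Example: missing_agent_keys /etc/trento/agent.yaml && sudo systemctl start trento-agent
```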

6 Uninstallation #

6.1 Uninstalling Trento Server #

The procedure to uninstall the Trento Server depends on the deployment type: Kubernetes or systemd. The section covers Kubernetes deployments.

If Trento Server was deployed manually, you need to uninstall it manually. If Trento Server was deployed using the Helm chart, you can also use Helm to uninstall it as follows:

helm uninstall trento-server

6.2 Uninstalling a Trento Agent #

To uninstall a Trento Agent, perform the following steps:

Log in to the Trento Agent host.

Stop the Trento Agent:

> sudo systemctl stop trento-agent

Remove the package:

> sudo zypper remove trento-agent

7 Prometheus integration #

Prometheus Server is a Trento Server component responsible for retrieving the memory and CPU utilization metrics collected by the node-exporter in the agent hosts and serving them to the web component. The web component renders the metrics as dynamic graphical charts in the details view of the registered hosts.

7.1 Requirements #

The node-exporter must be installed and running in the agent hosts and Prometheus Server must be able to reach the agent hosts at the IP address and port that the node-exporter is bound to.

The IP address and port that Prometheus Server uses to reach the node-exporter can be changed by setting parameter node-exporter-target with value <ip_address>:<port> in the agent configuration file.

If the parameter is not set, Prometheus Server uses the lowest IPv4 address discovered in the host with default port 9100.
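The following script illustrates the fallback rule so you can decide whether to set node-exporter-target explicitly. It is not Trento's implementation. It only shows which address the rule would select from a list of candidate IPv4 addresses that you supply. The helper names ip_to_int and default_exporter_target are hypothetical.

```shell
#!/usr/bin/env bash
# Illustration of the fallback: lowest IPv4 address plus port 9100.

# ip_to_int A.B.C.D: print the address as a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# default_exporter_target IP...: print "<lowest IP>:9100".
default_exporter_target() {
  local ip n best='' best_n=''
  for ip in "$@"; do
    n=$(ip_to_int "$ip")
    if [[ -z $best_n ]] || (( n < best_n )); then
      best=$ip
      best_n=$n
    fi
  done
  [[ -n $best ]] && printf '%s:9100\n' "$best"
}

# Example: default_exporter_target 192.168.1.10 10.0.0.20 10.0.0.5
```

If the selected address is not the one Prometheus Server can reach, set node-exporter-target explicitly in the agent configuration file.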

7.2 Kubernetes deployment #

When using the Helm chart to deploy Trento Server on a Kubernetes cluster, an image of Prometheus Server is deployed automatically. No further actions are required by the user, other than ensuring that it can reach the node-exporter in the agent hosts.

In a Kubernetes cluster with multiple nodes, the user can select on which node to deploy the Prometheus Server by adding the following flag to the Helm installation command:

--set prometheus.server.nodeSelector.LABEL=<value>

Here, LABEL is the key and <value> is the value of the label assigned to the node on which you want Prometheus Server to be deployed.

7.3 systemd deployment #

In a systemd deployment of Trento Server, you can choose between using an existing installation of Prometheus Server, installing a dedicated Prometheus Server instance, or not using Prometheus Server at all.

7.3.1 Use an existing installation #

If you already have a Prometheus Server that you want to use with Trento Server, set CHARTS_ENABLED=true in the Trento Web configuration file, and set PROMETHEUS_URL to the address and port of that Prometheus Server. Restart the Trento Web service to apply the changes.

The lowest required Prometheus Server version is 2.28.0.
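To check whether an existing installation meets this minimum, you can compare it against the version reported by prometheus --version. This is a minimal sketch. It assumes that the first output line has the form "prometheus, version X.Y.Z (...)" and that GNU sort -V is available. prometheus_version_ok is a hypothetical helper.

```shell
#!/usr/bin/env bash
# prometheus_version_ok LINE [MINIMUM]: succeed if the version in LINE
# is at least MINIMUM (default 2.28.0); return 2 if no version is found.
prometheus_version_ok() {
  local line=$1 min=${2:-2.28.0}
  local re='version[[:space:]]+([0-9]+(\.[0-9]+)*)'
  [[ $line =~ $re ]] || return 2
  local ver=${BASH_REMATCH[1]}
  # sort -V orders version strings; the minimum must sort first (or tie)
  [[ $(printf '%s\n' "$min" "$ver" | sort -V | head -n1) == "$min" ]]
}

# Example:
#   prometheus_version_ok "$(prometheus --version 2>&1 | head -n1)" || echo "Prometheus is too old"
```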

Use the section Install Prometheus on SUSE Linux Enterprise Server for SAP applications 16.0 as a reference to adjust the Prometheus Server configuration.

7.3.2 Install Prometheus Server from SUSE Package Hub on SUSE Linux Enterprise Server for SAP applications 15.x #

SUSE Package Hub packages are tested by SUSE but are not officially supported as part of the SUSE Linux Enterprise Server for SAP applications base product. Users should assess the suitability of these packages based on their own risk tolerance and support needs.

Enable the SUSE Package Hub repository (replace 15.x with the version of your operating system, for example 15.7):

SUSEConnect --product PackageHub/15.x/x86_64
zypper refresh

Now proceed with the same steps as in Install Prometheus on SUSE Linux Enterprise Server for SAP applications 16.0.

7.3.3 Install Prometheus on SUSE Linux Enterprise Server for SAP applications 16.0 #

Create the Prometheus user and group:

groupadd --system prometheus
useradd -s /sbin/nologin --system -g prometheus prometheus

Install Prometheus using Zypper:

zypper in golang-github-prometheus-prometheus

Configure Prometheus for Trento by replacing or updating the existing configuration at /etc/prometheus/prometheus.yml with:

global:
  scrape_interval: 30s
  evaluation_interval: 10s
scrape_configs:
  - job_name: "http_sd_hosts"
    honor_timestamps: true
    scrape_interval: 30s
    scrape_timeout: 30s
    scheme: http
    follow_redirects: true
    http_sd_configs:
      - follow_redirects: true
        refresh_interval: 1m
        url: http://localhost:4000/api/prometheus/targets

Note: the value of the url parameter above assumes that the Trento Web service is running on the same host as Prometheus Server.

Enable and start the Prometheus service:

systemctl enable --now prometheus

If firewalld is running, add an exception to firewalld to make Prometheus accessible:

firewall-cmd --zone=public --add-port=9090/tcp --permanent
firewall-cmd --reload

Set CHARTS_ENABLED=true and PROMETHEUS_URL=http://localhost:9090 in the Trento Web configuration file, and restart the Trento Web service:

systemctl restart trento-web

Note: the value of the PROMETHEUS_URL parameter above assumes that the Trento Web service is running on the same host as Prometheus Server.
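Setting CHARTS_ENABLED and PROMETHEUS_URL can be scripted so that repeated runs do not create duplicate entries. This is a sketch. It assumes that the Trento Web configuration file uses plain KEY=VALUE lines. The helper set_env_var and the variable TRENTO_WEB_CONFIG (which holds the path of that file) are hypothetical.

```shell
#!/usr/bin/env bash
# set_env_var FILE KEY VALUE: set KEY=VALUE in a plain KEY=VALUE file.
# An existing entry (even a commented-out "# KEY=...") is replaced in place;
# otherwise the entry is appended. VALUE must not contain backslashes,
# because awk -v interprets escape sequences.
set_env_var() {
  local file=$1 key=$2 value=$3 tmp
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    $0 ~ ("^#?[ \t]*" k "=") { if (!done) print k "=" v; done = 1; next }
    { print }
    END { if (!done) print k "=" v }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  local rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Usage sketch:
#   set_env_var "$TRENTO_WEB_CONFIG" CHARTS_ENABLED true
#   set_env_var "$TRENTO_WEB_CONFIG" PROMETHEUS_URL http://localhost:9090
#   systemctl restart trento-web
```

The function writes the result back with cat rather than mv. This keeps the original file's ownership and permissions intact.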

7.3.4 Not using Prometheus Server #

If you decide not to use Prometheus Server in your Trento installation, you must disable graphical charts in the UI by setting CHARTS_ENABLED=false in the Trento Web configuration file.

8 MCP Integration #

The Trento MCP Server is an optional component that enables AI-assisted infrastructure management for your SAP landscape. It exposes Trento functionality through the Model Context Protocol (MCP), an open standard that facilitates secure communication between data sources and AI agents. While the core Trento Server operates independently, the Trento MCP Server component allows you to integrate Trento into an agentic AI workflow. This enables the use of Large Language Models (LLMs) to perform common monitoring and troubleshooting tasks using natural language, providing a standardized way for AI tools to access real-time system state and best-practice validations.

8.1 Installing Trento MCP Server #

The Trento MCP Server can be deployed in different ways depending on your infrastructure and requirements.

Supported installation methods:

8.1.1 Prerequisites Trento MCP Server #

The Trento MCP Server is lightweight and stateless. No persistent storage is required; allocate space for logs as per your logging policy. Before installing the Trento MCP Server, both Trento Web and Trento Wanda components must be running and be accessible for the Trento MCP Server to function properly.

There must be network connectivity between the Trento MCP Server and Trento Server components.

The Trento Server URL must be reachable. This is especially important when Trento Server is deployed behind NGINX or any other reverse proxy.

8.1.2 Kubernetes deployment of Trento MCP Server #

The subsection uses the following placeholders:

- TRENTO_SERVER_HOSTNAME: the host name used by the end user to access the console.
- ADMIN_PASSWORD: the password of the admin user created during the installation process. The password must meet the following requirements:
  - minimum length of 8 characters
  - the password must not contain 3 identical numbers or letters in a row (for example, 111 or aaa)
  - the password must not contain 4 sequential numbers or letters (for example, 1234, abcd, ABCD)
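To catch a rejected password before running Helm, you can check it locally against the rules listed above. This is a sketch, not Trento's own validator. check_admin_password is a hypothetical helper. It assumes ASCII input and detects only ascending runs such as 1234 or abcd, because the rules above do not say whether descending runs are rejected.

```shell
#!/usr/bin/env bash
# check_admin_password PASSWORD: local pre-check of the admin password rules.
check_admin_password() {
  local pw=$1 i
  local -a ords=()

  if (( ${#pw} < 8 )); then
    echo "too short: at least 8 characters are required" >&2
    return 1
  fi

  # Character codes, used to compare neighbouring characters
  for (( i = 0; i < ${#pw}; i++ )); do
    ords+=( "$(printf '%d' "'${pw:i:1}")" )
  done

  # Rule: no 3 identical numbers or letters in a row
  for (( i = 0; i + 2 < ${#pw}; i++ )); do
    if [[ ${pw:i:1} == [[:alnum:]] ]] &&
       (( ords[i] == ords[i+1] && ords[i+1] == ords[i+2] )); then
      echo "3 identical characters in a row: ${pw:i:3}" >&2
      return 1
    fi
  done

  # Rule: no 4 sequential numbers or letters (ascending only)
  for (( i = 0; i + 3 < ${#pw}; i++ )); do
    if [[ ${pw:i:4} == [[:alnum:]][[:alnum:]][[:alnum:]][[:alnum:]] ]] &&
       (( ords[i+1] - ords[i] == 1 && ords[i+2] - ords[i+1] == 1 && ords[i+3] - ords[i+2] == 1 )); then
      echo "4 sequential characters: ${pw:i:4}" >&2
      return 1
    fi
  done
  return 0
}

# Example: check_admin_password "$ADMIN_PASSWORD" && helm upgrade --install ...
```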

8.1.2.1 Enable the Trento MCP Server #

When installing Trento Server following the instructions in Section 4.1.1, “Kubernetes deployment”, the Trento MCP Server is disabled by default. Enable it by passing --set trento-mcp-server.enabled=true:

helm upgrade --install trento-server oci://registry.suse.com/trento/trento-server \
   --set global.trentoWeb.origin=TRENTO_SERVER_HOSTNAME \
   --set trento-web.adminUser.password=ADMIN_PASSWORD \
   --set trento-mcp-server.enabled=true

The Trento MCP Server automatically connects to the Trento Web and Trento Wanda internal services within the Kubernetes cluster.

8.1.2.2 Verify the Trento MCP Server installation #

Check that the Trento MCP Server Pod is running:

kubectl get pods -l app.kubernetes.io/name=mcp-server

Example output:

NAME                                        READY   STATUS    RESTARTS   AGE
trento-server-mcp-server-xxxxxxxxxx-xxxxx   1/1     Running   0          2m

Check the logs:

kubectl logs -l app.kubernetes.io/name=mcp-server

Check the Trento MCP Server health endpoints:

# Expose the health check port from the Pod, as it is not exposed as a Kubernetes Service.
kubectl port-forward --namespace default \
   $(kubectl get pods --namespace default -l app.kubernetes.io/name=mcp-server -o jsonpath="{.items[0].metadata.name}") \
   8080:8080

While the previous command is running, perform the following check:

# Liveness endpoint:
curl http://localhost:8080/livez

# Readiness endpoint:
curl http://localhost:8080/readyz

Example output:

# Liveness:
{"info":{"name":"trento-mcp-server","version":"0.1.0"},"status":"up"}

# Readiness:
{"status":"up","details":{"mcp-server":{"status":"up","timestamp":"2025-10-09T12:11:09.528898849Z"},"wanda-api":{"status":"up","timestamp":"2025-10-09T12:11:09.544855047Z"},"web-api":{"status":"up","timestamp":"2025-10-09T12:11:09.544855047Z"}}}
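When the readiness endpoint does not report "up", it helps to see which component is failing. This is a minimal sketch based on text matching, not a JSON parser. readyz_not_up is a hypothetical helper, and it assumes the compact single-line layout shown in the example output.

```shell
#!/usr/bin/env bash
# readyz_not_up JSON: print each component whose status is not "up".
# Plain text matching on the compact layout shown above.
readyz_not_up() {
  local rest=$1
  local re='"([a-z-]+)":[{]"status":"([a-z]+)"'
  while [[ $rest =~ $re ]]; do
    if [[ ${BASH_REMATCH[2]} != up ]]; then
      printf '%s\n' "${BASH_REMATCH[1]}"
    fi
    # Continue searching after the current match
    rest=${rest#*"${BASH_REMATCH[0]}"}
  done
}

# Example, with the port forward active:
#   readyz_not_up "$(curl -s http://localhost:8080/readyz)"
```

The function prints nothing when every listed component is up. The top-level "status" field is skipped, because it is not nested under a component name.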

8.1.2.3 Trento MCP Server Helm configuration options #

The Trento MCP Server Helm chart supports various configuration options:

8.1.2.3.1 Customize Ingress Path #

By default, ingress is enabled. To customize the ingress configuration in a basic K3s installation, run the command below:

helm upgrade --install trento-server oci://registry.suse.com/trento/trento-server \
   --set global.trentoWeb.origin=TRENTO_SERVER_HOSTNAME \
   --set trento-web.adminUser.password=ADMIN_PASSWORD \
   --set trento-mcp-server.enabled=true \
   --set trento-mcp-server.ingress.hosts[0].host=TRENTO_SERVER_HOSTNAME \
   --set trento-mcp-server.ingress.hosts[0].paths[0].path=/mcp-server-trento \
   --set trento-mcp-server.ingress.hosts[0].paths[0].pathType=ImplementationSpecific

The Trento MCP Server endpoint will be: https://TRENTO_SERVER_HOSTNAME/mcp-server-trento/mcp

8.1.2.3.2 Adjust Log Verbosity #

The default log level is info. Adjust it for debugging:

helm upgrade --install trento-server oci://registry.suse.com/trento/trento-server \
   --set global.trentoWeb.origin=TRENTO_SERVER_HOSTNAME \
   --set trento-web.adminUser.password=ADMIN_PASSWORD \
   --set trento-mcp-server.enabled=true \
   --set trento-mcp-server.mcpServer.verbosity=debug

8.1.2.3.3 Adjust Resource Limits #

For production deployments with different resource requirements:

helm upgrade --install trento-server oci://registry.suse.com/trento/trento-server \
   --set global.trentoWeb.origin=TRENTO_SERVER_HOSTNAME \
   --set trento-web.adminUser.password=ADMIN_PASSWORD \
   --set trento-mcp-server.enabled=true \
   --set trento-mcp-server.resources.requests.cpu=100m \
   --set trento-mcp-server.resources.requests.memory=128Mi \
   --set trento-mcp-server.resources.limits.cpu=1000m \
   --set trento-mcp-server.resources.limits.memory=1Gi

8.1.2.3.4 Disabling Health Check Probes #

Health check probes are enabled by default. To disable them if needed, run the following command:

helm upgrade --install trento-server oci://registry.suse.com/trento/trento-server \
   --set global.trentoWeb.origin=TRENTO_SERVER_HOSTNAME \
   --set trento-web.adminUser.password=ADMIN_PASSWORD \
   --set trento-mcp-server.enabled=true \
   --set trento-mcp-server.livenessProbe.enabled=false \
   --set trento-mcp-server.readinessProbe.enabled=false

8.1.2.4 Complete configuration example #

Below is a complete example that configures external access via a custom ingress path:

helm upgrade --install trento-server oci://registry.suse.com/trento/trento-server \
   --set global.trentoWeb.origin=https://trento.example.com \
   --set trento-web.adminUser.password=SecurePassword123 \
   --set trento-mcp-server.enabled=true \
   --set trento-mcp-server.mcpServer.trentoURL=https://trento.example.com \
   --set trento-mcp-server.ingress.hosts[0].host=trento.example.com \
   --set trento-mcp-server.ingress.hosts[0].paths[0].path=/mcp-server-trento \
   --set trento-mcp-server.ingress.hosts[0].paths[0].pathType=ImplementationSpecific

8.1.3 systemd deployment #

A systemd-based installation of the Trento MCP Server using RPM packages can be performed manually on the latest supported versions of SUSE Linux Enterprise Server for SAP applications.

Supported versions:

SUSE Linux Enterprise Server for SAP applications 15: SP4–SP7

SUSE Linux Enterprise Server for SAP applications 16.0

8.1.3.1 Installing Trento MCP Server using RPM packages #

Install the Trento MCP Server package:

zypper install mcp-server-trento

8.1.3.2 Configure Trento MCP Server #

Create the Trento MCP Server configuration file /etc/trento/mcp-server-trento by copying the example:

cp /etc/trento/mcp-server-trento.example /etc/trento/mcp-server-trento

Edit the configuration file to point to your Trento Server:

vim /etc/trento/mcp-server-trento

Example configuration:

AUTODISCOVERY_PATHS=/api/all/openapi,/wanda/api/all/openapi
ENABLE_HEALTH_CHECK=false
HEADER_NAME=Authorization
HEALTH_API_PATH=/api/healthz
HEALTH_PORT=8080
# OAS_PATH=https://trento.example.com/api/all/openapi,https://trento.example.com/wanda/api/all/openapi
PORT=5000
TAG_FILTER=MCP
TRANSPORT=streamable
TRENTO_URL=https://trento.example.com
VERBOSITY=info
INSECURE_SKIP_TLS_VERIFY=false

Configure the Trento MCP Server using either TRENTO_URL or OAS_PATH.

If OAS_PATH is left empty, the Trento MCP Server automatically discovers the APIs from the Trento Server using TRENTO_URL.

If OAS_PATH is set, it takes precedence and TRENTO_URL is ignored.

Use TRENTO_URL when one or more of the following conditions apply:

Trento Server is deployed behind a reverse proxy (NGINX, etc.).

The Trento MCP Server runs on a different host or network than Trento Server.

You want to use external or public URLs.

You prefer automatic API autodiscovery.

Use OAS_PATH when one or more of the following conditions apply:

You want to connect directly to internal services without autodiscovery.

You need to bypass reverse proxy configurations.
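The precedence between OAS_PATH and TRENTO_URL can be illustrated with a short function that lists the OpenAPI specification URLs each setting would lead to. This is only an illustration of the documented rule. It does not show how the server actually fetches or merges the specifications. effective_spec_urls is a hypothetical helper.

```shell
#!/usr/bin/env bash
# effective_spec_urls OAS_PATH TRENTO_URL AUTODISCOVERY_PATHS
# OAS_PATH wins when set; otherwise URLs are derived from TRENTO_URL
# plus each comma-separated autodiscovery path.
effective_spec_urls() {
  local oas_path=$1 trento_url=$2 paths=$3 p
  local -a list
  if [[ -n $oas_path ]]; then
    IFS=, read -r -a list <<< "$oas_path"
    printf '%s\n' "${list[@]}"
  elif [[ -n $trento_url ]]; then
    IFS=, read -r -a list <<< "$paths"
    for p in "${list[@]}"; do
      printf '%s%s\n' "${trento_url%/}" "$p"
    done
  else
    echo "set TRENTO_URL or OAS_PATH" >&2
    return 1
  fi
}

# Example using the values from the example configuration:
#   effective_spec_urls "" https://trento.example.com /api/all/openapi,/wanda/api/all/openapi
```

With the example configuration, autodiscovery produces the same two URLs as the commented-out OAS_PATH line.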

8.1.3.2.1 Start the Trento MCP Server service #

Enable and start the Trento MCP Server service:

systemctl enable --now mcp-server-trento

8.1.3.3 Verify the Trento MCP Server service #

Verify the service is running:

systemctl status mcp-server-trento

Expected output:

● mcp-server-trento.service - Trento MCP Server service
     Loaded: loaded (/usr/lib/systemd/system/mcp-server-trento.service; enabled)
     Active: active (running) since ...

Check the service logs:

journalctl -u mcp-server-trento -f

If firewalld is running, add an exception to firewalld to make the Trento MCP Server accessible:

firewall-cmd --zone=public --add-port=5000/tcp --permanent
firewall-cmd --reload

If you enabled health checks and want to expose them, also allow the health check port:

firewall-cmd --zone=public --add-port=8080/tcp --permanent
firewall-cmd --reload

If you enabled health checks, verify the endpoints locally:

# Note: Replace localhost with the server's IP/hostname if running these commands from a remote machine,
# and ensure the health port is allowed by your firewall.

# Liveness endpoint:
curl http://localhost:8080/livez
# Example output:
# {"info":{"name":"trento-mcp-server","version":"0.1.0"},"status":"up"}

# Readiness endpoint:
curl http://localhost:8080/readyz
# Example output:
# {"status":"up","details":{"mcp-server":{"status":"up","timestamp":"2025-10-09T12:11:09.528898849Z"},"wanda-api":{"status":"up","timestamp":"2025-10-09T12:11:09.542078327Z"},"web-api":{"status":"up","timestamp":"2025-10-09T12:11:09.544855047Z"}}}

8.2 Configuring Trento MCP Server #

This section provides an overview of how to configure the Trento MCP Server depending on the deployment method.

8.2.1 Configuration in a Kubernetes deployment #

8.2.1.1 Configuration Sources #

The Trento MCP Server supports multiple configuration sources with the following priority order (highest to lowest):

Environment variables - Used for containerized deployments.

Built-in defaults - Fallback values.

8.2.1.2 Configuration Overview #

| Environment Variable | Default Value | Description |
|---|---|---|
| | | Custom paths for API autodiscovery. |
| TRENTO_MCP_ENABLE_HEALTH_CHECK | false | Enable the health check server. |
| | (empty) | Configuration file path. |
| | | Header name used to pass the Trento API key to the Trento MCP Server. |
| | | API path used for health checks on target servers. |
| TRENTO_MCP_HEALTH_PORT | | Port where the health check server runs. |
| | | Skip TLS certificate verification when fetching OAS specs from HTTPS. |
| | | Path(s) to OpenAPI specification file(s). Can be set multiple times. |
| TRENTO_MCP_PORT | 5000 | Port where the Trento MCP Server runs. |
| | | Only include operations that contain one of these tags. |
| | | Transport protocol to use. |
| TRENTO_MCP_TRENTO_URL | (empty) | Target Trento server URL. Required for autodiscovery if OAS path is not set. |
| TRENTO_MCP_VERBOSITY | | Log level. |

8.2.1.3 Kubernetes Deployment Example #

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mcp-server-trento
spec:
  # apps/v1 Deployments require a selector that matches the Pod template labels
  selector:
    matchLabels:
      app: mcp-server-trento  # illustrative label; use your own
  template:
    metadata:
      labels:
        app: mcp-server-trento
    spec:
      containers:
        - name: mcp-server-trento
          image: mcp-server-trento:latest
          env:
            - name: TRENTO_MCP_PORT
              value: "5000"
            - name: TRENTO_MCP_HEALTH_PORT
              value: "8080"
            - name: TRENTO_MCP_ENABLE_HEALTH_CHECK
              value: "true"
            - name: TRENTO_MCP_TRENTO_URL
              value: "https://trento.example.com"
            - name: TRENTO_MCP_VERBOSITY
              value: "info"
          ports:
            - containerPort: 5000
              name: mcp
            - containerPort: 8080
              name: health

8.2.2 Configuration in a systemd deployment #

8.2.2.1 Configuration Sources #

The Trento MCP Server supports multiple configuration sources with the following priority order (highest to lowest):

Command-line flags - Override config for the current process.

Configuration file - Persistent settings configuration.

8.2.2.2 Configuration Overview #

The mcp-server-trento binary accepts several command-line flags to configure its behavior. The following table lists all available configuration options, their corresponding flags, configuration variables, and default values.

| Flag | Config Variable | Default Value | Description |
|---|---|---|---|
| --autodiscovery-paths | AUTODISCOVERY_PATHS | | Custom paths for API autodiscovery. |
| | (empty) | (empty) | Configuration file path. |
| --enable-health-check | ENABLE_HEALTH_CHECK | false | Enable the health check server. |
| | HEADER_NAME | | Header name used to pass the Trento API key to the Trento MCP Server. |
| | HEALTH_API_PATH | | API path used for health checks on target servers. |
| --health-port | HEALTH_PORT | | Port where the health check server runs. |
| | INSECURE_SKIP_TLS_VERIFY | | Skip TLS certificate verification when fetching OAS specs from HTTPS. |
| --oas-path | OAS_PATH | | Path(s) to OpenAPI specification file(s). Can be set multiple times. |
| --port | PORT | 5000 | Port where the Trento MCP Server runs. |
| | TAG_FILTER | | Only include operations that contain one of these tags. |
| | TRANSPORT | | Transport protocol to use. |
| --trento-url | TRENTO_URL | (empty) | Target Trento server URL. Required for autodiscovery if OAS path is not set. |
| --verbosity | VERBOSITY | | Log level. |

8.2.2.3 Configure Trento MCP Server with Command-Line Flags #

Trento MCP Server allows you to override configuration settings using command-line flags for temporary changes. These overrides are not persistent and are lost when the process stops or the system is rebooted. To make configuration changes permanent for the systemd service, update /etc/trento/mcp-server-trento and restart the service.

Basic usage with custom port, verbosity, and target URL:

mcp-server-trento --port 9000 --verbosity debug --trento-url https://trento.example.com

Using multiple OpenAPI specifications:

mcp-server-trento --oas-path https://api1.example.com/openapi.json --oas-path https://api2.example.com/openapi.json

Autodiscovery with custom paths:

mcp-server-trento --trento-url https://trento.example.com --autodiscovery-paths /api/v1/openapi,/wanda/api/v1/openapi

Enable health checks on a custom port:

mcp-server-trento --enable-health-check --health-port 8080 --port 5000

8.2.2.4 Help and Validation #

You can see all available flags by running:

mcp-server-trento --help

The server validates the configuration on startup and, with debug verbosity enabled, logs any issues it finds.

8.2.3 Health Check Configuration #

The Trento MCP Server includes built-in health check endpoints for systemd and Kubernetes integration.

Health check functionality is disabled by default and must be explicitly enabled using the --enable-health-check flag or the TRENTO_MCP_ENABLE_HEALTH_CHECK environment variable.

8.2.3.1 Health Check Endpoints #

The health check server provides the following endpoints:

- /livez: liveness probe for Kubernetes Pod restart decisions.
- /readyz: readiness probe for traffic routing decisions.

The readiness endpoint performs comprehensive health checks, including:

- mcp-server: validates Trento MCP Server connectivity using an MCP client.
- api-server: verifies connectivity to the configured Trento API server.

8.2.3.2 Enable Health Checks with Helm on a Kubernetes deployment #

Enable health checks when deploying on Kubernetes with Helm:

helm upgrade \
   --install trento-server oci://registry.suse.com/trento/trento-server \
   --set global.trentoWeb.origin=TRENTO_SERVER_HOSTNAME \
   --set trento-web.adminUser.password=ADMIN_PASSWORD \
   --set trento-mcp-server.enabled=true \
   --set TRENTO_MCP_ENABLE_HEALTH_CHECK=true \
   --set TRENTO_MCP_HEALTH_PORT=8080

The health port is internal to the Kubernetes cluster. To reach it from the host running Kubernetes, forward the Pod port. Replace NAMESPACE with your target namespace (Helm defaults to default).

kubectl port-forward --namespace NAMESPACE \
   $(kubectl get pods --namespace NAMESPACE -l app.kubernetes.io/name=mcp-server -o jsonpath="{.items[0].metadata.name}") \
   8080:8080 &

With the port forward active, test the endpoints in Testing Health Endpoints.
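A port forward started in the background, or a freshly started Pod, may not answer the first request. A small retry loop avoids false alarms. This is a minimal sketch. retry is a hypothetical helper. The usage line assumes that the health endpoint returns an HTTP error status when it is not ready, so that curl -f fails. If it does not, check the response body instead.

```shell
#!/usr/bin/env bash
# retry ATTEMPTS DELAY COMMAND...: run COMMAND until it succeeds or
# ATTEMPTS runs have failed, sleeping DELAY seconds between runs.
retry() {
  local attempts=$1 delay=$2 i
  shift 2
  for (( i = 1; i <= attempts; i++ )); do
    "$@" && return 0
    if (( i < attempts )); then
      sleep "$delay"
    fi
  done
  return 1
}

# Usage sketch, with the port forward running in the background:
#   retry 10 3 curl -fsS http://localhost:8080/readyz
```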

8.2.3.3 Enable Health Checks with the command-line for systemd deployment #

mcp-server-trento --enable-health-check

mcp-server-trento --enable-health-check --health-port 8080

8.2.3.4 Testing Health Endpoints #

# Test liveness endpoint
curl http://localhost:8080/livez

# Test readiness endpoint
curl http://localhost:8080/readyz

# Expected readiness response format:
# {"status":"up","checks":{"mcp-server":{"status":"up"},"api-server":{"status":"up"},"api-documentation":{"status":"up"}}}

# Expected liveness response format:
# {"status":"up"}

8.2.4 Troubleshooting #

This section provides solutions for common issues when deploying and using the Trento MCP Server.

8.2.4.1 Connection Issues #

Trento MCP Server cannot connect to Trento API

Verify that the TRENTO_URL or OAS_PATH configuration points to accessible endpoints.

Check network connectivity between the Trento MCP Server and Trento components.

Ensure API authentication is properly configured with valid tokens

MCP clients cannot connect to Trento MCP Server

Verify the Trento MCP Server is running and listening on the correct port (default: 5000)

Check firewall rules allow access to the Trento MCP Server port

Ensure the Trento MCP Server endpoint URL is correctly configured in client applications

8.2.4.2 Authentication Issues #

API token authentication fails

Verify the Personal Access Token is valid and not expired

Ensure the token has the necessary permissions in Trento

Check that the HEADER_NAME configuration matches on the server and the client.

Token not accepted

Confirm the token was generated from the correct Trento instance

Verify the token format and ensure it includes the "Bearer " prefix if required
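When you test API access manually with curl, you can build the header so that the Bearer prefix is never missing or duplicated. This is a minimal sketch. api_auth_header is a hypothetical helper. It assumes the Bearer scheme is expected and defaults to the header name Authorization, as in the HEADER_NAME example above.

```shell
#!/usr/bin/env bash
# api_auth_header TOKEN [HEADER_NAME]: print "HEADER_NAME: Bearer TOKEN",
# stripping a "Bearer " prefix that is already present in TOKEN.
api_auth_header() {
  local token=$1 header=${2:-Authorization}
  token=${token#Bearer }
  printf '%s: Bearer %s\n' "$header" "$token"
}

# Usage sketch; TRENTO_PAT is a hypothetical variable holding a Personal Access Token:
#   curl -fsS -H "$(api_auth_header "$TRENTO_PAT")" https://trento.example.com/api/all/openapi
```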

8.2.4.3 Configuration Issues #

OpenAPI specification not found

Check that TRENTO_URL or OAS_PATH point to valid Trento API endpoints.

Verify that the Trento Web and Trento Wanda services are running and accessible.

Ensure that the autodiscovery paths are correct if you are using TRENTO_URL.

Tools not appearing in MCP clients

Check the TAG_FILTER configuration: only operations with matching tags are exposed.

Verify that the OpenAPI specifications are accessible and valid.

Ensure the Trento MCP Server can parse the API documentation
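To reason about why a tool is missing, you can check which operations in a downloaded OpenAPI specification carry the tags you filter on. The following Python sketch illustrates the filtering idea only. It is not the Trento MCP Server's implementation, and the paths and tag names in the test data are hypothetical.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def operations_for_tags(spec: dict, tag_filter: set[str]) -> list[str]:
    """Return 'METHOD /path' for every operation carrying at least one filtered tag."""
    selected = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            # Path items can also hold non-operation keys such as "parameters"
            if method not in HTTP_METHODS:
                continue
            if tag_filter & set(operation.get("tags", [])):
                selected.append(f"{method.upper()} {path}")
    return selected
```

An empty result for a specification loaded with `json.load` means that no operation carries any of the filtered tags, which matches the "No tools available" symptom.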

8.2.4.4 Performance Issues #

Slow response times

Check network latency between Trento MCP Server and Trento components

Review Trento API performance and database query times

Consider enabling debug logging to identify bottlenecks

High resource usage

Monitor Trento MCP Server memory and CPU usage

Check for memory leaks in long-running processes

Consider adjusting logging verbosity to reduce I/O overhead
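To put a number on "slow", you can time a few complete requests and take the median, which is less sensitive to a single outlier than the mean. The following minimal Python sketch assumes you run it on the host where the Trento MCP Server runs, against your Trento URL or the `/livez` endpoint described earlier. The function name and default values are illustrative.

```python
import statistics
import time
import urllib.request


def median_latency(url: str, attempts: int = 5, timeout: float = 5.0) -> float:
    """Return the median time in seconds for a complete GET request to url."""
    samples = []
    for _ in range(attempts):
        start = time.perf_counter()
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            resp.read()  # include the body transfer in the measurement
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)
```

For example, `median_latency("http://localhost:8080/livez")` gives a baseline for the server itself, which you can compare with the latency to the Trento API.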

8.2.4.5 Health Check Issues #

Health checks failing

Verify health check endpoints are accessible

Check that all required services (Trento API, Trento MCP Server) are responding

Review health check configuration and timeouts
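The readiness response shown in Testing Health Endpoints reports one status per component, so a failing check usually points to a specific dependency. The following minimal Python sketch lists the components that are not "up". It assumes that a not-ready server may answer with an HTTP error status while still returning the JSON body, and that non-healthy components use a status other than "up" (the "down" value in the test data is an assumption).

```python
import json
import urllib.error
import urllib.request


def fetch_readiness(base_url: str, timeout: float = 5.0) -> dict:
    """GET <base_url>/readyz and decode the JSON body."""
    try:
        with urllib.request.urlopen(f"{base_url}/readyz", timeout=timeout) as resp:
            return json.load(resp)
    except urllib.error.HTTPError as err:
        # Assumption: an error status may still carry the JSON readiness body
        return json.load(err)


def failing_checks(readiness: dict) -> list[str]:
    """Return the sorted names of component checks whose status is not "up"."""
    return sorted(
        name
        for name, check in readiness.get("checks", {}).items()
        if check.get("status") != "up"
    )
```

For example, `failing_checks(fetch_readiness("http://localhost:8080"))` returns `["api-server"]` when only the Trento API check fails.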

8.2.4.6 Logging and Debugging #

Enable debug logging

Set VERBOSITY=debug to get detailed logs

Check Trento MCP Server logs for error messages and connection attempts

Review Trento component logs for API-related issues

Common log messages

"Failed to fetch OpenAPI specification" - Check API endpoint accessibility

"Authentication failed" - Verify API token configuration

"No tools available" - Check tag filtering and API documentation

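When logs are long, a small filter can map the messages above to the first thing to check. The following Python sketch matches only the three messages listed in this section. The log line format in the test data is hypothetical, so the sketch matches messages as substrings instead of parsing fields.

```python
# Log messages documented in this section, mapped to the first thing to check
LOG_HINTS = {
    "Failed to fetch OpenAPI specification": "Check API endpoint accessibility",
    "Authentication failed": "Verify API token configuration",
    "No tools available": "Check tag filtering and API documentation",
}


def hints_for_log(lines):
    """Yield (line_number, hint) for each log line containing a known message."""
    for number, line in enumerate(lines, start=1):
        for message, hint in LOG_HINTS.items():
            if message in line:
                yield number, hint
```

Any iterable of strings works, for example an open file containing saved Trento MCP Server log output.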
8.2.4.7 Getting Help #

If you continue to experience issues:

Check the Trento MCP Server logs for detailed error messages

Verify configuration values:

For systemd deployments, use mcp-server-trento --help

For Kubernetes deployments, run Helm with --render-subchart-notes to view the rendered Trento MCP Server settings

Test API connectivity directly using curl or similar tools

Check the Trento server logs for API authentication and access issues

8.3 Using the Trento MCP Server #

The Trento MCP Server provides the interface for AI-assisted infrastructure operations, enabling agentic assistants to integrate with Trento. By utilizing the Model Context Protocol, these assistants can perform monitoring and troubleshooting tasks through natural language. See MCPHost on SLES or Using alternative MCP clients for details.

8.3.1 Integrating the Trento MCP Server with MCPHost #

This guide explains how to use MCPHost, a lightweight CLI client for the Model Context Protocol (MCP), on SUSE Linux Enterprise Server 16 to connect to the Trento MCP Server.

Supported only on SUSE Linux Enterprise Server for SAP applications 16.0

8.3.1.1 Prerequisites #

To configure MCPHost, ensure you have the following:

8.3.1.2 Prerequisites #

An LLM provider and credentials.

Public hosted options, such as Google Gemini, OpenAI, etc.

Private/on-premises option, such as SUSE AI.

A running Trento Server installation with the Trento MCP Server component enabled.

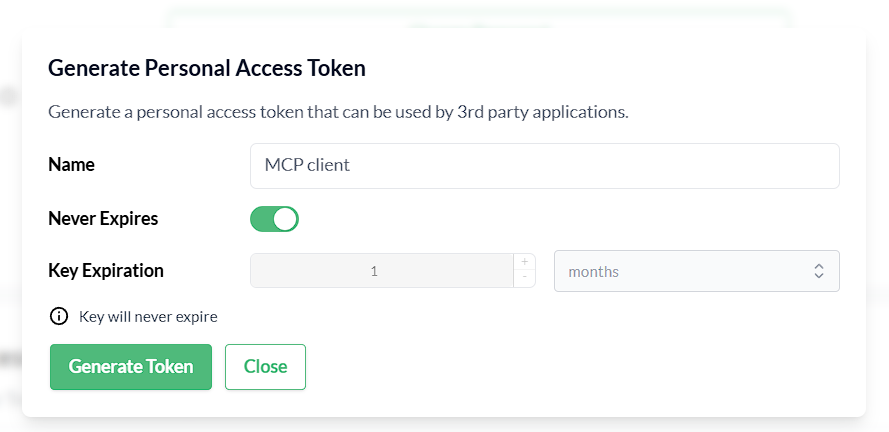

A Trento Personal Access Token generated in Trento Web Profile view.

Figure 8.1: Generate a Personal Access Token in Trento #

8.3.1.3 Install MCPHost #

To install MCPHost, open a terminal and run the following commands:

sudo zypper refresh

sudo zypper install mcphost

After installation, verify that MCPHost is available and working by checking its version:

mcphost --version

8.3.1.4 Configure MCPHost #

MCPHost reads its configuration from several locations; one common location is ~/.mcphost.yml. Create ~/.mcphost.yml with the following content:

mcpServers:
  trento-mcp-server:
    type: "remote"
    url: https://trento.example.com/mcp-server-trento/mcp
    headers:
      - "Authorization: Bearer ${env://TRENTO_PAT}"

Replace https://trento.example.com/mcp-server-trento/mcp with the actual URL where your Trento MCP Server is accessible:

For Kubernetes deployments with ingress, use the ingress URL (for example, https://trento.example.com/mcp-server-trento/mcp).

For local or development setups, use http://localhost:5000/mcp (adjust the port as needed).

The transport type is configured on the Trento MCP Server. If the server uses Server-Sent Events (SSE) transport instead of the default streamable transport, change the path from /mcp to /sse.

If you configured a custom header name (using HEADER_NAME or --header-name), update Authorization accordingly.

Security best practice: Keep secrets out of configuration files. Store your keys in environment variables instead of hardcoding them.

Export your keys in the shell before running MCPHost. For example:

export GOOGLE_API_KEY=<your-google-api-key>

export TRENTO_PAT=<your-trento-personal-access-token>

Configure remote LLM models directly in your MCPHost configuration. For example, to use Google Gemini as your model provider:

model: "google:gemini-2.5-flash"

provider-url: "https://generativelanguage.googleapis.com/v1beta/openai/"

provider-api-key: "${env://GOOGLE_API_KEY}"

mcpServers:

mcp-server-trento:

type: "remote"

url: https://trento.example.com/mcp-server-trento/mcp

headers:

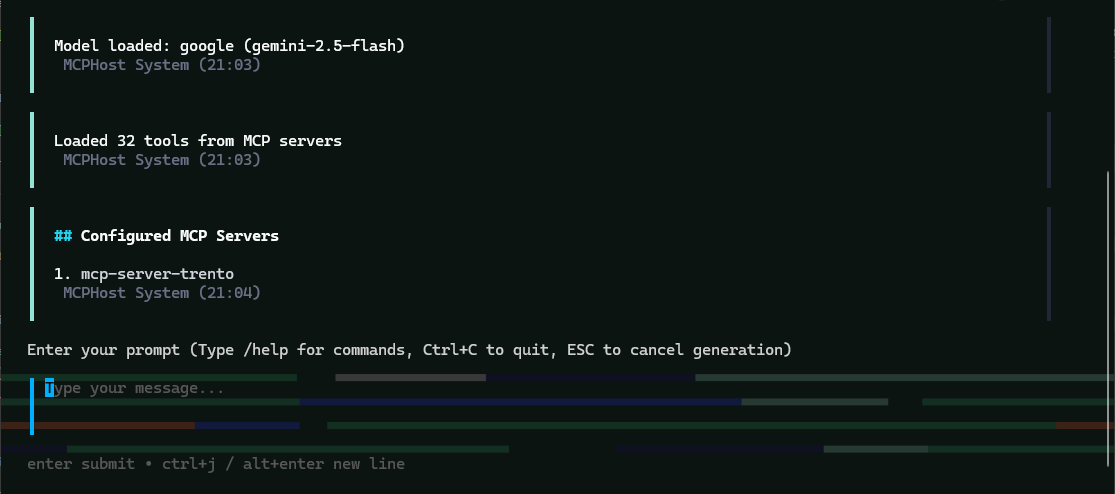

- "Authorization: Bearer ${env://TRENTO_PAT}"8.3.1.5 Run MCPHost and use Trento tools #

Start MCPHost:

mcphost

Tip: If no servers appear on startup, confirm that your configuration file exists at ~/.mcphost.yml and that your environment variables are exported in the same shell session.

Verify the connection to Trento and basic status:

/servers

Figure 8.2: MCPHost initial screen with the Trento MCP Server connected #

8.3.1.6 Use MCPHost to interact with Trento Server #

Once MCPHost is connected, ask questions about your SAP landscape in natural language, for example:

"List all managed SAP systems."

"Show my HANA clusters."

"Are my SAP systems compliant?"

"What is the health status of my SAP landscape?"

"Show me all hosts running SAP applications."

"Are there any critical alerts I need to address?"

"Get details about the latest check execution results."

"Which SAP systems are currently running?"

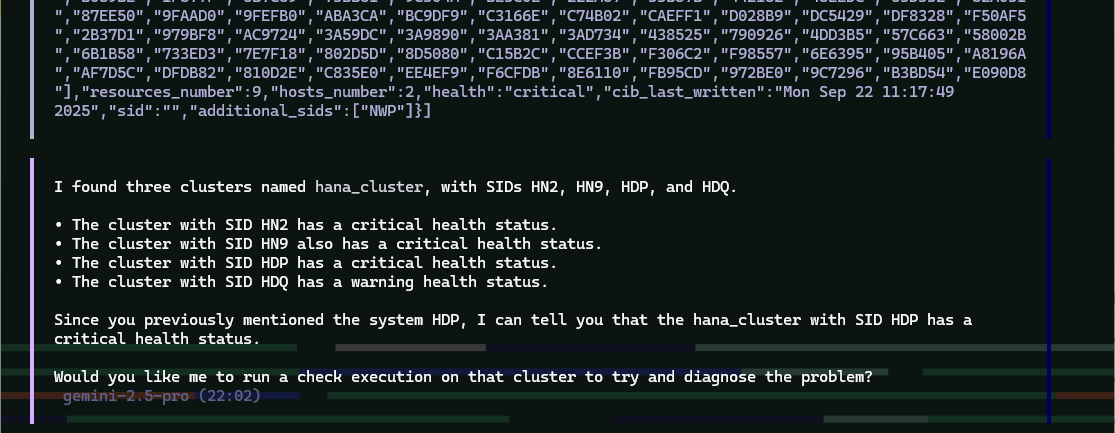

Example MCPHost session querying Trento about SAP systems

8.3.1.7 MCPHost Troubleshooting #

If you encounter issues connecting MCPHost to the Trento MCP Server:

Connection errors

Verify that the Trento MCP Server URL in ~/.mcphost.yml is correct and accessible from your system.

Check that the Trento MCP Server is running by reviewing the logs of your Trento installation.

Ensure network connectivity and that any required firewall rules are in place.

Test basic connectivity:

curl -I https://trento.example.com/mcp-server-trento/mcp

Authentication errors

Verify that your personal access token is valid by testing it directly with your Trento Server API.

Ensure TRENTO_PAT is exported in the same shell session before running mcphost.

Check that the header name matches your server configuration (default: Authorization).

Ensure the token has the necessary permissions in Trento.

LLM provider errors

Verify that GOOGLE_API_KEY (or your LLM provider’s API key) is exported correctly.

Check the provider-url and model configuration in your ~/.mcphost.yml.

Confirm that your API key has sufficient quota and permissions with your provider.

General issues

Check the MCPHost terminal output for detailed error messages during startup or operation.

Review the Trento MCP Server logs for connection attempts and errors.

Verify that your configuration file exists at ~/.mcphost.yml and has correct YAML syntax.
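Several of the checks above can be automated before starting MCPHost. The following Python sketch checks that the configuration file exists and that every `${env://NAME}` placeholder it references is set. It scans the file as plain text and does not validate YAML syntax, which would require a YAML parser. Because it reads the Python process environment, run it from the same shell session as `mcphost`. The function names are hypothetical.

```python
import os
import re
from pathlib import Path

# Matches placeholders such as ${env://TRENTO_PAT} used in the MCPHost examples
ENV_REF = re.compile(r"\$\{env://([A-Za-z_][A-Za-z0-9_]*)\}")


def missing_env_vars(config_text: str, environ=os.environ) -> list[str]:
    """Return referenced variable names that are unset or empty in environ."""
    names = sorted(set(ENV_REF.findall(config_text)))
    return [name for name in names if not environ.get(name)]


def preflight(path: Path = Path.home() / ".mcphost.yml") -> list[str]:
    """Return a list of problems found before starting MCPHost."""
    if not path.is_file():
        return [f"configuration file not found: {path}"]
    return [f"environment variable not exported: {name}"
            for name in missing_env_vars(path.read_text())]
```

An empty list from `preflight()` means that the file exists and all referenced variables are set. It does not mean that their values are valid.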

8.3.2 Integrating the Trento MCP Server with other clients #

The Trento MCP Server can be integrated with any client application that supports the Model Context Protocol. This makes it possible to interact with the Trento API and execute tools defined in the OpenAPI specification through your preferred AI assistant or development tool.

This guide uses Visual Studio Code with GitHub Copilot as an example. The same approach applies to other MCP-compatible clients, although their configuration formats can differ.

8.3.2.1 Prerequisites #

To configure your client, make sure that you have the following:

8.3.2.2 Prerequisites #

An LLM provider and credentials.

Public hosted options, such as Google Gemini, OpenAI, etc.

Private/on-premises option, such as SUSE AI.

A running Trento Server installation with the Trento MCP Server component enabled.

A Trento Personal Access Token generated in Trento Web Profile view.

Figure 8.3: Generate a Personal Access Token in Trento #

8.3.2.3 Configuring your client #

Once your Trento Server installation is ready and you have the Trento MCP Server URL and a personal access token, you can configure your MCP client. The examples below use the JSON format of VS Code. Other clients, such as Claude Desktop, use a similar JSON structure, but key names can differ (for example, mcpServers instead of servers).

8.3.2.3.1 Option 1: Configuration with prompted input #

This configuration asks for your personal access token when the client starts, keeping credentials secure and out of configuration files. The password: true setting ensures your personal access token input is masked when you type it.

This option is supported by most clients, including VS Code. Check your client’s documentation if the prompt feature is not available.

{
  "servers": {
    "trento": {
      "type": "http",
      "url": "https://trento.example.com/mcp-server-trento/mcp",
      "headers": {
        "Authorization": "${input:trento-personal-access-token}"
      }
    }
  },
  "inputs": [
    {
      "type": "promptString",
      "id": "trento-personal-access-token",
      "description": "Trento API key",
      "password": true
    }
  ]
}

8.3.2.3.2 Option 2: Direct header configuration #

For clients that don’t support prompted input, or for testing purposes, set your Trento personal access token directly in the configuration file.

When using this option, ensure your configuration file has appropriate permissions and is not committed to version control systems.

{
  "servers": {
    "trento": {
      "type": "http",
      "url": "https://trento.example.com/mcp-server-trento/mcp",
      "headers": {
        "Authorization": "trento-personal-access-token"
      }
    }
  }
}

Replace https://trento.example.com/mcp-server-trento/mcp with your actual Trento MCP Server endpoint URL from your installation, and trento-personal-access-token with your personal access token.
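If you generate the Option 2 file from a script, you can restrict its permissions at creation time instead of fixing them afterwards. The following minimal Python sketch writes the JSON structure shown above and makes the file readable and writable only by its owner (mode 0600). It assumes a POSIX system, because `os.fchmod` is not available on Windows. The function name and file path are up to you.

```python
import json
import os


def write_client_config(path: str, url: str, token: str) -> None:
    """Write an Option 2 style client configuration readable only by the owner."""
    config = {
        "servers": {
            "trento": {
                "type": "http",
                "url": url,
                "headers": {"Authorization": token},
            }
        }
    }
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    # The mode above applies only to new files and is reduced by the umask;
    # fchmod enforces 0600 before the token is written, also for existing files
    os.fchmod(fd, 0o600)
    with os.fdopen(fd, "w") as handle:
        json.dump(config, handle, indent=2)
```

Restricting permissions does not replace keeping the file out of version control.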

8.3.2.3.3 Client options #

For detailed guidance on taking advantage of MCP capabilities in different tools, refer to the following official documentation:

9 Core Features #

9.1 User management #

Trento provides a local permission-based user management feature with optional multi-factor authentication. This feature enables segregation of duties in the Trento interface and ensures that only authorized users with the right permissions can access it.

User management actions are performed in the Users view in the left-hand side panel of the Trento UI.

By default, a newly created user is granted display access rights except for the Users view. Where available, a user with default access can configure filters and pagination settings matching their preferences.

To perform protected actions, the user must have additional permissions added to their user profile. Below is the list of currently available permissions:

all:users: grants full access to user management actions under the Users view

all:checks_selection: grants check selection capabilities for any target in the registered environment for which checks are available

all:checks_execution: grants check execution capabilities for any target in the registered environment for which checks are available and have been previously selected

all:tags: allows creation and deletion of the available tags

cleanup:all: allows triggering housekeeping actions on hosts where the agent heartbeat is lost and on SAP or HANA instances that are no longer found

all:settings: grants permission to change any system setting under the Settings view

all:all: grants all the permissions above

Using the described permissions, it is possible to create the following types of users:

User managers: users with all:users permissions

SAP Basis administrator with Trento display-only access: users with default permissions

SAP Basis administrator with Trento configuration access: users with all:checks_selection, all:tags and all:settings permissions

SAP Basis administrator with Trento operation access: users with all:checks_execution and cleanup:all permissions
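The user types above are combinations of the listed permission strings, with all:all implying every other permission. The following Python sketch models these documented rules to help plan which permissions to assign. It is an illustration, not Trento's authorization code, and the dictionary keys are hypothetical labels.

```python
ALL_ALL = "all:all"


def has_permission(granted: set[str], required: str) -> bool:
    """Documented rule: all:all grants every other permission."""
    return ALL_ALL in granted or required in granted


# Permission sets for the user types described in this section
USER_TYPES = {
    "user manager": {"all:users"},
    "display-only administrator": set(),
    "configuration administrator": {"all:checks_selection", "all:tags", "all:settings"},
    "operation administrator": {"all:checks_execution", "cleanup:all"},
}
```

For example, `has_permission(USER_TYPES["configuration administrator"], "all:checks_execution")` is `False`, so a configuration administrator can select checks but cannot run them.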

The default admin user created during the installation process is granted all:all permissions and cannot be modified or deleted. Use it only to create the first user manager (a user with all:users permissions who creates all the other required users). Once a user with all:users permissions is created, the default admin user must be