This guide describes virtualization technology in general. It introduces libvirt—the unified interface to virtualization—and provides detailed information on specific hypervisors.

- Preface

- I Introduction

- II Managing virtual machines with libvirt

- 8 libvirt daemons

- 9 Preparing the VM Host Server

- 10 Guest installation

- 11 Basic VM Guest management

- 12 Connecting and authorizing

- 13 Advanced storage topics

- 14 Configuring virtual machines with Virtual Machine Manager

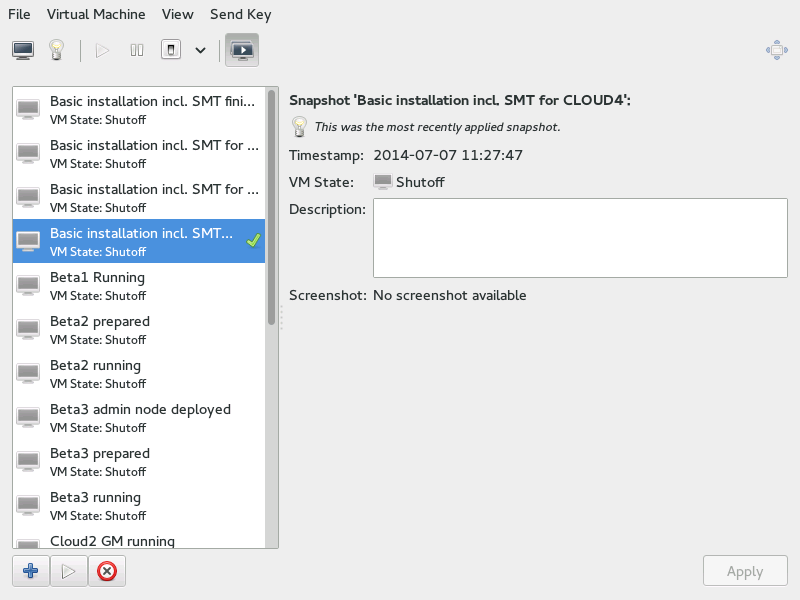

- 14.1 Machine setup

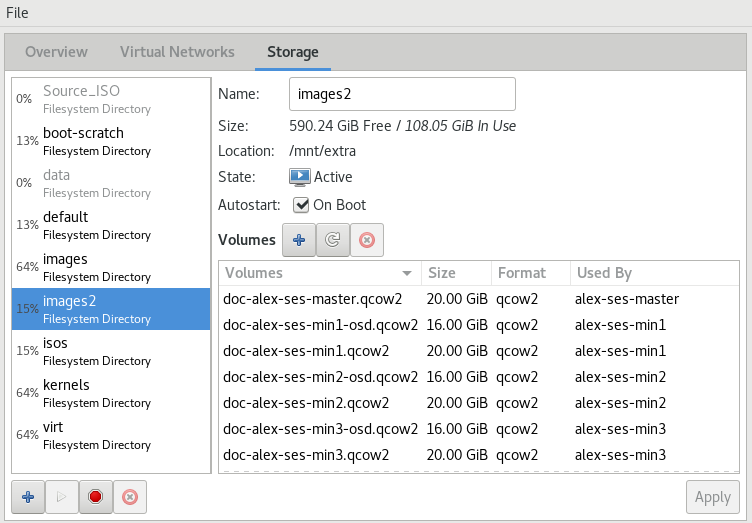

- 14.2 Storage

- 14.3 Controllers

- 14.4 Networking

- 14.5 Input devices

- 14.6 Video

- 14.7 USB redirectors

- 14.8 Miscellaneous

- 14.9 Adding a CD/DVD-ROM device with Virtual Machine Manager

- 14.10 Adding a floppy device with Virtual Machine Manager

- 14.11 Ejecting and changing floppy or CD/DVD-ROM media with Virtual Machine Manager

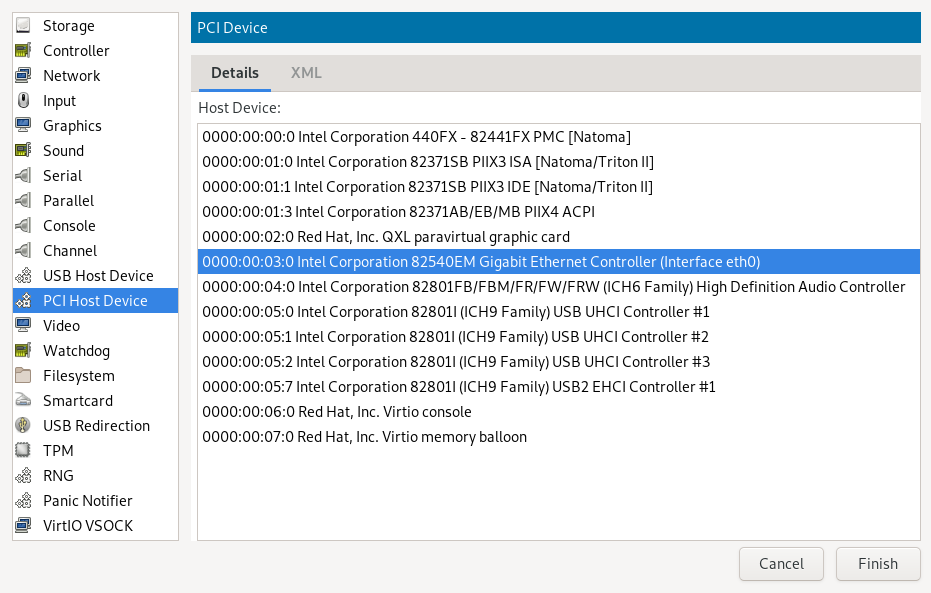

- 14.12 Assigning a host PCI device to a VM Guest

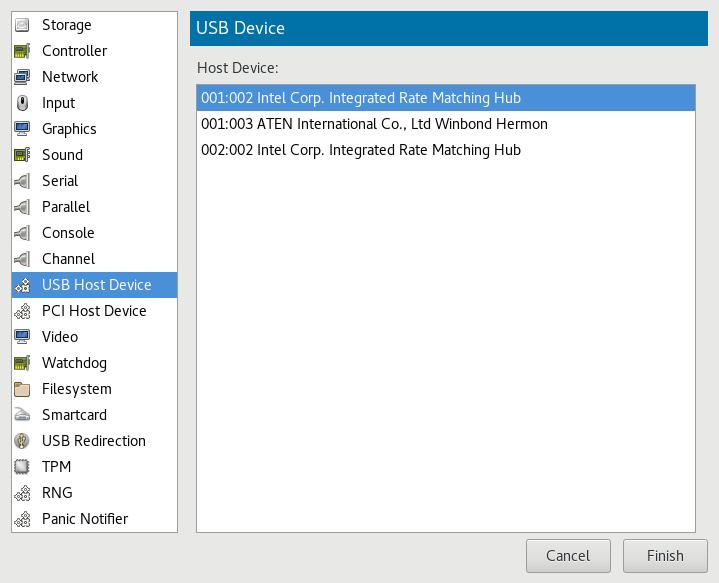

- 14.13 Assigning a host USB device to a VM Guest

- 15 Configuring virtual machines with virsh

- 15.1 Editing the VM configuration

- 15.2 Changing the machine type

- 15.3 Configuring hypervisor features

- 15.4 Configuring CPU

- 15.5 Changing boot options

- 15.6 Configuring memory allocation

- 15.7 Adding a PCI device

- 15.8 Adding a USB device

- 15.9 Adding SR-IOV devices

- 15.10 Listing attached devices

- 15.11 Configuring storage devices

- 15.12 Configuring controller devices

- 15.13 Configuring video devices

- 15.14 Configuring network devices

- 15.15 Using macvtap to share VM Host Server network interfaces

- 15.16 Disabling a memory balloon device

- 15.17 Configuring multiple monitors (dual head)

- 15.18 Crypto adapter pass-through to KVM guests on IBM Z

- 16 Enhancing virtual machine security with AMD SEV-SNP

- 17 Migrating VM Guests

- 18 Xen to KVM migration guide

- III Hypervisor-independent features

- IV Managing virtual machines with Xen

- 25 Setting up a virtual machine host

- 26 Virtual networking

- 27 Managing a virtualization environment

- 28 Block devices in Xen

- 29 Virtualization: configuration options and settings

- 30 Administrative tasks

- 31 XenStore: configuration database shared between domains

- 32 Xen as a high-availability virtualization host

- 33 Xen: converting a paravirtual (PV) guest into a fully virtual (FV/HVM) guest

- V Managing virtual machines with QEMU

- 34 QEMU overview

- 35 Setting up a KVM VM Host Server

- 36 Guest installation

- 37 Running virtual machines with qemu-system-ARCH

- 38 Virtual machine administration using QEMU monitor

- 38.1 Accessing monitor console

- 38.2 Getting information about the guest system

- 38.3 Changing VNC password

- 38.4 Managing devices

- 38.5 Controlling keyboard and mouse

- 38.6 Changing available memory

- 38.7 Dumping virtual machine memory

- 38.8 Managing virtual machine snapshots

- 38.9 Suspending and resuming virtual machine execution

- 38.10 Live migration

- 38.11 QMP - QEMU machine protocol

- VI Troubleshooting

- Glossary

- A Virtual machine drivers

- B Configuring GPU Pass-Through for NVIDIA cards

- C XM, XL toolstacks, and the libvirt framework

- D GNU licenses

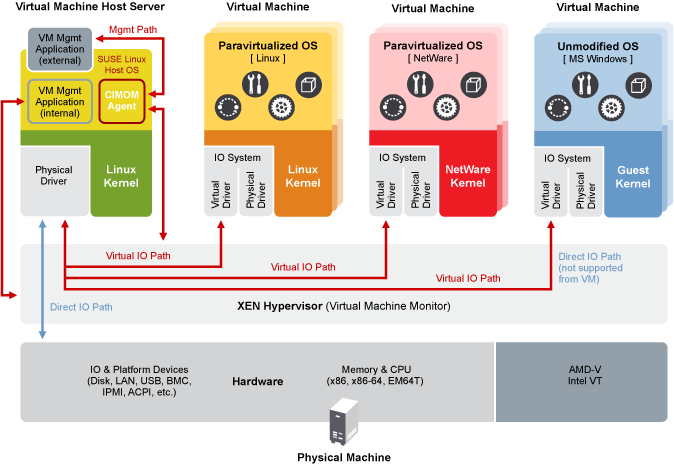

- 3.1 Xen virtualization architecture

- 4.1 KVM virtualization architecture

- 6.1 System Role screen

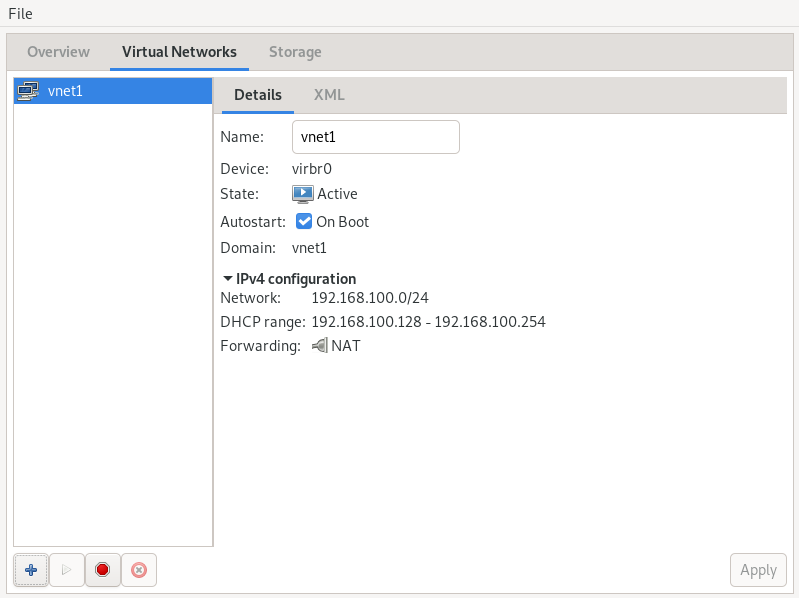

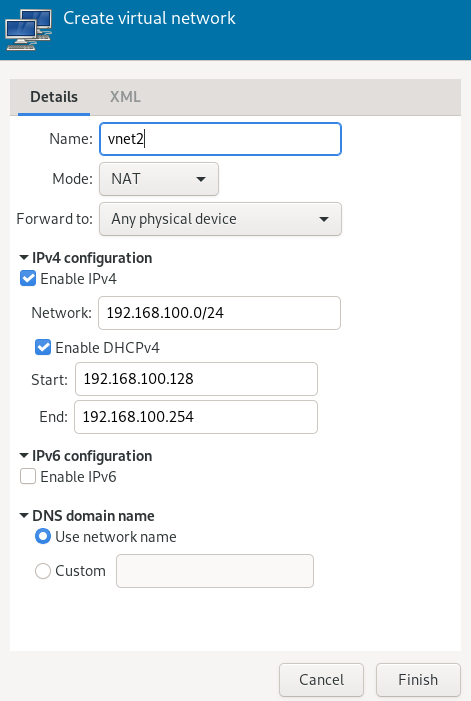

- 9.1 Connection details

- 9.2 Create virtual network

- 10.1 Specifying default options for new VMs

- 14.1 view of a VM Guest

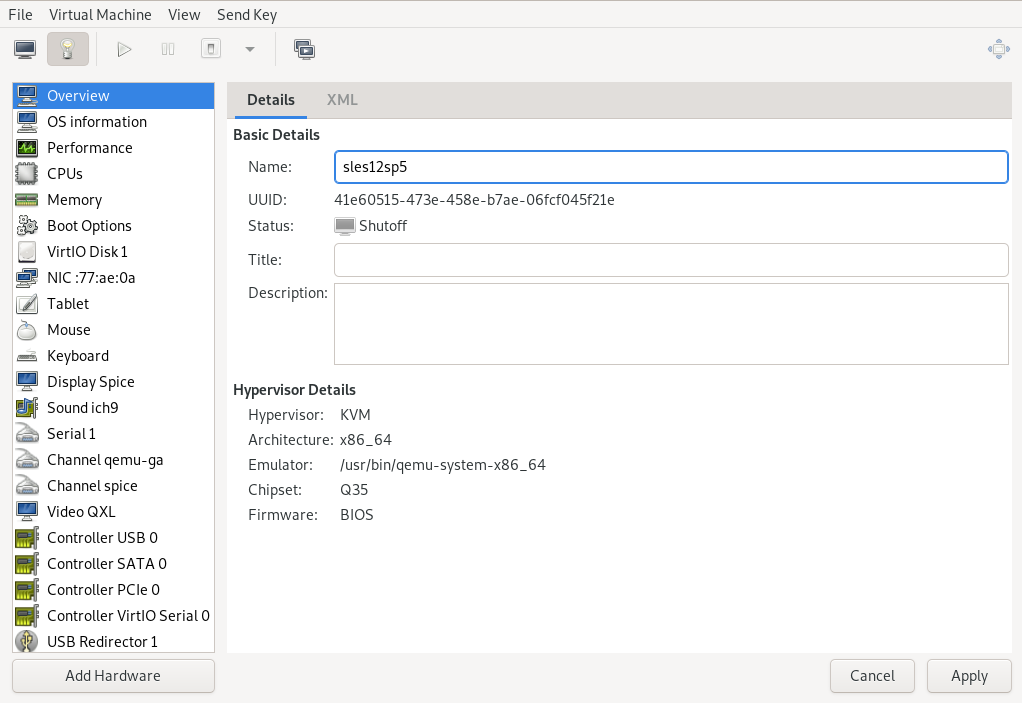

- 14.2 Overview details

- 14.3 VM Guest title and description

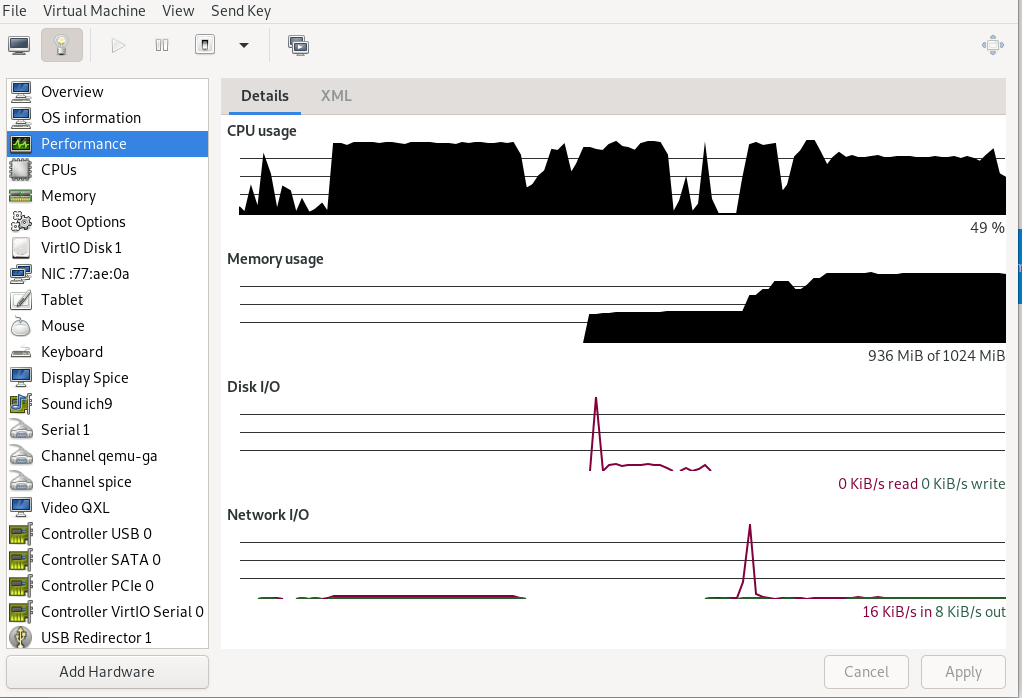

- 14.4 Performance

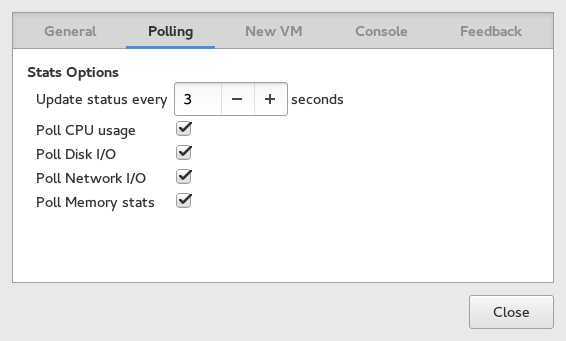

- 14.5 Statistics charts

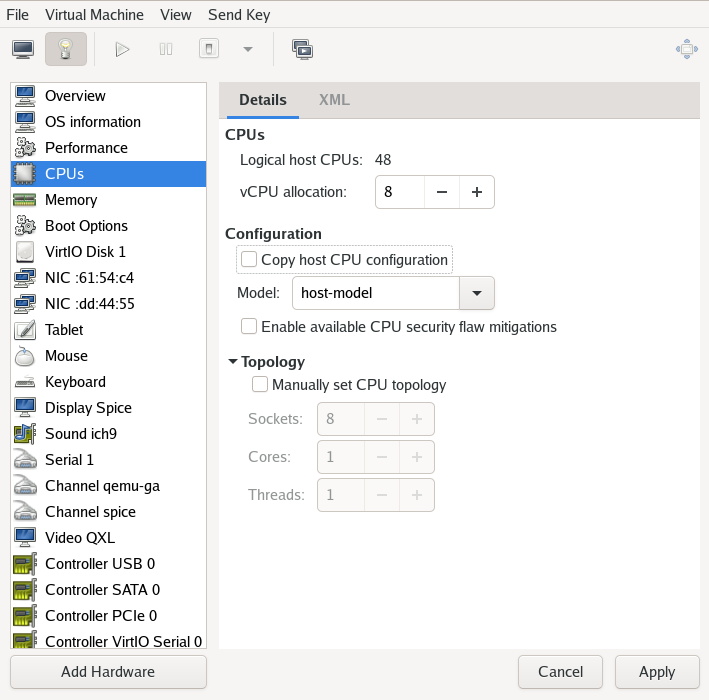

- 14.6 Processor view

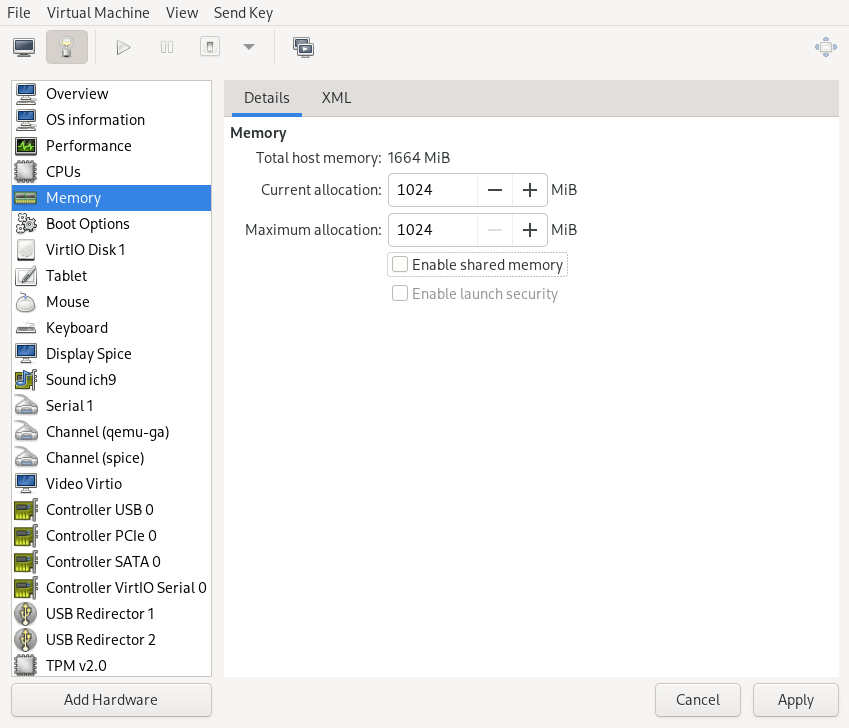

- 14.7 Memory view

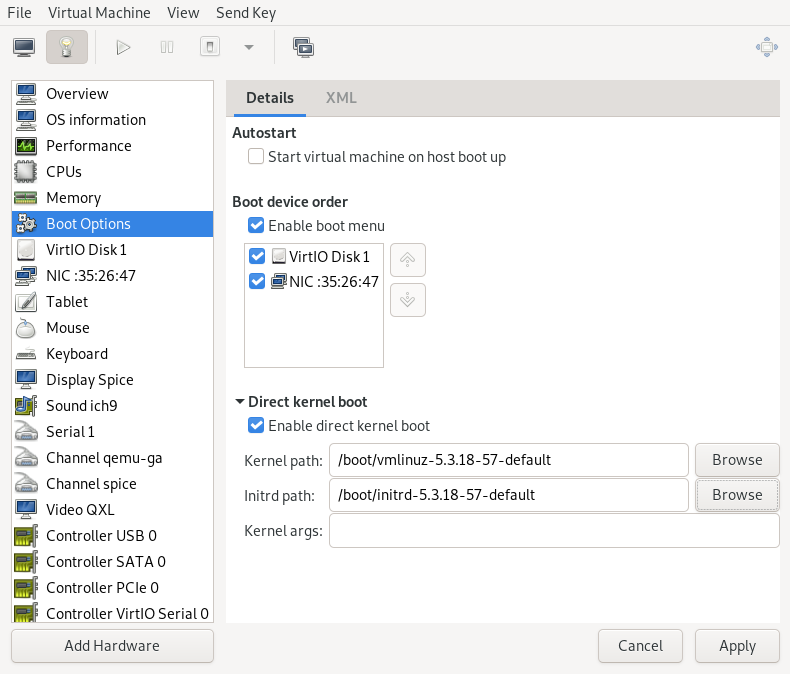

- 14.8 Boot options

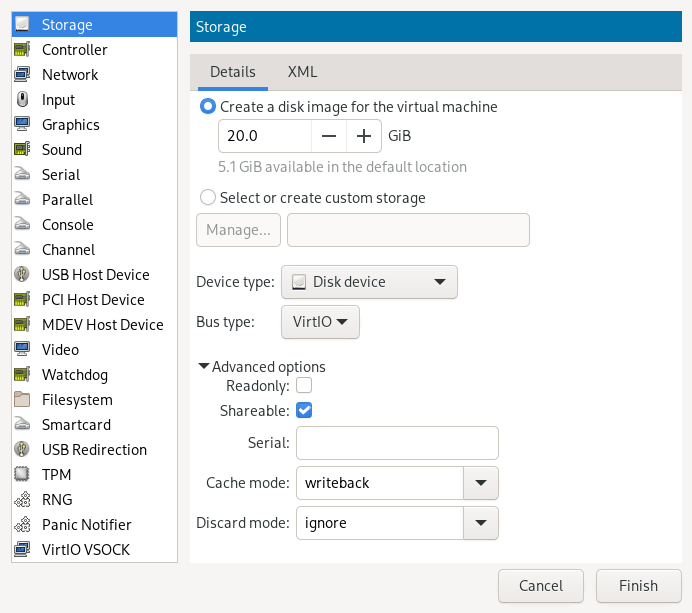

- 14.9 Add a new storage

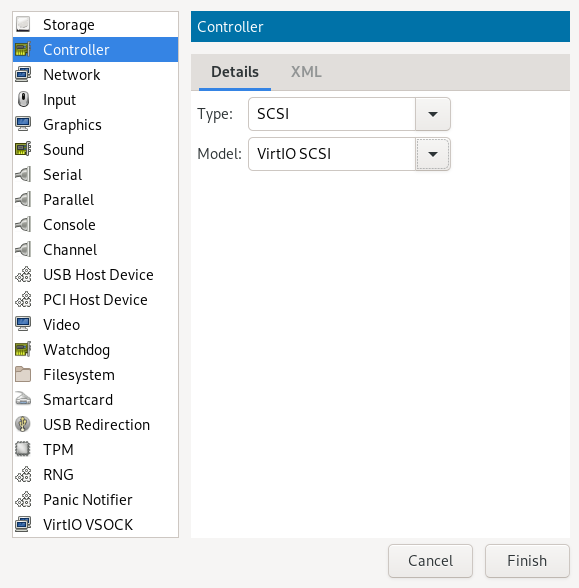

- 14.10 Add a new controller

- 14.11 Add a new network interface

- 14.12 Add a new input device

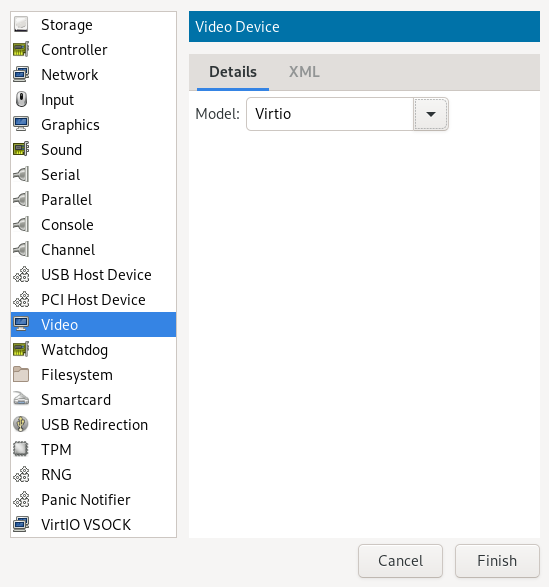

- 14.13 Add a new video device

- 14.14 Add a new USB redirector

- 14.15 Adding a PCI device

- 14.16 Adding a USB device

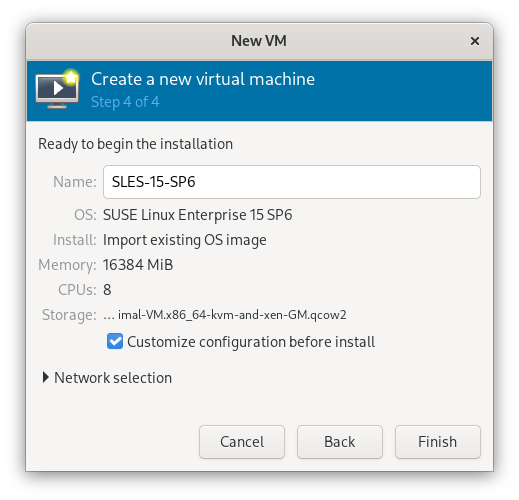

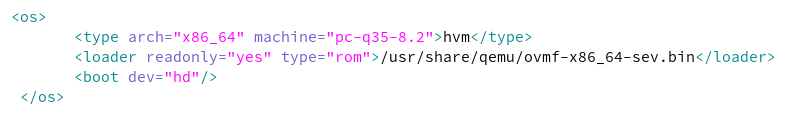

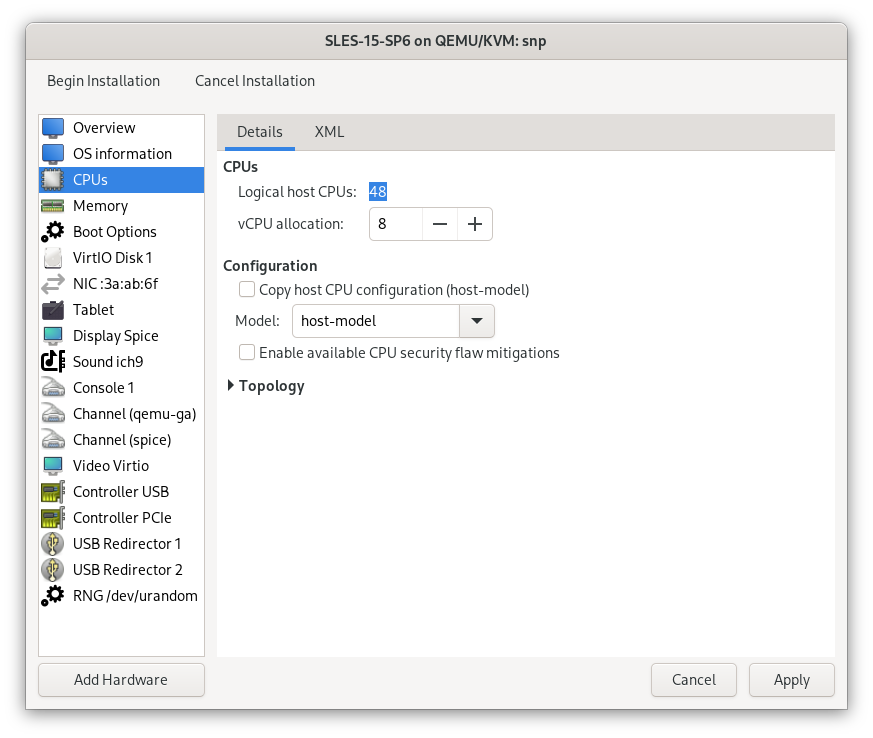

- 16.1 Create Virtual Machine

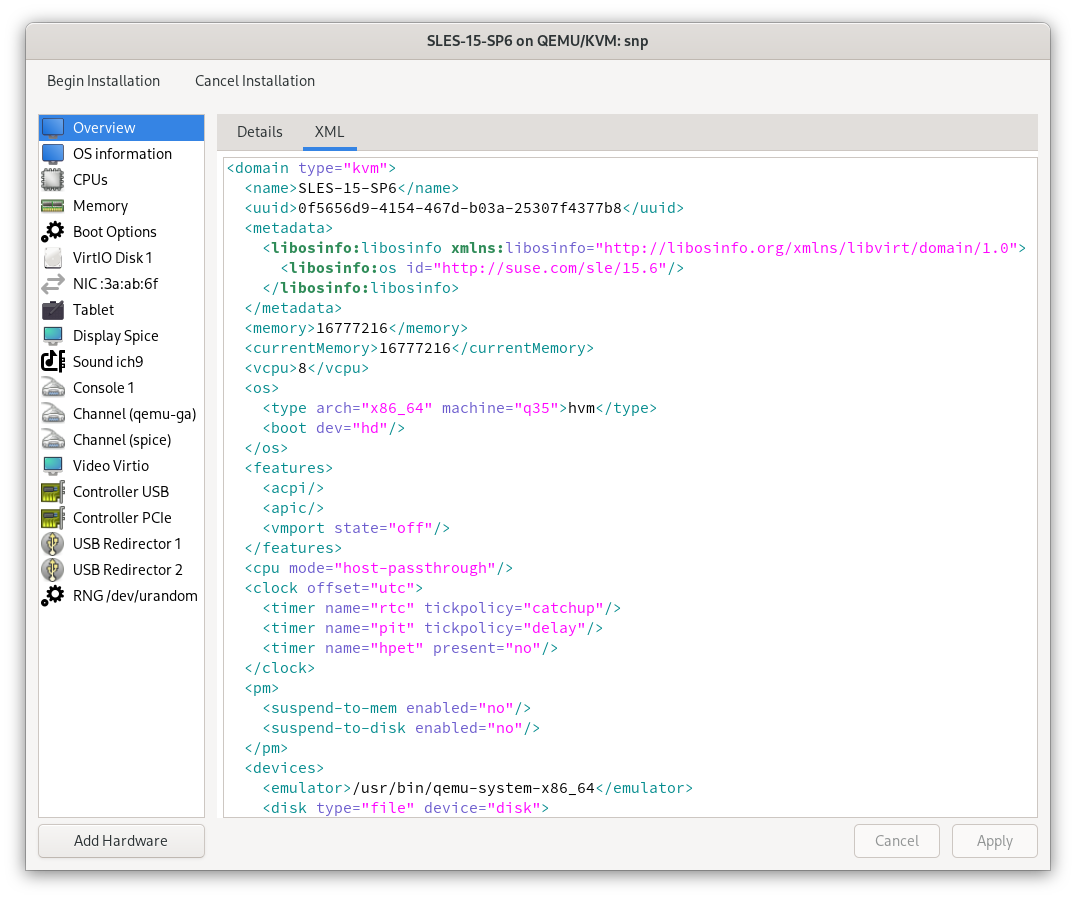

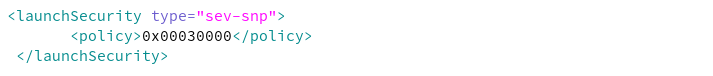

- 16.2 view of virtual machine configuration

- 16.3 Set firmware

- 16.4 launchSecurity

- 16.5 The view of virtual machine configuration

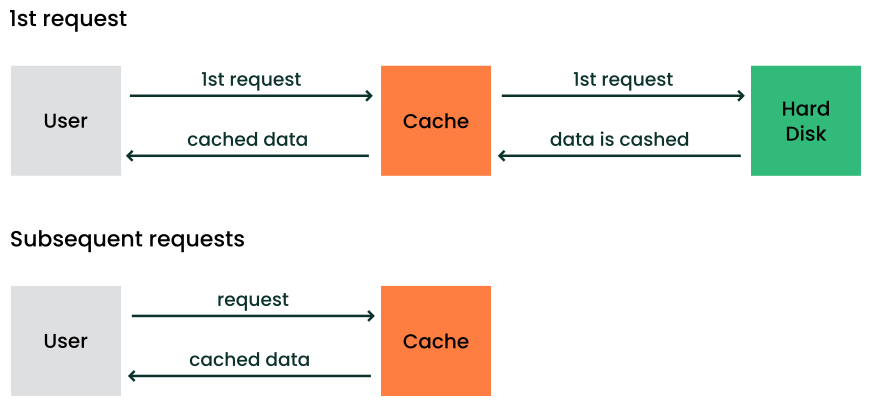

- 19.1 Caching mechanism

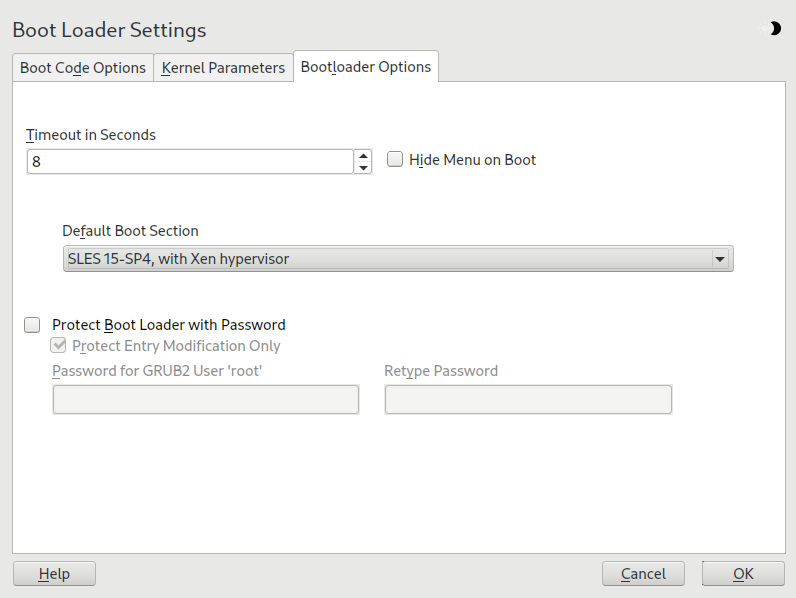

- 30.1 Boot loader settings

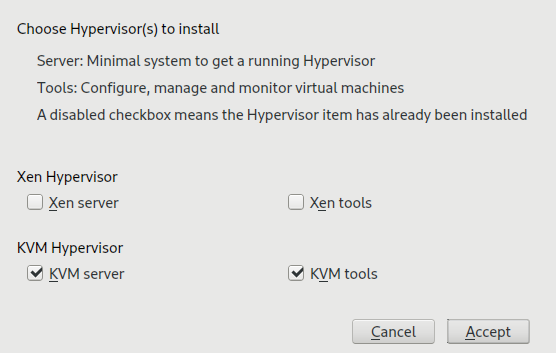

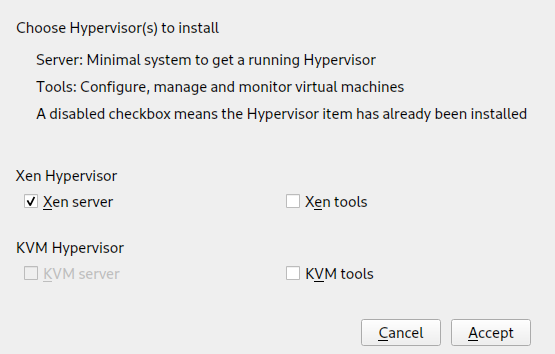

- 35.1 Installing the KVM hypervisor and tools

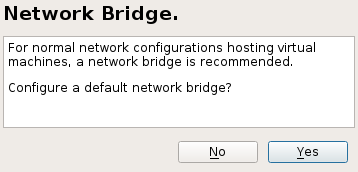

- 35.2 Network bridge

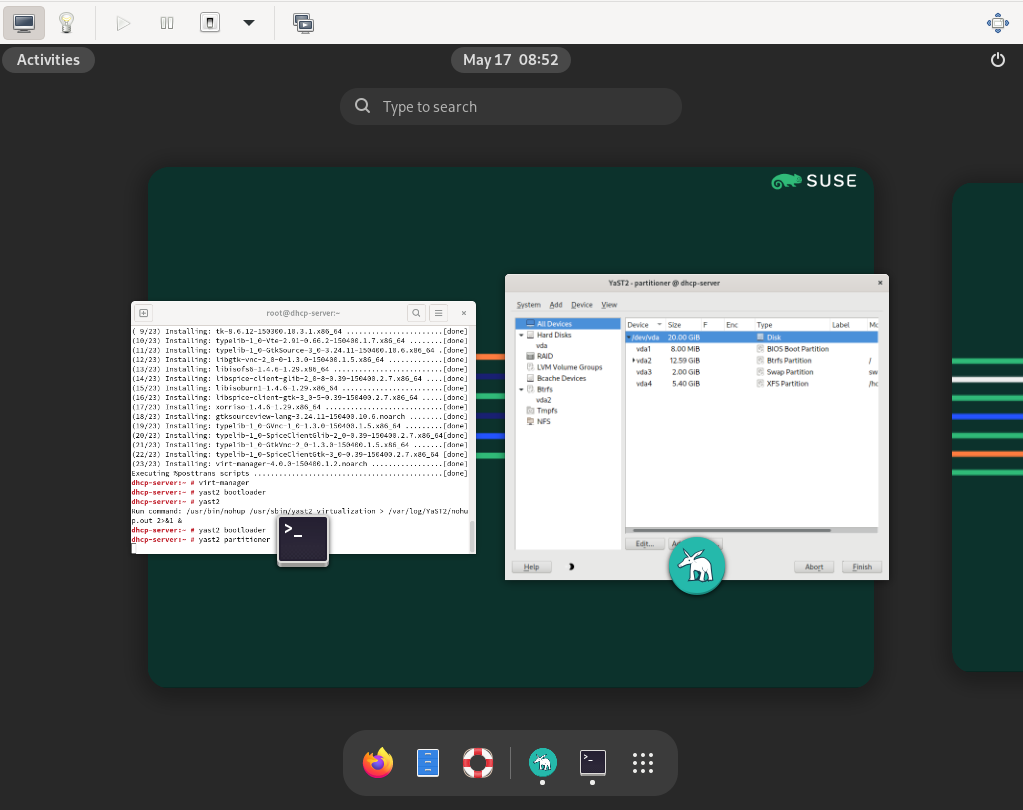

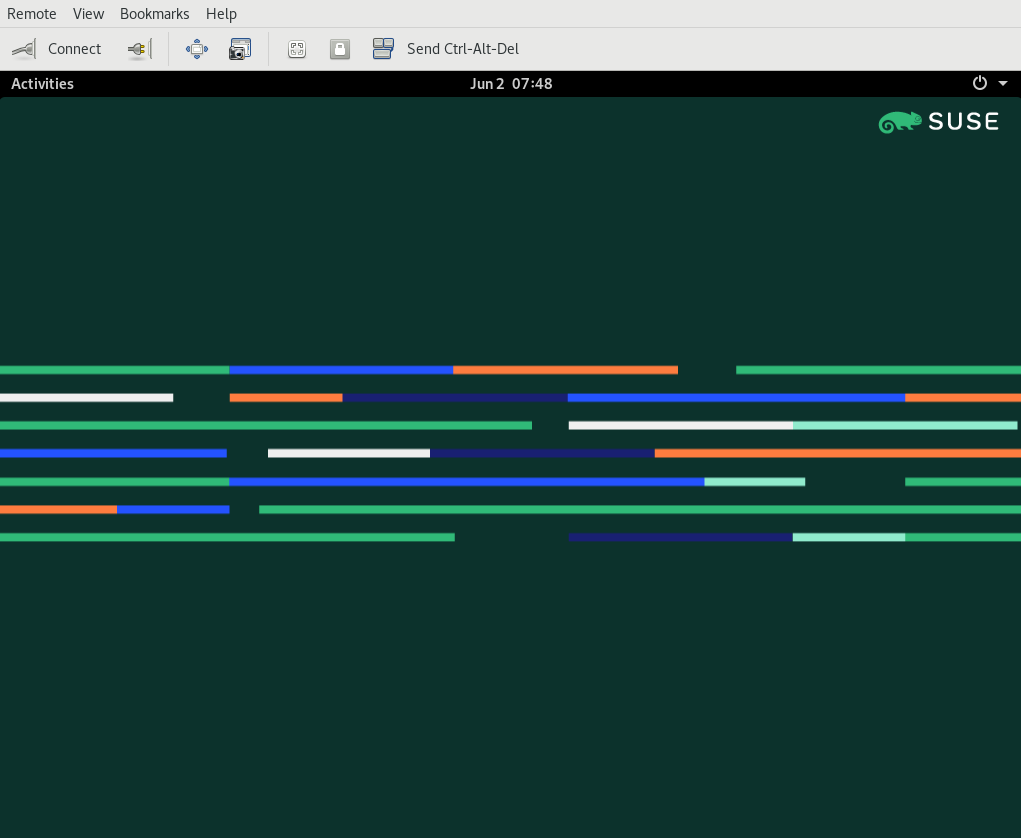

- 37.1 QEMU window with SLES as VM Guest

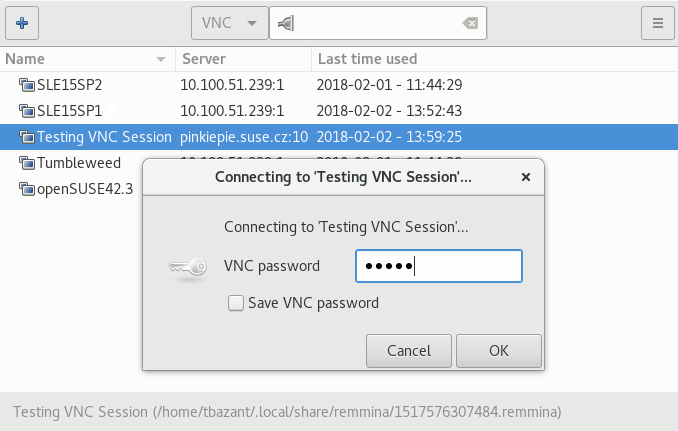

- 37.2 QEMU VNC session

- 37.3 Authentication dialog in Remmina

- 7.1 KVM VM limits

- 7.2 Xen VM limits

- 7.3 Xen host limits

- 7.4 The following SUSE host environments are supported

- 7.5 Supported offline migration guests

- 7.6 Supported live migration guests

- 7.7 Feature support—host (Dom0)

- 7.8 Guest feature support for Xen and KVM

- 32.1 Xen remote storage

- C1 Notation conventions

- C2 New global options

- C3 Common options

- C4 Domain management removed options

- C5 USB devices management removed options

- C6 CPU management removed options

- C7 Other options

- C8 xl create Changed options

- C9 xm create Removed options

- C10 xl create Added options

- C11 xl console Added options

- C12 xm info Removed options

- C13 xm dump-core Removed options

- C14 xm list Removed options

- C15 xl list Added options

- C16 xl mem-* Changed options

- C17 xm migrate Removed options

- C18 xl migrate Added options

- C19 xm reboot Removed options

- C20 xl reboot Added options

- C21 xl save Added options

- C22 xl restore Added options

- C23 xm shutdown Removed options

- C24 xl shutdown Added options

- C25 xl trigger Changed options

- C26 xm sched-credit Removed options

- C27 xl sched-credit Added options

- C28 xm sched-credit2 Removed options

- C29 xl sched-credit2 Added options

- C30 xm sched-sedf Removed options

- C31 xl sched-sedf Added options

- C32 xm cpupool-list Removed options

- C33 xm cpupool-create Removed options

- C34 xl pci-detach Added options

- C35 xm block-list Removed options

- C36 Other options

- C37 Network options

- C38 xl network-attach Removed options

- C39 New options

- 9.1 NAT-based network

- 9.2 Routed network

- 9.3 Isolated network

- 9.4 Using an existing bridge on VM Host Server

- 10.1 Loading kernel and initrd from HTTP server

- 10.2 Example of a virt-install command line

- 11.1 Typical output of kvm_stat

- 15.1 Example XML configuration file

- 27.1 Guest domain configuration file for SLED 12: /etc/xen/sled12.cfg

- 35.1 Exporting host's file system with VirtFS

- 37.1 Restricted user-mode networking

- 37.2 User-mode networking with custom IP range

- 37.3 User-mode networking with network-boot and TFTP

- 37.4 User-mode networking with host port forwarding

- 37.5 Password authentication

- 37.6 x509 certificate authentication

- 37.7 x509 certificate and password authentication

- 37.8 SASL authentication

- C1 Converting Xen domain configuration to libvirt

Copyright © 2006–2025 SUSE LLC and contributors. All rights reserved.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”.

For SUSE trademarks, see https://www.suse.com/company/legal/. All third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks.

All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof.

1 Available documentation #

- Online documentation

Our documentation is available online at https://documentation.suse.com. Browse or download the documentation in various formats.

Note: Latest updates. The latest updates are usually available in the English-language version of this documentation.

- SUSE Knowledgebase

If you run into an issue, check out the Technical Information Documents (TIDs) that are available online at https://www.suse.com/support/kb/. Search the SUSE Knowledgebase for known solutions driven by customer need.

- Release notes

For release notes, see https://www.suse.com/releasenotes/.

- In your system

For offline use, the release notes are also available under /usr/share/doc/release-notes on your system. The documentation for individual packages is available at /usr/share/doc/packages.

Many commands are also described in their manual pages. To view them, run man, followed by a specific command name. If the man command is not installed on your system, install it with sudo zypper install man.

2 Improving the documentation #

Your feedback and contributions to this documentation are welcome. The following channels for giving feedback are available:

- Service requests and support

For services and support options available for your product, see https://www.suse.com/support/.

To open a service request, you need a SUSE subscription registered at SUSE Customer Center. Go to https://scc.suse.com/support/requests, log in, and click .

- Bug reports

Report issues with the documentation at https://bugzilla.suse.com/.

To simplify this process, click the icon next to a headline in the HTML version of this document. This preselects the right product and category in Bugzilla and adds a link to the current section. You can start typing your bug report right away.

A Bugzilla account is required.

- Contributions

To contribute to this documentation, click the icon next to a headline in the HTML version of this document. This will take you to the source code on GitHub, where you can open a pull request.

A GitHub account is required.

Note: Only available for English. The icons are only available for the English version of each document. For all other languages, use the icons instead.

For more information about the documentation environment used for this documentation, see the repository's README.

You can also report errors and send feedback concerning the documentation to <doc-team@suse.com>. Include the document title, the product version, and the publication date of the document. Additionally, include the relevant section number and title (or provide the URL) and provide a concise description of the problem.

3 Documentation conventions #

The following notices and typographic conventions are used in this document:

/etc/passwd: Directory names and file names

PLACEHOLDER: Replace PLACEHOLDER with the actual value

PATH: An environment variable

ls, --help: Commands, options, and parameters

user: The name of a user or group

package_name: The name of a software package

Alt, Alt–F1: A key to press or a key combination. Keys are shown in uppercase as on a keyboard.

, › : menu items, buttons

AMD/Intel This paragraph is only relevant for the AMD64/Intel 64 architectures. The arrows mark the beginning and the end of the text block.

IBM Z, POWER This paragraph is only relevant for the architectures IBM Z and POWER. The arrows mark the beginning and the end of the text block.

Chapter 1, “Example chapter”: A cross-reference to another chapter in this guide.

Commands that must be run with root privileges. You can also prefix these commands with the sudo command to run them as a non-privileged user:

# command
> sudo command

Commands that can be run by non-privileged users:

> command

Commands can be split into two or multiple lines by a backslash character (\) at the end of a line. The backslash informs the shell that the command invocation will continue after the end of the line:

> echo a b \
c d

A code block that shows both the command (preceded by a prompt) and the respective output returned by the shell:

> command
output

Notices

Warning: Warning notice. Vital information you must be aware of before proceeding. Warns you about security issues, potential loss of data, damage to hardware, or physical hazards.

Important: Important notice. Important information you should be aware of before proceeding.

Note: Note notice. Additional information, for example about differences in software versions.

Tip: Tip notice. Helpful information, like a guideline or a piece of practical advice.

Compact Notices

Additional information, for example about differences in software versions.

Helpful information, like a guideline or a piece of practical advice.

4 Support #

Find the support statement for SUSE Linux Enterprise Server and general information about technology previews below. For details about the product lifecycle, see https://www.suse.com/lifecycle. For the virtualization support status, see Chapter 7, Virtualization limits and support.

If you are entitled to support, find details on how to collect information for a support ticket at https://documentation.suse.com/sles-15/html/SLES-all/cha-adm-support.html.

4.1 Support statement for SUSE Linux Enterprise Server #

To receive support, you need an appropriate subscription with SUSE. To view the specific support offers available to you, go to https://www.suse.com/support/ and select your product.

The support levels are defined as follows:

- L1

Problem determination, which means technical support designed to provide compatibility information, usage support, ongoing maintenance, information gathering and basic troubleshooting using available documentation.

- L2

Problem isolation, which means technical support designed to analyze data, reproduce customer problems, isolate a problem area and provide a resolution for problems not resolved by Level 1 or prepare for Level 3.

- L3

Problem resolution, which means technical support designed to resolve problems by engaging engineering to resolve product defects which have been identified by Level 2 Support.

For contracted customers and partners, SUSE Linux Enterprise Server is delivered with L3 support for all packages, except for the following:

Technology previews.

Sound, graphics, fonts, and artwork.

Packages that require an additional customer contract.

Some packages shipped as part of the module Workstation Extension are L2-supported only.

Packages with names ending in -devel (containing header files and similar developer resources) will only be supported together with their main packages.

SUSE will only support the usage of original packages. That is, packages that are unchanged and not recompiled.

4.2 Technology previews #

Technology previews are packages, stacks, or features delivered by SUSE to provide glimpses into upcoming innovations. Technology previews are included for your convenience to give you a chance to test new technologies within your environment. We would appreciate your feedback. If you test a technology preview, please contact your SUSE representative and let them know about your experience and use cases. Your input is helpful for future development.

Technology previews have the following limitations:

Technology previews are still in development. Therefore, they may be functionally incomplete, unstable, or otherwise not suitable for production use.

Technology previews are not supported.

Technology previews may only be available for specific hardware architectures.

Details and functionality of technology previews are subject to change. As a result, upgrading to subsequent releases of a technology preview may be impossible and require a fresh installation.

SUSE may discover that a preview does not meet customer or market needs, or does not comply with enterprise standards. Technology previews can be removed from a product at any time. SUSE does not commit to providing a supported version of such technologies in the future.

For an overview of technology previews shipped with your product, see the release notes at https://www.suse.com/releasenotes.

Part I Introduction #

- 1 Virtualization technology

- 2 Virtualization scenarios

- 3 Introduction to Xen virtualization

- 4 Introduction to KVM virtualization

- 5 Virtualization tools

- 6 Installation of virtualization components

- 7 Virtualization limits and support

1 Virtualization technology #

Virtualization is a technology that provides a way for a machine (Host) to run another operating system (guest virtual machines) on top of the host operating system.

1.1 Overview #

SUSE Linux Enterprise Server includes the latest open source virtualization technologies, Xen and KVM. With these hypervisors, SUSE Linux Enterprise Server can be used to provision, de-provision, install, monitor and manage multiple virtual machines (VM Guests) on a single physical system (for more information see Hypervisor). SUSE Linux Enterprise Server can create virtual machines running both modified, highly tuned, paravirtualized operating systems and fully virtualized unmodified operating systems.

The primary component of the operating system that enables virtualization is a hypervisor (or virtual machine manager), which is a layer of software that runs directly on server hardware. It controls platform resources, sharing them among multiple VM Guests and their operating systems by presenting virtualized hardware interfaces to each VM Guest.

SUSE Linux Enterprise is an enterprise-class Linux server operating system that offers two types of hypervisors: Xen and KVM.

SUSE Linux Enterprise Server with Xen or KVM acts as a virtualization host server (VHS) that supports VM Guests with their own guest operating systems. The SUSE VM Guest architecture consists of a hypervisor and management components that constitute the VHS, which runs many application-hosting VM Guests.

In Xen, the management components run in a privileged VM Guest often called Dom0. In KVM, where the Linux kernel acts as the hypervisor, the management components run directly on the VHS.

1.2 Virtualization benefits #

Virtualization brings a lot of advantages while providing the same service as a hardware server.

First, it reduces the cost of your infrastructure. Servers are mainly used to provide a service to a customer, and a virtualized operating system can provide the same service, with:

Less hardware: you can run several operating systems on a single host, so hardware maintenance is reduced.

Less power/cooling: less hardware means you do not need to invest more in electric power, backup power, and cooling when you need more services.

Save space: you save data center space because you need fewer hardware servers (fewer servers than services running).

Less management: using a VM Guest simplifies the administration of your infrastructure.

Agility and productivity: virtualization provides migration capabilities, including live migration, and snapshots. These features reduce downtime and provide an easy way to move your services from one place to another without service interruption.

1.3 Virtualization modes #

Guest operating systems are hosted on virtual machines in either full virtualization (FV) mode or paravirtual (PV) mode. Each virtualization mode has advantages and disadvantages.

Full virtualization mode lets virtual machines run unmodified operating systems, such as Windows* Server 2003. It can use either Binary Translation or hardware-assisted virtualization technology, such as AMD* Virtualization or Intel* Virtualization Technology. Using hardware assistance allows for better performance on processors that support it.
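On an x86 Linux host, the hardware assistance mentioned above is usually visible in the CPU flags listed in /proc/cpuinfo. The flag vmx indicates Intel VT-x and svm indicates AMD-V. The following Python sketch collects those flags, assuming such a host. Treat the result as a hint only, because the presence of a flag alone may not prove that the feature is enabled in the firmware.

```python
"""Sketch: detect hardware-assisted virtualization flags in /proc/cpuinfo.

Assumes an x86 Linux host, where Intel VT-x is reported as the "vmx" CPU flag
and AMD-V as the "svm" flag.
"""
from pathlib import Path


def hw_virt_extensions(cpuinfo_text: str) -> set[str]:
    """Return the hardware virtualization flags ("vmx", "svm") that are present."""
    found: set[str] = set()
    for line in cpuinfo_text.splitlines():
        # Each logical CPU has a line such as "flags : fpu vme ... vmx ..."
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            found.update({"vmx", "svm"} & set(value.split()))
    return found


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        flags = hw_virt_extensions(cpuinfo.read_text())
        print(sorted(flags) or "no hardware virtualization flags found")
```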

Certain guest operating systems hosted in full virtualization mode can be configured to use drivers from the SUSE Virtual Machine Drivers Pack (VMDP) instead of drivers originating from the operating system. Running virtual machine drivers improves performance dramatically on guest operating systems, such as Windows Server 2003. For more information, see Appendix A, Virtual machine drivers.

To be able to run under paravirtual mode, guest operating systems normally need to be modified for the virtualization environment. However, operating systems running in paravirtual mode have better performance than those running under full virtualization.

Operating systems currently modified to run in paravirtual mode are called paravirtualized operating systems and include SUSE Linux Enterprise Server.

1.4 I/O virtualization #

VM Guests not only share CPU and memory resources of the host system, but also the I/O subsystem. Because software I/O virtualization techniques deliver less performance than bare metal, hardware solutions that deliver almost “native” performance have been developed recently. SUSE Linux Enterprise Server supports the following I/O virtualization techniques:

- Full virtualization

Fully Virtualized (FV) drivers emulate widely supported real devices, which can be used with an existing driver in the VM Guest. The guest is also called Hardware Virtual Machine (HVM). Since the physical device on the VM Host Server may differ from the emulated one, the hypervisor needs to process all I/O operations before handing them over to the physical device. Therefore all I/O operations need to traverse two software layers, a process that not only significantly impacts I/O performance, but also consumes CPU time.

- Paravirtualization

Paravirtualization (PV) allows direct communication between the hypervisor and the VM Guest. With less overhead involved, performance is much better than with full virtualization. However, paravirtualization requires either the guest operating system to be modified to support the paravirtualization API or paravirtualized drivers. See Section 7.4.1, “Availability of paravirtualized drivers” for a list of guest operating systems supporting paravirtualization.

- PVHVM

This type of virtualization enhances HVM (see Full virtualization) with paravirtualized (PV) drivers, and PV interrupt and timer handling.

- VFIO

VFIO stands for Virtual Function I/O and is a new user-level driver framework for Linux. It replaces the traditional KVM PCI Pass-Through device assignment. The VFIO driver exposes direct device access to user space in a secure memory (IOMMU) protected environment. With VFIO, a VM Guest can directly access hardware devices on the VM Host Server (pass-through), avoiding performance issues caused by emulation in performance-critical paths. This method does not allow sharing devices: each device can only be assigned to a single VM Guest. VFIO needs to be supported by the VM Host Server CPU, chipset and the BIOS/EFI.

Compared to the legacy KVM PCI device assignment, VFIO has the following advantages:

Resource access is compatible with UEFI Secure Boot.

Device is isolated and its memory access protected.

Offers a user space device driver with more flexible device ownership model.

Is independent of KVM technology, and not bound to x86 architecture only.

In SUSE Linux Enterprise Server the USB and PCI pass-through methods of device assignment are considered deprecated and are superseded by the VFIO model.

- SR-IOV

The latest I/O virtualization technique, Single Root I/O Virtualization (SR-IOV), combines the benefits of the aforementioned techniques: performance and the ability to share a device with several VM Guests. SR-IOV requires special I/O devices that are capable of replicating resources so they appear as multiple separate devices. Each such “pseudo” device can be directly used by a single guest. However, for network cards, for example, the number of concurrent queues that can be used is limited, potentially reducing performance for the VM Guest compared to paravirtualized drivers. On the VM Host Server, SR-IOV must be supported by the I/O device, the CPU and chipset, the BIOS/EFI and the hypervisor. For setup instructions, see Section 14.12, “Assigning a host PCI device to a VM Guest”.
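On Linux, an SR-IOV capable PCI device commonly exposes two sysfs attributes. sriov_totalvfs holds the maximum number of virtual functions (VFs), and sriov_numvfs holds the number currently enabled. The Python sketch below shows how VFs could be requested through these attributes. It assumes such a device, root privileges on a real system, and a hypothetical PCI address. It is an illustration only, not the setup procedure that Section 14.12 describes.

```python
"""Sketch: request SR-IOV virtual functions (VFs) through PCI sysfs attributes.

Assumptions: the device directory contains the sriov_totalvfs and sriov_numvfs
attributes; the PCI address in the example is hypothetical; writing to the
real /sys tree requires root.
"""
from pathlib import Path


def set_num_vfs(device_dir: Path, count: int) -> int:
    """Request `count` VFs on the device and return the value read back."""
    total = int((device_dir / "sriov_totalvfs").read_text())
    if not 0 <= count <= total:
        raise ValueError(f"device supports 0..{total} VFs, requested {count}")
    numvfs = device_dir / "sriov_numvfs"
    current = int(numvfs.read_text())
    if current == count:
        return current
    # The kernel refuses to change one non-zero VF count into another,
    # so disable the existing VFs (write 0) before requesting the new count.
    if current != 0 and count != 0:
        numvfs.write_text("0")
    numvfs.write_text(str(count))
    return int(numvfs.read_text())


if __name__ == "__main__":
    dev = Path("/sys/bus/pci/devices/0000:01:00.0")  # hypothetical PCI address
    if (dev / "sriov_totalvfs").exists():
        print(set_num_vfs(dev, 4))
```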

To be able to use the VFIO and SR-IOV features, the VM Host Server needs to fulfill the following requirements:

IOMMU needs to be enabled in the BIOS/EFI.

For Intel CPUs, the kernel parameter intel_iommu=on needs to be provided on the kernel command line. For more information, see https://github.com/torvalds/linux/blob/master/Documentation/admin-guide/kernel-parameters.txt#L1951.

The VFIO infrastructure needs to be available. This can be achieved by loading the kernel module vfio_pci. For more information, see Book “Administration Guide”, Chapter 19 “The systemd daemon”, Section 19.6.4 “Loading kernel modules”.
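Two of the requirements listed above can be checked from a running system: the intel_iommu=on kernel parameter and a loaded vfio_pci module. The following Python sketch reads /proc/cmdline and /proc/modules for this purpose. The BIOS/EFI IOMMU setting cannot be read this way. A vfio_pci that is built into the kernel does not appear in /proc/modules. The sketch checks only the Intel parameter named in this section.

```python
"""Sketch: check the intel_iommu=on parameter and the vfio_pci module on Linux.

Assumptions: kernel parameters come from /proc/cmdline; loaded modules are the
first field of each /proc/modules line, with underscores in names (vfio_pci).
"""
from pathlib import Path


def has_intel_iommu_on(cmdline: str) -> bool:
    """True if intel_iommu is set with "on" among its comma-separated options."""
    for param in cmdline.split():
        key, _, value = param.partition("=")
        if key == "intel_iommu" and "on" in value.split(","):
            return True
    return False


def loaded_modules(proc_modules: str) -> set[str]:
    """Module names listed in /proc/modules text."""
    return {line.split()[0] for line in proc_modules.splitlines() if line.strip()}


if __name__ == "__main__":
    cmdline, modules = Path("/proc/cmdline"), Path("/proc/modules")
    if cmdline.exists():
        print("intel_iommu=on:", has_intel_iommu_on(cmdline.read_text()))
    if modules.exists():
        print("vfio_pci loaded:", "vfio_pci" in loaded_modules(modules.read_text()))
```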

2 Virtualization scenarios #

Virtualization provides several useful capabilities to your organization, for example:

more efficient hardware usage

support for legacy software

operating system isolation

live migration

disaster recovery

load balancing

2.1 Server consolidation #

Many servers can be replaced by one big physical server, so that hardware is consolidated, and guest operating systems are converted to virtual machines. This also supports running legacy software on new hardware.

Better usage of resources that were not running at 100%

Fewer server locations needed

More efficient use of computer resources: multiple workloads on the same server

Simplification of data center infrastructure

Simplifies moving workloads to other hosts, avoiding service downtime

Faster and agile virtual machine provisioning

Multiple guest operating systems can run on a single host

Server consolidation requires special attention to the following points:

Maintenance windows should be carefully planned

Storage is key: it must be able to support migration and growing disk usage

You must verify that your servers can support the additional workloads

2.2 Isolation #

Guest operating systems are fully isolated from the host running them. Therefore, if there are problems inside virtual machines, the host is not harmed. Also, problems inside one VM do not affect other VMs. No data is shared between VMs.

UEFI Secure Boot can be used for VMs.

KSM should be avoided. For more details on KSM, refer to KSM.

Individual CPU cores can be assigned to VMs.

Hyper-threading (HT) should be disabled to avoid potential security issues.

VMs should not share network or storage hardware.

Use of advanced hypervisor features such as PCI pass-through or NUMA adversely affects VM migration capabilities.

Use of paravirtualization and virtio drivers improves VM performance and efficiency.

AMD provides specific features regarding the security of virtualization.

2.3 Disaster recovery #

The hypervisor can make snapshots of VMs, enabling restoration to a known good state, or to any desired earlier state. Since virtualized OSes are less dependent on hardware configuration than those running directly on bare metal, these snapshots can be restored onto different server hardware, as long as it is running the same hypervisor.

2.4 Dynamic load balancing #

Live migration provides a simple way to load-balance your services across your infrastructure, by moving VMs from busy hosts to those with spare capacity, on demand.

3 Introduction to Xen virtualization #

This chapter introduces and explains the components and technologies you need to understand to set up and manage a Xen-based virtualization environment.

3.1 Basic components #

The basic components of a Xen-based virtualization environment are the following:

Xen hypervisor

Dom0

any number of other VM Guests

tools, commands and configuration files to manage virtualization

Collectively, the physical computer running all these components is called a VM Host Server because together these components form a platform for hosting virtual machines.

- The Xen hypervisor

The Xen hypervisor, sometimes simply called a virtual machine monitor, is an open source software program that coordinates the low-level interaction between virtual machines and physical hardware.

- The Dom0

The virtual machine host environment, also called Dom0 or controlling domain, is composed of several components, such as:

SUSE Linux Enterprise Server provides a graphical and a command line environment to manage the virtual machine host components and its virtual machines.

Note: The term “Dom0” refers to a special domain that provides the management environment. This may be run either in graphical or in command line mode.

The xl tool stack based on the xenlight library (libxl). Use it to manage Xen guest domains.

QEMU: open source software that emulates a full computer system, including a processor and multiple peripherals. It provides the ability to host operating systems in both full virtualization and paravirtualization mode.

- Xen-based virtual machines

A Xen-based virtual machine, also called a VM Guest or DomU, consists of the following components:

At least one virtual disk that contains a bootable operating system. The virtual disk can be based on a file, partition, volume, or other type of block device.

A configuration file for each guest domain. It is a text file following the syntax described in the man page man 5 xl.conf.

Several network devices, connected to the virtual network provided by the controlling domain.

- Management tools, commands, and configuration files

There is a combination of GUI tools, commands and configuration files to help you manage and customize your virtualization environment.
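The guest domain configuration file mentioned above is plain text made of KEY = VALUE lines. In these files, strings are quoted, numbers are bare, and lists are enclosed in square brackets. The Python sketch below writes such a file from a dictionary. It assumes values contain no quote characters. The guest name, image path, and bridge name are hypothetical. The keys name, memory, vcpus, disk, and vif are common xl settings; the man page referenced above documents the full syntax.

```python
"""Sketch: render a minimal Xen guest domain configuration file.

Assumptions: xl-style "KEY = VALUE" lines, strings in single quotes, numbers
bare, lists in square brackets; values contain no quote characters.
"""
from __future__ import annotations


def render_value(value: int | str | list[str]) -> str:
    """Format one value: numbers bare, strings quoted, lists in brackets."""
    if isinstance(value, int):
        return str(value)
    if isinstance(value, str):
        return f"'{value}'"
    return "[ " + ", ".join(render_value(item) for item in value) + " ]"


def render_xl_config(settings: dict[str, int | str | list[str]]) -> str:
    """One KEY = VALUE line per setting, in insertion order."""
    return "".join(f"{key} = {render_value(value)}\n" for key, value in settings.items())


if __name__ == "__main__":
    print(render_xl_config({
        "name": "sles-guest",  # hypothetical guest name
        "memory": 2048,        # in MB
        "vcpus": 2,
        # hypothetical image path; the disk string itself uses comma-separated fields
        "disk": ["format=raw, vdev=xvda, access=rw, target=/var/lib/xen/images/sles-guest/disk0.raw"],
        "vif": ["bridge=br0"],  # hypothetical bridge name
    }))
```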

3.2 Xen virtualization architecture #

The following graphic depicts a virtual machine host with four virtual machines. The Xen hypervisor is shown as running directly on the physical hardware platform. The controlling domain is also a virtual machine, although it has several additional management tasks compared to all the other virtual machines.

On the left, the virtual machine host’s Dom0 is shown running the SUSE Linux Enterprise Server operating system. The two virtual machines shown in the middle are running paravirtualized operating systems. The virtual machine on the right shows a fully virtual machine running an unmodified operating system, such as the latest version of Microsoft Windows/Server.

4 Introduction to KVM virtualization #

4.1 Basic components #

KVM is a full virtualization solution for hardware architectures that support hardware virtualization (refer to Section 7.1, “Architecture support” for more details on supported architectures).

VM Guests (virtual machines), virtual storage and virtual networks can be managed with QEMU tools directly or with the libvirt-based stack.

The QEMU tools include qemu-system-ARCH, the QEMU monitor, qemu-img, and qemu-nbd. A libvirt-based stack includes libvirt itself, along with libvirt-based applications such as virsh, virt-manager, virt-install, and virt-viewer.

4.2 KVM virtualization architecture

This full virtualization solution consists of two main components:

- A set of kernel modules (kvm.ko, kvm-intel.ko, and kvm-amd.ko) that provides the core virtualization infrastructure and processor-specific drivers.

- A user space program (qemu-system-ARCH) that provides emulation for virtual devices and control mechanisms to manage VM Guests (virtual machines).

The term KVM more properly refers to the kernel level virtualization functionality, but is in practice more commonly used to refer to the user space component.
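To see which of the kernel modules listed above are loaded on a VM Host Server, you can inspect /proc/modules. The following is a minimal sketch, assuming a Linux host with the standard /proc layout. The function name kvm_modules_loaded is hypothetical, and the optional file argument exists only so the check can be tried against sample data. Note that the kernel reports kvm-intel.ko and kvm-amd.ko under the names kvm_intel and kvm_amd.

```bash
#!/usr/bin/env bash
# Sketch: report which KVM kernel modules are currently loaded.
# Reads /proc/modules by default; pass another file to test with sample data.
# /proc/modules uses underscores in module names (kvm_intel, not kvm-intel).
kvm_modules_loaded() {
  local modules_file="${1:-/proc/modules}"
  local mod found=""
  for mod in kvm kvm_intel kvm_amd; do
    # Each line starts with the module name followed by a space.
    if grep -q "^${mod} " "$modules_file"; then
      found="${found:+$found }$mod"
    fi
  done
  echo "${found:-none}"
}
```

On an Intel host with KVM active, the function prints "kvm kvm_intel". Running lsmod | grep kvm gives the same information interactively.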

5 Virtualization tools

libvirt is a library that provides a common API for managing popular virtualization solutions, among them KVM and Xen. The library provides a normalized management API for these virtualization solutions, allowing a stable, cross-hypervisor interface for higher-level management tools. The library also provides APIs for management of virtual networks and storage on the VM Host Server. The configuration of each VM Guest is stored in an XML file.

With libvirt, you can also manage your VM Guests remotely. It supports TLS encryption, x509 certificates and authentication with SASL. This enables managing VM Host Servers centrally from a single workstation, alleviating the need to access each VM Host Server individually.

Using the libvirt-based tools is the recommended way of managing VM Guests. Interoperability between libvirt and libvirt-based applications has been tested and is an essential part of SUSE's support stance.
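Remote management as described above is addressed through libvirt connection URIs, such as qemu+ssh://wilber@mercury.example.com/system, which is used with virsh later in this chapter. The sketch below assembles such URIs from their parts. It assumes the DRIVER[+TRANSPORT]://[USER@]HOST/system form and covers only the system instance; the helper name libvirt_uri is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: build a libvirt connection URI of the form
#   DRIVER[+TRANSPORT]://[USER@]HOST/system
# Arguments: driver (qemu or xen), transport (ssh, tls, ... or empty),
# user (may be empty), host (empty means a local connection).
libvirt_uri() {
  local driver="$1" transport="$2" user="$3" host="$4"
  local scheme="$driver"
  if [ -n "$transport" ]; then
    scheme="${driver}+${transport}"
  fi
  if [ -z "$host" ]; then
    # Local connection: no host part, for example qemu:///system
    echo "${scheme}:///system"
  else
    echo "${scheme}://${user:+${user}@}${host}/system"
  fi
}
```

For example, virsh -c "$(libvirt_uri qemu ssh wilber mercury.example.com)" list --all lists all VM Guests on the remote host over SSH.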

5.1 Virtualization console tools

libvirt includes several command-line utilities to manage virtual machines. The most important ones are:

- virsh (Package: libvirt-client)

A command-line tool to manage VM Guests, with functionality similar to that of the Virtual Machine Manager. virsh allows you to change a VM Guest's status, set up new guests and devices, or edit existing configurations. virsh is also useful to script VM Guest management operations.

virsh takes the first argument as a command and further arguments as options to this command:

virsh [-c URI] COMMAND DOMAIN-ID [OPTIONS]

Like zypper, virsh can also be called without a command. In this case, it starts a shell waiting for your commands. This mode is useful when you need to run several commands in succession:

~> virsh -c qemu+ssh://wilber@mercury.example.com/system
Enter passphrase for key '/home/wilber/.ssh/id_rsa':
Welcome to virsh, the virtualization interactive terminal.

Type:  'help' for help with commands
       'quit' to quit

virsh # hostname
mercury.example.com

- virt-install (Package: virt-install)

A command-line tool for creating new VM Guests using the libvirt library. It supports graphical installations via VNC or SPICE protocols. Given suitable command-line arguments, virt-install can run unattended. This allows for easy automation of guest installs. virt-install is the default installation tool used by the Virtual Machine Manager.

- remote-viewer (Package: virt-viewer)

A simple viewer of a remote desktop. It supports SPICE and VNC protocols.

- virt-clone (Package: virt-install)

A tool for cloning existing virtual machine images using the libvirt hypervisor management library.

- virt-host-validate (Package: libvirt-client)

A tool that validates whether the host is configured in a suitable way to run libvirt hypervisor drivers.
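As mentioned for virsh, VM Guest management operations can be scripted. A minimal sketch that asks every running guest on one connection to shut down, assuming virsh (package libvirt-client) is installed and the connection URI defaults to qemu:///system. The helper name shutdown_running_guests is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: request a graceful shutdown of every running VM Guest on a connection.
shutdown_running_guests() {
  local uri="${1:-qemu:///system}"
  local dom
  # "virsh list --name --state-running" prints one domain name per line and
  # ends with an empty line, so empty names are skipped.
  while IFS= read -r dom; do
    [ -n "$dom" ] || continue
    echo "Shutting down ${dom}"
    # Redirect stdin so virsh cannot consume the remaining list entries.
    virsh -c "$uri" shutdown "$dom" < /dev/null
  done < <(virsh -c "$uri" list --name --state-running)
}
```

virsh shutdown only sends the shutdown request and returns; a guest that ignores it (for example, one without ACPI support) keeps running.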

5.2 Virtualization GUI tools

The following libvirt-based graphical tools are available on SUSE Linux Enterprise Server. All tools are provided by packages carrying the tool's name.

- Virtual Machine Manager (package: virt-manager)

The Virtual Machine Manager is a desktop tool for managing VM Guests. It provides the ability to control the lifecycle of existing machines (start/shutdown, pause/resume, save/restore) and create new VM Guests. It allows managing multiple types of storage and virtual networks. It provides access to the graphical console of VM Guests with a built-in VNC viewer and can be used to view performance statistics.

virt-manager supports connecting to a local libvirtd, managing a local VM Host Server, or a remote libvirtd managing a remote VM Host Server.

To start the Virtual Machine Manager, enter virt-manager at the command prompt.

Note: To disable automatic USB device redirection for VM Guests using SPICE, either launch virt-manager with the --spice-disable-auto-usbredir parameter or run the following command to persistently change the default behavior:

> dconf write /org/virt-manager/virt-manager/console/auto-redirect false

- virt-viewer (Package: virt-viewer)

A viewer for the graphical console of a VM Guest. It uses SPICE (configured by default on the VM Guest) or VNC protocols and supports TLS and x509 certificates. VM Guests can be accessed by name, ID or UUID. If the guest is not already running, the viewer can be told to wait until the guest starts before attempting to connect to the console.

virt-viewer is not installed by default and is available after installing the package virt-viewer.

Note: To disable automatic USB device redirection for VM Guests using SPICE, add an empty filter using the --spice-usbredir-auto-redirect-filter='' parameter.

- yast2 vm (Package: yast2-vm)

A YaST module that simplifies the installation of virtualization tools and can set up a network bridge.

6 Installation of virtualization components

6.1 Introduction

To run a virtualization server (VM Host Server) that can host one or more guest systems (VM Guests), you need to install required virtualization components on the server. These components vary depending on which virtualization technology you want to use.

6.2 Installing virtualization components

You can install the virtualization tools required to run a VM Host Server in one of the following ways:

By selecting a specific system role during SUSE Linux Enterprise Server installation on the VM Host Server.

By running the YaST Virtualization module on an already installed and running SUSE Linux Enterprise Server.

By installing specific installation patterns on an already installed and running SUSE Linux Enterprise Server.

6.2.1 Specifying a system role

You can install all the tools required for virtualization during the installation of SUSE Linux Enterprise Server on the VM Host Server. During the installation, you are presented with the system role selection screen.

Here you can select either the KVM or the Xen virtualization host role. The appropriate software selection and setup is automatically performed during SUSE Linux Enterprise Server installation.

Both virtualization system roles create a dedicated /var/lib/libvirt partition and enable the firewalld and Kdump services.

6.2.2 Running the YaST Virtualization module

Depending on the scope of SUSE Linux Enterprise Server installation on the VM Host Server, it is possible that none of the virtualization tools are installed on your system. They are automatically installed when configuring the hypervisor with the YaST Virtualization module.

The YaST Virtualization module is included in the yast2-vm package. Verify that it is installed on the VM Host Server before installing virtualization components.

To install the KVM virtualization environment and related tools, proceed as follows:

Start YaST and open the Virtualization module for installing the hypervisor and tools.

Select the KVM server option for a minimal installation of the QEMU and KVM environment. To use the libvirt-based management stack as well, also select the KVM tools option. Confirm your selection.

YaST offers to automatically configure a network bridge on the VM Host Server. It ensures proper networking capabilities of the VM Guest. Accept the offer to do so, or decline it.

After the setup has finished, you can start creating and configuring VM Guests. Rebooting the VM Host Server is not required.

To install the Xen virtualization environment, proceed as follows:

Start YaST and open the Virtualization module for installing the hypervisor and tools.

Select the Xen server option for a minimal installation of the Xen environment. To use the libvirt-based management stack as well, also select the Xen tools option. Confirm your selection.

YaST offers to automatically configure a network bridge on the VM Host Server. It ensures proper networking capabilities of the VM Guest. Accept the offer to do so, or decline it.

After the setup has finished, reboot the machine with the Xen kernel.

Tip: Default boot kernel

If everything works as expected, change the default boot kernel with YaST and make the Xen-enabled kernel the default. For more information about changing the default kernel, see Book “Administration Guide”, Chapter 18 “The boot loader GRUB 2”, Section 18.3 “Configuring the boot loader with YaST”.

6.2.3 Installing specific installation patterns

Related software packages from SUSE Linux Enterprise Server software repositories are organized into installation patterns. You can use these patterns to install specific virtualization components on an already running SUSE Linux Enterprise Server. Use zypper to install them:

zypper install -t pattern PATTERN_NAME

To install the KVM environment, consider the following patterns:

- kvm_server

Installs a basic VM Host Server with the KVM and QEMU environments.

- kvm_tools

Installs libvirt tools for managing and monitoring VM Guests in the KVM environment.

To install the Xen environment, consider the following patterns:

- xen_server

Installs a basic Xen VM Host Server.

- xen_tools

Installs libvirt tools for managing and monitoring VM Guests in the Xen environment.
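If you script host setup, the pattern names above can be selected per hypervisor. A minimal sketch; the helper name virt_patterns is hypothetical and only encodes the four patterns listed in this section.

```bash
#!/usr/bin/env bash
# Sketch: print the installation patterns for a hypervisor (kvm or xen).
virt_patterns() {
  case "$1" in
    kvm) echo "kvm_server kvm_tools" ;;
    xen) echo "xen_server xen_tools" ;;
    *)   echo "unknown hypervisor: $1" >&2; return 1 ;;
  esac
}
```

For example, sudo zypper install -t pattern $(virt_patterns kvm) installs both KVM patterns. The command substitution is left unquoted on purpose so that it splits into two pattern names.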

6.3 Enable nested virtualization in KVM

KVM's nested virtualization is still a technology preview. It is provided for testing purposes and is not supported.

Nested guests are KVM guests running inside a KVM guest. When describing nested guests, we use the following virtualization layers:

- L0

A bare metal host running KVM.

- L1

A virtual machine running on L0. Because it can run another KVM, it is called a guest hypervisor.

- L2

A virtual machine running on L1. It is called a nested guest.

Nested virtualization has many advantages. You can benefit from it in the following scenarios:

Manage your own virtual machines directly with your hypervisor of choice in cloud environments.

Enable the live migration of hypervisors and their guest virtual machines as a single entity.

Note: Live migration of a nested VM Guest is not supported.

Use it for software development and testing.

To enable nesting temporarily, remove the module and reload it with the nested KVM module parameter:

For Intel CPUs, run:

> sudo modprobe -r kvm_intel && sudo modprobe kvm_intel nested=1

For AMD CPUs, run:

> sudo modprobe -r kvm_amd && sudo modprobe kvm_amd nested=1

To enable nesting permanently, enable the nested KVM module parameter in the /etc/modprobe.d/kvm_*.conf file, depending on your CPU:

For Intel CPUs, edit /etc/modprobe.d/kvm_intel.conf and add the following line:

options kvm_intel nested=1

For AMD CPUs, edit /etc/modprobe.d/kvm_amd.conf and add the following line:

options kvm_amd nested=1
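The permanent change described above can also be applied from a script. This sketch appends the option line only if it is not present yet, so repeated runs do no harm. It does not remove a conflicting line such as nested=0. The function name and the optional directory argument (useful for testing) are assumptions, and writing to /etc/modprobe.d requires root privileges.

```bash
#!/usr/bin/env bash
# Sketch: persistently enable nested KVM for kvm_intel or kvm_amd.
# Usage: enable_nested_kvm_conf MODULE [CONFDIR]   (CONFDIR defaults to /etc/modprobe.d)
enable_nested_kvm_conf() {
  local module="$1" confdir="${2:-/etc/modprobe.d}"
  case "$module" in
    kvm_intel|kvm_amd) ;;
    *) echo "expected kvm_intel or kvm_amd, got: $module" >&2; return 1 ;;
  esac
  local conf="${confdir}/${module}.conf" line="options ${module} nested=1"
  # Append only if the exact line is missing, keeping any other options intact.
  if ! grep -qxF "$line" "$conf" 2>/dev/null; then
    echo "$line" >> "$conf"
  fi
  echo "$conf"
}
```

The setting takes effect after the module is reloaded or the host is rebooted.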

When your L0 host is capable of nesting, you can start an L1 guest in one of the following ways:

- Use the -cpu host QEMU command line option.

- Add the vmx (for Intel CPUs) or the svm (for AMD CPUs) CPU feature to the -cpu QEMU command line option, which enables virtualization for the virtual CPU.
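Before starting an L1 guest, you can confirm that nesting is active on the L0 host by reading the module's nested parameter from sysfs. A minimal sketch, assuming the parameter is exposed as /sys/module/kvm_intel/parameters/nested or /sys/module/kvm_amd/parameters/nested. Depending on the module and kernel version the value reads Y/N or 1/0, so both forms are accepted. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: check whether nested virtualization is enabled on this host.
# Exit status: 0 = enabled, 1 = disabled, 2 = no kvm_intel/kvm_amd module loaded.
# The sysfs root can be overridden for testing.
nested_kvm_enabled() {
  local sysroot="${1:-/sys}" module param value
  for module in kvm_intel kvm_amd; do
    param="${sysroot}/module/${module}/parameters/nested"
    if [ -r "$param" ]; then
      value=$(cat "$param")
      case "$value" in
        Y|y|1) echo "${module}: nested enabled"; return 0 ;;
        *)     echo "${module}: nested disabled"; return 1 ;;
      esac
    fi
  done
  echo "no kvm_intel or kvm_amd module loaded" >&2
  return 2
}
```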

6.3.1 VMware ESX as a guest hypervisor

If you use VMware ESX as a guest hypervisor on top of a KVM bare metal hypervisor, you may experience unstable network communication. This problem occurs especially between nested KVM guests and the KVM bare metal hypervisor or external network. The following default CPU configuration of the nested KVM guest causes the problem:

<cpu mode='host-model' check='partial'/>

To fix it, modify the CPU configuration as follows:

[...]
<cpu mode='host-passthrough' check='none'>
  <cache mode='passthrough'/>
</cpu>
[...]

7 Virtualization limits and support

QEMU is only supported when used for virtualization together with the KVM or Xen hypervisors. The TCG accelerator is not supported, even when it is distributed within SUSE products. Users must not rely on QEMU TCG to provide guest isolation, or for any security guarantees. See also https://qemu-project.gitlab.io/qemu/system/security.html.

7.1 Architecture support

7.1.1 KVM hardware requirements

SUSE supports KVM full virtualization on AMD64/Intel 64, AArch64, IBM Z and IBM LinuxONE hosts.

On the AMD64/Intel 64 architecture, KVM is designed around hardware virtualization features included in AMD* (AMD-V) and Intel* (VT-x) CPUs. It supports virtualization features of chipsets and PCI devices, such as an I/O Memory Management Unit (IOMMU) and Single Root I/O Virtualization (SR-IOV). You can test whether your CPU supports hardware virtualization with the following command:

> grep -E '(vmx|svm)' /proc/cpuinfo

If this command returns no output, your processor either does not support hardware virtualization, or this feature has been disabled in the BIOS or firmware.
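The check above can be wrapped in a small function that also reports which extension was found. This sketch uses grep -w so that only the complete flag names vmx and svm match. The function name hw_virt_flag is hypothetical, and the optional argument lets you point it at a saved copy of /proc/cpuinfo, for example one collected from another host.

```bash
#!/usr/bin/env bash
# Sketch: report the hardware virtualization extension advertised in cpuinfo.
# Returns 1 when neither vmx (Intel VT-x) nor svm (AMD-V) is present.
hw_virt_flag() {
  local cpuinfo="${1:-/proc/cpuinfo}"
  if grep -qw vmx "$cpuinfo"; then
    echo "vmx (Intel VT-x)"
  elif grep -qw svm "$cpuinfo"; then
    echo "svm (AMD-V)"
  else
    echo "none"
    return 1
  fi
}
```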

The following Web sites identify AMD64/Intel 64 processors that support hardware virtualization: https://ark.intel.com/Products/VirtualizationTechnology (for Intel CPUs), and https://products.amd.com/ (for AMD CPUs).

On the Arm architecture, Armv8-A processors include support for virtualization.

On the Arm architecture, we only support running QEMU/KVM via the CPU model host (it is named host-passthrough in Virtual Machine Manager or libvirt).

The KVM kernel modules only load if the CPU hardware virtualization features are available.

The general minimum hardware requirements for the VM Host Server are the same as outlined in Book “Deployment Guide”, Chapter 2 “Installation on AMD64 and Intel 64”, Section 2.1 “Hardware requirements”. However, each virtualized guest needs additional RAM, at least as much as a physical installation of the same system would need. It is also strongly recommended to have at least one processor core or hyper-thread for each running guest.
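The recommendation of at least one processor core or hyper-thread per running guest comes down to a simple comparison. A sketch, assuming getconf _NPROCESSORS_ONLN reports the host's online logical CPUs (cores or hyper-threads) and that you pass the number of running guests yourself. The function name is hypothetical, and the check does not reserve any CPUs for the host itself.

```bash
#!/usr/bin/env bash
# Sketch: compare running guests with logical CPUs (cores or hyper-threads).
# Usage: check_guest_cpu_ratio RUNNING_GUESTS [LOGICAL_CPUS]
check_guest_cpu_ratio() {
  local guests="$1" threads="${2:-$(getconf _NPROCESSORS_ONLN)}"
  if [ "$guests" -le "$threads" ]; then
    echo "ok: ${guests} running guests on ${threads} logical CPUs"
  else
    echo "warning: ${guests} running guests exceed ${threads} logical CPUs"
    return 1
  fi
}
```

On a libvirt host, the guest count can come from virsh list --name --state-running | grep -c . which counts the non-empty output lines.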

AArch64 is a continuously evolving platform. It does not have a traditional standards and compliance certification program to enable interoperability with operating systems and hypervisors. Ask your vendor for the support statement on SUSE Linux Enterprise Server.

Running KVM or Xen hypervisors on the POWER platform is not supported.

7.1.2 Xen hardware requirements

SUSE supports Xen on AMD64/Intel 64.

7.2 Hypervisor limits

New features and virtualization limits for Xen and KVM are outlined in the Release Notes for each Service Pack (SP).

Only packages that are part of the official repositories for SUSE Linux Enterprise Server are supported. Conversely, all optional subpackages and plug-ins (for QEMU, libvirt) provided at SUSE Package Hub are not supported.

For the maximum total virtual CPUs per host, see Article “Virtualization Best Practices”, Section 4.5.1 “Assigning CPUs”. The total number of virtual CPUs should be proportional to the number of available physical CPUs.

With SUSE Linux Enterprise Server 11 SP2, we removed virtualization host facilities from 32-bit editions. 32-bit guests are not affected and are fully supported using the provided 64-bit hypervisor.

7.2.1 KVM limits

The following table lists the supported (and tested) virtualization limits of a SUSE Linux Enterprise Server 15 SP6 host running Linux guests on AMD64/Intel 64. For other operating systems, refer to the specific vendor.

| Limit | Value |
|---|---|
| Maximum virtual CPUs per VM | 768 |
| Maximum memory per VM | 4 TiB |

KVM host limits are identical to SUSE Linux Enterprise Server (see the corresponding section of release notes), except for:

Maximum virtual CPUs per VM: see recommendations in the Virtualization Best Practices Guide regarding over-commitment of physical CPUs at Article “Virtualization Best Practices”, Section 4.5.1 “Assigning CPUs”. The total virtual CPUs should be proportional to the available physical CPUs.

7.2.2 Xen limits

| Limit | Value |
|---|---|
| Maximum virtual CPUs per VM | 64 (HVM Windows guest), 128 (trusted HVMs), or 512 (PV) |
| Maximum memory per VM | 2 TiB (64-bit guest), 16 GiB (32-bit guest with PAE) |
| Maximum total physical CPUs | 1024 |
| Maximum total virtual CPUs per host | See recommendations in the Virtualization Best Practices Guide regarding over-commitment of physical CPUs at Article “Virtualization Best Practices”, Section 4.5.1 “Assigning CPUs”. The total virtual CPUs should be proportional to the available physical CPUs. |
| Maximum physical memory | 16 TiB |
| Suspend and hibernate modes | Not supported. |

7.3 Supported host environments (hypervisors)

This section describes the support status of SUSE Linux Enterprise Server 15 SP6 running as a guest operating system on top of different virtualization hosts (hypervisors).

| SUSE Linux Enterprise Server host | Hypervisors |
|---|---|
| SUSE Linux Enterprise Server 12 SP5 | Xen and KVM (SUSE Linux Enterprise Server 15 SP6 guest must use UEFI boot) |
| SUSE Linux Enterprise Server 15 SP3 to SP6 | Xen and KVM |

Windows Server 2016, 2019, 2022

You can also search in the SUSE YES certification database.

Support for SUSE host operating systems is full L3 (both for the guest and host), according to the respective product lifecycle.

SUSE provides full L3 support for SUSE Linux Enterprise Server guests within third-party host environments.

Support for the host and cooperation with SUSE Linux Enterprise Server guests must be provided by the host system's vendor.

7.4 Supported guest operating systems

This section lists the support status for guest operating systems virtualized on top of SUSE Linux Enterprise Server 15 SP6 for KVM and Xen hypervisors.

Microsoft Windows guests can be rebooted by libvirt/virsh only if paravirtualized drivers are installed in the guest. Refer to https://www.suse.com/products/vmdriverpack/ for more details on downloading and installing PV drivers.

SUSE Linux Enterprise Server 12 SP5

SUSE Linux Enterprise Server 15 SP2, 15 SP3, 15 SP4, 15 SP5, 15 SP6

SUSE Linux Enterprise Micro 5.1, 5.2, 5.3, 5.4, 5.5, 6.0

Windows Server 2016, 2019

Oracle Linux 6, 7, 8 (KVM hypervisor only)

SLED 15 SP3

Windows 10 / 11

Refer to the SUSE Liberty Linux documentation at https://documentation.suse.com/liberty for the list of available combinations and supported releases. In other cases, they are supported on a limited basis (L2, fixes if reasonable).

Starting from RHEL 7.2, Red Hat removed Xen PV drivers.

In other combinations, L2 support is provided but fixes are available only if feasible. SUSE fully supports the host OS (hypervisor). The guest OS issues need to be supported by the respective OS vendor. If an issue fix involves both the host and guest environments, the customer needs to approach both SUSE and the guest VM OS vendor.

All guest operating systems are supported both fully virtualized and paravirtualized. The exception is Windows systems, which are only supported fully virtualized (but they can use PV drivers: https://www.suse.com/products/vmdriverpack/), and OES operating systems, which are supported only paravirtualized.

All guest operating systems are supported both in 32-bit and 64-bit environments, unless stated otherwise.

7.4.1 Availability of paravirtualized drivers

To improve the performance of the guest operating system, paravirtualized drivers are provided when available. Although they are not required, it is strongly recommended to use them.

Starting with SUSE Linux Enterprise Server 12 SP2, we switched to a PVops kernel. We are no longer using a dedicated kernel-xen package:

The kernel-default+kernel-xen on dom0 was replaced by the kernel-default package.

The kernel-xen package on PV domU was replaced by the kernel-default package.

The kernel-default+xen-kmp on HVM domU was replaced by kernel-default.

For SUSE Linux Enterprise Server 12 SP1 and older (down to 10 SP4), the paravirtualized drivers are included in a dedicated kernel-xen package.

The paravirtualized drivers are available as follows:

- SUSE Linux Enterprise Server 12 / 12 SP1 / 12 SP2

Included in kernel

- SUSE Linux Enterprise Server 11 / 11 SP1 / 11 SP2 / 11 SP3 / 11 SP4

Included in kernel

- SUSE Linux Enterprise Server 10 SP4

Included in kernel

- Red Hat

Available since Red Hat Enterprise Linux 5.4. Starting from Red Hat Enterprise Linux 7.2, Red Hat removed the PV drivers.

- Windows

SUSE has developed virtio-based drivers for Windows, which are available in the Virtual Machine Driver Pack (VMDP). For more information, see https://www.suse.com/products/vmdriverpack/.

7.5 Supported VM migration scenarios

SUSE Linux Enterprise Server supports migrating a virtual machine from one physical host to another.

7.5.1 Offline migration scenarios

SUSE supports offline migration, that is, powering off a guest VM and then moving it to a host running a different SLE product, from SLE 12 up to SLE 15 SPX. The following host operating system combinations are fully supported (L3) for migrating guests from one host to another:

| Source (row) / target (column) SLES host | 12 SP3 | 12 SP4 | 12 SP5 | 15 GA | 15 SP1 | 15 SP2 | 15 SP3 | 15 SP4 | 15 SP5 | 15 SP6 |
|---|---|---|---|---|---|---|---|---|---|---|
| 12 SP3 | ✓ | ✓ | ✓ | ✓ | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ |
| 12 SP4 | ❌ | ✓ | ✓ | ✓1 | ✓ | ❌ | ❌ | ❌ | ❌ | ❌ |
| 12 SP5 | ❌ | ❌ | ✓ | ❌ | ✓ | ✓ | ❌ | ❌ | ❌ | ❌ |
| 15 GA | ❌ | ❌ | ❌ | ❌ | ✓ | ✓ | ✓ | ❌ | ❌ | ❌ |
| 15 SP1 | ❌ | ❌ | ❌ | ❌ | ✓ | ✓ | ✓ | ❌ | ❌ | ❌ |
| 15 SP2 | ❌ | ❌ | ❌ | ❌ | ❌ | ✓ | ✓ | ✓ | ❌ | ❌ |
| 15 SP3 | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ✓ | ✓ | ✓ | ✓ |
| 15 SP4 | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ✓ | ✓ | ✓ |
| 15 SP5 | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ✓ | ✓ |
| 15 SP6 | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ✓ |

| Symbol | Meaning |
|---|---|
| ✓ | Fully compatible and fully supported |
| ✓1 | Supported for KVM hypervisor only |
| ❌ | Not supported |

7.5.2 Live migration scenarios

This section lists the support status of live migration scenarios when running virtualized on top of SLES. Also refer to Section 17.2, “Migration requirements”. The following host operating system combinations are fully supported (L3 according to the respective product life cycle).

SUSE always supports live migration of virtual machines between hosts running SLES with successive service pack numbers. For example, from SLES 15 SP4 to 15 SP5.

SUSE strives to support live migration of virtual machines from a host running a service pack under LTSS to a host running a newer service pack, within the same major version of SUSE Linux Enterprise Server. For example, virtual machine migration from a SLES 12 SP2 host to a SLES 12 SP5 host. SUSE only performs minimal testing of LTSS-to-newer migration scenarios and recommends thorough on-site testing before attempting to migrate critical virtual machines.

Live migration between SLE 11 and SLE 12 is not supported because of the different tool stack, see the Release notes for more details.

SLES 15 SP6 and newer include kernel patches and tooling to enable AMD and Intel Confidential Computing technology in the product. As this technology is not yet fully ready for a production environment, it is provided as a technology preview.

| Source (row) / target (column) SLES host | 12 SP4 | 12 SP5 | 15 GA | 15 SP1 | 15 SP2 | 15 SP3 | 15 SP4 | 15 SP5 | 15 SP6 | 15 SP7 |
|---|---|---|---|---|---|---|---|---|---|---|
| 12 SP3 | ✓ | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ |
| 12 SP4 | ✓ | ✓ | ✓1 | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ |
| 12 SP5 | ❌ | ✓ | ❌ | ✓ | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ |
| 15 GA | ❌ | ❌ | ✓ | ✓ | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ |
| 15 SP1 | ❌ | ❌ | ❌ | ✓ | ✓ | ❌ | ❌ | ❌ | ❌ | ❌ |
| 15 SP2 | ❌ | ❌ | ❌ | ❌ | ✓ | ✓ | ❌ | ❌ | ❌ | ❌ |
| 15 SP3 | ❌ | ❌ | ❌ | ❌ | ❌ | ✓ | ✓ | ❌ | ❌ | ❌ |
| 15 SP4 | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ✓ | ✓ | ❌ | ❌ |
| 15 SP5 | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ✓ | ✓ | ❌ |
| 15 SP6 | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ❌ | ✓ | ✓2 |

| Symbol | Meaning |
|---|---|
| ✓ | Fully compatible and fully supported |
| ✓1 | Supported for KVM hypervisor only |
| ✓2 | When available |
| ❌ | Not supported |

7.6 Feature support

Nested virtualization allows you to run a virtual machine inside another VM while still using hardware acceleration from the host. It has low performance and adds more complexity while debugging. Nested virtualization is normally used for testing purposes. In SUSE Linux Enterprise Server, nested virtualization is a technology preview. It is only provided for testing and is not supported. Bugs can be reported, but they are treated with low priority. Any attempt to live migrate or to save or restore VMs in the presence of nested virtualization is also explicitly unsupported.

Post-copy is a method to live migrate virtual machines that is intended to get VMs running as soon as possible on the destination host, and have the VM RAM transferred gradually in the background over time as needed. Under certain conditions, this can be an optimization compared to the traditional pre-copy method. However, this comes with a major drawback: an error occurring during the migration (especially a network failure) can cause the whole VM RAM contents to be lost. Therefore, we recommend using only pre-copy in production, while post-copy can be used for testing and experimentation when losing the VM state is not a major concern.
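A pre-copy live migration is what virsh migrate --live performs when no post-copy option is given. The sketch below starts one. It assumes a KVM host, SSH access from the source to the destination host, and the qemu:///system instance on both ends. The function name live_migrate_precopy is hypothetical, and options such as --persistent or --undefinesource, which control whether the guest definition moves along with it, are left out.

```bash
#!/usr/bin/env bash
# Sketch: live migrate a VM Guest with the default pre-copy method.
# Usage: live_migrate_precopy DOMAIN DEST_HOST
live_migrate_precopy() {
  local domain="$1" dest_host="$2"
  if [ -z "$domain" ] || [ -z "$dest_host" ]; then
    echo "usage: live_migrate_precopy DOMAIN DEST_HOST" >&2
    return 1
  fi
  # No --postcopy flag: memory is copied before the guest switches hosts,
  # so a failed migration leaves the guest running on the source.
  virsh migrate --live --verbose "$domain" "qemu+ssh://${dest_host}/system"
}
```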

7.6.1 Xen host (Dom0)

| Features | Xen |
|---|---|
| Network and block device hotplugging | ✓ |
| Physical CPU hotplugging | ❌ |
| Virtual CPU hotplugging | ✓ |
| Virtual CPU pinning | ✓ |
| Virtual CPU capping | ✓ |
| Intel* VT-x2: FlexPriority, FlexMigrate (migration constraints apply to dissimilar CPU architectures) | ✓ |
| Intel* VT-d2 (DMA remapping with interrupt filtering and queued invalidation) | ✓ |
| AMD* IOMMU (I/O page table with guest-to-host physical address translation) | ✓ |

The addition or removal of physical CPUs at runtime is not supported. However, virtual CPUs can be added or removed for each VM Guest while offline.

7.6.2 Guest feature support

For live migration, both source and target system architectures need to match; that is, the processors (AMD* or Intel*) must be the same. Unless CPU ID masking is used, such as with Intel FlexMigration, the target should feature the same processor revision or a more recent processor revision than the source. If VMs are moved among different systems, the same rules apply for each move. To avoid optimized code failing at runtime or at application start-up, source and target CPUs need to expose the same processor extensions. Xen exposes the physical CPU extensions to the VMs transparently. To summarize, guests can be 32-bit or 64-bit, but the VM Host Servers must be identical.
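To see whether a target host lacks processor extensions that the source exposes, you can compare the flags lines of both hosts' /proc/cpuinfo. A minimal sketch, assuming AMD64/Intel 64 hosts (where the field is called flags) and cpuinfo copies saved to files, for example with ssh HOST cat /proc/cpuinfo > HOST.cpuinfo. The function names are hypothetical. On Xen, Dom0's view of the flags may differ from what guests see, so treat the result as a first approximation; on libvirt-managed hosts, virsh hypervisor-cpu-compare offers a more thorough check.

```bash
#!/usr/bin/env bash
# Sketch: list CPU flags present on the source host but missing on the target.
# Arguments are files containing /proc/cpuinfo output from each host.

# Print the flags of the first "flags" line, one per line, sorted and unique.
cpu_flags_of() {
  grep -m1 '^flags' "$1" | cut -d: -f2 | tr ' ' '\n' | grep -v '^$' | LC_ALL=C sort -u
}

missing_cpu_flags() {
  local src="$1" dst="$2"
  # comm -23 keeps only lines that appear in the first (source) list.
  LC_ALL=C comm -23 <(cpu_flags_of "$src") <(cpu_flags_of "$dst")
}
```

Any flag printed is available on the source but not on the target, which is the situation that can make optimized code fail after migration. Empty output means the target exposes at least the source's extensions.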

Hotplugging of virtual network and virtual block devices, and resizing, shrinking and restoring dynamic virtual memory are supported in Xen and KVM only if PV drivers are being used (VMDP).

For machines that support Intel FlexMigration, CPU-ID masking and faulting allow for more flexibility in cross-CPU migration.

For KVM, a detailed description of supported limits, features, recommended settings and scenarios, and other useful information is maintained in kvm-supported.txt. This file is part of the KVM package and can be found in /usr/share/doc/packages/qemu-kvm.

| Features | Xen PV guest (DomU) | Xen FV guest | KVM FV guest |
|---|---|---|---|
| Virtual network and virtual block device hotplugging | ✓ | ✓ | ✓ |
| Virtual CPU hotplugging | ✓ | ❌ | ❌ |
| Virtual CPU over-commitment | ✓ | ✓ | ✓ |
| Dynamic virtual memory resize | ✓ | ✓ | ✓ |
| VM save and restore | ✓ | ✓ | ✓ |
| VM Live Migration | ✓ [1] | ✓ [1] | ✓ |
| VM snapshot | ✓ | ✓ | ✓ |
| Advanced debugging with GDBC | ✓ | ✓ | ✓ |
| Dom0 metrics visible to VM | ✓ | ✓ | ✓ |
| Memory ballooning | ✓ | ❌ | ❌ |
| PCI Pass-Through | ✓ [2] | ✓ | ✓ |
| AMD SEV | ❌ | ❌ | ✓ [3] |

| Symbol | Meaning |
|---|---|
| ✓ | Fully compatible and fully supported |
| ❌ | Not supported |

[1] See Section 17.2, “Migration requirements”.

[2] NetWare guests are excluded.

[3] See https://documentation.suse.com/sles/html/SLES-amd-sev/article-amd-sev.html

Part II Managing virtual machines with libvirt

- 8 libvirt daemons

- 9 Preparing the VM Host Server

- 10 Guest installation

- 11 Basic VM Guest management

- 12 Connecting and authorizing

- 13 Advanced storage topics

- 14 Configuring virtual machines with Virtual Machine Manager

Virtual Machine Manager's details view offers in-depth information about the VM Guest's complete configuration and hardware equipment. Using this view, you can also change the guest configuration or add and modify virtual hardware. To access this view, open the guest's console in Virtual Machine Manager and either select the view from the menu or click the corresponding button in the toolbar.

- 15 Configuring virtual machines with virsh

You can use virsh to configure virtual machines (VM) on the command line as an alternative to using the Virtual Machine Manager. With virsh, you can control the state of a VM, edit the configuration of a VM or even migrate a VM to another host. The following sections describe how to manage VMs by using virsh.

- 16 Enhancing virtual machine security with AMD SEV-SNP

You can enhance the security of your virtual machines with AMD Secure Encrypted Virtualization-Secure Nested Paging (SEV-SNP). The AMD SEV-SNP feature isolates virtual machines from the host system and other VMs, protecting the data and code. This feature encrypts data and ensures that any changes to the code and data in the VM are detected or tracked. Because this isolates VMs, other VMs and the host machine are not affected by threats.

This section explains the steps to enable and use AMD SEV-SNP on your AMD EPYC server with SUSE Linux Enterprise Server 15 SP6.

- 17 Migrating VM Guests

- 18 Xen to KVM migration guide

8 libvirt daemons

A libvirt deployment for accessing KVM or Xen requires one or more daemons to be installed and active on the host. libvirt provides two daemon deployment options: monolithic or modular daemons. libvirt has always provided the single monolithic daemon libvirtd. It includes the primary hypervisor drivers and all secondary drivers needed for managing storage, networking, node devices, etc. The monolithic libvirtd also provides secure remote access for external clients. Over time, libvirt added support for modular daemons, where each driver runs in its own daemon, allowing users to customize their libvirt deployment. Modular daemons are enabled by default, but a deployment can be switched to the traditional monolithic daemon by disabling the individual daemons and enabling libvirtd.
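The switch to the monolithic daemon described above can be scripted with systemctl. This is a sketch, not an official procedure. It assumes systemd-managed libvirt and a unit naming scheme of virtDRIVERd.service plus main, read-only and admin sockets for each daemon in the list shown in the code. The list includes virtproxyd, which also serves the traditional libvirtd socket. Network listener sockets such as virtproxyd-tls.socket, if you enabled them, are not handled. The function name is hypothetical. Run it as root.

```bash
#!/usr/bin/env bash
# Sketch: switch from the modular libvirt daemons to the monolithic libvirtd.
# Units that are not installed make systemctl report an error, which is ignored.
switch_to_monolithic_libvirtd() {
  local drv unit
  for drv in qemu xen network nodedev nwfilter secret storage interface proxy; do
    # Each modular daemon has a service plus main, read-only and admin sockets.
    for unit in "virt${drv}d.service" "virt${drv}d.socket" \
                "virt${drv}d-ro.socket" "virt${drv}d-admin.socket"; do
      systemctl disable --now "$unit" 2>/dev/null || true
    done
  done
  # Enable the monolithic daemon; its sockets start it on the first connection.
  systemctl enable libvirtd.service
  systemctl enable --now libvirtd.socket libvirtd-ro.socket libvirtd-admin.socket
}
```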

The modular daemon deployment is useful in scenarios where minimal libvirt support is needed. For example, if virtual machine storage and networking are not provided by libvirt, the libvirt-daemon-driver-storage and libvirt-daemon-driver-network packages are not required. Kubernetes is an extreme example: it handles all networking, storage, cgroups and namespace integration, etc. Only the libvirt-daemon-driver-qemu package, providing virtqemud, needs to be installed.

Modular daemons allow configuring a custom libvirt deployment containing only the components required for the use case.

8.1 Starting and stopping the modular daemons

The modular daemons are named after the driver they run, following the pattern “virtDRIVERd”. They are configured via the files /etc/libvirt/virtDRIVERd.conf.

SUSE supports the virtqemud and virtxend hypervisor daemons, along with all the secondary daemons:

virtnetworkd - The virtual network management daemon, which provides libvirt's virtual network management APIs. For example, virtnetworkd can be used to create a NAT virtual network on the host for use by virtual machines.

virtnodedevd - The host physical device management daemon, which provides libvirt's node device management APIs. For example, virtnodedevd can be used to detach a PCI device from the host for use by a virtual machine.

virtnwfilterd - The host firewall management daemon, which provides libvirt's firewall management APIs. For example, virtnwfilterd can be used to configure network traffic filtering rules for virtual machines.

virtsecretd - The host secret management daemon, which provides libvirt's secret management APIs. For example, virtsecretd can be used to store a key associated with a LUKS volume.

virtstoraged - The host storage management daemon, which provides libvirt's storage management APIs. For example, virtstoraged can be used to create storage pools and create volumes from those pools.

virtinterfaced - The host NIC management daemon, which provides libvirt's host network interface management APIs. For example, virtinterfaced can be used to create a bonded network device on the host. SUSE discourages the use of libvirt's interface management APIs in favor of default networking tools like wicked or NetworkManager. It is recommended to disable virtinterfaced.

virtproxyd - A daemon to proxy connections between the traditional libvirtd sockets and the modular daemon sockets. With a modular libvirt deployment, virtproxyd allows remote clients to access the libvirt APIs similar to the monolithic libvirtd. It can also be used by local clients that connect to the monolithic libvirtd sockets.

virtlogd - A daemon to manage logs from virtual machine consoles. virtlogd is also used by the monolithic libvirtd. The monolithic daemon and virtqemud systemd unit files require virtlogd, so it is not necessary to explicitly start virtlogd.

virtlockd - A daemon to manage locks held against virtual machine resources such as disks. virtlockd is also used by the monolithic libvirtd. The monolithic daemon, virtqemud, and virtxend systemd unit files require virtlockd, so it is not necessary to explicitly start virtlockd.
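Following the recommendation to disable virtinterfaced, a sketch like the one below stops and disables the daemon together with its sockets, so that socket activation does not start it again. It assumes the unit names follow the same virtDRIVERd pattern as the other modular daemons (virtinterfaced.socket, virtinterfaced-ro.socket and virtinterfaced-admin.socket).

```shell
# Stop virtinterfaced now and keep it from starting again.
# The sockets are disabled in the same transaction as the service, so
# socket activation cannot bring the daemon back after it is stopped.
disable_virtinterfaced() {
    sudo systemctl disable --now \
        virtinterfaced.socket virtinterfaced-ro.socket \
        virtinterfaced-admin.socket virtinterfaced.service
}
```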

virtlogd and virtlockd are also used by the monolithic libvirtd. These daemons have always been separate from libvirtd for security reasons.

By default, the modular daemons listen for connections on the /var/run/libvirt/virtDRIVERd-sock and /var/run/libvirt/virtDRIVERd-sock-ro Unix Domain Sockets. The client library prefers these sockets over the traditional /var/run/libvirt/libvirt-sock. The virtproxyd daemon is available for remote clients or local clients expecting the traditional libvirtd socket.
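The naming convention and the default socket paths can be summarized in a small shell function that derives every name from the driver name. This sketch only prints the names that follow from the pattern. It does not check whether the daemon is installed or whether the sockets exist.

```shell
# Print the daemon name, configuration file and default socket paths
# implied by the virtDRIVERd naming pattern for one driver.
# Usage: virt_names DRIVER   (for example: virt_names qemu)
virt_names() {
    local daemon="virt${1}d"
    echo "daemon: $daemon"
    echo "config: /etc/libvirt/${daemon}.conf"
    echo "rw socket: /var/run/libvirt/${daemon}-sock"
    echo "ro socket: /var/run/libvirt/${daemon}-sock-ro"
    echo "admin socket: /var/run/libvirt/${daemon}-admin-sock"
}
```

For example, virt_names network prints the corresponding entries for virtnetworkd.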

The virtqemud and virtxend services are enabled in the systemd presets. The sockets for virtnetworkd, virtnodedevd, virtnwfilterd, virtstoraged and virtsecretd are also enabled in the presets, ensuring the daemons are enabled and available when the corresponding packages are installed. Although enabled in presets for convenience, the modular daemons can also be managed with their systemd unit files:

virtDRIVERd.service - The main unit file for launching the virtDRIVERd daemon. We recommend configuring the service to start on boot if VMs are also configured to start on host boot.

virtDRIVERd.socket - The unit file corresponding to the main read-write UNIX socket /var/run/libvirt/virtDRIVERd-sock. We recommend starting this socket on boot by default.

virtDRIVERd-ro.socket - The unit file corresponding to the main read-only UNIX socket /var/run/libvirt/virtDRIVERd-sock-ro. We recommend starting this socket on boot by default.

virtDRIVERd-admin.socket - The unit file corresponding to the administrative UNIX socket /var/run/libvirt/virtDRIVERd-admin-sock. We recommend starting this socket on boot by default.
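The following sketch applies these recommendations for a single driver. The function name and its optional autostart argument are illustrative. The three sockets are always enabled. The service is enabled only when VMs are configured to start on host boot.

```shell
# Enable the units recommended for boot for one modular daemon.
# Usage: enable_virt_units DRIVER [autostart]
#   DRIVER     driver name, for example qemu for virtqemud
#   autostart  "yes" to also enable virtDRIVERd.service, for hosts
#              where VMs are configured to start on host boot
enable_virt_units() {
    local daemon="virt${1}d"
    sudo systemctl enable "${daemon}.socket" "${daemon}-ro.socket" "${daemon}-admin.socket"
    if [ "${2:-no}" = "yes" ]; then
        sudo systemctl enable "${daemon}.service"
    fi
}
```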

When systemd socket activation is used, several configuration settings in virtDRIVERd.conf are no longer honored. Instead, these settings must be controlled via the systemd unit files:

unix_sock_group - UNIX socket group owner, controlled via the SocketGroup parameter in the virtDRIVERd.socket and virtDRIVERd-ro.socket unit files.

unix_sock_ro_perms - Read-only UNIX socket permissions, controlled via the SocketMode parameter in the virtDRIVERd-ro.socket unit file.

unix_sock_rw_perms - Read-write UNIX socket permissions, controlled via the SocketMode parameter in the virtDRIVERd.socket unit file.

unix_sock_admin_perms - Admin UNIX socket permissions, controlled via the SocketMode parameter in the virtDRIVERd-admin.socket unit file.

unix_sock_dir - Directory in which all UNIX sockets are created, independently controlled via the ListenStream parameter in any of the virtDRIVERd.socket, virtDRIVERd-ro.socket and virtDRIVERd-admin.socket unit files.
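Because these settings move into the socket units, one way to change them without editing the shipped unit files is a systemd drop-in. The sketch below writes a drop-in for the read-write socket of one daemon. The group and mode passed to it are example values. The optional unit directory argument (default /etc/systemd/system) exists only to make the function testable.

```shell
# Write a drop-in that sets the group owner and permissions of the
# read-write socket of one modular daemon. Must run as root for /etc.
# Usage: write_socket_dropin DRIVER GROUP MODE [UNIT_DIR]
write_socket_dropin() {
    local drv="$1" group="$2" mode="$3" unit_dir="${4:-/etc/systemd/system}"
    local dir="$unit_dir/virt${drv}d.socket.d"
    mkdir -p "$dir" || return 1
    # SocketGroup takes the place of unix_sock_group and SocketMode the
    # place of unix_sock_rw_perms from virtDRIVERd.conf.
    printf '[Socket]\nSocketGroup=%s\nSocketMode=%s\n' "$group" "$mode" > "$dir/override.conf"
}
```

For example, run write_socket_dropin qemu libvirt 0770 as root, followed by systemctl daemon-reload. The new values apply the next time virtqemud.socket is started.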

8.2 Starting and stopping the monolithic daemon

The monolithic daemon is known as libvirtd and is configured via /etc/libvirt/libvirtd.conf. libvirtd is managed with several systemd unit files:

libvirtd.service - The main systemd unit file for launching libvirtd. We recommend configuring libvirtd.service to start on boot if VMs are also configured to start on host boot.

libvirtd.socket - The unit file corresponding to the main read-write UNIX socket /var/run/libvirt/libvirt-sock. We recommend enabling this unit on boot.

libvirtd-ro.socket - The unit file corresponding to the main read-only UNIX socket /var/run/libvirt/libvirt-sock-ro. We recommend enabling this unit on boot.

libvirtd-admin.socket - The unit file corresponding to the administrative UNIX socket /var/run/libvirt/libvirt-admin-sock. We recommend enabling this unit on boot.

libvirtd-tcp.socket - The unit file corresponding to TCP port 16509 for non-TLS remote access. This unit should not be configured to start on boot until the administrator has configured a suitable authentication mechanism.

libvirtd-tls.socket - The unit file corresponding to TCP port 16514 for TLS remote access. This unit should not be configured to start on boot until the administrator has deployed x509 certificates and optionally configured a suitable authentication mechanism.
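In line with that advice, enabling the TLS socket can be guarded by a check that the x509 files exist. This sketch assumes libvirt's default certificate locations: /etc/pki/CA/cacert.pem, /etc/pki/libvirt/servercert.pem and /etc/pki/libvirt/private/serverkey.pem. If libvirtd.conf points elsewhere, adjust the list. The optional path prefix argument is hypothetical and only serves testing.

```shell
# Enable and start libvirtd-tls.socket only if the default x509 files
# exist. This checks presence only, not whether the certificates are valid.
# Usage: enable_libvirtd_tls [PREFIX]   (PREFIX is empty on a real system)
enable_libvirtd_tls() {
    local root="${1:-}" f
    for f in /etc/pki/CA/cacert.pem \
             /etc/pki/libvirt/servercert.pem \
             /etc/pki/libvirt/private/serverkey.pem; do
        if [ ! -f "$root$f" ]; then
            echo "missing $root$f; not enabling libvirtd-tls.socket" >&2
            return 1
        fi
    done
    sudo systemctl enable --now libvirtd-tls.socket
}
```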

When systemd socket activation is used, certain configuration settings in libvirtd.conf are no longer honored. Instead, these settings must be controlled via the systemd unit files:

listen_tcp - TCP socket usage is enabled by starting the libvirtd-tcp.socket unit file.

listen_tls - TLS socket usage is enabled by starting the libvirtd-tls.socket unit file.

tcp_port - Port for the non-TLS TCP socket, controlled via the ListenStream parameter in the libvirtd-tcp.socket unit file.

tls_port - Port for the TLS TCP socket, controlled via the ListenStream parameter in the libvirtd-tls.socket unit file.

listen_addr - IP address to listen on, independently controlled via the ListenStream parameter in the libvirtd-tcp.socket or libvirtd-tls.socket unit files.

unix_sock_group - UNIX socket group owner, controlled via the SocketGroup parameter in the libvirtd.socket and libvirtd-ro.socket unit files.

unix_sock_ro_perms - Read-only UNIX socket permissions, controlled via the SocketMode parameter in the libvirtd-ro.socket unit file.

unix_sock_rw_perms - Read-write UNIX socket permissions, controlled via the SocketMode parameter in the libvirtd.socket unit file.

unix_sock_admin_perms - Admin UNIX socket permissions, controlled via the SocketMode parameter in the libvirtd-admin.socket unit file.

unix_sock_dir - Directory in which all UNIX sockets are created, independently controlled via the ListenStream parameter in any of the libvirtd.socket, libvirtd-ro.socket and libvirtd-admin.socket unit files.
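As an example of moving listen_addr and tls_port into the socket unit, the sketch below writes a drop-in that makes libvirtd-tls.socket listen on one address and port. ListenStream is a list setting in systemd, so the drop-in first assigns an empty value to clear the address inherited from the shipped unit. The address in the usage example is a documentation placeholder. The optional unit directory argument exists only for testing.

```shell
# Write a drop-in for libvirtd-tls.socket that replaces its listen address.
# Must run as root for /etc.
# Usage: write_tls_listen_dropin ADDRESS:PORT [UNIT_DIR]
write_tls_listen_dropin() {
    local listen="$1" unit_dir="${2:-/etc/systemd/system}"
    local dir="$unit_dir/libvirtd-tls.socket.d"
    mkdir -p "$dir" || return 1
    # The empty ListenStream= resets the list from the shipped unit file;
    # without it, systemd would listen on the old and the new address.
    printf '[Socket]\nListenStream=\nListenStream=%s\n' "$listen" > "$dir/listen.conf"
}
```

For example, run write_tls_listen_dropin 192.0.2.10:16514 as root, then systemctl daemon-reload.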

libvirtd and xendomains

If libvirtd fails to start, check whether the xendomains service is active:

> systemctl is-active xendomains
active

If the command returns active, you need to stop xendomains before you can start the libvirtd daemon. If you want libvirtd to also start after rebooting, additionally prevent xendomains from starting automatically by disabling the service:

> sudo systemctl stop xendomains
> sudo systemctl disable xendomains
> sudo systemctl start libvirtd

xendomains and libvirtd provide the same service and, when used in parallel, may interfere with one another. For example, xendomains may attempt to start a domU already started by libvirtd.
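The check and the commands above can be combined into a single guard function. This sketch relies on the exit status of systemctl is-active --quiet, which is zero when the unit is active. It always disables xendomains, which matches the case where libvirtd should also start after a reboot.

```shell
# Start libvirtd, first stopping and disabling xendomains if it is active.
start_libvirtd_without_xendomains() {
    if systemctl is-active --quiet xendomains; then
        echo "xendomains is active; stopping and disabling it" >&2
        sudo systemctl stop xendomains
        sudo systemctl disable xendomains
    fi
    sudo systemctl start libvirtd
}
```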

8.3 Switching to the monolithic daemon

Several services need to be changed when switching from the modular daemons to the monolithic daemon. It is recommended to stop or evict any running virtual machines before switching between the daemon options.

Stop the modular daemons and their sockets. The following example stops the QEMU daemon for KVM and several secondary daemons:

> for drv in qemu network nodedev nwfilter secret storage; do
    sudo systemctl stop virt${drv}d.service
    sudo systemctl stop virt${drv}d{,-ro,-admin}.socket
  done

Disable future start of the modular daemons:

> for drv in qemu network nodedev nwfilter secret storage; do
    sudo systemctl disable virt${drv}d.service
    sudo systemctl disable virt${drv}d{,-ro,-admin}.socket
  done

Enable the monolithic libvirtd service and sockets:

> sudo systemctl enable libvirtd.service
> sudo systemctl enable libvirtd{,-ro,-admin}.socket

Start the monolithic libvirtd sockets:

> sudo systemctl start libvirtd{,-ro,-admin}.socket
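Because running virtual machines should be stopped or evicted first, a pre-check before the steps above can catch guests that are still running. The sketch below uses virsh list --name, which prints one running domain per line. The qemu:///system URI assumes a KVM host. The return codes are an arbitrary convention of this example.

```shell
# Return 0 if no VM Guest is running, 1 if some are (listed on stderr),
# and 2 if virsh itself fails.
check_no_running_vms() {
    local names running
    # --name prints only the names of running domains, one per line.
    if ! names=$(virsh -c qemu:///system list --name); then
        echo "virsh failed; cannot determine running VM Guests" >&2
        return 2
    fi
    # Drop the empty lines that virsh adds to its output.
    running=$(printf '%s\n' "$names" | grep -v '^$' || true)
    if [ -n "$running" ]; then
        echo "Still running, stop or migrate these first:" >&2
        printf '%s\n' "$running" >&2
        return 1
    fi
    echo "No running VM Guests found."
}
```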

9 Preparing the VM Host Server

Before you can install guest virtual machines, you need to prepare the VM Host Server to provide the guests with the resources that they need for their operation. Specifically, you need to configure:

Networking so that guests can make use of the network connection provided by the host.

A storage pool reachable from the host so that the guests can store their disk images.
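Before configuring either item, it can help to see which virtual networks and storage pools libvirt already knows about. The read-only sketch below lists both with virsh, including inactive ones. The default URI qemu:///system assumes a KVM host. On a Xen host, pass xen:///system instead.

```shell
# List the virtual networks and storage pools defined on the host,
# including inactive ones. Nothing is changed.
# Usage: show_host_resources [URI]
show_host_resources() {
    local uri="${1:-qemu:///system}"
    echo "== Virtual networks =="
    virsh -c "$uri" net-list --all
    echo "== Storage pools =="
    virsh -c "$uri" pool-list --all
}
```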

9.1 Configuring networks

There are two common network configurations to provide a VM Guest with a network connection:

A network bridge. This is the default and recommended way of providing the guests with a network connection.

A virtual network with forwarding enabled.

9.1.1 Network bridge

The network bridge configuration provides a Layer 2 switch for VM Guests, switching Layer 2 Ethernet packets between ports on the bridge based on MAC addresses associated with the ports. This gives the VM Guest Layer 2 access to the VM Host Server's network. This configuration is analogous to connecting the VM Guest's virtual Ethernet cable into a hub that is shared with the host and other VM Guests running on the host. The configuration is often referred to as shared physical device.

The network bridge configuration is the default configuration of SUSE Linux Enterprise Server when configured as a KVM or Xen hypervisor. It is the preferred configuration when you simply want to connect VM Guests to the VM Host Server's LAN.
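On a running host, this Layer 2 arrangement can be inspected with the iproute2 tools. ip lists the bridge devices, and bridge shows which ports are attached to them, such as a physical NIC and the guests' vnet devices. A read-only sketch:

```shell
# Show bridge devices and their member ports on the VM Host Server.
show_bridges() {
    # All interfaces of type bridge, with details such as STP settings.
    ip -d link show type bridge
    # One line per bridge port (physical NICs, VM Guest vnet devices).
    bridge link show
}
```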

Which tool to use to create the network bridge depends on the service you use to manage the network connection on the VM Host Server:

If a network connection is managed by wicked, use either YaST or the command line to create the network bridge. wicked is the default on server hosts.

If a network connection is managed by NetworkManager, use the NetworkManager command line tool nmcli to create the network bridge. NetworkManager is the default on desktops and laptops.
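With NetworkManager, creating a bridge and attaching a physical NIC to it can be sketched with nmcli as follows. The names br0 and eth0 in the usage line are placeholders, and the port's connection name is a choice made for this example. Moving the host's IP configuration to the bridge can briefly interrupt connectivity, and an existing connection profile on the NIC may need to be deactivated, so run this from a local console.

```shell
# Create a NetworkManager bridge and attach a physical NIC to it.
# Usage: create_nm_bridge BRIDGE NIC
create_nm_bridge() {
    local br="$1" nic="$2"
    # The bridge connection; its IPv4 method defaults to automatic (DHCP).
    sudo nmcli connection add type bridge ifname "$br" con-name "$br"
    # The physical NIC becomes a port of the bridge.
    sudo nmcli connection add type bridge-slave ifname "$nic" master "$br" \
        con-name "${br}-port-${nic}"
    sudo nmcli connection up "$br"
    sudo nmcli connection up "${br}-port-${nic}"
}
```

For example: create_nm_bridge br0 eth0.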

9.1.1.1 Managing network bridges with YaST

This section includes procedures to add or remove network bridges with YaST.

9.1.1.1.1 Adding a network bridge

To add a network bridge on VM Host Server, follow these steps:

Start › › .

Activate the tab and click .

Select from the list and enter the bridge device interface name in the entry. Click the button to proceed.

In the tab, specify networking details such as DHCP/static IP address, subnet mask or host name.

Using is only useful when also assigning a device to a bridge that is connected to a DHCP server.

If you intend to create a virtual bridge that has no connection to a real network device, use . In this case, it is a good idea to use addresses from the private IP address ranges, for example, 192.168.0.0/16, 172.16.0.0/12, or 10.0.0.0/8.

To create a bridge that should only serve as a connection between the different guests without connection to the host system, set the IP address to 0.0.0.0 and the subnet mask to 255.255.255.255. The network scripts handle this special address as an unset IP address.

Activate the tab and activate the network devices you want to include in the network bridge.

Click to return to the tab and confirm with . The new network bridge should now be active on VM Host Server.

9.1.1.1.2 Deleting a network bridge

To delete an existing network bridge, follow these steps:

Start › › .

Select the bridge device you want to delete from the list in the tab.

Delete the bridge with and confirm with .

9.1.1.2 Managing network bridges from the command line

This section includes procedures to add or remove network bridges using the command line.

9.1.1.2.1 Adding a network bridge

To add a new network bridge device on VM Host Server, follow these steps:

Log in as