Container Guide

The following guide covers the SUSE Linux Enterprise Server container ecosystem. Since containers are a constantly evolving technology, the guide is regularly updated, expanded and improved to reflect the latest technological developments.

1 Introduction to Linux containers

Linux containers offer a lightweight virtualization method to run multiple isolated virtual environments simultaneously on a single host. This technology is based on the Linux kernel's namespaces for process isolation and kernel control groups (cgroups) for resource (CPU, memory, disk I/O, network, etc.) management.

Unlike Xen and KVM, where a full guest operating system is executed through a virtualization layer, Linux containers share and directly use the host OS kernel.

- Advantages of using containers

Size: Container images should only include the content needed to run an application, whereas a virtual machine includes an entire operating system.

Performance: Containers provide near-native performance, as the kernel overhead is lower compared to virtualization and emulation.

Security: Containers make it possible to isolate applications into self-contained units, separated from the rest of the host system.

Resource management: It is possible to granularly control CPU, memory, disk I/O, network interfaces, etc. inside containers (via cgroups).

Flexibility: Container images hold all necessary libraries, dependencies, and files needed to run an application, thus can be easily developed and deployed on multiple hosts.

- Limitations of containers

Containers share the host system's kernel, so the containers have to use the specific kernel version provided by the host.

Only Linux-based applications can be containerized on a Linux host.

A container encapsulates binaries for a specific architecture (AMD64/Intel 64 or AArch64 for instance). So a container made for AMD64/Intel 64 only runs on an AMD64/Intel 64 system host without the use of emulation.

Containers in themselves are no more secure than executing binaries outside of a container, and the overall security of containers depends on the host system. While containerized applications can be secured through AppArmor or SELinux profiles, container security requires putting in place tools and policies that ensure security of the container infrastructure and applications.

1.1 Key concepts and introduction to Podman

Although Docker Open Source Engine is a popular choice for working with images and containers, Podman provides a drop-in replacement for Docker that offers several advantages. While Section 14, “Podman overview” provides more information on Podman, this chapter offers a quick introduction to key concepts and a basic procedure for creating a container image and using Podman to run a container.

Running a container with Podman, either on a local machine or in a cloud service, normally involves the following steps:

Fetch a base image by pulling it from a registry to your local machine.

Create a Dockerfile and use it to build a custom image on top of the base image.

Use the created image to start one or more containers.

To run a container, you need an image. An image includes all dependencies needed to run the application. For example, the SLE BCI-Base image contains the SLE distribution with a minimal package selection.

While it is possible to create an image from scratch, few applications would work in such an empty environment. Thus, using an existing base image is more practical in most situations. A base image has no parent, meaning it is not based on another image.

Although you can use a base image for running containers, the main purpose of base images is to serve as foundations for creating custom images that can run containers with specific applications, servers, services, and so on.

Both base and custom images are available through a repository of images called a registry. Unless a registry is explicitly specified, Podman pulls images from the openSUSE and Docker Hub registries. While you can fetch a base image manually, Podman can do that automatically when building a custom image.

To build a custom image, you must create a special file called a Containerfile or Dockerfile containing building instructions. For example, a Dockerfile can contain instructions to update the system software, install the desired application, open specific network ports, run commands, etc.

You can build images not only from base images, but also on top of custom images. So you can have an image consisting of multiple layers. Please refer to Section 19, “Creating custom container images” for more information.
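The workflow described in this section can be sketched as a small shell script. This is a minimal illustration, not an official procedure: the image tag, the local image name, the installed package (python3) and the exposed port are all assumptions chosen for the example.

```shell
# Sketch of the pull / build / run workflow.
# BASE_IMAGE tag, IMAGE_NAME, the python3 package and port 8080 are
# assumptions for illustration only.
BASE_IMAGE="registry.suse.com/bci/bci-base:15.5"
IMAGE_NAME="localhost/my-custom-image"

# Write a minimal Containerfile into the directory given as $1.
write_containerfile() {
    cat > "$1/Containerfile" <<EOF
FROM ${BASE_IMAGE}
RUN zypper --non-interactive install python3 && zypper clean --all
EXPOSE 8080
CMD ["python3", "-m", "http.server", "8080"]
EOF
}

# Pull the base image, build the custom image from the directory in $1,
# and start a container from the result.
build_and_run() {
    podman pull "$BASE_IMAGE" &&
    podman build -t "$IMAGE_NAME" "$1" &&
    podman run --rm -p 8080:8080 "$IMAGE_NAME"
}

# Usage (run manually):
#   workdir=$(mktemp -d)
#   write_containerfile "$workdir" && build_and_run "$workdir"
```

The explicit pull is optional, since Podman fetches a missing base image automatically during the build.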

2 Tools for building images and managing containers

All the tools described below are part of the SUSE Linux Enterprise Server Containers Module, except the Open Build Service. You can see the full list of packages in the Containers Module in the SUSE Customer Center.

2.1 SUSE Registry

https://registry.suse.com is the official source of SLE Base Container Images. It contains tested, updated and certified SLE Base Container Images. All images in the SUSE Registry are regularly rebuilt to include updates and fixes. The SUSE Registry's Web user interface lists a subset of the available images: Base Container Images, Development Stack Container Images, Application Container Images, SUSE Linux Enterprise Server Images, and Releases Out of General Support. The Web UI also provides additional information for each image, including release date, support level, size, digest of the images and packages inside the image.

2.2 Docker

Docker is a system for creating and managing containers. Its core is the Docker Open Source Engine—a lightweight virtualization solution to run containers simultaneously on a single host. Docker containers can be built using Dockerfiles.

2.3 Podman

Podman stands for Pod Manager tool. It is a daemonless container engine for developing, managing and running Open Container Initiative (OCI) containers on a Linux system, and it offers a drop-in alternative for Docker. Podman is the recommended container runtime for SLES. For a general introduction to Podman, refer to Section 14, “Podman overview”.

2.4 Buildah

Buildah is a utility for building OCI container images. It is a complementary tool to Podman. In fact, the podman build command uses Buildah to perform container image builds. Buildah makes it possible to build images from scratch, from existing images, and using Dockerfiles. OCI images built using the Buildah command-line tool and the underlying OCI-based technologies (for example, containers/image and containers/storage) are portable and can therefore run in a Docker Open Source Engine environment. For information on installing and using Buildah, refer to Section 18, “Buildah overview”.

2.5 skopeo

skopeo is a command-line utility for managing, inspecting and signing container images and image repositories. skopeo can be used to inspect containers and repositories on remote and local container registries. skopeo can copy container images between different storage back-ends. skopeo is part of the Basesystem Module of SUSE Linux Enterprise Server.

2.6 Helm

Helm is the Kubernetes package manager, and it is the de facto standard for deploying containerized applications on Kubernetes clusters using charts. Helm can be used to install, update and remove containerized applications in Kubernetes environments. It can also handle the associated resources, such as configuration, storage volumes, etc. For instance, it is used to deploy the RMT server (see RMT documentation for more information).

2.7 Distribution

Distribution is an open-source registry implementation for storing and distributing container images using the OCI Distribution Specification. It provides a simple, secure and scalable base for building a large-scale registry solution or running a simple private registry. Distribution can also mirror Docker Hub, but it cannot mirror other registries, including private ones.

2.8 Open Build Service

The Open Build Service (OBS) provides free infrastructure for building and storing RPM packages including different container formats. The OBS Container Registry provides a detailed listing of all container images built by the OBS, complete with commands for pulling the images into your local environment. The OBS openSUSE container image templates can be modified to specific needs, which offers the easiest way to create your own container branch. Container images can be built with Docker tools from an existing image using a Dockerfile. Alternatively, images can be built from scratch using the KIWI NG image-building solution.

Instructions on how to build images on OBS can be found in the following blog post.

SUSE Container Images, called SLE Base Container Images (SLE BCIs), are the only official container images. They are not available at https://build.opensuse.org, and the RPMs available on the OBS are not identical to the RPMs distributed as part of SUSE Linux Enterprise Server. This means that it is not possible to build supported images at https://build.opensuse.org.

For more information about SLE BCIs, refer to Section 4, “General-purpose SLE Base Container Images”.

2.9 KIWI NG

KIWI Next Generation (KIWI NG) is a multi-purpose tool for building images. In addition to container images, regular installation ISO images, and images for virtual machines, KIWI NG can build images that boot via PXE or Vagrant boxes. The main building block in KIWI NG is an image XML description, a directory that includes the config.xml or config.kiwi file along with scripts or configuration data. The process of creating images with KIWI NG is fully automated and does not require any user interaction. Any information required for the image creation process is provided by the primary configuration file config.xml. The image can be customized using the config.sh and images.sh scripts.

It is important to distinguish between KIWI NG (currently version 9.20.9) and its unmaintained legacy versions (7.x.x or older), now called KIWI Legacy.

For information on how to install KIWI NG and use it to build images, see the KIWI NG documentation. A collection of example image descriptions can be found on the KIWI NG GitHub repository.

KIWI NG's man pages provide information on using the tool. To access the man pages, install the kiwi-man-pages package.

3 Introduction to SLE Base Container Images

SLE Base Container Images (SLE BCIs) are minimal SLES 15-based images that you can use to develop, deploy and share applications. There are two types of SLE BCIs:

General-purpose SLE BCIs can be used for building custom container images and for deploying applications.

Development Stack SLE BCIs provide minimal environments for developing and deploying applications in specific programming languages.

SLE Base Container Images are available from the SUSE Registry. It contains tested and updated SLE Base Container Images. All images in the SUSE Registry undergo a maintenance process. The images are built to contain the latest available updates and fixes. The SUSE Registry's Web user interface lists a subset of the available images. For information about the SUSE Registry, see Section 2.1, “SUSE Registry”.

SLE base images in the SUSE Registry receive security updates and are covered by the SUSE support plans. For more information about these support plans, see Section 22, “Compatibility and support conditions”.

3.1 Why SLE Base Container Images

SLE BCIs offer a platform for creating SLES-based custom container images and containerized applications that can be distributed freely. SLE BCIs feature the same predictable enterprise lifecycle as SLES. The SLE_BCI 15 SP3 and SP4 repository (which is a subset of the SLE repository) gives SLE BCIs access to 4000 packages available for the AMD64/Intel 64, AArch64, POWER and IBM Z architectures. The packages in the repository have undergone quality assurance and security audits by SUSE. The container images are FIPS-compliant when running on a host in FIPS mode. In addition to that, SUSE can provide support for SLE BCIs through SUSE subscription plans.

- Security

Each package in the SLE_BCI repository undergoes security audits, and SLE BCIs benefit from the same mechanism of dealing with CVEs as SUSE Linux Enterprise Server. All discovered and fixed vulnerabilities are announced via e-mail, the dedicated CVE pages, and as OVAL and CVRF data. To ensure a secure supply chain, all container images are signed with Notary v1, Podman's GPG signatures, and Sigstore Cosign.

- Stability

Since SLE BCIs are based on SLES, they feature the same level of stability and quality assurance. Similar to SLES, SLE BCIs receive maintenance updates that provide bug fixes, improvements and security patches.

- Tooling and integration

SLE BCIs are designed to provide drop-in replacements for popular container images available on hub.docker.com. You can use the general-purpose SLE BCIs and the tools they put at your disposal to create custom container images, while the Development Stack SLE BCIs provide a foundation and the required tooling for building containerized applications.

- Redistribution

SLE Base Container Images are covered by a permissive EULA that allows you to redistribute custom container images based on a SLE Base Container Image.

3.2 Highlights

SLE BCIs are fully compatible with SLES, but they do not require a subscription to run and distribute them.

SLE BCIs automatically run in FIPS-compatible mode when the host operating system is running in FIPS mode.

Each SLE BCI includes the RPM database, which makes it possible to audit the contents of the container image. You can use the RPM database to determine the specific version of the RPM package any given file belongs to. This allows you to ensure that a container image is not susceptible to known and already fixed vulnerabilities.

All SLE BCIs (except for those without Zypper) come with the container-suseconnect service. This gives containers that run on a registered SLES host access to the full SLES repositories. container-suseconnect is invoked automatically when you run Zypper for the first time, and the service adds the correct SLES repositories into the running container. On an unregistered SLES host or on a non-SLES host, the service does nothing. See Section 11.2, “Using container-suseconnect with SLE BCIs” for more information.

There is a SLE_BCI repository for each SLE service pack. This means that SLE BCIs based on SP4 have access to the SLE_BCI repository for SP4, all SLE BCIs based on SP5 use the SLE_BCI repository for SP5, and so on. Each SLE_BCI repository contains all SLE packages except kernels, boot loaders, installers (including YaST), desktop environments and hypervisors (such as KVM and Xen).

If the SLE_BCI repository does not have a package you need, you have two options. As an existing SUSE customer, you can file a feature request. As a regular user, you can request a package to be created by creating an issue in Bugzilla.
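The RPM database audit mentioned in the highlights above can be sketched with two small helper functions. The function names are hypothetical, and the sketch assumes an image that ships the rpm binary (for example SLE BCI-Base); images that carry only the RPM database would need to be inspected with an rpm binary from outside the container.

```shell
# Print the RPM package that owns a file inside an image.
# $1: image reference, $2: absolute path inside the image.
# Assumes the image contains the rpm binary.
rpm_owner_in_image() {
    podman run --rm "$1" rpm -qf "$2"
}

# List every package recorded in the image's RPM database.
# $1: image reference.
rpm_list_in_image() {
    podman run --rm "$1" rpm -qa
}

# Usage (run manually):
#   rpm_owner_in_image registry.suse.com/bci/bci-base:15.5 /usr/bin/bash
#   rpm_list_in_image registry.suse.com/bci/bci-base:15.5 | sort
```

The reported package versions can then be compared against the fixed versions listed in the relevant security announcements.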

4 General-purpose SLE Base Container Images

There are four general-purpose SLE BCIs, and each container image comes with a minimum set of packages to keep its size small. You can use a general-purpose SLE BCI either as a starting point for building custom container images, or as a platform for deploying specific software.

SUSE offers several general-purpose SLE BCIs that are intended as deployment targets or as foundations for creating customized images: SLE BCI-Base, SLE BCI-Minimal, SLE BCI-Micro and SLE BCI-BusyBox. These images share the common SLES base, and none of them ship with a specific language or an application stack. All images feature the RPM database (even if the specific image does not include the RPM package manager) that can be used to verify the provenance of every file in the image. Each image includes the SLES certificate bundle, which allows the deployed applications to use the system's certificates to verify TLS connections.

The table below provides a quick overview of the differences between SLE BCI-Base, SLE BCI-Minimal, SLE BCI-Micro and SLE BCI-BusyBox.

| Features | SLE BCI-Base | SLE BCI-Minimal | SLE BCI-Micro | SLE BCI-BusyBox |
|---|---|---|---|---|
| glibc | ✓ | ✓ | ✓ | ✓ |
| CA certificates | ✓ | ✓ | ✓ | ✓ |
| rpm database | ✓ | ✓ | ✓ | ✓ |
| coreutils | ✓ | ✓ | ✓ | busybox |
| bash | ✓ | ✓ | ✓ | ╳ |
| rpm (binary) | ✓ | ✓ | ╳ | ╳ |
| zypper | ✓ | ╳ | ╳ | ╳ |

4.1 SLE BCI-Base and SLE BCI-Init: When you need flexibility

SLE BCI-Base comes with the Zypper package manager and the free SLE_BCI repository. This allows you to install software available in the repository and customize the image during the build. The downside is the size of the image. It is the largest of the general-purpose SLE BCIs, so it is not always the best choice for a deployment image.

A variant of SLE BCI-Base called SLE BCI-Init comes with systemd preinstalled. The SLE BCI-Init container image can be useful in scenarios requiring systemd for managing services in a single container.

When using the SLE BCI-Init container with Docker, you must use the following arguments for systemd to work correctly in the container:

> docker run -ti --tmpfs /run -v /sys/fs/cgroup:/sys/fs/cgroup:rw --cgroupns=host registry.suse.com/bci/bci-init:latest

To correctly shut down the container, use the following command:

> docker kill -s SIGRTMIN+3 CONTAINER_ID

4.2 SLE BCI-Minimal: When you do not need Zypper

This is a stripped-down version of the SLE BCI-Base image. SLE BCI-Minimal comes without Zypper, but it does have the RPM package manager installed. This significantly reduces the size of the image. However, while RPM can install and remove packages, it lacks support for repositories and automated dependency resolution. The SLE BCI-Minimal image is therefore intended for creating deployment containers, and then installing the desired RPM packages inside the containers. Although you can install the required dependencies, you need to download and resolve them manually. However, this approach is not recommended as it is prone to errors.

4.3 SLE BCI-Micro: When you need to deploy static binaries

This image is similar to SLE BCI-Minimal but without the RPM package manager. The primary use case for the image is deploying static binaries produced externally or during multi-stage builds. As there is no straightforward way to install additional dependencies inside the container image, we recommend deploying a project using the SLE BCI-Micro image only when the final build artifact bundles all dependencies and has no external runtime requirements (like Python or Ruby).
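A multi-stage build of the kind mentioned above might look like the following sketch. It assumes a Go project with a go.mod file in the build context; the image tags, the binary name and the paths are illustrative assumptions, and the helper function name is hypothetical.

```shell
# Write a two-stage Containerfile into the directory given as $1.
# Stage 1 compiles a static binary in the golang Development Stack image;
# stage 2 copies only that binary into SLE BCI-Micro.
# Tags, paths and the binary name "app" are assumptions for illustration.
write_multistage_containerfile() {
    cat > "$1/Containerfile" <<'EOF'
FROM registry.suse.com/bci/golang:latest AS builder
WORKDIR /src
COPY . .
# CGO_ENABLED=0 asks the Go toolchain for a statically linked binary
RUN CGO_ENABLED=0 go build -o /app .

FROM registry.suse.com/bci/bci-micro:15.5
COPY --from=builder /app /usr/local/bin/app
ENTRYPOINT ["/usr/local/bin/app"]
EOF
}

# Usage (run manually from the project directory):
#   write_multistage_containerfile . && podman build -t localhost/app .
```

Because the final stage contains neither Zypper nor the rpm binary, everything the application needs at runtime has to be produced in the builder stage.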

4.4 SLE BCI-BusyBox: When you need the smallest and GPLv3-free image

Similar to SLE BCI-Micro, the SLE BCI-BusyBox image comes with the most basic tools only. However, these tools are provided by the BusyBox project. This has the benefit of further size reduction. Furthermore, the image contains no GPLv3 licensed software. When using the image, keep in mind that there are certain differences between the BusyBox tools and the GNU Coreutils. So scripts written for a system that uses GNU Coreutils may require modification to work with BusyBox.

4.5 Approximate sizes

For your reference, the list below provides an approximate size of each SLE BCI. Keep in mind that the provided numbers are rough estimations.

SLE BCI-Base: ~94 MB

SLE BCI-Minimal: ~42 MB

SLE BCI-Micro: ~26 MB

SLE BCI-BusyBox: ~14 MB

5 Using Long Term Service Pack Support container images from the SUSE Registry

Long Term Service Pack Support (LTSS) container images are available at https://registry.suse.com/. To access and use the container images, you must have a valid LTSS subscription.

Before you can pull or download LTSS container images, you must log in to the SUSE Registry as a user. There are three ways to do that.

- Use the system registration of your host system

If the host system you are using to build or run a container is already registered with the correct subscription required for accessing the LTSS container images, you can use the registration information from the host to log in to the registry.

The file /etc/zypp/credentials.d/SCCcredentials contains a username and a password. These credentials allow you to access any container that is available under the subscription of the respective host system. You can use these credentials to log in to SUSE Registry using the following commands (use the leading space before the echo command to avoid storing the credentials in the shell history):

> set +o history
>  echo PASSWORD | podman login -u USERNAME --password-stdin registry.suse.com
> set -o history

- Use a separate SUSE Customer Center registration code

If the host system is not registered with SUSE Customer Center, you can use a valid SUSE Customer Center registration code to log in to the registry:

> set +o history
>  echo SCC_REGISTRATION_CODE | podman login -u "regcode" --password-stdin registry.suse.com
> set -o history

The user parameter in this case is the verbatim string regcode, and SCC_REGISTRATION_CODE is the actual registration code obtained from SUSE.

- Use the organization mirroring credentials

You can also use the organization mirroring credentials to log in to the SUSE Registry:

> set +o history
>  echo SCC_MIRRORING_PASSWORD | podman login -u "SCC_MIRRORING_USER" --password-stdin registry.suse.com
> set -o history

These credentials give you access to all subscriptions the organization owns, including those related to container images in the SUSE Registry. The credentials are highly privileged and should be preferably used for a private mirroring registry only.
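As an alternative to typing the host credentials by hand, the first login method can be scripted. The sketch below assumes that the credentials file consists of plain username=VALUE and password=VALUE lines; verify this on your system before relying on it. The function names are hypothetical, and reading the file typically requires root privileges.

```shell
# Print the value of key $1 from the credentials file $2.
# Assumes plain "key=value" lines without surrounding whitespace.
cred_value() {
    sed -n "s/^$1=//p" "$2" | head -n 1
}

# Log in to the SUSE Registry with the host's registration credentials.
# $1 (optional): path to the credentials file.
login_with_host_credentials() {
    cred_file="${1:-/etc/zypp/credentials.d/SCCcredentials}"
    user=$(cred_value username "$cred_file")
    # The password is piped straight into podman, so it never appears
    # on a command line or in the shell history.
    cred_value password "$cred_file" |
        podman login -u "$user" --password-stdin registry.suse.com
}

# Usage (run manually, typically as root):
#   login_with_host_credentials
```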

6 Development Stack SLE Base Container Images

Development Stack SLE BCIs are built on top of the SLE BCI-Base. Each container image comes with the Zypper stack and the free SLE_BCI repository. Additionally, each image includes the most common tools for building and deploying applications in the specific language environment. This includes tools like a compiler or interpreter as well as the language-specific package manager.

Below is an overview of the Development Stack SLE BCIs available in the SUSE Registry.

- python

Ships with the python3 version from the tag and pip3, curl, git tools.

- node

Comes with nodejs version from the tag, npm and git. The yarn package manager can be installed with the npm install -g yarn command.

- openjdk

Ships with the OpenJDK runtime. Designed for deploying Java applications.

- openjdk-devel

Includes the development part of OpenJDK in addition to the OpenJDK runtime. Instead of Bash, the default entry point is the jshell shell.

- ruby

A standard development environment based on Ruby 2.5, featuring ruby, gem and bundler as well as git and curl.

- rust

Ships with the Rust compiler and the Cargo package manager.

- golang

Ships with the go compiler version specified in the tag.

- dotnet-runtime

Includes the .NET runtime from Microsoft and the Microsoft .NET repository.

- dotnet-aspnet

Ships with the ASP.NET runtime from Microsoft and the Microsoft .NET repository.

- dotnet-sdk

Comes with the .NET and ASP.NET SDK from Microsoft as well as the Microsoft .NET repository.

- php

Ships with the PHP version specified in the tag.

7 Application SLE Base Container Images

Application SLE BCIs are SLE BCI-Base container images that include specific applications, such as the PostgreSQL database and Performance Co-Pilot, a system-level performance analysis toolkit. Application SLE BCIs are available in the dedicated section of the SUSE Registry.

8 Important note about the support status of SLE Base Container Images

All container images, except for base, are currently classified as tech preview, and SUSE does not provide support for them. This information is visible on the web on registry.suse.com. The com.suse.supportlevel label also indicates whether a container image still has the tech preview status. You can use the skopeo and jq utilities to check the status of the desired SLE BCI as follows:

> skopeo inspect docker://registry.suse.com/bci/bci-micro:15.4 | jq '.Labels["com.suse.supportlevel"]'
"techpreview"
> skopeo inspect docker://registry.suse.com/bci/bci-base:15.4 | jq '.Labels["com.suse.supportlevel"]'
"l3"

In the example above, the com.suse.supportlevel label is set to techpreview in the bci-micro container image, indicating that the image still has the tech preview status. The bci-base container image, on the other hand, has full L3 support. Unlike the general purpose SLE BCIs, the Development Stack SLE BCIs may not follow the lifecycle of the SLES distribution: they are supported as long as the respective language stack receives support. In other words, new versions of SLE BCIs (indicated by the OCI tags) may be released during the lifecycle of a SLES Service Pack, while older versions may become unsupported. Refer to https://suse.com/lifecycle to find out whether the container in question is still under support.

A SLE Base Container Image is no longer updated after its support period ends. You will not receive any notification when that happens.

9 SLE Base Container Image labels

SLE BCIs feature the following labels.

- com.suse.eula

Marks which section of the SUSE EULA applies to the container image.

- com.suse.release-stage

Indicates the current release stage of the image.

prototype: Indicates that the container image is in a prototype phase.

alpha: Prevents the container image from appearing in the registry.suse.com Web interface even if it is available there. The value also indicates the alpha quality of the container image.

beta: Lists the container image in the Beta Container Images section of the registry.suse.com Web interface and adds the Beta label to the image. The value also indicates the beta quality of the container image.

released: Indicates that the container image is released and suitable for production use.

- com.suse.supportlevel

Shows the support level for the container.

l2: Problem isolation, which means technical support designed to analyze data, reproduce customer problems, isolate problem areas, and provide a resolution for problems not resolved by Level 1, or prepare for Level 3.

l3: Problem resolution, which means technical support designed to resolve problems by engaging engineering to resolve product defects which have been identified by Level 2 Support.

acc: Software delivered with the SLE Base Container Image may require an external contract.

techpreview: The image is unsupported and intended for use in proof-of-concept scenarios.

unsupported: No support is provided for the image.

- com.suse.lifecycle-url

Points to the https://www.suse.com/lifecycle/ page that offers information about the lifecycle of the product an image is based on.
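The com.suse.supportlevel values listed above lend themselves to a simple lookup in scripts, for example to refuse unsupported images in a build pipeline. The following sketch uses hypothetical function names and shortened descriptions; the skopeo invocation uses a Go template to read the label directly.

```shell
# Map a com.suse.supportlevel value to a short description.
describe_supportlevel() {
    case "$1" in
        l2)          echo "problem isolation" ;;
        l3)          echo "problem resolution" ;;
        acc)         echo "may require an external contract" ;;
        techpreview) echo "unsupported, proof-of-concept use only" ;;
        unsupported) echo "no support provided" ;;
        *)           echo "unknown support level: $1" ;;
    esac
}

# Read the support level label of a remote image without pulling it.
# $1: image reference without the docker:// prefix.
image_supportlevel() {
    skopeo inspect -f '{{ index .Labels "com.suse.supportlevel" }}' "docker://$1"
}

# Usage (run manually):
#   describe_supportlevel "$(image_supportlevel registry.suse.com/bci/bci-base:15.5)"
```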

9.1 Working with SLE BCI labels

All SLE Base Container Images include information such as a build time stamp and description. This information is provided in the form of labels attached to the base images, and is therefore available for derived images and containers.

Here is an example of the labels information shown by podman inspect:

podman inspect registry.suse.com/suse/sle15

[...]

"Labels": {

"com.suse.bci.base.created": "2023-01-26T22:15:08.381030307Z",

"com.suse.bci.base.description": "Image for containers based on SUSE Linux Enterprise Server 15 SP4.",

"com.suse.bci.base.disturl": "obs://build.suse.de/SUSE:SLE-15-SP4:Update:CR/images/1477b070ae019f95b0f2c3c0dce13daf-sles15-image",

"com.suse.bci.base.eula": "sle-bci",

"com.suse.bci.base.image-type": "sle-bci",

"com.suse.bci.base.lifecycle-url": "https://www.suse.com/lifecycle",

"com.suse.bci.base.reference": "registry.suse.com/suse/sle15:15.4.27.14.31",

"com.suse.bci.base.release-stage": "released",

"com.suse.bci.base.source": "https://sources.suse.com/SUSE:SLE-15-SP4:Update:CR/sles15-image/1477b070ae019f95b0f2c3c0dce13daf/",

"com.suse.bci.base.supportlevel": "l3",

"com.suse.bci.base.title": "SLE 15 SP4 Base Container Image",

"com.suse.bci.base.url": "https://www.suse.com/products/server/",

"com.suse.bci.base.vendor": "SUSE LLC",

"com.suse.bci.base.version": "15.4.27.14.31",

"com.suse.eula": "sle-bci",

"com.suse.image-type": "sle-bci",

"com.suse.lifecycle-url": "https://www.suse.com/lifecycle",

"com.suse.release-stage": "released",

"com.suse.sle.base.created": "2023-01-26T22:15:08.381030307Z",

"com.suse.sle.base.description": "Image for containers based on SUSE Linux Enterprise Server 15 SP4.",

"com.suse.sle.base.disturl": "obs://build.suse.de/SUSE:SLE-15-SP4:Update:CR/images/1477b070ae019f95b0f2c3c0dce13daf-sles15-image",

"com.suse.sle.base.eula": "sle-bci",

"com.suse.sle.base.image-type": "sle-bci",

"com.suse.sle.base.lifecycle-url": "https://www.suse.com/lifecycle",

"com.suse.sle.base.reference": "registry.suse.com/suse/sle15:15.4.27.14.31",

"com.suse.sle.base.release-stage": "released",

"com.suse.sle.base.source": "https://sources.suse.com/SUSE:SLE-15-SP4:Update:CR/sles15-image/1477b070ae019f95b0f2c3c0dce13daf/",

"com.suse.sle.base.supportlevel": "l3",

"com.suse.sle.base.title": "SLE 15 SP4 Base Container Image",

"com.suse.sle.base.url": "https://www.suse.com/products/server/",

"com.suse.sle.base.vendor": "SUSE LLC",

"com.suse.sle.base.version": "15.4.27.14.31",

"com.suse.supportlevel": "l3",

"org.openbuildservice.disturl": "obs://build.suse.de/SUSE:SLE-15-SP4:Update:CR/images/1477b070ae019f95b0f2c3c0dce13daf-sles15-image",

"org.opencontainers.image.created": "2023-01-26T22:15:08.381030307Z",

"org.opencontainers.image.description": "Image for containers based on SUSE Linux Enterprise Server 15 SP4.",

"org.opencontainers.image.source": "https://sources.suse.com/SUSE:SLE-15-SP4:Update:CR/sles15-image/1477b070ae019f95b0f2c3c0dce13daf/",

"org.opencontainers.image.title": "SLE 15 SP4 Base Container Image",

"org.opencontainers.image.url": "https://www.suse.com/products/server/",

"org.opencontainers.image.vendor": "SUSE LLC",

"org.opencontainers.image.version": "15.4.27.14.31",

"org.opensuse.reference": "registry.suse.com/suse/sle15:15.4.27.14.31"

},

[...]

All labels are shown twice to ensure that the information in derived images about the original base image is still visible and not overwritten.

Use Podman to retrieve labels of a local image. The following command lists only the labels of the bci-base:15.5 image:

podman inspect -f '{{.Labels | json}}' registry.suse.com/bci/bci-base:15.5

It is also possible to retrieve the value of a specific label:

podman inspect -f '{{ index .Labels "com.suse.sle.base.supportlevel" }}' registry.suse.com/bci/bci-base:15.5

The preceding command retrieves the value of the com.suse.sle.base.supportlevel label. The single quotes around the template prevent the shell from interpreting the pipe character and the inner double quotes.

The skopeo tool makes it possible to examine labels of an image without pulling it first. For example:

skopeo inspect -f '{{.Labels | json}}' docker://registry.suse.com/bci/bci-base:15.5

skopeo inspect -f '{{ index .Labels "com.suse.sle.base.supportlevel" }}' docker://registry.suse.com/bci/bci-base:15.5

10 SLE BCI tags

Tags are used to refer to images. A tag forms a part of the image's name. Unlike labels, tags can be freely defined, and they are usually used to indicate a version number.

If a tag exists in multiple images, the newest image is used. The image maintainer decides which tags to assign to the container image.

The conventional tag format is repository name:image version specification (usually version number). For example, the tag for the latest published image of SLE 15 SP2 would be suse/sle15:15.2.
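To illustrate the repository name:tag format, the sketch below splits an image reference into its two parts using shell parameter expansion. The function names are hypothetical, and references pinned by digest (name@sha256:...) are deliberately not handled. Only the last path component is checked for a colon, so a registry port such as localhost:5000 is not mistaken for a tag.

```shell
# Print the tag of an image reference, or "latest" if none is given
# (container engines assume "latest" when the tag is omitted).
image_tag() {
    last="${1##*/}"              # last path component, e.g. "sle15:15.2"
    case "$last" in
        *:*) echo "${last##*:}" ;;
        *)   echo "latest" ;;
    esac
}

# Print the image reference without its tag.
image_repo() {
    last="${1##*/}"
    case "$last" in
        *:*) echo "${1%:*}" ;;
        *)   echo "$1" ;;
    esac
}

# Usage:
#   image_tag  suse/sle15:15.2     # prints 15.2
#   image_repo suse/sle15:15.2     # prints suse/sle15
```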

11 Understanding SLE BCIs

There are certain features that set SLE BCIs apart from similar offerings, like images based on Debian or Alpine Linux. Understanding the specifics can help you to get the most out of SLE BCIs in the shortest time possible.

11.1 Package manager

The default package manager in SLES is Zypper. Similar to APT in Debian and APK in Alpine Linux, Zypper offers a command-line interface for all package management tasks. Below is a brief overview of commonly used container-related Zypper commands.

- Install packages

zypper --non-interactive install PACKAGE_NAME

- Add a repository

zypper --non-interactive addrepo REPOSITORY_URL; zypper --non-interactive refresh

- Update all packages

zypper --non-interactive update

- Remove a package

zypper --non-interactive remove --clean-deps PACKAGE_NAME (the --clean-deps flag ensures that no longer required dependencies are removed as well)

- Clean up temporary files

zypper clean

For more information on using Zypper, refer to https://documentation.suse.com/sles/html/SLES-all/cha-sw-cl.html#sec-zypper.

All the described commands use the --non-interactive flag to skip confirmations, since you cannot approve these manually during container builds. Keep in mind that you must use the flag with any command that modifies the system. Also note that --non-interactive is not a "yes to all" flag. Instead, --non-interactive confirms what is considered to be the intention of the user. For example, an installation command with the --non-interactive option fails if it needs to import new repository signing keys, as that is something that the user must verify themselves.

11.2 Using container-suseconnect with SLE BCIs #

container-suseconnect is a plugin available in all SLE BCIs that ship with Zypper. When the plugin detects the host's SUSE Linux Enterprise Server registration credentials, it uses them to give the container access to the SUSE Linux Enterprise repositories. This includes additional modules and previous package versions that are not part of the free SLE_BCI repository. Refer to Section 13.3, “container-suseconnect” for more information on how to use the repository for SLES, openSUSE and non-SLES hosts.

11.3 Common patterns #

The following examples demonstrate how to accomplish certain tasks in a SLE BCI compared to Debian.

- Remove orphaned packages

Debian:

apt-get autoremove -y

SLE BCI: Not required if you remove installed packages using the zypper --non-interactive remove --clean-deps PACKAGE_NAME command.

- Obtain container's architecture

Debian:

dpkgArch="$(dpkg --print-architecture | awk -F- '{ print $NF }')"

SLE BCI:

arch="$(uname -p)"

- Install packages required for compilation

Debian:

apt-get install -y build-essential

SLE BCI:

zypper -n in gcc gcc-c++ make

- Verify GnuPG signatures

Debian:

gpg --batch --verify SIGNATURE_URL FILE_TO_VERIFY

SLE BCI:

zypper -n in dirmngr; gpg --batch --verify SIGNATURE_URL FILE_TO_VERIFY; zypper -n remove --clean-deps dirmngr; zypper -n clean

11.4 Package naming conventions #

SLE package naming conventions differ from Debian, Ubuntu and Alpine, and they are closer to those of Red Hat Enterprise Linux. The main difference is that development packages of libraries (that is, packages containing headers and build description files) are named PACKAGE-devel in SLE, as opposed to PACKAGE-dev in Debian and Ubuntu. When in doubt, search for the package using the following command: docker run --rm registry.suse.com/bci/bci-base:OS_VERSION zypper search PACKAGE_NAME (replace OS_VERSION with the appropriate service version number, for example: 15.3 or 15.4).

11.5 Adding GPG signing keys #

The SUSE keys are already in the RPM database of the SLE Base Container Image. This means that you do not have to import them.

However, adding external repositories to a container or container image normally requires importing the GPG key used for signing the packages. This can be done with the rpm --import KEY_URL command. This adds the key to the RPM database, and all packages from the repository can be installed afterwards.

12 Getting started with SLE Base Container Images #

The SLE BCIs are available as OCI-compatible container images directly from the SUSE Registry, and they can be used like any other container image, for example:

> podman run --rm -it registry.suse.com/bci/bci-base:15.4 grep '^NAME' /etc/os-release

NAME="SLES"

Alternatively, you can use a SLE BCI in a Dockerfile as follows:

FROM registry.suse.com/bci/bci-base:15.4

RUN zypper --non-interactive in python3 && \

echo "Hello Green World!" > index.html

ENTRYPOINT ["/usr/bin/python3", "-m", "http.server"]

EXPOSE 8000

You can then build container images using the docker build . or buildah bud . commands:

> docker build .

Sending build context to Docker daemon 2.048kB

Step 1/4 : FROM registry.suse.com/bci/bci-base:15.4

---> e34487b4c4e1

Step 2/4 : RUN zypper --non-interactive in python3 && echo "Hello Green World!" > index.html

---> Using cache

---> 9b527dfa45e8

Step 3/4 : ENTRYPOINT ["/usr/bin/python3", "-m", "http.server"]

---> Using cache

---> 953080e91e1e

Step 4/4 : EXPOSE 8000

---> Using cache

---> 48b33ec590a6

Successfully built 48b33ec590a6

> docker run -p 8000:8000 --rm -d 48b33ec590a6

575ad7edf43e11c2c9474055f7f6b7a221078739fc8ce5765b0e34a0c899b46a

> curl localhost:8000

Hello Green World!

13 Registration and online repositories #

As a prerequisite for working with containers on SUSE Linux Enterprise Server, you have to enable the SLE Containers Module. The module consists of container-related packages, including a container engine and core container tools such as an on-premises registry. For more information about SLE Modules, refer to https://documentation.suse.com/sles/html/SLES-all/article-modules.html.

The regular SLE subscription includes SLE Containers Module free of charge.

13.1 Enabling the Containers Module using the YaST graphical interface #

Start YaST, and select › .

Click to open the add-on dialog.

Select Extensions and Modules from Registration Server and click .

From the list of available extensions and modules, select Containers Module 15 SP4 x86_64 and click . This adds the Containers Module and its repositories to the system.

If you use Repository Mirroring Tool, update the list of repositories on the RMT server.

13.2 Enabling the Containers Module from the command line using SUSEConnect #

The Containers Module can also be added with the following command:

> sudo SUSEConnect -p sle-module-containers/15.4/x86_64

13.3 container-suseconnect #

container-suseconnect is a plugin available in all SLE Base Container Images that ship with Zypper. When the plugin detects the host's SUSE Linux Enterprise Server registration credentials, it uses them to give the container access to the SUSE Linux Enterprise repositories. This includes additional modules providing access to all packages included in SLES.

13.3.1 Using container-suseconnect on SLES and openSUSE #

If you are running a registered SLES system with Docker, container-suseconnect automatically detects and uses the subscription, without requiring any action on your part.

On openSUSE systems with Docker, you must copy the files /etc/SUSEConnect and /etc/zypp/credentials.d/SCCcredentials from a registered SLES machine to your local machine. Note that the /etc/SUSEConnect file is required only if you are using RMT for managing your registration credentials.

13.3.2 Using container-suseconnect on non-SLES hosts or with Podman and Buildah #

You need a registered SLES system to use container-suseconnect on non-SLES hosts or with Podman and Buildah. This can be a physical machine, a virtual machine, or the SLE BCI-Base container with SUSEConnect installed and registered.

If you do not use RMT, copy /etc/zypp/credentials.d/SCCcredentials to the development machine. Otherwise, copy both the /etc/zypp/credentials.d/SCCcredentials and /etc/SUSEConnect files.

You can use the following command to obtain SCCcredentials (replace REGISTRATION_CODE with your SCC registration code):

podman run --rm registry.suse.com/suse/sle15:latest bash -c \

"zypper -n in SUSEConnect; SUSEConnect --regcode REGISTRATION_CODE; \

cat /etc/zypp/credentials.d/SCCcredentials"

If you are running a container based on a SLE BCI, mount SCCcredentials (and optionally /etc/SUSEConnect) in the correct destination. The following example shows how to mount an SCCcredentials file stored at /path/to/SCCcredentials on the host:

podman run -v /path/to/SCCcredentials:/etc/zypp/credentials.d/SCCcredentials \

-it --pull=always registry.suse.com/bci/bci-base:latest

Do not copy the SCCcredentials and SUSEConnect files into the container image to avoid inadvertently adding them to the final image. Use secrets instead, as they are only available to a single layer and are not part of the built image. To do this, put a copy of SCCcredentials (and optionally SUSEConnect) somewhere on the file system and modify the RUN instructions that invoke Zypper as follows:

FROM registry.suse.com/bci/bci-base:latest

RUN --mount=type=secret,id=SUSEConnect \

--mount=type=secret,id=SCCcredentials \

zypper -n in fluxbox

Buildah supports mounting secrets via the --secret flag as follows:

buildah bud --layers --secret=id=SCCcredentials,src=/path/to/SCCcredentials \

--secret=id=SUSEConnect,src=/path/to/SUSEConnect .

container-suseconnect runs automatically every time you invoke Zypper. If you are not using a registered SLES host, you may see the following error message:

> zypper ref

Refreshing service 'container-suseconnect-zypp'.

Problem retrieving the repository index file for service 'container-suseconnect-zypp':

[container-suseconnect-zypp|file:/usr/lib/zypp/plugins/services/container-suseconnect-zypp]

Warning: Skipping service 'container-suseconnect-zypp' because of the above error.

Ignore the message, as it simply indicates that container-suseconnect was not able to retrieve your SUSE Customer Center credentials, and thus could not add the full SLE repositories. You still have full access to the SLE_BCI repository, and can continue using the container as intended.

13.3.3 Adding modules into the container or container image #

container-suseconnect allows you to automatically add SLE Modules into a container or container image. Which modules are added is determined by the environment variable ADDITIONAL_MODULES that includes a comma-separated list of the module names. In a Dockerfile, this is done using the ENV directive as follows:

FROM registry.suse.com/bci/bci-base:latest

ENV ADDITIONAL_MODULES sle-module-desktop-applications,sle-module-development-tools

RUN --mount=type=secret,id=SCCcredentials zypper -n in fluxbox && zypper -n clean

14 Podman overview #

Podman is short for Pod Manager Tool. It is a daemonless container engine for managing Open Container Initiative (OCI) containers on a Linux system. By default, Podman supports rootless containers, which reduces the attack surface when running containers. Podman can be used to create OCI-compliant container images using a Dockerfile and a range of commands identical to Docker Open Source Engine. For example, the podman build command performs the same task as docker build. In other words, Podman provides a drop-in replacement for Docker Open Source Engine.

Moving from Docker Open Source Engine to Podman does not require any changes in the established workflow. There is no need to rebuild images, and you can use the exact same commands to build and manage images as well as run and control containers.

Podman differs from Docker Open Source Engine in the following ways:

Podman does not use a daemon, so the container engine interacts directly with an image registry, containers and image storage when needed.

Podman features native systemd integration that allows for the use of systemd to run containers. Generating the required systemd unit files is supported by Podman using the podman generate systemd command. Moreover, Podman can run systemd inside containers.

Podman does not require root privileges to create and run containers. This means that Podman can run under the root user as well as in an unprivileged environment. Moreover, a container created by an unprivileged user cannot get higher privileges on the host than the container's creator.

Podman can be configured to search multiple registries by reading the /etc/containers/registries.conf file.

Podman can deploy applications from Kubernetes manifests.

Podman supports launching systemd inside a container and requires no potentially dangerous workarounds.

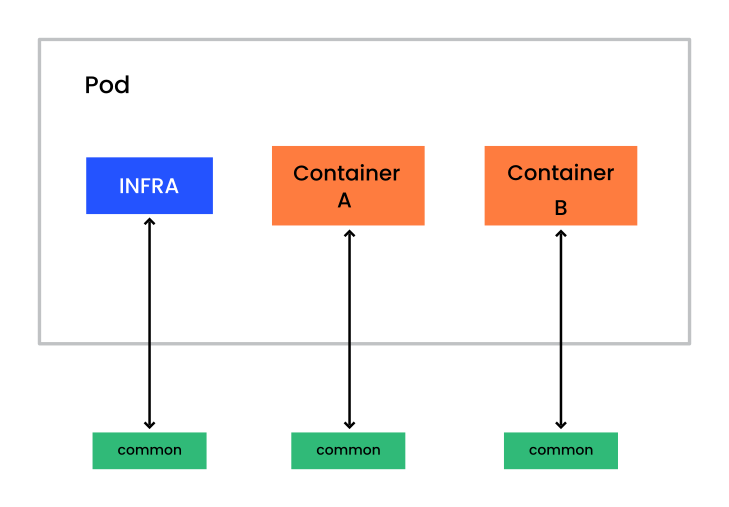

Podman makes it possible to group containers into pods. Pods share the same network interface. A typical scenario for grouping containers into a pod is a container that runs a database and a container with a client that accesses the database. For further information about pods, refer to Section 21.1, “Single container host with Podman”.

14.1 Podman installation #

To install Podman, make sure you have the SLE Containers Module enabled (see Section 13, “Registration and online repositories”), and run the command sudo zypper in podman. Then run podman info to check whether Podman has been installed successfully.

By default, Podman launches containers as the current user. For unprivileged users, this means launching containers in rootless mode. Support for rootless containers is enabled for all newly created users in SLE by default, and no additional steps are necessary.

In case Podman fails to launch containers in rootless mode, check whether an entry for the current user is present in /etc/subuid:

> grep $(id -nu) /etc/subuid

user:10000:65536

When no entry is found, add the required sub-UID and sub-GID entries via the following command:

> sudo usermod --add-subuids 100000-165535 --add-subgids 100000-165535 $(id -nu)

To enable the change, reboot the machine or stop the session of the current user. To do the latter, run loginctl list-sessions | grep USER and note the session ID. Then run loginctl kill-session SESSION_ID to stop the session.

The usermod command above defines a range of local UIDs to which the UIDs allocated to users inside the container are mapped on the host. Note that the ranges defined for different users must not overlap. It is also important that the ranges do not reuse the UID of an existing local user or group. By default, adding a user with the useradd command on SUSE Linux Enterprise automatically allocates sub-UID and sub-GID ranges.
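The non-overlap requirement can be checked mechanically. The following is a minimal sketch, not a SUSE-provided tool: it assumes a file in the /etc/subuid format (NAME:START:COUNT, one entry per line), and the function name check_subid_overlap and the sample users are made up for illustration.

```shell
#!/bin/sh
# Report overlapping ranges in a file that uses the /etc/subuid format
# (NAME:START:COUNT). Returns a non-zero status if any overlap is found.
# check_subid_overlap is a hypothetical helper name.
check_subid_overlap() {
    # Sort entries by their numeric start value, then compare each range
    # with the highest end value seen so far.
    sort -t: -k2,2n "$1" | awk -F: '
        BEGIN { bad = 0; seen = 0 }
        NF == 3 {
            start = $2
            end = $2 + $3 - 1
            if (seen && start <= prev_end) {
                printf "overlap: %s and %s\n", prev_name, $1
                bad = 1
            }
            if (!seen || end > prev_end) {
                prev_end = end
                prev_name = $1
            }
            seen = 1
        }
        END { exit bad }'
}

# Demonstration with made-up entries: carol's range starts inside bob's.
sample="$(mktemp)"
printf '%s\n' 'alice:100000:65536' 'bob:165536:65536' 'carol:200000:65536' > "$sample"
check_subid_overlap "$sample" || echo "ranges need to be corrected"
rm -f "$sample"
```

To check a real system, pass /etc/subuid or /etc/subgid as the argument.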

When using rootless containers with Podman, it is recommended to use cgroups v2. cgroups v1 are limited in terms of functionality compared to v2. For example, cgroups v1 do not allow proper hierarchical delegation to the user's subtrees. Additionally, Podman is unable to read container logs properly with cgroups v1 and the systemd log driver. To enable cgroups v2, add the following to the kernel command line:

systemd.unified_cgroup_hierarchy=1
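To see which cgroups version a host is currently running, one common approach is to look at the file system type mounted at /sys/fs/cgroup. The sketch below assumes GNU stat is available; the helper name cgroup_version is made up for this example.

```shell
#!/bin/sh
# Map the file system type mounted at /sys/fs/cgroup to a cgroups version.
# cgroup2fs is the unified (v2) hierarchy; tmpfs indicates the v1 layout
# (legacy or hybrid). cgroup_version is a hypothetical helper name.
cgroup_version() {
    case "$1" in
        cgroup2fs) echo "v2" ;;
        tmpfs)     echo "v1" ;;
        *)         echo "unknown" ;;
    esac
}

# stat -f reports on the file system; %T prints its type as a name.
fstype="$(stat -fc %T /sys/fs/cgroup 2>/dev/null || true)"
echo "cgroups version: $(cgroup_version "$fstype")"
```

If the result is v1, the kernel parameter shown above has not taken effect yet, which is expected until the next reboot.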

Running a container with Podman in rootless mode on SUSE Linux Enterprise Server may fail, because the container needs read access to the SUSE Customer Center credentials. For example, running a container with the command podman run -it --rm registry.suse.com/suse/sle15 bash and then executing zypper ref results in the following error message:

Refreshing service 'container-suseconnect-zypp'.

Problem retrieving the repository index file for service 'container-suseconnect-zypp':

[container-suseconnect-zypp|file:/usr/lib/zypp/plugins/services/container-suseconnect-zypp]

Warning: Skipping service 'container-suseconnect-zypp' because of the above error.

Warning: There are no enabled repositories defined.

Use 'zypper addrepo' or 'zypper modifyrepo' commands to add or enable repositories

To solve the problem, grant the current user the required access rights by running the following command on the host:

> sudo setfacl -m u:$(id -nu):r /etc/zypp/credentials.d/*

Log out and log in again to apply the changes.

To give multiple users the required access, create a dedicated group using the groupadd GROUPNAME command. Then use the following commands to change the group ownership and rights of files in the /etc/zypp/credentials.d/ directory.

> sudo chgrp GROUPNAME /etc/zypp/credentials.d/*

> sudo chmod g+r /etc/zypp/credentials.d/*

You can then grant a specific user read access by adding them to the created group.

14.1.1 Tips and tricks for rootless containers #

Podman remaps user IDs with rootless containers. In the following example, Podman remaps the current user to the default user in the container:

> podman run --rm -it registry.suse.com/bci/bci-base id

uid=0(root) gid=0(root) groups=0(root)

Note that even if you are root in the container, you cannot gain superuser privileges outside of it.

This user remapping can have undesired side effects when sharing data with the host, where the shared files belong to different user IDs in the container and on the host. The issue can be solved using the command-line flag --userns=keep-id that makes it possible to keep the current user ID in the container:

> podman run --userns=keep-id --rm -it registry.suse.com/bci/bci-base id

uid=1000(user) gid=1000(users) groups=1000(users)

The flag --userns=keep-id has a similar effect when used with bind mounts:

> podman run --rm -it -v $(pwd):/share/ registry.suse.com/bci/bci-base stat /share/

File: /share/

Size: 318 Blocks: 0 IO Block: 4096 directory

Device: 2ch/44d Inode: 3506170 Links: 1

Access: (0755/drwxr-xr-x) Uid: ( 0/ root) Gid: ( 0/ root)

Access: 2023-05-03 12:52:18.636392618 +0000

Modify: 2023-05-03 12:52:17.178380923 +0000

Change: 2023-05-03 12:52:17.178380923 +0000

Birth: 2023-05-03 12:52:15.852370288 +0000

> podman run --userns=keep-id --rm -it -v $(pwd):/share/ registry.suse.com/bci/bci-base stat /share/

File: /share/

Size: 318 Blocks: 0 IO Block: 4096 directory

Device: 2ch/44d Inode: 3506170 Links: 1

Access: (0755/drwxr-xr-x) Uid: ( 1000/ user) Gid: ( 1000/ users)

Access: 2023-05-03 12:52:18.636392618 +0000

Modify: 2023-05-03 12:52:17.178380923 +0000

Change: 2023-05-03 12:52:17.178380923 +0000

Birth: 2023-05-03 12:52:15.852370288 +0000

Podman stores the containers' data in the storage graph root (default is ~/.local/share/containers/storage). Because of the way Podman remaps user IDs in rootless containers, the graph root may contain files that are not owned by your current user but by a user ID in the sub-UID region assigned to your user. As these files do not belong to your current user, they can be inaccessible to you.
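To work out which host user ID owns such a file, it helps to spell out the default rootless mapping: container UID 0 corresponds to the user running Podman, and container UID N (for N of 1 or higher) corresponds to the N-th ID of that user's sub-UID range. The sketch below is illustrative arithmetic only. The helper name host_uid and the sample values (UID 1000, range 100000:65536) are assumptions, and the mapping differs when --userns=keep-id is used.

```shell
#!/bin/sh
# Translate a container UID to the host UID under the default rootless mapping:
#   container UID 0      -> UID of the user running Podman
#   container UID N >= 1 -> SUBUID_START + N - 1
# Usage: host_uid CONTAINER_UID USER_UID SUBUID_START SUBUID_COUNT
# host_uid is a hypothetical helper name.
host_uid() {
    if [ "$1" -eq 0 ]; then
        echo "$2"
    elif [ "$1" -le "$4" ]; then
        echo $(( $3 + $1 - 1 ))
    else
        # The container UID lies outside the allocated sub-UID range.
        echo "unmapped"
        return 1
    fi
}

# Assumed example values: user UID 1000 with the entry user:100000:65536.
host_uid 0 1000 100000 65536      # container root
host_uid 999 1000 100000 65536    # an account with UID 999 inside the container
```

With these sample values, the two calls print 1000 and 100998, so a file created by UID 999 in the container appears on the host as owned by UID 100998.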

To read or modify any file in the graph root, enter a shell as follows:

> podman unshare bash

> id

uid=0(root) gid=0(root) groups=0(root),65534(nobody)

Note that podman unshare performs the same user remapping as podman run does when launching a rootless container. You cannot gain elevated privileges via podman unshare.

Do not modify files in the graph root as this can corrupt Podman's internal state and render your containers, images and volumes inoperable.

14.1.2 Caveats of rootless containers #

Because unprivileged users cannot configure network namespaces on Linux, Podman relies on a userspace network implementation called slirp4netns. It emulates the full TCP/IP stack and can cause a heavy performance degradation for workloads relying on high network transfer rates. This means that rootless containers suffer from slow network transfers.

On Linux, unprivileged users cannot open ports below port number 1024. This limitation also applies to Podman, so by default, rootless containers cannot expose ports below port number 1024. You can remove this limitation until the next reboot using the following command: sudo sysctl -w net.ipv4.ip_unprivileged_port_start=0.

To remove the limitation permanently, add the line net.ipv4.ip_unprivileged_port_start=0 to a .conf file in the /etc/sysctl.d/ directory and run sudo sysctl --system to apply it.

Note that this allows all unprivileged applications to bind to ports below 1024.
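Before publishing a port from a rootless container, you can compare it with the current threshold. The following sketch reads the value from /proc and falls back to 1024 when the file is not available. The helper name port_allowed and the sample ports are chosen for illustration only.

```shell
#!/bin/sh
# Succeeds if an unprivileged process may bind to PORT, given the value of
# net.ipv4.ip_unprivileged_port_start (START).
# Usage: port_allowed PORT START
# port_allowed is a hypothetical helper name.
port_allowed() {
    [ "$1" -ge "$2" ]
}

# Read the current threshold; fall back to the default of 1024 when the
# /proc entry cannot be read.
start="$(cat /proc/sys/net/ipv4/ip_unprivileged_port_start 2>/dev/null || echo 1024)"

for port in 80 8080; do
    if port_allowed "$port" "$start"; then
        echo "port $port: can be published by a rootless container"
    else
        echo "port $port: below the unprivileged port start ($start)"
    fi
done
```

An alternative that avoids changing the sysctl value is to publish a high host port, for example with -p 8080:80, and leave the privileged port range untouched.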

14.1.3 podman-docker #

Because Podman is compatible with Docker Open Source Engine, it features the same command-line interface. You can also install the package podman-docker that allows you to use an emulated Docker CLI with Podman. For example, the docker pull command, which fetches a container image from a registry, executes podman pull instead. The docker build command executes podman build, etc.

Podman also features a Docker Open Source Engine compatible socket that can be launched using the following command:

> sudo systemctl start podman.socket

The Podman socket can be used by applications designed to communicate with Docker Open Source Engine to launch containers transparently via Podman. The Podman socket can be used to launch containers using docker compose, without running Docker Open Source Engine.

14.2 Obtaining container images #

14.2.1 Configuring container registries #

By default, Podman is configured to use SUSE Registry only. To make Podman search the SUSE Registry first and use Docker Hub as a fallback, make sure that the /etc/containers/registries.conf file contains the following configuration:

unqualified-search-registries = ["registry.suse.com", "docker.io"]
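To confirm the search order that is actually configured, you can print the list from the configuration file. This is a rough sketch rather than a full TOML parser: it assumes the whole array is written on a single line, as in the example above, and the function name search_registries is made up.

```shell
#!/bin/sh
# Print the registries listed in unqualified-search-registries, one per
# line, in search order. Assumes the array is written on a single line.
# search_registries is a hypothetical helper name.
search_registries() {
    sed -n 's/^[[:space:]]*unqualified-search-registries[[:space:]]*=[[:space:]]*\[\(.*\)\].*$/\1/p' "$1" |
        tr ',' '\n' |
        sed 's/^[[:space:]]*"//; s/"[[:space:]]*$//'
}

# Use the file given as the first argument, or the system-wide default.
conf="${1:-/etc/containers/registries.conf}"
if [ -r "$conf" ]; then
    search_registries "$conf"
fi
```

For the configuration shown above, the function prints registry.suse.com followed by docker.io.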

14.2.2 Searching images in registries #

The podman search command allows you to list available container images in the registries defined in /etc/containers/registries.conf.

To search in all registries:

podman search go

To search in a specific registry:

podman search registry.suse.com/go

14.2.3 Downloading (pulling) images #

The podman pull command pulls an image from an image registry:

> podman pull REGISTRY:PORT/NAMESPACE/NAME:TAG

For example:

> podman pull registry.suse.com/bci/bci-base

Note that if you do not specify a tag, Podman pulls the latest tag.
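Scripts that process image references need to tell the optional registry port apart from the tag, because both are introduced by a colon. The sketch below shows one way to do this with POSIX parameter expansion. The function name split_image_ref and the host registry.example.com are hypothetical, and references that use a digest (NAME@sha256:...) are not handled.

```shell
#!/bin/sh
# Split an image reference of the form REGISTRY:PORT/NAMESPACE/NAME:TAG into
# its name and tag. A colon marks a tag only when it appears after the last
# slash; otherwise it belongs to the registry port.
# split_image_ref is a hypothetical helper name.
split_image_ref() {
    ref="$1"
    last="${ref##*/}"    # final path component, for example bci-base:15.4
    case "$last" in
        *:*)
            name="${ref%:*}"
            tag="${last##*:}"
            ;;
        *)
            # No tag given: fall back to latest, as described above.
            name="$ref"
            tag="latest"
            ;;
    esac
    printf '%s %s\n' "$name" "$tag"
}

split_image_ref registry.suse.com/bci/bci-base:15.4
split_image_ref registry.example.com:5000/bci/bci-base
```

The first call prints the name and the tag 15.4. The second call keeps the port as part of the name and reports the tag latest.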

14.3 Renaming images and image tags #

Tags are used to assign descriptive names to container images, thus making it easier to identify individual images.

Pull the SLE BCI-Base image from SUSE Registry:

> podman pull registry.suse.com/bci/bci-base

Trying to pull registry.suse.com/bci/bci-base:latest...

Getting image source signatures

Copying blob bf6ca87723f2 done

Copying config 34578a383c done

Writing manifest to image destination

Storing signatures

34578a383c7b6fdcb85f90fbad59b7e7a16071cf47843688e90fe20ff64a684

List the pulled images:

> podman images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.suse.com/bci/bci-base latest 34578a383c7b 22 hours ago 122 MB

Rename the SLE BCI-Base image to my-base:

podman tag 34578a383c7b my-base

podman images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.suse.com/bci/bci-base latest 34578a383c7b 22 hours ago 122 MB

localhost/my-base latest 34578a383c7b 22 hours ago 122 MB

Add a custom tag 1 (indicating that this is version 1 of the image) to my-base:

> podman tag 34578a383c7b my-base:1

> podman images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.suse.com/bci/bci-base latest 34578a383c7b 22 hours ago 122 MB

localhost/my-base latest 34578a383c7b 22 hours ago 122 MB

localhost/my-base 1 34578a383c7b 22 hours ago 122 MB

Note that the default tag latest is still present.

14.4 Deploying container images #

Similar to Docker Open Source Engine, Podman can run containers in an interactive mode, allowing you to inspect and work with an image. To run the suse/sle15 image in interactive mode, use the following command:

> podman run --rm -ti suse/sle15

Rootful containers may occasionally become inaccessible from the outside. The issue is caused by firewalld reloading its permanent rules and discarding any temporary rules created by Podman's networking back-end (either CNI or Netavark).

A temporary workaround is to reload the Podman network using the podman network reload --all command.

If you use Netavark 1.9.0 or higher as the network back-end, a permanent fix to the problem is to use the netavark-firewalld-reload.service service. Enable and start the service as follows:

# systemctl enable netavark-firewalld-reload.service

# systemctl restart netavark-firewalld-reload.service

You can check which back-end and version you are using by running podman info --format "{{.Host.NetworkBackend}}" and podman info --format "{{.Host.NetworkBackendInfo.Version}}", respectively.

We recommend adding permanent firewall rules for containers you want to be accessible from outside of the host. This ensures that the rules persist on firewall reloads and system reboots. This approach also offers greater flexibility (for example, it allows you to assign the rules to a certain firewalld zone).

To make a rootful container on an IBM Z system work with applications that require access to audit (for example, OpenSSH), you must run the container with the --cap-add=AUDIT_WRITE parameter. For example: podman run --cap-add=AUDIT_WRITE -it IMAGE.

14.5 Building images with Podman #

Podman can build images from a Dockerfile. The podman build command behaves like docker build, and it accepts the same options.

Podman's companion tool Buildah provides an alternative way to build images. For further information about Buildah, refer to Section 18, “Buildah overview”.

15 Setting up Docker Open Source Engine #

15.1 Preparing the host #

Before installing any Docker-related packages, you need to enable the Containers Module:

Container orchestration is a part of Docker Open Source Engine. Even though this feature is available in SUSE Linux Enterprise, it is not supported by SUSE, and is only provided as a technology preview. Use Kubernetes for container orchestration. For details, refer to the Kubernetes documentation.

15.1.1 Installing and configuring Docker Open Source Engine #

Install the docker package:

> sudo zypper install docker

Enable the Docker service, so it starts automatically at boot time:

> sudo systemctl enable docker.service

This also enables docker.socket.

Open the /etc/sysconfig/docker file. Search for the parameter DOCKER_OPTS and add --insecure-registry ADDRESS_OF_YOUR_REGISTRY.

Add CA certificates to the directory /etc/docker/certs.d/REGISTRY_ADDRESS:

> sudo cp CA /etc/pki/trust/anchors/

Copy the CA certificates to your system:

> sudo update-ca-certificates

Start the Docker service:

> sudo systemctl start docker.service

This also starts docker.socket.

The Docker daemon listens on a local socket accessible only by the root user and by the members of the docker group. The docker group is automatically created during package installation.

To allow a certain user to connect to the local Docker daemon, use the following command:

> sudo /usr/sbin/usermod -aG docker USERNAME

This allows the user to communicate with the local Docker daemon.

15.2 Configuring the network #

To give the containers access to the external network, enable the ipv4 ip_forward rule.

15.2.1 How Docker Open Source Engine interacts with iptables #

To learn more about how containers interact with each other and the system firewall, see the Docker documentation.

It is also possible to prevent Docker Open Source Engine from manipulating iptables. See the Docker documentation.

15.3 Storage drivers #

Docker Open Source Engine supports different storage drivers:

vfs: This driver is automatically used when the Docker host file system does not support copy-on-write. This driver is simpler than the others listed and does not offer certain advantages of Docker Open Source Engine such as shared layers. It is a slow but reliable driver.

devicemapper: This driver relies on the device-mapper thin provisioning module. It supports copy-on-write, so it provides all the advantages of Docker Open Source Engine.

btrfs: This driver relies on Btrfs to offer all the features required by Docker Open Source Engine. To use this driver, the /var/lib/docker directory must be on a Btrfs file system.

SUSE Linux Enterprise uses the Btrfs file system by default. This forces Docker Open Source Engine to use the btrfs driver.

It is possible to specify what driver to use by changing the value of the DOCKER_OPTS variable defined in the /etc/sysconfig/docker file. This can be done either manually or using YaST by browsing to the › › › › menu and entering the -s storage_driver string.

For example, to enable the devicemapper driver, enter the following text:

DOCKER_OPTS="-s devicemapper"

/var/lib/docker

It is recommended to mount /var/lib/docker on a separate partition or volume. In case of file system corruption, this allows the operating system to run Docker Open Source Engine unaffected.

If you choose the Btrfs file system for /var/lib/docker, it is strongly recommended to create a subvolume for it. This ensures that the directory is excluded from file system snapshots. If you do not exclude /var/lib/docker from snapshots, there is a risk of the file system running out of disk space soon after you start deploying containers. Moreover, a rollback to a previous snapshot will also reset the Docker database and images. For more information, see https://documentation.suse.com/sles/html/SLES-all/cha-snapper.html#sec-snapper-setup-customizing-new-subvolume.

15.4 Updates #

All updates to the docker package are marked as interactive (that is, no automatic updates) to avoid accidental updates that can break running container workloads. Stop all running containers before applying a Docker Open Source Engine update.

To prevent data loss, avoid workloads that rely on containers that automatically start after a Docker Open Source Engine update. Although it is technically possible to keep containers running during an update via the --live-restore option, such updates can introduce regressions. SUSE does not support this feature.

16 Configuring image storage #

Before creating custom images, decide where you want to store images. A simple solution is to push images to Docker Hub. By default, all images pushed to Docker Hub are public. Make sure not to publish sensitive data or software not licensed for public use.

You can restrict access to custom container images with the following:

Docker Hub allows creating private repositories for paid subscribers.

An on-site Docker Registry allows storing all the container images used by your organization.

Instead of using Docker Hub, you can run a local instance of Docker Registry, an open source platform for storing and retrieving container images.

16.1 Running a Docker Registry #

The SUSE Registry provides a container image that makes it possible to run a local Docker Registry as a container. It stores the pushed images in a container volume corresponding to the directory /var/lib/docker-registry. It is recommended to either create a named volume for the registry or to bind mount a persistent directory on the host to /var/lib/docker-registry in the container. This ensures that pushed images persist after the container is deleted.

Run the following command to pull the registry container image from the SUSE Registry and start a container that can be accessed on port 5000 with the container storage bind mounted locally to /PATH/DIR/:

> podman run -d --restart=always --name registry -p 5000:5000 \

-v /PATH/DIR:/var/lib/docker-registry registry.suse.com/suse/registry

Alternatively, create a named volume registry for the SUSE Registry container as follows:

> podman run -d --restart=always --name registry -p 5000:5000 \

-v registry:/var/lib/docker-registry registry.suse.com/suse/registry

To make it easier to manage the registry, create a corresponding systemd unit:

> podman generate systemd registry | \

sudo tee /etc/systemd/system/suse_registry.service

Enable and start the registry service, then verify its status:

> sudo systemctl enable suse_registry.service

> sudo systemctl start suse_registry.service

> sudo systemctl status suse_registry.service

For more details about Docker Registry and its configuration, see the official documentation at https://docs.docker.com/registry/.

16.2 Limitations #

Docker Registry has two major limitations:

It lacks any form of authentication. That means everybody with access to Docker Registry can push images to it and pull images from it. That includes overwriting existing images. It is recommended to set up some form of access restriction as described in the upstream documentation https://distribution.github.io/distribution/about/deploying/#restricting-access.

It is not possible to see which images have been pushed to Docker Registry. You need to keep a record of what is being stored on it. There is also no search functionality.

17 Verifying container images #

Verifying container images allows you to confirm their provenance, which helps secure the supply chain. This chapter provides information on signing and verifying container images.

17.1 Verifying SLE Base Container Images with Docker #

Signatures for images available through SUSE Registry are stored in the Notary. You can verify the signature of a specific image using the following command:

> docker trust inspect --pretty registry.suse.com/suse/IMAGE:TAG

For example, the command docker trust inspect --pretty

registry.suse.com/suse/sle15:latest verifies the signature of

the latest SLE15 base image.

To automatically validate an image when you pull it, set the environment variable

DOCKER_CONTENT_TRUST to 1. For

example:

> env DOCKER_CONTENT_TRUST=1 docker pull registry.suse.com/suse/sle15:latest
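To apply the same check to every pull in a shell session, the variable can be exported once instead of being prefixed to each command. A minimal sketch:

```shell
# Enable Docker Content Trust for all subsequent docker commands in this shell.
export DOCKER_CONTENT_TRUST=1

# Pulls now fail unless the image has valid trust data, for example:
#   docker pull registry.suse.com/suse/sle15:latest
```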

17.2 Verifying SLE Base Container Images with Cosign #

To verify a SLE BCI, run Cosign in a container. The command below fetches the Cosign container image from the SUSE Registry, which includes the SUSE signing key, and uses it to verify the latest SLE BCI-Base container image.

> podman run --rm -it registry.suse.com/suse/cosign verify \

--key /usr/share/pki/containers/suse-container-key.pem \

registry.suse.com/bci/bci-base:latest | tail -1 | jq

[
  {
    "critical": {
      "identity": {
        "docker-reference": "registry.suse.com/bci/bci-base"
      },
      "image": {
        "docker-manifest-digest": "sha256:52a828600279746ef669cf02a599660cd53faf4b2430a6b211d593c3add047f5"
      },
      "type": "cosign container image signature"
    },
    "optional": {
      "creator": "OBS"
    }
  }
]

The signing key can be used to verify all SLE BCIs, and it also ships with

SUSE Linux Enterprise (the

/usr/share/pki/containers/suse-container-key.pem

file).
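The digest reported by a successful verification can be used to pin the image, so that later pulls refer to exactly the manifest that was checked. The sketch below assumes that Cosign's JSON output (as shown above) is available on standard input. The function names manifest_digest, pinned_ref, and verify_and_pull are hypothetical, and grep is used for extraction only to keep the example free of additional dependencies.

```shell
#!/bin/sh
# Path of the signing key inside the cosign container, as used in the command above.
KEY=/usr/share/pki/containers/suse-container-key.pem

# Read cosign's JSON output on stdin and print the first sha256 manifest digest.
manifest_digest() {
    grep -o 'sha256:[0-9a-f]\{64\}' | head -n 1
}

# Build a digest-pinned reference: REPOSITORY@sha256:... (any tag is dropped).
pinned_ref() {
    repo="${1%@*}"          # strip an existing digest, if any
    case "${repo##*/}" in   # strip a tag from the last path component only
        *:*) repo="${repo%:*}" ;;
    esac
    printf '%s@%s\n' "$repo" "$2"
}

# Verify IMAGE with the SUSE cosign container, then pull it by digest.
# cosign exits with a non-zero status if no signature matches, so nothing is pulled then.
verify_and_pull() {
    out=$(podman run --rm registry.suse.com/suse/cosign verify --key "$KEY" "$1") || return 1
    digest=$(printf '%s\n' "$out" | manifest_digest)
    [ -n "$digest" ] && podman pull "$(pinned_ref "$1" "$digest")"
}
```

For example, verify_and_pull registry.suse.com/bci/bci-base:latest pulls registry.suse.com/bci/bci-base@sha256:... only if the signature check succeeds.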

You can also check SLE BCIs against Rekor, the immutable, tamper-resistant transparency ledger. For example:

> podman run --rm -it -e COSIGN_EXPERIMENTAL=1 registry.suse.com/suse/cosign \

verify --key /usr/share/pki/containers/suse-container-key.pem \

registry.suse.com/bci/bci-base:latest | tail -1 | jq

[
  {
    "critical": {
      "identity": {
        "docker-reference": "registry.suse.com/bci/bci-base"
      },
      "image": {
        "docker-manifest-digest": "sha256:52a828600279746ef669cf02a599660cd53faf4b2430a6b211d593c3add047f5"
      },
      "type": "cosign container image signature"
    },
    "optional": {
      "creator": "OBS"
    }
  }
]

If verification fails, the output of the cosign verify

command is similar to the one below.

Error: no matching signatures:

crypto/rsa: verification error

main.go:62: error during command execution: no matching signatures:

crypto/rsa: verification error

17.3 Verifying Helm charts with Cosign #

Cosign can also be used to verify Helm charts. This can be done using the following command:

> podman run --rm -it registry.suse.com/suse/cosign verify --key /usr/share/pki/containers/suse-container-key.pem registry.suse.com/path/to/chart

17.4 Verifying SLE Base Container Images with Podman #

Before you can verify SLE BCIs using Podman, you must specify

registry.suse.com as the registry for image

verification.

Skip this step on SUSE Linux Enterprise, as the correct configuration is already in place.

To do this, add the following configuration to

/etc/containers/registries.d/default.yaml:

docker:
  registry.suse.com:
    use-sigstore-attachments: true

Instead of editing default.yaml, you can create

a new file in /etc/containers/registries.d/ with a

filename of your choice.
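As a sketch of the drop-in approach, the function below writes the same setting to a separate file. The file name suse.yaml and the function name write_sigstore_dropin are hypothetical. It is assumed here that drop-in files in this directory need the .yaml extension, and that the function is run with root privileges when it targets the system directory.

```shell
#!/bin/sh
# Hypothetical helper: write a registries.d drop-in that enables sigstore
# attachments for registry.suse.com. DIR defaults to the system location.
write_sigstore_dropin() {
    dir="${1:-/etc/containers/registries.d}"
    cat > "$dir/suse.yaml" <<'EOF'
docker:
  registry.suse.com:
    use-sigstore-attachments: true
EOF
}
```

Passing a scratch directory as the first argument makes it possible to inspect the generated file before installing it.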

Next, modify the

/etc/containers/policy.json

file. Under the docker attribute, add the

registry.suse.com configuration similar to the

following:

{
  "default": [
    {
      "type": "insecureAcceptAnything"
    }
  ],
  "transports": {
    "docker-daemon": {
      "": [
        {
          "type": "insecureAcceptAnything"
        }
      ]
    },
    "docker": {
      "registry.suse.com": [
        {
          "type": "sigstoreSigned",
          "keyPath": "/usr/share/pki/containers/suse-container-key.pem",
          "signedIdentity": {
            "type": "matchRepository"
          }
        }
      ]
    }
  }
}

The specified configuration instructs Podman, skopeo and Buildah to

verify images under the registry.suse.com repository.

This way, Podman checks the validity of the signature using the

specified public key before pulling the image. It rejects the image if

the validation fails.

Do not remove existing entries in

transports.docker. Append the entry for

registry.suse.com to the list.
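One way to add the entry without disturbing existing ones is to let jq merge it into the current policy. This is a sketch under the assumption that jq is installed. The function name add_suse_policy is hypothetical, and the result is printed to standard output so that it can be reviewed before it replaces /etc/containers/policy.json.

```shell
#!/bin/sh
# Hypothetical helper: print POLICY_FILE with the registry.suse.com entry set
# under transports.docker. All other keys and entries are left unchanged.
add_suse_policy() {
    jq '.transports.docker["registry.suse.com"] = [
          {
            "type": "sigstoreSigned",
            "keyPath": "/usr/share/pki/containers/suse-container-key.pem",
            "signedIdentity": { "type": "matchRepository" }
          }
        ]' "$1"
}
```

For example, add_suse_policy /etc/containers/policy.json > /tmp/policy.json writes the merged policy to a temporary file for inspection.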

Fetch the public key used to sign SLE BCIs from SUSE Signing Keys, or use the following command:

> sudo curl -s https://ftp.suse.com/pub/projects/security/keys/container-key.pem \

-o /usr/share/pki/containers/suse-container-key.pem

This step is optional on SUSE Linux Enterprise. The signing key is already

available in

/usr/share/pki/containers/suse-container-key.pem.

Buildah, Podman and skopeo automatically verify every image pulled

from registry.suse.com from now on. There are no

additional steps required.

If verification fails, the command returns an error message as follows:

> podman pull registry.suse.com/bci/bci-base:latest

Trying to pull registry.suse.com/bci/bci-base:latest...

Error: copying system image from manifest list: Source image rejected: Signature for identity registry.suse.com/bci/bci-base is not accepted

If there are no issues with the signed image and your configuration, you can proceed with using the container image.

18 Buildah overview #

Buildah is a tool for building OCI-compliant container images. Buildah can handle the following tasks:

Create containers from scratch or from existing images.

Create an image from a working container or via a Dockerfile.

Build images in the OCI or Docker Open Source Engine image formats.

Mount a working container's root file system for manipulation.

Use the updated contents of a container's root file system as a file system layer to create a new image.

Delete a working container or an image and rename a local container.

Compared to Docker Open Source Engine, Buildah offers the following advantages:

The tool makes it possible to mount a working container's file system, so it becomes accessible by the host.

The process of building container images using Buildah can be automated via scripts by using Buildah subcommands instead of a Containerfile or Dockerfile.

Similar to Podman, Buildah does not require a daemon to run and can be used by unprivileged users.

It is possible to build images inside a container without mounting the Docker socket, which improves security.

Both Podman and Buildah can be used to build container images. While Podman makes it possible to build images using Dockerfiles, Buildah offers an expanded range of image building options and capabilities.

18.1 Buildah installation #

To install Buildah, run the command sudo zypper in

buildah. Run the command buildah --version

to check whether Buildah has been installed successfully.

If you already have Podman installed and set up for use in rootless mode, Buildah can be used in an unprivileged environment without any further configuration. If you need to enable rootless mode for Buildah, run the following command:

> sudo usermod --add-subuids 100000-165535 --add-subgids 100000-165535 USER

This command enables rootless mode for the user specified as USER. After running the command, log out and log in again for the changes to take effect.

The command above defines a range of local UIDs on the host, onto which

the UIDs allocated to users inside the container are mapped. Note that

the ranges defined for different users must not overlap. It is also

important that the ranges do not reuse the UID of any existing local

users or groups. By default, adding a user with the

useradd command on SUSE Linux Enterprise automatically

allocates subUID and subGID ranges.
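The non-overlap requirement can be checked mechanically. The sketch below assumes the usual /etc/subuid and /etc/subgid format of one USER:START:COUNT entry per line. In this format, the range 100000-165535 from the command above corresponds to a start of 100000 and a count of 65536. The function name subid_overlaps is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: report overlapping ranges in a subuid/subgid file.
# Prints one line per overlap and returns 1 if any overlap is found, 0 otherwise.
subid_overlaps() {
    sort -t: -k2,2n "$1" | awk -F: '
        # An entry overlaps if it starts before the highest end seen so far.
        NR > 1 && $2 < prev_end { printf "%s overlaps %s\n", $1, prev_user; bad = 1 }
        # Track the entry whose range reaches furthest (the end is exclusive).
        $2 + $3 > prev_end      { prev_end = $2 + $3; prev_user = $1 }
        END                     { exit (bad ? 1 : 0) }'
}
```

For example, subid_overlaps /etc/subuid && subid_overlaps /etc/subgid succeeds only if neither file contains overlapping ranges.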

In rootless mode, Buildah commands must be executed in a modified

user namespace of the user. To enter this user namespace, run the

command buildah unshare. Otherwise, the

buildah mount command will fail.
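As a minimal sketch of this workflow, the function below enters the user namespace, mounts the root file system of a new working container, lists a directory in it, and cleans up. The function name peek_rootfs and the choice of registry.suse.com/bci/bci-base as the default image are illustrative assumptions.

```shell
#!/bin/sh
# Hypothetical helper: list /etc inside a fresh working container as an unprivileged user.
peek_rootfs() {
    image="${1:-registry.suse.com/bci/bci-base}"
    # buildah unshare runs the given command inside the modified user namespace,
    # which is what allows buildah mount to succeed in rootless mode.
    buildah unshare -- sh -c '
        ctr=$(buildah from "$1") || exit 1
        mnt=$(buildah mount "$ctr") && ls "$mnt/etc"
        buildah umount "$ctr"
        buildah rm "$ctr"
    ' sh "$image"
}
```

Calling peek_rootfs without arguments uses the default image. Running buildah unshare without a command opens an interactive shell in the same namespace instead.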

18.2 Building images with Buildah #

Instead of a special file with instructions, Buildah uses individual commands to build an image. Building an image with Buildah involves the following steps:

run a container based on the specified image

edit the container (install packages, configure settings, etc.)

configure the container options

commit all changes into a new image

While this process may include additional steps, such as mounting the container's file system and working with it, the basic workflow logic remains the same.

The following example can give you a general idea of how to build an image with Buildah.

container=$(buildah from suse/sle15)                       # (1)
buildah run $container zypper up                           # (2)
buildah copy $container . /usr/src/example/                # (3)
buildah config --workingdir /usr/src/example $container    # (4)
buildah config --port 8000 $container
buildah config --cmd "php -S 0.0.0.0:8000" $container
buildah config --label maintainer="Tux" $container         # (5)
buildah config --label version="0.1" $container
buildah commit $container example                          # (6)
buildah rm $container                                      # (7)

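(1) Create a container (also called a working container) based on the specified image (in this case, suse/sle15).

(2) Run a command in the working container you just created. In this example, Buildah runs the zypper up command.

(3) Copy files and directories to the specified location in the container. In this example, Buildah copies the entire contents of the current directory to /usr/src/example/.

(4) The buildah config commands set container options. In this example, they define the working directory, the exposed port, and the command to run.

(5) The buildah config --label commands assign labels to the container. In this example, they set the maintainer and version labels.

(6) Create an image from the working container by committing all the modifications.

(7) Delete the working container.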

19 Creating custom container images #

To create a custom image, you need a base image of SUSE Linux Enterprise Server. You can use any of the pre-built SUSE Linux Enterprise Server images.

19.1 Pulling base SUSE Linux Enterprise Server images #

To obtain a pre-built base image, use the following command:

> podman pull registry.suse.com/suse/IMAGENAME

For example, for SUSE Linux Enterprise Server 15, the command is as follows:

> podman pull registry.suse.com/suse/sle15

For information on obtaining specific base images, refer to Section 3, “Introduction to SLE Base Container Images”.

When the container image is ready, you can customize it as described in Section 19.2, “Customizing container images”.

19.2 Customizing container images #

19.2.1 Repositories and registration #

The pre-built images do not have any repositories configured and do not

include any modules or extensions. They contain a

zypper

service that contacts either the SUSE Customer Center or a

Repository Mirroring Tool (RMT) server, according to the

configuration of the SUSE Linux Enterprise Server host that runs the container. The service

obtains the list of repositories available for the product used by the

container image. You can also directly declare extensions in your

Dockerfile. For more information, see

Section 13.3, “container-suseconnect”.