Administration Guide

23.1 Conceptual Overview

Trivial Database (TDB) has been used by Samba for many years. It allows multiple applications to write simultaneously. To make sure all write operations are successfully performed and do not collide with each other, TDB uses an internal locking mechanism.

Cluster Trivial Database (CTDB) is a small extension of the existing TDB. CTDB is described by the project as a “cluster implementation of the TDB database used by Samba and other projects to store temporary data”.

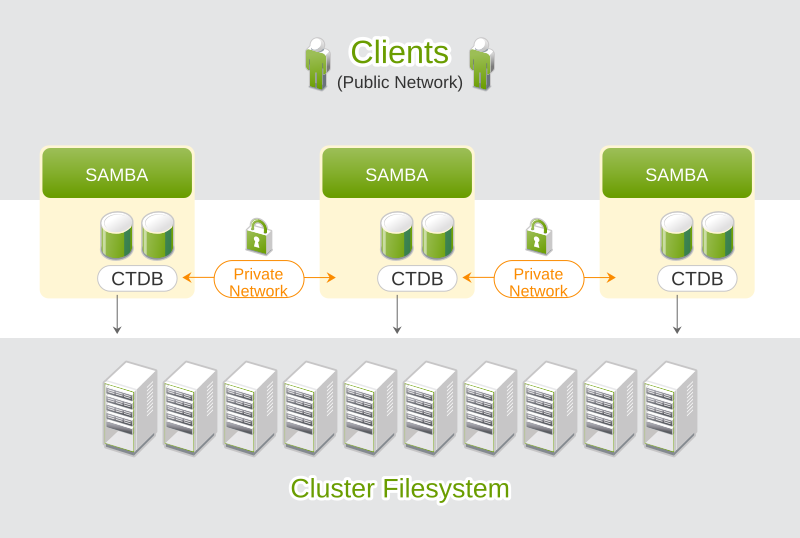

Each cluster node runs a local CTDB daemon. Samba communicates with its local CTDB daemon instead of writing directly to its TDB. The daemons exchange metadata over the network, but actual write and read operations are done on a local copy with fast storage. The concept of CTDB is displayed in Figure 23.1, “Structure of a CTDB Cluster”.

Note: CTDB For Samba Only

The current implementation of the CTDB Resource Agent configures CTDB to only manage Samba. Everything else, including IP failover, should be configured with Pacemaker.

CTDB is only supported for completely homogeneous clusters. For example, all nodes in the cluster need to have the same architecture. You cannot mix x86 with AMD64/Intel 64.

Figure 23.1: Structure of a CTDB Cluster

A clustered Samba server must share certain data:

- Mapping table that associates Unix user and group IDs to Windows users and groups.

- The user database must be synchronized between all nodes.

- Join information for a member server in a Windows domain must be available on all nodes.

- Metadata needs to be available on all nodes, like active SMB sessions, share connections, and various locks.

The goal is that a clustered Samba server with N+1 nodes is faster than one with only N nodes, and that a cluster with a single node is no slower than an unclustered Samba server.

23.2 Basic Configuration

Note: Changed Configuration Files

The CTDB Resource Agent automatically changes /etc/sysconfig/ctdb. Use crm ra info CTDB to list all parameters that can be specified for the CTDB resource.

To set up a clustered Samba server, proceed as follows:

Procedure 23.1: Setting Up a Basic Clustered Samba Server

Prepare your cluster:

Make sure the following packages are installed before you proceed: ctdb, tdb-tools, and samba (needed for smb and nmb resources).

Configure your cluster (Pacemaker, OCFS2) as described in this guide in Part II, “Configuration and Administration”.

Configure a shared file system, like OCFS2, and mount it, for example, on /srv/clusterfs. See Chapter 18, OCFS2 for more information.

If you want to turn on POSIX ACLs, enable it:

For a new OCFS2 file system use:

    root # mkfs.ocfs2 --fs-features=xattr ...

For an existing OCFS2 file system use:

    root # tunefs.ocfs2 --fs-feature=xattr DEVICE

Make sure the acl option is specified in the file system resource. Use the crm shell as follows:

    crm(live)configure# primitive ocfs2-3 ocf:heartbeat:Filesystem params options="acl" ...

Make sure the services ctdb, smb, and nmb are disabled:

    root # systemctl disable ctdb
    root # systemctl disable smb
    root # systemctl disable nmb

Open port 4379 of your firewall on all nodes. This is needed for CTDB to communicate with other cluster nodes.

Create a directory for the CTDB lock on the shared file system:

    root # mkdir -p /srv/clusterfs/samba/

In /etc/ctdb/nodes, list the private IP address of each node in the cluster, one per line:

    192.168.1.10
    192.168.1.11

Configure Samba. Add the following lines in the [global] section of /etc/samba/smb.conf. Use the host name of your choice in place of "CTDB-SERVER" (all nodes in the cluster will appear as one big node with this name, effectively):

    [global]
        # ...
        # settings applicable for all CTDB deployments
        netbios name = CTDB-SERVER
        clustering = yes
        idmap config * : backend = tdb2
        passdb backend = tdbsam
        ctdbd socket = /var/lib/ctdb/ctdb.socket
        # settings necessary for CTDB on OCFS2
        fileid:algorithm = fsid
        vfs objects = fileid
        # ...

Copy the configuration file to all of your nodes by using csync2:

    root # csync2 -xv

For more information, see Procedure 4.6, “Synchronizing the Configuration Files with Csync2”.

Add a CTDB resource to the cluster:

    root # crm configure
    crm(live)configure# primitive ctdb ocf:heartbeat:CTDB params \
        ctdb_manages_winbind="false" \
        ctdb_manages_samba="false" \
        ctdb_recovery_lock="/srv/clusterfs/samba/ctdb.lock" \
        ctdb_socket="/var/lib/ctdb/ctdb.socket" \
        op monitor interval="10" timeout="20" \
        op start interval="0" timeout="90" \
        op stop interval="0" timeout="100"
    crm(live)configure# primitive nmb systemd:nmb \
        op start timeout="60" interval="0" \
        op stop timeout="60" interval="0" \
        op monitor interval="60" timeout="60"
    crm(live)configure# primitive smb systemd:smb \
        op start timeout="60" interval="0" \
        op stop timeout="60" interval="0" \
        op monitor interval="60" timeout="60"
    crm(live)configure# group g-ctdb ctdb nmb smb
    crm(live)configure# clone cl-ctdb g-ctdb meta interleave="true"
    crm(live)configure# colocation col-ctdb-with-clusterfs inf: cl-ctdb cl-clusterfs
    crm(live)configure# order o-clusterfs-then-ctdb inf: cl-clusterfs cl-ctdb
    crm(live)configure# commit

Add a clustered IP address:

    crm(live)configure# primitive ip ocf:heartbeat:IPaddr2 params ip=192.168.2.222 \
        unique_clone_address="true" \
        op monitor interval="60" \
        meta resource-stickiness="0"
    crm(live)configure# clone cl-ip ip \
        meta interleave="true" clone-node-max="2" globally-unique="true"
    crm(live)configure# colocation col-ip-with-ctdb 0: cl-ip cl-ctdb
    crm(live)configure# order o-ip-then-ctdb 0: cl-ip cl-ctdb
    crm(live)configure# commit

If unique_clone_address is set to true, the IPaddr2 resource agent adds a clone ID to the specified address, leading to three different IP addresses. These are usually not needed, but help with load balancing. For further information about this topic, see Section 14.2, “Configuring Load Balancing with Linux Virtual Server”.

Commit your change:

    crm(live)configure# commit

Check the result:

    root # crm status
    Clone Set: cl-storage [dlm]
        Started: [ factory-1 ]
        Stopped: [ factory-0 ]
    Clone Set: cl-clusterfs [clusterfs]
        Started: [ factory-1 ]
        Stopped: [ factory-0 ]
    Clone Set: cl-ctdb [g-ctdb]
        Started: [ factory-1 ]
        Started: [ factory-0 ]
    Clone Set: cl-ip [ip] (unique)
        ip:0 (ocf:heartbeat:IPaddr2): Started factory-0
        ip:1 (ocf:heartbeat:IPaddr2): Started factory-1

Test from a client machine. On a Linux client, run the following command to see if you can copy files from and to the system:

    root # smbclient //192.168.2.222/myshare
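
Two inputs of the procedure above are easy to get wrong by hand: the node list and the [global] settings in smb.conf. The following is a minimal sketch of a pre-flight check, not part of CTDB or Samba. The function names check_nodes_file and smbconf_global_has are hypothetical helpers, and the node check assumes IPv4 entries only, as in the example above.

```shell
#!/bin/sh
# Pre-flight sketch; helper names are illustrative assumptions.

# Succeeds if FILE is non-empty and every line is a dotted-quad IPv4
# address with octets in the range 0..255 (strict: blank lines fail).
check_nodes_file() {
    [ -s "$1" ] || return 1
    awk -F. '
        NF != 4 { bad = 1 }
        {
            for (i = 1; i <= NF; i++)
                if ($i !~ /^[0-9]+$/ || length($i) > 3 || $i + 0 > 255)
                    bad = 1
        }
        END { exit (bad ? 1 : 0) }
    ' "$1"
}

# Succeeds if FILE sets KEY to VALUE inside its [global] section.
# KEY is compared case-insensitively; surrounding blanks are ignored.
smbconf_global_has() {
    awk -v key="$2" -v value="$3" '
        /^[ \t]*[#;]/ { next }                      # skip comment lines
        /^[ \t]*\[/ {                               # section header
            in_global = (tolower($0) ~ /^[ \t]*\[global\]/)
            next
        }
        in_global {
            pos = index($0, "=")
            if (pos == 0) next
            k = substr($0, 1, pos - 1)
            v = substr($0, pos + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (tolower(k) == tolower(key) && v == value) found = 1
        }
        END { exit (found ? 0 : 1) }
    ' "$1"
}

# Possible use on a node, with the paths from this procedure:
#   check_nodes_file /etc/ctdb/nodes || echo "check /etc/ctdb/nodes"
#   smbconf_global_has /etc/samba/smb.conf clustering yes || echo "clustering not enabled"
```

The check only reads the files and changes nothing. It is no substitute for Samba's own configuration validation.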

23.3 Joining an Active Directory Domain

Active Directory (AD) is a directory service for Windows server systems.

The following instructions outline how to join a CTDB cluster to an Active Directory domain:

Create a CTDB resource as described in Procedure 23.1, “Setting Up a Basic Clustered Samba Server”.

Install the samba-winbind package.

Disable the winbind service:

    root # systemctl disable winbind

Define a winbind cluster resource:

    root # crm configure
    crm(live)configure# primitive winbind systemd:winbind \
        op start timeout="60" interval="0" \
        op stop timeout="60" interval="0" \
        op monitor interval="60" timeout="60"
    crm(live)configure# commit

Edit the g-ctdb group and insert winbind between the nmb and smb resources:

    crm(live)configure# edit g-ctdb

Save and close the editor with :wq (vim).

Consult your Windows Server documentation for instructions on how to set up an Active Directory domain. In this example, we use the following parameters:

- AD and DNS server: win2k3.2k3test.example.com

- AD domain: 2k3test.example.com

- Cluster AD member NetBIOS name: CTDB-SERVER

Finally, join your cluster to the Active Directory server:

Procedure 23.2: Joining Active Directory

Make sure the following files are included in Csync2's configuration so that they are installed on all cluster hosts:

    /etc/samba/smb.conf
    /etc/security/pam_winbind.conf
    /etc/krb5.conf
    /etc/nsswitch.conf
    /etc/security/pam_mount.conf.xml
    /etc/pam.d/common-session

You can also use YaST's module for this task, see Section 4.5, “Transferring the Configuration to All Nodes”.

Run YaST and open the module from the entry.

Enter your domain or workgroup settings and finish with .
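
Whether the files named in the first step of this procedure are already covered by Csync2 can be checked with a small script. This is a rough sketch under stated assumptions: csync2_missing_includes is a hypothetical helper, and it only recognizes literal "include PATH;" lines. A file covered through an included parent directory or a pattern is reported as missing even though Csync2 would synchronize it.

```shell
#!/bin/sh
# Sketch: report files that have no literal "include <path>;" line in a
# Csync2 configuration file. The helper name is an illustrative assumption.

# Usage: csync2_missing_includes CONFIG PATH...
# Prints each PATH without a matching include line; returns 1 if any is missing.
csync2_missing_includes() {
    cfg=$1
    shift
    missing=0
    for f in "$@"; do
        if ! awk -v path="$f" '
                $1 == "include" {
                    p = $2
                    sub(/;$/, "", p)        # drop the trailing semicolon
                    if (p == path) found = 1
                }
                END { exit (found ? 0 : 1) }
            ' "$cfg"; then
            printf '%s\n' "$f"
            missing=1
        fi
    done
    return "$missing"
}

# Possible use (the configuration path is an assumption, adjust as needed):
#   csync2_missing_includes /etc/csync2/csync2.cfg \
#       /etc/samba/smb.conf /etc/security/pam_winbind.conf /etc/krb5.conf \
#       /etc/nsswitch.conf /etc/security/pam_mount.conf.xml \
#       /etc/pam.d/common-session
```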

23.4 Debugging and Testing Clustered Samba

To debug your clustered Samba server, the following tools which operate on different levels are available:

ctdb_diagnostics

Run this tool to diagnose your clustered Samba server. Detailed debug messages should help you track down any problems you might have.

The ctdb_diagnostics command searches for the following files which must be available on all nodes:

    /etc/krb5.conf
    /etc/hosts
    /etc/ctdb/nodes
    /etc/sysconfig/ctdb
    /etc/resolv.conf
    /etc/nsswitch.conf
    /etc/sysctl.conf
    /etc/samba/smb.conf
    /etc/fstab
    /etc/multipath.conf
    /etc/pam.d/system-auth
    /etc/sysconfig/nfs
    /etc/exports
    /etc/vsftpd/vsftpd.conf

If the files /etc/ctdb/public_addresses and /etc/ctdb/static-routes exist, they will be checked as well.

ping_pong

Check whether your file system is suitable for CTDB with ping_pong. It performs certain tests of your cluster file system like coherence and performance (see http://wiki.samba.org/index.php/Ping_pong) and gives some indication how your cluster may behave under high load.

send_arp Tool and SendArp Resource Agent

The SendArp resource agent is located in /usr/lib/heartbeat/send_arp (or /usr/lib64/heartbeat/send_arp). The send_arp tool sends out a gratuitous ARP (Address Resolution Protocol) packet and can be used for updating other machines' ARP tables. It can help to identify communication problems after a failover process. If you cannot connect to a node or ping it although it shows the clustered IP address for Samba, use the send_arp command to test if the nodes only need an ARP table update.

For more information, refer to http://wiki.wireshark.org/Gratuitous_ARP.
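
Before running ctdb_diagnostics, it can be useful to see which of the files it looks for are absent on a node. The following sketch is a manual check only and does not reproduce what ctdb_diagnostics does internally. The helper names required_files and report_missing_files are hypothetical; the file list is copied from the ctdb_diagnostics description above.

```shell
#!/bin/sh
# Sketch: list which of the files named above are missing on this node.
# Helper names are illustrative assumptions.

# Prints the file list quoted in the ctdb_diagnostics description.
required_files() {
    cat <<'EOF'
/etc/krb5.conf
/etc/hosts
/etc/ctdb/nodes
/etc/sysconfig/ctdb
/etc/resolv.conf
/etc/nsswitch.conf
/etc/sysctl.conf
/etc/samba/smb.conf
/etc/fstab
/etc/multipath.conf
/etc/pam.d/system-auth
/etc/sysconfig/nfs
/etc/exports
/etc/vsftpd/vsftpd.conf
EOF
}

# Usage: report_missing_files ROOT < list-of-paths
# ROOT is prepended to every path ("" on a live node; a directory prefix
# allows a dry run against a copy). Returns 1 if any file is not readable.
report_missing_files() {
    root=$1
    missing=0
    while IFS= read -r path; do
        [ -n "$path" ] || continue
        if [ ! -r "$root$path" ]; then
            printf 'missing: %s\n' "$path"
            missing=1
        fi
    done
    return "$missing"
}

# Possible use on each node:
#   required_files | report_missing_files ""
```

Running the same command on every node and comparing the output shows quickly whether the nodes differ.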

To test certain aspects of your cluster file system proceed as follows:

Procedure 23.3: Test Coherence and Performance of Your Cluster File System

Start the command ping_pong on one node and replace the placeholder N with the number of nodes plus one. The file ABSPATH/data.txt is available in your shared storage and is therefore accessible on all nodes (ABSPATH indicates an absolute path):

    ping_pong ABSPATH/data.txt N

Expect a very high locking rate as you are running only one node. If the program does not print a locking rate, replace your cluster file system.

Start a second copy of ping_pong on another node with the same parameters.

Expect to see a dramatic drop in the locking rate. If any of the following applies to your cluster file system, replace it:

- ping_pong does not print a locking rate per second,

- the locking rates in the two instances are not almost equal,

- the locking rate did not drop after you started the second instance.

Start a third copy of ping_pong. Add another node and note how the locking rates change.

Kill the ping_pong commands one after the other. You should observe an increase of the locking rate until you get back to the single node case. If you did not get the expected behavior, find more information in Chapter 18, OCFS2.
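
The arithmetic in this procedure is small but easy to get wrong: the second argument is the node count plus one, and two instances should report nearly the same rate. A minimal sketch of both follows. The helper names ping_pong_cmd and rates_almost_equal are hypothetical, and the default tolerance of 10 percent is an assumption chosen for illustration, since the text above does not define "almost equal".

```shell
#!/bin/sh
# Sketch for Procedure 23.3; helper names and the 10% default tolerance
# are illustrative assumptions.

# Usage: ping_pong_cmd ABSOLUTE_FILE NODE_COUNT
# Prints the ping_pong command line with N = NODE_COUNT + 1.
ping_pong_cmd() {
    case $1 in
        /*) ;;
        *) echo "file must be an absolute path" >&2; return 1 ;;
    esac
    case $2 in
        ''|*[!0-9]*|0*) echo "node count must be a positive integer" >&2; return 1 ;;
    esac
    printf 'ping_pong %s %d\n' "$1" "$(($2 + 1))"
}

# Usage: rates_almost_equal RATE_A RATE_B [PERCENT]
# Succeeds if the two integer locking rates differ by at most PERCENT
# of the larger rate.
rates_almost_equal() {
    a=$1
    b=$2
    pct=${3:-10}
    if [ "$a" -ge "$b" ]; then hi=$a; lo=$b; else hi=$b; lo=$a; fi
    [ $(( (hi - lo) * 100 )) -le $(( hi * pct )) ]
}

# Possible use for a two-node cluster with the mount point used in this chapter:
#   ping_pong_cmd /srv/clusterfs/data.txt 2
#   rates_almost_equal 1000 950 && echo "rates are close"
```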