9 Creating Software RAID 10 Devices

This section describes how to set up nested and complex RAID 10 devices. A RAID 10 device consists of nested RAID 1 (mirroring) and RAID 0 (striping) arrays. Nested RAIDs can be set up either as striped mirrors (RAID 1+0) or as mirrored stripes (RAID 0+1). A complex RAID 10 setup also combines mirroring and striping, and adds data security by supporting a higher data redundancy level.

9.1 Creating Nested RAID 10 Devices with mdadm

A nested RAID device consists of a RAID array that uses another RAID array as its basic element, instead of using physical disks. The goal of this configuration is to improve the performance and fault tolerance of the RAID.

Setting up nested RAID levels is not supported by YaST, but can be done by using the mdadm command line tool.

Based on the order of nesting, two different nested RAIDs can be set up. This document uses the following terminology:

RAID 1+0: RAID 1 (mirror) arrays are built first, then combined to form a RAID 0 (stripe) array.

RAID 0+1: RAID 0 (stripe) arrays are built first, then combined to form a RAID 1 (mirror) array.

The following table describes the advantages and disadvantages of RAID 10 nesting as 1+0 versus 0+1. It assumes that the storage objects you use reside on different disks, each with a dedicated I/O capability.

| RAID Level | Description | Performance and Fault Tolerance |
|---|---|---|
| 10 (1+0) | RAID 0 (stripe) built with RAID 1 (mirror) arrays | RAID 1+0 provides high levels of I/O performance, data redundancy, and disk fault tolerance. Because each member device in the RAID 0 is mirrored individually, multiple disk failures can be tolerated, and data remains available as long as the disks that fail are in different mirrors. You can optionally configure a spare for each underlying mirrored array, or configure a spare to serve a spare group that serves all mirrors. |
| 10 (0+1) | RAID 1 (mirror) built with RAID 0 (stripe) arrays | RAID 0+1 provides high levels of I/O performance and data redundancy, but less fault tolerance than 1+0. If multiple disks fail on one side of the mirror, the other side of the mirror is still available. However, if disks are lost concurrently on both sides of the mirror, all data is lost. On the other hand, if you need to perform maintenance or maintain the mirror on a different site, you can take an entire side of the mirror offline and still have a fully functional storage device. Also, if you lose the connection between the two sites, either site operates independently of the other. That is not true if you stripe the mirrored segments, because the mirrors are managed at a lower level. If a device fails, the mirror on that side fails because RAID 0 is not fault-tolerant. Create a new RAID 0 to replace the failed side, then resynchronize the mirrors. |
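To make the fault-tolerance difference concrete, the following POSIX shell sketch enumerates every possible two-disk failure in a four-disk array and counts how many of them each nesting order survives. It assumes the grouping used by the procedures in this chapter (/dev/sdb1 with /dev/sdc1, /dev/sdd1 with /dev/sde1) and models only the rule stated in the table: a RAID 1 group survives while one of its disks remains, and a RAID 0 group is lost with any one of its disks. The function names group_of and count_survivable are hypothetical.

```shell
# Assumed grouping (matches the example device names in this chapter):
#   group 1 = sdb1 sdc1, group 2 = sdd1 sde1
group_of() {
  case "$1" in
    sdb1|sdc1) echo 1 ;;
    sdd1|sde1) echo 2 ;;
  esac
}

# RAID 1+0: groups are mirrors, so a failure pair is fatal only if both
#           disks are in the same mirror.
# RAID 0+1: groups are stripes, so the array survives only if both failed
#           disks are in the same stripe (the other side stays intact).
count_survivable() {
  nested=$1                       # "1+0" or "0+1"
  total=0 ok=0
  set -- sdb1 sdc1 sdd1 sde1      # reuse the positional parameters as the disk list
  while [ $# -gt 1 ]; do
    first=$1
    shift
    for second in "$@"; do        # every unordered pair exactly once
      total=$((total + 1))
      if [ "$(group_of "$first")" = "$(group_of "$second")" ]; then
        same=yes
      else
        same=no
      fi
      case "$nested$same" in
        1+0no|0+1yes) ok=$((ok + 1)) ;;
      esac
    done
  done
  echo "RAID $nested survives $ok of $total two-disk failures"
}

count_survivable 1+0
count_survivable 0+1
```

Running it reports that RAID 1+0 survives 4 of the 6 possible two-disk failures, while RAID 0+1 survives only 2: the cases where both failed disks are on the same side of the mirror.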

9.1.1 Creating Nested RAID 10 (1+0) with mdadm

A nested RAID 1+0 is built by creating two or more RAID 1 (mirror) devices, then using them as component devices in a RAID 0.

If you need to manage multiple connections to the devices, you must configure multipath I/O before configuring the RAID devices. For information, see Chapter 18, Managing Multipath I/O for Devices.

The procedure in this section uses the device names shown in the following table. Replace them with the names of your own devices.

| Raw Devices | RAID 1 (mirror) | RAID 1+0 (striped mirrors) |
|---|---|---|
| /dev/sdb1, /dev/sdc1 | /dev/md0 | /dev/md2 |
| /dev/sdd1, /dev/sde1 | /dev/md1 | |

Open a terminal.

If necessary, create four 0xFD Linux RAID partitions of equal size using a disk partitioner such as parted.

Create two software RAID 1 devices, using two different partitions for each RAID 1 device. At the command prompt, enter these two commands:

```shell
sudo mdadm --create /dev/md0 --run --level=1 --raid-devices=2 /dev/sdb1 /dev/sdc1
sudo mdadm --create /dev/md1 --run --level=1 --raid-devices=2 /dev/sdd1 /dev/sde1
```

Create the nested RAID 1+0 device. At the command prompt, enter the following command using the software RAID 1 devices you created in the previous step:

```shell
sudo mdadm --create /dev/md2 --run --level=0 --chunk=64 \
  --raid-devices=2 /dev/md0 /dev/md1
```

This example sets a chunk size of 64 KB with --chunk=64. If you omit --chunk, current mdadm versions use a default chunk size of 512 KB.

Create a file system on the RAID 1+0 device /dev/md2, for example an XFS file system:

```shell
sudo mkfs.xfs /dev/md2
```

Modify the command to use a different file system.

Edit the /etc/mdadm.conf file, or create it if it does not exist (for example by running sudo vi /etc/mdadm.conf). Add the following lines (if the file already exists, the first line probably already exists):

```
DEVICE containers partitions
ARRAY /dev/md0 UUID=UUID
ARRAY /dev/md1 UUID=UUID
ARRAY /dev/md2 UUID=UUID
```

Replace each UUID placeholder with the UUID of the respective device, which can be retrieved with the following command:

```shell
sudo mdadm -D /dev/DEVICE | grep UUID
```

Edit the /etc/fstab file to add an entry for the RAID 1+0 device /dev/md2. The following example shows an entry for a RAID device with the XFS file system and /data as a mount point:

```
/dev/md2 /data xfs defaults 1 2
```

Mount the RAID device:

```shell
sudo mount /data
```
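The UUID lookup for /etc/mdadm.conf can be scripted. The following sketch defines a hypothetical helper, array_line, that reads the output of mdadm -D from standard input and prints the matching ARRAY line. It assumes the detail output contains a line of the form UUID : xxxxxxxx:xxxxxxxx:xxxxxxxx:xxxxxxxx, which is how mdadm prints the array UUID.

```shell
# array_line DEVICE  (hypothetical helper)
# Reads "mdadm -D DEVICE" output on stdin and prints an mdadm.conf ARRAY line.
array_line() {
  device=$1
  # Split on " : " so that the label is $1 and the value is $2;
  # the UUID itself contains ":" but never " : ".
  uuid=$(awk -F' : ' '$1 ~ /^ *UUID$/ { print $2; exit }')
  if [ -z "$uuid" ]; then
    echo "no UUID found for $device" >&2
    return 1
  fi
  echo "ARRAY $device UUID=$uuid"
}

# Usage (needs root and the arrays created above):
#   for md in /dev/md0 /dev/md1 /dev/md2; do
#     sudo mdadm -D "$md" | array_line "$md"
#   done
```

Review the printed lines before adding them to /etc/mdadm.conf. As an alternative, mdadm --detail --scan prints ready-made ARRAY lines for all running arrays; those lines may also carry additional fields such as the metadata version and the array name.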

9.1.2 Creating Nested RAID 10 (0+1) with mdadm

A nested RAID 0+1 is built by creating two to four RAID 0 (striping) devices, then mirroring them as component devices in a RAID 1.

If you need to manage multiple connections to the devices, you must configure multipath I/O before configuring the RAID devices. For information, see Chapter 18, Managing Multipath I/O for Devices.

In this configuration, spare devices cannot be specified for the underlying RAID 0 devices because RAID 0 cannot tolerate a device loss. If a device fails on one side of the mirror, you must create a replacement RAID 0 device, then add it to the mirror.
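The recovery described above can be sketched as a short sequence of mdadm commands. The hypothetical helper plan_replace_side below only prints the commands so they can be reviewed before running them. The scenario is an assumption for illustration: /dev/sde1 in the RAID 0 side /dev/md1 has failed, and /dev/sdf1 is a new partition of the same size that takes its place next to the surviving /dev/sdd1.

```shell
# plan_replace_side MIRROR SIDE CHUNK NEW_MEMBER...  (hypothetical helper)
# Prints, but does not run, the commands that replace a failed RAID 0 side.
plan_replace_side() {
  mirror=$1 side=$2 chunk=$3
  shift 3                         # the remaining arguments are the new RAID 0 members
  # 1. Drop the broken side from the RAID 1 mirror.
  echo "sudo mdadm $mirror --fail $side --remove $side"
  # 2. Stop the broken RAID 0 device.
  echo "sudo mdadm --stop $side"
  # 3. Re-create the RAID 0 side from the surviving and the new partitions.
  echo "sudo mdadm --create $side --run --level=0 --chunk=$chunk --raid-devices=$# $*"
  # 4. Add it back to the mirror, which starts the resynchronization.
  echo "sudo mdadm $mirror --add $side"
}

plan_replace_side /dev/md2 /dev/md1 64 /dev/sdd1 /dev/sdf1
```

Re-creating /dev/md1 writes new RAID metadata to /dev/sdd1; the old stripe contents are not reused, because the mirror copies the data back from /dev/md0. You can follow the resynchronization in /proc/mdstat.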

The procedure in this section uses the device names shown in the following table. Replace them with the names of your own devices.

| Raw Devices | RAID 0 (stripe) | RAID 0+1 (mirrored stripes) |
|---|---|---|
| /dev/sdb1, /dev/sdc1 | /dev/md0 | /dev/md2 |
| /dev/sdd1, /dev/sde1 | /dev/md1 | |

Open a terminal.

If necessary, create four 0xFD Linux RAID partitions of equal size using a disk partitioner such as parted.

Create two software RAID 0 devices, using two different partitions for each RAID 0 device. At the command prompt, enter these two commands:

```shell
sudo mdadm --create /dev/md0 --run --level=0 --chunk=64 \
  --raid-devices=2 /dev/sdb1 /dev/sdc1
sudo mdadm --create /dev/md1 --run --level=0 --chunk=64 \
  --raid-devices=2 /dev/sdd1 /dev/sde1
```

This example sets a chunk size of 64 KB with --chunk=64. If you omit --chunk, current mdadm versions use a default chunk size of 512 KB.

Create the nested RAID 0+1 device. At the command prompt, enter the following command using the software RAID 0 devices you created in the previous step:

```shell
sudo mdadm --create /dev/md2 --run --level=1 --raid-devices=2 /dev/md0 /dev/md1
```

Create a file system on the RAID 0+1 device /dev/md2, for example an XFS file system:

```shell
sudo mkfs.xfs /dev/md2
```

Modify the command to use a different file system.

Edit the /etc/mdadm.conf file, or create it if it does not exist (for example by running sudo vi /etc/mdadm.conf). Add the following lines (if the file exists, the first line probably already exists, too):

```
DEVICE containers partitions
ARRAY /dev/md0 UUID=UUID
ARRAY /dev/md1 UUID=UUID
ARRAY /dev/md2 UUID=UUID
```

Replace each UUID placeholder with the UUID of the respective device, which can be retrieved with the following command:

```shell
sudo mdadm -D /dev/DEVICE | grep UUID
```

Edit the /etc/fstab file to add an entry for the RAID 0+1 device /dev/md2. The following example shows an entry for a RAID device with the XFS file system and /data as a mount point:

```
/dev/md2 /data xfs defaults 1 2
```

Mount the RAID device:

```shell
sudo mount /data
```

9.2 Creating a Complex RAID 10

YaST (and mdadm with the --level=10 option) creates a single complex software RAID 10 that combines features of both RAID 0 (striping) and RAID 1 (mirroring). Multiple copies of all data blocks are arranged on multiple drives following a striping discipline. Component devices should be the same size.

The complex RAID 10 is similar in purpose to a nested RAID 10 (1+0), but differs in the following ways:

| Feature | Complex RAID 10 | Nested RAID 10 (1+0) |
|---|---|---|
| Number of devices | Allows an even or odd number of component devices | Requires an even number of component devices |
| Component devices | Managed as a single RAID device | Managed as a nested RAID device |
| Striping | Striping occurs in the near, far, or offset layout on component devices. The far layout provides sequential read throughput that scales with the number of drives, rather than the number of RAID 1 pairs. | Striping occurs consecutively across component devices |
| Multiple copies of data | Two or more copies, up to the number of devices in the array | Copies on each mirrored segment |
| Hot spare devices | A single spare can service all component devices | Configure a spare for each underlying mirrored array, or configure a spare to serve a spare group that serves all mirrors. |

9.2.1 Number of Devices and Replicas in the Complex RAID 10

When configuring a complex RAID 10 array, you must specify the number of replicas of each data block that are required. The default number of replicas is two, but the value can range from two up to the number of devices in the array.

You must use at least as many component devices as the number of replicas you specify. However, the number of component devices in a RAID 10 array does not need to be a multiple of the number of replicas of each data block. The effective storage size is the number of devices divided by the number of replicas.

For example, if you specify two replicas for an array created with five component devices, a copy of each block is stored on two different devices. The effective storage size for one copy of all data is 5/2 or 2.5 times the size of a component device.
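The capacity rule above can be expressed as a small calculation. The hypothetical helper raid10_capacity below multiplies the size of one component device by devices / replicas and rejects replica counts outside the allowed range of two up to the number of devices. It ignores the space mdadm reserves for its own metadata, so real arrays come out slightly smaller.

```shell
# raid10_capacity DEVICES REPLICAS SIZE  (hypothetical helper)
# SIZE is the size of one component device, in any unit (GB, TB, ...).
raid10_capacity() {
  devices=$1 replicas=$2 size=$3
  if [ "$replicas" -lt 2 ] || [ "$replicas" -gt "$devices" ]; then
    echo "replicas must be between 2 and $devices" >&2
    return 1
  fi
  # awk handles fractional results such as 5/2 = 2.5, which shell arithmetic cannot
  awk -v d="$devices" -v r="$replicas" -v s="$size" 'BEGIN { printf "%g\n", d / r * s }'
}

raid10_capacity 5 2 1000    # five 1000 GB devices, two replicas
```

For the example in the text, five 1000 GB component devices with two replicas give 2500 GB of usable space.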

9.2.2 Layout

The complex RAID 10 setup supports three different layouts which define how the data blocks are arranged on the disks. The available layouts are near (default), far and offset. They have different performance characteristics, so it is important to choose the right layout for your workload.

9.2.2.1 Near Layout

With the near layout, copies of a block of data are striped near each other on different component devices. That is, multiple copies of one data block are at similar offsets in different devices. Near is the default layout for RAID 10. For example, if you use an odd number of component devices and two copies of data, some copies might be one chunk further into the device.

The near layout for the complex RAID 10 yields read and write performance similar to RAID 0 over half the number of drives.

Near layout with an even number of disks and two replicas:

```
sda1 sdb1 sdc1 sde1
  0    0    1    1
  2    2    3    3
  4    4    5    5
  6    6    7    7
  8    8    9    9
```

Near layout with an odd number of disks and two replicas:

```
sda1 sdb1 sdc1 sde1 sdf1
  0    0    1    1    2
  2    3    3    4    4
  5    5    6    6    7
  7    8    8    9    9
 10   10   11   11   12
```
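The near layout diagrams follow a simple rule, which the sketch below reproduces under that assumption: the R copies of chunk c occupy R consecutive slots, and the slots are filled across the devices row by row. The function near_layout is hypothetical; its arguments are the number of devices, the number of replicas, and the number of rows to print.

```shell
# near_layout DEVICES REPLICAS ROWS  (hypothetical helper)
near_layout() {
  n=$1 r=$2 rows=$3
  row=0
  while [ "$row" -lt "$rows" ]; do
    line="" d=0
    while [ "$d" -lt "$n" ]; do
      slot=$((row * n + d))                # slots are numbered row by row
      line="${line:+$line }$((slot / r))"  # chunk number stored in that slot
      d=$((d + 1))
    done
    echo "$line"
    row=$((row + 1))
  done
}

near_layout 5 2 5
```

With five devices and two replicas, it prints the five rows of the odd-device diagram, from 0 0 1 1 2 down to 10 10 11 11 12.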

9.2.2.2 Far Layout

The far layout stripes data over the early part of all drives, then stripes a second copy of the data over the later part of all drives, making sure that all copies of a block are on different drives. The second set of values starts halfway through the component drives.

With a far layout, the read performance of the complex RAID 10 is similar to a RAID 0 over the full number of drives, but write performance is substantially slower than a RAID 0 because the drive heads must seek more. It is best used for read-intensive workloads such as read-only file servers.

In practice, the write speed of a far-layout RAID 10 is similar to that of other mirrored RAID types, such as RAID 1 and RAID 10 with the near layout, because the kernel's I/O scheduler (elevator) orders the writes more efficiently than raw writing would. RAID 10 with the far layout is therefore well suited for mirrored writing applications.

Far layout with an even number of disks and two replicas:

```
sda1 sdb1 sdc1 sde1
  0    1    2    3
  4    5    6    7
  . . .
  3    0    1    2
  7    4    5    6
```

Far layout with an odd number of disks and two replicas:

```
sda1 sdb1 sdc1 sde1 sdf1
  0    1    2    3    4
  5    6    7    8    9
  . . .
  4    0    1    2    3
  9    5    6    7    8
```
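For two replicas, the far layout diagrams can be reproduced with the sketch below: the first half of each device holds a plain RAID 0 stripe, and the second half holds the same stripe shifted one device to the right (wrapping around at the end), so the two copies of a chunk never share a drive. The function far_layout is hypothetical, covers only the two-replica case shown in the diagrams, and prints ... for the gap between the two halves.

```shell
# far_layout DEVICES ROWS_PER_HALF  (hypothetical helper, two replicas only)
far_layout() {
  n=$1 rows=$2
  for half in 0 1; do
    if [ "$half" -eq 1 ]; then
      echo "..."                    # gap between the two halves of each device
    fi
    row=0
    while [ "$row" -lt "$rows" ]; do
      line="" d=0
      while [ "$d" -lt "$n" ]; do
        # half 0: plain RAID 0 order; half 1: device d holds the chunk
        # that device d-1 holds in the first half
        src=$(( (d - half + n) % n ))
        line="${line:+$line }$((row * n + src))"
        d=$((d + 1))
      done
      echo "$line"
      row=$((row + 1))
    done
  done
}

far_layout 4 2
```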

9.2.2.3 Offset Layout

The offset layout duplicates stripes so that the multiple copies of a given chunk are laid out on consecutive drives and at consecutive offsets. Effectively, each stripe is duplicated and the copies are offset by one device. This should give similar read characteristics to a far layout if a suitably large chunk size is used, but without as much seeking for writes.

Offset layout with an even number of disks and two replicas:

```
sda1 sdb1 sdc1 sde1
  0    1    2    3
  3    0    1    2
  4    5    6    7
  7    4    5    6
  8    9   10   11
 11    8    9   10
```

Offset layout with an odd number of disks and two replicas:

```
sda1 sdb1 sdc1 sde1 sdf1
  0    1    2    3    4
  4    0    1    2    3
  5    6    7    8    9
  9    5    6    7    8
 10   11   12   13   14
 14   10   11   12   13
```
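The offset layout differs from the far layout in where the shifted copy goes: instead of the second half of the devices, it is written in the row directly below the original stripe. The hypothetical offset_layout function below reproduces the two-replica diagrams; its arguments are the number of devices and the number of stripes to print.

```shell
# offset_layout DEVICES STRIPES  (hypothetical helper, two replicas only)
offset_layout() {
  n=$1 stripes=$2
  s=0
  while [ "$s" -lt "$stripes" ]; do
    for copy in 0 1; do
      # copy 0: the stripe itself; copy 1: the same stripe in the next
      # row, shifted one device to the right
      line="" d=0
      while [ "$d" -lt "$n" ]; do
        src=$(( (d - copy + n) % n ))
        line="${line:+$line }$((s * n + src))"
        d=$((d + 1))
      done
      echo "$line"
    done
    s=$((s + 1))
  done
}

offset_layout 4 3
```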

9.2.2.4 Specifying the number of Replicas and the Layout with YaST and mdadm

The number of replicas and the layout are specified in YaST or with the --layout parameter for mdadm. The following values are accepted:

nN: Specify n for near layout and replace N with the number of replicas. n2 is the default that is used when the layout and the number of replicas are not configured.

fN: Specify f for far layout and replace N with the number of replicas.

oN: Specify o for offset layout and replace N with the number of replicas.

YaST automatically offers a selection of all possible values for the parameter.

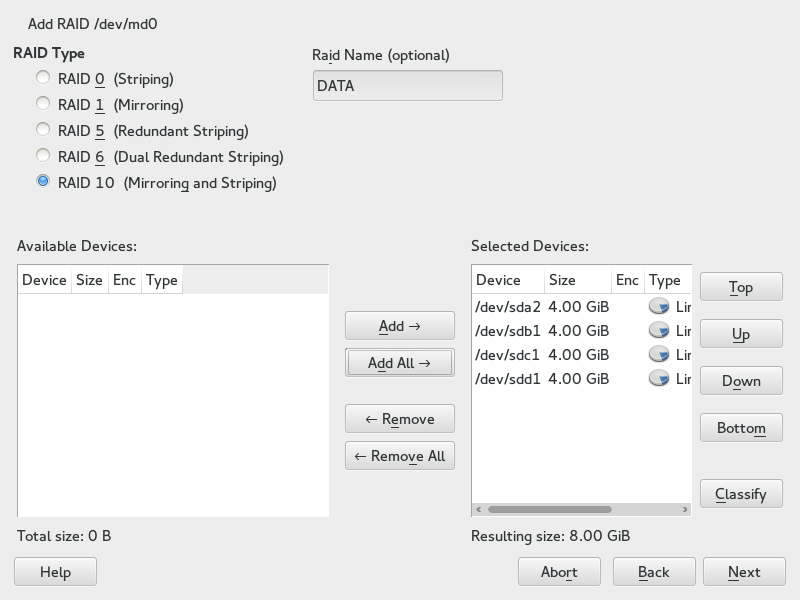
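The accepted values can also be checked before running mdadm. The hypothetical helper raid10_create_cmd below accepts a layout such as n2, f2, or o3, verifies that the replica count is between two and the number of listed devices, and prints (but does not run) the corresponding mdadm --create command. It uses only options that appear in this chapter; add --chunk or other options as needed.

```shell
# raid10_create_cmd MD_DEVICE LAYOUT DEVICE...  (hypothetical helper)
raid10_create_cmd() {
  md=$1 layout=$2
  shift 2                           # the remaining arguments are the component devices
  case "$layout" in
    [nfo][0-9]|[nfo][0-9][0-9]) ;;  # n, f or o followed by the replica count
    *) echo "unsupported layout: $layout" >&2; return 1 ;;
  esac
  copies=${layout#?}                # drop the leading n, f or o
  if [ "$copies" -lt 2 ] || [ "$copies" -gt $# ]; then
    echo "need 2 to $# replicas for $# devices, got $copies" >&2
    return 1
  fi
  echo "sudo mdadm --create $md --run --level=10 --layout=$layout --raid-devices=$# $*"
}

raid10_create_cmd /dev/md3 f2 /dev/sdf1 /dev/sdg1 /dev/sdh1 /dev/sdi1
```

The example call prints a create command for a far-layout array with two replicas on the four partitions used in Section 9.2.4.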

9.2.3 Creating a Complex RAID 10 with the YaST Partitioner

Launch YaST and open the Partitioner.

If necessary, create partitions that should be used with your RAID configuration. Do not format them and set the partition type to . When using existing partitions, it is not necessary to change their partition type; YaST does so automatically. Refer to Section 10.1, “Using the Expert Partitioner” for details.

For RAID 10 at least four partitions are needed. It is strongly recommended to use partitions stored on different hard disks to decrease the risk of losing data if one is defective. It is recommended to use only partitions of the same size because each segment can contribute only the same amount of space as the smallest sized partition.

In the left panel, select .

A list of existing RAID configurations opens in the right panel.

At the lower left of the RAID page, click .

Under , select .

You can optionally assign a to your RAID. This makes it available as /dev/md/NAME. See Section 7.2.1, “RAID Names” for more information.

In the list, select the desired partitions, then click to move them to the list.

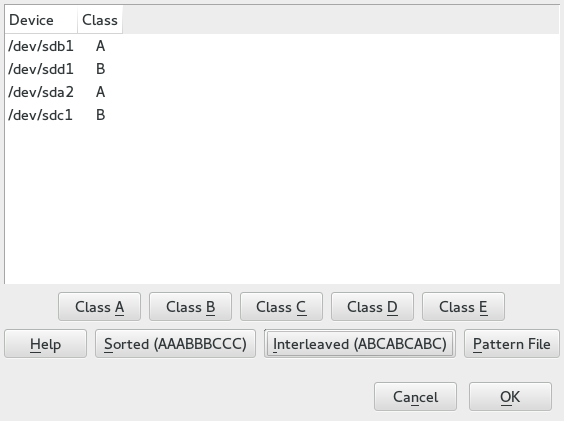

(Optional) Click to specify the preferred order of the disks in the RAID array.

For RAID types such as RAID 10, where the order of added disks matters, you can specify the order in which the devices will be used. This will ensure that one half of the array resides on one disk subsystem and the other half of the array resides on a different disk subsystem. For example, if one disk subsystem fails, the system keeps running from the second disk subsystem.

Select each disk in turn and click one of the buttons, where X is the letter you want to assign to the disk. Available classes are A, B, C, D, and E, but in many cases fewer classes are needed (only A and B, for example). Assign all available RAID disks this way.

You can press the Ctrl or Shift key to select multiple devices. You can also right-click a selected device and choose the appropriate class from the context menu.

Specify the order of the devices by selecting one of the sorting options:

: Sorts all devices of class A before all devices of class B, and so on. For example: AABBCC.

: Sorts devices by the first device of class A, then the first device of class B, then the first device of each following class with assigned devices. The second device of class A, the second device of class B, and so on follow. All devices without a class are sorted to the end of the device list. For example: ABCABC.

Pattern File: Select an existing file that contains multiple lines, where each line consists of a regular expression and a class name (for example, "sda.* A"). All devices that match the regular expression are assigned to the specified class for that line. The regular expression is matched against the kernel name (/dev/sda1), the udev path name (/dev/disk/by-path/pci-0000:00:1f.2-scsi-0:0:0:0-part1), and then the udev ID (/dev/disk/by-id/ata-ST3500418AS_9VMN8X8L-part1). If a device's name matches more than one regular expression, the first match determines the class.

At the bottom of the dialog, click to confirm the order.

Click .

Under , specify the and , then click .

For a RAID 10, the parity options are n (near), f (far), and o (offset). The number indicates the number of replicas of each data block that are required. Two is the default. For information, see Section 9.2.2, “Layout”.

Add a file system and mount options to the RAID device, then click .

Click .

Verify the changes to be made, then click to create the RAID.
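The two sorting options of the classification step in the procedure above can be illustrated with a small sketch. The hypothetical order_devices function reads one device name and class per line (- for a device without a class) and prints the devices in the sorted (AABB) or interleaved (ABAB) order. It only emulates the behavior described in this section, it is not the YaST implementation, and it assumes that devices within one class keep their input order.

```shell
# order_devices MODE  (hypothetical helper; MODE is "sorted" or "interleaved")
# stdin: lines of "DEVICE CLASS", with CLASS "-" for an unassigned device
order_devices() {
  mode=$1
  awk -v mode="$mode" '
    $2 == "-" { rest[++nrest] = $1; next }     # unassigned: keep for the end
    {
      if (!($2 in count)) classes[++nclass] = $2
      byclass[$2, ++count[$2]] = $1             # k-th device of this class
    }
    END {
      # put the class names in alphabetical order (A, B, C, ...)
      for (i = 1; i <= nclass; i++)
        for (j = i + 1; j <= nclass; j++)
          if (classes[j] < classes[i]) {
            t = classes[i]; classes[i] = classes[j]; classes[j] = t
          }
      if (mode == "sorted") {
        for (i = 1; i <= nclass; i++)
          for (k = 1; k <= count[classes[i]]; k++)
            print byclass[classes[i], k]
      } else {
        found = 1
        for (k = 1; found; k++) {               # round k: k-th device of each class
          found = 0
          for (i = 1; i <= nclass; i++)
            if (k <= count[classes[i]]) {
              print byclass[classes[i], k]
              found = 1
            }
        }
      }
      for (i = 1; i <= nrest; i++) print rest[i]
    }'
}

printf 'sda1 A\nsdb1 A\nsdc1 B\nsdd1 B\n' | order_devices interleaved
```

With the near layout and two replicas on an even number of devices, consecutive devices hold the two copies of each chunk (see Section 9.2.2.1, “Near Layout”). An interleaved order such as sda1 sdc1 sdb1 sdd1 therefore places one copy of every chunk in class A and one in class B.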

9.2.4 Creating a Complex RAID 10 with mdadm

The procedure in this section uses the device names shown in the following table. Replace them with the names of your own devices.

| Raw Devices | RAID 10 |
|---|---|
| /dev/sdf1, /dev/sdg1, /dev/sdh1, /dev/sdi1 | /dev/md3 |

Open a terminal.

If necessary, create at least four 0xFD Linux RAID partitions of equal size using a disk partitioner such as parted.

Create a RAID 10 by entering the following command:

```shell
sudo mdadm --create /dev/md3 --run --level=10 --chunk=32 --raid-devices=4 \
  /dev/sdf1 /dev/sdg1 /dev/sdh1 /dev/sdi1
```

Make sure to adjust the value for --raid-devices and the list of partitions according to your setup.

The command creates an array with near layout and two replicas. To change either of these values, use the --layout parameter as described in Section 9.2.2.4, “Specifying the number of Replicas and the Layout with YaST and mdadm”.

Create a file system on the RAID 10 device /dev/md3, for example an XFS file system:

```shell
sudo mkfs.xfs /dev/md3
```

Modify the command to use a different file system.

Edit the /etc/mdadm.conf file, or create it if it does not exist (for example by running sudo vi /etc/mdadm.conf). Add the following lines (if the file exists, the first line probably already exists, too):

```
DEVICE containers partitions
ARRAY /dev/md3 UUID=UUID
```

Replace the UUID placeholder with the UUID of the device, which can be retrieved with the following command:

```shell
sudo mdadm -D /dev/md3 | grep UUID
```

Edit the /etc/fstab file to add an entry for the RAID 10 device /dev/md3. The following example shows an entry for a RAID device with the XFS file system and /data as a mount point:

```
/dev/md3 /data xfs defaults 1 2
```

Mount the RAID device:

```shell
sudo mount /data
```