6 Manage RADOS Block Device #

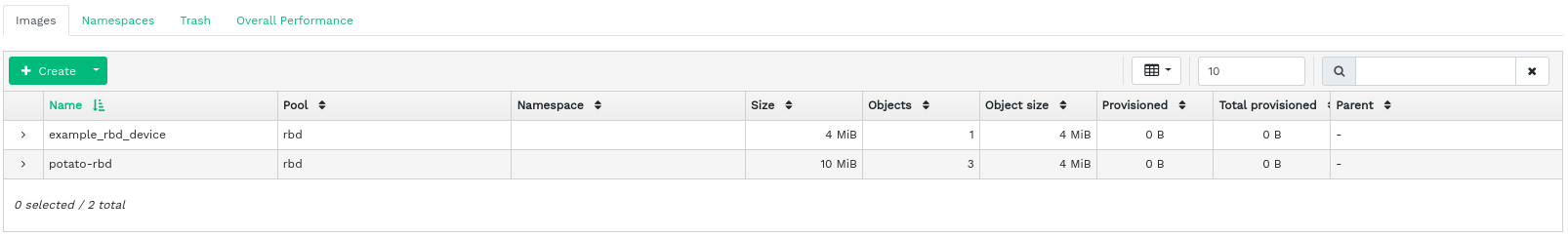

To list all available RADOS Block Devices (RBDs), click › from the main menu.

The list shows brief information about each device, such as its name, the related pool name, namespace, size, the number and size of objects on the device, provisioning details, and the parent.

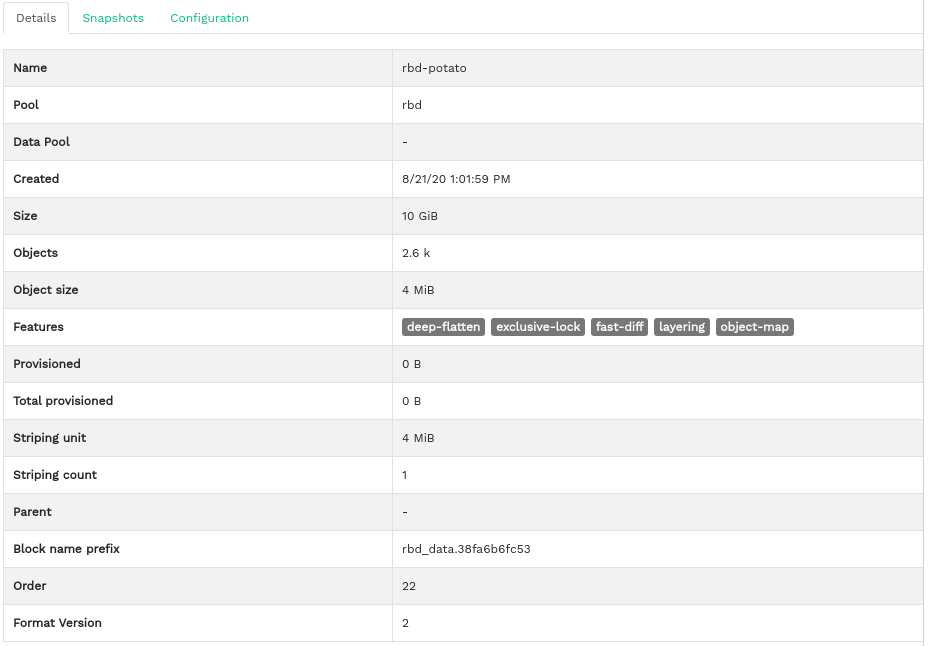

6.1 Viewing details about RBDs #

To view more detailed information about a device, click its row in the table:

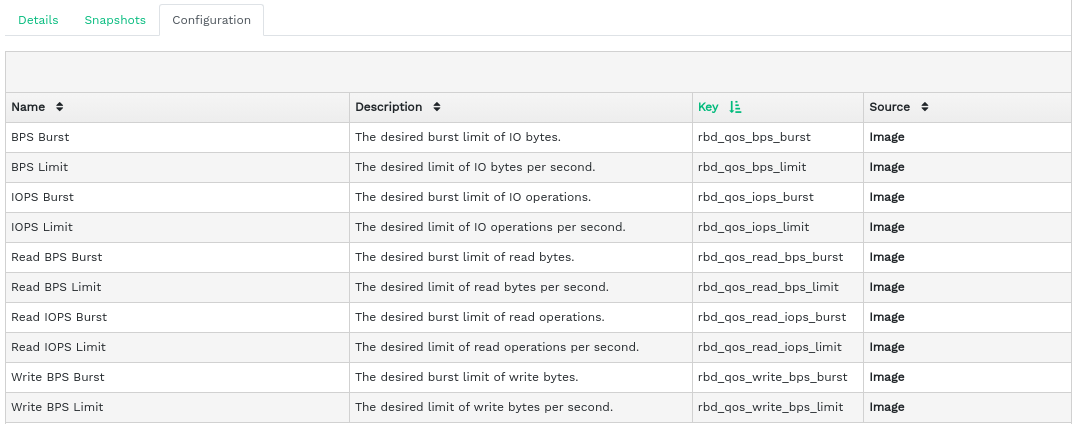

6.2 Viewing RBD's configuration #

To view detailed configuration of a device, click its row in the table and then the tab in the lower table:

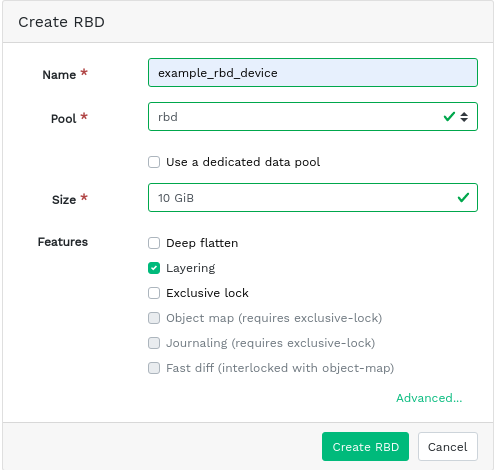

6.3 Creating RBDs #

To add a new device, click in the top left of the table heading and do the following on the screen:

Enter the name of the new device. Refer to Section 2.11, “Name limitations” for naming limitations.

Select the pool with the rbd application assigned from which the new RBD device will be created.

Specify the size of the new device.

Specify additional options for the device. To fine-tune the device parameters, click and enter values for object size, stripe unit, or stripe count. To enter Quality of Service (QoS) limits, click and enter them.

Confirm with .
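For comparison, an image with the same kind of options can also be created from the command line. The following is a minimal sketch, assuming the rbd client is installed and configured. The function name `create_rbd_image`, the `RBD` override variable, and the pool and image names in the usage note are illustrative assumptions, not part of the product.

```shell
# Create an RBD image with optional striping parameters.
# Usage: create_rbd_image POOL IMAGE SIZE [OBJECT_SIZE [STRIPE_UNIT STRIPE_COUNT]]
# RBD is a hypothetical override: set RBD=echo to preview the command
# instead of running it.
create_rbd_image() {
    pool="$1"; image="$2"; size="$3"
    object_size="${4:-}"; stripe_unit="${5:-}"; stripe_count="${6:-}"

    # Build the argument list step by step, adding only the options given.
    set -- create --size "$size"
    if [ -n "$object_size" ]; then
        set -- "$@" --object-size "$object_size"
    fi
    if [ -n "$stripe_unit" ] && [ -n "$stripe_count" ]; then
        set -- "$@" --stripe-unit "$stripe_unit" --stripe-count "$stripe_count"
    fi

    "${RBD:-rbd}" "$@" "$pool/$image"
}
```

For example, `create_rbd_image image-pool img1 10G` would request a 10 GB image named img1 in the pool image-pool. Stripe unit and stripe count are only passed when both are given.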

6.4 Deleting RBDs #

To delete a device, select the device in the table row. Click the drop-down arrow next to the button and click . Confirm the deletion with .

Deleting an RBD is an irreversible action. If you instead, you can restore the device later on by selecting it on the tab of the main table and clicking in the top left of the table heading.

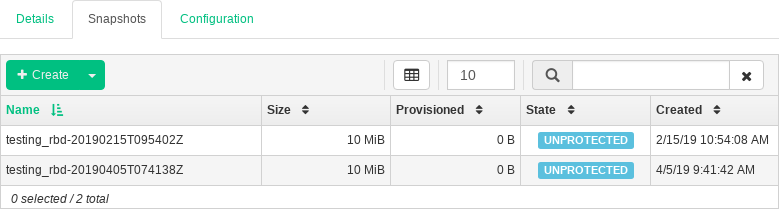

6.5 Creating RADOS Block Device snapshots #

To create a RADOS Block Device snapshot, select the device's row in the table. The detailed configuration content pane appears. Select the tab and click in the top left of the table heading. Enter the snapshot's name and confirm with .

After selecting a snapshot, you can perform additional actions on the device, such as rename, protect, clone, copy, or delete. restores the device's state from the current snapshot.
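The snapshot actions described above have command line counterparts in the rbd tool. The following is a minimal sketch, assuming the rbd client is installed and configured. The function names and the `RBD` override variable are illustrative assumptions.

```shell
# Create a snapshot and protect it so that it can be cloned later.
# Usage: snapshot_and_protect POOL IMAGE SNAPSHOT
# RBD is a hypothetical override: set RBD=echo to preview the commands.
snapshot_and_protect() {
    spec="$1/$2@$3"
    "${RBD:-rbd}" snap create "$spec" &&
    "${RBD:-rbd}" snap protect "$spec"
}

# Roll the image back to the state captured in a snapshot.
# Usage: rollback_to_snapshot POOL IMAGE SNAPSHOT
rollback_to_snapshot() {
    "${RBD:-rbd}" snap rollback "$1/$2@$3"
}
```

A rollback overwrites the current contents of the image with the snapshot's data, so it is worth previewing the command with `RBD=echo` before running it.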

6.6 RBD mirroring #

RADOS Block Device images can be asynchronously mirrored between two Ceph clusters. You can use the Ceph Dashboard to configure replication of RBD images between two or more clusters. This capability is available in two modes:

- Journal-based

This mode uses the RBD journaling image feature to ensure point-in-time, crash-consistent replication between clusters.

- Snapshot-based

This mode uses periodically scheduled or manually created RBD image mirror-snapshots to replicate crash-consistent RBD images between clusters.

Mirroring is configured on a per-pool basis within peer clusters and can be configured on a specific subset of images within the pool or configured to automatically mirror all images within a pool when using journal-based mirroring only.

Mirroring is configured using the rbd command, which is installed by default in SUSE Enterprise Storage 7.1. The rbd-mirror daemon is responsible for pulling image updates from the remote, peer cluster and applying them to the image within the local cluster. See Section 6.6.2, “Enabling the rbd-mirror daemon” for more information on enabling the rbd-mirror daemon.

Depending on the need for replication, RADOS Block Device mirroring can be configured for either one- or two-way replication:

- One-way Replication

When data is only mirrored from a primary cluster to a secondary cluster, the rbd-mirror daemon runs only on the secondary cluster.

- Two-way Replication

When data is mirrored from primary images on one cluster to non-primary images on another cluster (and vice-versa), the rbd-mirror daemon runs on both clusters.

Each instance of the rbd-mirror daemon must be able to connect to both the local and remote Ceph clusters simultaneously, including all monitor and OSD hosts. Additionally, the network must have sufficient bandwidth between the two data centers to handle the mirroring workload.

For general information and the command line approach to RADOS Block Device mirroring, refer to Section 20.4, “RBD image mirrors”.

6.6.1 Configuring primary and secondary clusters #

A primary cluster is where the original pool with images is created. A secondary cluster is where the pool or images are replicated from the primary cluster.

The primary and secondary terms can be relative in the context of replication because they relate more to individual pools than to clusters. For example, in two-way replication, one pool can be mirrored from the primary cluster to the secondary one, while another pool can be mirrored from the secondary cluster to the primary one.

6.6.2 Enabling the rbd-mirror daemon #

The following procedures demonstrate how to perform the basic administrative tasks to configure mirroring using the rbd command. Mirroring is configured on a per-pool basis within the Ceph clusters.

The pool configuration steps should be performed on both peer clusters. These procedures assume two clusters, named “primary” and “secondary”, are accessible from a single host for clarity.

The rbd-mirror daemon performs the actual cluster data replication.

Rename

ceph.confand keyring files and copy them from the primary host to the secondary host:cephuser@secondary >cp /etc/ceph/ceph.conf /etc/ceph/primary.confcephuser@secondary >cp /etc/ceph/ceph.admin.client.keyring \ /etc/ceph/primary.client.admin.keyringcephuser@secondary >scp PRIMARY_HOST:/etc/ceph/ceph.conf \ /etc/ceph/secondary.confcephuser@secondary >scp PRIMARY_HOST:/etc/ceph/ceph.client.admin.keyring \ /etc/ceph/secondary.client.admin.keyringTo enable mirroring on a pool with

rbd, specify themirror pool enable, the pool name, and the mirroring mode:cephuser@adm >rbd mirror pool enable POOL_NAME MODENoteThe mirroring mode can either be

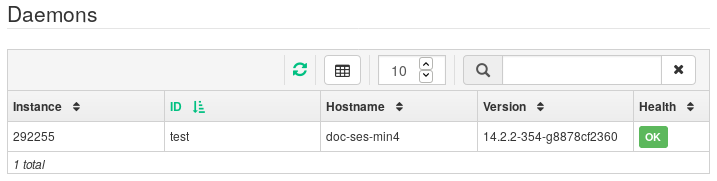

imageorpool. For example:cephuser@secondary >rbd --cluster primary mirror pool enable image-pool imagecephuser@secondary >rbd --cluster secondary mirror pool enable image-pool imageOn the Ceph Dashboard, navigate to › . The table to the left shows actively running

rbd-mirrordaemons and their health.Figure 6.6: Runningrbd-mirrordaemons #

6.6.3 Disabling mirroring #

To disable mirroring on a pool with rbd, specify the mirror pool disable command and the pool name:

cephuser@adm > rbd mirror pool disable POOL_NAME

When mirroring is disabled on a pool in this way, mirroring will also be disabled on any images (within the pool) for which mirroring was enabled explicitly.

6.6.4 Bootstrapping peers #

In order for the rbd-mirror daemon to discover its peer cluster, the peer needs to be registered to the pool and a user account needs to be created. This process can be automated with rbd by using the mirror pool peer bootstrap create and mirror pool peer bootstrap import commands.

To manually create a new bootstrap token with rbd, specify the mirror pool peer bootstrap create command, a pool name, and an optional site name to describe the local cluster:

cephuser@adm > rbd mirror pool peer bootstrap create [--site-name local-site-name] pool-name

The output of mirror pool peer bootstrap create will be a token that should be provided to the mirror pool peer bootstrap import command. For example, on the primary cluster:

cephuser@adm > rbd --cluster primary mirror pool peer bootstrap create --site-name primary image-pool

eyJmc2lkIjoiOWY1MjgyZGItYjg5OS00NTk2LTgwOTgtMzIwYzFmYzM5NmYzIiwiY2xpZW50X2lkIjoicmJkL \

W1pcnJvci1wZWVyIiwia2V5IjoiQVFBUnczOWQwdkhvQmhBQVlMM1I4RmR5dHNJQU50bkFTZ0lOTVE9PSIsIm1vbl9ob3N0I \

joiW3YyOjE5Mi4xNjguMS4zOjY4MjAsdjE6MTkyLjE2OC4xLjM6NjgyMV0ifQ==

To manually import the bootstrap token created by another cluster with the rbd command, specify the mirror pool peer bootstrap import command, the pool name, a file path to the created token (or '-' to read from standard input), an optional site name to describe the local cluster, and a mirroring direction (defaults to rx-tx for bidirectional mirroring, but can also be set to rx-only for unidirectional mirroring):

cephuser@adm > rbd mirror pool peer bootstrap import [--site-name local-site-name] [--direction rx-only or rx-tx] pool-name token-path

For example, on the secondary cluster:

cephuser@adm > cat <<EOF > token
eyJmc2lkIjoiOWY1MjgyZGItYjg5OS00NTk2LTgwOTgtMzIwYzFmYzM5NmYzIiwiY2xpZW50X2lkIjoicmJkLW1pcn \
Jvci1wZWVyIiwia2V5IjoiQVFBUnczOWQwdkhvQmhBQVlMM1I4RmR5dHNJQU50bkFTZ0lOTVE9PSIsIm1vbl9ob3N0I \
joiW3YyOjE5Mi4xNjguMS4zOjY4MjAsdjE6MTkyLjE2OC4xLjM6NjgyMV0ifQ==
EOF

cephuser@adm > rbd --cluster secondary mirror pool peer bootstrap import --site-name secondary image-pool token
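Before importing a token, it can be useful to check which cluster it refers to. The following is a minimal sketch, assuming that the token is Base64-encoded text, as the example token above appears to be. The function name `decode_bootstrap_token` is an illustrative assumption and not an rbd subcommand.

```shell
# Print the decoded contents of a bootstrap token file for inspection.
# Assumption: the token is Base64-encoded text.
# Usage: decode_bootstrap_token TOKEN_FILE
decode_bootstrap_token() {
    # Remove spaces, backslashes, and newlines that display wrapping
    # may have introduced, then decode the remaining Base64 data.
    tr -d ' \\\n' < "$1" | base64 -d
}
```

The decoded output may include credentials for the peer user, so treat both the token file and its decoded form as secrets.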

6.6.5 Removing cluster peer #

To remove a mirroring peer Ceph cluster with the rbd command, specify the mirror pool peer remove command, the pool name, and the peer UUID (available from the rbd mirror pool info command):

cephuser@adm > rbd mirror pool peer remove pool-name peer-uuid

6.6.6 Configuring pool replication in the Ceph Dashboard #

The rbd-mirror daemon needs to have access to the primary cluster to be able to mirror RBD images. Ensure you have followed the steps in Section 6.6.4, “Bootstrapping peers” before continuing.

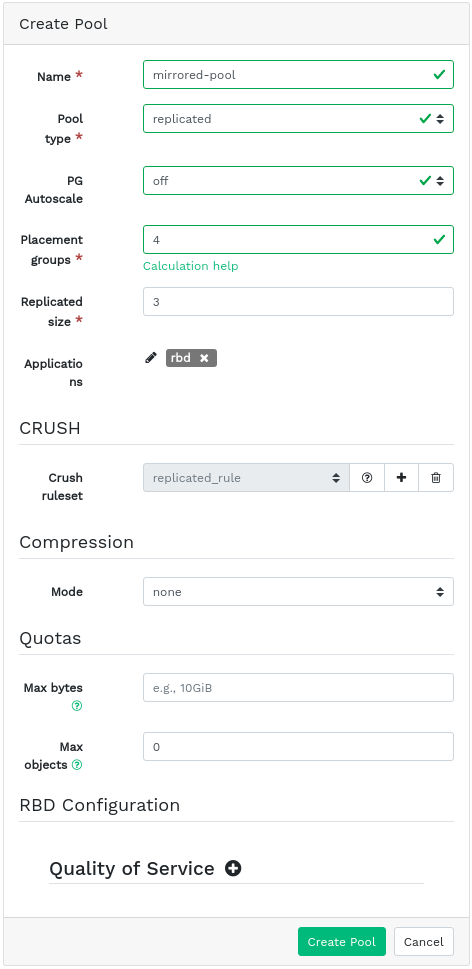

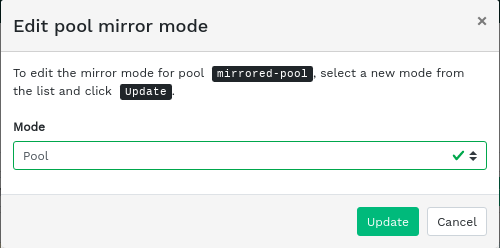

On both the primary and secondary cluster, create pools with an identical name and assign the rbd application to them. Refer to Section 5.1, “Adding a new pool” for more details on creating a new pool.

Figure 6.7: Creating a pool with RBD application #

On both the primary and secondary cluster's dashboards, navigate to › . In the table on the right, click the name of the pool to replicate, and after clicking , select the replication mode. In this example, we will work with a pool replication mode, which means that all images within a given pool will be replicated. Confirm with .

Figure 6.8: Configuring the replication mode #

Important: Error or warning on the primary cluster

After updating the replication mode, an error or warning flag will appear in the corresponding right column. That is because the pool has no peer user for replication assigned yet. Ignore this flag for the primary cluster as we assign a peer user to the secondary cluster only.

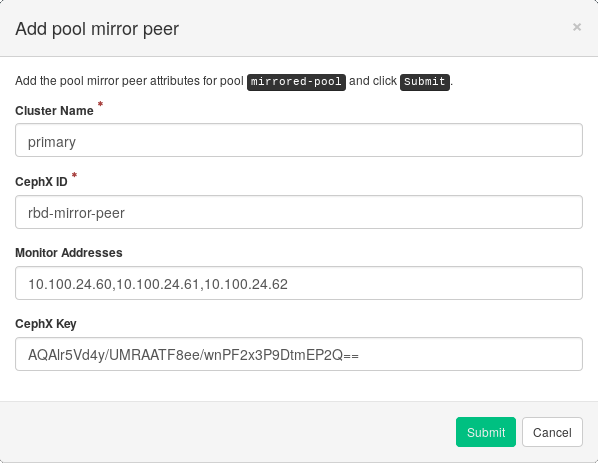

On the secondary cluster's Dashboard, navigate to › . Add the pool mirror peer by selecting . Provide the primary cluster's details:

Figure 6.9: Adding peer credentials #

An arbitrary unique string that identifies the primary cluster, such as 'primary'. The cluster name needs to be different from the real secondary cluster's name.

The Ceph user ID that you created as a mirroring peer. In this example it is 'rbd-mirror-peer'.

Comma-separated list of IP addresses of the primary cluster's Ceph Monitor nodes.

The key related to the peer user ID. You can retrieve it by running the following example command on the primary cluster:

cephuser@adm > ceph auth print_key pool-mirror-peer-name

Confirm with .

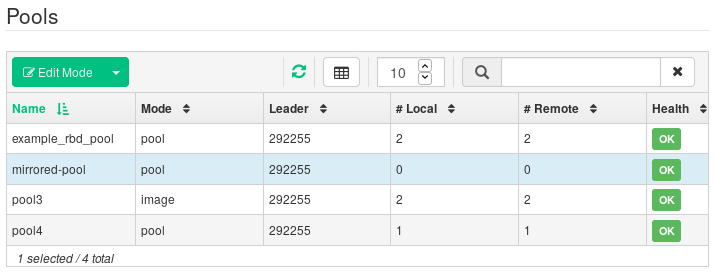

Figure 6.10: List of replicated pools #

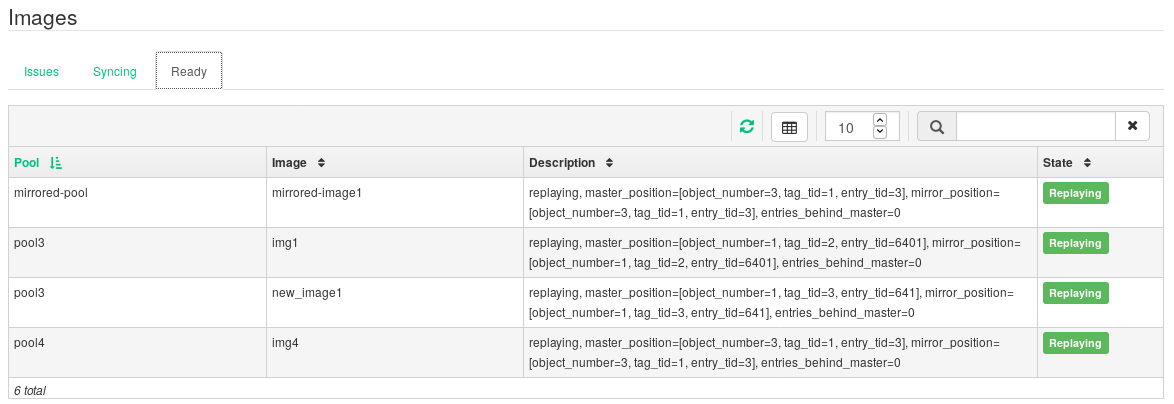

6.6.7 Verifying that RBD image replication works #

When the rbd-mirror daemon is running and RBD image replication is configured on the Ceph Dashboard, it is time to verify whether the replication actually works:

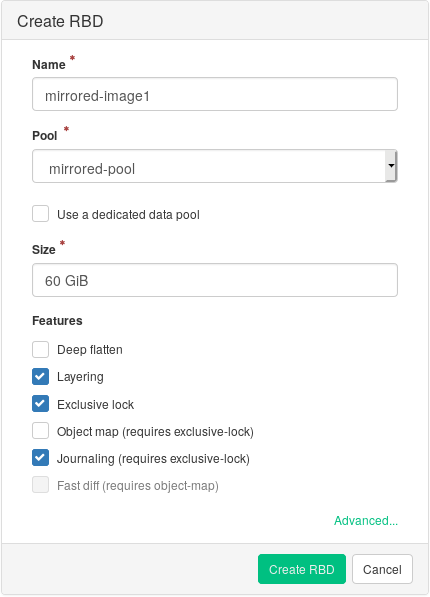

On the primary cluster's Ceph Dashboard, create an RBD image so that its parent pool is the pool that you already created for replication purposes. Enable the

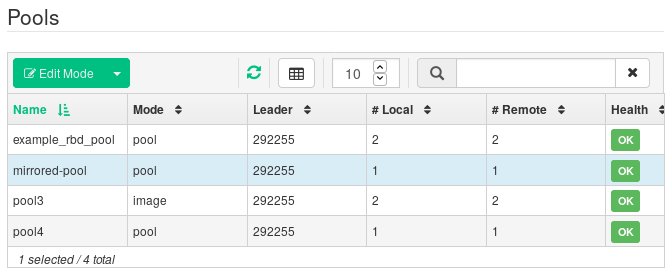

Exclusive lockandJournalingfeatures for the image. Refer to Section 6.3, “Creating RBDs” for details on how to create RBD images.Figure 6.11: New RBD image #After you create the image that you want to replicate, open the secondary cluster's Ceph Dashboard and navigate to › . The table on the right will reflect the change in the number of images and synchronize the number of images.

Figure 6.12: New RBD image synchronized #Tip: Replication progressThe table at the bottom of the page shows the status of replication of RBD images. The tab includes possible problems, the tab displays the progress of image replication, and the tab lists all images with successful replication.

Figure 6.13: RBD images' replication status #On the primary cluster, write data to the RBD image. On the secondary cluster's Ceph Dashboard, navigate to › and monitor whether the corresponding image's size is growing as the data on the primary cluster is written.
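Replication status can also be checked from the command line. The following is a minimal sketch, assuming the rbd client is installed and that the cluster configuration files are named as in Section 6.6.2. The function name `show_mirror_status` and the `RBD` override variable are illustrative assumptions.

```shell
# Show mirroring status for a pool and for one image within it.
# Usage: show_mirror_status CLUSTER POOL IMAGE
# RBD is a hypothetical override: set RBD=echo to preview the commands.
show_mirror_status() {
    "${RBD:-rbd}" --cluster "$1" mirror pool status --verbose "$2" &&
    "${RBD:-rbd}" --cluster "$1" mirror image status "$2/$3"
}
```

For example, `show_mirror_status secondary image-pool img1` would query the secondary cluster for the pool image-pool and the image img1, both of which are placeholder names.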

6.7 Managing iSCSI Gateways #

For more general information about iSCSI Gateways, refer to Chapter 22, Ceph iSCSI gateway.

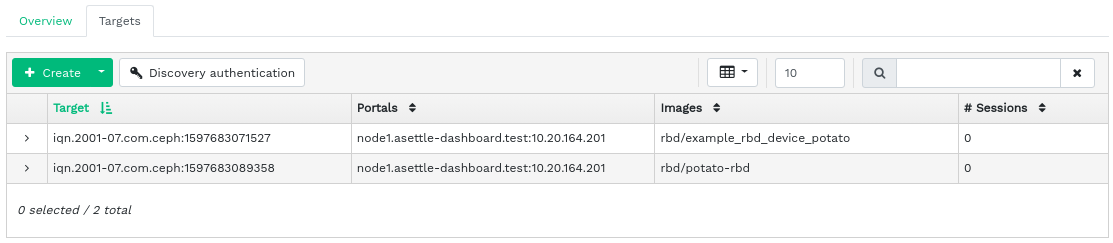

To list all available gateways and mapped images, click › from the main menu. An tab opens, listing currently configured iSCSI Gateways and mapped RBD images.

The table lists each gateway's state, number of iSCSI targets, and number of sessions. The table lists each mapped image's name, related pool name, backstore type, and other statistical details.

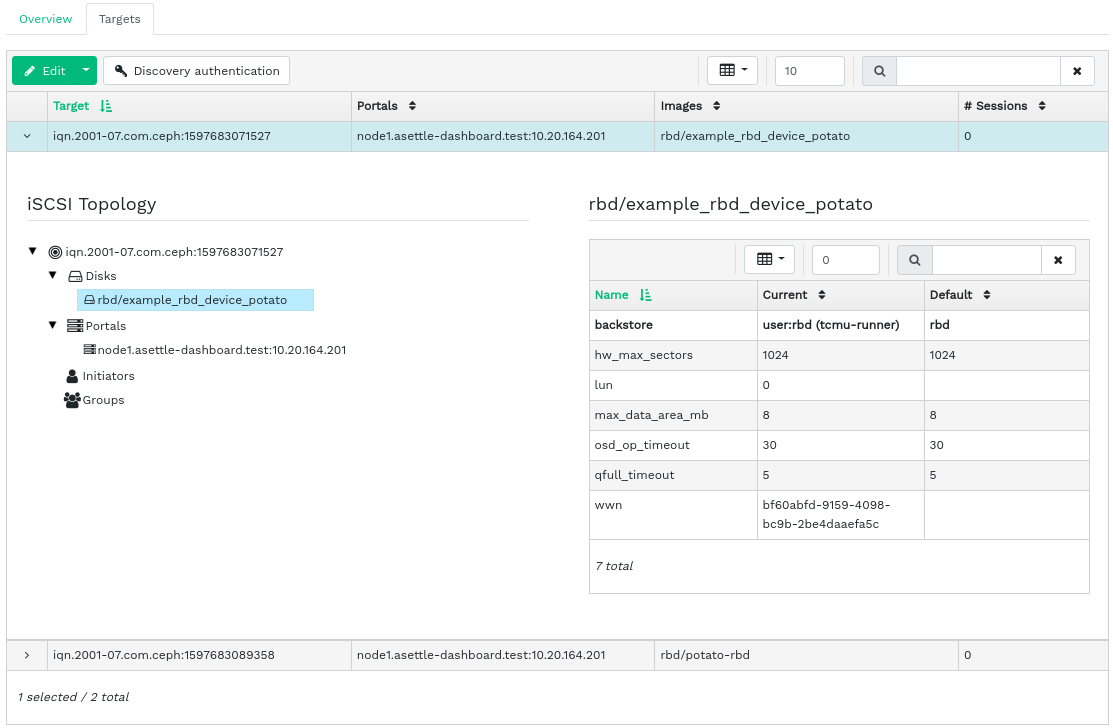

The tab lists currently configured iSCSI targets.

To view more detailed information about a target, click the drop-down arrow on the target table row. A tree-structured schema opens, listing disks, portals, initiators, and groups. Click an item to expand it and view its detailed contents, optionally with a related configuration in the table on the right.

6.7.1 Adding iSCSI targets #

To add a new iSCSI target, click in the top left of the table and enter the required information.

Enter the target address of the new gateway.

Click and select one or multiple iSCSI portals from the list.

Click and select one or multiple RBD images for the gateway.

If you need to use authentication to access the gateway, activate the check box and enter the credentials. You can find more advanced authentication options after activating and .

Confirm with .

6.7.2 Editing iSCSI targets #

To edit an existing iSCSI target, click its row in the table and click in the top left of the table.

You can then modify the iSCSI target, add or delete portals, and add or delete related RBD images. You can also adjust authentication information for the gateway.

6.7.3 Deleting iSCSI targets #

To delete an iSCSI target, select the table row and click the drop-down arrow next to the button and select . Activate and confirm with .

6.8 RBD Quality of Service (QoS) #

For more general information and a description of RBD QoS configuration options, refer to Section 20.6, “QoS settings”.

The QoS options can be configured at different levels:

- Globally

- On a per-pool basis

- On a per-image basis

The global configuration is at the top of the list and will be used for all newly created RBD images and for those images that do not override these values on the pool or RBD image layer. An option value specified globally can be overridden on a per-pool or per-image basis. Options specified on a pool will be applied to all RBD images of that pool unless overridden by a configuration option set on an image. Options specified on an image will override options specified on a pool and will override options specified globally.

This way it is possible to define defaults globally, adapt them for all RBD images of a specific pool, and override the pool configuration for individual RBD images.
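The precedence rules above can be sketched as a small lookup. This is an illustration of the described behavior only, not Ceph's implementation. The function name `effective_qos_value` is hypothetical, and an empty string stands for "not set at this level".

```shell
# Resolve the effective value of one QoS option.
# Usage: effective_qos_value GLOBAL_VALUE [POOL_VALUE [IMAGE_VALUE]]
# An empty string means the option is not set at that level.
effective_qos_value() {
    global_value="$1"; pool_value="${2:-}"; image_value="${3:-}"
    if [ -n "$image_value" ]; then
        # A value set on the image overrides pool and global settings.
        printf '%s\n' "$image_value"
    elif [ -n "$pool_value" ]; then
        # A value set on the pool overrides the global setting.
        printf '%s\n' "$pool_value"
    else
        printf '%s\n' "$global_value"
    fi
}
```

For example, with a global value of 0, a pool value of 100, and no image value, the function prints the pool value.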

6.8.1 Configuring options globally #

To configure the RADOS Block Device options globally, select › from the main menu.

To list all available global configuration options, next to , choose from the drop-down menu.

Filter the results of the table by filtering for rbd_qos in the search field. This lists all available configuration options for QoS.

To change a value, click the row in the table, then select at the top left of the table. The dialog contains six different fields for specifying values. The RBD configuration option values are required in the text box.

Note

Unlike the other dialogs, this one does not allow you to specify the value in convenient units. You need to set these values in either bytes or IOPS, depending on the option you are editing.
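Because this dialog expects plain byte counts, a small helper can do the conversion beforehand. The following is a minimal sketch. The function name `to_bytes` is hypothetical, and it assumes binary multiples (1K = 1024 bytes) and whole numbers only.

```shell
# Convert a size such as 1M or 5G to a plain byte count.
# Assumption: binary multiples (1K = 1024 bytes), integer input only.
# Values without a suffix are returned unchanged.
to_bytes() {
    value="$1"
    number="${value%[KkMmGg]}"   # strip a trailing unit letter, if any
    case "$value" in
        *[Kk]) echo $((number * 1024)) ;;
        *[Mm]) echo $((number * 1024 * 1024)) ;;
        *[Gg]) echo $((number * 1024 * 1024 * 1024)) ;;
        *)     echo "$number" ;;
    esac
}
```

The result can be pasted into the dialog, or passed to the command line, for example `ceph config set global rbd_qos_bps_limit "$(to_bytes 10M)"`, where the option name and value are only examples.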

6.8.2 Configuring options on a new pool #

To create a new pool and configure RBD configuration options on it, click › . Select as pool type. You will then need to add the rbd application tag to the pool to be able to configure the RBD QoS options.

It is not possible to configure RBD QoS configuration options on an erasure coded pool. To configure the RBD QoS options for erasure coded pools, you need to edit the replicated metadata pool of an RBD image. The configuration will then be applied to the erasure coded data pool of that image.

6.8.3 Configuring options on an existing pool #

To configure RBD QoS options on an existing pool, click , then click the pool's table row and select at the top left of the table.

You should see the section in the dialog, followed by a section.

If you see neither the nor the section, you are likely either editing an erasure coded pool, which cannot be used to set RBD configuration options, or the pool is not configured to be used by RBD images. In the latter case, assign the application tag to the pool and the corresponding configuration sections will show up.

6.8.4 Configuration options #

Click to expand the configuration options. A list of all available options will show up. The units of the configuration options are already shown in the text boxes. In case of any bytes per second (BPS) option, you are free to use shortcuts such as '1M' or '5G'. They will be automatically converted to '1 MB/s' and '5 GB/s' respectively.

By clicking the reset button to the right of each text box, any value set on the pool will be removed. This does not remove configuration values of options configured globally or on an RBD image.
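Pool-level values can also be set and removed with the rbd config subcommands. The following is a minimal sketch, assuming the rbd client is installed and configured. The function names and the `RBD` override variable are illustrative assumptions, and the pool name and option in the usage note are examples only.

```shell
# Set a QoS option on a pool.
# Usage: set_pool_qos POOL OPTION VALUE
# RBD is a hypothetical override: set RBD=echo to preview the command.
set_pool_qos() {
    "${RBD:-rbd}" config pool set "$1" "$2" "$3"
}

# Remove a pool-level value so that the global setting applies again,
# similar to the reset button in the dialog.
# Usage: reset_pool_qos POOL OPTION
reset_pool_qos() {
    "${RBD:-rbd}" config pool remove "$1" "$2"
}
```

For example, `set_pool_qos image-pool rbd_qos_iops_limit 100` would limit images in the pool image-pool, unless an image overrides the value. Values passed on the command line are plain numbers, in bytes or IOPS depending on the option.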

6.8.5 Creating RBD QoS options with a new RBD image #

To create an RBD image with RBD QoS options set on that image, select › and then click . Click to expand the advanced configuration section. Click to open all available configuration options.

6.8.6 Editing RBD QoS options on existing images #

To edit RBD QoS options on an existing image, select › , then click the pool's table row, and lastly click . The edit dialog will show up. Click to expand the advanced configuration section. Click to open all available configuration options.

6.8.7 Changing configuration options when copying or cloning images #

If an RBD image is cloned or copied, the values set on that particular image will be copied too, by default. If you want to change them while copying or cloning, you can do so by specifying the updated configuration values in the copy/clone dialog, the same way as when creating or editing an RBD image. Doing so will only set (or reset) the values for the RBD image that is copied or cloned. This operation changes neither the source RBD image configuration, nor the global configuration.

If you choose to reset the option value on copying/cloning, no value for that option will be set on that image. This means that any value of that option specified for the parent pool will be used if the parent pool has the value configured. Otherwise, the global default will be used.