5 Configuration and administration basics #

The main purpose of an HA cluster is to manage user services. Typical examples of user services are an Apache Web server or a database. From the user's point of view, the services do something specific when ordered to do so. To the cluster, however, they are only resources which may be started or stopped—the nature of the service is irrelevant to the cluster.

This chapter introduces some basic concepts you need to know when administering your cluster. The following chapters show you how to execute the main configuration and administration tasks with each of the management tools SUSE Linux Enterprise High Availability provides.

5.1 Use case scenarios #

In general, clusters fall into one of two categories:

- Two-node clusters

- Clusters with more than two nodes. This usually means an odd number of nodes.

Combining these categories with different topologies yields different use cases. The following use cases are the most common:

- Two-node cluster in one location

Configuration: FC SAN or similar shared storage, layer 2 network.

Usage scenario: Embedded clusters that focus on high availability of services rather than on data redundancy through replication. Such a setup is used for radio stations or assembly line controllers, for example.

- Two-node clusters in two locations (most widely used)

Configuration: Symmetrical stretched cluster, FC SAN, and layer 2 network all across two locations.

Usage scenario: Classic stretched clusters that focus on high availability of services and local data redundancy, for example, for databases and enterprise resource planning. One of the most popular setups.

- Odd number of nodes in three locations

Configuration: 2×N+1 nodes, FC SAN across two main locations. An auxiliary third site has no FC SAN, but acts as a majority maker. Layer 2 network at least across two main locations.

Usage scenario: Classic stretched cluster that focuses on high availability of services and data redundancy, for example, for databases and enterprise resource planning.

5.2 Quorum determination #

Whenever communication fails between one or more nodes and the rest of the cluster, a cluster partition occurs. The nodes can only communicate with other nodes in the same partition and are unaware of the separated nodes. A cluster partition is defined as having quorum (being “quorate”) if it has the majority of nodes (or votes). Whether a partition has this majority is determined by quorum calculation. Quorum is a requirement for fencing.

Quorum is not calculated or determined by Pacemaker. Corosync can handle quorum for two-node clusters directly without changing the Pacemaker configuration.

How quorum is calculated is influenced by the following factors:

- Number of cluster nodes

To keep services running, a cluster with more than two nodes relies on quorum (majority vote) to resolve cluster partitions. Based on the following formula, you can calculate the minimum number of operational nodes required for the cluster to function:

N ≥ C/2 + 1

N = minimum number of operational nodes

C = number of cluster nodes

Here, C/2 is rounded down to a whole number. For example, a five-node cluster needs a minimum of three operational nodes (in other words, up to two nodes can fail).

We strongly recommend using either a two-node cluster or an odd number of cluster nodes. Two-node clusters make sense for stretched setups across two sites. Clusters with an odd number of nodes can either be built on one single site or might be spread across three sites.

- Corosync configuration

Corosync is a messaging and membership layer, see Section 5.2.1, “Corosync configuration for two-node clusters” and Section 5.2.2, “Corosync configuration for n-node clusters”.
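The majority rule described above can be checked with a few lines of shell arithmetic. The following is only a minimal sketch and not part of any cluster tool: the function names `min_operational_nodes` and `tolerated_failures` are hypothetical, and the sketch assumes that every node has exactly one vote.

```shell
#!/bin/bash
# Hypothetical helpers (not part of Corosync or Pacemaker).
# Assumption: every cluster node has exactly one vote.

# Minimum number of operational nodes needed for quorum:
# C/2 rounded down, plus 1.
min_operational_nodes() {
  local c=$1
  echo $(( c / 2 + 1 ))
}

# Number of nodes that can fail while the remaining partition
# still has the majority.
tolerated_failures() {
  local c=$1
  echo $(( c - (c / 2 + 1) ))
}
```

For example, `min_operational_nodes 5` prints 3 and `tolerated_failures 5` prints 2, which matches the five-node example. For four nodes the results are 3 and 1, the same failure tolerance as a three-node cluster, which illustrates why an odd number of nodes is recommended.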

5.2.1 Corosync configuration for two-node clusters #

When using the bootstrap scripts, the Corosync configuration contains a quorum section with the following options:

quorum {
    # Enable and configure quorum subsystem (default: off)
    # see also corosync.conf.5 and votequorum.5
    provider: corosync_votequorum
    expected_votes: 2
    two_node: 1
}

By default, when two_node: 1 is set, the wait_for_all option is automatically enabled. If wait_for_all is not enabled, the cluster should be started on both nodes in parallel. Otherwise the first node will perform a startup-fencing on the missing second node.

5.2.2 Corosync configuration for n-node clusters #

When not using a two-node cluster, we strongly recommend an odd number of nodes for your N-node cluster. With regard to quorum configuration, you have the following options:

- Adding additional nodes with the ha-cluster-join command, or

- Adapting the Corosync configuration manually.

If you adjust /etc/corosync/corosync.conf manually, use the following settings:

quorum {
    provider: corosync_votequorum
    expected_votes: N
    wait_for_all: 1
}

- provider

Use the quorum service from Corosync.

- expected_votes

The number of votes to expect. This parameter can either be provided inside the [...]

- wait_for_all

Enables the wait for all (WFA) feature. When WFA is enabled, the cluster will be quorate for the first time only after all nodes have become visible. To avoid some startup race conditions, setting [...]

5.3 Global cluster options #

Global cluster options control how the cluster behaves when confronted with certain situations. They are grouped into sets and can be viewed and modified with cluster management tools like Hawk2 and the crm shell.

The predefined values can usually be kept. However, to make key functions of your cluster work as expected, you might need to adjust the following parameters after basic cluster setup:

5.3.1 Global option no-quorum-policy #

This global option defines what to do when a cluster partition does not have quorum (no majority of nodes is part of the partition).

The following values are available:

- ignore

Setting no-quorum-policy to ignore makes a cluster partition behave like it has quorum, even if it does not. The cluster partition is allowed to issue fencing and continue resource management.

On SLES 11 this was the recommended setting for a two-node cluster. Starting with SLES 12, the value ignore is obsolete and must not be used. Based on configuration and conditions, Corosync gives cluster nodes or a single node “quorum”, or not.

For two-node clusters the only meaningful behavior is to always react in case of node loss. The first step should always be to try to fence the lost node.

- freeze

If quorum is lost, the cluster partition freezes. Resource management is continued: running resources are not stopped (but possibly restarted in response to monitor events), but no further resources are started within the affected partition.

This setting is recommended for clusters where certain resources depend on communication with other nodes (for example, OCFS2 mounts). In this case, the default setting no-quorum-policy=stop is not useful, as it would lead to the following scenario: Stopping those resources would not be possible while the peer nodes are unreachable. Instead, an attempt to stop them would eventually time out and cause a stop failure, triggering escalated recovery and fencing.

- stop (default value)

If quorum is lost, all resources in the affected cluster partition are stopped in an orderly fashion.

- suicide

If quorum is lost, all nodes in the affected cluster partition are fenced. This option works only in combination with SBD, see Chapter 13, Storage protection and SBD.
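On the command line, global options such as this one are set as cluster properties with `crm configure property`. The following is a minimal sketch of a wrapper that accepts only the values listed above and refuses the obsolete value ignore. The function name `set_no_quorum_policy` is hypothetical, and the sketch assumes crmsh is installed and that you run it as root or as the hacluster user.

```shell
#!/bin/bash
# Hypothetical wrapper around "crm configure property".
# Accepts only the no-quorum-policy values described in this section.
set_no_quorum_policy() {
  case "$1" in
    freeze|stop|suicide)
      crm configure property no-quorum-policy="$1"
      ;;
    ignore)
      # Obsolete since SLES 12, so refuse instead of applying it.
      echo "no-quorum-policy=ignore is obsolete and must not be used" >&2
      return 1
      ;;
    *)
      echo "unknown no-quorum-policy value: $1" >&2
      return 1
      ;;
  esac
}
```

For example, `set_no_quorum_policy freeze` would be a reasonable call on a cluster that uses OCFS2 mounts.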

5.3.2 Global option stonith-enabled #

This global option defines whether to apply fencing, allowing STONITH devices to shoot failed nodes and nodes with resources that cannot be stopped. By default, this global option is set to true, because for normal cluster operation it is necessary to use STONITH devices. According to the default value, the cluster will refuse to start any resources if no STONITH resources have been defined.

If you need to disable fencing for any reason, set stonith-enabled to false, but be aware that this has an impact on the support status for your product. Furthermore, with stonith-enabled="false", resources like the Distributed Lock Manager (DLM) and all services depending on DLM (such as lvmlockd, GFS2, and OCFS2) will fail to start.

A cluster without STONITH is not supported.

5.4 Introduction to Hawk2 #

To configure and manage cluster resources, either use Hawk2, or the CRM Shell (crmsh) command line utility.

Hawk2's user-friendly Web interface allows you to monitor and administer your High Availability clusters from Linux or non-Linux machines alike. Hawk2 can be accessed from any machine inside or outside of the cluster by using a (graphical) Web browser.

5.4.1 Hawk2 requirements #

Before users can log in to Hawk2, the following requirements need to be fulfilled:

- hawk2 Package

The hawk2 package must be installed on all cluster nodes you want to connect to with Hawk2.

- Web browser

On the machine from which to access a cluster node using Hawk2, you need a (graphical) Web browser (with JavaScript and cookies enabled) to establish the connection.

- Hawk2 service

To use Hawk2, the respective Web service must be started on the node that you want to connect to via the Web interface. See Procedure 5.1, “Starting Hawk2 services”.

If you have set up your cluster with the scripts from the ha-cluster-bootstrap package, the Hawk2 service is already enabled.

- Username, group and password on each cluster node

Hawk2 users must be members of the haclient group. The installation creates a Linux user named hacluster, who is added to the haclient group.

When using the ha-cluster-init script for setup, a default password is set for the hacluster user. Before starting Hawk2, change it to a secure password. If you did not use the ha-cluster-init script, either set a password for the hacluster user first or create a new user which is a member of the haclient group. Do this on every node you will connect to with Hawk2.

- Wildcard certificate handling

A wildcard certificate is a public key certificate that is valid for multiple sub-domains. For example, a wildcard certificate for *.example.com secures the domains www.example.com, login.example.com, and possibly more.

Hawk2 supports wildcard certificates as well as conventional certificates. A self-signed default private key and certificate is generated by /srv/www/hawk/bin/generate-ssl-cert.

To use your own certificate (conventional or wildcard), replace the generated certificate at /etc/ssl/certs/hawk.pem with your own.

Procedure 5.1: Starting Hawk2 services

On the node you want to connect to, open a shell and log in as root.

Check the status of the service by entering

# systemctl status hawk

If the service is not running, start it with

# systemctl start hawk

If you want Hawk2 to start automatically at boot time, execute the following command:

# systemctl enable hawk
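The steps for starting the service can also be combined into one small shell function. This is only a sketch: the function name `ensure_hawk_service` is hypothetical, and it assumes that it is run as root on the node you want to connect to.

```shell
#!/bin/bash
# Hypothetical helper: make sure the Hawk2 Web service is running
# now and is started automatically at boot time.
ensure_hawk_service() {
  # Start the service only if it is not already active.
  if ! systemctl is-active --quiet hawk; then
    systemctl start hawk || return 1
  fi
  # Enable the service so that it starts at boot time.
  systemctl enable hawk
}
```

Afterward, `systemctl status hawk` should report the service as active.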

5.4.2 Logging in #

The Hawk2 Web interface uses the HTTPS protocol and port 7630.

Instead of logging in to an individual cluster node with Hawk2, you can configure a floating, virtual IP address (IPaddr or IPaddr2) as a cluster resource. It does not need any special configuration. It allows clients to connect to the Hawk service no matter which physical node the service is running on.

When setting up the cluster with the ha-cluster-bootstrap scripts, you will be asked whether to configure a virtual IP for cluster administration.

On any machine, start a Web browser and enter the following URL:

https://HAWKSERVER:7630/

Replace HAWKSERVER with the IP address or host name of any cluster node running the Hawk Web service. If a virtual IP address has been configured for cluster administration with Hawk2, replace HAWKSERVER with the virtual IP address.

Note: Certificate warning

If a certificate warning appears when you try to access the URL for the first time, a self-signed certificate is in use. Self-signed certificates are not considered trustworthy by default.

To verify the certificate, ask your cluster operator for the certificate details.

To proceed anyway, you can add an exception in the browser to bypass the warning.

For information on how to replace the self-signed certificate with a certificate signed by an official Certificate Authority, refer to Replacing the self-signed certificate.

On the Hawk2 login screen, enter the user name and password of the hacluster user (or of any other user that is a member of the haclient group). Then click the login button.
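Before opening a browser, you can probe from a shell whether the Hawk2 Web service answers on port 7630. The following sketch uses curl. The function names `hawk_url` and `hawk_http_status` are hypothetical, and the `-k` option (skip certificate verification) is an assumption that only makes sense while the self-signed default certificate is still in use.

```shell
#!/bin/bash
# Hypothetical helpers for probing the Hawk2 Web service.

# Build the Hawk2 URL for a host name or IP address.
hawk_url() {
  echo "https://$1:7630/"
}

# Print the HTTP status code returned by the service.
# -k skips certificate verification (self-signed certificate),
# --max-time limits the whole request to 5 seconds.
hawk_http_status() {
  curl -k -s -o /dev/null --max-time 5 -w '%{http_code}' "$(hawk_url "$1")"
}
```

For example, `hawk_http_status alice` prints the status code of the node alice. Replace alice with the virtual IP address if one has been configured for cluster administration.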

5.4.3 Hawk2 overview: main elements #

After logging in to Hawk2, you will see a navigation bar on the left-hand side and a top-level row with several links on the right-hand side.

By default, users logged in as root or hacluster have full read-write access to all cluster configuration tasks. However, Access control lists (ACLs) can be used to define fine-grained access permissions.

If ACLs are enabled in the CRM, the available functions in Hawk2 depend on the user role and their assigned access permissions. The [...] in Hawk2 can only be executed by the user hacluster.

5.4.3.2 Top-level row #

Hawk2's top-level row shows the following entries:

- An entry to switch to batch mode. This allows you to simulate and stage changes and to apply them as a single transaction. For details, see Section 5.4.7, “Using the batch mode”.

- An entry to set preferences for Hawk2 (for example, the language for the Web interface, or whether to display a warning if STONITH is disabled).

- An entry to access the SUSE Linux Enterprise High Availability documentation, read the release notes or report a bug.

- An entry to log out.

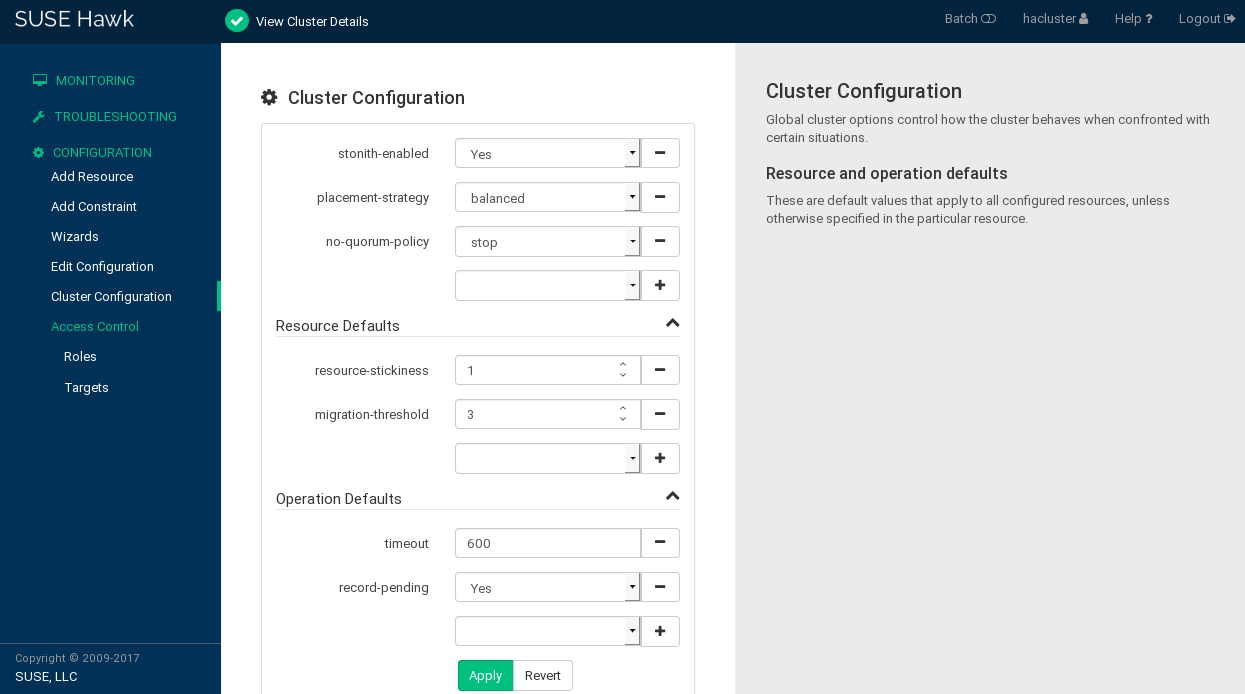

5.4.4 Configuring global cluster options #

Global cluster options control how the cluster behaves when confronted with certain situations. They are grouped into sets and can be viewed and modified with cluster management tools like Hawk2 and crmsh. The predefined values can usually be kept. However, to ensure the key functions of your cluster work correctly, you need to adjust the following parameters after basic cluster setup:

Log in to Hawk2:

https://HAWKSERVER:7630/

From the left navigation bar, open the cluster configuration screen. It displays the global cluster options and their current values.

To display a short description of the parameter on the right-hand side of the screen, hover your mouse over a parameter.

Figure 5.1: Hawk2 cluster configuration

Check the values for no-quorum-policy and stonith-enabled and adjust them, if necessary:

- Set no-quorum-policy to the appropriate value. See Section 5.3.1, “Global option no-quorum-policy” for more details.

- If you need to disable fencing for any reason, set stonith-enabled to no. By default, it is set to true, because using STONITH devices is necessary for normal cluster operation. According to the default value, the cluster will refuse to start any resources if no STONITH resources have been configured.

Important: No support without STONITH

You must have a node fencing mechanism for your cluster. The global cluster options stonith-enabled and startup-fencing must be set to true. When you change them, you lose support.

To remove a parameter from the cluster configuration, click the icon next to the parameter. If a parameter is deleted, the cluster will behave as if that parameter had the default value.

To add a new parameter to the cluster configuration, choose one from the drop-down box.

If you need to change resource defaults or operation defaults, proceed as follows:

To adjust a value, either select a different value from the drop-down box or edit the value directly.

To add a new resource default or operation default, choose one from the empty drop-down box and enter a value. If there are default values, Hawk2 proposes them automatically.

To remove a parameter, click the icon next to it. If no values are specified for resource defaults and operation defaults, the cluster uses the default values that are documented in Section 6.12, “Resource options (meta attributes)” and Section 6.14, “Resource operations”.

Confirm your changes.

5.4.5 Showing the current cluster configuration (CIB) #

Sometimes a cluster administrator needs to know the cluster configuration. Hawk2 can show the current configuration in CRM Shell syntax, as XML and as a graph. All three views are reached from the left navigation bar. The CRM Shell view shows the configuration in CRM Shell syntax, and the XML view shows the raw XML instead. The graph view gives a graphical representation of the nodes and resources configured in the CIB. It also shows the relationships between resources.

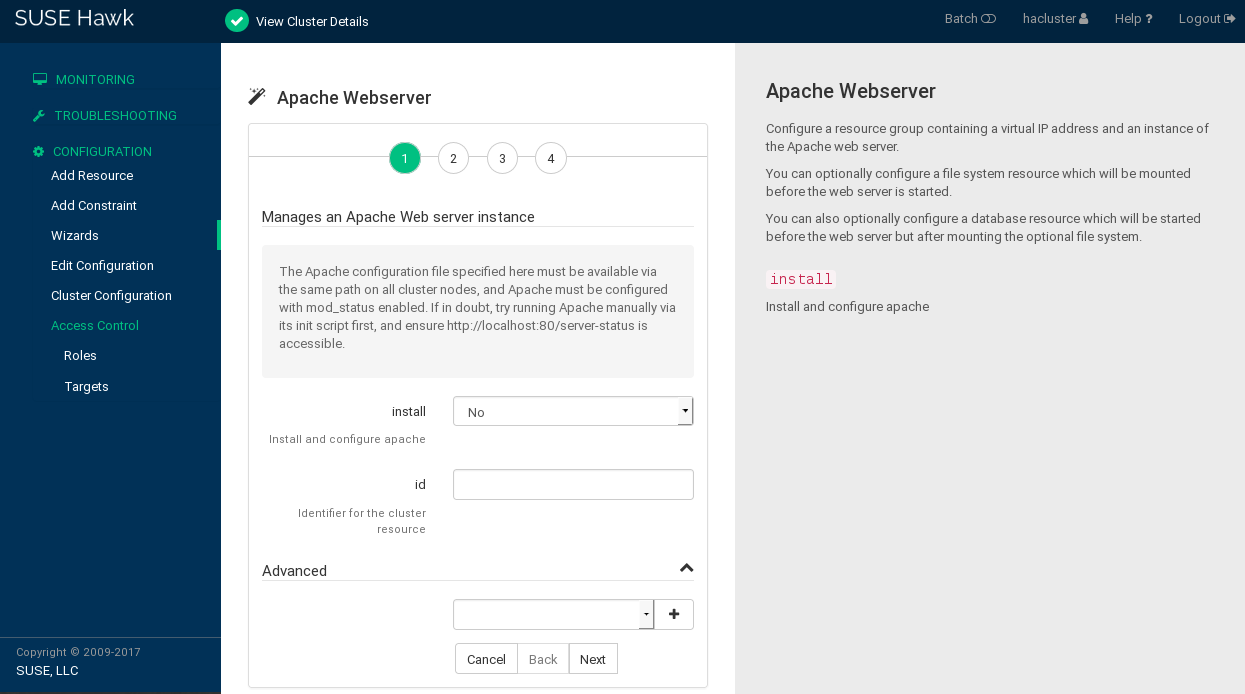

5.4.6 Adding resources with the wizard #

The Hawk2 wizard is a convenient way of setting up simple resources like a virtual IP address or an SBD STONITH resource, for example. It is also useful for complex configurations that include multiple resources, like the resource configuration for a DRBD block device or an Apache Web server. The wizard guides you through the configuration steps and provides information about the parameters you need to enter.

Log in to Hawk2:

https://HAWKSERVER:7630/

From the left navigation bar, open the wizards screen.

Expand the individual categories by clicking the arrow down icon next to them and select the desired wizard.

Follow the instructions on the screen. After the last configuration step, verify the values you have entered.

Hawk2 shows which actions it is going to perform and what the configuration looks like. Depending on the configuration, you might be prompted for the root password before you can apply the configuration.

For more information, see Chapter 6, Configuring cluster resources.

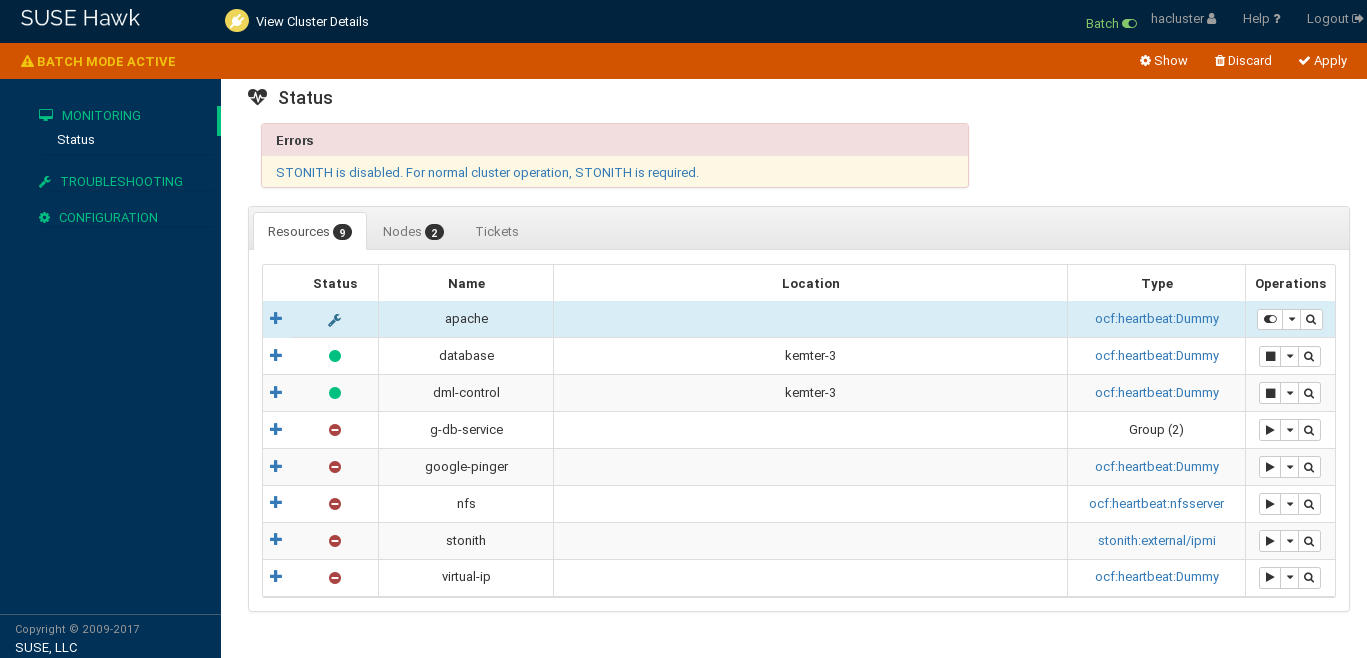

5.4.7 Using the batch mode #

Hawk2 provides a batch mode, including a cluster simulator. It can be used for the following:

- Staging changes to the cluster and applying them as a single transaction, instead of having each change take effect immediately.

- Simulating changes and cluster events, for example, to explore potential failure scenarios.

For example, batch mode can be used when creating groups of resources that depend on each other. Using batch mode, you can avoid applying intermediate or incomplete configurations to the cluster.

While batch mode is enabled, you can add or edit resources and constraints or change the cluster configuration. It is also possible to simulate events in the cluster, including nodes going online or offline, resource operations and tickets being granted or revoked. See Procedure 5.6, “Injecting node, resource or ticket events” for details.

The cluster simulator runs automatically after every change and shows the expected outcome in the user interface. For example, if you stop a resource while in batch mode, the user interface shows the resource as stopped, although the resource is actually still running.

Some wizards include actions beyond mere cluster configuration. When using those wizards in batch mode, any changes that go beyond cluster configuration would be applied to the live system immediately. Therefore, wizards that require root permission cannot be executed in batch mode.

Log in to Hawk2:

https://HAWKSERVER:7630/

To activate the batch mode, select the batch mode entry from the top-level row.

An additional bar appears below the top-level row. It indicates that batch mode is active and contains links to actions that you can execute in batch mode.

Figure 5.3: Hawk2 batch mode activated

While batch mode is active, perform any changes to your cluster, like adding or editing resources and constraints or editing the cluster configuration.

The changes will be simulated and shown in all screens.

To view details of the changes you have made, select the corresponding entry from the batch mode bar. A window with the details opens.

For any configuration changes it shows the difference between the live state and the simulated changes in crmsh syntax: Lines starting with a - character represent the current state whereas lines starting with + show the proposed state.

To inject events or view even more details, see Procedure 5.6. Otherwise close the window.

Choose to either discard or apply the simulated changes and confirm your choice. This also deactivates batch mode and takes you back to normal mode.

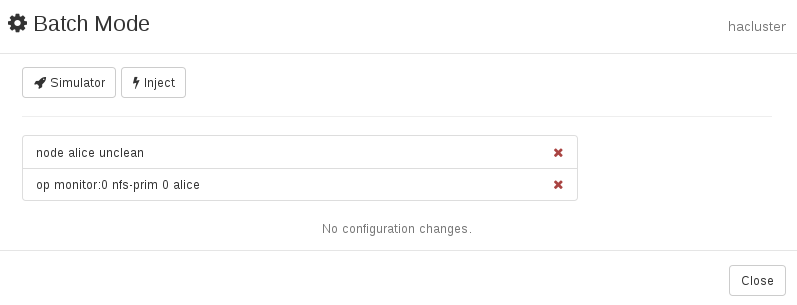

When running in batch mode, Hawk2 also allows you to inject node events, resource events and ticket events.

- Node events

Let you change the state of a node, for example, to simulate a node going online or offline.

- Resource events

Let you change some properties of a resource. For example, you can set an operation (like start, stop, monitor), the node it applies to, and the expected result to be simulated.

- Ticket events

Let you test the impact of granting and revoking tickets (used for Geo clusters).

Log in to Hawk2:

https://HAWKSERVER:7630/

If batch mode is not active yet, click the batch mode entry in the top-level row to switch to batch mode.

In the batch mode bar, open the batch mode window.

To simulate a status change of a node:

Choose to inject a node event.

Select the node you want to manipulate and select its target state.

Confirm your changes. Your event is added to the queue of events listed in the dialog.

To simulate a resource operation:

Choose to inject a resource event.

Select the resource you want to manipulate and select the operation to simulate.

If necessary, define an interval.

Select the node on which to run the operation and the targeted result.

Confirm your changes. Your event is added to the queue of events listed in the dialog.

To simulate a ticket action:

Choose to inject a ticket event.

Select the ticket you want to manipulate and select the action to simulate.

Confirm your changes. Your event is added to the queue of events listed in the dialog.

The dialog (Figure 5.4) shows a new line per injected event. Any event listed here is simulated immediately and is reflected on the screen.

If you have made any configuration changes, too, the difference between the live state and the simulated changes is shown below the injected events.

Figure 5.4: Hawk2 batch mode, injected events and configuration changes

To remove an injected event, click the icon next to it. Hawk2 updates the screen accordingly.

To view more details about the simulation run, choose one of the following views:

- A detailed summary.

- The initial CIB state, and what the CIB would look like after the transition.

- A graphical representation of the transition.

- An XML representation of the transition.

When you have reviewed the simulated changes, close the window.

To leave the batch mode, either discard or apply the simulated changes.

5.5 Introduction to crmsh #

To configure and manage cluster resources, either use the CRM Shell (crmsh) command line utility or Hawk2, a Web-based user interface.

This section introduces the command line tool crm. The crm command has several subcommands which manage resources, CIBs, nodes, resource agents, and others. It offers a thorough help system with embedded examples. All examples follow a naming convention described in Appendix B.

Events are logged to /var/log/crmsh/crmsh.log.

Sufficient privileges are necessary to manage a cluster. The crm command and its subcommands need to be run either as root user or as the CRM owner user (typically the user hacluster).

However, the user option allows you to run crm and its subcommands as a regular (unprivileged) user and to change its ID using sudo whenever necessary. For example, with the following command crm will use hacluster as the privileged user ID:

# crm options user hacluster

Note that you need to set up /etc/sudoers so that sudo does not ask for a password.

By using crm without arguments (or with only one sublevel as argument), the CRM Shell enters the interactive mode. This mode is indicated by the following prompt:

crm(live/HOSTNAME)

For readability reasons, we omit the host name in the interactive crm prompts in our documentation. We only include the host name if you need to run the interactive shell on a specific node, like alice for example:

crm(live/alice)

5.5.1 Getting help #

Help can be accessed in several ways:

- To output the usage of crm and its command line options:

# crm --help

- To give a list of all available commands:

# crm help

- To access other help sections, not just the command reference:

# crm help topics

- To view the extensive help text of the configure subcommand:

# crm configure help

- To print the syntax, its usage, and examples of the group subcommand of configure:

# crm configure help group

This is the same:

# crm help configure group

Almost all output of the help subcommand (do not mix it up with the --help option) opens a text viewer. This text viewer allows you to scroll up or down and read the help text more comfortably. To leave the text viewer, press the Q key.

crmsh supports full tab completion in Bash directly, not only for the interactive shell. For example, typing crm help config and pressing →| completes the word like in the interactive shell.

5.5.2 Executing crmsh's subcommands #

The crm command itself can be used in the following ways:

- Directly: Concatenate all subcommands to crm, press Enter and you see the output immediately. For example, enter crm help ra to get information about the ra subcommand (resource agents).

It is possible to abbreviate subcommands as long as they are unique. For example, you can shorten status as st and crmsh will know what you have meant.

Another feature is to shorten parameters. Usually, you add parameters through the params keyword. You can leave out the params section if it is the first and only section. For example, this line:

# crm primitive ipaddr IPaddr2 params ip=192.168.0.55

is equivalent to this line:

# crm primitive ipaddr IPaddr2 ip=192.168.0.55

- As crm shell script: Crm shell scripts contain subcommands of crm. For more information, see Section 5.5.4, “Using crmsh's shell scripts”.

- As crmsh cluster scripts: These are a collection of metadata, references to RPM packages, configuration files, and crmsh subcommands bundled under a single, yet descriptive name. They are managed through the crm script command.

Do not confuse them with crmsh shell scripts: although both share some common objectives, the crm shell scripts only contain subcommands whereas cluster scripts incorporate much more than a simple enumeration of commands. For more information, see Section 5.5.5, “Using crmsh's cluster scripts”.

- Interactive as internal shell: Type crm to enter the internal shell. The prompt changes to crm(live). With help you can get an overview of the available subcommands. As the internal shell has different levels of subcommands, you can “enter” one by typing this subcommand and pressing Enter.

For example, if you type resource you enter the resource management level. Your prompt changes to crm(live)resource#. To leave the internal shell, use the command quit. If you need to go one level back, use back, up, end, or cd.

You can enter the level directly by typing crm and the respective subcommand(s) without any options and pressing Enter.

The internal shell also supports tab completion for subcommands and resources. Type the beginning of a command, press →| and crm completes the respective object.

In addition to the previously explained methods, crmsh also supports synchronous command execution. Use the -w option to activate it. If you have started crm without -w, you can enable it later with the user preference's wait set to yes (options wait yes). If this option is enabled, crm waits until the transition is finished. Whenever a transaction is started, dots are printed to indicate progress. Synchronous command execution is only applicable for commands like resource start.

The crm tool has management capability (the subcommands resource and node) and can be used for configuration (cib, configure).

The following subsections give you an overview of some important aspects of the crm tool.

5.5.3 Displaying information about OCF resource agents #

As you need to deal with resource agents in your cluster configuration all the time, the crm tool contains the ra command. Use it to show information about resource agents and to manage them (for additional information, see also Section 6.2, “Supported resource agent classes”):

# crm ra
crm(live)ra#

The command classes lists all classes and providers:

crm(live)ra# classes
lsb
ocf / heartbeat linbit lvm2 ocfs2 pacemaker
service
stonith
systemd

To get an overview of all available resource agents for a class (and provider) use the list command:

crm(live)ra# list ocf
AoEtarget AudibleAlarm CTDB ClusterMon
Delay Dummy EvmsSCC Evmsd
Filesystem HealthCPU HealthSMART ICP
IPaddr IPaddr2 IPsrcaddr IPv6addr
LVM LinuxSCSI MailTo ManageRAID
ManageVE Pure-FTPd Raid1 Route
SAPDatabase SAPInstance SendArp ServeRAID
...

An overview of a resource agent can be viewed with info:

crm(live)ra# info ocf:linbit:drbd
This resource agent manages a DRBD* resource as a master/slave resource. DRBD is a shared-nothing replicated storage device. (ocf:linbit:drbd)

Master/Slave OCF Resource Agent for DRBD

Parameters (* denotes required, [] the default):

drbd_resource* (string): drbd resource name
    The name of the drbd resource from the drbd.conf file.

drbdconf (string, [/etc/drbd.conf]): Path to drbd.conf
    Full path to the drbd.conf file.

Operations' defaults (advisory minimum):

    start timeout=240
    promote timeout=90
    demote timeout=90
    notify timeout=90
    stop timeout=100
    monitor_Slave_0 interval=20 timeout=20 start-delay=1m
    monitor_Master_0 interval=10 timeout=20 start-delay=1m

Leave the viewer by pressing Q.

crm directly

In the previous example we used the internal shell of the crm command. However, you do not necessarily need to use it. You get the same results if you add the respective subcommands to crm. For example, you can list all the OCF resource agents by entering crm ra list ocf in your shell.

5.5.4 Using crmsh's shell scripts #

The crmsh shell scripts provide a convenient way to enumerate crmsh subcommands into a file. This makes it easy to comment specific lines or to replay them later. Keep in mind that a crmsh shell script can contain only crmsh subcommands. Any other commands are not allowed.

Before you can use a crmsh shell script, create a file with specific commands. For example, the following file prints the status of the cluster and gives a list of all nodes:

# A small example file with some crm subcommands
status
node list

Any line starting with the hash symbol (#) is a comment and is ignored. If a line is too long, insert a backslash (\) at the end and continue in the next line. It is recommended to indent lines that belong to a certain subcommand to improve readability.

To use this script, use one of the following methods:

# crm -f example.cli

# crm < example.cli
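Creating the script file and executing it can be sketched in Bash as follows. The function names `write_example_cli` and `run_cli` are hypothetical. The file content is the example from above, and running it assumes that crmsh is installed and that you have sufficient privileges.

```shell
#!/bin/bash
# Hypothetical helper: write a small crmsh shell script to the given path.
write_example_cli() {
  cat > "$1" <<'EOF'
# A small example file with some crm subcommands
status
node list
EOF
}

# Hypothetical helper: execute a crmsh shell script with "crm -f".
run_cli() {
  crm -f "$1"
}
```

For example, `write_example_cli example.cli` followed by `run_cli example.cli` prints the cluster status and the list of nodes.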

5.5.5 Using crmsh's cluster scripts #

Collecting information from all cluster nodes and deploying any changes is a key cluster administration task. Instead of performing the same procedures manually on different nodes (which is error-prone), you can use the crmsh cluster scripts.

Do not confuse them with the crmsh shell scripts, which are explained in Section 5.5.4, “Using crmsh's shell scripts”.

In contrast to crmsh shell scripts, cluster scripts perform additional tasks like:

- Installing software that is required for a specific task.

- Creating or modifying any configuration files.

- Collecting information and reporting potential problems with the cluster.

- Deploying the changes to all nodes.

crmsh cluster scripts do not replace other tools for managing clusters. Instead, they provide an integrated way to perform the above tasks across the cluster. Find detailed information at http://crmsh.github.io/scripts/.

5.5.5.1 Usage #

To get a list of all available cluster scripts, run:

# crm script list

To view the components of a script, use the show command and the name of the cluster script, for example:

# crm script show mailto
mailto (Basic)
MailTo

This is a resource agent for MailTo. It sends email to a sysadmin whenever a takeover occurs.

1. Notifies recipients by email in the event of resource takeover

  id (required) (unique)
      Identifier for the cluster resource
  email (required)
      Email address
  subject
      Subject

The output of show contains a title, a short description, and a procedure. Each procedure is divided into a series of steps, performed in the given order.

Each step contains a list of required and optional parameters, along with a short description and its default value.

Each cluster script understands a set of common parameters. These parameters can be passed to any script:

| Parameter | Argument | Description |
|---|---|---|
| action | INDEX | If set, only execute a single action (index, as returned by verify) |
| dry_run | BOOL | If set, simulate execution only (default: no) |
| nodes | LIST | List of nodes to execute the script for |
| port | NUMBER | Port to connect to |
| statefile | FILE | When single-stepping, the state is saved in the given file |
| sudo | BOOL | If set, crm will prompt for a sudo password and use sudo where appropriate (default: no) |
| timeout | NUMBER | Execution timeout in seconds (default: 600) |
| user | USER | Run script as the given user |
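As an illustration of how a common parameter might be combined with script-specific ones, the following sketch wraps `crm script run` in a small shell function that always appends `dry_run=yes`. The function name `script_dry_run` is hypothetical. Passing the boolean as `yes` is an assumption derived from the `(default: no)` wording in the table, so check it against your crmsh version.

```shell
#!/bin/sh
# Hypothetical helper: simulate a cluster script without changing anything.
# Assumption: common parameters are passed as name=value pairs, in the same
# way as script-specific parameters, and a BOOL accepts "yes".
script_dry_run() {
    script_name=$1
    shift
    # The remaining arguments are the script's own parameters (id=..., etc.).
    crm script run "$script_name" "$@" dry_run=yes
}

# Example call (needs crmsh and a running cluster):
# script_dry_run mailto id=sysadmin email=tux@example.org
```

Keeping the dry run in a wrapper makes it harder to forget the parameter when you only want to preview a script.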

5.5.5.2 Verifying and running a cluster script #

A cluster script can perform a series of actions and may fail for various reasons. Before running a cluster script, review the actions that it will perform and verify its parameters to avoid problems.

For example, the mailto resource agent

requires a unique identifier and an e-mail address. To verify these

parameters, run:

# crm script verify mailto id=sysadmin email=tux@example.org
1. Ensure mail package is installed

        mailx

2. Configure cluster resources

        primitive sysadmin MailTo
                email="tux@example.org"
                op start timeout="10"
                op stop timeout="10"
                op monitor interval="10" timeout="10"

        clone c-sysadmin sysadmin

The verify command prints the steps and replaces any placeholders with your given parameters. If verify finds any problems, it reports them.

If everything is OK, replace the verify

command with run:

# crm script run mailto id=sysadmin email=tux@example.org
INFO: MailTo
INFO: Nodes: alice, bob
OK: Ensure mail package is installed
OK: Configure cluster resources

Check whether your resource is integrated into your cluster

with crm status:

# crm status
[...]
 Clone Set: c-sysadmin [sysadmin]
     Started: [ alice bob ]
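The verify-then-run sequence described in this section could be chained in a small shell function, as in the following sketch. The function name `verify_then_run` is hypothetical. The sketch assumes that `crm script verify` exits with a non-zero status when it finds a problem, which you should confirm on your system before relying on it.

```shell
#!/bin/sh
# Hypothetical helper: run a cluster script only if verification succeeds.
# Assumption: `crm script verify` returns a non-zero exit status on problems.
verify_then_run() {
    if crm script verify "$@"; then
        # Same script name and parameters as were just verified.
        crm script run "$@"
    else
        echo "verification failed, not running: $*" >&2
        return 1
    fi
}

# Example call (needs crmsh and a running cluster):
# verify_then_run mailto id=sysadmin email=tux@example.org
```

Passing the same argument list to both commands ensures that the parameters you verified are exactly the ones that get executed.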

5.5.6 Using configuration templates #

The use of configuration templates is deprecated and will be removed in the future. Configuration templates will be replaced by cluster scripts. See Section 5.5.5, “Using crmsh's cluster scripts”.

Configuration templates are ready-made cluster configurations for

crmsh. Do not confuse them with the resource

templates (as described in

Section 6.8.2, “Creating resource templates with crmsh”). Those are

templates for the cluster and not for the crm

shell.

Configuration templates require minimum effort to be tailored to the particular user's needs. Whenever a template creates a configuration, warning messages give hints which can be edited later for further customization.

The following procedure shows how to create a simple yet functional Apache configuration:

1. Log in as root and start the crm interactive shell:

   # crm configure

2. Create a new configuration from a configuration template:

   a. Switch to the template subcommand:

      crm(live)configure# template

   b. List the available configuration templates:

      crm(live)configure template# list templates
      gfs2-base   filesystem  virtual-ip  apache   clvm     ocfs2    gfs2

   c. Decide which configuration template you need. As we need an Apache configuration, we select the apache template and name it g-intranet:

      crm(live)configure template# new g-intranet apache
      INFO: pulling in template apache
      INFO: pulling in template virtual-ip

3. Define your parameters:

   a. List the configuration you have created:

      crm(live)configure template# list
      g-intranet

   b. Display the minimum required changes that need to be filled out by you:

      crm(live)configure template# show
      ERROR: 23: required parameter ip not set
      ERROR: 61: required parameter id not set
      ERROR: 65: required parameter configfile not set

   c. Invoke your preferred text editor and fill out all lines that have been displayed as errors in Step 3.b:

      crm(live)configure template# edit

4. Show the configuration and check whether it is valid (the parameter values depend on the configuration you have entered in Step 3.c):

   crm(live)configure template# show
   primitive virtual-ip ocf:heartbeat:IPaddr \
       params ip="192.168.1.101"
   primitive apache apache \
       params configfile="/etc/apache2/httpd.conf"
   monitor apache 120s:60s
   group g-intranet \
       apache virtual-ip

5. Apply the configuration:

   crm(live)configure template# apply
   crm(live)configure# cd ..
   crm(live)configure# show

6. Submit your changes to the CIB:

   crm(live)configure# commit

It is possible to simplify the commands even more, if you know the details. The above procedure can be summarized with the following command on the shell:

# crm configure template \
   new g-intranet apache params \
   configfile="/etc/apache2/httpd.conf" ip="192.168.1.101"

If you are inside your internal crm shell, use the

following command:

crm(live)configure template# new intranet apache params \
   configfile="/etc/apache2/httpd.conf" ip="192.168.1.101"

However, the previous command only creates its configuration from the configuration template. It neither applies nor commits it to the CIB.

5.5.7 Testing with shadow configuration #

A shadow configuration is used to test different configuration scenarios. If you have created several shadow configurations, you can test them one by one to see the effects of your changes.

The usual process looks like this:

1. Log in as root and start the crm interactive shell:

   # crm configure

2. Create a new shadow configuration:

   crm(live)configure# cib new myNewConfig
   INFO: myNewConfig shadow CIB created

   If you omit the name of the shadow CIB, a temporary name @tmp@ is created.

3. To copy the current live configuration into your shadow configuration, use the following command, otherwise skip this step:

   crm(myNewConfig)# cib reset myNewConfig

   The previous command makes it easier to modify any existing resources later.

4. Make your changes as usual. After you have created the shadow configuration, all changes go there. To save all your changes, use the following command:

   crm(myNewConfig)# commit

5. If you need the live cluster configuration again, switch back with the following command:

   crm(myNewConfig)configure# cib use live
   crm(live)#
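The shadow workflow can also be driven from the system shell instead of the interactive crm shell. The following sketch is one possible way to do it. The function name `try_in_shadow` is hypothetical. The sketch assumes that the crm `-c` option selects a shadow CIB for a single invocation and that the `cib` subcommands work non-interactively. The `Dummy` resource in the example call is only a placeholder.

```shell
#!/bin/sh
# Hypothetical helper: try a configure command in a shadow CIB first.
# Assumptions: `crm -c NAME` works on the shadow CIB called NAME, and
# `crm cib new` can be called outside the interactive shell.
try_in_shadow() {
    shadow=$1
    shift
    # Create the shadow configuration; stop if that fails.
    crm cib new "$shadow" || return 1
    # Apply the given configure command to the shadow CIB only.
    crm -c "$shadow" configure "$@" || return 1
    # Print the result for review; the live cluster is still untouched.
    crm -c "$shadow" configure show
}

# Example call (needs crmsh and a running cluster):
# try_in_shadow myNewConfig primitive dummy ocf:heartbeat:Dummy
# After review, the shadow CIB could be applied with:
# crm cib commit myNewConfig
```

Keeping the commit as a separate manual step leaves room to inspect the shadow configuration before anything reaches the live cluster.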

5.5.8 Debugging your configuration changes #

Before loading your configuration changes back into the cluster, it is

recommended to review your changes with ptest. The

ptest command can show a diagram of actions that will

be induced by committing the changes. You need the

graphviz package to display the diagrams. The

following example is a transcript, adding a monitor operation:

# crm configure
crm(live)configure# show fence-bob
primitive fence-bob stonith:apcsmart \
        params hostlist="bob"
crm(live)configure# monitor fence-bob 120m:60s
crm(live)configure# show changed
primitive fence-bob stonith:apcsmart \
        params hostlist="bob" \
        op monitor interval="120m" timeout="60s"
crm(live)configure# ptest
crm(live)configure# commit

5.5.9 Cluster diagram #

To output a cluster diagram, use the command crm configure graph. It displays the current configuration in a graphical window and therefore requires X11.

If you prefer Scalable Vector Graphics (SVG), use the following command:

# crm configure graph dot config.svg svg

5.5.10 Managing Corosync configuration #

Corosync is the underlying messaging layer for most HA clusters. The

corosync subcommand provides commands for editing and

managing the Corosync configuration.

For example, to list the status of the cluster, use

status:

# crm corosync status
Printing ring status.
Local node ID 175704363
RING ID 0
        id      = 10.121.9.43
        status  = ring 0 active with no faults
Quorum information
------------------
Date:             Thu May  8 16:41:56 2014
Quorum provider:  corosync_votequorum
Nodes:            2
Node ID:          175704363
Ring ID:          4032
Quorate:          Yes

Votequorum information
----------------------
Expected votes:   2
Highest expected: 2
Total votes:      2
Quorum:           2
Flags:            Quorate

Membership information
----------------------
    Nodeid      Votes Name
 175704363          1 alice.example.com (local)
 175704619          1 bob.example.com

The diff command is very helpful: It compares the Corosync configuration on all nodes (if not stated otherwise) and prints the differences between them:

# crm corosync diff
--- bob
+++ alice
@@ -46,2 +46,2 @@
-       expected_votes: 2
-       two_node: 1
+       expected_votes: 1
+       two_node: 0
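When the diff is long, it can help to reduce it to the names of the settings that differ. The following sketch does this with standard text tools. The function name `changed_settings` is hypothetical, and the sketch assumes the output is a unified diff of `key: value` lines as in the example above.

```shell
#!/bin/sh
# Hypothetical helper: list the settings that differ in a unified diff
# read from standard input, such as the output of `crm corosync diff`.
changed_settings() {
    # Keep lines that start with a single + or -, which skips the
    # "--- node" and "+++ node" headers and the @@ hunk markers.
    grep -E '^[+-][^+-]' |
        # Strip the marker and indentation, keep the key before the colon.
        sed -E 's/^[+-][[:space:]]*([^:]+):.*/\1/' |
        # Report each key once.
        sort -u
}

# Example call (needs crmsh and a running cluster):
# crm corosync diff | changed_settings
```

For the diff shown above, this would list `expected_votes` and `two_node`.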

For more details, see http://crmsh.nongnu.org/crm.8.html#cmdhelp_corosync.

5.5.11 Setting passwords independent of cib.xml #

If your cluster configuration contains sensitive information, such as passwords, it should be stored in local files. That way, these parameters will never be logged or leaked in support reports.

Before using secret, run the show command to get an overview of all your resources:

# crm configure show
primitive mydb mysql \
   params replication_user=admin ...

To set a password for the above mydb

resource, use the following commands:

# crm resource secret mydb set passwd linux
INFO: syncing /var/lib/heartbeat/lrm/secrets/mydb/passwd to [your node list]

You can get the saved password back with:

# crm resource secret mydb show passwd
linux

Note that the parameters need to be synchronized between nodes; the crm resource secret command takes care of that. We highly recommend using only this command to manage secret parameters.

5.6 For more information #

- http://crmsh.github.io/

Home page of the CRM Shell (crmsh), the advanced command line interface for High Availability cluster management.

- http://crmsh.github.io/documentation

Holds several documents about the CRM Shell, including a Getting Started tutorial for basic cluster setup with crmsh and the comprehensive Manual for the CRM Shell. The latter is available at http://crmsh.github.io/man-2.0/. Find the tutorial at http://crmsh.github.io/start-guide/.

- http://clusterlabs.org/

Home page of Pacemaker, the cluster resource manager shipped with SUSE Linux Enterprise High Availability.

- https://clusterlabs.org/projects/pacemaker/doc/

Holds several comprehensive manuals and some shorter documents explaining general concepts. For example:

Pacemaker Explained: Contains comprehensive and very detailed information for reference.

Colocation Explained

Ordering Explained