Optimizing Linux for AMD EPYC™ 7002 Series Processors with SUSE Linux Enterprise 15 SP1

Tuning & Performance

This document provides an overview of the AMD EPYC* 7002 Series Processors and describes how some computationally intensive workloads can be tuned on SUSE Linux Enterprise Server 15 SP1.

Disclaimer: Documents published as part of the SUSE Best Practices series have been contributed voluntarily by SUSE employees and third parties. They are meant to serve as examples of how particular actions can be performed. They have been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. SUSE cannot verify that actions described in these documents do what is claimed or whether actions described have unintended consequences. SUSE LLC, its affiliates, the authors, and the translators may not be held liable for possible errors or the consequences thereof.

1 Overview

The AMD EPYC 7002 Series Processor is the latest generation of the AMD64 System-on-Chip (SoC) processor family. It is based on the Zen 2 microarchitecture introduced in 2019, supporting up to 64 cores (128 threads) and 8 memory channels per socket. At the time of writing, 1-socket and 2-socket models are available from Original Equipment Manufacturers (OEMs). This document provides an overview of the AMD EPYC 7002 Series Processor and how computationally intensive workloads can be tuned on SUSE Linux Enterprise Server 15 SP1.

2 AMD EPYC 7002 Series Processor Architecture

Symmetric multiprocessing (SMP) systems are those that contain two or more physical processing cores. Each core may have two threads if Simultaneous Multi-Threading (SMT) is enabled, with some resources being shared between SMT siblings. To minimize access latencies, multiple layers of caches are used, with each level being larger but with higher access costs. Cores may share different levels of cache, which should be considered when tuning for a workload.

Historically, a single socket contained several cores sharing a hierarchy of caches and memory channels, and multiple sockets were connected via a memory interconnect. Modern configurations may have multiple dies as a Multi-Chip Module (MCM) with one set of interconnects within the socket and a separate interconnect for each socket. In practical terms, it means that some CPUs and memory are faster to access than others depending on the “distance”. This should be considered when tuning for Non-Uniform Memory Architecture (NUMA), as not all memory accesses are necessarily to local memory, which incurs a variable access penalty.

The 2nd Generation AMD EPYC Processor has an MCM design with up to nine dies on each package. This is significantly different to the 1st Generation AMD EPYC Processor design. One die is a central IO die through which all off-chip communication passes. The basic building block of a compute die is a four-core Core CompleX (CCX) with its own L1-L3 cache hierarchy. One Core Complex Die (CCD) consists of two CCXs with an Infinity Link to the IO die. A 64-core 2nd Generation AMD EPYC Processor socket therefore consists of 8 CCDs containing 16 CCXs, or 64 cores in total, with one additional IO die for 9 dies in total. Figure 1, “AMD EPYC 7002 Series Processor High Level View of CCD” shows a high-level view of the CCD.

Communication between the chip and memory happens via the IO die. Each CCD has one dedicated Infinity Fabric link to the die and two memory channels per CCD located on the die. The practical consequence of this architecture versus the 1st Generation AMD EPYC Processor is that the topology is simpler. The first generation had separate memory channels per die and links between dies giving two levels of NUMA distance within a single socket and a third distance when communicating between sockets. This meant that a two-socket machine for EPYC had 4 NUMA nodes (3 levels of NUMA distance). The 2nd Generation AMD EPYC Processor has only 2 NUMA nodes (2 levels of NUMA distance) which makes it easier to tune and optimize.

The IO die has a total of 8 memory controllers, each with 2 channels supporting 1 DDR4 Dual Inline Memory Modules (DIMMs) each (16 DIMMs in total). With a peak DDR4 frequency of 3.2GHz, this yields a total of 204.8 GB/sec peak theoretical bandwidth. The exact bandwidth, however, depends on the DIMMs selected, the number of memory channels populated and the efficiency of the application. The maximum total capacity is 4 TB.

Power management on the links is careful to minimize the amount of power required. If the links are idle then the power may be used to boost the frequency of individual cores. Hence, minimizing access is not only important from a memory access latency point of view, but it also has an impact on the speed of individual cores.

There are eight IO x16 PCIe 4.0 lanes per socket where lanes can be used as Infinity links, PCI Express links or SATA links with a limit of 32 SATA or NVMe devices. This allows very large IO configurations and a high degree of flexibility, given that either IO bandwidth or the bandwidth between sockets can be optimized depending on the OEM requirements. The most likely configuration is that the number of PCIe links will be the same for 1-socket and 2-socket machines, given that some lanes per socket will be used for inter-socket communication. The upshot is that a 1-socket or 2-socket configuration does not need to compromise on the available IO channels. The exact configuration used depends on the platform.

3 AMD EPYC 7002 Series Processor Topology

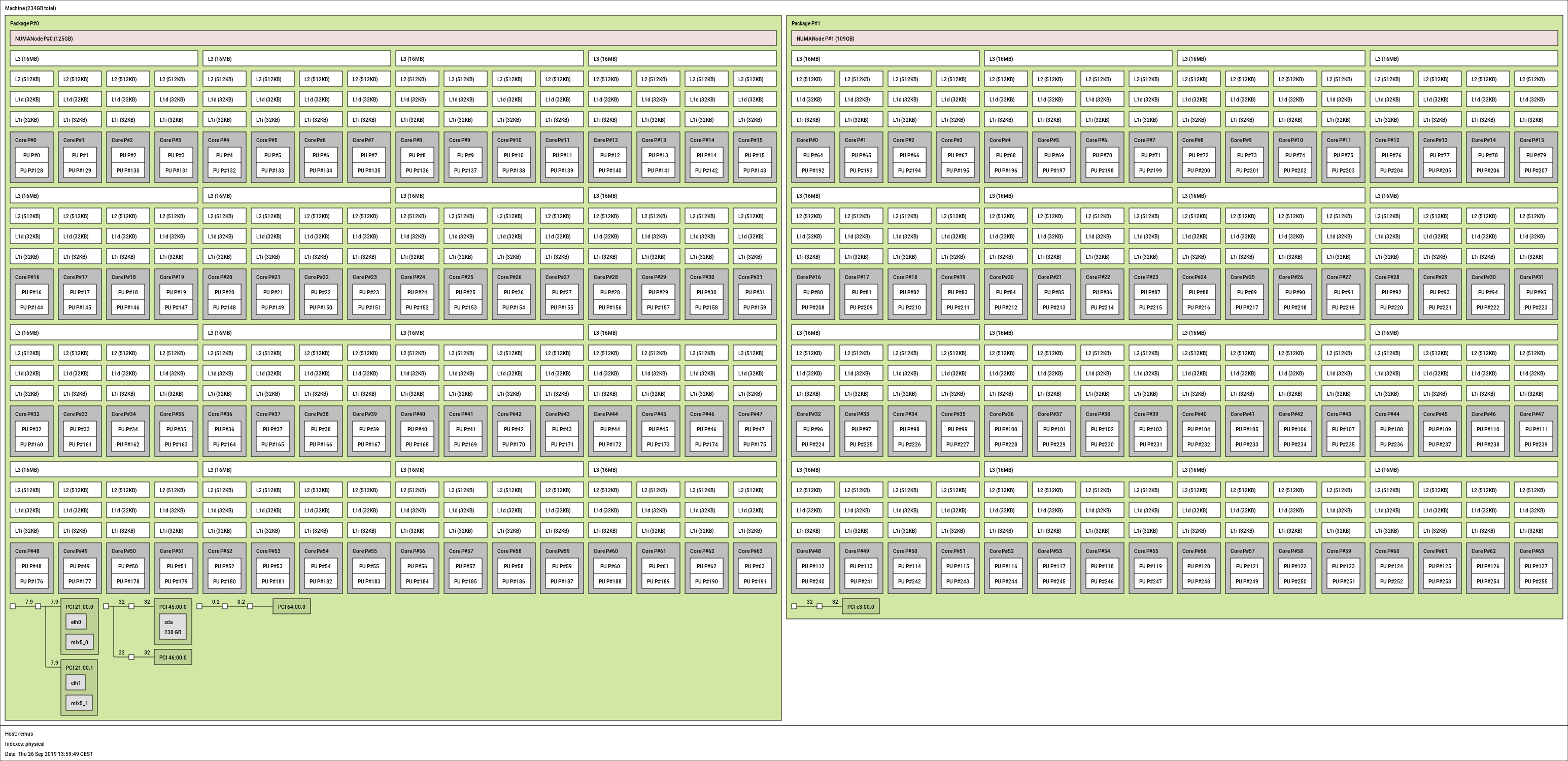

Figure 2, “AMD EPYC 7002 Series Processor Topology” below shows the topology of an example machine generated by the lstopo tool.

This tool is part of the hwloc package which is not supported in SUSE Linux Enterprise Server 15 SP1 but can be installed for illustration. The two “packages” correspond to each socket. The CCXs consisting of 4 cores (8 threads) each should be clear as each CCX has 1 L3 cache. What is not obvious are the links to the IO die, but they should be taken into account when splitting a workload to optimize bandwidth to memory. In this example, the IO channels are not heavily used, as the focus will be on CPU and memory-intensive loads. If optimizing for IO, it is recommended, where possible, that the workload is located on the nodes local to the IO channel.

The computer output below shows a conventional view of the topology using the numactl tool, slightly edited for clarity. The CPU IDs that map to each node are reported on the “node X cpus:” lines. Note the NUMA distances in the table at the bottom of the computer output. Node 0 and node 1 are a distance of 32 apart as they are on separate sockets. The distance is not a guarantee of the access latency. But it is a rule of thumb that accesses between sockets are roughly twice the cost of accessing another die on the same socket.

    epyc:~ # numactl --hardware
    available: 2 nodes (0-1)
    node 0 cpus: 0 ... 63 128 ... 191
    node 0 size: 128255 MB
    node 0 free: 125477 MB
    node 1 cpus: 64 ... 127 ... 255
    node 1 size: 111609 MB
    node 1 free: 111048 MB
    node distances:
    node   0   1
      0:  10  32
      1:  32  10

Finally, the cache topology can be discovered in a variety of fashions. While lstopo can provide the information, it is not always available. Fortunately, the level, size and ID of CPUs that share cache can be identified from the files under /sys/devices/system/cpu/cpuN/cache.
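As a minimal sketch of reading those sysfs files, the function below prints one line per cache visible to a CPU. The function name `show_cpu_caches` and its optional second argument (an alternative sysfs root, useful for testing) are assumptions made for illustration. The file names `level`, `type`, `size` and `shared_cpu_list` are the standard entries under each `indexN` directory.

```shell
#!/bin/bash
# Print level, type, size and sharing CPUs for every cache seen by one CPU.
# show_cpu_caches is a hypothetical helper; the second argument exists only
# so that the function can be pointed at a different directory tree.
show_cpu_caches() {
    local cpu="${1:-0}"
    local root="${2:-/sys/devices/system/cpu}"
    local idx
    for idx in "$root/cpu$cpu"/cache/index*; do
        # Skip silently if the glob did not match or the entry is unreadable.
        [ -r "$idx/level" ] || continue
        printf 'L%s %s %s shared_cpu_list=%s\n' \
            "$(cat "$idx/level" 2>/dev/null)" \
            "$(cat "$idx/type" 2>/dev/null)" \
            "$(cat "$idx/size" 2>/dev/null)" \
            "$(cat "$idx/shared_cpu_list" 2>/dev/null)"
    done
}

# Example: caches seen by CPU 0, if the directory exists on this machine.
if [ -d /sys/devices/system/cpu/cpu0/cache ]; then
    show_cpu_caches 0
fi
```

The `shared_cpu_list` value of the L3 entry identifies the CPUs of one CCX, which is the list that can be passed to `taskset -c`.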

4 Memory and CPU Binding

NUMA is a scalable memory architecture for multiprocessor systems that can reduce contention on a memory channel. A full discussion on tuning for NUMA is beyond the scope of this paper. But the document “A NUMA API for Linux” at http://developer.amd.com/wordpress/media/2012/10/LibNUMA-WP-fv1.pdf provides a valuable introduction.

The default policy for programs is the “local policy”. A program which calls malloc() or mmap() reserves virtual address space but does not immediately allocate physical memory. The physical memory is allocated the first time the address is accessed by any thread and, if possible, the memory will be local to the accessing CPU. If the mapping is of a file, the first access may have occurred at any time in the past so there are no guarantees about locality.

When memory is allocated to a node, it is unlikely to move if a thread changes to a CPU on another node or if multiple programs are accessing the data remotely, unless Automatic NUMA Balancing (NUMAB) is enabled. When NUMAB is enabled, unbound process accesses are sampled and, if there are enough remote accesses, the data will be migrated to local memory. This mechanism is not perfect and incurs overhead of its own. For performance, it can therefore be important that thread and process migrations between nodes are minimized and that memory placement is carefully considered and tuned.

The taskset tool is used to set or get the CPU affinity for new or existing processes. An example use is to confine a new process to CPUs local to one node. Where possible, local memory will be used. But if the total required memory is larger than the node, then remote memory can still be used. In such configurations, it is recommended to size the workload such that it fits in the node. This avoids any of the data being paged out when kswapd wakes to reclaim memory from the local node.

numactl controls both memory and CPU policies for processes that it launches and can modify existing processes. In many respects, the parameters are easier to specify than taskset. For example, it can bind a task to all CPUs on a specified node instead of having to specify individual CPUs with taskset. Most importantly, it can set the memory allocation policy without requiring application awareness.

Using policies, a preferred node can be specified where the task will use that node if memory is available. This is typically used in combination with binding the task to CPUs on that node. If a workload's memory requirements are larger than a single node and predictable performance is required then the “interleave” policy will round-robin allocations from allowed nodes. This gives sub-optimal but predictable access latencies to main memory. More importantly, interleaving reduces the probability that the OS will need to reclaim any data belonging to a large task.
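The policies described above map onto numactl options as sketched below. The wrapper name `numa_launch`, the `DRY_RUN` variable and the `./workload` command are hypothetical and exist only for illustration. The numactl options `--cpunodebind`, `--membind`, `--preferred` and `--interleave` are the real ones.

```shell
#!/bin/bash
# Build (and optionally run) a numactl command line for a placement policy.
# numa_launch and DRY_RUN are hypothetical names used for this sketch.
numa_launch() {
    local policy="$1" nodes="$2"
    shift 2
    case "$policy" in
        # Strict: CPUs and memory both restricted to the given node(s).
        bind)       set -- numactl --cpunodebind="$nodes" --membind="$nodes" "$@" ;;
        # Soft: prefer one node for memory, fall back to others if it is full.
        preferred)  set -- numactl --cpunodebind="$nodes" --preferred="$nodes" "$@" ;;
        # Round-robin allocations across the given nodes ("all" is accepted).
        interleave) set -- numactl --interleave="$nodes" "$@" ;;
        *) echo "unknown policy: $policy" >&2; return 2 ;;
    esac
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"      # only show what would be executed
    else
        "$@"
    fi
}

# Example: print the command that would prefer node 1 for a placeholder task.
DRY_RUN=1 numa_launch preferred 1 ./workload
```

Printing the command first makes it easy to review the binding before a long-running workload is started with it.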

Further improvements can be made to access latencies by binding a workload to a single Core Complex (CCX) within a node. Since L3 caches are not shared between CCXs, binding a workload to a CCX avoids L3 cache misses caused by workload migration. However, it should be carefully considered whether binding on a per-CCD basis to share memory bandwidth between multiple processes is preferred.

Find examples below on how taskset and numactl can be used to start commands bound to different CPUs depending on the topology.

    # Run a command bound to CPU 1
    epyc:~ # taskset -c 1 [command]

    # Run a command bound to CPUs belonging to node 0
    epyc:~ # taskset -c `cat /sys/devices/system/node/node0/cpulist` [command]

    # Run a command bound to CPUs belonging to nodes 0 and 1
    epyc:~ # numactl --cpunodebind=0,1 [command]

    # Run a command bound to CPUs that share L3 cache with cpu 1
    epyc:~ # taskset -c `cat /sys/devices/system/cpu/cpu1/cache/index3/shared_cpu_list` [command]

4.1 Tuning for Local Access Without Binding

The ability to use local memory where possible and remote memory if necessary is valuable. But there are cases where it is imperative that local memory always be used. If this is the case, the first priority is to bind the task to that node. If that is not possible then the command sysctl vm.zone_reclaim_mode=1 can be used to aggressively reclaim memory if local memory is not available.

While this option is good from a locality perspective, it can incur high costs because of stalls related to reclaim and the possibility that data from the task will be reclaimed. Treat this option with a high degree of caution and testing.

4.2 Hazards with CPU Binding

There are three major hazards to consider with CPU binding.

The first is to watch for remote memory nodes being used where the process is not allowed to run on CPUs local to that node. Going into more detail here is outside the scope of this paper. However, the most common scenario is an IO-bound thread communicating with a kernel IO thread on a remote node bound to the IO controller whose accesses are never local.

While tasks may be bound to CPUs, the resources they are accessing such as network or storage devices may not have interrupts routed locally. irqbalance generally makes good decisions. But in cases where network or IO is extremely high performance or the application has very low latency requirements, it may be necessary to disable irqbalance using systemctl. When that is done, the IRQs for the target device need to be routed manually to CPUs local to the target workload for optimal performance.
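A minimal sketch of the manual routing step follows. It assumes that the device name appears in the last column of /proc/interrupts, which is common but not guaranteed for every driver. The helper name `irqs_for_device` and the device pattern `nvme0` are illustrative assumptions. The commands that change system state are shown as comments because they require root and depend on the machine.

```shell
#!/bin/bash
# List the IRQ numbers whose last /proc/interrupts column matches a pattern.
# irqs_for_device is a hypothetical helper; the optional second argument
# allows a saved copy of /proc/interrupts to be inspected instead.
irqs_for_device() {
    local pattern="$1"
    local file="${2:-/proc/interrupts}"
    awk -v pat="$pattern" '
        # Numeric IRQ rows look like " 24:  <per-CPU counts>  <type>  <name>".
        $1 ~ /^[0-9]+:$/ && $NF ~ pat { sub(":", "", $1); print $1 }
    ' "$file"
}

# Example: show IRQs belonging to a device matching "nvme0", if any.
if [ -r /proc/interrupts ]; then
    irqs_for_device nvme0
fi

# Routing sketch (root required, not executed here):
#   systemctl stop irqbalance
#   systemctl disable irqbalance
#   for irq in $(irqs_for_device nvme0); do
#       cat /sys/devices/system/node/node0/cpulist > /proc/irq/$irq/smp_affinity_list
#   done
```

Some drivers use kernel-managed interrupt affinity, in which case writes to `smp_affinity_list` are rejected and the existing assignment should be checked rather than changed.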

The second is that guides about CPU binding tend to focus on binding to a single CPU. This is not always optimal when the task communicates with other threads, as fixed bindings potentially miss an opportunity for the processes to use idle cores sharing an L1 or L2 cache. This is particularly true when dispatching IO, be it to disk or a network interface where a task may benefit from being able to migrate close to the related threads but also applies to pipeline-based communicating threads for a computational workload. Hence, focus initially on binding to CPUs sharing L3 cache. Then consider whether to bind based on a L1/L2 cache or a single CPU using the primary metric of the workload to establish whether the tuning is appropriate.

The final hazard is similar: if many tasks are bound to a smaller set of CPUs, then the subset of CPUs could be over-saturated even though the machine overall has spare capacity.

4.3 cpusets and Memory Control Groups

cpusets are ideal when multiple workloads must be isolated on a machine in a predictable fashion. cpusets allow a machine to be partitioned into subsets. These sets may overlap, and in that case they suffer from similar problems as CPU affinities. In the event there is no overlap, they can be switched to “exclusive” mode which treats them completely in isolation with relatively little overhead. Similarly, they are well suited when a primary workload must be protected from interference because of low-priority tasks in which case the low priority tasks can be placed in a cpuset. The caveat with cpusets is that the overhead is higher than using scheduler and memory policies. Ordinarily, the accounting code for cpusets is completely disabled. But when a single cpuset is created, there is a second layer of checks against scheduler and memory policies.

Similarly, memcg can be used to limit the amount of memory that can be used by a set of processes. When the limits are exceeded, the memory will be reclaimed by tasks within memcg directly without interfering with any other tasks. This is ideal for ensuring there is no interference between two or more sets of tasks. Similar to cpusets, there is some management overhead incurred. This means if tasks can simply be isolated on a NUMA boundary then this is preferred from a performance perspective. The major hazard is that, if the limits are exceeded, then the processes directly stall to reclaim the memory which can incur significant latencies.

Without memcg, when memory gets low, the global reclaim daemon does work in the background and if it reclaims quickly enough, no stalls are incurred. When using memcg, observe the allocstall counter in /proc/vmstat as this can detect early if stalling is a problem.
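A small sketch for watching this counter is shown below. It assumes that the kernel may expose either a single `allocstall` entry or several per-zone entries such as `allocstall_normal`, so it sums every line whose name starts with `allocstall`. The function name `sum_allocstall` is hypothetical.

```shell
#!/bin/bash
# Sum all allocstall* counters from /proc/vmstat (or from a saved copy).
# sum_allocstall is a hypothetical helper name used for this sketch.
sum_allocstall() {
    local file="${1:-/proc/vmstat}"
    awk '$1 ~ /^allocstall/ { total += $2 } END { print total + 0 }' "$file"
}

# Example: print the current total. The value is cumulative since boot, so
# compare two readings taken some time apart to see whether it is growing.
if [ -r /proc/vmstat ]; then
    echo "allocstall total: $(sum_allocstall)"
fi
```

A total that keeps increasing while the workload runs suggests that tasks are stalling in direct reclaim.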

5 High-performance Storage Devices and Interrupt Affinity

High-performance storage devices like Non-Volatile Memory Express (NVMe) or Serial Attached SCSI (SAS) controllers are designed to take advantage of parallel IO submission. These devices typically support a large number of submit and receive queues, which are tied to MSI-X interrupts. Ideally, these devices should provide as many MSI-X vectors as there are CPUs in the system. To achieve the best performance, each MSI-X vector should be assigned to an individual CPU.

6 Automatic NUMA Balancing

Automatic NUMA Balancing will ignore any task that uses memory policies. If the workloads can be manually optimized with policies, then do so and disable automatic NUMA balancing by specifying numa_balancing=disable on the kernel command line or via sysctl. There are many cases where it is impractical or impossible to specify policies in which case the balancing should be sufficient for throughput-sensitive workloads. For latency-sensitive workloads, the sampling for NUMA balancing may be too high in which case it may be necessary to disable balancing. The final corner case where NUMA balancing is a hazard is a case where the number of runnable tasks always exceeds the number of CPUs in a single node. In this case, the load balancer (and potentially affine wakes) will constantly pull tasks away from the preferred node as identified by automatic NUMA balancing resulting in excessive sampling and CPU migrations.

7 Evaluating Workloads

The first and foremost step when evaluating how a workload should be tuned is to establish a primary metric such as latency, throughput or elapsed time. When each tuning step is considered or applied, it is critical that the primary metric be examined before conducting any further analysis to avoid intensive focus on the wrong bottleneck. Make sure that the metric is measured multiple times to ensure that the result is reproducible and reliable within reasonable boundaries. When that is established, analyze how the workload is using different system resources to determine what area should be the focus. The focus in this paper is on how CPU and memory are used. But other evaluations may need to consider the IO subsystem, network subsystem, system call interfaces, external libraries etc. The methodologies that can be employed to conduct this are outside the scope of the paper but the book “Systems Performance: Enterprise and the Cloud” by Brendan Gregg (see http://www.brendangregg.com/sysperfbook.html) is a recommended primer on the subject.

7.1 CPU Utilization and Saturation

Decisions on whether to bind a workload to a subset of CPUs require that the CPU utilization and any saturation risk are known. Both the ps and pidstat commands can be used to sample the number of threads in a system. Typically pidstat yields more useful information with the important exception of the run state. A system may have many threads but if they are idle then they are not contributing to utilization. The mpstat command can report the utilization of each CPU in the system.

High utilization of a small subset of CPUs may be indicative of a single-threaded workload that is pushing the CPU to the limits and may indicate a bottleneck. Conversely, low utilization may indicate a task that is not CPU-bound, is idling frequently or is migrating excessively. While each workload is different, load utilization of CPUs may show a workload that can run on a subset of CPUs to reduce latencies because of either migrations or remote accesses. When utilization is high, it is important to determine if the system could be saturated. The vmstat tool reports the number of runnable tasks waiting for CPU in the “r” column where any value over 1 indicates that wakeup latencies may be incurred. While the exact wakeup latency can be calculated using trace points, knowing that there are tasks queued is an important step. If a system is saturated, it may be possible to tune the workload to use fewer threads.
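The run-queue check described above can be sketched as a small filter over vmstat output. The function name `runq_over` is hypothetical, and the default threshold of 1 simply mirrors the rule of thumb in the text. Another threshold can be passed as the first argument.

```shell
#!/bin/bash
# Read vmstat output on stdin and report samples whose "r" column (the first
# field of each data row) exceeds a threshold. runq_over is a hypothetical
# helper name; header lines are skipped because their first field is not a
# number.
runq_over() {
    local threshold="${1:-1}"
    awk -v t="$threshold" '
        $1 ~ /^[0-9]+$/ {
            n++
            if ($1 + 0 > t + 0) print "sample " n ": r=" $1
        }'
}

# Example (not executed here because it samples for several seconds):
#   vmstat 1 10 | runq_over 1
```

The first data row printed by vmstat reports averages since boot, so it is usually ignored when judging current saturation.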

Overall, the initial intent should be to use CPUs from as few NUMA nodes as possible to reduce access latency but there are exceptions. The AMD EPYC 7002 Series Processor has an exceptional number of high-speed memory channels to main memory. Thus consider the workload thread activity. If they are co-operating threads or sharing data, isolate them on as few nodes as possible to minimize cross-node memory accesses. If the threads are completely independent with no shared data, it may be best to isolate them on a subset of CPUs from each node. This is to maximize the number of available memory channels and throughput to main memory. For some computational workloads, it may be possible to use hybrid models such as MPI for parallelization across nodes and using OpenMP for threads within nodes.

7.2 Transparent Huge Pages

Huge pages are a mechanism by which performance can be improved. This is done by reducing the number of page faults, reducing the cost of translating virtual addresses to physical addresses because of fewer layers in the page table, and being able to cache translations for a larger portion of memory. Transparent Huge Pages (THP) is supported for private anonymous memory and automatically backs such mappings with huge pages, where anonymous memory could be allocated as heap, via malloc(), mmap(MAP_ANONYMOUS), etc. While the feature has existed for a long time, it has evolved significantly.

Many tuning guides recommend disabling THP because of problems with early implementations. Specifically, when the machine was running for long enough, the use of THP could incur severe latencies and could aggressively reclaim memory in certain circumstances. These problems had been resolved by the time SUSE Linux Enterprise Server 15 SP1 was released. This means there are no good grounds for automatically disabling THP because of severe latency issues without measuring the impact. However, there are exceptions that are worth considering for specific workloads.

Some high-end in-memory databases and other applications aggressively use mprotect() to ensure that unprivileged data is never leaked. If these protections are at the base page granularity then there may be many THP splits and rebuilds that incur overhead. Whether this is a potential problem can be identified by using strace to detect the frequency and granularity of the system call. If the calls are high frequency then consider disabling THP. It can also sometimes be inferred from observing the thp_split and thp_collapse_alloc counters in /proc/vmstat.

Workloads that sparsely address large mappings may have a higher memory footprint when using THP. This could result in premature reclaim or fallback to remote nodes. An example would be HPC workloads operating on large sparse matrices. If memory usage is much higher than expected then compare memory usage with and without THP to decide whether the trade-off is worthwhile. This may be critical on the AMD EPYC 7002 Series Processor given that any spillover will congest the Infinity links and potentially cause cores to run at a lower frequency.

This is specific to sparsely addressed memory. A secondary hint for this case may be that the application primarily uses large mappings with a much higher Virtual Size (VSZ, see Section 7.4, “Memory Utilization and Saturation”) than Resident Set Size (RSS). Applications which densely address memory benefit from the use of THP by achieving greater bandwidth to memory.

Parallelized workloads that operate on shared buffers with threads using more CPUs than are on a single node may experience a slowdown with THP if the granularity of partitioning is not aligned to the huge page. The problem is that if a large shared buffer is partitioned on a 4K boundary then false sharing may occur whereby one thread accesses a huge page locally and other threads access it remotely. If this situation is encountered, it is preferable that the granularity of sharing is increased to the THP size. But if that is not possible then disabling THP is an option.

Applications that are extremely latency sensitive or must always perform in a deterministic fashion can be hindered by THP. While there are fewer faults, the time for each fault is higher as memory must be allocated and cleared before being visible. The increase in fault times may be in the microsecond granularity. Ensure this is a relevant problem as it typically only applies to extremely latency-sensitive applications. The secondary problem is that a kernel daemon periodically scans a process looking for contiguous regions that can be backed by huge pages. When creating a huge page, there is a window during which that memory cannot be accessed by the application and new mappings cannot be created until the operation is complete. This can be identified as a problem with thread-intensive applications that frequently allocate memory. In this case consider effectively disabling khugepaged by setting a large value in /sys/kernel/mm/transparent_hugepage/khugepaged/alloc_sleep_millisecs. This will still allow THP to be used opportunistically while avoiding stalls when calling malloc() or mmap().

THP can be disabled. To do so, specify transparent_hugepage=never on the kernel command line, write never to /sys/kernel/mm/transparent_hugepage/enabled at runtime, or disable it on a per-process basis by using a wrapper to execute the workload that calls prctl(PR_SET_THP_DISABLE).
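Before changing the setting, it helps to know the current mode. The sysfs file lists the available modes and marks the active one with square brackets, for example `always [madvise] never`. The sketch below extracts the bracketed value. The function name `thp_mode` is hypothetical.

```shell
#!/bin/bash
# Print the active THP mode, i.e. the word inside [brackets] in the sysfs
# file. thp_mode is a hypothetical helper; the optional argument allows a
# different file to be parsed.
thp_mode() {
    local file="${1:-/sys/kernel/mm/transparent_hugepage/enabled}"
    sed -n 's/.*\[\([a-z]*\)\].*/\1/p' "$file"
}

# Example: report the current mode if the kernel exposes the file.
if [ -r /sys/kernel/mm/transparent_hugepage/enabled ]; then
    echo "THP mode: $(thp_mode)"
fi

# Changing the mode at runtime requires root (not executed here):
#   echo never > /sys/kernel/mm/transparent_hugepage/enabled
```

Recording the mode alongside each benchmark run makes it easier to attribute a change in the primary metric to THP rather than to another tuning step.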

7.3 User/Kernel Footprint

Assuming an application is mostly CPU or memory bound, it is useful to determine if the footprint is primarily in user space or kernel space. This gives a hint where tuning should be focused. The percentage of CPU time can be measured in a coarse-grained fashion using vmstat or in a fine-grained fashion using mpstat. If an application is mostly spending time in user space then the focus should be on tuning the application itself. If the application is spending time in the kernel then it should be determined which subsystem dominates. The strace or perf trace commands can measure the type, frequency and duration of system calls as they are the primary reasons an application spends time within the kernel. In some cases, an application may be tuned or modified to reduce the frequency and duration of system calls. In other cases, a profile is required to identify which portions of the kernel are most relevant as a target for tuning.

7.4 Memory Utilization and Saturation

The traditional means of measuring memory utilization of a workload is to examine the Virtual Size (VSZ) and Resident Set Size (RSS) using either the ps or pidstat tool. This is a reasonable first step but is potentially misleading when shared memory is used and multiple processes are examined. VSZ is simply a measure of memory space reservation and is not necessarily used. RSS may be double accounted if it is a shared segment between multiple processes. The file /proc/pid/maps can be used to identify all segments used and whether they are private or shared. The file /proc/pid/smaps yields more detailed information including the Proportional Set Size (PSS). PSS is an estimate of RSS except it is divided between the number of processes mapping that segment which can give a more accurate estimate of utilization. Note that the smaps file is very expensive to read and should not be monitored at a high frequency. Finally, the Working Set Size (WSS) is the amount of active memory required to complete computations during an arbitrary phase of a program's execution. It is not a value that can be trivially measured. But conceptually it is useful as the interaction between WSS relative to available memory affects memory residency and page fault rates.
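As a rough sketch, the PSS of a process can be obtained by summing the `Pss:` lines of its smaps file. The function name `pss_kb` is hypothetical. The argument is the path to an smaps file, for example /proc/1234/smaps where 1234 is a placeholder PID.

```shell
#!/bin/bash
# Sum the "Pss:" entries (reported in kB) of one smaps file.
# pss_kb is a hypothetical helper name; it defaults to the calling process.
pss_kb() {
    local smaps="${1:-/proc/self/smaps}"
    # Match the field exactly so that related entries with longer names are
    # not counted twice.
    awk '$1 == "Pss:" { total += $2 } END { print total + 0 }' "$smaps"
}

# Example: PSS of the shell running this script, if smaps is readable.
if [ -r "/proc/$$/smaps" ]; then
    echo "PSS of PID $$: $(pss_kb "/proc/$$/smaps") kB"
fi
```

Because reading smaps is expensive, a helper like this is better suited to occasional snapshots than to a tight monitoring loop.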

On NUMA systems, the first saturation point is a node overflow when the “local” policy is in effect. Given no binding of memory, when a node is filled, a remote node’s memory will be used transparently and background reclaim will take place on the local node. Two consequences of this are that remote access penalties will be incurred and old memory from the local node will be reclaimed. If the WSS of the application exceeds the size of a local node then paging and refaults may be incurred.

The first thing to identify is that a remote node overflow occurred which is accounted for in /proc/vmstat as the numa_hit, numa_miss, numa_foreign, numa_interleave, numa_local and numa_other counters:

numa_hit is incremented when an allocation uses the preferred node where preferred may be either a local node or one specified by a memory policy.

numa_miss is incremented when an alternative node is used to satisfy an allocation.

numa_foreign is accounted against the node that was preferred but could not satisfy the allocation. It is a subtle distinction from numa_miss that is rarely useful.

numa_interleave is incremented when an interleave policy was used to select allowed nodes in a round-robin fashion.

numa_local increments when a local node is used for an allocation regardless of policy.

numa_other is used when a remote node is used for an allocation regardless of policy.

For the local memory policy, the numa_hit and numa_miss counters are the most important to pay attention to. An application that is allocating memory and starts incrementing the numa_miss counter implies that the first level of saturation has been reached. If monitoring /proc/vmstat directly is undesirable then the numastat tool provides the same information. If this is observed on the AMD EPYC 7002 Series Processor, it may be valuable to bind the application to nodes that represent dies on a single socket. If the ratio of hits to misses is close to 1, consider an evaluation of the interleave policy to avoid unnecessary reclaim.
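The relationship between the two counters can be summarized with a short sketch that reports the percentage of allocations that missed the preferred node. The function name `numa_miss_pct` is hypothetical and the result is truncated to a whole number.

```shell
#!/bin/bash
# Report numa_miss as an integer percentage of (numa_hit + numa_miss).
# numa_miss_pct is a hypothetical helper; the optional argument allows a
# saved copy of /proc/vmstat to be analyzed.
numa_miss_pct() {
    local file="${1:-/proc/vmstat}"
    awk '
        $1 == "numa_hit"  { hit = $2 }
        $1 == "numa_miss" { miss = $2 }
        END {
            total = hit + miss
            if (total > 0) printf "%d\n", int(100 * miss / total)
            else print 0
        }' "$file"
}

# Example: system-wide figure since boot, if the counters are available.
if [ -r /proc/vmstat ]; then
    echo "numa_miss share: $(numa_miss_pct)%"
fi
```

The counters are cumulative since boot, so for a specific workload it is more meaningful to compare readings taken before and after the run.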

These NUMA statistics only apply at the time a physical page is allocated and are not related to the reference behavior of the workload. For example, if a task running on node 0 allocates memory local to node 0 then it will be accounted for as a numa_hit in the statistics. However, if the memory is shared with a task running on node 1, all the accesses may be remote, which is a miss from the perspective of the hardware but not accounted for in /proc/vmstat. Detecting remote and local accesses at a hardware level requires using the hardware's Performance Monitoring Unit.

When the first saturation point is reached then reclaim will be active. This can be observed by monitoring the pgscan_kswapd and pgsteal_kswapd /proc/vmstat counters. If this is matched with an increase in major faults or minor faults then it may be indicative of severe thrashing. In this case the interleave policy should be considered. An ideal tuning option is to identify if shared memory is the source of the usage. If this is the case, then interleave the shared memory segments. This can be done in some circumstances using numactl or by modifying the application directly.

More severe saturation is observed if the pgscan_direct and pgsteal_direct counters are also increasing as these indicate that the application is stalling while memory is being reclaimed. If the application was bound to individual nodes, increasing the number of available nodes will alleviate the pressure. If the application is unbound, it indicates that the WSS of the workload exceeds all available memory. It can only be alleviated by tuning the application to use less memory or increasing the amount of RAM available.
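Since these counters are cumulative, what matters is how quickly they grow. The sketch below compares two saved copies of /proc/vmstat and prints the growth of every counter whose name starts with pgscan_kswapd, pgsteal_kswapd, pgscan_direct or pgsteal_direct. The function name `reclaim_delta` and the snapshot file names are hypothetical.

```shell
#!/bin/bash
# Print the growth of the reclaim counters between two /proc/vmstat
# snapshots. reclaim_delta is a hypothetical helper name.
reclaim_delta() {
    local before="$1" after="$2"
    awk '
        # First file: remember the starting value of each reclaim counter.
        NR == FNR {
            if ($1 ~ /^pg(scan|steal)_(kswapd|direct)/) old[$1] = $2
            next
        }
        # Second file: print the difference for counters seen earlier.
        ($1 in old) { print $1, $2 - old[$1] }
    ' "$before" "$after"
}

# Example (not executed here because it waits between the snapshots):
#   cat /proc/vmstat > /tmp/vmstat.before
#   sleep 10
#   cat /proc/vmstat > /tmp/vmstat.after
#   reclaim_delta /tmp/vmstat.before /tmp/vmstat.after
```

Growth only in the kswapd counters points to background reclaim, while growth in the direct counters indicates that the application itself is stalling.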

As before, whether to use memory nodes from one socket or two sockets depends on the application. If the individual processes are independent then either socket can be used. But where possible, keep communicating processes on the same socket to maximize memory throughput while minimizing the socket interconnect traffic.

7.5 Other Resources

The analysis of other resources is outside the scope of this paper. However, a common scenario is that an application is IO-bound. A superficial check can be made using the vmstat tool. Check what percentage of CPU time is spent idle, combined with the number of processes that are blocked and the values in the bi and bo columns. Further analysis is required to determine if an application is IO rather than CPU or memory bound. But this is a sufficient check to start with.

8 Power Management

Modern CPUs balance power consumption and performance through Performance States (P-States). Low utilization workloads may use lower P-States to conserve power while still achieving acceptable performance. When a CPU is idle, lower power idle states (C-States) can be selected to further conserve power. However this comes with higher exit latencies when lower power states are selected. It is further complicated by the fact that, if individual cores are idle and running at low power, the additional power can be used to boost the performance of active cores. This means this scenario is not a straight-forward balance between power consumption and performance. More complexity is added on the AMD EPYC 7002 Series Processor whereby spare power may be used to boost either cores or the Infinity links.

The 2nd Generation AMD EPYC Processor provides SenseMI which, among other capabilities, enables CPUs to make adjustments to voltage and frequency depending on the historical state of the CPU. There is a latency penalty when switching P-States, but the AMD EPYC 7002 Series Processor is capable of making fine-grained adjustments to reduce the likelihood that the latency is a bottleneck. On SUSE Linux Enterprise Server, the AMD EPYC 7002 Series Processor uses the acpi_cpufreq driver. This allows P-States to be configured to match requested performance. However, this is limited in terms of the full capabilities of the hardware. It cannot boost the frequency beyond the maximum stated frequencies, and if a target is specified, then the highest frequency below the target will be used. A special case is if the governor is set to performance. In this situation the hardware will use the highest available frequency in an attempt to work quickly and then return to idle.

What should be determined is whether power management is likely to be a factor for a workload. A workload that is limited to a subset of active CPUs and nodes will have high enough utilization that power management will not be active on those cores, and no action is required. Hence, with CPU binding, the issue of power management may be side-stepped.

Secondly, a workload that does not communicate heavily with other processes and is mostly CPU-bound will also not experience any side effects because of power management.

The workloads that are most likely to be affected are those that synchronously communicate between multiple threads or those that idle frequently and have low CPU utilization overall. It will be further compounded if the threads are sensitive to wakeup latency. However, there are secondary effects if a workload must complete quickly but the CPU is running at a low frequency.

The P-State and C-State of each CPU can be examined using the turbostat utility. The computer output below shows an example where a workload is busy on CPU 1 and the other CPUs are idle. A useful exercise is to start a workload and monitor the output of turbostat, paying close attention to CPUs that have moderate utilization and are running at a lower frequency. If the workload is latency-sensitive, it is grounds for either minimizing the number of CPUs available to the workload or configuring power management.

Package Core CPU Avg_MHz Busy%  Bzy_MHz TSC_MHz IRQ   POLL C1    C2   POLL% C1%  C2%
-       -    -   197     6.29   3132    1997    25214 4    48557 3055 0.00  0.06 93.68
0       0    0   15      0.82   1859    1997    74    0    0     68   0.00  0.00 99.23
0       0    128 0       0.01   1781    1997    10    0    0     10   0.00  0.00 100.03
0       1    1   3137    100.00 3137    1997    1345  0    0     0    0.00  0.00 0.00
0       1    129 0       0.01   3131    1997    10    0    1     9    0.00  0.00 100.01
0       2    2   0       0.01   1781    1997    9     0    0     9    0.00  0.00 100.02
0       2    130 0       0.01   1781    1997    9     0    0     9    0.00  0.00 100.02
0       3    3   0       0.01   1780    1997    9     0    0     9    0.00  0.00 100.02
0       3    131 0       0.01   1780    1997    9     0    0     9    0.00  0.00 100.02
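The monitoring exercise described above can be partly automated. The following Python sketch parses turbostat output such as the excerpt above and lists CPUs with moderate utilization that run at a lower frequency. The function names and all thresholds are assumptions made for illustration, and "lower frequency" is taken here to mean below 90% of the highest Bzy_MHz observed in the same sample.

```python
# First rows of the turbostat excerpt shown in this section.
SAMPLE = """\
Package Core CPU Avg_MHz Busy% Bzy_MHz TSC_MHz IRQ POLL C1 C2 POLL% C1% C2%
- - - 197 6.29 3132 1997 25214 4 48557 3055 0.00 0.06 93.68
0 0 0 15 0.82 1859 1997 74 0 0 68 0.00 0.00 99.23
0 0 128 0 0.01 1781 1997 10 0 0 10 0.00 0.00 100.03
0 1 1 3137 100.00 3137 1997 1345 0 0 0 0.00 0.00 0.00
0 1 129 0 0.01 3131 1997 10 0 1 9 0.00 0.00 100.01
"""


def parse_turbostat(text):
    """Return one dict per CPU row, skipping the system-wide summary row."""
    lines = text.strip().splitlines()
    header = lines[0].split()
    rows = []
    for line in lines[1:]:
        fields = line.split()
        if len(fields) != len(header):
            continue  # ignore malformed or truncated rows
        row = dict(zip(header, fields))
        if row["CPU"] == "-":
            continue  # the "-" row aggregates all CPUs
        rows.append(row)
    return rows


def moderately_busy_low_freq(rows, busy_min=5.0, busy_max=80.0, freq_ratio=0.9):
    """List CPUs whose Busy% is within [busy_min, busy_max] and whose Bzy_MHz
    is below freq_ratio times the highest Bzy_MHz seen (assumed thresholds)."""
    if not rows:
        return []
    top = max(float(r["Bzy_MHz"]) for r in rows)
    return [
        int(r["CPU"]) for r in rows
        if busy_min <= float(r["Busy%"]) <= busy_max
        and float(r["Bzy_MHz"]) < freq_ratio * top
    ]
```

With the default thresholds, nothing in the excerpt is reported because every CPU is either fully busy or almost idle. Lowering busy_min makes the check more sensitive, which may be useful for workloads that wake up only briefly.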

If it is determined that tuning CPU frequency management is appropriate, the management policy can be set to performance using the cpupower utility:

epyc:~# cpupower frequency-set -g performance
Setting cpu: 0
Setting cpu: 1
Setting cpu: 2
...

Persisting it across reboots can be done via a local init script, via udev or via a one-shot systemd service file if it is deemed to be necessary. Note that turbostat will still show that idling CPUs use a low frequency. The impact of the policy is that the highest P-State will be used as soon as possible when the CPU is active. In some cases, a latency bottleneck will occur because of a CPU exiting idle. If this is identified on the AMD EPYC 7002 Series Processor, restrict the C-state by specifying processor.max_cstate=2 on the kernel command line. This will prevent CPUs from entering C-states deeper than C2. It is expected on the AMD EPYC 7002 Series Processor that the exit latency from C1 is very low. But by allowing C2, it reduces interference from the idle loop injecting micro-operations into the pipeline and should be the best state overall. It is also possible to set the maximum idle state on individual cores using cpupower idle-set. If SMT is enabled, the idle state should be set on both siblings.
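After changing the governor, or after a reboot when the setting is meant to persist, it can be useful to confirm which governor each CPU is using. The sketch below reads the standard cpufreq sysfs files. The function names are made up for this example, and the code assumes the usual /sys/devices/system/cpu layout.

```python
from pathlib import Path


def read_governors(sysfs_cpu_root="/sys/devices/system/cpu"):
    """Map each CPU number to the content of its scaling_governor file."""
    governors = {}
    pattern = "cpu[0-9]*/cpufreq/scaling_governor"
    for gov_file in Path(sysfs_cpu_root).glob(pattern):
        # gov_file is <root>/cpuN/cpufreq/scaling_governor; extract N.
        cpu = int(gov_file.parent.parent.name[len("cpu"):])
        governors[cpu] = gov_file.read_text().strip()
    return governors


def cpus_not_using(governors, wanted="performance"):
    """Return the sorted CPU numbers whose governor differs from `wanted`."""
    return sorted(cpu for cpu, gov in governors.items() if gov != wanted)
```

An empty result from cpus_not_using(read_governors()) suggests that the policy is in effect on every CPU that exposes a cpufreq directory.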

9 Security Mitigations #

On occasion, a security fix is applied to a distribution that has a performance impact. The most recent notable examples are Meltdown and two variants of Spectre. The AMD EPYC 7002 Series Processor is immune to the Meltdown variant. Page table isolation is never active. However, it is vulnerable to both Spectre variants. The following table lists all security vulnerabilities that affect the 2nd Generation AMD EPYC Processor and which mitigations are enabled by default for SUSE Linux Enterprise Server 15 SP1.

| Vulnerability | Affected | Mitigations |
|---|---|---|
| L1TF | No | N/A |
| MDS | No | N/A |
| Meltdown | No | N/A |
| Speculative Store Bypass | Yes | prctl and seccomp |
| Spectre v1 | Yes | usercopy/swapgs barriers and __user pointer sanitization |
| Spectre v2 | Yes | retpoline, RSB filling, and conditional IBPB, IBRS_FW, and STIBP |

If it can be guaranteed that the server is in a trusted environment running only known code that is not malicious, the mitigations=off parameter can be specified on the kernel command line. This option disables all security mitigations and might improve performance, but the gains for the AMD EPYC 7002 Series Processor will only be marginal when compared against other CPUs.
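The kernel reports the state of each mitigation under /sys/devices/system/cpu/vulnerabilities, which makes it possible to check a running system against the table above. The following Python sketch reads those files and groups them by the leading keyword of each status string. The function names and the grouping into three buckets are assumptions made for this example.

```python
from pathlib import Path


def read_vulnerabilities(root="/sys/devices/system/cpu/vulnerabilities"):
    """Return {vulnerability name: status string} as reported by the kernel."""
    base = Path(root)
    if not base.is_dir():
        return {}  # older kernel or a non-Linux system
    return {
        f.name: f.read_text().strip()
        for f in sorted(base.iterdir())
        if f.is_file()
    }


def summarize(status):
    """Group vulnerability names by the leading keyword of their status."""
    groups = {"not_affected": [], "mitigated": [], "needs_review": []}
    for name, text in status.items():
        if text.startswith("Not affected"):
            groups["not_affected"].append(name)
        elif text.startswith("Mitigation"):
            groups["mitigated"].append(name)
        else:
            # "Vulnerable" and anything unexpected ends up here.
            groups["needs_review"].append(name)
    return groups
```

On a host booted with mitigations=off, the entries for vulnerabilities that affect the CPU would be expected to move into the needs_review group.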

10 Hardware-based Profiling #

The AMD EPYC 7002 Series Processor has extensive Performance Monitoring Unit (PMU) capabilities, and advanced monitoring of a workload can be conducted via oprofile or perf. Both commands support a range of hardware events including cycles, L1 cache access/misses, TLB access/misses, retired branch instructions and mispredicted branches. To identify what subsystem may be worth tuning in the OS, the most useful invocation is perf record -a -e cycles sleep 30 to capture 30 seconds of data for the entire system. You can also call perf record -e cycles command to gather a profile of a given workload. Specific information on the OS can be gathered through tracepoints or creating probe points with perf or trace-cmd. But the details on how to conduct such analysis are beyond the scope of this paper.

11 Candidate Workloads #

The workloads that will benefit most from the 2nd Generation AMD EPYC Processor architecture are those that can be parallelized and are either memory-bound or IO-bound. This is particularly true for workloads that are “NUMA friendly”. They can be parallelized, and each thread can operate independently for the majority of the workload's lifetime. For memory-bound workloads, the primary benefit will be taking advantage of the high bandwidth available on each channel. For IO-bound workloads, the primary benefit will be realized when there are multiple storage devices, each of which is connected to the node local to a task issuing IO.

11.1 Test Setup #

The following sections will demonstrate how an OpenMP and MPI workload can be configured and tuned on an AMD EPYC 7002 Series Processor reference platform. The system has two AMD EPYC 7702P processors, each with 64 cores and SMT enabled for a total of 128 cores (256 logical CPUs). Half the memory banks are populated with DDR4 2666 MHz RDIMMs, with a theoretical maximum transfer speed of 21.3 GB/sec each. With single ranking, the peak memory transfer speed is 341 GB/sec. Note that the peak theoretical transfer speed is rarely reached in practice given that it can be affected by the mix of reads/writes and the location and temporal proximity of memory locations accessed.
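The bandwidth figures quoted above can be reproduced with a short calculation. The sketch below assumes a 64-bit (8-byte) data bus per DDR4 channel, a nominal rate of 2666.67 million transfers per second, and 16 populated channels (8 per socket on 2 sockets, one DIMM per channel). The variable and function names are chosen for this example only.

```python
def channel_bandwidth_gb_s(transfers_per_sec, bus_width_bytes=8):
    """Theoretical peak bandwidth of one memory channel in GB/sec."""
    return transfers_per_sec * bus_width_bytes / 1e9


# DDR4-2666 performs nominally 2666.67 million transfers per second.
per_channel = channel_bandwidth_gb_s(2666.67e6)

# Assumption: 2 sockets x 8 channels, each populated with a single DIMM.
channels = 2 * 8
total = per_channel * channels

print(f"per channel: {per_channel:.1f} GB/sec")  # about 21.3 GB/sec
print(f"system peak: {total:.0f} GB/sec")        # about 341 GB/sec
```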

| Parameter | Value |
|---|---|
| CPU | 2x AMD EPYC 7702P |
| Platform | AMD Engineering Sample Platform |
| Drive | Micron 1100 SSD |
| OS | SUSE Linux Enterprise Server 15 SP1 |
| Memory Interleaving | Channel |
| Memory Speed | 2667MHz (single rank) |
| Kernel command line | |

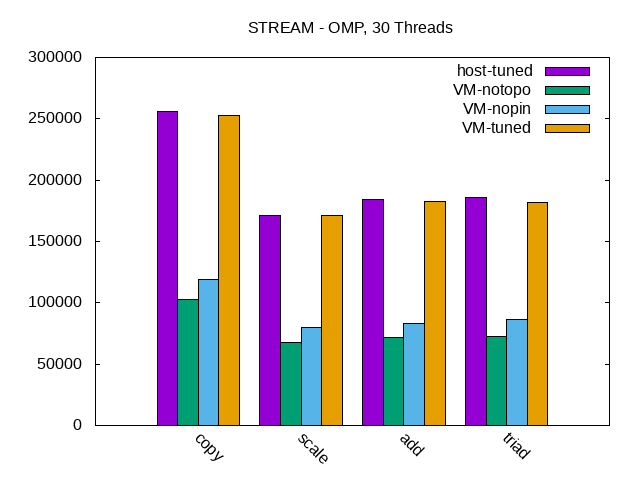

11.2 Test workload: STREAM #

STREAM is a memory bandwidth benchmark created by Dr. John D. McCalpin from the University of Virginia (for more information, see https://www.cs.virginia.edu/stream/). It can be used to measure bandwidth of each cache level and bandwidth to main memory while calculating four basic vector operations. Each operation can exhibit different throughputs to main memory depending on the locations and type of access.

The benchmark was configured to run both single-threaded and parallelized with OpenMP to take advantage of multiple memory controllers. The number of array elements for the benchmark was set to 268,435,456 at compile time so that each array was 2048 MB in size, for a total memory footprint of approximately 6144 MB. The size was selected in line with the recommendation from STREAM that the array sizes be at least 4 times the total size of L3 cache available in the system. An array-size offset was used so that the separate arrays for each parallelized thread would not share a Transparent Huge Page. The reason for that is that NUMA balancing may choose to migrate shared pages, leading to some distortion of the results.
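The array sizing can be checked with simple arithmetic. The sketch below assumes that STREAM uses 8-byte double-precision elements and three arrays, which are the benchmark defaults, and that the test system has 32 L3 caches of 16 MB each. The L3 figures are an assumption based on the Zen 2 design and are not stated in the test setup.

```python
ELEMENTS = 268_435_456     # array size set at compile time
BYTES_PER_ELEMENT = 8      # assumption: STREAM default element type (double)
ARRAYS = 3                 # assumption: STREAM operates on arrays a, b and c
MIB = 2 ** 20

array_mib = ELEMENTS * BYTES_PER_ELEMENT // MIB
total_mib = array_mib * ARRAYS

# Assumption: 32 L3 caches of 16 MB each across both sockets.
total_l3_mib = 32 * 16
meets_guideline = array_mib >= 4 * total_l3_mib

print(f"each array: {array_mib} MB, footprint: {total_mib} MB")
print(f"array is at least 4x total L3 ({total_l3_mib} MB): {meets_guideline}")
```

Under these assumptions each array is exactly 4 times the total L3 size, so the chosen element count is the smallest power of two that satisfies the STREAM recommendation on this system.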

| Parameter | Value |
|---|---|
| Compiler | gcc (SUSE Linux Enterprise) 7.4.1 |
| Compiler flags | -Ofast -march=znver1 -mcmodel=medium -DOFFSET=512 |
| OpenMP compiler flag | -fopenmp |
| OpenMP environment variables | OMP_PROC_BIND=SPREAD OMP_NUM_THREADS=32 |

The -march=znver1 option is a reflection of the compiler available in SLE 15 SP1. From gcc 9.2, full architectural support via -march=znver2 will be available. The number of OpenMP threads was selected to have at least one thread running for every memory channel by having one thread per L3 cache available. The OMP_PROC_BIND parameter spreads the threads such that one thread is bound to each available dedicated L3 cache to maximize available bandwidth. This can be verified using trace-cmd, as illustrated below with slight editing for formatting and clarity.

epyc:~ # trace-cmd record -e sched:sched_migrate_task ./stream
epyc:~ # trace-cmd report
...
stream-47249 x: sched_migrate_task: comm=stream pid=7250 prio=120 orig_cpu=0 dest_cpu=4
stream-47249 x: sched_migrate_task: comm=stream pid=7251 prio=120 orig_cpu=0 dest_cpu=8
stream-47249 x: sched_migrate_task: comm=stream pid=7252 prio=120 orig_cpu=0 dest_cpu=12
stream-47249 x: sched_migrate_task: comm=stream pid=7253 prio=120 orig_cpu=0 dest_cpu=16
stream-47249 x: sched_migrate_task: comm=stream pid=7254 prio=120 orig_cpu=0 dest_cpu=20
stream-47249 x: sched_migrate_task: comm=stream pid=7255 prio=120 orig_cpu=0 dest_cpu=24
stream-47249 x: sched_migrate_task: comm=stream pid=7256 prio=120 orig_cpu=0 dest_cpu=28
stream-47249 x: sched_migrate_task: comm=stream pid=7257 prio=120 orig_cpu=0 dest_cpu=32
stream-47249 x: sched_migrate_task: comm=stream pid=7258 prio=120 orig_cpu=0 dest_cpu=36
stream-47249 x: sched_migrate_task: comm=stream pid=7259 prio=120 orig_cpu=0 dest_cpu=40
stream-47249 x: sched_migrate_task: comm=stream pid=7260 prio=120 orig_cpu=0 dest_cpu=44
stream-47249 x: sched_migrate_task: comm=stream pid=7261 prio=120 orig_cpu=0 dest_cpu=48
stream-47249 x: sched_migrate_task: comm=stream pid=7262 prio=120 orig_cpu=0 dest_cpu=52
stream-47249 x: sched_migrate_task: comm=stream pid=7263 prio=120 orig_cpu=0 dest_cpu=56
stream-47249 x: sched_migrate_task: comm=stream pid=7264 prio=120 orig_cpu=0 dest_cpu=60
stream-47249 x: sched_migrate_task: comm=stream pid=7265 prio=120 orig_cpu=0 dest_cpu=64
stream-47249 x: sched_migrate_task: comm=stream pid=7266 prio=120 orig_cpu=0 dest_cpu=68
stream-47249 x: sched_migrate_task: comm=stream pid=7267 prio=120 orig_cpu=0 dest_cpu=72
stream-47249 x: sched_migrate_task: comm=stream pid=7268 prio=120 orig_cpu=0 dest_cpu=76
stream-47249 x: sched_migrate_task: comm=stream pid=7269 prio=120 orig_cpu=0 dest_cpu=80
stream-47249 x: sched_migrate_task: comm=stream pid=7270 prio=120 orig_cpu=0 dest_cpu=84
stream-47249 x: sched_migrate_task: comm=stream pid=7271 prio=120 orig_cpu=0 dest_cpu=88
stream-47249 x: sched_migrate_task: comm=stream pid=7272 prio=120 orig_cpu=0 dest_cpu=92
stream-47249 x: sched_migrate_task: comm=stream pid=7273 prio=120 orig_cpu=0 dest_cpu=96
stream-47249 x: sched_migrate_task: comm=stream pid=7274 prio=120 orig_cpu=0 dest_cpu=100
stream-47249 x: sched_migrate_task: comm=stream pid=7275 prio=120 orig_cpu=0 dest_cpu=104
stream-47249 x: sched_migrate_task: comm=stream pid=7276 prio=120 orig_cpu=0 dest_cpu=108
stream-47249 x: sched_migrate_task: comm=stream pid=7277 prio=120 orig_cpu=0 dest_cpu=112
stream-47249 x: sched_migrate_task: comm=stream pid=7278 prio=120 orig_cpu=0 dest_cpu=116
stream-47249 x: sched_migrate_task: comm=stream pid=7279 prio=120 orig_cpu=0 dest_cpu=120
stream-47249 x: sched_migrate_task: comm=stream pid=7280 prio=120 orig_cpu=0 dest_cpu=124

Several options were considered for the test system that were unnecessary for STREAM running on the AMD EPYC 7002 Series Processor but may be useful in other situations. STREAM performance can be limited if a load/store instruction stalls to fetch data. CPUs may automatically prefetch data based on historical behavior, but it is not guaranteed. In limited cases, depending on the CPU and workload, this may be addressed by specifying -fprefetch-loop-arrays and, depending on whether the workload is store-intensive, -mprefetchwt1. In the test system using AMD EPYC 7002 Series Processors, explicit prefetching did not help and was omitted. This is because an explicitly scheduled prefetch may disable a CPU's predictive algorithms and degrade performance.

Similarly, for some workloads branch mispredictions can be a major problem, and in some cases branch mispredictions can be offset against I-Cache pressure by specifying -funroll-loops. In the case of STREAM on the test system, the CPU accurately predicted the branches, rendering the unrolling of loops unnecessary.

For math-intensive workloads it can be beneficial to link the application with -lmvec, depending on the application. In the case of STREAM, the workload did not use significant math-based operations and so this option was not used. Some styles of code blocks and loops can also be optimized to use vectored operations by specifying -ftree-vectorize and explicitly adding support for CPU features such as -mavx2. STREAM does not benefit from either, as its operations are very basic. But such options should be considered on an application-by-application basis and when building support libraries such as numerical libraries.

In all cases, experimentation is recommended but caution advised, particularly when considering options like prefetch that may have been advisable on much older CPUs or completely different workloads. Such options are not universally beneficial or always suitable for modern CPUs such as the AMD EPYC 7002 Series Processors.

In the case of STREAM running on the AMD EPYC 7002 Series Processor, it was sufficient to enable -Ofast. This includes the -O3 optimizations to enable vectorization. In addition, it gives some leeway for optimizations that increase the code size with additional options for fast path that may not be standards-compliant.

For OpenMP, the SPREAD option was used to spread the load across L3 caches. Spreading across memory controllers may have been more optimal, but that information is not trivially discoverable. OpenMP has a variety of different placement options if manually tuning placement, but careful attention should be paid to OMP_PLACES given the importance of the L3 cache topology in AMD EPYC 7002 Series Processors. At the time of writing, it is not possible to specify l3cache as a place similar to what MPI has. In this case, the topology will have to be examined either with library support such as hwloc, directly via sysfs, or manually. While it is possible to guess via the CPUID, it is not recommended as CPUs may be offlined or the enumeration may change. An example specification of places based on L3 cache for the test system is:

{0:4,128:4}, {4:4,132:4}, {8:4,136:4}, {12:4,140:4}, {16:4,144:4}, {20:4,148:4},
{24:4,152:4}, {28:4,156:4}, {32:4,160:4}, {36:4,164:4}, {40:4,168:4}, {44:4,172:4},
{48:4,176:4}, {52:4,180:4}, {56:4,184:4}, {60:4,188:4}, {64:4,192:4}, {68:4,196:4},
{72:4,200:4}, {76:4,204:4}, {80:4,208:4}, {84:4,212:4}, {88:4,216:4}, {92:4,220:4},
{96:4,224:4}, {100:4,228:4}, {104:4,232:4}, {108:4,236:4}, {112:4,240:4},
{116:4,244:4}, {120:4,248:4}, {124:4,252:4}

Figure 3, “STREAM Bandwidth, Single Threaded and Parallelized” shows the reported bandwidth for the single and parallelized case. The single-threaded bandwidth for the basic Copy vector operation on a single core was 34.2 GB/sec. This is higher than the theoretical maximum of a single DIMM, but the IO die may interleave accesses. Channels are interleaved in this configuration. This has been recommended as the best balance for a variety of workloads but limits the absolute maximum of a specialized benchmark like STREAM. The total throughput for each parallel operation ranged from 173 GB/sec to 256 GB/sec depending on the type of operation and how efficiently memory bandwidth was used.
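A places specification based on L3 cache, like the one listed earlier in this section, can be derived from sysfs rather than guessed from the CPU enumeration. The Python sketch below reads the shared_cpu_list file of every level 3 cache and converts each unique list into OpenMP interval notation. The function names are made up for this example, and the code assumes the usual /sys/devices/system/cpu/cpuN/cache/indexM layout.

```python
from pathlib import Path


def read_l3_shared_lists(root="/sys/devices/system/cpu"):
    """Collect the shared_cpu_list of every level 3 cache seen by any CPU."""
    lists = []
    for level_file in Path(root).glob("cpu[0-9]*/cache/index[0-9]*/level"):
        if level_file.read_text().strip() == "3":
            shared = level_file.parent / "shared_cpu_list"
            lists.append(shared.read_text())
    return lists


def range_to_interval(part):
    """Convert a sysfs range such as "0-3" (or a single CPU such as "7")
    into the OpenMP "start:length" interval notation."""
    first, _, last = part.partition("-")
    start = int(first)
    end = int(last) if last else start
    return f"{start}:{end - start + 1}"


def places_from_shared_lists(shared_lists):
    """Build an OMP_PLACES value with one place per unique L3 cache."""
    unique = sorted(
        {s.strip() for s in shared_lists if s.strip()},
        key=lambda s: int(s.split(",")[0].split("-")[0]),
    )
    return ",".join(
        "{" + ",".join(range_to_interval(p) for p in s.split(",")) + "}"
        for s in unique
    )
```

Calling places_from_shared_lists(read_l3_shared_lists()) on the target machine yields a string that can be exported as OMP_PLACES. Because the result is built from the CPUs that are currently listed as sharing each cache, offlined CPUs are left out automatically.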

Higher STREAM scores can be reported by reducing the array sizes so that cache is partially used, with the maximum score requiring that each thread's memory footprint fits inside the L1 cache. Additionally, it is possible to achieve results closer to the theoretical maximum by manual optimization of the STREAM benchmark using vectored instructions and explicit scheduling of loads and stores. The purpose of this configuration was to illustrate the impact of properly binding a workload that can be fully parallelized with data-independent threads.

11.3 Test Workload: NASA Parallel Benchmark #

NASA Parallel Benchmark (NPB) is a small set of compute-intensive kernels designed to evaluate the performance of supercomputers. They are small compute kernels derived from Computational Fluid Dynamics (CFD) applications. The problem size can be adjusted for different memory sizes. Reference implementations exist for both MPI and OpenMP. This setup will focus on the MPI reference implementation.

While each application behaves differently, one common characteristic is that the workload is very context-switch intensive, barriers frequently yield the CPU to other tasks and the lifetime of individual processes can be very short-lived. The following paragraphs detail the tuning selected for this workload.

The most basic step is setting the CPU governor to “performance”, although it is not mandatory. This can address issues with short-lived or mobile tasks failing to run long enough for a higher P-State to be selected even though the workload is very throughput sensitive. The migration cost parameter is set to reduce how frequently the load balancer will move an individual task. The minimum granularity is adjusted to reduce over-scheduling effects.

Depending on the computational kernel used, the workload may require a power-of-two number or a square number of processes to be used. However, note that using all available CPUs can mean that the application can contend with itself for CPU time. Furthermore, as IO may be issued to shared memory backed by disk, there are system threads that also need CPU time. Finally, if there is CPU contention, MPI tasks can be stalled waiting on an available CPU and OpenMPI may yield tasks prematurely if it detects there are more MPI tasks than CPUs available. These factors should be carefully considered when tuning for parallelized workloads in general and MPI workloads in particular.

In the specific case of testing NPB on the System Under Test, there was usually limited advantage to limiting the number of CPUs used. The Embarrassingly Parallel (EP) load in particular benefits from using all available CPUs. Hence, the default configuration used all available CPUs (256), which is both a power-of-two and a square number of CPUs, because it was a sensible starting point. However, this is not universally true. Using perf, it was found that some workloads are memory-bound and do not benefit from a high degree of parallelization. In addition, for the final configuration, some workloads were parallelized to have one task per L3 cache in the system to maximize cache usage. The exception was the Scalar Pentadiagonal (SP) workload, which was both memory-bound and benefited from using all available CPUs. Also, note that the System Under Test used a pre-production CPU, that not all memory channels were populated and that the memory speed was not the maximum speed supported by the platform. Hence, the optimal choices for this test may not be universally true for all AMD EPYC platforms. This highlights that there is no universally good choice for optimizing a workload for a platform and that experimentation and validation of tuning parameters is vital.
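The power-of-two and square-number constraints mentioned above are easy to check when choosing process counts. The following Python sketch classifies the selective process counts listed in the configuration table of this section. It only classifies the numbers and makes no claim about which constraint each computational kernel imposes. The helper names are made up for this example.

```python
import math


def is_power_of_two(n):
    """True if n is a positive integer power of two (1, 2, 4, 8, ...)."""
    return n > 0 and n & (n - 1) == 0


def is_square(n):
    """True if n is a perfect square (0, 1, 4, 9, ...)."""
    return n >= 0 and math.isqrt(n) ** 2 == n


# Selective process counts taken from the configuration table.
SELECTIVE = {"bt": 256, "sp": 256, "mg": 32, "lu": 121, "ep": 256, "cg": 32}

for kernel, count in SELECTIVE.items():
    print(f"{kernel}: {count:3d} "
          f"power-of-two={is_power_of_two(count)} square={is_square(count)}")
```

This confirms that 256 satisfies both constraints, while 121 is only a square number and 32 is only a power of two.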

The basic compilation flags simply turned on all safe optimizations. The tuned flags used -Ofast which can be unsafe for some mathematical workloads but generated the correct output for NPB. The other flags used the optimal instructions available on the distribution compiler and vectorized some operations.

As NPB uses shared files, an XFS partition was used for the temporary files. It is, however, only used for mapping shared files and is not a critical path for the benchmark and no IO tuning is necessary. In some cases with MPI applications, it will be possible to use a tmpfs partition for OpenMPI. This avoids unnecessary IO assuming the increased physical memory usage does not cause the application to be paged out.

| Parameter | Value |
|---|---|
| Compiler | gcc (SUSE Linux) 7.4.1 20190424, mpif77, mpicc |
| OpenMPI | openmpi2-2.1.6-3.3.x86_64 |
| Default compiler flags | -m64 -O3 -mcmodel=large |
| Default number of processes | 256 |
| Selective number of processes | bt=256 sp=256 mg=32 lu=121 ep=256 cg=32 |
| Tuned compiler flags | -Ofast -march=znver1 -mtune=znver1 -ftree-vectorize |
| CPU governor performance | cpupower frequency-set -g performance |
| Scheduler parameters | sysctl -w kernel.sched_migration_cost_ns=5000000; sysctl -w kernel.sched_min_granularity_ns=10000000 |
| mpirun parameters | -mca btl ^openib,udapl -np 64 --bind-to l3cache:overload-allowed |
| mpirun environment | TMPDIR=/xfs-data-partition |

Figure 4, “NAS MPI Results” shows the time, as reported by the benchmark, for each of the kernels to complete.

The baseline test used all available CPUs, basic compilation options and the performance governor. The second test, tuned, used tuned compilation options, scheduler tuning and bound tasks to L3 caches, gaining between 0.9% and 17.54% performance on average. The final test, selective, used process counts that either used all CPUs, avoided heavy overloading or limited processes to one per L3 cache, showing overall gains between 0.9% and 21.6% depending on the computational kernel. Despite the binding, automatic NUMA balancing was not tuned as the majority of accesses were local to the NUMA domain and the overhead of NUMA balancing was negligible.

In some cases, it will be necessary to compile an application that can run on different CPUs. In such cases, -march=znver1 may not be suitable if it generates binaries that are incompatible with other vendors. Instead, it is possible to specify ISA-specific options that are cross-compatible with many x86-based CPUs, such as -mavx2, -mfma, -msse2 or -msse4a, while favoring optimal code generation for AMD EPYC 7002 Series Processors with -mtune=znver1. This can be used to strike a balance between excellent performance on a single CPU and great performance on multiple CPUs.

12 Using AMD EPYC 7002 Series Processors for Virtualization #

Running Virtual Machines (VMs) has some aspects in common with running “regular” tasks on a host Operating System. Therefore, most of the tuning advice described so far in this document is valid and applicable to this section too.

However, virtualization workloads pose their own specific challenges. Some special considerations are needed to arrive at a better tailored and more effective set of tuning advice for cases where a server is used only as a virtualization host. This is especially relevant for large systems, such as those based on AMD EPYC 7002 Series Processors.

This is because:

VMs typically run longer, and consume more memory, than most other “regular” OS processes;

VMs can be configured to behave either as NUMA-aware or non NUMA-aware “workloads”.

In fact, VMs often run for hours, days, or even months, without being terminated or restarted. Therefore, it is almost never acceptable to pay the price of suboptimal resource partitioning and allocation, even when there is the expectation that things will be better next time. For example, it is always desirable that vCPUs run close to the memory that they are accessing. For reasonably big NUMA aware VMs, this happens only with proper mapping of the virtual NUMA nodes of the guest to physical NUMA nodes on the host. For smaller, NUMA-unaware VMs, that means allocating all their memory on the smallest possible number of host NUMA nodes, and making their vCPUs run on the pCPUs of those nodes as much as possible.

Also, poor mapping of virtual machine resources (virtual CPUs and memory, but also IO) on the host topology induces performance degradation to everything that runs inside the virtual machine, and potentially even to other components of the system.

Regarding NUMA-awareness, a VM is said to be NUMA-aware if a (virtual) NUMA topology is defined and exposed to the VM itself and if the OS that the VM runs (guest OS) is also NUMA-aware. Conversely, a VM is called NUMA-unaware if either no (virtual) NUMA topology is exposed or the guest OS is not NUMA-aware.

VMs that are large enough (in terms of amount of memory and number of virtual CPUs) to span multiple host NUMA nodes, benefit from being configured as NUMA aware VMs. However, even for small and NUMA-unaware VMs, wise placement of their memory on the host nodes, and effective mapping of their virtual CPUs (vCPUs) on the host physical CPUs (pCPUs) is key for achieving good and consistent performance.

This second half of the present document focuses on tuning a system where CPU and memory intensive workloads run inside VMs. We will explain how to configure and tune both the host and the VMs so that performance comparable to that of the host can be achieved.

Both the Kernel-based Virtual Machine (KVM) and the Xen-Project hypervisor (Xen), as available in SUSE Linux Enterprise Server 15 SP1, provide mechanisms to enact this kind of resource partitioning and allocation.

KVM only supports one type of VM – a fully hardware-based virtual machine (HVM). Under Xen, VMs can be paravirtualized (PV) or hardware virtualized machines (HVM).

Xen HVM guests with paravirtualized interfaces enabled (called PVHVM, or HVM) are very similar to KVM VMs, which are based on hardware virtualization but also employ paravirtualized IO (VirtIO). In this document, we always refer to Xen VMs of the (PV)HVM type.

13 Resources Allocation and Tuning of the Host #

No details are given here about how to install and configure a system so that it becomes a suitable virtualization host. For such instructions, refer to the SUSE documentation at SUSE Linux Enterprise Server 15 SP1 Virtualization Guide: Installation of Virtualization Components.

The same applies to configuring things such as networking and storage, either for the host or for the VMs. For such instructions, refer to suitable chapters of OS and virtualization documentation and manuals. As an example, to know how to assign network interfaces (or ports) to one or more VMs, for improved network throughput, refer to SUSE Linux Enterprise Server 15 SP1 Virtualization Guide: Assigning a Host PCI Device to a VM Guest.

13.1 Allocating Resources to the Host OS #

Even if the main purpose of a server is “limited” to running VMs, some activities will be carried out on the host OS. In fact, in both Xen and KVM, the host OS is at least responsible for helping with the IO of the VMs. It is, therefore, good practise to make sure that the host OS has some resources (namely, CPUs and memory) exclusively assigned to itself.

On KVM, the host OS is the SUSE Linux Enterprise distribution installed on the server, which then loads the hypervisor kernel modules. On Xen, the host OS still is a SUSE Linux Enterprise distribution, but it runs inside what is to all effects a (albeit special) Virtual Machine (called Domain 0, or Dom0).

In the absence of any specific requirements involving host resources, a good rule of thumb suggests that 5 to 10 percent of the physical RAM should be reserved to the host OS. On KVM, increase that quota in case the plan is to run many (for example hundreds) of VMs. On Xen, it is ok to always give dom0 not more than a few gigabytes of memory. This is especially the case when planning to take advantage of disaggregation (see https://wiki.xenproject.org/wiki/Dom0_Disaggregation).

In terms of CPUs, we advise reserving at least one physical core on each NUMA node for the host OS / dom0. This can be relaxed, but the host OS should always get at least one core on all the NUMA nodes that have IO channels attached to them. In fact, host OS activities are mostly related to performing IO for VMs. It is beneficial for performance if the kernel threads that handle devices can run on the node to which the devices themselves are attached. As an example, in the system shown in Figure 2, “AMD EPYC 7002 Series Processor Topology”, this would mean reserving at least one physical core for the host OS on node 0 (node 1 has an IO controller, but no device attached).

System administrators need to be able to reach out and login to the system, to monitor, manage and troubleshoot it. Thus, some more resources should be assigned to the host OS. This permits that management tools (for example, the SSH daemon) can be reached, and that the hypervisor's toolstack (for example, libvirt) can run without too much contention.

13.1.1 Reserving CPUs and Memory for the Host on KVM #

When using KVM, sparing, for example, 2 cores and 12 GB of RAM for the host OS is done by stopping the creation of VMs when the total number of vCPUs of all VMs has reached 254, or when the total cumulative amount of allocated RAM has reached 220 GB.
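The rule above amounts to a simple admission check before each VM is created. The Python sketch below is a hypothetical illustration: the HostBudget class and the can_create_vm function do not belong to any KVM or libvirt API, and only the two limits (254 vCPUs and 220 GB of RAM) are taken from the example in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostBudget:
    """Upper bounds on what all VMs together may consume (from the text)."""
    max_vcpus: int = 254
    max_ram_gb: int = 220


def can_create_vm(existing_vms, new_vm, budget=HostBudget()):
    """Return True if adding new_vm keeps the totals within the budget.

    existing_vms is an iterable of (vcpus, ram_gb) tuples and new_vm is a
    single (vcpus, ram_gb) tuple.
    """
    total_vcpus = sum(vcpus for vcpus, _ in existing_vms) + new_vm[0]
    total_ram = sum(ram for _, ram in existing_vms) + new_vm[1]
    return total_vcpus <= budget.max_vcpus and total_ram <= budget.max_ram_gb
```

For example, with three VMs of 64 vCPUs and 48 GB each already defined, a fourth VM of the same size would be rejected because the vCPU total would reach 256.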

Following all the advice and recommendations provided in this guide (including the ones given later about VM configuration) will automatically make sure that the unused CPUs are available to the host OS. There are other ways to enforce this, for example with cgroups, or by means of the isolcpus boot parameter, but they will not be described in detail in this document.

13.1.2 Reserving CPUs and Memory for the Host on Xen #

When using Xen, dom0 resource allocation needs to be done explicitly at system boot time. For example, assigning 8 physical cores and 12 GB of RAM to it is done by specifying the following additional parameters on the hypervisor boot command line (for example, by editing /etc/default/grub, and then updating the boot loader):

dom0_mem=12288M,max:12288M dom0_max_vcpus=16

The number of vCPUs is 16 because we want Dom0 to have 8 physical cores, and the AMD EPYC 7002 Series Processor has Symmetric MultiThreading (SMT). 12288M memory (that is 12 GB) is specified both as current and as maximum value, to prevent Dom0 from using ballooning (see https://wiki.xenproject.org/wiki/Tuning_Xen_for_Performance#Memory ).
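The arithmetic behind these parameters can be written down as a small helper. The Python sketch below is only an illustration of the calculation explained above: the function name is made up, and the default of two threads per core reflects SMT being enabled.

```python
def dom0_boot_params(physical_cores, mem_gb, threads_per_core=2):
    """Build the Xen boot parameters that reserve CPUs and memory for Dom0.

    The same value is used for the current and the maximum memory so that
    Dom0 does not use ballooning.
    """
    mem_mb = mem_gb * 1024
    vcpus = physical_cores * threads_per_core
    return f"dom0_mem={mem_mb}M,max:{mem_mb}M dom0_max_vcpus={vcpus}"


print(dom0_boot_params(physical_cores=8, mem_gb=12))
```

With SMT disabled, threads_per_core=1 would give dom0_max_vcpus=8 for the same 8 physical cores.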

Making sure that Dom0 vCPUs run on specific pCPUs is not strictly necessary. If wanted, it can be enforced by acting on the Xen scheduler after Dom0 has booted (as there is currently no mechanism to set this up via Xen boot time parameters). If using the xl toolstack, the command is:

xl vcpu-pin 0 <vcpu-ID> <pcpu-list>

Or, with virsh:

virsh vcpupin 0 --vcpu <vcpu-ID> --cpulist <pcpu-list>

Note that virsh vcpupin --config ... is not effective for Dom0.

To limit Dom0 to only a specific (set of) NUMA node(s), the dom0_nodes=<nodeid> boot command line option can be used. This affects both memory and vCPUs: memory for Dom0 is allocated on the specified node(s), and the vCPUs of Dom0 are restricted to run on those same node(s). When Dom0 has booted, it is still possible to use xl vcpu-pin or virsh vcpupin to change where its vCPUs are scheduled, but the memory is never moved from where it was allocated during boot. On AMD EPYC 7002 Series Processors, using this option is not recommended.

13.1.3 Reserving CPUs for the Host under IO Intensive VM Workloads #

Covering the cases where VMs run IO intensive workloads is out of the scope of this guide. As general advice, if the IO done in VMs is important, it may be appropriate to leave to the host OS either one core or one thread for each IO device used by each VM. If this is not possible, for example because it reduces too much the number of CPUs that remain available for running VMs (especially if the goal is to run many of them), then exploring alternative solutions for handling IO devices (such as SR-IOV) is recommended.

13.2 (Transparent) Huge Pages #

For virtualization workloads, rather than using Transparent Huge Pages (THP) on the host, it is recommended that 1 GB huge pages are used for the memory of the VMs. This significantly reduces both the page table management overhead and the level of resource contention that the system faces when VMs update their page tables. Moreover, if the host is entirely devoted to running VMs, Huge Pages are likely not required for host OS processes. In this case, having THP active on the host may even negatively affect performance, as the THP service daemon (which merges “regular” pages to form Huge Pages) can interfere with the execution of the VMs' vCPUs. To disable THP for the host OS in a KVM setup, add the following host kernel command-line option:

transparent_hugepage=never

Or execute this command, at runtime:

echo never > /sys/kernel/mm/transparent_hugepage/enabled

To use 1 GB Huge Pages as backing memory of KVM guests, such pages need to be allocated at the host OS level. It is best to do that by adding the following boot time parameters:

default_hugepagesz=1GB hugepagesz=1GB hugepages=<number of hugepages>

The value <number of hugepages> can be computed by taking the amount of memory we want to devote to VMs, and dividing it by the page size (1 GB). For example, on our host with 256 GB of RAM, creating 200 Huge Pages means we can accommodate up to 200 GB of VMs' RAM, and leave plenty (~50 GB) to the host OS.
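The computation can be sketched as follows. The helper name `hugepage_boot_params` is hypothetical, and the 256 GB host size and the 200 GB target are the figures of the reference host used in this guide.

```python
def hugepage_boot_params(vm_ram_gb, page_size_gb=1):
    """Return the kernel parameters that reserve Huge Pages for the VMs.

    The number of pages is the memory devoted to VMs divided by the
    page size; the amount must be a whole multiple of the page size.
    """
    if vm_ram_gb % page_size_gb != 0:
        raise ValueError("VM memory must be a multiple of the page size")
    pages = vm_ram_gb // page_size_gb
    return (f"default_hugepagesz={page_size_gb}GB "
            f"hugepagesz={page_size_gb}GB hugepages={pages}")


if __name__ == "__main__":
    host_ram_gb = 256   # RAM of the reference host
    vm_ram_gb = 200     # memory we want to devote to VMs
    print(hugepage_boot_params(vm_ram_gb))
    print(f"left to the host OS: {host_ram_gb - vm_ram_gb} GB")
```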

On Xen, none of the above is necessary. In fact, Dom0 is a paravirtualized guest, for which Huge Pages support is not present. On the other hand, for the memory used for the hypervisor and for the memory allocated by the hypervisor for HVM VMs, Huge Pages are always used as much as possible. So no explicit tuning is needed.

13.3 Automatic NUMA Balancing #

On Xen, Automatic NUMA Balancing (NUMAB) in the host OS should be disabled. Dom0 is, currently, a paravirtualized guest without a (virtual) NUMA topology and, thus, NUMAB would be totally useless.

On KVM, NUMAB can be useful in dynamic virtualization scenarios, where VMs are created, destroyed and re-created relatively quickly. Or, in general, it can be useful in cases where it is not possible or desirable to statically partition the host resources and assign them explicitly to VMs. This, however, comes at the price of some latency being introduced. Plus, NUMAB's own operation can interfere with VMs' execution and cause further performance degradation. In any case, this document focuses on achieving the best possible performance for VMs, through specific tuning and static resource allocation, therefore it is recommended to leave NUMAB turned off. This can be done by adding the following parameter to the host kernel command line:

numa_balancing=disable

If anything changes and the system is repurposed to achieve different goals, NUMAB can be enabled on-line with the command:

echo 1 > /proc/sys/kernel/numa_balancing

13.4 Services, Daemons and Power Management #

Service daemons have already been discussed in the first part of the document. Most of the considerations made there apply here as well.

For example, tuned should either not be used, or the profile should be set to one that does not implicitly put the CPUs in polling mode. Both the throughput-performance and virtual-host profiles from SUSE Linux Enterprise Server 15 SP1 are OK, as neither of them touches /dev/cpu_dma_latency.

As far as power management is concerned, the cpufreq governor can either be kept as it is by default, or switched to performance, depending on the nature of the workloads of interest.

On KVM, changing the tuned profile or using cpupower from the host OS has the expected effects on power management, as already described in the first half of the document. On Xen, CPU frequency scaling is enacted by the hypervisor. It can be controlled from within Dom0, but a different tool needs to be used. For example, setting the governor to performance requires the following command:

xenpm set-scaling-governor performance

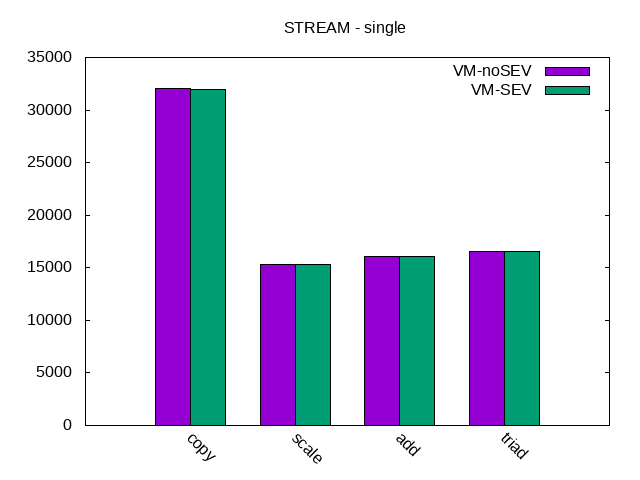

13.5 Secure Encrypted Virtualization #

Secure Encrypted Virtualization (SEV) technology, supported on the 2nd Generation AMD EPYC Processors, allows the memory of the VMs to be encrypted. Enabling it for a VM happens in the VM's own configuration file, but there are preparation steps that need to occur at the host level.

The libvirt documentation contains all the steps required for enabling SEV on a host that supports it. In our case, it was enough to add the following boot parameters to the host kernel command line, in the boot loader:

mem_encrypt=on kvm_amd.sev=1

For further details, refer to libvirt documentation: Enabling SEV on the host.

It should be noted that, at least as far as the workloads analyzed in this document are concerned, enabling SEV on the host has not caused any noticeable performance degradation. In fact, running CPU and memory intensive workloads, both on the host and in VMs, with or without the parameters above added to the host kernel boot command line, produced indistinguishable results. Therefore, enabling SEV at the host level can be considered safe, in the sense that it does not hurt the performance of VMs and workloads that do not enable VM memory encryption. Of course, this applies only when SEV is enabled for the host and is not used for encrypting VMs.

What happens when SEV is used for encrypting VMs' memory will be described later in the guide.

In SUSE Linux Enterprise Server 15 SP1, SEV is not available on Xen.

14 Resources Allocation and Tuning of VMs #

For instructions on how to create an initial VM configuration, start the VM, and install a guest OS, refer to the SUSE documentation at SUSE Linux Enterprise Server 15 SP1 Virtualization Guide: Guest Installation.

From a VM configuration perspective, the two most important factors for achieving top performance on CPU and memory bound workloads are:

partitioning of host resources and placement of the VMs;

enlightenment of the VMs about their virtual topology.

For example, if there are two VMs, each one should be run on one socket, to minimize CPU contention and maximize memory access parallelism. Also, and especially if the VMs are big enough, they should be made aware of their own virtual topology. That way, all the tuning actions described in the first part of this document become applicable to the workloads running inside the VMs too.

14.1 Placement of VMs #

When a VM is created, memory is allocated on the host to act as its virtual RAM. Moving this memory, for example to a different NUMA node from the one where it was first allocated, has (when it is possible at all) a significant performance impact. Therefore, it is of paramount importance that the initial placement of the VMs is as good as possible. Both Xen and KVM can make “educated guesses” on what a good placement might be. For the purposes of this document, however, we are interested in the absolute best possible initial placement of the VMs, taking into account the specific characteristics of AMD EPYC 7002 Series Processor systems. This can only be achieved by doing the initial placement manually. Of course, this comes at the price of reduced flexibility, and is only possible when there is no oversubscription.

Deciding on which pCPUs the vCPUs of a VM run can be done at creation time, but it can also be easily changed during the VM lifecycle. It is, however, still recommended to start the VMs with a good vCPU placement. This is particularly important on Xen, where vCPU placement is what actually drives and controls memory placement.

Placement of VM memory on host NUMA nodes happens by means of the <numatune> XML element:

<numatune>
  <memory mode='strict' nodeset='0-7'/>
  <memnode cellid='0' mode='strict' nodeset='0'/>
  <memnode cellid='1' mode='strict' nodeset='1'/>
  ...
</numatune>

The 'strict' mode guarantees that all the memory for the VM will come only from the (set of) NUMA node(s) specified in nodeset. A cell is, in fact, a virtual NUMA node, with cellid being its ID and nodeset telling exactly from which host physical NUMA node(s) the memory for this virtual node should be allocated. A NUMA-unaware VM can still include this element in its configuration file. It will have only one <memnode> element.
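For VMs with several virtual NUMA nodes, writing the element by hand is error-prone. The following minimal sketch generates it from a mapping of virtual cells to host nodes. The function name `numatune_xml` and the dictionary layout are hypothetical; only the element and attribute names come from the example above.

```python
def numatune_xml(cell_to_node):
    """Generate a <numatune> element with 'strict' memory placement.

    cell_to_node maps each virtual NUMA cell ID to the host NUMA node
    from which the memory of that cell must be allocated.
    """
    host_nodes = sorted(set(cell_to_node.values()))
    nodeset = ",".join(str(node) for node in host_nodes)
    lines = ["<numatune>",
             f"  <memory mode='strict' nodeset='{nodeset}'/>"]
    for cell, node in sorted(cell_to_node.items()):
        lines.append(
            f"  <memnode cellid='{cell}' mode='strict' nodeset='{node}'/>")
    lines.append("</numatune>")
    return "\n".join(lines)


if __name__ == "__main__":
    # Two virtual cells, each backed by the host node with the same ID
    print(numatune_xml({0: 0, 1: 1}))
```

The output is meant to be pasted into the VM configuration (for example, with virsh edit) and reviewed before use.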

Placement of vCPUs happens via the <cputune> element, as in the example below:

<vcpu placement='static'>240</vcpu>
<cputune>
  <vcpupin vcpu='0' cpuset='4'/>
  <vcpupin vcpu='1' cpuset='132'/>
  <vcpupin vcpu='2' cpuset='5'/>
  <vcpupin vcpu='3' cpuset='133'/>
  ...
</cputune>

The value 240 means that the VM will have 240 vCPUs. The static placement guarantees that each vCPU will stay on the pCPU(s) to which it is “pinned”. The various <vcpupin> elements are where the mapping between vCPUs and pCPUs is established (vcpu being the vCPU ID and cpuset being either one or a list of pCPU IDs).

When pinning vCPUs to pCPUs, adjacent vCPU IDs (like vCPU ID 0 and vCPU ID 1) must be assigned to host SMT siblings (like pCPU 4 and pCPU 132, on our test server). In fact, QEMU uses a static SMT sibling CPU ID assignment. This is how we guarantee that virtual SMT siblings will always execute on actual physical SMT siblings.
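The pairing rule can be sketched as a small generator of <vcpupin> elements. The function name `vcpupin_xml` is hypothetical, and the default sibling offset of 128 is an assumption that matches the test server used in this guide (where pCPU 4 and pCPU 132 are SMT siblings). On other hosts, the sibling IDs should be read from the actual topology.

```python
def vcpupin_xml(cores, sibling_offset=128):
    """Pin adjacent vCPU IDs to the two SMT siblings of each host core.

    cores is the ordered list of host core IDs given to the VM.
    sibling_offset is the distance between the pCPU IDs of the two
    threads of a core (128 on the reference host; an assumption elsewhere).
    """
    lines = ["<cputune>"]
    vcpu = 0
    for core in cores:
        # vCPUs 2k and 2k+1 land on the two threads of the same core
        for pcpu in (core, core + sibling_offset):
            lines.append(f"  <vcpupin vcpu='{vcpu}' cpuset='{pcpu}'/>")
            vcpu += 1
    lines.append("</cputune>")
    return "\n".join(lines)


if __name__ == "__main__":
    print(vcpupin_xml([4, 5]))
```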

The following sections give more specific and precise details about placement of vCPUs and memory for VMs of varying sizes, on the reference system for this guide (see Figure 2, “AMD EPYC 7002 Series Processor Topology”).

14.1.1 Placement of Only One Large VM #

An interesting use case is when “only one” VM is used. Reasons for doing something like that include security/isolation, flexibility, high availability, and others. In these cases, typically, the VM is almost as large as the host itself.

Let us, therefore, consider a VM with 240 vCPUs and 200 GB of RAM. Such a VM spans multiple host NUMA nodes and therefore it is recommended to create a virtual NUMA topology for it.

We should create a virtual topology with 2 virtual NUMA nodes (that is, as many as there are physical NUMA nodes), and split the VM’s memory equally among them. The 240 vCPUs are assigned to the 2 nodes, 120 on each. Full cores must always be used. That means, assign vCPUs 0 and 1 to Core P#4, vCPUs 2 and 3 to Core P#5, vCPUs 4 and 5 to Core P#6, etc. To enact this, we must pin vCPUs 0 and 1 to pCPUs 4 and 132; vCPUs 2 and 3 to pCPUs 5 and 133; vCPUs 4 and 5 to pCPUs 6 and 134, etc. And, of course, the two nodes must have the same number of vCPUs.

Cores P#0, P#1, P#2 and P#3 of both the host NUMA nodes (this means one die, which is composed of 8 cores, per node) are reserved for the host. The corresponding PUs are: P#0, P#1, P#2, P#3, P#128, P#129, P#130, P#131, P#64, P#65, P#66, P#67, P#192, P#193, P#194, P#195.
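The split between host and VM described above can be checked with a short sketch. All constants are assumptions taken from the reference host of this guide (2 NUMA nodes, 64 cores per node, SMT siblings 128 PU IDs apart), and the function name `split_pus` is hypothetical.

```python
NODES = 2                     # host NUMA nodes on the reference host
CORES_PER_NODE = 64           # cores per node on the reference host
SIBLING_OFFSET = 128          # PU n and PU n + 128 are SMT siblings
RESERVED_CORES_PER_NODE = 4   # first 4 cores of each node go to the host


def split_pus():
    """Return (reserved_pus, vm_pins).

    reserved_pus is the sorted list of PUs left to the host OS.
    vm_pins[node] lists, per VM core, the pair of pCPUs to pin two
    adjacent vCPUs to.
    """
    reserved, vm_pins = [], []
    for node in range(NODES):
        first = node * CORES_PER_NODE
        cores = range(first, first + CORES_PER_NODE)
        for core in cores[:RESERVED_CORES_PER_NODE]:
            reserved += [core, core + SIBLING_OFFSET]
        vm_pins.append([(core, core + SIBLING_OFFSET)
                        for core in cores[RESERVED_CORES_PER_NODE:]])
    return sorted(reserved), vm_pins


if __name__ == "__main__":
    reserved, vm_pins = split_pus()
    print("host PUs:", reserved)
    for node, pins in enumerate(vm_pins):
        print(f"node {node}: {2 * len(pins)} vCPUs, first pair {pins[0]}")
```

With these assumptions, each node contributes 60 full cores (120 vCPUs) to the VM, for 240 vCPUs in total.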

The VM memory must be split equally among the 2 nodes. At this point, if a suitable virtual topology is also provided to the VM, each of the VM’s vCPUs will access its own memory directly, and use Infinity Fabric inter-socket links only to reach foreign memory, as happens on the host. Workloads running inside the VM can be tuned exactly as if they were running on a bare metal 2nd Generation AMD EPYC Processor server.

The following example numactl output comes from a VM configured as explained: