- I Planning an Installation using Cloud Lifecycle Manager

- 1 Registering SLES

- 2 Hardware and Software Support Matrix

- 3 Recommended Hardware Minimums for the Example Configurations

- 3.1 Recommended Hardware Minimums for an Entry-scale KVM

- 3.2 Recommended Hardware Minimums for an Entry-scale ESX KVM Model

- 3.3 Recommended Hardware Minimums for an Entry-scale ESX, KVM with Dedicated Cluster for Metering, Monitoring, and Logging

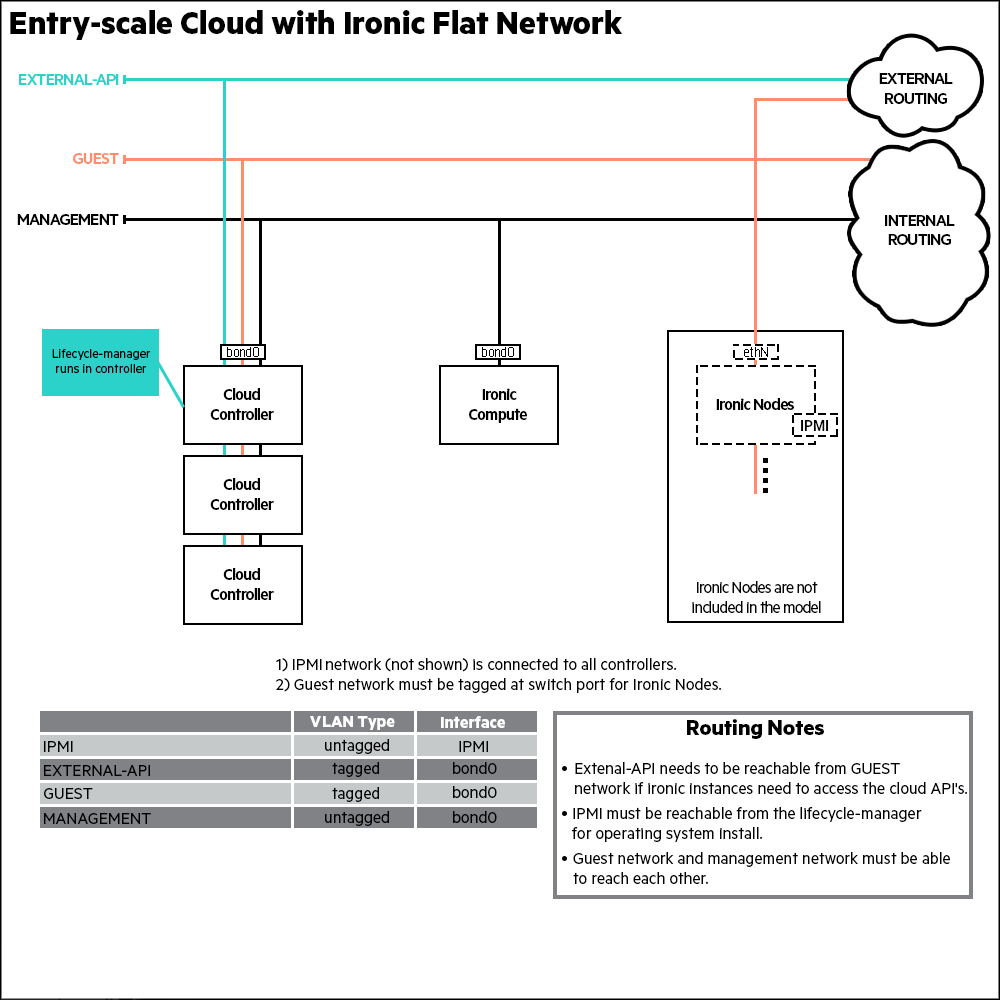

- 3.4 Recommended Hardware Minimums for an Ironic Flat Network Model

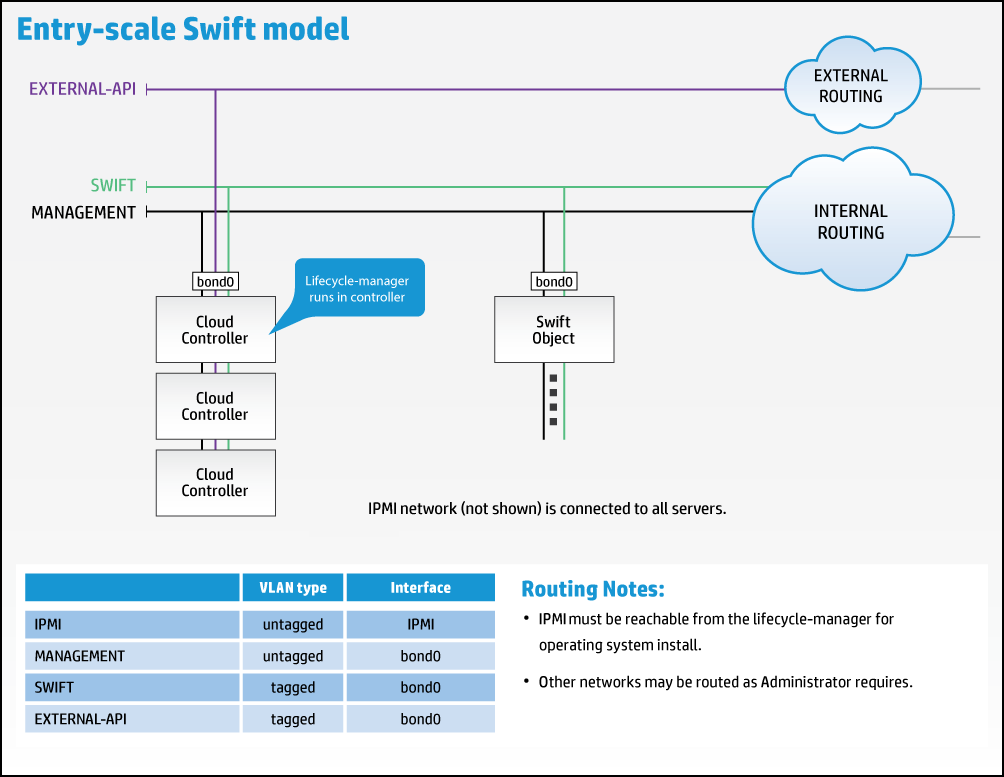

- 3.5 Recommended Hardware Minimums for an Entry-scale Swift Model

- 4 High Availability

- 4.1 High Availability Concepts Overview

- 4.2 Highly Available Cloud Infrastructure

- 4.3 High Availability of Controllers

- 4.4 High Availability Routing - Centralized

- 4.5 Availability Zones

- 4.6 Compute with KVM

- 4.7 Nova Availability Zones

- 4.8 Compute with ESX Hypervisor

- 4.9 cinder Availability Zones

- 4.10 Object Storage with Swift

- 4.11 Highly Available Cloud Applications and Workloads

- 4.12 What is not Highly Available?

- 4.13 More Information

- II Cloud Lifecycle Manager Overview

- 5 Input Model

- 6 Configuration Objects

- 7 Other Topics

- 8 Configuration Processor Information Files

- 8.1 address_info.yml

- 8.2 firewall_info.yml

- 8.3 route_info.yml

- 8.4 server_info.yml

- 8.5 service_info.yml

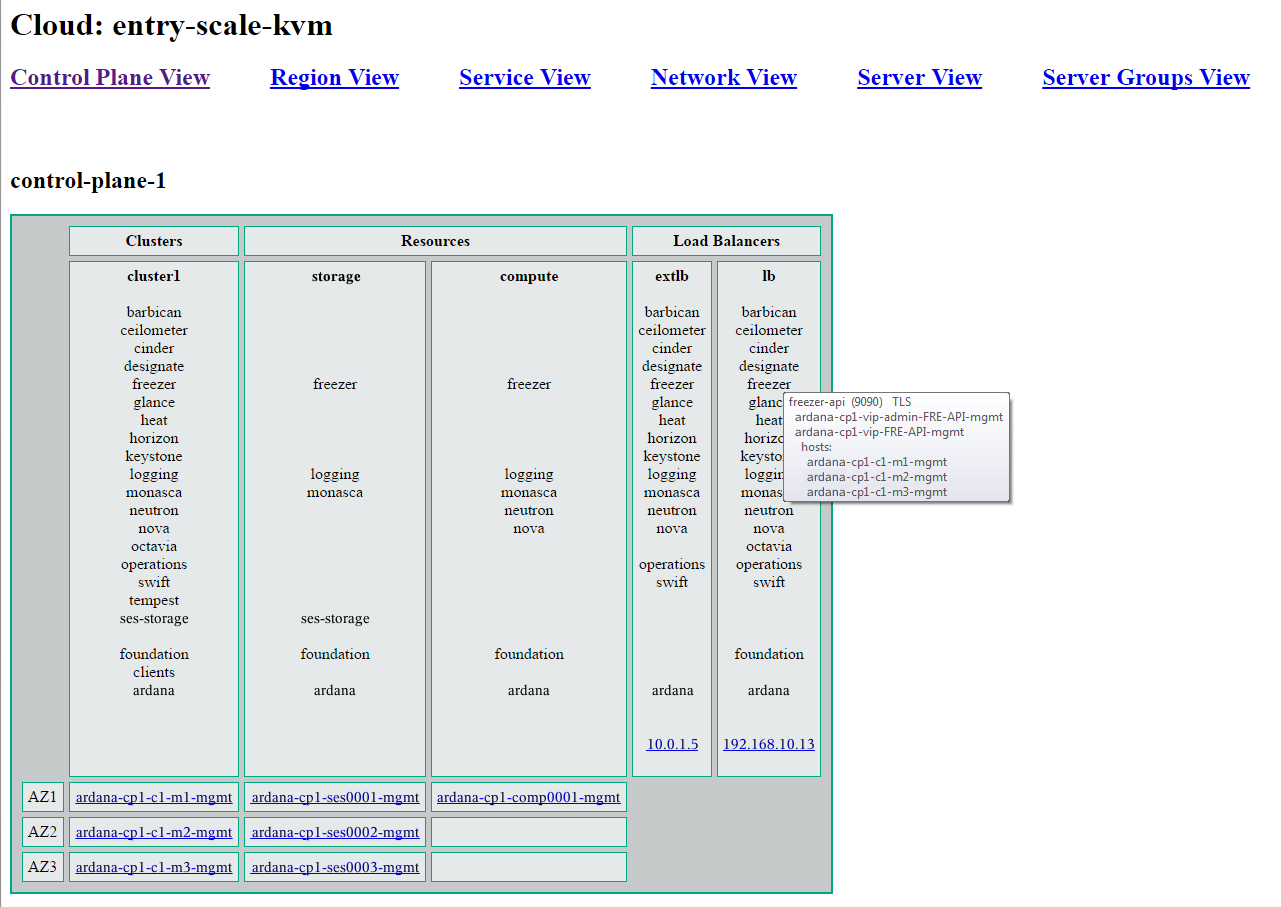

- 8.6 control_plane_topology.yml

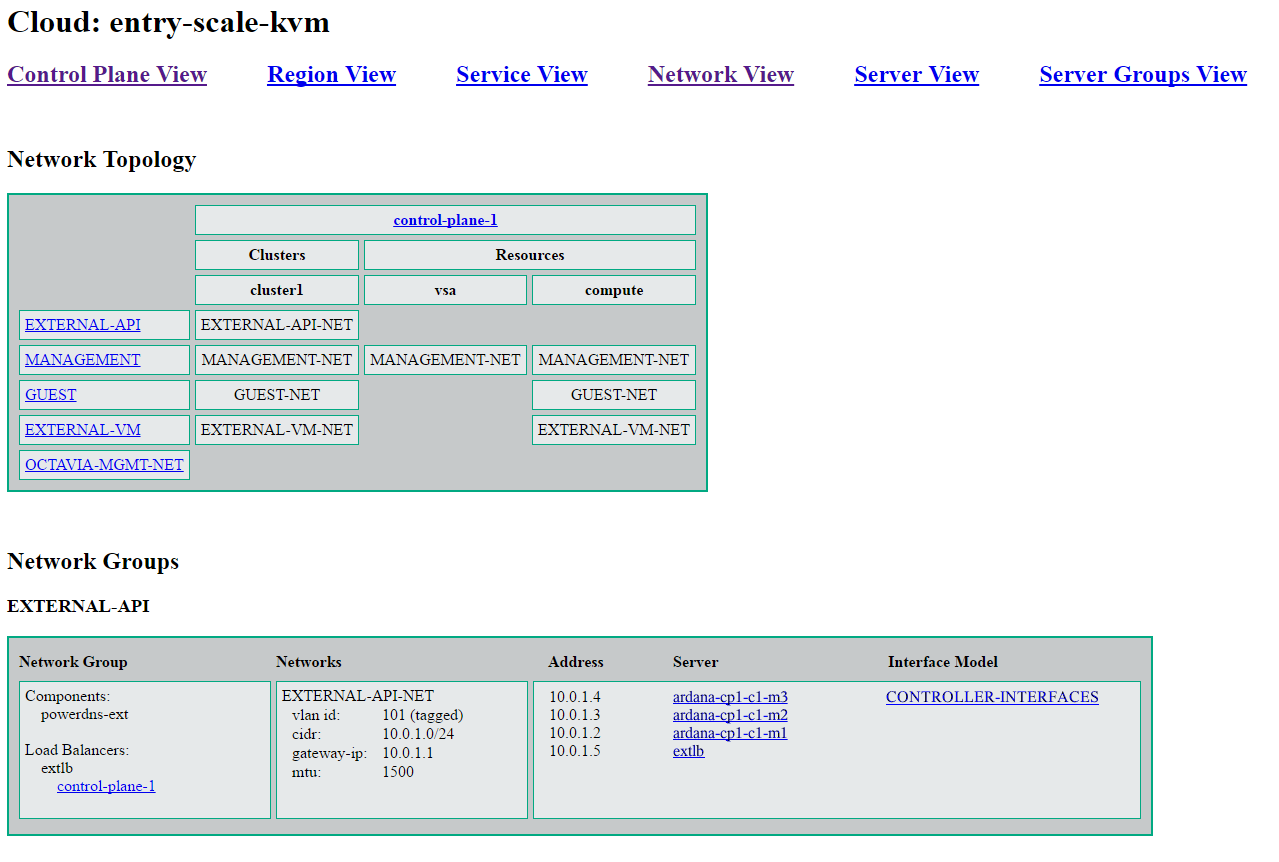

- 8.7 network_topology.yml

- 8.8 region_topology.yml

- 8.9 service_topology.yml

- 8.10 private_data_metadata_ccp.yml

- 8.11 password_change.yml

- 8.12 explain.txt

- 8.13 CloudDiagram.txt

- 8.14 HTML Representation

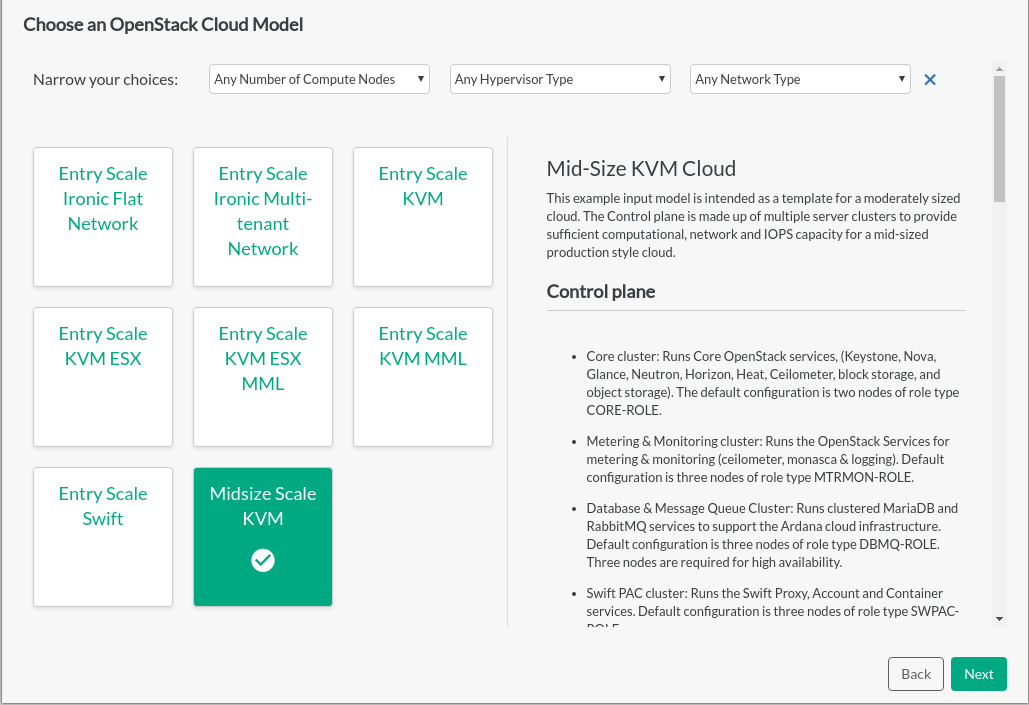

- 9 Example Configurations

- 10 Modifying Example Configurations for Compute Nodes

- 11 Modifying Example Configurations for Object Storage using Swift

- 11.1 Object Storage using swift Overview

- 11.2 Allocating Proxy, Account, and Container (PAC) Servers for Object Storage

- 11.3 Allocating Object Servers

- 11.4 Creating Roles for swift Nodes

- 11.5 Allocating Disk Drives for Object Storage

- 11.6 Swift Requirements for Device Group Drives

- 11.7 Creating a Swift Proxy, Account, and Container (PAC) Cluster

- 11.8 Creating Object Server Resource Nodes

- 11.9 Understanding Swift Network and Service Requirements

- 11.10 Understanding Swift Ring Specifications

- 11.11 Designing Storage Policies

- 11.12 Designing Swift Zones

- 11.13 Customizing Swift Service Configuration Files

- 12 Alternative Configurations

- III Pre-Installation

- IV Cloud Installation

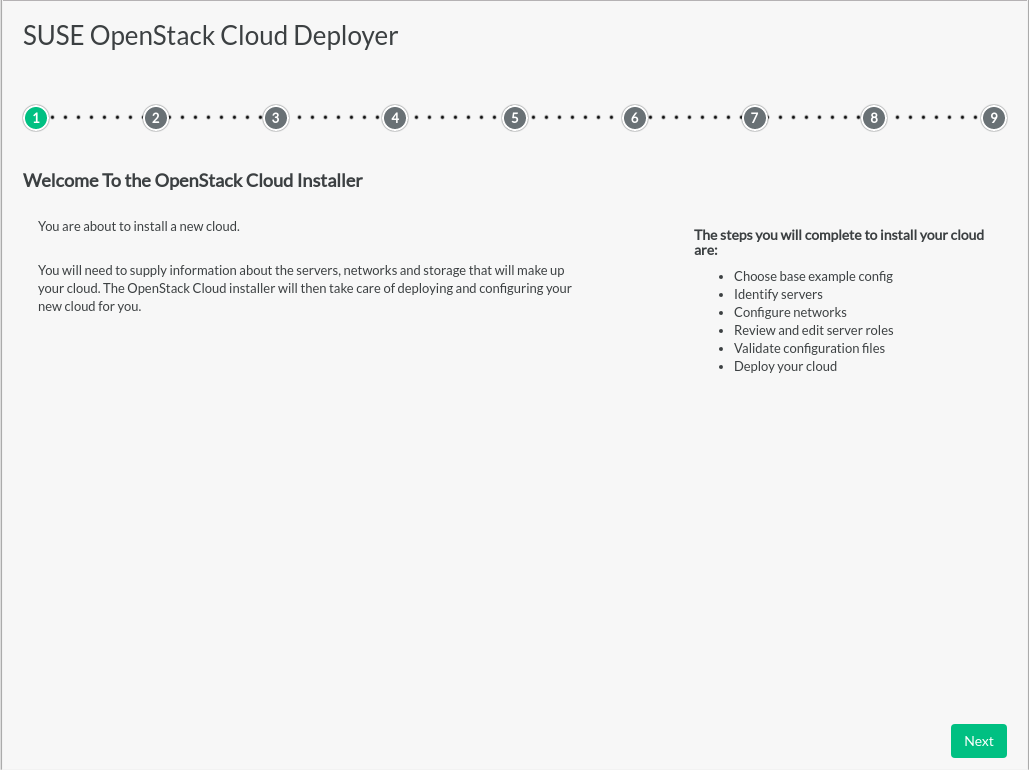

- 19 Overview

- 20 Preparing for Stand-Alone Deployment

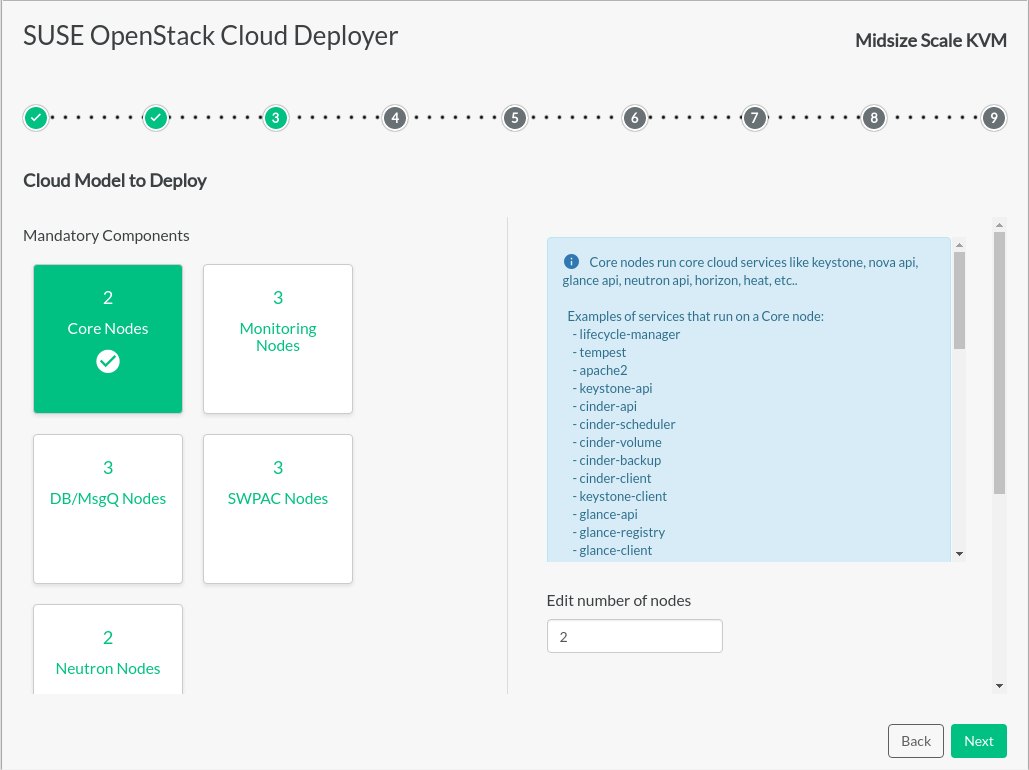

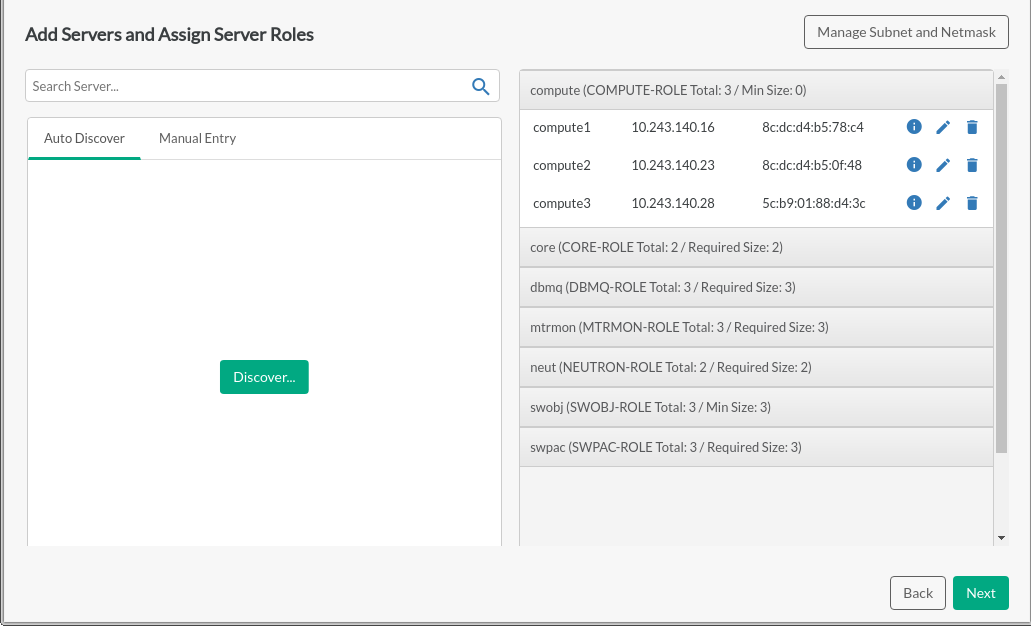

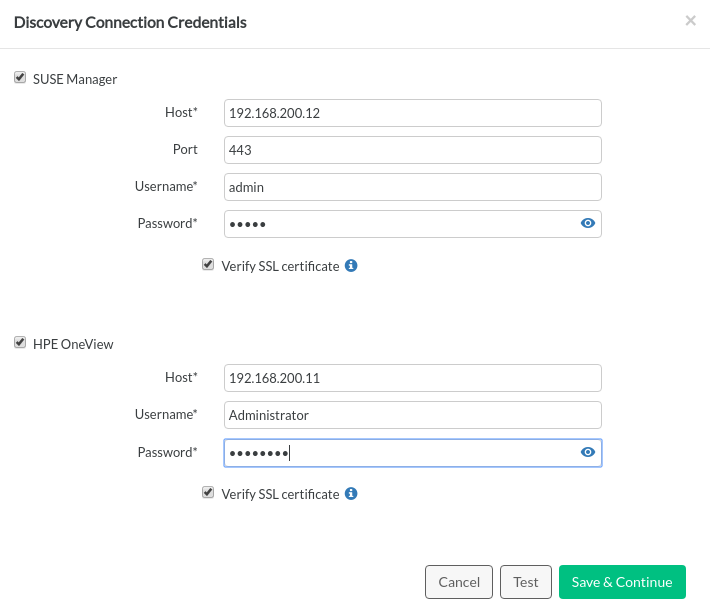

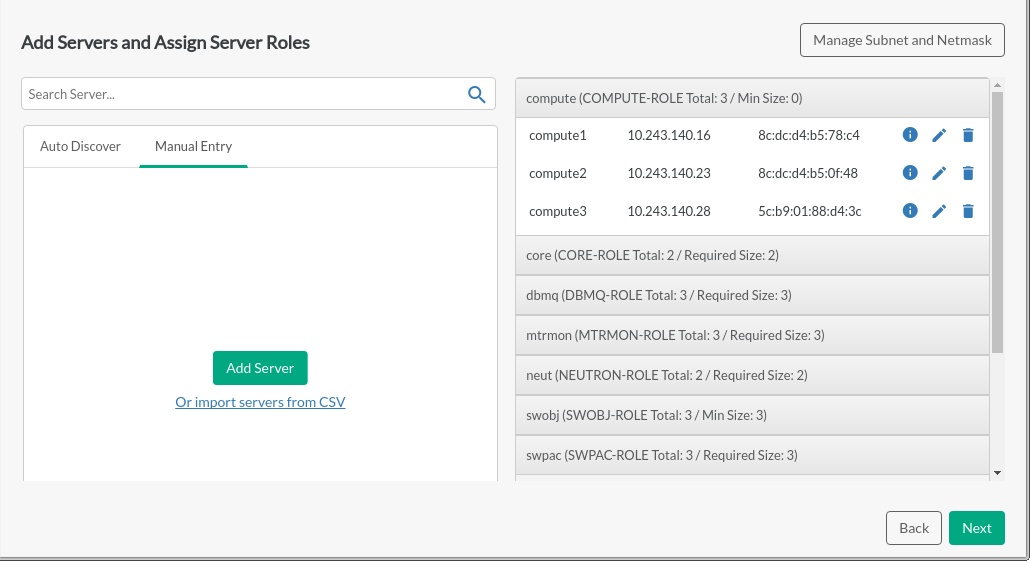

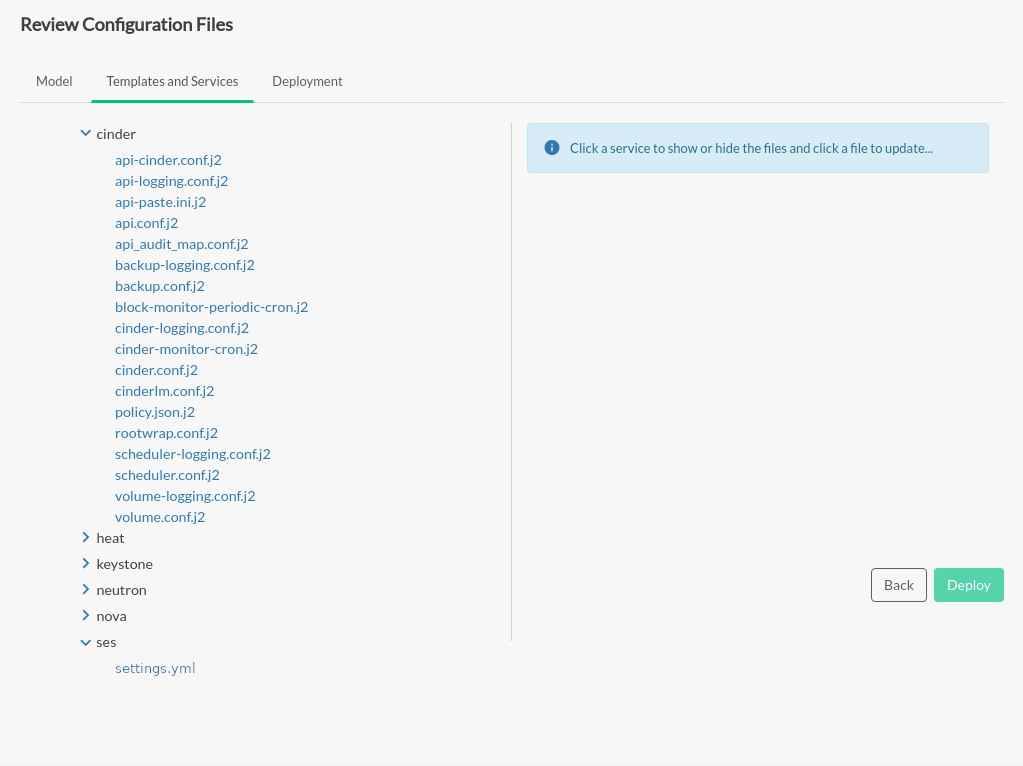

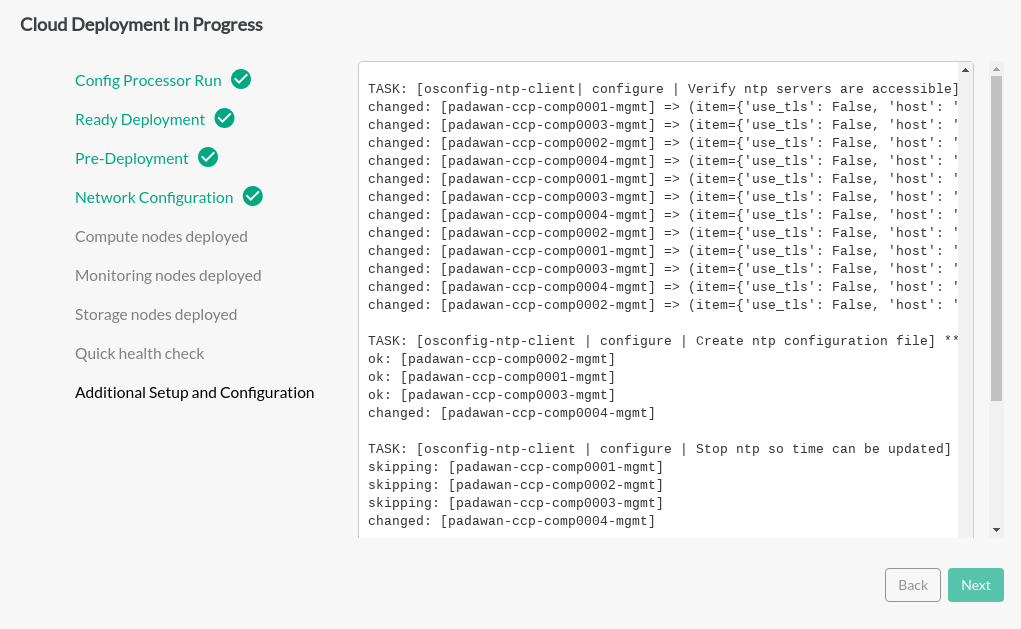

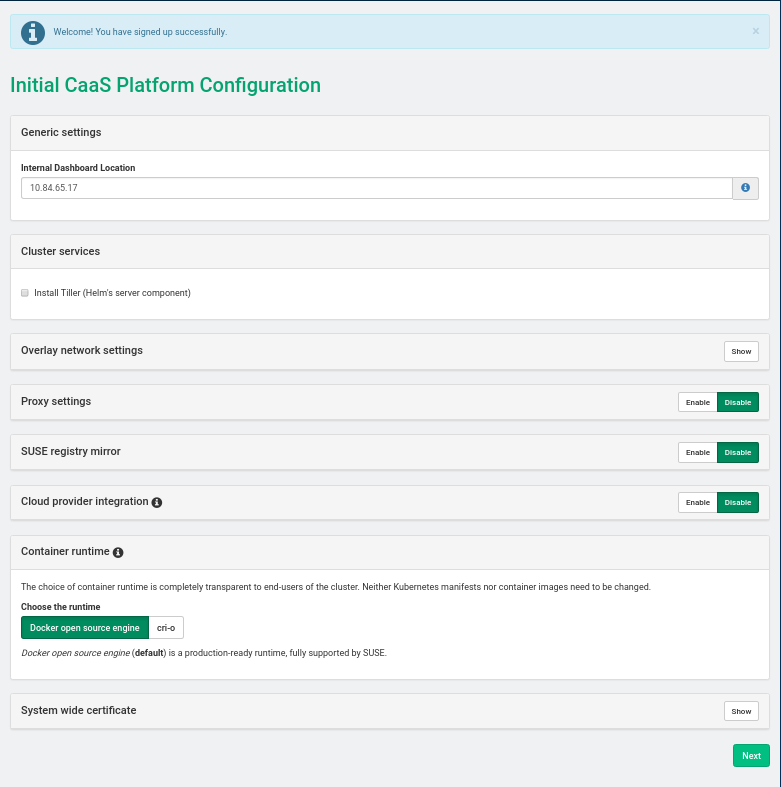

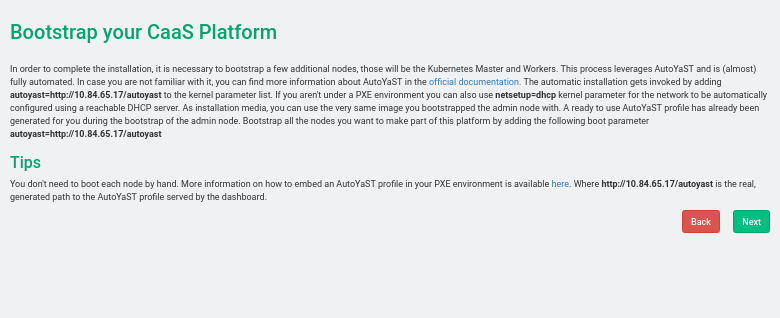

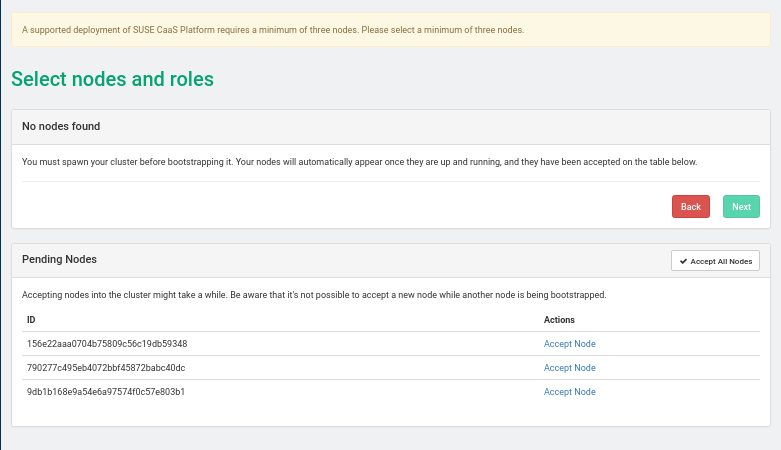

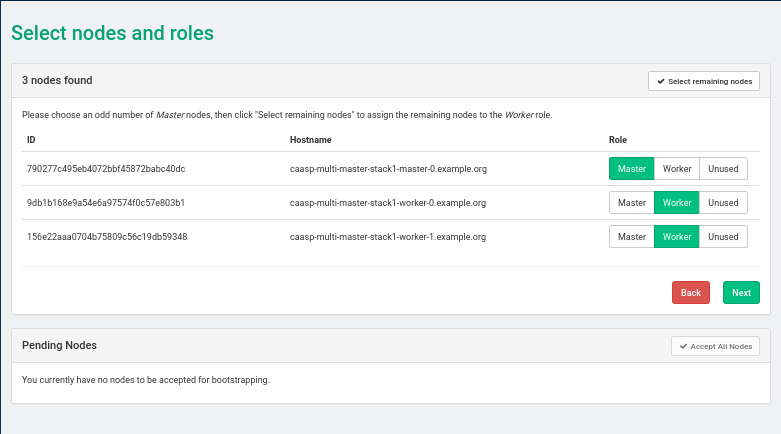

- 21 Installing with the Install UI

- 22 Using Git for Configuration Management

- 23 Installing a Stand-Alone Cloud Lifecycle Manager

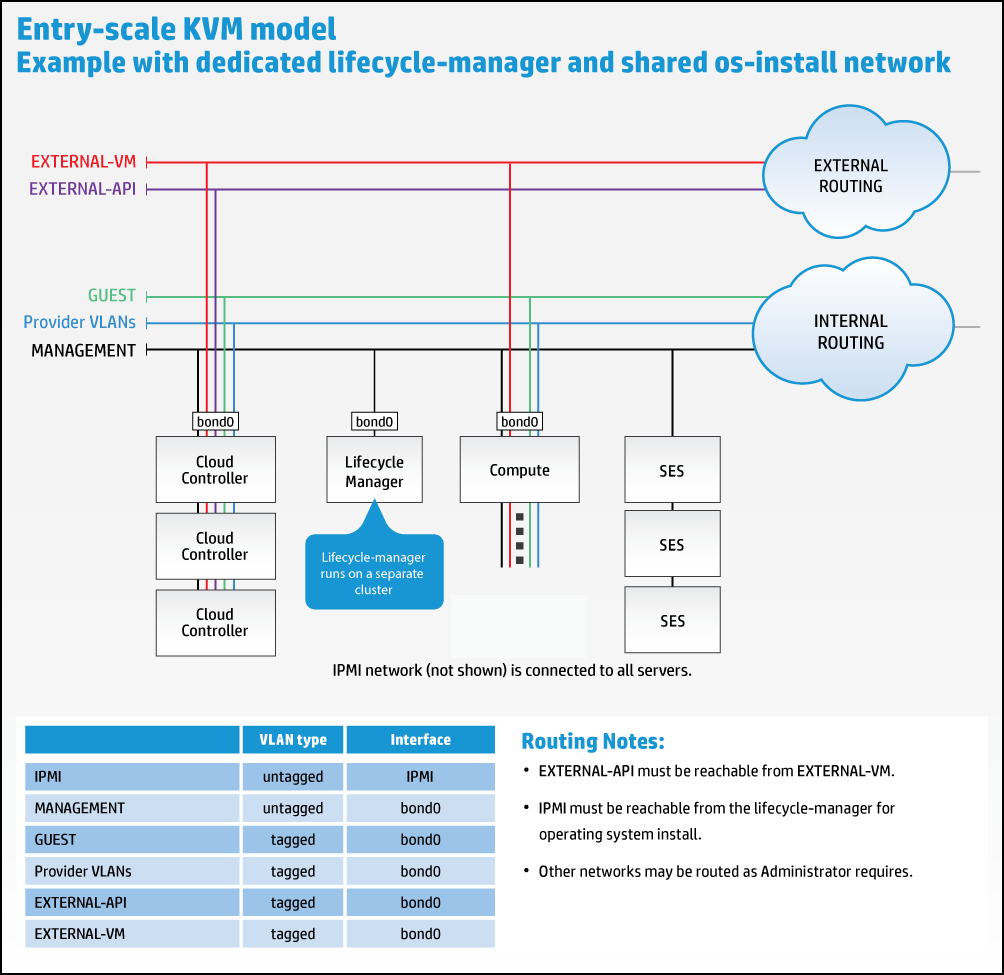

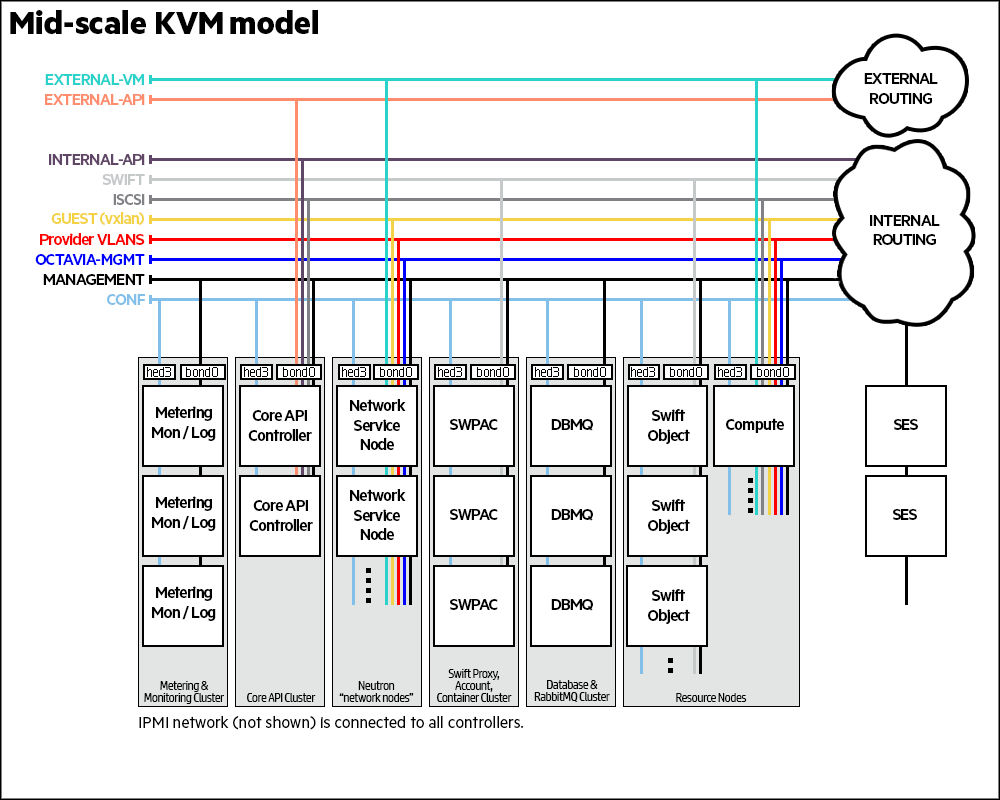

- 24 Installing Mid-scale and Entry-scale KVM

- 24.1 Important Notes

- 24.2 Prepare for Cloud Installation

- 24.3 Configuring Your Environment

- 24.4 Provisioning Your Baremetal Nodes

- 24.5 Running the Configuration Processor

- 24.6 Configuring TLS

- 24.7 Deploying the Cloud

- 24.8 Configuring a Block Storage Backend

- 24.9 Post-Installation Verification and Administration

- 25 DNS Service Installation Overview

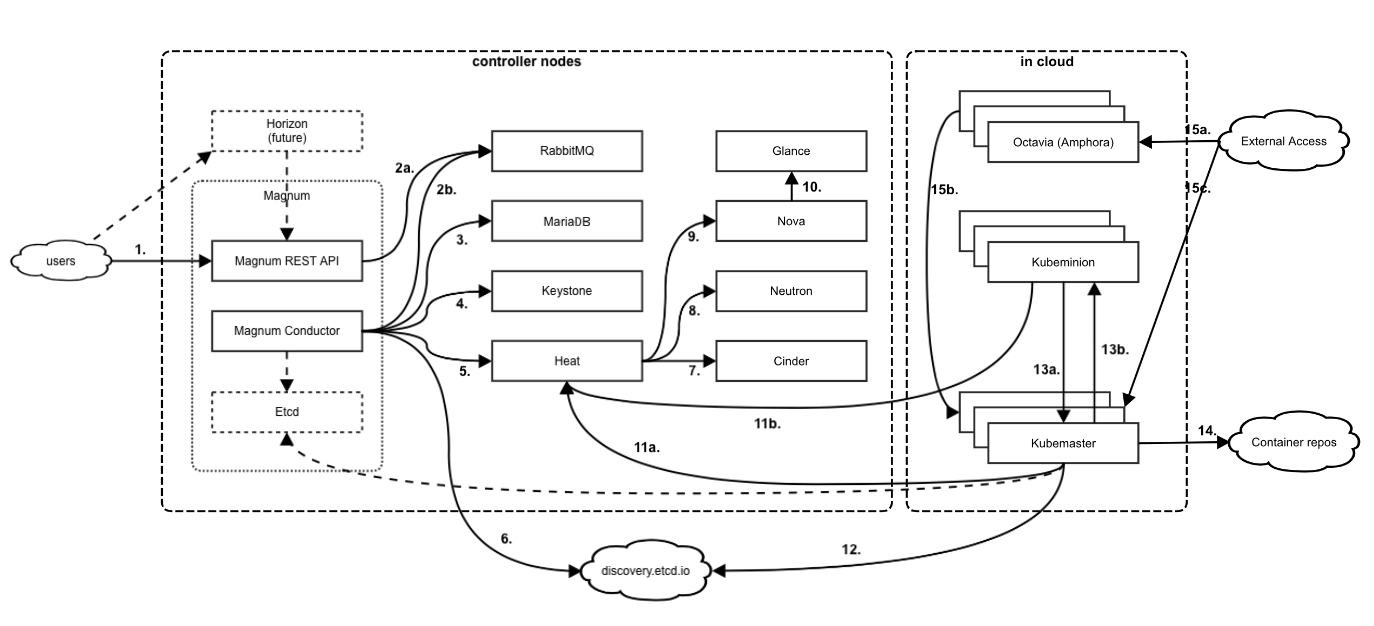

- 26 Magnum Overview

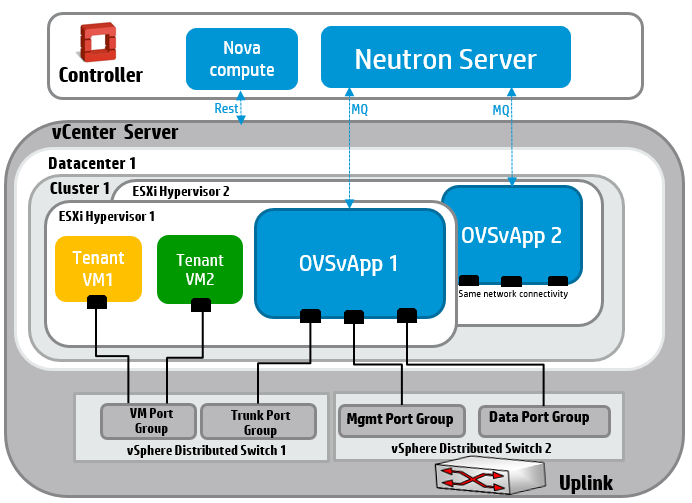

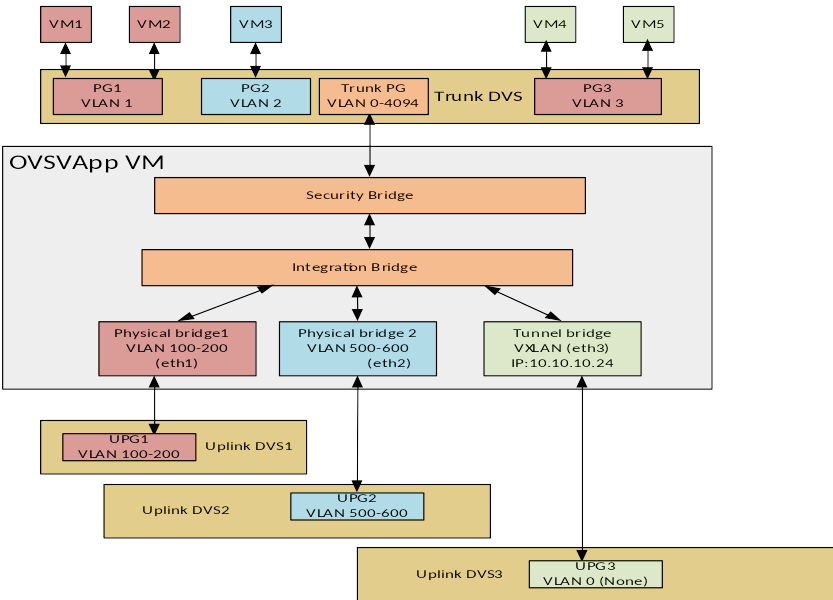

- 27 Installing ESX Computes and OVSvAPP

- 27.1 Prepare for Cloud Installation

- 27.2 Setting Up the Cloud Lifecycle Manager

- 27.3 Overview of ESXi and OVSvApp

- 27.4 VM Appliances Used in OVSvApp Implementation

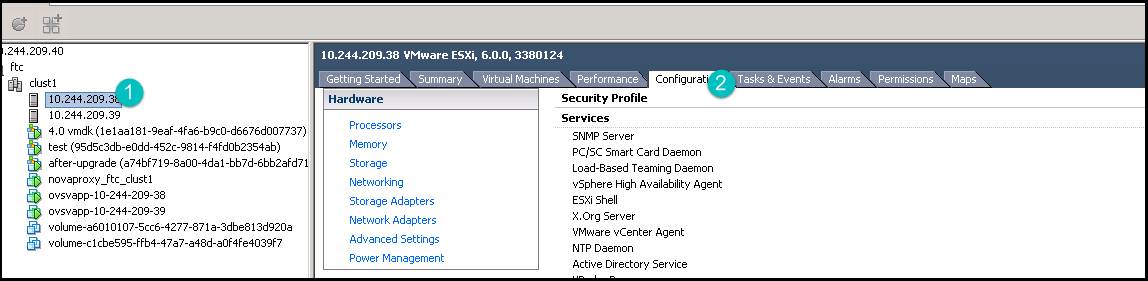

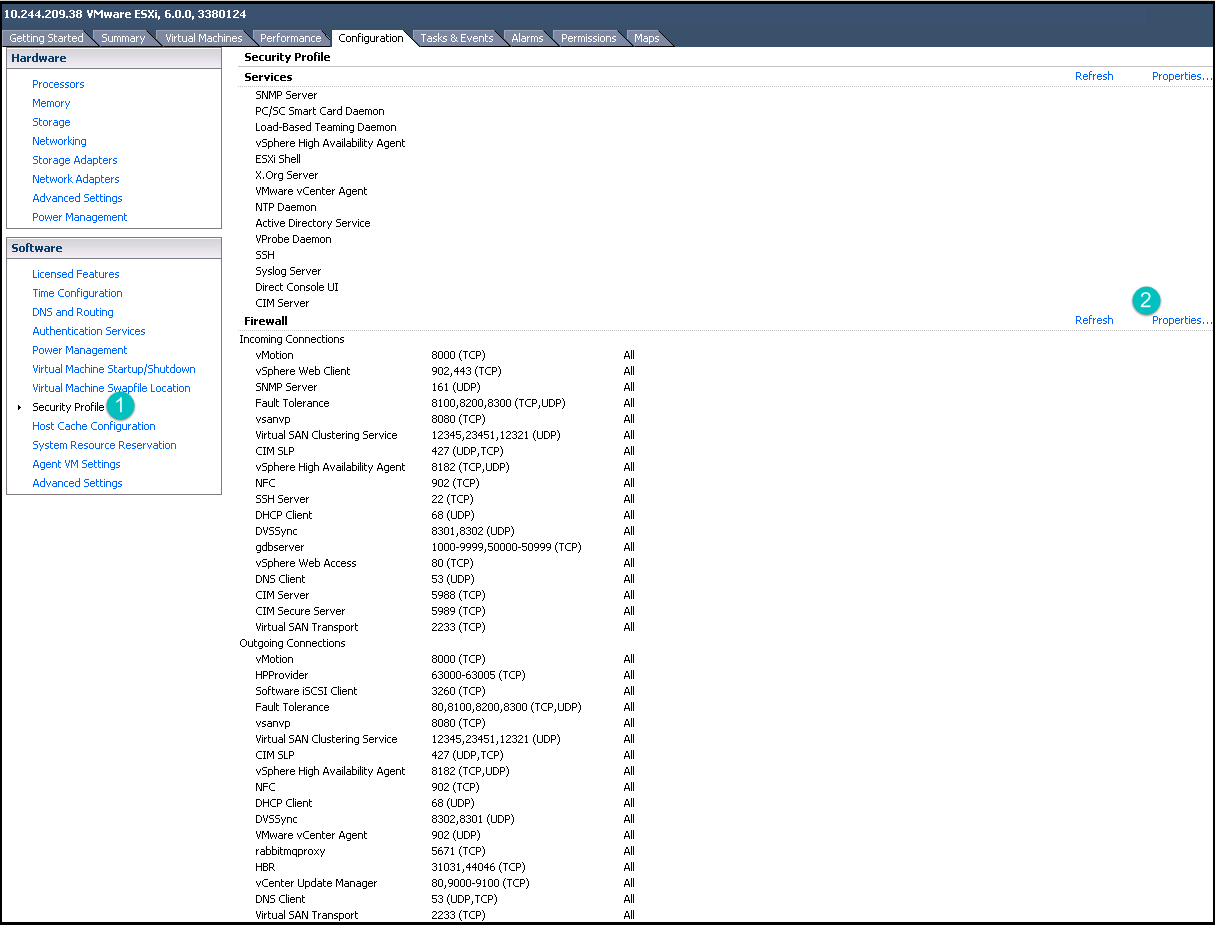

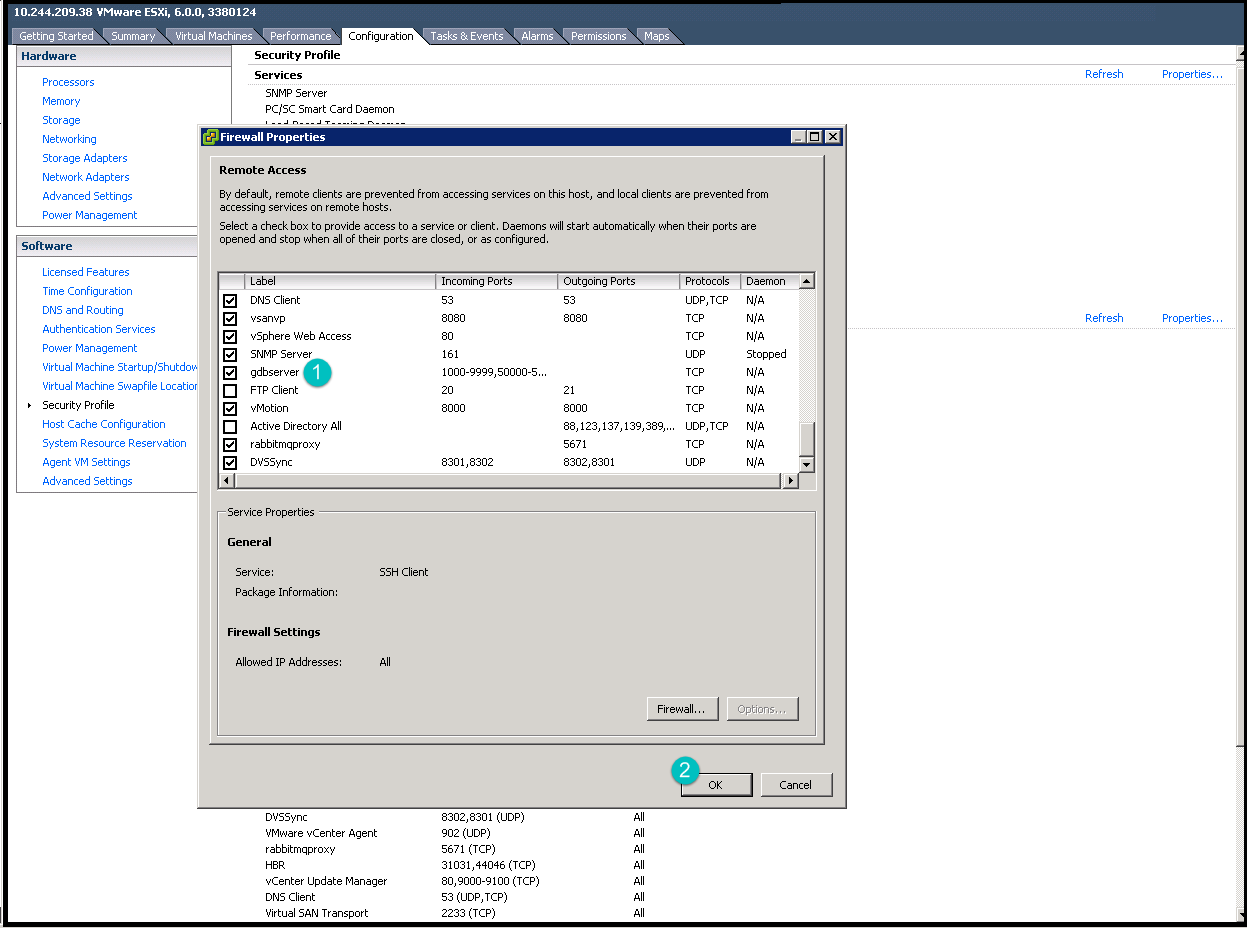

- 27.5 Prerequisites for Installing ESXi and Managing with vCenter

- 27.6 ESXi/vCenter System Requirements

- 27.7 Creating an ESX Cluster

- 27.8 Configuring the Required Distributed vSwitches and Port Groups

- 27.9 Create a SUSE-based Virtual Appliance Template in vCenter

- 27.10 ESX Network Model Requirements

- 27.11 Creating and Configuring Virtual Machines Based on Virtual Appliance Template

- 27.12 Collect vCenter Credentials and UUID

- 27.13 Edit Input Models to Add and Configure Virtual Appliances

- 27.14 Running the Configuration Processor With Applied Changes

- 27.15 Test the ESX-OVSvApp Environment

- 28 Integrating NSX for vSphere

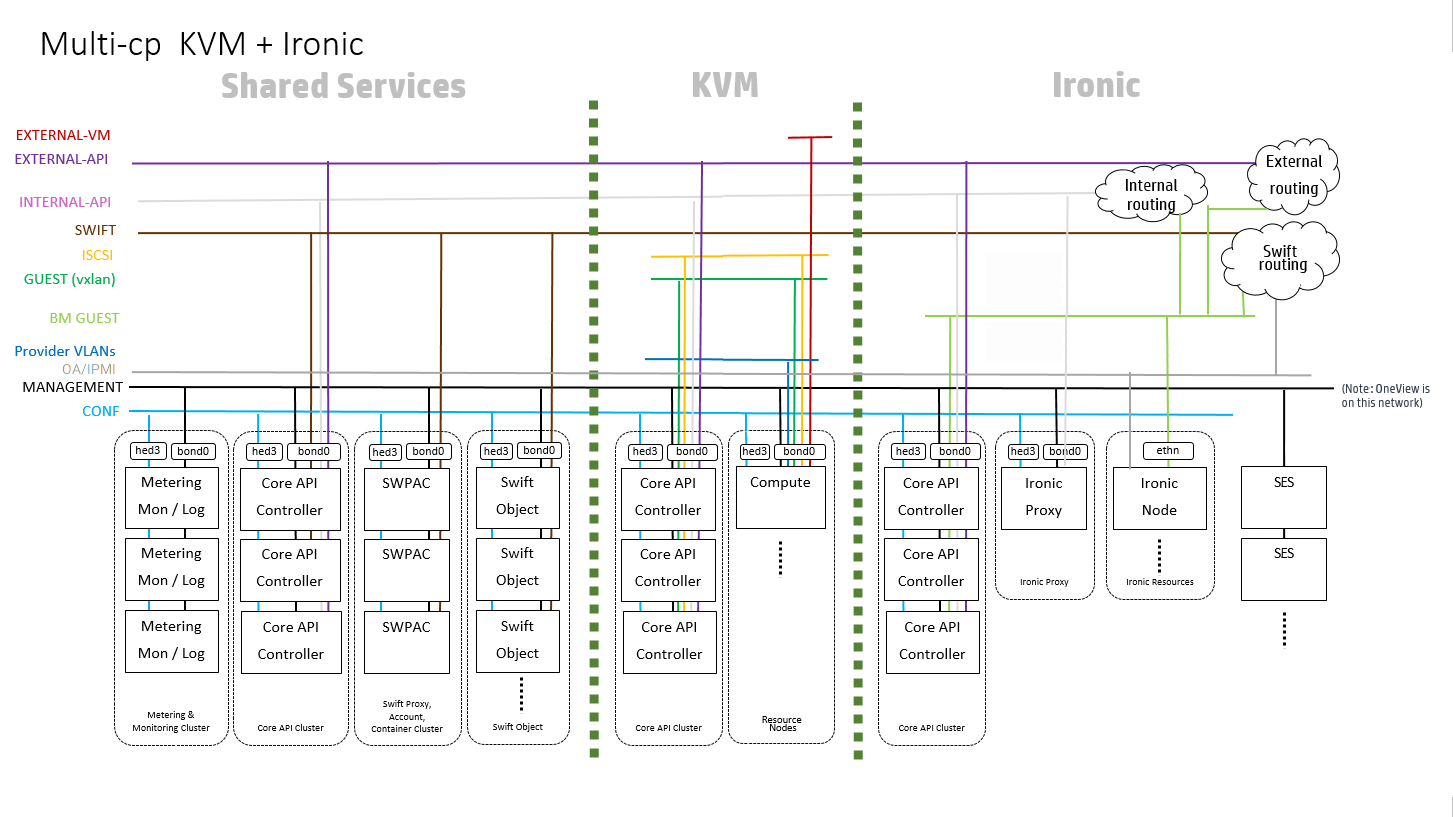

- 29 Installing Baremetal (Ironic)

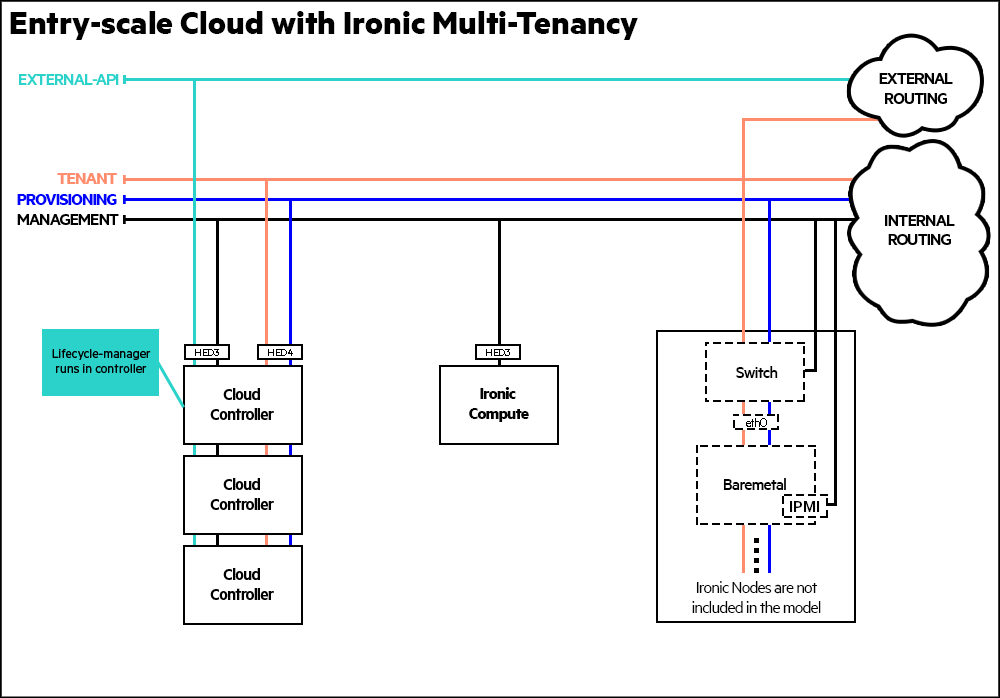

- 29.1 Installation for SUSE OpenStack Cloud Entry-scale Cloud with Ironic Flat Network

- 29.2 ironic in Multiple Control Plane

- 29.3 Provisioning Bare-Metal Nodes with Flat Network Model

- 29.4 Provisioning Baremetal Nodes with Multi-Tenancy

- 29.5 View Ironic System Details

- 29.6 Troubleshooting ironic Installation

- 29.7 Node Cleaning

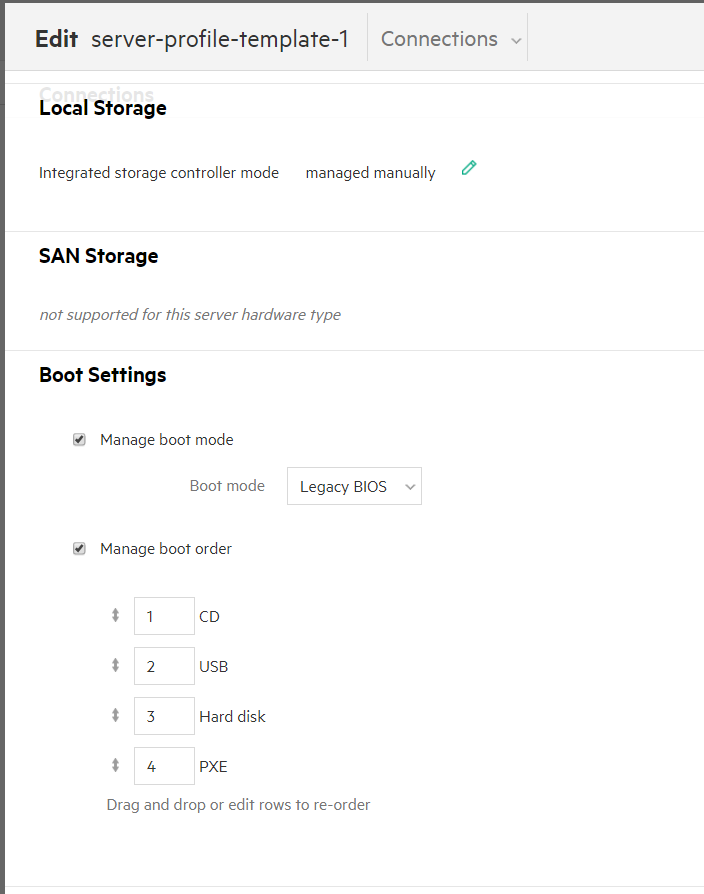

- 29.8 Ironic and HPE OneView

- 29.9 RAID Configuration for Ironic

- 29.10 Audit Support for Ironic

- 30 Installation for SUSE OpenStack Cloud Entry-scale Cloud with Swift Only

- 31 Installing SLES Compute

- 32 Installing manila and Creating manila Shares

- 33 Installing SUSE CaaS Platform heat Templates

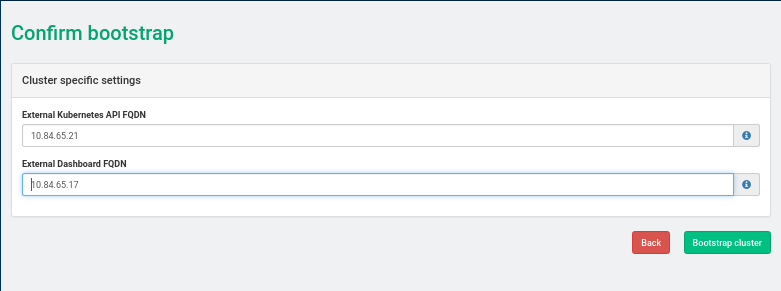

- 33.1 SUSE CaaS Platform heat Installation Procedure

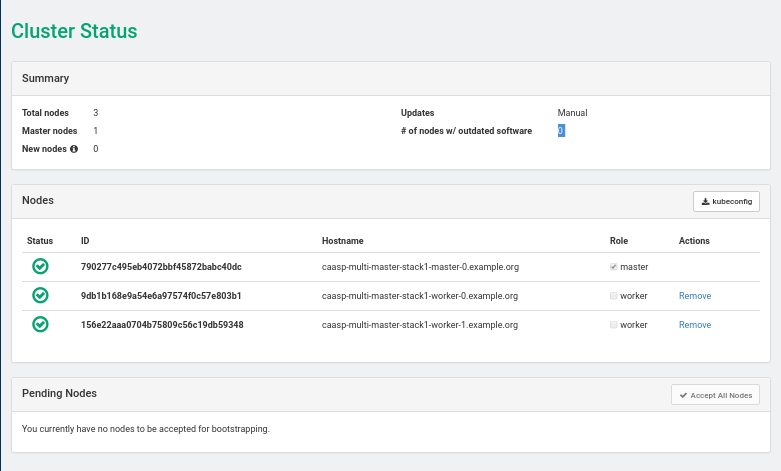

- 33.2 Installing SUSE CaaS Platform with Multiple Masters

- 33.3 Deploy SUSE CaaS Platform Stack Using heat SUSE CaaS Platform Playbook

- 33.4 Deploy SUSE CaaS Platform Cluster with Multiple Masters Using heat CaasP Playbook

- 33.5 SUSE CaaS Platform OpenStack Image for heat SUSE CaaS Platform Playbook

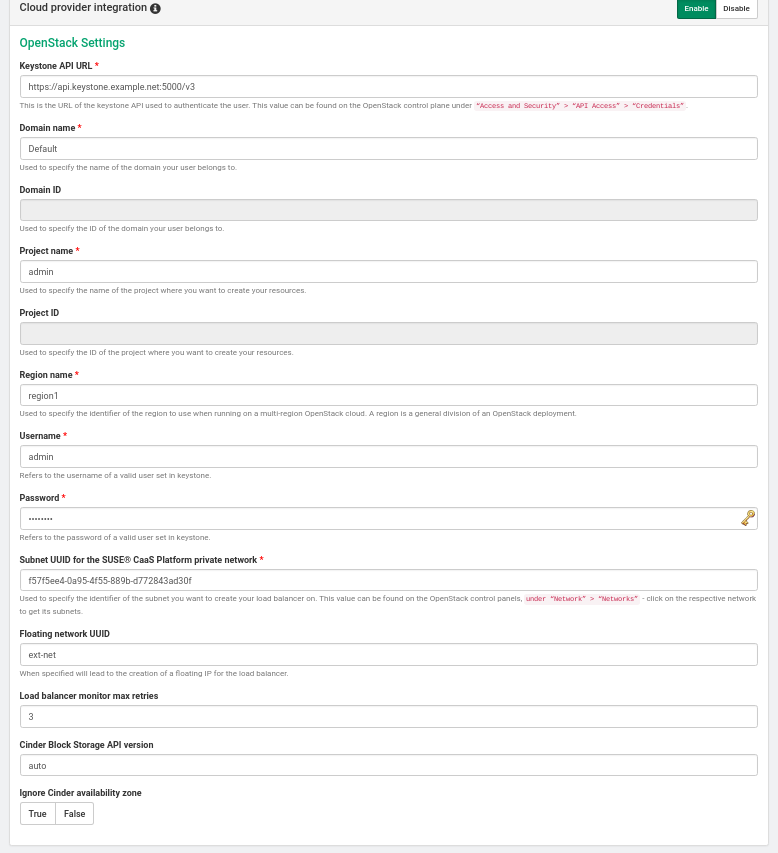

- 33.6 Enabling the Cloud Provider Integration (CPI) Feature

- 33.7 Register SUSE CaaS Platform Cluster for Software Updates

- 34 Installing SUSE CaaS Platform v4 using terraform

- 35 Integrations

- 36 Troubleshooting the Installation

- 37 Troubleshooting the ESX

- V Post-Installation

- 38 Post Installation Tasks

- 39 UI Verification

- 40 Installing OpenStack Clients

- 41 Configuring Transport Layer Security (TLS)

- 41.1 Configuring TLS in the input model

- 41.2 User-provided certificates and trust chains

- 41.3 Edit the input model to include your certificate files

- 41.4 Generate a self-signed CA

- 41.5 Generate a certificate signing request

- 41.6 Generate a server certificate

- 41.7 Upload to the Cloud Lifecycle Manager

- 41.8 Configuring the cipher suite

- 41.9 Testing

- 41.10 Verifying that the trust chain is correctly deployed

- 41.11 Turning TLS on or off

- 42 Configuring Availability Zones

- 43 Configuring Load Balancer as a Service

- 44 Other Common Post-Installation Tasks

- VI Support

- 6.1 neutron.networks.vxlan

- 6.2 neutron.networks.vlan

- 6.3 neutron.networks.flat

- 6.4 neutron.l3_agent.external_network_bridge

- 14.1 Control Plane 1

- 14.2 Control Plane 2

- 14.3 Control Plane 3

- 17.1 Local Product Repositories for SUSE OpenStack Cloud

- 17.2 SMT Repositories Hosted on the Cloud Lifecycle Manager

- 17.3 Repository Locations on the Cloud Lifecycle Manager server

- 25.1 DNS Backends

- 26.1 Data

- 26.2 Interfaces

- 26.3 Security Groups

- 26.4 Network Ports

- 28.1 NSX Hardware Requirements for Virtual Machine Integration

- 28.2 NSX Hardware Requirements for Baremetal Integration

- 28.3 NSX Interface Requirements

Copyright © 2006–2024 SUSE LLC and contributors. All rights reserved.

Except where otherwise noted, this document is licensed under Creative Commons Attribution 3.0 License: https://creativecommons.org/licenses/by/3.0/legalcode.

For SUSE trademarks, see https://www.suse.com/company/legal/. All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks.

All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof.

Part I Planning an Installation using Cloud Lifecycle Manager

1 Registering SLES

To get technical support and product updates, you need to register and activate your SUSE product with the SUSE Customer Center. It is recommended to register during the installation, since this will enable you to install the system with the latest updates and patches available. However, if you are offline or want to skip the registration step, you can register at any time later from the installed system.

If your organization does not provide a local registration server, registering SLES requires a SUSE account. If you do not have a SUSE account yet, go to the SUSE Customer Center home page (https://scc.suse.com/) to create one.

1.1 Registering SLES during the Installation

To register your system, provide the E-mail address associated with the SUSE account you or your organization uses to manage subscriptions. In case you do not have a SUSE account yet, go to the SUSE Customer Center home page (https://scc.suse.com/) to create one.

Enter the Registration Code you received with your copy of SUSE Linux Enterprise Server. Proceed with Next to start the registration process.

By default the system is registered with the SUSE Customer Center. However, if your organization provides local registration servers, you can either choose one from the list of auto-detected servers or provide the URL at Register System via local SMT Server. Proceed with Next.

During the registration, the online update repositories will be added to your installation setup. When finished, you can choose whether to install the latest available package versions from the update repositories. This ensures that SUSE Linux Enterprise Server is installed with the latest security updates available. If you choose No, all packages will be installed from the installation media. Proceed with Next.

If the system was successfully registered during installation, YaST will disable repositories from local installation media such as CD/DVD or flash disks when the installation has been completed. This prevents problems if the installation source is no longer available and ensures that you always get the latest updates from the online repositories.

1.2 Registering SLES from the Installed System

1.2.1 Registering from the Installed System

If you have skipped the registration during the installation or want to re-register your system, you can register the system at any time using the YaST registration module or the command line tool SUSEConnect.

Registering with YaST

To register the system, start the YaST registration module. Provide the E-mail address associated with the SUSE account you or your organization uses to manage subscriptions. In case you do not have a SUSE account yet, go to the SUSE Customer Center homepage (https://scc.suse.com/) to create one.

Enter the Registration Code you received with your copy of SUSE Linux Enterprise Server. Proceed with Next to start the registration process.

By default the system is registered with the SUSE Customer Center. However, if your organization provides local registration servers, you can either choose one from the list of auto-detected servers or provide the URL at Register System via local SMT Server. Proceed with Next.

Registering with SUSEConnect

To register from the command line, use the command

tux > sudo SUSEConnect -r REGISTRATION_CODE -e EMAIL_ADDRESS

Replace REGISTRATION_CODE with the Registration Code you received with your copy of SUSE Linux Enterprise Server. Replace EMAIL_ADDRESS with the E-mail address associated with the SUSE account you or your organization uses to manage subscriptions. To register with a local registration server, also provide the URL to the server:

tux > sudo SUSEConnect -r REGISTRATION_CODE -e EMAIL_ADDRESS \
--url "https://suse_register.example.com/"

1.3 Registering SLES during Automated Deployment

If you deploy your instances automatically using AutoYaST, you can register the system during the installation by providing the respective information in the AutoYaST control file. Refer to https://documentation.suse.com/sles/15-SP1/single-html/SLES-autoyast/#CreateProfile-Register for details.

2 Hardware and Software Support Matrix

This document lists the details about the supported hardware and software for SUSE OpenStack Cloud 9.

2.1 OpenStack Version Information

SUSE OpenStack Cloud 9 services have been updated to the OpenStack Rocky release.

2.2 Supported Hardware Configurations

SUSE OpenStack Cloud 9 supports hardware that is certified for SLES through the YES certification program. You will find a database of certified hardware at https://www.suse.com/yessearch/.

2.3 Support for Core and Non-Core OpenStack Features

| OpenStack Service | Packages | Supported | OpenStack Service | Packages | Supported |
|---|---|---|---|---|---|
| aodh | No | No | barbican | Yes | Yes |
| ceilometer | Yes | Yes | cinder | Yes | Yes |
| designate | Yes | Yes | glance | Yes | Yes |
| heat | Yes | Yes | horizon | Yes | Yes |
| ironic | Yes | Yes | keystone | Yes | Yes |
| Magnum | Yes | Yes | manila | Yes | Yes |
| monasca | Yes | Yes | monasca-ceilometer | Yes | Yes |
| neutron | Yes | Yes | neutron(LBaaSv2) | No | No |
| neutron(VPNaaS) | Yes | Yes | neutron(FWaaS) | Yes | Yes |
| nova | Yes | Yes | Octavia | Yes | Yes |
| swift | Yes | Yes | | | |

nova

| Supported | Not Supported |
|---|---|
| SLES KVM Hypervisor | Xen hypervisor |
| VMware ESX Hypervisor | Hyper-V |
| | Non-x86 Architectures |

neutron

| Supported | Not Supported |
|---|---|
| Tenant networks | Distributed Virtual Router (DVR) with any of the following: |
| VMware ESX Hypervisor | QoS |

glance Supported Features

swift and Ceph backends

cinder

| Supported | Not Supported |
|---|---|
| Encrypted & private volumes | VSA |
| Incremental backup, backup attached volume, encrypted volume backup, backup snapshots | |

swift

| Supported | Not Supported |
|---|---|
| Erasure coding | Geographically distributed clusters |
| Dispersion report | |
| swift zones | |

keystone

| Supported | Not Supported |
|---|---|
| Domains | Web SSO |
| Fernet tokens | Multi-Factor authentication |
| LDAP integration | Federation keystone to keystone |
| | Hierarchical multi-tenancy |

barbican Supported Features

Encryption for the following:

cinder

Hardware security model

Encrypted data volumes

Symmetric keys

Storage keys

CADF format auditing events

ceilometer

| Supported | Not Supported |
|---|---|
| keystone v3 support | Gnocchi |
| glance v2 API | IPMI and SNMP |
| ceilometer Compute Agent | |

heat Features Not Supported

Multi-region stack

ironic

The table below shows supported node configurations. UEFI secure is not supported.

| Hardware Type | Boot Interface | Deploy Interface | Inspect Interface | Management Interface | Power Interface |
|---|---|---|---|---|---|
| ilo | ilo-virtual-media | direct | ilo | ilo | ilo |
| ilo | ilo-pxe | iscsi | ilo | ilo | ilo |
| ipmi | pxe | direct | no-inspect | ipmitool | ipmitool |
| ipmi | pxe | iscsi | no-inspect | ipmitool | ipmitool |
| redfish | pxe | iscsi | no-inspect | redfish | redfish |

2.4 Cloud Scaling

SUSE OpenStack Cloud 9 has been tested and qualified with a total of 200 compute nodes in a single region (Region0).

SUSE OpenStack Cloud 9 has been tested and qualified with a total of 12,000 virtual machines across a total of 200 compute nodes.

Larger configurations are possible, but SUSE has tested and qualified this configuration size. Typically larger configurations are enabled with services and engineering engagements.

2.5 Supported Software

Supported ESXi versions

SUSE OpenStack Cloud 9 currently supports the following ESXi versions:

- ESXi version 6.0
- ESXi version 6.0 (Update 1b)
- ESXi version 6.5

The following are the requirements for your vCenter server:

- Software: vCenter (It is recommended to run the same server version as the ESXi hosts.)
- License Requirements: vSphere Enterprise Plus license

2.6 Notes About Performance

We have the following recommendations to ensure good performance of your cloud environment:

On the control plane nodes, you will want good I/O performance. Your array controllers must have cache controllers and we advise against the use of RAID-5.

On compute nodes, the I/O performance will influence the virtual machine start-up performance. We also recommend the use of cache controllers in your storage arrays.

If you are using dedicated object storage (swift) nodes, in particular the account, container, and object servers, we recommend that your storage arrays have cache controllers.

For best performance, set the server's power management setting in the iLO to OS Control Mode. This power mode setting is only available on servers that include the HP Power Regulator.

2.7 KVM Guest OS Support

For a list of the supported VM guests, see https://documentation.suse.com/sles/15-SP1/single-html/SLES-virtualization/#virt-support-guests

2.8 ESX Guest OS Support

For ESX, refer to the VMware Compatibility Guide. The information for SUSE OpenStack Cloud is below the search form.

2.9 Ironic Guest OS Support

A Verified Guest OS has been tested by SUSE and appears to function properly as a bare metal instance on SUSE OpenStack Cloud 9.

A Certified Guest OS has been officially tested by the operating system vendor, or by SUSE under the vendor's authorized program, and will be supported by the operating system vendor as a bare metal instance on SUSE OpenStack Cloud 9.

| ironic Guest Operating System | Verified | Certified |

|---|---|---|

| SUSE Linux Enterprise Server 12 SP4 | Yes | Yes |

3 Recommended Hardware Minimums for the Example Configurations

3.1 Recommended Hardware Minimums for an Entry-scale KVM

These recommended minimums are based on example configurations included with the installation models (see Chapter 9, Example Configurations). They are suitable only for demo environments. For production systems you will want to consider your capacity and performance requirements when making decisions about your hardware.

The disk requirements detailed below can be met with logical drives, logical volumes, or external storage such as a 3PAR array.

Server hardware minimum requirements and recommendations:

| Node Type | Role Name | Required Number | Disk | Memory | Network | CPU |
|---|---|---|---|---|---|---|
| Dedicated Cloud Lifecycle Manager (optional) | Lifecycle-manager | 1 | 300 GB | 8 GB | 1 x 10 Gbit/s with PXE Support | 8 CPU (64-bit) cores total (Intel x86_64) |
| Control Plane | Controller | 3 | | 128 GB | 2 x 10 Gbit/s with one PXE enabled port | 8 CPU (64-bit) cores total (Intel x86_64) |
| Compute | Compute | 1-3 | 2 x 600 GB (minimum) | 32 GB (memory must be sized based on the virtual machine instances hosted on the Compute node) | 2 x 10 Gbit/s with one PXE enabled port | 8 CPU (64-bit) cores total (Intel x86_64) with hardware virtualization support. The CPU cores must be sized based on the VM instances hosted by the Compute node. |

For more details about the supported network requirements, see Chapter 9, Example Configurations.

3.2 Recommended Hardware Minimums for an Entry-scale ESX KVM Model

These recommended minimums are based on example configurations included with the installation models (see Chapter 9, Example Configurations). They are suitable only for demo environments. For production systems you will want to consider your capacity and performance requirements when making decisions about your hardware.

SUSE OpenStack Cloud currently supports the following ESXi versions:

- ESXi version 6.0
- ESXi version 6.0 (Update 1b)
- ESXi version 6.5

The following are the requirements for your vCenter server:

- Software: vCenter (It is recommended to run the same server version as the ESXi hosts.)
- License Requirements: vSphere Enterprise Plus license

Server hardware minimum requirements and recommendations:

| Node Type | Role Name | Required Number | Disk | Memory | Network | CPU |
|---|---|---|---|---|---|---|
| Dedicated Cloud Lifecycle Manager (optional) | Lifecycle-manager | 1 | 300 GB | 8 GB | 1 x 10 Gbit/s with PXE Support | 8 CPU (64-bit) cores total (Intel x86_64) |
| Control Plane | Controller | 3 | | 128 GB | 2 x 10 Gbit/s with one PXE enabled port | 8 CPU (64-bit) cores total (Intel x86_64) |
| Compute (ESXi hypervisor) | | 2 | 2 x 1 TB (minimum, shared across all nodes) | 128 GB (minimum) | 2 x 10 Gbit/s +1 NIC (for DC access) | 16 CPU (64-bit) cores total (Intel x86_64) |
| Compute (KVM hypervisor) | kvm-compute | 1-3 | 2 x 600 GB (minimum) | 32 GB (memory must be sized based on the virtual machine instances hosted on the Compute node) | 2 x 10 Gbit/s with one PXE enabled port | 8 CPU (64-bit) cores total (Intel x86_64) with hardware virtualization support. The CPU cores must be sized based on the VM instances hosted by the Compute node. |
| OVSvApp VM | on VMware cluster | 1 | 80 GB | 4 GB | 3 VMXNET Virtual Network Adapters | 2 vCPU |
| nova proxy VM | on VMware cluster | 1 per cluster | 80 GB | 4 GB | 3 VMXNET Virtual Network Adapters | 2 vCPU |

3.3 Recommended Hardware Minimums for an Entry-scale ESX, KVM with Dedicated Cluster for Metering, Monitoring, and Logging

These recommended minimums are based on example configurations included with the installation models (see Chapter 9, Example Configurations). They are suitable only for demo environments. For production systems you will want to consider your capacity and performance requirements when making decisions about your hardware.

SUSE OpenStack Cloud currently supports the following ESXi versions:

- ESXi version 6.0
- ESXi version 6.0 (Update 1b)
- ESXi version 6.5

The following are the requirements for your vCenter server:

- Software: vCenter (It is recommended to run the same server version as the ESXi hosts.)
- License Requirements: vSphere Enterprise Plus license

Server hardware minimum requirements and recommendations:

| Node Type | Role Name | Required Number | Disk | Memory | Network | CPU |
|---|---|---|---|---|---|---|
| Dedicated Cloud Lifecycle Manager (optional) | Lifecycle-manager | 1 | 300 GB | 8 GB | 1 x 10 Gbit/s with PXE Support | 8 CPU (64-bit) cores total (Intel x86_64) |
| Control Plane | Core-API Controller | 2 | | 128 GB | 2 x 10 Gbit/s with PXE Support | 24 CPU (64-bit) cores total (Intel x86_64) |
| Control Plane | DBMQ Cluster | 3 | | 96 GB | 2 x 10 Gbit/s with PXE Support | 24 CPU (64-bit) cores total (Intel x86_64) |
| Control Plane | Metering Mon/Log Cluster | 3 | | 128 GB | 2 x 10 Gbit/s with one PXE enabled port | 24 CPU (64-bit) cores total (Intel x86_64) |
| Compute (ESXi hypervisor) | | 2 (minimum) | 2 x 1 TB (minimum, shared across all nodes) | 64 GB (memory must be sized based on the virtual machine instances hosted on the Compute node) | 2 x 10 Gbit/s +1 NIC (for Data Center access) | 16 CPU (64-bit) cores total (Intel x86_64) |
| Compute (KVM hypervisor) | kvm-compute | 1-3 | 2 x 600 GB (minimum) | 32 GB (memory must be sized based on the virtual machine instances hosted on the Compute node) | 2 x 10 Gbit/s with one PXE enabled port | 8 CPU (64-bit) cores total (Intel x86_64) with hardware virtualization support. The CPU cores must be sized based on the VM instances hosted by the Compute node. |
| OVSvApp VM | on VMware cluster | 1 | 80 GB | 4 GB | 3 VMXNET Virtual Network Adapters | 2 vCPU |
| nova proxy VM | on VMware cluster | 1 per cluster | 80 GB | 4 GB | 3 VMXNET Virtual Network Adapters | 2 vCPU |

3.4 Recommended Hardware Minimums for an Ironic Flat Network Model

When using the agent_ilo driver, you should ensure that the most recent iLO controller firmware is installed. A recommended minimum for the iLO4 controller is version 2.30.

The recommended minimum hardware requirements are based on the example configurations included with the base installation (see Chapter 9, Example Configurations) and are suitable only for demo environments. For production systems you will want to consider your capacity and performance requirements when making decisions about your hardware.

Server hardware minimum requirements and recommendations:

| Node Type | Role Name | Required Number | Disk | Memory | Network | CPU |
|---|---|---|---|---|---|---|
| Dedicated Cloud Lifecycle Manager (optional) | Lifecycle-manager | 1 | 300 GB | 8 GB | 1 x 10 Gbit/s with PXE Support | 8 CPU (64-bit) cores total (Intel x86_64) |
| Control Plane | Controller | 3 | | 128 GB | 2 x 10 Gbit/s with one PXE enabled port | 8 CPU (64-bit) cores total (Intel x86_64) |
| Compute | Compute | 1 | 1 x 600 GB (minimum) | 16 GB | 2 x 10 Gbit/s with one PXE enabled port | 16 CPU (64-bit) cores total (Intel x86_64) |

For more details about the supported network requirements, see Chapter 9, Example Configurations.

3.5 Recommended Hardware Minimums for an Entry-scale Swift Model

These recommended minimums are based on the example configurations included with the base installation (see Chapter 9, Example Configurations) and are suitable only for demo environments. For production systems you will want to consider your capacity and performance requirements when making decisions about your hardware.

The entry-scale-swift example runs the swift proxy, account and container services on the three controller servers. However, it is possible to extend the model to include the swift proxy, account and container services on dedicated servers (typically referred to as the swift proxy servers). If you are using this model, we have included the recommended swift proxy server specifications in the table below.

Server hardware minimum requirements and recommendations:

| Node Type | Role Name | Required Number | Disk | Memory | Network | CPU |
|---|---|---|---|---|---|---|
| Dedicated Cloud Lifecycle Manager (optional) | Lifecycle-manager | 1 | 300 GB | 8 GB | 1 x 10 Gbit/s with PXE Support | 8 CPU (64-bit) cores total (Intel x86_64) |
| Control Plane | Controller | 3 | | 128 GB | 2 x 10 Gbit/s with one PXE enabled port | 8 CPU (64-bit) cores total (Intel x86_64) |
| swift Object | swobj | 3 | If using x3 replication only: If using Erasure Codes only or a mix of x3 replication and Erasure Codes: | 32 GB (see considerations at bottom of page for more details) | 2 x 10 Gbit/s with one PXE enabled port | 8 CPU (64-bit) cores total (Intel x86_64) |
| swift Proxy, Account, and Container | swpac | 3 | 2 x 600 GB (minimum, see considerations at bottom of page for more details) | 64 GB (see considerations at bottom of page for more details) | 2 x 10 Gbit/s with one PXE enabled port | 8 CPU (64-bit) cores total (Intel x86_64) |

The disk speeds (RPM) chosen should be consistent within the same ring or storage policy. It is best to not use disks with mixed disk speeds within the same swift ring.

Considerations for your swift object and proxy, account, container servers RAM and disk capacity needs

swift supports a wide range of hardware configurations. For example, a swift object server may have just a few disks (a minimum of 6 for erasure codes) or up to 70 and beyond. The memory requirement increases as more disks are added. The general rule of thumb is 0.5 GB of memory per TB of storage. For example, a system with 24 hard drives at 8 TB each, giving a total capacity of 192 TB, should use 96 GB of RAM. However, this rule does not work well for a system with a small number of small hard drives or a very large number of very large drives. If the calculated value is less than 32 GB, use a minimum of 32 GB of memory. If it is more than 256 GB, use a maximum of 256 GB; there is no need to use more memory than that.
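The memory rule of thumb above can be expressed as a small calculation. The following Python sketch is illustrative only: the function name and its parameters are assumptions made for this example and are not part of SUSE OpenStack Cloud or swift. It applies 0.5 GB of memory per TB of raw disk capacity and keeps the result within the 32 GB to 256 GB range described above.

```python
def swift_object_server_memory_gb(disk_count: int, disk_size_tb: float) -> float:
    """Estimate RAM (in GB) for one swift object server.

    Hypothetical helper: 0.5 GB of RAM per TB of raw storage,
    never below 32 GB and never above 256 GB.
    """
    raw_capacity_tb = disk_count * disk_size_tb
    estimate_gb = 0.5 * raw_capacity_tb
    # Clamp the estimate to the recommended minimum and maximum.
    return min(max(estimate_gb, 32.0), 256.0)


# The example from the text: 24 drives at 8 TB each (192 TB raw).
print(swift_object_server_memory_gb(24, 8))
```

A server with only a few small drives ends up at the 32 GB floor, and a very dense server ends up at the 256 GB ceiling.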

When considering the capacity needs for the swift proxy, account, and container (PAC) servers, calculate 2% of the total raw storage size of your object servers to determine the storage required for the PAC servers. For example, using the object server described earlier (24 hard drives at 8 TB each, for a total of 192 TB) with a total of 6 object servers gives a raw total of 1152 TB. 2% of that is approximately 23 TB, so ensure that this much storage capacity is available on your swift PAC server cluster. With a cluster of three swift PAC servers, that is approximately 8 TB each.
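The PAC sizing guideline above can be worked through in the same way. This Python sketch is a hedged illustration: the function name, parameter names, and the default value for the fraction are assumptions for this example, chosen to mirror the 2% figure in the text.

```python
def pac_storage_tb(object_servers, disks_per_server, disk_size_tb,
                   pac_servers, fraction=0.02):
    """Return (raw object capacity, total PAC storage, storage per PAC server) in TB.

    Hypothetical helper: PAC storage is sized as a fraction (2% by default)
    of the raw capacity of all object servers, split evenly across PAC servers.
    """
    raw_tb = object_servers * disks_per_server * disk_size_tb
    total_pac_tb = raw_tb * fraction
    return raw_tb, total_pac_tb, total_pac_tb / pac_servers


# The example from the text: 6 object servers, 24 drives at 8 TB each, 3 PAC servers.
raw_tb, total_pac_tb, per_pac_tb = pac_storage_tb(6, 24, 8, 3)
print(f"raw: {raw_tb} TB, PAC total: {total_pac_tb:.2f} TB, per PAC server: {per_pac_tb:.2f} TB")
```

The text rounds the results to about 23 TB in total and about 8 TB per PAC server.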

Another general rule of thumb is that if you are expecting to have more than a million objects in a container then you should consider using SSDs on the swift PAC servers rather than HDDs.

4 High Availability

This chapter gives an overview of High Availability concepts and describes the highly available cloud infrastructure.

4.1 High Availability Concepts Overview

A highly available (HA) cloud ensures that a minimum level of cloud resources is always available on request, which results in uninterrupted operations for users.

In order to achieve this high availability of infrastructure and workloads, we define the scope of HA to be limited to protecting these only against single points of failure (SPOF). Single points of failure include:

Hardware SPOFs: Hardware failures can take the form of server failures, memory going bad, power failures, hypervisors crashing, hard disks dying, NIC cards breaking, switch ports failing, network cables loosening, and so forth.

Software SPOFs: Server processes can crash due to software defects, out-of-memory conditions, operating system kernel panic, and so forth.

By design, SUSE OpenStack Cloud strives to create a system architecture resilient to SPOFs, and does not attempt to automatically protect the system against multiple cascading levels of failures; such cascading failures will result in an unpredictable state. The cloud operator is encouraged to recover and restore any failed component as soon as the first level of failure occurs.

4.2 Highly Available Cloud Infrastructure

The highly available cloud infrastructure consists of the following:

High Availability of Controllers

Availability Zones

Compute with KVM

nova Availability Zones

Compute with ESX

Object Storage with swift

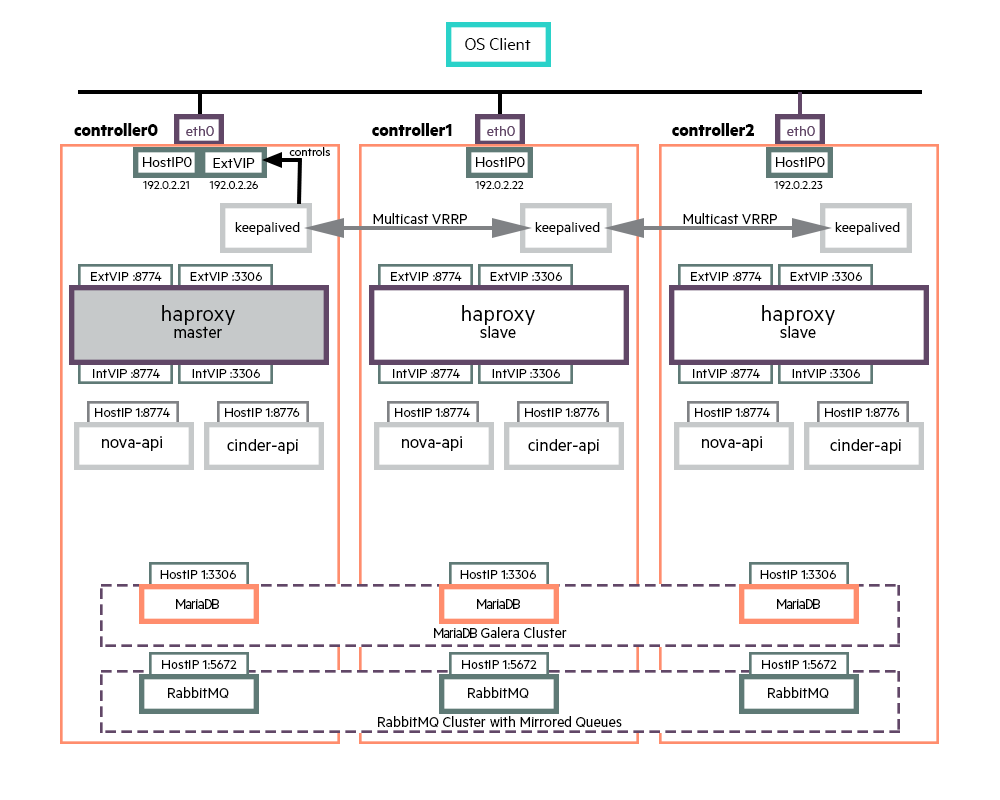

4.3 High Availability of Controllers

The SUSE OpenStack Cloud installer deploys highly available configurations of OpenStack cloud services, resilient against single points of failure.

The high availability of the controller components comes in two main forms.

Many services are stateless and multiple instances are run across the control plane in active-active mode. The API services (nova-api, cinder-api, etc.) are accessed through the HA proxy load balancer whereas the internal services (nova-scheduler, cinder-scheduler, etc.), are accessed through the message broker. These services use the database cluster to persist any data.

Note: The HA proxy load balancer also runs in active-active mode. keepalived (used for virtual IP (VIP) management) runs in active-active mode as well, with only one keepalived instance holding the VIP at any one point in time.

The high availability of the message queue service and the database service is achieved by running these in a clustered mode across the three nodes of the control plane: RabbitMQ cluster with Mirrored Queues and MariaDB Galera cluster.

The above diagram illustrates the HA architecture with the focus on VIP management and load balancing. It only shows a subset of active-active API instances and does not show examples of other services such as nova-scheduler, cinder-scheduler, etc.

In the above diagram, requests from an OpenStack client to the API services are sent to a VIP and port combination; for example, 192.0.2.26:8774 for a nova request. The load balancer listens for requests on that VIP and port. When it receives a request, it selects one of the controller nodes configured for handling that service (nova, in this case) and then forwards the request to the IP of the selected controller node on the same port.

The nova-api service, which is listening for requests on the IP of its host machine, then receives the request and deals with it accordingly. The database service is also accessed through the load balancer. RabbitMQ, on the other hand, is not currently accessed through VIP/HA proxy as the clients are configured with the set of nodes in the RabbitMQ cluster and failover between cluster nodes is automatically handled by the clients.

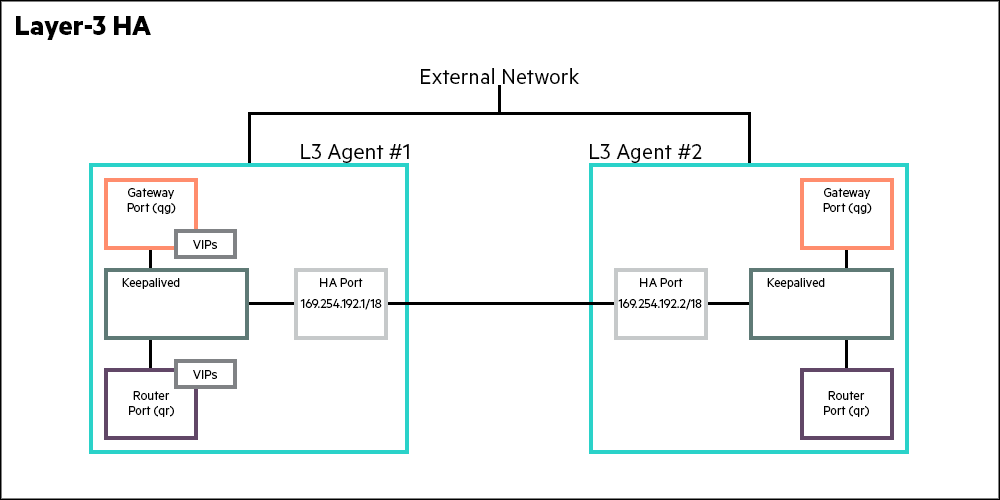

4.4 High Availability Routing - Centralized #

Incorporating high availability into a system involves implementing redundancies in the component that is being made highly available. In Centralized Virtual Router (CVR), that component is the Layer 3 agent (L3 agent). With a highly available L3 agent, all HA routers are migrated from the primary L3 agent to a secondary L3 agent upon failure. The implementation efficiency of an HA subsystem is measured by the number of packets that are lost when the secondary L3 agent is made the master.

In SUSE OpenStack Cloud, the primary and secondary L3 agents run continuously, and failover involves a rapid switchover of mastership to the secondary agent (IETF RFC 5798). The failover is essentially a switchover from an already running master to an already running slave, which substantially reduces the latency of the HA. The failover mechanism between the master and the slave is implemented using Linux's pacemaker HA resource manager. This cluster resource manager (CRM) uses VRRP (Virtual Router Redundancy Protocol) to implement the HA mechanism. VRRP is an industry-standard protocol defined in RFC 5798.

The use of VRRP for L3 HA comes with several benefits.

The primary benefit is that the failover mechanism does not involve interprocess communication overhead. Such overhead would be on the order of tens of seconds. Because no RPC mechanism is needed to make the secondary agent assume the primary agent's role, VRRP can achieve failover within 1-2 seconds.

In VRRP, the primary and secondary routers are all active. Because the routers are already running, failover is only a matter of making the router aware of its primary/master status. This switchover takes less than 2 seconds, instead of the 60+ seconds it would take to start a backup router and fail over.

The failover depends upon a heartbeat link between the primary and secondary. In SUSE OpenStack Cloud, that link uses the keepalived package of the pacemaker resource manager. Heartbeats are sent at 2-second intervals between the primary and secondary. As per the VRRP protocol, if the secondary does not hear from the master after 3 intervals, it assumes the function of the primary.

Further, all the routable IP addresses, that is, the VIPs (virtual IPs), are assigned to the primary agent.
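The heartbeat rule described above (2-second interval, takeover after 3 silent intervals) can be illustrated with a small Python sketch. It models only the detection rule, not the switchover itself, and the class and method names are hypothetical; they are not part of keepalived, pacemaker, or SUSE OpenStack Cloud.

```python
from dataclasses import dataclass


@dataclass
class BackupRouter:
    """Toy model of a secondary L3 agent watching heartbeats (hypothetical names)."""

    interval_s: float = 2.0      # heartbeat interval stated in the text
    missed_limit: int = 3        # silent intervals tolerated before takeover
    last_heartbeat_s: float = 0.0
    is_master: bool = False

    def on_heartbeat(self, now_s: float) -> None:
        # Record the time of the most recent heartbeat from the primary.
        self.last_heartbeat_s = now_s

    def tick(self, now_s: float) -> bool:
        # Promote to master once the primary has been silent for
        # missed_limit full intervals.
        silent_for = now_s - self.last_heartbeat_s
        if not self.is_master and silent_for >= self.interval_s * self.missed_limit:
            self.is_master = True
        return self.is_master


router = BackupRouter()
router.on_heartbeat(10.0)
print(router.tick(14.0))  # False: primary silent for 4 s
print(router.tick(16.0))  # True: primary silent for 6 s (3 x 2 s)
```

With the stated values, the secondary in this sketch waits for 6 seconds of silence before it takes over.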

4.5 Availability Zones #

While planning your OpenStack deployment, you should decide on how to zone various types of nodes - such as compute, block storage, and object storage. For example, you may decide to place all servers in the same rack in the same zone. For larger deployments, you may plan more elaborate redundancy schemes for redundant power, network ISP connection, and even physical firewalling between zones (this aspect is outside the scope of this document).

SUSE OpenStack Cloud offers APIs, CLIs and horizon UIs for the administrator to define, and the user to consume, availability zones for nova, cinder and swift services. This section outlines the process to deploy specific types of nodes to specific physical servers, and states the available support for these types of availability zones in the current release.

By default, SUSE OpenStack Cloud is deployed in a single availability zone upon installation. Multiple availability zones can be configured by an administrator post-install, if required. Refer to the OpenStack documentation.

4.6 Compute with KVM #

You can deploy your KVM nova-compute nodes either during initial installation or by adding compute nodes post initial installation.

While adding compute nodes post initial installation, you can specify the target physical servers for deploying the compute nodes.

Learn more about adding compute nodes in Book “Operations Guide CLM”, Chapter 15 “System Maintenance”, Section 15.1 “Planned System Maintenance”, Section 15.1.3 “Planned Compute Maintenance”, Section 15.1.3.4 “Adding Compute Node”.

4.7 Nova Availability Zones #

nova host aggregates and nova availability zones can be used to segregate nova compute nodes across different failure zones.

4.8 Compute with ESX Hypervisor #

Compute nodes deployed on ESX Hypervisor can be made highly available using the HA feature of VMware ESX Clusters. For more information on VMware HA, please refer to your VMware ESX documentation.

4.9 cinder Availability Zones #

cinder availability zones are not supported for general consumption in the current release.

4.10 Object Storage with Swift #

High availability in swift is achieved at two levels.

Control Plane

The swift API is served by multiple swift proxy nodes. Client requests are distributed across all swift proxy nodes by the HA Proxy load balancer in round-robin fashion. The HA Proxy load balancer regularly checks that each node is responding, so that if one fails, traffic is directed to the remaining nodes. The swift service will continue to operate and respond to client requests as long as at least one swift proxy server is running.
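The combination of round-robin distribution and health checking described above can be sketched in Python as follows. This is a simplified illustration, not HA Proxy's implementation; the function name, the node names, and the `is_healthy` callback are assumptions made for the example.

```python
from itertools import cycle
from typing import Callable, Iterable, Iterator, Optional


def round_robin(nodes: Iterable[str],
                is_healthy: Callable[[str], bool]) -> Iterator[Optional[str]]:
    """Yield the next healthy node in round-robin order, or None if all are down."""
    ordered = list(nodes)
    ring = cycle(ordered)
    while True:
        chosen = None
        # Try each node at most once per request.
        for _ in range(len(ordered)):
            candidate = next(ring)
            if is_healthy(candidate):
                chosen = candidate
                break
        yield chosen


down = {"proxy2"}  # hypothetical failed proxy node
picker = round_robin(["proxy1", "proxy2", "proxy3"], lambda n: n not in down)
print([next(picker) for _ in range(4)])
# ['proxy1', 'proxy3', 'proxy1', 'proxy3']
```

Requests keep being served while at least one node passes the health check, which matches the behavior stated for the swift proxy tier.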

If a swift proxy node fails in the middle of a transaction, the transaction fails. However, it is standard practice for swift clients to retry operations. This is transparent to applications that use the python-swiftclient library.

The entry-scale example cloud models contain three swift proxy nodes. However, it is possible to add additional clusters with additional swift proxy nodes to handle a larger workload or to provide additional resiliency.

Data

Multiple replicas of all data are stored. This happens for account, container and object data. The example cloud models recommend a replica count of three. However, you may change this to a higher value if needed.

When swift stores different replicas of the same item on disk, it ensures that, as far as possible, each replica is stored in a different zone, server or drive. This means that if a single server or disk drive fails, there should be two copies of the item on other servers or disk drives.

If a disk drive fails, swift will continue to store three replicas. The replicas that would normally be stored on the failed drive are “handed off” to another drive on the system. When the failed drive is replaced, the data on that drive is reconstructed by the replication process. The replication process re-creates the “missing” replicas by copying them to the drive using one of the other remaining replicas. While this is happening, swift can continue to store and retrieve data.
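The placement and hand-off behavior described above can be illustrated with a toy Python model. It is deliberately much simpler than swift's actual ring-based placement: the function name, device names, and zone names are hypothetical, and the selection order is an assumption made for the example.

```python
from typing import Dict, List, Set


def place_replicas(devices: Dict[str, str], replicas: int,
                   failed: Set[str]) -> List[str]:
    """Pick `replicas` working devices, preferring one device per zone.

    `devices` maps a device name to its zone name.
    """
    working = [d for d in sorted(devices) if d not in failed]
    chosen: List[str] = []
    used_zones: Set[str] = set()
    # First pass: at most one device per zone.
    for dev in working:
        if len(chosen) < replicas and devices[dev] not in used_zones:
            chosen.append(dev)
            used_zones.add(devices[dev])
    # Second pass: fill remaining replicas from zones already in use (hand-off).
    for dev in working:
        if len(chosen) < replicas and dev not in chosen:
            chosen.append(dev)
    return chosen


devices = {"d1": "z1", "d2": "z1", "d3": "z2", "d4": "z3"}
print(place_replicas(devices, 3, failed=set()))   # ['d1', 'd3', 'd4']
print(place_replicas(devices, 3, failed={"d3"}))  # ['d1', 'd4', 'd2']
```

In the second call, three replicas are still stored even though `d3` has failed; `d2` acts as the hand-off location until the failed drive is replaced.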

4.11 Highly Available Cloud Applications and Workloads #

Projects writing applications to be deployed in the cloud must be aware of the cloud architecture and potential points of failure and architect their applications accordingly for high availability.

Some guidelines for consideration:

Assume intermittent failures and plan for retries

OpenStack Service APIs: invocations can fail - you should carefully evaluate the response of each invocation, and retry in case of failures.

Compute: VMs can die - monitor and restart them

Network: Network calls can fail - retry should be successful

Storage: Storage connection can hiccup - retry should be successful

Build redundancy into your application tiers

Replicate VMs containing stateless services such as Web application tier or Web service API tier and put them behind load balancers. You must implement your own HA Proxy type load balancer in your application VMs.

Boot the replicated VMs into different nova availability zones.

If your VM stores state information on its local disk (Ephemeral Storage), and you cannot afford to lose it, then boot the VM off a cinder volume.

Take periodic snapshots of the VM, which will back it up to swift through glance.

Your data on ephemeral storage may get corrupted (but not your backup data in swift and not your data on cinder volumes).

Take regular snapshots of cinder volumes and also back up cinder volumes or your data exports into swift.

Instead of rolling your own highly available stateful services, use readily available SUSE OpenStack Cloud platform services such as designate, the DNS service.
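The first guideline above, assuming intermittent failures and planning for retries, can be sketched as a small Python helper. This is a minimal illustration under stated assumptions: the function name, the default attempt count, and the exponential backoff policy are choices made for the example and are not prescribed by SUSE OpenStack Cloud.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(operation: Callable[[], T], attempts: int = 3,
                      base_delay_s: float = 0.5,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Run `operation`, retrying on any exception with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return operation()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            # Wait base_delay_s, then 2x, 4x, ... before the next attempt.
            sleep(base_delay_s * (2 ** attempt))
    raise AssertionError("unreachable")
```

An application would wrap an API invocation, a network call, or a storage operation in `call_with_retries` and still evaluate the final response, because a retry can only mask transient failures.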

4.12 What is not Highly Available? #

- Cloud Lifecycle Manager

The Cloud Lifecycle Manager in SUSE OpenStack Cloud is not highly available.

- Control Plane

High availability (HA) is supported for the Network Service FWaaS. HA is not supported for VPNaaS.

- cinder Volume and Backup Services

cinder Volume and Backup Services are not highly available and are started on one controller node at a time. More information on cinder Volume and Backup Services can be found in Book “Operations Guide CLM”, Chapter 8 “Managing Block Storage”, Section 8.1 “Managing Block Storage using Cinder”, Section 8.1.3 “Managing cinder Volume and Backup Services”.

- keystone Cron Jobs

The keystone cron job is a singleton service, which can only run on a single node at a time. A manual setup process for this job will be required in case of a node failure. More information on enabling the cron job for keystone on the other nodes can be found in Book “Operations Guide CLM”, Chapter 5 “Managing Identity”, Section 5.12 “Identity Service Notes and Limitations”, Section 5.12.4 “System cron jobs need setup”.

4.13 More Information #

Part II Cloud Lifecycle Manager Overview #

This section contains information on the Input Model and the Example Configurations.

- 5 Input Model

This document describes how SUSE OpenStack Cloud input models can be used to define and configure the cloud.

- 6 Configuration Objects

The top-level cloud configuration file, cloudConfig.yml, defines some global values for SUSE OpenStack Cloud, as described in the table below.

- 7 Other Topics

Names are generated by the configuration processor for all allocated IP addresses. A server connected to multiple networks will have multiple names associated with it. One of these may be assigned as the hostname for a server via the network-group configuration (see Section 6.12, “NIC Mappings”). Na…

- 8 Configuration Processor Information Files

In addition to producing all of the data needed to deploy and configure the cloud, the configuration processor also creates a number of information files that provide details of the resulting configuration.

- 9 Example Configurations

The SUSE OpenStack Cloud 9 system ships with a collection of pre-qualified example configurations. These are designed to help you to get up and running quickly with a minimum number of configuration changes.

- 10 Modifying Example Configurations for Compute Nodes

This section contains detailed information about the Compute Node parts of the input model. For example input models, see Chapter 9, Example Configurations. For general descriptions of the input model, see Section 6.14, “Networks”.

- 11 Modifying Example Configurations for Object Storage using Swift

This section contains detailed descriptions about the swift-specific parts of the input model. For example input models, see Chapter 9, Example Configurations. For general descriptions of the input model, see Section 6.14, “Networks”. In addition, the swift ring specifications are available in the ~…

- 12 Alternative Configurations

In SUSE OpenStack Cloud 9 there are alternative configurations that we recommend for specific purposes.

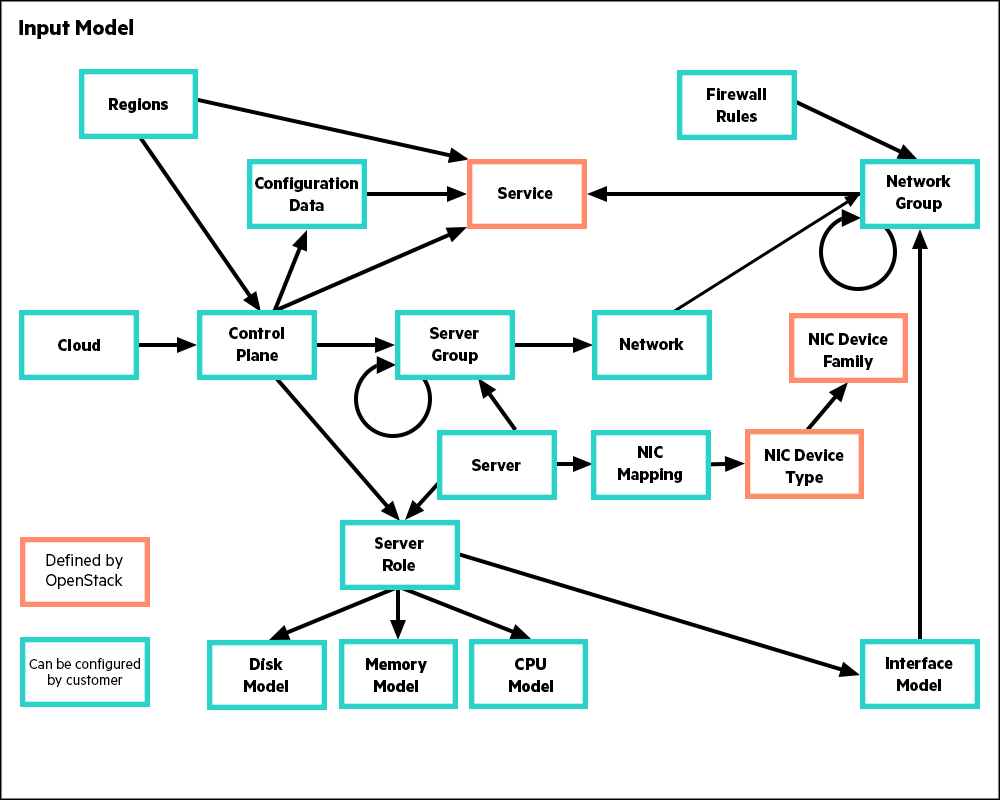

5 Input Model #

5.1 Introduction to the Input Model #

This document describes how SUSE OpenStack Cloud input models can be used to define and configure the cloud.

SUSE OpenStack Cloud ships with a set of example input models that can be used as starting points for defining a custom cloud. An input model allows you, the cloud administrator, to describe the cloud configuration in terms of:

Which OpenStack services run on which server nodes

How individual servers are configured in terms of disk and network adapters

The overall network configuration of the cloud

Network traffic separation

CIDR and VLAN assignments

The input model is consumed by the configuration processor which parses and validates the input model and outputs the effective configuration that will be deployed to each server that makes up your cloud.

The document is structured as follows:

- This explains the ideas behind the declarative model approach used in SUSE OpenStack Cloud 9 and the core concepts used in describing that model

- This section provides a description of each of the configuration entities in the input model

- In this section we provide samples and definitions of some of the more important configuration entities

5.2 Concepts #

A SUSE OpenStack Cloud 9 cloud is defined by a declarative model that is described in a series of configuration objects. These configuration objects are represented in YAML files which together constitute the various example configurations provided as templates with this release. These examples can be used nearly unchanged, with the exception of necessary changes to IP addresses and other site and hardware-specific identifiers. Alternatively, the examples may be customized to meet site requirements.

The following diagram shows the set of configuration objects and their relationships. All objects have a name that you may set to be something meaningful for your context. In the examples these names are provided in capital letters as a convention. These names have no significance to SUSE OpenStack Cloud; rather, it is the relationships between them that define the configuration.

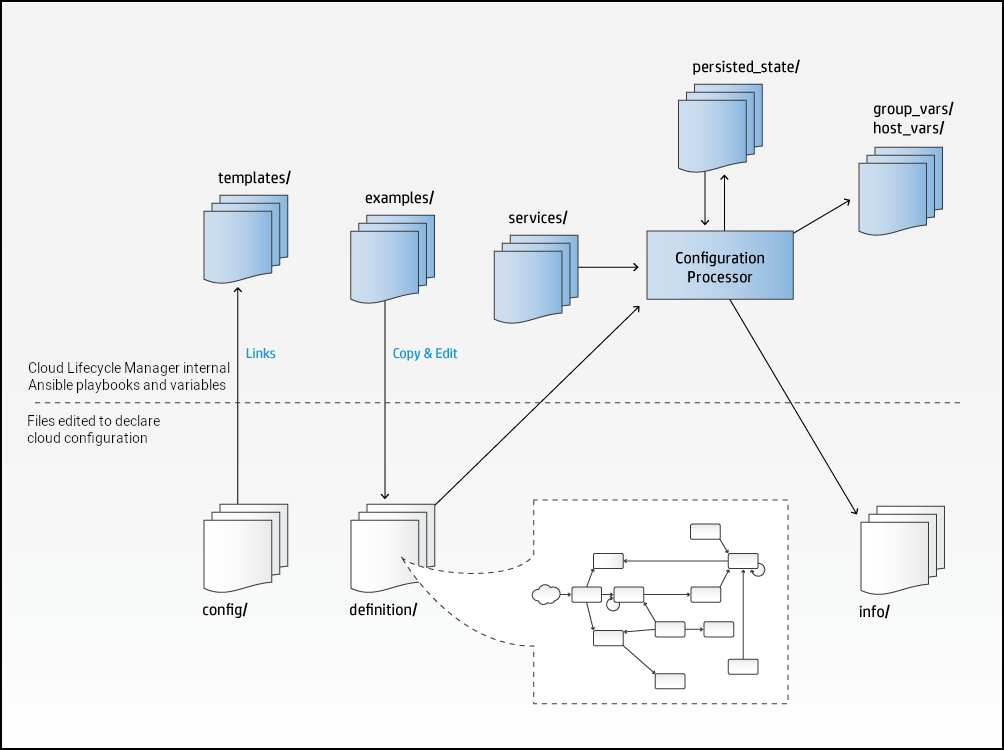

The configuration processor reads and validates the input model described in the YAML files discussed above, combines it with the service definitions provided by SUSE OpenStack Cloud and any persisted state information about the current deployment to produce a set of Ansible variables that can be used to deploy the cloud. It also produces a set of information files that provide details about the configuration.

The relationship between the file systems on the SUSE OpenStack Cloud deployment server and the configuration processor is shown in the following diagram. Below the line are the directories that you, the cloud administrator, edit to declare the cloud configuration. Above the line are the directories that are internal to the Cloud Lifecycle Manager such as Ansible playbooks and variables.

The input model is read from the ~/openstack/my_cloud/definition directory. Although the supplied examples use separate files for each type of object in the model, the names and layout of the files have no significance to the configuration processor, it simply reads all of the .yml files in this directory. Cloud administrators are therefore free to use whatever structure is best for their context. For example, you may decide to maintain separate files or sub-directories for each physical rack of servers.
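Because file names and layout carry no meaning, discovering the input model amounts to collecting every .yml file under the definition directory. The following Python sketch illustrates that idea only; it is not the configuration processor's code, the function name is hypothetical, and the recursive descent into sub-directories is an assumption based on the sub-directory example above.

```python
from pathlib import Path
from typing import List


def find_model_files(definition_dir: Path) -> List[Path]:
    """Return every .yml file under definition_dir, in a stable order."""
    # rglob descends into sub-directories (an assumption for this sketch).
    return sorted(p for p in definition_dir.rglob("*.yml") if p.is_file())


if __name__ == "__main__":
    for path in find_model_files(Path.home() / "openstack" / "my_cloud" / "definition"):
        print(path)
```

Whether the files are organized per object type or per rack, the same set of objects is found.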

As mentioned, the examples use the conventional upper casing for object names, but these strings are used only to define the relationship between objects. They have no specific significance to the configuration processor.

5.2.1 Cloud #

The Cloud definition includes a few top-level configuration values such as the name of the cloud, the host prefix, details of external services (NTP, DNS, SMTP) and the firewall settings.

The location of the cloud configuration file also tells the configuration processor where to look for the files that define all of the other objects in the input model.

5.2.2 Control Planes #

A control-plane runs one or more services distributed across clusters and resource groups.

A control-plane uses servers with a particular server-role.

A control-plane provides the operating environment for a set of services; normally consisting of a set of shared services (MariaDB, RabbitMQ, HA Proxy, Apache, etc.), OpenStack control services (API, schedulers, etc.) and the resources they are managing (compute, storage, etc.).

A simple cloud may have a single control-plane which runs all of the services. A more complex cloud may have multiple control-planes to allow for more than one instance of some services. Services that need to consume (use) another service (such as neutron consuming MariaDB, nova consuming neutron) always use the service within the same control-plane. In addition, a control-plane can describe which services can be consumed from other control-planes. It is one of the functions of the configuration processor to resolve these relationships and make sure that each consumer/service is provided with the configuration details to connect to the appropriate provider/service.

Each control-plane is structured as clusters and resources. The clusters are typically used to host the OpenStack services that manage the cloud such as API servers, database servers, neutron agents, and swift proxies, while the resources are used to host the scale-out OpenStack services such as nova-Compute or swift-Object services. This is a representation convenience rather than a strict rule; for example, it is possible to run the swift-Object service in the management cluster in a smaller-scale cloud that is not designed for scale-out object serving.

A cluster can contain one or more servers and you can have one or more clusters depending on the capacity and scalability needs of the cloud that you are building. Spreading services across multiple clusters provides greater scalability, but it requires a greater number of physical servers. A common pattern for a large cloud is to run high data volume services such as monitoring and logging in a separate cluster. A cloud with a high object storage requirement will typically also run the swift service in its own cluster.

Clusters in this context are a mechanism for grouping service components in physical servers, but all instances of a component in a control-plane work collectively. For example, if HA Proxy is configured to run on multiple clusters within the same control-plane, then all of those instances will work as a single instance of the ha-proxy service.

Both clusters and resources define the type (via a list of server-roles) and number of servers (min and max or count) they require.

The control-plane can also define a list of failure-zones (server-groups) from which to allocate servers.

5.2.2.1 Control Planes and Regions #

A region in OpenStack terms is a collection of URLs that together provide a consistent set of services (nova, neutron, swift, etc). Regions are represented in the keystone identity service catalog. In SUSE OpenStack Cloud, multiple regions are not supported. Only Region0 is valid.

In a simple single control-plane cloud, there is no need for a separate region definition and the control-plane itself can define the region name.

5.2.3 Services #

A control-plane runs one or more services.

A service is the collection of service-components that provide a particular feature; for example, nova provides the compute service and consists of the following service-components: nova-api, nova-scheduler, nova-conductor, nova-novncproxy, and nova-compute. Some services, like the authentication/identity service keystone, only consist of a single service-component.

To define your cloud, all you need to know about a service are the names of the service-components. The details of the services themselves and how they interact with each other are captured in service definition files provided by SUSE OpenStack Cloud.

When specifying your SUSE OpenStack Cloud cloud you have to decide where components will run and how they connect to the networks. For example, should they all run in one control-plane sharing common services or be distributed across multiple control-planes to provide separate instances of some services? The SUSE OpenStack Cloud supplied examples provide solutions for some typical configurations.

Where services run is defined in the control-plane. How they connect to networks is defined in the network-groups.

5.2.4 Server Roles #

Clusters and resources use servers with a particular set of server-roles.

You are going to be running the services on physical servers, and you are going to need a way to specify which type of servers you want to use where. This is defined via the server-role. Each server-role describes how to configure the physical aspects of a server to fulfill the needs of a particular role. You will generally use a different role whenever the servers are physically different (have different disks or network interfaces) or if you want to use some specific servers in a particular role (for example to choose which of a set of identical servers are to be used in the control plane).

Each server-role has a relationship to four other entities:

The disk-model specifies how to configure and use a server's local storage and it specifies disk sizing information for virtual machine servers. The disk model is described in the next section.

The interface-model describes how a server's network interfaces are to be configured and used. This is covered in more detail in the networking section.

An optional memory-model specifies how to configure and use huge pages. The memory-model specifies memory sizing information for virtual machine servers.

An optional cpu-model specifies how the CPUs will be used by nova and by DPDK. The cpu-model specifies CPU sizing information for virtual machine servers.

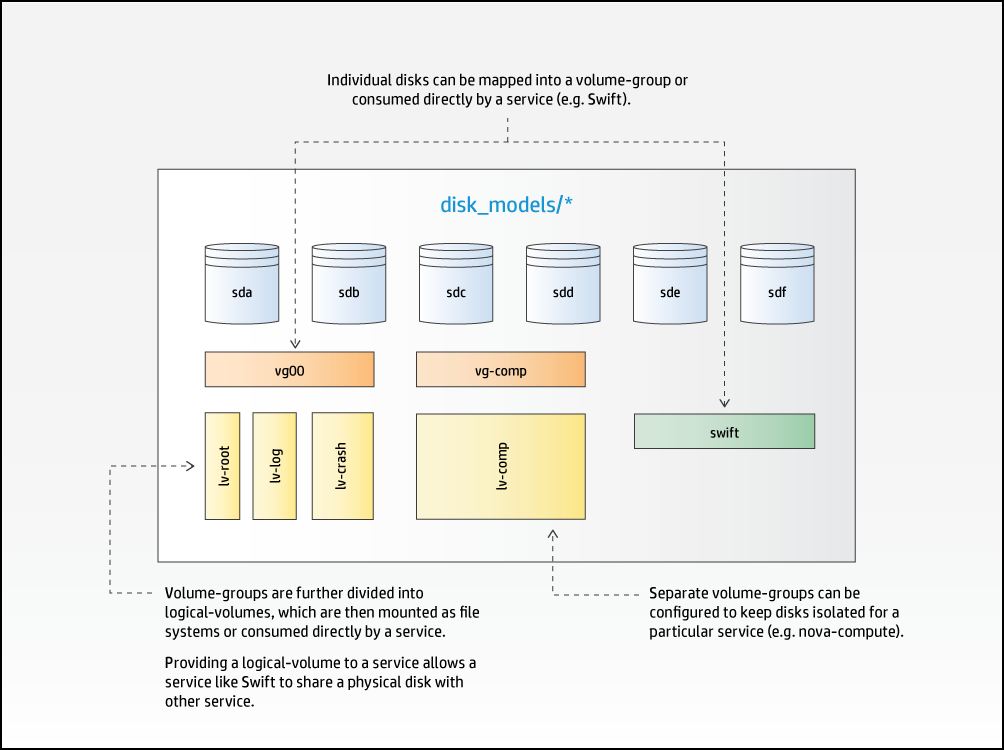

5.2.5 Disk Model #

Each physical disk device is associated with a device-group or a volume-group.

Device-groups are consumed by services.

Volume-groups are divided into logical-volumes.

Logical-volumes are mounted as file systems or consumed by services.

Disk-models define how local storage is to be configured and presented to services. Disk-models are identified by a name, which you will specify. The SUSE OpenStack Cloud examples provide some typical configurations. As this is an area that varies with respect to the services that are hosted on a server and the number of disks available, it is impossible to cover all possible permutations you may need to express via modifications to the examples.

Within a disk-model, disk devices are assigned to either a device-group or a volume-group.

A device-group is a set of one or more disks that are to be consumed directly by a service. For example, a set of disks to be used by swift. The device-group identifies the list of disk devices, the service, and a few service-specific attributes that tell the service about the intended use (for example, in the case of swift this is the ring names). When a device is assigned to a device-group, the associated service is responsible for the management of the disks. This management includes the creation and mounting of file systems. (swift can provide additional data integrity when it has full control over the file systems and mount points.)

A volume-group is used to present disk devices in an LVM volume group. It also contains details of the logical volumes to be created including the file system type and mount point. Logical volume sizes are expressed as a percentage of the total capacity of the volume group. A logical-volume can also be consumed by a service in the same way as a device-group. This allows services to manage their own devices on configurations that have limited numbers of disk drives.
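Because logical volume sizes are expressed as percentages of the volume group, the resulting sizes depend on the capacity of the disks behind it. The arithmetic can be sketched in Python as follows; the function name, the volume names, and the 500 GiB capacity are illustrative assumptions, and rejecting totals above 100% is a choice made for this sketch rather than documented validation behavior.

```python
from typing import Dict


def logical_volume_sizes(vg_capacity_gib: float,
                         percentages: Dict[str, float]) -> Dict[str, float]:
    """Convert per-volume percentages of a volume group into sizes in GiB."""
    total = sum(percentages.values())
    if total > 100:
        raise ValueError(f"logical volumes request {total}% of the volume group")
    return {name: vg_capacity_gib * pct / 100
            for name, pct in percentages.items()}


print(logical_volume_sizes(500, {"root": 35, "log": 50, "crash": 10}))
# {'root': 175.0, 'log': 250.0, 'crash': 50.0}
```

The same percentages applied to a larger volume group yield proportionally larger volumes, which is why one disk model can be reused across servers with different disk sizes.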

Disk models also provide disk sizing information for virtual machine servers.

5.2.6 Memory Model #

Memory models define how the memory of a server should be configured to meet the needs of a particular role. They allow a number of HugePages to be defined at both the server and numa-node level.

Memory models also provide memory sizing information for virtual machine servers.

Memory models are optional - it is valid to have a server role without a memory model.

5.2.7 CPU Model #

CPU models define how CPUs of a server will be used. The model allows CPUs to be assigned for use by components such as nova (for VMs) and Open vSwitch (for DPDK). It also allows those CPUs to be isolated from the general kernel SMP balancing and scheduling algorithms.

CPU models also provide CPU sizing information for virtual machine servers.

CPU models are optional - it is valid to have a server role without a CPU model.

5.2.8 Servers #

Servers have a server-role which determines how they will be used in the cloud.

Servers (in the input model) enumerate the resources available for your cloud. In addition, in this definition file you can either provide SUSE OpenStack Cloud with all of the details it needs to PXE boot and install an operating system onto the server, or, if you prefer to use your own operating system installation tooling, you can simply provide the details needed to be able to SSH into the servers and start the deployment.

The address specified for the server will be the one used by SUSE OpenStack Cloud for lifecycle management and must be part of a network which is in the input model. If you are using SUSE OpenStack Cloud to install the operating system this network must be an untagged VLAN. The first server must be installed manually from the SUSE OpenStack Cloud ISO and this server must be included in the input model as well.

In addition to the network details used to install or connect to the server, each server defines what its server-role is and to which server-group it belongs.

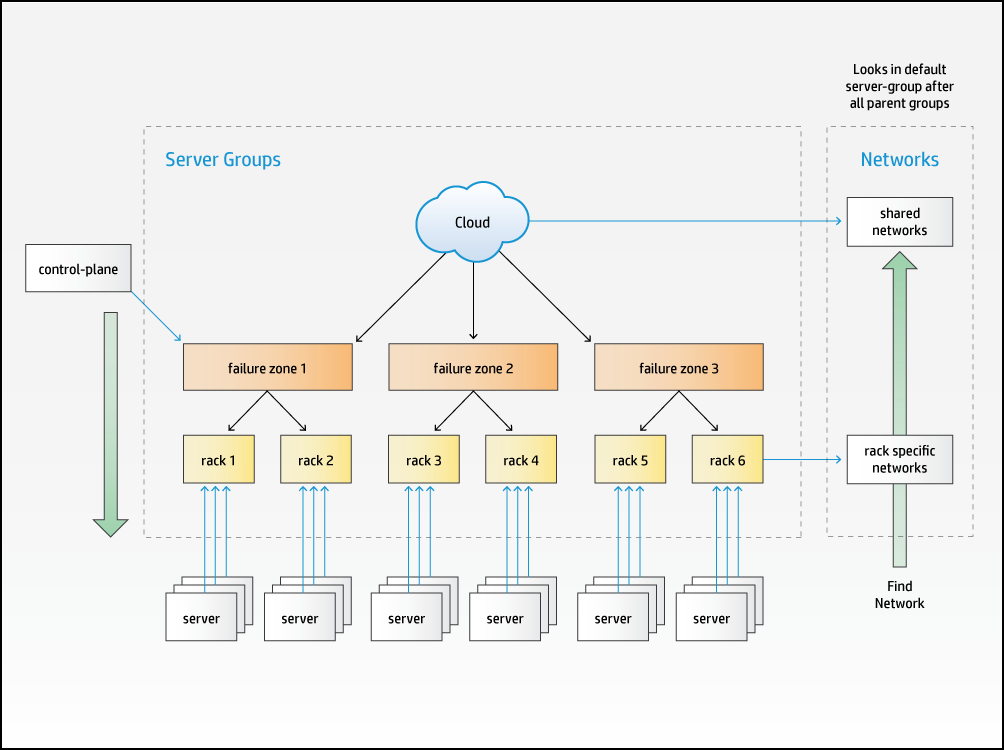

5.2.9 Server Groups #

A server is associated with a server-group.

A control-plane can use server-groups as failure zones for server allocation.

A server-group may be associated with a list of networks.

A server-group can contain other server-groups.

The practice of locating physical servers in a number of racks or enclosures in a data center is common. Such racks generally provide a degree of physical isolation that allows for separate power and/or network connectivity.

In the SUSE OpenStack Cloud model we support this configuration by allowing you to define a hierarchy of server-groups. Each server is associated with one server-group, normally at the bottom of the hierarchy.

Server-groups are an optional part of the input model - if you do not define any, then all servers and networks will be allocated as if they are part of the same server-group.

5.2.9.1 Server Groups and Failure Zones #

A control-plane defines a list of server-groups as the failure zones from which it wants to use servers. All servers in a server-group listed as a failure zone in the control-plane and any server-groups they contain are considered part of that failure zone for allocation purposes. The following example shows how three levels of server-groups can be used to model a failure zone consisting of multiple racks, each of which in turn contains a number of servers.

When allocating servers, the configuration processor will traverse down the hierarchy of server-groups listed as failure zones until it can find an available server with the required server-role. If the allocation policy is defined to be strict, it will allocate servers equally across each of the failure zones. A cluster or resource-group can also independently specify the failure zones it wants to use if needed.
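The downward traversal and the strict allocation policy described above can be sketched in Python. This is a simplified model, not the configuration processor's algorithm: the class and function names are hypothetical, and requiring the server count to divide evenly across the failure zones is an assumption made to keep the example short.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ServerGroup:
    name: str
    servers: Dict[str, str] = field(default_factory=dict)  # server id -> server-role
    children: List["ServerGroup"] = field(default_factory=list)


def available(group: ServerGroup, role: str) -> List[str]:
    """Collect servers with `role` in this group and in every group below it."""
    found = [sid for sid, r in group.servers.items() if r == role]
    for child in group.children:
        found.extend(available(child, role))
    return found


def allocate_strict(zones: List[ServerGroup], role: str,
                    count: int) -> Dict[str, List[str]]:
    """Take `count` servers in total, the same number from each failure zone."""
    if not zones or count % len(zones) != 0:
        raise ValueError("count must divide evenly across the failure zones")
    per_zone = count // len(zones)
    result: Dict[str, List[str]] = {}
    for zone in zones:
        candidates = available(zone, role)
        if len(candidates) < per_zone:
            raise ValueError(f"zone {zone.name} has too few {role} servers")
        result[zone.name] = candidates[:per_zone]
    return result
```

For example, with two failure zones that each contain at least two servers in a compute role, `allocate_strict(zones, role, 4)` takes two servers from each zone, regardless of which rack inside the zone they sit in.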

5.2.9.2 Server Groups and Networks #

Each L3 network in a cloud must be associated with all or some of the servers, typically following a physical pattern (such as having separate networks for each rack or set of racks). This is also represented in the SUSE OpenStack Cloud model via server-groups; each group lists zero or more networks to which servers associated with server-groups at or below this point in the hierarchy are connected.

When the configuration processor needs to resolve the specific network a server should be configured to use, it traverses up the hierarchy of server-groups, starting with the group the server is directly associated with, until it finds a server-group that lists a network in the required network group.
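The upward traversal described above can be sketched in Python. The class, function, group, and network names are hypothetical, and the sketch assumes that each server-group lists at most one network per network group.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Group:
    name: str
    parent: Optional["Group"] = None
    # network-group name -> network name listed on this server-group
    networks: Dict[str, str] = field(default_factory=dict)


def resolve_network(start: Group, network_group: str) -> Optional[str]:
    """Walk upward from `start` until a group lists a network in network_group."""
    group: Optional[Group] = start
    while group is not None:
        if network_group in group.networks:
            return group.networks[network_group]
        group = group.parent
    return None  # no server-group in the chain lists a matching network


cloud = Group("CLOUD", networks={"EXTERNAL-VM": "EXTERNAL-VM-NET"})
az1 = Group("AZ1", parent=cloud)
rack1 = Group("RACK1", parent=az1, networks={"MANAGEMENT": "MANAGEMENT-NET-RACK1"})

print(resolve_network(rack1, "MANAGEMENT"))   # MANAGEMENT-NET-RACK1
print(resolve_network(rack1, "EXTERNAL-VM"))  # EXTERNAL-VM-NET
```

A server in `RACK1` picks up the per-rack network directly and finds the cloud-wide network two levels up.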

The level in the hierarchy at which a network is associated will depend on the span of connectivity it must provide. In the above example there might be networks in some network-groups which are per rack (that is, Rack 1 and Rack 2 list different networks from the same network-group) and networks in a different network-group that span failure zones (the network used to provide floating IP addresses to virtual machines, for example).

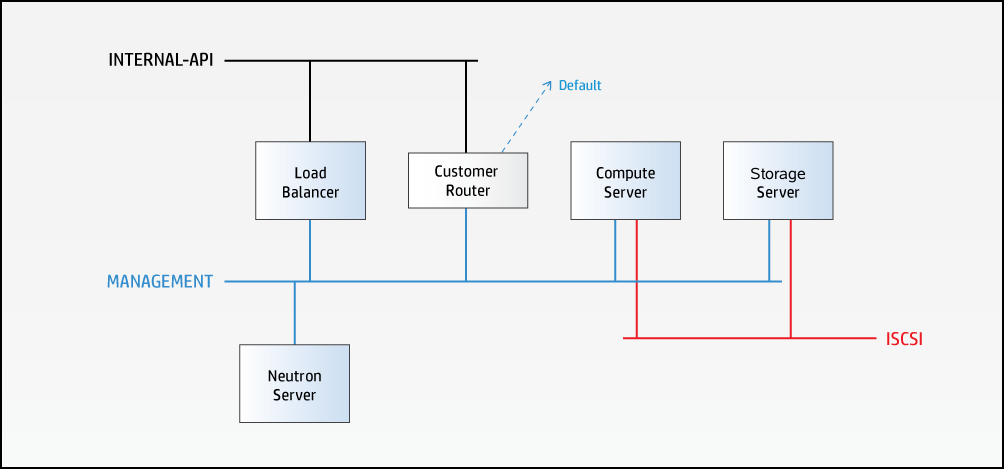

5.2.10 Networking #

In addition to the mapping of services to specific clusters and resources we must also be able to define how the services connect to one or more networks.

In a simple cloud there may be a single L3 network but more typically there are functional and physical layers of network separation that need to be expressed.

Functional network separation provides different networks for different types of traffic; for example, it is common practice in even small clouds to separate the External APIs that users will use to access the cloud and the external IP addresses that users will use to access their virtual machines. In more complex clouds it is common to also separate out virtual networking between virtual machines, block storage traffic, and volume traffic onto their own sets of networks. In the input model, this level of separation is represented by network-groups.

Physical separation is required when there are separate L3 network segments providing the same type of traffic; for example, where each rack uses a different subnet. This level of separation is represented in the input model by the networks within each network-group.

5.2.10.1 Network Groups #

Service endpoints attach to networks in a specific network-group.

Network-groups can define routes to other networks.

Network-groups encapsulate the configuration for services via network-tags.

A network-group defines the traffic separation model and all of the properties that are common to the set of L3 networks that carry each type of traffic. Network-groups define where services are attached to the network model and the routing within that model.

In terms of connectivity, all that has to be captured in the network-groups definition are the same service-component names that are used when defining control-planes. SUSE OpenStack Cloud also allows a default attachment to be used to specify "all service-components" that are not explicitly connected to another network-group. So, for example, to isolate swift traffic, the swift-account, swift-container, and swift-object service components are attached to an "Object" network-group and all other services are connected to "MANAGEMENT" via the default relationship.
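The default attachment rule described above can be sketched in Python: an explicit attachment wins, and everything else falls back to the network group that carries the default. The function name, the dictionary layout, and the literal `"default"` marker are assumptions made for this illustration; they do not reproduce the input model's YAML schema.

```python
from typing import Dict, List


def network_group_for(component: str, attachments: Dict[str, List[str]]) -> str:
    """Return the network-group that a service-component attaches to.

    `attachments` maps a network-group name to the components listed on it;
    the entry "default" stands for all components not listed elsewhere.
    """
    default_group = None
    for group, components in attachments.items():
        if component in components:
            return group  # an explicit attachment wins
        if "default" in components:
            default_group = group
    if default_group is None:
        raise LookupError(f"no network-group accepts {component}")
    return default_group


attachments = {
    "MANAGEMENT": ["default"],
    "OBJECT": ["swift-account", "swift-container", "swift-object"],
}
print(network_group_for("swift-object", attachments))  # OBJECT
print(network_group_for("nova-api", attachments))      # MANAGEMENT
```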

The name of the "MANAGEMENT" network-group cannot be changed. It must be upper case. Every SUSE OpenStack Cloud requires this network group in order to be valid.

The details of how each service connects, such as what port it uses, if it should be behind a load balancer, if and how it should be registered in keystone, and so forth, are defined in the service definition files provided by SUSE OpenStack Cloud.

In any configuration with multiple networks, controlling the routing is a major consideration. In SUSE OpenStack Cloud, routing is controlled at the network-group level. First, all networks are configured to provide the route to any other networks in the same network-group. In addition, a network-group may be configured to provide the route to any other network-groups in the same cloud; for example, if the internal APIs are in a dedicated network-group (a common configuration in a complex network because a network group with load balancers cannot be segmented) then other network-groups may need to include a route to the internal API network-group so that services can access the internal API endpoints. Routes may also be required to define how to access an external storage network or to define a general default route.

As part of the SUSE OpenStack Cloud deployment, networks are configured to act as the default route for all traffic that was received via that network (so that response packets always return via the network the request came from).

Note that SUSE OpenStack Cloud will configure the routing rules on the servers it deploys and will validate that the routes between services exist in the model, but ensuring that gateways can provide the required routes is the responsibility of your network configuration. The configuration processor provides information about the routes it is expecting to be configured.

For a detailed description of how the configuration processor validates routes, refer to Section 7.6, “Network Route Validation”.
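The routing relationships described above might be expressed along the following lines. This is a sketch only: it assumes a `routes` key on each network group that accepts either other network group names or the value `default`. The group names `INTERNAL-API` and `GUEST` are hypothetical, and component attachments are omitted for brevity.

```yaml
  network-groups:
    # Dedicated group holding the internal API endpoints (hypothetical name).
    - name: INTERNAL-API
      hostname-suffix: intapi

    # Networks in this group route to the internal API group
    # so that services can reach the internal endpoints.
    - name: GUEST
      hostname-suffix: guest
      routes:
        - INTERNAL-API

    # Networks in this group also provide the general default route.
    - name: MANAGEMENT
      hostname-suffix: mgmt
      routes:
        - default
```

The model only declares which routes are expected. The gateways on your physical network still have to provide them.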

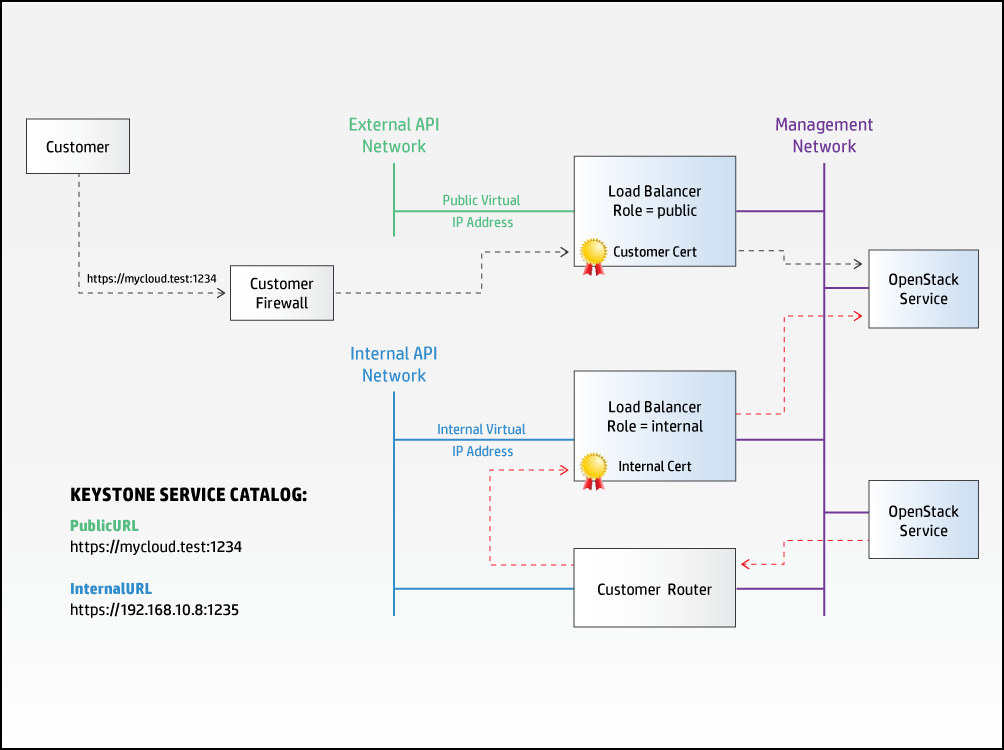

5.2.10.1.1 Load Balancers

Load balancers provide a specific type of routing and are defined as a relationship between the virtual IP address (VIP) on a network in one network group and a set of service endpoints (which may be on networks in the same or a different network group).

As each load balancer is defined as providing a virtual IP on a network group, it follows that those network groups can each only have one network associated to them.

The load balancer definition includes a list of service components and endpoint roles it will provide a virtual IP for. This model allows service-specific load balancers to be defined on different network groups. A "default" value is used to express "all service-components" which require a virtual IP address and are not explicitly configured in another load balancer configuration. The details of how the load balancer should be configured for each service, such as which ports to use, how to check for service liveness, etc., are provided in the SUSE OpenStack Cloud supplied service definition files.

Where there are multiple instances of a service (for example, in a cloud with multiple control-planes), each control-plane needs its own set of virtual IP addresses and different values for some properties such as the external name and security certificate. To accommodate this in SUSE OpenStack Cloud 9, load-balancers are defined as part of the control-plane, with the network groups defining just which load-balancers are attached to them.

Load balancers are always implemented by an ha-proxy service in the same control-plane as the services.

5.2.10.1.2 Separation of Public, Admin, and Internal Endpoints

The list of endpoint roles for a load balancer makes it possible to configure separate load balancers for public and internal access to services, and the configuration processor uses this information both to ensure the correct registrations in keystone and to make sure the internal traffic is routed to the correct endpoint. SUSE OpenStack Cloud services are configured to only connect to other services via internal virtual IP addresses and endpoints, allowing the name and security certificate of public endpoints to be controlled by the customer and set to values that may not be resolvable/accessible from the servers making up the cloud.

Note that each load balancer defined in the input model will be allocated a separate virtual IP address even when the load-balancers are part of the same network group. Because of the need to be able to separate both public and internal access, SUSE OpenStack Cloud will not allow a single load balancer to provide both public and internal access. Load balancers in this context are logical entities (sets of rules to transfer traffic from a virtual IP address to one or more endpoints).
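To make the separation concrete, here is a minimal sketch of two load balancers defined in a control plane and attached to network groups by name. The key names (`provider`, `components`, `roles`, `external-name`) and the values `lb`, `extlb`, `EXTERNAL-API`, and `cloud.example.com` are assumptions made for illustration. The authoritative list of keys is in Section 6.2.4, “Load Balancer Definitions in Control Planes”.

```yaml
  control-planes:
    - name: control-plane-1
      load-balancers:
        # Internal and admin endpoints are served by one load balancer (one VIP).
        - provider: ip-cluster
          name: lb
          components:
            - default
          roles:
            - internal
            - admin

        # Public endpoints need a separate load balancer, and so a separate VIP.
        - provider: ip-cluster
          name: extlb
          external-name: cloud.example.com   # placeholder public hostname
          components:
            - default
          roles:
            - public

  network-groups:
    # Each network group only names the load balancers attached to it.
    - name: MANAGEMENT
      load-balancers:
        - lb
    - name: EXTERNAL-API                     # hypothetical group name
      load-balancers:
        - extlb
```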

The following diagram shows a possible configuration in which the hostname associated with the public URL has been configured to resolve to a firewall controlling external access to the cloud. Within the cloud, SUSE OpenStack Cloud services are configured to use the internal URL to access a separate virtual IP address.

5.2.10.1.3 Network Tags

Network tags are defined by some SUSE OpenStack Cloud service components and are used to convey information between the network model and the service, allowing the dependent aspects of the service to be automatically configured.

Network tags also convey requirements a service may have for aspects of the server network configuration, for example, that a bridge is required on the corresponding network device on a server where that service-component is installed.

See Section 6.13.2, “Network Tags” for more information on specific tags and their usage.

5.2.10.2 Networks

A network is part of a network group.

Networks are fairly simple definitions. Each network defines the details of its VLAN, optional address details (CIDR, start and end address, gateway address), and which network group it is a member of.
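A network definition covering those details could look roughly like this. The key names follow the pattern used in the example configurations as best understood here and should be treated as assumptions. The network name, VLAN ID, and addresses are placeholders.

```yaml
  networks:
    - name: MANAGEMENT-NET            # placeholder name
      # VLAN details
      vlanid: 4
      tagged-vlan: false
      # Optional address details
      cidr: 192.168.10.0/24
      start-address: 192.168.10.10
      end-address: 192.168.10.250
      gateway-ip: 192.168.10.1
      # The network group this network is a member of
      network-group: MANAGEMENT
```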

5.2.10.3 Interface Model

A server role identifies an interface model that describes how its network interfaces are to be configured and used.

Network groups are mapped onto specific network interfaces via an interface model, which describes the network devices that need to be created (bonds, ovs-bridges, etc.) and their properties.

An interface model acts like a template; it can define how some or all of the network groups are to be mapped for a particular combination of physical NICs. However, it is the service components on each server that determine which network groups are required and hence which interfaces and networks will be configured. This means that interface models can be shared between different server roles. For example, an API role and a database role may share an interface model even though they may have different disk models and they will require a different subset of the network groups.

Within an interface model, physical ports are identified by a device name, which in turn is resolved to a physical port on a per-server basis via a NIC mapping. To allow different physical servers to share an interface model, the NIC mapping is defined as a property of each server.

The interface model can also be used to describe how network devices are to be configured for use with DPDK, SR-IOV, and PCI Passthrough.
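The relationship between a server role and its interface model can be sketched as follows. Treat the key names as assumptions to check against the shipped examples. The role, model, and device names (`CONTROLLER-INTERFACES`, `CONTROLLER-DISKS`, `hed1`, `hed2`, `EXTERNAL-API`) are illustrative only.

```yaml
  server-roles:
    - name: CONTROLLER-ROLE
      interface-model: CONTROLLER-INTERFACES
      disk-model: CONTROLLER-DISKS        # placeholder disk model name

  interface-models:
    - name: CONTROLLER-INTERFACES
      network-interfaces:
        # A bond built from two ports; hed1 and hed2 are device names
        # that a NIC mapping resolves to physical ports on each server.
        - name: BOND0
          device:
            name: bond0
          bond-data:
            provider: linux
            options:
              mode: active-backup
            devices:
              - name: hed1
              - name: hed2
          # Network groups carried on this interface. Only the groups needed
          # by the service components on a given server are configured.
          network-groups:
            - MANAGEMENT
            - EXTERNAL-API
```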

5.2.10.4 NIC Mapping

When a server has more than a single physical network port, a NIC mapping is required to unambiguously identify each port. Standard Linux mapping of ports to interface names at the time of initial discovery (for example, eth0, eth1, eth2, ...) is not uniformly consistent from server to server, so a mapping of PCI bus address to interface name is used instead.

NIC mappings are also used to specify the device type for interfaces that are to be used for SR-IOV or PCI Passthrough. Each SUSE OpenStack Cloud release includes the data for the supported device types.
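A NIC mapping for a two-port server, and a server entry that refers to it, might be written as in the sketch below. The key names are assumptions based on the pattern of the example configurations. The mapping name, PCI bus addresses, server ID, and IP address are invented for illustration and must be replaced with values discovered on your hardware.

```yaml
  nic-mappings:
    - name: TWO-PORT-SERVER             # placeholder mapping name
      physical-ports:
        # Each entry ties a stable device name to a PCI bus address.
        - logical-name: hed1
          type: simple-port
          bus-address: "0000:07:00.0"
        - logical-name: hed2
          type: simple-port
          bus-address: "0000:08:00.0"

  servers:
    - id: controller1                   # placeholder server ID
      role: CONTROLLER-ROLE
      ip-addr: 192.168.10.3
      # The NIC mapping is a property of the server, so servers with
      # different hardware can still share one interface model.
      nic-mapping: TWO-PORT-SERVER
```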

5.2.10.5 Firewall Configuration

The configuration processor uses the details it has about which networks and ports service components use to create a set of firewall rules for each server. The model allows additional user-defined rules on a per network group basis.
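A user-defined rule scoped to one network group could be sketched like this. The object name `firewall-rules` and the rule keys shown are assumptions drawn from the general shape of the example configurations. The rule name, port, and address prefix are placeholders.

```yaml
  firewall-rules:
    - name: ADMIN-SSH                   # placeholder rule name
      # The rule applies only to networks in the listed network groups.
      network-groups:
        - MANAGEMENT
      rules:
        # Allow inbound TCP port 22 from a hypothetical admin subnet.
        - type: allow
          remote-ip-prefix: 192.168.10.0/24
          port-range-min: 22
          port-range-max: 22
          protocol: tcp
```

Rules like this are added to the ones the configuration processor generates from the service definitions. They do not replace them.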

5.2.11 Configuration Data

Configuration Data is used to provide settings which have to be applied in a specific context, or where the data needs to be verified against or merged with other values in the input model.

For example, when defining a neutron provider network to be used by Octavia, the network needs to be included in the routing configuration generated by the Configuration Processor.
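The Octavia case might look something like the following sketch, in which a named configuration-data object is referenced from the control plane. The object name `NEUTRON-CONFIG-CP1` appears in the control plane example in this guide. Everything under `data` (the `neutron_provider_networks` key, the network name, VLAN ID, and CIDR) is an assumption made for illustration and should be checked against the example configurations.

```yaml
  configuration-data:
    - name: NEUTRON-CONFIG-CP1
      services:
        - neutron
      data:
        neutron_provider_networks:
          # Hypothetical provider network used for Octavia management traffic.
          - name: OCTAVIA-MGMT-NET
            provider:
              - network_type: vlan
                physical_network: physnet1
                segmentation_id: 106
            # Because the CIDR is part of the input model, the configuration
            # processor can take it into account when generating routes.
            cidr: 172.30.1.0/24

  control-planes:
    - name: control-plane-1
      # The control plane selects configuration data by name.
      configuration-data:
        - NEUTRON-CONFIG-CP1
```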

6 Configuration Objects

6.1 Cloud Configuration

The top-level cloud configuration file, cloudConfig.yml, defines some global values for SUSE OpenStack Cloud, as described in the table below.

The snippet below shows an example of the cloud configuration file.

    ---
      product:
        version: 2

      cloud:
        name: entry-scale-kvm

        hostname-data:
          host-prefix: ardana
          member-prefix: -m

        ntp-servers:
          - "ntp-server1"

        # dns resolving configuration for your site
        dns-settings:
          nameservers:
            - name-server1

        firewall-settings:
          enable: true
          # log dropped packets
          logging: true

        audit-settings:
          audit-dir: /var/audit
          default: disabled
          enabled-services:
            - keystone

| Key | Value Description |
|---|---|
| name | An administrator-defined name for the cloud. |
| hostname-data (optional) | Provides control over some parts of the generated names (see Section 7.2, “Name Generation”). Consists of two values: host-prefix and member-prefix. |
| ntp-servers (optional) | A list of external NTP servers your cloud has access to. If specified by name, the names need to be resolvable via the external DNS nameservers you specify in dns-settings. All servers running the "ntp-server" component will be configured to use these external NTP servers. |
| dns-settings (optional) | DNS configuration data that will be applied to all servers. See the example configurations for a full list of values. |
| smtp-settings (optional) | SMTP client configuration data that will be applied to all servers. See the example configurations for a full list of values. |
| firewall-settings (optional) | Used to enable/disable the firewall feature and to enable/disable logging of dropped packets. The default is to have the firewall enabled. |
| audit-settings (optional) | Used to enable/disable the production of audit data from services. The default is to have audit disabled for all services. |

6.2 Control Plane

The snippet below shows the start of the control plane definition file.

    ---
      product:
        version: 2

      control-planes:
        - name: control-plane-1
          control-plane-prefix: cp1
          region-name: region0
          failure-zones:
            - AZ1
            - AZ2
            - AZ3
          configuration-data:
            - NEUTRON-CONFIG-CP1
            - OCTAVIA-CONFIG-CP1
          common-service-components:
            - logging-producer
            - monasca-agent
            - stunnel
            - lifecycle-manager-target
          clusters:
            - name: cluster1
              cluster-prefix: c1
              server-role: CONTROLLER-ROLE
              member-count: 3
              allocation-policy: strict
              service-components:
                - lifecycle-manager
                - ntp-server
                - swift-ring-builder
                - mysql
                - ip-cluster
                ...

          resources:
            - name: compute
              resource-prefix: comp
              server-role: COMPUTE-ROLE
              allocation-policy: any
              min-count: 0
              service-components:
                - ntp-client
                - nova-compute
                - nova-compute-kvm
                - neutron-l3-agent
                ...

| Key | Value Description |
|---|---|
| name | This name identifies the control plane. This value is used to persist server allocations (see Section 7.3, “Persisted Data”) and cannot be changed once servers have been allocated. |
| control-plane-prefix (optional) | The control-plane-prefix is used as part of the hostname (see Section 7.2, “Name Generation”). If not specified, the control plane name is used. |
| region-name | This name identifies the keystone region within which services in the control plane will be registered. In SUSE OpenStack Cloud, multiple regions are not supported. For clouds consisting of multiple control planes, this attribute should be omitted and the regions object should be used to set the region name. |
| uses (optional) | Identifies the services this control plane will consume from other control planes (see Section 6.2.3, “Multiple Control Planes”). |
| load-balancers (optional) | A list of load balancer definitions for this control plane (see Section 6.2.4, “Load Balancer Definitions in Control Planes”). For a multi control-plane cloud, load balancers must be defined in each control-plane. For a single control-plane cloud, they may be defined either in the control plane or as part of a network group. |
| common-service-components (optional) | This lists a set of service components that run on all servers in the control plane (clusters and resource pools). |
| failure-zones (optional) | A list of server group names that servers for this control plane will be allocated from. If no failure-zones are specified, only servers not associated with a server group will be used. (See Section 5.2.9.1, “Server Groups and Failure Zones” for a description of server-groups as failure zones.) |
| configuration-data (optional) | A list of configuration data settings to be used for services in this control plane (see Section 5.2.11, “Configuration Data”). |
| clusters | A list of clusters for this control plane (see Section 6.2.1, “Clusters”). |
| resources | A list of resource groups for this control plane (see Section 6.2.2, “Resources”). |

6.2.1 Clusters

| Key | Value Description |

|---|---|

| name |

Cluster and resource names must be unique within a control plane. This value is used to persist server allocations (see Section 7.3, “Persisted Data”) and cannot be changed once servers have been allocated. |

| cluster-prefix (optional) |

The cluster prefix is used in the hostname (see Section 7.2, “Name Generation”). If not supplied then the cluster name is used. |

| server-role |

This can either be a string (for a single role) or a list of roles. Only servers matching one of the specified will be allocated to this cluster. (see Section 5.2.4, “Server Roles” for a description of server roles) |

| service-components |

The list of to be deployed on the servers allocated for the cluster. (The common-service-components for the control plane are also deployed.) |

|

member-count min-count max-count (all optional) |

Defines the number of servers to add to the cluster. The number of servers that can be supported in a cluster depends on the services it is running. For example MariaDB and RabbitMQ can only be deployed on clusters on 1 (non-HA) or 3 (HA) servers. Other services may support different sizes of cluster. If min-count is specified, then at least that number of servers will be allocated to the cluster. If min-count is not specified it defaults to a value of 1. If max-count is specified, then the cluster will be limited to that number of servers. If max-count is not specified then all servers matching the required role and failure-zones will be allocated to the cluster. Specifying member-count is equivalent to specifying min-count and max-count with the same value. |

| failure-zones (optional) |

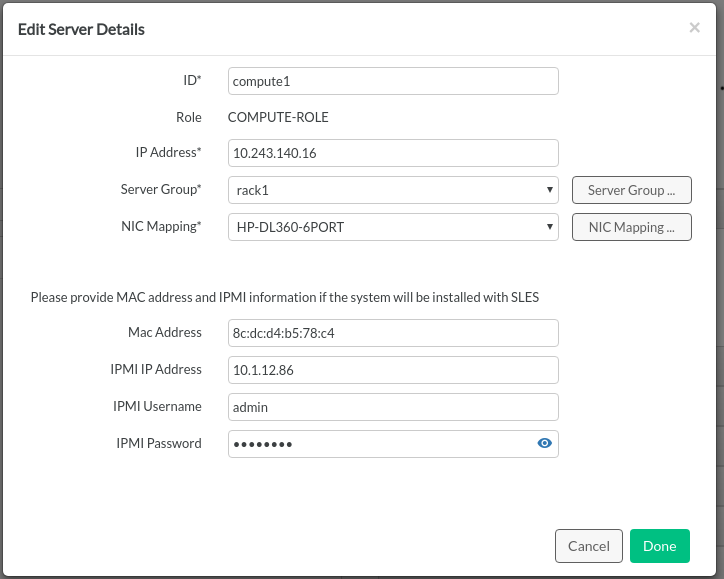

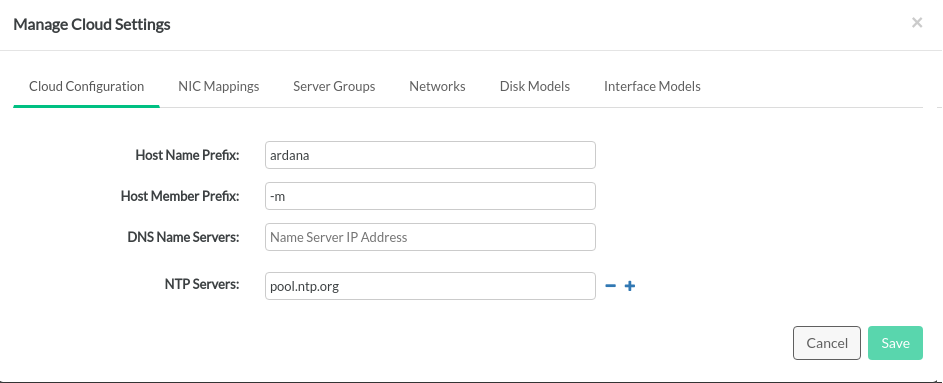

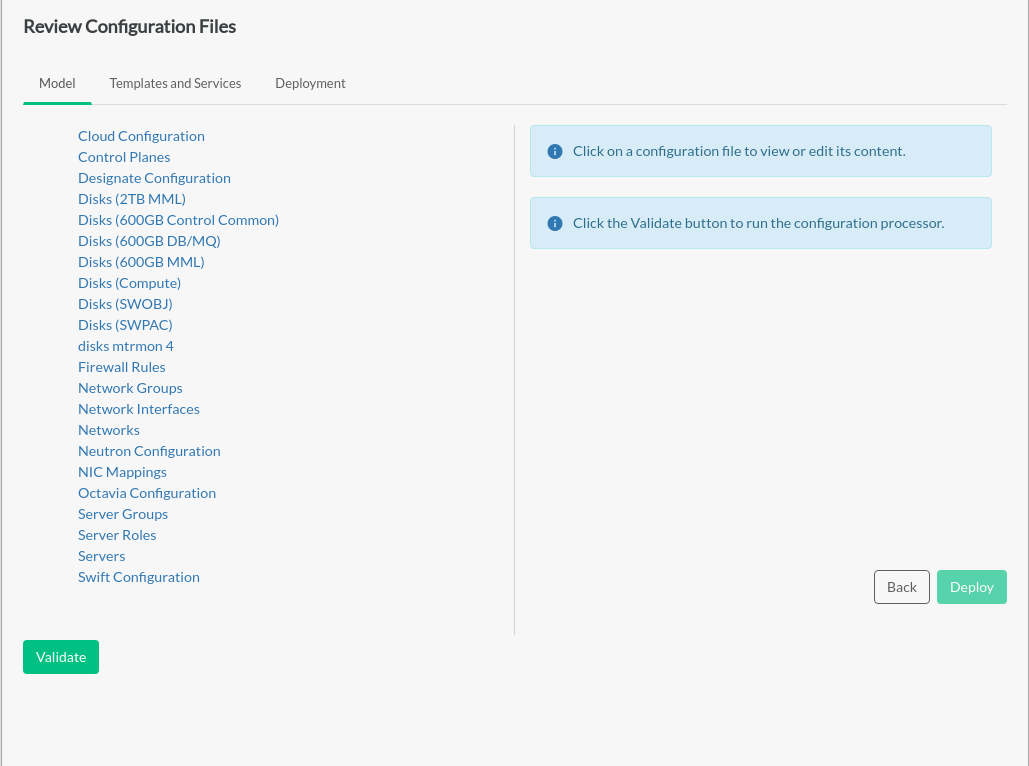

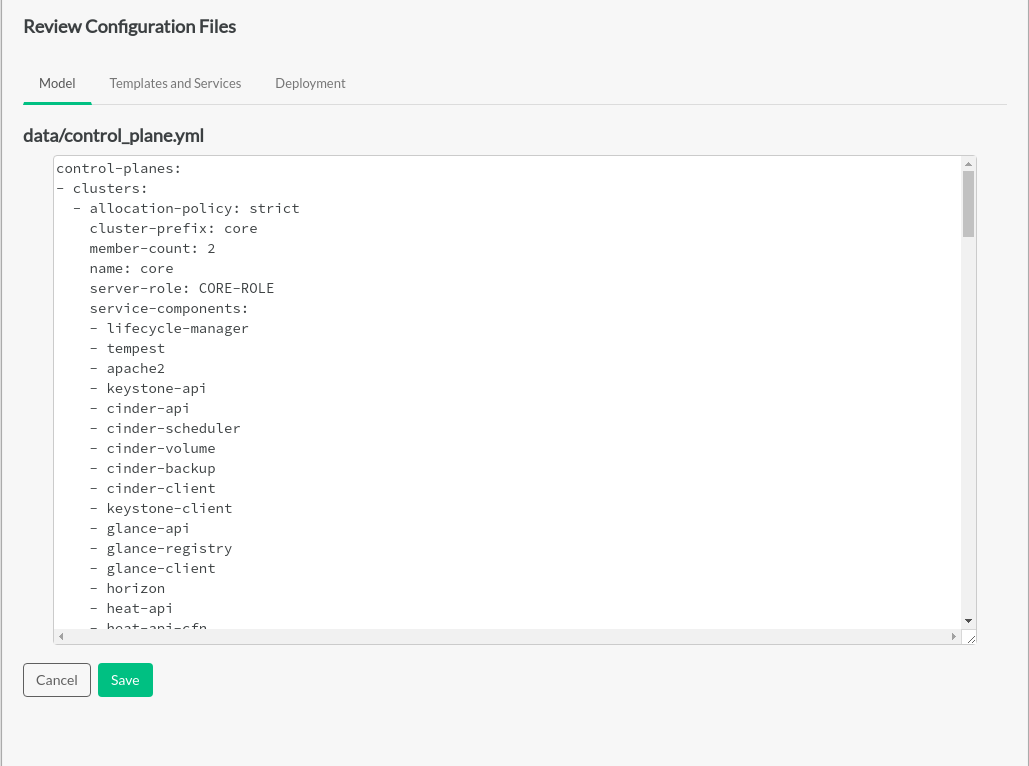

A list of that servers will be allocated from. If specified, it overrides the list of values specified for the control-plane. If not specified, the control-plane value is used. (see Section 5.2.9.1, “Server Groups and Failure Zones” for a description of server groups as failure zones). |