Monitoring SUSE AI with OpenTelemetry and SUSE Observability

- WHAT?

This document focuses on techniques for gathering telemetry data from all SUSE AI components, including metrics, logs and traces.

- WHY?

To observe, analyze and maintain the behavior, performance and health of your SUSE AI environment, and to troubleshoot issues effectively.

- EFFORT

Setting up the recommended monitoring configurations with SUSE Observability is straightforward. Advanced setups for more granular control may require additional time for specialized analysis and fine-tuning.

- GOAL

To visualize the complete topology of your services and operations, providing deep insights and clarity into your SUSE AI environment.

1 Introduction

This document focuses on techniques for gathering telemetry data from all SUSE AI components.

For most of the components, it presents two distinct paths:

- Recommended settings

Straightforward configurations designed to utilize the SUSE Observability Extension, providing a quick solution for your environment.

- Advanced configuration

For users who require deeper and more granular control. Advanced options unlock additional observability signals that are relevant for specialized analysis and fine-tuning.

Some setups are specific to a product, while others, particularly for scraping metrics, are configured directly in the OpenTelemetry Collector. By implementing the recommended settings, you can visualize the complete topology of your services and operations, bringing clarity to your SUSE AI environment.

1.1 What is SUSE AI monitoring?

Monitoring SUSE AI involves observing and analyzing the behavior, performance and health of its components. In a complex, distributed system like SUSE AI, this is achieved by collecting and interpreting telemetry data. This data is typically categorized into the three pillars of observability:

- Metrics

Numerical data representing system performance, such as CPU usage, memory consumption or request latency.

- Logs

Time-stamped text records of events that occurred within the system, useful for debugging and auditing.

- Traces

A representation of the path of a request as it travels through all the different services in the system. Traces are essential for understanding performance bottlenecks and errors in the system architecture.

1.2 How monitoring works

SUSE AI uses OpenTelemetry, an open-source observability framework, for instrumenting applications. Instrumentation is the process of adding code to an application to generate telemetry data. By using OpenTelemetry, SUSE AI ensures a standardized, vendor-neutral approach to data collection.

The collected data is then sent to SUSE Observability, which provides a comprehensive platform for visualizing, analyzing and alerting on the telemetry data. This allows administrators and developers to gain deep insights into the system, maintain optimal performance, and troubleshoot issues effectively.

2 Monitoring GPU usage

To effectively monitor the performance and utilization of your GPUs, configure the OpenTelemetry Collector to scrape metrics from the NVIDIA DCGM Exporter, which is deployed as part of the NVIDIA GPU Operator.

Grant permissions (RBAC). The OpenTelemetry Collector requires specific permissions to discover the GPU metrics endpoint within the cluster.

Create a file named otel-rbac.yaml with the following content. It defines a Role with permissions to list and watch services and endpoints, and a RoleBinding that grants these permissions to the OpenTelemetry Collector’s service account.

```yaml
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: suse-observability-otel-scraper
rules:
  - apiGroups:
      - ""
    resources:
      - services
      - endpoints
    verbs:
      - list
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: suse-observability-otel-scraper
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: suse-observability-otel-scraper
subjects:
  - kind: ServiceAccount
    name: OPENTELEMETRY-COLLECTOR
    namespace: OBSERVABILITY
---
```

Important: Verify that the ServiceAccount name and namespace in the RoleBinding match your OpenTelemetry Collector’s deployment.

Apply this configuration to the gpu-operator namespace.

```console
> kubectl apply -n gpu-operator -f otel-rbac.yaml
```

Configure the OpenTelemetry Collector. Add the following Prometheus receiver configuration to your OpenTelemetry Collector’s values file. It tells the collector to scrape metrics from every endpoint in the gpu-operator namespace every 10 seconds.

```yaml
config:
  receivers:
    prometheus:
      config:
        scrape_configs:
          - job_name: 'gpu-metrics'
            scrape_interval: 10s
            scheme: http
            kubernetes_sd_configs:
              - role: endpoints
                namespaces:
                  names:
                    - gpu-operator
```
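To see what the collector receives from this job, it can help to look at the raw text that the DCGM Exporter serves on its metrics endpoint. The following Python sketch parses samples in the Prometheus text format and extracts one metric. The sample payload is hypothetical. The parser is deliberately simplified: it ignores timestamps, escaped quotes in label values, and exemplars.

```python
import re

# One sample line of the Prometheus text format: name{label="value",...} value
SAMPLE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(?P<labels>[^}]*)\})?\s+(?P<value>\S+)'
)
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def parse_samples(text, metric):
    """Return (labels, value) pairs for every sample of `metric` in `text`."""
    out = []
    for line in text.splitlines():
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        m = SAMPLE_RE.match(line)
        if m and m.group("name") == metric:
            labels = dict(LABEL_RE.findall(m.group("labels") or ""))
            out.append((labels, float(m.group("value"))))
    return out


# Hypothetical excerpt of a DCGM Exporter /metrics response.
SAMPLE = """\
# HELP DCGM_FI_DEV_GPU_UTIL GPU utilization (in %).
# TYPE DCGM_FI_DEV_GPU_UTIL gauge
DCGM_FI_DEV_GPU_UTIL{gpu="0",modelName="NVIDIA L4"} 37
DCGM_FI_DEV_GPU_UTIL{gpu="1",modelName="NVIDIA L4"} 82
"""

for labels, value in parse_samples(SAMPLE, "DCGM_FI_DEV_GPU_UTIL"):
    print(f"GPU {labels['gpu']}: {value:.0f}% utilized")
```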

3 Monitoring Open WebUI

The preferred way of retrieving relevant telemetry data from Open WebUI is to use the SUSE AI Filter. It requires enabling and configuring Open WebUI Pipelines.

Verify that the Open WebUI installation override file owui_custom_overrides.yaml includes the following content.

```yaml
pipelines:
  enabled: true
  persistence:
    storageClass: longhorn # 1
  extraEnvVars: # 2
    - name: PIPELINES_URLS # 3
      value: "https://raw.githubusercontent.com/SUSE/suse-ai-observability-extension/refs/heads/main/integrations/oi-filter/suse_ai_filter.py"
    - name: OTEL_SERVICE_NAME # 4
      value: "Open WebUI"
    - name: OTEL_EXPORTER_HTTP_OTLP_ENDPOINT # 5
      value: "http://opentelemetry-collector.suse-observability.svc.cluster.local:4318"
    - name: PRICING_JSON # 6
      value: "https://raw.githubusercontent.com/SUSE/suse-ai-observability-extension/refs/heads/main/integrations/oi-filter/pricing.json"
extraEnvVars:
  - name: OPENAI_API_KEY # 7
    value: "0p3n-w3bu!"
```

Note: The example above contains two extraEnvVars blocks: one at the root level and another inside the pipelines configuration. The root-level extraEnvVars is passed to Open WebUI and configures the communication between Open WebUI and Open WebUI Pipelines. The extraEnvVars inside the pipelines configuration are injected into the container that acts as the runtime for the pipelines.

1. The storage class, for example, longhorn or local-path.
2. The environment variables that you are making available to the pipelines runtime container.
3. A list of pipeline URLs to be downloaded and installed by default. Individual URLs are separated by a semicolon (;). For air-gapped deployments, you must provide the pipelines at URLs that are accessible from the local host, such as an internal GitLab instance.
4. The service name that appears in traces and topological representations in SUSE Observability.
5. The endpoint for the OpenTelemetry collector. Make sure to use the HTTP port of your collector.
6. A file with the model multipliers used for cost estimation. You can adjust the multipliers experimentally to match your actual infrastructure. For air-gapped deployments, you must provide this file at a URL that is accessible from the local host, such as an internal GitLab instance.
7. The value for the API key between Open WebUI and Open WebUI Pipelines. The default value is 0p3n-w3bu!.

After you fill the override file with the correct values, install or update Open WebUI.

```console
> helm upgrade \
  --install open-webui oci://dp.apps.rancher.io/charts/open-webui \
  -n SUSE_AI_NAMESPACE \
  --create-namespace \
  --version 7.2.0 \
  -f owui_custom_overrides.yaml
```

Tip: Make sure to set the version, namespace and other options to the proper values.
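Two of these values have a format that is easy to get wrong. PIPELINES_URLS holds several URLs separated by semicolons, and OTEL_EXPORTER_HTTP_OTLP_ENDPOINT must point to the HTTP port of the collector. The following Python sketch checks both values before you run helm upgrade. The helper names and the example.internal URLs are hypothetical. The expected port 4318 is the conventional OTLP/HTTP port, so change it if your collector listens elsewhere.

```python
from urllib.parse import urlparse

OTLP_HTTP_PORT = 4318  # conventional OTLP/HTTP port; change if your collector differs


def split_pipeline_urls(value):
    """Split a PIPELINES_URLS value into individual URLs (semicolon-separated)."""
    return [url.strip() for url in value.split(";") if url.strip()]


def check_otlp_http_endpoint(endpoint, expected_port=OTLP_HTTP_PORT):
    """Return a list of problems found in an OTLP/HTTP endpoint URL."""
    problems = []
    parsed = urlparse(endpoint)
    if parsed.scheme not in ("http", "https"):
        problems.append(f"unexpected scheme {parsed.scheme!r}")
    if parsed.port != expected_port:
        problems.append(f"port {parsed.port} is not the OTLP/HTTP port {expected_port}")
    return problems


# Hypothetical internal mirror, as used in air-gapped deployments.
urls = split_pipeline_urls(
    "https://example.internal/filters/suse_ai_filter.py; https://example.internal/filters/other.py"
)
print(urls)
print(check_otlp_http_endpoint(
    "http://opentelemetry-collector.suse-observability.svc.cluster.local:4318"
))
```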

After the installation is successful, you can access tracing data in SUSE Observability for each chat.

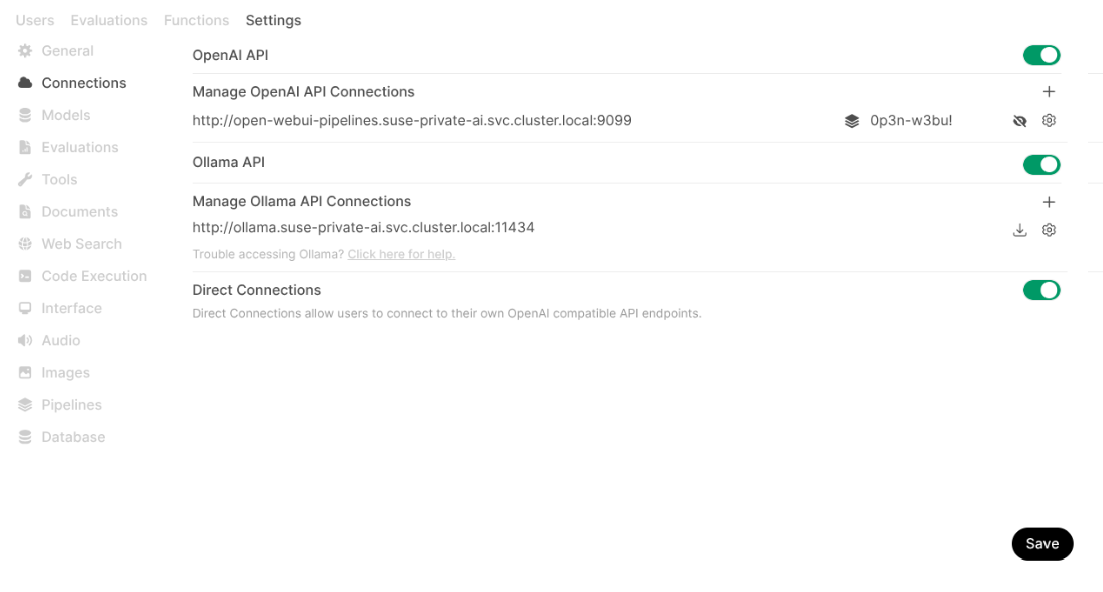

Tip: You can verify that a new connection was created with the correct credentials, as shown in Figure 1.

Figure 1: New connection added for the pipeline

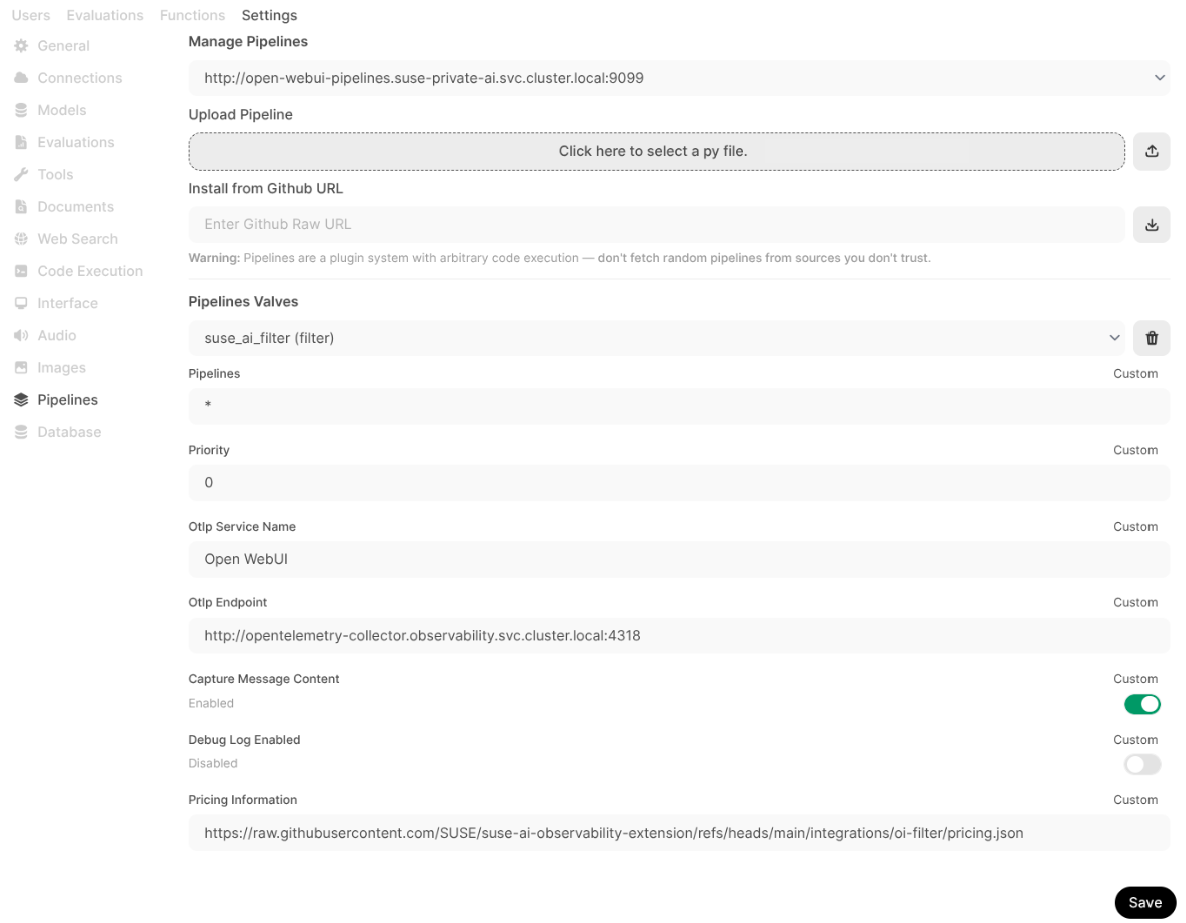

3.1 Configuring a pipeline filter in Open WebUI (recommended)

If you already have a running instance of Open WebUI with pipelines enabled and configured, you can set up the SUSE AI Filter in its web user interface.

You must have Open WebUI administrator privileges to access configuration screens or settings mentioned in this section.

In the bottom left of the Open WebUI window, click your avatar icon to open the user menu and select .

Click the tab and select from the left menu.

In the section, enter https://raw.githubusercontent.com/SUSE/suse-ai-observability-extension/refs/heads/main/integrations/oi-filter/suse_ai_filter.py and click the upload button on the right to upload the pipeline from the URL.

After the upload is finished, you can review the configuration of the pipeline. Confirm with .

Figure 2: Adding SUSE AI filter pipeline

3.2 Configuring default Open WebUI metrics and traces (advanced)

Open WebUI also offers a built-in OpenTelemetry integration for traces and metrics. These signals relate to API consumption but do not provide GenAI-specific details. That is why you also need to configure the SUSE AI Filter as described in Configuring pipeline filter during Open WebUI installation (recommended).

Append the following environment variables to the root-level extraEnvVars section in the owui_custom_overrides.yaml file mentioned in Configuring pipeline filter during Open WebUI installation (recommended).

```yaml
extraEnvVars:
  - name: OPENAI_API_KEY
    value: "0p3n-w3bu!"
  - name: ENABLE_OTEL
    value: "true"
  - name: ENABLE_OTEL_METRICS
    value: "true"
  - name: OTEL_EXPORTER_OTLP_INSECURE
    value: "false" # 1
  - name: OTEL_EXPORTER_OTLP_ENDPOINT
    value: CUSTOM_OTEL_ENDPOINT # 2
  - name: OTEL_SERVICE_NAME
    value: CUSTOM_OTEL_IDENTIFIER # 3
```

1. Set to "true" for testing or controlled environments, and "false" for production deployments with TLS communication.
2. Enter your custom OpenTelemetry collector endpoint URL, such as "http://opentelemetry-collector.suse-observability.svc.cluster.local:4318".
3. Specify a custom identifier for the OpenTelemetry service, such as OI Core.

Save the updated override file and upgrade Open WebUI:

```console
> helm upgrade \
  --install open-webui oci://dp.apps.rancher.io/charts/open-webui \
  -n SUSE_AI_NAMESPACE \
  --create-namespace \
  --version 7.2.0 \
  -f owui_custom_overrides.yaml
```
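Kubernetes requires every environment variable value to be a string, which is why the example quotes "true" and "false". If you generate the override file from a script, you can use a minimal Python sketch like the following one. The helper name to_env_vars is hypothetical. It renders booleans in the lowercase form used above and checks for booleans before calling str(), because str(True) would produce "True" rather than "true".

```python
import json


def to_env_vars(settings):
    """Convert a mapping into the name/value list used by extraEnvVars.

    Booleans become lowercase "true"/"false"; everything else goes through str().
    """
    env = []
    for name, value in settings.items():
        if isinstance(value, bool):  # must precede str(): str(True) == "True"
            value = "true" if value else "false"
        env.append({"name": name, "value": str(value)})
    return env


extra_env_vars = to_env_vars({
    "ENABLE_OTEL": True,
    "ENABLE_OTEL_METRICS": True,
    "OTEL_EXPORTER_OTLP_INSECURE": False,
    "OTEL_SERVICE_NAME": "OI Core",
})
# JSON is valid YAML, so this list can be used as the value of extraEnvVars.
print(json.dumps(extra_env_vars, indent=2))
```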

4 Monitoring Milvus

Milvus is monitored by scraping its Prometheus-compatible metrics endpoint. The SUSE Observability Extension uses these metrics to visualize Milvus’s status and activity.

4.1 Scraping the metrics (recommended)

Add the following job to the scrape_configs section of your OpenTelemetry Collector’s configuration.

It instructs the collector to scrape the /metrics endpoint of the Milvus service every 15 seconds.

```yaml
config:
  receivers:
    prometheus:
      config:
        scrape_configs:
          - job_name: 'milvus'
            scrape_interval: 15s
            metrics_path: '/metrics'
            static_configs:
              - targets: ['milvus.SUSE_AI_NAMESPACE.svc.cluster.local:9091'] # 1
```

1. Your Milvus service metrics endpoint.

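Before you add the scrape target to the collector, you can confirm that it responds by fetching it from a pod inside the cluster. The following Python sketch downloads the payload and lists the metric families that have a given prefix. It assumes that Milvus metric names start with milvus_. The URL in the usage comment reuses the SUSE_AI_NAMESPACE placeholder from the example above.

```python
import urllib.request


def fetch_metrics(url, timeout=5.0):
    """Download a Prometheus text-format payload from a /metrics endpoint."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


def metric_families(text, prefix):
    """Return sorted metric names from '# TYPE' lines that start with `prefix`."""
    names = set()
    for line in text.splitlines():
        parts = line.split()
        # A type declaration looks like: "# TYPE <name> <type>"
        if len(parts) >= 4 and parts[:2] == ["#", "TYPE"] and parts[2].startswith(prefix):
            names.add(parts[2])
    return sorted(names)


# Usage from inside the cluster (replace the placeholder first):
# text = fetch_metrics("http://milvus.SUSE_AI_NAMESPACE.svc.cluster.local:9091/metrics")
# print(metric_families(text, "milvus_"))
```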

4.2 Tracing (advanced)

Milvus can also export detailed tracing data.

Enabling tracing in Milvus can generate a large amount of data. We recommend configuring sampling at the collector level to avoid performance issues and high storage costs.

To enable tracing, configure the following settings in your Milvus Helm chart values:

```yaml
extraConfigFiles:
  user.yaml: |+
    trace:
      exporter: jaeger
      sampleFraction: 1
      jaeger:
        url: "http://opentelemetry-collector.observability.svc.cluster.local:14268/api/traces" # 1
```

1. The URL of the OpenTelemetry Collector installed by the user.
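Setting sampleFraction to 1 exports every trace, which is why sampling further down the pipeline matters. The following Python sketch illustrates how ratio-based sampling keeps decisions consistent across services. It mirrors the approach of the OpenTelemetry SDK TraceIdRatioBased sampler, which keeps a trace when the lower 64 bits of its trace ID fall below ratio * 2**64. This is only a sketch of the idea and not the collector's implementation, because the collector's sampling processors use their own algorithms.

```python
import random

TRACE_ID_LIMIT = (1 << 64) - 1  # mask for the lower 64 bits of a 128-bit trace ID


def should_sample(trace_id: int, ratio: float) -> bool:
    """Keep a trace when the low 64 bits of its ID fall below ratio * 2**64.

    The decision depends only on the trace ID, so every component that sees
    the same trace reaches the same decision.
    """
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be between 0 and 1")
    bound = round(ratio * (TRACE_ID_LIMIT + 1))
    return (trace_id & TRACE_ID_LIMIT) < bound


rng = random.Random(42)  # fixed seed so the run is repeatable
ids = [rng.getrandbits(128) for _ in range(100_000)]
kept = sum(should_sample(t, 0.1) for t in ids)
print(f"kept {kept} of {len(ids)} traces (~{kept / len(ids):.1%})")
```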

5 Monitoring vLLM

vLLM is monitored by scraping its Prometheus-compatible metrics endpoints. The SUSE Observability Extension uses these metrics to visualize vLLM’s status and activity.

5.1 Metrics scraping (recommended)

Add the following job to the scrape_configs section of your OpenTelemetry Collector’s configuration.

This configures the collector to scrape the /metrics endpoint from all vLLM services every 10 seconds.

You can define several jobs. If you already defined other jobs, such as the Milvus job, append the new job to the list.

If you deployed the vLLM services with non-default values, adjust the service discovery rules accordingly.

Before using the following example, replace the VLLM_NAMESPACE and VLLM_RELEASE_NAME placeholders with the actual values you used while deploying vLLM.

```yaml
config:
  receivers:
    prometheus:
      config:
        scrape_configs:
          - job_name: 'vllm'
            scrape_interval: 10s
            scheme: http
            kubernetes_sd_configs:
              - role: service
            relabel_configs:
              - source_labels: [__meta_kubernetes_namespace]
                action: keep
                regex: 'VLLM_NAMESPACE'
              - source_labels: [__meta_kubernetes_service_name]
                action: keep
                regex: '.*VLLM_RELEASE_NAME.*'
```

Note: In SUSE Observability version 2.6.2, a change in the default behavior broke the vLLM monitoring performed by the extension.

To fix it, add the following to otel-values.yaml.

No changes are required for SUSE Observability version 2.6.1 and earlier.

Add a new processor.

```yaml
config:
  processors:
    ... # same as before
    transform:
      metric_statements:
        - context: metric
          statements:
            - replace_pattern(name, "^vllm:", "vllm_")
```

Modify the metrics pipeline to perform the transformation defined above:

```yaml
config:
  service:
    pipelines:
      ... # same as before
      metrics:
        receivers: [otlp, spanmetrics, prometheus]
        processors: [transform, memory_limiter, resource, batch]
        exporters: [debug, otlp]
```
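To check your placeholder values before deploying, you can use the following Python sketch. It mimics two steps of this configuration: the keep rules in relabel_configs and the replace_pattern statement in the transform processor. The sketch relies on documented Prometheus behavior: relabel regexes are anchored at both ends. As a result, 'VLLM_NAMESPACE' must match the whole namespace, while '.*VLLM_RELEASE_NAME.*' matches any service name that contains the release name. The namespace and release name below are hypothetical, and Python's re module only approximates the RE2 syntax that Prometheus uses.

```python
import re


def relabel_keep(labels, rules):
    """Return True if a target survives every 'keep' rule.

    Each rule is (source_labels, regex). Values of multiple source labels are
    joined with ';', and the regex must match the whole joined value.
    """
    for source_labels, regex in rules:
        value = ";".join(labels.get(name, "") for name in source_labels)
        if re.fullmatch(regex, value) is None:
            return False
    return True


def rename_metric(name):
    """Mirror replace_pattern(name, "^vllm:", "vllm_") from the transform processor."""
    return re.sub(r"^vllm:", "vllm_", name)


# Hypothetical values standing in for VLLM_NAMESPACE and VLLM_RELEASE_NAME.
RULES = [
    (["__meta_kubernetes_namespace"], "suse-ai"),
    (["__meta_kubernetes_service_name"], ".*my-vllm.*"),
]

target = {"__meta_kubernetes_namespace": "suse-ai",
          "__meta_kubernetes_service_name": "my-vllm-router-service"}
print(relabel_keep(target, RULES))                 # True
print(rename_metric("vllm:num_requests_running"))  # vllm_num_requests_running
```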

6 Monitoring user-managed applications

To monitor other applications, you can use OpenTelemetry SDKs or any other instrumentation provider that is compatible with the OpenTelemetry semantic conventions, for example, the OpenLIT SDK. For more details, refer to Appendix B, Instrument applications with OpenLIT SDK.

OpenTelemetry offers several instrumentation techniques for different deployment scenarios and applications. You can instrument applications either manually, with more detailed control, or automatically for an easier starting point.

One of the most straightforward ways of getting started with OpenTelemetry is to use the OpenTelemetry Operator for Kubernetes, which is available in the SUSE Application Collection. Section 7, “Monitoring with the OpenTelemetry Operator” describes how to use this operator to instrument your applications.

6.1 Ensuring that the telemetry data is properly captured by the SUSE Observability Extension

For the SUSE Observability Extension to recognize an application as a GenAI application, the application must have a meter configured.

It must provide at least the RequestsTotal metric with the following attributes:

- TelemetrySDKLanguage
- ServiceName
- ServiceInstanceId
- ServiceNamespace
- GenAIEnvironment
- GenAiApplicationName
- GenAiSystem
- GenAiOperationName
- GenAiRequestModel

Both the meter and the tracer must contain the following resource attributes:

- service.name

The logical name of the service. Defaults to 'My App'.

- service.version

The version of the service. Defaults to '1.0'.

- deployment.environment

The name of the deployment environment, such as 'production' or 'staging'. Defaults to 'default'.

- telemetry.sdk.name

The value must be 'openlit'.

The following metrics are utilized in the graphs of the SUSE Observability Extension:

- gen_ai.client.token.usage

Measures the number of used input and output tokens.

Type: histogram

Unit: token

- gen_ai.total.requests

Number of requests.

Type: counter

Unit: integer

- gen_ai.usage.cost

The distribution of GenAI request costs.

Type: histogram

Unit: USD

- gen_ai.usage.input_tokens

Number of prompt tokens processed.

Type: counter

Unit: integer

- gen_ai.usage.output_tokens

Number of completion tokens processed.

Type: counter

Unit: integer

- gen_ai.client.token.usage

Number of tokens processed.

Type: counter

Unit: integer
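Before pointing an application at the collector, you can check its resource attributes against the list above. The following Python sketch reports missing keys and any telemetry.sdk.name value other than openlit. The function name and the attribute values are hypothetical. SDKs may also add default resource attributes of their own, so verify the final values that your application actually emits.

```python
REQUIRED_RESOURCE_ATTRIBUTES = (
    "service.name",
    "service.version",
    "deployment.environment",
    "telemetry.sdk.name",
)


def check_resource_attributes(attributes):
    """Return a list of problems with the resource attributes of a meter or tracer."""
    problems = [f"missing {key}" for key in REQUIRED_RESOURCE_ATTRIBUTES
                if key not in attributes]
    sdk_name = attributes.get("telemetry.sdk.name")
    if sdk_name is not None and sdk_name != "openlit":
        problems.append(f"telemetry.sdk.name is {sdk_name!r}, expected 'openlit'")
    return problems


# Illustrative attribute values.
print(check_resource_attributes({
    "service.name": "my-rag-app",
    "service.version": "1.0",
    "deployment.environment": "staging",
    "telemetry.sdk.name": "openlit",
}))
```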

6.2 Troubleshooting

- No metrics received from any components.

Verify the OpenTelemetry Collector deployment.

Check that the exporter points to the SUSE Observability collector and uses the correct API key and endpoint.

- No metrics received from the GPU.

Verify that the RBAC rules were applied.

Verify that the metrics receiver scraper is configured.

Check the NVIDIA DCGM Exporter for errors.

- No metrics received from Milvus.

Verify that the Milvus chart configuration exposes the metrics endpoint.

Verify that the metrics receiver scraper is configured.

For usage metrics, confirm that requests were actually made to Milvus.

- No metrics received from vLLM.

Verify that the vLLM chart configuration exposes the metrics endpoint.

Verify that the metrics receiver scraper is properly configured, in particular, that the placeholders were replaced.

If some metrics are present but usage-related ones are missing, verify that requests were actually made to vLLM.

If vLLM was not identified, or vLLM metrics have the prefix "vllm:", check the collector configuration. You may be using SUSE Observability 2.6.2, which requires additional configuration.

- GPU nodes are stuck in NotReady status.

Check for a driver version mismatch between the host and the version that the GPU Operator expects.

You may need to reinstall the Kubernetes StackPack.

- No tracing data received from any components.

Verify the OpenTelemetry Collector deployment.

Check that the exporter points to the SUSE Observability collector and uses the correct API key and endpoint.

- No tracing data received from Open WebUI.

Verify that the SUSE AI Observability Filter is installed and configured properly.

Verify that chat requests actually happened.

- Cost estimation is far from real values.

Recalculate the multipliers in the PRICING_JSON file used by the SUSE AI Observability Filter.

- Storage volume usage is high.

Verify that sampling is applied in the OpenTelemetry Collector.

7 Monitoring with the OpenTelemetry Operator

This section describes how to instrument Kubernetes applications using the OpenTelemetry Operator for Kubernetes. It also explains how to forward telemetry (metrics and traces) to SUSE Observability. The Operator simplifies the deployment of OpenTelemetry components. It also enables automatic instrumentation without modifying the application code.

The guidance is presented in two paths:

Path A: You already have an OpenTelemetry Collector deployed and configured.

Path B: You prefer to have the Operator manage the Collector using an OpenTelemetryCollector custom resource.

7.1 Prerequisites

Ensure the following are in place before proceeding:

A Kubernetes cluster managed with Rancher.

cert-manager installed (required for the Operator’s admission webhooks).

SUSE Observability installed and reachable from the cluster with a valid service token or API key.

7.2 Installing the OpenTelemetry Operator

Install the OpenTelemetry Operator into your cluster using the SUSE Application Collection Helm chart. The Operator supports managing OpenTelemetry Collector instances. It also supports automatic instrumentation of workloads.

Pin the chart version explicitly to avoid unexpected changes during upgrades.

Install the Operator into the namespace where you run your SUSE Observability resources.

```console
> helm install opentelemetry-operator oci://dp.apps.rancher.io/charts/opentelemetry-operator \
  --namespace <SUSE_OBSERVABILITY_NAMESPACE> \
  --version <CHART-VERSION> \
  --set manager.autoInstrumentation.go.enabled=true \
  --set global.imagePullSecrets={application-collection}
```

Note: If the previous command fails with your Helm version, use the following alternative.

```console
> helm install opentelemetry-operator oci://dp.apps.rancher.io/charts/opentelemetry-operator \
  --namespace <SUSE_OBSERVABILITY_NAMESPACE> \
  --version <CHART-VERSION> \
  --set manager.autoInstrumentation.go.enabled=true \
  --set global.imagePullSecrets[0].name=application-collection
```

The Helm chart deploys the Operator controller. It manages the following custom resources (CRs):

- OpenTelemetryCollector: Defines and deploys OpenTelemetry Collector instances managed by the Operator.
- TargetAllocator: Distributes Prometheus scrape targets across Collector replicas.
- OpAMPBridge: Optional component that reports and manages Collector state via the OpAMP protocol.
- Instrumentation: Defines automatic instrumentation settings and exporter configurations for workloads.

Verify that the CRDs are installed.

```console
# kubectl api-resources --api-group=opentelemetry.io
```

7.3 Path A: Use an existing Collector

If you already have an OpenTelemetry Collector deployed (for example, installed via Helm and exporting telemetry to SUSE Observability), follow these steps. This enables auto-instrumentation without replacing the Collector.

7.3.1 Enable OTLP reception on the Collector

Ensure your Collector is configured to receive OTLP telemetry from instrumented workloads. Enable at least one OTLP protocol (gRPC or HTTP).

The following snippet shows an excerpt from an example SUSE AI otel-values.yaml file:

```yaml
receivers:
  otlp:
    protocols:
      grpc:
        endpoint: "0.0.0.0:4317"
      http:
        endpoint: "0.0.0.0:4318"
service:
  pipelines:
    traces:
      receivers: [otlp, jaeger]
    metrics:
      receivers: [otlp, prometheus, spanmetrics]
```

After adding the protocols, update the Collector.

```console
> helm upgrade --install opentelemetry-collector \
  oci://dp.apps.rancher.io/charts/opentelemetry-collector \
  -n <SUSE_OBSERVABILITY_NAMESPACE> \
  --version <CHART_VERSION> \
  -f otel-values.yaml
```

7.3.2 Create an Instrumentation custom resource

Create an Instrumentation custom resource that defines automatic instrumentation behavior and the OTLP export destination.

Namespace rule:

The Instrumentation resource must exist before the pod is created.

The Operator resolves it either from the same namespace as the pod, or from another namespace when referenced as <namespace>/<name> in the annotation.

Create a file named instrumentation.yaml with the following content.

```yaml
apiVersion: opentelemetry.io/v1alpha1
kind: Instrumentation
metadata:
  name: otel-instrumentation
spec:
  exporter:
    endpoint: http://opentelemetry-collector.observability.svc.cluster.local:4317
  propagators:
    - tracecontext
    - baggage
  defaults:
    useLabelsForResourceAttributes: true
  python:
    env:
      - name: OTEL_EXPORTER_OTLP_ENDPOINT
        value: http://opentelemetry-collector.observability.svc.cluster.local:4318
  go:
    env:
      - name: OTEL_EXPORTER_OTLP_ENDPOINT
        value: http://opentelemetry-collector.observability.svc.cluster.local:4318
  sampler:
    type: parentbased_traceidratio
    argument: "1"
```

Note: Most auto-instrumentation SDKs (Python, Go, NodeJS) default to OTLP/HTTP (port 4318). If your Collector only exposes OTLP/gRPC (4317), explicitly configure the SDK endpoint.

Apply the resource.

```console
> kubectl apply \
  --namespace <SUSE_OBSERVABILITY_NAMESPACE> \
  -f instrumentation.yaml
```
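The note above comes down to how SDKs interpret OTEL_EXPORTER_OTLP_ENDPOINT. According to the OpenTelemetry specification, for OTLP/HTTP this variable is a base URL to which the SDK appends a per-signal path such as v1/traces. For OTLP/gRPC, the SDK uses the endpoint as is. The following Python sketch shows the resulting URLs and flags a port that does not match the conventional default for the chosen protocol. The helper names are hypothetical.

```python
from urllib.parse import urlparse

# Conventional default ports for the two OTLP transports.
DEFAULT_PORTS = {"grpc": 4317, "http/protobuf": 4318}
SIGNAL_PATHS = {"traces": "v1/traces", "metrics": "v1/metrics", "logs": "v1/logs"}


def signal_url(base_endpoint, signal, protocol="http/protobuf"):
    """Return where an SDK sends `signal`, given OTEL_EXPORTER_OTLP_ENDPOINT."""
    if protocol == "grpc":
        return base_endpoint  # gRPC uses the endpoint unchanged
    return base_endpoint.rstrip("/") + "/" + SIGNAL_PATHS[signal]


def port_mismatch(base_endpoint, protocol):
    """True if the endpoint names a port other than the protocol's default."""
    port = urlparse(base_endpoint).port
    return port is not None and port != DEFAULT_PORTS[protocol]


endpoint = "http://opentelemetry-collector.observability.svc.cluster.local:4318"
print(signal_url(endpoint, "traces"))
print(port_mismatch(endpoint, "http/protobuf"))
```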

7.3.3 Enable auto-instrumentation for workloads

To instruct the Operator to auto-instrument application pods, add an annotation to the pod template. Use the annotation matching your workload language.

Java:

instrumentation.opentelemetry.io/inject-java: <namespace>/otel-instrumentation

NodeJS:

instrumentation.opentelemetry.io/inject-nodejs: <namespace>/otel-instrumentation

Python:

instrumentation.opentelemetry.io/inject-python: <namespace>/otel-instrumentation

Go:

instrumentation.opentelemetry.io/inject-go: <namespace>/otel-instrumentation

For Go workloads, an additional annotation is required:

instrumentation.opentelemetry.io/otel-go-auto-target-exe: <path-to-binary>

You can also enable injection at the namespace level:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: <APP-NAMESPACE>
  annotations:
    instrumentation.opentelemetry.io/inject-python: "true"
```

Or per Deployment:

```yaml
spec:
  template:
    metadata:
      annotations:
        instrumentation.opentelemetry.io/inject-python: "true"
```

Annotation values may be:

- "true" to use an Instrumentation resource in the same namespace.
- "my-instrumentation" to use a named resource in the same namespace.
- "other-namespace/my-instrumentation" for a cross-namespace reference.
- "false" to disable injection.

When a pod with injection annotations is created, the Operator mutates it via an admission webhook:

An init container is injected to copy auto-instrumentation binaries.

The application container is modified to preload the instrumentation.

Environment variables are added to configure the SDK.
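You can summarize the annotation values listed earlier in this section, together with the namespace rule for Instrumentation resources, in a short Python sketch. It is a hypothetical helper for working out which Instrumentation resource a pod would use, not the Operator's code. It treats only the exact lowercase strings "true" and "false" as booleans.

```python
def resolve_instrumentation(value, pod_namespace):
    """Interpret an instrumentation.opentelemetry.io/inject-* annotation value.

    Returns None if injection is disabled, (namespace, None) when any
    Instrumentation in the pod's namespace may be used, or (namespace, name)
    for a named resource.
    """
    value = value.strip()
    if value == "false":
        return None
    if value == "true":
        return (pod_namespace, None)
    if "/" in value:
        namespace, name = value.split("/", 1)  # cross-namespace reference
        return (namespace, name)
    return (pod_namespace, value)  # named resource in the pod's namespace


for annotation in ["true", "my-instrumentation",
                   "other-namespace/my-instrumentation", "false"]:
    print(annotation, "->", resolve_instrumentation(annotation, "suse-private-ai"))
```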

The following procedure shows how to inject instrumentation into the open-webui-mcpo workload.

Edit the deployment.

```console
> kubectl edit deployment open-webui-mcpo -n suse-private-ai
```

Add an injection annotation to spec.template.metadata.annotations.

```yaml
spec:
  template:
    metadata:
      annotations:
        instrumentation.opentelemetry.io/inject-python: <namespace>/otel-instrumentation
```

Note: For Go workloads, the binary being instrumented must provide the .gopclntab section. Binaries stripped of this section during or after compilation are not compatible. For example, to check whether an ollama binary has symbols, run nm /bin/ollama. If it returns no symbols, auto-instrumentation will not work with that build.

Roll out the updated deployment.

```console
> kubectl rollout restart deployment open-webui-mcpo -n suse-private-ai
```

7.3.4 Verify the telemetry workflow

After injecting instrumentation, verify that an init container was injected automatically.

```console
> kubectl -n suse-private-ai get pod <OPENWEBUI_MCPO_POD> \
  -o jsonpath="{.spec.initContainers[*]['name','image']}"
```

Example output:

```
opentelemetry-auto-instrumentation-python ghcr.io/open-telemetry/opentelemetry-operator/autoinstrumentation-python:0.59b0
```

7.3.5 Verify SUSE Observability UI

In the SUSE Observability UI, verify that application traces and metrics are visible in the appropriate dashboards. For example, check the OpenTelemetry Services and Traces views.

After completing the instrumentation steps, allow a short period of time for data to be collected.

Ensure that the instrumented pods are receiving traffic.

Once data is available, the application appears under its service name (for example, open-webui-mcpo) in OpenTelemetry Services and Service Instances.

Application traces are visible in the Trace Explorer. They are also visible in the Trace perspective for both the service and service instance components. Span metrics and language-specific metrics (when available) appear in the Metrics perspective for the corresponding components.

If the Kubernetes StackPack is installed, traces for the instrumented pods are also available directly in the Traces perspective.

From OpenTelemetry services:

From the traces perspective:

7.4 Path B: Use an Operator-managed Collector

If you prefer the Operator to manage the Collector deployment and configuration, use the OpenTelemetryCollector custom resource.

7.4.1 Configure image pulls from the Application Collection

To pull the Collector image from the Application Collection, create a ServiceAccount with imagePullSecrets.

Then attach it to the Collector CR via the spec.serviceAccount attribute.

```console
> kubectl -n <SUSE_OBSERVABILITY_NAMESPACE> create serviceaccount image-puller
> kubectl -n <SUSE_OBSERVABILITY_NAMESPACE> patch serviceaccount image-puller \
  --patch '{"imagePullSecrets":[{"name":"application-collection"}]}'
```

7.4.2 Create an OpenTelemetryCollector resource

An OpenTelemetryCollector resource encapsulates the desired Collector configuration.

This includes receivers, processors, exporters and routing logic.

Create a file named opentelemetry-collector.yaml with the following content.

```yaml
apiVersion: opentelemetry.io/v1beta1
kind: OpenTelemetryCollector
metadata:
  name: opentelemetry
spec:
  serviceAccount: image-puller
  mode: deployment
  envFrom:
    - secretRef:
        name: open-telemetry-collector
  config:
    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: 0.0.0.0:4317
          http:
            endpoint: 0.0.0.0:4318
      prometheus:
        config:
          scrape_configs:
            - job_name: opentelemetry-collector
              scrape_interval: 10s
              static_configs:
                - targets:
                    - 0.0.0.0:8888
    exporters:
      debug: {}
      nop: {}
      otlp:
        endpoint: http://suse-observability-otel-collector.suse-observability.svc.cluster.local:4317
        headers:
          Authorization: "SUSEObservability ${env:API_KEY}"
        tls:
          insecure: true
    processors:
      tail_sampling:
        decision_wait: 10s
        policies:
          - name: rate-limited-composite
            type: composite
            composite:
              max_total_spans_per_second: 500
              policy_order: [errors, slow-traces, rest]
              composite_sub_policy:
                - name: errors
                  type: status_code
                  status_code:
                    status_codes: [ERROR]
                - name: slow-traces
                  type: latency
                  latency:
                    threshold_ms: 1000
                - name: rest
                  type: always_sample
              rate_allocation:
                - policy: errors
                  percent: 33
                - policy: slow-traces
                  percent: 33
                - policy: rest
                  percent: 34
      resource:
        attributes:
          - key: k8s.cluster.name
            action: upsert
            value: local
          - key: service.instance.id
            from_attribute: k8s.pod.uid
            action: insert
      filter/dropMissingK8sAttributes:
        error_mode: ignore
        traces:
          span:
            - resource.attributes["k8s.node.name"] == nil
            - resource.attributes["k8s.pod.uid"] == nil
            - resource.attributes["k8s.namespace.name"] == nil
            - resource.attributes["k8s.pod.name"] == nil
    connectors:
      spanmetrics:
        metrics_expiration: 5m
        namespace: otel_span
      routing/traces:
        error_mode: ignore
        table:
          - statement: route()
            pipelines: [traces/sampling, traces/spanmetrics]
    service:
      pipelines:
        traces:
          receivers: [otlp]
          processors: [filter/dropMissingK8sAttributes, resource]
          exporters: [routing/traces]
        traces/spanmetrics:
          receivers: [routing/traces]
          processors: []
          exporters: [spanmetrics]
        traces/sampling:
          receivers: [routing/traces]
          processors: [tail_sampling]
          exporters: [debug, otlp]
        metrics:
          receivers: [otlp, spanmetrics, prometheus]
          processors: [resource]
          exporters: [debug, otlp]
```

Customize the configuration to include any scrape jobs, processors, or routing logic that you require.

Apply the resource.

```console
> kubectl apply \
  --namespace <SUSE_OBSERVABILITY_NAMESPACE> \
  -f opentelemetry-collector.yaml
```
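To reason about the composite policy, it helps to work out how its span budget is divided. The following Python sketch performs only that arithmetic for the values in the example. With max_total_spans_per_second set to 500, the errors, slow-traces and rest sub-policies receive 165, 165 and 170 spans per second. The sketch does not model how the tail_sampling processor handles unused capacity or rounding; the processor's own documentation describes that behavior.

```python
def allocate_span_budget(max_spans_per_second, allocation):
    """Split a composite policy's span budget by percentage (integer division)."""
    if sum(allocation.values()) > 100:
        raise ValueError("rate_allocation percentages exceed 100")
    return {policy: max_spans_per_second * percent // 100
            for policy, percent in allocation.items()}


budget = allocate_span_budget(500, {"errors": 33, "slow-traces": 33, "rest": 34})
print(budget)  # {'errors': 165, 'slow-traces': 165, 'rest': 170}
```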

7.4.3 Configure Instrumentation, annotation, and verification

These steps are the same as for Section 7.3, “Path A: Use an existing Collector”. Follow Section 7.3.2, “Create an Instrumentation custom resource” through Section 7.3.5, “Verify SUSE Observability UI”.

7.5 Common validation steps

Collector readiness: Ensure the Collector is running and listening on the configured OTLP endpoint.

Instrumentation injection: Pod annotations should result in injected init containers or sidecars.

Telemetry export: In SUSE Observability, confirm that traces and metrics from your applications appear alongside other monitored data.

Resource enrichment: Kubernetes attributes (for example, k8s.pod.name and k8s.namespace.name) help SUSE Observability correlate telemetry with topology.

7.6 Troubleshooting

- Go auto-instrumentation silent or failing.

Go auto-instrumentation (eBPF-based) may require kernel support, shareProcessNamespace: true, and (depending on the Operator version) privileged containers.

Verify Operator version requirements and feature gates.

Ensure pod security settings allow eBPF.

If this is not possible, use manual SDK instrumentation.

- No init container or injection not happening.

This may be caused by a typo in the annotation, the wrong language annotation (for example, inject-java instead of inject-python), or the Instrumentation resource not being present in the namespace at pod startup.

Confirm that the annotation matches the intended language.

Ensure that the Instrumentation resource exists in the pod namespace, or in the namespace referenced in the annotation, before pods are created.

If pods are already running, redeploy them after creating the Instrumentation resource.

- Telemetry not reaching the Collector (exporter pointing to localhost).

Instrumentation defaults to http://localhost:4317 if spec.exporter.endpoint is omitted, so telemetry is dropped or sent to a pod-local endpoint.

Set spec.exporter.endpoint to the Collector Service FQDN (for example, http://<collector-name>.<namespace>.svc.cluster.local:4318).

Verify OTEL_EXPORTER_OTLP_ENDPOINT in the pod environment.

- Webhook or admission failed (TLS or cert errors).

The Operator webhook rejects resources, and events show errors related to webhook certificates.

Ensure that cert-manager is installed.

Ensure that the chart values enable certificates (for example, admissionWebhooks.certManager.enabled: true), or enable auto-generated certificates through the chart values.

Check kubectl get validatingwebhookconfigurations and review the Operator logs.

- Image pull or permission issues.

The init container fails to start due to image pull errors.

Run kubectl describe pod and look for ImagePullBackOff.

Fix the image pull secrets and registry access.

- Late annotations (Operator did not inject).

The pod started before the Instrumentation resource existed.

Delete and recreate the pod after the Instrumentation resource exists.

Alternatively, automate a rollout restart of affected workloads once the Instrumentation resource is in place.

- TLS to the Collector (secure OTLP).

Your environment requires Instrumentation.spec.exporter.tls (mTLS or a custom CA).

Create a ConfigMap containing the CA bundle.

Reference it from Instrumentation.spec.exporter.tls.configMapName.
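You can run several of these checks against the pod definition. The following Python sketch takes a pod object as printed by kubectl get pod -o json and parsed with json.loads. The helper name is hypothetical. The sketch reports a missing auto-instrumentation init container and any OTEL_EXPORTER_OTLP_ENDPOINT that points to localhost. The init container name prefix comes from the example output in Section 7.3.4. Go auto-instrumentation may use a sidecar instead of an init container, so treat the first finding only as a hint for Go workloads.

```python
import json
from urllib.parse import urlparse

INIT_PREFIX = "opentelemetry-auto-instrumentation"
LOCAL_HOSTS = {"localhost", "127.0.0.1", "::1"}


def diagnose_pod(pod):
    """Return a list of findings for a parsed `kubectl get pod -o json` object."""
    findings = []
    spec = pod.get("spec", {})
    init_names = [c.get("name", "") for c in spec.get("initContainers", [])]
    if not any(name.startswith(INIT_PREFIX) for name in init_names):
        findings.append("no auto-instrumentation init container")
    for container in spec.get("containers", []):
        for env in container.get("env", []):
            if env.get("name") != "OTEL_EXPORTER_OTLP_ENDPOINT":
                continue
            host = urlparse(env.get("value", "")).hostname
            if host in LOCAL_HOSTS:
                findings.append(f"{container.get('name')}: exporter points to {host}")
    return findings


# Minimal, hypothetical pod object for illustration.
pod = json.loads("""
{"spec": {
  "initContainers": [{"name": "opentelemetry-auto-instrumentation-python"}],
  "containers": [{"name": "app",
                  "env": [{"name": "OTEL_EXPORTER_OTLP_ENDPOINT",
                           "value": "http://localhost:4317"}]}]}}
""")
print(diagnose_pod(pod))  # ['app: exporter points to localhost']
```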