Installing NVIDIA GPU Drivers on SUSE Linux Enterprise Server in Air-Gapped Environments

- WHAT?

NVIDIA GPU drivers let the operating system and its applications use the full potential of the GPU.

- WHY?

To learn how to install NVIDIA GPU drivers on SUSE Linux Enterprise Server to fully use the computing power of the GPU in higher-level applications, such as AI workloads.

- EFFORT

Understanding the information in this article and installing NVIDIA GPU drivers on your SUSE Linux Enterprise Server host requires less than one hour of your time and basic Linux administration skills.

- GOAL

You can install NVIDIA GPU drivers on a SUSE Linux Enterprise Server host with a supported GPU card attached.

This article demonstrates how to implement host-level NVIDIA GPU support via the open-driver on SUSE Linux Enterprise Server 15 SP6. The open-driver is part of the core SUSE Linux Enterprise Server package repositories. Therefore, there is no need to compile it or download executable packages. This driver is built into the operating system rather than dynamically loaded by the NVIDIA GPU Operator. This configuration is desirable for customers who want to pre-build all artifacts required for deployment into the image, and where the dynamic selection of the driver version via Kubernetes is not a requirement.

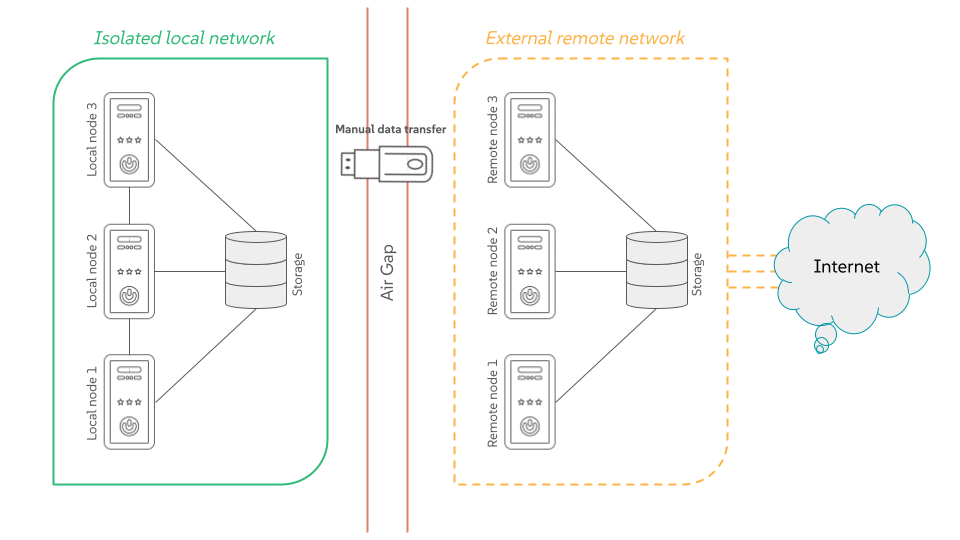

1 Air-gapped environments

An air-gapped environment is a security measure where a single host or the whole network is isolated from all other networks, such as the public Internet. This “air gap” acts as a physical or logical barrier, preventing any direct connection that could be exploited by cyber threats.

1.1 Why do you need an air-gapped environment?

The primary goal is to protect highly sensitive data and critical systems from unauthorized access, cyber attacks, malware and ransomware. Air-gapped environments are typically found in situations where security is of the utmost importance, such as:

- Military and government networks handling classified information.

- Industrial control systems (ICS) for critical infrastructure like power plants and water treatment facilities.

- Financial institutions and stock exchanges.

- Systems controlling nuclear power plants or other life-critical operations.

1.2 How do air-gapped environments work?

There are two types of air gaps:

- Physical air gaps

This is the most secure method, where the system is disconnected from any network. It might even involve placing the system in a shielded room.

- Logical air gaps

This type uses software controls such as firewall rules and network segmentation to create a highly restricted connection. While it offers more convenience, it is not as secure as a physical air gap because the air-gapped system is still technically connected to a network.

1.3 What challenges do air-gapped environments face?

When working in air-gapped systems, you usually face the following limitations:

- Manual updates

Air-gapped systems cannot automatically receive software or security updates from external networks. You must manually download and install updates, which can be time-consuming and create vulnerabilities if not done regularly.

- Insider threats and physical attacks

An air gap does not protect against attackers who have physical access to the system, such as a malicious insider with a compromised USB drive.

- Limited functionality

The lack of connectivity limits the system's ability to communicate with other devices or services, making it less efficient for many modern applications.

1.4 Air-gapped stack

The air-gapped stack is a set of scripts that simplify the air-gapped installation of certain SUSE Linux Enterprise Server components. To use the scripts, clone or download them from the stack's GitHub repository.

1.4.1 What scripts does the stack include?

The following scripts are included in the air-gapped stack:

- SUSE-AI-mirror-nvidia.sh

Mirrors all RPM packages from the specified Web URLs.

- SUSE-AI-get-images.sh

Downloads Docker images of SUSE Linux Enterprise Server applications from SUSE Application Collection.

- SUSE-AI-load-images.sh

Loads downloaded Docker images into a custom Docker image registry.

1.4.2 Where do the scripts fit into the air-gap installation?

The scripts are required in several places during the SUSE Linux Enterprise Server air-gapped installation. The following simplified workflow outlines the intended usage:

1. Use SUSE-AI-mirror-nvidia.sh on a remote host to download the required NVIDIA RPM packages. Transfer the downloaded content to an air-gapped local host and add it as a Zypper repository to install NVIDIA drivers on local GPU nodes.

2. Use SUSE-AI-get-images.sh on a remote host to download Docker images of required SUSE Linux Enterprise Server components. Transfer them to an air-gapped local host.

3. Use SUSE-AI-load-images.sh to load the transferred Docker images of SUSE Linux Enterprise Server components into a custom local Docker image registry.

4. Install AI Library components on the local Kubernetes cluster from the local custom Docker registry.
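The workflow above moves files across the air gap by hand. One way to confirm that nothing was damaged or altered in transit is to record checksums on the remote host and verify them on the air-gapped host. The following is a minimal sketch and not part of the air-gapped stack. The function names and the SHA256SUMS manifest file name are assumptions made for illustration, and the sketch assumes GNU find, sort, xargs and sha256sum.

```shell
#!/bin/sh
# Hypothetical helpers; they are not shipped with the air-gapped stack.

# Run on the remote host: record a SHA-256 checksum for every file
# below the given directory in DIRECTORY/SHA256SUMS.
create_manifest() {
    (
        cd "$1" || exit 1
        find . -type f ! -name SHA256SUMS -print0 \
            | sort -z \
            | xargs -0 -r sha256sum > SHA256SUMS
    )
}

# Run on the air-gapped host after the transfer: fails if any file
# is missing or differs from its recorded checksum.
verify_manifest() {
    (
        cd "$1" || exit 1
        sha256sum -c SHA256SUMS > /dev/null
    )
}

# Example use:
#   create_manifest /LOCAL_MIRROR_DIRECTORY    # on the remote host
#   verify_manifest /LOCAL_MIRROR_DIRECTORY    # on the air-gapped host
```

The manifest travels together with the mirrored content, so the verification needs no network access.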

2 Installation procedure

2.1 Requirements

This guide assumes that you already have the following available:

- At least one host with SUSE Linux Enterprise Server 15 SP6 installed, physical or virtual.

- The hosts are attached to a subscription, which is required for package access.

- A compatible NVIDIA GPU installed or fully passed through to the virtual machine in which SUSE Linux Enterprise Server is running.

- Access to the root user. These instructions assume that you are the root user and are not escalating your privileges via sudo.
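Two of these requirements can be checked from a shell before you start. The following is a minimal sketch; the function names are hypothetical. It assumes that SUSE Linux Enterprise Server 15 SP6 identifies itself in /etc/os-release with ID="sles" and VERSION_ID="15.6".

```shell
#!/bin/sh
# Hypothetical pre-flight helpers for the requirements listed above.

# Succeeds if the given os-release file describes SLES 15 SP6.
# The file is sourced in a subshell so that its variables do not
# leak into the calling shell.
is_sles_15_sp6() {
    (
        . "$1" || exit 1
        [ "$ID" = "sles" ] && [ "$VERSION_ID" = "15.6" ]
    )
}

# Succeeds if the current user is root (UID 0).
is_root() {
    [ "$(id -u)" -eq 0 ]
}

# Example use on the target host:
#   is_sles_15_sp6 /etc/os-release || echo "unexpected operating system" >&2
#   is_root || echo "run these instructions as root" >&2
```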

2.2 Considerations before the installation

2.2.1 Select the driver generation

You must verify the driver generation for the NVIDIA GPU that your system has. For modern GPUs, the G06 driver is the most common choice. Find more details in the support database.

This section details the installation of the G06 generation of the driver.
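Choosing the generation requires knowing which GPU model is installed. The following is a minimal sketch, assuming that the lspci tool from the pciutils package is available on the host; the function name is hypothetical. It filters the output of lspci -nn for the NVIDIA PCI vendor ID 10de, so that you can compare the reported model and device ID with the devices supported by each driver generation.

```shell
#!/bin/sh
# Hypothetical helper: reads `lspci -nn` output on stdin and prints
# only the lines that describe devices with the NVIDIA PCI vendor
# ID 10de. This can include non-GPU functions such as the audio
# controller of a graphics card.
filter_nvidia_devices() {
    grep -i '\[10de:[0-9a-f]\{4\}\]'
}

# Example use on the GPU host (requires pciutils):
#   lspci -nn | filter_nvidia_devices
```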

2.2.2 Additional NVIDIA components

Besides the NVIDIA open-driver provided by SUSE as part of SUSE Linux Enterprise Server, you might also need additional NVIDIA components. These could include OpenGL libraries, CUDA toolkits, command-line utilities such as nvidia-smi, and container-integration components such as nvidia-container-toolkit. Many of these components are not shipped by SUSE as they are proprietary NVIDIA software.

This section describes how to configure additional repositories that give you access to these components and provides examples of using these tools to achieve a fully functional system.

2.3 The installation procedure

1. On the remote host, run the SUSE-AI-mirror-nvidia.sh script from the air-gapped stack (see Section 1.4, “Air-gapped stack”) to download all required NVIDIA RPM packages to a local directory, for example:

    > SUSE-AI-mirror-nvidia.sh \
      -p /LOCAL_MIRROR_DIRECTORY \
      -l https://nvidia.github.io/libnvidia-container/stable/rpm/x86_64 \
      https://developer.download.nvidia.com/compute/cuda/repos/sles15/x86_64/

   After the download is complete, transfer the downloaded directory with all its content to each GPU-enabled local host.

2. On each GPU-enabled local host, in its transactional-update shell session, add a package repository that points to the safely transferred NVIDIA RPM packages directory. This allows pulling in additional utilities, for example, nvidia-smi.

    # zypper ar --no-gpgcheck \
      file:///LOCAL_MIRROR_DIRECTORY \
      nvidia-local-mirror
    transactional update # zypper --gpg-auto-import-keys refresh

3. Install the Open Kernel driver KMP and detect the driver version.

    # zypper install -y --auto-agree-with-licenses \
      nv-prefer-signed-open-driver
    # version=$(rpm -qa --queryformat '%{VERSION}\n' \
      nv-prefer-signed-open-driver | cut -d "_" -f1 | sort -u | tail -n 1)

4. Install the matching packages for additional utilities that are useful for testing purposes.

    # zypper install -y --auto-agree-with-licenses \
      nvidia-compute-utils-G06=${version}

5. Reboot the host to make the changes effective.

    # reboot

6. Log back in and use the nvidia-smi tool to verify that the driver is loaded successfully and that it can both access and enumerate your GPUs.

    # nvidia-smi

   The output should look similar to the following. In this example, the system has one GPU.

    Fri Aug  1 15:32:10 2025
    +-----------------------------------------------------------------------------------------+
    | NVIDIA-SMI 575.57.08              Driver Version: 575.57.08      CUDA Version: 12.9     |
    |-----------------------------------------+------------------------+----------------------+
    | GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
    |                                         |                        |               MIG M. |
    |=========================================+========================+======================|
    |   0  Tesla T4                       On  |   00000000:00:1E.0 Off |                    0 |
    | N/A   33C    P8             13W /   70W |       0MiB /  15360MiB |      0%      Default |
    |                                         |                        |                  N/A |
    +-----------------------------------------+------------------------+----------------------+

    +-----------------------------------------------------------------------------------------+
    | Processes:                                                                              |
    |  GPU   GI   CI              PID   Type   Process name                        GPU Memory |
    |        ID   ID                                                               Usage      |
    |=========================================================================================|
    |  No running processes found                                                             |
    +-----------------------------------------------------------------------------------------+
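The version detection in the procedure above is a small text pipeline. The sketch below wraps the same cut, sort and tail stages in a function so that their effect can be seen on sample input. The function name and the sample version strings are made up for illustration; real values come from the rpm query shown in the procedure.

```shell
#!/bin/sh
# Hypothetical wrapper around the pipeline used in the procedure.
# Reads one RPM version string per line on stdin, drops everything
# from the first underscore onward, removes duplicates and prints
# the last entry in sort order.
latest_driver_version() {
    cut -d "_" -f1 | sort -u | tail -n 1
}

# Sample input with made-up values; prints 570.86.15
printf '%s\n' '570.86.15_k6.4.0_150600.21' '570.86.15' | latest_driver_version
```

Note that sort -u compares strings lexically. If several driver versions are installed at the same time, the version sort of GNU sort (sort -V) is likely the more robust choice.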

2.4 Validation of the driver installation

Running the nvidia-smi command has verified that, at the host level, the NVIDIA device can be accessed and that the drivers are loading successfully. To confirm that the GPU is functional, verify that it can take instructions from a user-space application, ideally via a container and through the CUDA library, as that is typically what a real workload would use. For this, you can make a further modification to the host OS by installing nvidia-container-toolkit.

1. Install the nvidia-container-toolkit package from the NVIDIA Container Toolkit repository.

    # zypper ar \
      https://nvidia.github.io/libnvidia-container/stable/rpm/nvidia-container-toolkit.repo
    # zypper --gpg-auto-import-keys install \
      -y nvidia-container-toolkit

   The nvidia-container-toolkit.repo file contains a stable repository, nvidia-container-toolkit, and an experimental repository, nvidia-container-toolkit-experimental. Use the stable repository for production use. The experimental repository is disabled by default.

2. Verify that the system can successfully enumerate the devices using the NVIDIA Container Toolkit. The output should be verbose, with INFO and WARN messages, but no ERROR messages.

    # nvidia-ctk cdi generate --output=/etc/cdi/nvidia.yaml

   This ensures that any container started on the machine can use the discovered NVIDIA GPU devices.

3. Run a Podman-based container. Doing this via podman is a good way of validating access to the NVIDIA device from within a container, which should give confidence for doing the same with Kubernetes at a later stage.

   Give Podman access to the NVIDIA devices that the previous command registered and run the bash command.

    # podman run --rm --device nvidia.com/gpu=all \
      --security-opt=label=disable \
      -it registry.suse.com/bci/bci-base:latest bash

   You can now execute commands from within a temporary Podman container. It does not have access to your underlying system and is ephemeral: whatever you change in the container does not persist. Also, you cannot break anything on the underlying host.

4. Inside the container, install the required CUDA libraries. Identify their version from the output of the nvidia-smi command. Following the example above, install CUDA version 12.9 together with examples, demos and development kits to fully validate the GPU.

    # zypper ar \
      https://developer.download.nvidia.com/compute/cuda/repos/sles15/x86_64/ \
      cuda-sle15-sp6
    # zypper --gpg-auto-import-keys refresh
    # zypper install -y cuda-libraries-12-9 cuda-demo-suite-12-9

5. Inside the container, run the deviceQuery CUDA example of the same version, which comprehensively validates GPU access via CUDA and from within the container itself.

    # /usr/local/cuda-12.9/extras/demo_suite/deviceQuery
    Starting...

     CUDA Device Query (Runtime API)

    Detected 1 CUDA Capable device(s)

    Device 0: "Tesla T4"
      CUDA Driver Version / Runtime Version          12.9 / 12.9
      CUDA Capability Major/Minor version number:    7.5
      Total amount of global memory:                 14913 MBytes (15637086208 bytes)
      (40) Multiprocessors, ( 64) CUDA Cores/MP:     2560 CUDA Cores
      GPU Max Clock rate:                            1590 MHz (1.59 GHz)
      Memory Clock rate:                             5001 Mhz
      Memory Bus Width:                              256-bit
      L2 Cache Size:                                 4194304 bytes
      Maximum Texture Dimension Size (x,y,z)         1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)
      Maximum Layered 1D Texture Size, (num) layers  1D=(32768), 2048 layers
      Maximum Layered 2D Texture Size, (num) layers  2D=(32768, 32768), 2048 layers
      Total amount of constant memory:               65536 bytes
      Total amount of shared memory per block:       49152 bytes
      Total number of registers available per block: 65536
      Warp size:                                     32
      Maximum number of threads per multiprocessor:  1024
      Maximum number of threads per block:           1024
      Max dimension size of a thread block (x,y,z):  (1024, 1024, 64)
      Max dimension size of a grid size (x,y,z):     (2147483647, 65535, 65535)
      Maximum memory pitch:                          2147483647 bytes
      Texture alignment:                             512 bytes
      Concurrent copy and kernel execution:          Yes with 3 copy engine(s)
      Run time limit on kernels:                     No
      Integrated GPU sharing Host Memory:            No
      Support host page-locked memory mapping:       Yes
      Alignment requirement for Surfaces:            Yes
      Device has ECC support:                        Enabled
      Device supports Unified Addressing (UVA):      Yes
      Device supports Compute Preemption:            Yes
      Supports Cooperative Kernel Launch:            Yes
      Supports MultiDevice Co-op Kernel Launch:      Yes
      Device PCI Domain ID / Bus ID / location ID:   0 / 0 / 30
      Compute Mode:
         < Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

    deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 12.9, CUDA Runtime Version = 12.9, NumDevs = 1, Device0 = Tesla T4
    Result = PASS

   From inside the container, you can continue to run any other CUDA workload, such as compilers, for further tests. When finished, exit the container.

    # exit

   Important: Changes you have made in the container and packages you have installed inside will be lost and will not impact the underlying operating system.
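For repeated or automated validation, the manual inspection of the deviceQuery output can be reduced to a check for its final result line. The following is a minimal sketch, assuming that a successful run prints Result = PASS on a line of its own, as in the example output above; the function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: reads deviceQuery output on stdin and
# succeeds only if it contains the line "Result = PASS".
device_query_passed() {
    grep -q '^Result = PASS[[:space:]]*$'
}

# Example use inside the container:
#   /usr/local/cuda-12.9/extras/demo_suite/deviceQuery | device_query_passed \
#       && echo "GPU validated" || echo "GPU validation failed" >&2
```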

3 Legal Notice

Copyright © 2006–2025 SUSE LLC and contributors. All rights reserved.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”.

For SUSE trademarks, see https://www.suse.com/company/legal/. All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks.

All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors, nor the translators shall be held liable for possible errors or the consequences thereof.