5 Deployment

This section describes the process steps for deploying the Rancher Kubernetes Engine solution. It covers how to deploy each component layer, starting with a basic functional proof-of-concept, and adds considerations for migrating toward production and guidance for scaling the solution.

5.1 Deployment overview

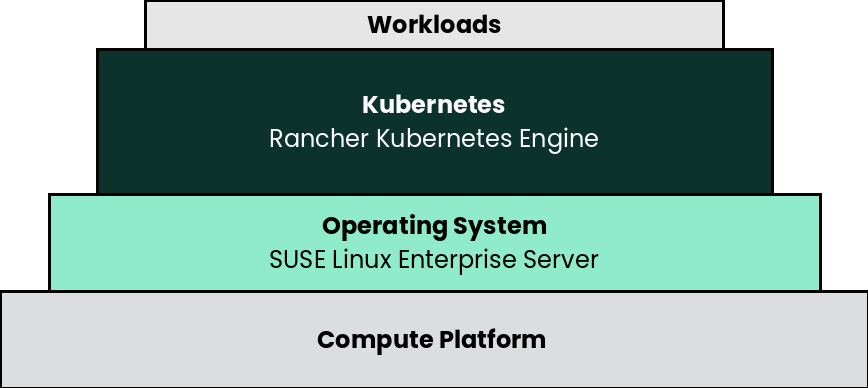

The deployment stack is represented in the following figure:

and details are covered for each layer in the following sections.

The following sections are ordered from the bottom layer of the stack up to the top.

5.2 Compute Platform

- Preparation(s)

For each node used in the deployment:

Validate that the necessary CPU, memory, disk capacity, and network interconnect quantity and type are present for each node and its intended role. Refer to the recommended CPU, memory, disk, and networking requirements noted in the Rancher Kubernetes Engine Hardware Requirements.
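As a rough aid for this validation, the following shell sketch compares a node's CPU count, total memory, and free disk space against minimums that you supply. The function name check_node_capacity is hypothetical, and no thresholds are built in: take them from the Rancher Kubernetes Engine Hardware Requirements for the node's intended role.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare this node's CPU count, total memory, and free
# disk space against minimums taken from the RKE hardware requirements.
# Usage: check_node_capacity MIN_CPUS MIN_MEM_GIB MIN_DISK_GIB [MOUNT_POINT]
check_node_capacity() {
  local min_cpus=$1 min_mem_gib=$2 min_disk_gib=$3 mount_point=${4:-/}
  local rc=0 cpus mem_kib disk_kib

  cpus=$(nproc)
  # MemTotal in /proc/meminfo is reported in KiB
  mem_kib=$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)
  # Available space in KiB; -P keeps each filesystem on a single output line
  disk_kib=$(df -P -k "$mount_point" | awk 'NR == 2 { print $4 }')

  if [ "$cpus" -lt "$min_cpus" ]; then
    echo "CPU: found $cpus, need at least $min_cpus" >&2
    rc=1
  fi
  if [ "$mem_kib" -lt $((min_mem_gib * 1024 * 1024)) ]; then
    echo "Memory: found $((mem_kib / 1048576)) GiB, need at least $min_mem_gib GiB" >&2
    rc=1
  fi
  if [ "$disk_kib" -lt $((min_disk_gib * 1024 * 1024)) ]; then
    echo "Disk ($mount_point): $((disk_kib / 1048576)) GiB free, need at least $min_disk_gib GiB" >&2
    rc=1
  fi
  return "$rc"
}

# Example (placeholder values, not official requirements):
#   check_node_capacity 4 16 100 / && echo "node meets the chosen minimums"
```

The function reports every shortfall before returning, rather than stopping at the first one, so a single run shows everything that needs attention on a node.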

Further suggestions

Disk: Ensure that a pair of local, direct-attached, mirrored disk drives is present on each node (SSDs are preferred); these will become the target for the operating system installation.

Network: Prepare an IP addressing scheme and optionally create both a public and a private network, along with the respective subnets and desired VLAN designations for the target environment.

Baseboard Management Controller: If present, consider using a distinct management network for controlled access.

Boot Settings: Reset BIOS/uEFI settings to their defaults for a known, consistent baseline, or apply the desired localized values.

Firmware: Use consistent and up-to-date versions of BIOS/uEFI/device firmware to reduce potential troubleshooting issues later.

5.3 SUSE Linux Enterprise Server

As the base software layer, use an enterprise-grade Linux operating system, for example SUSE Linux Enterprise Server.

- Preparation(s)

To meet the solution stack prerequisites and requirements, SUSE operating system offerings, such as SUSE Linux Enterprise Server, can be used.

Ensure these services are in place and configured for this node to use:

Domain Name Service (DNS) - an external network-accessible service to map IP addresses to host names

Network Time Protocol (NTP) - an external network-accessible service to obtain and synchronize system times to aid in time stamp consistency

Software Update Service - access to a network-based repository for software update packages. This can be accessed directly from each node via registration to

the general, internet-based SUSE Customer Center (SCC) or

an organization’s SUSE Manager infrastructure or

a local server running an instance of Repository Mirroring Tool (RMT)

Note: During the node’s installation, it can be pointed to the respective update service. This can also be accomplished after installation with the command-line tool SUSEConnect.
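Before continuing, the availability of these services can be spot-checked from the node. The following sketch is one way to do it, assuming a systemd-based SUSE Linux Enterprise Server 15 node; the function names are hypothetical, the registration code and RMT server URL are placeholders, and getent consults /etc/hosts as well as DNS, so it confirms name resolution in general rather than DNS alone.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helpers for the external services listed above.

# Name resolution through the system resolver (/etc/hosts and DNS)
check_dns() {
  getent hosts "$1" >/dev/null
}

# Succeeds once systemd reports the system clock as NTP-synchronized
check_time_sync() {
  [ "$(timedatectl show -p NTPSynchronized --value 2>/dev/null)" = "yes" ]
}

# Shows the registration status of installed products. To register after
# installation, use "SUSEConnect -r <REGISTRATION_CODE>" for SCC or
# "SUSEConnect --url <RMT_SERVER_URL>" for an RMT server (placeholders).
check_registration() {
  sudo SUSEConnect --status-text
}

# Example run on the node:
#   check_dns "$(hostname -f)" && echo "name resolution OK"
#   check_time_sync && echo "time synchronized"
#   check_registration
```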

- Deployment Process

On the compute platform node, install the noted SUSE operating system by following these steps:

Download the SUSE Linux Enterprise Server product (either the ISO or a virtual machine image).

Identify the appropriate, supported version of SUSE Linux Enterprise Server by reviewing the support matrix for SUSE Rancher versions Web page.

The installation can be performed with default values by following the steps in the product documentation; see Installation Quick Start.

Tip: Adjust both the password and the local network addressing setup to comply with local environment guidelines and requirements.

- Deployment Consideration(s)

To further optimize deployment factors, leverage the following practices:

To reduce user intervention, unattended deployments of SUSE Linux Enterprise Server can be automated; for ISO-based installations, refer to the AutoYaST Guide.

5.4 Rancher Kubernetes Engine

- Preparation(s)

Identify the appropriate, desired version of the Rancher Kubernetes Engine binary (for example vX.Y.Z) that includes the needed Kubernetes version by reviewing

the "Supported Rancher Kubernetes Engine Versions" associated with the respective SUSE Rancher version from "Rancher Kubernetes Engine Downstream Clusters" section, or

the "Releases" on the Download Web page.
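Once a version is identified, the binary can be fetched from the rancher/rke GitHub releases. The sketch below assumes a Linux admin workstation with curl and sudo rights; the function names are hypothetical, and the version argument stands for the vX.Y.Z chosen above. After installation, rke config --list-version --all lists the Kubernetes versions that this RKE binary supports.

```shell
#!/usr/bin/env bash
# Sketch: download and install an RKE release binary on a Linux admin
# workstation. Function names are hypothetical; VERSION is the vX.Y.Z
# identified above.

# Prints the GitHub release URL for a given RKE version and CPU architecture
rke_download_url() {
  local version=$1 arch=${2:-amd64}
  echo "https://github.com/rancher/rke/releases/download/${version}/rke_linux-${arch}"
}

install_rke() {
  local version=$1 dest=${2:-/usr/local/bin/rke} tmp
  tmp=$(mktemp) || return 1
  # -f makes curl fail on HTTP errors instead of saving an error page
  if ! curl -fsSL -o "$tmp" "$(rke_download_url "$version")"; then
    rm -f "$tmp"
    return 1
  fi
  # Install world-executable, then remove the temporary download
  sudo install -m 0755 "$tmp" "$dest" || return 1
  rm -f "$tmp"
  # Confirm the binary runs, then list the Kubernetes versions it supports
  "$dest" --version
  "$dest" config --list-version --all
}

# Example: install_rke vX.Y.Z
```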

On the target node with a default installation of SUSE Linux Enterprise Server operating system, log in to the node either as root or as a user with sudo privileges and enable the required container runtime engine:

```shell
# Activate the Containers Module (15.3 corresponds to SUSE Linux Enterprise Server 15 SP3)
sudo SUSEConnect -p sle-module-containers/15.3/x86_64
sudo zypper refresh
sudo zypper install docker
sudo systemctl enable --now docker.service
```

Then validate that the container runtime engine is working:

```shell
sudo systemctl status docker.service
sudo docker ps --all
```

For the underlying operating system firewall service, either

enable and configure the necessary inbound ports or

stop and completely disable the firewall service.
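If you keep firewalld enabled, the following sketch opens a set of inbound ports commonly needed by a node that carries all three RKE roles. The port list is an assumption based on the upstream RKE port requirements for the default Canal network plugin; confirm it against the Rancher documentation for your version and plugin. The names RKE_NODE_PORTS, open_rke_ports, and FIREWALL_CMD are hypothetical; setting FIREWALL_CMD=echo prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: open inbound ports for an all-roles RKE node (controlplane, etcd,
# worker) with firewalld. The port list is an assumption; verify it against
# the RKE port requirements. Run as root, or set FIREWALL_CMD=echo for a dry run.
RKE_NODE_PORTS=(
  22/tcp                           # SSH from the admin workstation (rke up)
  80/tcp 443/tcp                   # ingress controller
  2379-2380/tcp                    # etcd client and peer traffic
  6443/tcp                         # kube-apiserver
  8472/udp                         # Canal/Flannel VXLAN overlay
  9099/tcp                         # Canal health checks
  10250/tcp                        # kubelet API
  10254/tcp                        # ingress controller health checks
  30000-32767/tcp 30000-32767/udp  # NodePort services
)

open_rke_ports() {
  local fw=${FIREWALL_CMD:-firewall-cmd} port
  for port in "${RKE_NODE_PORTS[@]}"; do
    $fw --permanent --add-port="$port" || return 1
  done
  # Load the permanent configuration into the running firewall
  $fw --reload
}
```

The other option, stopping and disabling the firewall service entirely, corresponds to sudo systemctl disable --now firewalld.service.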

- Deployment Process

The primary steps for deploying this Rancher Kubernetes Engine Kubernetes cluster are:

Note: Installing Rancher Kubernetes Engine requires a client system (for example, an admin workstation) that has been configured with kubectl.

Download the Rancher Kubernetes Engine binary according to the instructions on the product documentation page, then follow the directions on that page, with the following exceptions:

Create the cluster.yml file with the command:

```shell
rke config
```

Note: See the product documentation for example-yamls and config-options for detailed examples and descriptions of the cluster.yml parameters.

It is recommended to create a unique SSH key for this Rancher Kubernetes Engine cluster with the command:

```shell
ssh-keygen
```

Provide the path to that key for the option "Cluster Level SSH Private Key Path".

The option "Number of Hosts" refers to the number of hosts to configure at this time

Additional hosts can easily be added after Rancher Kubernetes Engine cluster creation

For this implementation it is recommended to configure one or three hosts

Give all hosts the roles of "Control Plane", "Worker", and "etcd"

Answer "n" for the option "Enable PodSecurityPolicy"

Update the cluster.yml file before continuing with the step "Deploying Kubernetes with RKE"

If a load balancer has been deployed for the Rancher Kubernetes Engine control-plane nodes, update the cluster.yml file before deploying Rancher Kubernetes Engine to include the IP address or FQDN of the load balancer. The appropriate location is under authentication.sans. For example, with LB_IP_Host set to the load balancer's IP address or FQDN:

```shell
LB_IP_Host=""
```

```yaml
authentication:
  strategy: x509
  sans: ["${LB_IP_Host}"]
```

Verify password-less SSH is available from the admin workstation to each of the cluster hosts as the user specified in the cluster.yml file.
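The password-less SSH check can be scripted from the admin workstation. This sketch assumes the SSH user and private key path that you set in cluster.yml; check_rke_ssh and the SSH override variable are hypothetical, and the host names are placeholders. It runs docker ps on each host because RKE drives Docker on the nodes over SSH, so the SSH user also needs access to the Docker socket.

```shell
#!/usr/bin/env bash
# Sketch: confirm password-less SSH (and Docker access) to each cluster host.
# BatchMode=yes makes ssh fail instead of prompting for a password.
# Set SSH to a stub command (for example SSH=true) for a dry run.
# Usage: check_rke_ssh KEY_PATH SSH_USER HOST...
check_rke_ssh() {
  local key=$1 user=$2 host rc=0
  shift 2
  for host in "$@"; do
    if ${SSH:-ssh} -i "$key" -o BatchMode=yes -o ConnectTimeout=10 \
         "${user}@${host}" docker ps >/dev/null 2>&1; then
      echo "OK   ${user}@${host}"
    else
      echo "FAIL ${user}@${host}" >&2
      rc=1
    fi
  done
  return "$rc"
}

# One-time key setup (paths, user, and hosts are placeholders):
#   ssh-keygen -t rsa -b 4096 -f ~/.ssh/rke_cluster
#   ssh-copy-id -i ~/.ssh/rke_cluster.pub rkeadmin@<host>
# Check:
#   check_rke_ssh ~/.ssh/rke_cluster rkeadmin <host1> <host2> <host3>
```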

When ready, run the following command to create the RKE cluster:

```shell
rke up
```

After the rke up command completes, the RKE cluster will continue the Kubernetes installation process. Monitor the progress of the installation:

Export the variable KUBECONFIG with the absolute path name of the kube_config_cluster.yml file, for example:

```shell
export KUBECONFIG=~/rke-cluster/kube_config_cluster.yml
```

Run the command:

```shell
watch -c "kubectl get deployments -A"
```

The cluster deployment is complete when all the deployments show at least "1" as "AVAILABLE".

Use Ctrl+c to exit the watch loop after all deployment pods are running.
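Where an interactive watch loop is inconvenient (for example, in an automation script), the same completion criterion can be checked programmatically. This sketch assumes KUBECONFIG is already exported and that kubectl get deployments -A --no-headers prints its default columns (NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE); the function names and the 900-second default timeout are arbitrary choices.

```shell
#!/usr/bin/env bash
# Reads "kubectl get deployments -A --no-headers" output on stdin and succeeds
# only if there is at least one row and every row shows AVAILABLE >= 1.
# Columns: NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE
all_deployments_available() {
  awk 'BEGIN { rows = 0; bad = 0 }
       { rows++; if ($5 + 0 < 1) bad = 1 }
       END { exit (rows == 0 || bad) }'
}

# Polls the cluster until all deployments are available or the timeout
# (in seconds) expires.
wait_for_deployments() {
  local timeout=${1:-900} interval=10 waited=0
  until kubectl get deployments -A --no-headers 2>/dev/null | all_deployments_available; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "Timed out after ${timeout}s waiting for deployments" >&2
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "All deployments report at least one available replica"
}
```

Treating an empty listing as "not ready" avoids reporting success while the API server is still coming up and kubectl returns nothing.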

Tip: Rancher Kubernetes Engine stores the cluster state in etcd; to address availability and possible scaling to a multiple-node cluster, assign the etcd role to the hosts.

- Deployment Consideration(s)

To further optimize deployment factors, leverage the following practices:

A full high-availability Rancher Kubernetes Engine cluster is recommended for production workloads. For this use case, two additional hosts should be added, for a total of three. All three hosts will perform the roles of control-plane, etcd, and worker.

Deploy the same operating system on the new compute platform nodes, and prepare them in the same way as the first node

Update the cluster.yml file to include the additional nodes

Using a text editor, copy the information for the first node (found under the "nodes:" section)

The node information usually starts with "- address:" and ends with the start of another node entry, or the beginning of the "services:" section, for example:

```yaml
- address: 172.16.240.71
  port: "22"
  internal_address: ""
  role:
  - controlplane
  - worker
  - etcd
  . . .
  labels: {}
  taints: []
```

Paste the information into the same section, once for each additional host

Update the pasted information, as appropriate, for each additional host
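Instead of copying by hand, entries for the additional hosts can be generated and then pasted under "nodes:". The following sketch prints an entry with the controlplane, worker, and etcd roles; rke_node_entry is hypothetical, and the addresses and SSH user in the example are placeholders. Review the output against the first node's entry, since any other fields set there (for example ssh_key_path or labels) are not reproduced.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a cluster.yml "nodes:" entry for a host that
# performs all three roles.
# Usage: rke_node_entry ADDRESS SSH_USER
rke_node_entry() {
  local address=$1 ssh_user=$2
  cat <<EOF
- address: ${address}
  port: "22"
  internal_address: ""
  user: ${ssh_user}
  role:
  - controlplane
  - worker
  - etcd
EOF
}

# Example for two additional hosts (placeholder addresses and user):
#   for ip in 172.16.240.72 172.16.240.73; do rke_node_entry "$ip" rkeadmin; done
```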

When the cluster.yml file is updated with the information specific to each node, run the command:

```shell
rke up
```

Then monitor the progress with the command:

```shell
watch -c "kubectl get deployments -A"
```

The cluster deployment is complete when all the deployments show at least "1" as "AVAILABLE".

Use Ctrl+c to exit the watch loop after all deployment pods are running.
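Besides the deployments, it can help to confirm that every host has joined the cluster. This sketch assumes kubectl get nodes --no-headers prints its default columns (NAME STATUS ROLES AGE VERSION); count_ready_nodes is hypothetical, and it counts only nodes whose status is exactly "Ready", so a combined status such as "Ready,SchedulingDisabled" is not counted.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads "kubectl get nodes --no-headers" on stdin
# (columns: NAME STATUS ROLES AGE VERSION) and prints the number of nodes
# whose STATUS is exactly "Ready".
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Example, after expanding to three hosts:
#   [ "$(kubectl get nodes --no-headers | count_ready_nodes)" -eq 3 ] \
#     && echo "all three nodes are Ready"
```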

After this successful deployment of the Rancher Kubernetes Engine solution, review the product documentation for details on how to use this Kubernetes cluster directly. Furthermore, as described in the SUSE Rancher product documentation, this cluster can also be:

imported (refer to subsection "Importing Existing Clusters"), then

managed (refer to subsection "Cluster Administration") and

accessed (refer to subsection "Cluster Access"),

so that functions such as workload orchestration and security maintenance are readily available.