Installation Details

The installation is split into two use cases: single-cluster and multi-cluster. Use the single-cluster install if you want to manage a single cluster with GitOps; in that case you do not need a centralized manager cluster. In the multi-cluster use case, you set up a centralized manager cluster with which you can register clusters.

If you are just learning SUSE® Rancher Prime Continuous Delivery, the single-cluster install is the recommended starting point. You can move from a single-cluster to a multi-cluster setup later.

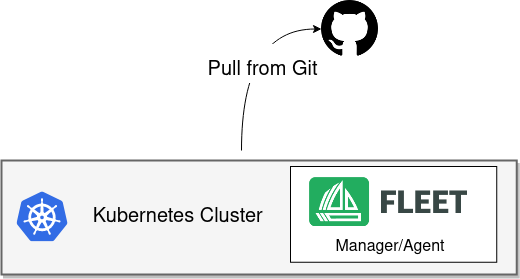

Single-cluster is the default installation. The same cluster runs both the SUSE® Rancher Prime Continuous Delivery manager and the SUSE® Rancher Prime Continuous Delivery agent. The cluster communicates with the Git server to deploy resources to this local cluster. This is the simplest setup and is very useful for dev/test and small-scale setups. It is also supported for production use.

Prerequisites

* Helm 3

* Kubernetes

SUSE® Rancher Prime Continuous Delivery is distributed as a Helm chart. Helm 3 is a CLI with no server-side component and is fairly straightforward to use. To install the Helm 3 CLI, follow the official install instructions.

SUSE® Rancher Prime Continuous Delivery is a controller running on a Kubernetes cluster, so an existing cluster is required. For the single-cluster use case, you install SUSE® Rancher Prime Continuous Delivery on the cluster that you intend to manage with GitOps. Any version of Kubernetes supported by the Kubernetes community will work; in practice this means 1.20.5 or greater.
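The version requirement above can be checked from a shell before installing. The following is a minimal sketch and not part of the product: the helper names `normalize_version` and `version_at_least` are invented for illustration, and it assumes a `sort` that supports `-V` (GNU coreutils and recent BSD/macOS versions do).

```shell
# Hypothetical helpers for illustration; not shipped with Fleet, Helm or kubectl.

# Strip a leading "v" and any "+suffix" or "-suffix" (for example "+k3s1").
normalize_version() {
    printf '%s\n' "$1" | sed -e 's/^v//' -e 's/[+-].*$//'
}

# Succeed when version $1 is greater than or equal to version $2.
version_at_least() {
    # sort -V orders version strings; the required minimum must sort first.
    lowest=$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)
    [ "$lowest" = "$2" ]
}

# Usage (requires kubectl, jq and a reachable cluster):
#   server=$(kubectl version -o json | jq -r '.serverVersion.gitVersion')
#   version_at_least "$(normalize_version "$server")" 1.20.5 \
#       || echo "Kubernetes $server is older than 1.20.5" >&2
```

The comparison is done on the normalized string because distributions often append build metadata to the server version.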

Default Install

Install the following two Helm charts.


Note: SUSE® Rancher Prime Continuous Delivery in Rancher

Rancher has separate Helm charts for SUSE® Rancher Prime Continuous Delivery and uses a different repository.

First, add Fleet’s Helm repository.

helm repo add fleet https://rancher.github.io/fleet-helm-charts/

Second, install the SUSE® Rancher Prime Continuous Delivery CustomResourceDefinitions.

helm -n cattle-fleet-system install --create-namespace --wait fleet-crd \
    fleet/fleet-crd

Third, install the SUSE® Rancher Prime Continuous Delivery controllers.

helm -n cattle-fleet-system install --create-namespace --wait fleet \
    fleet/fleet

SUSE® Rancher Prime Continuous Delivery should now be ready to use for a single cluster. You can check the status of the SUSE® Rancher Prime Continuous Delivery controller pods by running the commands below.

kubectl -n cattle-fleet-system logs -l app=fleet-controller
kubectl -n cattle-fleet-system get pods -l app=fleet-controller

NAME                                READY   STATUS    RESTARTS   AGE
fleet-controller-64f49d756b-n57wq   1/1     Running   0          3m21s

You can now register some Git repos in the fleet-local namespace to start deploying Kubernetes resources.
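The pod status check above can also be scripted, for example in a CI job. This is only a sketch: `not_ready_pods` is a hypothetical helper that parses the default table output of `kubectl get pods`, so it assumes the column order shown above (NAME, READY, STATUS).

```shell
# Hypothetical helper: reads `kubectl get pods` table output on stdin and
# prints the names of pods that are not fully ready, meaning READY is x/y
# with x != y, or STATUS is anything other than Running.
not_ready_pods() {
    awk 'NR > 1 {
        split($2, ready, "/")
        if (ready[1] != ready[2] || $3 != "Running") print $1
    }'
}

# Usage (requires kubectl and a reachable cluster):
#   kubectl -n cattle-fleet-system get pods -l app=fleet-controller | not_ready_pods
```

An empty result means every listed pod is running and ready. If you would rather block until the pods are ready, `kubectl wait --for=condition=Ready pod -l app=fleet-controller -n cattle-fleet-system --timeout=120s` is an alternative that needs no parsing.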

Multi-controller install: sharding

Deployment

From 0.10 onwards, SUSE® Rancher Prime Continuous Delivery supports static sharding. Each shard is defined by its shard ID. Optionally, a shard can have a [node selector](https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#nodeselector), instructing SUSE® Rancher Prime Continuous Delivery to create all controller pods and jobs for that shard on nodes matching that selector.

The SUSE® Rancher Prime Continuous Delivery controller chart can be installed with the following arguments:

* --set shards[$index].id=$shard_id

* --set shards[$index].nodeSelector.$key=$value

This will result in:

* as many SUSE® Rancher Prime Continuous Delivery controller and gitjob deployments as there are unique shard IDs specified,

* plus the usual unsharded SUSE® Rancher Prime Continuous Delivery controller pod. That latter pod will be the only one containing agent management and cleanup containers.

For instance:

$ helm -n cattle-fleet-system install --create-namespace --wait fleet fleet/fleet \
    --set shards[0].id=foo \
    --set shards[0].nodeSelector."kubernetes\.io/hostname"=k3d-upstream-server-0 \
    --set shards[1].id=bar \
    --set shards[1].nodeSelector."kubernetes\.io/hostname"=k3d-upstream-server-1 \
    --set shards[2].id=baz \
    --set shards[2].nodeSelector."kubernetes\.io/hostname"=k3d-upstream-server-2

$ kubectl -n cattle-fleet-system get pods -l app=fleet-controller \
    -o=custom-columns='Name:.metadata.name,Shard-ID:.metadata.labels.fleet\.cattle\.io/shard-id,Node:spec.nodeName'

Name                                          Shard-ID   Node
fleet-controller-b4c469c85-rj2q8              <none>     k3d-upstream-server-2
fleet-controller-shard-bar-5f5999958f-nt4bm   bar        k3d-upstream-server-1
fleet-controller-shard-baz-75c8587898-2wkk9   baz        k3d-upstream-server-2
fleet-controller-shard-foo-55478fb9d8-42q2f   foo        k3d-upstream-server-0

$ kubectl -n cattle-fleet-system get pods -l app=gitjob \
    -o=custom-columns='Name:.metadata.name,Shard-ID:.metadata.labels.fleet\.cattle\.io/shard-id,Node:spec.nodeName'

Name                                Shard-ID   Node
gitjob-8498c6d78b-mdhgh             <none>     k3d-upstream-server-1
gitjob-shard-bar-8659ffc945-9vtlx   bar        k3d-upstream-server-1
gitjob-shard-baz-6d67f596dc-fsz9m   baz        k3d-upstream-server-2
gitjob-shard-foo-8697bb7f67-wzsfj   foo        k3d-upstream-server-0

How it works

With sharding in place, each SUSE® Rancher Prime Continuous Delivery controller will process resources bearing its own shard ID. This also holds for the unsharded controller, which has no set shard ID and will therefore process all unsharded resources.

To deploy a GitRepo for a specific shard, add the label fleet.cattle.io/shard-ref with your desired shard ID as its value.

Here is an example:

$ kubectl apply -n fleet-local -f - <<EOF
kind: GitRepo
apiVersion: fleet.cattle.io/v1alpha1
metadata:
  name: sharding-test
  labels:
    fleet.cattle.io/shard-ref: foo
spec:
  repo: https://github.com/rancher/fleet-examples
  paths:
  - single-cluster/helm
EOF

A GitRepo with a label ID for which a SUSE® Rancher Prime Continuous Delivery controller is deployed (e.g. foo in the above example) will then be processed by that controller.

On the other hand, a GitRepo with an unknown label ID (e.g. boo, for which no shard was deployed above) will not be processed by any SUSE® Rancher Prime Continuous Delivery controller, hence no resources other than the GitRepo itself will be created.
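Because a GitRepo labelled with an unknown shard ID is left unprocessed, it can be worth comparing the label against the deployed shard IDs before applying a manifest. A minimal sketch follows; `shard_is_deployed` is a hypothetical helper, and the kubectl query in the usage comment assumes the fleet.cattle.io/shard-id pod label shown in the pod listings earlier.

```shell
# Hypothetical helper: succeed when shard ID $1 appears among the
# remaining arguments (the list of deployed shard IDs).
shard_is_deployed() {
    wanted=$1
    shift
    for deployed in "$@"; do
        [ "$deployed" = "$wanted" ] && return 0
    done
    return 1
}

# Usage (requires kubectl and a reachable cluster); $deployed is left
# unquoted on purpose so that it splits into one argument per shard ID:
#   deployed=$(kubectl -n cattle-fleet-system get pods -l app=fleet-controller \
#       -o jsonpath='{.items[*].metadata.labels.fleet\.cattle\.io/shard-id}')
#   shard_is_deployed foo $deployed || echo "no controller for shard foo" >&2
```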

Removing or adding supported shard IDs currently requires redeploying SUSE® Rancher Prime Continuous Delivery with a new set of shard IDs.
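Since changing the set of shards means redeploying, it can help to generate the `--set` flags from a plain list of shard IDs rather than maintaining the indices by hand. The sketch below is an illustration only: `shard_set_args` is a hypothetical helper, it covers only the `shards[$index].id` argument (not node selectors), and it assumes shard IDs contain no whitespace.

```shell
# Hypothetical helper: prints one "--set shards[N].id=ID" line per shard ID,
# numbering the shards from 0 in the order given.
shard_set_args() {
    index=0
    for shard_id in "$@"; do
        printf '%s shards[%d].id=%s\n' --set "$index" "$shard_id"
        index=$((index + 1))
    done
}

# Usage: preview the flags, then pass them to helm unquoted so that the
# shell splits them into separate arguments:
#   shard_set_args foo bar baz
#   helm -n cattle-fleet-system install --create-namespace --wait fleet fleet/fleet \
#       $(shard_set_args foo bar baz)
```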

Configuration for Multi-Cluster

Note: Downstream clusters in Rancher are automatically registered in SUSE® Rancher Prime Continuous Delivery. Users can access SUSE® Rancher Prime Continuous Delivery from the Rancher UI. The multi-cluster install described below is only covered in standalone Fleet, which is untested by Rancher QA.

Note: The setup is the same as for a single cluster. After installing the SUSE® Rancher Prime Continuous Delivery manager, you need to register remote downstream clusters with it. However, to allow for manager-initiated registration of downstream clusters, a few extra settings are required. Without the API server URL and the CA, only agent-initiated registration of downstream clusters is possible.

API Server URL and CA certificate

For your SUSE® Rancher Prime Continuous Delivery manager installation to work properly, the correct API server URL and CA certificates must be configured. The SUSE® Rancher Prime Continuous Delivery agents communicate with the Kubernetes API server URL, which means the Kubernetes API server must be accessible to the downstream clusters. You will also need to obtain the CA certificate of the API server. The easiest way to obtain this information is typically from your kubeconfig file ($HOME/.kube/config). The server and certificate-authority-data (or certificate-authority) fields hold these values.

$HOME/.kube/config:

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tLS1CRUdJTi...
    server: https://<EXAMPLE_URL>:6443

Extract CA certificate

Note that the certificate-authority-data field is base64-encoded and must be decoded before you save it into a file. To do so, save the base64-encoded contents to a file and then run:

base64 -d encoded-file > ca.pem

Next, retrieve the CA certificate from your kubeconfig.


If you have jq and base64 available, this one-liner will pull all CA certificates from your KUBECONFIG and place them in a file named ca.pem.

kubectl config view -o json --raw | jq -r '.clusters[].cluster["certificate-authority-data"]' | base64 -d > ca.pem

Or, if you have a multi-cluster setup, you can use this command:

# replace CLUSTERNAME with the name of the cluster according to your KUBECONFIG
kubectl config view -o json --raw | jq -r '.clusters[] | select(.name=="CLUSTERNAME").cluster["certificate-authority-data"]' | base64 -d > ca.pem

Extract API Server

If you have a multi-cluster setup, you can use this command:

# replace CLUSTERNAME with the name of the cluster according to your KUBECONFIG
API_SERVER_URL=$(kubectl config view -o json --raw | jq -r '.clusters[] | select(.name=="CLUSTERNAME").cluster["server"]')
# Leave empty if your API server is signed by a well known CA
API_SERVER_CA="ca.pem"

Validate

First, validate that the server URL is correct.

curl -fLk "$API_SERVER_URL/version"

The output of this command should be JSON with the version of the Kubernetes server, or a 401 Unauthorized error. If you do not get either of these results, then please ensure you have the correct URL. The API server port is typically 6443 for Kubernetes.
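If you want to script this check, one option is to capture the HTTP status code and accept the two outcomes described above. This is a sketch under assumptions: `api_status_ok` is a hypothetical helper, and the usage comment drops `-f` so that curl reports a 401 as a status code instead of failing.

```shell
# Hypothetical helper: succeed for the two HTTP status codes that indicate
# the API server was reached (200 with version JSON, or 401 Unauthorized).
api_status_ok() {
    case "$1" in
        200|401) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage (requires curl and a reachable API server); curl reports 000 when
# no HTTP response was received at all:
#   code=$(curl -skL -o /dev/null -w '%{http_code}' "$API_SERVER_URL/version")
#   api_status_ok "$code" || echo "unexpected response: $code" >&2
```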

Next, validate that the CA certificate is correct by running the command below. If your API server is signed by a well-known CA, then omit the --cacert "$API_SERVER_CA" part of the command.

curl -fL --cacert "$API_SERVER_CA" "$API_SERVER_URL/version"

If you get a valid JSON response or a 401 Unauthorized, then it worked. The Unauthorized error occurs only because the curl command is not setting proper credentials, but it confirms that the TLS connection works and that ca.pem is correct for this URL. If you get an SSL certificate problem, then ca.pem is not correct. The contents of the $API_SERVER_CA file should look similar to the following:

-----BEGIN CERTIFICATE-----
MIIBVjCB/qADAgECAgEAMAoGCCqGSM49BAMCMCMxITAfBgNVBAMMGGszcy1zZXJ2
ZXItY2FAMTU5ODM5MDQ0NzAeFw0yMDA4MjUyMTIwNDdaFw0zMDA4MjMyMTIwNDda
MCMxITAfBgNVBAMMGGszcy1zZXJ2ZXItY2FAMTU5ODM5MDQ0NzBZMBMGByqGSM49
AgEGCCqGSM49AwEHA0IABDXlQNkXnwUPdbSgGz5Rk6U9ldGFjF6y1YyF36cNGk4E
0lMgNcVVD9gKuUSXEJk8tzHz3ra/+yTwSL5xQeLHBl+jIzAhMA4GA1UdDwEB/wQE
AwICpDAPBgNVHRMBAf8EBTADAQH/MAoGCCqGSM49BAMCA0cAMEQCIFMtZ5gGDoDs
ciRyve+T4xbRNVHES39tjjup/LuN4tAgAiAteeB3jgpTMpZyZcOOHl9gpZ8PgEcN
KDs/pb3fnMTtpA==
-----END CERTIFICATE-----

Install for Multi-Cluster

The following example assumes that the API server URL from the KUBECONFIG is https://example.com:6443 and that the CA certificate is in the file ca.pem. If your API server certificate is signed by a well-known CA, you can omit the apiServerCA parameter below or just create an empty ca.pem file (i.e. touch ca.pem).
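A common mistake is to pass a ca.pem that still holds the base64-encoded kubeconfig value rather than the decoded certificate. The sketch below classifies a file as empty, PEM, or neither, so that case is caught before installing. `ca_file_kind` is a hypothetical helper; it only looks at the first and last lines and does not verify the certificate itself.

```shell
# Hypothetical helper: prints "empty" when the file is missing or empty,
# "pem" when it starts and ends with PEM certificate markers, and
# "invalid" otherwise (for example, when it is still base64-encoded).
ca_file_kind() {
    if [ ! -s "$1" ]; then
        echo empty
    elif [ "$(head -n 1 "$1")" = "-----BEGIN CERTIFICATE-----" ] &&
         [ "$(tail -n 1 "$1")" = "-----END CERTIFICATE-----" ]; then
        echo pem
    else
        echo invalid
    fi
}

# Usage:
#   [ "$(ca_file_kind ca.pem)" != invalid ] || echo "ca.pem is not a PEM file" >&2
```

The check also accepts a file containing several concatenated certificates, since such a file still begins and ends with the same markers.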

Set up the environment with your specific values, e.g.:

API_SERVER_URL="https://example.com:6443"
API_SERVER_CA="ca.pem"

Once you have validated the API server URL and API server CA parameters, install the following two Helm charts.


First, add Fleet’s Helm repository.

helm repo add fleet https://rancher.github.io/fleet-helm-charts/

Second, install the SUSE® Rancher Prime Continuous Delivery CustomResourceDefinitions.

helm -n cattle-fleet-system install --create-namespace --wait \
    fleet-crd fleet/fleet-crd

Third, install the SUSE® Rancher Prime Continuous Delivery controllers.

helm -n cattle-fleet-system install --create-namespace --wait \
    --set apiServerURL="$API_SERVER_URL" \
    --set-file apiServerCA="$API_SERVER_CA" \
    fleet fleet/fleet

SUSE® Rancher Prime Continuous Delivery should be ready to use. You can check the status of the SUSE® Rancher Prime Continuous Delivery controller pods by running the commands below.

kubectl -n cattle-fleet-system logs -l app=fleet-controller
kubectl -n cattle-fleet-system get pods -l app=fleet-controller

NAME                                READY   STATUS    RESTARTS   AGE
fleet-controller-64f49d756b-n57wq   1/1     Running   0          3m21s

At this point the SUSE® Rancher Prime Continuous Delivery manager should be ready. You can now register clusters and Git repos with the SUSE® Rancher Prime Continuous Delivery manager.