1 Product overview #

SUSE® Linux Enterprise High Availability is an integrated suite of open source clustering technologies. It enables you to implement highly available physical and virtual Linux clusters, and to eliminate single points of failure. It ensures the high availability and manageability of critical network resources including data, applications, and services. Thus, it helps you maintain business continuity, protect data integrity, and reduce unplanned downtime for your mission-critical Linux workloads.

It ships with essential monitoring, messaging, and cluster resource management functionality, supporting failover, failback, and migration (load balancing) of individually managed cluster resources.

This chapter introduces the main product features and benefits of SUSE Linux Enterprise High Availability. Inside you will find several example clusters and learn about the components making up a cluster. The last section provides an overview of the architecture, describing the individual architecture layers and processes within the cluster.

For explanations of some common terms used in the context of High Availability clusters, refer to Glossary.

1.1 Availability as a module or extension #

High Availability is available as a module or extension for several products. For details, see https://documentation.suse.com/sles/html/SLES-all/article-modules.html#art-modules-high-availability.

1.2 Key features #

SUSE® Linux Enterprise High Availability helps you ensure and manage the availability of your network resources. The following sections highlight some of the key features:

1.2.1 Wide range of clustering scenarios #

SUSE Linux Enterprise High Availability supports the following scenarios:

Active/active configurations

Active/passive configurations: N+1, N+M, N to 1, N to M

Hybrid physical and virtual clusters, allowing virtual servers to be clustered with physical servers. This improves service availability and resource usage.

Local clusters

Metro clusters (“stretched” local clusters)

Geo clusters (geographically dispersed clusters)

All nodes belonging to a cluster should have the same processor platform: x86, IBM Z, or POWER. Clusters of mixed architectures are not supported.

Your cluster can contain up to 32 Linux servers. Using pacemaker_remote, the cluster can be extended to include additional Linux servers beyond this limit. Any server in the cluster can restart resources (applications, services, IP addresses, and file systems) from a failed server in the cluster.

1.2.2 Flexibility #

SUSE Linux Enterprise High Availability ships with the Corosync messaging and membership layer and the Pacemaker cluster resource manager. Using Pacemaker, administrators can continually monitor the health and status of their resources, and manage dependencies. They can automatically stop and start services based on highly configurable rules and policies. SUSE Linux Enterprise High Availability allows you to tailor a cluster to the specific applications and hardware infrastructure that fit your organization. Time-dependent configuration enables services to automatically migrate back to repaired nodes at specified times.

1.2.3 Storage and data replication #

With SUSE Linux Enterprise High Availability you can dynamically assign and reassign server storage as needed. It supports Fibre Channel or iSCSI storage area networks (SANs). Shared disk systems are also supported, but they are not a requirement. SUSE Linux Enterprise High Availability also comes with a cluster-aware file system (OCFS2) and the cluster Logical Volume Manager (Cluster LVM). For replication of your data, use DRBD* to mirror the data of a High Availability service from the active node of a cluster to its standby node. In addition, SUSE Linux Enterprise High Availability supports CTDB (Cluster Trivial Database), a technology for Samba clustering.

1.2.4 Support for virtualized environments #

SUSE Linux Enterprise High Availability supports the mixed clustering of both physical and virtual Linux servers. SUSE Linux Enterprise Server 15 SP4 ships with Xen, an open source virtualization hypervisor, and with KVM (Kernel-based Virtual Machine). KVM is virtualization software for Linux that is based on hardware virtualization extensions. The cluster resource manager in SUSE Linux Enterprise High Availability can recognize, monitor, and manage services running within virtual servers and services running in physical servers. Guest systems can be managed as services by the cluster.

Use caution when performing live migration of nodes in an active cluster. The cluster stack might not tolerate an operating system freeze caused by the live migration process, which could lead to the node being fenced.

We recommend either of the following actions to help avoid node fencing during live migration:

Increase the Corosync token timeout and the SBD watchdog timeout, along with any other related settings. The appropriate values depend on your specific setup. For more information, see Section 13.5, “Calculation of timeouts”.

Before performing live migration, stop the cluster services on either the node or the whole cluster. For more information, see Section 28.2, “Different options for maintenance tasks”.

You must thoroughly test this setup before attempting live migration in a production environment.
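As a rough illustration of how such settings depend on each other, the following Python sketch derives related values from a chosen SBD watchdog timeout. The ratios used here (a `msgwait` value of twice the watchdog timeout, and a `stonith-timeout` of `msgwait` plus 20 percent) are commonly cited rules of thumb for SBD setups and are assumptions in this sketch, as are the function and field names. The Corosync token timeout is not covered. Always verify the values against Section 13.5, “Calculation of timeouts”.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SbdTimeouts:
    """Timeout values in seconds (hypothetical container for this sketch)."""
    watchdog: int
    msgwait: int
    stonith_timeout: int


def derive_timeouts(watchdog: int) -> SbdTimeouts:
    """Derive dependent timeouts from the SBD watchdog timeout.

    Assumed rules of thumb, not taken from this chapter:
      msgwait         = 2 * watchdog
      stonith-timeout = msgwait + 20 %, rounded up to whole seconds
    """
    if watchdog <= 0:
        raise ValueError("watchdog timeout must be a positive number of seconds")
    msgwait = 2 * watchdog
    # Integer form of ceil(msgwait * 1.2) to avoid floating-point rounding.
    stonith_timeout = (msgwait * 6 + 4) // 5
    return SbdTimeouts(watchdog, msgwait, stonith_timeout)
```

For example, calling `derive_timeouts(15)` yields a `msgwait` of 30 seconds and a `stonith_timeout` of 36 seconds under these assumptions. Raising the watchdog timeout to tolerate longer freezes during live migration raises the dependent values accordingly.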

1.2.5 Support of local, metro, and Geo clusters #

SUSE Linux Enterprise High Availability supports different geographical scenarios, including geographically dispersed clusters (Geo clusters).

- Local clusters

A single cluster in one location (for example, all nodes are located in one data center). The cluster uses multicast or unicast for communication between the nodes and manages failover internally. Network latency can be neglected. Storage is typically accessed synchronously by all nodes.

- Metro clusters

A single cluster that can stretch over multiple buildings or data centers, with all sites connected by Fibre Channel. The cluster uses multicast or unicast for communication between the nodes and manages failover internally. Network latency is usually low (<5 ms for distances of approximately 20 miles). Storage is frequently replicated (mirroring or synchronous replication).

- Geo clusters (multi-site clusters)

Multiple, geographically dispersed sites with a local cluster each. The sites communicate via IP. Failover across the sites is coordinated by a higher-level entity. Geo clusters need to cope with limited network bandwidth and high latency. Storage is replicated asynchronously.

Note: Geo clustering and SAP workloads

Currently, Geo clusters support neither SAP HANA system replication nor SAP S/4HANA and SAP NetWeaver enqueue replication setups.

The greater the geographical distance between individual cluster nodes, the more factors may potentially disturb the high availability of services the cluster provides. Network latency, limited bandwidth and access to storage are the main challenges for long-distance clusters.

1.2.6 Resource agents #

SUSE Linux Enterprise High Availability includes a huge number of resource agents to manage resources such as Apache, IPv4, IPv6 and many more. It also ships with resource agents for popular third party applications such as IBM WebSphere Application Server. For an overview of Open Cluster Framework (OCF) resource agents included with your product, use the crm ra command as described in Section 5.5.3, “Displaying information about OCF resource agents”.

1.2.7 User-friendly administration tools #

SUSE Linux Enterprise High Availability ships with a set of powerful tools. Use them for basic installation and setup of your cluster and for effective configuration and administration:

- YaST

A graphical user interface for general system installation and administration. Use it to install SUSE Linux Enterprise High Availability on top of SUSE Linux Enterprise Server as described in the Installation and Setup Quick Start. YaST also provides the following modules in the High Availability category to help configure your cluster or individual components:

Cluster: Basic cluster setup. For details, refer to Chapter 4, Using the YaST cluster module.

DRBD: Configuration of a Distributed Replicated Block Device.

IP Load Balancing: Configuration of load balancing with Linux Virtual Server or HAProxy. For details, refer to Chapter 17, Load balancing.

- Hawk2

A user-friendly Web-based interface with which you can monitor and administer your High Availability clusters from Linux or non-Linux machines alike. Hawk2 can be accessed from any machine inside or outside of the cluster by using a (graphical) Web browser. Therefore it is the ideal solution even if the system on which you are working only provides a minimal graphical user interface. For details, see Section 5.4, “Introduction to Hawk2”.

- crm Shell

A powerful unified command line interface to configure resources and execute all monitoring or administration tasks. For details, refer to Section 5.5, “Introduction to crmsh”.

1.3 Benefits #

SUSE Linux Enterprise High Availability allows you to configure up to 32 Linux servers into a high-availability cluster (HA cluster). Resources can be dynamically switched or moved to any node in the cluster. Resources can be configured to automatically migrate if a node fails, or they can be moved manually to troubleshoot hardware or balance the workload.

SUSE Linux Enterprise High Availability provides high availability from commodity components. Lower costs are obtained through the consolidation of applications and operations onto a cluster. SUSE Linux Enterprise High Availability also allows you to centrally manage the complete cluster. You can adjust resources to meet changing workload requirements (thus, manually “load balance” the cluster). Allowing clusters of more than two nodes also provides savings by allowing several nodes to share a “hot spare”.

An equally important benefit is the potential reduction of unplanned service outages and planned outages for software and hardware maintenance and upgrades.

Reasons that you would want to implement a cluster include:

Increased availability

Improved performance

Low cost of operation

Scalability

Disaster recovery

Data protection

Server consolidation

Storage consolidation

Shared disk fault tolerance can be obtained by implementing RAID on the shared disk subsystem.

The following scenario illustrates some benefits SUSE Linux Enterprise High Availability can provide.

Example cluster scenario#

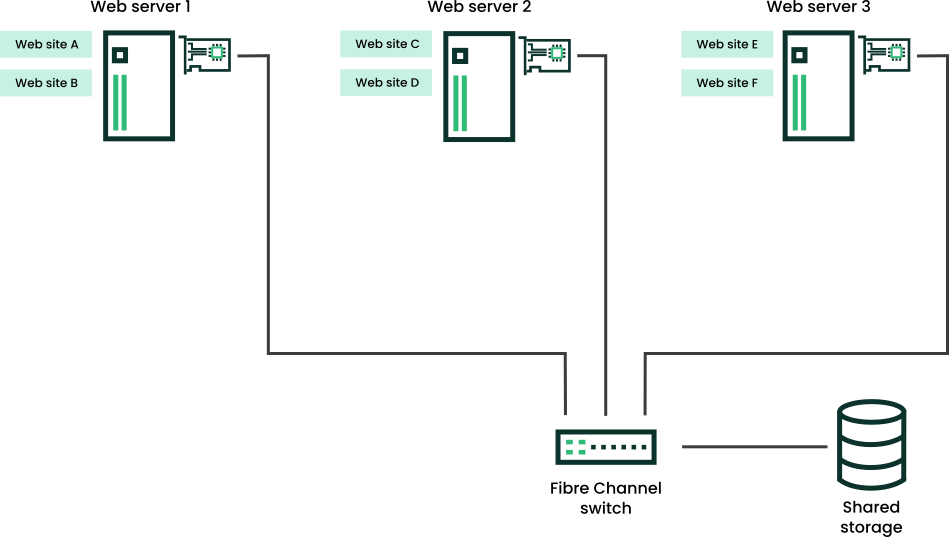

Suppose you have configured a three-node cluster, with a Web server installed on each of the three nodes in the cluster. Each of the nodes in the cluster hosts two Web sites. All the data, graphics, and Web page content for each Web site are stored on a shared disk subsystem connected to each of the nodes in the cluster. The following figure depicts how this setup might look.

During normal cluster operation, each node is in constant communication with the other nodes in the cluster and performs periodic polling of all registered resources to detect failure.

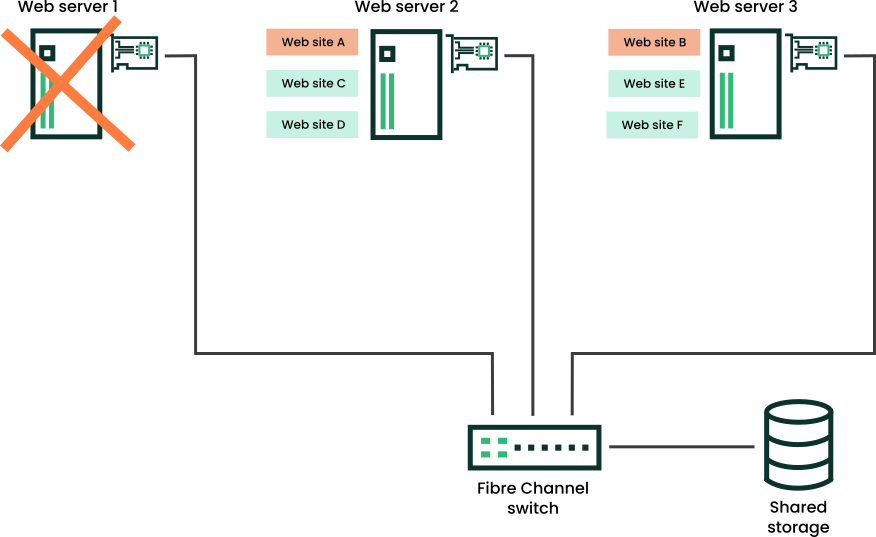

Suppose Web Server 1 experiences hardware or software problems and the users depending on Web Server 1 for Internet access, e-mail, and information lose their connections. The following figure shows how resources are moved when Web Server 1 fails.

Web Site A moves to Web Server 2 and Web Site B moves to Web Server 3. IP addresses and certificates also move to Web Server 2 and Web Server 3.

When you configured the cluster, you decided where the Web sites hosted on each Web server would go should a failure occur. In the previous example, you configured Web Site A to move to Web Server 2 and Web Site B to move to Web Server 3. This way, the workload formerly handled by Web Server 1 continues to be available and is evenly distributed between any surviving cluster members.

When Web Server 1 failed, the High Availability software did the following:

Detected a failure and verified with STONITH that Web Server 1 was really dead. STONITH is an acronym for “Shoot The Other Node In The Head”. It is a means of bringing down misbehaving nodes to prevent them from causing trouble in the cluster.

Remounted the shared data directories that were formerly mounted on Web Server 1 on Web Server 2 and Web Server 3.

Restarted applications that were running on Web Server 1 on Web Server 2 and Web Server 3.

Transferred IP addresses to Web Server 2 and Web Server 3.

In this example, the failover process happened quickly and users regained access to Web site information within seconds, usually without needing to log in again.
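The placement logic of this scenario can be modeled in a few lines of Python. This is only a toy model of the example, not how Pacemaker works internally. Web Site A, Web Site B and their failover targets come from the scenario; Web Sites C to F and the function name `fail_over` are assumptions added so that each server hosts two sites.

```python
# Which server currently hosts which Web site (sites C-F are hypothetical).
PLACEMENT = {
    "Web Site A": "Web Server 1",
    "Web Site B": "Web Server 1",
    "Web Site C": "Web Server 2",
    "Web Site D": "Web Server 2",
    "Web Site E": "Web Server 3",
    "Web Site F": "Web Server 3",
}

# Where each site should go if its server fails, decided at configuration time.
FAILOVER_TARGET = {
    "Web Site A": "Web Server 2",
    "Web Site B": "Web Server 3",
}


def fail_over(placement, failover_target, failed_node):
    """Return a new placement with the failed node's sites moved to their targets."""
    new_placement = {}
    for site, node in placement.items():
        if node != failed_node:
            new_placement[site] = node
            continue
        target = failover_target.get(site)
        if target is None or target == failed_node:
            raise ValueError(f"no usable failover target configured for {site}")
        new_placement[site] = target
    return new_placement
```

Calling `fail_over(PLACEMENT, FAILOVER_TARGET, "Web Server 1")` leaves three sites on each surviving server, which matches the even distribution described above.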

Now suppose the problems with Web Server 1 are resolved, and Web Server 1 is returned to a normal operating state. Web Site A and Web Site B can either automatically fail back (move back) to Web Server 1, or they can stay where they are. This depends on how you configured the resources for them. Migrating the services back to Web Server 1 will incur some down-time. Therefore SUSE Linux Enterprise High Availability also allows you to defer the migration until a period when it will cause little or no service interruption. There are advantages and disadvantages to both alternatives.

SUSE Linux Enterprise High Availability also provides resource migration capabilities. You can move applications, Web sites, etc. to other servers in your cluster as required for system management.

For example, you could have manually moved Web Site A or Web Site B from Web Server 1 to either of the other servers in the cluster. Use cases for this are upgrading or performing scheduled maintenance on Web Server 1, or increasing performance or accessibility of the Web sites.

1.4 Cluster configurations: storage #

Cluster configurations with SUSE Linux Enterprise High Availability might or might not include a shared disk subsystem. The shared disk subsystem can be connected via high-speed Fibre Channel cards, cables, and switches, or it can be configured to use iSCSI. If a node fails, another designated node in the cluster automatically mounts the shared disk directories that were previously mounted on the failed node. This gives network users continuous access to the directories on the shared disk subsystem.

When using a shared disk subsystem with LVM, that subsystem must be connected to all servers in the cluster from which it needs to be accessed.

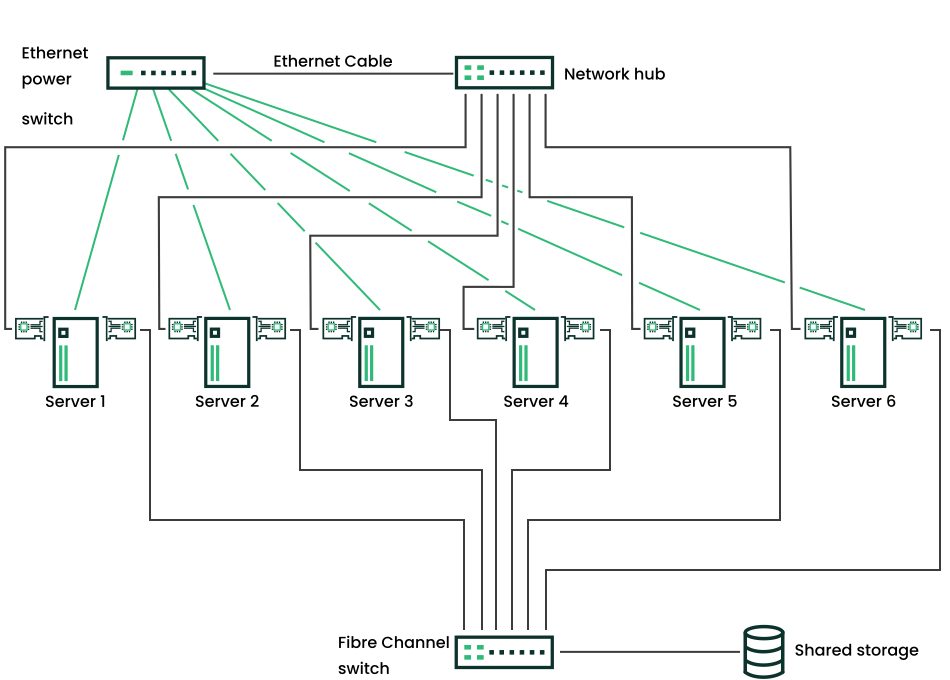

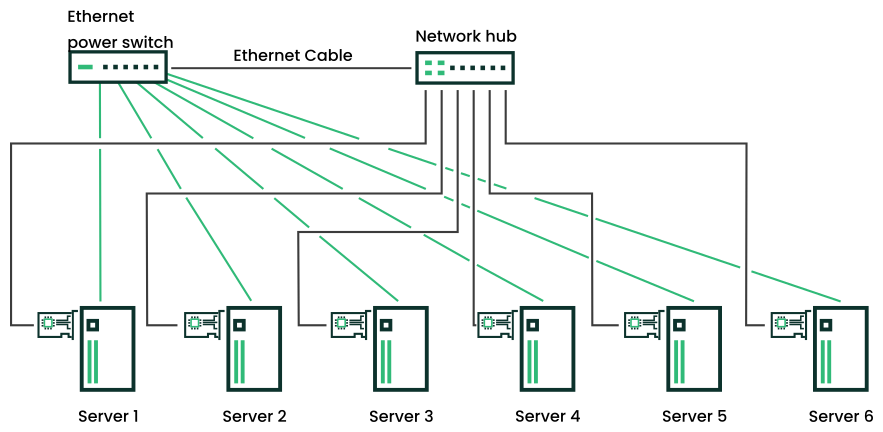

Typical resources might include data, applications, and services. The following figures show how a typical Fibre Channel cluster configuration might look. The green lines depict connections to an Ethernet power switch. Such a device can be controlled over a network and can reboot a node when a ping request fails.

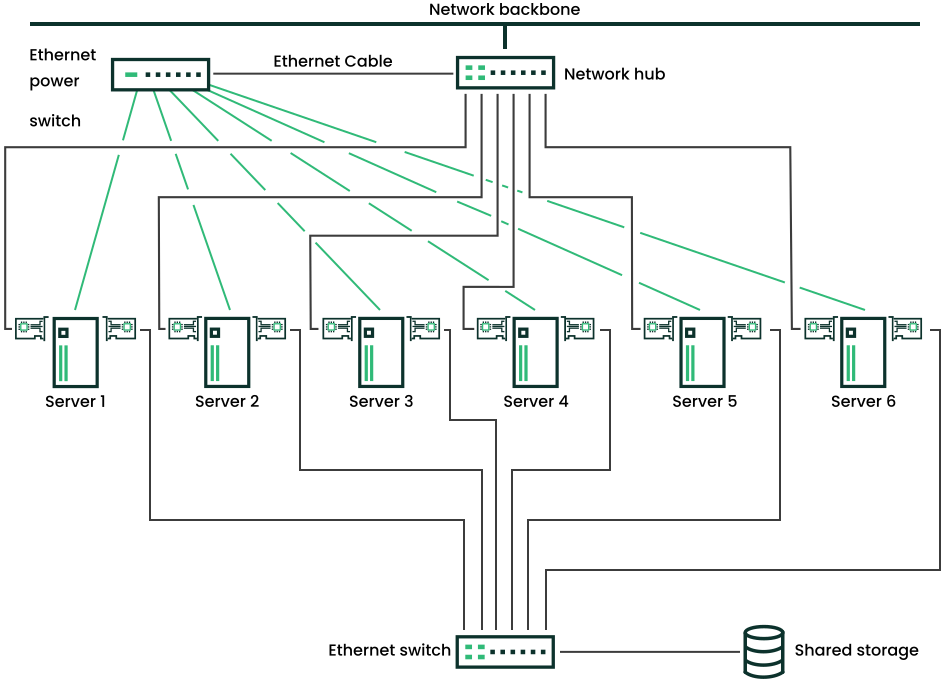

Although Fibre Channel provides the best performance, you can also configure your cluster to use iSCSI. iSCSI is an alternative to Fibre Channel that can be used to create a low-cost Storage Area Network (SAN). The following figure shows how a typical iSCSI cluster configuration might look.

Although most clusters include a shared disk subsystem, it is also possible to create a cluster without a shared disk subsystem. The following figure shows how a cluster without a shared disk subsystem might look.

1.5 Architecture #

This section provides a brief overview of SUSE Linux Enterprise High Availability architecture. It identifies and provides information on the architectural components, and describes how those components interoperate.

1.5.1 Architecture layers #

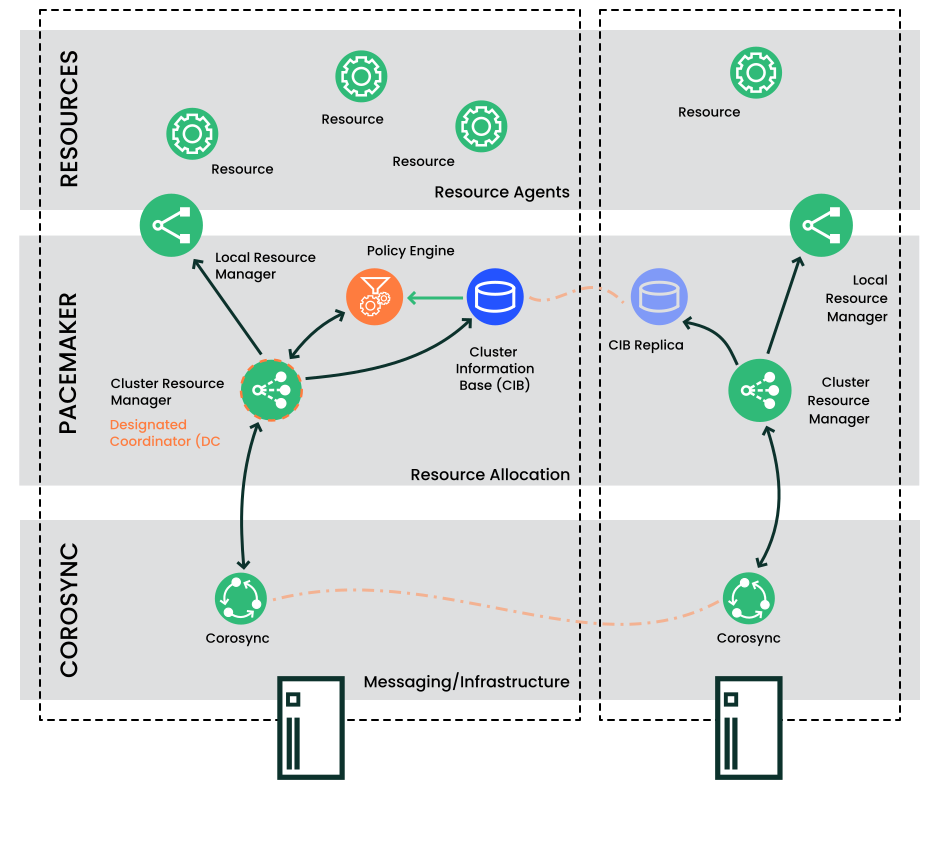

SUSE Linux Enterprise High Availability has a layered architecture. Figure 1.6, “Architecture” illustrates the different layers and their associated components.

1.5.1.1 Membership and messaging layer (Corosync) #

This component provides reliable messaging, membership, and quorum information about the cluster. This is handled by the Corosync cluster engine, a group communication system.
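To make the notion of quorum more concrete, the following Python sketch applies a simple majority rule with one vote per node. This is an assumption for illustration only. It ignores special cases such as two-node clusters or additional quorum devices, and the function name `has_quorum` is hypothetical.

```python
def has_quorum(total_nodes: int, online_nodes: int) -> bool:
    """Return True if the online nodes form a strict majority of the cluster.

    Assumes one vote per node and no special quorum options.
    """
    if total_nodes <= 0 or not 0 <= online_nodes <= total_nodes:
        raise ValueError("node counts are out of range")
    return online_nodes > total_nodes // 2
```

Under this rule, a three-node cluster keeps quorum with two nodes online, whereas a four-node cluster split into two halves of two nodes each has quorum in neither half.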

1.5.1.2 Cluster resource manager (Pacemaker) #

Pacemaker as cluster resource manager is the “brain” which reacts to events occurring in the cluster. It is implemented as pacemaker-controld, the cluster controller, which coordinates all actions. Events can be nodes that join or leave the cluster, failure of resources, or scheduled activities such as maintenance, for example.

- Local resource manager

The local resource manager is located between the Pacemaker layer and the resources layer on each node. It is implemented as the pacemaker-execd daemon. Through this daemon, Pacemaker can start, stop, and monitor resources.

- Cluster Information Database (CIB)

On every node, Pacemaker maintains the cluster information database (CIB). It is an XML representation of the cluster configuration (including cluster options, nodes, resources, constraints and the relationship to each other). The CIB also reflects the current cluster status. Each cluster node contains a CIB replica, which is synchronized across the whole cluster. The pacemaker-based daemon takes care of reading and writing cluster configuration and status.

- Designated Coordinator (DC)

The DC is elected from all nodes in the cluster. This happens if there is no DC yet or if the current DC leaves the cluster for any reason. The DC is the only entity in the cluster that can decide that a cluster-wide change needs to be performed, such as fencing a node or moving resources around. All other nodes get their configuration and resource allocation information from the current DC.

- Policy Engine

The policy engine runs on every node, but the one on the DC is the active one. The engine is implemented as the pacemaker-schedulerd daemon. When a cluster transition is needed, based on the current state and configuration, pacemaker-schedulerd calculates the expected next state of the cluster. It determines what actions need to be scheduled to achieve the next state.
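Because the CIB is XML, its contents can be inspected with any XML parser. The following Python sketch reads a heavily simplified, hand-written CIB fragment and lists the nodes and resources it defines. The node names `alice` and `bob`, the resource ID `admin-ip`, and the function name `summarize_cib` are assumptions for illustration. A real CIB contains many more elements and attributes.

```python
import xml.etree.ElementTree as ET

# Minimal hand-written sample; a real CIB is far more detailed.
SAMPLE_CIB = """
<cib>
  <configuration>
    <crm_config/>
    <nodes>
      <node id="1" uname="alice"/>
      <node id="2" uname="bob"/>
    </nodes>
    <resources>
      <primitive id="admin-ip" class="ocf" provider="heartbeat" type="IPaddr2"/>
    </resources>
    <constraints/>
  </configuration>
  <status/>
</cib>
"""


def summarize_cib(xml_text: str) -> dict:
    """Return the node names and primitive resources found in a CIB document."""
    root = ET.fromstring(xml_text.strip())
    nodes = [node.get("uname") for node in root.findall("./configuration/nodes/node")]
    resources = {
        prim.get("id"): ":".join(
            [prim.get("class"), prim.get("provider"), prim.get("type")]
        )
        for prim in root.findall("./configuration/resources/primitive")
    }
    return {"nodes": nodes, "resources": resources}
```

The sample keeps the configuration (cluster options, nodes, resources, constraints) and the status in separate sections, mirroring the description of the CIB above.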

1.5.1.3 Resources and resource agents #

In a High Availability cluster, the services that need to be highly available are called resources. Resource agents (RAs) are scripts that start, stop, and monitor cluster resources.

1.5.2 Process flow #

The pacemakerd daemon launches and monitors all other related daemons. The daemon that coordinates all actions, pacemaker-controld, has an instance on each cluster node. Pacemaker centralizes all cluster decision-making by electing one of those instances as a primary. Should the elected pacemaker-controld daemon fail, a new primary is established.

Many actions performed in the cluster will cause a cluster-wide change. These actions can include things like adding or removing a cluster resource or changing resource constraints. It is important to understand what happens in the cluster when you perform such an action.

For example, suppose you want to add a cluster IP address resource. To do this, you can use the CRM Shell or the Web interface to modify the CIB. It is not required to perform the actions on the DC. You can use either tool on any node in the cluster, and the changes will be relayed to the DC. The DC will then replicate the CIB change to all cluster nodes.

Based on the information in the CIB, the pacemaker-schedulerd then computes the ideal state of the cluster and how it should be achieved. It feeds a list of instructions to the DC. The DC sends commands via the messaging/infrastructure layer which are received by the pacemaker-controld peers on other nodes. Each of them uses its local resource agent executor (implemented as pacemaker-execd) to perform resource modifications. The pacemaker-execd is not cluster-aware and interacts directly with resource agents.

All peer nodes report the results of their operations back to the DC. After the DC concludes that all necessary operations are successfully performed in the cluster, the cluster will go back to the idle state and wait for further events. If any operation was not carried out as planned, the pacemaker-schedulerd is invoked again with the new information recorded in the CIB.
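The cycle of computing a target state, executing actions, and re-planning after a failure can be sketched as follows. This Python toy model is not Pacemaker's scheduler. The function names, the data layout (a mapping of resource to node, with `None` meaning stopped), and the `execute` callback are all assumptions for illustration.

```python
def plan_transition(current, desired):
    """Return the (action, resource, node) steps that turn current into desired."""
    actions = []
    for resource in sorted(set(current) | set(desired)):
        have = current.get(resource)
        want = desired.get(resource)
        if have == want:
            continue
        if have is not None:
            actions.append(("stop", resource, have))
        if want is not None:
            actions.append(("start", resource, want))
    return actions


def run_until_idle(current, desired, execute, max_rounds=5):
    """Plan and execute until nothing is left to do, re-planning after failures.

    execute(action, resource, node) stands in for the per-node executor and
    returns True on success.
    """
    state = dict(current)  # recorded state, comparable to the status in the CIB
    for _ in range(max_rounds):
        actions = plan_transition(state, desired)
        if not actions:
            return state  # idle: wait for further events
        for action, resource, node in actions:
            if not execute(action, resource, node):
                break  # not carried out as planned: plan again from recorded state
            state[resource] = node if action == "start" else None
    if plan_transition(state, desired):
        raise RuntimeError("cluster did not reach the desired state")
    return state
```

If a start operation fails in one round, the recorded state still shows the resource as stopped, so the next round plans only the remaining start instead of repeating the whole sequence.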

In some cases, it might be necessary to power off nodes to protect shared data or complete resource recovery. In a Pacemaker cluster, the implementation of node level fencing is STONITH. For this, Pacemaker comes with a fencing subsystem, pacemaker-fenced. STONITH devices must be configured as cluster resources (that use specific fencing agents), because this allows monitoring of the fencing devices. When clients detect a failure, they send a request to pacemaker-fenced, which then executes the fencing agent to bring down the node.