19 Disk cache modes

19.1 What is a disk cache?

A disk cache is memory used to speed up storing data on and reading data from a hard disk. Physical hard disks have a cache integrated as a standard feature. For virtual disks, the cache uses the VM Host Server's memory, and you can fine-tune its behavior, for example, by setting its cache mode.

19.2 How does a disk cache work?

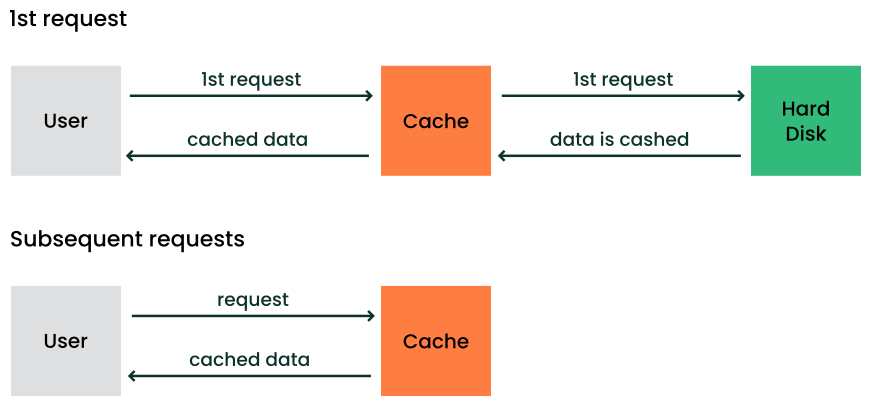

Normally, a disk cache stores the most recently and most frequently used programs and data. When a user or program requests data, the operating system first checks the disk cache. If the data is there, the operating system delivers it to the program directly from memory instead of re-reading it from the disk.
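The lookup described above can be illustrated with a toy read cache. This is a minimal sketch, assuming a least-recently-used (LRU) eviction policy and a Python dict standing in for the disk; the `ReadCache` class is hypothetical, and real caches, including the host page cache, use more elaborate replacement policies.

```python
from collections import OrderedDict


class ReadCache:
    """Hypothetical toy LRU read cache in front of a slow 'disk' (a dict here)."""

    def __init__(self, disk, capacity):
        self.disk = disk            # backing store: block number -> data
        self.capacity = capacity    # maximum number of cached blocks
        self.cache = OrderedDict()  # most recently used entries are at the end
        self.hits = 0
        self.misses = 0

    def read(self, block):
        if block in self.cache:
            # Cache hit: serve from memory and mark the block as most recently used.
            self.cache.move_to_end(block)
            self.hits += 1
            return self.cache[block]
        # Cache miss: read from the (slow) disk and keep a copy in memory.
        self.misses += 1
        data = self.disk[block]
        self.cache[block] = data
        if len(self.cache) > self.capacity:
            # Evict the least recently used block to stay within capacity.
            self.cache.popitem(last=False)
        return data


disk = {n: f"block-{n}" for n in range(10)}
cache = ReadCache(disk, capacity=2)
for block in [1, 2, 1, 3, 2]:
    cache.read(block)
print(cache.hits, cache.misses)  # 1 4
```

Only the second read of block 1 is served from memory; every other request has to go to the disk, which is why cache size and access patterns matter for performance.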

19.3 Benefits of disk caching

Caching of virtual disk devices affects the overall performance of guest machines. You can improve performance by choosing a suitable combination of cache mode, disk image format, and storage subsystem.

19.4 Virtual disk cache modes

If you do not specify a cache mode, writeback is used by default. Each guest disk can use one of the following cache modes:

- writeback

writeback uses the host page cache. Writes are reported to the guest as completed when they are placed in the host cache. Cache management handles committing the data to the storage device. The guest's virtual storage adapter is informed of the writeback cache and is therefore expected to send flush commands as needed to manage data integrity.

- writethrough

Writes are reported as completed only when the data has been committed to the storage device. The guest's virtual storage adapter is informed that there is no writeback cache, so the guest does not need to send flush commands to manage data integrity.

- none

The host cache is bypassed, and reads and writes happen directly between the hypervisor and the storage device. Because the storage device may report a write as completed while the data is still only in its write queue, the guest's virtual storage adapter is informed that there is a writeback cache. This mode is equivalent to direct access to the host's disk.

- unsafe

Similar to writeback, except that all flush commands from the guest are ignored. Using this mode implies that the user prioritizes performance over the risk of data loss if the host fails. This mode can be useful during guest installation, but not for production workloads.

- directsync

Writes are reported as completed only when the data has been committed to the storage device, and the host cache is bypassed. Like writethrough, this mode can be useful for guests that do not send flushes when needed.
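The five modes above differ along three independent properties. The table below is a sketch that mirrors QEMU's documented breakdown of each mode into `cache.writeback` (the guest is told a writeback cache exists), `cache.direct` (the host page cache is bypassed), and `cache.no-flush` (guest flushes are ignored). The `CacheMode` class and the `describe()` helper are hypothetical names for illustration; note that the fact that writethrough still uses the host page cache comes from QEMU's documentation rather than from this section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CacheMode:
    writeback: bool  # guest is told there is a writeback cache (QEMU cache.writeback)
    direct: bool     # host page cache is bypassed (QEMU cache.direct)
    no_flush: bool   # guest flush commands are ignored (QEMU cache.no-flush)


CACHE_MODES = {
    "writeback": CacheMode(writeback=True, direct=False, no_flush=False),
    "writethrough": CacheMode(writeback=False, direct=False, no_flush=False),
    "none": CacheMode(writeback=True, direct=True, no_flush=False),
    "unsafe": CacheMode(writeback=True, direct=False, no_flush=True),
    "directsync": CacheMode(writeback=False, direct=True, no_flush=False),
}

DEFAULT_MODE = "writeback"  # used when no cache mode is specified


def describe(name=DEFAULT_MODE):
    """Return a one-line, human-readable summary of a cache mode."""
    m = CACHE_MODES[name]
    return (f"{name}: host page cache {'bypassed' if m.direct else 'used'}, "
            f"guest {'sees' if m.writeback else 'does not see'} a writeback cache, "
            f"flushes {'ignored' if m.no_flush else 'honored'}")


for mode in CACHE_MODES:
    print(describe(mode))
```

Reading the table row by row makes the pairings explicit: directsync is writethrough with the host cache bypassed, none is writeback with the host cache bypassed, and unsafe is writeback with flushes ignored.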

19.5 Cache modes and data integrity

- writethrough, none, directsync

These modes are considered the safest when the guest operating system sends flushes as needed. For unsafe or unstable guests, use writethrough or directsync.

- writeback

This mode informs the guest of the presence of a write cache and relies on the guest to send flush commands as needed to maintain data integrity within its disk image. This mode exposes the guest to data loss if the host fails, because of the gap between the moment a write is reported as completed and the moment it is committed to the storage device.

- unsafe

This mode is similar to writeback caching, except that guest flush commands are ignored. This means a higher risk of data loss if the host fails.
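The gap between "reported as completed" and "committed to storage" can be made concrete with a small simulation. This is a deliberately simplified sketch: the `CacheModel` class and its `host_failure()` method are hypothetical, a single failure event drops every acknowledged write not yet on stable storage, and for none the pending list stands in for the storage device's write queue rather than the host cache.

```python
class CacheModel:
    """Hypothetical model of when acknowledged guest writes become durable."""

    MODES = ("writeback", "writethrough", "none", "unsafe", "directsync")

    def __init__(self, mode):
        if mode not in self.MODES:
            raise ValueError(f"unknown cache mode: {mode}")
        self.mode = mode
        self.pending = []  # acknowledged to the guest, not yet on stable storage
        self.durable = []  # committed to the storage device

    def write(self, block):
        if self.mode in ("writethrough", "directsync"):
            # Acknowledged only after the data is committed to storage.
            self.durable.append(block)
        else:
            # writeback/unsafe: acknowledged once placed in the host cache.
            # none: acknowledged while possibly still in the device's write queue.
            self.pending.append(block)

    def flush(self):
        if self.mode == "unsafe":
            return  # unsafe ignores guest flush commands
        self.durable.extend(self.pending)
        self.pending.clear()

    def host_failure(self):
        """Return the acknowledged writes that never reached stable storage."""
        lost = list(self.pending)
        self.pending.clear()
        return lost


wb = CacheModel("writeback")
wb.write("a")
wb.write("b")
wb.flush()  # "a" and "b" are now on stable storage
wb.write("c")  # acknowledged, but only in the host cache
print(wb.host_failure())  # ['c']
```

With writeback, only writes issued after the last flush are at risk; with unsafe, the flush does nothing, so every write since the guest started is at risk; with writethrough or directsync, nothing acknowledged is lost.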

19.6 Cache modes and live migration

The caching of storage data restricts the configurations that support live migration. Currently, only raw and qcow2 image formats can be used for live migration. If a clustered file system is used, all cache modes support live migration. Otherwise, the only cache mode that supports live migration on read/write shared storage is none.
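The rules in the previous paragraph can be summarized as a small decision function. This is a sketch for a read/write disk on shared storage; `supports_live_migration()` and its parameters are hypothetical names, and the function encodes only the restrictions stated here, not every requirement for a successful migration.

```python
LIVE_MIGRATION_FORMATS = {"raw", "qcow2"}


def supports_live_migration(image_format, cache_mode, clustered_fs):
    """Apply the image-format and cache-mode rules for a read/write shared disk."""
    if image_format not in LIVE_MIGRATION_FORMATS:
        return False  # only raw and qcow2 images can be live-migrated
    if clustered_fs:
        return True  # on a clustered file system, every cache mode works
    return cache_mode == "none"  # otherwise only none is supported


print(supports_live_migration("qcow2", "writeback", clustered_fs=False))  # False
print(supports_live_migration("qcow2", "none", clustered_fs=False))  # True
```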

The libvirt management layer includes checks for migration compatibility based on several factors. If the guest storage is hosted on a clustered file system, is read-only, or is marked shareable, the cache mode is ignored when determining whether migration can be allowed. Otherwise, libvirt does not allow migration unless the cache mode is set to none. However, this restriction can be overridden with the --unsafe option to the migration APIs, which is also supported by virsh. For example:

> virsh migrate --live --unsafe
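The cache-mode part of libvirt's check, as described above, can be sketched as follows. This is an illustration of the documented behavior, not libvirt's actual code: `passes_cache_mode_check()` and its keyword arguments are hypothetical, and libvirt evaluates other compatibility factors that are not modeled here.

```python
def passes_cache_mode_check(cache_mode, clustered_fs=False, read_only=False,
                            shareable=False, unsafe=False):
    """Hypothetical sketch of the cache-mode rule in libvirt's migration checks."""
    if clustered_fs or read_only or shareable:
        return True  # the cache mode is ignored for these disks
    if cache_mode == "none":
        return True
    return unsafe  # --unsafe overrides the cache-mode restriction


print(passes_cache_mode_check("writeback"))  # False
print(passes_cache_mode_check("writeback", unsafe=True))  # True
```

Using --unsafe only removes the check; it does not make a cached disk any safer to migrate, so it is best reserved for cases where you know the storage setup guarantees consistency.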

The cache mode none is required for the AIO mode setting native. If another cache mode is used, the AIO mode is silently switched back to the default, threads.
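In a libvirt domain definition, the cache mode and AIO mode of a disk are set with the `cache` and `io` attributes of the disk's `<driver>` element. The sketch below builds such an element with Python's standard `xml.etree.ElementTree` and reports which AIO mode would actually take effect under the rule above. The helper names `driver_element()` and `effective_aio()` are hypothetical, and the attribute values are examples only.

```python
import xml.etree.ElementTree as ET


def effective_aio(cache_mode, io):
    """AIO mode actually used, following the rule described above."""
    if io == "native" and cache_mode != "none":
        return "threads"  # native AIO needs cache mode none; falls back silently
    return io


def driver_element(image_format, cache_mode, io):
    """Build a libvirt <driver> element with the requested cache and io values."""
    return ET.Element("driver", {
        "name": "qemu",
        "type": image_format,
        "cache": cache_mode,
        "io": io,
    })


for cache_mode in ("none", "writeback"):
    elem = driver_element("qcow2", cache_mode, "native")
    print(ET.tostring(elem, encoding="unicode"),
          "-> effective io:", effective_aio(cache_mode, "native"))
```

The second element requests io="native" with cache="writeback", so the effective AIO mode reported is threads; pairing native with none avoids the silent fallback.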