18 Managing multipath I/O for devices

This section describes how to manage failover and path load balancing for multiple paths between the servers and block storage devices by using Multipath I/O (MPIO).

18.1 Understanding multipath I/O

Multipathing is the ability of a server to communicate with the same physical or logical block storage device across multiple physical paths between the host bus adapters in the server and the storage controllers for the device, typically in Fibre Channel (FC) or iSCSI SAN environments.

Linux multipathing provides connection fault tolerance and can provide load balancing across the active connections. When multipathing is configured and running, it automatically isolates and identifies device connection failures, and reroutes I/O to alternate connections.

Multipathing provides fault tolerance against connection failures, but not against failures of the storage device itself. The latter is achieved with complementary techniques like mirroring.

18.1.1 Multipath terminology

- Storage array

A hardware device with many disks and multiple fabric connections (controllers) that provides SAN storage to clients. Storage arrays typically have RAID and failover features and support multipathing. Historically, active/passive (failover) and active/active (load-balancing) storage array configurations were distinguished. These concepts still exist, but they are merely special cases of the path groups and access states supported by modern hardware.

- Host, host system

The computer running SUSE Linux Enterprise Server which acts as a client system for a storage array.

- Multipath map, multipath device

A set of path devices. It represents a storage volume on a storage array and is seen as a single block device by the host system.

- Path device

A member of a multipath map, typically a SCSI device. Each path device represents a unique connection between the host computer and the actual storage volume, for example, a logical unit from an iSCSI session.

- WWID

“World Wide Identifier”. multipath-tools uses the WWID to determine which low-level devices should be assembled into a multipath map. The WWID must be distinguished from the configurable map name (see Section 18.12, “Multipath device names and WWIDs”).

- uevent, udev event

An event sent by the kernel to user space and processed by the udev subsystem. Uevents are generated when devices are added, removed, or change properties.

- Device mapper

A framework in the Linux kernel for creating virtual block devices. I/O operations to mapped devices are redirected to underlying block devices. Device mappings may be stacked. Device mapper implements its own event signalling, the so-called “device mapper events” or “dm events”.

- initramfs

The initial RAM file system, also referred to as “initial RAM disk” (initrd) for historical reasons (see Section 12.1, “Terminology”).

- ALUA

“Asymmetric Logical Unit Access”, a concept introduced with the SCSI standard SCSI-3. Storage volumes can be accessed via multiple ports, which are organized in port groups with different states (active, standby, etc.). ALUA defines SCSI commands to query the port groups and their states and change the state of a port group. Modern storage arrays that support SCSI usually support ALUA, too.

18.2 Hardware support

The multipathing drivers and tools are available on all architectures supported by SUSE Linux Enterprise Server. The generic, protocol-agnostic driver works with most multipath-capable storage hardware on the market. Some storage array vendors provide their own multipathing management tools. Consult the vendor’s hardware documentation to determine what settings are required.

18.2.1 Multipath implementations: device-mapper and NVMe

The traditional, generic implementation of multipathing under Linux uses the device mapper framework. For most device types like SCSI devices, device mapper multipathing is the only available implementation. Device mapper multipath is highly configurable and flexible.

The Linux NVM Express (NVMe) kernel subsystem implements multipathing natively in the kernel. This implementation creates less computational overhead for NVMe devices, which are typically fast devices with very low latencies. Native NVMe multipathing requires no user space component. Since SLE 15, native multipathing has been the default for NVMe multipath devices. For details, refer to Section 17.2.4, “Multipathing”.

This chapter documents device mapper multipath and its user-space component, multipath-tools. multipath-tools also has limited support for native NVMe multipathing (see Section 18.13, “Miscellaneous options”).

18.2.2 Storage array autodetection for multipathing

Device-mapper multipath is a generic technology. Multipath device detection requires only that the low-level (e.g. SCSI) devices are detected by the kernel, and that device properties reliably identify multiple low-level devices as being different “paths” to the same volume rather than actually different devices.

The multipath-tools package detects storage arrays by their vendor and product names. It provides built-in configuration defaults for a large variety of storage products. Consult the hardware documentation of your storage array: some vendors provide specific recommendations for Linux multipathing configuration.

If you need to apply changes to the built-in configuration for your storage array, read Section 18.8, “Multipath configuration”.

multipath-tools has built-in presets for many storage arrays. The existence of such presets for a given storage product does not imply that the vendor of the storage product has tested the product with dm-multipath, nor that the vendor endorses or supports the use of dm-multipath with the product. Always consult the original vendor documentation for support-related questions.

18.2.3 Storage arrays that require specific hardware handlers

Some storage arrays require special commands for failover from one path to the other, or non-standard error-handling methods. These special commands and methods are implemented by hardware handlers in the Linux kernel. Modern SCSI storage arrays support the “Asymmetric Logical Unit Access” (ALUA) hardware handler defined in the SCSI standard. Besides ALUA, the SLE kernel contains hardware handlers for NetApp E-Series (RDAC), the Dell/EMC CLARiiON CX family of arrays, and legacy arrays from HP.

Since Linux kernel 4.4, the kernel has automatically detected hardware handlers for most arrays, including all arrays supporting ALUA. The only requirement is that the device handler modules are loaded at the time the respective devices are probed. The multipath-tools package ensures this by installing appropriate configuration files. Once a device handler is attached to a given device, it can no longer be changed.

18.3 Planning for multipathing

Use the guidelines in this section when planning your multipath I/O solution.

18.3.1 Prerequisites

The storage array you use for the multipathed device must support multipathing. For more information, see Section 18.2, “Hardware support”.

You need to configure multipathing only if multiple physical paths exist between host bus adapters in the server and host bus controllers for the block storage device.

For some storage arrays, the vendor provides its own multipathing software to manage multipathing for the array’s physical and logical devices. In this case, you should follow the vendor’s instructions for configuring multipathing for those devices.

When using multipathing in a virtualization environment, the multipathing is controlled in the host server environment. Configure multipathing for the device before you assign it to a virtual guest machine.

18.3.2 Multipath installation types

We distinguish installation types by the way the root device is handled. Section 18.4, “Installing SUSE Linux Enterprise Server on multipath systems” describes how the different setups are created during and after installation.

18.3.2.1 Root file system on multipath (SAN-boot)

The root file system is on a multipath device. This is typically the case for diskless servers that use SAN storage exclusively. On such systems, multipath support is required for booting, and multipathing must be enabled in the initramfs.

18.3.2.2 Root file system on a local disk

The root file system (and possibly some other file systems) is on local storage, for example, on a directly attached SATA disk or local RAID, but the system additionally uses file systems in the multipath SAN storage. This system type can be configured in three different ways:

- Multipath setup for local disk

All block devices are part of multipath maps, including the local disk. The root device appears as a degraded multipath map with just one path. This configuration is created if multipathing was enabled during the initial system installation with YaST.

- Local disk is excluded from multipath

In this configuration, multipathing is enabled in the initramfs, but the root device is explicitly excluded from multipath (see Section 18.11.1, “The blacklist section in multipath.conf”). Procedure 18.1, “Disabling multipathing for the root disk after installation” describes how to set up this configuration.

- Multipath disabled in the initramfs

This setup is created if multipathing was not enabled during the initial system installation with YaST. This configuration is rather fragile; consider using one of the other options instead.

18.3.3 Disk management tasks

Use third-party SAN array management tools or your storage array's user interface to create logical devices and assign them to hosts. Make sure to configure the host credentials correctly on both sides.

You can add volumes to or remove volumes from a running host, but detecting the changes may require rescanning SCSI targets and reconfiguring multipathing on the host. See Section 18.14.6, “Scanning for new devices without rebooting”.

On some disk arrays, the storage array manages the traffic through storage processors. One processor is active and the other one is passive until there is a failure. If you are connected to the passive storage processor, you might not see the expected LUNs, or you might see the LUNs but encounter I/O errors when you try to access them.

If a disk array has more than one storage processor, ensure that the SAN switch has a connection to the active storage processor that owns the LUNs you want to access.

18.3.4 Software RAID and complex storage stacks

Multipathing is set up on top of basic storage devices such as SCSI disks. In a multi-layered storage stack, multipathing is always the bottom layer. Other layers such as software RAID, Logical Volume Management, block device encryption, etc. are layered on top of it. Therefore, for each device that has multiple I/O paths and that you plan to use in a software RAID, you must configure the device for multipathing before you attempt to create the software RAID device.

18.3.5 High-availability solutions

High-availability solutions for clustering storage resources run on top of the multipathing service on each node. Make sure that the configuration settings in the /etc/multipath.conf file on each node are consistent across the cluster.

Make sure that multipath devices have the same name on all nodes. Refer to Section 18.12, “Multipath device names and WWIDs” for details.
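One simple way to check configuration consistency is to collect a copy of /etc/multipath.conf from every node and compare checksums. The following is a minimal sketch under that assumption. The function name configs_identical is hypothetical, and the two temporary files only stand in for per-node copies.

```shell
#!/bin/bash
# Sketch only: the function name and the file names are hypothetical.

# Succeed if all given files have identical content.
# Intended for copies of /etc/multipath.conf collected from each node.
configs_identical() {
    local count
    count=$(sha256sum -- "$@" | awk '{ print $1 }' | sort -u | wc -l)
    [ "$count" -eq 1 ]
}

# Demo with two temporary files standing in for per-node copies.
node1=$(mktemp)
node2=$(mktemp)
printf 'defaults {\n    user_friendly_names no\n}\n' | tee "$node1" > "$node2"

if configs_identical "$node1" "$node2"; then
    echo "multipath.conf copies are identical"
else
    echo "multipath.conf copies differ"
fi
rm -f "$node1" "$node2"
```

A checksum comparison only detects byte-level differences. Files that differ in comments or whitespace are reported as different even if the effective settings match.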

The Distributed Replicated Block Device (DRBD) high-availability solution for mirroring devices across a LAN runs on top of multipathing. For each device that has multiple I/O paths and that you plan to use in a DRBD solution, you must configure the device for multipathing before you configure DRBD.

Special care must be taken when using multipathing together with clustering software that relies on shared storage for fencing, such as pacemaker with sbd. See Section 18.9.2, “Queuing policy on clustered servers” for details.

18.4 Installing SUSE Linux Enterprise Server on multipath systems

No special installation parameters are required for the installation of SUSE Linux Enterprise Server on systems with multipath hardware.

18.4.1 Installing without connected multipath devices

You may want to perform the installation on a local disk, without configuring the fabric and the storage first, with the intention of adding multipath SAN devices to the system later. In this case, the installation will proceed as on a non-multipath system. After installation, multipath-tools will be installed, but the systemd service multipathd.service will be disabled. The system will be configured as described in “Multipath disabled in the initramfs” in Section 18.3.2.2, “Root file system on a local disk”.

Before adding SAN hardware, you will need to enable and start multipathd.service. We recommend creating a blacklist entry in /etc/multipath.conf for the root device (see Section 18.11.1, “The blacklist section in multipath.conf”).

18.4.2 Installing with connected multipath devices

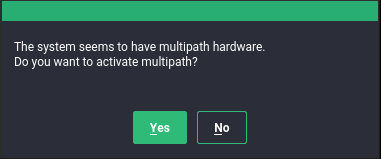

If multipath devices are connected to the system at installation time, YaST will detect them and display a pop-up window asking you whether multipath should be enabled before entering the partitioning stage.

If you select “No” at this prompt (not recommended), the installation will proceed as in Section 18.4.1, “Installing without connected multipath devices”. In the partitioning stage, do not use/edit devices that will later be part of a multipath map.

If you select “Yes” at the multipath prompt, multipathd will run during the installation. No device will be added to the blacklist section of /etc/multipath.conf; thus, all SCSI and DASD devices, including local disks, will appear as multipath devices in the partitioning dialogs. After installation, all SCSI and DASD devices will be multipath devices, as described in Section 18.3.2.1, “Root file system on multipath (SAN-boot)”.

Procedure 18.1: Disabling multipathing for the root disk after installation

This procedure assumes that you installed on a local disk and enabled multipathing during installation, so that the root device is on multipath now, but you prefer to set up the system as described in “Local disk is excluded from multipath” in Section 18.3.2.2, “Root file system on a local disk”.

1. Check your system for /dev/mapper/... references to your local root device, and replace them with references that will still work if the device is no longer a multipath map (see Section 18.12.4, “Referring to multipath maps”). If the following command finds no references, you do not need to apply changes:

   > sudo grep -rl /dev/mapper/ /etc

2. Switch to the by-uuid persistent device policy for dracut (see Section 18.7.4.2, “Persistent device names in the initramfs”):

   > echo 'persistent_policy="by-uuid"' | \
     sudo tee /etc/dracut.conf.d/10-persistent-policy.conf

3. Determine the WWID of the root device:

   > multipathd show paths format "%i %d %w %s"
   0:2:0:0 sda 3600605b009e7ed501f0e45370aaeb77f IBM,ServeRAID M5210
   ...

   This command prints all path devices with their WWIDs and vendor/product information. You will be able to identify the root device (here, the ServeRAID device) and note the WWID.

4. Create a blacklist entry in /etc/multipath.conf (see Section 18.11.1, “The blacklist section in multipath.conf”) with the WWID you just determined (do not apply these settings just yet):

   blacklist {
       wwid 3600605b009e7ed501f0e45370aaeb77f
   }

5. Rebuild the initramfs:

   > sudo dracut -f

6. Reboot. Your system should boot with a non-multipath root disk.
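Determining the WWID and writing the matching blacklist entry can also be scripted. The following is a minimal sketch, not a tool shipped with multipath-tools. The helper names wwid_for_device and blacklist_stanza are hypothetical, and the sample line is the example output from this section. On a real system, the input would come from multipathd show paths format "%i %d %w %s".

```shell
#!/bin/bash
# Sketch only: helper names are hypothetical, not part of multipath-tools.

# Print the WWID (third column) of the path device named $1.
# Expects lines in the format produced by:
#   multipathd show paths format "%i %d %w %s"
wwid_for_device() {
    awk -v dev="$1" '$2 == dev { print $3; exit }'
}

# Print a blacklist section for the WWID given as $1.
blacklist_stanza() {
    printf 'blacklist {\n    wwid %s\n}\n' "$1"
}

# Sample input taken from the example output in this section.
sample='0:2:0:0 sda 3600605b009e7ed501f0e45370aaeb77f IBM,ServeRAID M5210'

wwid=$(printf '%s\n' "$sample" | wwid_for_device sda)
blacklist_stanza "$wwid"
```

The sketch only prints the section. Review the result before adding it to /etc/multipath.conf, because blacklisting the wrong WWID would exclude a SAN volume from multipathing.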

18.5 Updating SLE on multipath systems

When updating your system online, you can proceed as described in Chapter 5, “Upgrading online”.

The offline update of your system is similar to a fresh installation as described in Section 18.4, “Installing SUSE Linux Enterprise Server on multipath systems”. There is no blacklist; thus, if the user chooses to enable multipath, the root device will appear as a multipath device, even if it normally is not. When dracut builds the initramfs during the update procedure, it sees a different storage stack than it would see on the booted system. See Section 18.7.4.2, “Persistent device names in the initramfs” and Section 18.12.4, “Referring to multipath maps”.

18.6 Multipath management tools

The multipathing support in SUSE Linux Enterprise Server is based on the Device Mapper Multipath module of the Linux kernel and the multipath-tools user space package.

The generic multipathing capability is handled by the Device Mapper Multipath (DM-MP) module. For details, refer to Section 18.6.1, “Device mapper multipath module”.

The packages multipath-tools and kpartx provide tools that handle automatic path discovery and grouping. The tools are the following:

- multipathd

The daemon to set up and monitor multipath maps, and a command-line client to communicate with the daemon process. See Section 18.6.2, “The multipathd daemon”.

- multipath

The command-line tool for multipath operations. See Section 18.6.3, “The multipath command”.

- kpartx

The command-line tool for managing “partitions” on multipath devices. See Section 18.7.3, “Partitions on multipath devices and kpartx”.

- mpathpersist

The command-line tool for managing SCSI persistent reservations. See Section 18.6.4, “SCSI persistent reservations and mpathpersist”.

18.6.1 Device mapper multipath module

The Device Mapper Multipath (DM-MP) module dm-multipath.ko provides the generic multipathing capability for Linux. DM-MPIO is the preferred solution for multipathing on SUSE Linux Enterprise Server for SCSI and DASD devices, and can be used for NVMe devices as well.

Since SUSE Linux Enterprise Server 15, native NVMe multipathing (see Section 18.2.1, “Multipath implementations: device-mapper and NVMe”) is recommended for NVMe and used by default. To disable native NVMe multipathing and use device mapper multipath instead (not recommended), boot with the kernel parameter nvme-core.multipath=0.
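To see which NVMe multipath implementation is in effect on a running system, the module parameter can be read back from sysfs. The sketch below assumes that the parameter is exposed at /sys/module/nvme_core/parameters/multipath with the value Y or N. The function name nvme_native_multipath_state is hypothetical.

```shell
#!/bin/bash
# Sketch only: the function name is hypothetical.

# Report whether native NVMe multipathing is active, based on the
# nvme_core module parameter exposed in sysfs ("Y" or "N").
# The parameter file can be overridden as $1 for testing.
nvme_native_multipath_state() {
    local param=${1:-/sys/module/nvme_core/parameters/multipath}
    if [ ! -r "$param" ]; then
        echo "unknown (nvme_core not loaded or parameter not available)"
        return 0
    fi
    case "$(cat "$param")" in
        Y) echo "native NVMe multipathing enabled" ;;
        N) echo "native NVMe multipathing disabled" ;;
        *) echo "unknown" ;;
    esac
}

nvme_native_multipath_state
```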

The Device Mapper Multipath module handles the following tasks:

- Distributing load over multiple paths inside the active path group.

- Noticing I/O errors on path devices, and marking these as failed, so that no I/O will be sent to them.

- Switching path groups when all paths in the active path group have failed.

- Either failing or queuing I/O on the multipath device if all paths have failed, depending on configuration.

The following tasks are handled by the user-space components in the multipath-tools package, not by the Device Mapper Multipath module:

- Discovering devices representing different paths to the same storage device and assembling multipath maps from them.

- Collecting path devices with similar properties into path groups.

- Monitoring path devices actively for failure or reinstantiation.

- Monitoring of addition and removal of path devices.

The Device Mapper Multipath module does not provide an easy-to-use user interface for setup and configuration.

For details about the components from the multipath-tools package, refer to Section 18.6.2, “The multipathd daemon”.

DM-MPIO protects against failures in the paths to the device, and not failures in the device itself, such as media errors. The latter kind of errors must be prevented by other means, such as replication.

18.6.2 The multipathd daemon

multipathd is the most important part of a modern Linux device mapper multipath setup. It is normally started through the systemd service multipathd.service (see Section 18.7.1, “Enabling, starting, and stopping multipath services”).

multipathd performs the following tasks (some of them depend on the configuration):

- On startup, detects path devices and sets up multipath maps from detected devices.

- Monitors uevents and device mapper events, adding path mappings to or removing them from multipath maps as necessary, and initiating failover or failback operations.

- Sets up new maps on the fly when new path devices are discovered.

- Checks path devices at regular intervals to detect failure, and tests failed paths to reinstate them if they become operational again.

- When all paths fail, multipathd either fails the map, or switches the map device to queuing mode for a given time interval.

- Handles path state changes and switches path groups or regroups paths as necessary.

- Tests paths for “marginal” state, i.e. shaky fabric conditions that cause the path state to flip between operational and non-operational.

- Handles SCSI persistent reservation keys for path devices if configured. See Section 18.6.4, “SCSI persistent reservations and mpathpersist”.

multipathd also serves as a command-line client to process interactive commands by sending them to the running daemon. The general syntax to send commands to the daemon is as follows:

> sudo multipathd COMMAND

or

> sudo multipathd -k'COMMAND'

There is also an interactive mode that allows sending multiple subsequent commands:

> sudo multipathd -k

Many multipathd commands have multipath equivalents. For example, multipathd show topology does basically the same thing as multipath -ll. The notable difference is that the multipathd command queries the internal state of the running multipathd daemon, whereas multipath obtains information directly from the kernel and I/O operations.

If the multipath daemon is running, we recommend making modifications to the system by using the multipathd commands. Otherwise, the daemon may notice configuration changes and react to them. In some situations, the daemon might even try to undo the applied changes. multipath automatically delegates certain possibly dangerous commands, like destroying and flushing maps, to multipathd if a running daemon is detected.

The list below describes frequently used multipathd commands:

- show topology

Shows the current map topology and properties. See Section 18.14.2, “Interpreting multipath I/O status”.

- show paths

Shows the currently known path devices.

- show paths format "FORMAT STRING"

Shows the currently known path devices using a format string. Use show wildcards to see a list of supported format specifiers.

- show maps

Shows the currently configured map devices.

- show maps format "FORMAT STRING"

Shows the currently configured map devices using a format string. Use show wildcards to see a list of supported format specifiers.

- show config local

Shows the current configuration that multipathd is using.

- reconfigure

Rereads configuration files, rescans devices, and sets up maps again. This is basically equivalent to a restart of multipathd. A few options cannot be modified without a restart; they are listed in the man page multipath.conf(5). The reconfigure command reloads only map devices that have changed in some way. To force the reloading of every map device, use reconfigure all (available since SUSE Linux Enterprise Server 15 SP4; on previous versions, reconfigure reloaded every map).

- del map MAP DEVICE NAME

Unconfigures and deletes the given map device and its partitions. MAP DEVICE NAME can be a device node name like dm-0, a WWID, or a map name. The command fails if the device is in use.

- switchgroup map MAP DEVICE NAME group N

Switches to the path group with the given numeric index (starting at 1). This is useful for maps with manual failback (see Section 18.9, “Configuring policies for failover, queuing, and failback”).

Additional commands are available to modify path states, enable or disable queuing, and more. See multipathd(8) for details.

18.6.3 The multipath command

Even though multipath setup is mostly automatic and handled by multipathd, multipath is still useful for some administration tasks. Some examples of its usage follow:

- multipath

Detects path devices and configures all multipath maps that it finds.

- multipath -d

Like multipath, but doesn't actually set up any maps (“dry run”).

- multipath DEVICENAME

Configures a specific multipath device. DEVICENAME can denote a member path device by its device node name (/dev/sdb) or device number in major:minor format. Alternatively, it can be the WWID or name of a multipath map.

- multipath -f DEVICENAME

Unconfigures (“flushes”) a multipath map and its partition mappings. The command will fail if the map or one of its partitions is in use. See above for possible values of DEVICENAME.

- multipath -F

Unconfigures (“flushes”) all multipath maps and their partition mappings. The command will fail for maps in use.

- multipath -ll

Displays the status and topology of all currently configured multipath devices. See Section 18.14.2, “Interpreting multipath I/O status”.

- multipath -ll DEVICENAME

Displays the status of a specified multipath device. See above for possible values of DEVICENAME.

- multipath -t

Shows the internal hardware table and the active configuration of multipath. Refer to multipath.conf(5) for details about the configuration parameters.

- multipath -T

Has a similar function to the multipath -t command, but shows only hardware entries matching the hardware detected on the host.

The option -v controls the verbosity of the output. The provided value overrides the verbosity option in /etc/multipath.conf. See Section 18.13, “Miscellaneous options”.

18.6.4 SCSI persistent reservations and mpathpersist

The mpathpersist utility is used to manage SCSI persistent reservations on Device Mapper Multipath devices. Persistent reservations serve to restrict access to SCSI Logical Units to certain SCSI initiators. In multipath configurations, it is important to use the same reservation keys for all I_T nexuses (paths) for a given volume; otherwise, creating a reservation on one path device would cause I/O errors on other paths.

Use this utility with the reservation_key attribute in the /etc/multipath.conf file to set persistent reservations for SCSI devices. If (and only if) this option is set, the multipathd daemon checks persistent reservations for newly discovered paths or reinstated paths.

You can add the attribute to the defaults section or the multipaths section of multipath.conf. For example:

multipaths {
    multipath {
        wwid 3600140508dbcf02acb448188d73ec97d
        alias yellow
        reservation_key 0x123abc
    }
}

After setting the reservation_key parameter for all mpath devices applicable for persistent management, reload the configuration using multipathd reconfigure.

Using “reservation_key file”

If the special value reservation_key file is used in the defaults section of multipath.conf, reservation keys can be managed dynamically in the file /etc/multipath/prkeys using mpathpersist.

This is the recommended way to handle persistent reservations with multipath maps. It has been available since SUSE Linux Enterprise Server 12 SP4.

Use the command mpathpersist to query and set persistent reservations for multipath maps consisting of SCSI devices. Refer to the manual page mpathpersist(8) for details. The command-line options are the same as those of the sg_persist command from the sg3_utils package. The sg_persist(8) manual page explains the semantics of the options in detail.

In the following examples, DEVICE denotes a device-mapper multipath device like /dev/mapper/mpatha. The commands below are listed with long options for better readability. All options have single-letter replacements, like in mpathpersist -oGS 123abc DEVICE.

- mpathpersist --in --read-keys DEVICE

Read the registered reservation keys for the device.

- mpathpersist --in --read-reservation DEVICE

Show existing reservations for the device.

- mpathpersist --out --register --param-sark=123abc DEVICE

Register a reservation key for the device. This will add the reservation key for all I_T nexuses (path devices) on the host.

- mpathpersist --out --reserve --param-rk=123abc --prout-type=5 DEVICE

Create a reservation of type 5 (“write exclusive - registrants only”) for the device, using the previously registered key.

- mpathpersist --out --release --param-rk=123abc --prout-type=5 DEVICE

Release a reservation of type 5 for the device.

- mpathpersist --out --register-ignore --param-sark=0 DEVICE

Delete a previously existing reservation key from the device.

18.7 Configuring the system for multipathing

18.7.1 Enabling, starting, and stopping multipath services

To enable multipath services to start at boot time, run the following command:

> sudo systemctl enable multipathd

To manually start the service in the running system, enter:

> sudo systemctl start multipathd

To restart the service, enter:

> sudo systemctl restart multipathd

In most situations, restarting the service isn't necessary. To simply have multipathd reload its configuration, run:

> sudo systemctl reload multipathd

To check the status of the service, enter:

> sudo systemctl status multipathd

To stop the multipath services in the current session, run:

> sudo systemctl stop multipathd multipathd.socket

Stopping the service does not remove existing multipath maps. To remove unused maps, run:

> sudo multipath -F

Keep multipathd.service enabled

We strongly recommend keeping multipathd.service always enabled and running on systems with multipath hardware. The service does support systemd's socket activation mechanism, but relying on it is discouraged. Multipath maps will not be set up during boot if the service is disabled.

If you need to disable multipath despite the warning above, for example, because a third-party multipathing software is going to be deployed, proceed as follows. Be sure that the system uses no hard-coded references to multipath devices (see Section 18.15.2, “Understanding device referencing issues”).

To disable multipathing just for a single system boot, use the kernel parameter multipath=off. This affects both the booted system and the initramfs, which does not need to be rebuilt in this case.
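To confirm whether the current boot was started with this parameter, the kernel command line can be inspected. The following is a minimal sketch. The function name cmdline_disables_multipath is hypothetical, and the check only looks for the literal word multipath=off.

```shell
#!/bin/bash
# Sketch only: the function name is hypothetical.

# Succeed if the kernel command line given as $1 contains the
# separate word multipath=off.
cmdline_disables_multipath() {
    case " $1 " in
        *" multipath=off "*) return 0 ;;
        *) return 1 ;;
    esac
}

if cmdline_disables_multipath "$(cat /proc/cmdline 2>/dev/null)"; then
    echo "multipath=off is set for this boot"
else
    echo "multipath=off is not set for this boot"
fi
```

Matching on whole words avoids a false positive for other parameters that merely end in multipath=off.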

To disable multipathd services permanently, so that they will not be started on future system boots, run the following commands:

> sudo systemctl disable multipathd multipathd.socket
> sudo dracut --force --omit multipath

(Whenever you disable or enable the multipath services, rebuild the initramfs. See Section 18.7.4, “Keeping the initramfs synchronized”.)

If you want to make sure multipath devices do not get set up, even when running multipath manually, add the following lines at the end of /etc/multipath.conf before rebuilding the initramfs:

blacklist {
    wwid .*
}

18.7.2 Preparing SAN devices for multipathing

Before configuring multipath I/O for your SAN devices, prepare the SAN devices, as necessary, by doing the following:

- Configure and zone the SAN with the vendor’s tools.

- Configure permissions for host LUNs on the storage arrays with the vendor’s tools.

- If SUSE Linux Enterprise Server ships no driver for the host bus adapter (HBA), install a Linux driver from the HBA vendor. See the vendor’s specific instructions for more details.

If multipath devices are detected and multipathd.service is enabled, multipath maps should be created automatically. If this does not happen, Section 18.15.3, “Troubleshooting steps in emergency mode” lists some shell commands that can be used to examine the situation. When the LUNs are not seen by the HBA driver, check the zoning setup in the SAN. In particular, check whether LUN masking is active and whether the LUNs are correctly assigned to the server.

If the HBA driver can see LUNs, but no corresponding block devices are created, additional kernel parameters may be needed. See TID 3955167: Troubleshooting SCSI (LUN) Scanning Issues in the SUSE Knowledge base at https://www.suse.com/support/kb/doc.php?id=3955167.

18.7.3 Partitions on multipath devices and kpartx

Multipath maps can have partitions like their path devices. Partition table scanning and device node creation for partitions are done in user space by the kpartx tool. kpartx is automatically invoked by udev rules; there is usually no need to run it manually. See Section 18.12.4, “Referring to multipath maps” for ways to refer to multipath partitions.

Disabling invocation of kpartx

The skip_kpartx option in

/etc/multipath.conf can be used to disable invocation

of kpartx on selected multipath maps. This may be

useful on virtualization hosts, for example.
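As a minimal sketch of how skip_kpartx might be applied to one selected map, the following fragment sets the option in a multipath subsection. The WWID is only a sample value reused from elsewhere in this chapter; replace it with the WWID of the map in question.

```
multipaths {
    multipath {
        # Sample WWID, not a real device on your system
        wwid 3600a098000aad1e3000064e45f2c2355
        # Do not create partition device nodes for this map
        skip_kpartx yes
    }
}
```

Placing the option in a multipath subsection limits it to a single map. Partitions inside the map are then left for a guest system to interpret.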

Partition tables and partitions on multipath devices can be manipulated as usual, using YaST or tools like fdisk or parted. Changes applied to the partition table will be noted by the system when the partitioning tool exits. If this does not work (usually because a device is busy), try multipathd reconfigure, or reboot the system.

18.7.4 Keeping the initramfs synchronized #

Make sure that the initial RAM file system (initramfs) and the booted system behave consistently regarding the use of multipathing for all block devices. Rebuild the initramfs after applying multipath configuration changes.

If multipathing is enabled in the system, it also needs to be enabled in the initramfs and vice versa. The only exception to this rule is the option Multipath disabled in the initramfs in Section 18.3.2.2, “Root file system on a local disk”.

The multipath configuration must be synchronized between the booted system and the initramfs. Therefore, if you change any of the files /etc/multipath.conf, /etc/multipath/wwids, /etc/multipath/bindings, or other configuration files, or udev rules related to device identification, rebuild the initramfs using the command:

> sudo dracut -f

If the initramfs and the system are not synchronized, the system will not boot properly, and the start-up procedure may result in an emergency shell. See Section 18.15, “Troubleshooting MPIO” for instructions on how to avoid or repair such a scenario.

18.7.4.1 Enabling or disabling multipathing in the initramfs #

Special care must be taken if the initramfs is rebuilt in non-standard situations, for example, from a rescue system or after booting with the kernel parameter multipath=off. dracut will automatically include multipathing support in the initramfs if and only if it detects that the root file system is on a multipath device while the initramfs is being built. In such non-standard situations, this detection may not reflect the intended configuration, so it is necessary to enable or disable multipathing explicitly.

To enable multipath support in the initramfs, run the command:

> sudo dracut --force --add multipath

To disable multipath support in the initramfs, run the command:

> sudo dracut --force --omit multipath

18.7.4.2 Persistent device names in the initramfs #

When dracut generates the initramfs, it must refer to disks and partitions to be mounted in a persistent manner, to make sure the system will boot correctly. When dracut detects multipath devices, it will use the DM-MP device names such as

/dev/mapper/3600a098000aad73f00000a3f5a275dc8-part1

for this purpose by default. This is good if the system always runs in multipath mode. But if the system is started without multipathing, as described in Section 18.7.4.1, “Enabling or disabling multipathing in the initramfs”, booting with such an initramfs will fail, because the /dev/mapper devices will not exist. See Section 18.12.4, “Referring to multipath maps” for another possible problem scenario, and some background information.

To prevent this from happening, change dracut's persistent device naming policy by using the --persistent-policy option. We recommend using the by-uuid policy:

> sudo dracut --force --omit multipath --persistent-policy=by-uuid

See also Procedure 18.1, “Disabling multipathing for the root disk after installation” and Section 18.15.2, “Understanding device referencing issues”.

18.8 Multipath configuration #

The built-in multipath-tools defaults work well for most setups. If customizations are needed, a configuration file needs to be created. The main configuration file is /etc/multipath.conf. In addition, files in /etc/multipath/conf.d/ are taken into account. See Section 18.8.2.1, “Additional configuration files and precedence rules” for additional information.

Some storage vendors publish recommended values for multipath options in their documentation. These values often represent what the vendor has tested in their environment and found most suitable for the storage product. See the disclaimer in Section 18.2.2, “Storage array autodetection for multipathing”.

multipath-tools has built-in defaults for many storage arrays that are derived from the published vendor recommendations. Run multipath -T to see the current settings for your devices and compare them to vendor recommendations.

18.8.1 Creating /etc/multipath.conf #

It is recommended that you create a minimal /etc/multipath.conf that just contains those settings you want to change. In many cases, you do not need to create /etc/multipath.conf at all.

If you prefer working with a configuration template that contains all possible configuration directives, run:

multipath -T >/etc/multipath.conf

See also Section 18.14.1, “Best practices for configuration”.
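To illustrate what a minimal file might look like, the following sketch contains only two deviations from the built-in defaults. Both options are described later in this chapter. The WWID is a sample value, and the choice of settings is an assumption made for illustration, not a recommendation.

```
# /etc/multipath.conf: only the settings that differ from the built-in defaults
defaults {
    user_friendly_names yes
}

blacklist {
    # Sample WWID of a local disk that should not be multipathed
    wwid 3600605b009e7ed501f0e45370aaeb77f
}
```

A short file like this is easier to review after an update than a full dump of all directives, because every line in it represents a deliberate change.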

18.8.2 multipath.conf syntax #

The /etc/multipath.conf file uses a hierarchy of sections, subsections, and option/value pairs.

White space separates tokens. Consecutive white space characters are collapsed into a single space, unless quoted (see below).

The hash (#) and exclamation mark (!) characters cause the rest of the line to be discarded as a comment.

Sections and subsections are started with a section name and an opening brace ({) on the same line, and end with a closing brace (}) on a line on its own.

Options and values are written on one line. Line continuations are unsupported.

Options and section names must be keywords. The allowed keywords are documented in multipath.conf(5).

Values may be enclosed in double quotes ("). They must be enclosed in quotes if they contain white space or comment characters. A double quote character inside a value is represented by a pair of double quotes ("").

The values of some options are POSIX regular expressions (see regex(7)). They are case sensitive and not anchored, so “bar” matches “rhabarber”, but not “Barbie”.

The following example illustrates the syntax:

section {
    subsection {
        option1 value
        option2 "complex value!"
        option3 "value with ""quoted"" word"
    } ! subsection end
} # section end

18.8.2.1 Additional configuration files and precedence rules #

After /etc/multipath.conf, the tools read files matching the pattern /etc/multipath/conf.d/*.conf. The additional files follow the same syntax rules as /etc/multipath.conf. Sections and options can occur multiple times. If the same option in the same section is set in multiple files, or on multiple lines in the same file, the last value takes precedence. Separate precedence rules apply between multipath.conf sections, see below.
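The “last value wins” rule can be sketched with two files that set the same option in the same section. The file name 50-local.conf is hypothetical, and the directory is the one named in Section 18.8, “Multipath configuration”. The values are arbitrary.

```
# /etc/multipath.conf (read first)
defaults {
    polling_interval 5
}

# /etc/multipath/conf.d/50-local.conf (hypothetical file, read afterwards)
defaults {
    polling_interval 10    # set later in the same section, so this value is used
}
```

Under these assumptions, the effective polling_interval would be 10. Running multipath -T shows the configuration that results after all files have been merged.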

18.8.3 multipath.conf sections #

The /etc/multipath.conf file is organized into the following sections. Some options can occur in more than one section. See multipath.conf(5) for details.

- defaults

General default settings.

Important: Overriding built-in device properties

Built-in hardware-specific device properties take precedence over the settings in the defaults section. Changes must therefore be made in the devices section or in the overrides section.

- blacklist

Lists devices to ignore. See Section 18.11.1, “The blacklist section in multipath.conf”.

- blacklist_exceptions

Lists devices to be multipathed even though they are matched by the blacklist. See Section 18.11.1, “The blacklist section in multipath.conf”.

- devices

Settings specific to the storage controller. This section is a collection of device subsections. Values in this section override values for the same options in the defaults section, and the built-in settings of multipath-tools.

device entries in the devices section are matched against the vendor and product of a device using regular expressions. These entries will be “merged”, setting all options from matching sections for the device. If the same option is set in multiple matching device sections, the last device entry takes precedence, even if it is less “specific” than preceding entries. This applies also if the matching entries appear in different configuration files (see Section 18.8.2.1, “Additional configuration files and precedence rules”). In the following example, a device SOMECORP STORAGE will use fast_io_fail_tmo 15.

devices {
    device {
        vendor SOMECORP
        product STOR
        fast_io_fail_tmo 10
    }
    device {
        vendor SOMECORP
        product .*
        fast_io_fail_tmo 15
    }
}

- multipaths

Settings for individual multipath devices. This section is a list of multipath subsections. Values override the defaults and devices sections.

- overrides

Settings that override values from all other sections.
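The relationship between the defaults, devices, and multipaths sections described above can be sketched with one option set at three levels. The vendor string SOMECORP, the WWID, and the numeric values are placeholders chosen for illustration.

```
defaults {
    no_path_retry 6         # general fallback; built-in device settings take precedence over it
}

devices {
    device {
        vendor SOMECORP
        product .*
        no_path_retry 12    # overrides defaults and built-in settings for this hardware
    }
}

multipaths {
    multipath {
        wwid 3600a098000aad1e3000064e45f2c2355
        no_path_retry 18    # overrides defaults and devices for this one map
    }
}
```

With this fragment, the map with the listed WWID would use 18, other SOMECORP maps would use 12, and the value 6 would only apply to hardware without a built-in or configured device entry.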

18.8.4 Applying multipath.conf modifications #

To apply the configuration changes, run:

> sudo multipathd reconfigure

Do not forget to synchronize with the configuration in the initramfs. See Section 18.7.4, “Keeping the initramfs synchronized”.

Do not apply new settings with the multipath command while multipathd is running. An inconsistent, possibly broken setup may result.

It is possible to test modified settings first before they are applied, by running:

> sudo multipath -d -v2

This command shows new maps to be created with the proposed topology, but not whether maps will be removed/flushed. To obtain more information, run with increased verbosity:

> sudo multipath -d -v3 2>&1 | less

18.9 Configuring policies for failover, queuing, and failback #

The goal of multipath I/O is to provide connectivity fault tolerance between the storage system and the server. The desired default behavior depends on whether the server is a stand-alone server or a node in a high-availability cluster.

This section discusses the most important multipath-tools configuration parameters for achieving fault tolerance.

- polling_interval

The time interval (in seconds) between health checks for path devices. The default is 5 seconds. Failed devices are checked with this time interval. For healthy devices, the time interval may be increased up to max_polling_interval seconds.

- detect_checker

If this is set to yes (default, recommended), multipathd automatically detects the best path checking algorithm.

- path_checker

The algorithm used to check path state. If you need to set the checker explicitly, disable detect_checker as follows:

defaults {
    detect_checker no
}

The following list contains only the most important algorithms. See multipath.conf(5) for the full list.

- tur

Send TEST UNIT READY command. This is the default for SCSI devices with ALUA support.

- directio

Read a device sector using asynchronous I/O (aio).

- rdac

Device-specific checker for NetApp E-Series and similar arrays.

- none

No path checking is performed.

- checker_timeout

If a device does not respond to a path checker command in the given time, it is considered failed. The default is the kernel's SCSI command timeout for the device (usually 30 seconds).

- fast_io_fail_tmo

If an error on the SCSI transport layer is detected (for example on a Fibre Channel remote port), the kernel transport layer waits for this amount of time (in seconds) for the transport to recover. After that, the path device fails with “transport offline” state. This is very useful for multipath, because it allows a quick path failover for a frequently occurring class of errors. The value must match typical time scale for reconfiguration in the fabric. The default value of 5 seconds works well for Fibre Channel. Other transports, like iSCSI, may require longer timeouts.

- dev_loss_tmo

If a SCSI transport endpoint (for example a Fibre Channel remote port) is not reachable any more, the kernel waits for this amount of time (in seconds) for the port to reappear until it removes the SCSI device node for good. Device node removal is a complex operation which is prone to race conditions or deadlocks and should best be avoided. We therefore recommend setting this to a high value. The special value infinity is supported. The default is 10 minutes. To avoid deadlock situations, multipathd ensures that I/O queuing (see no_path_retry) is stopped before dev_loss_tmo expires.

- no_path_retry

Determine what happens if all paths of a given multipath map have failed. The possible values are:

- fail

Fail I/O on the multipath map. This will cause I/O errors in upper layers such as mounted file systems. The affected file systems, and possibly the entire host, will enter degraded mode.

- queue

I/O on the multipath map is queued in the device mapper layer and sent to the device when path devices become available again. This is the safest option to avoid losing data, but it can have negative effects if the path devices do not get reinstated for a long time. Processes reading from the device will hang in uninterruptible sleep (D) state. Queued data occupies memory, which becomes unavailable for processes. Eventually, memory will be exhausted.

- N

N is a positive integer. Keep the map device in queuing mode for N polling intervals. When the time elapses, multipathd fails the map device. If polling_interval is 5 seconds and no_path_retry is 6, multipathd will queue I/O for approximately 6 * 5s = 30s before failing I/O on the map device.

- flush_on_last_del

If set to yes and all path devices of a map are deleted (as opposed to just failed), fail all I/O in the map before removing it. The default is no.

- deferred_remove

If set to yes and all path devices of a map are deleted, wait for holders to close the file descriptors for the map device before flushing and removing it. If paths reappear before the last holder closed the map, the deferred remove operation will be cancelled. The default is no.

- failback

If a failed path device in an inactive path group recovers, multipathd reevaluates the path group priorities of all path groups (see Section 18.10, “Configuring path grouping and priorities”). After the reevaluation, the highest-priority path group may be one of the currently inactive path groups. This parameter determines what happens in this situation.

Important: Observe vendor recommendations

The optimal failback policy depends on the property of the storage device. It is therefore strongly encouraged to verify failback settings with the storage vendor.

- manual

Nothing happens unless the administrator runs a multipathd switchgroup (see Section 18.6.2, “The multipathd daemon”).

- immediate

The highest-priority path group is activated immediately. This is often beneficial for performance, especially on stand-alone servers, but it should not be used for arrays on which the change of the path group is a costly operation.

- followover

Like immediate, but only perform failback when the path that has just become active is the only healthy path in its path group. This is useful for cluster configurations: It keeps a node from automatically failing back when another node requested a failover before.

- N

N is a positive integer. Wait for N polling intervals before activating the highest priority path group. If the priorities change again during this time, the wait period starts anew.

- eh_deadline

Set an approximate upper limit for the time (in seconds) spent in SCSI error handling if devices are unresponsive and SCSI commands time out without error response. When the deadline has elapsed, the kernel will perform a full HBA reset.

After modifying the /etc/multipath.conf file, apply your settings as described in Section 18.8.4, “Applying multipath.conf modifications”.

18.9.1 Queuing policy on stand-alone servers #

When you configure multipath I/O for a stand-alone server, a no_path_retry setting with value queue protects the server operating system from receiving I/O errors as long as possible. It queues I/O until a multipath failover occurs. If “infinite” queuing is not desired (see the description of no_path_retry above), select a numeric value that is deemed high enough for the storage paths to recover under ordinary circumstances.
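As a sketch of a bounded queuing setup for a stand-alone server, the following fragment reuses the arithmetic from the description of no_path_retry. The values are illustrative assumptions. The option is placed in the overrides section because built-in device properties take precedence over the defaults section.

```
defaults {
    polling_interval 5    # seconds between path checks (the documented default)
}

overrides {
    # Queue I/O for about 6 * 5 s = 30 s after the last path fails, then fail I/O
    no_path_retry 6
}
```

Using no_path_retry queue in place of the number would keep I/O queued until a path returns, with the memory and hanging-process risks described above.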

18.9.2 Queuing policy on clustered servers #

When you configure multipath I/O for a node in a high-availability cluster, you want multipath to report the I/O failure to trigger the resource failover instead of waiting for a multipath failover to be resolved. In cluster environments, you must modify the no_path_retry setting so that the cluster node receives an I/O error in relation to the cluster verification process (recommended to be 50% of the heartbeat tolerance) if the connection is lost to the storage system. In addition, you want the multipath failback to be set to manual or followover to avoid a ping-pong of resources because of path failures.
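The following fragment sketches how the 50% guideline might translate into settings. It assumes a hypothetical heartbeat tolerance of 40 seconds, which gives a target of about 20 seconds of queuing. Adjust the numbers to the actual cluster configuration.

```
defaults {
    polling_interval 5
}

overrides {
    # Assumed heartbeat tolerance: 40 s. Target: 50% = 20 s = 4 polling intervals of 5 s.
    no_path_retry 4
    # Avoid automatic switching back and forth between path groups
    failback manual
}
```

The settings are placed in the overrides section so that they are not superseded by built-in hardware defaults. Check them against the storage vendor's recommendations before use.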

18.10 Configuring path grouping and priorities #

Path devices in multipath maps are grouped in path groups, also called priority groups. Only one path group receives I/O at any given time. multipathd assigns priorities to path groups. Out of the path groups with active paths, the group with the highest priority is activated according to the configured failback policy for the map (see Section 18.9, “Configuring policies for failover, queuing, and failback”). The priority of a path group is the average of the priorities of the active path devices in the path group. The priority of a path device is an integer value calculated from the device properties (see the description of the prio option below).

This section describes the multipath.conf configuration parameters relevant for priority determination and path grouping.

- path_grouping_policy

Specifies the method used to combine paths into groups. Only the most important policies are listed here; see multipath.conf(5) for other less frequently used values.

- failover

One path per path group. This setting is useful for traditional “active/passive” storage arrays.

- multibus

All paths in one path group. This is useful for traditional “active/active” arrays.

- group_by_prio

Path devices with the same path priority are grouped together. This option is useful for modern arrays that support asymmetric access states, like ALUA. Combined with the alua or sysfs priority algorithms, the priority groups set up by multipathd will match the primary target port groups that the storage array reports through ALUA-related SCSI commands.

Using the same policy names, the path grouping policy for a multipath map can be changed temporarily with the command:

> sudo multipath -p POLICY_NAME MAP_NAME

- marginal_pathgroups

If set to on or fpin, “marginal” path devices are sorted into a separate path group. This is independent of the path grouping algorithm in use. See Section 18.13.1, “Handling unreliable (“marginal”) path devices”.

- detect_prio

If this is set to yes (default, recommended), multipathd automatically detects the best algorithm to set the priority for a storage device and ignores the prio setting. In practice, this means using the sysfs prio algorithm if ALUA support is detected.

- prio

Determines the method to derive priorities for path devices. If you override this, disable detect_prio as follows:

defaults {
    detect_prio no
}

The following list contains only the most important methods. Several other methods are available, mainly to support legacy hardware. See multipath.conf(5) for the full list.

- alua

Uses SCSI-3 ALUA access states to derive path priority values. The optional exclusive_pref_bit argument can be used to change the behavior for devices that have the ALUA “preferred primary target port group” (PREF) bit set:

prio alua
prio_args exclusive_pref_bit

If this option is set, “preferred” paths get a priority bonus over other active/optimized paths. Otherwise, all active/optimized paths are assigned the same priority.

- sysfs

Like alua, but instead of sending SCSI commands to the device, it obtains the access states from sysfs. This causes less I/O load than alua, but is not suitable for all storage arrays with ALUA support.

- const

Uses a constant value for all paths.

- path_latency

Measures I/O latency (time from I/O submission to completion) on path devices, and assigns higher priority to devices with lower latency. See multipath.conf(5) for details. This algorithm is still experimental.

- weightedpath

Assigns a priority to paths based on their name, serial number, Host:Bus:Target:Lun ID (HBTL), or Fibre Channel WWN. The priority value does not change over time. The method requires a prio_args argument, see multipath.conf(5) for details. For example:

prio weightedpath
prio_args "hbtl 2:.*:.*:.* 10 hbtl 3:.*:.*:.* 20 hbtl .* 1"

This assigns devices on SCSI host 3 a higher priority than devices on SCSI host 2, and all others a lower priority.

- prio_args

Some prio algorithms require extra arguments. These are specified in this option, with syntax depending on the algorithm. See above.

- hardware_handler

The name of a kernel module that the kernel uses to activate path devices when switching path groups. This option has no effect with recent kernels because hardware handlers are autodetected. See Section 18.2.3, “Storage arrays that require specific hardware handlers”.

- path_selector

The name of a kernel module that is used for load balancing between the paths of the active path group. The available choices depend on the kernel configuration. For historical reasons, the name must always be enclosed in quotes and followed by a “0” in multipath.conf, like this:

path_selector "queue-length 0"

- service-time

Estimates the time pending I/O will need to complete on all paths, and selects the path with the lowest value. This is the default.

- historical-service-time

Estimates future service time based on the historical service time (about which it keeps a moving average) and the number of outstanding requests. Estimates the time pending I/O will need to complete on all paths, and selects the path with the lowest value.

- queue-length

Selects the path with the lowest number of currently pending I/O requests.

- round-robin

Switches paths in round-robin fashion. The number of requests submitted to a path before switching to the next one can be adjusted with the options rr_min_io_rq and rr_weight.

- io-affinity

This path selector currently does not work with multipath-tools.

After modifying the /etc/multipath.conf file, apply your settings as described in Section 18.8.4, “Applying multipath.conf modifications”.
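To show how the grouping and priority options of this section might fit together, the following sketch configures a hypothetical ALUA-capable array. The vendor string SOMECORP is a placeholder. In most setups the autodetected values are preferable, so treat this as an illustration of the syntax only.

```
devices {
    device {
        vendor SOMECORP
        product .*
        detect_prio no                        # required so that the prio setting below is honored
        prio alua                             # derive priorities from ALUA access states
        path_grouping_policy group_by_prio    # one path group per priority value
        path_selector "service-time 0"        # quoted and followed by 0, as required
        failback immediate
    }
}
```

With group_by_prio and alua combined, the resulting path groups would follow the target port groups reported by the array. The path selector then balances I/O only among the paths of the active group.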

18.11 Selecting devices for multipathing #

On systems with multipath devices, you might want to avoid setting up multipath maps on some devices (typically local disks). multipath-tools offers various means for configuring which devices should be considered multipath path devices.

In general, there is nothing wrong with setting up “degraded” multipath maps with just a single device on top of local disks. It works fine and requires no extra configuration. However, some administrators find this confusing or generally oppose this sort of unnecessary multipathing. Also, the multipath layer causes a slight performance overhead. See also Section 18.3.2.2, “Root file system on a local disk”.

After modifying the /etc/multipath.conf file, apply your settings as described in Section 18.8.4, “Applying multipath.conf modifications”.

18.11.1 The blacklist section in multipath.conf #

The /etc/multipath.conf file can contain a blacklist section that lists all devices that should be ignored by multipathd and multipath.

The following example illustrates possible ways of excluding devices:

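blacklist {
    wwid 3600605b009e7ed501f0e45370aaeb77f    # (1)
    device {                                   # (2)
        vendor ATA
        product .*
    }
    protocol scsi:sas                          # (3)
    property SCSI_IDENT_LUN_T10                # (4)
    devnode "!^dasd[a-z]*"                     # (5)
}

(1) Excludes a single device by its WWID.

(2) This device subsection excludes devices by vendor and product; here, all devices with vendor ATA (the regular expression for product matches anything).

(3) Excluding by protocol; here, SCSI devices attached via SAS. This form is supported since SLES 15 SP1 and SLES 12 SP5.

(4) This property entry excludes devices that have the named udev property.

(5) Excluding devices by devnode. The example illustrates special syntax: prefixing the regular expression with an exclamation mark (!) negates the match, so this entry excludes all device nodes except those matching ^dasd[a-z]*. The expression is quoted because ! would otherwise start a comment.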

By default, multipath-tools ignores all devices except SCSI, DASD, or NVMe. Technically, the built-in devnode exclude list is this negated regular expression:

devnode !^(sd[a-z]|dasd[a-z]|nvme[0-9])

18.11.2 The blacklist exceptions section in multipath.conf #

Sometimes it is desired to configure only very specific devices for multipathing. In this case, devices are excluded by default, and exceptions are defined for devices that should be part of a multipath map. The blacklist_exceptions section exists for this purpose. It is typically used like in the following example, which excludes everything except storage with vendor string “NETAPP”:

blacklist {
    wwid .*
}

blacklist_exceptions {
    device {
        vendor ^NETAPP$
        product .*
    }
}

The blacklist_exceptions section supports all methods described for the blacklist section above.

The property directive in blacklist_exceptions is mandatory because every device must have at least one of the “allowed” udev properties to be considered a path device for multipath (the value of the property does not matter). The built-in default for property is

property (SCSI_IDENT_|ID_WWN)

Only devices that have at least one udev property matching this regular expression will be included.

18.11.3 Other options affecting device selection #

Besides the blacklist options, there are several other settings in /etc/multipath.conf that affect which devices are considered multipath path devices.

- find_multipaths

This option controls the behavior of multipath and multipathd when a device that is not excluded is first encountered. The possible values are:

- greedy

Every device non-excluded by blacklist in /etc/multipath.conf is included. This is the default on SUSE Linux Enterprise. If this setting is active, the only way to prevent devices from being added to multipath maps is setting them as excluded.

- strict

Every device is excluded, even if it is not present in the blacklist section of /etc/multipath.conf, unless its WWID is listed in the file /etc/multipath/wwids. It requires manual maintenance of the WWIDs file (see note below).

- yes

Devices are included if they meet the conditions for strict, or if at least one other device with the same WWID exists in the system.

- smart

If a new WWID is first encountered, it is temporarily marked as multipath path device. multipathd waits for some time for additional paths with the same WWID to appear. If this happens, the multipath map is set up as usual. Otherwise, when the timeout expires, the single device is released to the system as non-multipath device. The timeout is configurable with the option find_multipaths_timeout.

This option depends on systemd features which are only available on SUSE Linux Enterprise Server 15.

Note: Maintaining /etc/multipath/wwids

multipath-tools keeps a record of previously set up multipath maps in the file /etc/multipath/wwids (the “WWIDs file”). Devices with WWIDs listed in this file are considered multipath path devices. The file is important for multipath device selection for all values of find_multipaths except greedy.

If find_multipaths is set to yes or smart, multipathd adds WWIDs to /etc/multipath/wwids after setting up new maps, so that these maps will be detected more quickly in the future.

The WWIDs file can be manually modified. The -a option adds a WWID to the file, and the -w option removes one:

> sudo multipath -a 3600a098000aad1e3000064e45f2c2355
> sudo multipath -w /dev/sdf

In the strict mode, this is the only way to add new multipath devices. After modifying the WWIDs file, run multipathd reconfigure to apply the changes. We recommend rebuilding the initramfs after applying changes to the WWIDs file (see Section 18.7.4, “Keeping the initramfs synchronized”).

- allow_usb_devices

If this option is set to yes, USB storage devices are considered for multipathing. The default is no.
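As a sketch of the find_multipaths option described above, the following fragment selects the smart mode together with an explicit timeout. The timeout value is an illustrative assumption; see multipath.conf(5) for the exact semantics and the default.

```
defaults {
    find_multipaths smart
    # Seconds to wait for a second path with the same WWID (illustrative value)
    find_multipaths_timeout 10
}
```

With smart, a device that never gains a second path is released as a regular disk after the timeout, without any blacklist entry.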

18.12 Multipath device names and WWIDs #

multipathd and multipath internally use WWIDs to identify devices. WWIDs are also used as map names by default. For convenience, multipath-tools supports assigning simpler, more easily memorable names to multipath devices.

18.12.1 WWIDs and device Identification #

It is crucial for multipath operation to reliably detect devices that represent paths to the same storage volume. multipath-tools uses the device's World Wide Identification (WWID) for this purpose (sometimes also referred to as Universally Unique ID (UUID) or Unique ID (UID); do not confuse the latter with “User ID”). The WWID of a map device is always the same as the WWID of its path devices.

By default, WWIDs of path devices are inferred from udev properties of the devices, which are determined in udev rules, either by reading device attributes from the sysfs file system or by using specific I/O commands. To see the udev properties of a device, run:

> udevadm info /dev/sdx

The udev properties used by multipath-tools to derive WWIDs are:

ID_SERIAL for SCSI devices (do not confuse this with the device's “serial number”)

ID_UID for DASD devices

ID_WWN for NVMe devices

It is impossible to change the WWID of a multipath map which is in use. If the WWID of mapped path devices changes because of a configuration change, the map needs to be destroyed, and a new map needs to be set up with the new WWID. This cannot be done while the old map is in use. In extreme cases, data corruption may result from WWID changes. It must therefore be strictly avoided to apply configuration changes that would cause map WWIDs to change.

It is allowed to enable the uid_attrs option in /etc/multipath.conf, see Section 18.13, “Miscellaneous options”.

18.12.2 Setting aliases for multipath maps #

Arbitrary map names can be set in the multipaths section of /etc/multipath.conf as follows:

multipaths {
    multipath {
        wwid 3600a098000aad1e3000064e45f2c2355
        alias postgres
    }
}

Aliases are expressive, but they need to be assigned to each map individually, which may be cumbersome on large systems.

18.12.3 Using autogenerated user-friendly names #

multipath-tools also supports autogenerated aliases, so-called “user-friendly names”. The naming scheme of the aliases follows the pattern:

mpathINDEX, where INDEX is a lower case letter (starting with a). So the first autogenerated alias is mpatha, the next one is mpathb, mpathc to mpathz. After that follows mpathaa, mpathab, and so on.
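The naming sequence described above can be sketched as a small shell function. The function name mpath_alias is hypothetical and is not part of multipath-tools; it only reproduces the pattern stated in the text (a to z, then aa, ab, and so on), assuming that the sequence continues in the same manner.

```shell
# Hypothetical helper: print the N-th autogenerated alias (N starts at 1).
# Usage: mpath_alias N [PREFIX]
mpath_alias() {
    n=$1
    prefix=${2:-mpath}
    letters=abcdefghijklmnopqrstuvwxyz
    suffix=""
    while [ "$n" -gt 0 ]; do
        # Shift to a zero-based index so that 1 maps to "a" and 26 to "z"
        n=$((n - 1))
        idx=$((n % 26))
        # cut counts characters from 1
        ch=$(printf '%s' "$letters" | cut -c "$((idx + 1))")
        suffix="$ch$suffix"
        n=$((n / 26))
    done
    printf '%s%s\n' "$prefix" "$suffix"
}

mpath_alias 1     # first alias
mpath_alias 27    # first two-letter alias
```

The second argument stands in for a different prefix, which corresponds to the alias_prefix option described below.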

Map names are only useful if they are persistent. multipath-tools keeps track of the assigned names in the file /etc/multipath/bindings (the “bindings file”). When a new map is created, the WWID is first looked up in this file. If it is not found, the lowest available user-friendly name is assigned to it.

Explicit aliases as discussed in Section 18.12.2, “Setting aliases for multipath maps” take precedence over user-friendly names.

The following options in /etc/multipath.conf affect user-friendly names:

- user_friendly_names

If set to yes, user-friendly names are assigned and used. Otherwise, the WWID is used as a map name unless an alias is configured.

- alias_prefix

The prefix used to create user-friendly names, mpath by default.
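A minimal sketch of these two options in use follows. The prefix san is a hypothetical choice made for illustration.

```
defaults {
    user_friendly_names yes
    # Hypothetical prefix: maps would be named sana, sanb, ... instead of mpatha, mpathb, ...
    alias_prefix san
}
```

An explicit alias in the multipaths section still takes precedence over a name generated this way.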

For cluster operations, device names must be identical across all nodes in the cluster. The multipath-tools configuration must be kept synchronized between nodes. If user_friendly_names is used, multipathd may modify the /etc/multipath/bindings file at runtime. Such modifications must be replicated dynamically to all nodes. The same applies to /etc/multipath/wwids (see Section 18.11.3, “Other options affecting device selection”).

It is possible to change map names at runtime. Use any of the methods described in this section and run multipathd reconfigure, and the map names will change without disrupting the system operation.

18.12.4 Referring to multipath maps #

Technically, multipath maps are Device Mapper devices, which have generic names of the form /dev/dm-n with an integer number n. These names are not persistent. They should never be used to refer to the multipath maps. udev creates various symbolic links to these devices, which are more suitable as persistent references. These links differ with respect to their invariance against certain configuration changes. The following typical example shows various symlinks all pointing to the same device.

/dev/disk/by-id/dm-name-mpathb1 -> ../../dm-1 /dev/disk/by-id/dm-uuid-mpath-3600a098000aad73f00000a3f5a275dc82 -> ../../dm-1 /dev/disk/by-id/scsi-3600a098000aad73f00000a3f5a275dc83 -> ../../dm-1 /dev/disk/by-id/wwn-0x600a098000aad73f00000a3f5a275dc84 -> ../../dm-1 /dev/mapper/mpathb5 -> ../dm-1

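- /dev/disk/by-id/dm-name-mpathb and /dev/mapper/mpathb

These two links use the map name to refer to the map. Thus, the links will change if the map name changes, for example, if you enable or disable user-friendly names.

- /dev/disk/by-id/dm-uuid-mpath-3600a098000aad73f00000a3f5a275dc8

This link uses the device mapper UUID, which is the WWID used by multipath-tools, prefixed with mpath-. The device mapper UUID is the preferred form to ensure that only multipath devices are referenced. For example, the following LVM filter line accepts only multipath maps and rejects all other devices:

filter = [ "a|/dev/disk/by-id/dm-uuid-mpath-.*|", "r|.*|" ]

- /dev/disk/by-id/scsi-3600a098000aad73f00000a3f5a275dc8 and /dev/disk/by-id/wwn-0x600a098000aad73f00000a3f5a275dc8

These are links that would normally point to path devices. The multipath device took them over, because it has a higher udev link priority.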
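The differences between these reference types can be summarized in a short shell sketch that classifies a device reference by its path pattern. The function name classify_ref and the category labels are hypothetical. The patterns are taken from the example links in this section.

```shell
#!/bin/sh
# Sketch only: classifies a device reference by how suitable it is as a
# persistent reference to a multipath map. Labels are illustrative.
classify_ref() {
    case "$1" in
        /dev/dm-[0-9]*)
            echo "not persistent" ;;
        /dev/disk/by-id/dm-uuid-mpath-*|/dev/disk/by-id/dm-uuid-part[0-9]*-mpath-*)
            echo "multipath only" ;;
        /dev/disk/by-id/dm-name-*|/dev/mapper/*)
            echo "depends on map name" ;;
        /dev/disk/by-id/scsi-*|/dev/disk/by-id/wwn-*)
            echo "shared with path devices" ;;
        *)
            echo "unknown" ;;
    esac
}
```

For example, `classify_ref /dev/dm-1` prints "not persistent", while a dm-uuid-mpath- link is reported as "multipath only".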

For partitions on multipath maps created by the kpartx tool, there are similar symbolic links, derived from the parent device name or WWID and the partition number:

/dev/disk/by-id/dm-name-mpatha-part2 -> ../../dm-5
/dev/disk/by-id/dm-uuid-part2-mpath-3600a098000aad1e300000b4b5a275d45 -> ../../dm-5
/dev/disk/by-id/scsi-3600a098000aad1e300000b4b5a275d45-part2 -> ../../dm-5
/dev/disk/by-id/wwn-0x600a098000aad1e300000b4b5a275d45-part2 -> ../../dm-5
/dev/disk/by-partuuid/1c2f70e0-fb91-49f5-8260-38eacaf7992b -> ../../dm-5
/dev/disk/by-uuid/f67c49e9-3cf2-4bb7-8991-63568cb840a4 -> ../../dm-5
/dev/mapper/mpatha-part2 -> ../dm-5

Note that partitions often have by-uuid links, too, referring not to the device itself but to the file system it contains. These links are often preferable. They are invariant even if the file system is copied to a different device or partition.

When dracut builds an initramfs, it creates hard-coded references to devices in the initramfs, using /dev/mapper/$MAP_NAME references by default. These hard-coded references will not be found during boot if the map names used in the initramfs do not match the names used during building the initramfs, causing boot failure. Normally this will not happen, because dracut will add all multipath configuration files to the initramfs. But problems can occur if the initramfs is built from a different environment, for example, in the rescue system or during an offline update. To prevent this boot failure, change dracut's persistent_policy setting, as explained in Section 18.7.4.2, “Persistent device names in the initramfs”.

18.13 Miscellaneous options #

This section lists some useful multipath.conf options that have not been mentioned so far. See multipath.conf(5) for a full list.

- verbosity

Controls the log verbosity of both multipath and multipathd. The command-line option -v overrides this setting for both commands. The value can be between 0 (only fatal errors) and 4 (verbose logging). The default is 2.

- uid_attrs

This option enables an optimization for processing udev events, so-called “uevent merging”. It is useful in environments in which hundreds of path devices may fail or reappear simultaneously. In order to make sure that path WWIDs do not change (see Section 18.12.1, “WWIDs and device Identification”), the value should be set exactly like this:

defaults {
    uid_attrs "sd:ID_SERIAL dasd:ID_UID nvme:ID_WWN"
}

- skip_kpartx

If set to yes for a multipath device (default is no), do not create partition devices on top of the given device (see Section 18.7.3, “Partitions on multipath devices and kpartx”). Useful for multipath devices used by virtual machines. Previous SUSE Linux Enterprise Server releases achieved the same effect with the parameter “features 1 no_partitions”.

- max_sectors_kb

Limits the maximum amount of data sent in a single I/O request for all path devices of the multipath map.

- ghost_delay

On active/passive arrays, it can happen that passive paths (in “ghost” state) are probed before active paths. If the map was activated immediately and I/O was sent, this would cause a possibly costly path activation. This parameter specifies the time (in seconds) to wait for active paths of the map to appear before activating the map. The default is no (no ghost delay).

- recheck_wwid

If set to yes (default is no), double-checks the WWID of restored paths after failure, and removes them if the WWID has changed. This is a safety measure against data corruption.

- enable_foreign

multipath-tools provides a plugin API for multipathing backends other than Device Mapper multipath. The API supports monitoring and displaying information about the multipath topology using standard commands like multipath -ll. Modifying the topology is unsupported.

The value of enable_foreign is a regular expression to match against foreign library names. The default value is “NONE”.

SUSE Linux Enterprise Server ships the nvme plugin, which adds support for the native NVMe multipathing (see Section 18.2.1, “Multipath implementations: device-mapper and NVMe”). To enable the nvme plugin, set

defaults {
    enable_foreign nvme
}

18.13.1 Handling unreliable (“marginal”) path devices #

Unstable conditions in the fabric can cause path devices to behave erratically. They exhibit frequent I/O errors, recover, and fail again. Such path devices are also denoted “marginal” or “shaky” paths. This section summarizes the options that multipath-tools provides to deal with this problem.

If a path device exhibits a second failure (good → bad transition) before marginal_path_double_failed_time elapses after the first failure, multipathd starts monitoring the path at a rate of 10 requests per second, for a monitoring period of marginal_path_err_sample_time. If the error rate during the monitoring period exceeds marginal_path_err_rate_threshold, the path is classified as marginal. After marginal_path_err_recheck_gap_time, the path transitions to normal state again.

This algorithm is used if all four numeric marginal_path_ parameters are set to a positive value, and marginal_pathgroups is not set to fpin. It is available since SUSE Linux Enterprise Server 15 SP1 and SUSE Linux Enterprise Server 12 SP5.
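The classification step of this algorithm is simple arithmetic, which the following shell sketch illustrates. It is not the multipathd implementation. It assumes exactly 10 test I/Os per second during the sampling period, as stated above, and interprets the threshold as errors per thousand I/Os. The function name is_marginal and the sample values in the usage note are hypothetical.

```shell
#!/bin/sh
# Sketch only: decides whether a sampled path would count as marginal.
# Arguments:
#   $1  number of I/O errors observed during the sampling period
#   $2  marginal_path_err_sample_time (seconds)
#   $3  marginal_path_err_rate_threshold (errors per thousand I/Os)
is_marginal() {
    errors=$1
    sample_time=$2
    threshold=$3
    # Assumption: 10 test I/Os are sent per second while sampling.
    total_ios=$((sample_time * 10))
    # Compare errors/total_ios with threshold/1000 without integer division:
    # the path is marginal if the error rate exceeds the threshold.
    [ $((errors * 1000)) -gt $((threshold * total_ios)) ]
}
```

With a 60-second sampling period (600 I/Os) and a threshold of 2 per thousand, `is_marginal 3 60 2` succeeds, because 3 errors in 600 I/Os correspond to 5 per thousand. `is_marginal 1 60 2` fails, because 1 error in 600 I/Os is below 2 per thousand.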

- marginal_path_double_failed_time

Maximum time (in seconds) between two path failures that triggers path monitoring.

- marginal_path_err_sample_time

Length (in seconds) of the path monitoring interval.

- marginal_path_err_rate_threshold

Minimum error rate (per thousand I/Os).

- marginal_path_err_recheck_gap_time

Time (in seconds) to keep the path in marginal state.

- marginal_pathgroups

This option is available since SLES 15 SP3. Possible values are:

- off

Marginal state is determined by multipathd (see above). Marginal paths are not reinstated as long as they remain in marginal state. This is the default, and the behavior in SUSE Linux Enterprise Server releases before SP3, where the marginal_pathgroups option was unavailable.

- on

Similar to the off option, but instead of keeping them in the failed state, marginal paths are moved to a separate path group, which will be assigned a lower priority than all other path groups (see Section 18.10, “Configuring path grouping and priorities”). Paths in this path group will only be used for I/O if all paths in other path groups have failed.

- fpin

This setting is available from SLES 15 SP4. Marginal path state is derived from FPIN events (see below). Marginal paths are moved into a separate path group, as described for on. This setting requires no further host-side configuration. It is the recommended way to handle marginal paths on Fibre Channel fabrics that support FPIN.

Note: FPIN-based marginal path detection

multipathd listens for Fibre Channel Performance Impact Notifications (FPIN). If an FPIN-LI (Link Integrity) event is received for a path device, the path will enter marginal state. This state will last until an RSCN or link-up event is received on the Fibre Channel adapter the device is connected to.

A simpler algorithm using the parameters san_path_err_threshold, san_path_err_forget_rate, and san_path_err_recovery_time is also available and recommended for SUSE Linux Enterprise Server 15 (GA). See the “Shaky paths detection” section in multipath.conf(5).

18.14 Best practice #

18.14.1 Best practices for configuration #

The large number of configuration directives is daunting at first. Usually, you can get good results with an empty configuration, unless you are in a clustering environment.

Here are some general recommendations for stand-alone servers. They are not mandatory. See the documentation of the respective parameters in the previous sections for background information.

defaults {
    deferred_remove yes
    find_multipaths smart
    enable_foreign nvme
    marginal_pathgroups fpin    # 15.4 only, if supported by fabric
}

devices {
    # A catch-all device entry.
    device {
        vendor .*
        product .*
        dev_loss_tmo infinity
        no_path_retry 60    # 5 minutes
        path_grouping_policy group_by_prio
        path_selector "historical-service-time 0"
        reservation_key file    # if using SCSI persistent reservations
    }
    # Follow up with specific device entries below, they will take precedence.
}
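The comment “5 minutes” next to no_path_retry 60 follows from the retry count multiplied by the path checker interval. The following shell sketch makes this arithmetic explicit. It assumes a polling interval of 5 seconds, which is consistent with the comment but is not stated in this section. The function name queue_time_seconds is hypothetical, and the result is only an approximation of the real queueing time.

```shell
#!/bin/sh
# Sketch only: approximate time (in seconds) that I/O is queued after all
# paths of a map have failed, before queueing is given up.
# Arguments:
#   $1  no_path_retry (number of retries)
#   $2  polling interval in seconds (optional, assumed to be 5 if omitted)
queue_time_seconds() {
    retries=$1
    polling_interval=${2:-5}
    echo $((retries * polling_interval))
}

queue_time_seconds 60    # prints 300, that is, 5 minutes
```

If a different polling interval is configured, pass it as the second argument, for example `queue_time_seconds 60 10`.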

After modifying the /etc/multipath.conf file, apply your settings as described in Section 18.8.4, “Applying multipath.conf modifications”.

18.14.2 Interpreting multipath I/O status #

For a quick overview of the multipath subsystem, use multipath -ll or multipathd show topology. The output of these commands has the same format. The former command reads the kernel state, while the latter prints the status of the multipath daemon. Normally both states are equal. Here is an example of the output:

> sudo multipathd show topology
mpatha (3600a098000aad1e300000b4b5a275d45) dm-0 NETAPP,INF-01-00
size=64G features='3 queue_if_no_path pg_init_retries 50' hwhandler='1 alua' wp=rw
|-+- policy='historical-service-time 2' prio=50 status=active
| |- 3:0:0:1 sdb 8:16 active ready running
| `- 4:0:0:1 sdf 8:80 active ready running
`-+- policy='historical-service-time 2' prio=10 status=enabled
  `- 4:0:1:1 sdj 8:144 active ready running

The elements of this output, in order of appearance, are:

- mpatha

The map name.

- 3600a098000aad1e300000b4b5a275d45

The map WWID (if different from the map name).

- dm-0

The device node name of the map device.

- NETAPP,INF-01-00

The vendor and product name.

- |-+- and `-+-

A path group. The indented lines below the path group list the path devices that belong to it.

- policy='historical-service-time 2'

The path selector algorithm used by the path group. The "2" can be ignored.

- prio=50

The priority of the path group.

- status=active

The status of the path group (active for the first group and enabled for the second group in this example).

- |- and `-

A path device.

- 3:0:0:1

The bus ID of the device (here, a SCSI Host:Bus:Target:Lun ID).

- sdb 8:16

The device node name and major/minor number of the path device.

- active

The kernel device mapper state of the path.

- ready

The multipath path device state (see below).

- running

The state of the path device in the kernel. This is a device-type specific value. The SCSI devices in this example are in the running state.

The multipath path device states are:

| State | Description |
|---|---|
| ready | The path is healthy and up |
| ghost | A passive path in an active/passive array |
| faulty | The path is down or unreachable |
| i/o timeout | A checker command timed out |
| i/o pending | Waiting for the completion of a path checker command |
| delayed | Path reinstantiation is delayed to avoid "flapping" |
| shaky | An unreliable path (emc path checker only) |
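For a quick health summary, the topology output can be reduced to a count of path devices per multipath state. The following shell sketch reads output in the format shown above from standard input. It assumes that path lines can be recognized by a bus ID of the form Host:Bus:Target:Lun and that the state is a single word, so states containing a space are not handled. The function name count_path_states is hypothetical.

```shell
#!/bin/sh
# Sketch only: counts path devices per multipath path state in topology
# output read from stdin. Assumes SCSI-style H:B:T:L bus IDs and
# single-word state names.
count_path_states() {
    awk '
        {
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^[0-9]+:[0-9]+:[0-9]+:[0-9]+$/) {
                    # Fields after the bus ID: device node, major:minor,
                    # device mapper state, multipath state, kernel state.
                    count[$(i + 4)]++
                    break
                }
            }
        }
        END { for (state in count) print state, count[state] }
    ' | sort
}
```

The function could be fed from a saved copy of the output or directly from a pipe, for example `sudo multipathd show topology | count_path_states`. For the example output above, all three paths are counted as ready.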