Geo Clustering Guide

5 Synchronizing Configuration Files Across All Sites and Arbitrators

To replicate important configuration files across all nodes in the cluster and across Geo clusters, use Csync2.

Csync2 can handle any number of hosts, sorted into synchronization groups. Each synchronization group has its own list of member hosts and its include/exclude patterns that define which files should be synchronized in the synchronization group. The groups, the host names belonging to each group, and the include/exclude rules for each group are specified in the Csync2 configuration file, /etc/csync2/csync2.cfg.

For authentication, Csync2 uses the IP addresses and pre-shared keys within a synchronization group. You need to generate one key file for each synchronization group and copy it to all group members.
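As a minimal sketch of this structure, the following snippet writes a single-group Csync2 configuration into a local staging directory so it can be reviewed before it is copied anywhere. The group name `mygroup`, the host names `node1` and `node2`, the key path, and the staging directory `./csync2-staging` are hypothetical placeholders; the keywords `group`, `host`, `key`, `include`, and `exclude` follow the Csync2 configuration syntax.

```shell
#!/bin/sh
# Sketch: write a one-group Csync2 configuration to a staging directory.
# Group name, host names and key path are hypothetical placeholders.
set -eu
STAGE_DIR="${STAGE_DIR:-./csync2-staging}"
mkdir -p "$STAGE_DIR"

cat > "$STAGE_DIR/example-group.cfg" <<'EOF'
group mygroup
{
    # Members of the synchronization group
    host node1;
    host node2;

    # Pre-shared key for this group (generated with "csync2 -k")
    key /etc/csync2/mygroup.key;

    # Files and directories to synchronize within this group
    include /etc/corosync/corosync.conf;
    include /etc/booth;

    # Patterns to leave out (editor backup files and hidden files)
    exclude *~ .*;
}
EOF
```

The key file named in the `key` line must be identical on every host listed in the group, which is why one key per group is generated once and then copied.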

Csync2 contacts other servers via a TCP port (by default 30865) and starts remote Csync2 instances. For detailed information about Csync2, refer to http://oss.linbit.com/csync2/paper.pdf

5.1 Csync2 Setup for Geo Clusters

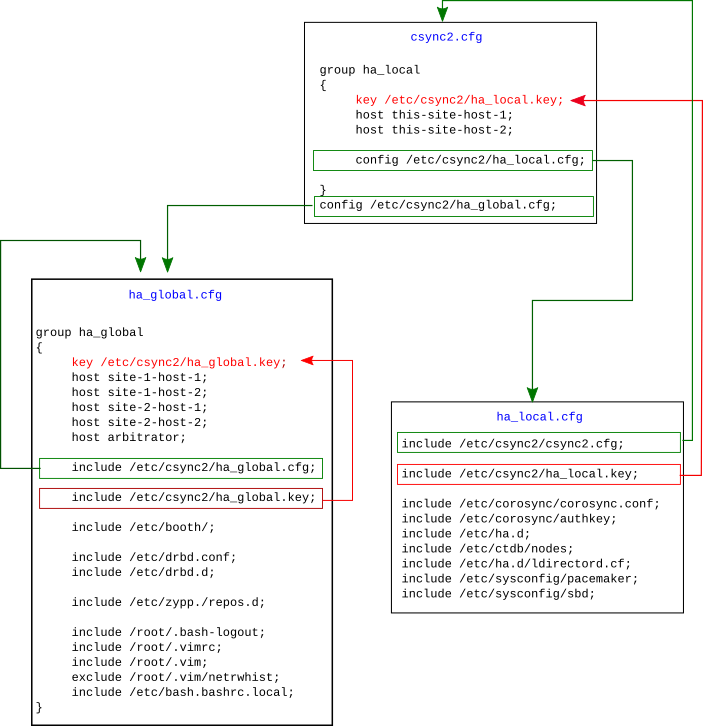

How to set up Csync2 for individual clusters with YaST is explained in the Administration Guide for SUSE Linux Enterprise High Availability Extension, chapter Using the YaST Cluster Module, section Transferring the Configuration to All Nodes. However, YaST cannot handle more complex Csync2 setups, like those that are needed for Geo clusters. For the following setup, as shown in Figure 5.1, “Example Csync2 Setup for Geo Clusters”, configure Csync2 manually by editing the configuration files.

To adjust Csync2 for synchronizing files not only within local clusters but also across geographically dispersed sites, you need to define two synchronization groups in the Csync2 configuration:

- A global group, ha_global, for the files that need to be synchronized globally, across all sites and arbitrators belonging to a Geo cluster.

- A group for the local cluster site, ha_local, for the files that need to be synchronized within the local cluster.

For an overview of the multiple Csync2 configuration files for the two synchronization groups, see Figure 5.1, “Example Csync2 Setup for Geo Clusters”.

Figure 5.1: Example Csync2 Setup for Geo Clusters

Authentication key files and their references are displayed in red. The names of Csync2 configuration files are displayed in blue, and their references are displayed in green. For details, refer to Example Csync2 Setup: Configuration Files.

Example Csync2 Setup: Configuration Files

/etc/csync2/csync2.cfg

The main Csync2 configuration file. It is kept short and simple on purpose and only contains the following:

- The definition of the synchronization group ha_local. The group consists of two nodes (this-site-host-1 and this-site-host-2) and uses /etc/csync2/ha_local.key for authentication. A list of files to be synchronized for this group only is defined in another Csync2 configuration file, /etc/csync2/ha_local.cfg. It is included with the config statement.

- A reference to another Csync2 configuration file, /etc/csync2/ha_global.cfg, included with the config statement.
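The following sketch writes a site-specific csync2.cfg matching the description above into a staging directory. It assumes the `config` statement includes another file in place, as described above, so that placing it inside the `group` block attaches the file list from ha_local.cfg to ha_local. The staging directory is a hypothetical choice; the host names and paths are the ones from the example setup.

```shell
#!/bin/sh
# Sketch: the site-specific main configuration /etc/csync2/csync2.cfg,
# written to a staging directory first so that it can be reviewed.
set -eu
STAGE_DIR="${STAGE_DIR:-./csync2-staging}"
mkdir -p "$STAGE_DIR"

cat > "$STAGE_DIR/csync2.cfg" <<'EOF'
group ha_local
{
    # Nodes of this site only
    host this-site-host-1;
    host this-site-host-2;
    # Key shared by the nodes of this site
    key /etc/csync2/ha_local.key;
    # File list for this group, kept in a separate file
    config /etc/csync2/ha_local.cfg;
}

# Global group (all sites and the arbitrator), defined in its own file
config /etc/csync2/ha_global.cfg;
EOF
```

Each site gets its own variant of this file with its own host list; only the reference to ha_global.cfg stays the same everywhere.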

/etc/csync2/ha_local.cfg

This file concerns only the local cluster. It specifies a list of files to be synchronized only within the ha_local synchronization group, as these files are specific per cluster. The most important ones are the following:

- /etc/csync2/csync2.cfg, as this file contains the list of the local cluster nodes.

- /etc/csync2/ha_local.key, the authentication key to be used for Csync2 synchronization within the local cluster.

- /etc/corosync/corosync.conf, as this file defines the communication channels between the local cluster nodes.

- /etc/corosync/authkey, the Corosync authentication key.

The rest of the file list depends on your specific cluster setup. The files listed in Figure 5.1, “Example Csync2 Setup for Geo Clusters” are only examples. If you also want to synchronize files for any site-specific applications, include them in ha_local.cfg, too. Even though ha_local.cfg is targeted at the nodes belonging to one site of your Geo cluster, the content may be identical on all sites. If you need different sets of hosts or different keys, adding extra groups may be necessary.

/etc/csync2/ha_global.cfg

This file defines the Csync2 synchronization group ha_global. The group spans all cluster nodes across multiple sites, including the arbitrator. As it is recommended to use a separate key for each Csync2 synchronization group, this group uses /etc/csync2/ha_global.key for authentication. The include statements define the list of files to be synchronized within the ha_global synchronization group. The most important ones are the following:

- /etc/csync2/ha_global.cfg and /etc/csync2/ha_global.key (the configuration file for the ha_global synchronization group and the authentication key used for synchronization within the group).

- /etc/booth/, the default directory holding the booth configuration. If you are using a booth setup for multiple tenants, it contains more than one booth configuration file. If you use authentication for booth, it is useful to place the key file in this directory, too.

- /etc/drbd.conf and /etc/drbd.d (if you are using DRBD within your cluster setup). The DRBD configuration can be globally synchronized, as it derives the configuration from the host names contained in the resource configuration file.

- /etc/zypp/repos.d. The package repositories are likely to be the same on all cluster nodes.

The other files shown (/etc/root/*) are examples that may be included for reasons of convenience (to make a cluster administrator's life easier).
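A sketch of the two file lists described above, again written to a staging directory. Apart from this-site-host-1, this-site-host-2, and site-2-host-1 (which appear in this chapter), the host names `site-2-host-2` and `arbitrator` are hypothetical, and the DRBD lines only apply if DRBD is used.

```shell
#!/bin/sh
# Sketch: the two file lists referenced from csync2.cfg.
# site-2-host-2 and arbitrator are hypothetical host names.
set -eu
STAGE_DIR="${STAGE_DIR:-./csync2-staging}"
mkdir -p "$STAGE_DIR"

# ha_local.cfg: files specific to one cluster site. It contains only
# include lines because csync2.cfg pulls it into the ha_local group.
cat > "$STAGE_DIR/ha_local.cfg" <<'EOF'
include /etc/csync2/csync2.cfg;
include /etc/csync2/ha_local.key;
include /etc/corosync/corosync.conf;
include /etc/corosync/authkey;
EOF

# ha_global.cfg: one group spanning all sites plus the arbitrator.
cat > "$STAGE_DIR/ha_global.cfg" <<'EOF'
group ha_global
{
    host this-site-host-1;
    host this-site-host-2;
    host site-2-host-1;
    host site-2-host-2;
    host arbitrator;
    key /etc/csync2/ha_global.key;
    include /etc/csync2/ha_global.cfg;
    include /etc/csync2/ha_global.key;
    include /etc/booth;
    # Only if DRBD is part of the setup
    include /etc/drbd.conf;
    include /etc/drbd.d;
    include /etc/zypp/repos.d;
}
EOF
```

Because ha_global.cfg lists itself and its key, later changes to the global group are distributed by Csync2 like any other global file.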

Note

The files csync2.cfg and ha_local.key are site-specific, which means you need to create different ones for each cluster site. The files are identical on the nodes belonging to the same cluster but different on another cluster. Each csync2.cfg file needs to contain a list of hosts (cluster nodes) belonging to the site, plus a site-specific authentication key.

The arbitrator needs a csync2.cfg file, too. It only needs to reference ha_global.cfg, though.
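For the arbitrator, a minimal sketch of such a csync2.cfg consists of the reference to the global group only. The staging subdirectory `arbitrator/` is a hypothetical choice to keep this file apart from the site-specific one.

```shell
#!/bin/sh
# Sketch: /etc/csync2/csync2.cfg for the arbitrator, staged separately.
# The arbitrator is not part of any site, so it has no local group.
set -eu
STAGE_DIR="${STAGE_DIR:-./csync2-staging}"
mkdir -p "$STAGE_DIR/arbitrator"

cat > "$STAGE_DIR/arbitrator/csync2.cfg" <<'EOF'
# The arbitrator belongs to ha_global only, so no ha_local group here.
config /etc/csync2/ha_global.cfg;
EOF
```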

5.2 Synchronizing Changes with Csync2

To successfully synchronize the files with Csync2, the following prerequisites must be met:

- The same Csync2 configuration is available on all machines that belong to the same synchronization group.

- The Csync2 authentication key for each synchronization group must be available on all members of that group.

- Csync2 must be running on all nodes and the arbitrator.
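As a rough local preflight check for these prerequisites, the following sketch lists which configuration and key files from the example setup are missing on the current machine. The function `check_csync2_files` and its per-role file lists are assumptions derived from Figure 5.1, not part of Csync2; it assumes that ha_local.cfg and ha_global.cfg must be present wherever csync2.cfg references them. It does not compare file contents between hosts.

```shell
#!/bin/sh
# Sketch: report files from the example setup that are missing locally.
# Usage: check_csync2_files node|arbitrator [directory]
# Returns 0 if everything is present, 1 if something is missing.
check_csync2_files() {
    role="$1"
    dir="${2:-/etc/csync2}"
    case "$role" in
        node)       files="csync2.cfg ha_local.cfg ha_local.key ha_global.cfg ha_global.key" ;;
        arbitrator) files="csync2.cfg ha_global.cfg ha_global.key" ;;
        *) echo "usage: check_csync2_files node|arbitrator [dir]" >&2; return 2 ;;
    esac
    missing=0
    for f in $files; do
        if [ ! -r "$dir/$f" ]; then
            echo "missing: $dir/$f"
            missing=1
        fi
    done
    return "$missing"
}
```

For example, run `check_csync2_files arbitrator` on the arbitrator and `check_csync2_files node` on each cluster node. Whether Csync2 is running can be checked separately with `systemctl is-active csync2.socket`.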

Before the first Csync2 run, you therefore need to make the following preparations:

1. Log in to one machine per synchronization group and generate an authentication key for the respective group:

   root # csync2 -k NAME_OF_KEYFILE

   However, do not regenerate the key file on any other member of the same group.

   With regard to Figure 5.1, “Example Csync2 Setup for Geo Clusters”, this would result in the following key files: /etc/csync2/ha_global.key and one local key (/etc/csync2/ha_local.key) per site.

2. Copy each key file to all members of the respective synchronization group. With regard to Figure 5.1, “Example Csync2 Setup for Geo Clusters”:

   - Copy /etc/csync2/ha_global.key to all parties (the arbitrator and all cluster nodes on all sites of your Geo cluster). The key file needs to be available on all hosts listed within the ha_global group that is defined in ha_global.cfg.

   - Copy the local key file for each site (/etc/csync2/ha_local.key) to all cluster nodes belonging to the respective site of your Geo cluster.

3. Copy the site-specific /etc/csync2/csync2.cfg configuration file to all cluster nodes belonging to the respective site of your Geo cluster and to the arbitrator.

4. Execute the following command on all nodes and the arbitrator to make the csync2 service start automatically at boot time:

   root # systemctl enable csync2.socket

5. Execute the following command on all nodes and the arbitrator to start the service now:

   root # systemctl start csync2.socket
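The key distribution in step 2 can be scripted. The sketch below only prints the scp commands unless DRY_RUN=0 is set, so the distribution can be reviewed first. It assumes root SSH access between the machines and that both key files were generated on the machine running it; the host names site-2-host-2 and arbitrator are hypothetical, and the function name `distribute_keys` is illustrative only.

```shell
#!/bin/sh
# Sketch: print (default) or run the scp commands that distribute the
# Csync2 key files. site-2-host-2 and arbitrator are hypothetical names.
set -eu
SITE_NODES="${SITE_NODES:-this-site-host-1 this-site-host-2}"  # this site
OTHER_SITE_NODES="site-2-host-1 site-2-host-2"                 # other site
ARBITRATOR="arbitrator"
DRY_RUN="${DRY_RUN:-1}"       # 1 = print the commands only, 0 = execute them
THIS_HOST="$(uname -n)"

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

distribute_keys() {
    # ha_global.key: every member of ha_global (all sites and the arbitrator)
    for host in $SITE_NODES $OTHER_SITE_NODES $ARBITRATOR; do
        [ "$host" = "$THIS_HOST" ] && continue   # never copy a file onto itself
        run scp /etc/csync2/ha_global.key "root@$host:/etc/csync2/"
    done
    # ha_local.key: only the nodes of this site, never the arbitrator
    for host in $SITE_NODES; do
        [ "$host" = "$THIS_HOST" ] && continue
        run scp /etc/csync2/ha_local.key "root@$host:/etc/csync2/"
    done
}

distribute_keys
```

On the other site, where a separate ha_local.key is generated, only the local part applies, with SITE_NODES set to that site's nodes. Skipping the local host avoids scp overwriting the source file with itself.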

Procedure 5.1: Synchronizing Files with Csync2

To initially synchronize all files once, execute the following command on the machine that you want to copy the configuration from:

root # csync2 -xv

This will synchronize all the files once by pushing them to the other members of the synchronization groups. If all files are synchronized successfully, Csync2 will finish with no errors.

If one or several files that are to be synchronized have been modified on other machines (not only on the current one), Csync2 will report a conflict. You will get an output similar to the one below:

While syncing file /etc/corosync/corosync.conf:
ERROR from peer site-2-host-1: File is also marked dirty here!
Finished with 1 errors.

If you are sure that the file version on the current machine is the “best” one, you can resolve the conflict by forcing this file and re-synchronizing:

root # csync2 -f /etc/corosync/corosync.conf
root # csync2 -x
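When several files conflict, the force commands can be derived from the Csync2 output. The sketch below assumes each conflict is reported on its own line of the form `While syncing file PATH:`, as in the sample output above; the function names are illustrative. It only prints the commands, because forcing a file should remain a deliberate decision about which version is the “best” one.

```shell
#!/bin/sh
# Sketch: turn Csync2 conflict messages (read from stdin) into the
# commands that would resolve them by forcing the local version.

# Extract the paths of all conflicting files.
list_conflicts() {
    sed -n 's/^While syncing file \(.*\):$/\1/p'
}

# Print one "csync2 -f" per conflicting file, then a single "csync2 -x".
# Prints nothing if no conflict was reported.
suggest_resolution() {
    files="$(list_conflicts)"
    [ -n "$files" ] || return 0
    printf '%s\n' "$files" | while IFS= read -r file; do
        printf 'csync2 -f %s\n' "$file"
    done
    printf 'csync2 -x\n'
}
```

A possible use is `csync2 -xv 2>&1 | suggest_resolution`, then reviewing the printed commands before running any of them.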

For more information on the Csync2 options, run csync2 -help.

Note: Pushing Synchronization After Any Changes

Csync2 only pushes changes. It does not continuously synchronize files between the machines.

Each time you update files that need to be synchronized, you need to push the changes to the other machines of the same synchronization group: run csync2 -xv on the machine where you made the changes. If you run the command on any of the other machines with unchanged files, nothing will happen.