4 Setting Up the Booth Services

This chapter describes the setup and configuration options for booth, how to synchronize the booth configuration to all sites and arbitrators, how to enable and start the booth services, and how to reconfigure booth while its services are running.

4.1 Booth Configuration and Setup Options

The default booth configuration is /etc/booth/booth.conf. This file must be the same on all sites of your Geo cluster, including the arbitrator or arbitrators. To keep the booth configuration synchronous across all sites and arbitrators, use Csync2, as described in Section 4.5, “Synchronizing the Booth Configuration to All Sites and Arbitrators”.

Note: Ownership of /etc/booth and Files

The directory /etc/booth and all files therein need to belong to the user hacluster and the group haclient. Whenever you copy a new file from this directory, use the option -p for the cp command to preserve the ownership. Alternatively, when you create a new file, set the user and group afterward with chown hacluster:haclient FILE.
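The ownership requirement can be checked with a small helper before booth is started. The following is a minimal sketch, not part of booth: the function name list_wrong_owner is hypothetical, and it only relies on the standard find utility.

```shell
# Print every entry below DIR that is not owned by USER and GROUP.
# An empty result means the ownership is as expected.
# Usage: list_wrong_owner DIR USER GROUP
list_wrong_owner() {
    find "$1" ! \( -user "$2" -group "$3" \) -print
}

# Example (run as root on a cluster node or arbitrator):
#   list_wrong_owner /etc/booth hacluster haclient
#   chown -R hacluster:haclient /etc/booth   # repair anything that was listed
```

Running the check after every manual copy or edit catches files that were created as root without cp -p.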

For setups including multiple Geo clusters, it is possible to “share” the same arbitrator (as of SUSE Linux Enterprise High Availability 12). By providing several booth configuration files, you can start multiple booth instances on the same arbitrator, with each booth instance running on a different port. That way, you can use one machine to serve as arbitrator for different Geo clusters. For details on how to configure booth for multiple Geo clusters, refer to Section 4.4, “Using a Multi-Tenant Booth Setup”.

To prevent malicious parties from disrupting the booth service, you can configure authentication for talking to booth, based on a shared key. For details, see position 5 in Example 4.1, “A Booth Configuration File”. All hosts that communicate with the booth servers need this key. Therefore, make sure to include the key file in the Csync2 configuration or to synchronize it manually across all parties.
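If you create the shared key by hand, a sketch like the following may help. It assumes that booth accepts a text key of up to 64 characters and that the key must be readable by its owner only. Both are assumptions to verify against the booth documentation for your version. The function name make_booth_key is hypothetical.

```shell
# Create a shared authentication key as printable text.
# Usage: make_booth_key FILE
make_booth_key() (
    umask 077                                   # new file: owner access only
    # 32 random bytes, base64-encoded, give 44 printable characters
    head -c 32 /dev/urandom | base64 > "$1"
    chmod 600 "$1"                              # also fix a pre-existing file
)

# Example (run as root):
#   make_booth_key /etc/booth/authkey
#   chown hacluster:haclient /etc/booth/authkey
```

After creating the key, distribute it to all sites and arbitrators in the same way as the booth configuration file.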

4.2 Automatic versus Manual Tickets

A ticket grants the right to run certain resources on a specific cluster site. Two types of tickets are supported:

- Automatic tickets are controlled by the boothd daemon.
- Manual tickets are managed by the cluster administrator only.

Automatic and manual tickets have the following properties:

Automatic and manual tickets can be defined together. You can define and use both automatic and manual tickets within the same Geo cluster.

Manual ticket management remains manual. The automatic ticket management is not applied to manually controlled tickets. Manual tickets do not require any quorum elections, cannot fail over automatically, and do not have an expiry time.

Manual tickets will not be moved automatically. Tickets which were manually granted to a site will remain there until they are manually revoked. Even if a site goes offline, the ticket will not be moved to another site. This behavior ensures that the services that depend on a ticket remain on a particular site and are not moved to another site.

Same commands for managing both types of tickets. The manual tickets are managed by the same commands as automatic tickets (grant or revoke, for example).

Arbitrators are not needed if only manual tickets are used. If you configure only manual tickets in a Geo cluster, arbitrators are not necessary, because manual ticket management does not require quorum decisions.

To configure tickets, use the /etc/booth/booth.conf configuration file (see Section 4.3, “Using the Default Booth Setup” for further information).

4.3 Using the Default Booth Setup

If you have set up your basic Geo cluster with the ha-cluster-bootstrap scripts as described in the Geo Clustering Quick Start, the scripts have created a default booth configuration on all sites with a minimal set of parameters. To extend or fine-tune the minimal booth configuration, have a look at Example 4.1 or at the examples in Section 4.4, “Using a Multi-Tenant Booth Setup”.

To add or change parameters needed for booth, either edit the booth configuration files manually or use the YaST module. To access the YaST module, start it from the command line with yast2 geo-cluster (or start YaST and select the module there).

Example 4.1: A Booth Configuration File

    transport = UDP                                           (1)
    port = 9929                                               (2)
    arbitrator = 192.168.203.100                              (3)
    site = 192.168.201.100                                    (4)
    site = 192.168.202.100                                    (4)
    authfile = /etc/booth/authkey                             (5)
    ticket = "ticket-nfs"                                     (6)
        mode = MANUAL                                         (7)
    ticket = "ticketA"                                        (6)
        expire = 600                                          (8)
        timeout = 10                                          (9)
        retries = 5                                           (10)
        renewal-freq = 30                                     (11)
        before-acquire-handler = /etc/booth/ticket-A db-1     (12) (13) (14)
        acquire-after = 60                                    (15)
    ticket = "ticketB"                                        (6)
        expire = 600                                          (8)
        timeout = 10                                          (9)
        retries = 5                                           (10)
        renewal-freq = 30                                     (11)
        before-acquire-handler = /etc/booth/ticket-B db-8     (12) (13) (14)
        acquire-after = 60                                    (15)

(1) The transport protocol used for communication between the sites. Only UDP is supported, but other transport layers will follow in the future. Currently, this parameter can therefore be omitted.

(2) The port to be used for communication between the booth instances at each site. When not using the default port […]

(3) The IP address of the machine to use as arbitrator. Add an entry for each arbitrator you use in your Geo cluster setup.

(4) The IP address used for the boothd on a site. […] If you have set up booth with the […]

(5) Optional parameter. Enables booth authentication for clients and servers on the basis of a shared key. This parameter specifies the path to the key file.

Key Requirements: […]

(6) The tickets to be managed by booth or a cluster administrator. For each ticket, add a ticket entry.

(7) Optional parameter. Defines the ticket mode. By default, all tickets are managed by booth. To define tickets which are managed by the administrator (manual tickets), set the mode parameter to MANUAL. Manual tickets do not have expire, renewal-freq, and retries parameters.

(8) Optional parameter. Defines the ticket's expiry time in seconds. A site that has been granted a ticket will renew the ticket regularly. If booth does not receive any information about renewal of the ticket within the defined expiry time, the ticket will be revoked and granted to another site. If no expiry time is specified, the ticket will expire after […]

(9) Optional parameter. Defines a timeout period in seconds. If booth does not receive a reply within this period, it resends the packets. The timeout should be long enough to allow packets to reach the other booth members (all arbitrators and sites).

(10) Optional parameter. Defines how many times booth retries sending packets before giving up waiting for confirmation by other sites. Values smaller than […]

(11) Optional parameter. Sets the ticket renewal frequency period. By default, ticket renewal occurs at half the expiry time. If the network reliability is often reduced over prolonged periods, it is advisable to renew more often. Before every renewal the […]

(12) Optional parameter. It supports one or more scripts. When using more than one script, each script can be responsible for a different check, such as cluster state, data center connectivity, environment health sensors, and more. Store all scripts in the directory […] The scripts in this directory are executed in alphabetical order. All scripts will be called before […]

(13) The […] Assume that the […]

(14) The resource to be tested by the […]

(15) Optional parameter. After a ticket is lost, booth waits this additional time before acquiring the ticket. This allows the site that lost the ticket to relinquish the resources, by either stopping them or fencing a node. A typical delay might be […] If you are unsure how long stopping or demoting the resources or fencing a node may take (depending on the […]

4.3.1 Manually Editing the Booth Configuration File

1. Log in to a cluster node as root or equivalent.

2. If /etc/booth/booth.conf does not exist yet, copy the example booth configuration file /etc/booth/booth.conf.example to /etc/booth/booth.conf:

       # cp -p /etc/booth/booth.conf.example /etc/booth/booth.conf

3. Edit /etc/booth/booth.conf according to Example 4.1, “A Booth Configuration File”.

4. Verify your changes and save the file.

5. On all cluster nodes and arbitrators, open the port in the firewall that you have configured for booth. See Example 4.1, “A Booth Configuration File”, position 2.
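To open the correct port, it can be read from the configuration file instead of being typed by hand. The sketch below assumes that firewalld is in use and that 9929 (the port shown in Example 4.1) applies when no port line is present. The helper name booth_port is hypothetical.

```shell
# Print the port configured in a booth configuration file.
# Falls back to 9929 (assumed default) if the file has no "port" line.
# Usage: booth_port FILE
booth_port() {
    awk -F= '
        /^[ \t]*port[ \t]*=/ { gsub(/[ \t]/, "", $2); port = $2 }
        END { print (port == "" ? 9929 : port) }
    ' "$1"
}

# Example (run as root on every cluster node and arbitrator):
#   port=$(booth_port /etc/booth/booth.conf)
#   firewall-cmd --permanent --add-port="${port}/udp"
#   firewall-cmd --permanent --add-port="${port}/tcp"
#   firewall-cmd --reload
```

Both UDP and TCP are opened here because the YaST module described in this chapter also opens the UDP/TCP ports for booth.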

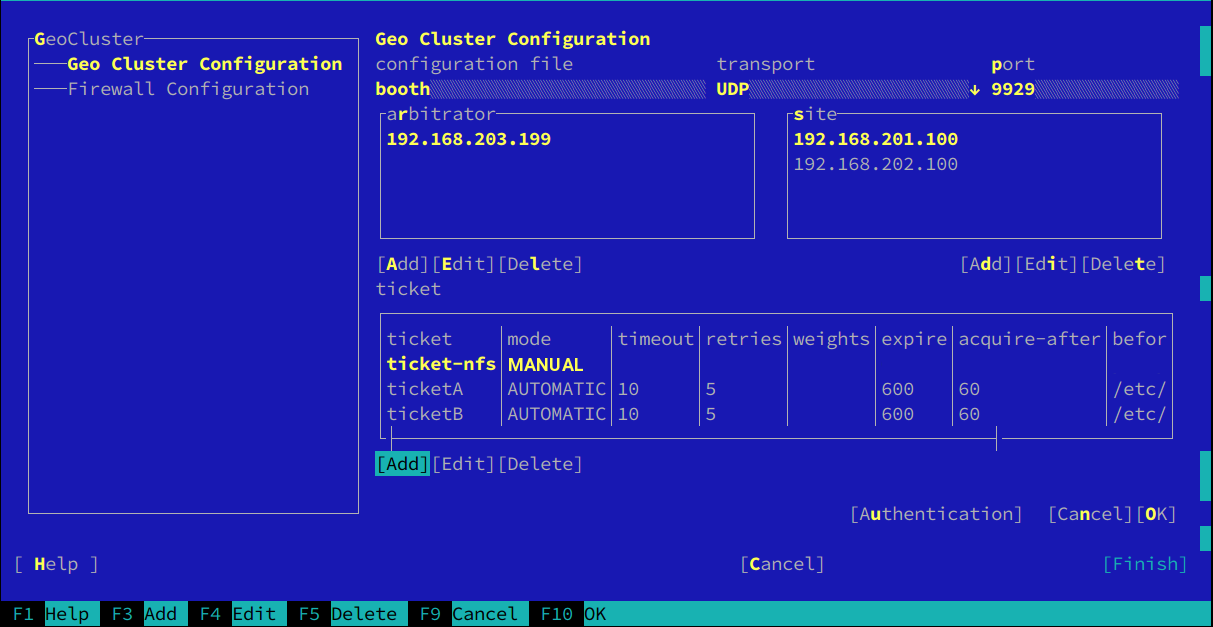

4.3.2 Setting Up Booth with YaST

1. Log in to a cluster node as root or equivalent.

2. Start the YaST module.

3. Choose to edit an existing booth configuration file or to create a new booth configuration file:

   a. In the screen that appears, configure the following parameters:

      - Configuration File. A name for the booth configuration file. YaST suggests booth by default. This results in the booth configuration being written to /etc/booth/booth.conf. Only change this value if you need to set up multiple booth instances for different Geo clusters as described in Section 4.4, “Using a Multi-Tenant Booth Setup”.

      - Transport. The transport protocol used for communication between the sites. Only UDP is supported, but other transport layers will follow in the future. See also Example 4.1, “A Booth Configuration File”, position 1.

      - Port. The port to be used for communication between the booth instances at each site. See also Example 4.1, “A Booth Configuration File”, position 2.

      - Arbitrator. The IP address of the machine to use as arbitrator. See also Example 4.1, “A Booth Configuration File”, position 3. To specify an arbitrator, add an entry. In the dialog that opens, enter the IP address of your arbitrator and confirm.

      - Site. The IP address used for the boothd on a site. See also Example 4.1, “A Booth Configuration File”, position 4. To specify a site of your Geo cluster, add an entry. In the dialog that opens, enter the IP address of one site and confirm.

      - Ticket. The tickets to be managed by booth or a cluster administrator. See also Example 4.1, “A Booth Configuration File”, position 6. To specify a ticket, add an entry. In the dialog that opens, enter a unique name. If you need to define multiple tickets with the same parameters and values, save configuration effort by creating a “ticket template” that specifies the default parameters and values for all tickets. To do so, use __default__ as name.

      - Authentication. To enable authentication for booth, open the authentication dialog and activate authentication there. If you already have an existing key, specify its path and file name. To generate a key file for a new Geo cluster, use the option for generating a key file. The key will be created and written to the location you specified.

   b. Additionally, you can specify optional parameters for your ticket. For an overview, see Example 4.1, “A Booth Configuration File”, positions 7 to 15.

   c. Confirm your changes.

      Figure 4.1: Example Ticket Dependency

   d. Close the current booth configuration screen. YaST shows the name of the booth configuration file that you have defined.

4. Before closing the YaST module, switch to the firewall category.

5. To open the port you have configured for booth, enable the corresponding firewall option.

   Important: Firewall Setting for Local Machine Only

   The firewall setting is only applied to the current machine. It opens the UDP and TCP ports that have been specified in /etc/booth/booth.conf or any other booth configuration files (see Section 4.4, “Using a Multi-Tenant Booth Setup”).

   Make sure to open the respective ports on all other cluster nodes and arbitrators of your Geo cluster setup, too. Do so either manually or by synchronizing the following files with Csync2:

   - /usr/lib/firewalld
   - /usr/lib/firewalld/services/booth.xml

6. Confirm all settings and close the YaST module. Depending on the NAME of the configuration file specified in Step 3.a, the configuration is written to /etc/booth/NAME.conf.

4.4 Using a Multi-Tenant Booth Setup

For setups including multiple Geo clusters, it is possible to “share” the same arbitrator (as of SUSE Linux Enterprise High Availability 12). By providing several booth configuration files, you can start multiple booth instances on the same arbitrator, with each booth instance running on a different port. That way, you can use one machine to serve as arbitrator for different Geo clusters.

Let us assume you have two Geo clusters, one in EMEA (Europe, the Middle East and Africa), and one in the Asia-Pacific region (APAC).

To use the same arbitrator for both Geo clusters, create two configuration files in the /etc/booth directory: /etc/booth/emea.conf and /etc/booth/apac.conf. Both must minimally differ in the following parameters:

- The port used for the communication of the booth instances.
- The sites belonging to the different Geo clusters that the arbitrator is used for.

Example 4.2: /etc/booth/apac.conf

    transport = UDP                                                                  (1)
    port = 9133                                                                      (2)
    arbitrator = 192.168.203.100                                                     (3)
    site = 192.168.2.254                                                             (4)
    site = 192.168.1.112                                                             (4)
    authfile = /etc/booth/authkey-apac                                               (5)
    ticket = "tkt-db-apac-intern"                                                    (6)
        timeout = 10
        retries = 5
        renewal-freq = 60
        before-acquire-handler = /usr/share/booth/service-runnable db-apac-intern    (12) (13) (14)
    ticket = "tkt-db-apac-cust"                                                      (6)
        timeout = 10
        retries = 5
        renewal-freq = 60
        before-acquire-handler = /usr/share/booth/service-runnable db-apac-cust

Example 4.3: /etc/booth/emea.conf

    transport = UDP                                                                  (1)
    port = 9150                                                                      (2)
    arbitrator = 192.168.203.100                                                     (3)
    site = 192.168.201.100                                                           (4)
    site = 192.168.202.100                                                           (4)
    authfile = /etc/booth/authkey-emea                                               (5)
    ticket = "tkt-sap-crm"                                                           (6)
        expire = 900
        renewal-freq = 60
        before-acquire-handler = /usr/share/booth/service-runnable sap-crm           (12) (13) (14)
    ticket = "tkt-sap-prod"                                                          (6)
        expire = 600
        renewal-freq = 60
        before-acquire-handler = /usr/share/booth/service-runnable sap-prod

(1) The transport protocol used for communication between the sites. Only UDP is supported, but other transport layers will follow in the future. Currently, this parameter can therefore be omitted.

(2) The port to be used for communication between the booth instances at each site. The configuration files use different ports so that multiple booth instances can be started on the same arbitrator.

(3) The IP address of the machine to use as arbitrator. In the examples above, we use the same arbitrator for different Geo clusters.

(4) The IP address used for the boothd on a site. […]

(5) Optional parameter. Enables booth authentication for clients and servers on the basis of a shared key. This parameter specifies the path to the key file. Use different key files for different tenants.

Key Requirements: […]

(6) The tickets to be managed by booth or a cluster administrator. Theoretically, the same ticket names can be defined in different booth configuration files. The tickets will not interfere because they are part of different Geo clusters that are managed by different booth instances. However, for a better overview, we advise using distinct ticket names for each Geo cluster as shown in the examples above.

(12) Optional parameter. If set, the specified command will be called before […]

(13) The […]

(14) The resource to be tested by the […]
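Because every booth instance on a shared arbitrator needs its own port, it can be useful to check the configuration files for clashes before starting the services. The following is a minimal sketch with a hypothetical helper name, duplicate_booth_ports. Files without a port line are ignored, so an instance that relies on the default port is not covered by this check.

```shell
# Print every port that is set in more than one *.conf file in DIR.
# No output means that all configured ports are distinct.
# Usage: duplicate_booth_ports DIR
duplicate_booth_ports() (
    for conf in "$1"/*.conf; do
        [ -f "$conf" ] || continue
        awk -F= '/^[ \t]*port[ \t]*=/ { gsub(/[ \t]/, "", $2); print $2 }' "$conf"
    done | sort | uniq -d
)

# Example (on the arbitrator):
#   duplicate_booth_ports /etc/booth
```

With the two example files above (ports 9133 and 9150), the function prints nothing.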

1. Create different booth configuration files in /etc/booth as shown in Example 4.2, “/etc/booth/apac.conf” and Example 4.3, “/etc/booth/emea.conf”. Do so either manually or with YaST, as outlined in Section 4.3.2, “Setting Up Booth with YaST”.

2. On the arbitrator, open the ports that are defined in any of the booth configuration files in /etc/booth.

3. On the nodes belonging to the individual Geo clusters that the arbitrator is used for, open the port that is used for the respective booth instance.

4. Synchronize the respective booth configuration files across all cluster nodes and arbitrators that use the same booth configuration. For details, see Section 4.5, “Synchronizing the Booth Configuration to All Sites and Arbitrators”.

5. On the arbitrator, start the individual booth instances as described in Starting the Booth Services on Arbitrators for multi-tenancy setups.

6. On the individual Geo clusters, start the booth service as described in Starting the Booth Services on Cluster Sites.

4.5 Synchronizing the Booth Configuration to All Sites and Arbitrators

To make booth work correctly, all cluster nodes and arbitrators within one Geo cluster must use the same booth configuration.

You can use Csync2 to synchronize the booth configuration. For details, see Section 5.1, “Csync2 Setup for Geo Clusters” and Section 5.2, “Synchronizing Changes with Csync2”.

In case of any booth configuration changes, make sure to update the configuration files accordingly on all parties and to restart the booth services as described in Section 4.7, “Reconfiguring Booth While Running”.
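One way to confirm that a synchronized file is identical on every machine is to compare checksums. The sketch below assumes that sha256sum is available and that the other machine can be reached via SSH. The host name arbitrator1 and the helper name conf_digest are hypothetical.

```shell
# Print the SHA-256 digest of a file (digest only, without the file name).
# Usage: conf_digest FILE
conf_digest() {
    sha256sum "$1" | cut -d' ' -f1
}

# Example: compare the local copy with the copy on another machine.
#   local_sum=$(conf_digest /etc/booth/booth.conf)
#   remote_sum=$(ssh arbitrator1 sha256sum /etc/booth/booth.conf | cut -d' ' -f1)
#   [ "$local_sum" = "$remote_sum" ] || echo "booth.conf differs on arbitrator1"
```

The same comparison applies to the authentication key file if you use one.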

4.6 Enabling and Starting the Booth Services

- Starting the Booth Services on Cluster Sites

The booth service for each cluster site is managed by the booth resource group (that has either been configured automatically if you used the ha-cluster-init scripts for Geo cluster setup, or manually as described in Section 6.2, “Configuring a Resource Group for boothd”). To start one instance of the booth service per site, start the respective booth resource group on each cluster site.

- Starting the Booth Services on Arbitrators

Starting with SUSE Linux Enterprise 12, booth arbitrators are managed with systemd. The unit file is named booth@.service. The @ denotes the possibility to run the service with a parameter, which is in this case the name of the configuration file.

To enable the booth service on an arbitrator, use the following command:

    # systemctl enable booth@booth

After the service has been enabled from the command line, YaST Services Manager can be used to manage the service (as long as the service is not disabled). If the service is disabled, it will disappear from the service list in YaST the next time systemd is restarted.

The command to start the booth service depends on your booth setup:

If you are using the default setup as described in Section 4.3, only /etc/booth/booth.conf is configured. In that case, log in to each arbitrator and use the following command:

    # systemctl start booth@booth

If you are running booth in multi-tenancy mode as described in Section 4.4, you have configured multiple booth configuration files in /etc/booth. To start the services for the individual booth instances, use systemctl start booth@NAME, where NAME stands for the name of the respective configuration file /etc/booth/NAME.conf.

For example, if you have the booth configuration files /etc/booth/emea.conf and /etc/booth/apac.conf, log in to your arbitrator and execute the following commands:

    # systemctl start booth@emea
    # systemctl start booth@apac

This starts the booth service in arbitrator mode. It can communicate with all other booth daemons but in contrast to the booth daemons running on the cluster sites, it cannot be granted a ticket. Booth arbitrators take part in elections only. Otherwise, they are dormant.
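On an arbitrator that serves several Geo clusters, the instance names can be derived from the configuration files instead of being listed by hand. The following sketch uses a hypothetical helper, booth_units, and relies only on the naming rule described above (booth@NAME for /etc/booth/NAME.conf).

```shell
# Print one systemd instance name (booth@NAME) for each NAME.conf in DIR.
# Usage: booth_units DIR
booth_units() (
    for conf in "$1"/*.conf; do
        [ -f "$conf" ] || continue
        printf 'booth@%s\n' "$(basename "$conf" .conf)"
    done
)

# Example (run as root on the arbitrator):
#   for unit in $(booth_units /etc/booth); do
#       systemctl enable "$unit"
#       systemctl start "$unit"
#   done
```

Files such as booth.conf.example are skipped because they do not end in .conf.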

4.7 Reconfiguring Booth While Running

In case you need to change the booth configuration while the booth services are already running, proceed as follows:

Adjust the booth configuration files as desired.

Synchronize the updated booth configuration files to all cluster nodes and arbitrators that are part of your Geo cluster. For details, see Chapter 5, Synchronizing Configuration Files Across All Sites and Arbitrators.

Restart the booth services on the arbitrators and cluster sites as described in Section 4.6, “Enabling and Starting the Booth Services”. This does not have any effect on tickets that have already been granted to sites.
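For an arbitrator, the synchronize and restart steps can be sketched as a short script. This is an illustration under assumptions: it presumes that Csync2 is already set up as described in Chapter 5 and that the change was made on the machine where the script runs. The names run and reconfigure_arbitrator are hypothetical. Setting DRY_RUN=1 only prints the commands.

```shell
# Run a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Push the changed files and restart one booth instance on an arbitrator.
# Usage: reconfigure_arbitrator NAME   (NAME as in /etc/booth/NAME.conf)
reconfigure_arbitrator() {
    run csync2 -xv                        # synchronize changed files to the peers
    run systemctl restart "booth@$1"      # restart this booth instance
}

# Example (run as root on the arbitrator):
#   reconfigure_arbitrator booth
```

On the cluster sites, booth is restarted through its resource group instead, for example with crm resource restart g-booth, where g-booth is a hypothetical group name.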