1 SES and Ceph #

SUSE Enterprise Storage is a distributed storage system designed for scalability, reliability and performance which is based on the Ceph technology. A Ceph cluster can be run on commodity servers in a common network like Ethernet. The cluster scales up well to thousands of servers (later on referred to as nodes) and into the petabyte range. As opposed to conventional systems which have allocation tables to store and fetch data, Ceph uses a deterministic algorithm to allocate storage for data and has no centralized information structure. Ceph assumes that in storage clusters the addition or removal of hardware is the rule, not the exception. The Ceph cluster automates management tasks such as data distribution and redistribution, data replication, failure detection and recovery. Ceph is both self-healing and self-managing which results in a reduction of administrative and budget overhead.

This chapter provides a high level overview of SUSE Enterprise Storage 7.1 and briefly describes the most important components.

1.1 Ceph features #

The Ceph environment has the following features:

- Scalability

Ceph can scale to thousands of nodes and manage storage in the range of petabytes.

- Commodity Hardware

No special hardware is required to run a Ceph cluster. For details, see Chapter 2, Hardware requirements and recommendations.

- Self-managing

The Ceph cluster is self-managing. When nodes are added, removed or fail, the cluster automatically redistributes the data. It is also aware of overloaded disks.

- No Single Point of Failure

No node in a cluster stores important information alone. The number of redundancies can be configured.

- Open Source Software

Ceph is an open source software solution and independent of specific hardware or vendors.

1.2 Ceph core components #

To make full use of Ceph's power, it is necessary to understand some of the basic components and concepts. This section introduces some parts of Ceph that are often referenced in other chapters.

1.2.1 RADOS #

The basic component of Ceph is called RADOS (Reliable Autonomic Distributed Object Store). It is responsible for managing the data stored in the cluster. Data in Ceph is usually stored as objects. Each object consists of an identifier and the data.

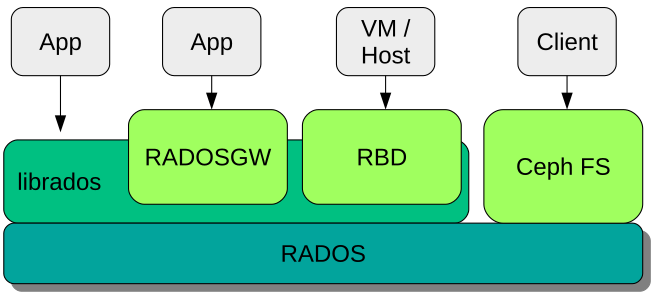

RADOS provides the following access methods to the stored objects that cover many use cases:

- Object Gateway

Object Gateway is an HTTP REST gateway for the RADOS object store. It enables direct access to objects stored in the Ceph cluster.

- RADOS Block Device

RADOS Block Device (RBD) can be accessed like any other block device. These can be used, for example, in combination with libvirt for virtualization purposes.

- CephFS

The Ceph File System is a POSIX-compliant file system.

- librados

librados is a library that can be used with many programming languages to create an application capable of directly interacting with the storage cluster.

librados is used by Object Gateway and RBD, while CephFS directly interfaces with RADOS (see Figure 1.1, “Interfaces to the Ceph object store”).

1.2.2 CRUSH #

At the core of a Ceph cluster is the CRUSH algorithm. CRUSH is the acronym for Controlled Replication Under Scalable Hashing. CRUSH is a function that handles the storage allocation and needs comparably few parameters. That means only a small amount of information is necessary to calculate the storage position of an object. The parameters are a current map of the cluster including the health state, some administrator-defined placement rules and the name of the object that needs to be stored or retrieved. With this information, all nodes in the Ceph cluster are able to calculate where an object and its replicas are stored. This makes writing or reading data very efficient. CRUSH tries to evenly distribute data over all nodes in the cluster.

The CRUSH Map contains all storage nodes and administrator-defined placement rules for storing objects in the cluster. It defines a hierarchical structure that usually corresponds to the physical structure of the cluster. For example, the data-containing disks are in hosts, hosts are in racks, racks in rows and rows in data centers. This structure can be used to define failure domains. Ceph then ensures that replications are stored on different branches of a specific failure domain.

If the failure domain is set to rack, replications of objects are distributed over different racks. This can mitigate outages caused by a failed switch in a rack. If one power distribution unit supplies a row of racks, the failure domain can be set to row. When the power distribution unit fails, the replicated data is still available on other rows.
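The idea of computing a placement from a cluster map, a failure domain and an object name can be illustrated with a toy function. The following Python sketch is not the CRUSH algorithm: it substitutes simple rendezvous (highest-random-weight) hashing, and the map layout, the function names and the use of SHA-256 are assumptions made purely for illustration.

```python
import hashlib

# Hypothetical cluster map: failure domain (rack) -> OSDs located in it.
CLUSTER_MAP = {
    "rack1": ["osd.1", "osd.2", "osd.3"],
    "rack2": ["osd.4", "osd.5", "osd.6"],
    "rack3": ["osd.7", "osd.8", "osd.9"],
}


def score(object_name: str, item: str) -> int:
    """Deterministic pseudo-random score for an (object, item) pair."""
    digest = hashlib.sha256(f"{object_name}/{item}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(object_name: str, cluster_map: dict, replicas: int) -> list:
    """Pick one OSD in each of `replicas` distinct failure domains."""
    if replicas > len(cluster_map):
        raise ValueError("not enough failure domains for the requested replicas")
    # Rank the failure domains by score and keep the best `replicas` of them.
    domains = sorted(cluster_map, key=lambda d: score(object_name, d), reverse=True)
    chosen = domains[:replicas]
    # Inside each chosen domain, take the OSD with the highest score.
    return [max(cluster_map[d], key=lambda o: score(object_name, o)) for d in chosen]


if __name__ == "__main__":
    print(place("my-object", CLUSTER_MAP, replicas=3))
```

Because the result depends only on the map and the object name, every party holding the same map computes the same locations without consulting a central lookup table, and no two replicas share a rack.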

1.2.3 Ceph nodes and daemons #

In Ceph, nodes are servers working for the cluster. They can run several different types of daemons. We recommend running only one type of daemon on each node, except for Ceph Manager daemons which can be co-located with Ceph Monitors. Each cluster requires at least Ceph Monitor, Ceph Manager, and Ceph OSD daemons:

- Admin Node

The Admin Node is a Ceph cluster node from which you run commands to manage the cluster. The Admin Node is a central point of the Ceph cluster because it manages the rest of the cluster nodes by querying and instructing their Salt Minion services.

- Ceph Monitor

Ceph Monitor (often abbreviated as MON) nodes maintain information about the cluster health state, a map of all nodes and data distribution rules (see Section 1.2.2, “CRUSH”).

If failures or conflicts occur, the Ceph Monitor nodes in the cluster decide by majority which information is correct. To form a qualified majority, it is recommended to have an odd number of Ceph Monitor nodes, and at least three of them.

If more than one site is used, the Ceph Monitor nodes should be distributed over an odd number of sites. The number of Ceph Monitor nodes per site should be such that more than 50% of the Ceph Monitor nodes remain functional if one site fails.

- Ceph Manager

The Ceph Manager collects the state information from the whole cluster. The Ceph Manager daemon runs alongside the Ceph Monitor daemons. It provides additional monitoring and interfaces with external monitoring and management systems. It includes other services as well. For example, the Ceph Dashboard Web UI runs on the same node as the Ceph Manager.

The Ceph Manager requires no additional configuration, beyond ensuring it is running.

- Ceph OSD

A Ceph OSD is a daemon that handles Object Storage Devices, which are physical or logical storage units such as hard disks, partitions, or logical volumes. The daemon additionally takes care of data replication and rebalancing when nodes are added or removed.

Ceph OSD daemons communicate with monitor daemons and provide them with the state of the other OSD daemons.
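The majority rule described for Ceph Monitors in this list reduces to simple arithmetic. The sketch below, with hypothetical function names and site layouts, checks whether more than 50% of the monitors remain after the loss of any single site.

```python
def majority(total_monitors: int) -> int:
    """Smallest number of monitors that is more than 50% of the total."""
    return total_monitors // 2 + 1


def survives_any_site_failure(monitors_per_site: dict) -> bool:
    """True if a majority of monitors remains after losing any one site."""
    total = sum(monitors_per_site.values())
    needed = majority(total)
    return all(total - count >= needed for count in monitors_per_site.values())


if __name__ == "__main__":
    print(majority(3))  # 2
    # Three sites: losing any one of them leaves at least 3 of 5 monitors.
    print(survives_any_site_failure({"site-a": 2, "site-b": 2, "site-c": 1}))  # True
    # Two sites: losing site-a leaves only 2 of 5 monitors.
    print(survives_any_site_failure({"site-a": 3, "site-b": 2}))  # False
```

The same arithmetic shows why an odd number is recommended: four monitors need a majority of three and therefore tolerate one failure, which is no better than three monitors.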

To use CephFS, Object Gateway, NFS Ganesha, or iSCSI Gateway, additional nodes are required:

- Metadata Server (MDS)

CephFS metadata is stored in its own RADOS pool (see Section 1.3.1, “Pools”). The Metadata Servers act as a smart caching layer for the metadata and serialize access when needed. This allows concurrent access from many clients without explicit synchronization.

- Object Gateway

The Object Gateway is an HTTP REST gateway for the RADOS object store. It is compatible with OpenStack Swift and Amazon S3 and has its own user management.

- NFS Ganesha

NFS Ganesha provides NFS access to either the Object Gateway or CephFS. It runs in user space instead of kernel space and directly interacts with the Object Gateway or CephFS.

- iSCSI Gateway

iSCSI is a storage network protocol that allows clients to send SCSI commands to SCSI storage devices (targets) on remote servers.

- Samba Gateway

The Samba Gateway provides Samba access to data stored on CephFS.

1.3 Ceph storage structure #

1.3.1 Pools #

Objects that are stored in a Ceph cluster are put into pools. Pools represent logical partitions of the cluster to the outside world. For each pool a set of rules can be defined, for example, how many replications of each object must exist. The standard configuration of pools is called replicated pool.

Pools usually contain objects but can also be configured to act similarly to RAID 5. In this configuration, objects are stored in chunks along with additional coding chunks. The coding chunks contain the redundant information. The number of data and coding chunks can be defined by the administrator. In this configuration, pools are referred to as erasure coded pools or EC pools.
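The difference in raw capacity consumed by the two pool types is plain arithmetic. The sketch below compares them for a given amount of user data, ignoring metadata and padding; the function names are hypothetical, and k and m stand for the number of data and coding chunks.

```python
def replicated_raw_bytes(user_bytes: int, replicas: int) -> int:
    """Raw capacity consumed when every object is stored `replicas` times."""
    return user_bytes * replicas


def erasure_coded_raw_bytes(user_bytes: int, k: int, m: int) -> float:
    """Raw capacity consumed with k data chunks and m coding chunks.

    Each object is split into k data chunks of size user_bytes / k,
    and m coding chunks of the same size are added.
    """
    return user_bytes * (k + m) / k


if __name__ == "__main__":
    one_tib = 1024 ** 4
    print(replicated_raw_bytes(one_tib, 3) / one_tib)            # 3.0
    print(erasure_coded_raw_bytes(one_tib, k=4, m=2) / one_tib)  # 1.5
```

In this example, the erasure coded layout with four data and two coding chunks consumes half the raw capacity of three-fold replication for the same user data.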

1.3.2 Placement groups #

Placement Groups (PGs) are used for the distribution of data within a pool. When creating a pool, a certain number of placement groups is set. The placement groups are used internally to group objects and are an important factor for the performance of a Ceph cluster. The PG for an object is determined by the object's name.
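A simplified way to picture this mapping is to hash the object name and reduce the result modulo the number of placement groups. The sketch below is an illustration only: it uses CRC32 as a stand-in hash, whereas Ceph uses its own hash function and mapping, and the function name is hypothetical.

```python
import zlib


def placement_group(object_name: str, pg_num: int) -> int:
    """Map an object name to a placement group index in [0, pg_num)."""
    if pg_num <= 0:
        raise ValueError("pg_num must be positive")
    # Stand-in hash; the same name always yields the same index.
    return zlib.crc32(object_name.encode("utf-8")) % pg_num


if __name__ == "__main__":
    for name in ("image-001", "image-002", "backup.tar"):
        print(name, "->", placement_group(name, pg_num=4))
```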

1.3.3 Example #

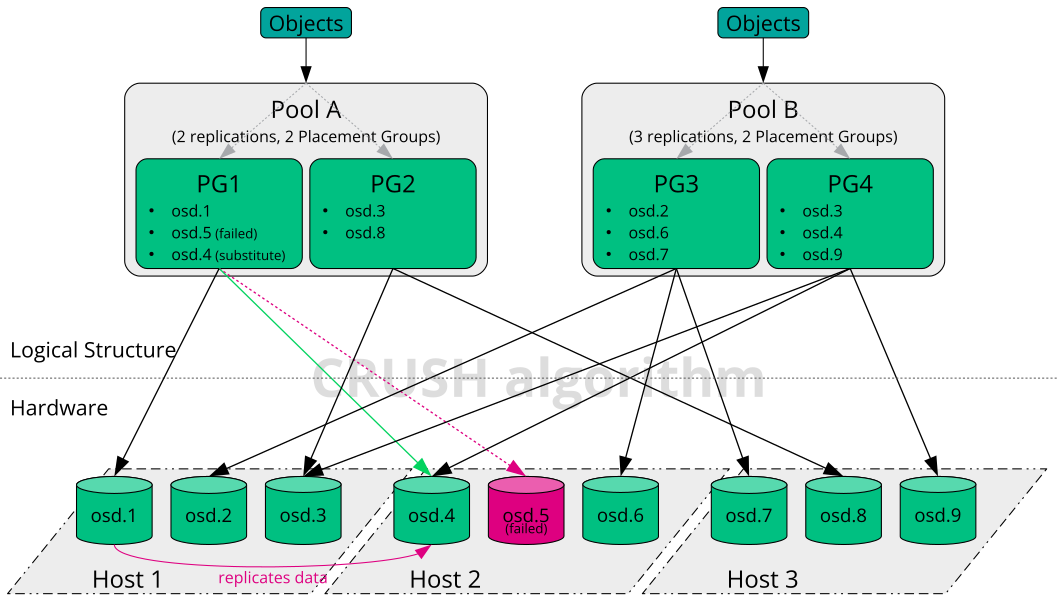

This section provides a simplified example of how Ceph manages data (see Figure 1.2, “Small scale Ceph example”). This example does not represent a recommended configuration for a Ceph cluster. The hardware setup consists of three storage nodes or Ceph OSDs (Host 1, Host 2, Host 3). Each node has three hard disks which are used as OSDs (osd.1 to osd.9). The Ceph Monitor nodes are neglected in this example.

While Ceph OSD or Ceph OSD daemon refers to a daemon that is run on a node, the word OSD refers to the logical disk that the daemon interacts with.

The cluster has two pools, Pool A and Pool B. While Pool A replicates objects only two times, resilience for Pool B is more important and it has three replications for each object.

When an application puts an object into a pool, for example via the REST API, a Placement Group (PG1 to PG4) is selected based on the pool and the object name. The CRUSH algorithm then calculates on which OSDs the object is stored, based on the Placement Group that contains the object.

In this example the failure domain is set to host. This ensures that replications of objects are stored on different hosts. Depending on the replication level set for a pool, the object is stored on two or three OSDs that are used by the Placement Group.

An application that writes an object only interacts with one Ceph OSD, the primary Ceph OSD. The primary Ceph OSD takes care of replication and confirms the completion of the write process after all other OSDs have stored the object.

If osd.5 fails, all objects in PG1 are still available on osd.1. As soon as the cluster recognizes that an OSD has failed, another OSD takes over. In this example, osd.4 is used as a replacement for osd.5. The objects stored on osd.1 are then replicated to osd.4 to restore the replication level.

If a new node with new OSDs is added to the cluster, the cluster map is going to change. The CRUSH function then returns different locations for objects. Objects that receive new locations will be relocated. This process results in a balanced usage of all OSDs.
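The effect of a changed map on computed locations can be demonstrated with a small experiment. The sketch below again uses rendezvous hashing as a stand-in for CRUSH, with hypothetical host and object names; it counts how many objects receive a new location when a fourth host is added to three existing ones.

```python
import hashlib


def score(name: str, host: str) -> int:
    """Deterministic pseudo-random score for an (object, host) pair."""
    digest = hashlib.sha256(f"{name}/{host}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def locate(name: str, hosts: list) -> str:
    """Return the host with the highest score for this object name."""
    return max(hosts, key=lambda h: score(name, h))


def moved_objects(names: list, old_hosts: list, new_hosts: list) -> list:
    """Objects whose computed location differs between the two maps."""
    return [n for n in names if locate(n, old_hosts) != locate(n, new_hosts)]


if __name__ == "__main__":
    objects = [f"object-{i}" for i in range(1000)]
    before = ["host1", "host2", "host3"]
    after = before + ["host4"]
    moved = moved_objects(objects, before, after)
    print(f"{len(moved)} of {len(objects)} objects change location")
```

With this stand-in, only objects that now score highest on the new host are relocated, which is roughly one quarter of them; the rest stay where they are.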

1.4 BlueStore #

BlueStore has been the default storage back-end for Ceph since SES 5. It has better performance than FileStore, full data check-summing, and built-in compression.

BlueStore manages either one, two, or three storage devices. In the simplest case, BlueStore consumes a single primary storage device. The storage device is normally partitioned into two parts:

- A small partition named BlueFS that implements file system-like functionalities required by RocksDB.

- A large partition occupying the rest of the device. It is managed directly by BlueStore and contains all of the actual data. This primary device is normally identified by a block symbolic link in the data directory.

It is also possible to deploy BlueStore across two additional devices:

- A WAL device can be used for BlueStore’s internal journal or write-ahead log. It is identified by the block.wal symbolic link in the data directory. It is only useful to use a separate WAL device if the device is faster than the primary device or the DB device, for example when:

  - The WAL device is an NVMe, and the DB device is an SSD, and the data device is either SSD or HDD.

  - Both the WAL and DB devices are separate SSDs, and the data device is an SSD or HDD.

- A DB device can be used for storing BlueStore’s internal metadata. BlueStore (or rather, the embedded RocksDB) will put as much metadata as it can on the DB device to improve performance. Again, it is only helpful to provision a shared DB device if it is faster than the primary device.

Plan thoroughly to ensure sufficient size of the DB device. If the DB device fills up, metadata will spill over to the primary device, which badly degrades the OSD's performance.
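Spillover can also be evaluated programmatically from an OSD's performance counters. The following is a minimal sketch, assuming the performance dump has already been captured as JSON text with a top-level bluefs object containing the counters shown in the sample output in this section; the function name and the returned keys are hypothetical.

```python
import json


def bluefs_spillover(perf_dump_json: str) -> dict:
    """Summarize DB usage and spillover from an OSD perf dump (JSON text)."""
    bluefs = json.loads(perf_dump_json)["bluefs"]
    db_total = bluefs["db_total_bytes"]
    db_used = bluefs["db_used_bytes"]
    slow_used = bluefs["slow_used_bytes"]
    return {
        # Fraction of the DB device in use; 0.0 if no DB device is reported.
        "db_used_ratio": db_used / db_total if db_total else 0.0,
        # Bytes of metadata that ended up on the slower primary device.
        "spilled_bytes": slow_used,
        "spilling": slow_used > 0,
    }


if __name__ == "__main__":
    sample = json.dumps({"bluefs": {
        "db_total_bytes": 1073741824,
        "db_used_bytes": 33554432,
        "wal_total_bytes": 0,
        "wal_used_bytes": 0,
        "slow_total_bytes": 554432,
        "slow_used_bytes": 554432,
    }})
    print(bluefs_spillover(sample))
```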

You can check if a WAL/DB partition is getting full and spilling over with the ceph daemon osd.ID perf dump command. The slow_used_bytes value shows the amount of data being spilled out:

cephuser@adm > ceph daemon osd.ID perf dump | jq '.bluefs'

"db_total_bytes": 1073741824,

"db_used_bytes": 33554432,

"wal_total_bytes": 0,

"wal_used_bytes": 0,

"slow_total_bytes": 554432,

"slow_used_bytes": 554432,

1.5 Additional information #

Ceph as a community project has its own extensive online documentation. For topics not found in this manual, refer to https://docs.ceph.com/en/pacific/.

The original publication CRUSH: Controlled, Scalable, Decentralized Placement of Replicated Data by S.A. Weil, S.A. Brandt, E.L. Miller, C. Maltzahn provides helpful insight into the inner workings of Ceph. It is recommended reading, especially when deploying large-scale clusters. The publication can be found at http://www.ssrc.ucsc.edu/papers/weil-sc06.pdf.

SUSE Enterprise Storage can be used with non-SUSE OpenStack distributions. The Ceph clients need to be at a level that is compatible with SUSE Enterprise Storage.

Note: SUSE supports the server component of the Ceph deployment and the client is supported by the OpenStack distribution vendor.