7 Deploying the bootstrap cluster using ceph-salt #

This section guides you through the process of deploying a basic Ceph cluster. Read the following subsections carefully and execute the included commands in the given order.

7.1 Installing ceph-salt #

ceph-salt provides tools for deploying Ceph clusters managed by cephadm. ceph-salt uses the Salt infrastructure to perform OS management (for example, software updates or time synchronization) and to define roles for Salt Minions.

On the Salt Master, install the ceph-salt package:

root@master # zypper install ceph-salt

The above command installs ceph-salt-formula as a dependency, which modifies the Salt Master configuration by inserting additional files in the /etc/salt/master.d directory. To apply the changes, restart salt-master.service and synchronize Salt modules:

root@master # systemctl restart salt-master.service
root@master # salt \* saltutil.sync_all

7.2 Configuring cluster properties #

Use the ceph-salt config command to configure the basic properties of the cluster.

The /etc/ceph/ceph.conf file is managed by cephadm and users should not edit it. Ceph configuration parameters should be set using the new ceph config command. See Section 28.2, “Configuration database” for more information.

7.2.1 Using the ceph-salt shell #

If you run ceph-salt config without any path or subcommand, you will enter an interactive ceph-salt shell. The shell is convenient if you need to configure multiple properties in one batch and do not want to type the full command syntax.

root@master # ceph-salt config
/> ls
o- / ............................................................... [...]
  o- ceph_cluster .................................................. [...]
  | o- minions .............................................. [no minions]
  | o- roles ....................................................... [...]
  |   o- admin .............................................. [no minions]
  |   o- bootstrap ........................................... [no minion]
  |   o- cephadm ............................................ [no minions]
  |   o- tuned ..................................................... [...]
  |     o- latency .......................................... [no minions]
  |     o- throughput ....................................... [no minions]
  o- cephadm_bootstrap ............................................. [...]
  | o- advanced .................................................... [...]
  | o- ceph_conf ................................................... [...]
  | o- ceph_image_path .................................. [ no image path]
  | o- dashboard ................................................... [...]
  | | o- force_password_update ................................. [enabled]
  | | o- password ................................................ [admin]
  | | o- ssl_certificate ....................................... [not set]
  | | o- ssl_certificate_key ................................... [not set]
  | | o- username ................................................ [admin]
  | o- mon_ip .................................................. [not set]
  o- containers .................................................... [...]
  | o- registries_conf ......................................... [enabled]
  | | o- registries .............................................. [empty]
  | o- registry_auth ............................................... [...]
  |   o- password .............................................. [not set]
  |   o- registry .............................................. [not set]
  |   o- username .............................................. [not set]
  o- ssh ............................................... [no key pair set]
  | o- private_key .................................. [no private key set]
  | o- public_key .................................... [no public key set]
  o- time_server ........................... [enabled, no server host set]
    o- external_servers .......................................... [empty]
    o- servers ................................................... [empty]
    o- subnet .................................................. [not set]

As you can see from the output of ceph-salt's ls command, the cluster configuration is organized in a tree structure. To configure a specific property of the cluster in the ceph-salt shell, you have two options:

Run the command from the current position and enter the absolute path to the property as the first argument:

/> /cephadm_bootstrap/dashboard ls
o- dashboard ....................................................... [...]
  o- force_password_update ..................................... [enabled]
  o- password .................................................... [admin]
  o- ssl_certificate ........................................... [not set]
  o- ssl_certificate_key ....................................... [not set]
  o- username .................................................... [admin]
/> /cephadm_bootstrap/dashboard/username set ceph-admin
Value set.

Change to the path whose property you need to configure and run the command:

/> cd /cephadm_bootstrap/dashboard/
/cephadm_bootstrap/dashboard> ls
o- dashboard ....................................................... [...]
  o- force_password_update ..................................... [enabled]
  o- password .................................................... [admin]
  o- ssl_certificate ........................................... [not set]
  o- ssl_certificate_key ....................................... [not set]
  o- username ................................................[ceph-admin]

While in a ceph-salt shell, you can use the autocompletion feature similar to a normal Linux shell (Bash) autocompletion. It completes configuration paths, subcommands, or Salt Minion names. When autocompleting a configuration path, you have two options:

To let the shell finish a path relative to your current position, press the TAB key →| twice.

To let the shell finish an absolute path, enter / and press the TAB key →| twice.

If you enter cd from the ceph-salt shell without any path, the command will print a tree structure of the cluster configuration with the line of the current path active. You can use the up and down cursor keys to navigate through individual lines. After you confirm with Enter, the configuration path will change to the last active one.

To keep the documentation consistent, we will use a single command syntax without entering the ceph-salt shell. For example, you can list the cluster configuration tree by using the following command:

root@master # ceph-salt config ls

7.2.2 Adding Salt Minions #

Add all or a subset of the Salt Minions that we deployed and accepted in Chapter 6, Deploying Salt, to the Ceph cluster configuration. You can either specify the Salt Minions by their full names, or use the glob expressions '*' and '?' to include multiple Salt Minions at once. Use the add subcommand under the /ceph_cluster/minions path. The following command includes all accepted Salt Minions:

root@master # ceph-salt config /ceph_cluster/minions add '*'

Verify that the specified Salt Minions were added:

root@master # ceph-salt config /ceph_cluster/minions ls
o- minions ............................................... [Minions: 5]
  o- ses-main.example.com .................................. [no roles]
  o- ses-node1.example.com ................................. [no roles]
  o- ses-node2.example.com ................................. [no roles]
  o- ses-node3.example.com ................................. [no roles]
  o- ses-node4.example.com ................................. [no roles]

7.2.3 Specifying Salt Minions managed by cephadm #

Specify which nodes will belong to the Ceph cluster and will be managed by cephadm. Include all nodes that will run Ceph services as well as the Admin Node:

root@master # ceph-salt config /ceph_cluster/roles/cephadm add '*'

7.2.4 Specifying Admin Node #

The Admin Node is the node where the ceph.conf configuration file and the Ceph admin keyring are installed. You usually run Ceph-related commands on the Admin Node.

In a homogeneous environment where all or most hosts belong to SUSE Enterprise Storage, we recommend having the Admin Node on the same host as the Salt Master.

In a heterogeneous environment where one Salt infrastructure hosts more than one cluster, for example, SUSE Enterprise Storage together with SUSE Manager, do not place the Admin Node on the same host as Salt Master.

To specify the Admin Node, run the following command:

root@master # ceph-salt config /ceph_cluster/roles/admin add ses-main.example.com
1 minion added.
root@master # ceph-salt config /ceph_cluster/roles/admin ls
o- admin ................................................... [Minions: 1]
  o- ses-main.example.com ...................... [Other roles: cephadm]

ceph.conf and the admin keyring on multiple nodes

You can install the Ceph configuration file and admin keyring on multiple nodes if your deployment requires it. For security reasons, avoid installing them on all the cluster's nodes.

7.2.5 Specifying first MON/MGR node #

You need to specify which of the cluster's Salt Minions will bootstrap the cluster. This minion will become the first one running Ceph Monitor and Ceph Manager services.

root@master # ceph-salt config /ceph_cluster/roles/bootstrap set ses-node1.example.com
Value set.
root@master # ceph-salt config /ceph_cluster/roles/bootstrap ls
o- bootstrap ..................................... [ses-node1.example.com]

Additionally, you need to specify the bootstrap MON's IP address on the public network to ensure that the public_network parameter is set correctly, for example:

root@master # ceph-salt config /cephadm_bootstrap/mon_ip set 192.168.10.20

7.2.6 Specifying tuned profiles #

You need to specify which of the cluster's minions have actively tuned profiles. To do so, add these roles explicitly with the following commands. Note that one minion cannot have both the latency and throughput roles.

root@master # ceph-salt config /ceph_cluster/roles/tuned/latency add ses-node1.example.com
Adding ses-node1.example.com...
1 minion added.
root@master # ceph-salt config /ceph_cluster/roles/tuned/throughput add ses-node2.example.com
Adding ses-node2.example.com...
1 minion added.
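Because a minion cannot hold both tuned roles, it can help to compare the two planned lists before running the add commands. The following is a minimal sketch under stated assumptions: the minion lists and the helper name tuned_conflicts are illustrative and not part of ceph-salt.

```shell
#!/bin/sh
# Sketch: detect minions listed for both tuned roles before adding them.
# The two lists are illustrative placeholders, not values from a real cluster.
LATENCY_MINIONS="ses-node1.example.com ses-node3.example.com"
THROUGHPUT_MINIONS="ses-node2.example.com ses-node4.example.com"

# Print every name that occurs in both space-separated lists ($1 and $2).
tuned_conflicts() {
    for a in $1; do
        for b in $2; do
            [ "$a" = "$b" ] && printf '%s\n' "$a"
        done
    done
    return 0
}

conflicts=$(tuned_conflicts "$LATENCY_MINIONS" "$THROUGHPUT_MINIONS")
if [ -n "$conflicts" ]; then
    echo "Minions assigned to both tuned roles: $conflicts" >&2
else
    echo "No conflicts; the role assignments can be added."
fi
```

If the check reports no conflicts, pass each name to the corresponding /ceph_cluster/roles/tuned path as shown above.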

7.2.7 Generating an SSH key pair #

cephadm uses the SSH protocol to communicate with cluster nodes. A user account named cephadm is automatically created and used for SSH communication.

You need to generate the private and public part of the SSH key pair:

root@master # ceph-salt config /ssh generate
Key pair generated.
root@master # ceph-salt config /ssh ls
o- ssh .................................................. [Key Pair set]
  o- private_key ..... [53:b1:eb:65:d2:3a:ff:51:6c:e2:1b:ca:84:8e:0e:83]
  o- public_key ...... [53:b1:eb:65:d2:3a:ff:51:6c:e2:1b:ca:84:8e:0e:83]

7.2.8 Configuring the time server #

All cluster nodes need to have their time synchronized with a reliable time source. There are several scenarios to approach time synchronization:

If all cluster nodes are already configured to synchronize their time using an NTP service of choice, disable time server handling completely:

root@master # ceph-salt config /time_server disable

If your site already has a single source of time, specify the host name of the time source:

root@master # ceph-salt config /time_server/servers add time-server.example.com

Alternatively, ceph-salt can configure one of the Salt Minions to serve as the time server for the rest of the cluster. This is sometimes referred to as an "internal time server". In this scenario, ceph-salt configures the internal time server (which should be one of the Salt Minions) to synchronize its time with an external time server, such as pool.ntp.org, and configures all the other minions to get their time from the internal time server. This can be achieved as follows:

root@master # ceph-salt config /time_server/servers add ses-main.example.com
root@master # ceph-salt config /time_server/external_servers add pool.ntp.org

The /time_server/subnet option specifies the subnet from which NTP clients are allowed to access the NTP server. It is automatically set when you specify /time_server/servers. If you need to change it or specify it manually, run:

root@master # ceph-salt config /time_server/subnet set 10.20.6.0/24
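When setting /time_server/subnet manually, you may want to confirm that the addresses of your minions actually fall inside the chosen CIDR range. The following is a minimal sketch in plain shell arithmetic; the helper names ip_to_int and ip_in_subnet and the client address 10.20.6.15 are assumptions made for illustration.

```shell
#!/bin/sh
# Sketch: test whether an IPv4 address lies inside a CIDR subnet.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
# Assumes octets are written without leading zeros.
ip_to_int() {
    old_ifs=$IFS; IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Return 0 if address $1 is inside subnet $2 (for example 10.20.6.0/24).
ip_in_subnet() {
    net=${2%/*}
    bits=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - $bits)) & 0xFFFFFFFF ))
    addr_int=$(ip_to_int "$1")
    net_int=$(ip_to_int "$net")
    [ $(( $addr_int & $mask )) -eq $(( $net_int & $mask )) ]
}

# Hypothetical client address checked against the subnet used above.
if ip_in_subnet 10.20.6.15 10.20.6.0/24; then
    echo "10.20.6.15 is inside 10.20.6.0/24"
else
    echo "10.20.6.15 is outside 10.20.6.0/24"
fi
```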

Check the time server settings:

root@master # ceph-salt config /time_server ls
o- time_server ................................................ [enabled]
  o- external_servers ............................................... [1]
  | o- pool.ntp.org ............................................... [...]
  o- servers ........................................................ [1]
  | o- ses-main.example.com ..................................... [...]
  o- subnet .............................................. [10.20.6.0/24]

Find more information on setting up time synchronization in https://documentation.suse.com/sles/15-SP3/html/SLES-all/cha-ntp.html#sec-ntp-yast.

7.2.9 Configuring the Ceph Dashboard login credentials #

Ceph Dashboard will be available after the basic cluster is deployed. To access it, you need to set a valid user name and password, for example:

root@master # ceph-salt config /cephadm_bootstrap/dashboard/username set admin
root@master # ceph-salt config /cephadm_bootstrap/dashboard/password set PWD

By default, the first dashboard user will be forced to change their password on first login to the dashboard. To disable this feature, run the following command:

root@master # ceph-salt config /cephadm_bootstrap/dashboard/force_password_update disable

7.2.10 Using the container registry #

These instructions assume the private registry will reside on the SES Admin Node. In cases where the private registry will reside on another host, the instructions will need to be adjusted to meet the new environment.

Also, when migrating from registry.suse.com to a private registry, migrate the current version of container images being used. After the current configuration has been migrated and validated, pull new images from registry.suse.com and upgrade to the new images.

7.2.10.1 Creating the local registry #

To create the local registry, follow these steps:

Verify that the Containers Module extension is enabled:

# SUSEConnect --list-extensions | grep -A2 "Containers Module"
Containers Module 15 SP3 x86_64 (Activated)

Verify that the following packages are installed: apache2-utils (if enabling a secure registry), cni, cni-plugins, podman, podman-cni-config, and skopeo:

# zypper in apache2-utils cni cni-plugins podman podman-cni-config skopeo

Gather the following information:

Fully qualified domain name of the registry host (REG_HOST_FQDN).

An available port number used to map to the registry container port of 5000 (REG_HOST_PORT):

> ss -tulpn | grep :5000

Whether the registry will be secure or insecure (the insecure=[true|false] option).

If the registry will be a secure private registry, determine a username and password (REG_USERNAME, REG_PASSWORD).

If the registry will be a secure private registry, a self-signed certificate can be created, or a root certificate and key will need to be provided (REG_CERTIFICATE.CRT, REG_PRIVATE-KEY.KEY).

Record the current ceph-salt containers configuration:

cephuser@adm > ceph-salt config ls /containers
o- containers .......................................................... [...]
  o- registries_conf ................................................. [enabled]
  | o- registries ...................................................... [empty]
  o- registry_auth ....................................................... [...]
    o- password ........................................................ [not set]
    o- registry ........................................................ [not set]
    o- username ........................................................ [not set]
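One way to keep the gathered values together is to store them in shell variables and sanity-check them before they are substituted into later commands. The following is a minimal sketch, assuming placeholder values and two hypothetical helpers (valid_port and valid_insecure_flag) that are not part of any SUSE tooling.

```shell
#!/bin/sh
# Sketch: keep the gathered registry settings in one place and sanity-check them.
# All values are placeholders; substitute the ones for your site.
REG_HOST_FQDN="registry.example.com"
REG_HOST_PORT="5000"
REG_INSECURE="false"

# Return 0 if $1 is an integer in the valid TCP port range.
valid_port() {
    case $1 in
        ''|*[!0-9]*) return 1 ;;
    esac
    [ "$1" -ge 1 ] && [ "$1" -le 65535 ]
}

# Return 0 if $1 is one of the two values accepted by the insecure= option.
valid_insecure_flag() {
    [ "$1" = "true" ] || [ "$1" = "false" ]
}

valid_port "$REG_HOST_PORT" || echo "invalid port: $REG_HOST_PORT" >&2
valid_insecure_flag "$REG_INSECURE" || echo "insecure must be true or false" >&2
echo "registry endpoint: $REG_HOST_FQDN:$REG_HOST_PORT (insecure=$REG_INSECURE)"
```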

7.2.10.1.1 Starting an insecure private registry (without SSL encryption) #

Configure ceph-salt for the insecure registry:

cephuser@adm > ceph-salt config containers/registries_conf enable
cephuser@adm > ceph-salt config containers/registries_conf/registries \
 add prefix=REG_HOST_FQDN insecure=true \
 location=REG_HOST_PORT:5000
cephuser@adm > ceph-salt apply --non-interactive

Start the insecure registry by creating the necessary directory, for example, /var/lib/registry, and starting the registry with the podman command:

# mkdir -p /var/lib/registry
# podman run --privileged -d --name registry \
 -p REG_HOST_PORT:5000 -v /var/lib/registry:/var/lib/registry \
 --restart=always registry:2

Tip: The --net=host parameter allows the Podman service to be reachable from other nodes.

To have the registry start after a reboot, create a systemd unit file for it and enable it:

# podman generate systemd --files --name registry
# mv container-registry.service /etc/systemd/system/
# systemctl enable container-registry.service

Test if the registry is running on port 5000:

> ss -tulpn | grep :5000

Test to see if the registry port (REG_HOST_PORT) is in an open state using the nmap command. A filtered state indicates that the service will not be reachable from other nodes.

> nmap REG_HOST_FQDN

The configuration is complete now. Continue by following Section 7.2.10.1.3, “Populating the secure local registry”.

7.2.10.1.2 Starting a secure private registry (with SSL encryption) #

If an insecure registry was previously configured on the host, you need to remove the insecure registry configuration before proceeding with the secure private registry configuration.

Create the necessary directories:

# mkdir -p /var/lib/registry/{auth,certs}

Generate an SSL certificate:

> openssl req -newkey rsa:4096 -nodes -sha256 \
 -keyout /var/lib/registry/certs/REG_PRIVATE-KEY.KEY -x509 -days 365 \
 -out /var/lib/registry/certs/REG_CERTIFICATE.CRT

Tip: In this example and throughout the instructions, the key and certificate files are named REG_PRIVATE-KEY.KEY and REG_CERTIFICATE.CRT.

Note: Set the CN=[value] value to the fully qualified domain name of the host (REG_HOST_FQDN).

Tip: If a certificate signed by a certificate authority is provided, copy the *.key, *.crt, and intermediate certificates to /var/lib/registry/certs/.

Copy the certificate to all cluster nodes and refresh the certificate cache:

root@master # salt-cp '*' /var/lib/registry/certs/REG_CERTIFICATE.CRT \
 /etc/pki/trust/anchors/
root@master # salt '*' cmd.shell "update-ca-certificates"

Tip: The Podman default path is /etc/containers/certs.d. Refer to the containers-certs.d man page (man 5 containers-certs.d) for more details. If a certificate signed by a certificate authority is provided, also copy the intermediate certificates, if provided.

Copy the certificates to /etc/containers/certs.d:

root@master # salt '*' cmd.shell "mkdir /etc/containers/certs.d"
root@master # salt-cp '*' /var/lib/registry/certs/REG_CERTIFICATE.CRT \
 /etc/containers/certs.d/

Generate a user name and password combination for authentication to the registry:

# htpasswd2 -bBc /var/lib/registry/auth/htpasswd REG_USERNAME REG_PASSWORD

Start the secure registry. Use the REGISTRY_STORAGE_DELETE_ENABLED=true flag so that you can delete images afterward with the skopeo delete command. Replace REG_HOST_PORT with the desired port (5000 in this example) and provide the correct names for REG_PRIVATE-KEY.KEY and REG_CERTIFICATE.CRT. The registry container will be named registry. If you wish to use a different name, change the value of --name registry.

# podman run --name registry --net=host -p REG_HOST_PORT:5000 \
 -v /var/lib/registry:/var/lib/registry \
 -v /var/lib/registry/auth:/auth:z \
 -e "REGISTRY_AUTH=htpasswd" \
 -e "REGISTRY_AUTH_HTPASSWD_REALM=Registry Realm" \
 -e REGISTRY_AUTH_HTPASSWD_PATH=/auth/htpasswd \
 -v /var/lib/registry/certs:/certs:z \
 -e "REGISTRY_HTTP_TLS_CERTIFICATE=/certs/REG_CERTIFICATE.CRT" \
 -e "REGISTRY_HTTP_TLS_KEY=/certs/REG_PRIVATE-KEY.KEY" \
 -e REGISTRY_STORAGE_DELETE_ENABLED=true \
 -e REGISTRY_COMPATIBILITY_SCHEMA1_ENABLED=true -d registry:2

Note: The --net=host parameter was added to allow the Podman service to be reachable from other nodes.

To have the registry start after a reboot, create a systemd unit file for it and enable it:

# podman generate systemd --files --name registry
# mv container-registry.service /etc/systemd/system/
# systemctl enable container-registry.service

Tip: As an alternative, the following commands also start the registry container:

# podman container list --all
# podman start registry

Test if the registry is running on port 5000:

> ss -tulpn | grep :5000

Test to see if the registry port (REG_HOST_PORT) is in an “open” state by using the nmap command. A “filtered” state indicates that the service will not be reachable from other nodes.

> nmap REG_HOST_FQDN

Test secure access to the registry and see the list of repositories:

> curl https://REG_HOST_FQDN:REG_HOST_PORT/v2/_catalog \
 -u REG_USERNAME:REG_PASSWORD

Alternatively, use the -k option, which skips certificate verification:

> curl -k https://REG_HOST_FQDN:5000/v2/_catalog \
 -u REG_USERNAME:REG_PASSWORD

You can verify the certificate by using the following command:

> openssl s_client -connect REG_HOST_FQDN:5000 -servername REG_HOST_FQDN

Modify /etc/environment and include the export GODEBUG=x509ignoreCN=0 option for self-signed certificates. Ensure that the existing configuration in /etc/environment is not overwritten:

root@master # salt '*' cmd.shell 'echo "export GODEBUG=x509ignoreCN=0" >>/etc/environment'

In the current terminal, run the following command:

> export GODEBUG=x509ignoreCN=0

Configure the URL of the local registry with username and password, then apply the configuration:

cephuser@adm > ceph-salt config /containers/registry_auth/registry \
 set REG_HOST_FQDN:5000
cephuser@adm > ceph-salt config /containers/registry_auth/username set REG_USERNAME
cephuser@adm > ceph-salt config /containers/registry_auth/password set REG_PASSWORD

Verify the configuration change, for example:

cephuser@adm > ceph-salt config ls /containers
o- containers .......................................................... [...]
  o- registries_conf ................................................. [enabled]
  | o- registries ...................................................... [empty]
  o- registry_auth ....................................................... [...]
    o- password ................................................... [REG_PASSWORD]
    o- registry .......................................... [host.example.com:5000]
    o- username ................................................... [REG_USERNAME]

If the configuration is correct, run:

cephuser@adm > ceph-salt apply

or

cephuser@adm > ceph-salt apply --non-interactive

Update cephadm credentials:

cephuser@adm > ceph cephadm registry-login REG_HOST_FQDN:5000 \
 REG_USERNAME REG_PASSWORD

If the command fails, double-check the REG_HOST_FQDN, REG_USERNAME, and REG_PASSWORD values to ensure they are correct. If the command fails again, fail the active MGR over to a different MGR daemon:

cephuser@adm > ceph mgr fail

Validate the configuration:

cephuser@adm > ceph config-key dump | grep 'registry_credentials'

If the cluster is using a proxy configuration to reach registry.suse.com, the configuration should be removed. The node running the private registry will still need access to registry.suse.com and may still need the proxy configuration. For example:

wilber:/etc/containers> grep -A1 "env =" containers.conf
env = ["https_proxy=http://some.url.example.com:8080",
 "no_proxy=s3.some.url.example.com"]

The configuration is complete now. Continue by following Section 7.2.10.1.3, “Populating the secure local registry”.
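The certificate request in the "Generate an SSL certificate" step prompts for the subject fields interactively. As a hedged sketch, the same request can be made non-interactive by passing the CN with the -subj option of openssl req. The helper name cert_request_cmd and the host name below are assumptions for illustration, and the command is only printed for review, not executed.

```shell
#!/bin/sh
# Sketch: print a non-interactive variant of the certificate request.
# REG_HOST_FQDN below is a placeholder value; replace it with your registry host.
REG_HOST_FQDN="registry.example.com"
CERT_DIR="/var/lib/registry/certs"

# Print an openssl command whose subject CN is set to the FQDN given in $1.
cert_request_cmd() {
    printf '%s\n' "openssl req -newkey rsa:4096 -nodes -sha256 -x509 -days 365 -subj /CN=$1 -keyout $CERT_DIR/REG_PRIVATE-KEY.KEY -out $CERT_DIR/REG_CERTIFICATE.CRT"
}

cert_request_cmd "$REG_HOST_FQDN"
```

Review the printed command, adjust the file names if needed, and run it on the registry host.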

7.2.10.1.3 Populating the secure local registry #

When the local registry is created, you need to synchronize container images from the official SUSE registry at registry.suse.com to the local one. You can use the skopeo sync command found in the skopeo package for that purpose. For more details, refer to the man page (man 1 skopeo-sync). Consider the following examples:

Example 7.1: Viewing MANIFEST files on SUSE registry #

> skopeo inspect \
 docker://registry.suse.com/ses/7.1/ceph/ceph | jq .RepoTags
> skopeo inspect \
 docker://registry.suse.com/ses/7.1/ceph/grafana | jq .RepoTags
> skopeo inspect \
 docker://registry.suse.com/ses/7.1/ceph/prometheus-server:2.32.1 | \
 jq .RepoTags
> skopeo inspect \
 docker://registry.suse.com/ses/7.1/ceph/prometheus-node-exporter:1.3.0 | \
 jq .RepoTags
> skopeo inspect \
 docker://registry.suse.com/ses/7.1/ceph/prometheus-alertmanager:0.23.0 | \
 jq .RepoTags
> skopeo inspect \
 docker://registry.suse.com/ses/7.1/ceph/haproxy:2.0.14 | jq .RepoTags
> skopeo inspect \
 docker://registry.suse.com/ses/7.1/ceph/keepalived:2.0.19 | jq .RepoTags
> skopeo inspect \
 docker://registry.suse.com/ses/7.1/ceph/prometheus-snmp_notifier:1.2.1 | \
 jq .RepoTags

In the event that SUSE has provided a PTF, you can also inspect the PTF using the path provided by SUSE.

Log in to the secure private registry.

# podman login REG_HOST_FQDN:5000 \
 -u REG_USERNAME -p REG_PASSWORD

Note: An insecure private registry does not require login.

Pull images to the local registry, push images to the private registry.

# podman pull registry.suse.com/ses/7.1/ceph/ceph:latest
# podman tag registry.suse.com/ses/7.1/ceph/ceph:latest \
 REG_HOST_FQDN:5000/ses/7.1/ceph/ceph:latest
# podman push REG_HOST_FQDN:5000/ses/7.1/ceph/ceph:latest
# podman pull registry.suse.com/ses/7.1/ceph/grafana:8.3.10
# podman tag registry.suse.com/ses/7.1/ceph/grafana:8.3.10 \
 REG_HOST_FQDN:5000/ses/7.1/ceph/grafana:8.3.10
# podman push REG_HOST_FQDN:5000/ses/7.1/ceph/grafana:8.3.10
# podman pull registry.suse.com/ses/7.1/ceph/prometheus-server:2.32.1
# podman tag registry.suse.com/ses/7.1/ceph/prometheus-server:2.32.1 \
 REG_HOST_FQDN:5000/ses/7.1/ceph/prometheus-server:2.32.1
# podman push REG_HOST_FQDN:5000/ses/7.1/ceph/prometheus-server:2.32.1
# podman pull registry.suse.com/ses/7.1/ceph/prometheus-alertmanager:0.23.0
# podman tag registry.suse.com/ses/7.1/ceph/prometheus-alertmanager:0.23.0 \
 REG_HOST_FQDN:5000/ses/7.1/ceph/prometheus-alertmanager:0.23.0
# podman push REG_HOST_FQDN:5000/ses/7.1/ceph/prometheus-alertmanager:0.23.0
# podman pull registry.suse.com/ses/7.1/ceph/prometheus-node-exporter:1.3.0
# podman tag registry.suse.com/ses/7.1/ceph/prometheus-node-exporter:1.3.0 \
 REG_HOST_FQDN:5000/ses/7.1/ceph/prometheus-node-exporter:1.3.0
# podman push REG_HOST_FQDN:5000/ses/7.1/ceph/prometheus-node-exporter:1.3.0
# podman pull registry.suse.com/ses/7.1/ceph/haproxy:2.0.14
# podman tag registry.suse.com/ses/7.1/ceph/haproxy:2.0.14 \
 REG_HOST_FQDN:5000/ses/7.1/ceph/haproxy:2.0.14
# podman push REG_HOST_FQDN:5000/ses/7.1/ceph/haproxy:2.0.14
# podman pull registry.suse.com/ses/7.1/ceph/keepalived:2.0.19
# podman tag registry.suse.com/ses/7.1/ceph/keepalived:2.0.19 \
 REG_HOST_FQDN:5000/ses/7.1/ceph/keepalived:2.0.19
# podman push REG_HOST_FQDN:5000/ses/7.1/ceph/keepalived:2.0.19
# podman pull registry.suse.com/ses/7.1/ceph/prometheus-snmp_notifier:1.2.1
# podman tag registry.suse.com/ses/7.1/ceph/prometheus-snmp_notifier:1.2.1 \
 REG_HOST_FQDN:5000/ses/7.1/ceph/prometheus-snmp_notifier:1.2.1
# podman push REG_HOST_FQDN:5000/ses/7.1/ceph/prometheus-snmp_notifier:1.2.1

Log out of the private registry:

# podman logout REG_HOST_FQDN:5000

List images with podman images or podman images | sort.

List repositories:

> curl -sSk https://REG_HOST_FQDN:5000/v2/_catalog \
 -u REG_USERNAME:REG_PASSWORD | jq

Use skopeo to inspect the private registry. Note that you need to log in to make the following commands work:

> skopeo inspect \
 docker://REG_HOST_FQDN:5000/ses/7.1/ceph/ceph | jq .RepoTags
> skopeo inspect \
 docker://REG_HOST_FQDN:5000/ses/7.1/ceph/grafana:8.3.10 | jq .RepoTags
> skopeo inspect \
 docker://REG_HOST_FQDN:5000/ses/7.1/ceph/prometheus-server:2.32.1 | \
 jq .RepoTags
> skopeo inspect \
 docker://REG_HOST_FQDN:5000/ses/7.1/ceph/prometheus-alertmanager:0.23.0 | \
 jq .RepoTags
> skopeo inspect \
 docker://REG_HOST_FQDN:5000/ses/7.1/ceph/prometheus-node-exporter:1.3.0 | \
 jq .RepoTags
> skopeo inspect \
 docker://REG_HOST_FQDN:5000/ses/7.1/ceph/haproxy:2.0.14 | jq .RepoTags
> skopeo inspect \
 docker://REG_HOST_FQDN:5000/ses/7.1/ceph/keepalived:2.0.19 | jq .RepoTags
> skopeo inspect \
 docker://REG_HOST_FQDN:5000/ses/7.1/ceph/prometheus-snmp_notifier:1.2.1 | \
 jq .RepoTags

List repository tags example:

> curl -sSk https://REG_HOST_FQDN:5000/v2/ses/7.1/ceph/ceph/tags/list \
 -u REG_USERNAME:REG_PASSWORD
{"name":"ses/7.1/ceph/ceph","tags":["latest"]}

Test pulling an image from another node.

Log in to the private registry. Note that an insecure private registry does not require login.

# podman login REG_HOST_FQDN:5000 -u REG_USERNAME \
 -p REG_PASSWORD

Pull an image from the private registry. Optionally, you can specify login credentials on the command line. For example:

# podman pull REG_HOST_FQDN:5000/ses/7.1/ceph/ceph:latest
# podman pull --creds=REG_USERNAME:REG_PASSWORD \
 REG_HOST_FQDN:5000/ses/7.1/ceph/ceph:latest
# podman pull --cert-dir=/etc/containers/certs.d \
 REG_HOST_FQDN:5000/ses/7.1/ceph/ceph:latest

Log out of the private registry when done:

# podman logout REG_HOST_FQDN:5000
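The pull, tag, and push commands in this section follow the same pattern for every image, so they can be generated from a list. The following is a minimal sketch, assuming a placeholder registry address (REG stands in for REG_HOST_FQDN:5000) and a hypothetical helper named mirror_cmds. It only prints the commands so that they can be reviewed before anything is run.

```shell
#!/bin/sh
# Sketch: generate the pull/tag/push triple for each image instead of typing it.
# REG is a placeholder for REG_HOST_FQDN:5000; commands are printed, not executed.
SRC="registry.suse.com/ses/7.1/ceph"
REG="registry.example.com:5000"
IMAGES="ceph:latest grafana:8.3.10 prometheus-server:2.32.1 prometheus-alertmanager:0.23.0 prometheus-node-exporter:1.3.0 haproxy:2.0.14 keepalived:2.0.19 prometheus-snmp_notifier:1.2.1"

# Print the three podman commands that mirror image $1 (given as name:tag).
mirror_cmds() {
    printf 'podman pull %s/%s\n' "$SRC" "$1"
    printf 'podman tag %s/%s %s/ses/7.1/ceph/%s\n' "$SRC" "$1" "$REG" "$1"
    printf 'podman push %s/ses/7.1/ceph/%s\n' "$REG" "$1"
}

for image in $IMAGES; do
    mirror_cmds "$image"
done
```

After reviewing the output, it can be saved to a file and executed as root on the registry host while logged in to the private registry.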

7.2.10.1.4 Configuring Ceph to pull images from the private registry #

List configuration settings that need to change:

cephuser@adm > ceph config dump | grep container_image

View current configurations:

cephuser@adm > ceph config get mgr mgr/cephadm/container_image_alertmanager
cephuser@adm > ceph config get mgr mgr/cephadm/container_image_base
cephuser@adm > ceph config get mgr mgr/cephadm/container_image_grafana
cephuser@adm > ceph config get mgr mgr/cephadm/container_image_haproxy
cephuser@adm > ceph config get mgr mgr/cephadm/container_image_keepalived
cephuser@adm > ceph config get mgr mgr/cephadm/container_image_node_exporter
cephuser@adm > ceph config get mgr mgr/cephadm/container_image_prometheus
cephuser@adm > ceph config get mgr mgr/cephadm/container_image_snmp_gateway

Configure the cluster to use the private registry.

Do not set the following options:

ceph config set mgr mgr/cephadm/container_image_base
ceph config set global container_image

Set the following options:

cephuser@adm > ceph config set mgr mgr/cephadm/container_image_alertmanager \
 REG_HOST_FQDN:5000/ses/7.1/ceph/prometheus-alertmanager:0.23.0
cephuser@adm > ceph config set mgr mgr/cephadm/container_image_grafana \
 REG_HOST_FQDN:5000/ses/7.1/ceph/grafana:8.3.10
cephuser@adm > ceph config set mgr mgr/cephadm/container_image_haproxy \
 REG_HOST_FQDN:5000/ses/7.1/ceph/haproxy:2.0.14
cephuser@adm > ceph config set mgr mgr/cephadm/container_image_keepalived \
 REG_HOST_FQDN:5000/ses/7.1/ceph/keepalived:2.0.19
cephuser@adm > ceph config set mgr mgr/cephadm/container_image_node_exporter \
 REG_HOST_FQDN:5000/ses/7.1/ceph/prometheus-node-exporter:1.3.0
cephuser@adm > ceph config set mgr mgr/cephadm/container_image_prometheus \
 REG_HOST_FQDN:5000/ses/7.1/ceph/prometheus-server:2.32.1
cephuser@adm > ceph config set mgr mgr/cephadm/container_image_snmp_gateway \
 REG_HOST_FQDN:5000/ses/7.1/ceph/prometheus-snmp_notifier:1.2.1

Double-check if there are other custom configurations that need to be modified or removed and if the correct configuration was applied:

cephuser@adm > ceph config dump | grep container_image

All the daemons need to be redeployed to ensure the correct images are being used:

cephuser@adm > ceph orch upgrade start \
 --image REG_HOST_FQDN:5000/ses/7.1/ceph/ceph:latest

Tip: Monitor the redeployment by running the following command:

cephuser@adm > ceph -W cephadm

After the upgrade is complete, validate that the daemons have been updated. Note the VERSION and IMAGE ID values in the right columns of the following table:

cephuser@adm > ceph orch ps

Tip: For more details, run the following command:

cephuser@adm > ceph orch ps --format=yaml | \
 egrep "daemon_name|container_image_name"

If needed, redeploy services or daemons:

cephuser@adm > ceph orch redeploy SERVICE_NAME
cephuser@adm > ceph orch daemon rm DAEMON_NAME
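The ceph config set commands in this section pair each mgr/cephadm/container_image_* option with one image on the private registry. As a hedged sketch, the pairs can be kept in a small table and the commands generated from it. REG is a placeholder for REG_HOST_FQDN:5000 and config_cmds is a hypothetical helper. The output is printed for review, not executed.

```shell
#!/bin/sh
# Sketch: print the "ceph config set" command for each monitoring-stack image.
# REG is a placeholder for REG_HOST_FQDN:5000; nothing is executed.
REG="registry.example.com:5000"

# One "option-suffix=image:tag" pair per line, matching the commands in this section.
IMAGE_MAP="alertmanager=prometheus-alertmanager:0.23.0
grafana=grafana:8.3.10
haproxy=haproxy:2.0.14
keepalived=keepalived:2.0.19
node_exporter=prometheus-node-exporter:1.3.0
prometheus=prometheus-server:2.32.1
snmp_gateway=prometheus-snmp_notifier:1.2.1"

# Print one command per pair; container_image_base is deliberately absent.
config_cmds() {
    printf '%s\n' "$IMAGE_MAP" | while IFS='=' read -r option image; do
        printf 'ceph config set mgr mgr/cephadm/container_image_%s %s/ses/7.1/ceph/%s\n' \
            "$option" "$REG" "$image"
    done
}

config_cmds
```

Keeping the table in one place makes it easier to update image tags later without retyping each command.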

7.2.10.2 Configuring the path to container images #

This section helps you configure the path to container images of the bootstrap cluster (deployment of the first Ceph Monitor and Ceph Manager pair). The path does not apply to container images of additional services, for example the monitoring stack.

If you need to use a proxy to communicate with the container registry server, perform the following configuration steps on all cluster nodes:

Copy the configuration file for containers:

> sudo cp /usr/share/containers/containers.conf \
  /etc/containers/containers.conf

Edit the newly copied file and add the http_proxy setting to its [engine] section, for example:

> cat /etc/containers/containers.conf
[engine]
env = ["http_proxy=http://proxy.example.com:PORT"]
[...]

If the container needs to communicate over HTTP(S) to a specific host or list of hosts bypassing the proxy server, add an exception using the no_proxy option:

> cat /etc/containers/containers.conf
[engine]
env = ["http_proxy=http://proxy.example.com:PORT", "no_proxy=rgw.example.com"]
[...]

cephadm needs to know a valid URI path to container images. Verify the default setting by executing:

root@master # ceph-salt config /cephadm_bootstrap/ceph_image_path ls

If you do not need an alternative or local registry, specify the default SUSE container registry:

root@master # ceph-salt config /cephadm_bootstrap/ceph_image_path set \
  registry.suse.com/ses/7.1/ceph/ceph

If your deployment requires a specific path, for example, a path to a local registry, configure it as follows:

root@master # ceph-salt config /cephadm_bootstrap/ceph_image_path set LOCAL_REGISTRY_PATH

7.2.11 Enabling data in-flight encryption (msgr2) #

The Messenger v2 protocol (MSGR2) is Ceph's on-wire protocol. It provides a security mode that encrypts all data passing over the network, encapsulation of authentication payloads, and the enabling of future integration of new authentication modes (such as Kerberos).

msgr2 is not currently supported by Linux kernel Ceph clients, such as CephFS and RADOS Block Device.

Ceph daemons can bind to multiple ports, allowing both legacy Ceph clients and new v2-capable clients to connect to the same cluster. By default, MONs now bind to the new IANA-assigned port 3300 (CE4h or 0xCE4) for the new v2 protocol, while also binding to the old default port 6789 for the legacy v1 protocol.

The v2 protocol (MSGR2) supports two connection modes:

- crc mode

A strong initial authentication when the connection is established and a CRC32C integrity check.

- secure mode

A strong initial authentication when the connection is established and full encryption of all post-authentication traffic, including a cryptographic integrity check.

For most connections, there are options that control which modes are used:

- ms_cluster_mode

The connection mode (or permitted modes) used for intra-cluster communication between Ceph daemons. If multiple modes are listed, the modes listed first are preferred.

- ms_service_mode

A list of permitted modes for clients to use when connecting to the cluster.

- ms_client_mode

A list of connection modes, in order of preference, for clients to use (or allow) when talking to a Ceph cluster.

There is a parallel set of options that apply specifically to monitors, allowing administrators to set different (usually more secure) requirements on communication with the monitors.

- ms_mon_cluster_mode

The connection mode (or permitted modes) to use between monitors.

- ms_mon_service_mode

A list of permitted modes for clients or other Ceph daemons to use when connecting to monitors.

- ms_mon_client_mode

A list of connection modes, in order of preference, for clients or non-monitor daemons to use when connecting to monitors.

In order to enable MSGR2 encryption mode during the deployment, you need to add some configuration options to the ceph-salt configuration before running ceph-salt apply.

To use secure mode, run the following commands.

Add the global section to ceph_conf in the ceph-salt configuration tool:

root@master # ceph-salt config /cephadm_bootstrap/ceph_conf add global

Set the following options:

root@master # ceph-salt config /cephadm_bootstrap/ceph_conf/global set ms_cluster_mode "secure crc"
root@master # ceph-salt config /cephadm_bootstrap/ceph_conf/global set ms_service_mode "secure crc"
root@master # ceph-salt config /cephadm_bootstrap/ceph_conf/global set ms_client_mode "secure crc"

Ensure secure precedes crc.

To force secure mode, run the following commands:

root@master # ceph-salt config /cephadm_bootstrap/ceph_conf/global set ms_cluster_mode secure
root@master # ceph-salt config /cephadm_bootstrap/ceph_conf/global set ms_service_mode secure
root@master # ceph-salt config /cephadm_bootstrap/ceph_conf/global set ms_client_mode secure
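Because the order of the listed modes expresses preference, a typo or a swapped order is easy to miss. The following is a small sketch of a pre-check, assuming that crc and secure are the only mode names (the two connection modes listed above) and that they are separated by a single space. The function name check_ms_modes is hypothetical and is not part of Ceph or ceph-salt.

```shell
# Classify a msgr2 mode list such as "secure crc".
# check_ms_modes is a hypothetical helper, not a Ceph or ceph-salt command.
# It prints a description and returns 0 for a recognized value,
# or prints an error to stderr and returns 1 otherwise.
check_ms_modes() {
  case "$1" in
    "secure")     echo "secure only" ;;
    "secure crc") echo "secure preferred, crc allowed" ;;
    "crc secure") echo "crc preferred, secure allowed" ;;
    "crc")        echo "crc only" ;;
    *)            echo "invalid mode list: $1" >&2; return 1 ;;
  esac
}

check_ms_modes "secure crc"
check_ms_modes "secure"
```

In a script, the check can guard the actual setting, for example by chaining check_ms_modes "secure crc" with && in front of the corresponding ceph-salt config command, so that the value is only applied if it is well formed.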

To change any of the above settings, update them in the monitor configuration store using the ceph config set command:

root@master # ceph config set global CONNECTION_OPTION CONNECTION_MODE [--force]

For example:

root@master # ceph config set global ms_cluster_mode "secure crc"

If you want to check the current value, including the default value, run the following command:

root@master # ceph config get CEPH_COMPONENT CONNECTION_OPTION

For example, to get the ms_cluster_mode for OSDs, run:

root@master # ceph config get osd ms_cluster_mode

7.2.12 Configuring the cluster network #

Optionally, if you are running a separate cluster network, you may need to set the cluster network IP address followed by the subnet mask part after the slash sign, for example 192.168.10.22/24.

Run the following commands to enable cluster_network:

root@master # ceph-salt config /cephadm_bootstrap/ceph_conf add global
root@master # ceph-salt config /cephadm_bootstrap/ceph_conf/global set cluster_network NETWORK_ADDR
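The example value 192.168.10.22/24 is a host address with a prefix length. If you prefer to pass the network itself as NETWORK_ADDR, the network address can be derived by masking off the host bits. The following sketch shows that arithmetic for IPv4 only. The function name cidr_network is hypothetical, and whether your deployment needs the normalized form is an assumption to verify in your environment.

```shell
# Print the IPv4 network address for a value in ADDRESS/PREFIX form.
# cidr_network is a hypothetical helper. The body runs in a subshell,
# so the IFS change and the variables do not leak into the caller.
# Octets must be plain decimal numbers without leading zeros.
cidr_network() (
  ip=${1%/*}       # part before the slash
  prefix=${1#*/}   # part after the slash
  IFS=.
  set -- $ip       # split the address into its four octets
  addr=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  net=$(( addr & mask ))
  printf '%d.%d.%d.%d/%d\n' \
    $(( (net >> 24) & 255 )) $(( (net >> 16) & 255 )) \
    $(( (net >> 8) & 255 )) $(( net & 255 )) "$prefix"
)

cidr_network 192.168.10.22/24   # prints 192.168.10.0/24
```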

7.2.13 Verifying the cluster configuration #

The minimal cluster configuration is finished. Inspect it for obvious errors:

root@master # ceph-salt config ls

o- / ............................................................... [...]
  o- ceph_cluster .................................................. [...]
  | o- minions .............................................. [Minions: 5]
  | | o- ses-main.example.com .................................... [admin]
  | | o- ses-node1.example.com ........................ [bootstrap, admin]
  | | o- ses-node2.example.com ................................ [no roles]
  | | o- ses-node3.example.com ................................ [no roles]
  | | o- ses-node4.example.com ................................ [no roles]
  | o- roles ....................................................... [...]
  |   o- admin .............................................. [Minions: 2]
  |   | o- ses-main.example.com ......................... [no other roles]
  |   | o- ses-node1.example.com ................ [other roles: bootstrap]
  |   o- bootstrap ............................... [ses-node1.example.com]
  |   o- cephadm ............................................ [Minions: 5]
  |   o- tuned ..................................................... [...]
  |     o- latency .......................................... [no minions]
  |     o- throughput ....................................... [no minions]
  o- cephadm_bootstrap ............................................. [...]
  | o- advanced .................................................... [...]
  | o- ceph_conf ................................................... [...]
  | o- ceph_image_path ............. [registry.suse.com/ses/7.1/ceph/ceph]
  | o- dashboard ................................................... [...]
  |   o- force_password_update ................................. [enabled]
  |   o- password ................................... [randomly generated]
  |   o- username ................................................ [admin]
  | o- mon_ip ............................................ [192.168.10.20]
  o- containers .................................................... [...]
  | o- registries_conf ......................................... [enabled]
  | | o- registries .............................................. [empty]
  | o- registry_auth ............................................... [...]
  |   o- password .............................................. [not set]
  |   o- registry .............................................. [not set]
  |   o- username .............................................. [not set]
  o- ssh .................................................. [Key Pair set]
  | o- private_key ..... [53:b1:eb:65:d2:3a:ff:51:6c:e2:1b:ca:84:8e:0e:83]
  | o- public_key ...... [53:b1:eb:65:d2:3a:ff:51:6c:e2:1b:ca:84:8e:0e:83]
  o- time_server ............................................... [enabled]
    o- external_servers .............................................. [1]
    | o- 0.pt.pool.ntp.org ......................................... [...]
    o- servers ....................................................... [1]
    | o- ses-main.example.com ...................................... [...]
    o- subnet ............................................. [10.20.6.0/24]

You can check if the configuration of the cluster is valid by running the following command:

root@master # ceph-salt status
cluster: 5 minions, 0 hosts managed by cephadm
config: OK

7.2.14 Exporting cluster configurations #

After you have configured the basic cluster and its configuration is valid, it is a good idea to export its configuration to a file:

root@master # ceph-salt export > cluster.json

The output of ceph-salt export includes the SSH private key. If you are concerned about the security implications, do not execute this command without taking appropriate precautions.
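One possible precaution is to create the export file with permissions that only its owner can read, and to export only when the status check reports a valid configuration. The following is a minimal sketch. The helper names config_ok and save_private are hypothetical, and the pattern matched by config_ok is an assumption based on the sample ceph-salt status output in this chapter, not a documented interface.

```shell
# Succeed only if the text on stdin contains a "config: OK" line.
# Assumption: the status output looks like the sample in this chapter.
config_ok() {
  grep -Eq '^[[:space:]]*config:[[:space:]]*OK[[:space:]]*$'
}

# Run a command and write its output to a newly created file that only
# the owner can read or write (mode 600). The body runs in a subshell,
# so the umask change does not affect the calling shell. An already
# existing file keeps its current permissions.
save_private() (
  outfile=$1
  shift
  umask 077
  "$@" > "$outfile"
)

# Intended use on the Salt Master:
#   ceph-salt status | config_ok && save_private cluster.json ceph-salt export
```

Store the resulting file in a location that is not readable by other users and is excluded from unencrypted backups.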

In case you break the cluster configuration and need to revert to a backup state, run:

root@master # ceph-salt import cluster.json

7.3 Updating nodes and bootstrap minimal cluster #

Before you deploy the cluster, update all software packages on all nodes:

root@master # ceph-salt update

If a node reports Reboot is needed during the update, important OS packages, such as the kernel, were updated to a newer version and you need to reboot the node to apply the changes.

To reboot all nodes that require rebooting, either append the --reboot option:

root@master # ceph-salt update --reboot

Or reboot them in a separate step:

root@master # ceph-salt reboot

The Salt Master is never rebooted by the ceph-salt update --reboot or ceph-salt reboot commands. If the Salt Master needs rebooting, you need to reboot it manually.

After the nodes are updated, bootstrap the minimal cluster:

root@master # ceph-salt apply

When bootstrapping is complete, the cluster will have one Ceph Monitor and one Ceph Manager.

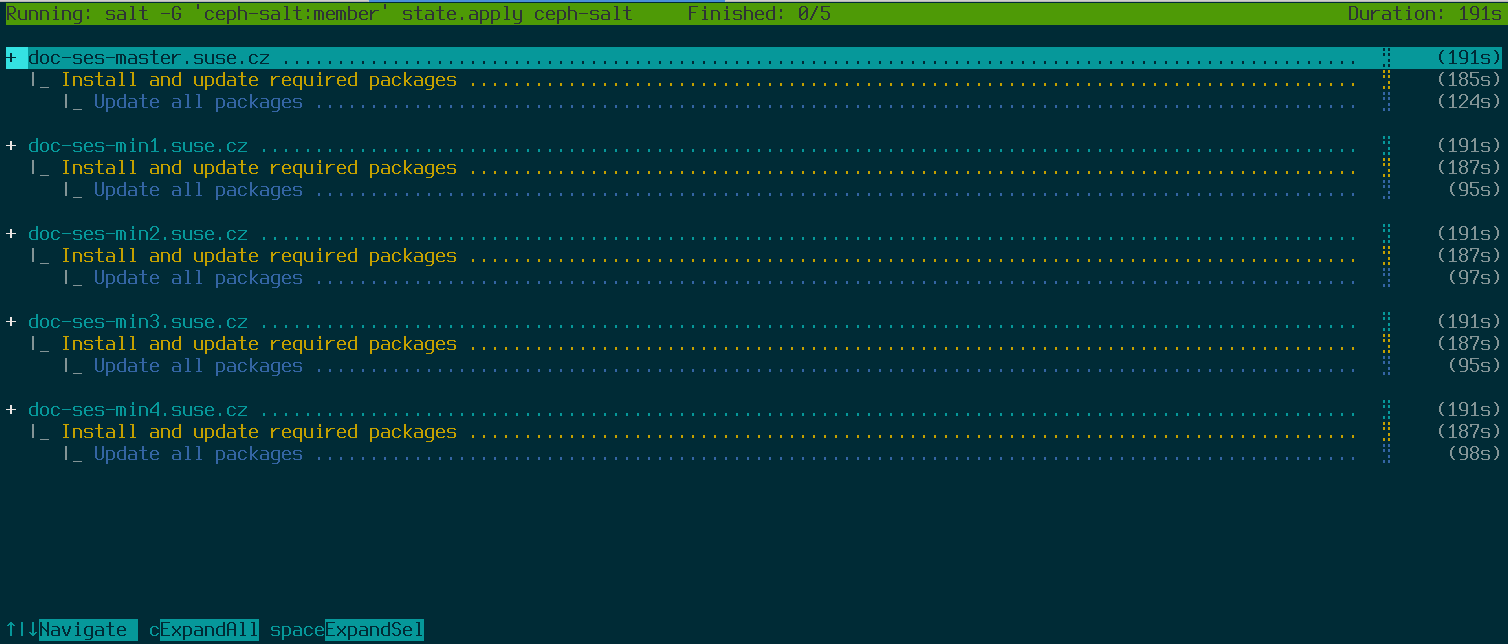

The above command will open an interactive user interface that shows the current progress of each minion.

If you need to apply the configuration from a script, there is also a non-interactive mode of deployment. This is also useful when deploying the cluster from a remote machine because constant updating of the progress information on the screen over the network may become distracting:

root@master # ceph-salt apply --non-interactive

7.4 Reviewing final steps #

After the ceph-salt apply command has completed, you should have one Ceph Monitor and one Ceph Manager. You should be able to run the ceph status command successfully on any of the minions that were given the admin role as root or the cephadm user using sudo.

The next steps involve using cephadm to deploy additional Ceph Monitors, Ceph Managers, OSDs, the monitoring stack, and gateways.

Before you continue, review your new cluster's network settings. At this point, the public_network setting has been populated based on what was entered for /cephadm_bootstrap/mon_ip in the ceph-salt configuration. However, this setting was only applied to Ceph Monitor. You can review this setting with the following command:

root@master # ceph config get mon public_network

This is the minimum that Ceph requires to work, but we recommend making this public_network setting global, which means it will apply to all types of Ceph daemons, and not only to MONs:

root@master # ceph config set global public_network "$(ceph config get mon public_network)"

This step is not required. However, if you do not use this setting, the Ceph OSDs and other daemons (except Ceph Monitor) will listen on all addresses.

If you want your OSDs to communicate amongst themselves using a completely separate network, run the following command:

root@master # ceph config set global cluster_network "cluster_network_in_cidr_notation"

Executing this command will ensure that the OSDs created in your deployment will use the intended cluster network from the start.

If your cluster is set to have dense nodes (more than 62 OSDs per host), make sure to assign sufficient ports for Ceph OSDs. The default range (6800-7300) currently allows for no more than 62 OSDs per host. For a cluster with dense nodes, adjust the setting ms_bind_port_max to a suitable value. Each OSD will consume eight additional ports. For example, a host that is set to run 96 OSDs needs 768 ports (96 × 8), so ms_bind_port_max should be set to at least 7568 (6800 + 768) by running the following command:

root@master # ceph config set osd.* ms_bind_port_max 7568

You will need to adjust your firewall settings accordingly for this to work. See Section 13.7, “Firewall settings for Ceph” for more information.
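The port arithmetic for dense nodes generalizes to any OSD count. The following sketch assumes, as stated in this section, eight ports per OSD and a range that starts at 6800. The variable and function names are hypothetical.

```shell
# Ports per OSD and start of the default range, as stated in this section.
PORTS_PER_OSD=8
PORT_MIN=6800

# Smallest ms_bind_port_max that provides eight ports for each OSD.
osd_port_max() {
  echo $(( PORT_MIN + PORTS_PER_OSD * $1 ))
}

# Number of OSDs that fit below a given ms_bind_port_max
# (integer division, so partial OSDs are dropped).
osds_per_host() {
  echo $(( ($1 - PORT_MIN) / PORTS_PER_OSD ))
}

osd_port_max 96      # prints 7568
osds_per_host 7300   # prints 62
```

Both results match the figures given in this section: 96 OSDs require a maximum port of at least 7568, and the default upper bound of 7300 leaves room for 62 OSDs.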

7.5 Disable insecure clients #

Since Octopus v15.2.11, a health warning informs you that insecure clients are allowed to join the cluster. This warning is on by default. The Ceph Dashboard shows the cluster in the HEALTH_WARN status, and verifying the cluster status on the command line informs you as follows:

cephuser@adm > ceph status
  cluster:
    id:     3fe8b35a-689f-4970-819d-0e6b11f6707c
    health: HEALTH_WARN
            mons are allowing insecure global_id reclaim
[...]

This warning means that the Ceph Monitors are still allowing old, unpatched clients to connect to the cluster. This ensures existing clients can still connect while the cluster is being upgraded, but warns you that there is a problem that needs to be addressed. When the cluster and all clients are upgraded to the latest version of Ceph, disallow unpatched clients by running the following command:

cephuser@adm > ceph config set mon auth_allow_insecure_global_id_reclaim false