Administration Guide #

This guide describes the administration of SUSE Linux Enterprise Micro.

1 Snapshots #

As snapshots are crucial for the correct functioning of SLE Micro, do not disable the feature, and ensure that the root partition is big enough to store the snapshots.

When a snapshot is created, both the snapshot and the original point to the same blocks in the file system. So, initially a snapshot does not occupy additional disk space. If data in the original file system is modified, changed data blocks are copied while the old data blocks are kept for the snapshot.

Snapshots always reside on the same partition or subvolume on which the snapshot has been taken. It is not possible to store snapshots on a different partition or subvolume. As a result, partitions containing snapshots need to be larger than partitions which do not contain snapshots. The exact amount depends strongly on the number of snapshots you keep and the amount of data modifications. As a rule of thumb, give partitions twice as much space as you normally would. To prevent disks from running out of space, old snapshots are automatically cleaned up.

Snapshots that are known to be working properly are marked as important.

1.1 Directories excluded from snapshots #

As some directories store user-specific or volatile data, these directories are excluded from snapshots:

- /home

Contains users' data. Excluded so that the data will not be included in snapshots and thus potentially overwritten by a rollback operation.

- /root

Contains root's data. Excluded so that the data will not be included in snapshots and thus potentially overwritten by a rollback operation.

- /opt

Third-party products usually get installed to /opt. Excluded so that these applications are not uninstalled during rollbacks.

- /srv

Contains data for Web and FTP servers. Excluded in order to avoid data loss on rollbacks.

- /usr/local

This directory is used when manually installing software. It is excluded to avoid uninstalling these installations on rollbacks.

- /var

This directory contains many variable files, including logs, temporary caches, third-party products in /var/opt, and is the default location for virtual machine images and databases. Therefore, a separate subvolume is created with Copy-On-Write disabled, so as to exclude all of this variable data from snapshots.

- /tmp

The directory contains temporary data.

- the architecture-specific /boot/grub2 directory

Rollback of the boot loader binaries is not supported.

1.2 Showing exclusive disk space used by snapshots #

Snapshots share data, for efficient use of storage space, so using ordinary commands like du and df won't measure used disk space accurately. When you want to free up disk space on Btrfs with quotas enabled, you need to know how much exclusive disk space is used by each snapshot, rather than shared space. The btrfs command provides a view of space used by snapshots:

# btrfs qgroup show -p /
qgroupid         rfer         excl parent
--------         ----         ---- ------
0/5          16.00KiB     16.00KiB ---
[...]
0/272         3.09GiB     14.23MiB 1/0
0/273         3.11GiB    144.00KiB 1/0
0/274         3.11GiB    112.00KiB 1/0
0/275         3.11GiB    128.00KiB 1/0
0/276         3.11GiB     80.00KiB 1/0
0/277         3.11GiB    256.00KiB 1/0
0/278         3.11GiB    112.00KiB 1/0
0/279         3.12GiB     64.00KiB 1/0
0/280         3.12GiB     16.00KiB 1/0
1/0           3.33GiB    222.95MiB ---

The qgroupid column displays the identification number for each subvolume, assigning a qgroup level/ID combination.

The rfer column displays the total amount of data referred to in the subvolume.

The excl column displays the exclusive data in each subvolume.

The parent column shows the parent qgroup of the subvolumes.
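As a rough illustration of how the excl column can guide cleanup, the following sketch ranks the level-0 qgroups by exclusive space. The function name rank_by_exclusive is made up for this example, and the sketch assumes the four-column layout and the KiB/MiB/GiB suffixes seen in the listing above.

```shell
#!/bin/sh
# Sketch only: rank_by_exclusive is a hypothetical helper, not part of btrfs-progs.
# It reads `btrfs qgroup show -p /` output on stdin and prints
# "<exclusive KiB> <qgroupid>" for every level-0 qgroup that has a parent,
# largest exclusive usage first.
rank_by_exclusive() {
  awk '
    # Convert a size such as "14.23MiB" to KiB (assumes binary unit suffixes).
    function kib(s,    n, u) {
      n = s + 0                 # leading numeric part
      u = s
      sub(/^[0-9.]+/, "", u)    # remaining unit suffix
      if (u == "KiB") return n
      if (u == "MiB") return n * 1024
      if (u == "GiB") return n * 1024 * 1024
      if (u == "TiB") return n * 1024 * 1024 * 1024
      return n / 1024           # plain bytes
    }
    # Keep rows such as "0/272 ... 1/0"; skip headers, totals and parentless rows.
    $1 ~ /^0\/[0-9]+$/ && $4 != "---" { printf "%.2f %s\n", kib($3), $1 }
  ' | sort -rn
}
```

On a running system the input would typically come from a pipe, for example `btrfs qgroup show -p / | rank_by_exclusive`.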

The final item, 1/0, shows the totals for the parent qgroup. In the above example, 222.95 MiB will be freed if all subvolumes are removed. Run the following command to see which snapshots are associated with each subvolume:

# btrfs subvolume list -st /

2 Administration using transactional updates #

SLE Micro was designed to use a read-only root file system. This means that after the deployment is complete, you cannot modify the root file system directly, for example by using zypper. Instead, SLE Micro introduces the concept of transactional updates, which enable you to modify your system and keep it up to date.

The key features of transactional updates are the following:

They are atomic - the update is applied only if it completes successfully.

Changes are applied in a separate snapshot and so do not influence the running system.

Changes can easily be rolled back.

Each time you call the transactional-update command to change your system (to install a package, perform an update, or apply a patch), the following actions take place:

A new read-write snapshot is created from your current root file system, or from a snapshot that you specified.

All changes are applied (updates, patches or package installation).

The snapshot is switched back to read-only mode.

The new root file system snapshot is prepared, so that it will be active after you reboot.

After rebooting, the new root file system is set as the default snapshot.

Note: Bear in mind that without rebooting your system, the changes will not be applied.

In case you do not reboot your machine before performing further changes,

the transactional-update command will create a new

snapshot from the current root file system. This means that you will end up

with several parallel snapshots, each including that particular change but

not changes from the other invocations of the command. After reboot, the

most recently created snapshot will be used as your new root file system,

and it will not include changes done in the previous snapshots.

2.1 transactional-update usage #

The transactional-update command enables atomic installation or removal of updates; updates are applied only if all of them can be successfully installed. transactional-update creates a snapshot of your system and uses it to update the system. Later you can restore this snapshot. All changes become active only after reboot.

The transactional-update command syntax is as follows:

transactional-update [option] [general_command] [package_command] standalone_command

Note: transactional-update without arguments

If you do not specify any command or option while running the transactional-update command, the system updates itself.

Possible command parameters are described further.

transactional-update options #

- --interactive, -i

Can be used along with a package command to turn on interactive mode.

- --non-interactive, -n

Can be used along with a package command to turn on non-interactive mode.

- --continue [number], -c

The --continue option is for making multiple changes to an existing snapshot without rebooting.

The default transactional-update behavior is to create a new snapshot from the current root file system. If you forget something, such as installing a new package, you have to reboot to apply your previous changes, run transactional-update again to install the forgotten package, and reboot again. You cannot run the transactional-update command multiple times without rebooting to add more changes to the snapshot, because this will create separate independent snapshots that do not include changes from the previous snapshots.

Use the --continue option to make as many changes as you want without rebooting. A separate snapshot is made each time, and each snapshot contains all the changes you made in the previous snapshots, plus your new changes. Repeat this process as many times as you want, and when the final snapshot includes everything you want, reboot the system, and your final snapshot becomes the new root file system.

Another useful feature of the --continue option is that you may select any existing snapshot as the base for your new snapshot. The following example demonstrates running transactional-update to install a new package in a snapshot based on snapshot 13, and then running it again to install another package:

# transactional-update pkg install package_1

# transactional-update --continue 13 pkg install package_2

- --no-selfupdate

Disables self updating of transactional-update.

- --drop-if-no-change, -d

Discards the snapshot created by transactional-update if there were no changes to the root file system. If there are some changes to the /etc directory, those changes are merged back to the current file system.

- --quiet

The transactional-update command will not output to stdout.

- --help, -h

Prints help for the transactional-update command.

- --version

Displays the version of the transactional-update command.
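To make the --continue behavior described above more concrete, here is a minimal sketch that chains several package installations into consecutive snapshots. The names install_chained, run_or_print and DRY_RUN are invented for this example; by default the sketch only prints the commands it would run.

```shell
#!/bin/sh
# Sketch only: install_chained, run_or_print and DRY_RUN are hypothetical names.
# With DRY_RUN=1 (the default here) the commands are printed, not executed.
run_or_print() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Install each package in its own transactional-update call. The first call
# starts from the current root file system; every later call adds --continue
# so that its snapshot also contains the changes of the earlier calls.
install_chained() {
  first=1
  for pkg in "$@"; do
    if [ "$first" = "1" ]; then
      run_or_print transactional-update pkg install "$pkg"
      first=0
    else
      run_or_print transactional-update --continue pkg install "$pkg"
    fi
  done
}
```

Calling `install_chained package_1 package_2` prints one command per package. A single reboot after the last call would then be enough to activate all changes.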

The general commands are the following:

- cleanup-snapshots

The command marks all unused snapshots for removal.

- cleanup-overlays

The command removes all unused overlay layers of /etc.

- cleanup

The command combines the cleanup-snapshots and cleanup-overlays commands. For more details refer to Section 2.2, “Snapshots cleanup”.

- grub.cfg

Use this command to rebuild the GRUB boot loader configuration file.

- bootloader

The command reinstalls the boot loader.

- initrd

Use the command to rebuild initrd.

- kdump

In case you perform changes to your hardware or storage, you may need to rebuild the kdump initrd.

- shell

Opens a read-write shell in the new snapshot before exiting. The command is typically used for debugging purposes.

- reboot

The system reboots after the transactional-update is complete.

- run <command>

Runs the provided command in a new snapshot.

- setup-selinux

Installs and enables targeted SELinux policy.

The package commands are the following:

The installation of packages from repositories other than the official ones (for example, the SUSE Linux Enterprise Server repositories) is not supported and not recommended. To use the tools available for SUSE Linux Enterprise Server, run the toolbox container and install the tools inside the container. For details about the toolbox container, refer to Section 5, “toolbox for SLE Micro debugging”.

- dup

Performs upgrade of your system. The default option for this command is --non-interactive.

- migration

The command migrates your system to a selected target. Typically it is used to upgrade your system if it has been registered via SUSE Customer Center.

- patch

Checks for available patches and installs them. The default option for this command is --non-interactive.

- pkg install

Installs individual packages from the available channels using the zypper install command. This command can also be used to install Program Temporary Fix (PTF) RPM files. The default option for this command is --interactive.

# transactional-update pkg install package_name

or

# transactional-update pkg install rpm1 rpm2

- pkg remove

Removes individual packages from the active snapshot using the zypper remove command. This command can also be used to remove PTF RPM files. The default option for this command is --interactive.

# transactional-update pkg remove package_name

- pkg update

Updates individual packages from the active snapshot using the zypper update command. Only packages that are part of the snapshot of the base file system can be updated. The default option for this command is --interactive.

# transactional-update pkg update package_name

- register

The register command enables you to register/deregister your system. For a complete usage description, refer to Section 2.1.1, “The register command”.

- up

Updates installed packages to newer versions. The default option for this command is --non-interactive.

The standalone commands are the following:

- rollback <snapshot number>

This sets the default subvolume. The current system is set as the new default root file system. If you specify a number, that snapshot is used as the default root file system. On a read-only file system, it does not create any additional snapshots.

# transactional-update rollback snapshot_number

- rollback last

This command sets the last known to be working snapshot as the default.

- status

This prints a list of available snapshots. The currently booted one is marked with an asterisk, the default snapshot is marked with a plus sign.

2.1.1 The register command #

The register command enables you to handle all tasks

regarding registration and subscription management. You can supply the

following options:

- --list-extensions

With this option, the command will list available extensions for your system. You can use the output to find a product identifier for product activation.

- -p, --product

Use this option to specify a product for activation. The product identifier has the following format: <name>/<version>/<architecture>, for example sle-module-live-patching/15.3/x86_64. The appropriate command will then be the following:

# transactional-update register -p sle-module-live-patching/15.3/x86_64

- -r, --regcode

Register your system with the provided registration code. The command will register the subscription and enable software repositories.

- -d, --de-register

The option deregisters the system, or when used along with the -p option, deregisters an extension.

- -e, --email

Specify an email address that will be used in SUSE Customer Center for registration.

- --url

Specify the URL of your registration server. The URL is stored in the configuration and will be used in subsequent command invocations. For example:

# transactional-update register --url https://scc.suse.com

- -s, --status

Displays the current registration status in JSON format.

- --write-config

Writes the provided options value to the /etc/SUSEConnect configuration file.

- --cleanup

Removes old system credentials.

- --version

Prints the version.

- --help

Displays usage of the command.
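The product identifier format used by the -p option can be assembled from its three parts. The following sketch shows one way to do that; product_id and register_cmd are hypothetical helper names, and the second function only prints the resulting command instead of running it.

```shell
#!/bin/sh
# Sketch only: product_id and register_cmd are hypothetical helpers.
# product_id NAME VERSION ARCHITECTURE  ->  NAME/VERSION/ARCHITECTURE
product_id() {
  printf '%s/%s/%s\n' "$1" "$2" "$3"
}

# Print (not run) the activation command for the given product.
register_cmd() {
  printf 'transactional-update register -p %s\n' "$(product_id "$1" "$2" "$3")"
}
```

For example, `register_cmd sle-module-live-patching 15.3 x86_64` prints the activation command used as the example for the -p option. The name and version would normally be taken from the --list-extensions output.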

2.2 Snapshots cleanup #

If you run the command transactional-update cleanup, all

old snapshots without a cleanup algorithm will have one set. All important

snapshots are also marked. The command also removes all unreferenced (and

thus unused) /etc overlay directories in

/var/lib/overlay.

The snapshots with the set number cleanup algorithm will

be deleted according to the rules configured in

/etc/snapper/configs/root by the following parameters:

- NUMBER_MIN_AGE

Defines the minimum age of a snapshot (in seconds) that can be automatically removed.

- NUMBER_LIMIT/NUMBER_LIMIT_IMPORTANT

Defines the maximum count of stored snapshots. The cleaning algorithms delete snapshots above the specified maximum value, without taking the snapshot and file system space into account. The algorithms also delete snapshots above the minimum value until the limits for the snapshot and file system are reached.
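To check which values are currently in effect, the parameters can be read from the configuration file. The sketch below assumes that the file stores them as NAME="value" lines; show_number_cleanup is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch only: show_number_cleanup is a hypothetical helper.
# Prints the number-cleanup parameters from a snapper configuration file
# (default: /etc/snapper/configs/root) as NAME=value lines without quotes.
# Assumes the file stores settings as NAME="value" lines.
show_number_cleanup() {
  cfg="${1:-/etc/snapper/configs/root}"
  grep -E '^(NUMBER_MIN_AGE|NUMBER_LIMIT|NUMBER_LIMIT_IMPORTANT)=' "$cfg" | tr -d '"'
}
```

Running `show_number_cleanup` without arguments reads the root configuration. Passing a path allows checking a copy of the file instead.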

The snapshot cleanup is also performed regularly by systemd.

2.3 System rollback #

GRUB 2 enables booting from Btrfs snapshots and thus allows you to use any older functional snapshot if the new snapshot does not work correctly.

When booting a snapshot, the parts of the file system included in the snapshot are mounted read-only; all other file systems and parts that are excluded from snapshots are mounted read-write and can be modified.

An initial bootable snapshot is created at the end of the initial system

installation. You can go back to that state at any time by booting this

snapshot. The snapshot can be identified by the description after

installation.

There are two ways to perform a system rollback:

From a running system, you can set the default snapshot. For details, refer to Procedure 2, “Rollback from a running system”.

Especially in cases where the current snapshot is broken, you can boot into another snapshot and then set it as the default. For details, refer to Procedure 3, “Rollback to a working snapshot”.

In case your current snapshot is functional, you can use the following procedure for system rollback.

Choose the snapshot that should be set as default. To get a list of available snapshots, run:

# transactional-update status

Note the number of the snapshot to be set as default.

Set the snapshot as the default by running:

# transactional-update rollback snapshot_number

If you omit the snapshot number, the current snapshot will be set as default.

Reboot your system to boot into the new default snapshot.

The following procedure is used in case the current snapshot is broken and you are not able to boot into it.

Reboot your system and select Start bootloader from a read-only snapshot.

Choose a snapshot to boot. The snapshots are sorted according to the date of creation, with the latest one at the top.

Log in to your system and check whether everything works as expected. Data written to directories excluded from the snapshots will stay untouched.

If the snapshot you booted into is not suitable for rollback, reboot your system and choose another one.

If the snapshot works as expected, you can perform rollback by running the following command:

# transactional-update rollback

And reboot afterwards.

2.4 Managing automatic transactional updates #

Automatic updates are controlled by a systemd.timer that runs once per day. This applies all updates, and informs rebootmgrd that the machine should be rebooted. You can adjust the time when the update runs. For details, see the systemd.timer(5) documentation.

You can disable automatic transactional updates with this command:

# systemctl --now disable transactional-update.timer
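For adjusting the time when the update runs, one common systemd approach is a drop-in file that overrides the timer schedule. The sketch below writes such a file. It assumes that the timer is scheduled with OnCalendar=; write_timer_override and the target directory are illustrative choices, not something this guide prescribes.

```shell
#!/bin/sh
# Sketch only: write_timer_override is a hypothetical helper.
# Writes a systemd drop-in that replaces a timer's OnCalendar= schedule.
# $1: drop-in directory
# $2: calendar expression in the syntax described in systemd.time(7)
write_timer_override() {
  dropin_dir="$1"
  when="$2"
  mkdir -p "$dropin_dir" || return 1
  {
    echo '[Timer]'
    echo 'OnCalendar='            # an empty assignment clears the packaged schedule
    echo "OnCalendar=$when"
  } > "$dropin_dir/override.conf"
}
```

A possible invocation is `write_timer_override /etc/systemd/system/transactional-update.timer.d '*-*-* 03:00:00'`, followed by `systemctl daemon-reload` so that systemd picks up the change.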

3 Health checker #

Health checker is a program delivered with SLE Micro that checks whether services are running properly during booting of your system.

During the boot process, systemd calls Health checker,

which in turn calls its plugins. Each plugin checks a particular service or

condition. If each check passes, a status file

(/var/lib/misc/health-checker.state) is created. The

status file marks the current root file system as correct.

If any of the health checker plugins reports an error, the action taken depends on a particular condition, as described below:

- The snapshot is booted for the first time.

If the current snapshot is different from the last one that worked properly, an automatic rollback to the last working snapshot is performed. This means that the last change performed to the file system broke the snapshot.

- The snapshot has already booted correctly in the past.

There could be just a temporary problem, and the system is rebooted automatically.

- The reboot of a previously correctly booted snapshot has failed.

If there was already a problem during boot and an automatic reboot has been triggered, but the problem still persists, then the system is kept running to enable the administrator to fix the problem. The services that are tested by the health checker plugins are stopped if possible.
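The three cases above can be summarized as a small decision function. This is only a restatement of the described behavior, not the way Health checker is implemented; failure_action and its two arguments are invented for the illustration.

```shell
#!/bin/sh
# Sketch only: failure_action is a hypothetical function that restates the
# three cases above; it is not how Health checker is implemented.
# $1: "yes" if this snapshot has booted correctly before, otherwise "no"
# $2: "yes" if an automatic reboot was already triggered for this failure
failure_action() {
  if [ "$1" = "no" ]; then
    echo "rollback"       # first boot of a new snapshot failed
  elif [ "$2" = "no" ]; then
    echo "reboot"         # possibly a temporary problem
  else
    echo "keep-running"   # still failing after the reboot; left to the administrator
  fi
}
```

For example, `failure_action no no` corresponds to a freshly created snapshot that fails on its first boot.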

3.1 Adding custom plugins #

Health checker supports the addition of your own plugins to check services during the boot process. Each plugin is a bash script that must fulfill the following requirements:

Plugins are located within a specific directory: /usr/libexec/health-checker

The service that will be checked by the particular plugin must be defined in the Unit section of the /usr/lib/systemd/system/health-checker.service file. For example, the etcd service is defined as follows:

[Unit]
...
After=etcd.service
...

Each plugin must have functions called run.checks and stop_services defined. The run.checks function checks whether a particular service has started properly. Bear in mind that a service that has not been enabled by systemd should be ignored. The function stop_services is called to stop the particular service in case the service has not been started properly. You can use the plugin template for your reference.
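The two required functions might look roughly like the following sketch for the etcd example. It is not the shipped plugin template. The guide writes the first function name as run.checks, while the sketch spells it run_checks so that the name is valid in any POSIX shell; treat that spelling as an assumption and check the plugin template for the exact name and for how Health checker calls the functions.

```shell
#!/bin/bash
# Sketch only, not the shipped plugin template.
# SERVICE uses the etcd example from the text. The name run_checks (with an
# underscore) is an assumption; the guide writes it as run.checks.
SERVICE="etcd.service"

run_checks() {
  # A unit that is not enabled should be ignored, so report success.
  systemctl is-enabled --quiet "$SERVICE" || return 0
  # Otherwise succeed only if the unit is currently active.
  systemctl is-active --quiet "$SERVICE"
}

stop_services() {
  # Called when the check failed: stop the service this plugin is responsible for.
  systemctl stop "$SERVICE"
}
```

The early return in run_checks reflects the rule that services not enabled in systemd are ignored rather than reported as failures.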

4 SLE Micro administration using Cockpit #

Cockpit is a web-based graphical interface that enables you to manage your SLE Micro deployments from one place. Cockpit is included in the delivered pre-built images, or can be installed if you are installing your own instances manually. For details regarding the manual installation, refer to Section 12.9.2, “Software”.

Though Cockpit is present in the pre-built images by default, the plugin for administration of virtual machines needs to be installed manually. You can do so by installing the microos-cockpit pattern as described below. Use the same command if Cockpit is not installed on your system.

# transactional-update pkg install -t pattern microos-cockpit

Reboot your machine to switch to the latest snapshot.

Note: The microos-cockpit pattern may differ according to the technologies installed on your system

The plugin Podman containers is installed only if the

Containers Runtime for non-clustered systems patterns

are installed on your system. Similarly, the Virtual

Machines plugin is installed only if the KVM

Virtualization Host pattern is installed on your system.

Before running Cockpit on your machine, you need to enable the cockpit socket in systemd by running:

# systemctl enable --now cockpit.socket

In case you have enabled the firewall, you must also open the firewall for Cockpit as follows:

# firewall-cmd --permanent --zone=public --add-service=cockpit

And then reload the firewall configuration by running:

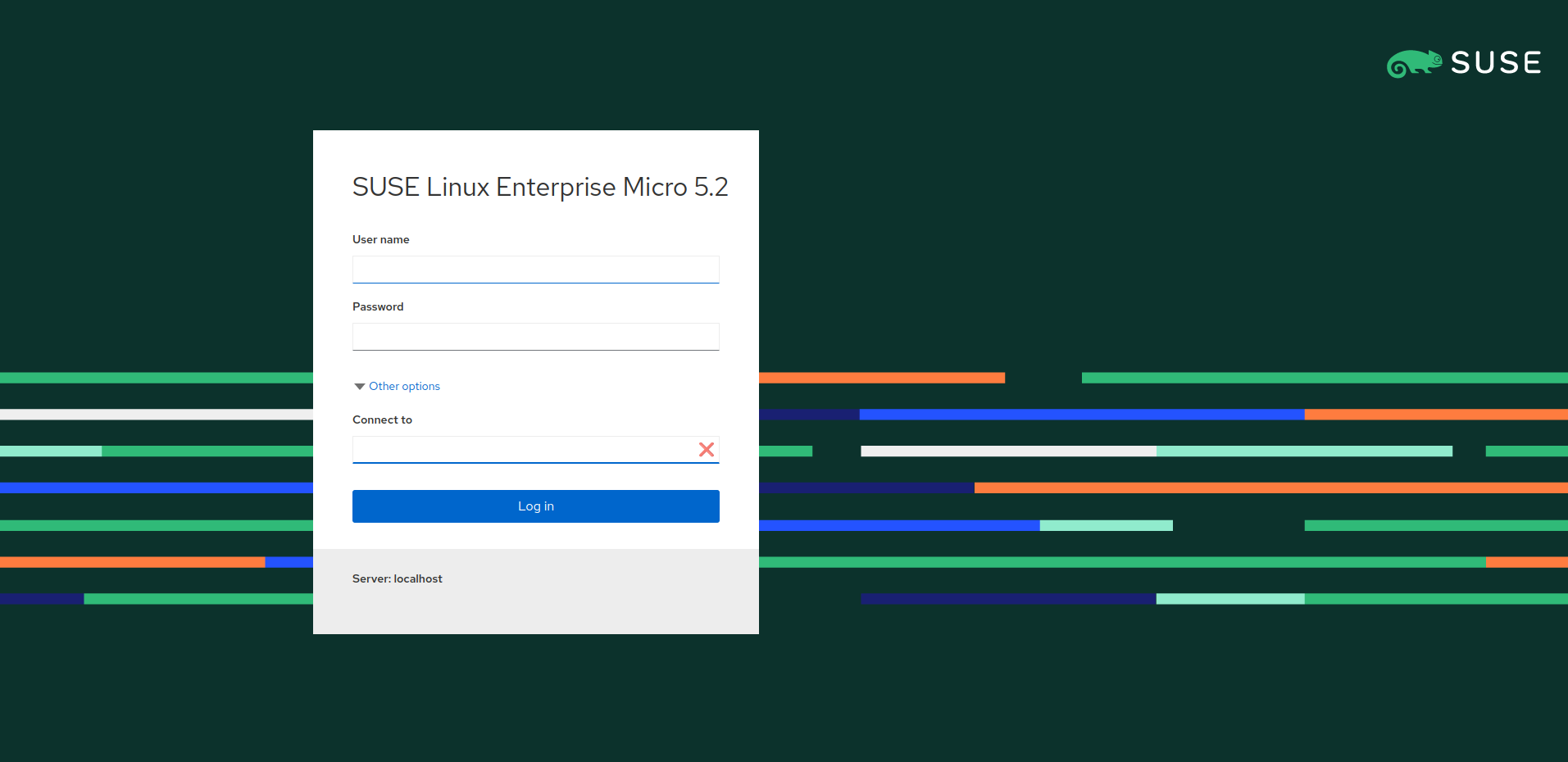

# firewall-cmd --reload

Now you can access the Cockpit web interface by opening the following address in your web browser:

https://IP_ADDRESS_OF_MACHINE:9090

A login screen opens. To log in, use the same credentials as you use to log in to your machine via console or SSH.

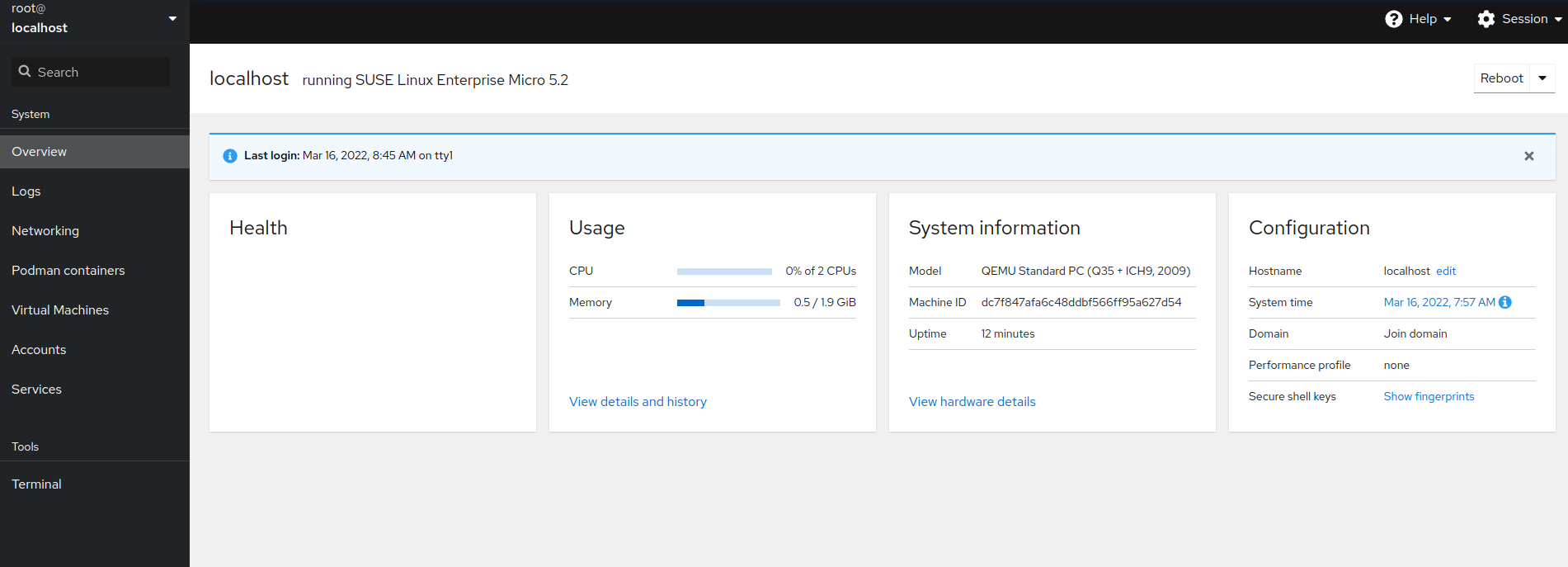

After successful login, the Cockpit web console opens. Here you can view and administer your system's performance, network interfaces, Podman containers, your virtual machines, services, accounts and logs. You can also access your machine using shell in a terminal emulator.

4.1 Users administration #

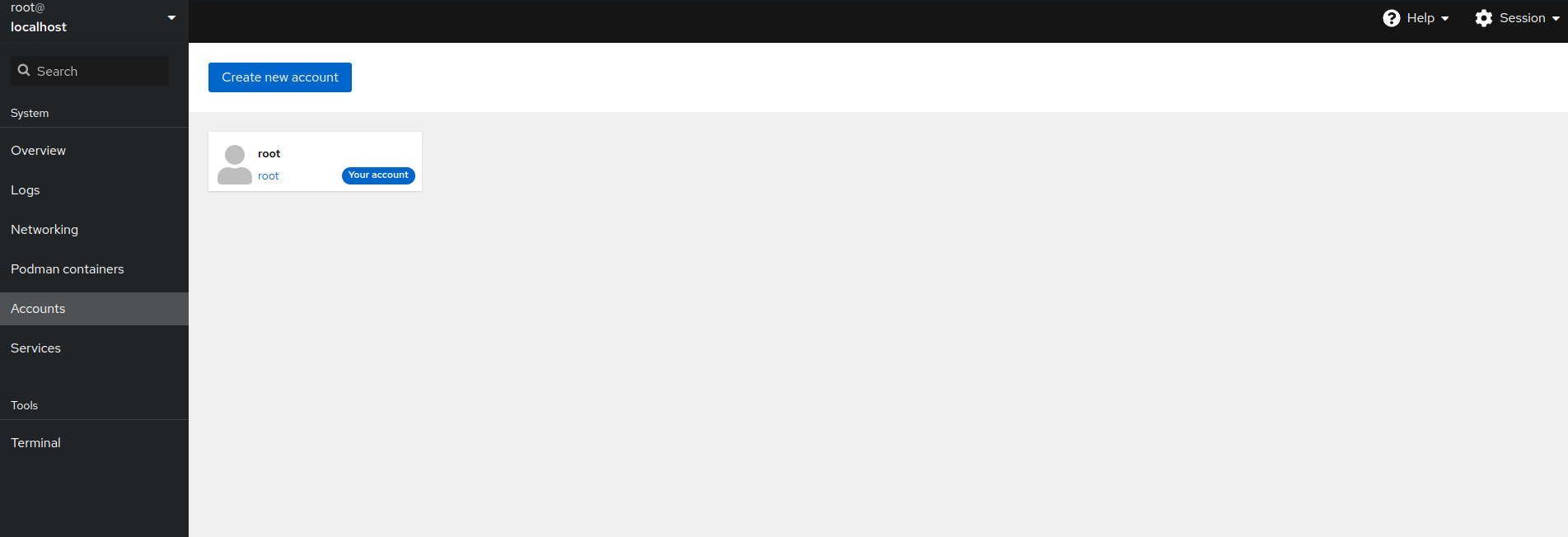

Only users with the role can edit other users.

Cockpit enables you to manage users of your system. Click to open the user administration page. Here you can create a new account by clicking or manage already existing accounts by clicking on the particular account.

4.1.1 Creating new accounts #

Click to open the window that enables you to add a new user. Fill in the user's login and/or full name and password, then confirm the form by clicking .

To add authorized SSH keys for the new user or set the role, edit the already created account by clicking on it. For details, refer to Section 4.1.2, “Modifying accounts”.

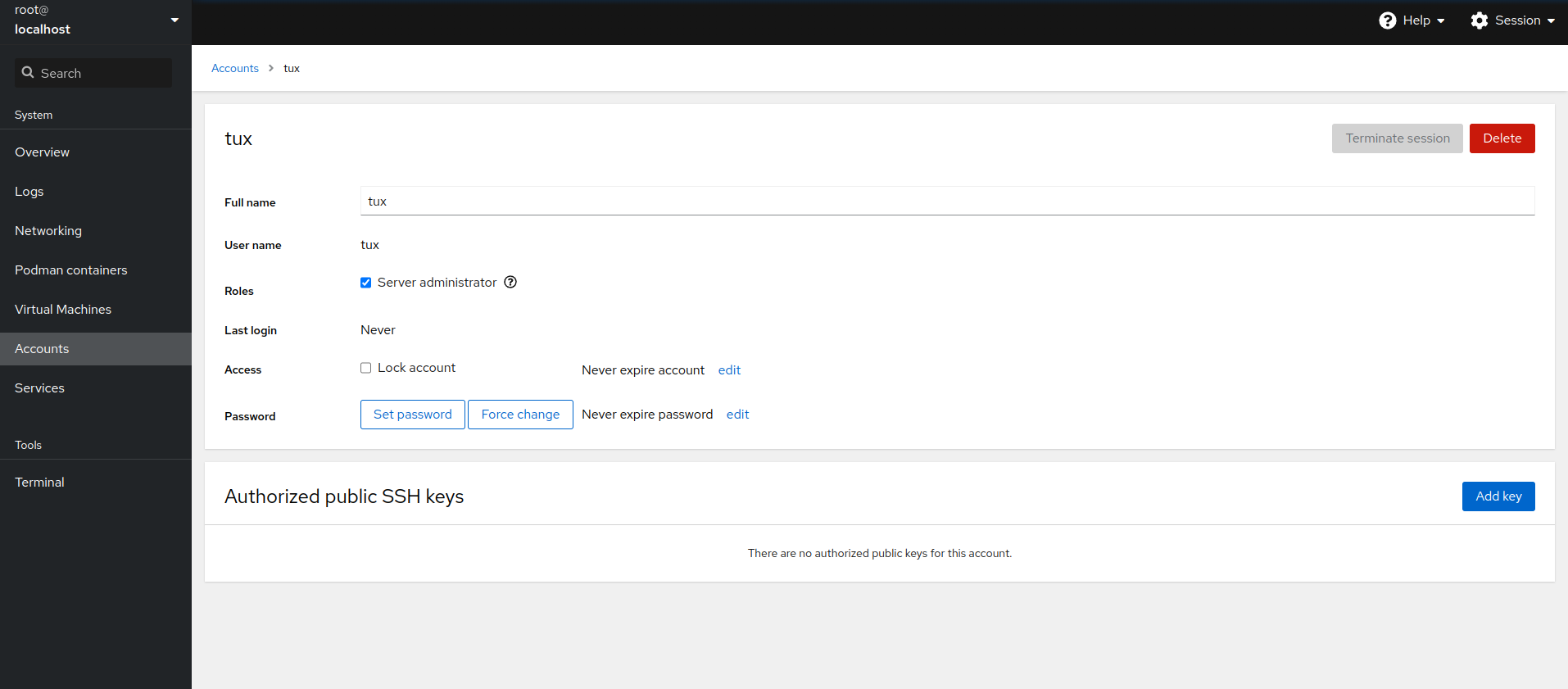

4.1.2 Modifying accounts #

After clicking the user icon in the page, the user details view opens and you can edit the user.

In the user's details view, you perform the following actions.

- Delete the user

Click to remove the user from the system.

- Terminate user's session

By clicking , you can log out the particular user from the system.

- Change user's role

By checking/unchecking the check box, you can assign or remove the administrator role from the user.

- Manage access of the account

You can lock the account or you can set a date when the account will expire.

- Manage the user's password

Click to set a new password for the account.

By clicking , the user will have to change the password on the next login.

Click to set whether or when the password expires.

- Add SSH key

You can add an SSH key for passwordless authentication via SSH. Click , paste the contents of the public SSH key and confirm it by clicking .

5 toolbox for SLE Micro debugging #

SLE Micro uses the transactional-update command to apply

changes to the system, but the changes are applied only after reboot. That

solution has several benefits, but it also has some disadvantages. If you

need to debug your system and install a new tool, the tool will be available

only after reboot. Therefore you are not able to debug the currently running

system. For this reason a utility called toolbox has been

developed.

toolbox is a small script that pulls a container image

and runs a privileged container based on that image. In the toolbox

container you can install any tool you want with zypper

and then use the tool without rebooting your system.

To start the toolbox container, run the following:

# /usr/bin/toolbox

If the script completes successfully, you will see the

toolbox container prompt.

toolbox image

You can also use Podman or Cockpit to pull the toolbox

image and start a container based on that image.

6 Monitoring performance #

For performance monitoring purposes, SLE Micro provides a container image that enables you to run the Performance Co-Pilot (PCP) analysis toolkit in a container. The toolkit comprises tools for gathering and processing performance information collected either in real time or from PCP archive logs.

The performance data are collected by performance

metrics domain agents and passed to the pmcd

daemon. The daemon coordinates the gathering and exporting of performance

statistics in response to requests from the PCP monitoring tools.

pmlogger is then used to log the metrics. For details, refer to the PCP documentation.

6.1 Getting the PCP container image #

The PCP container image is based on the BCI-Init container, which uses systemd to manage the PCP services.

You can pull the container image using podman or from the Cockpit web management console. To pull the image by using podman, run the following command:

# podman pull registry.suse.com/suse/pcp:latest

To get the container image using Cockpit, go to , click , and search for

pcp. Then select the image from the

registry.suse.com for SLE 15 SP3 and download it.

6.2 Running the PCP container #

The following command shows minimal options that you need to use to run a PCP container:

# podman run -d \
  --systemd always \
  -p HOST_IP:HOST_PORT:CONTAINER_PORT \
  -v HOST_DIR:/var/log/pcp/pmlogger \
  PCP_CONTAINER_IMAGE

where the options have the following meaning:

- -d

Runs the container in detached mode, in the background.

- --systemd always

Runs the container in the systemd mode. All services needed to run in the PCP container will be started automatically by systemd in the container.

- --privileged

The container runs with extended privileges. Use this option if your system has SELinux enabled, otherwise the collected metrics will be incomplete.

- -v HOST_DIR:/var/log/pcp/pmlogger

Creates a bind mount so that pmlogger archives are written to the HOST_DIR on the host. By default, pmlogger stores the collected metrics in /var/log/pcp/pmlogger.

- PCP_CONTAINER_IMAGE

Is the downloaded PCP container image.

Other useful options of the podman run command follow:

- -p HOST_IP:HOST_PORT:CONTAINER_PORT

Publishes ports of the container by mapping a container port onto a host port. If you do not specify HOST_IP, the ports will be mapped on the local host. If you omit the HOST_PORT value, a random port number will be used. By default, the pmcd daemon listens and exposes the PMAPI to receive metrics on port 44321, so it is recommended to map this port to the same port number on the host. The pmproxy daemon listens on and exposes the REST PMWEBAPI to access metrics on port 44322 by default, so it is recommended to map this port to the same host port number.

- --net host

The container uses the host's network. Use this option if you want to collect metrics from the host's network interfaces.

- -e

The option enables you to set the following environment variables:

- PCP_SERVICES

Is a comma-separated list of services to start by systemd in the container.

Default services are: pmcd, pmie, pmlogger, pmproxy.

You can use this variable if you want to run a container with a list of services that is different from the default one, for example, only with pmlogger:

# podman run -d \
  --name pmlogger \
  --systemd always \
  -e PCP_SERVICES=pmlogger \
  -v pcp-archives:/var/log/pcp/pmlogger \
  registry.suse.com/suse/pcp:latest

- HOST_MOUNT

Is a path inside the container to the bind mount of the host's root file system. The default value is not set.

- REDIS_SERVERS

Specifies a connection to a Redis server. In a non-clustered setup, provide a comma-separated list of host specs. In a clustered setup, provide any individual cluster host; other hosts in the cluster are discovered automatically. The default value is: localhost:6379.
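Putting the options above together, the following sketch prints a podman command line that publishes the two default PCP ports on the same host port numbers. The helper name pcp_run_cmd is made up; the function only prints the command and does not start a container.

```shell
#!/bin/sh
# Sketch only: pcp_run_cmd is a hypothetical helper that prints a podman
# command line; it does not start a container.
# $1: host directory for pmlogger archives (assumed to contain no spaces)
# $2: container image (defaults to the image pulled earlier in this guide)
pcp_run_cmd() {
  host_dir="$1"
  image="${2:-registry.suse.com/suse/pcp:latest}"
  # 44321 is the pmcd (PMAPI) port, 44322 the pmproxy (REST PMWEBAPI) port.
  echo "podman run -d --systemd always -p 44321:44321 -p 44322:44322 -v $host_dir:/var/log/pcp/pmlogger $image"
}
```

For instance, `pcp_run_cmd /srv/pcp-archives` prints a command that stores the archives in /srv/pcp-archives on the host; that directory is an arbitrary example.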

If you need a different configuration than the one provided by the environment variables, proceed as described in Section 6.3, “Configuring PCP services”.

6.3 Configuring PCP services #

All services that run inside the PCP container have a default configuration that might not suit your needs. If you need a custom configuration that cannot be covered by the environment variables described above, create configuration files for the PCP services and pass them to the PCP using a bind mount as follows:

# podman run -d \
  --name CONTAINER_NAME \
  --systemd always \
  -v HOST_CONFIG:CONTAINER_CONFIG_PATH:z \
  -v HOST_LOGS_PATH:/var/log/pcp/pmlogger \
  registry.suse.com/suse/pcp:latest

Where:

- CONTAINER_NAME

Is an optional container name.

- HOST_CONFIG

Is an absolute path to the config you created on the host machine. You can choose any file name you want.

- CONTAINER_CONFIG_PATH

Is an absolute path to a particular configuration file inside the container. Each available configuration file is described in the corresponding sections further.

- HOST_LOGS_PATH

Is a directory on the host that is bind-mounted to the log directory in the container.

For example, a container called pcp, with the

configuration file pmcd on the host machine and the

pcp-archives directory for logs on the host machine, is

run by the following command:

# podman run -d \
  --name pcp \
  --systemd always \
  -v $(pwd)/pcp-archives:/var/log/pcp/pmlogger \
  -v $(pwd)/pmcd:/etc/sysconfig/pmcd \
  registry.suse.com/suse/pcp:latest

6.3.1 Custom pmcd daemon configuration #

The pmcd daemon configuration is stored in the

/etc/sysconfig/pmcd file. The file stores environment variables

that modify the behavior of the pmcd daemon.

6.3.1.1 The /etc/sysconfig/pmcd file #

You can add the following variables to the file to configure the

pmcd daemon:

- PMCD_LOCAL

Defines whether the remote host can connect to the pmcd daemon. If set to 0, remote connections to the daemon are allowed. If set to 1, the daemon listens only on the local host. The default value is 0.

- PMCD_MAXPENDING

Defines the maximum count of pending connections to the agent. The default value is 5.

- PMCD_ROOT_AGENT

If pmdaroot is enabled (the value is set to 1), adding a new PMDA does not trigger restarting of other PMDAs. If pmdaroot is not enabled, pmcd requires all PMDAs to be restarted when a new PMDA is added. The default value is 1.

- PMCD_RESTART_AGENTS

If set to 1, the pmcd daemon tries to restart any exited PMDA. Enable this option only if you have enabled pmdaroot, as pmcd itself does not have privileges to restart PMDAs.

- PMCD_WAIT_TIMEOUT

Defines the maximum time in seconds that pmcd can wait to accept a connection. After this time, the connection is reported as failed. The default value is 60.

- PCP_NSS_INIT_MODE

Defines the mode in which pmcd initializes the NSS certificate database when secured connections are used. The default value is readonly. You can set the mode to readwrite, but if the initialization fails, the default value is used as a fallback.

An example follows:

PMCD_LOCAL=0
PMCD_MAXPENDING=5
PMCD_ROOT_AGENT=1
PMCD_RESTART_AGENTS=1
PMCD_WAIT_TIMEOUT=70
PCP_NSS_INIT_MODE=readwrite

6.3.2 Custom pmlogger configuration #

The custom configuration for the pmlogger is stored in

the following configuration files:

- /etc/sysconfig/pmlogger

- /etc/pcp/pmlogger/control.d/local

6.3.2.1 The /etc/sysconfig/pmlogger file #

You can use the following attributes to configure the

pmlogger:

- PMLOGGER_LOCAL

Defines whether pmlogger allows connections from remote hosts. If set to 1, pmlogger allows connections from local host only.

- PMLOGGER_MAXPENDING

Defines the maximum count of pending connections. The default value is 5.

- PMLOGGER_INTERVAL

Defines the default sampling interval pmlogger uses. The default value is 60 s. Keep in mind that this value can be overridden by the pmlogger command line.

- PMLOGGER_CHECK_SKIP_LOGCONF

Setting this option to yes disables the regeneration and checking of the pmlogger configuration if the pmlogger configuration comes from pmlogconf. The default behavior is to regenerate configuration files and check for changes every time pmlogger is started.

An example follows:

PMLOGGER_LOCAL=1

PMLOGGER_MAXPENDING=5

PMLOGGER_INTERVAL=10

PMLOGGER_CHECK_SKIP_LOGCONF=yes
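Before you restart the container, it can help to sanity-check the file. The following is a minimal sketch and not part of PCP: the function name check_pmlogger_sysconfig is hypothetical, and the accepted values (0 or 1, whole numbers, yes or no, plain KEY=VALUE lines without quoting) are assumptions derived only from the attribute descriptions above.

```shell
# Hypothetical helper: reads KEY=VALUE lines on stdin and reports
# attributes or values that do not match the descriptions in this section.
# Assumption: plain KEY=VALUE syntax, no quoting and no "export" prefix.
check_pmlogger_sysconfig() {
    rc=0
    while IFS='=' read -r key value || [ -n "$key" ]; do
        case "$key" in
            ''|'#'*) ;;                       # skip blank lines and comments
            PMLOGGER_LOCAL)
                case "$value" in
                    0|1) ;;
                    *) echo "PMLOGGER_LOCAL must be 0 or 1" >&2; rc=1 ;;
                esac ;;
            PMLOGGER_MAXPENDING|PMLOGGER_INTERVAL)
                case "$value" in
                    ''|*[!0-9]*) echo "$key must be a whole number" >&2; rc=1 ;;
                esac ;;
            PMLOGGER_CHECK_SKIP_LOGCONF)
                case "$value" in
                    yes|no) ;;                # "no" is an assumed counterpart of "yes"
                    *) echo "$key must be yes or no" >&2; rc=1 ;;
                esac ;;
            *) echo "unknown attribute: $key" >&2; rc=1 ;;
        esac
    done
    return "$rc"
}

# Usage (not executed here):
#   check_pmlogger_sysconfig < /etc/sysconfig/pmlogger
```

The function returns a non-zero exit status if any line fails a check, so it can be used in a script before the container is restarted.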

6.3.2.2 The /etc/pcp/pmlogger/control.d/local file #

The file /etc/pcp/pmlogger/control.d/local stores

specifications of the host, which metrics should be logged, the logging

frequency (default is 24 hours), and pmlogger options.

For example:

# === VARIABLE ASSIGNMENTS ===

#

# DO NOT REMOVE OR EDIT THE FOLLOWING LINE

$version=1.1

# Uncomment one of the lines below to enable/disable compression behaviour

# that is different to the pmlogger_daily default.

# Value is days before compressing archives, 0 is immediate compression,

# "never" or "forever" suppresses compression.

#

#$PCP_COMPRESSAFTER=0

#$PCP_COMPRESSAFTER=3

#$PCP_COMPRESSAFTER=never

# === LOGGER CONTROL SPECIFICATIONS ===

#

#Host P? S? directory args

# local primary logger

LOCALHOSTNAME y n PCP_ARCHIVE_DIR/LOCALHOSTNAME -r -T24h10m -c config.default -v 100Mb

If you run the pmlogger in a container on a different

machine than the one that runs the pmcd (a client), change the

following line to point to the client:

# local primary logger

CLIENT_HOSTNAME y n PCP_ARCHIVE_DIR/CLIENT_HOSTNAME -r -T24h10m -c config.default -v 100Mb

For example, for the slemicro_1 host name, the line should look as follows:

# local primary logger

slemicro_1 y n PCP_ARCHIVE_DIR/slemicro_1 -r -T24h10m -c config.default -v 100Mb
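If you maintain the file with a script, the host name substitution described above could be done with sed. This is only a sketch: point_control_at is a hypothetical helper name, and it assumes that the host name contains no slash characters and that the file uses the LOCALHOSTNAME placeholder as in the example above.

```shell
# Hypothetical helper: reads a control file on stdin and writes a copy to
# stdout in which LOCALHOSTNAME is replaced by the host given as $1.
# Lines starting with "#" (comments) are left untouched.
point_control_at() {
    sed -e '/^#/!s/LOCALHOSTNAME/'"$1"'/g'
}

# Usage (not executed here; control.slemicro_1 is an example file name):
#   point_control_at slemicro_1 < local > control.slemicro_1
```

Writing the result to a new file rather than editing in place keeps the original control file available for comparison.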

6.4 Starting the PCP container automatically on boot #

After you run the PCP container, you can configure systemd to start the

container on boot. To do so, follow the procedure below:

Create a unit file for the container by using the podman generate systemd command:

# podman generate systemd --name CONTAINER_NAME > /etc/systemd/system/container-CONTAINER_NAME.service

where CONTAINER_NAME is the name of the PCP container you used when running the container from the container image.

Enable the service in systemd:

# systemctl enable container-CONTAINER_NAME
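The two steps of this procedure can be wrapped in a small script. The following is a sketch under stated assumptions: enable_container_on_boot and the DRY_RUN variable are hypothetical names, and the container name pcp is only an example. By default the helper only prints the commands, so you can review them first.

```shell
# Hypothetical helper: with DRY_RUN=1 (the default in this sketch) it only
# prints the two commands of the procedure; with DRY_RUN=0 it runs them,
# which requires root privileges.
enable_container_on_boot() {
    name=$1
    unit="/etc/systemd/system/container-$name.service"
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf 'podman generate systemd --name %s > %s\n' "$name" "$unit"
        printf 'systemctl enable container-%s\n' "$name"
    else
        podman generate systemd --name "$name" > "$unit" &&
            systemctl enable "container-$name"
    fi
}

# Example with an assumed container name:
enable_container_on_boot pcp
```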

6.5 Metrics management #

6.5.1 Listing available performance metrics #

From within the container, you can use the command pminfo to list metrics. For

example, to list all available performance metrics, run:

# pminfo

You can list a group of related metrics by specifying the metrics prefix:

# pminfo METRIC_PREFIX

For example, to list all metrics related to disks, use:

# pminfo disk

disk.dev.r_await

disk.dm.await

disk.dm.r_await

disk.md.await

disk.md.r_await

...

You can also specify additional strings to narrow down the list of metrics, for example:

# pminfo disk.dev

disk.dev.read

disk.dev.write

disk.dev.total

disk.dev.blkread

disk.dev.blkwrite

disk.dev.blktotal

...
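Because pminfo prints one metric name per line, you can also combine a prefix with standard text tools. The following is a minimal sketch; metrics_matching is a hypothetical helper name and not part of PCP.

```shell
# Hypothetical helper: lists the metrics under the prefix $1 whose names
# match the grep pattern $2.
metrics_matching() {
    pminfo "$1" | grep -- "$2"
}

# Usage (not executed here): show only the disk metrics ending in "await".
#   metrics_matching disk 'await$'
```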

To get the one-line help summary of a particular metric, use the -t option followed by the metric, for example:

# pminfo -t kernel.cpu.util.user

kernel.cpu.util.user [percentage of user time across all CPUs, including guest CPU time]

To display a description text of a particular metric, use the

-T option followed by the metric, for example:

# pminfo -T kernel.cpu.util.user

Help:

percentage of user time across all CPUs, including guest CPU time

6.5.2 Checking local metrics #

After you start the PCP container, you can verify that metrics are being recorded properly by running the following command inside the container:

# pcp

Performance Co-Pilot configuration on localhost:

platform: Linux localhost 5.3.18-150300.59.68-default #1 SMP Wed May 4 11:29:09 UTC 2022 (ea30951) x86_64

hardware: 1 cpu, 1 disk, 1 node, 1726MB RAM

timezone: UTC

services: pmcd pmproxy

pmcd: Version 5.2.2-1, 9 agents, 4 clients

pmda: root pmcd proc pmproxy xfs linux mmv kvm jbd2

pmlogger: primary logger: /var/log/pcp/pmlogger/localhost/20220607.09.24

pmie: primary engine: /var/log/pcp/pmie/localhost/pmie.log

Now check if the logs are written to a proper destination:

# ls PATH_TO_PMLOGGER_LOGS

where PATH_TO_PMLOGGER_LOGS should be

/var/log/pcp/pmlogger/localhost/ in this case.
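To script this check, you could test for the presence of an archive metadata file instead of reading the listing by eye. This is a sketch only: has_archive is a hypothetical helper name, and it assumes that a file ending in .meta in the directory indicates that pmlogger has started writing an archive there.

```shell
# Hypothetical helper: succeeds if the directory $1 contains at least one
# file ending in ".meta" (assumed here to mark a PCP archive).
has_archive() {
    for f in "$1"/*.meta; do
        [ -e "$f" ] && return 0
    done
    return 1
}

# Usage (not executed here):
#   has_archive /var/log/pcp/pmlogger/localhost && echo "archive found"
```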

6.5.3 Recording metrics from remote systems #

You can deploy collector containers that collect metrics on remote systems, separate from the systems where the pmlogger containers are running. Each remote collector system needs the pmcd daemon and a set of PMDAs. To deploy several collectors with a centralized monitoring system, proceed as follows.

1. On each system you want to collect metrics from (clients), run a container with the pmcd daemon:

# podman run -d \
    --name pcp-pmcd \
    --privileged \
    --net host \
    --systemd always \
    -e PCP_SERVICES=pmcd \
    -e HOST_MOUNT=/host \
    -v /:/host:ro,rslave \
    registry.suse.com/suse/pcp:latest

2. On the monitoring system, create a pmlogger configuration file control.CLIENT for each client with the following content:

$version=1.1
CLIENT_HOSTNAME n n PCP_ARCHIVE_DIR/CLIENT -N -r -T24h10m -c config.default -v 100Mb

Keep in mind that the CLIENT_HOSTNAME must be resolvable in DNS. You can use IP addresses or fully qualified domain names (FQDN) instead.

3. On the monitoring system, create a directory for each client to store the recorded logs:

# mkdir /root/pcp-archives/CLIENT

For example, for slemicro_1:

# mkdir /root/pcp-archives/slemicro_1

4. On the monitoring system, run a container with pmlogger for each client:

# podman run -d \
    --name pcp-pmlogger-CLIENT \
    --systemd always \
    -e PCP_SERVICES=pmlogger \
    -v /root/pcp-archives/CLIENT:/var/log/pcp/pmlogger:z \
    -v $(pwd)/control.CLIENT:/etc/pcp/pmlogger/control.d/local:z \
    registry.suse.com/suse/pcp:latest

For example, for a client called slemicro_1:

# podman run -d \
    --name pcp-pmlogger-slemicro_1 \
    --systemd always \
    -e PCP_SERVICES=pmlogger \
    -v /root/pcp-archives/slemicro_1:/var/log/pcp/pmlogger:z \
    -v $(pwd)/control.slemicro_1:/etc/pcp/pmlogger/control.d/local:z \
    registry.suse.com/suse/pcp:latest

Note: The second bind mount points to the configuration file created in Step 2 and replaces the default pmlogger configuration. If you do not create this bind mount, pmlogger uses the default /etc/pcp/pmlogger/control.d/local file and logging from clients fails, as the default configuration points to the local host. For details about the configuration file, refer to Section 6.3.2.2, “The /etc/pcp/pmlogger/control.d/local file”.

5. To check if the log collection is working properly, run:

# ls -l pcp-archives/CLIENT/CLIENT

For example:

# ls -l pcp-archives/slemicro_1/slemicro_1
total 1076
-rw-r--r--. 1 systemd-network systemd-network 876372 Jun 8 11:24 20220608.10.58.0
-rw-r--r--. 1 systemd-network systemd-network 312 Jun 8 11:22 20220608.10.58.index
-rw-r--r--. 1 systemd-network systemd-network 184486 Jun 8 10:58 20220608.10.58.meta
-rw-r--r--. 1 systemd-network systemd-network 246 Jun 8 10:58 Latest
-rw-r--r--. 1 systemd-network systemd-network 24595 Jun 8 10:58 pmlogger.log
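With several clients, creating the control file and the archive directory for each client by hand becomes repetitive. The following sketch automates only those two preparation steps on the monitoring system. The helper name prepare_client is hypothetical, and it assumes that CLIENT_HOSTNAME and the CLIENT directory name are the same string.

```shell
# Hypothetical helper: creates BASE/CLIENT for the archives and writes
# control.CLIENT into OUTDIR (default: current directory).
# Assumption: the client's resolvable host name is also used as CLIENT.
prepare_client() {
    base=$1
    client=$2
    outdir=${3:-.}
    mkdir -p "$base/$client" || return 1
    printf '$version=1.1\n%s n n PCP_ARCHIVE_DIR/%s -N -r -T24h10m -c config.default -v 100Mb\n' \
        "$client" "$client" > "$outdir/control.$client"
}

# Usage (not executed here; slemicro_2 is an assumed second client):
#   for c in slemicro_1 slemicro_2; do
#       prepare_client /root/pcp-archives "$c"
#   done
```

The pmlogger containers themselves still need to be started for each client as described in the procedure.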

7 User management #

You can define users during the deployment of SLE Micro. When deploying pre-built images, you can define any users you need. During the manual installation, however, you define only the root user in the installation flow. Therefore, you might want to add other users after the installation. There are two ways of adding users to an already installed system:

- Using the CLI: the useradd command. Run the following command for usage:

# useradd --help

Bear in mind that a user who should have the server administrator role must be included in the group wheel.

- Using Cockpit. For details, refer to Section 4.1, “Users administration”.
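After adding a user on the command line, you may want to confirm the group membership. The following is a minimal sketch: in_group is a hypothetical helper name, the user name tux is only an example, and the useradd call in the comment is one possible invocation rather than a prescribed one.

```shell
# Hypothetical helper: succeeds if user $1 is a member of group $2.
# It relies on "id -nG", which prints the user's group names
# separated by spaces.
in_group() {
    id -nG "$1" 2>/dev/null | tr ' ' '\n' | grep -qx -- "$2"
}

# Usage (not executed here; "tux" is an assumed user name):
#   useradd -m -G wheel tux
#   in_group tux wheel && echo "tux can act as server administrator"
```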