35 Setting up the management cluster

35.1 Introduction

The management cluster is the part of ATIP that is used to manage the provisioning and lifecycle of the runtime stacks. From a technical point of view, the management cluster contains the following components:

- SUSE Linux Enterprise Micro as the OS. Depending on the use case, some configurations like networking, storage, users and kernel arguments can be customized.

- RKE2 as the Kubernetes cluster. Depending on the use case, it can be configured to use specific CNI plugins, such as Multus, Cilium, etc.

- Rancher as the management platform to manage the lifecycle of the clusters.

- Metal3 as the component to manage the lifecycle of the bare-metal nodes.

- CAPI as the component to manage the lifecycle of the Kubernetes clusters (downstream clusters). With ATIP, the RKE2 CAPI Provider is also used to manage the lifecycle of the RKE2 clusters (downstream clusters).

With all components mentioned above, the management cluster can manage the lifecycle of downstream clusters, using a declarative approach to manage the infrastructure and applications.

For more information about SUSE Linux Enterprise Micro, see: SLE Micro (Chapter 7, SLE Micro)

For more information about RKE2, see: RKE2 (Chapter 14, RKE2)

For more information about Rancher, see: Rancher (Chapter 4, Rancher)

For more information about Metal3, see: Metal3 (Chapter 8, Metal3)

35.2 Steps to set up the management cluster

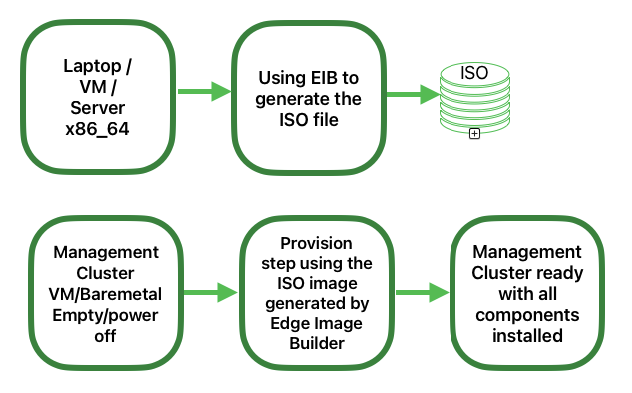

The following are the main steps to set up the management cluster (using a single node) with a declarative approach:

Image preparation for connected environments (Section 35.3, “Image preparation for connected environments”): The first step is to prepare the manifests and files with all the necessary configurations to be used in connected environments.

Directory structure for connected environments (Section 35.3.1, “Directory structure”): This step creates a directory structure to be used by Edge Image Builder to store the configuration files and the image itself.

Management cluster definition file (Section 35.3.2, “Management cluster definition file”): The mgmt-cluster.yaml file is the main definition file for the management cluster. It contains the following information about the image to be created:

Image information: The information related to the image to be created using the base image.

Operating system: The operating system configurations to be used in the image.

Kubernetes: Helm charts and repositories, the Kubernetes version, network configuration, and the nodes to be used in the cluster.

Custom folder (Section 35.3.3, “Custom folder”): The custom folder contains the configuration files and scripts to be used by Edge Image Builder to deploy a fully functional management cluster.

Files: Contains the configuration files to be used by the management cluster.

Scripts: Contains the scripts to be used by the management cluster.

Kubernetes folder (Section 35.3.4, “Kubernetes folder”): The kubernetes folder contains the configuration files to be used by the management cluster.

Manifests: Contains the manifests to be used by the management cluster.

Helm: Contains the Helm values files to be used by the management cluster.

Config: Contains the configuration files to be used by the management cluster.

Network folder (Section 35.3.5, “Networking folder”): The network folder contains the network configuration files to be used by the management cluster nodes.

Image preparation for air-gap environments (Section 35.4, “Image preparation for air-gap environments”): This step shows the differences in preparing the manifests and files to be used in an air-gap scenario.

Modifications in the definition file (Section 35.4.1, “Modifications in the definition file”): The mgmt-cluster.yaml file must be modified to include the embeddedArtifactRegistry section with the images field set to all container images to be included in the EIB output image.

Modifications in the custom folder (Section 35.4.2, “Modifications in the custom folder”): The custom folder must be modified to include the resources needed to run the management cluster in an air-gap environment.

Register script: The custom/scripts/99-register.sh script must be removed when you use an air-gap environment.

Modifications in the helm values folder (Section 35.4.3, “Modifications in the helm values folder”): The helm/values folder must be modified to include the configuration needed to run the management cluster in an air-gap environment.

Image creation (Section 35.5, “Image creation”): This step covers the creation of the image using the Edge Image Builder tool (for both connected and air-gap scenarios). Check the prerequisites (Chapter 9, Edge Image Builder) to run the Edge Image Builder tool on your system.

Management Cluster Provision (Section 35.6, “Provision the management cluster”): This step covers the provisioning of the management cluster using the image created in the previous step (for both connected and air-gap scenarios). This step can be done using a laptop, server, VM or any other x86_64 system with a USB port.

For more information about Edge Image Builder, see Edge Image Builder (Chapter 9, Edge Image Builder) and Edge Image Builder Quick Start (Chapter 3, Standalone clusters with Edge Image Builder).

35.3 Image preparation for connected environments

Edge Image Builder is used to create the image for the management cluster. In this document, we cover the minimal configuration necessary to set up the management cluster.

Edge Image Builder runs inside a container, so a container runtime such as Podman or Rancher Desktop is required. For this guide, we assume Podman is available.
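If you are not sure which runtime is installed, a quick check along these lines can help. This is a minimal sketch: the candidate CLI names are assumptions (podman first, then docker and nerdctl, which Rancher Desktop typically exposes depending on the selected container engine). The rest of this guide still assumes podman.

```shell
#!/bin/bash
# Print the first container runtime CLI found on PATH.
# Candidate names are assumptions: podman is what this guide uses;
# docker and nerdctl are the CLIs Rancher Desktop typically provides.
detect_container_runtime() {
  local candidate
  for candidate in podman docker nerdctl; do
    if command -v "$candidate" > /dev/null 2>&1; then
      echo "$candidate"
      return 0
    fi
  done
  echo "No container runtime found (tried podman, docker, nerdctl)" >&2
  return 1
}

# Example:
# runtime="$(detect_container_runtime)" || exit 1
# echo "Using ${runtime}"
```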

Also, as a prerequisite to deploy a highly available management cluster, you need to reserve three IPs in your network:

- apiVIP for the API VIP Address (used to access the Kubernetes API server).

- ingressVIP for the Ingress VIP Address (consumed, for example, by the Rancher UI).

- metal3VIP for the Metal3 VIP Address.
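Before building the image, it can help to record the three reserved addresses and sanity-check them. The following is a minimal sketch. The API_VIP, INGRESS_VIP and METAL3_VIP names mirror the placeholders used later in this guide, and the function names are hypothetical. The check only verifies that each value is a syntactically valid IPv4 address and that the three addresses are distinct. It cannot tell whether an address is actually free in your network.

```shell
#!/bin/bash
# Return 0 if $1 is a dotted-quad IPv4 address with each octet in 0-255.
is_ipv4() {
  local ip="$1" octet
  [[ "$ip" =~ ^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})$ ]] || return 1
  for octet in "${BASH_REMATCH[@]:1}"; do
    # 10# forces base 10 so that values such as "08" are not read as octal
    (( 10#$octet <= 255 )) || return 1
  done
}

# Check the three reserved VIPs: valid IPv4 and all different.
validate_vips() {
  local api="$1" ingress="$2" metal3="$3" vip
  for vip in "$api" "$ingress" "$metal3"; do
    is_ipv4 "$vip" || { echo "Invalid IPv4 address: '$vip'" >&2; return 1; }
  done
  if [[ "$api" == "$ingress" || "$api" == "$metal3" || "$ingress" == "$metal3" ]]; then
    echo "apiVIP, ingressVIP and metal3VIP must be three different addresses" >&2
    return 1
  fi
}

# Example values (hypothetical, replace with the addresses you reserved):
# export API_VIP=192.168.122.10 INGRESS_VIP=192.168.122.11 METAL3_VIP=192.168.122.12
# validate_vips "$API_VIP" "$INGRESS_VIP" "$METAL3_VIP" && echo "VIPs look fine"
```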

35.3.1 Directory structure

When running EIB, a directory is mounted from the host, so the first thing to do is to create a directory structure to be used by EIB to store the configuration files and the image itself. This directory has the following structure:

eib
├── mgmt-cluster.yaml
├── network
│   └── mgmt-cluster-node1.yaml
├── kubernetes
│   ├── manifests
│   │   ├── rke2-ingress-config.yaml
│   │   ├── neuvector-namespace.yaml
│   │   ├── ingress-l2-adv.yaml
│   │   └── ingress-ippool.yaml
│   ├── helm
│   │   └── values
│   │       ├── rancher.yaml
│   │       ├── neuvector.yaml
│   │       ├── metal3.yaml
│   │       └── certmanager.yaml
│   └── config
│       └── server.yaml
├── custom
│   ├── scripts
│   │   ├── 99-register.sh
│   │   ├── 99-mgmt-setup.sh
│   │   └── 99-alias.sh
│   └── files
│       ├── rancher.sh
│       ├── mgmt-stack-setup.service
│       ├── metal3.sh
│       └── basic-setup.sh
└── base-images

The image SL-Micro.x86_64-6.0-Base-SelfInstall-GM2.install.iso must be downloaded from the SUSE Customer Center or the SUSE Download page, and it must be located under the base-images folder.

You should check the SHA256 checksum of the image to ensure it has not been tampered with. The checksum can be found in the same location where the image was downloaded.
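A minimal sketch of that check follows. It assumes that GNU coreutils sha256sum is available and that you paste the published checksum as a hexadecimal string. The function name is hypothetical.

```shell
#!/bin/bash
# Compare the SHA256 checksum of a file with the published value.
# Usage: verify_sha256 <file> <expected-hex-checksum>
verify_sha256() {
  local file="$1" expected="$2" actual
  [[ -f "$file" ]] || { echo "File not found: $file" >&2; return 1; }
  # sha256sum prints "<hash>  <file>"; keep only the hash field
  actual="$(sha256sum "$file" | awk '{print $1}')"
  # Compare case-insensitively, since published checksums may use upper case
  if [[ "${actual,,}" == "${expected,,}" ]]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file (expected $expected, got $actual)" >&2
    return 1
  fi
}

# Example (checksum value elided, copy it from the download page):
# verify_sha256 base-images/SL-Micro.x86_64-6.0-Base-SelfInstall-GM2.install.iso "<sha256>"
```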

An example of the directory structure can be found in the SUSE Edge GitHub repository under the "telco-examples" folder.
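If you prefer to start from an empty tree instead of copying the example repository, you can create the folders from the tree above with a few commands. This sketch creates only the directories. The files themselves are described in the following sections. The function name and the download path in the example are hypothetical.

```shell
#!/bin/bash
# Create the EIB working directory layout shown above under $1 (default: ./eib).
create_eib_layout() {
  local root="${1:-eib}"
  mkdir -p \
    "$root/network" \
    "$root/kubernetes/manifests" \
    "$root/kubernetes/helm/values" \
    "$root/kubernetes/config" \
    "$root/custom/scripts" \
    "$root/custom/files" \
    "$root/base-images"
}

# Example (the download location is hypothetical):
# create_eib_layout eib
# cp ~/Downloads/SL-Micro.x86_64-6.0-Base-SelfInstall-GM2.install.iso eib/base-images/
```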

35.3.2 Management cluster definition file

The mgmt-cluster.yaml file is the main definition file for the management cluster. It contains the following information:

apiVersion: 1.0
image:
  imageType: iso
  arch: x86_64
  baseImage: SL-Micro.x86_64-6.0-Base-SelfInstall-GM2.install.iso
  outputImageName: eib-mgmt-cluster-image.iso
operatingSystem:
  isoConfiguration:
    installDevice: /dev/sda
  users:
    - username: root
      encryptedPassword: ${ROOT_PASSWORD}
  packages:
    packageList:
      - git
      - jq
    sccRegistrationCode: ${SCC_REGISTRATION_CODE}
kubernetes:
  version: ${KUBERNETES_VERSION}
  helm:
    charts:
      - name: cert-manager
        repositoryName: jetstack
        version: 1.15.3
        targetNamespace: cert-manager
        valuesFile: certmanager.yaml
        createNamespace: true
        installationNamespace: kube-system
      - name: longhorn-crd
        version: 104.2.2+up1.7.3
        repositoryName: rancher-charts
        targetNamespace: longhorn-system
        createNamespace: true
        installationNamespace: kube-system
      - name: longhorn
        version: 104.2.2+up1.7.3
        repositoryName: rancher-charts
        targetNamespace: longhorn-system
        createNamespace: true
        installationNamespace: kube-system
      - name: metal3-chart
        version: 0.8.3
        repositoryName: suse-edge-charts
        targetNamespace: metal3-system
        createNamespace: true
        installationNamespace: kube-system
        valuesFile: metal3.yaml
      - name: rancher-turtles-chart
        version: 0.3.3
        repositoryName: suse-edge-charts
        targetNamespace: rancher-turtles-system
        createNamespace: true
        installationNamespace: kube-system
      - name: neuvector-crd
        version: 104.0.8+up2.8.8
        repositoryName: rancher-charts
        targetNamespace: neuvector
        createNamespace: true
        installationNamespace: kube-system
        valuesFile: neuvector.yaml
      - name: neuvector
        version: 104.0.8+up2.8.8
        repositoryName: rancher-charts
        targetNamespace: neuvector
        createNamespace: true
        installationNamespace: kube-system
        valuesFile: neuvector.yaml
      - name: rancher
        version: 2.9.12
        repositoryName: rancher-prime
        targetNamespace: cattle-system
        createNamespace: true
        installationNamespace: kube-system
        valuesFile: rancher.yaml
    repositories:
      - name: jetstack
        url: https://charts.jetstack.io
      - name: rancher-charts
        url: https://charts.rancher.io/
      - name: suse-edge-charts
        url: oci://registry.suse.com/edge/3.1
      - name: rancher-prime
        url: https://charts.rancher.com/server-charts/prime
  network:
    apiHost: ${API_HOST}
    apiVIP: ${API_VIP}
  nodes:
    - hostname: mgmt-cluster-node1
      initializer: true
      type: server
    # - hostname: mgmt-cluster-node2
    #   type: server
    # - hostname: mgmt-cluster-node3
    #   type: server

To explain the fields and values in the mgmt-cluster.yaml definition file, we have divided it into the following sections.

Image section (definition file):

image:
  imageType: iso
  arch: x86_64
  baseImage: SL-Micro.x86_64-6.0-Base-SelfInstall-GM2.install.iso
  outputImageName: eib-mgmt-cluster-image.iso

where baseImage is the original image you downloaded from the SUSE Customer Center or the SUSE Download page, and outputImageName is the name of the new image that will be used to provision the management cluster.

Operating system section (definition file):

operatingSystem:
  isoConfiguration:
    installDevice: /dev/sda
  users:
    - username: root
      encryptedPassword: ${ROOT_PASSWORD}
  packages:
    packageList:
      - jq
    sccRegistrationCode: ${SCC_REGISTRATION_CODE}

where installDevice is the device on which the operating system is installed, username and encryptedPassword are the credentials used to access the system, packageList is the list of packages to be installed (jq is required internally during the installation process), and sccRegistrationCode is the registration code used to get the packages and dependencies at build time, which can be obtained from the SUSE Customer Center.

The encrypted password can be generated using the openssl command as follows:

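openssl passwd -6 'MyPassword!123'

The password is enclosed in single quotes so that an interactive Bash shell does not apply history expansion to the ! character. This outputs something similar to:

$6$UrXB1sAGs46DOiSq$HSwi9GFJLCorm0J53nF2Sq8YEoyINhHcObHzX2R8h13mswUIsMwzx4eUzn/rRx0QPV4JIb0eWCoNrxGiKH4R31

Kubernetes section (definition file):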

kubernetes:
  version: ${KUBERNETES_VERSION}
  helm:
    charts:
      - name: cert-manager
        repositoryName: jetstack
        version: 1.15.3
        targetNamespace: cert-manager
        valuesFile: certmanager.yaml
        createNamespace: true
        installationNamespace: kube-system
      - name: longhorn-crd
        version: 104.2.2+up1.7.3
        repositoryName: rancher-charts
        targetNamespace: longhorn-system
        createNamespace: true
        installationNamespace: kube-system
      - name: longhorn
        version: 104.2.2+up1.7.3
        repositoryName: rancher-charts
        targetNamespace: longhorn-system
        createNamespace: true
        installationNamespace: kube-system
      - name: metal3-chart
        version: 0.8.3
        repositoryName: suse-edge-charts
        targetNamespace: metal3-system
        createNamespace: true
        installationNamespace: kube-system
        valuesFile: metal3.yaml
      - name: rancher-turtles-chart
        version: 0.3.3
        repositoryName: suse-edge-charts
        targetNamespace: rancher-turtles-system
        createNamespace: true
        installationNamespace: kube-system
      - name: neuvector-crd
        version: 104.0.8+up2.8.8
        repositoryName: rancher-charts
        targetNamespace: neuvector
        createNamespace: true
        installationNamespace: kube-system
        valuesFile: neuvector.yaml
      - name: neuvector
        version: 104.0.8+up2.8.8
        repositoryName: rancher-charts
        targetNamespace: neuvector
        createNamespace: true
        installationNamespace: kube-system
        valuesFile: neuvector.yaml
      - name: rancher
        version: 2.9.12
        repositoryName: rancher-prime
        targetNamespace: cattle-system
        createNamespace: true
        installationNamespace: kube-system
        valuesFile: rancher.yaml
    repositories:
      - name: jetstack
        url: https://charts.jetstack.io
      - name: rancher-charts
        url: https://charts.rancher.io/
      - name: suse-edge-charts
        url: oci://registry.suse.com/edge/3.1
      - name: rancher-prime
        url: https://charts.rancher.com/server-charts/prime
  network:
    apiHost: ${API_HOST}
    apiVIP: ${API_VIP}
  nodes:
    - hostname: mgmt-cluster-node1
      initializer: true
      type: server
    # - hostname: mgmt-cluster-node2
    #   type: server
    # - hostname: mgmt-cluster-node3
    #   type: server

where version is the version of Kubernetes to be installed. In our case, we are using an RKE2 cluster, so the minor version must be lower than 1.31 to be compatible with Rancher 2.9 (for example, v1.30.11+rke2r1).
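To catch a version that is too new before a long image build, you can check the KUBERNETES_VERSION value with a small helper. This is a hypothetical sketch. It only parses strings shaped like v1.30.11+rke2r1, and the highest supported minor version is passed in as a parameter rather than hard-coded, so take that value from the support matrix of the Rancher release you deploy.

```shell
#!/bin/bash
# Check that an RKE2 version string (for example v1.30.11+rke2r1) does not
# exceed a given Kubernetes minor version.
# Usage: check_k8s_minor <version> <max-minor>
check_k8s_minor() {
  local version="$1" max_minor="$2"
  if [[ ! "$version" =~ ^v1\.([0-9]+)\.[0-9]+\+rke2r[0-9]+$ ]]; then
    echo "Unexpected version format: '$version'" >&2
    return 1
  fi
  local minor="${BASH_REMATCH[1]}"
  if (( 10#$minor > 10#$max_minor )); then
    echo "Kubernetes 1.${minor} is newer than the supported 1.${max_minor}" >&2
    return 1
  fi
}

# Example:
# check_k8s_minor "${KUBERNETES_VERSION}" 30
```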

The helm section contains the list of Helm charts to be installed, the repositories to be used, and the version configuration for all of them.

The network section contains the configuration for the network, like the apiHost and apiVIP to be used by the RKE2 component.

The apiVIP should be an IP address that is not used in the network and is not part of the DHCP pool (if DHCP is used). In a multi-node cluster, the apiVIP is used to access the Kubernetes API server.
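You can check whether a candidate apiVIP falls inside the DHCP pool by comparing addresses as 32-bit integers. This is a minimal sketch. It assumes that you know the first and last addresses of the pool and that the inputs are valid IPv4 addresses. The function names and example values are hypothetical.

```shell
#!/bin/bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ipv4_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (10#${o[0]} << 24) | (10#${o[1]} << 16) | (10#${o[2]} << 8) | 10#${o[3]} ))
}

# Return 0 if address $1 lies within the inclusive range $2..$3.
ip_in_range() {
  local ip start end
  ip="$(ipv4_to_int "$1")"
  start="$(ipv4_to_int "$2")"
  end="$(ipv4_to_int "$3")"
  (( ip >= start && ip <= end ))
}

# Example: warn if the API VIP overlaps a DHCP pool 192.168.122.100-192.168.122.200
# if ip_in_range "$API_VIP" 192.168.122.100 192.168.122.200; then
#   echo "apiVIP is inside the DHCP pool, pick another address" >&2
# fi
```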

The apiHost is the host name that resolves to the apiVIP, to be used by the RKE2 component.

The nodes section contains the list of nodes to be used in the cluster. In this example, a single-node cluster is used, but it can be extended to a multi-node cluster by adding more nodes to the list (by uncommenting the lines).

The names of the nodes must be unique in the cluster.

Optionally, use the initializer field to specify the bootstrap host; otherwise, the first node in the list is used.

The names of the nodes must be the same as the host names defined in the Network Folder (Section 35.3.5, “Networking folder”) when network configuration is required.
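The definition file, and several of the files described in the next sections, contain ${...} placeholders such as ${ROOT_PASSWORD}, ${API_VIP} or ${INGRESS_VIP}. This section does not show how they get their values. One option, assumed here, is to substitute them yourself before running EIB. The following sketch does that in plain Bash for an explicit list of variable names, so that any other ${...} text (for example, ${KUBECTL} in the custom scripts) is left untouched. The function name and the output file name in the example are hypothetical.

```shell
#!/bin/bash
# Replace ${NAME} placeholders in a template with the values of the shell
# variables of the same name. Only the names passed as arguments are
# substituted; any other ${...} text is kept as-is.
# Usage: render_template <template> NAME [NAME...] > <output>
render_template() {
  local template="$1" name placeholder value content
  shift
  [[ -f "$template" ]] || { echo "Template not found: $template" >&2; return 1; }
  content="$(< "$template")"
  for name in "$@"; do
    if [[ ! -v $name ]]; then
      echo "Variable $name is not set" >&2
      return 1
    fi
    placeholder="\${${name}}"
    value="${!name}"
    # Quoting pattern and replacement makes both literal (no globbing, no '&')
    content=${content//"$placeholder"/"$value"}
  done
  printf '%s\n' "$content"
}

# Example (ROOT_PASSWORD holds the hash produced by openssl passwd -6):
# ROOT_PASSWORD="$(openssl passwd -6 'MyPassword!123')"
# render_template mgmt-cluster.yaml ROOT_PASSWORD SCC_REGISTRATION_CODE \
#   KUBERNETES_VERSION API_HOST API_VIP > mgmt-cluster.rendered.yaml
```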

35.3.3 Custom folder

The custom folder contains the following subfolders:

...
├── custom
│   ├── scripts
│   │   ├── 99-register.sh
│   │   ├── 99-mgmt-setup.sh
│   │   └── 99-alias.sh
│   └── files
│       ├── rancher.sh
│       ├── mgmt-stack-setup.service
│       ├── metal3.sh
│       └── basic-setup.sh
...

The custom/files folder contains the configuration files to be used by the management cluster.

The custom/scripts folder contains the scripts to be used by the management cluster.

The custom/files folder contains the following files:

basic-setup.sh: contains the configuration parameters for the Metal3 component, as well as the Rancher and MetalLB basic parameters (the namespaces to be used and the final Rancher admin password). Only modify this file if you want to change the namespaces to be used or the Rancher admin password.

#!/bin/bash
# Pre-requisites. Cluster already running
export KUBECTL="/var/lib/rancher/rke2/bin/kubectl"
export KUBECONFIG="/etc/rancher/rke2/rke2.yaml"

##################
# METAL3 DETAILS #
##################
export METAL3_CHART_TARGETNAMESPACE="metal3-system"

###########
# METALLB #
###########
export METALLBNAMESPACE="metallb-system"

###########
# RANCHER #
###########
export RANCHER_CHART_TARGETNAMESPACE="cattle-system"
export RANCHER_FINALPASSWORD="adminadminadmin"

die(){
  echo ${1} 1>&2
  exit ${2}
}

metal3.sh: contains the configuration for the Metal3 component to be used (no modifications needed). In future versions, this script will be replaced by Rancher Turtles to make the setup easier.

#!/bin/bash
set -euo pipefail

BASEDIR="$(dirname "$0")"
source ${BASEDIR}/basic-setup.sh

METAL3LOCKNAMESPACE="default"
METAL3LOCKCMNAME="metal3-lock"

trap 'catch $? $LINENO' EXIT

catch() {
  if [ "$1" != "0" ]; then
    echo "Error $1 occurred on $2"
    ${KUBECTL} delete configmap ${METAL3LOCKCMNAME} -n ${METAL3LOCKNAMESPACE}
  fi
}

# Get or create the lock to run all those steps just in a single node
# As the first node is created WAY before the others, this should be enough
# TODO: Investigate if leases is better
if [ $(${KUBECTL} get cm -n ${METAL3LOCKNAMESPACE} ${METAL3LOCKCMNAME} -o name | wc -l) -lt 1 ]; then
  ${KUBECTL} create configmap ${METAL3LOCKCMNAME} -n ${METAL3LOCKNAMESPACE} --from-literal foo=bar
else
  exit 0
fi

# Wait for metal3
while ! ${KUBECTL} wait --for condition=ready -n ${METAL3_CHART_TARGETNAMESPACE} $(${KUBECTL} get pods -n ${METAL3_CHART_TARGETNAMESPACE} -l app.kubernetes.io/name=metal3-ironic -o name) --timeout=10s; do sleep 2 ; done

# Get the ironic IP
IRONICIP=$(${KUBECTL} get cm -n ${METAL3_CHART_TARGETNAMESPACE} ironic-bmo -o jsonpath='{.data.IRONIC_IP}')

# If LoadBalancer, use metallb, else it is NodePort
if [ $(${KUBECTL} get svc -n ${METAL3_CHART_TARGETNAMESPACE} metal3-metal3-ironic -o jsonpath='{.spec.type}') == "LoadBalancer" ]; then
  # Wait for metallb
  while ! ${KUBECTL} wait --for condition=ready -n ${METALLBNAMESPACE} $(${KUBECTL} get pods -n ${METALLBNAMESPACE} -l app.kubernetes.io/component=controller -o name) --timeout=10s; do sleep 2 ; done

  # Do not create the ippool if already created
  ${KUBECTL} get ipaddresspool -n ${METALLBNAMESPACE} ironic-ip-pool -o name || cat <<-EOF | ${KUBECTL} apply -f -
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: ironic-ip-pool
  namespace: ${METALLBNAMESPACE}
spec:
  addresses:
  - ${IRONICIP}/32
  serviceAllocation:
    priority: 100
    serviceSelectors:
    - matchExpressions:
      - {key: app.kubernetes.io/name, operator: In, values: [metal3-ironic]}
EOF

  # Same for L2 Advs
  ${KUBECTL} get L2Advertisement -n ${METALLBNAMESPACE} ironic-ip-pool-l2-adv -o name || cat <<-EOF | ${KUBECTL} apply -f -
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: ironic-ip-pool-l2-adv
  namespace: ${METALLBNAMESPACE}
spec:
  ipAddressPools:
  - ironic-ip-pool
EOF
fi

# If rancher is deployed
if [ $(${KUBECTL} get pods -n ${RANCHER_CHART_TARGETNAMESPACE} -l app=rancher -o name | wc -l) -ge 1 ]; then
  cat <<-EOF | ${KUBECTL} apply -f -
apiVersion: management.cattle.io/v3
kind: Feature
metadata:
  name: embedded-cluster-api
spec:
  value: false
EOF

  # Disable Rancher webhooks for CAPI
  ${KUBECTL} delete --ignore-not-found=true mutatingwebhookconfiguration.admissionregistration.k8s.io mutating-webhook-configuration
  ${KUBECTL} delete --ignore-not-found=true validatingwebhookconfigurations.admissionregistration.k8s.io validating-webhook-configuration
  ${KUBECTL} wait --for=delete namespace/cattle-provisioning-capi-system --timeout=300s
fi

# Clean up the lock cm
${KUBECTL} delete configmap ${METAL3LOCKCMNAME} -n ${METAL3LOCKNAMESPACE}

rancher.sh: contains the configuration for the Rancher component to be used (no modifications needed).

#!/bin/bash
set -euo pipefail

BASEDIR="$(dirname "$0")"
source ${BASEDIR}/basic-setup.sh

RANCHERLOCKNAMESPACE="default"
RANCHERLOCKCMNAME="rancher-lock"

if [ -z "${RANCHER_FINALPASSWORD}" ]; then
  # If there is no final password, then finish the setup right away
  exit 0
fi

trap 'catch $? $LINENO' EXIT

catch() {
  if [ "$1" != "0" ]; then
    echo "Error $1 occurred on $2"
    ${KUBECTL} delete configmap ${RANCHERLOCKCMNAME} -n ${RANCHERLOCKNAMESPACE}
  fi
}

# Get or create the lock to run all those steps just in a single node
# As the first node is created WAY before the others, this should be enough
# TODO: Investigate if leases is better
if [ $(${KUBECTL} get cm -n ${RANCHERLOCKNAMESPACE} ${RANCHERLOCKCMNAME} -o name | wc -l) -lt 1 ]; then
  ${KUBECTL} create configmap ${RANCHERLOCKCMNAME} -n ${RANCHERLOCKNAMESPACE} --from-literal foo=bar
else
  exit 0
fi

# Wait for rancher to be deployed
while ! ${KUBECTL} wait --for condition=ready -n ${RANCHER_CHART_TARGETNAMESPACE} $(${KUBECTL} get pods -n ${RANCHER_CHART_TARGETNAMESPACE} -l app=rancher -o name) --timeout=10s; do sleep 2 ; done
until ${KUBECTL} get ingress -n ${RANCHER_CHART_TARGETNAMESPACE} rancher > /dev/null 2>&1; do sleep 10; done

RANCHERBOOTSTRAPPASSWORD=$(${KUBECTL} get secret -n ${RANCHER_CHART_TARGETNAMESPACE} bootstrap-secret -o jsonpath='{.data.bootstrapPassword}' | base64 -d)
RANCHERHOSTNAME=$(${KUBECTL} get ingress -n ${RANCHER_CHART_TARGETNAMESPACE} rancher -o jsonpath='{.spec.rules[0].host}')

# Skip the whole process if things have been set already
if [ -z $(${KUBECTL} get settings.management.cattle.io first-login -ojsonpath='{.value}') ]; then
  # Add the protocol
  RANCHERHOSTNAME="https://${RANCHERHOSTNAME}"
  TOKEN=""
  while [ -z "${TOKEN}" ]; do
    # Get token
    sleep 2
    TOKEN=$(curl -sk -X POST ${RANCHERHOSTNAME}/v3-public/localProviders/local?action=login -H 'content-type: application/json' -d "{\"username\":\"admin\",\"password\":\"${RANCHERBOOTSTRAPPASSWORD}\"}" | jq -r .token)
  done

  # Set password
  curl -sk ${RANCHERHOSTNAME}/v3/users?action=changepassword -H 'content-type: application/json' -H "Authorization: Bearer $TOKEN" -d "{\"currentPassword\":\"${RANCHERBOOTSTRAPPASSWORD}\",\"newPassword\":\"${RANCHER_FINALPASSWORD}\"}"

  # Create a temporary API token (ttl=60 minutes)
  APITOKEN=$(curl -sk ${RANCHERHOSTNAME}/v3/token -H 'content-type: application/json' -H "Authorization: Bearer ${TOKEN}" -d '{"type":"token","description":"automation","ttl":3600000}' | jq -r .token)

  curl -sk ${RANCHERHOSTNAME}/v3/settings/server-url -H 'content-type: application/json' -H "Authorization: Bearer ${APITOKEN}" -X PUT -d "{\"name\":\"server-url\",\"value\":\"${RANCHERHOSTNAME}\"}"
  curl -sk ${RANCHERHOSTNAME}/v3/settings/telemetry-opt -X PUT -H 'content-type: application/json' -H 'accept: application/json' -H "Authorization: Bearer ${APITOKEN}" -d '{"value":"out"}'
fi

# Clean up the lock cm
${KUBECTL} delete configmap ${RANCHERLOCKCMNAME} -n ${RANCHERLOCKNAMESPACE}

mgmt-stack-setup.service: contains the configuration to create the systemd service that runs the scripts during the first boot (no modifications needed).

[Unit]
Description=Setup Management stack components
Wants=network-online.target
# It requires rke2 or k3s running, but it will not fail if those services are not present
After=network.target network-online.target rke2-server.service k3s.service
# At least, the basic-setup.sh one needs to be present
ConditionPathExists=/opt/mgmt/bin/basic-setup.sh

[Service]
User=root
Type=forking
# Metal3 can take A LOT to download the IPA image
TimeoutStartSec=1800

ExecStartPre=/bin/sh -c "echo 'Setting up Management components...'"
# Scripts are executed in StartPre because Start can only run a single one
ExecStartPre=/opt/mgmt/bin/rancher.sh
ExecStartPre=/opt/mgmt/bin/metal3.sh
ExecStart=/bin/sh -c "echo 'Finished setting up Management components'"
RemainAfterExit=yes
KillMode=process
# Disable & delete everything
ExecStartPost=rm -f /opt/mgmt/bin/rancher.sh
ExecStartPost=rm -f /opt/mgmt/bin/metal3.sh
ExecStartPost=rm -f /opt/mgmt/bin/basic-setup.sh
ExecStartPost=/bin/sh -c "systemctl disable mgmt-stack-setup.service"
ExecStartPost=rm -f /etc/systemd/system/mgmt-stack-setup.service

[Install]
WantedBy=multi-user.target

The custom/scripts folder contains the following files:

99-alias.sh script: contains the aliases and environment used by the management cluster to load the kubeconfig file at first boot (no modifications needed).

#!/bin/bash
echo "alias k=kubectl" >> /etc/profile.local
echo "alias kubectl=/var/lib/rancher/rke2/bin/kubectl" >> /etc/profile.local
echo "export KUBECONFIG=/etc/rancher/rke2/rke2.yaml" >> /etc/profile.local

99-mgmt-setup.sh script: contains the configuration to copy the scripts during the first boot (no modifications needed).

#!/bin/bash

# Copy the scripts from combustion to the final location
mkdir -p /opt/mgmt/bin/
for script in basic-setup.sh rancher.sh metal3.sh; do
  cp ${script} /opt/mgmt/bin/
done

# Copy the systemd unit file and enable it at boot
cp mgmt-stack-setup.service /etc/systemd/system/mgmt-stack-setup.service
systemctl enable mgmt-stack-setup.service

99-register.sh script: contains the configuration to register the system using the SCC registration code. The ${SCC_ACCOUNT_EMAIL} and ${SCC_REGISTRATION_CODE} have to be set properly to register the system with your account.

#!/bin/bash
set -euo pipefail

# Registration https://www.suse.com/support/kb/doc/?id=000018564
if ! which SUSEConnect > /dev/null 2>&1; then
  zypper --non-interactive install suseconnect-ng
fi
SUSEConnect --email "${SCC_ACCOUNT_EMAIL}" --url "https://scc.suse.com" --regcode "${SCC_REGISTRATION_CODE}"

35.3.4 Kubernetes folder

The kubernetes folder contains the following subfolders:

...
├── kubernetes
│   ├── manifests
│   │   ├── rke2-ingress-config.yaml
│   │   ├── neuvector-namespace.yaml
│   │   ├── ingress-l2-adv.yaml
│   │   └── ingress-ippool.yaml
│   ├── helm
│   │   └── values
│   │       ├── rancher.yaml
│   │       ├── neuvector.yaml
│   │       ├── metal3.yaml
│   │       └── certmanager.yaml
│   └── config
│       └── server.yaml
...

The kubernetes/config folder contains the following files:

server.yaml: By default, the CNI plug-in installed is Cilium, so you do not need to create this folder and file. If you need to customize the CNI plug-in, you can use the server.yaml file under the kubernetes/config folder. It contains the following information:

cni:
  - multus
  - cilium

This optional file defines certain Kubernetes customizations, such as the CNI plug-ins to be used, as well as many other options described in the official RKE2 documentation.

The kubernetes/manifests folder contains the following files:

rke2-ingress-config.yaml: contains the configuration to create the Ingress service for the management cluster (no modifications needed).

apiVersion: helm.cattle.io/v1
kind: HelmChartConfig
metadata:
  name: rke2-ingress-nginx
  namespace: kube-system
spec:
  valuesContent: |-
    controller:
      config:
        use-forwarded-headers: "true"
        enable-real-ip: "true"
      publishService:
        enabled: true
      service:
        enabled: true
        type: LoadBalancer
        externalTrafficPolicy: Local

neuvector-namespace.yaml: contains the configuration to create the NeuVector namespace (no modifications needed).

apiVersion: v1
kind: Namespace
metadata:
  labels:
    pod-security.kubernetes.io/enforce: privileged
  name: neuvector

ingress-l2-adv.yaml: contains the configuration to create the L2Advertisement for the MetalLB component (no modifications needed).

apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: ingress-l2-adv
  namespace: metallb-system
spec:
  ipAddressPools:
    - ingress-ippool

ingress-ippool.yaml: contains the configuration to create the IPAddressPool for the rke2-ingress-nginx component. The ${INGRESS_VIP} has to be set properly to define the IP address reserved to be used by the rke2-ingress-nginx component.

apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: ingress-ippool
  namespace: metallb-system
spec:
  addresses:
    - ${INGRESS_VIP}/32
  serviceAllocation:
    priority: 100
    serviceSelectors:
      - matchExpressions:
          - {key: app.kubernetes.io/name, operator: In, values: [rke2-ingress-nginx]}

The kubernetes/helm/values folder contains the following files:

rancher.yaml: contains the configuration to create the Rancher component. The ${INGRESS_VIP} must be set properly to define the IP address to be consumed by the Rancher component. The URL to access the Rancher component will be https://rancher-${INGRESS_VIP}.sslip.io.

hostname: rancher-${INGRESS_VIP}.sslip.io
bootstrapPassword: "foobar"
replicas: 1
global.cattle.psp.enabled: "false"

neuvector.yaml: contains the configuration to create the NeuVector component (no modifications needed).

controller:
  replicas: 1
  ranchersso:
    enabled: true
manager:
  enabled: false
cve:
  scanner:
    enabled: false
    replicas: 1
k3s:
  enabled: true
crdwebhook:
  enabled: false

metal3.yaml: contains the configuration to create the Metal3 component. The ${METAL3_VIP} must be set properly to define the IP address to be consumed by the Metal3 component.

global:
  ironicIP: ${METAL3_VIP}
  enable_vmedia_tls: false
  additionalTrustedCAs: false
metal3-ironic:
  global:
    predictableNicNames: "true"
  persistence:
    ironic:
      size: "5Gi"

The media server is an optional feature included in Metal3 (disabled by default). To use the Metal3 media server, configure it in the previous manifest as follows:

- Set enable_metal3_media_server to true in the global section to enable the media server feature.

- Include the following configuration for the media server, where ${MEDIA_VOLUME_PATH} is the path to the media volume on the host (for example, /home/metal3/bmh-image-cache):

metal3-media:
  mediaVolume:
    hostPath: ${MEDIA_VOLUME_PATH}
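Putting the two changes together, you could write a metal3.yaml with the media server enabled as follows. This sketch simply combines the metal3.yaml values shown earlier with the two settings above. The function name is hypothetical, and both METAL3_VIP and MEDIA_VOLUME_PATH are expected to be set beforehand.

```shell
#!/bin/bash
# Write kubernetes/helm/values/metal3.yaml (relative to the EIB directory given
# as $1) with the Metal3 media server enabled. METAL3_VIP and MEDIA_VOLUME_PATH
# must be set; the unquoted heredoc delimiter lets the shell expand them.
write_metal3_values_with_media() {
  local eib_dir="$1"
  if [[ -z "${METAL3_VIP:-}" || -z "${MEDIA_VOLUME_PATH:-}" ]]; then
    echo "METAL3_VIP and MEDIA_VOLUME_PATH must be set" >&2
    return 1
  fi
  mkdir -p "$eib_dir/kubernetes/helm/values"
  cat > "$eib_dir/kubernetes/helm/values/metal3.yaml" <<EOF
global:
  ironicIP: ${METAL3_VIP}
  enable_vmedia_tls: false
  additionalTrustedCAs: false
  enable_metal3_media_server: true
metal3-ironic:
  global:
    predictableNicNames: "true"
  persistence:
    ironic:
      size: "5Gi"
metal3-media:
  mediaVolume:
    hostPath: ${MEDIA_VOLUME_PATH}
EOF
}

# Example:
# export METAL3_VIP=192.168.122.12 MEDIA_VOLUME_PATH=/home/metal3/bmh-image-cache
# write_metal3_values_with_media eib
```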

An external media server can be used to store the images. If you want to use it with TLS, you need to modify the following configurations:

- Set additionalTrustedCAs to true in the previous metal3.yaml file to enable the additional trusted CAs from the external media server.

- Include the following secret configuration in the file kubernetes/manifests/metal3-cacert-secret.yaml to store the CA certificate of the external media server.

apiVersion: v1
kind: Namespace
metadata:
  name: metal3-system
---
apiVersion: v1
kind: Secret
metadata:
  name: tls-ca-additional
  namespace: metal3-system
type: Opaque
data:
  ca-additional.crt: {{ additional_ca_cert | b64encode }}

The additional_ca_cert is the CA certificate of the external media server, and the b64encode filter base64-encodes it as required by the data field of a Secret. Alternatively, you can create the secret manually with the following command, which encodes the certificate for you:

kubectl -n metal3-system create secret generic tls-ca-additional --from-file=ca-additional.crt=./ca-additional.crt

certmanager.yaml: contains the configuration to create the Cert-Manager component (no modifications needed).

installCRDs: "true"

35.3.5 Networking folder

The network folder contains as many files as nodes in the management cluster. In our case, we have only one node, so we have only one file called mgmt-cluster-node1.yaml.

The name of the file must match the host name defined in the nodes section of the mgmt-cluster.yaml definition file described above (for example, mgmt-cluster-node1.yaml for the host mgmt-cluster-node1).
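Because these names must match, a quick consistency check before building can save a failed deployment. This only matters when you provide network files. The sketch below is a rough, hypothetical helper. It extracts the hostname values with grep and sed rather than a real YAML parser, so it assumes the "- hostname: <name>" layout used in the definition file above and skips commented-out nodes.

```shell
#!/bin/bash
# Check that every node hostname in the definition file has a matching
# network/<hostname>.yaml file. Text-based, not a YAML parser.
# Usage: check_network_files <eib-dir> [definition-file-name]
check_network_files() {
  local eib_dir="$1" definition="${2:-mgmt-cluster.yaml}" host missing=0
  while read -r host; do
    if [[ ! -f "$eib_dir/network/${host}.yaml" ]]; then
      echo "Missing network file for node: ${host}" >&2
      missing=1
    fi
  done < <(grep -E '^[[:space:]]*-[[:space:]]+hostname:' "$eib_dir/$definition" \
             | sed -E 's/^[[:space:]]*-[[:space:]]+hostname:[[:space:]]*//')
  return "$missing"
}

# Example:
# check_network_files eib && echo "network files match the node host names"
```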

If you need to customize the networking configuration, for example, to use a specific static IP address (DHCP-less scenario), you can use the mgmt-cluster-node1.yaml file under the network folder. It contains the following information:

- ${MGMT_GATEWAY}: The gateway IP address.

- ${MGMT_DNS}: The DNS server IP address.

- ${MGMT_MAC}: The MAC address of the network interface.

- ${MGMT_NODE_IP}: The IP address of the management cluster node.

routes:
  config:
    - destination: 0.0.0.0/0
      metric: 100
      next-hop-address: ${MGMT_GATEWAY}
      next-hop-interface: eth0
      table-id: 254
dns-resolver:
  config:
    server:
      - ${MGMT_DNS}
      - 8.8.8.8
interfaces:
  - name: eth0
    type: ethernet
    state: up
    mac-address: ${MGMT_MAC}
    ipv4:
      address:
        - ip: ${MGMT_NODE_IP}
          prefix-length: 24
      dhcp: false
      enabled: true
    ipv6:
      enabled: false

If you want to use DHCP to get the IP address, you can use the following configuration (the MAC address must be set properly using the ${MGMT_MAC} variable):

## This is an example of a dhcp network configuration for a management cluster
interfaces:
- name: eth0
  type: ethernet
  state: up
  mac-address: ${MGMT_MAC}
  ipv4:
    dhcp: true
    enabled: true
  ipv6:
    enabled: false

Depending on the number of nodes in the management cluster, you can create more files like mgmt-cluster-node2.yaml, mgmt-cluster-node3.yaml, etc. to configure the rest of the nodes.

The routes section is used to define the routing table for the management cluster.
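When several nodes use static addresses, writing one file per node by hand is repetitive. The following Bash sketch renders the static-IP layout shown above (eth0, /24 prefix, 8.8.8.8 as secondary DNS) into network/<hostname>.yaml with the ${MGMT_...} placeholders replaced by concrete values. It assumes you substitute the values yourself before building; the function name render_static_node_config and the example addresses are hypothetical.

```shell
# Hypothetical helper: write <network_dir>/<hostname>.yaml for a node with a
# static IPv4 address, following the nmstate layout shown above.
render_static_node_config() {
  local network_dir="$1" hostname="$2" mac="$3" node_ip="$4" gateway="$5" dns="$6"
  mkdir -p "$network_dir"
  # Unquoted EOF: the shell expands ${gateway}, ${dns}, ${mac}, ${node_ip}.
  cat > "$network_dir/$hostname.yaml" <<EOF
routes:
  config:
  - destination: 0.0.0.0/0
    metric: 100
    next-hop-address: ${gateway}
    next-hop-interface: eth0
    table-id: 254
dns-resolver:
  config:
    server:
    - ${dns}
    - 8.8.8.8
interfaces:
- name: eth0
  type: ethernet
  state: up
  mac-address: ${mac}
  ipv4:
    address:
    - ip: ${node_ip}
      prefix-length: 24
    dhcp: false
    enabled: true
  ipv6:
    enabled: false
EOF
}
```

For example, `render_static_node_config network mgmt-cluster-node2 52:54:00:aa:bb:02 192.168.122.11 192.168.122.1 192.168.122.1` writes network/mgmt-cluster-node2.yaml; the file name follows the host name, as required above.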

35.4 Image preparation for air-gap environments #

This section describes how to prepare the image for air-gap environments showing only the differences from the previous sections. The following changes to the previous section (Image preparation for connected environments (Section 35.3, “Image preparation for connected environments”)) are required to prepare the image for air-gap environments:

The mgmt-cluster.yaml file must be modified to include the embeddedArtifactRegistry section with the images field set to all container images to be included in the EIB output image.

The mgmt-cluster.yaml file must be modified to include the rancher-turtles-airgap-resources helm chart.

The custom/scripts/99-register.sh script must be removed when using an air-gap environment.
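The images list for embeddedArtifactRegistry is long, so it can be convenient to keep the image references in a plain text file and generate the YAML from it. The following Bash sketch is a hypothetical helper (render_embedded_registry is not an EIB command); it assumes one image reference per line and skips blank lines and lines starting with `#`.

```shell
# Hypothetical helper: read one image reference per line and print an
# embeddedArtifactRegistry section for the EIB definition file.
render_embedded_registry() {
  local image_list="$1" image
  echo "embeddedArtifactRegistry:"
  echo "  images:"
  # "|| [ -n ... ]" also processes a last line without a trailing newline.
  while IFS= read -r image || [ -n "$image" ]; do
    # Trim leading and trailing whitespace.
    image="$(printf '%s' "$image" | sed 's/^[[:space:]]*//;s/[[:space:]]*$//')"
    case "$image" in
      ''|'#'*) continue ;;
    esac
    echo "    - name: $image"
  done < "$image_list"
}
```

For example, `render_embedded_registry images.txt` prints the section, which you can then paste into mgmt-cluster.yaml.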

35.4.1 Modifications in the definition file #

The mgmt-cluster.yaml file must be modified to include the embeddedArtifactRegistry section, with the images field listing all container images to be included in the EIB output image.

The rancher-turtles-airgap-resources helm chart must also be added. It creates the resources described in the Rancher Turtles Airgap Documentation, and it also requires a turtles.yaml values file for the rancher-turtles chart to specify the necessary configuration.

The following is an example of the mgmt-cluster.yaml file with the embeddedArtifactRegistry section and the rancher-turtles-airgap-resources chart included:

apiVersion: 1.0
image:
  imageType: iso
  arch: x86_64
  baseImage: SL-Micro.x86_64-6.0-Base-SelfInstall-GM2.install.iso
  outputImageName: eib-mgmt-cluster-image.iso
operatingSystem:
  isoConfiguration:
    installDevice: /dev/sda
  users:
    - username: root
      encryptedPassword: ${ROOT_PASSWORD}
  packages:
    packageList:
      - jq
    sccRegistrationCode: ${SCC_REGISTRATION_CODE}
kubernetes:
  version: ${KUBERNETES_VERSION}
  helm:
    charts:
      - name: cert-manager
        repositoryName: jetstack
        version: 1.15.3
        targetNamespace: cert-manager
        valuesFile: certmanager.yaml
        createNamespace: true
        installationNamespace: kube-system
      - name: longhorn-crd
        version: 104.2.2+up1.7.3
        repositoryName: rancher-charts
        targetNamespace: longhorn-system
        createNamespace: true
        installationNamespace: kube-system
      - name: longhorn
        version: 104.2.2+up1.7.3
        repositoryName: rancher-charts
        targetNamespace: longhorn-system
        createNamespace: true
        installationNamespace: kube-system
      - name: metal3-chart
        version: 0.8.3
        repositoryName: suse-edge-charts
        targetNamespace: metal3-system
        createNamespace: true
        installationNamespace: kube-system
        valuesFile: metal3.yaml
      - name: rancher-turtles-chart
        version: 0.3.3
        repositoryName: suse-edge-charts
        targetNamespace: rancher-turtles-system
        createNamespace: true
        installationNamespace: kube-system
        valuesFile: turtles.yaml
      - name: rancher-turtles-airgap-resources-chart
        version: 0.3.3
        repositoryName: suse-edge-charts
        targetNamespace: rancher-turtles-system
        createNamespace: true
        installationNamespace: kube-system
      - name: neuvector-crd
        version: 104.0.8+up2.8.8
        repositoryName: rancher-charts
        targetNamespace: neuvector
        createNamespace: true
        installationNamespace: kube-system
        valuesFile: neuvector.yaml
      - name: neuvector
        version: 104.0.8+up2.8.8
        repositoryName: rancher-charts
        targetNamespace: neuvector
        createNamespace: true
        installationNamespace: kube-system
        valuesFile: neuvector.yaml
      - name: rancher
        version: 2.9.12
        repositoryName: rancher-prime
        targetNamespace: cattle-system
        createNamespace: true
        installationNamespace: kube-system
        valuesFile: rancher.yaml
    repositories:
      - name: jetstack
        url: https://charts.jetstack.io
      - name: rancher-charts
        url: https://charts.rancher.io/
      - name: suse-edge-charts
        url: oci://registry.suse.com/edge/3.1
      - name: rancher-prime
        url: https://charts.rancher.com/server-charts/prime
  network:
    apiHost: ${API_HOST}
    apiVIP: ${API_VIP}
  nodes:
    - hostname: mgmt-cluster-node1
      initializer: true
      type: server
#   - hostname: mgmt-cluster-node2
#     type: server
#   - hostname: mgmt-cluster-node3
#     type: server
embeddedArtifactRegistry:
  images:
    - name: registry.rancher.com/rancher/backup-restore-operator:v5.0.4
    - name: registry.rancher.com/rancher/calico-cni:v3.28.1-rancher1
    - name: registry.rancher.com/rancher/cis-operator:v1.2.6
    - name: registry.rancher.com/rancher/flannel-cni:v1.4.1-rancher1
    - name: registry.rancher.com/rancher/fleet-agent:v0.10.16
    - name: registry.rancher.com/rancher/fleet:v0.10.16
    - name: registry.rancher.com/rancher/hardened-addon-resizer:1.8.23-build20250612
    - name: registry.rancher.com/rancher/hardened-calico:v3.30.2-build20250711
    - name: registry.rancher.com/rancher/hardened-cluster-autoscaler:v1.10.2-build20250611
    - name: registry.rancher.com/rancher/hardened-cni-plugins:v1.7.1-build20250611
    - name: registry.rancher.com/rancher/hardened-coredns:v1.12.2-build20250611
    - name: registry.rancher.com/rancher/hardened-dns-node-cache:1.26.0-build20250611
    - name: registry.rancher.com/rancher/hardened-etcd:v3.5.21-k3s1-build20250612
    - name: registry.rancher.com/rancher/hardened-flannel:v0.27.1-build20250710
    - name: registry.rancher.com/rancher/hardened-k8s-metrics-server:v0.8.0-build20250704
    - name: registry.rancher.com/rancher/hardened-kubernetes:v1.30.14-rke2r4-build20250911
    - name: registry.rancher.com/rancher/hardened-multus-cni:v4.2.1-build20250627
    - name: registry.rancher.com/rancher/hardened-node-feature-discovery:v0.15.6-build20240822
    - name: registry.rancher.com/rancher/hardened-whereabouts:v0.9.1-build20250704
    - name: registry.rancher.com/rancher/helm-project-operator:v0.2.1
    - name: registry.rancher.com/rancher/k3s-upgrade:v1.30.14-k3s2
    - name: registry.rancher.com/rancher/klipper-helm:v0.9.8-build20250709
    - name: registry.rancher.com/rancher/klipper-lb:v0.4.13
    - name: registry.rancher.com/rancher/kube-api-auth:v0.2.2
    - name: registry.rancher.com/rancher/kubectl:v1.30.5
    - name: registry.rancher.com/rancher/local-path-provisioner:v0.0.31
    - name: registry.rancher.com/rancher/machine:v0.15.0-rancher125
    - name: registry.rancher.com/rancher/mirrored-cluster-api-controller:v1.7.3
    - name: registry.rancher.com/rancher/nginx-ingress-controller:v1.12.4-hardened2
    - name: registry.rancher.com/rancher/prometheus-federator:v0.4.4
    - name: registry.rancher.com/rancher/pushprox-client:v0.1.4-rancher2-client
    - name: registry.rancher.com/rancher/pushprox-proxy:v0.1.4-rancher2-proxy
    - name: registry.rancher.com/rancher/rancher-agent:v2.9.12
    - name: registry.rancher.com/rancher/rancher-csp-adapter:v4.0.0
    - name: registry.rancher.com/rancher/rancher-webhook:v0.5.12
    - name: registry.rancher.com/rancher/rancher:v2.9.12
    - name: registry.rancher.com/rancher/rke-tools:v0.1.114
    - name: registry.rancher.com/rancher/rke2-cloud-provider:v1.30.13-rc1.0.20250516172343-e77f78ee9466-build20250613
    - name: registry.rancher.com/rancher/rke2-runtime:v1.30.14-rke2r4
    - name: registry.rancher.com/rancher/rke2-upgrade:v1.30.14-rke2r4
    - name: registry.rancher.com/rancher/security-scan:v0.4.4
    - name: registry.rancher.com/rancher/shell:v0.3.0
    - name: registry.rancher.com/rancher/system-agent-installer-k3s:v1.30.14-k3s2
    - name: registry.rancher.com/rancher/system-agent-installer-rke2:v1.30.14-rke2r4
    - name: registry.rancher.com/rancher/system-agent:v0.3.10-suc
    - name: registry.rancher.com/rancher/system-upgrade-controller:v0.13.4
    - name: registry.rancher.com/rancher/ui-plugin-catalog:4.0.5
    - name: registry.rancher.com/rancher/kubectl:v1.20.2
    - name: registry.rancher.com/rancher/kubectl:v1.29.2
    - name: registry.rancher.com/rancher/shell:v0.1.24
    - name: registry.rancher.com/rancher/mirrored-ingress-nginx-kube-webhook-certgen:v1.4.1
    - name: registry.rancher.com/rancher/mirrored-ingress-nginx-kube-webhook-certgen:v1.4.3
    - name: registry.rancher.com/rancher/mirrored-ingress-nginx-kube-webhook-certgen:v1.4.4
    - name: registry.rancher.com/rancher/mirrored-ingress-nginx-kube-webhook-certgen:v1.5.0
    - name: registry.rancher.com/rancher/mirrored-ingress-nginx-kube-webhook-certgen:v1.5.2
    - name: registry.rancher.com/rancher/mirrored-ingress-nginx-kube-webhook-certgen:v1.5.3
    - name: registry.rancher.com/rancher/mirrored-ingress-nginx-kube-webhook-certgen:v1.6.0
    - name: registry.rancher.com/rancher/mirrored-ingress-nginx-kube-webhook-certgen:v20230312-helm-chart-4.5.2-28-g66a760794
    - name: registry.rancher.com/rancher/mirrored-ingress-nginx-kube-webhook-certgen:v20231011-8b53cabe0
    - name: registry.rancher.com/rancher/mirrored-ingress-nginx-kube-webhook-certgen:v20231226-1a7112e06
    - name: registry.suse.com/rancher/mirrored-longhornio-csi-attacher:v4.8.0
    - name: registry.suse.com/rancher/mirrored-longhornio-csi-provisioner:v4.0.1-20250204
    - name: registry.suse.com/rancher/mirrored-longhornio-csi-resizer:v1.13.1
    - name: registry.suse.com/rancher/mirrored-longhornio-csi-snapshotter:v7.0.2-20250204
    - name: registry.suse.com/rancher/mirrored-longhornio-csi-node-driver-registrar:v2.13.0
    - name: registry.suse.com/rancher/mirrored-longhornio-livenessprobe:v2.15.0
    - name: registry.suse.com/rancher/mirrored-longhornio-openshift-origin-oauth-proxy:4.15
    - name: registry.suse.com/rancher/mirrored-longhornio-backing-image-manager:v1.7.3
    - name: registry.suse.com/rancher/mirrored-longhornio-longhorn-engine:v1.7.3
    - name: registry.suse.com/rancher/mirrored-longhornio-longhorn-instance-manager:v1.7.3
    - name: registry.suse.com/rancher/mirrored-longhornio-longhorn-manager:v1.7.3
    - name: registry.suse.com/rancher/mirrored-longhornio-longhorn-share-manager:v1.7.3
    - name: registry.suse.com/rancher/mirrored-longhornio-longhorn-ui:v1.7.3
    - name: registry.suse.com/rancher/mirrored-longhornio-longhorn-cli:v1.7.3
    - name: registry.suse.com/rancher/mirrored-longhornio-support-bundle-kit:v0.0.51
    - name: registry.suse.com/edge/3.1/cluster-api-provider-rke2-bootstrap:v0.7.1
    - name: registry.suse.com/edge/3.1/cluster-api-provider-rke2-controlplane:v0.7.1
    - name: registry.suse.com/edge/3.1/cluster-api-controller:v1.7.5
    - name: registry.suse.com/edge/3.1/cluster-api-provider-metal3:v1.7.1
    - name: registry.suse.com/edge/3.1/ip-address-manager:v1.7.1

35.4.2 Modifications in the custom folder #

The custom/scripts/99-register.sh script must be removed when using an air-gap environment. As you can see in the directory structure, the 99-register.sh script is not included in the custom/scripts folder.

35.4.3 Modifications in the helm values folder #

The turtles.yaml values file contains the configuration required to specify air-gapped operation for Rancher Turtles. Note that this depends on the installation of the rancher-turtles-airgap-resources chart.

cluster-api-operator:
  cluster-api:
    core:
      fetchConfig:
        selector: "{\"matchLabels\": {\"provider-components\": \"core\"}}"
    rke2:
      bootstrap:
        fetchConfig:
          selector: "{\"matchLabels\": {\"provider-components\": \"rke2-bootstrap\"}}"
      controlPlane:
        fetchConfig:
          selector: "{\"matchLabels\": {\"provider-components\": \"rke2-control-plane\"}}"
    metal3:
      infrastructure:
        fetchConfig:
          selector: "{\"matchLabels\": {\"provider-components\": \"metal3\"}}"
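To double-check the three air-gap changes described in this section before building, a small pre-flight check can be run against the EIB directory. The following Bash sketch is hypothetical (check_airgap_layout is not part of EIB); it assumes the definition file sits at the top of the EIB directory, scripts live under custom/scripts, and Helm values files live under kubernetes/helm/values, and it only performs simple text matches rather than parsing YAML.

```shell
# Hypothetical pre-flight check for the air-gap modifications.
# Usage: check_airgap_layout <eib_dir> [definition-file]
check_airgap_layout() {
  local eib_dir="$1" definition="${2:-mgmt-cluster.yaml}" rc=0
  # The registration script must not be present in air-gap builds.
  if [ -e "$eib_dir/custom/scripts/99-register.sh" ]; then
    echo "remove custom/scripts/99-register.sh for air-gap builds" >&2
    rc=1
  fi
  # The top-level embeddedArtifactRegistry section must be defined.
  if ! grep -q '^embeddedArtifactRegistry:' "$eib_dir/$definition"; then
    echo "$definition has no embeddedArtifactRegistry section" >&2
    rc=1
  fi
  # The airgap resources chart must be listed in the definition file.
  if ! grep -q 'rancher-turtles-airgap-resources' "$eib_dir/$definition"; then
    echo "$definition does not install rancher-turtles-airgap-resources" >&2
    rc=1
  fi
  # The turtles.yaml values file must exist (assumed location).
  if [ ! -f "$eib_dir/kubernetes/helm/values/turtles.yaml" ]; then
    echo "kubernetes/helm/values/turtles.yaml is missing" >&2
    rc=1
  fi
  return "$rc"
}
```

The function reports every problem it finds instead of stopping at the first one, so a single run lists all missing changes.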

35.5 Image creation #

Once the directory structure is prepared following the previous sections (for both connected and air-gap scenarios), run the following command to build the image:

podman run --rm --privileged -it -v $PWD:/eib \
registry.suse.com/edge/3.1/edge-image-builder:1.1.2 \
build --definition-file mgmt-cluster.yaml

This creates the ISO output image file which, based on the image definition described above, is named eib-mgmt-cluster-image.iso.
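A common reason for a failed build is a base image that is not where EIB expects it. The following Bash sketch is a hypothetical pre-build check (check_base_image is not an EIB command); it assumes the ISO referenced by the baseImage field must be placed in a base-images folder inside the directory mounted at /eib, and it reads the field with a simple text match rather than a YAML parser.

```shell
# Hypothetical pre-build check: verify that the file named by "baseImage:"
# in the definition file exists under <eib_dir>/base-images/.
# Prints the base image name on success.
check_base_image() {
  local eib_dir="$1" definition="${2:-mgmt-cluster.yaml}" base
  base="$(sed -n 's/^[[:space:]]*baseImage:[[:space:]]*\([^[:space:]#]*\).*/\1/p' "$eib_dir/$definition" | head -n 1)"
  if [ -z "$base" ]; then
    echo "no baseImage field found in $definition" >&2
    return 1
  fi
  if [ ! -f "$eib_dir/base-images/$base" ]; then
    echo "base image not found: base-images/$base" >&2
    return 1
  fi
  echo "$base"
}
```

For example, running `check_base_image "$PWD"` before the podman command stops early with a clear message instead of failing inside the container.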

35.6 Provision the management cluster #

The previous image contains all components explained above, and it can be used to provision the management cluster using a virtual machine or a bare-metal server (using the virtual-media feature).