29 Edge 3.1 migration #

This section offers migration guidelines for existing Edge 3.0 (including minor releases such as 3.0.1 and 3.0.2) management and downstream clusters to Edge 3.1.0.

For a list of Edge 3.1.0 component versions, refer to the release notes (Section 47.1, “Abstract”).

29.1 Management cluster #

This section covers how to migrate a management cluster from Edge 3.0 to Edge 3.1.0.

Management cluster components should be migrated in the following order:

Operating System (OS) (Section 29.1.1, “Operating System (OS)”)

RKE2 (Section 29.1.2, “RKE2”)

Edge Helm charts (Section 29.1.3, “Edge Helm charts”)

29.1.1 Operating System (OS) #

This section covers the steps needed to migrate your management cluster nodes' OS to an Edge 3.1.0 supported version.

The below steps should be done for each node of the management cluster.

To avoid any unforeseen problems, migrate the cluster’s control-plane nodes first and the worker nodes second.

29.1.1.1 Prerequisites #

SCC registered nodes - ensure your cluster nodes' OS is registered with a subscription key that supports the operating system version specified in the Edge 3.1 release (Section 47.1, “Abstract”).

Air-gapped:

Mirror SUSE RPM repositories - RPM repositories related to the operating system that is specified in the Edge 3.1.0 release (Section 47.1, “Abstract”) should be locally mirrored, so that transactional-update has access to them. This can be achieved by using either RMT or SUMA.

29.1.1.2 Migration steps #

The below steps assume you are running as root and that kubectl has been configured to connect to the management cluster.

Mark the node as unschedulable:

kubectl cordon <node_name>For a full list of the options for the

cordoncommand, see kubectl cordon.Optionally, there might be use-cases where you would like to

drainthe nodes' workloads:kubectl drain <node>For a full list of the options for the

draincommand, see kubectl drain.Before a migration, you need to ensure that packages on your current OS are updated. To do this, execute:

transactional-updateThe above command executes zypper up to update the OS packages. For more information on

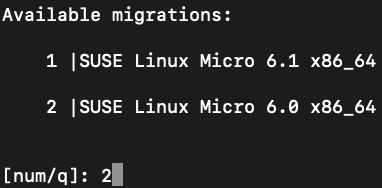

transactional-update, see the transactional-update guide.Proceed to do the OS migration:

transactional-update --continue migrationNoteThe

--continueoption is used here to reuse the previous snapshot without having to reboot the system.After a successful

transactional-updaterun, for the changes to take effect on the system you would need to reboot:rebootAfter the host has been rebooted, validate that the operating system is migrated to

SUSE Linux Micro 6.0:cat /etc/os-releaseOutput should be similar to:

NAME="SL-Micro" VERSION="6.0" VERSION_ID="6.0" PRETTY_NAME="SUSE Linux Micro 6.0" ID="sl-micro" ID_LIKE="suse" ANSI_COLOR="0;32" CPE_NAME="cpe:/o:suse:sl-micro:6.0" HOME_URL="https://www.suse.com/products/micro/" DOCUMENTATION_URL="https://documentation.suse.com/sl-micro/6.0/"NoteIn case something failed with the migration, you can rollback to the last working snapshot using:

transactional-update rollback lastYou would need to reboot your system for the

rollbackto take effect. See the officialtransactional-updatedocumentation for more information about the rollback procedure.Mark the node as schedulable:

kubectl uncordon <node_name>
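
When many nodes are migrated, the manual check of /etc/os-release can be scripted. The following is a minimal sketch, not part of any SUSE tooling: the function name is_expected_os and its arguments are hypothetical, and it assumes the os-release file can be sourced by a POSIX shell.

```shell
#!/bin/sh
# Sketch: succeed only if an os-release file reports the expected OS ID and version.
# The function name and argument order are hypothetical.
is_expected_os() {
    os_release_file="$1"   # for example /etc/os-release
    expected_id="$2"       # for example sl-micro
    expected_version="$3"  # for example 6.0
    # Source the file in a subshell so its variables do not leak into the caller.
    (
        . "$os_release_file" || exit 1
        [ "$ID" = "$expected_id" ] && [ "$VERSION_ID" = "$expected_version" ]
    )
}
```

On a migrated node, a call such as is_expected_os /etc/os-release sl-micro 6.0 would return success only if both the ID and VERSION_ID fields match.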

29.1.2 RKE2 #

The below steps should be done for each node of the management cluster.

As the RKE2 documentation explains, the upgrade procedure requires upgrading the cluster's control-plane nodes one at a time and, once all of them have been upgraded, the agent nodes.

To ensure disaster recovery, we advise backing up the RKE2 cluster data. For information on how to do this, check the RKE2 backup and restore guide. The default location for the rke2 binary is /opt/rke2/bin.

You can upgrade the RKE2 version to an Edge 3.1.0 compatible version using the RKE2 installation script as follows:

Mark the node as unschedulable:

    kubectl cordon <node_name>

For a full list of the options for the cordon command, see kubectl cordon.

Optionally, there might be use-cases where you would like to drain the nodes' workloads:

    kubectl drain <node>

For a full list of the options for the drain command, see kubectl drain.

Use the RKE2 installation script to install the correct Edge 3.1.0 compatible RKE2 version:

    curl -sfL https://get.rke2.io | INSTALL_RKE2_VERSION=v1.30.3+rke2r1 sh -

Restart the rke2 process:

    # For control-plane nodes:
    systemctl restart rke2-server

    # For worker nodes:
    systemctl restart rke2-agent

Validate that the nodes' RKE2 version is upgraded:

    kubectl get nodes

Mark the node as schedulable:

    kubectl uncordon <node_name>
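
To spot nodes that have not yet been upgraded, the output of kubectl get nodes can be filtered by version. This is a minimal sketch under stated assumptions: the function name nodes_not_at_version is hypothetical, and it expects the default column layout (NAME, STATUS, ROLES, AGE, VERSION) as produced by kubectl get nodes --no-headers.

```shell
#!/bin/sh
# Sketch: print the names of nodes whose VERSION column differs from the target.
# Reads `kubectl get nodes --no-headers` output on stdin and assumes the default
# columns NAME STATUS ROLES AGE VERSION. The function name is hypothetical.
nodes_not_at_version() {
    target="$1"
    awk -v target="$target" '$5 != target { print $1 }'
}

# Possible usage (requires kubectl access to the cluster):
#   kubectl get nodes --no-headers | nodes_not_at_version 'v1.30.3+rke2r1'
```

An empty result would suggest that every node reports the target version.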

29.1.3 Edge Helm charts #

This section assumes you have installed helm on your system and you have a valid kubeconfig pointing to the desired cluster. For helm installation instructions, check the Installing Helm guide.

This section provides guidelines for upgrading the Helm chart components that make up a specific Edge release. It covers the following topics:

Known limitations (Section 29.1.3.1, “Known Limitations”) that the upgrade process has.

How to migrate (Section 29.1.3.2, “Cluster API controllers migration”) Cluster API controllers through the Rancher Turtles Helm chart.

How to upgrade Edge Helm charts (Section 29.1.3.3, “Edge Helm chart upgrade - EIB”) deployed through EIB (Chapter 9, Edge Image Builder).

How to upgrade Edge Helm charts (Section 29.1.3.4, “Edge Helm chart upgrade - non-EIB”) deployed through non-EIB means.

29.1.3.1 Known Limitations #

This section covers known limitations to the current migration process. Users should first go through the steps described here before moving to upgrade their helm charts.

29.1.3.1.1 Rancher upgrade #

With the current RKE2 version that Edge 3.1.0 utilizes, there is an issue where all ingresses that do not contain an IngressClass are ignored by the ingress controller. To mitigate this, users would need to manually add the name of the default IngressClass to the default Rancher Ingress.

For more information on the problem that the below steps fix, see the upstream RKE2 issue and more specifically this comment.

In some cases the default IngressClass might have a different name than nginx.

Make sure to validate the name by running:

    kubectl get ingressclass

Before upgrading Rancher, make sure to execute the following command:

If Rancher was deployed through EIB (Chapter 9, Edge Image Builder):

    kubectl patch helmchart rancher -n <namespace> --type='merge' -p '{"spec":{"set":{"ingress.ingressClassName":"nginx"}}}'

If Rancher was deployed through Helm, add the --set ingress.ingressClassName=nginx flag to your upgrade command. For a full example of how to utilize this option, see the following example (Section 29.1.3.4.1, “Example”).
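
Because the default IngressClass is not always named nginx, it can help to build the patch from a variable rather than hard-coding the name. The following is only a sketch; the function name rancher_ingress_patch is hypothetical, and the patch body is the same one used in the command above.

```shell
#!/bin/sh
# Sketch: build the merge patch for the Rancher HelmChart with a configurable
# IngressClass name. The function name is hypothetical.
rancher_ingress_patch() {
    ingress_class="$1"
    printf '{"spec":{"set":{"ingress.ingressClassName":"%s"}}}' "$ingress_class"
}

# Possible usage (requires kubectl access to the management cluster):
#   kubectl patch helmchart rancher -n <namespace> --type='merge' \
#     -p "$(rancher_ingress_patch <ingress_class_name>)"
```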

29.1.3.2 Cluster API controllers migration #

From Edge 3.1.0, Cluster API (CAPI) controllers on a Metal3 management cluster are managed via Rancher Turtles.

To migrate the CAPI controller versions to Edge 3.1.0 compatible versions, install the Rancher Turtles chart:

    helm install rancher-turtles oci://registry.suse.com/edge/3.1/rancher-turtles-chart --version 0.3.2 --namespace rancher-turtles-system --create-namespace

After some time, the controller pods running in the capi-system, capm3-system, rke2-bootstrap-system and rke2-control-plane-system namespaces are upgraded with the Edge 3.1.0 compatible controller versions.

For information on how to install Rancher Turtles in an air-gapped environment, refer to Rancher Turtles air-gapped installation (Section 29.1.3.2.1, “Rancher Turtles air-gapped installation”).

29.1.3.2.1 Rancher Turtles air-gapped installation #

The below steps assume that kubectl has been configured to connect to the management cluster that you wish to upgrade.

Before installing the below mentioned rancher-turtles-airgap-resources Helm chart, ensure that it will have the correct ownership over the clusterctl created namespaces:

capi-system ownership change:

    kubectl label namespace capi-system app.kubernetes.io/managed-by=Helm --overwrite
    kubectl annotate namespace capi-system meta.helm.sh/release-name=rancher-turtles-airgap-resources --overwrite
    kubectl annotate namespace capi-system meta.helm.sh/release-namespace=rancher-turtles-system --overwrite

capm3-system ownership change:

    kubectl label namespace capm3-system app.kubernetes.io/managed-by=Helm --overwrite
    kubectl annotate namespace capm3-system meta.helm.sh/release-name=rancher-turtles-airgap-resources --overwrite
    kubectl annotate namespace capm3-system meta.helm.sh/release-namespace=rancher-turtles-system --overwrite

rke2-bootstrap-system ownership change:

    kubectl label namespace rke2-bootstrap-system app.kubernetes.io/managed-by=Helm --overwrite
    kubectl annotate namespace rke2-bootstrap-system meta.helm.sh/release-name=rancher-turtles-airgap-resources --overwrite
    kubectl annotate namespace rke2-bootstrap-system meta.helm.sh/release-namespace=rancher-turtles-system --overwrite

rke2-control-plane-system ownership change:

    kubectl label namespace rke2-control-plane-system app.kubernetes.io/managed-by=Helm --overwrite
    kubectl annotate namespace rke2-control-plane-system meta.helm.sh/release-name=rancher-turtles-airgap-resources --overwrite
    kubectl annotate namespace rke2-control-plane-system meta.helm.sh/release-namespace=rancher-turtles-system --overwrite

Pull the rancher-turtles-airgap-resources and rancher-turtles chart archives:

    helm pull oci://registry.suse.com/edge/3.1/rancher-turtles-airgap-resources-chart --version 0.3.2
    helm pull oci://registry.suse.com/edge/3.1/rancher-turtles-chart --version 0.3.2

To provide the needed resources for an air-gapped installation of the Rancher Turtles Helm chart, install the rancher-turtles-airgap-resources Helm chart:

    helm install rancher-turtles-airgap-resources ./rancher-turtles-airgap-resources-chart-0.3.2.tgz --namespace rancher-turtles-system --create-namespace

Configure the cluster-api-operator in the Rancher Turtles Helm chart to fetch controller data from correct locations:

    cat > values.yaml <<EOF
    cluster-api-operator:
      cluster-api:
        core:
          fetchConfig:
            selector: "{\"matchLabels\": {\"provider-components\": \"core\"}}"
        rke2:
          bootstrap:
            fetchConfig:
              selector: "{\"matchLabels\": {\"provider-components\": \"rke2-bootstrap\"}}"
          controlPlane:
            fetchConfig:
              selector: "{\"matchLabels\": {\"provider-components\": \"rke2-control-plane\"}}"
        metal3:
          infrastructure:
            fetchConfig:
              selector: "{\"matchLabels\": {\"provider-components\": \"metal3\"}}"
    EOF

Install Rancher Turtles:

    helm install rancher-turtles ./rancher-turtles-chart-0.3.2.tgz --namespace rancher-turtles-system --create-namespace --values values.yaml

After some time, the controller pods running in the capi-system, capm3-system, rke2-bootstrap-system and rke2-control-plane-system namespaces will be upgraded with the Edge 3.1.0 compatible controller versions.
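
The namespace ownership changes in this procedure repeat the same three commands for four namespaces, so they can be generated with a loop. The sketch below is one possible way to do that; the function name print_ownership_commands is hypothetical. It prints the commands instead of running them, so that they can be reviewed first.

```shell
#!/bin/sh
# Sketch: emit the ownership label/annotation commands for each namespace.
# The commands are printed rather than executed; pipe the output to `sh`
# to run them. The function name is hypothetical.
print_ownership_commands() {
    release="rancher-turtles-airgap-resources"
    release_ns="rancher-turtles-system"
    for ns in capi-system capm3-system rke2-bootstrap-system rke2-control-plane-system; do
        echo "kubectl label namespace $ns app.kubernetes.io/managed-by=Helm --overwrite"
        echo "kubectl annotate namespace $ns meta.helm.sh/release-name=$release --overwrite"
        echo "kubectl annotate namespace $ns meta.helm.sh/release-namespace=$release_ns --overwrite"
    done
}
```

Running print_ownership_commands | sh on a machine with kubectl configured for the management cluster would apply all twelve commands in order.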

29.1.3.3 Edge Helm chart upgrade - EIB #

This section explains how to upgrade a Helm chart from the Edge component stack, deployed via EIB (Chapter 9, Edge Image Builder), to an Edge 3.1.0 compatible version.

29.1.3.3.1 Prerequisites #

In Edge 3.1, EIB changes the way it deploys charts and no longer uses the RKE2/K3s manifest auto-deploy mechanism.

This means that, before upgrading to an Edge 3.1.0 compatible version, any Helm charts deployed on an Edge 3.0 environment using EIB should have their chart manifests removed from the manifests directory of the relevant Kubernetes distribution.

If this is not done, any chart upgrade will be reverted by the RKE2/K3s process upon restart of the process or the operating system.

Deleting manifests from the RKE2/K3s directory will not result in the resources being removed from the cluster.

As per the RKE2/K3s documentation:

"Deleting files out of this directory will not delete the corresponding resources from the cluster."

Removing any EIB deployed chart manifests involves the following steps:

To ensure disaster recovery, make a backup of each EIB deployed manifest:

Note: EIB deployed manifests will have the "edge.suse.com/source: edge-image-builder" label.

Note: Make sure that the <backup_location> that you provide to the below command exists.

    grep -lrIZ 'edge.suse.com/source: edge-image-builder' /var/lib/rancher/rke2/server/manifests | xargs -0 -I{} cp {} <backup_location>

Remove all EIB deployed manifests:

    grep -lrIZ 'edge.suse.com/source: edge-image-builder' /var/lib/rancher/rke2/server/manifests | xargs -0 rm -f --
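
The backup and removal commands can be combined so that nothing is deleted unless the backup succeeded. The following is a minimal sketch, assuming GNU grep and xargs; the function name backup_and_remove_eib_manifests and its argument order are hypothetical.

```shell
#!/bin/sh
# Sketch: back up, then remove, every manifest carrying the EIB source label.
# Function name and argument order are hypothetical. Requires GNU grep and xargs.
backup_and_remove_eib_manifests() {
    manifests_dir="$1"   # for example /var/lib/rancher/rke2/server/manifests
    backup_dir="$2"      # must already exist
    label='edge.suse.com/source: edge-image-builder'

    # Refuse to continue when the backup location is missing.
    [ -d "$backup_dir" ] || { echo "backup dir $backup_dir does not exist" >&2; return 1; }

    # Copy matching manifests first; stop before deleting if any copy fails.
    grep -lrIZ "$label" "$manifests_dir" | xargs -0 -r -I{} cp {} "$backup_dir" || return 1

    # Only now remove the originals.
    grep -lrIZ "$label" "$manifests_dir" | xargs -0 -r rm -f --
}
```

The -r option tells xargs to do nothing when no manifest matches, so the function is safe to run again after the manifests have already been removed.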

29.1.3.3.2 Upgrade steps #

The below steps assume that kubectl has been configured to connect to the management cluster that you wish to upgrade.

Locate the Edge 3.1 compatible chart version that you wish to migrate to by looking at the release notes (Section 47.1, “Abstract”).

Pull the desired Helm chart version:

For charts hosted in HTTP repositories:

    helm repo add <chart_repo_name> <chart_repo_urls>
    helm pull <chart_repo_name>/<chart_name> --version=X.Y.Z

For charts hosted in OCI registries:

    helm pull oci://<chart_oci_url> --version=X.Y.Z

Encode the pulled chart archive:

    base64 -w 0 <chart_name>-X.Y.Z.tgz > <chart_name>-X.Y.Z.txt

Check the Known Limitations (Section 29.1.3.1, “Known Limitations”) section if there are any additional steps that need to be done for the charts.

Patch the existing HelmChart resource:

Important: Make sure to pass the HelmChart name, namespace, encoded file and version to the command below. The encoded file is the <chart_name>-X.Y.Z.txt file created in the encoding step.

    kubectl patch helmchart <helmchart_name> --type=merge -p "{\"spec\":{\"chartContent\":\"$(cat <chart_name>-X.Y.Z.txt)\", \"version\":\"<helmchart_version>\"}}" -n <helmchart_namespace>

This will signal the helm-controller to schedule a Job that will create a Pod that will upgrade the desired Helm chart. To view the logs of the created Pod, follow these steps:

Locate the created Pod:

    kubectl get pods -l helmcharts.helm.cattle.io/chart=<helmchart_name> -n <namespace>

View the Pod logs:

    kubectl logs <pod_name> -n <namespace>

A Pod in the Completed state with no errors in its logs indicates a successful upgrade of the desired Helm chart.
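
The encode and patch steps can be combined so that no intermediate .txt file is needed. This is a minimal sketch, assuming GNU base64 (for the -w 0 option); the function name helmchart_patch and its arguments are hypothetical.

```shell
#!/bin/sh
# Sketch: build the HelmChart merge patch from a chart archive and a version.
# Function name and arguments are hypothetical. Requires GNU base64 (-w 0).
helmchart_patch() {
    chart_archive="$1"   # path to <chart_name>-X.Y.Z.tgz
    chart_version="$2"   # X.Y.Z
    encoded="$(base64 -w 0 "$chart_archive")" || return 1
    printf '{"spec":{"chartContent":"%s","version":"%s"}}' "$encoded" "$chart_version"
}

# Possible usage (requires kubectl access to the management cluster):
#   kubectl patch helmchart <helmchart_name> --type=merge \
#     -p "$(helmchart_patch <chart_name>-X.Y.Z.tgz X.Y.Z)" -n <helmchart_namespace>
```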

For a full example of how to upgrade a Helm chart deployed through EIB, refer to the Example (Section 29.1.3.3.3, “Example”) section.

29.1.3.3.3 Example #

This section provides an example of upgrading the Rancher and Metal3 Helm charts to a version compatible with the Edge 3.1.0 release. It follows the steps outlined in the "Upgrade Steps" (Section 29.1.3.3.2, “Upgrade steps”) section.

Use-case:

Current Rancher and Metal3 charts need to be upgraded to an Edge 3.1.0 compatible version.

Rancher is deployed through EIB and its HelmChart is deployed in the default namespace.

Metal3 is deployed through EIB and its HelmChart is deployed in the kube-system namespace.

Steps:

Locate the desired versions for Rancher and Metal3 from the release notes (Section 47.1, “Abstract”). For Edge 3.1.0, these versions would be 2.9.1 for Rancher and 0.8.1 for Metal3.

Pull the desired chart versions:

For Rancher:

    helm repo add rancher-prime https://charts.rancher.com/server-charts/prime
    helm pull rancher-prime/rancher --version=2.9.1

For Metal3:

    helm pull oci://registry.suse.com/edge/3.1/metal3-chart --version=0.8.1

Encode the Rancher and Metal3 Helm charts:

    base64 -w 0 rancher-2.9.1.tgz > rancher-2.9.1.txt
    base64 -w 0 metal3-chart-0.8.1.tgz > metal3-chart-0.8.1.txt

The directory structure should look similar to this:

    .
    ├── metal3-chart-0.8.1.tgz
    ├── metal3-chart-0.8.1.txt
    ├── rancher-2.9.1.tgz
    └── rancher-2.9.1.txt

Check the Known Limitations (Section 29.1.3.1, “Known Limitations”) section if there are any additional steps that need to be done for the charts.

For Rancher:

Execute the command described in the Known Limitations section:

    # In this example the rancher helmchart is in the 'default' namespace
    kubectl patch helmchart rancher -n default --type='merge' -p '{"spec":{"set":{"ingress.ingressClassName":"nginx"}}}'

Validate that the ingressClassName property was successfully added:

    kubectl get ingress rancher -n cattle-system -o yaml | grep -w ingressClassName

    # Example output
      ingressClassName: nginx

Patch the Rancher and Metal3 HelmChart resources:

    # Rancher deployed in the default namespace
    kubectl patch helmchart rancher --type=merge -p "{\"spec\":{\"chartContent\":\"$(cat rancher-2.9.1.txt)\", \"version\":\"2.9.1\"}}" -n default

    # Metal3 deployed in the kube-system namespace
    kubectl patch helmchart metal3 --type=merge -p "{\"spec\":{\"chartContent\":\"$(cat metal3-chart-0.8.1.txt)\", \"version\":\"0.8.1\"}}" -n kube-system

Locate the helm-controller created Rancher and Metal3 Pods:

Rancher:

    kubectl get pods -l helmcharts.helm.cattle.io/chart=rancher -n default

    # Example output
    NAME                         READY   STATUS      RESTARTS   AGE
    helm-install-rancher-wg7nf   0/1     Completed   0          5m2s

Metal3:

    kubectl get pods -l helmcharts.helm.cattle.io/chart=metal3 -n kube-system

    # Example output
    NAME                        READY   STATUS      RESTARTS   AGE
    helm-install-metal3-57lz5   0/1     Completed   0          4m35s

View the logs of each pod using kubectl logs:

Rancher:

    kubectl logs helm-install-rancher-wg7nf -n default

    # Example successful output
    ...
    Upgrading rancher
    + helm_v3 upgrade --namespace cattle-system --create-namespace --version 2.9.1 --set-string global.clusterCIDR=10.42.0.0/16 --set-string global.clusterCIDRv4=10.42.0.0/16 --set-string global.clusterDNS=10.43.0.10 --set-string global.clusterDomain=cluster.local --set-string global.rke2DataDir=/var/lib/rancher/rke2 --set-string global.serviceCIDR=10.43.0.0/16 --set-string ingress.ingressClassName=nginx rancher /tmp/rancher.tgz --values /config/values-01_HelmChart.yaml
    Release "rancher" has been upgraded. Happy Helming!
    ...

Metal3:

    kubectl logs helm-install-metal3-57lz5 -n kube-system

    # Example successful output
    ...
    Upgrading metal3
    + echo 'Upgrading metal3'
    + shift 1
    + helm_v3 upgrade --namespace metal3-system --create-namespace --version 0.8.1 --set-string global.clusterCIDR=10.42.0.0/16 --set-string global.clusterCIDRv4=10.42.0.0/16 --set-string global.clusterDNS=10.43.0.10 --set-string global.clusterDomain=cluster.local --set-string global.rke2DataDir=/var/lib/rancher/rke2 --set-string global.serviceCIDR=10.43.0.0/16 metal3 /tmp/metal3.tgz --values /config/values-01_HelmChart.yaml
    Release "metal3" has been upgraded. Happy Helming!
    ...

Validate that the pods for the specific chart are running:

    # For Rancher
    kubectl get pods -n cattle-system

    # For Metal3
    kubectl get pods -n metal3-system

29.1.3.4 Edge Helm chart upgrade - non-EIB #

This section explains how to upgrade a Helm chart from the Edge component stack, deployed via Helm, to an Edge 3.1.0 compatible version.

The below steps assume that kubectl has been configured to connect to the management cluster that you wish to upgrade.

Locate the Edge 3.1.0 compatible chart version that you wish to migrate to by looking at the release notes (Section 47.1, “Abstract”).

Get the custom values of the currently running helm chart:

    helm get values <chart_name> -n <chart_namespace> -o yaml > <chart_name>-values.yaml

Check the Known Limitations (Section 29.1.3.1, “Known Limitations”) section if there are any additional steps, or changes that need to be done for the charts.

Upgrade the helm chart to the desired version:

For non air-gapped setups:

    # For charts hosted in HTTP repositories
    helm upgrade <chart_name> <chart_repo>/<chart_name> --version <version> --values <chart_name>-values.yaml -n <chart_namespace>

    # For charts hosted in OCI registries
    helm upgrade <chart_name> oci://<oci_registry_url>/<chart_name> --namespace <chart_namespace> --values <chart_name>-values.yaml --version=X.Y.Z

For air-gapped setups:

On a machine with access to the internet, pull the desired chart version:

    # For charts hosted in HTTP repositories
    helm pull <chart_repo_name>/<chart_name> --version=X.Y.Z

    # For charts hosted in OCI registries
    helm pull oci://<chart_oci_url> --version=X.Y.Z

Transfer the chart archive to your management cluster:

    scp <chart>.tgz <machine-address>:<filesystem-path>

Upgrade the chart:

    helm upgrade <chart_name> <chart>.tgz --values <chart_name>-values.yaml -n <chart_namespace>

Verify that the chart pods are running:

    kubectl get pods -n <chart_namespace>

You may want to do additional verification of the upgrade by checking resources specific to your chart. After this has been done, the upgrade can be considered successful.

For a full example, refer to the Example (Section 29.1.3.4.1, “Example”) section.

29.1.3.4.1 Example #

This section provides an example of upgrading the Rancher and Metal3 Helm charts to a version compatible with the Edge 3.1.0 release. It follows the steps outlined in the "Edge Helm chart upgrade - non-EIB" (Section 29.1.3.4, “Edge Helm chart upgrade - non-EIB”) section.

Use-case:

Current Rancher and Metal3 charts need to be upgraded to an Edge 3.1.0 compatible version.

The Rancher helm chart is deployed from the Rancher Prime repository in the cattle-system namespace. The Rancher Prime repository was added in the following way:

    helm repo add rancher-prime https://charts.rancher.com/server-charts/prime

The Metal3 helm chart is deployed from the registry.suse.com OCI registry in the metal3-system namespace.

Steps:

Locate the desired versions for Rancher and Metal3 from the release notes (Section 47.1, “Abstract”). For Edge 3.1.0, these versions would be 2.9.1 for Rancher and 0.8.1 for Metal3.

Get the custom values of the currently running Rancher and Metal3 helm charts:

    # For Rancher
    helm get values rancher -n cattle-system -o yaml > rancher-values.yaml

    # For Metal3
    helm get values metal3 -n metal3-system -o yaml > metal3-values.yaml

Check the Known Limitations (Section 29.1.3.1, “Known Limitations”) section if there are any additional steps that need to be done for the charts.

For Rancher the --set ingress.ingressClassName=nginx option needs to be added to the upgrade command.

Upgrade the Rancher and Metal3 helm charts:

    # For Rancher
    helm upgrade rancher rancher-prime/rancher --version 2.9.1 --set ingress.ingressClassName=nginx --values rancher-values.yaml -n cattle-system

    # For Metal3
    helm upgrade metal3 oci://registry.suse.com/edge/3.1/metal3-chart --version 0.8.1 --values metal3-values.yaml -n metal3-system

Validate that the Rancher and Metal3 pods are running:

    # For Rancher
    kubectl get pods -n cattle-system

    # For Metal3
    kubectl get pods -n metal3-system

29.2 Downstream clusters #

This section covers how to migrate your Edge 3.0.X downstream clusters to Edge 3.1.0.

29.2.1 Prerequisites #

This section covers any prerequisite steps that users should go through before beginning the migration process.

29.2.1.1 Charts deployed through EIB #

In Edge 3.1, EIB (Chapter 9, Edge Image Builder) changes the way it deploys charts and no longer uses the RKE2/K3s manifest auto-deploy mechanism.

This means that, before migrating to an Edge 3.1.0 compatible version, any Helm charts deployed on an Edge 3.0 environment using EIB should have their chart manifests removed from the manifests directory of the relevant Kubernetes distribution.

If this is not done, any chart upgrade will be reverted by the RKE2/K3s process upon restart of the process or the operating system.

On downstream clusters, the removal of the EIB created chart manifest files is handled by a Fleet called eib-charts-migration-prep located in the suse-edge/fleet-examples repository.

Using the eib-charts-migration-prep Fleet file from the main branch is not advised. The Fleet file should always be used from a valid Edge release tag.

This process requires that System Upgrade Controller (SUC) is already deployed. For installation details, refer to "Installing the System Upgrade Controller" (Section 19.2, “Installing the System Upgrade Controller”).

Once created, the eib-charts-migration-prep Fleet ships an SUC (Chapter 19, System Upgrade Controller) Plan that contains a script that will do the following:

Determine if the current node on which it is running is an initializer node. If it is not, it won’t do anything.

If the node is an initializer, it will:

Detect all HelmChart resources deployed by EIB.

Locate the manifest file of each of the above HelmChart resources.

Note: HelmChart manifest files are located only on the initializer node under /var/lib/rancher/rke2/server/manifests for RKE2 and /var/lib/rancher/k3s/server/manifests for K3s.

To ensure disaster recovery, make a backup of each located manifest under /tmp.

Note: The backup location can be changed by defining the MANIFEST_BACKUP_DIR environment variable in the SUC Plan file of the Fleet.

Remove each manifest file related to a HelmChart resource deployed by EIB.

Depending on your use-case, the eib-charts-migration-prep Fleet can be deployed in the following two ways:

Through a GitRepo resource - for use-cases where an external/local Git server is available. For more information, refer to EIB chart manifest removal Fleet deployment - GitRepo (Section 29.2.1.1.1, “EIB chart manifest removal Fleet deployment - GitRepo”).

Through a Bundle resource - for air-gapped use-cases that do not support a local Git server option. For more information, refer to EIB chart manifest removal Fleet deployment - Bundle (Section 29.2.1.1.2, “EIB chart manifest removal Fleet deployment - Bundle”).

29.2.1.1.1 EIB chart manifest removal Fleet deployment - GitRepo #

On the management cluster, deploy the following GitRepo resource:

Note: Before deploying the resource below, you must provide a valid targets configuration, so that Fleet knows on which downstream clusters to deploy your resource. For information on how to map to downstream clusters, see Mapping to Downstream Clusters.

    kubectl apply -n fleet-default -f - <<EOF
    apiVersion: fleet.cattle.io/v1alpha1
    kind: GitRepo
    metadata:
      name: eib-chart-migration-prep
    spec:
      revision: release-3.1.0
      paths:
      - fleets/day2/system-upgrade-controller-plans/eib-charts-migration-prep
      repo: https://github.com/suse-edge/fleet-examples.git
      targets:
      - clusterSelector: CHANGEME
      # Example matching all clusters:
      # targets:
      # - clusterSelector: {}
    EOF

Alternatively, you can also create the resource through Rancher's UI, if it is available. For more information, see Accessing Fleet in the Rancher UI.

By creating the above GitRepo on your management cluster, Fleet will deploy an SUC Plan (called eib-chart-migration-prep) on each downstream cluster that matches the targets specified in the GitRepo. To monitor the lifecycle of this plan, refer to "Monitoring System Upgrade Controller Plans" (Section 19.3, “Monitoring System Upgrade Controller Plans”).

29.2.1.1.2 EIB chart manifest removal Fleet deployment - Bundle #

This section describes how to convert the eib-chart-migration-prep Fleet to a Bundle resource that can then be used in air-gapped environments that cannot utilize a local git server.

Steps:

On a machine with network access download the fleet-cli:

NoteMake sure that the version of the fleet-cli you download matches the version of Fleet that has been deployed on your cluster.

For Mac users, there is a fleet-cli Homebrew Formulae.

For Linux users, the binaries are present as assets to each Fleet release.

Retrieve the desired binary:

Linux AMD:

    curl -L -o fleet-cli https://github.com/rancher/fleet/releases/download/<FLEET_VERSION>/fleet-linux-amd64

Linux ARM:

    curl -L -o fleet-cli https://github.com/rancher/fleet/releases/download/<FLEET_VERSION>/fleet-linux-arm64

Move the binary to /usr/local/bin:

    sudo mkdir -p /usr/local/bin
    sudo mv ./fleet-cli /usr/local/bin/fleet-cli
    sudo chmod 755 /usr/local/bin/fleet-cli

Clone the suse-edge/fleet-examples release that you wish to use the eib-chart-migration-prep fleet from:

    git clone -b release-3.1.0 https://github.com/suse-edge/fleet-examples.git

Navigate to the eib-chart-migration-prep fleet, located in the fleet-examples repo:

    cd fleet-examples/fleets/day2/system-upgrade-controller-plans/eib-charts-migration-prep

Create a targets.yaml file that will point to all downstream clusters on which you wish to deploy the fleet:

    cat > targets.yaml <<EOF
    targets:
    - clusterSelector: CHANGEME
    EOF

For information on how to map to downstream clusters, see Mapping to Downstream Clusters.

Proceed to build the Bundle:

Note: Make sure you did not download the fleet-cli in the fleet-examples/fleets/day2/system-upgrade-controller-plans/eib-charts-migration-prep directory, otherwise it will be packaged with the Bundle, which is not advised.

    fleet-cli apply --compress --targets-file=targets.yaml -n fleet-default -o - eib-chart-migration-prep . > eib-chart-migration-prep-bundle.yaml

For more information about this process, see Convert a Helm Chart into a Bundle.

For more information about the fleet-cli apply command, see fleet apply.

Transfer the eib-chart-migration-prep-bundle.yaml bundle to your management cluster machine:

    scp eib-chart-migration-prep-bundle.yaml <machine-address>:<filesystem-path>

On your management cluster, deploy the eib-chart-migration-prep-bundle.yaml Bundle:

    kubectl apply -f eib-chart-migration-prep-bundle.yaml

On your management cluster, validate that the Bundle is deployed:

    kubectl get bundle eib-chart-migration-prep -n fleet-default
    NAME                       BUNDLEDEPLOYMENTS-READY   STATUS
    eib-chart-migration-prep   1/1

By creating the above Bundle on your management cluster, Fleet will deploy an SUC Plan (called eib-chart-migration-prep) on each downstream cluster that matches the targets specified in the targets.yaml file. To monitor the lifecycle of this plan, refer to "Monitoring System Upgrade Controller Plans" (Section 19.3, “Monitoring System Upgrade Controller Plans”).
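
The targets.yaml file in this procedure uses a CHANGEME placeholder for the cluster selector. As one possible way to fill it in, the sketch below writes a targets file that selects downstream clusters by a single label through a matchLabels cluster selector. The function name write_targets_file and the example label are hypothetical; use labels that actually exist on your Fleet Cluster resources.

```shell
#!/bin/sh
# Sketch: write a targets file that selects downstream clusters by one label.
# The function name and the label passed to it are hypothetical.
write_targets_file() {
    label_key="$1"
    label_value="$2"
    out_file="${3:-targets.yaml}"
    cat > "$out_file" <<EOF
targets:
- clusterSelector:
    matchLabels:
      $label_key: "$label_value"
EOF
}
```

For example, write_targets_file env edge would create a targets.yaml in the current directory that matches clusters labeled env=edge, which can then be passed to fleet-cli apply through the --targets-file option.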

29.2.2 Migration steps #

After executing the prerequisite (Section 29.2.1, “Prerequisites”) steps, you can proceed to follow the downstream cluster (Chapter 31, Downstream clusters) upgrade documentation for the Edge 3.1.0 release.