Administration Guide


14 Load Balancing

Abstract

Load balancing makes a cluster of servers appear as one large, fast server to outside clients. This apparent single server is called a virtual server. It consists of one or more load balancers dispatching incoming requests and several real servers running the actual services. With a load balancing setup of High Availability Extension, you can build highly scalable and highly available network services, such as Web, cache, mail, FTP, media and VoIP services.

14.1 Conceptual Overview

High Availability Extension supports two technologies for load balancing: Linux Virtual Server (LVS) and HAProxy. The key difference is that Linux Virtual Server operates at OSI layer 4 (Transport), configuring the network layer of the kernel, while HAProxy operates at layer 7 (Application), running in user space. Thus Linux Virtual Server needs fewer resources and can handle higher loads, while HAProxy can inspect the traffic, perform SSL termination and make dispatching decisions based on the content of the traffic.

Linux Virtual Server itself consists of two different pieces of software: IPVS (IP Virtual Server) and KTCPVS (Kernel TCP Virtual Server). IPVS provides layer 4 load balancing, whereas KTCPVS provides layer 7 load balancing.

This section gives you a conceptual overview of load balancing in combination with high availability, then briefly introduces you to Linux Virtual Server and HAProxy. Finally, it points you to further reading.

The real servers and the load balancers may be interconnected by either a high-speed LAN or a geographically dispersed WAN. The load balancers dispatch requests to the different servers. They make parallel services of the cluster appear as one virtual service on a single IP address (the virtual IP address or VIP). Request dispatching can use IP load balancing technologies or application-level load balancing technologies. Scalability of the system is achieved by transparently adding or removing nodes in the cluster.

As in any High Availability Extension cluster, high availability is provided by detecting node or service failures and reconfiguring the whole virtual server system accordingly.

There are several load balancing strategies. Here are some Layer 4 strategies, suitable for Linux Virtual Server:

Round Robin. The simplest strategy is to direct each connection to a different address, taking turns. For example, a DNS server can have several entries for a given host name. With DNS round robin, the DNS server returns all of them in a rotating order. Thus different clients see different addresses.

Selecting the “best” server. Although this has several drawbacks, balancing could be implemented with a “first server that responds” or a “least loaded server” approach.

Balance number of connections per server. A load balancer between users and servers can divide the users across multiple servers so that each server handles a similar number of connections.

Geo Location. It is possible to direct clients to a server nearby.
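The two simplest Layer 4 strategies above, round robin and balancing the number of connections per server, can be illustrated with a short Python sketch. It assumes an in-memory list of real servers. The addresses are borrowed from Example 14.1 purely for illustration. This is not how IPVS or a DNS server implements these strategies internally.

```python
from itertools import cycle
from typing import Dict, Iterator, List

# Hypothetical pool of real servers (addresses borrowed from Example 14.1).
SERVERS: List[str] = ["192.168.0.110", "192.168.0.120"]


def round_robin(servers: List[str]) -> Iterator[str]:
    """Hand out servers in turn, like DNS round robin rotating its answers."""
    return cycle(servers)


def least_connections(active: Dict[str, int]) -> str:
    """Pick the server with the fewest active connections.

    `active` maps a server address to its number of active connections.
    Ties go to the server listed first.
    """
    return min(active, key=active.get)


if __name__ == "__main__":
    rr = round_robin(SERVERS)
    print([next(rr) for _ in range(4)])
    print(least_connections({"192.168.0.110": 12, "192.168.0.120": 3}))
```

Round robin ignores how busy a server is. The connection-based variant needs the load balancer to track per-server connection counts, which is the information the IPVS schedulers described later work with.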

Here are some Layer 7 strategies, suitable for HAProxy:

URI. Inspect the HTTP content and dispatch to the server most suitable for this specific URI.

URL parameter, RDP cookie. Inspect the HTTP content for a session parameter, possibly in POST parameters, or the RDP (Remote Desktop Protocol) session cookie, and dispatch to the server serving this session.
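To make these Layer 7 strategies concrete, here is a hedged Python sketch of URI-based dispatch combined with session persistence. The pool names, the path prefixes and the `SERVERID` cookie name are hypothetical. A generic HTTP cookie stands in for the URL-parameter and RDP-cookie cases. HAProxy expresses such rules in its configuration file, not in code like this.

```python
from http.cookies import SimpleCookie
from typing import Dict, List

# Hypothetical server pools, keyed by URI path prefix.
POOLS: Dict[str, List[str]] = {
    "/images/": ["static1", "static2"],
    "/": ["app1", "app2"],
}
SESSION_COOKIE = "SERVERID"  # hypothetical session cookie name


def pick_pool(path: str) -> List[str]:
    """URI strategy: the longest matching path prefix selects the pool."""
    prefix = max((p for p in POOLS if path.startswith(p)), key=len, default="/")
    return POOLS[prefix]


def dispatch(path: str, cookie_header: str = "") -> str:
    """Choose a server for one HTTP request."""
    pool = pick_pool(path)
    morsel = SimpleCookie(cookie_header).get(SESSION_COOKIE)
    if morsel is not None and morsel.value in pool:
        return morsel.value  # session persistence: reuse the same server
    # No usable session: spread requests with a simple, stable hash of the URI.
    return pool[sum(map(ord, path)) % len(pool)]
```

For example, `dispatch("/cart", "SERVERID=app2")` keeps the client on `app2`. A request for `/images/logo.png` always goes to the static pool, even if it carries a cookie naming an application server.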

Although there is some overlap, HAProxy can be used in scenarios where LVS/ipvsadm is not adequate and vice versa:

SSL termination. The front-end load balancers can handle the SSL layer. Thus the cloud nodes do not need access to the SSL keys, and SSL accelerators in the load balancers can be used.

Application level. HAProxy operates at the application level, allowing the load balancing decisions to be influenced by the content stream. This allows for persistence based on cookies and other such filters.

On the other hand, LVS/ipvsadm cannot be fully replaced by HAProxy:

LVS supports “direct routing”, where the load balancer is only in the inbound stream, whereas the outbound traffic is routed to the clients directly. This allows for potentially much higher throughput in asymmetric environments.

LVS supports stateful connection table replication (via conntrackd). This allows for load balancer failover that is transparent to the client and server.

14.2 Configuring Load Balancing with Linux Virtual Server

The following sections give an overview of the main LVS components and concepts. Then we explain how to set up Linux Virtual Server on High Availability Extension.

14.2.1 Director

The main component of LVS is the ip_vs (or IPVS) Kernel code. It implements transport-layer load balancing inside the Linux Kernel (layer-4 switching). The node that runs a Linux Kernel including the IPVS code is called the director. The IPVS code running on the director is the essential feature of LVS.

When clients connect to the director, the incoming requests are load-balanced across all cluster nodes: the director forwards packets to the real servers, using a modified set of routing rules that make the LVS work. For example, connections do not originate or terminate on the director, and it does not send acknowledgments. The director acts as a specialized router that forwards packets from end users to real servers (the hosts that run the applications that process the requests).

No separate package needs to be installed for IPVS: the IPVS Kernel module is included in the kernel-default package.

14.2.2 User Space Controller and Daemons

The ldirectord daemon is a user space daemon for managing Linux Virtual Server and monitoring the real servers in an LVS cluster of load balanced virtual servers. A configuration file, /etc/ha.d/ldirectord.cf, specifies the virtual services and their associated real servers and tells ldirectord how to configure the server as an LVS redirector. When the daemon is initialized, it creates the virtual services for the cluster.

The ldirectord daemon monitors the health of the real servers by periodically requesting a known URL and checking the responses. If a real server fails, it is removed from the list of available servers at the load balancer. When the service monitor detects that the dead server has recovered and is working again, it adds the server back to the list of available servers. For the case that all real servers are down, you can specify a fall-back server to which a Web service is redirected. Typically the fall-back server is localhost, presenting an emergency page about the Web service being temporarily unavailable.
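The health check described above can be sketched in Python as follows. The sketch assumes a negotiate-style check: request a known URL from each real server, search the response for a regular expression, and fall back to a designated server when no real server passes. The `fetch` callback is a hypothetical stand-in for an HTTP client. It is injected so that the example runs without a network. This illustrates the idea only and is not ldirectord's implementation.

```python
import re
from typing import Callable, List, Optional

# Hypothetical HTTP client: fetch(url, timeout) returns the response body or
# raises OSError (which includes timeouts) when the server does not answer.
Fetch = Callable[[str, float], str]


def negotiate_check(fetch: Fetch, server: str, request: str, receive: str,
                    timeout: float = 3.0) -> bool:
    """Request a known URL and test the body against a regular expression."""
    try:
        body = fetch(f"http://{server}/{request}", timeout)
    except OSError:
        return False  # no answer in time: the check fails
    return re.search(receive, body) is not None


def available_servers(fetch: Fetch, servers: List[str], request: str,
                      receive: str, fallback: Optional[str] = None) -> List[str]:
    """Keep the servers that pass the check; use the fall-back if none do."""
    alive = [s for s in servers if negotiate_check(fetch, s, request, receive)]
    if not alive and fallback:
        return [fallback]
    return alive
```

Injecting the client keeps the check logic separate from the transport. The same function can then be exercised with a fake that simulates healthy, failing or silent servers.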

ldirectord uses the ipvsadm tool (package ipvsadm) to manipulate the virtual server table in the Linux Kernel.

14.2.3 Packet Forwarding

The director can send packets from the client to the real servers using one of three methods:

- Network Address Translation (NAT)

Incoming requests arrive at the virtual IP. They are forwarded to the real servers by changing the destination IP address and port to those of the chosen real server. The real server sends the response to the load balancer, which in turn changes the source IP address and port back to those of the virtual service and forwards the response to the client. Thus, the end user receives the replies from the expected source. As all traffic goes through the load balancer, it usually becomes a bottleneck for the cluster.

- IP Tunneling (IP-IP Encapsulation)

IP tunneling enables packets addressed to an IP address to be redirected to another address, possibly on a different network. The LVS sends requests to real servers through an IP tunnel (redirecting to a different IP address) and the real servers reply directly to the client using their own routing tables. Cluster members can be in different subnets.

- Direct Routing

Packets from end users are forwarded directly to the real server. The IP packet is not modified, so the real servers must be configured to accept traffic for the virtual server's IP address. The response from the real server is sent directly to the client. The real servers and load balancers need to be in the same physical network segment.
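The three forwarding methods differ in which addresses are rewritten. The following Python sketch models a packet as a plain source/destination pair, which is a simplifying assumption since real packets carry much more. It shows that:

- NAT rewrites the destination on the way in and the source on the way back.
- IP tunneling wraps the unchanged packet in an outer header addressed to the real server.
- Direct routing leaves the IP packet as it is.

The client address 10.0.0.5 is hypothetical.

```python
from dataclasses import dataclass, replace

VIP = "192.168.0.200:80"  # virtual service from Example 14.1


@dataclass(frozen=True)
class Packet:
    src: str  # "address:port"
    dst: str


@dataclass(frozen=True)
class Encapsulated:
    outer_dst: str  # IP address of the real server (tunnel endpoint)
    inner: Packet   # original packet, still addressed to the VIP


def nat_inbound(pkt: Packet, real_server: str) -> Packet:
    """NAT: replace the VIP destination with the chosen real server."""
    return replace(pkt, dst=real_server)


def nat_outbound(pkt: Packet, vip: str) -> Packet:
    """NAT: rewrite the reply's source back to the VIP for the client."""
    return replace(pkt, src=vip)


def ip_tunnel(pkt: Packet, real_server_ip: str) -> Encapsulated:
    """IP-IP: wrap the untouched packet; the real server replies directly."""
    return Encapsulated(outer_dst=real_server_ip, inner=pkt)


def direct_routing(pkt: Packet) -> Packet:
    """Direct routing: the IP packet is not modified (only the link-layer
    frame is re-addressed, which this sketch does not model)."""
    return pkt


if __name__ == "__main__":
    request = Packet(src="10.0.0.5:40000", dst=VIP)
    forwarded = nat_inbound(request, "192.168.0.110:80")
    reply = Packet(src=forwarded.dst, dst=forwarded.src)
    print(forwarded, nat_outbound(reply, VIP))
```

Only NAT needs the load balancer to see the reply. This is why NAT tends to become the bottleneck, while tunneling and direct routing let the real servers answer clients directly.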

14.2.4 Scheduling Algorithms

Deciding which real server to use for a new connection requested by a client is implemented using different algorithms. They are available as modules and can be adapted to specific needs. For an overview of available modules, refer to the ipvsadm(8) man page.

Upon receiving a connect request from a client, the director assigns a real server to the client based on a schedule. The scheduler is the part of the IPVS Kernel code which decides which real server will get the next new connection.

A more detailed description of Linux Virtual Server scheduling algorithms can be found at http://kb.linuxvirtualserver.org/wiki/IPVS. Furthermore, search for --scheduler in the ipvsadm man page.
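As an illustration of one scheduler, consider weighted least connection (wlc, used in Example 14.1). It assigns a new connection to the real server with the lowest ratio of connections to weight, described as Ci/Wi in the ipvsadm(8) man page. The Python sketch below assumes a plain dictionary of connection counts and weights. The kernel's own bookkeeping differs in detail; for example, it counts active and inactive connections differently.

```python
from typing import Dict, Optional, Tuple


def wlc(servers: Dict[str, Tuple[int, int]]) -> Optional[str]:
    """Pick a real server by weighted least connection.

    `servers` maps a real server to (connections, weight). A server with
    weight 0, such as one quiesced by ldirectord, gets no new connections.
    Returns None if no server can accept a new connection.
    """
    best: Optional[str] = None
    best_ratio = float("inf")
    for name, (conns, weight) in servers.items():
        if weight <= 0:
            continue  # weight 0: keep existing connections, accept no new ones
        ratio = conns / weight
        if ratio < best_ratio:
            best, best_ratio = name, ratio
    return best
```

With equal weights this reduces to plain least connection. A server with weight 2 is allowed to carry about twice as many connections as one with weight 1 before it stops being preferred.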

Related load balancing strategies for HAProxy can be found at http://www.haproxy.org/download/1.6/doc/configuration.txt.

14.2.5 Setting Up IP Load Balancing with YaST

You can configure Kernel-based IP load balancing with the YaST IP Load Balancing module. It is a front-end for ldirectord.

To access the IP Load Balancing dialog, start YaST as root and select › . Alternatively, start the YaST IP Load Balancing module as root on a command line with yast2 iplb.

The YaST module writes its configuration to /etc/ha.d/ldirectord.cf. The tabs available in the YaST module correspond to the structure of the /etc/ha.d/ldirectord.cf configuration file, defining global options and defining the options for the virtual services.

For an example configuration and the resulting processes between load balancers and real servers, refer to Example 14.1, “Simple ldirectord Configuration”.

Note: Global Parameters and Virtual Server Parameters

If a certain parameter is specified in both the virtual server section and in the global section, the value defined in the virtual server section overrides the value defined in the global section.

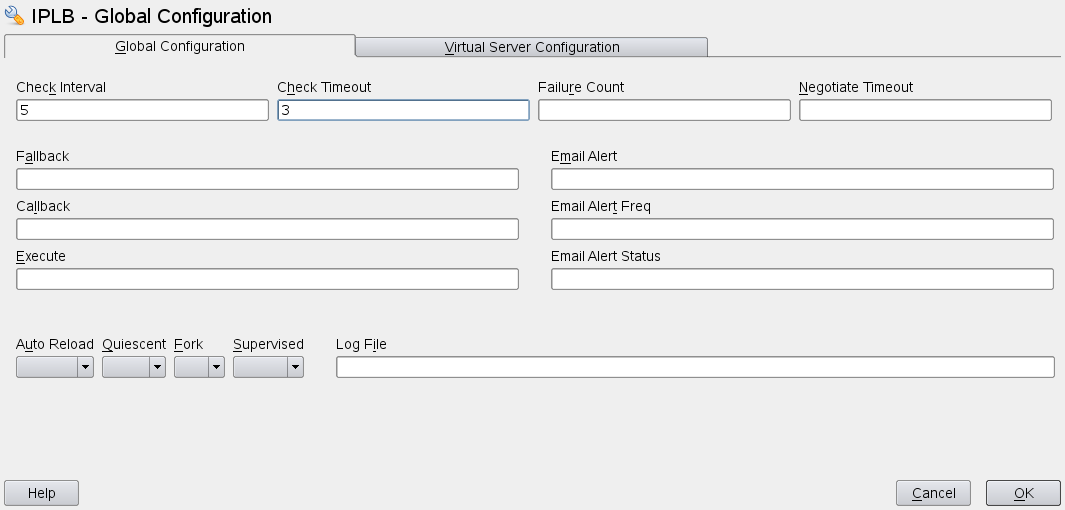

Procedure 14.1: Configuring Global Parameters

The following procedure describes how to configure the most important global parameters. For more details about the individual parameters (and the parameters not covered here), click or refer to the ldirectord man page.

With , define the interval in which ldirectord will connect to each of the real servers to check if they are still online.

With , set the time in which the real server should have responded after the last check.

With you can define how many times ldirectord will attempt to request the real servers until the check is considered failed.

With define a timeout in seconds for negotiate checks.

In , enter the host name or IP address of the Web server onto which to redirect a Web service in case all real servers are down.

If you want the system to send alerts in case the connection status to any real server changes, enter a valid e-mail address in .

With , define after how many seconds the e-mail alert should be repeated if any of the real servers remains inaccessible.

In specify the server states for which e-mail alerts should be sent. If you want to define more than one state, use a comma-separated list.

With , define whether ldirectord should continuously monitor the configuration file for modification. If set to yes, the configuration is automatically reloaded upon changes.

With the switch, define whether to remove failed real servers from the Kernel's LVS table or not. If set to , failed servers are not removed. Instead their weight is set to 0, which means that no new connections will be accepted. Already established connections will persist until they time out.

To use an alternative path for logging, specify a path for the log files in . By default, ldirectord writes its log files to /var/log/ldirectord.log.

Figure 14.1: YaST IP Load Balancing—Global Parameters

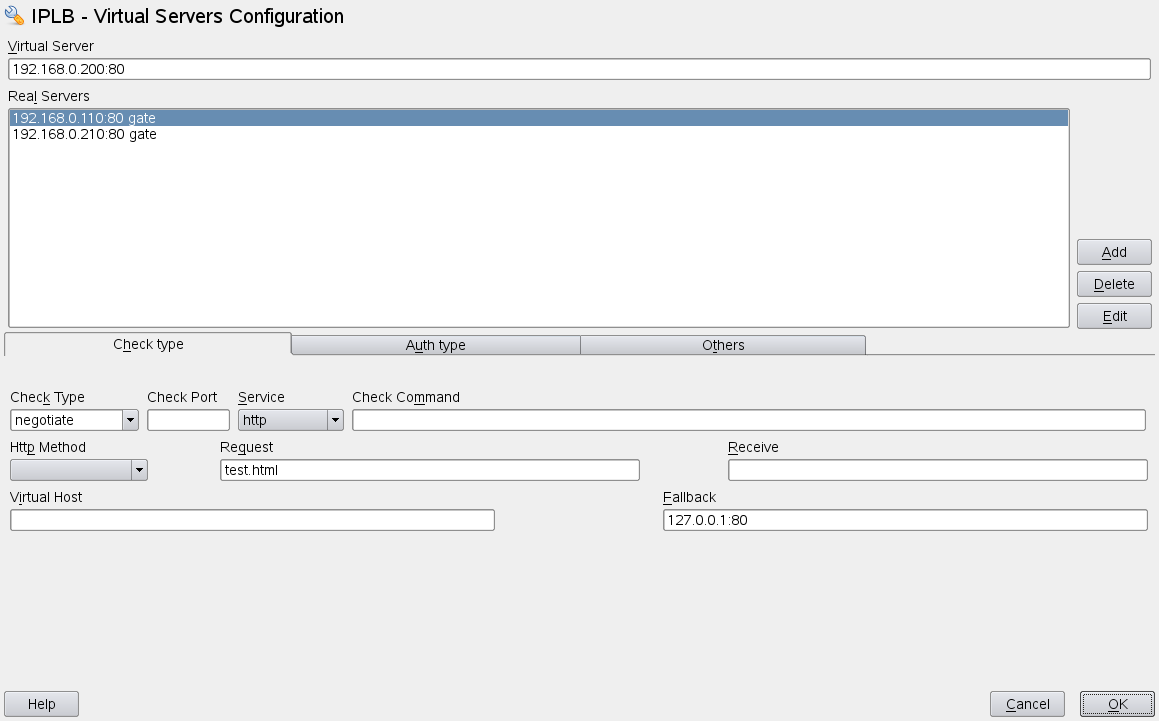

Procedure 14.2: Configuring Virtual Services

You can configure one or more virtual services by defining a couple of parameters for each. The following procedure describes how to configure the most important parameters for a virtual service. For more details about the individual parameters (and the parameters not covered here), click or refer to the ldirectord man page.

In the YaST IP Load Balancing module, switch to the tab.

a new virtual server or an existing virtual server. A new dialog shows the available options.

In enter the shared virtual IP address (IPv4 or IPv6) and port under which the load balancers and the real servers are accessible as LVS. Instead of IP address and port number you can also specify a host name and a service. Alternatively, you can also use a firewall mark. A firewall mark is a way of aggregating an arbitrary collection of VIP:port services into one virtual service.

To specify the , you need to enter the IP addresses (IPv4, IPv6, or host names) of the servers, the ports (or service names) and the forwarding method. The forwarding method must be either gate (direct routing), ipip (IP tunneling) or masq (NAT), see Section 14.2.3, “Packet Forwarding”.

Click the button and enter the required arguments for each real server.

As , select the type of check that should be performed to test if the real servers are still alive. For example, to send a request and check if the response contains an expected string, select Negotiate.

If you have set the to Negotiate, you also need to define the type of service to monitor. Select it from the drop-down box.

In , enter the URI to the object that is requested on each real server during the check intervals.

If you want to check if the response from the real servers contains a certain string (“I am alive” message), define a regular expression that needs to be matched. Enter the regular expression into . If the response from a real server contains this expression, the real server is considered to be alive.

Depending on the type of you have selected in Step 6, you also need to specify further parameters for authentication. Switch to the tab and enter the details like , , , or . For more information, refer to the YaST help text or to the ldirectord man page.

Switch to the tab.

Select the to be used for load balancing. For information on the available schedulers, refer to the ipvsadm(8) man page.

Select the to be used. If the virtual service is specified as an IP address and port, it must be either tcp or udp. If the virtual service is specified as a firewall mark, the protocol must be fwm.

Define further parameters, if needed. Confirm your configuration with . YaST writes the configuration to /etc/ha.d/ldirectord.cf.

Figure 14.2: YaST IP Load Balancing—Virtual Services

Example 14.1: Simple ldirectord Configuration

The values shown in Figure 14.1, “YaST IP Load Balancing—Global Parameters” and Figure 14.2, “YaST IP Load Balancing—Virtual Services”, would lead to the following configuration, defined in /etc/ha.d/ldirectord.cf:

```
autoreload = yes
checkinterval = 5
checktimeout = 3
quiescent = yes
virtual = 192.168.0.200:80
        checktype = negotiate
        fallback = 127.0.0.1:80
        protocol = tcp
        real = 192.168.0.110:80 gate
        real = 192.168.0.120:80 gate
        receive = "still alive"
        request = "test.html"
        scheduler = wlc
        service = http
```

1. `autoreload = yes`: Defines that ldirectord should continuously monitor the configuration file for modification and reload it upon changes.
2. `checkinterval = 5`: Interval in which ldirectord will connect to each of the real servers to check if they are still online.
3. `checktimeout = 3`: Time in which the real server should have responded after the last check.
4. `quiescent = yes`: Defines not to remove failed real servers from the Kernel's LVS table, but to set their weight to 0 instead.
5. `virtual = 192.168.0.200:80`: Virtual IP address (VIP) of the LVS. The LVS is available at port 80.
6. `checktype = negotiate`: Type of check that should be performed to test if the real servers are still alive.
7. `fallback = 127.0.0.1:80`: Server onto which to redirect a Web service in case all real servers for this service are down.
8. `protocol = tcp`: Protocol to be used.
9. `real = 192.168.0.110:80 gate`, `real = 192.168.0.120:80 gate`: Two real servers defined, both available at port 80. The packet forwarding method is gate, meaning that direct routing is used.
10. `receive = "still alive"`: Regular expression that needs to be matched in the response string from the real server.
11. `request = "test.html"`: URI to the object that is requested on each real server during the check intervals.
12. `scheduler = wlc`: Selected scheduler to be used for load balancing.
13. `service = http`: Type of service to monitor.

This configuration would lead to the following process flow: ldirectord will connect to each real server once every 5 seconds (2) and request 192.168.0.110:80/test.html or 192.168.0.120:80/test.html as specified in (9) and (11). If it does not receive the expected still alive string (10) from a real server within 3 seconds (3) of the last check, it will remove the real server from the available pool. However, because of the quiescent=yes setting (4), the real server will not be removed from the LVS table. Instead its weight will be set to 0 so that no new connections to this real server will be accepted. Already established connections will be persistent until they time out.
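The configuration file has two parts: global options, and virtual services whose options are indented below each virtual line. A value set in a virtual section overrides the global one, as described in the note on global and virtual server parameters. Both points can be shown with a minimal Python parser for the example above. It assumes that indented lines belong to the preceding virtual line and that # starts a comment. It handles only the syntax used in Example 14.1 and is not a substitute for ldirectord's own parser.

```python
from typing import Dict, List, Tuple

# Example 14.1 as it would appear in /etc/ha.d/ldirectord.cf.
CONFIG = """\
autoreload = yes
checkinterval = 5
checktimeout = 3
quiescent = yes
virtual = 192.168.0.200:80
        checktype = negotiate
        fallback = 127.0.0.1:80
        protocol = tcp
        real = 192.168.0.110:80 gate
        real = 192.168.0.120:80 gate
        receive = "still alive"
        request = "test.html"
        scheduler = wlc
        service = http
"""

Service = Dict[str, object]


def parse(text: str) -> Tuple[Dict[str, str], List[Service]]:
    """Split an ldirectord-style file into global options and virtual services.

    Simplifying assumptions: '#' starts a comment, indented lines belong to
    the preceding 'virtual =' line, and 'real' may repeat.
    """
    global_opts: Dict[str, str] = {}
    services: List[Service] = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].rstrip()
        if not line.strip():
            continue  # skip blank and comment-only lines
        key, _, value = (part.strip() for part in line.partition("="))
        value = value.strip('"')
        if raw[0].isspace():
            if not services:
                raise ValueError(f"option outside a virtual section: {raw!r}")
            if key == "real":
                services[-1].setdefault("real", []).append(value)
            else:
                services[-1][key] = value
        elif key == "virtual":
            services.append({"virtual": value})
        else:
            global_opts[key] = value
    return global_opts, services


def effective(global_opts: Dict[str, str], service: Service) -> Service:
    """Merge options; a value from the virtual section wins over the global one."""
    return {**global_opts, **service}
```

For example, adding checktimeout = 10 to the virtual section would make `effective()` report 10 for that service while keeping quiescent = yes from the global section.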

14.2.6 Further Setup

Apart from the configuration of ldirectord with YaST, you need to make sure the following conditions are fulfilled to complete the LVS setup:

- The real servers are set up correctly to provide the needed services.

- The load balancing server (or servers) must be able to route traffic to the real servers using IP forwarding. The network configuration of the real servers depends on which packet forwarding method you have chosen.

- To prevent the load balancing server (or servers) from becoming a single point of failure for the whole system, you need to set up one or several backups of the load balancer. In the cluster configuration, configure a primitive resource for ldirectord, so that ldirectord can fail over to other servers in case of hardware failure.

- As the backup of the load balancer also needs the ldirectord configuration file to fulfill its task, make sure the /etc/ha.d/ldirectord.cf is available on all servers that you want to use as backup for the load balancer. You can synchronize the configuration file with Csync2 as described in Section 4.5, “Transferring the Configuration to All Nodes”.

14.3 Configuring Load Balancing with HAProxy

The following section gives an overview of HAProxy and how to set it up with High Availability Extension. The load balancer distributes all requests to its back-end servers. It is configured as active/passive, meaning that if the master fails, the slave becomes the master. In such a scenario, the user will not notice any interruption.

In this section, we will use the following setup:

- A load balancer, reachable at the virtual, floating IP address 192.168.1.99.

- Our servers (usually for Web content) www.example1.com (IP: 192.168.1.200) and www.example2.com (IP: 192.168.1.201).

To configure HAProxy, use the following procedure:

Install the haproxy package.

Create the file /etc/haproxy/haproxy.cfg with the following contents:

```
global
  maxconn 256
  daemon

defaults
  log     global
  mode    http
  option  httplog
  option  dontlognull
  retries 3
  option  redispatch
  maxconn 2000
  timeout connect 5000
  timeout client  50s
  timeout server  50000

frontend LB
  bind 192.168.1.99:80
  reqadd X-Forwarded-Proto:\ http
  default_backend LB

backend LB
  mode http
  stats enable
  stats hide-version
  stats uri /stats
  stats realm Haproxy\ Statistics
  stats auth haproxy:password
  balance roundrobin
  option  httpclose
  option  forwardfor
  cookie  LB insert
  option  httpchk GET /robots.txt HTTP/1.0
  server web1-srv 192.168.1.200:80 cookie web1-srv check
  server web2-srv 192.168.1.201:80 cookie web2-srv check
```

- `global`: Section which contains process-wide and OS-specific options.
  - `maxconn`: Maximum per-process number of concurrent connections.
  - `daemon`: Recommended mode; HAProxy runs in the background.
- `defaults`: Section which sets default parameters for all other sections following its declaration. Some important lines:
  - `option redispatch`: Enables session redistribution in case of connection failure.
  - `log`: Enables logging of events and traffic.
  - `mode http`: Operates in HTTP mode (recommended mode for HAProxy). In this mode, a request is analyzed before a connection to any server is performed. Requests that are not RFC-compliant are rejected.
  - `timeout connect`: The maximum time to wait for a connection attempt to a server to succeed. Values without a unit are in milliseconds, so 5000 means 5 seconds.
  - `timeout client`: The maximum time of inactivity on the client side.
  - `timeout server`: The maximum time of inactivity on the server side (50000 milliseconds, the same as the 50 s client timeout).
- `frontend LB`: Front end which listens on the virtual IP address 192.168.1.99, port 80 (`bind`), and passes all requests to the back end LB (`default_backend`).
- `backend LB`: Back end which defines the real servers and how requests are distributed among them:
  - `stats enable`, `stats auth`: `stats enable` enables statistics reporting. `stats auth` restricts access to the statistics page (at /stats, as set by `stats uri`) to a specific account; haproxy:password are the credentials for the HAProxy statistics report page.
  - `balance roundrobin`: Load balancing works in a round-robin process. For other algorithms, see http://cbonte.github.io/haproxy-dconv/configuration-1.5.html#4-balance.
  - `option forwardfor`: Adds the HTTP X-Forwarded-For header into the request. You need this option if you want to preserve the client's IP address.

Test your configuration file:

```
root # haproxy -f /etc/haproxy/haproxy.cfg -c
```

Add the following line to Csync2's configuration file /etc/csync2/csync2.cfg to make sure the HAProxy configuration file is included:

```
include /etc/haproxy/haproxy.cfg;
```

Synchronize it:

```
root # csync2 -f /etc/haproxy/haproxy.cfg
root # csync2 -xv
```

Note: The Csync2 configuration part assumes that the HA nodes were configured using ha-cluster-bootstrap. For details, see the Installation and Setup Quick Start.

Make sure HAProxy is disabled on both load balancers (alice and bob), as it is started by Pacemaker:

```
root # systemctl disable haproxy
```

Configure a new CIB:

```
root # crm configure
crm(live)# cib new haproxy-config
crm(haproxy-config)# primitive haproxy systemd:haproxy \
    op start timeout=120 interval=0 \
    op stop timeout=120 interval=0 \
    op monitor timeout=100 interval=5s \
    meta target-role=Started
crm(haproxy-config)# primitive vip IPaddr2 \
    params ip=192.168.1.99 nic=eth0 cidr_netmask=23 broadcast=192.168.1.255 \
    op monitor interval=5s timeout=120 on-fail=restart \
    meta target-role=Started
crm(haproxy-config)# order haproxy-after-IP Mandatory: vip haproxy
crm(haproxy-config)# colocation haproxy-with-public-IPs inf: haproxy vip
crm(haproxy-config)# group g-haproxy vip haproxy
```

Verify the new CIB and correct any errors:

```
crm(haproxy-config)# verify
```

Commit the new CIB:

```
crm(haproxy-config)# cib use live
crm(live)# cib commit haproxy-config
```
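The back end LB in the HAProxy configuration above combines round robin with cookie-based persistence (cookie LB insert) and health checks (check). The following Python sketch is a hypothetical model, not HAProxy code. It shows roughly how these settings interact:

- A client without a valid LB cookie is assigned the next available server in round-robin order and receives a cookie naming it.
- Later requests carrying that cookie stay on the same server while its health check passes.
- If the server is marked down, the request is balanced again and a new cookie is set.

It assumes that the cookie value equals the server name, as it does in this configuration.

```python
from itertools import cycle
from typing import Dict, List, Optional, Tuple


class Backend:
    """Hypothetical model of 'balance roundrobin' + 'cookie LB insert' + 'check'."""

    def __init__(self, servers: List[str]) -> None:
        # Health state per server, as maintained by the 'check' keyword.
        self.up: Dict[str, bool] = {name: True for name in servers}
        self._rr = cycle(servers)

    def _next_up(self) -> str:
        """Round robin over the servers, skipping those marked down."""
        for _ in range(len(self.up)):
            name = next(self._rr)
            if self.up[name]:
                return name
        raise RuntimeError("no server available")

    def route(self, lb_cookie: Optional[str]) -> Tuple[str, Optional[str]]:
        """Return (server, value for 'Set-Cookie: LB=...', or None if unchanged)."""
        if lb_cookie is not None and self.up.get(lb_cookie, False):
            return lb_cookie, None  # persistent: stay on the same server
        server = self._next_up()  # no cookie, unknown server, or server down
        return server, server     # insert a cookie naming the chosen server


if __name__ == "__main__":
    lb = Backend(["web1-srv", "web2-srv"])
    print(lb.route(None))        # first visit: balanced, cookie inserted
    print(lb.route("web1-srv"))  # returning client sticks to web1-srv
    lb.up["web1-srv"] = False    # health check marks web1-srv down
    print(lb.route("web1-srv"))  # balanced again to web2-srv, new cookie
```

Persistence keeps each user's session state on one server. The health check ensures that this stickiness never pins a client to a server that is known to be down.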

14.4 For More Information

- Project home page at http://www.linuxvirtualserver.org/.

- For more information about ldirectord, refer to its comprehensive man page.

- LVS Knowledge Base: http://kb.linuxvirtualserver.org/wiki/Main_Page