6 Installation of virtualization components

You can install the virtualization tools required to run a VM Host Server in two ways:

- During the host OS installation, by selecting a specific system role.
- After the host OS is installed, by running the corresponding YaST module.

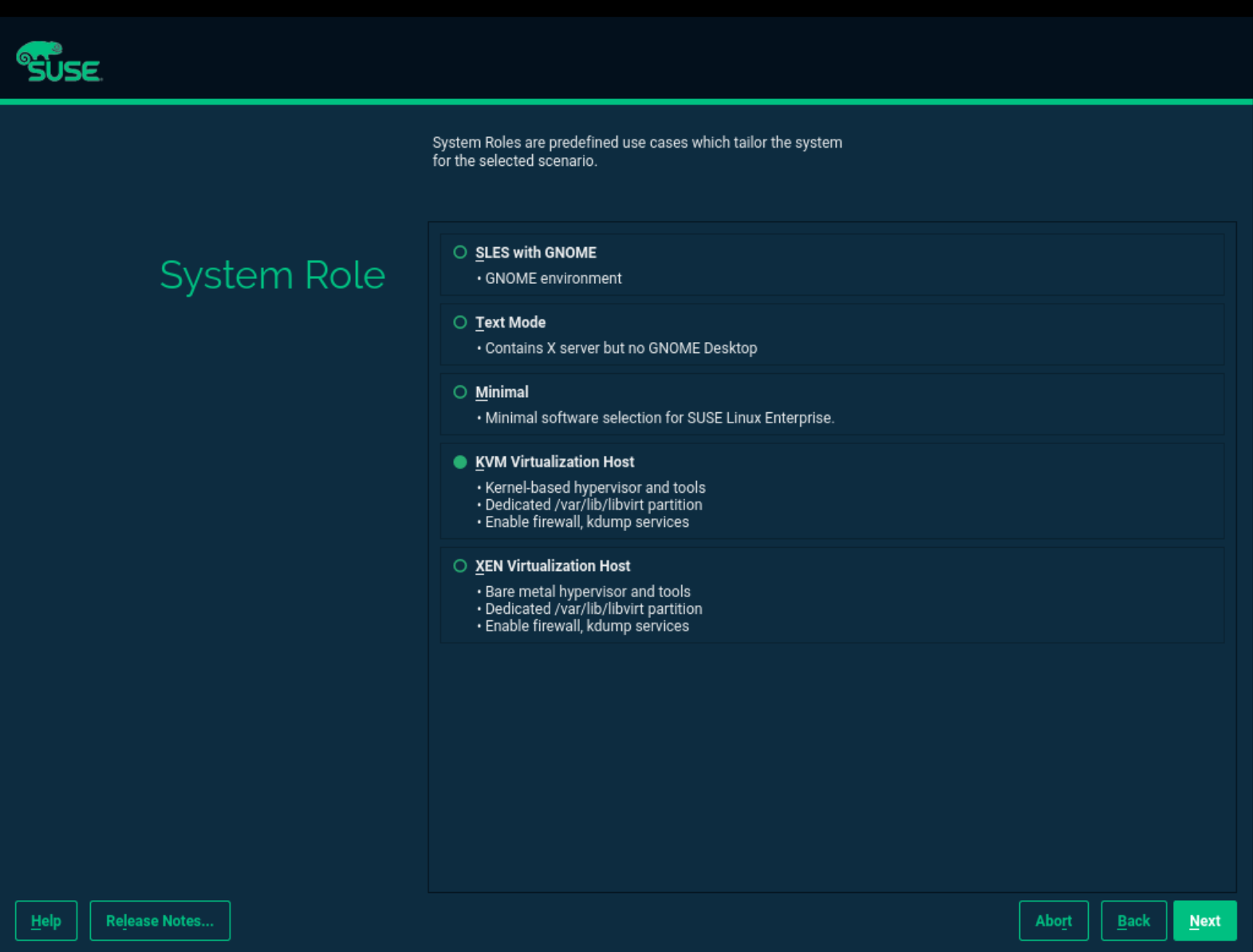

6.1 Specifying a system role

You can install all the tools required for virtualization during the installation of the host SUSE Linux Enterprise Server. During the installation steps (refer to Chapter 8, "Installation steps"), you are presented with a screen for selecting the system role.

Here you can select either the KVM or the Xen virtualization role. The appropriate software selection and setup are then performed automatically during the OS installation.

Both virtualization system roles create a dedicated /var/lib/libvirt partition and enable the firewall and Kdump services.

6.2 Running the yast2-vm module

Depending on the scope of the installation, it is possible that none of the virtualization tools are installed on your system yet. They are installed automatically when you configure the hypervisor with the corresponding YaST module. If this module is not available in YaST, install the yast2-vm package.

6.2.1 Installing KVM

To install KVM and the KVM tools, proceed as follows:

Verify that the yast2-vm package is installed. This package is YaST's configuration tool that simplifies the installation of hypervisors.

Start YaST and open the module for installing hypervisors and tools.

Select the KVM server component for a minimal installation of QEMU tools. Also select the KVM tools component if you want a libvirt-based management stack. Confirm your selection.

To enable normal networking for the VM Guest, using a network bridge is recommended. YaST offers to configure a bridge on the VM Host Server automatically. Accept this offer, or decline it if you do not want YaST to create the bridge.

After the setup has finished, you can start setting up VM Guests. Rebooting the VM Host Server is not required.

6.2.2 Installing Xen

To install Xen and Xen tools, proceed as follows:

Start YaST and open the module for installing hypervisors and tools.

Select the Xen server component for a minimal installation of Xen tools. Also select the Xen tools component if you want a libvirt-based management stack. Confirm your selection.

To enable normal networking for the VM Guest, using a network bridge is recommended. YaST offers to configure a bridge on the VM Host Server automatically. Accept this offer, or decline it if you do not want YaST to create the bridge.

After the setup has finished, reboot the machine into the Xen kernel.

Tip: Default boot kernel

If everything works as expected, use YaST to make the Xen-enabled kernel the default boot kernel. For more information about changing the default kernel, see Section 14.3, "Configuring the boot loader with YaST".

6.3 Patterns

You can also use Zypper and patterns to install the virtualization packages. Run the following command, replacing PATTERN with one of the available patterns:

```
> sudo zypper in -t pattern PATTERN
```

The available patterns are:

- KVM
  - kvm_server: sets up the KVM VM Host Server with QEMU tools for management
  - kvm_tools: installs the libvirt tools for managing and monitoring VM Guests

- Xen
  - xen_server: sets up the Xen VM Host Server with Xen tools for management
  - xen_tools: installs the libvirt tools for managing and monitoring VM Guests
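For scripted host setups, the pattern choice above can be expressed as a small helper that builds the Zypper command line. This is a minimal sketch: the PATTERNS mapping and the function name are illustrative assumptions, not part of Zypper or YaST, and the helper only prints the command rather than running it.

```python
import shlex

# Pattern names from the list above; the PATTERNS mapping and the function
# name are illustrative assumptions, not part of Zypper or YaST.
PATTERNS = {
    "kvm": ("kvm_server", "kvm_tools"),
    "xen": ("xen_server", "xen_tools"),
}


def zypper_pattern_command(hypervisor: str, with_tools: bool = True) -> list[str]:
    """Return the argv for installing the patterns of one hypervisor."""
    server, tools = PATTERNS[hypervisor.lower()]
    patterns = [server, tools] if with_tools else [server]
    # zypper accepts several pattern names after "in -t pattern".
    return ["zypper", "in", "-t", "pattern", *patterns]


if __name__ == "__main__":
    # Only prints the command; run it yourself as root to install.
    print(shlex.join(zypper_pattern_command("kvm")))
    # prints: zypper in -t pattern kvm_server kvm_tools
```

Passing with_tools=False corresponds to the minimal server-only setup.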

6.4 Installing UEFI support

We support UEFI Secure Boot on AMD64/Intel 64 guests only. KVM guests support UEFI Secure Boot by using the OVMF firmware. Xen HVM guests support booting from the OVMF firmware as well, but they do not support UEFI Secure Boot.

UEFI support is provided by OVMF (Open Virtual Machine Firmware). To enable UEFI boot, first install the qemu-ovmf-x86_64 or qemu-uefi-aarch64 package depending on the architecture of the guest.

The firmware used by virtual machines is selected automatically. The auto-selection is based on the JSON files in the firmware package described above. The libvirt QEMU driver parses those files when it loads, so it knows the capabilities of the various types of firmware. When the user then selects the type of firmware and any desired features (for example, support for UEFI Secure Boot), libvirt can find a firmware file that satisfies the user's requirements.

For example, to specify EFI with UEFI Secure Boot, use the following configuration:

```xml
<os firmware='efi'>
  <loader secure='yes'/>
</os>
```
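The auto-selection idea described above can be illustrated with a simplified matcher over the JSON descriptors in /usr/share/qemu/firmware. This is a sketch only, assuming the descriptor fields interface-types, targets, features, and mapping as defined by QEMU's firmware descriptor format, and assuming that files are tried in file-name order (so a prefix such as 60- acts as a priority). libvirt's real selection also checks machine types, SMM requirements, and NVRAM templates, so do not treat this as its actual algorithm.

```python
import json
from pathlib import Path

# Directory used by the firmware packages shown in this section.
FIRMWARE_JSON_DIR = Path("/usr/share/qemu/firmware")


def load_descriptors(directory: Path = FIRMWARE_JSON_DIR) -> list[dict]:
    """Load all JSON descriptors, sorted by file name (prefix acts as priority)."""
    return [json.loads(p.read_text()) for p in sorted(directory.glob("*.json"))]


def executable_of(desc: dict):
    """Return the firmware image path of a descriptor, if it names one."""
    mapping = desc.get("mapping", {})
    # Flash-based firmware lists the code image under "executable";
    # memory-mapped firmware names a single "filename".
    return mapping.get("executable", {}).get("filename") or mapping.get("filename")


def pick_firmware(descriptors, arch="x86_64", required=("secure-boot",)):
    """Return the first UEFI image for `arch` that offers every required feature."""
    for desc in descriptors:
        if "uefi" not in desc.get("interface-types", []):
            continue
        if not any(t.get("architecture") == arch for t in desc.get("targets", [])):
            continue
        if all(feature in desc.get("features", []) for feature in required):
            return executable_of(desc)
    return None


if __name__ == "__main__" and FIRMWARE_JSON_DIR.is_dir():
    print(pick_firmware(load_descriptors()))
```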

The qemu-ovmf-x86_64 package contains the following important UEFI firmware images. They provide UEFI Secure Boot capability for various VM Guests:

```
# rpm -ql qemu-ovmf-x86_64
[...]
/usr/share/qemu/ovmf-x86_64-smm-ms-code.bin
/usr/share/qemu/ovmf-x86_64-smm-ms-vars.bin
/usr/share/qemu/ovmf-x86_64-smm-opensuse-code.bin
/usr/share/qemu/ovmf-x86_64-smm-opensuse-vars.bin
/usr/share/qemu/ovmf-x86_64-smm-suse-code.bin
/usr/share/qemu/ovmf-x86_64-smm-suse-vars.bin
[...]
```

To use UEFI Secure Boot, select the firmware that matches the guest:

- For SUSE Linux Enterprise guests, use the ovmf-x86_64-smm-suse-code.bin firmware.
- For openSUSE guests, use the ovmf-x86_64-smm-opensuse-code.bin firmware.
- For Microsoft Windows guests, use the ovmf-x86_64-smm-ms-code.bin firmware.
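The guest-to-firmware list above, together with the code/vars pairs in the package listing, can be captured in a small lookup table. This is a minimal sketch: the short guest keys ("sle", "opensuse", "windows") and the function name are hypothetical labels chosen for illustration, and the paths assume the /usr/share/qemu location shown in the rpm -ql output.

```python
from pathlib import PurePosixPath

FIRMWARE_DIR = PurePosixPath("/usr/share/qemu")

# Guest family -> Secure Boot code image, as listed above.
# The short keys ("sle", "opensuse", "windows") are hypothetical labels.
SECURE_BOOT_CODE = {
    "sle": "ovmf-x86_64-smm-suse-code.bin",
    "opensuse": "ovmf-x86_64-smm-opensuse-code.bin",
    "windows": "ovmf-x86_64-smm-ms-code.bin",
}


def secure_boot_images(guest: str) -> tuple[str, str]:
    """Return (code image, matching vars template) for a guest family."""
    code = SECURE_BOOT_CODE[guest]
    # Each *-code.bin in the package listing has a *-vars.bin companion.
    variables = code.replace("-code.bin", "-vars.bin")
    return str(FIRMWARE_DIR / code), str(FIRMWARE_DIR / variables)


if __name__ == "__main__":
    for family in SECURE_BOOT_CODE:
        print(family, *secure_boot_images(family))
```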

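For Arm guests, the firmware is provided by the qemu-uefi-aarch64 package (AArch64) or the qemu-uefi-aarch32 package (32-bit Arm). For example, the qemu-uefi-aarch32 package contains the following files: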

```
# rpm -ql qemu-uefi-aarch32
[...]
/usr/share/qemu/aavmf-aarch32-code.bin
/usr/share/qemu/aavmf-aarch32-vars.bin
/usr/share/qemu/firmware
/usr/share/qemu/firmware/60-aavmf-aarch32.json
/usr/share/qemu/qemu-uefi-aarch32.bin
```

The *-code.bin files are the UEFI firmware files. The *-vars.bin files are the corresponding variable store images, which can be used as templates for a per-VM non-volatile store. When first creating the VM, libvirt copies the specified vars template to a per-VM path under /var/lib/libvirt/qemu/nvram/. Files without code or vars in the name can be used as a single UEFI image. They are less useful, because UEFI variables do not persist across power cycles of the VM.

The *-ms*.bin files contain the UEFI CA keys found on real hardware, so they are configured as the default in libvirt. Likewise, the *-suse*.bin files contain preinstalled SUSE keys. There is also a set of files with no preinstalled keys.
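Instead of relying on auto-selection, a guest can pin one specific code image and vars template. The sketch below generates such an `<os>` fragment with Python's ElementTree; it assumes libvirt's explicit `<loader>`/`<nvram template=...>` syntax and a q35 machine type. Secure Boot images built with SMM also need `<smm state='on'/>` under `<features>`, so the sketch emits that element too. The function names are hypothetical.

```python
import xml.etree.ElementTree as ET


def secure_boot_os(code_path: str, vars_template: str, machine: str = "q35") -> ET.Element:
    """Build an <os> element that pins an explicit Secure Boot firmware image."""
    os_el = ET.Element("os")
    type_el = ET.SubElement(os_el, "type", arch="x86_64", machine=machine)
    type_el.text = "hvm"
    # Read-only pflash code image with Secure Boot requested.
    loader = ET.SubElement(os_el, "loader", readonly="yes", secure="yes", type="pflash")
    loader.text = code_path
    # Only the template is given; libvirt creates the per-VM copy
    # under /var/lib/libvirt/qemu/nvram/ when the VM is first created.
    ET.SubElement(os_el, "nvram", template=vars_template)
    return os_el


def smm_features() -> ET.Element:
    """SMM-based Secure Boot firmware needs SMM enabled in <features>."""
    features = ET.Element("features")
    ET.SubElement(features, "smm", state="on")
    return features


if __name__ == "__main__":
    os_el = secure_boot_os(
        "/usr/share/qemu/ovmf-x86_64-smm-suse-code.bin",
        "/usr/share/qemu/ovmf-x86_64-smm-suse-vars.bin",
    )
    print(ET.tostring(os_el, encoding="unicode"))
    print(ET.tostring(smm_features(), encoding="unicode"))
```

Both fragments belong inside the guest's `<domain>` element, for example via virsh edit.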

For details, see Using UEFI Secure Boot and http://www.linux-kvm.org/downloads/lersek/ovmf-whitepaper-c770f8c.txt.

6.5 Enable nested virtualization in KVM

KVM's nested virtualization is still a technology preview. It is provided for testing purposes and is not supported.

Nested guests are KVM guests that run inside a KVM guest. When describing nested guests, we use the following virtualization layers:

- L0

A bare metal host running KVM.

- L1

A virtual machine running on L0. Because it can run another KVM, it is called a guest hypervisor.

- L2

A virtual machine running on L1. It is called a nested guest.

Nested virtualization has many advantages. You can benefit from it in the following scenarios:

- Manage your own virtual machines directly with your hypervisor of choice in cloud environments.
- Enable the live migration of hypervisors and their guest virtual machines as a single entity.
- Use it for software development and testing.

To enable nesting temporarily, unload the module and reload it with the nested KVM module parameter.

For Intel CPUs, run:

```
> sudo modprobe -r kvm_intel && sudo modprobe kvm_intel nested=1
```

For AMD CPUs, run:

```
> sudo modprobe -r kvm_amd && sudo modprobe kvm_amd nested=1
```
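To verify that reloading the module took effect, you can read the module's nested parameter from sysfs. The sketch below assumes the parameter is exposed at /sys/module/MODULE/parameters/nested and that kvm_intel typically reports Y/N while kvm_amd typically reports 1/0; the function names are hypothetical.

```python
from pathlib import Path


def parse_nested(value: str) -> bool:
    """Interpret the content of a KVM 'nested' module parameter file."""
    # kvm_intel typically reports Y/N, kvm_amd typically reports 1/0.
    return value.strip() in ("Y", "y", "1")


def nested_enabled(module: str) -> bool:
    """Return True if the loaded KVM module reports nesting as enabled."""
    param = Path("/sys/module") / module / "parameters" / "nested"
    if not param.exists():
        return False  # module not loaded, or no such parameter
    return parse_nested(param.read_text())


if __name__ == "__main__":
    for module in ("kvm_intel", "kvm_amd"):
        print(module, nested_enabled(module))
```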

To enable nesting permanently, enable the nested KVM module parameter in the /etc/modprobe.d/kvm_*.conf file that matches your CPU.

For Intel CPUs, edit /etc/modprobe.d/kvm_intel.conf and add the following line:

```
options kvm_intel nested=1
```

For AMD CPUs, edit /etc/modprobe.d/kvm_amd.conf and add the following line:

```
options kvm_amd nested=1
```
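Which of the two files applies depends on whether the CPU advertises the vmx (Intel) or svm (AMD) flag. The sketch below derives the module name from the x86 flags line of /proc/cpuinfo and prints the file and line to add. It assumes an x86 host, does not write the file (that requires root), and uses hypothetical function names.

```python
from pathlib import Path


def kvm_module_for(cpuinfo: str) -> str:
    """Pick kvm_intel or kvm_amd from the x86 'flags' line of /proc/cpuinfo."""
    for line in cpuinfo.splitlines():
        if line.startswith("flags"):
            flags = line.split(":", 1)[1].split()
            if "vmx" in flags:
                return "kvm_intel"
            if "svm" in flags:
                return "kvm_amd"
    raise RuntimeError("CPU advertises neither vmx nor svm")


def modprobe_conf(module: str) -> tuple[str, str]:
    """Return the config file path and the line that enables nesting."""
    return f"/etc/modprobe.d/{module}.conf", f"options {module} nested=1\n"


if __name__ == "__main__":
    try:
        module = kvm_module_for(Path("/proc/cpuinfo").read_text())
    except (OSError, RuntimeError) as err:
        print(f"cannot determine KVM module: {err}")
    else:
        path, line = modprobe_conf(module)
        # Only prints the setting; writing the file requires root.
        print(f"{path}: {line}", end="")
```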

When your L0 host is capable of nesting, you can start an L1 guest in one of the following ways:

- Use the -cpu host QEMU command line option.
- Add the vmx (for Intel CPUs) or the svm (for AMD CPUs) CPU feature to the -cpu QEMU command line option, which enables virtualization for the virtual CPU.
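The two options above differ only in the -cpu argument. The sketch below assembles an illustrative QEMU command line for an L1 guest; the memory size, the disk image path, and the qemu64 base model used with the feature variant are assumptions for illustration, not recommended values, and the function names are hypothetical.

```python
import shlex


def l1_cpu_option(mode: str = "host", vendor_flag: str = "vmx", base_model: str = "qemu64") -> list[str]:
    """Return the -cpu arguments for an L1 guest that can run KVM itself."""
    if mode == "host":
        # Pass the host CPU model, including vmx/svm, through to the guest.
        return ["-cpu", "host"]
    if mode != "feature":
        raise ValueError("mode must be 'host' or 'feature'")
    if vendor_flag not in ("vmx", "svm"):
        raise ValueError("vendor_flag must be 'vmx' (Intel) or 'svm' (AMD)")
    # Add only the virtualization feature to a named CPU model.
    return ["-cpu", f"{base_model},+{vendor_flag}"]


def l1_command(disk_image: str, mode: str = "host", vendor_flag: str = "vmx") -> list[str]:
    """Assemble a minimal, illustrative QEMU command line for an L1 guest."""
    return [
        "qemu-system-x86_64", "-enable-kvm", "-m", "4096",
        *l1_cpu_option(mode, vendor_flag),
        "-drive", f"file={disk_image},format=qcow2",
    ]


if __name__ == "__main__":
    print(shlex.join(l1_command("/var/lib/libvirt/images/l1.qcow2", mode="feature", vendor_flag="svm")))
```

With libvirt-managed guests, the equivalent of -cpu host is usually the host-passthrough CPU mode in the domain XML.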

6.5.1 VMware ESX as a guest hypervisor

If you use VMware ESX as a guest hypervisor on top of a KVM bare metal hypervisor, you may experience unstable network communication. This problem occurs especially between nested KVM guests and the KVM bare metal hypervisor or the external network. It is caused by the following default CPU configuration of the nested KVM guest:

```xml
<cpu mode='host-model' check='partial'/>
```

To fix it, modify the CPU configuration as follows:

```xml
[...]
<cpu mode='host-passthrough' check='none'>
  <cache mode='passthrough'/>
</cpu>
[...]
```
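If you manage many guests, the same change can be applied to a dumped domain XML programmatically. The sketch below uses ElementTree to replace the `<cpu>` settings with host-passthrough and cache passthrough, dropping `<model>`, `<vendor>`, and any old `<cache>` child while keeping other children such as `<topology>`. The function name is hypothetical; a typical round trip would be virsh dumpxml GUEST, transform, then virsh define, where GUEST is a placeholder for your domain name.

```python
import xml.etree.ElementTree as ET


def use_host_passthrough(domain_xml: str) -> str:
    """Rewrite a domain's <cpu> element to host-passthrough with cache passthrough."""
    root = ET.fromstring(domain_xml)
    cpu = root.find("cpu")
    if cpu is None:
        cpu = ET.SubElement(root, "cpu")
    # Replace the host-model settings (mode/check and any other attributes).
    cpu.attrib.clear()
    cpu.set("mode", "host-passthrough")
    cpu.set("check", "none")
    # Drop <model>, <vendor> and any old <cache>; keep e.g. <topology>.
    for child in list(cpu):
        if child.tag in ("model", "vendor", "cache"):
            cpu.remove(child)
    ET.SubElement(cpu, "cache", mode="passthrough")
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    sample = "<domain type='kvm'><name>esx</name><cpu mode='host-model' check='partial'/></domain>"
    print(use_host_passthrough(sample))
```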