23 DRBD

The distributed replicated block device (DRBD*) allows you to create a mirror of two block devices that are located at two different sites across an IP network. When used with Corosync, DRBD supports distributed high-availability Linux clusters. This chapter shows you how to install and set up DRBD.

23.1 Conceptual overview

DRBD replicates data on the primary device to the secondary device in a way that ensures that both copies of the data remain identical. Think of it as a networked RAID 1. It mirrors data in real-time, so its replication occurs continuously. Applications do not need to know that in fact their data is stored on different disks.

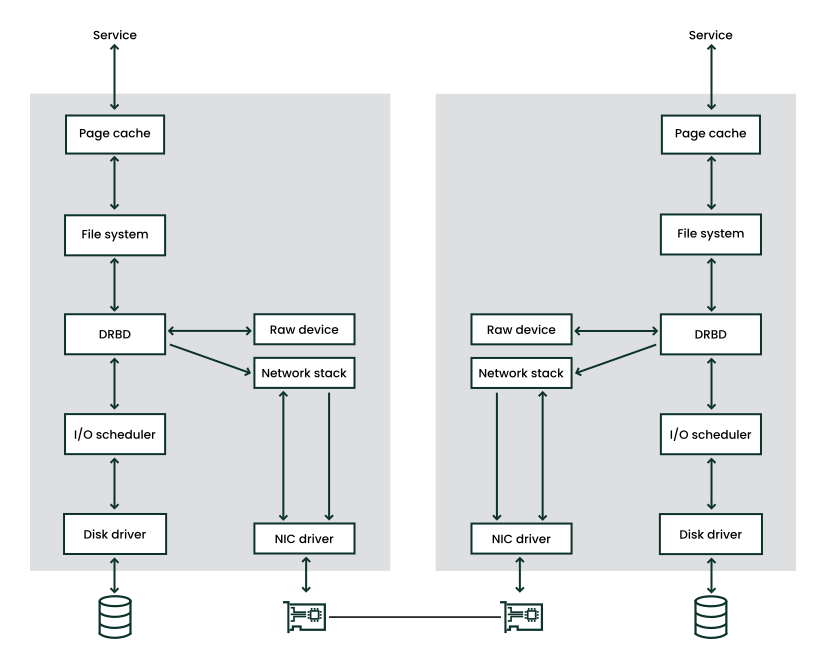

DRBD is a Linux Kernel module and sits between the I/O scheduler at the lower end and the file system at the upper end (see Figure 23.1, “Position of DRBD within Linux”). Users communicate with DRBD through the high-level command drbdadm. For maximum flexibility, DRBD also comes with the low-level tool drbdsetup.

The data traffic between mirrors is not encrypted. For secure data exchange, you should deploy a Virtual Private Network (VPN) solution for the connection.

DRBD allows you to use any block device supported by Linux, usually:

partition or complete hard disk

software RAID

Logical Volume Manager (LVM)

Enterprise Volume Management System (EVMS)

By default, DRBD uses the TCP ports 7788 and higher for communication between DRBD nodes. Make sure that your firewall does not prevent communication on the used ports.

You must set up the DRBD devices before creating file systems on them. Everything pertaining to user data should be done solely via the /dev/drbdN device and not on the raw device, as DRBD uses the last part of the raw device for metadata. Using the raw device causes inconsistent data.

With udev integration, you also get symbolic links in the form /dev/drbd/by-res/RESOURCE, which are easier to use and protect you against remembering the wrong minor number of the device.
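Because writing user data to the raw backing device corrupts data, a script that formats or mounts DRBD storage can refuse any path that is not a DRBD device. The following POSIX shell function is a minimal sketch of such a guard. The name require_drbd_device is hypothetical, and the function only inspects the path string: it assumes DRBD devices are addressed as /dev/drbdN or through the /dev/drbd/by-res/ links, so it does not prove that a device is actually backed by DRBD.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the given path names a DRBD device
# (/dev/drbdN or a udev link below /dev/drbd/by-res/), never a raw backing disk.
require_drbd_device() {
    case $1 in
        /dev/drbd/by-res/?*)
            return 0 ;;
        /dev/drbd[0-9]*)
            # Reject names such as /dev/drbd0x: everything after "drbd" must be digits.
            case ${1#/dev/drbd} in
                *[!0-9]*) ;;
                *) return 0 ;;
            esac ;;
    esac
    echo "refusing to use $1: not a DRBD device" >&2
    return 1
}
```

For example, require_drbd_device /dev/drbd0 && mkfs.ext3 /dev/drbd0 creates the file system only when the target looks like a DRBD device. A path such as /dev/disk/by-id/example-disk1 is rejected.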

For example, if the raw device is 1024 MB in size, the DRBD device has only 1023 MB available for data, with about 70 KB hidden and reserved for the metadata. Any attempt to access the remaining kilobytes via raw disks fails because it is not available for user data.

23.2 Installing DRBD services

Install the High Availability pattern on both SUSE Linux Enterprise Server machines in your networked cluster as described in Part I, “Installation and setup”. Installing the pattern also installs the DRBD program files.

If you do not need the complete cluster stack but only want to use DRBD, install the packages drbd, drbd-kmp-FLAVOR, drbd-utils, and yast2-drbd.

23.3 Setting up the DRBD service

The following procedures use the server names alice and bob, and the DRBD resource name r0. They set up alice as the primary node and use /dev/disk/by-id/example-disk1 for storage. Make sure to modify the instructions to use your own nodes and file names.

The following procedures assume that the cluster nodes use the TCP port 7788. Make sure this port is open in your firewall.

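To set up DRBD, perform the following procedures:

Section 23.3.1, “Preparing your system to use DRBD”

Configure DRBD using one of the following methods: Section 23.3.2, “Configuring DRBD manually” or Section 23.3.3, “Configuring DRBD with YaST”

Section 23.3.4, “Initializing and formatting DRBD resources”

Section 23.3.5, “Creating cluster resources for DRBD”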

23.3.1 Preparing your system to use DRBD

Before you start configuring DRBD, you might need to perform some or all of the following steps:

Make sure the block devices in your Linux nodes are ready and partitioned (if needed).

If your disk already contains a file system that you do not need anymore, destroy the file system structure with the following command:

```
# dd if=/dev/zero of=YOUR_DEVICE count=16 bs=1M
```

If you have more file systems to destroy, repeat this step on all devices you want to include in your DRBD setup.

If the cluster is already using DRBD, put your cluster in maintenance mode:

```
# crm maintenance on
```

If you skip this step when your cluster already uses DRBD, a syntax error in the live configuration leads to a service shutdown. Alternatively, you can also use drbdadm -c FILE to test a configuration file.
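When several devices must be wiped, the dd step above can be wrapped in a loop. This is a minimal sketch, not a SUSE-provided tool. The function name wipe_fs_start is hypothetical, and the function irreversibly overwrites the first 16 MiB of every path it receives.

```shell
#!/bin/sh
# Hypothetical helper: overwrite the first 16 MiB of each given device with
# zeros (the same dd call as above), destroying any file system structure there.
# WARNING: this is irreversible. Double-check every argument before running it.
wipe_fs_start() {
    [ "$#" -gt 0 ] || { echo "usage: wipe_fs_start DEVICE..." >&2; return 2; }
    for dev in "$@"; do
        echo "wiping start of $dev" >&2
        # Stop at the first device that cannot be written.
        dd if=/dev/zero of="$dev" count=16 bs=1M 2>/dev/null || return 1
    done
}
```

For example, wipe_fs_start /dev/disk/by-id/example-disk1 wipes one device. The device name is the placeholder used throughout this chapter, so replace it with your own.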

23.3.2 Configuring DRBD manually

The DRBD9 “auto-promote” feature can automatically promote a resource to the primary role when one of its devices is mounted or opened for writing.

Support for the auto-promote feature is currently restricted. With DRBD 9, SUSE supports the same use cases that were also supported with DRBD 8. Use cases beyond that, such as setups with more than two nodes, are not supported.

To set up DRBD manually, proceed as follows:

Beginning with DRBD version 8.3, the former configuration file is split into separate files, located under the directory /etc/drbd.d/.

Open the file /etc/drbd.d/global_common.conf. It already contains global, pre-defined values. Go to the startup section and insert these lines:

```
startup {
    # wfc-timeout degr-wfc-timeout outdated-wfc-timeout
    # wait-after-sb;
    wfc-timeout 100;
    degr-wfc-timeout 120;
}
```

These options are used to reduce the timeouts when booting. See https://docs.linbit.com/docs/users-guide-9.0/#ch-configure for more details.

Create the file /etc/drbd.d/r0.res. Change the lines according to your situation and save it:

```
resource r0 {                                      # 1
  device /dev/drbd0;                               # 2
  disk /dev/disk/by-id/example-disk1;              # 3
  meta-disk internal;                              # 4

  on alice {                                       # 5
    address 192.168.1.10:7788;                     # 6
    node-id 0;                                     # 7
  }
  on bob {                                         # 5
    address 192.168.1.11:7788;                     # 6
    node-id 1;                                     # 7
  }

  disk {
    resync-rate 10M;                               # 8
  }

  connection-mesh {                                # 9
    hosts alice bob;
  }

  net {
    protocol C;                                    # 10
    fencing resource-and-stonith;                  # 11
  }

  handlers {                                       # 12
    fence-peer "/usr/lib/drbd/crm-fence-peer.9.sh";
    after-resync-target "/usr/lib/drbd/crm-unfence-peer.9.sh";
  }
}
```

1. A DRBD resource name that describes the associated service, for example, nfs, http, mysql_0 or postgres_wal. In this example, the more general name r0 is used.

2. The device name for DRBD and its minor number. In the example above, the minor number 0 is used for DRBD. The udev integration scripts give you a symbolic link /dev/drbd/by-res/r0/0. Alternatively, omit the device node name in the configuration and use the following line instead: drbd0 minor 0 (/dev/ is optional) or /dev/drbd0.

3. The raw device that is replicated between nodes. Note that in this example the devices are the same on both nodes. If you need different devices, move the disk parameter into the on section of each host.

4. The meta-disk parameter usually contains the value internal, but it is possible to specify an explicit device to hold the metadata. See https://docs.linbit.com/docs/users-guide-9.0/#s-metadata for more information.

5. The on section states which host this configuration statement applies to.

6. The IP address and port number of the respective node. Each resource needs an individual port, usually starting with 7788. Both ports must be the same for a DRBD resource.

7. The node ID is required when configuring more than two nodes. It is a unique, non-negative integer to distinguish the different nodes.

8. The synchronization rate. Set it to one third of the lower of the disk and network bandwidths. It only limits the resynchronization, not the replication.

9. Defines all nodes of a mesh. The hosts parameter contains all host names that share the same DRBD setup.

10. The protocol to use for this connection. Protocol C is the default option. It provides better data availability and does not consider a write to be complete until it has reached all local and remote disks.

11. Specifies the fencing policy resource-and-stonith at the DRBD level. This policy immediately suspends active I/O operations until STONITH completes.

12. Enables resource-level fencing to prevent Pacemaker from starting a service with outdated data. If the DRBD replication link becomes disconnected, the crm-fence-peer.9.sh script stops the DRBD resource from being promoted to another node until the replication link becomes connected again and DRBD completes its synchronization process.

Check the syntax of your configuration files. If the following command returns an error, verify your files:

```
# drbdadm dump all
```

Copy the DRBD configuration files to all nodes:

```
# csync2 -xv
```

By default, the DRBD configuration file /etc/drbd.conf and the directory /etc/drbd.d/ are already included in the list of files that Csync2 synchronizes.
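The resync-rate rule of thumb used in r0.res (one third of the lower of the disk and network bandwidth) is simple arithmetic. The following sketch computes it. The function name is hypothetical, both inputs are assumed to be measured throughputs in MB/s, and integer division rounds the result down.

```shell
#!/bin/sh
# Hypothetical helper: suggest a DRBD resync-rate value as one third of the
# lower of the disk and network throughput. Both arguments are in MB/s.
suggest_resync_rate() {
    disk=$1 net=$2
    # Pick the slower of the two paths.
    if [ "$disk" -lt "$net" ]; then lower=$disk; else lower=$net; fi
    # Integer division rounds down; the "M" suffix matches the notation
    # used in r0.res (for example, "resync-rate 10M;").
    echo "$(( lower / 3 ))M"
}
```

For example, a disk that sustains 300 MB/s behind a link that delivers about 110 MB/s gives suggest_resync_rate 300 110, which prints 36M.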

23.3.3 Configuring DRBD with YaST

YaST can be used to start with an initial setup of DRBD. After you have created your DRBD setup, you can fine-tune the generated files manually.

However, when you have changed the configuration files, do not use the YaST DRBD module anymore. The DRBD module supports only a limited set of basic configuration. If you use it again, the module might not show your changes.

To set up DRBD with YaST, proceed as follows:

Start YaST and select the configuration module › . If you already have a DRBD configuration, YaST warns you. YaST changes your configuration and saves your old DRBD configuration files as *.YaSTsave.

Leave the booting flag in › as it is (by default it is off); do not change that as Pacemaker manages this service.

If you have a firewall running, enable .

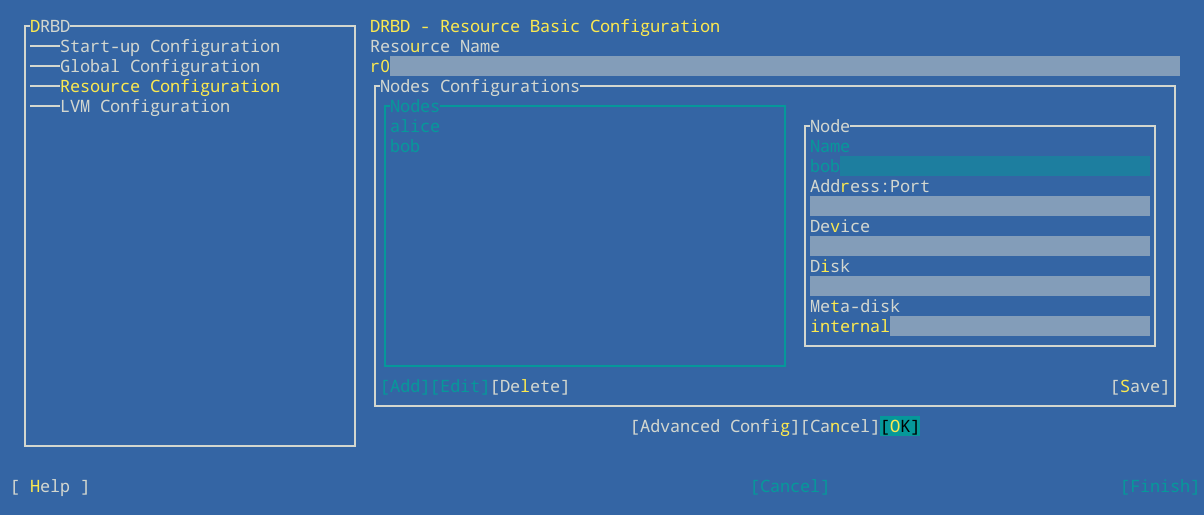

Go to the entry. Select to create a new resource (see Figure 23.2, “Resource configuration”).

Figure 23.2: Resource configuration

The following parameters need to be set:

The name of the DRBD resource (mandatory)

The host name of the relevant node

The IP address and port number (default 7788) for the respective node

The block device path that is used to access the replicated data. If the device contains a minor number, the associated block device is usually named /dev/drbdX, where X is the device minor number. If the device does not contain a minor number, make sure to add minor 0 after the device name.

The raw device that is replicated between both nodes. If you use LVM, insert your LVM device name.

The is either set to the value internal or specifies an explicit device extended by an index to hold the metadata needed by DRBD.

A real device may also be used for multiple DRBD resources. For example, if your is /dev/disk/by-id/example-disk6[0] for the first resource, you may use /dev/disk/by-id/example-disk6[1] for the second resource. However, there must be at least 128 MB space for each resource available on this disk. The fixed metadata size limits the maximum data size that you can replicate.

All these options are explained in the examples in the /usr/share/doc/packages/drbd/drbd.conf file and in the man page of drbd.conf(5).

Click .

Click to enter the second DRBD resource and finish with .

Close the resource configuration with and .

If you use LVM with DRBD, it is necessary to change certain options in the LVM configuration file (see the entry). The YaST DRBD module can make this change automatically.

The local disk name of the DRBD resource and the default filter are rejected in the LVM filter, so that only /dev/drbd can be scanned for an LVM device. For example, if /dev/disk/by-id/example-disk1 is used as a DRBD disk, the device name is inserted as the first entry in the LVM filter. To change the filter manually, click the check box.

Save your changes with .

Copy the DRBD configuration files to all nodes:

```
# csync2 -xv
```

By default, the DRBD configuration file /etc/drbd.conf and the directory /etc/drbd.d/ are already included in the list of files that Csync2 synchronizes.
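The indexed meta-disk layout described in the resource parameters above needs at least 128 MB per resource on the shared metadata disk. A quick capacity check is plain arithmetic. This sketch is hypothetical (function name and sizes are illustrative). It takes the disk size in MB and ignores partition alignment and any other overhead.

```shell
#!/bin/sh
# Hypothetical helper: check whether a metadata disk of SIZE_MB megabytes can
# hold indexed metadata ([0], [1], ...) for COUNT DRBD resources,
# assuming the minimum of 128 MB per resource.
meta_disk_fits() {
    size_mb=$1 count=$2
    needed=$(( count * 128 ))
    echo "need ${needed} MB, have ${size_mb} MB"
    # The exit status is the result of the comparison.
    [ "$size_mb" -ge "$needed" ]
}
```

For example, meta_disk_fits 512 4 succeeds, so a 512 MB disk can hold the indexes [0] to [3]. meta_disk_fits 300 3 fails because three resources need 384 MB.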

23.3.4 Initializing and formatting DRBD resources

After you have prepared your system and configured DRBD, initialize your disk for the first time:

On both nodes (alice and bob), initialize the metadata storage:

```
# drbdadm create-md r0
# drbdadm up r0
```

To shorten the initial resynchronization of your DRBD resource, check the following:

If the DRBD devices on all nodes have the same data (for example, by destroying the file system structure with the dd command as shown in Section 23.3, “Setting up the DRBD service”), then skip the initial resynchronization with the following command (on both nodes):

```
# drbdadm new-current-uuid --clear-bitmap r0/0
```

The state should be Secondary/Secondary UpToDate/UpToDate.

Otherwise, proceed with the next step.

On the primary node alice, start the resynchronization process:

```
# drbdadm primary --force r0
```

Check the status with:

```
# drbdadm status r0
r0 role:Primary
  disk:UpToDate
  bob role:Secondary
    peer-disk:UpToDate
```

Create your file system on top of your DRBD device, for example:

```
# mkfs.ext3 /dev/drbd0
```

Mount the file system and use it:

```
# mount /dev/drbd0 /mnt/
```
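Before creating the file system, you may want a script to confirm that every disk state reported by drbdadm status is UpToDate. The following sketch parses status text like the output shown above. The function name is hypothetical, and it relies only on the disk: and peer-disk: fields in that output, so other fields or future format changes are not handled.

```shell
#!/bin/sh
# Hypothetical helper: read `drbdadm status` output on stdin and succeed only
# if every disk: and peer-disk: field reports UpToDate.
drbd_all_uptodate() {
    # Collect the state after each "disk:" or "peer-disk:" token.
    states=$(grep -oE '(peer-)?disk:[A-Za-z]+' | cut -d: -f2)
    # Fail if no state was found at all (for example, empty input).
    [ -n "$states" ] || return 1
    # Fail if any state is something other than UpToDate.
    ! printf '%s\n' "$states" | grep -qv '^UpToDate$'
}
```

For example, drbdadm status r0 | drbd_all_uptodate && mkfs.ext3 /dev/drbd0 creates the file system only after both disks report UpToDate.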

23.3.5 Creating cluster resources for DRBD

After you have initialized your DRBD device, create a cluster resource to manage the DRBD device, and a promotable clone to allow this resource to run on both nodes:

Start the crm interactive shell:

```
# crm configure
```

Create a primitive for the DRBD resource r0:

```
crm(live)configure# primitive drbd-r0 ocf:linbit:drbd \
  params drbd_resource="r0" \
  op monitor interval=15 role=Promoted \
  op monitor interval=30 role=Unpromoted
```

Create a promotable clone for the drbd-r0 primitive:

```
crm(live)configure# clone cl-drbd-r0 drbd-r0 \
  meta promotable="true" promoted-max="1" promoted-node-max="1" \
  clone-max="2" clone-node-max="1" notify="true" interleave=true
```

Commit this configuration:

```
crm(live)configure# commit
```

Exit the interactive shell:

```
crm(live)configure# quit
```

If you put the cluster in maintenance mode before configuring DRBD, you can now move it back to normal operation with crm maintenance off.

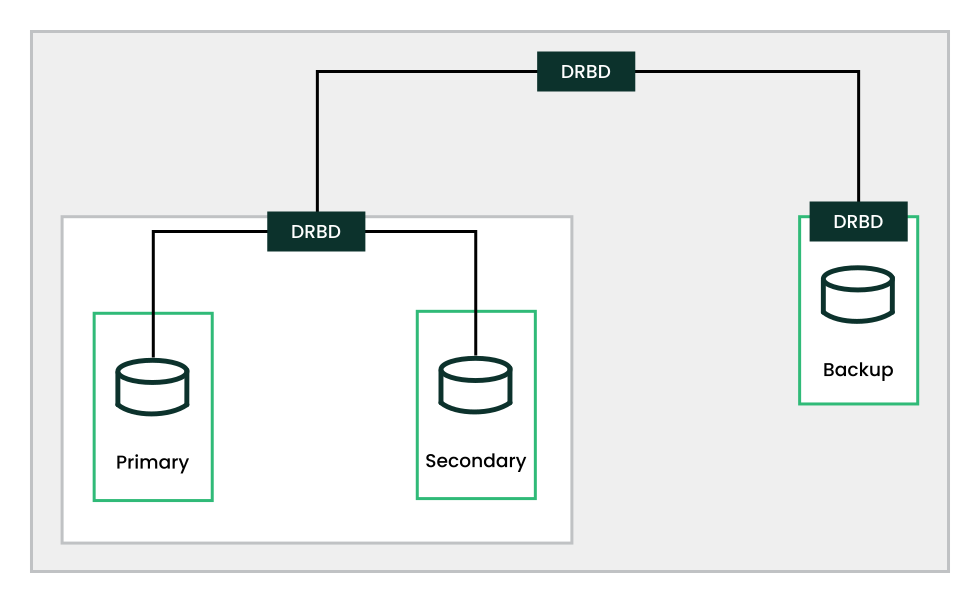

23.4 Creating a stacked DRBD device

A stacked DRBD device contains two other devices of which at least one device is also a DRBD resource. In other words, DRBD adds an additional node on top of an existing DRBD resource (see Figure 23.3, “Resource stacking”). Such a replication setup can be used for backup and disaster recovery purposes.

Three-way replication uses asynchronous (DRBD protocol A) and synchronous replication (DRBD protocol C). The synchronous part replicates between the two local nodes of the lower resource, whereas the asynchronous part is used for the stacked resource, which replicates to the backup node.

Your production environment uses the stacked device. For example, if you have a DRBD device /dev/drbd0 and a stacked device /dev/drbd10 on top, the file system is created on /dev/drbd10. See Example 23.1, “Configuration of a three-node stacked DRBD resource” for more details.

Example 23.1: Configuration of a three-node stacked DRBD resource

```
# /etc/drbd.d/r0.res
resource r0 {
  protocol C;
  device /dev/drbd0;
  disk /dev/disk/by-id/example-disk1;
  meta-disk internal;

  on amsterdam-alice {
    address 192.168.1.1:7900;
  }

  on amsterdam-bob {
    address 192.168.1.2:7900;
  }
}

resource r0-U {
  protocol A;
  device /dev/drbd10;

  stacked-on-top-of r0 {
    address 192.168.2.1:7910;
  }

  on berlin-charlie {
    disk /dev/disk/by-id/example-disk10;
    address 192.168.2.2:7910; # Public IP of the backup node
    meta-disk internal;
  }
}
```

23.5 Testing the DRBD service

If the install and configuration procedures worked as expected, you are ready to run a basic test of the DRBD functionality. This test also helps with understanding how the software works.

Test the DRBD service on alice.

Open a terminal console, then log in as root.

Create a mount point on alice, such as /srv/r0:

```
# mkdir -p /srv/r0
```

Mount the drbd device:

```
# mount -o rw /dev/drbd0 /srv/r0
```

Create a file from the primary node:

```
# touch /srv/r0/from_alice
```

Unmount the disk on alice:

```
# umount /srv/r0
```

Downgrade the DRBD service on alice by typing the following command on alice:

```
# drbdadm secondary r0
```

Test the DRBD service on bob.

Open a terminal console, then log in as root on bob.

On bob, promote the DRBD service to primary:

```
# drbdadm primary r0
```

On bob, check to see if bob is primary:

```
# drbdadm status r0
```

On bob, create a mount point such as /srv/r0:

```
# mkdir /srv/r0
```

On bob, mount the DRBD device:

```
# mount -o rw /dev/drbd0 /srv/r0
```

Verify that the file you created on alice exists:

```
# ls /srv/r0/from_alice
```

The /srv/r0/from_alice file should be listed.

If the service is working on both nodes, the DRBD setup is complete.

Set up alice as the primary again.

Dismount the disk on bob by typing the following command on bob:

```
# umount /srv/r0
```

Downgrade the DRBD service on bob by typing the following command on bob:

```
# drbdadm secondary r0
```

On alice, promote the DRBD service to primary:

```
# drbdadm primary r0
```

On alice, check to see if alice is primary:

```
# drbdadm status r0
```
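The role checks in this test can also be scripted. This sketch is hypothetical. It assumes, as in the status output shown in Section 23.3.4, that the first role: field belongs to the local node and that peer roles follow on later, indented lines.

```shell
#!/bin/sh
# Hypothetical helper: read `drbdadm status RESOURCE` output on stdin and
# print the local role (the first "role:" field in the output).
drbd_local_role() {
    grep -oE 'role:[A-Za-z]+' | head -n 1 | cut -d: -f2
}

# Hypothetical helper: succeed only if the local role equals the expected role.
drbd_expect_role() {
    [ "$(drbd_local_role)" = "$1" ]
}
```

For example, on bob, drbdadm status r0 | drbd_expect_role Primary succeeds only after bob has been promoted.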

To get the service to automatically start and fail over if the server has a problem, you can set up DRBD as a high availability service with Pacemaker/Corosync. For information about installing and configuring the cluster for SUSE Linux Enterprise 15 SP6, see Part II, “Configuration and administration”.

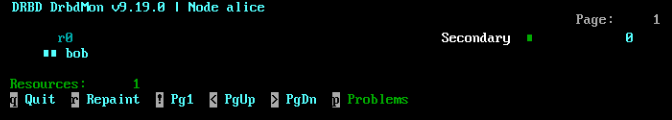

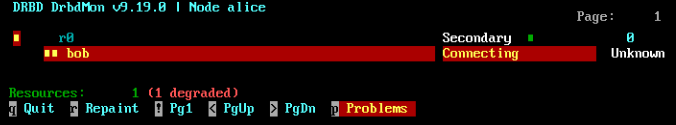

23.6 Monitoring DRBD devices

DRBD comes with the utility drbdmon, which offers real-time monitoring. It shows all the configured resources and their problems.

In case of problems, drbdadm shows an error message.

23.7 Tuning DRBD

There are several ways to tune DRBD:

Use an external disk for your metadata. This might help, at the cost of maintenance ease.

Tune your network connection by changing the receive and send buffer settings via sysctl.

Change the max-buffers, max-epoch-size or both in the DRBD configuration.

Increase the al-extents value, depending on your I/O patterns.

If you have a hardware RAID controller with a BBU (Battery Backup Unit), you might benefit from setting no-disk-flushes, no-disk-barrier and/or no-md-flushes.

Enable read-balancing depending on your workload. See https://www.linbit.com/en/read-balancing/ for more details.

23.8 Troubleshooting DRBD

The DRBD setup involves many components and problems may arise from different sources. The following sections cover several common scenarios and recommend solutions.

23.8.1 Configuration

If the initial DRBD setup does not work as expected, there may be something wrong with your configuration.

To get information about the configuration:

Open a terminal console, then log in as root.

Test the configuration file by running drbdadm with the -d option. Enter the following command:

```
# drbdadm -d adjust r0
```

In a dry run of the adjust option, drbdadm compares the actual configuration of the DRBD resource with your DRBD configuration file, but it does not execute the calls. Review the output to make sure you know the source and cause of any errors.

If there are errors in the /etc/drbd.d/* and drbd.conf files, correct them before continuing.

If the partitions and settings are correct, run drbdadm again without the -d option:

```
# drbdadm adjust r0
```

This applies the configuration file to the DRBD resource.

23.8.2 Host names

For DRBD, host names are case-sensitive (Node0 would be a different host than node0) and are compared to the host name as stored in the Kernel (see the uname -n output).

If you have several network devices and want to use a dedicated network device, the host name might not resolve to the used IP address. In this case, use the parameter disable-ip-verification.
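Because the comparison is exact and case-sensitive, a quick check is to compare uname -n with the names used in the on sections of your resource file. The following sketch is hypothetical. It extracts names only from lines of the form on NAME { in a resource file read on stdin, and it does not evaluate includes or any other drbd.conf syntax.

```shell
#!/bin/sh
# Hypothetical helper: list host names from the "on NAME {" sections of a
# DRBD resource file read on stdin.
drbd_config_hosts() {
    sed -nE 's/^[[:space:]]*on[[:space:]]+([^[:space:]{]+)[[:space:]]*[{].*$/\1/p'
}

# Hypothetical helper: succeed only if HOST matches one of those names exactly.
# grep -x -F compares whole lines literally, and grep is case-sensitive by default.
drbd_host_configured() {
    drbd_config_hosts | grep -qxF -- "$1"
}
```

For example, drbd_host_configured "$(uname -n)" < /etc/drbd.d/r0.res fails on a node whose Kernel host name is Bob while the resource file says bob.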

23.8.3 TCP port 7788

If your system cannot connect to the peer, this might be a problem with your local firewall. By default, DRBD uses the TCP port 7788 to access the other node. Make sure that this port is accessible on both nodes.
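To find out which ports must be open, you can list the ports that your resource files actually use. This sketch is hypothetical. It only recognizes IPv4 address lines of the form address IP:PORT; (optionally with an ipv4 keyword before the address) and ignores everything else.

```shell
#!/bin/sh
# Hypothetical helper: print the distinct TCP ports found in "address" lines
# of DRBD configuration read on stdin, in ascending order. IPv4 only.
drbd_config_ports() {
    sed -nE 's/^[[:space:]]*address[[:space:]]+(ipv4[[:space:]]+)?[0-9.]+:([0-9]+);.*$/\2/p' |
        sort -un
}
```

For example, cat /etc/drbd.d/*.res | drbd_config_ports prints 7788 for the r0.res example in this chapter. If your nodes use firewalld, you could then open each port on both nodes with firewall-cmd --permanent --add-port=7788/tcp followed by firewall-cmd --reload.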

23.8.4 DRBD devices broken after reboot

If DRBD cannot determine which of the real devices holds the latest data, it changes to a split-brain condition. The respective DRBD subsystems then come up as secondary and do not connect to each other. In this case, the following message can be found in the log data:

Split-Brain detected, dropping connection!

To resolve this situation, enter the following commands on the node which has data to be discarded:

```
# drbdadm disconnect r0
# drbdadm secondary r0
# drbdadm connect --discard-my-data r0
```

On the node which has the latest data, enter the following commands:

```
# drbdadm disconnect r0
# drbdadm connect r0
```

This resolves the issue by overwriting one node's data with the peer's data, so that both nodes have a consistent view.
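Because the two nodes need different command sequences, it can help to print the sequence for a node and review it before running it. This sketch is hypothetical (the function name and the victim/survivor labels are illustrative). It only prints the commands listed in this section and never executes them itself.

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) the split-brain recovery commands.
# ROLE is "victim" (node whose data is discarded) or "survivor" (node with
# the latest data); RESOURCE is the DRBD resource name, for example r0.
split_brain_commands() {
    role=$1 res=$2
    case $role in
        victim)
            printf 'drbdadm disconnect %s\n' "$res"
            printf 'drbdadm secondary %s\n' "$res"
            printf 'drbdadm connect --discard-my-data %s\n' "$res"
            ;;
        survivor)
            printf 'drbdadm disconnect %s\n' "$res"
            printf 'drbdadm connect %s\n' "$res"
            ;;
        *)
            echo "usage: split_brain_commands victim|survivor RESOURCE" >&2
            return 2
            ;;
    esac
}
```

For example, run split_brain_commands victim r0 on the node whose data is discarded, check the output, and only then execute it, for instance by piping it to sh -x.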

23.9 For more information

The following open source resources are available for DRBD:

The project home page https://linbit.com/drbd/.

The following man pages for DRBD are available in the distribution: drbd(8), drbdmeta(8), drbdsetup(8), drbdadm(8), drbd.conf(5).

Find a commented example configuration for DRBD at /usr/share/doc/packages/drbd-utils/drbd.conf.example.