3 Admin Node HA setup

The Admin Node is a Ceph cluster node where the Salt Master service runs. It manages the rest of the cluster nodes by querying and instructing their Salt Minion services. It usually includes other services as well, for example the Grafana dashboard backed by the Prometheus monitoring toolkit.

If the Admin Node fails, you usually need to provide new working hardware for the node and restore the complete cluster configuration stack from a recent backup. This method is time-consuming and causes a cluster outage.

To prevent Ceph cluster downtime caused by an Admin Node failure, we recommend running the Ceph Admin Node on a High Availability (HA) cluster.

3.1 Outline of the HA cluster for Admin Node

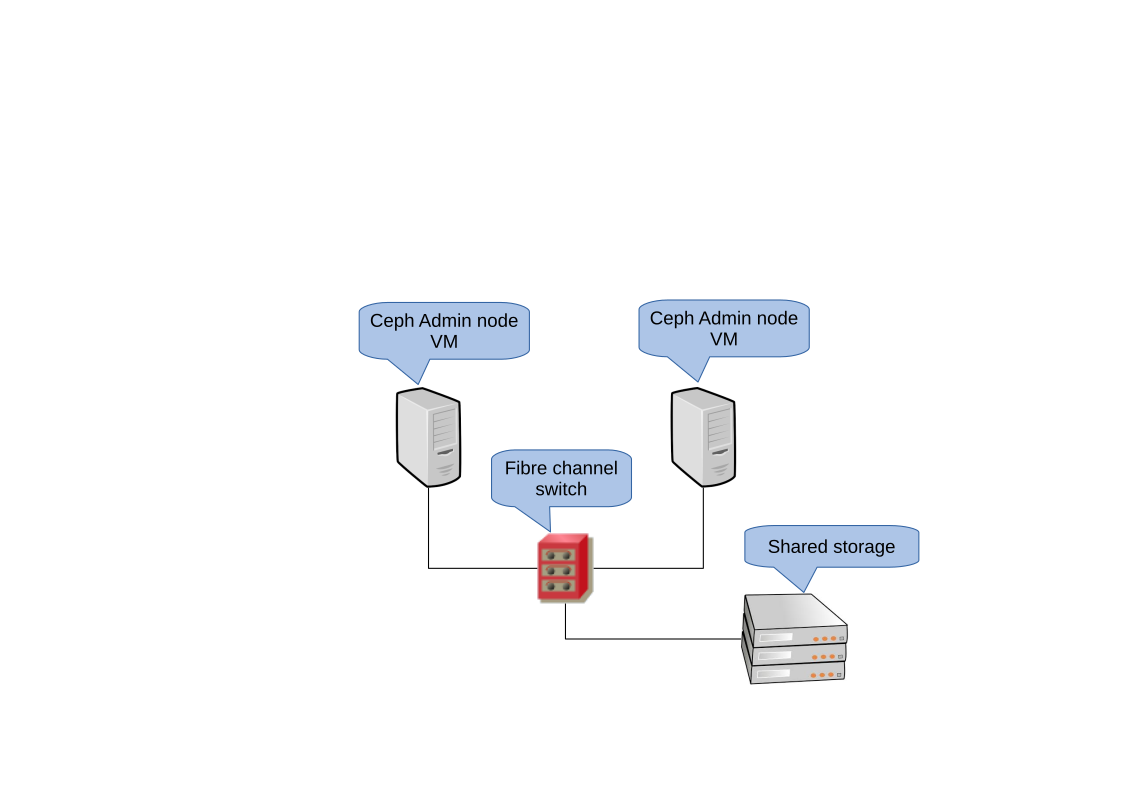

The idea of an HA cluster is that if one cluster node fails, the other node automatically takes over its role, including the virtualized Admin Node. This way, the other Ceph cluster nodes do not notice that the Admin Node failed.

The minimal HA solution for the Admin Node requires the following hardware:

- Two bare metal servers able to run SUSE Linux Enterprise with the High Availability extension and virtualize the Admin Node.

- Two or more redundant network communication paths, for example via Network Device Bonding.

- Shared storage to host the disk image(s) of the Admin Node virtual machine. The shared storage needs to be accessible from both servers. It can be, for example, an NFS export, a Samba share, or an iSCSI target.

Find more details on the cluster requirements at https://documentation.suse.com/sle-ha/15-SP2/html/SLE-HA-all/art-sleha-install-quick.html#sec-ha-inst-quick-req.
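As a quick sanity check of the shared storage requirement, the following minimal sketch can be run on each of the two servers. It assumes the shared storage is mounted as a file system at a known directory. The function name `check_shared_storage` and the example path are hypothetical and not part of the product.

```shell
#!/bin/sh
# Hypothetical helper: check that a directory looks like usable shared storage.
# The directory passed in is an assumed mount point, not a path from this guide.
check_shared_storage() {
    dir=$1
    if [ ! -d "$dir" ]; then
        echo "missing: $dir is not a directory" >&2
        return 1
    fi
    # mountpoint(1) from util-linux succeeds only if $dir is a mount point.
    if ! mountpoint -q "$dir"; then
        echo "not mounted: $dir is not a mount point" >&2
        return 1
    fi
    # Create and remove a scratch file to confirm write access.
    probe="$dir/.ha-write-test.$$"
    if ! touch "$probe" 2>/dev/null; then
        echo "read-only: cannot write to $dir" >&2
        return 1
    fi
    rm -f "$probe"
    echo "ok: $dir is mounted and writable"
}

# Example (run on both servers):
# check_shared_storage /path/to/shared
```

The check only confirms that the local node sees a mounted, writable directory. It does not prove that both servers see the same storage, so compare the directory contents on both nodes as well.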

3.2 Building an HA cluster with the Admin Node

The following procedure summarizes the most important steps of building the HA cluster for virtualizing the Admin Node. For details, refer to the indicated links.

1. Set up a basic 2-node HA cluster with shared storage as described in https://documentation.suse.com/sle-ha/15-SP2/html/SLE-HA-all/art-sleha-install-quick.html.

2. On both cluster nodes, install all packages required for running the KVM hypervisor and the libvirt toolkit as described in https://documentation.suse.com/sles/15-SP2/html/SLES-all/cha-vt-installation.html#sec-vt-installation-kvm.

3. On the first cluster node, create a new KVM virtual machine (VM) making use of libvirt as described in https://documentation.suse.com/sles/15-SP2/html/SLES-all/cha-kvm-inst.html#sec-libvirt-inst-virt-install. Use the preconfigured shared storage to store the disk images of the VM.

4. After the VM setup is complete, export its configuration to an XML file on the shared storage. Use the following syntax:

       # virsh dumpxml VM_NAME > /path/to/shared/vm_name.xml

5. Create a resource for the Admin Node VM. Refer to https://documentation.suse.com/sle-ha/15-SP2/html/SLE-HA-all/cha-conf-hawk2.html for general info on creating HA resources. Detailed info on creating resources for a KVM virtual machine is described in http://www.linux-ha.org/wiki/VirtualDomain_%28resource_agent%29.

6. On the newly-created VM guest, deploy the Admin Node including the additional services you need there. Follow the relevant steps in Chapter 6, Deploying Salt. At the same time, deploy the remaining Ceph cluster nodes on the non-HA cluster servers.
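For the resource creation step, the following is a minimal sketch of what a VirtualDomain resource definition might look like when using the crm shell instead of Hawk2. The resource ID `admin-node-vm`, the helper name `vm_resource_cmd`, the `qemu:///system` hypervisor URI, and all timeout values are illustrative assumptions, so adjust them to your environment. The helper only prints the command so that you can review it before running it.

```shell
#!/bin/sh
# Print (do not run) a crm shell command that defines a VirtualDomain resource.
# Both arguments are hypothetical examples: a resource ID and the XML file
# previously exported with 'virsh dumpxml'.
vm_resource_cmd() {
    res_id=$1
    xml=$2
    # Timeouts and the monitor interval are assumed values, not recommendations.
    printf '%s\n' "crm configure primitive $res_id ocf:heartbeat:VirtualDomain params config=\"$xml\" hypervisor=\"qemu:///system\" op start timeout=120s op stop timeout=120s op monitor interval=30s timeout=30s"
}

# Review the printed command, then run it as root on one cluster node.
vm_resource_cmd admin-node-vm /path/to/shared/vm_name.xml
```

The `config` parameter points at the XML file on the shared storage, which is why the VM definition needs to be exported there: either cluster node can then start the VM from the same definition.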