9 Deployment of additional services #

9.1 Installation of iSCSI gateway #

iSCSI is a storage area network (SAN) protocol that allows clients (called initiators) to send SCSI commands to SCSI storage devices (targets) on remote servers. SUSE Enterprise Storage 7 includes a facility that opens Ceph storage management to heterogeneous clients, such as Microsoft Windows* and VMware* vSphere, through the iSCSI protocol. Multipath iSCSI access enables availability and scalability for these clients, and the standardized iSCSI protocol also provides an additional layer of security isolation between clients and the SUSE Enterprise Storage 7 cluster. The configuration facility is named ceph-iscsi.

Using ceph-iscsi, Ceph storage administrators can define thin-provisioned, replicated, highly available volumes supporting read-only snapshots, read-write clones, and automatic resizing with Ceph RADOS Block Device (RBD). Administrators can then export volumes either via a single ceph-iscsi gateway host, or via multiple gateway hosts supporting multipath failover. Linux, Microsoft Windows, and VMware hosts can connect to volumes using the iSCSI protocol, which makes them available like any other SCSI block device. This means SUSE Enterprise Storage 7 customers can effectively run a complete block-storage infrastructure subsystem on Ceph that provides all the features and benefits of a conventional SAN, enabling future growth.

This chapter describes in detail how to set up a Ceph cluster infrastructure together with an iSCSI gateway, so that client hosts can use remotely stored data as local storage devices via the iSCSI protocol.

9.1.1 iSCSI block storage #

iSCSI is an implementation of the Small Computer System Interface (SCSI) command set using the Internet Protocol (IP), specified in RFC 3720. iSCSI is implemented as a service where a client (the initiator) talks to a server (the target) via a session on TCP port 3260. An iSCSI target's IP address and port are called an iSCSI portal, where a target can be exposed through one or more portals. The combination of a target and one or more portals is called the target portal group (TPG).

The underlying data link layer protocol for iSCSI is most often Ethernet. More specifically, modern iSCSI infrastructures use 10 Gigabit Ethernet (10 GbE) or faster networks for optimal throughput. 10 Gigabit Ethernet connectivity between the iSCSI gateway and the back-end Ceph cluster is strongly recommended.

9.1.1.1 The Linux kernel iSCSI target #

The Linux kernel iSCSI target was originally named LIO for linux-iscsi.org, the project's original domain and Web site. For some time, no fewer than four competing iSCSI target implementations were available for the Linux platform, but LIO ultimately prevailed as the single iSCSI reference target. The mainline kernel code for LIO uses the simple, but somewhat ambiguous name "target", distinguishing between "target core" and a variety of front-end and back-end target modules.

The most commonly used front-end module is arguably iSCSI. However, LIO also supports Fibre Channel (FC), Fibre Channel over Ethernet (FCoE) and several other front-end protocols. At this time, only the iSCSI protocol is supported by SUSE Enterprise Storage.

The most frequently used target back-end module is one that is capable of simply re-exporting any available block device on the target host. This module is named iblock. However, LIO also has an RBD-specific back-end module supporting parallelized multipath I/O access to RBD images.

9.1.1.2 iSCSI initiators #

This section briefly describes the iSCSI initiators used on Linux, Microsoft Windows, and VMware platforms.

9.1.1.2.1 Linux #

The standard initiator for the Linux platform is open-iscsi. open-iscsi launches a daemon, iscsid, which the user can then use to discover iSCSI targets on any given portal, log in to targets, and map iSCSI volumes. iscsid communicates with the SCSI mid layer to create in-kernel block devices that the kernel can then treat like any other SCSI block device on the system. The open-iscsi initiator can be deployed in conjunction with the Device Mapper Multipath (dm-multipath) facility to provide a highly available iSCSI block device.
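As a rough sketch of the discovery and login workflow described above, the following helper prints (rather than runs) the `iscsiadm` commands commonly used with open-iscsi. The portal address and target IQN in the usage line are placeholders chosen for illustration, not values from a real deployment.

```shell
#!/bin/sh
# Dry-run helper: prints the open-iscsi commands instead of executing them.
# The portal and target IQN are placeholders supplied by the caller.
print_initiator_commands() {
    portal="$1"      # IP[:PORT] of an iSCSI portal; 3260 is the default port
    target_iqn="$2"  # IQN of the target to log in to

    # Ask the portal which targets it exposes (SendTargets discovery).
    echo "iscsiadm -m discovery -t sendtargets -p ${portal}"
    # Log in to one discovered target; the kernel then creates a SCSI block device.
    echo "iscsiadm -m node -T ${target_iqn} -p ${portal} --login"
}

print_initiator_commands 192.0.2.10:3260 iqn.2003-01.org.example:demo
```

Printing the commands first makes it possible to review them before running them as root on the initiator host.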

9.1.1.2.2 Microsoft Windows and Hyper-V #

The default iSCSI initiator for the Microsoft Windows operating system is the Microsoft iSCSI initiator. The iSCSI service can be configured via a graphical user interface (GUI), and supports multipath I/O for high availability.

9.1.1.2.3 VMware #

The default iSCSI initiator for VMware vSphere and ESX is the VMware ESX software iSCSI initiator, vmkiscsi. When enabled, it can be configured either from the vSphere client, or using the vmkiscsi-tool command. You can then format storage volumes connected through the vSphere iSCSI storage adapter with VMFS, and use them like any other VM storage device. The VMware initiator also supports multipath I/O for high availability.

9.1.2 General information about ceph-iscsi #

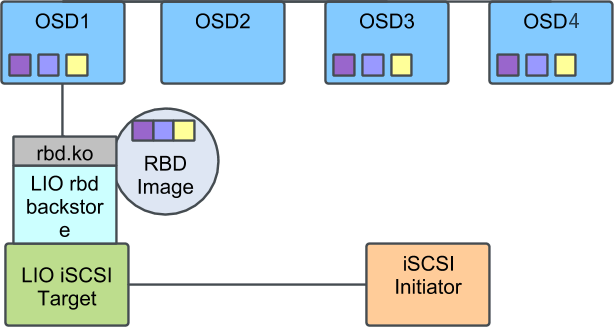

ceph-iscsi combines the benefits of RADOS Block Devices with the ubiquitous versatility of iSCSI. By employing ceph-iscsi on an iSCSI target host (known as the iSCSI Gateway), any application that needs to make use of block storage can benefit from Ceph, even if it does not speak any Ceph client protocol. Instead, users can use iSCSI or any other target front-end protocol to connect to an LIO target, which translates all target I/O to RBD storage operations.

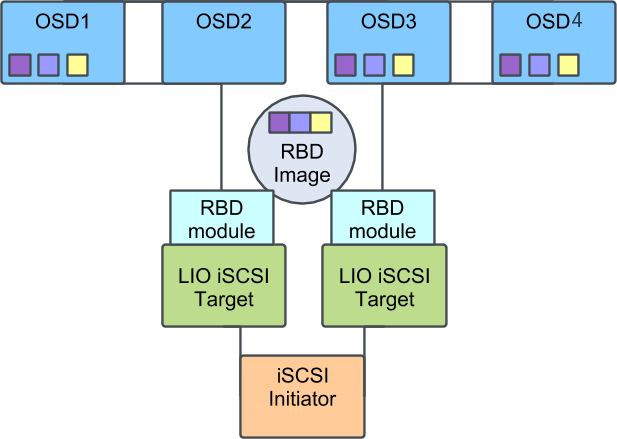

ceph-iscsi is inherently highly available and supports multipath operations. Thus, downstream initiator hosts can use multiple iSCSI gateways for both high availability and scalability. When communicating with an iSCSI configuration with more than one gateway, initiators may load-balance iSCSI requests across multiple gateways. If a gateway fails, is temporarily unreachable, or is disabled for maintenance, I/O transparently continues via another gateway.

9.1.3 Deployment considerations #

A minimum configuration of SUSE Enterprise Storage 7 with ceph-iscsi consists of the following components:

A Ceph storage cluster. The Ceph cluster consists of a minimum of four physical servers hosting at least eight object storage daemons (OSDs) each. In such a configuration, three OSD nodes also double as a monitor (MON) host.

An iSCSI target server running the LIO iSCSI target, configured via ceph-iscsi.

An iSCSI initiator host, running open-iscsi (Linux), the Microsoft iSCSI Initiator (Microsoft Windows), or any other compatible iSCSI initiator implementation.

A recommended production configuration of SUSE Enterprise Storage 7 with ceph-iscsi consists of:

A Ceph storage cluster. A production Ceph cluster consists of any number of (typically more than 10) OSD nodes, each typically running 10-12 object storage daemons (OSDs), with a minimum of three dedicated MON hosts.

Several iSCSI target servers running the LIO iSCSI target, configured via ceph-iscsi. For iSCSI failover and load-balancing, these servers must run a kernel supporting the target_core_rbd module. Update packages are available from the SUSE Linux Enterprise Server maintenance channel.

Any number of iSCSI initiator hosts, running open-iscsi (Linux), the Microsoft iSCSI Initiator (Microsoft Windows), or any other compatible iSCSI initiator implementation.

9.1.4 Installation and configuration #

This section describes steps to install and configure an iSCSI Gateway on top of SUSE Enterprise Storage.

9.1.4.1 Deploy the iSCSI Gateway to a Ceph cluster #

The Ceph iSCSI Gateway deployment follows the same procedure as the deployment of other Ceph services—by means of cephadm. For more details, see Section 8.3.5, “Deploying iSCSI Gateways”.

9.1.4.2 Creating RBD images #

RBD images are created in the Ceph store and subsequently exported to iSCSI. We recommend that you use a dedicated RADOS pool for this purpose. You can create a volume from any host that is able to connect to your storage cluster using the Ceph rbd command line utility. This requires the client to have at least a minimal ceph.conf configuration file, and appropriate CephX authentication credentials.
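The dedicated pool recommended above could be prepared along these lines. This is a minimal sketch that only prints the commands. It assumes the pool name iscsi-images used in this chapter and leaves the placement group count to the cluster defaults, which may not suit every deployment.

```shell
#!/bin/sh
# Dry-run helper: prints the commands for preparing a dedicated RBD pool.
# The pool name is supplied by the caller; "iscsi-images" matches this chapter.
print_pool_setup() {
    pool="$1"
    # Create the pool (placement group count left to the cluster defaults).
    echo "ceph osd pool create ${pool}"
    # Initialize the pool for use by RBD.
    echo "rbd pool init ${pool}"
}

print_pool_setup iscsi-images
```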

To create a new volume for subsequent export via iSCSI, use the rbd create command, specifying the volume size in megabytes. For example, to create a 100 GB volume named testvol in the pool named iscsi-images, run:

cephuser@adm > rbd --pool iscsi-images create --size=102400 testvol

9.1.4.3 Exporting RBD images via iSCSI #

To export RBD images via iSCSI, you can use either the Ceph Dashboard Web interface or the ceph-iscsi gwcli utility. This section focuses on gwcli only, demonstrating how to create an iSCSI target that exports an RBD image using the command line.

RBD images with the following properties cannot be exported via iSCSI:

- images with the journaling feature enabled

- images with a stripe unit less than 4096 bytes
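The two restrictions above can be expressed as a small pre-flight check. The helper below is hypothetical: it takes the feature list and stripe unit as arguments and does not query the cluster itself, so obtaining those values (for example with `rbd info`) is left to the caller.

```shell
#!/bin/sh
# Hypothetical helper: decides whether an image may be exported, given
#   $1 = comma-separated list of enabled RBD features
#   $2 = stripe unit in bytes
# Returns 0 (exportable) or 1 (not exportable).
is_exportable() {
    features="$1"
    stripe_unit="$2"

    # Rule 1: the journaling feature must not be enabled.
    case ",${features}," in
        *,journaling,*) return 1 ;;
    esac
    # Rule 2: the stripe unit must be at least 4096 bytes.
    [ "$stripe_unit" -ge 4096 ] || return 1
    return 0
}

is_exportable "layering,exclusive-lock" 4194304 && echo "exportable"
is_exportable "layering,journaling" 4194304 || echo "not exportable: journaling"
is_exportable "layering" 2048 || echo "not exportable: stripe unit"
```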

As root, enter the iSCSI Gateway container:

# cephadm enter --name CONTAINER_NAME

As root, start the iSCSI Gateway command line interface:

# gwcli

Go to iscsi-targets and create a target with the name iqn.2003-01.org.linux-iscsi.iscsi.SYSTEM-ARCH:testvol:

gwcli >/> cd /iscsi-targets
gwcli >/iscsi-targets> create iqn.2003-01.org.linux-iscsi.iscsi.SYSTEM-ARCH:testvol

Create the iSCSI gateways by specifying the gateway name and IP address:

gwcli >/iscsi-targets> cd iqn.2003-01.org.linux-iscsi.iscsi.SYSTEM-ARCH:testvol/gateways
gwcli >/iscsi-target...tvol/gateways> create iscsi1 192.168.124.104
gwcli >/iscsi-target...tvol/gateways> create iscsi2 192.168.124.105

Use the help command to show the list of available commands in the current configuration node.

Add the RBD image with the name testvol in the pool iscsi-images:

gwcli >/iscsi-target...tvol/gateways> cd /disks
gwcli >/disks> attach iscsi-images/testvol

Map the RBD image to the target:

gwcli >/disks> cd /iscsi-targets/iqn.2003-01.org.linux-iscsi.iscsi.SYSTEM-ARCH:testvol/disks
gwcli >/iscsi-target...testvol/disks> add iscsi-images/testvol

You can use lower-level tools, such as targetcli, to query the local configuration, but not to modify it.

You can use the ls command to review the configuration. Some configuration nodes also support the info command, which can be used to display more detailed information.

Note that, by default, ACL authentication is enabled so this target is not accessible yet. Check Section 9.1.4.4, “Authentication and access control” for more information about authentication and access control.
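To keep the order of the steps above in view, the sequence can be generated as a reviewable command sheet. The helper below is a hypothetical convenience, not part of ceph-iscsi: it only prints the commands shown in this section so they can be checked before being entered in an interactive gwcli session.

```shell
#!/bin/sh
# Hypothetical helper: prints the gwcli commands from the steps above, in order.
#   $1 = target IQN, $2 = POOL/IMAGE, remaining args = NAME=IP gateway pairs
print_gwcli_steps() {
    iqn="$1"
    image="$2"
    shift 2

    echo "cd /iscsi-targets"
    echo "create ${iqn}"
    echo "cd ${iqn}/gateways"
    for gw in "$@"; do
        # Split NAME=IP into its two parts.
        echo "create ${gw%%=*} ${gw#*=}"
    done
    echo "cd /disks"
    echo "attach ${image}"
    echo "cd /iscsi-targets/${iqn}/disks"
    echo "add ${image}"
}

print_gwcli_steps iqn.2003-01.org.linux-iscsi.iscsi.SYSTEM-ARCH:testvol \
    iscsi-images/testvol iscsi1=192.168.124.104 iscsi2=192.168.124.105
```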

9.1.4.4 Authentication and access control #

iSCSI authentication is flexible and covers many authentication possibilities.

9.1.4.4.1 Disabling ACL authentication #

No Authentication means that any initiator will be able to access any LUNs on the corresponding target. You can enable No Authentication by disabling the ACL authentication:

gwcli >/> cd /iscsi-targets/iqn.2003-01.org.linux-iscsi.iscsi.SYSTEM-ARCH:testvol/hosts
gwcli >/iscsi-target...testvol/hosts> auth disable_acl

9.1.4.4.2 Using ACL authentication #

When using initiator-name-based ACL authentication, only the defined initiators are allowed to connect. Define an initiator as follows:

gwcli >/> cd /iscsi-targets/iqn.2003-01.org.linux-iscsi.iscsi.SYSTEM-ARCH:testvol/hosts
gwcli >/iscsi-target...testvol/hosts> create iqn.1996-04.de.suse:01:e6ca28cc9f20

Defined initiators will be able to connect, but will only have access to the RBD images that were explicitly added to the initiator:

gwcli > /iscsi-target...:e6ca28cc9f20> disk add rbd/testvol

9.1.4.4.3 Enabling CHAP authentication #

In addition to the ACL, you can enable CHAP authentication by specifying a user name and password for each initiator:

gwcli >/> cd /iscsi-targets/iqn.2003-01.org.linux-iscsi.iscsi.SYSTEM-ARCH:testvol/hosts/iqn.1996-04.de.suse:01:e6ca28cc9f20
gwcli >/iscsi-target...:e6ca28cc9f20> auth username=common12 password=pass12345678

User names must have a length of 8 to 64 characters and can contain alphanumeric characters, ., @, -, _ or :.

Passwords must have a length of 12 to 16 characters and can contain alphanumeric characters, @, -, _ or /.

Optionally, you can also enable CHAP mutual authentication by specifying the mutual_username and mutual_password parameters in the auth command.
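The length and character rules above can be checked before credentials are entered in gwcli. The following is a minimal sketch that encodes only the rules stated in this section. The function names are made up for illustration, and gwcli may apply further checks of its own.

```shell
#!/bin/sh
# Checks CHAP credentials against the length and character rules stated above.
# Each function returns 0 when the value is acceptable, 1 otherwise.
valid_chap_username() {
    # 8 to 64 characters: alphanumeric, ".", "@", "-", "_" or ":"
    printf '%s' "$1" | grep -Eq '^[A-Za-z0-9.@_:-]{8,64}$'
}

valid_chap_password() {
    # 12 to 16 characters: alphanumeric, "@", "-", "_" or "/"
    printf '%s' "$1" | grep -Eq '^[A-Za-z0-9@_/-]{12,16}$'
}

valid_chap_username common12 && echo "user name ok"
valid_chap_password pass12345678 && echo "password ok"
valid_chap_password short || echo "password rejected"
```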

9.1.4.4.4 Configuring discovery and mutual authentication #

Discovery authentication is independent of the previous authentication methods. It is optional, requires credentials for browsing targets, and can be configured as follows:

gwcli >/> cd /iscsi-targets
gwcli >/iscsi-targets> discovery_auth username=du123456 password=dp1234567890

User names must have a length of 8 to 64 characters and can only contain alphanumeric characters, ., @, -, _ or :.

Passwords must have a length of 12 to 16 characters and can only contain alphanumeric characters, @, -, _ or /.

Optionally, you can also specify the mutual_username and mutual_password parameters in the discovery_auth command.
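On a Linux initiator using open-iscsi, the matching discovery credentials are usually set in iscsid.conf. The sketch below prints such a fragment. It assumes one-way discovery CHAP only, and the credentials in the usage line are the example values from this section.

```shell
#!/bin/sh
# Prints an iscsid.conf fragment for discovery CHAP on an open-iscsi initiator.
# $1 and $2 must match the user name and password set with discovery_auth.
print_discovery_chap_conf() {
    echo "discovery.sendtargets.auth.authmethod = CHAP"
    echo "discovery.sendtargets.auth.username = $1"
    echo "discovery.sendtargets.auth.password = $2"
}

print_discovery_chap_conf du123456 dp1234567890
```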

Discovery authentication can be disabled by using the following command:

gwcli > /iscsi-targets> discovery_auth nochap

9.1.4.5 Configuring advanced settings #

ceph-iscsi can be configured with advanced parameters which are subsequently passed on to the LIO I/O target. The parameters are divided up into target and disk parameters.

Unless otherwise noted, changing these parameters from the default setting is not recommended.

9.1.4.5.1 Viewing target settings #

You can view the value of these settings by using the info command:

gwcli >/> cd /iscsi-targets/iqn.2003-01.org.linux-iscsi.iscsi.SYSTEM-ARCH:testvol
gwcli >/iscsi-target...i.SYSTEM-ARCH:testvol> info

To change a setting, use the reconfigure command:

gwcli > /iscsi-target...i.SYSTEM-ARCH:testvol> reconfigure login_timeout 20

The available target settings are:

- default_cmdsn_depth

Default CmdSN (Command Sequence Number) depth. Limits the amount of requests that an iSCSI initiator can have outstanding at any moment.

- default_erl

Default error recovery level.

- login_timeout

Login timeout value in seconds.

- netif_timeout

NIC failure timeout in seconds.

- prod_mode_write_protect

If set to 1, prevents writes to LUNs.

9.1.4.5.2 Viewing disk settings #

You can view the value of these settings by using the info command:

gwcli >/> cd /disks/rbd/testvol
gwcli >/disks/rbd/testvol> info

To change a setting, use the reconfigure command:

gwcli > /disks/rbd/testvol> reconfigure rbd/testvol emulate_pr 0

The available disk settings are:

- block_size

Block size of the underlying device.

- emulate_3pc

If set to 1, enables Third Party Copy.

- emulate_caw

If set to 1, enables Compare and Write.

- emulate_dpo

If set to 1, turns on Disable Page Out.

- emulate_fua_read

If set to 1, enables Force Unit Access read.

- emulate_fua_write

If set to 1, enables Force Unit Access write.

- emulate_model_alias

If set to 1, uses the back-end device name for the model alias.

- emulate_pr

If set to 0, support for SCSI Reservations, including Persistent Group Reservations, is disabled. While disabled, the SES iSCSI Gateway can ignore reservation state, resulting in improved request latency.

Tip: Setting emulate_pr to 0 is recommended if iSCSI initiators do not require SCSI Reservation support.

- emulate_rest_reord

If set to 0, the Queue Algorithm Modifier has Restricted Reordering.

- emulate_tas

If set to 1, enables Task Aborted Status.

- emulate_tpu

If set to 1, enables Thin Provisioning Unmap.

- emulate_tpws

If set to 1, enables Thin Provisioning Write Same.

- emulate_ua_intlck_ctrl

If set to 1, enables Unit Attention Interlock.

- emulate_write_cache

If set to 1, turns on Write Cache Enable.

- enforce_pr_isids

If set to 1, enforces persistent reservation ISIDs.

- is_nonrot

If set to 1, the backstore is a non-rotational device.

- max_unmap_block_desc_count

Maximum number of block descriptors for UNMAP.

- max_unmap_lba_count

Maximum number of LBAs for UNMAP.

- max_write_same_len

Maximum length for WRITE_SAME.

- optimal_sectors

Optimal request size in sectors.

- pi_prot_type

DIF protection type.

- queue_depth

Queue depth.

- unmap_granularity

UNMAP granularity.

- unmap_granularity_alignment

UNMAP granularity alignment.

- force_pr_aptpl

When enabled, LIO will always write out the persistent reservation state to persistent storage, regardless of whether the client has requested it via aptpl=1. This has no effect with the kernel RBD back-end for LIO, which always persists PR state. Ideally, the target_core_rbd option should force it to '1' and throw an error if someone tries to disable it via configuration.

- unmap_zeroes_data

Affects whether LIO will advertise LBPRZ to SCSI initiators, indicating that zeros will be read back from a region following UNMAP or WRITE SAME with an unmap bit.

9.1.5 Exporting RADOS Block Device images using tcmu-runner #

ceph-iscsi supports both rbd (kernel-based) and user:rbd (tcmu-runner) backstores, making all the management transparent and independent of the backstore.

tcmu-runner based iSCSI Gateway deployments are currently a technology preview.

Unlike kernel-based iSCSI Gateway deployments, tcmu-runner based iSCSI Gateways do not offer support for multipath I/O or SCSI Persistent Reservations.

To export a RADOS Block Device image using tcmu-runner, all you need to do is specify the user:rbd backstore when attaching the disk:

gwcli > /disks> attach rbd/testvol backstore=user:rbd

When using tcmu-runner, the exported RBD image must have the exclusive-lock feature enabled.
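The exclusive-lock requirement can be checked before attaching a disk. The helper below is a hypothetical sketch: it takes the image's feature list as an argument instead of querying the cluster, and only prints the `rbd feature enable` command that would add the feature if it is missing.

```shell
#!/bin/sh
# Hypothetical helper: given an image spec and its comma-separated feature list,
# prints the command that would enable exclusive-lock if it is missing.
suggest_exclusive_lock() {
    image="$1"
    features="$2"
    case ",${features}," in
        *,exclusive-lock,*) echo "${image}: exclusive-lock already enabled" ;;
        *) echo "rbd feature enable ${image} exclusive-lock" ;;
    esac
}

suggest_exclusive_lock rbd/testvol "layering"
suggest_exclusive_lock rbd/testvol "layering,exclusive-lock"
```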