23 Cluster logical volume manager (Cluster LVM)

When managing shared storage on a cluster, every node must be informed about changes to the storage subsystem. Logical Volume Manager (LVM) supports transparent management of volume groups across the whole cluster. Volume groups shared among multiple hosts can be managed using the same commands as local storage.

23.1 Conceptual overview

Cluster LVM is coordinated by the following tools:

- Distributed lock manager (DLM)

Coordinates access to shared resources among multiple hosts through cluster-wide locking.

- Logical Volume Manager (LVM)

LVM provides a virtual pool of disk space and enables flexible distribution of one logical volume over several disks.

- Cluster logical volume manager (Cluster LVM)

The term “Cluster LVM” indicates that LVM is being used in a cluster environment. This requires some configuration adjustments to protect the LVM metadata on shared storage. From SUSE Linux Enterprise 15 onward, the cluster extension uses lvmlockd, which replaces clvmd. For more information about lvmlockd, see the man page of the `lvmlockd` command (`man 8 lvmlockd`). lvmlockd with sanlock is not officially supported.

- Volume group and logical volume

Volume groups (VGs) and logical volumes (LVs) are basic concepts of LVM. A volume group is a storage pool of multiple physical disks. A logical volume belongs to a volume group and can be seen as an elastic volume on which you can create a file system. In a cluster environment, VGs can also be shared: a shared VG consists of shared storage and can be used concurrently by multiple hosts.

23.2 Configuration of Cluster LVM

Make sure the following requirements are fulfilled:

- A shared storage device is available, provided by a Fibre Channel, FCoE, SCSI, iSCSI SAN, or DRBD, for example.
- The packages `lvm2` and `lvm2-lockd` are installed.

From SUSE Linux Enterprise 15 onward, the cluster extension uses lvmlockd, which replaces clvmd. Make sure the clvmd daemon is not running; otherwise lvmlockd fails to start.

23.2.1 Creating the cluster resources

Perform the following basic steps on one node to configure a shared VG in the cluster:

Procedure 23.1: Creating a DLM resource

Start a shell and log in as `root`.

Check the current configuration of the cluster resources:

    # crm configure show

If you have already configured a DLM resource (and a corresponding base group and base clone), continue with Procedure 23.2, “Creating an lvmlockd resource”.

Otherwise, configure a DLM resource and a corresponding base group and base clone as described in Procedure 19.1, “Configuring a base group for DLM”.

Procedure 23.2: Creating an lvmlockd resource

Start a shell and log in as `root`.

Run the following command to see the usage of this resource:

    # crm configure ra info lvmlockd

Configure an `lvmlockd` resource as follows:

    # crm configure primitive lvmlockd lvmlockd \
      op start timeout="90" \
      op stop timeout="100" \
      op monitor interval="30" timeout="90"

To ensure the `lvmlockd` resource is started on every node, add the primitive resource to the base group for storage that you created in Procedure 23.1, “Creating a DLM resource”:

    # crm configure modgroup g-storage add lvmlockd

Review your changes:

    # crm configure show

Check if the resources are running well:

    # crm status full

Start a shell and log in as `root`.

Assuming you already have two shared disks, create a shared VG with them:

    # vgcreate --shared vg1 /dev/disk/by-id/DEVICE_ID1 /dev/disk/by-id/DEVICE_ID2

Create an LV and do not activate it initially:

    # lvcreate -an -L10G -n lv1 vg1

Start a shell and log in as `root`.

Run the following command to see the usage of this resource:

    # crm configure ra info LVM-activate

This resource manages the activation of a VG. In a shared VG, LV activation has two different modes: exclusive and shared. The exclusive mode is the default and should normally be used when a local file system like `ext4` uses the LV. The shared mode should only be used for cluster file systems like OCFS2.

Configure a resource to manage the activation of your VG. Choose one of the following options according to your scenario:

Use exclusive activation mode for local file system usage:

    # crm configure primitive vg1 LVM-activate \
      params vgname=vg1 vg_access_mode=lvmlockd \
      op start timeout=90s interval=0 \
      op stop timeout=90s interval=0 \
      op monitor interval=30s timeout=90s

Use shared activation mode for OCFS2:

    # crm configure primitive vg1 LVM-activate \
      params vgname=vg1 vg_access_mode=lvmlockd activation_mode=shared \
      op start timeout=90s interval=0 \
      op stop timeout=90s interval=0 \
      op monitor interval=30s timeout=90s

Make sure the VG can only be activated on nodes where the DLM and `lvmlockd` resources are already running:

Exclusive activation mode:

Because this VG is only active on a single node, do not add it to the cloned `g-storage` group. Instead, add constraints directly to the resource:

    # crm configure colocation col-vg-with-dlm inf: vg1 cl-storage
    # crm configure order o-dlm-before-vg Mandatory: cl-storage vg1

For multiple VGs, you can add constraints to multiple resources at once:

    # crm configure colocation col-vg-with-dlm inf: ( vg1 vg2 ) cl-storage
    # crm configure order o-dlm-before-vg Mandatory: cl-storage ( vg1 vg2 )

Shared activation mode:

Because this VG is active on multiple nodes, you can add it to the cloned `g-storage` group, which already has internal colocation and order constraints:

    # crm configure modgroup g-storage add vg1

Do not add multiple VGs to the group, because this creates a dependency between the VGs. For multiple VGs, clone the resources and add constraints to the clones:

    # crm configure clone cl-vg1 vg1 meta interleave=true
    # crm configure clone cl-vg2 vg2 meta interleave=true
    # crm configure colocation col-vg-with-dlm inf: ( cl-vg1 cl-vg2 ) cl-storage
    # crm configure order o-dlm-before-vg Mandatory: cl-storage ( cl-vg1 cl-vg2 )
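The constraint commands above follow a fixed pattern that depends on the activation mode and the number of VGs. The following minimal sketch expresses that pattern as a shell function. The function name `print_vg_constraints` is hypothetical, and it assumes the names used in this section: the group `g-storage`, the base clone `cl-storage`, and LVM-activate resources named after their VGs. It only prints the `crm` commands so that you can review them; it does not change the cluster configuration.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) the crm commands that tie LVM-activate
# resources to the DLM/lvmlockd base clone "cl-storage".
# Usage: print_vg_constraints exclusive|shared RESOURCE...
print_vg_constraints() {
    if [ "$#" -lt 2 ]; then
        echo "usage: print_vg_constraints exclusive|shared RESOURCE..." >&2
        return 1
    fi
    mode=$1
    shift
    case $mode in
        exclusive)
            # One VG is referenced directly, several VGs as a resource set.
            if [ "$#" -eq 1 ]; then
                set_expr=$1
            else
                set_expr="( $* )"
            fi
            ;;
        shared)
            if [ "$#" -eq 1 ]; then
                # A single shared VG can join the cloned group directly.
                echo "crm configure modgroup g-storage add $1"
                return 0
            fi
            # Several shared VGs: clone each one instead of grouping them,
            # so that the VGs do not depend on each other.
            clones=""
            for vg in "$@"; do
                echo "crm configure clone cl-$vg $vg meta interleave=true"
                clones="$clones cl-$vg"
            done
            set_expr="($clones )"
            ;;
        *)
            echo "unknown mode: $mode" >&2
            return 1
            ;;
    esac
    echo "crm configure colocation col-vg-with-dlm inf: $set_expr cl-storage"
    echo "crm configure order o-dlm-before-vg Mandatory: cl-storage $set_expr"
}

print_vg_constraints exclusive vg1 vg2
```

For example, `print_vg_constraints shared vg1 vg2` prints the two `clone` commands followed by the set-based colocation and order constraints for shared activation mode, in the same order as listed in this procedure.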

Check if the resources are running well:

    # crm status full

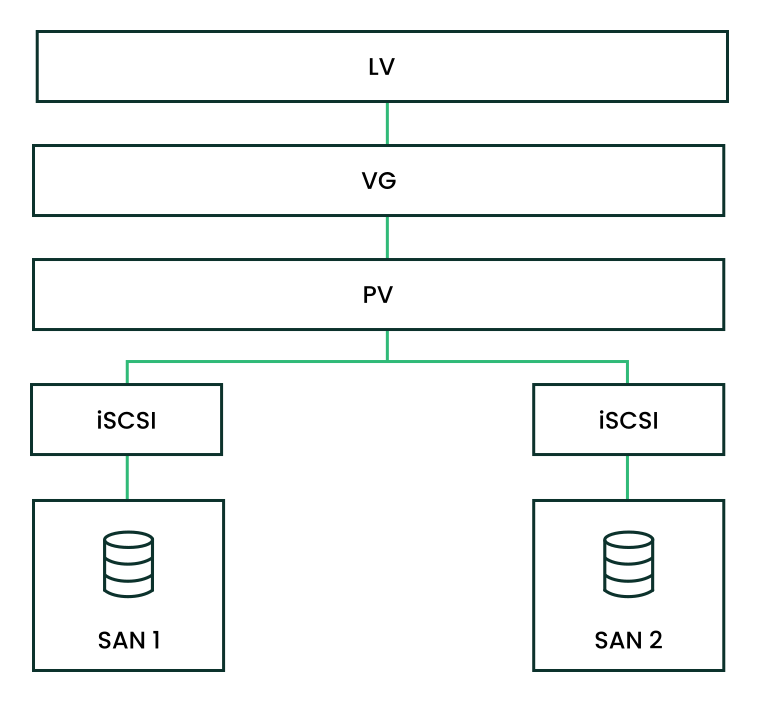

23.2.2 Scenario: Cluster LVM with iSCSI on SANs

The following scenario uses two SAN boxes which export their iSCSI targets to several clients. The general idea is displayed in Figure 23.1, “Setup of a shared disk with Cluster LVM”.

The following procedures will destroy any data on your disks!

Configure only one SAN box first. Each SAN box needs to export its own iSCSI target. Proceed as follows:

Procedure 23.5: Configuring iSCSI targets (SAN)

Run YaST and click › to start the iSCSI Server module.

If you want to start the iSCSI target whenever your computer is booted, choose , otherwise choose .

If you have a firewall running, enable .

Switch to the tab. If you need authentication, enable incoming or outgoing authentication or both. In this example, we select .

Add a new iSCSI target:

Switch to the tab.

Click .

Enter a target name. The name needs to be formatted like this:

iqn.DATE.DOMAIN

For more information about the format, refer to Section 3.2.6.3.1, “Type "iqn." (iSCSI Qualified Name)”, of RFC 3720 at http://www.ietf.org/rfc/rfc3720.txt.

If you want a more descriptive name, you can change it, as long as the identifier is unique across your targets.

Click .

Enter the device name in and use a .

Click twice.

Confirm the warning box with .

Open the configuration file `/etc/iscsi/iscsid.conf` and change the parameter `node.startup` to `automatic`.
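If you prefer to make this change from a shell rather than in an editor, the following sketch shows one way to do it. The function name `set_node_startup_automatic` is hypothetical. It assumes the file uses the usual `key = value` layout, rewrites any uncommented `node.startup` line, and appends the setting if no such line exists. It writes through a temporary file and copies the result back, so the original file keeps its owner and permissions.

```shell
#!/bin/sh
# Hypothetical helper: set "node.startup = automatic" in an iscsid.conf-style
# file. An existing uncommented node.startup line is rewritten; if there is
# none, the setting is appended.
# Usage: set_node_startup_automatic /etc/iscsi/iscsid.conf
set_node_startup_automatic() {
    conf=$1
    if [ ! -f "$conf" ]; then
        echo "no such file: $conf" >&2
        return 1
    fi
    if grep -q '^[[:space:]]*node\.startup[[:space:]]*=' "$conf"; then
        # Edit into a temporary file (portable, unlike "sed -i"), then copy the
        # result back so the original file keeps its owner and permissions.
        sed 's/^[[:space:]]*node\.startup[[:space:]]*=.*/node.startup = automatic/' \
            "$conf" > "$conf.tmp" &&
            cat "$conf.tmp" > "$conf" &&
            rm -f "$conf.tmp"
    else
        echo 'node.startup = automatic' >> "$conf"
    fi
}
```

Run it as `root`, for example `set_node_startup_automatic /etc/iscsi/iscsid.conf`, after making a backup copy of the file.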

Now set up your iSCSI initiators as follows:

Procedure 23.6: Configuring iSCSI initiators

Run YaST and click › .

If you want to start the iSCSI initiator whenever your computer is booted, choose , otherwise set .

Change to the tab and click the button.

Add the IP address and the port of your iSCSI target (see Procedure 23.5, “Configuring iSCSI targets (SAN)”). Normally, you can leave the port as it is and use the default value.

If you use authentication, insert the incoming and outgoing user name and password, otherwise activate .

Select . The found connections are displayed in the list.

Proceed with .

Open a shell and log in as `root`.

Test if the iSCSI initiator has been started successfully:

    # iscsiadm -m discovery -t st -p 192.168.3.100
    192.168.3.100:3260,1 iqn.2010-03.de.jupiter:san1

Establish a session:

    # iscsiadm -m node -l -p 192.168.3.100 -T iqn.2010-03.de.jupiter:san1
    Logging in to [iface: default, target: iqn.2010-03.de.jupiter:san1, portal: 192.168.3.100,3260]
    Login to [iface: default, target: iqn.2010-03.de.jupiter:san1, portal: 192.168.3.100,3260]: successful

See the device names with `lsscsi`:

    ...
    [4:0:0:2] disk IET ... 0 /dev/sdd
    [5:0:0:1] disk IET ... 0 /dev/sde

Look for entries with `IET` in their third column. In this case, the devices are `/dev/sdd` and `/dev/sde`.
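The discovery output and the `lsscsi` listing above can also be processed with small text filters, which helps when several targets or many disks are involved. The sketch below is an illustration only, and both function names are hypothetical. `print_iscsi_logins` assumes the `PORTAL,TPGT TARGET` line format of the discovery output shown above and prints (but does not run) one login command per target, passing the portal with its port, which `iscsiadm -p` accepts. `iet_devices` applies the rule from the previous step: keep the lines whose third column is `IET` and print their device node.

```shell
#!/bin/sh
# Hypothetical helpers that only print or filter text; they do not log in.

# Read "iscsiadm -m discovery -t st -p PORTAL" output ("IP:PORT,TPGT TARGET")
# from stdin and print one matching login command per discovered target.
print_iscsi_logins() {
    while read -r portal target; do
        [ -n "$target" ] || continue
        # Drop the ",TPGT" suffix (target portal group tag) from the portal.
        echo "iscsiadm -m node -l -p ${portal%%,*} -T $target"
    done
}

# Read "lsscsi" output from stdin and print the device nodes (last column)
# of all entries whose vendor column (third field) is IET.
iet_devices() {
    awk '$3 == "IET" { print $NF }'
}

# Example: reuse the discovery output shown above.
echo "192.168.3.100:3260,1 iqn.2010-03.de.jupiter:san1" | print_iscsi_logins
```

For example, `lsscsi | iet_devices` prints the device nodes of the iSCSI disks exported by the SAN boxes, one per line.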

Open a `root` shell on one of the nodes on which you ran the iSCSI initiator from Procedure 23.6, “Configuring iSCSI initiators”.

Create the shared volume group on disks `/dev/sdd` and `/dev/sde`, using their stable device names (for example, in `/dev/disk/by-id/`):

    # vgcreate --shared testvg /dev/disk/by-id/DEVICE_ID /dev/disk/by-id/DEVICE_ID

Create logical volumes as needed:

    # lvcreate --name lv1 --size 500M testvg

Check the volume group with `vgdisplay`:

    --- Volume group ---
    VG Name               testvg
    System ID
    Format                lvm2
    Metadata Areas        2
    Metadata Sequence No  1
    VG Access             read/write
    VG Status             resizable
    MAX LV                0
    Cur LV                0
    Open LV               0
    Max PV                0
    Cur PV                2
    Act PV                2
    VG Size               1016,00 MB
    PE Size               4,00 MB
    Total PE              254
    Alloc PE / Size       0 / 0
    Free PE / Size        254 / 1016,00 MB
    VG UUID               UCyWw8-2jqV-enuT-KH4d-NXQI-JhH3-J24anD

Check the shared state of the volume group with the command `vgs`:

    # vgs
      VG       #PV #LV #SN Attr   VSize    VFree
      vgshared   1   1   0 wz--ns 1016.00m 1016.00m

The `Attr` column shows the volume attributes. In this example, the volume group is writable (`w`), resizeable (`z`), the allocation policy is normal (`n`), and it is a shared resource (`s`). See the man page of `vgs` for details.
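To read other `Attr` values, the six positions can be decoded one by one. The following sketch does this for the VG attribute string. The function names `attr_char` and `decode_vg_attr` are hypothetical, and the letters it recognizes are taken from the vgs(8) man page; any other character is reported as unknown or as the default state.

```shell
#!/bin/sh
# Hypothetical helper: print the character at position $2 of string $1.
attr_char() {
    printf '%s' "$1" | cut -c"$2"
}

# Hypothetical helper: explain the six-character VG "Attr" field shown by vgs.
# Positions follow the vgs(8) man page:
#   1 permissions, 2 resizeable, 3 exported, 4 partial,
#   5 allocation policy, 6 clustered/shared
decode_vg_attr() {
    attr=$1
    if [ "${#attr}" -ne 6 ]; then
        echo "expected 6 characters, got: $attr" >&2
        return 1
    fi
    case $(attr_char "$attr" 1) in
        w) echo "permissions: writable" ;;
        r) echo "permissions: read-only" ;;
        *) echo "permissions: unknown" ;;
    esac
    case $(attr_char "$attr" 2) in
        z) echo "resizeable: yes" ;;
        *) echo "resizeable: no" ;;
    esac
    case $(attr_char "$attr" 3) in
        x) echo "exported: yes" ;;
        *) echo "exported: no" ;;
    esac
    case $(attr_char "$attr" 4) in
        p) echo "partial: yes (PVs missing)" ;;
        *) echo "partial: no" ;;
    esac
    case $(attr_char "$attr" 5) in
        c) echo "allocation policy: contiguous" ;;
        l) echo "allocation policy: cling" ;;
        n) echo "allocation policy: normal" ;;
        a) echo "allocation policy: anywhere" ;;
        *) echo "allocation policy: unknown" ;;
    esac
    case $(attr_char "$attr" 6) in
        c) echo "sharing: clustered (clvmd)" ;;
        s) echo "sharing: shared (lvmlockd)" ;;
        *) echo "sharing: local" ;;
    esac
}

decode_vg_attr wz--ns
```

For the `wz--ns` value above, the last line of the output is `sharing: shared (lvmlockd)`.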

After you have created the volumes and started your resources, you should have new device names under `/dev/testvg`, for example `/dev/testvg/lv1`. This indicates that the LV has been activated for use.

23.2.3 Scenario: Cluster LVM with DRBD

The following scenarios can be used if you have data centers located in different parts of your city, country, or continent.

Create a primary/primary DRBD resource:

First, set up a DRBD device as primary/secondary as described in Procedure 22.2, “Manually configuring DRBD”. Make sure the disk state is `up-to-date` on both nodes. Check this with `drbdadm status`.

Add the following options to your configuration file (usually something like `/etc/drbd.d/r0.res`):

    resource r0 {
      net {
        allow-two-primaries;
      }
      ...
    }

Copy the changed configuration file to the other node, for example:

    # scp /etc/drbd.d/r0.res venus:/etc/drbd.d/

Run the following commands on both nodes:

    # drbdadm disconnect r0
    # drbdadm connect r0
    # drbdadm primary r0

Check the status of your nodes:

    # drbdadm status r0
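Before continuing, both nodes should report the Primary role and UpToDate disks. The following sketch checks this from the output of `drbdadm status r0`. It assumes the DRBD 9 output layout shown in its comments (one `role:` entry for the local node and one per peer, plus `disk:` and `peer-disk:` states). The function name `drbd_dual_primary_ok` is hypothetical; adapt the patterns if your DRBD version formats the status differently.

```shell
#!/bin/sh
# Hypothetical helper: read "drbdadm status RESOURCE" output on stdin and
# succeed only if at least two "role:Primary" entries appear and every
# disk/peer-disk state is UpToDate. Assumes the DRBD 9 layout, for example:
#   r0 role:Primary
#     disk:UpToDate
#     venus role:Primary
#       peer-disk:UpToDate
drbd_dual_primary_ok() {
    status=$(cat)
    # Count lines that report the Primary role (local node and peers).
    primaries=$(printf '%s\n' "$status" | grep -c 'role:Primary')
    # Collect every disk:STATE and peer-disk:STATE token and count the
    # ones that are not UpToDate.
    degraded=$(printf '%s\n' "$status" | grep -Eo '(peer-)?disk:[A-Za-z]+' | grep -vc ':UpToDate$')
    [ "$primaries" -ge 2 ] && [ "$degraded" -eq 0 ]
}

# Usage on a node:
#   drbdadm status r0 | drbd_dual_primary_ok && echo "r0 is primary/primary"
```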

Include the lvmlockd resource as a clone in the Pacemaker configuration, and make it depend on the DLM clone resource. See Procedure 23.1, “Creating a DLM resource” and Procedure 23.2, “Creating an lvmlockd resource” for detailed instructions. Before proceeding, confirm that these resources have started successfully on your cluster. Use `crm status` or the Web interface to check the running services.

Prepare the physical volume for LVM with the command `pvcreate`. For example, on the device `/dev/drbd_r0` the command would look like this:

    # pvcreate /dev/drbd_r0

Create a shared volume group:

    # vgcreate --shared testvg /dev/drbd_r0

Create logical volumes as needed. You probably want to change the size of the logical volume. For example, create a 4 GB logical volume with the following command:

    # lvcreate --name lv1 -L 4G testvg

The logical volumes within the VG are now available for file system mounts or raw usage. Ensure that services using them have proper dependencies to colocate them with the VG and to order them after the VG has been activated.

After finishing these configuration steps, you can manage LVM as on any stand-alone workstation.

23.3 Configuring eligible LVM devices explicitly

When several devices seemingly share the same physical volume signature (as can be the case for multipath devices or DRBD), we recommend explicitly configuring the devices that LVM scans for PVs.

For example, if the `vgcreate` command uses the physical device instead of the mirrored block device, DRBD becomes confused. This may result in a split-brain condition for DRBD.

To exclude a single device from LVM, do the following:

Edit the file `/etc/lvm/lvm.conf` and search for the line starting with `filter`.

The patterns there are handled as regular expressions. A leading `a` accepts devices that match the pattern for the scan; a leading `r` rejects them. The patterns are evaluated in order, and the first pattern that matches a device path decides.

To remove a device named `/dev/sdb1`, add the following expression to the filter rule:

    "r|^/dev/sdb1$|"

The complete filter line will look like the following:

    filter = [ "r|^/dev/sdb1$|", "r|/dev/.*/by-path/.*|", "r|/dev/.*/by-id/.*|", "a/.*/" ]

A filter line that accepts DRBD and MPIO devices but rejects all other devices would look like this:

    filter = [ "a|/dev/drbd.*|", "a|/dev/.*/by-id/dm-uuid-mpath-.*|", "r/.*/" ]

Write the configuration file and copy it to all cluster nodes.
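To test a filter list before copying it to the nodes, the following sketch models how the list is applied to a single device path: the first pattern that matches decides, and a path that matches no pattern is accepted. It is a simplification, not LVM's implementation. The function name `lvm_filter_decision` is hypothetical, patterns are matched with `grep -E`, and it ignores that LVM evaluates every alias (symlink) of a device.

```shell
#!/bin/sh
# Hypothetical helper: simplified model of an lvm.conf "filter" list applied
# to one device path. Prints "accept" or "reject".
# Usage: lvm_filter_decision PATH RULE...   (e.g. 'r|^/dev/sdb1$|' 'a/.*/')
lvm_filter_decision() {
    if [ "$#" -lt 1 ]; then
        echo "usage: lvm_filter_decision PATH [RULE...]" >&2
        return 1
    fi
    path=$1
    shift
    for rule in "$@"; do
        action=$(printf '%s' "$rule" | cut -c1)
        body=${rule#??}     # strip the action letter and the opening delimiter
        pattern=${body%?}   # strip the closing delimiter
        if printf '%s\n' "$path" | grep -Eq -- "$pattern"; then
            # First matching pattern decides.
            case $action in
                a) echo accept ;;
                r) echo reject ;;
                *) echo "invalid rule: $rule" >&2; return 1 ;;
            esac
            return 0
        fi
    done
    # No pattern matched: the device is accepted.
    echo accept
}

lvm_filter_decision /dev/sdb1 'r|^/dev/sdb1$|' 'a/.*/'
```

With the complete filter line from the previous step, `/dev/sdb1` and any `/dev/disk/by-id/...` path are rejected, while a path such as `/dev/sdb10` falls through to `a/.*/` and is accepted.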

23.4 Online migration from mirror LV to cluster MD

Starting with SUSE Linux Enterprise High Availability 15, cmirrord in Cluster LVM is deprecated. We highly recommend migrating the mirror logical volumes in your cluster to cluster MD.

Cluster MD stands for cluster multi-device and is a software-based RAID storage solution for a cluster.

23.4.1 Example setup before migration

Let us assume you have the following example setup:

- You have a two-node cluster consisting of the nodes `alice` and `bob`.
- A mirror logical volume named `test-lv` was created from a volume group named `cluster-vg2`.
- The volume group `cluster-vg2` is composed of the disks `/dev/vdb` and `/dev/vdc`.

The `lsblk` command shows this layout:

    # lsblk
    NAME                                  MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    vda                                   253:0    0   40G  0 disk
    ├─vda1                                253:1    0    4G  0 part [SWAP]
    └─vda2                                253:2    0   36G  0 part /
    vdb                                   253:16   0   20G  0 disk
    ├─cluster--vg2-test--lv_mlog_mimage_0 254:0    0    4M  0 lvm
    │ └─cluster--vg2-test--lv_mlog        254:2    0    4M  0 lvm
    │   └─cluster--vg2-test--lv           254:5    0   12G  0 lvm
    └─cluster--vg2-test--lv_mimage_0      254:3    0   12G  0 lvm
      └─cluster--vg2-test--lv             254:5    0   12G  0 lvm
    vdc                                   253:32   0   20G  0 disk
    ├─cluster--vg2-test--lv_mlog_mimage_1 254:1    0    4M  0 lvm
    │ └─cluster--vg2-test--lv_mlog        254:2    0    4M  0 lvm
    │   └─cluster--vg2-test--lv           254:5    0   12G  0 lvm
    └─cluster--vg2-test--lv_mimage_1      254:4    0   12G  0 lvm
      └─cluster--vg2-test--lv             254:5    0   12G  0 lvm

Important: Avoiding migration failures

Before you start the migration procedure, check the capacity and degree of utilization of your logical and physical volumes. If the logical volume uses 100% of the physical volume capacity, the migration might fail with an insufficient free space error on the target volume.
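One way to spot a fully used physical volume is to compare the size and the free space that `pvs` reports for each PV. The sketch below filters such a listing. The function name `full_pvs` is hypothetical, and it expects three whitespace-separated columns (name, size, free), as produced by the `pvs` invocation in its comments. A PV with zero free space is a candidate for the failure described above.

```shell
#!/bin/sh
# Hypothetical helper: read "NAME SIZE FREE" lines on stdin and print the name
# of every physical volume without free space. One way to produce such lines:
#   pvs --noheadings --nosuffix --units k -o pv_name,pv_size,pv_free
full_pvs() {
    # $3 + 0 converts the free-space column to a number ("0.00" -> 0).
    awk 'NF >= 3 && $3 + 0 == 0 { print $1 }'
}

# Usage on a node:
#   pvs --noheadings --nosuffix --units k -o pv_name,pv_size,pv_free | full_pvs
```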

How to prevent this migration failure depends on the options used for the mirror log:

- Is the mirror log itself mirrored (`mirrored` option) and allocated on the same device as the mirror leg? (For example, this might be the case if you have created the logical volume for a `cmirrord` setup on SUSE Linux Enterprise High Availability 11 or 12 as described in the Administration Guide for those versions.)

  By default, `mdadm` reserves a certain amount of space between the start of a device and the start of array data. During migration, you can check for the unused padding space and reduce it with the `data-offset` option, as shown in the `mdadm --create` step of Section 23.4.2, “Migrating a mirror LV to cluster MD”, and the steps that follow it.

  The `data-offset` must leave enough space on the device for cluster MD to write its metadata to it. On the other hand, the offset must be small enough for the remaining capacity of the device to accommodate all physical volume extents of the migrated volume. Because the volume may have spanned the complete device minus the mirror log, the offset must be smaller than the size of the mirror log.

  We recommend setting the `data-offset` to 128 kB. If no value is specified for the offset, its default value is 1 kB (1024 bytes).

- Is the mirror log written to a different device (`disk` option) or kept in memory (`core` option)? Before starting the migration, either enlarge the size of the physical volume or reduce the size of the logical volume (to free more space for the physical volume).
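The constraint on the `data-offset` is simple arithmetic: the capacity left after the offset must still hold every extent of the migrated LV. The following sketch checks this with all sizes in KiB. The function name `md_offset_fits` is hypothetical, and the check is simplified: it assumes the new PV on the MD device needs the same LVM metadata space as the old PV did, so only the offset is subtracted. The example values are a hypothetical worst case derived from the example setup: a 20 GiB disk whose LV fills it except for a 4 MiB mirror log.

```shell
#!/bin/sh
# Hypothetical helper: check whether a cluster MD data offset still leaves room
# for all extents of the migrated LV. All sizes are in KiB (1 KiB = 1024 bytes).
# Simplification: assumes the new PV needs the same LVM metadata space as the
# old PV, so only the data offset is subtracted from the device size.
# Usage: md_offset_fits DEVICE_KIB OFFSET_KIB NEEDED_KIB
md_offset_fits() {
    device_kib=$1 offset_kib=$2 needed_kib=$3
    usable_kib=$((device_kib - offset_kib))
    if [ "$usable_kib" -ge "$needed_kib" ]; then
        echo "fits: $usable_kib KiB usable, $needed_kib KiB needed"
        return 0
    fi
    echo "too large: $usable_kib KiB usable, $needed_kib KiB needed"
    return 1
}

# Hypothetical worst case: 20 GiB disk, LV spanning it minus a 4 MiB mirror log.
dev_kib=$((20 * 1024 * 1024))
md_offset_fits "$dev_kib" 128 $((dev_kib - 4096))
# prints: fits: 20971392 KiB usable, 20967424 KiB needed
```

With an offset of 8192 KiB, which is larger than the 4 MiB mirror log, the same call reports `too large`.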

23.4.2 Migrating a mirror LV to cluster MD

The following procedure is based on Section 23.4.1, “Example setup before migration”. Adjust the instructions to match your setup and replace the names for the LVs, VGs, disks and the cluster MD device accordingly.

The migration does not involve any downtime. The file system can still be mounted during the migration procedure.

On node `alice`, execute the following steps:

Convert the mirror logical volume `test-lv` to a linear logical volume:

    # lvconvert -m0 cluster-vg2/test-lv /dev/vdc

Remove the physical volume `/dev/vdc` from the volume group `cluster-vg2`:

    # vgreduce cluster-vg2 /dev/vdc

Remove this physical volume from LVM:

    # pvremove /dev/vdc

When you run `lsblk` now, you get:

    NAME                        MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    vda                         253:0    0   40G  0 disk
    ├─vda1                      253:1    0    4G  0 part [SWAP]
    └─vda2                      253:2    0   36G  0 part /
    vdb                         253:16   0   20G  0 disk
    └─cluster--vg2-test--lv     254:5    0   12G  0 lvm
    vdc                         253:32   0   20G  0 disk

Create a cluster MD device `/dev/md0` with the disk `/dev/vdc`:

    # mdadm --create /dev/md0 --bitmap=clustered \
      --metadata=1.2 --raid-devices=1 --force --level=mirror \
      /dev/vdc --data-offset=128

For details on why to use the `data-offset` option, see “Important: Avoiding migration failures”.

On node `bob`, assemble this MD device:

    # mdadm --assemble md0 /dev/vdc

If your cluster consists of more than two nodes, execute this step on all remaining nodes in your cluster.

Back on node `alice`:

Initialize the MD device `/dev/md0` as physical volume for use with LVM:

    # pvcreate /dev/md0

Add the MD device `/dev/md0` to the volume group `cluster-vg2`:

    # vgextend cluster-vg2 /dev/md0

Move the data from the disk `/dev/vdb` to the `/dev/md0` device:

    # pvmove /dev/vdb /dev/md0

Remove the physical volume `/dev/vdb` from the volume group `cluster-vg2`:

    # vgreduce cluster-vg2 /dev/vdb

Remove the label from the device so that LVM no longer recognizes it as physical volume:

    # pvremove /dev/vdb

Add `/dev/vdb` to the MD device `/dev/md0`:

    # mdadm --grow /dev/md0 --raid-devices=2 --add /dev/vdb
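Because the procedure above has to be adapted to your own names, it can help to generate the command sequence first and review it. The following sketch prints the commands of this section in order, each prefixed with the node on which it runs. The function name `print_md_migration` and its argument order are hypothetical; it hard-codes the recommended `--data-offset=128` and does not execute anything.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) the migration commands of this section
# for your own names. Arguments, with the example values in parentheses:
#   VG (cluster-vg2)  LV (test-lv)
#   KEEP_DISK (/dev/vdb): disk whose data is moved last
#   MOVE_DISK (/dev/vdc): disk that is freed first and starts the MD device
#   MD_NAME (md0)
print_md_migration() {
    if [ "$#" -ne 5 ]; then
        echo "usage: print_md_migration VG LV KEEP_DISK MOVE_DISK MD_NAME" >&2
        return 1
    fi
    vg=$1 lv=$2 keep=$3 move=$4 md=$5
    first="first node:"
    rest="all other nodes:"
    echo "$first lvconvert -m0 $vg/$lv $move"
    echo "$first vgreduce $vg $move"
    echo "$first pvremove $move"
    echo "$first mdadm --create /dev/$md --bitmap=clustered --metadata=1.2 --raid-devices=1 --force --level=mirror $move --data-offset=128"
    echo "$rest mdadm --assemble $md $move"
    echo "$first pvcreate /dev/$md"
    echo "$first vgextend $vg /dev/$md"
    echo "$first pvmove $keep /dev/$md"
    echo "$first vgreduce $vg $keep"
    echo "$first pvremove $keep"
    echo "$first mdadm --grow /dev/$md --raid-devices=2 --add $keep"
}

print_md_migration cluster-vg2 test-lv /dev/vdb /dev/vdc md0
```

Called with the example values, as in the last line, it reproduces the commands of this section, with `alice` as the first node and `bob` among the other nodes.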

23.4.3 Example setup after migration

When you run `lsblk` now, you get:

    NAME                          MAJ:MIN RM  SIZE RO TYPE  MOUNTPOINT
    vda                           253:0    0   40G  0 disk
    ├─vda1                        253:1    0    4G  0 part  [SWAP]
    └─vda2                        253:2    0   36G  0 part  /
    vdb                           253:16   0   20G  0 disk
    └─md0                         9:0      0   20G  0 raid1
      └─cluster--vg2-test--lv     254:5    0   12G  0 lvm
    vdc                           253:32   0   20G  0 disk
    └─md0                         9:0      0   20G  0 raid1
      └─cluster--vg2-test--lv     254:5    0   12G  0 lvm