1 Building a SUSE OpenStack Cloud Test lab #

1.1 Document Scope #

This document will help you to prepare SUSE and prospective customers for a Proof of Concept (PoC) deployment of SUSE OpenStack Cloud. This document provides specific details for a PoC deployment. It serves as an addition to the SUSE OpenStack Cloud Deployment Guide using Crowbar.

1.2 SUSE OpenStack Cloud Key Features #

The latest version 9 of SUSE OpenStack Cloud supports all OpenStack Rocky release components for best-in-class capabilities to deploy an open source private cloud. Here is a brief overview of SUSE OpenStack Cloud features and functionality.

Installation Framework: Integration with the Crowbar project speeds up and simplifies installation and administration of your physical cloud infrastructure.

Mixed Hypervisor Support: Enhanced virtualization management through support for multi-hypervisor environments that use KVM and VMware vSphere.

High Availability: Automated deployment and configuration of control plane clusters. This ensures continuous access to business services and delivery of enterprise-grade Service Level Agreements.

High Availability for KVM Compute Nodes and Workloads: Enhanced support for critical workloads not designed for cloud architectures.

Docker Support: Gives the ability to build and run innovative containerized applications through Magnum integration.

Scalability: Cloud control system designed to grow with your demands.

Open APIs: Using the standard APIs, customers can enhance and integrate OpenStack with third-party software.

Block Storage Plug-Ins: A wide range of block storage plug-ins is available from SUSE Enterprise Storage and other storage vendors such as EMC and NetApp.

Award-Winning Support: SUSE OpenStack Cloud is backed by 24x7 worldwide technical support.

Full Integration with SUSE Update Processes: Simplifies maintaining and patching cloud deployments.

Non-Disruptive Upgrade Capabilities: Simplify migration to future SUSE OpenStack Cloud releases.

1.3 Main Components #

The following is a brief overview of components for setting up and managing SUSE OpenStack Cloud.

Administration Server: Provides all services needed to manage and deploy all other nodes in the cloud. Most of these services are provided by the Crowbar tool. Together with Chef, Crowbar automates all the required installation and configuration tasks. The services provided by the server include DHCP, DNS, NTP, PXE, and TFTP.

The Administration Server also hosts the software repositories for SUSE Linux Enterprise Server and SUSE OpenStack Cloud. These repositories are required for node deployment. If no other sources for the software repositories are available, the Administration Server can also host the Subscription Management Tool (SMT), providing up-to-date repositories with updates and patches for all nodes.

Control Nodes host all OpenStack services for orchestrating virtual machines deployed on the compute nodes. OpenStack in SUSE OpenStack Cloud uses a MariaDB database that is also hosted on the Control Nodes. When deployed, the following OpenStack components run on the Control Nodes:

MariaDB

Image (glance)

Identity (keystone)

Networking (neutron)

Block Storage (cinder)

Shared Storage (manila)

OpenStack Dashboard


Pacemaker

nova controller

Message broker

swift proxy server

Hawk monitor

Orchestration (heat)

ceilometer server and agents

A single Control Node running multiple services can become a performance bottleneck, especially in large SUSE OpenStack Cloud deployments. It is possible to distribute the services listed above on more than one Control Node. This includes scenarios where each service runs on its own node.

Compute Nodes are a pool of machines for running instances. These machines require an adequate number of CPUs and enough RAM to start several instances. They also need to provide sufficient storage. A Control Node distributes instances within the pool of Compute Nodes and provides the necessary network resources. The OpenStack service Compute (nova) runs on Compute Nodes and provides the means for setting up, starting, and stopping virtual machines. SUSE OpenStack Cloud supports several hypervisors such as KVM and VMware vSphere. Each image that can be started with an instance is bound to one hypervisor. Each Compute Node can only run one hypervisor at a time. For a PoC deployment, SUSE recommends using KVM as the hypervisor.

Optional Storage Nodes: A pool of machines that provide object or block storage. Object storage supports several back-ends.

1.4 Objectives and Preparations #

Although each customer has a specific set of requirements, it is important to have 3-5 clearly defined objectives. These objectives should be provable and measurable, and each should have a specific time scale in which proof is required. The objectives can be adjusted and amended, provided that both parties agree on the changes. For a full record of the performed and completed work, it is recommended to use this document for making amendments to the proof requirements.

Before deploying SUSE OpenStack Cloud, it is necessary to meet certain requirements and consider various aspects of the deployment. Some decisions need to be made before deploying SUSE OpenStack Cloud, since they cannot be changed afterward.

The following procedure covers preparatory steps for the deployment of SUSE OpenStack Cloud along with the software and hardware components required for a successful implementation.

Make sure that the required hardware and virtual machines are provided and configured

Check that PXE boot from the first NIC in BIOS is enabled

Ensure that the hardware is certified for use with SUSE Linux Enterprise Server 12 SP4

Check that booting from ISO images works

Make sure that all NICs are visible

Install sar/sysstat for performance troubleshooting

Ensure that all needed subscription records are available. Depending on the size of the cloud to be implemented, this includes the following:

SUSE OpenStack Cloud subscriptions

SUSE Linux Enterprise Server subscriptions

SLES High Availability Extensions (HAE) subscriptions

Optional SUSE Enterprise Storage (SES) subscriptions

Check whether all needed channels and updates are available either locally or remotely. The following options can be used to provide the repositories and channels:

SMT server on the administration server (optional step)

Existing SMT server

Existing SUSE Manager

Make sure that networking is planned and wired according to the specified layout or topology

If SUSE Enterprise Storage is part of the PoC deployment, all nodes must be installed, configured, and optimized before installing SUSE OpenStack Cloud. Storage services (nova, cinder, glance, Cluster STONITH) required by SUSE OpenStack Cloud must be available and accessible.

Check whether network.json is configured according to the specific requirements. This step must be discussed and completed in advance (see Section 1.6.1, “The network.json Network Control File” and Deployment Guide using Crowbar)

1.5 Hardware and Software Matrix #

The hardware and software matrix below has the following requirements:

All machines must run SUSE Linux Enterprise Server 12 SP4

KVM Hypervisor must be running on bare metal

The Admin Node can be deployed on a KVM or VMware virtual machine

The sizing recommendation includes an Admin Node (bare metal or VM), Controller Nodes, Compute Nodes to host all your OpenStack services, and the optional SES Nodes. The matrix also provides information on the necessary network equipment and bandwidth requirements.

These recommendations are based on real-world use cases and experience gathered by SUSE in the last three years. However, these recommendations are meant to serve as guidelines and not as requirements. The final sizing decision depends on the actual customer workloads and architecture, which must be discussed in depth. The type and number of hardware components such as hard disks, CPU, and RAM also serve as starting points for further discussion and evaluation depending on workloads.

| Number of Units | Function | Configuration | OpenStack Component |
|---|---|---|---|
| 3 | Compute nodes | 2 hard disks; 2 Quad Core Intel or AMD processors; 256GB RAM; 2 or 4 10Gb Ethernet NICs | nova-multi-compute, ML2 Agent, OVS Agent |
| 1 | Admin Node or VM | 2 hard disks; 1 Quad Core Intel or AMD processor; 8GB RAM; 2 or 4 10Gb Ethernet NICs | Crowbar, tftpboot, PXE |
| 3 | Control node | 2 hard disks; 2 Quad Core Intel or AMD processors; 2x64GB RAM; 2 or 4 10Gb Ethernet NICs | horizon, RabbitMQ, nova multi-controller, cinder, glance, heat, ceilometer, neutron-Server, ML2 Agent, keystone, MariaDB, neutron ML2 Plugin, L2/L3 Agents, DHCP Agent |
| | CloudFoundry | 48 vCPUs; 256GB RAM; Min 2TB Storage | SUSE Cloud Application Platform |
| 4 | Storage Server – SUSE Enterprise Storage | 2 hard disks; 2 Quad Core Intel or AMD processors; 64GB RAM; 2 or 4 10Gb Ethernet NICs | Admin Server, MON Server, OSD Server |
| 1 | Switch min. 10 GbE ports | All VLANs/Tagged or Untagged | |
| OS: SUSE Linux Enterprise Server 12 SP4 | | | |
| | DHCP, DNS | Isolated within administrator network | |
| | HA | 3 Control Nodes | |

1.6 Network Topology #

Configuring and managing your network are two of the most challenging tasks of deploying SUSE OpenStack Cloud. Therefore, they need to be planned carefully. Just as OpenStack provides flexibility and agility for compute and storage, SDN in OpenStack gives cloud administrators more control over their networks. However, building and manually configuring the virtual network infrastructure for OpenStack is difficult and error-prone. SUSE OpenStack Cloud solves this by delivering a structured installation process for OpenStack that can be customized to fit the given environment.

1.6.1 The network.json Network Control File #

The deployment of the network configuration is done while setting up an Administrator Node. As a requirement for the deployment, the entire network configuration needs to be specified in the network.json file.

The Crowbar network barclamp provides two functions for the system:

Initialization of network interfaces on the Crowbar managed systems

Address pool management. While the addresses can be managed with the YaST Crowbar module, complex network setups require manually editing the network barclamp template file /etc/crowbar/network.json. For a more detailed explanation and description, see https://documentation.suse.com/soc/9/single-html/suse-openstack-cloud-crowbar-deployment/#sec-depl-inst-admserv-post-network.

The network definitions contain IP address assignments, the bridge and VLAN setup, and settings for the router preference. Each network is also assigned to a logical interface. These VLAN IDs and networks can be modified according to the customer's environment.
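To make the shape of such a network definition more concrete, the following Python sketch parses a small hand-written fragment and prints the interface, VLAN, and address ranges for each network. The fragment is illustrative only: the key names (`attributes`, `network`, `networks`, `conduit`, `use_vlan`, `vlan`, `ranges`) are assumptions modeled on the network barclamp template and may differ from the file on your Administration Server, and `summarize_networks` is a hypothetical helper, not part of Crowbar.

```python
import json

# Hand-written fragment for illustration only; the key layout is an assumption
# and the conduit names (intf0, intf1) are placeholders.
SAMPLE = """
{
  "attributes": {
    "network": {
      "mode": "single",
      "networks": {
        "admin": {
          "conduit": "intf0",
          "use_vlan": false,
          "vlan": 100,
          "subnet": "192.168.124.0",
          "netmask": "255.255.255.0",
          "ranges": {
            "dhcp": {"start": "192.168.124.21", "end": "192.168.124.80"},
            "host": {"start": "192.168.124.81", "end": "192.168.124.160"}
          }
        },
        "storage": {
          "conduit": "intf1",
          "use_vlan": true,
          "vlan": 200,
          "subnet": "192.168.125.0",
          "netmask": "255.255.255.0",
          "ranges": {
            "host": {"start": "192.168.125.2", "end": "192.168.125.254"}
          }
        }
      }
    }
  }
}
"""


def summarize_networks(config):
    """Return one summary line per network: name, interface, VLAN, ranges."""
    lines = []
    networks = config["attributes"]["network"]["networks"]
    for name, net in sorted(networks.items()):
        # A VLAN ID only matters when tagging is switched on for the network
        vlan = net["vlan"] if net.get("use_vlan") else "untagged"
        ranges = ", ".join(
            f"{rname} {r['start']}-{r['end']}"
            for rname, r in sorted(net.get("ranges", {}).items())
        )
        lines.append(f"{name}: conduit={net['conduit']} vlan={vlan} [{ranges}]")
    return lines


summary = summarize_networks(json.loads(SAMPLE))
for line in summary:
    print(line)
```

Parsing the file with `json.loads` before handing it to Crowbar also catches plain syntax errors (missing commas, unbalanced braces) early, which is a cheap check to run after every manual edit.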

1.6.2 The Network Mode #

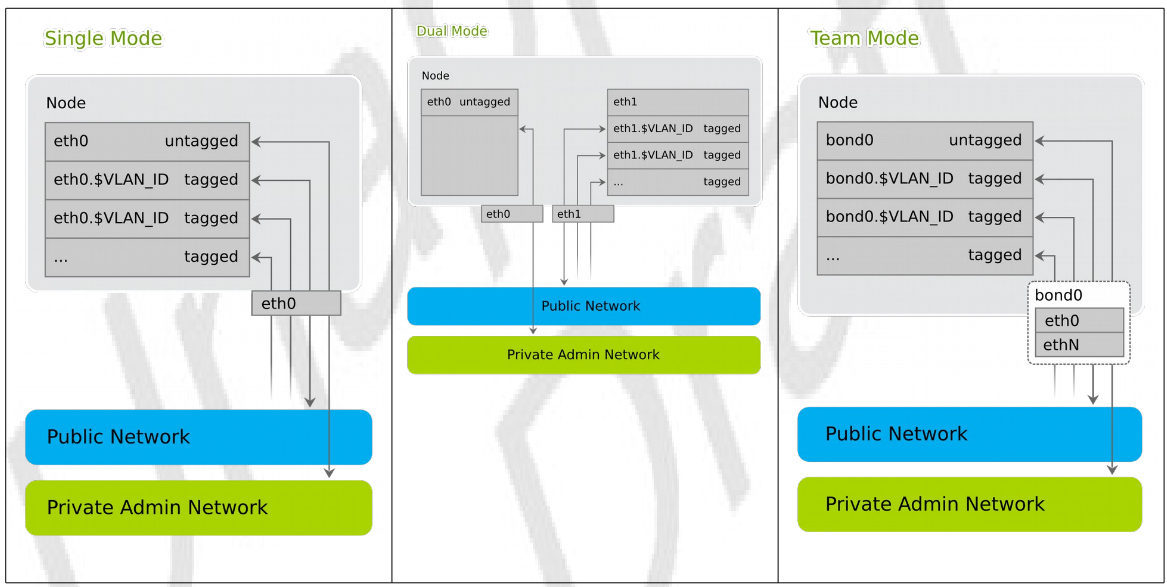

SUSE OpenStack Cloud supports three network modes: single, dual and team. As of SUSE OpenStack Cloud 6, the network mode is applied to all nodes and the Administration Server. That means that all machines need to meet the hardware requirements for the chosen mode. The following network modes are available:

Single Network Mode: In single mode, one Ethernet card is used for all the traffic.

Dual Network Mode: Dual mode needs two Ethernet cards (on all nodes except the Administration Server). This allows traffic to and from the administrator network to be completely separated from traffic to and from the public network.

Team Network Mode: Team mode is almost identical to single mode, except that it combines several Ethernet cards into a so-called bond (network device bonding). Team mode requires two or more Ethernet cards.

In an HA configuration, make sure that SUSE OpenStack Cloud is deployed with the team network mode.
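The hardware rules for the three modes can be checked against a planned node inventory before deployment. The following Python sketch is a minimal illustration under stated assumptions: the function `check_network_mode`, the node names, and the inventory format are hypothetical, and the card counts are taken only from the mode descriptions above.

```python
# Minimum number of Ethernet cards per node for each network mode,
# as stated in the mode descriptions above.
MIN_NICS = {"single": 1, "dual": 2, "team": 2}


def check_network_mode(mode, nics_per_node, ha=False, admin_server="admin"):
    """Return a list of problems; an empty list means the plan is consistent."""
    if mode not in MIN_NICS:
        return [f"unknown network mode: {mode}"]
    problems = []
    if ha and mode != "team":
        problems.append("HA requires team network mode")
    for node, nics in sorted(nics_per_node.items()):
        # Dual mode exempts the Administration Server from the two-card rule
        if mode == "dual" and node == admin_server:
            continue
        if nics < MIN_NICS[mode]:
            problems.append(
                f"{node}: has {nics} NIC(s), {mode} mode needs {MIN_NICS[mode]}"
            )
    return problems


# Hypothetical PoC inventory: node name -> number of Ethernet cards
plan = {"admin": 1, "control1": 2, "compute1": 2}
print(check_network_mode("dual", plan))           # no problems expected
print(check_network_mode("team", plan, ha=True))  # admin has too few cards
```

Because the network mode cannot be changed after deployment, running a check like this during planning is cheaper than discovering a missing card on one node afterward.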

1.6.3 Default Layout #

The following networks are pre-defined for use with SUSE OpenStack Cloud.

Administrator Network (192.168.124/24)

| Network Name | VLAN | IP Range |
|---|---|---|
| Router | No - untagged | 192.168.124.1 |
| Admin | No - untagged | 192.168.124.10 – 192.168.124.11 |
| DHCP | No - untagged | 192.168.124.21 – 192.168.124.80 |
| Host | No - untagged | 192.168.124.81 – 192.168.124.160 |
| BMC VLAN Host | 100 | 192.168.124.161 |
| BMC Host | No - untagged | 192.168.124.162 – 192.168.124.240 |
| Switch | No - untagged | 192.168.124.241 – 192.168.124.250 |

Private Network (nova-fixed, 192.168.123/24)

| Network Name | VLAN | IP Range |
|---|---|---|
| Router | 500 | 192.168.123.1 – 192.168.123.49 |
| DHCP | 500 | 192.168.123.50 – 192.168.123.254 |

Public Network (nova-floating, public, 192.168.126/24)

| Network Name | VLAN | IP Range |
|---|---|---|
| Public Host | 300 | 192.168.126.2 – 192.168.126.49 |
| Public DHCP | 300 | 192.168.126.50 – 192.168.126.127 |
| Floating Host | 300 | 192.168.126.129 – 192.168.126.191 |

Storage Network (192.168.125/24)

| Network Name | VLAN | IP Range |
|---|---|---|
| Host | 200 | 192.168.125.2 – 192.168.125.254 |

The default IP addresses can be changed using the YaST Crowbar module or by editing the appropriate JSON file. It is also possible to customize the network setup for your environment by editing the network barclamp template.

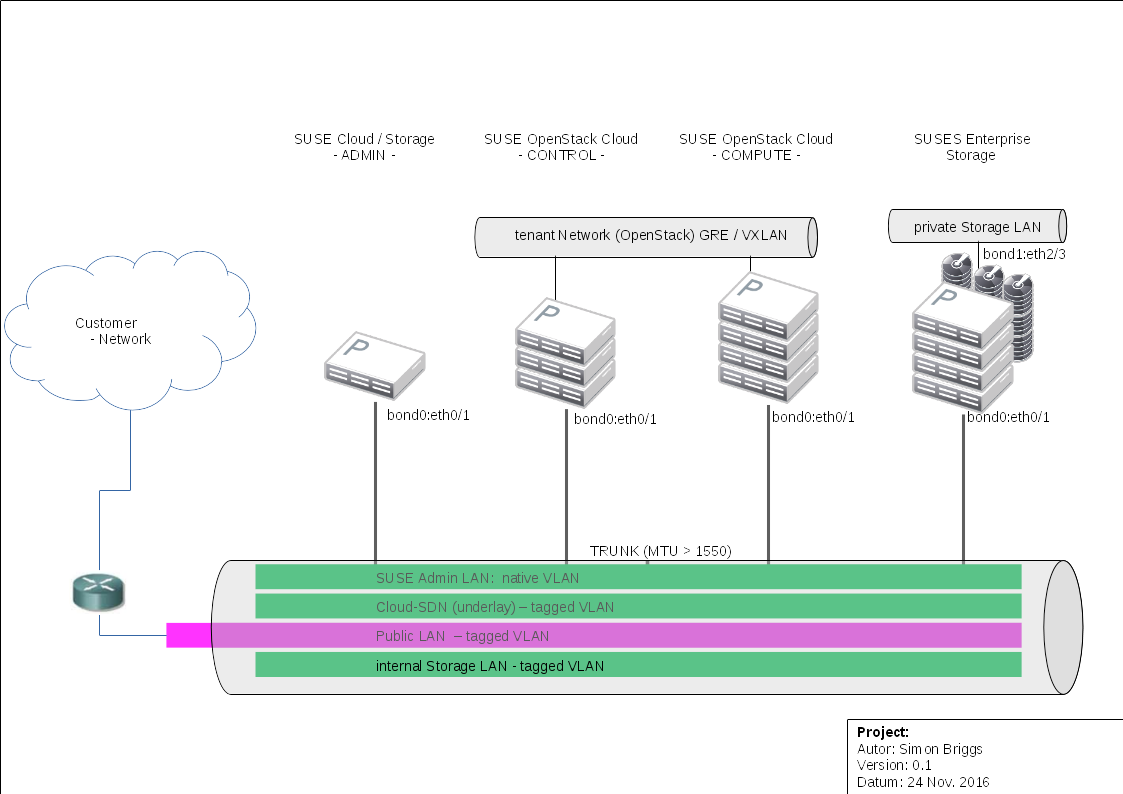

1.7 Network Architecture #

SUSE OpenStack Cloud requires a complex network setup consisting of several networks configured during installation. These networks are reserved for cloud usage. Access to these networks from an existing network requires a router.

The network configuration on the nodes in the SUSE OpenStack Cloud network is controlled by Crowbar. Any network configuration changes done outside Crowbar will be automatically overwritten. After the cloud is deployed, network settings cannot be changed without reinstalling the cloud.

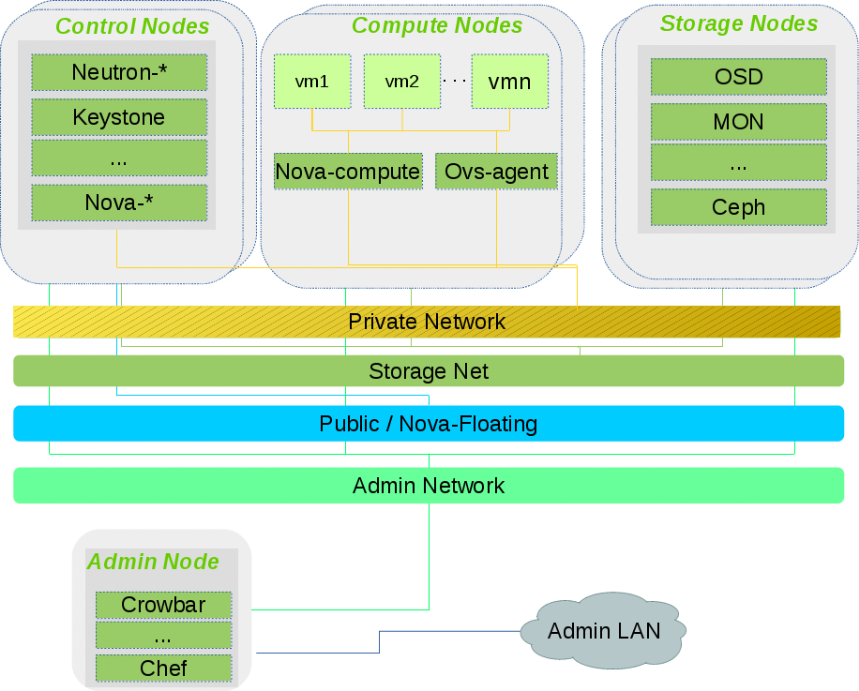

Controller Node serves as the front-end for API calls to the compute, image, volume, network, and orchestration services. In addition to that, the node hosts multiple neutron plug-ins and agents. The node also aggregates all routed traffic within tenant networks and between tenant networks and the outside world.

Compute Node creates virtual machines on demand, using the chosen hypervisor, for customer applications.

Administrator Node automates the installation processes via Crowbar using pre-defined cookbooks for configuring and deploying Control, Compute, and Network Nodes.

The Administrator Node requires a dedicated local and isolated DHCP/PXE environment controlled by Crowbar.

Optional storage access. cinder is used for block storage access exposed through iSCSI or NFS (FC connection is not supported in the current release of OpenStack). This could also be a dedicated Storage Node.

Implementing Ceph as cloud storage requires a high-performance network. The Storage Net in the following network topology allows the respective OpenStack services to connect to the public network of the external Ceph storage cluster. It is recommended to run a Ceph storage cluster with two networks: a public network and a cluster network. To support two networks, each Ceph node must have more than one NIC.

Network mode. Which mode to choose for a PoC deployment depends on the High Availability (HA) requirements. The team network mode is required for an HA setup of SUSE OpenStack Cloud.

1.7.1 Network Architecture: Pre-Defined VLANs #

VLAN support for the administrator network must be handled at the switch level. The following networks are predefined when setting up SUSE OpenStack Cloud. The listed default IP addresses can be changed using the YaST Crowbar module. It is also possible to customize the network setup.

The default network proposal described below allows a maximum of 80 Compute Nodes, 61 floating IP addresses, and 204 addresses in the nova_fixed network. To overcome these limitations, you need to reconfigure the network setup manually with appropriate address ranges.
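When planning larger address ranges, it helps to compute how many addresses a range actually contains. The following Python sketch counts the addresses in three of the default ranges using the standard `ipaddress` module. The mapping of each limit to a specific range (for example, Compute Nodes to the administrator host range) is an assumption made for illustration, and `range_size` is a hypothetical helper. The raw counts are upper bounds: for the floating and nova_fixed ranges they come out slightly higher than the limits quoted above.

```python
from ipaddress import IPv4Address


def range_size(start, end):
    """Number of addresses in an inclusive start-end range."""
    return int(IPv4Address(end)) - int(IPv4Address(start)) + 1


# Default ranges taken from the layout tables in this document
DEFAULT_RANGES = {
    "admin host": ("192.168.124.81", "192.168.124.160"),
    "nova_fixed dhcp": ("192.168.123.50", "192.168.123.254"),
    "floating host": ("192.168.126.129", "192.168.126.191"),
}

sizes = {name: range_size(start, end) for name, (start, end) in DEFAULT_RANGES.items()}
for name, size in sizes.items():
    print(f"{name}: {size} addresses")
```

The same helper can be applied to a proposed custom range to confirm that it covers the number of nodes or instances planned for the PoC.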

- Administrator Network (192.168.124/24)

A private network to access the Administration Server and all nodes for administration purposes. The default setup lets you also access the Baseboard Management Controller (BMC) data via Intelligent Platform Management Interface (IPMI) from this network. If required, BMC access can be swapped to a separate network.

You have the following options for controlling access to this network:

Do not allow access from the outside and keep the administrator network completely separated

Allow access to the administration server from a single network (for example, your company's administration network) via the “bastion network” option configured on an additional network card with a fixed IP address

Allow access from one or more networks via a gateway

- Storage Network (192.168.125/24)

Private SUSE OpenStack Cloud internal virtual network. This network is used by Ceph and swift only. It should not be accessed by users.

- Private Network (nova-fixed, 192.168.123/24)

Private SUSE OpenStack Cloud internal virtual network. This network is used for communication between instances and provides them with access to the outside world. SUSE OpenStack Cloud automatically provides the required gateway.

- Public Network (nova-floating, public, 192.168.126/24)

The only public network provided by SUSE OpenStack Cloud. On this network, you can access the OpenStack Dashboard and all instances (provided they are equipped with a floating IP). This network can only be accessed via a gateway that needs to be provided externally. All SUSE OpenStack Cloud users and administrators need to be able to access the public network.

- Software Defined Network (os_sdn, 192.168.130/24)

Private SUSE OpenStack Cloud internal virtual network. This network is used when neutron is configured to use openvswitch with GRE tunneling for the virtual networks. It should not be accessed by users.

SUSE OpenStack Cloud supports different network modes: single, dual, and team. Starting with SUSE OpenStack Cloud 6, the networking mode is applied to all nodes and the Administration Server. This means that all machines need to meet the hardware requirements for the chosen mode. The network mode can be configured using the YaST Crowbar module (see Chapter 7, Crowbar Setup). The network mode cannot be changed after the cloud is deployed.

More flexible network mode setups can be configured by editing the Crowbar network configuration files (see Section 7.5, “Custom Network Configuration” for more information). SUSE or a partner can assist you in creating a custom setup within the scope of a consulting services agreement. For more information on SUSE consulting, visit http://www.suse.com/consulting/.

Team network mode is required for an HA setup of SUSE OpenStack Cloud. If you are planning to move your cloud to an HA setup later, deploy SUSE OpenStack Cloud with team network mode right from the beginning. Migration to an HA setup is not supported.
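If the predefined subnets are customized, a quick sanity check is to confirm that they remain disjoint, as the defaults are. The following Python sketch does this with the standard `ipaddress` module. It assumes that customized networks should not overlap, which the defaults satisfy but which this document does not state as a formal rule; `find_overlaps` and the widened storage subnet are hypothetical examples.

```python
from ipaddress import ip_network
from itertools import combinations

# Subnets of the predefined networks as listed in this section
DEFAULT_SUBNETS = {
    "admin": "192.168.124.0/24",
    "storage": "192.168.125.0/24",
    "nova_fixed": "192.168.123.0/24",
    "public": "192.168.126.0/24",
    "os_sdn": "192.168.130.0/24",
}


def find_overlaps(subnets):
    """Return pairs of network names (sorted by name) whose subnets overlap."""
    parsed = {name: ip_network(cidr) for name, cidr in subnets.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(parsed), 2)
        if parsed[a].overlaps(parsed[b])
    ]


default_overlaps = find_overlaps(DEFAULT_SUBNETS)

# Hypothetical customization that widens the storage network too far
custom = dict(DEFAULT_SUBNETS, storage="192.168.124.0/23")
custom_overlaps = find_overlaps(custom)

print(default_overlaps)
print(custom_overlaps)
```

Since network settings cannot be changed after deployment without reinstalling the cloud, a check like this belongs in the planning phase, before the first node is installed.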

1.8 Services Architecture #

SUSE OpenStack Cloud is based on SUSE Linux Enterprise Server 12 SP4, OpenStack, Crowbar and Chef. SUSE Linux Enterprise Server is used as the underlying operating system for all infrastructure nodes. Crowbar and Chef are used to automatically deploy and manage the OpenStack nodes from a central Administration Server.