7 Crowbar Setup #

The YaST Crowbar module enables you to configure all networks within the cloud, to set up additional repositories, and to manage the Crowbar users.

This module should be launched before starting the SUSE OpenStack Cloud Crowbar installation. To start

this module, either run yast crowbar or › › .

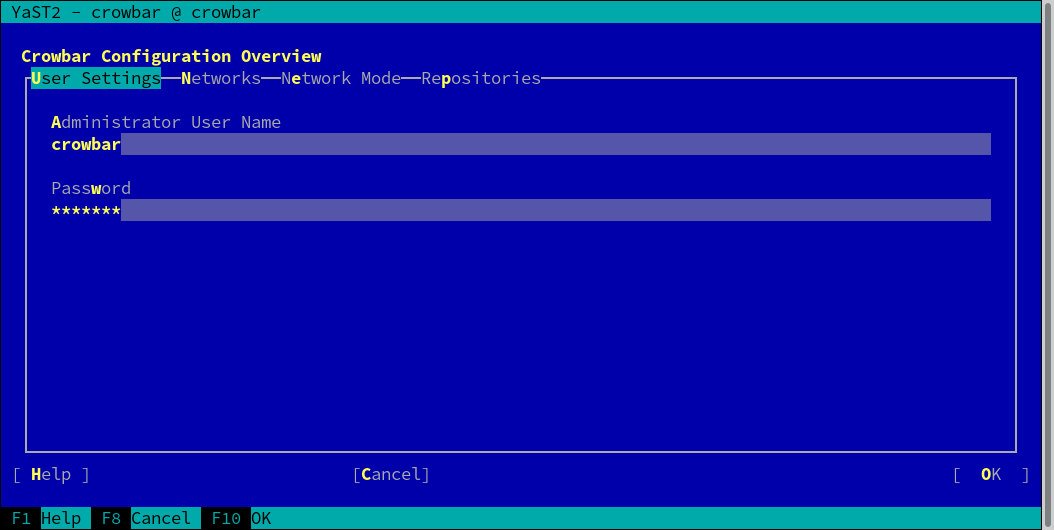

7.1 #

In this section, you can manage the administration user for the Crowbar Web interface. Use the entries to change the user name and the password. The preconfigured user is crowbar (password crowbar). The administration user configured here has no relation to any existing system user on the Administration Server.

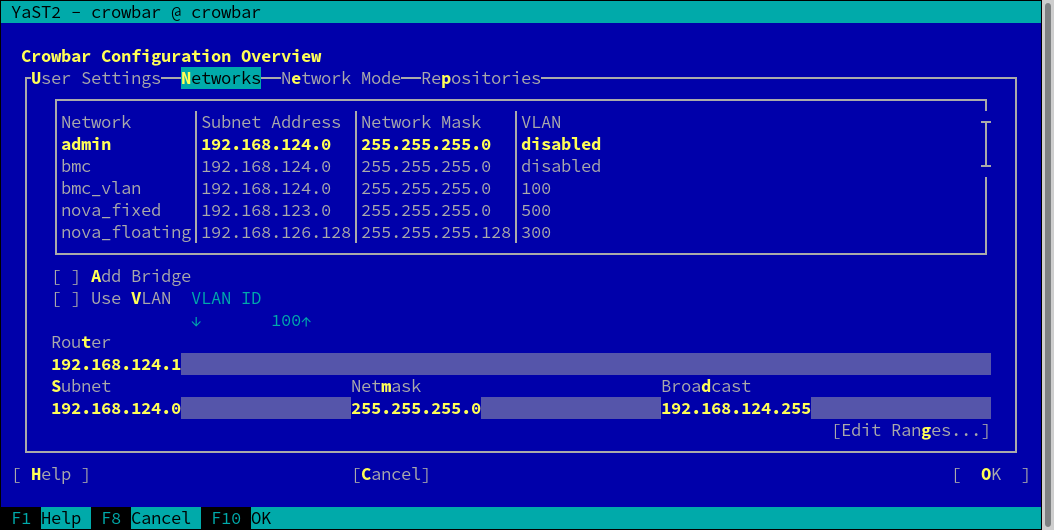

7.2 #

Use the tab to change the default network setup (described in Section 2.1, “Network”). Change the IP address assignment for each network under . You may also add a bridge () or a VLAN (, ) to a network. Only change the latter two settings if you really know what you require; we recommend sticking with the defaults.

After you have completed the SUSE OpenStack Cloud Crowbar installation, you cannot change the network setup. If you do need to change it, you must completely set up the Administration Server again.

As of SUSE OpenStack Cloud Crowbar 8, using a VLAN for the admin network is only supported on a native/untagged VLAN. If you need VLAN support for the admin network, it must be handled at switch level.

When changing the network configuration with YaST or by editing

/etc/crowbar/network.json, you can define VLAN

settings for each network. For the networks nova-fixed

and nova-floating, however, special rules apply:

nova-fixed: The setting will be ignored. However, VLANs will automatically be used if deploying neutron with VLAN support (using the drivers linuxbridge, openvswitch plus VLAN, or cisco_nexus). In this case, you need to specify a correct for this network.

nova-floating: When using a VLAN for

nova-floating (which is the default), the and settings for

and default to

the same.

You have the option of separating public and floating networks with a

custom configuration. Configure your own separate floating network (not as

a subnet of the public network), and give the floating network its own

router. For example, define nova-floating as part of an

external network with a custom bridge-name. When you are

using different networks and OpenVSwitch is configured, the pre-defined

bridge-name won't work.

Other, more flexible network mode setups can be configured by manually editing the Crowbar network configuration files. See Section 7.5, “Custom Network Configuration” for more information. SUSE or a partner can assist you in creating a custom setup within the scope of a consulting services agreement. See http://www.suse.com/consulting/ for more information on SUSE consulting.

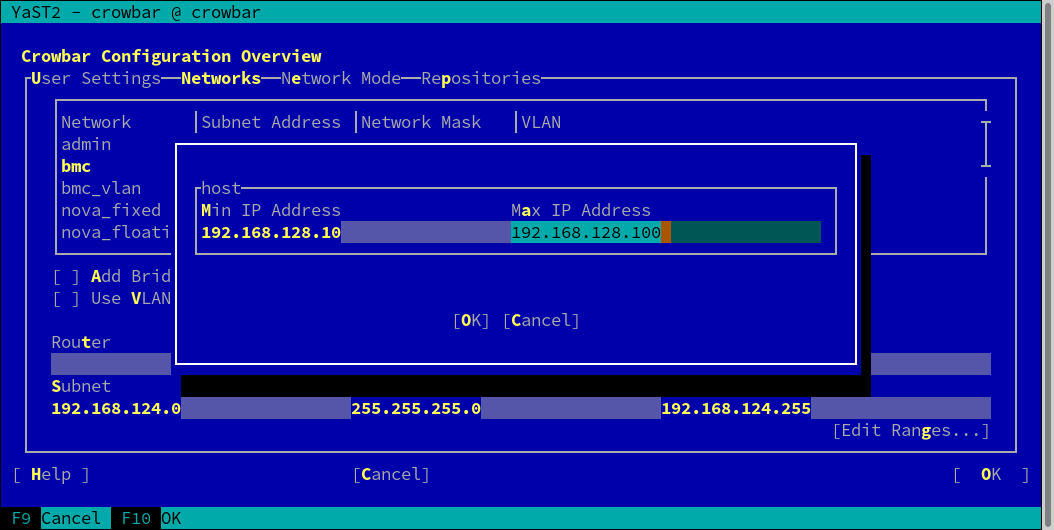

7.2.1 Separating the Admin and the BMC Network #

If you want to separate the admin and the BMC network, you must change the settings for the networks and . The is used to generate a VLAN tagged interface on the Administration Server that can access the network. The needs to be in the same ranges as , and needs to have enabled.

|

bmc |

bmc_vlan | |

|---|---|---|

|

Subnet |

| |

|

Netmask |

| |

|

Router |

| |

|

Broadcast |

| |

|

Host Range |

|

|

|

VLAN |

yes | |

|

VLAN ID |

100 | |

|

Bridge |

no | |

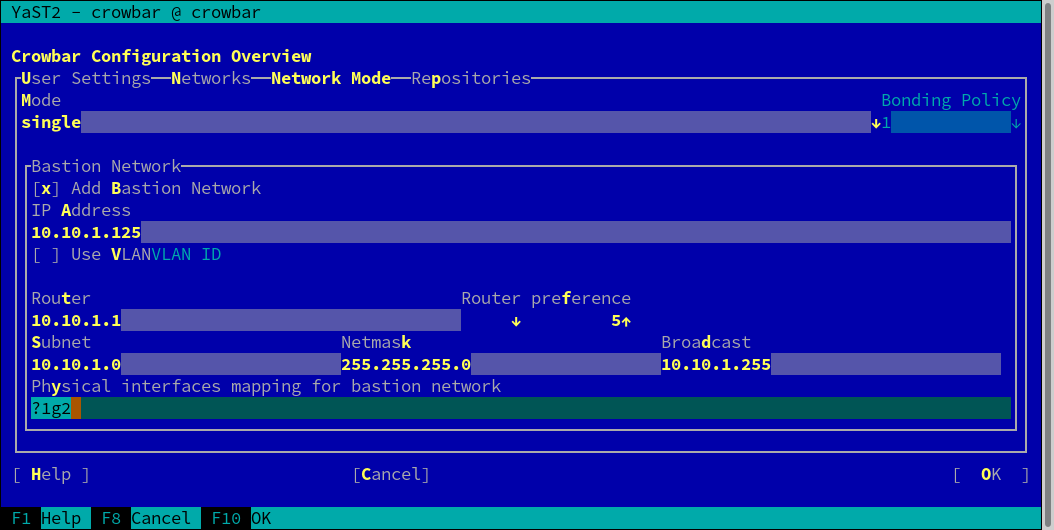

7.3 #

On the tab you can choose between , , and . In single mode, all traffic is handled by a single Ethernet card. Dual mode requires two Ethernet cards and separates traffic for private and public networks. See Section 2.1.2, “Network Modes” for details.

Team mode is similar to single mode, except that you combine several Ethernet cards to a “bond”. It is required for an HA setup of SUSE OpenStack Cloud. When choosing this mode, you also need to specify a . This option lets you define whether to focus on reliability (fault tolerance), performance (load balancing), or a combination of both. You can choose from the following modes:

- (balance-rr)

Default mode in SUSE OpenStack Cloud Crowbar. Packets are transmitted in round-robin fashion from the first to the last available interface. Provides fault tolerance and load balancing.

- (active-backup)

Only one network interface is active. If it fails, a different interface becomes active. This setting is the default for SUSE OpenStack Cloud. Provides fault tolerance.

- (balance-xor)

Traffic is split between all available interfaces based on the following policy:

[(source MAC address XOR'd with destination MAC address XOR packet type ID) modulo slave count]

Requires support from the switch. Provides fault tolerance and load balancing.

- (broadcast)

All traffic is broadcast on all interfaces. Requires support from the switch. Provides fault tolerance.

- (802.3ad)

Aggregates interfaces into groups that share the same speed and duplex settings. Requires ethtool support in the interface drivers, and a switch that supports and is configured for IEEE 802.3ad Dynamic link aggregation. Provides fault tolerance and load balancing.

- (balance-tlb)

Adaptive transmit load balancing. Requires ethtool support in the interface drivers but no switch support. Provides fault tolerance and load balancing.

- (balance-alb)

Adaptive load balancing. Requires ethtool support in the interface drivers but no switch support. Provides fault tolerance and load balancing.

For a more detailed description of the modes, see https://www.kernel.org/doc/Documentation/networking/bonding.txt.
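The teaming mode in network.json is given as a number, while the list above uses mode names. The following minimal sketch maps between the two. The numbering is taken from the Linux kernel bonding documentation linked above, not from this chapter, and the helper name mode_number is hypothetical.

```python
# Numeric bonding modes as defined in the Linux kernel bonding documentation.
BONDING_MODES = {
    0: "balance-rr",
    1: "active-backup",
    2: "balance-xor",
    3: "broadcast",
    4: "802.3ad",
    5: "balance-tlb",
    6: "balance-alb",
}


def mode_number(name: str) -> int:
    """Return the numeric bonding mode for a mode name (hypothetical helper)."""
    for number, mode_name in BONDING_MODES.items():
        if mode_name == name:
            return number
    raise ValueError(f"unknown bonding mode: {name!r}")


# The examples in this chapter use "teaming" : { "mode": 5 }.
print(BONDING_MODES[5])
print(mode_number("802.3ad"))
```

With this table, the value 5 used in the network.json examples of this chapter corresponds to balance-tlb.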

7.3.1 Setting Up a Bastion Network #

The tab of the YaST Crowbar module also lets you set up a Bastion network. As outlined in Section 2.1, “Network”, one way to access the Administration Server from a defined external network is via a Bastion network and a second network card (as opposed to providing an external gateway).

To set up the Bastion network, you need to have a static IP address for the Administration Server from the external network. The example configuration used below assumes that the external network from which to access the admin network has the following addresses. Adjust them according to your needs.

|

Subnet |

|

|

Netmask |

|

|

Broadcast |

|

|

Gateway |

|

|

Static Administration Server address |

|

In addition to the values above, you need to enter the . With this value you specify the Ethernet card

that is used for the bastion network. See

Section 7.5.5, “Network Conduits” for details on the

syntax. The default value ?1g2 matches the second

interface (“eth1”) of the system.

After you have completed the SUSE OpenStack Cloud Crowbar installation, you cannot change the network setup. If you do need to change it, you must completely set up the Administration Server again.

The example configuration from above allows access to the SUSE OpenStack Cloud nodes

from within the bastion network. If you want to

access nodes from outside the bastion network, make the router for the

bastion network the default router for the Administration Server. This is achieved by

setting the value for the bastion network's entry to a lower value than the corresponding entry

for the admin network. By default no router preference is set for the

Administration Server—in this case, set the preference for the bastion network

to 5.

If you use a Linux gateway between the outside and the bastion network, you also need to disable route verification (rp_filter) on the Administration Server. Do so by running the following command on the Administration Server:

echo 0 > /proc/sys/net/ipv4/conf/all/rp_filter

That command disables route verification for the current session, so the

setting will not survive a reboot. Make it permanent by editing

/etc/sysctl.conf and setting the value for

to 0.
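Keys in /etc/sysctl.conf conventionally mirror the path below /proc/sys, with the slashes replaced by periods. As a minimal sketch under that assumption, the following derives the key for the path used in the command above. The function name is hypothetical, and the sketch does not handle path components that themselves contain periods.

```python
from pathlib import PurePosixPath


def proc_path_to_sysctl_key(path: str) -> str:
    """Convert a path below /proc/sys into a dotted sysctl key."""
    relative = PurePosixPath(path).relative_to("/proc/sys")
    return ".".join(relative.parts)


key = proc_path_to_sysctl_key("/proc/sys/net/ipv4/conf/all/rp_filter")
# Line in the form expected by /etc/sysctl.conf:
print(f"{key} = 0")
```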

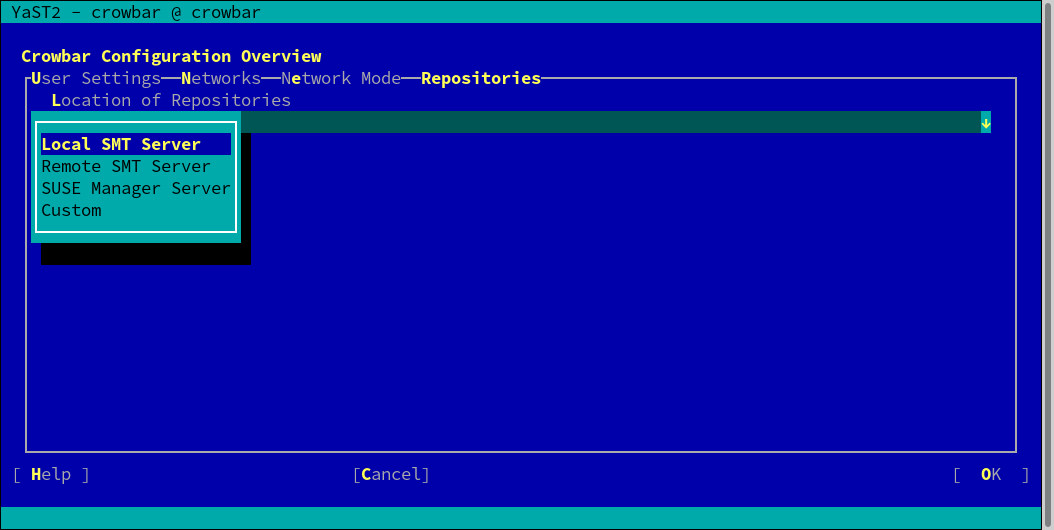

7.4 #

This dialog lets you announce the locations of the product, pool, and update repositories (see Chapter 5, Software Repository Setup for details). You can choose between four alternatives:

If you have an SMT server installed on the Administration Server as explained in Chapter 4, Installing and Setting Up an SMT Server on the Administration Server (Optional), choose this option. The repository details do not need to be provided as they will be configured automatically. This option will be applied by default if the repository configuration has not been changed manually.

If you use a remote SMT for all repositories, choose this option and provide the (in the form of http://smt.example.com). The repository details do not need to be provided; they will be configured automatically.

If you use a remote SUSE Manager server for all repositories, choose this option and provide the (in the form of http://manager.example.com).

- Custom

If you use different sources for your repositories or are using non-standard locations, choose this option and manually provide a location for each repository. This can either be a local directory (/srv/tftpboot/suse-12.4/x86_64/repos/SLES12-SP4-Pool/) or a remote location (http://manager.example.com/ks/dist/child/sles12-sp4-updates-x86_64/sles12-sp4-x86_64/). Activating ensures that you will be informed if a repository is not available during node deployment; otherwise errors will be silently ignored.

The dialog lets you add additional repositories. See How to make custom software repositories from an external server (for example a remote SMT or SUSE M..? for instructions.

Tip: Default Locations

If you have made the repositories available in the default locations on the Administration Server (see Table 5.5, “Default Repository Locations on the Administration Server” for a list), choose and leave the empty (default). The repositories will automatically be detected.

7.5 Custom Network Configuration #

To adjust the pre-defined network setup of SUSE OpenStack Cloud beyond the scope of changing IP address assignments (as described in Chapter 7, Crowbar Setup), modify the network barclamp template.

The Crowbar network barclamp provides two functions for the system. The first is a common role to instantiate network interfaces on the Crowbar managed systems. The other function is address pool management. While the addresses can be managed with the YaST Crowbar module, complex network setups require you to manually edit the network barclamp template file /etc/crowbar/network.json. This section explains the file in detail. Settings in this file are applied to all nodes in SUSE OpenStack Cloud. (See Section 7.5.11, “Matching Logical and Physical Interface Names with network-json-resolve” to learn how to verify that your network interface names are correct.)

After you have completed the SUSE OpenStack Cloud Crowbar installation, you cannot change the network setup. If you do need to change it, you must completely set up the Administration Server again.

The only exception to this rule is the interface map, which can be changed after setup. See Section 7.5.3, “Interface Map” for details.

7.5.1 Editing network.json #

The network.json file is located in

/etc/crowbar/. The template has the following general

structure:

{

"attributes" : {

"network" : {

"mode" : "VALUE",

"start_up_delay" : VALUE,

"teaming" : { "mode": VALUE },1

"enable_tx_offloading" : VALUE,

"enable_rx_offloading" : VALUE,

"interface_map"2 : [

...

],

"conduit_map"3 : [

...

],

"networks"4 : {

...

},

}

}

}

1. General attributes. Refer to Section 7.5.2, “Global Attributes” for details.

2. Interface map section. Defines the order in which the physical network interfaces are to be used. Refer to Section 7.5.3, “Interface Map” for details.

3. Network conduit section defining the network modes and the network interface usage. Refer to Section 7.5.5, “Network Conduits” for details.

4. Network definition section. Refer to Section 7.5.7, “Network Definitions” for details.

The order in which the entries in the network.json

file appear may differ from the one listed above. Use your editor's search

function to find certain entries.
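Because the order of entries may differ, it can be easier to inspect the file programmatically than to scroll through it. The following is a minimal sketch that reads the structure shown above and reports the type of each entry in the network section. The embedded sample is a shortened, hypothetical stand-in for a real network.json, and the function names are illustrative.

```python
import json

# Shortened stand-in for /etc/crowbar/network.json (hypothetical content).
SAMPLE = """
{
  "attributes": {
    "network": {
      "mode": "single",
      "start_up_delay": 30,
      "teaming": {"mode": 5},
      "enable_tx_offloading": true,
      "enable_rx_offloading": true,
      "interface_map": [],
      "conduit_map": [],
      "networks": {}
    }
  }
}
"""


def network_section(document: dict) -> dict:
    """Return the part of the document that holds all network settings."""
    return document["attributes"]["network"]


def summarize(document: dict) -> dict:
    """Map each entry of the network section to the name of its Python type."""
    return {key: type(value).__name__ for key, value in network_section(document).items()}


summary = summarize(json.loads(SAMPLE))
for key, kind in summary.items():
    print(f"{key}: {kind}")
```

To inspect a real file, the sample string could be replaced with the contents of /etc/crowbar/network.json, provided the file is strictly valid JSON.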

7.5.2 Global Attributes #

The most important options to define in the global attributes section are the default values for the network and bonding modes. The following global attributes exist:

{

"attributes" : {

"network" : {

"mode" : "single",1

"start_up_delay" : 30,2

"teaming" : { "mode": 5 },3

"enable_tx_offloading" : true, 4

"enable_rx_offloading" : true, 4

"interface_map" : [

...

],

"conduit_map" : [

...

],

"networks" : {

...

},

}

}

}

1. Network mode. Defines the configuration name (or name space) to be used from the conduit_map (see Section 7.5.5, “Network Conduits”). Your choices are single, dual, or team.

2. Time (in seconds) the Chef-client waits for the network interfaces to come online before timing out.

3. Default bonding mode. For a list of available modes, see Section 7.3, “”.

4. Turn on/off TX and RX checksum offloading. If set to

Checksum offloading is set to Important: Change of the Default Value

Starting with SUSE OpenStack Cloud Crowbar, the default value for TX and RX checksum

offloading changed from

To check which defaults a network driver uses, run

Note that if the output shows a value marked as

|

7.5.3 Interface Map #

By default, physical network interfaces are used in the order they appear

under /sys/class/net/. If you want to apply a

different order, you need to create an interface map where you can specify

a custom order of the bus IDs. Interface maps are created for specific

hardware configurations and are applied to all machines matching this

configuration.

{

"attributes" : {

"network" : {

"mode" : "single",

"start_up_delay" : 30,

"teaming" : { "mode": 5 },

"enable_tx_offloading" : true ,

"enable_rx_offloading" : true ,

"interface_map" : [

{

"pattern" : "PowerEdge R610"1,

"serial_number" : "0x02159F8E"2,

"bus_order" : [3

"0000:00/0000:00:01",

"0000:00/0000:00:03"

]

}

...

],

"conduit_map" : [

...

],

"networks" : {

...

},

}

}

}

1. Hardware specific identifier. This identifier can be obtained by running the command dmidecode -s system-product-name on the machine to which the map should be applied (see Section 7.5.4, “Interface Map Example”).

2. Additional hardware specific identifier. This identifier can be used in case two machines have the same value for pattern, but different interface maps are needed. Specifying this parameter is optional (it is not included in the default network.json file).

3. Bus IDs of the interfaces. The order in which they are listed here defines the order in which Chef addresses the interfaces. The IDs can be obtained by listing the contents of /sys/class/net/.

The physical interface used to boot the node via PXE must always be listed first.

Contrary to all other sections in network.json, you

can change interface maps after completing the SUSE OpenStack Cloud Crowbar installation. However, nodes

that are already deployed and affected by these changes must be deployed

again. Therefore, we do not recommend making changes to the interface map

that affect active nodes.

If you change the interface mappings after completing the SUSE OpenStack Cloud Crowbar installation you

must not make your changes by editing

network.json. You must rather use the Crowbar Web

interface and open › › › . Activate your changes by clicking

.

7.5.4 Interface Map Example #

Get the machine identifier by running the following command on the machine to which the map should be applied:

~ # dmidecode -s system-product-name
AS 2003R

The resulting string needs to be entered on the pattern line of the map. It is interpreted as a Ruby regular expression (see http://www.ruby-doc.org/core-2.0/Regexp.html for a reference). Unless the pattern starts with ^ and ends with $, a substring match is performed against the name returned from the above command.

List the interface devices in /sys/class/net to get the current order and the bus ID of each interface:

~ # ls -lgG /sys/class/net/ | grep eth
lrwxrwxrwx 1 0 Jun 19 08:43 eth0 -> ../../devices/pci0000:00/0000:00:1c.0/0000:09:00.0/net/eth0
lrwxrwxrwx 1 0 Jun 19 08:43 eth1 -> ../../devices/pci0000:00/0000:00:1c.0/0000:09:00.1/net/eth1
lrwxrwxrwx 1 0 Jun 19 08:43 eth2 -> ../../devices/pci0000:00/0000:00:1c.0/0000:09:00.2/net/eth2
lrwxrwxrwx 1 0 Jun 19 08:43 eth3 -> ../../devices/pci0000:00/0000:00:1c.0/0000:09:00.3/net/eth3

The bus ID is included in the path of the link target. It is the following string:

../../devices/pciBUS ID/net/eth0

Create an interface map with the bus IDs listed in the order the interfaces should be used. Keep in mind that the interface from which the node is booted using PXE must be listed first. In the following example the default interface order has been changed to eth0, eth2, eth1, and eth3.

{
  "attributes" : {
    "network" : {
      "mode" : "single",
      "start_up_delay" : 30,
      "teaming" : { "mode": 5 },
      "enable_tx_offloading" : true,
      "enable_rx_offloading" : true,
      "interface_map" : [
        {
          "pattern" : "AS 2003R",
          "bus_order" : [
            "0000:00/0000:00:1c.0/0000:09:00.0",
            "0000:00/0000:00:1c.0/0000:09:00.2",
            "0000:00/0000:00:1c.0/0000:09:00.1",
            "0000:00/0000:00:1c.0/0000:09:00.3"
          ]
        }
        ...
      ],
      "conduit_map" : [
        ...
      ],
      "networks" : {
        ...
      },
    }
  }
}
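The two lookups in this example can be sketched in a few lines: extracting the bus ID from a link target, and finding the interface map entry whose pattern matches a product name. This is an illustration only, not Crowbar's implementation. The function names are hypothetical, Python's re module stands in for Ruby regular expressions (equivalent for the simple patterns used here), and the optional serial_number field is ignored.

```python
import re


def bus_id_from_link(target: str) -> str:
    """Extract the bus ID from a /sys/class/net symlink target."""
    match = re.search(r"/devices/pci(.+)/net/[^/]+$", target)
    if match is None:
        raise ValueError(f"no PCI bus ID found in {target!r}")
    return match.group(1)


def bus_order_for(product_name: str, interface_map: list):
    """Return the bus_order of the first entry whose pattern matches, else None."""
    for entry in interface_map:
        # re.search gives a substring match unless the pattern is anchored.
        if re.search(entry["pattern"], product_name):
            return entry["bus_order"]
    return None


INTERFACE_MAP = [
    {
        "pattern": "AS 2003R",
        "bus_order": [
            "0000:00/0000:00:1c.0/0000:09:00.0",
            "0000:00/0000:00:1c.0/0000:09:00.2",
            "0000:00/0000:00:1c.0/0000:09:00.1",
            "0000:00/0000:00:1c.0/0000:09:00.3",
        ],
    }
]

link = "../../devices/pci0000:00/0000:00:1c.0/0000:09:00.2/net/eth2"
print(bus_id_from_link(link))
print(bus_order_for("AS 2003R", INTERFACE_MAP))
```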

7.5.5 Network Conduits #

Network conduits define mappings for logical interfaces: one or more physical interfaces bonded together. Each conduit can be identified by a unique name, the pattern. This pattern is also called “Network Mode” in this document.

Three network modes are available:

| single: Only use the first interface for all networks. VLANs will be added on top of this single interface. |

| dual: Use the first interface as the admin interface and the second one for all other networks. VLANs will be added on top of the second interface. |

| team: Bond the first two or more interfaces. VLANs will be added on top of the bond. |

See Section 2.1.2, “Network Modes” for detailed descriptions.

Apart from these modes, a fallback mode ".*/.*/.*" is also pre-defined. It is applied in case no other mode matches the one specified in the global attributes section. These modes can be adjusted according to your needs. It is also possible to customize modes, but mode names must be either single, dual, or team.

The mode name that is specified with mode in the global attributes section is deployed on all nodes in SUSE OpenStack Cloud. It is not possible to use a different mode for a certain node. However, you can define “sub” modes with the same name that only match the following machines:

Machines with a certain number of physical network interfaces.

Machines with certain roles (all Compute Nodes for example).

{

"attributes" : {

"network" : {

"mode" : "single",

"start_up_delay" : 30,

"teaming" : { "mode": 5 },

"enable_tx_offloading" : true,

"enable_rx_offloading" : true,

"interface_map" : [

...

],

"conduit_map" : [

{

"pattern" : "single/.*/.*"1,

"conduit_list" : {

"intf2"2 : {

"if_list" : ["1g1","1g2"]3,

"team_mode" : 54

},

"intf1" : {

"if_list" : ["1g1","1g2"],

"team_mode" : 5

},

"intf0" : {

"if_list" : ["1g1","1g2"],

"team_mode" : 5

}

}

},

...

],

"networks" : {

...

},

}

}

}

1. This line contains the pattern definition, which has the form MODE_NAME/NUMBER_OF_NICS/NODE_ROLE. Each field in the pattern is interpreted as a Ruby regular expression (see http://www.ruby-doc.org/core-2.0/Regexp.html for a reference).

2. The logical network interface definition. Each conduit list must contain at least one such definition. This line defines the name of the logical interface. This identifier must be unique and will also be referenced in the network definition section. We recommend sticking with the naming scheme used in the example above (intf0, intf1, intf2).

3. This line maps one or more physical interfaces to the logical interface. Each entry represents a physical interface. If more than one entry exists, the interfaces are bonded, either with the mode defined in the team_mode attribute of this conduit section or, if that is not present, with the globally defined teaming attribute. The physical interface definition needs to fit the following pattern: [Quantifier][Speed][Order]

Examples that appear in this document are 1g1, 1g2, and ?1g2.

4. The bonding mode to be used for this logical interface. Overrides the default set in the global attributes section for this interface. See https://www.kernel.org/doc/Documentation/networking/bonding.txt for a list of available modes. This option is optional. If it is not specified here, the global setting applies.
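The [Quantifier][Speed][Order] form of a physical interface definition can be taken apart with a small parser, sketched below. The accepted quantifier characters (+, -, ?) and the speed suffixes (m, g) are assumptions made for this sketch, since this section does not list the allowed values. The class and function names are hypothetical.

```python
import re
from typing import NamedTuple


class InterfaceSpec(NamedTuple):
    quantifier: str  # "" when no quantifier is given
    speed: str       # for example "1g"
    order: int       # 1-based position among interfaces of that speed


# Assumed grammar: optional quantifier, number plus m/g suffix, then the order.
SPEC_RE = re.compile(r"^([+\-?]?)(\d+[mg])(\d+)$")


def parse_interface_spec(spec: str) -> InterfaceSpec:
    """Split a definition such as "?1g2" into quantifier, speed, and order."""
    match = SPEC_RE.match(spec)
    if match is None:
        raise ValueError(f"not a valid interface definition: {spec!r}")
    quantifier, speed, order = match.groups()
    return InterfaceSpec(quantifier, speed, int(order))


for spec in ("1g1", "1g2", "?1g2"):
    print(spec, parse_interface_spec(spec))
```

For the default bastion value ?1g2, the sketch yields order 2, which corresponds to the second interface (“eth1”) mentioned in Section 7.3.1.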

7.5.6 Network Conduit Examples #

The following example defines a single network mode for nodes with 6, 3, and an arbitrary number of network interfaces. Since the first mode that matches is applied, it is important that the specific modes (for 6 and 3 NICs) are listed before the general mode:

{

"attributes" : {

"network" : {

"mode" : "single",

"start_up_delay" : 30,

"teaming" : { "mode": 5 },

"enable_tx_offloading" : true,

"enable_rx_offloading" : true,

"interface_map" : [

...

],

"conduit_map" : [

{

"pattern" : "single/6/.*",

"conduit_list" : {

...

}

},

{

"pattern" : "single/3/.*",

"conduit_list" : {

...

}

},

{

"pattern" : "single/.*/.*",

"conduit_list" : {

...

}

},

...

],

"networks" : {

...

},

}

}

}

The following example defines network modes for Compute Nodes with four

physical interfaces, the Administration Server (role crowbar), the

Control Node, and a general mode applying to all other nodes.

{

"attributes" : {

"network" : {

"mode" : "team",

"start_up_delay" : 30,

"teaming" : { "mode": 5 },

"enable_tx_offloading" : true,

"enable_rx_offloading" : true,

"interface_map" : [

...

],

"conduit_map" : [

{

"pattern" : "team/4/nova-compute",

"conduit_list" : {

...

}

},

{

"pattern" : "team/.*/^crowbar$",

"conduit_list" : {

...

}

},

{

"pattern" : "team/.*/nova-controller",

"conduit_list" : {

...

}

},

{

"pattern" : "team/.*/.*",

"conduit_list" : {

...

}

},

...

],

"networks" : {

...

},

}

}

}
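The first-match rule used in these examples can be sketched as follows. This is a simplified model, not Crowbar's actual code: it assumes that each of the three pattern fields is applied as an unanchored regular expression, that a node matches if any one of its roles matches the role field, and that Python's re module behaves like Ruby's for these simple patterns. The function names and the sample role lists are hypothetical.

```python
import re


def pattern_matches(pattern: str, mode: str, nic_count: int, roles: list) -> bool:
    """Check a MODE_NAME/NUMBER_OF_NICS/NODE_ROLE pattern against one node."""
    mode_re, nics_re, role_re = pattern.split("/", 2)
    return (
        re.search(mode_re, mode) is not None
        and re.search(nics_re, str(nic_count)) is not None
        and any(re.search(role_re, role) for role in roles)
    )


def select_conduit(conduit_map: list, mode: str, nic_count: int, roles: list):
    """Return the first conduit_map entry that matches, or None."""
    for entry in conduit_map:
        if pattern_matches(entry["pattern"], mode, nic_count, roles):
            return entry
    return None


CONDUIT_MAP = [
    {"pattern": "team/4/nova-compute", "conduit_list": {}},
    {"pattern": "team/.*/^crowbar$", "conduit_list": {}},
    {"pattern": "team/.*/nova-controller", "conduit_list": {}},
    {"pattern": "team/.*/.*", "conduit_list": {}},
]

compute = select_conduit(CONDUIT_MAP, "team", 4, ["nova-compute-kvm"])
admin = select_conduit(CONDUIT_MAP, "team", 2, ["crowbar"])
storage = select_conduit(CONDUIT_MAP, "team", 2, ["swift-storage"])
print(compute["pattern"], admin["pattern"], storage["pattern"])
```

Because the general pattern team/.*/.* matches every node in team mode, moving it to the top of the list would shadow all other entries in this model.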

The following values for node_role can be used:

- ceilometer-central
- ceilometer-server
- cinder-controller
- cinder-volume
- crowbar
- database-server
- glance-server
- heat-server
- horizon-server
- keystone-server
- manila-server
- manila-share
- monasca-agent
- monasca-log-agent
- monasca-master
- monasca-server
- neutron-network
- neutron-server
- nova-controller
- nova-compute-*
- rabbitmq-server
- swift-dispersion
- swift-proxy
- swift-ring-compute
- swift-storage

The role crowbar refers to the Administration Server.

crowbar and Pattern Matching

As explained in Example 7.4, “Network Modes for Certain Machines”, each node has an additional, unique role named crowbar-FULLY QUALIFIED HOSTNAME.

All three elements of the value of the pattern line are read as regular expressions. Therefore using the pattern mode-name/.*/crowbar will match all nodes in your installation. crowbar is considered a substring and therefore will also match all strings crowbar-FULLY QUALIFIED HOSTNAME. As a consequence, all subsequent map definitions will be ignored. To make sure this does not happen, you must use the proper regular expression ^crowbar$: mode-name/.*/^crowbar$.

Apart from the roles listed under Example 7.3, “Network Modes for Certain Roles”, each node in SUSE OpenStack Cloud has a unique role, which lets you create modes matching exactly one node. Each node can be addressed by its unique role name in the pattern entry of the conduit_map.

The role name depends on the fully qualified host name (FQHN) of the respective machine. The role is named after the scheme crowbar-FULLY QUALIFIED HOSTNAME where colons are replaced with dashes, and periods are replaced with underscores. The FQHN depends on whether the respective node was booted via PXE or not.

To determine the host name of a node, log in to the Crowbar Web interface and go to › . Click the respective node name to get detailed data for the node. The FQHN is listed first under .

- Role Names for Nodes Booted via PXE

The FULLY QUALIFIED HOSTNAME for nodes booted via PXE is composed of the following: a prefix 'd', the MAC address of the network interface used to boot the node via PXE, and the domain name as configured on the Administration Server. A machine with the fully qualified host name d1a-12-05-1e-35-49.cloud.example.com would get the following role name:

crowbar-d1a-12-05-1e-35-49_cloud_example_com

- Role Names for the Administration Server and Nodes Added Manually

The fully qualified hostnames of the Administration Server and all nodes added manually (as described in Section 11.3, “Converting Existing SUSE Linux Enterprise Server 12 SP4 Machines Into SUSE OpenStack Cloud Nodes”) are defined by the system administrator. They typically have the form hostname+domain, for example admin.cloud.example.com, which would result in the following role name:

crowbar-admin_cloud_example_com
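The naming scheme described above is mechanical, so a role name can be derived from a fully qualified host name with two replacements. The sketch below also shows why the unanchored pattern crowbar matches such a role while ^crowbar$ does not. The function name is hypothetical, and Python's re module is used in place of Ruby regular expressions.

```python
import re


def unique_role_name(fqhn: str) -> str:
    """Build the per-node role name: colons become dashes, periods underscores."""
    return "crowbar-" + fqhn.replace(":", "-").replace(".", "_")


pxe_role = unique_role_name("d1a-12-05-1e-35-49.cloud.example.com")
admin_role = unique_role_name("admin.cloud.example.com")
print(pxe_role)
print(admin_role)

# An unanchored pattern also matches the per-node roles ...
print(re.search("crowbar", pxe_role) is not None)
# ... while the anchored pattern only matches the plain role "crowbar".
print(re.search("^crowbar$", pxe_role) is not None)
print(re.search("^crowbar$", "crowbar") is not None)
```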

Network mode definitions for certain machines must be listed first in the conduit map. This prevents other, general rules that would also match from being applied.

{

"attributes" : {

"network" : {

"mode" : "dual",

"start_up_delay" : 30,

"teaming" : { "mode": 5 },

"enable_tx_offloading" : true,

"enable_rx_offloading" : true,

"interface_map" : [

...

],

"conduit_map" : [

{

"pattern" : "dual/.*/crowbar-d1a-12-05-1e-35-49_cloud_example_com",

"conduit_list" : {

...

}

},

...

],

"networks" : {

...

},

}

}

}

7.5.7 Network Definitions #

The network definitions contain IP address assignments, the bridge and VLAN setup, and settings for the router preference. Each network is also assigned to a logical interface defined in the network conduit section. The following example uses the admin network definition to explain the settings:

{

"attributes" : {

"network" : {

"mode" : "single",

"start_up_delay" : 30,

"teaming" : { "mode": 5 },

"enable_tx_offloading" : true,

"enable_rx_offloading" : true,

"interface_map" : [

...

],

"conduit_map" : [

...

],

"networks" : {

"admin" : {

"conduit" : "intf0"1,

"add_bridge" : false2,

"use_vlan" : false3,

"vlan" : 1004,

"router_pref" : 105,

"subnet" : "192.168.124.0"6,

"netmask" : "255.255.255.0",

"router" : "192.168.124.1",

"broadcast" : "192.168.124.255",

"ranges" : {

"admin" : {

"start" : "192.168.124.10",

"end" : "192.168.124.11"

},

"switch" : {

"start" : "192.168.124.241",

"end" : "192.168.124.250"

},

"dhcp" : {

"start" : "192.168.124.21",

"end" : "192.168.124.80"

},

"host" : {

"start" : "192.168.124.81",

"end" : "192.168.124.160"

}

}

},

"nova_floating": {

"add_ovs_bridge": false7,

"bridge_name": "br-public"8,

....

}

...

},

}

}

}

1. Logical interface assignment. The interface must be defined in the network conduit section and must be part of the active network mode.

2. Bridge setup. Do not touch. Should be false.

3. Create a VLAN for this network. Changing this setting is not recommended.

4. ID of the VLAN. Change this to the VLAN ID you intend to use for the specific network, if required. This setting can also be changed using the YaST Crowbar interface. The VLAN ID for the

5. Router preference, used to set the default route. On nodes hosting multiple networks the router with the lowest router_pref becomes the default gateway.

6. Network address assignments. These values can also be changed by using the YaST Crowbar interface.

7. Openvswitch virtual switch setup. This attribute is maintained by Crowbar on a per-node level and should not be changed manually.

8. Name of the openvswitch virtual switch. This attribute is maintained by Crowbar on a per-node level and should not be changed manually.
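Since the network setup cannot be changed after installation, it can help to sanity-check the address assignments of a network definition before applying it. The following minimal sketch uses the admin values from the example above and Python's ipaddress module. The checks themselves (broadcast matches the subnet, router and ranges lie inside the subnet, ranges do not overlap) are assumptions about what a consistent definition looks like, not rules stated by Crowbar, and the function name is hypothetical.

```python
import ipaddress

# Address settings of the admin network from the example above.
ADMIN_NETWORK = {
    "subnet": "192.168.124.0",
    "netmask": "255.255.255.0",
    "router": "192.168.124.1",
    "broadcast": "192.168.124.255",
    "ranges": {
        "admin": {"start": "192.168.124.10", "end": "192.168.124.11"},
        "switch": {"start": "192.168.124.241", "end": "192.168.124.250"},
        "dhcp": {"start": "192.168.124.21", "end": "192.168.124.80"},
        "host": {"start": "192.168.124.81", "end": "192.168.124.160"},
    },
}


def check_network(definition: dict) -> list:
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    network = ipaddress.ip_network(f"{definition['subnet']}/{definition['netmask']}")
    if ipaddress.ip_address(definition["broadcast"]) != network.broadcast_address:
        problems.append("broadcast does not match subnet and netmask")
    if "router" in definition and ipaddress.ip_address(definition["router"]) not in network:
        problems.append("router is outside the subnet")
    spans = []
    for name, bounds in definition["ranges"].items():
        start = ipaddress.ip_address(bounds["start"])
        end = ipaddress.ip_address(bounds["end"])
        if start > end:
            problems.append(f"range {name}: start is after end")
        if start not in network or end not in network:
            problems.append(f"range {name}: outside the subnet")
        spans.append((start, end, name))
    spans.sort()
    # After sorting by start address, only neighbouring ranges can overlap.
    for (_, prev_end, prev_name), (next_start, _, next_name) in zip(spans, spans[1:]):
        if next_start <= prev_end:
            problems.append(f"ranges {prev_name} and {next_name} overlap")
    return problems


print(check_network(ADMIN_NETWORK))
```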

As of SUSE OpenStack Cloud Crowbar 8, using a VLAN for the admin network is only supported on a native/untagged VLAN. If you need VLAN support for the admin network, it must be handled at switch level.

When changing the network configuration with YaST or by editing

/etc/crowbar/network.json, you can define VLAN

settings for each network. For the networks nova-fixed

and nova-floating, however, special rules apply:

nova-fixed: The setting will be ignored. However, VLANs will automatically be used if deploying neutron with VLAN support (using the plugins linuxbridge, openvswitch plus VLAN, or cisco plus VLAN). In this case, you need to specify a correct for this network.

nova-floating: When using a VLAN for

nova-floating (which is the default), the and settings for

and default to

the same.

You have the option of separating public and floating networks with a

custom configuration. Configure your own separate floating network (not as

a subnet of the public network), and give the floating network its own

router. For example, define nova-floating as part of an

external network with a custom bridge-name. When you

are using different networks and OpenVSwitch is configured, the

pre-defined bridge-name won't work.

7.5.8 Providing Access to External Networks #

By default, external networks cannot be reached from nodes in the SUSE OpenStack Cloud.

To access external services such as a SUSE Manager server, an SMT server, or

a SAN, you need to make the external network(s) known to SUSE OpenStack Cloud. Do so by

adding a network definition for each external network to

/etc/crowbar/network.json. Refer to

Section 7.5, “Custom Network Configuration” for setup

instructions.

"external" : {

"add_bridge" : false,

"vlan" : XXX,

"ranges" : {

"host" : {

"start" : "192.168.150.1",

"end" : "192.168.150.254"

}

},

"broadcast" : "192.168.150.255",

"netmask" : "255.255.255.0",

"conduit" : "intf1",

"subnet" : "192.168.150.0",

"use_vlan" : true

}

Replace the value XXX for the VLAN by a value not used within the SUSE OpenStack Cloud network and not used by neutron. By default, the following VLANs are already used:

| VLAN ID | Used by |
|---|---|
| 100 | BMC VLAN (bmc_vlan) |
| 200 | Storage Network |
| 300 | Public Network (nova-floating, public) |
| 400 | Software-defined network (os_sdn) |
| 500 | Private Network (nova-fixed) |
| 501 - 2500 | neutron (value of nova-fixed plus 2000) |
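Choosing a free VLAN ID for an external network amounts to checking a candidate against the default assignments listed in the table. A minimal sketch, assuming the defaults have not been changed; the names are hypothetical, and the valid ID range of 1 to 4094 comes from IEEE 802.1Q rather than from this chapter.

```python
# Default VLAN assignments from the table above.
DEFAULT_VLANS = {
    100: "bmc_vlan",
    200: "storage",
    300: "nova-floating, public",
    400: "os_sdn",
    500: "nova-fixed",
}
# Range used by neutron by default: 501 to 2500 inclusive.
NEUTRON_RANGE = range(501, 2501)


def vlan_is_free(vlan_id: int) -> bool:
    """Return True if the ID collides with none of the default assignments."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError(f"VLAN ID out of range: {vlan_id}")
    return vlan_id not in DEFAULT_VLANS and vlan_id not in NEUTRON_RANGE


for candidate in (300, 1500, 3000):
    print(candidate, vlan_is_free(candidate))
```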

7.5.9 Split Public and Floating Networks on Different VLANs #

For custom setups, the public and floating networks can be separated.

Configure your own separate floating network (not as a subnet of the public

network), and give the floating network its own router. For example, define

nova-floating as part of an external network with a

custom bridge-name. When you are using different

networks and OpenVSwitch is configured, the pre-defined

bridge-name won't work.

7.5.10 Adjusting the Maximum Transmission Unit for the Admin and Storage Network #

If you need to adjust the Maximum Transmission Unit (MTU) for the Admin and/or Storage Network, adjust /etc/crowbar/network.json as shown below. You can also enable jumbo frames this way by setting the MTU to 9000. The following example enables jumbo frames for both the storage and the admin network by setting "mtu": 9000.

"admin": {

"add_bridge": false,

"broadcast": "192.168.124.255",

"conduit": "intf0",

"mtu": 9000,

"netmask": "255.255.255.0",

"ranges": {

"admin": {

"end": "192.168.124.11",

"start": "192.168.124.10"

},

"dhcp": {

"end": "192.168.124.80",

"start": "192.168.124.21"

},

"host": {

"end": "192.168.124.160",

"start": "192.168.124.81"

},

"switch": {

"end": "192.168.124.250",

"start": "192.168.124.241"

}

},

"router": "192.168.124.1",

"router_pref": 10,

"subnet": "192.168.124.0",

"use_vlan": false,

"vlan": 100

},

"storage": {

"add_bridge": false,

"broadcast": "192.168.125.255",

"conduit": "intf1",

"mtu": 9000,

"netmask": "255.255.255.0",

"ranges": {

"host": {

"end": "192.168.125.239",

"start": "192.168.125.10"

}

},

"subnet": "192.168.125.0",

"use_vlan": true,

"vlan": 200

},

After you have completed the SUSE OpenStack Cloud Crowbar installation, you cannot change the network setup, and you cannot change the MTU size.

7.5.11 Matching Logical and Physical Interface Names with network-json-resolve #

SUSE OpenStack Cloud includes a new script, network-json-resolve,

which matches the physical and logical names of network interfaces, and

prints them to stdout. Use this to verify that you are using the correct

interface names in network.json. Note that it will

only work if OpenStack nodes have been deployed. The following command

prints a help menu:

sudo /opt/dell/bin/network-json-resolve -h

network-json-resolve reads your deployed

network.json file. To use a different

network.json file, specify its full path with the

--network-json option. The following example shows how

to use a different network.json file, and prints the

interface mappings of a single node:

sudo /opt/dell/bin/network-json-resolve --network-json /opt/configs/network.json aliases compute1

eth0: 0g1, 1g1

eth1: 0g1, 1g1

You may query the mappings of a specific network interface:

sudo /opt/dell/bin/network-json-resolve aliases compute1 eth0

eth0: 0g1, 1g1

Print the bus ID order on a node. This returns no bus order defined for node if you did not configure any bus ID mappings:

sudo /opt/dell/bin/network-json-resolve bus_order compute1

Print the defined conduit map for the node:

sudo /opt/dell/bin/network-json-resolve conduit_map compute1

bastion: ?1g1

intf0: ?1g1

intf1: ?1g1

intf2: ?1g1

Resolve conduits to the standard interface names:

sudo /opt/dell/bin/network-json-resolve conduits compute1

bastion:

intf0: eth0

intf1: eth0

intf2: eth0

Resolve the configured networks on a node to the standard interface names:

sudo /opt/dell/bin/network-json-resolve networks compute1

bastion:

bmc_vlan: eth0

nova_fixed: eth0

nova_floating: eth0

os_sdn: eth0

public: eth0

storage: eth0

Resolve the specified network to the standard interface name(s):

sudo /opt/dell/bin/network-json-resolve networks compute1 public

public: eth0

Resolve a network.json-style interface to its standard interface name(s):

sudo /opt/dell/bin/network-json-resolve resolve compute1 1g1

eth0
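The name: value lines printed by the aliases, conduit_map, conduits, and networks subcommands are easy to post-process. The following minimal sketch parses such output into a dictionary. It assumes the output has exactly the shape of the samples shown above, and the function name is hypothetical. The sample text is copied from this section rather than captured from a live system.

```python
def parse_resolve_output(text: str) -> dict:
    """Parse "name: value, value" lines into a dict of name -> list of values."""
    result = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        name, _, values = line.partition(":")
        # Entries such as "bastion:" carry no values and map to an empty list.
        result[name.strip()] = [v.strip() for v in values.split(",") if v.strip()]
    return result


CONDUITS_OUTPUT = """\
bastion:
intf0: eth0
intf1: eth0
intf2: eth0
"""

ALIASES_OUTPUT = "eth0: 0g1, 1g1\n"

print(parse_resolve_output(CONDUITS_OUTPUT))
print(parse_resolve_output(ALIASES_OUTPUT))
```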