12 Deploying the OpenStack Services #

After the nodes are installed and configured you can start deploying the OpenStack components to finalize the installation. The components need to be deployed in a given order, because they depend on one another. The component for an HA setup is the only exception from this rule—it can be set up at any time. However, when deploying SUSE OpenStack Cloud Crowbar from scratch, we recommend deploying the proposal(s) first. Deployment for all components is done from the Crowbar Web interface through recipes, so-called “barclamps”. (See Section 12.24, “Roles and Services in SUSE OpenStack Cloud Crowbar” for a table of all roles and services, and how to start and stop them.)

The components controlling the cloud, including storage management and control components, need to be installed on the Control Node(s) (refer to Section 1.2, “The Control Node(s)” for more information). However, you may not use your Control Node(s) as a compute node or storage host for swift. Do not install the components and on the Control Node(s). These components must be installed on dedicated Storage Nodes and Compute Nodes.

When deploying an HA setup, the Control Nodes are replaced by one or more controller clusters consisting of at least two nodes, and three are recommended. We recommend setting up three separate clusters for data, services, and networking. See Section 2.6, “High Availability” for more information on requirements and recommendations for an HA setup.

The OpenStack components need to be deployed in the following order. For general instructions on how to edit and deploy barclamps, refer to Section 10.3, “Deploying Barclamp Proposals”. Any optional components that you elect to use must be installed in their correct order.

12.1 Deploying designate #

designate provides SUSE OpenStack Cloud Crowbar DNS as a Service (DNSaaS). It is used to

create and propagate zones and records over the network using pools of DNS

servers. Deployment defaults are in place, so not much is required to

configure designate. neutron needs additional settings for integration with

designate, which are also present in the [designate] section in neutron configuration.

The designate barclamp relies heavily on the DNS barclamp and expects it to be applied without any failures.

In order to deploy designate, at least one node is necessary in the DNS barclamp that is not the admin node. The admin node is not added to the public network. So another node is needed that can be attached to the public network and appear in the designate default pool.

We recommend that DNS services are running in a cluster in highly available deployments where Designate services are running in a cluster. For example, in a typical HA deployment where the controllers are deployed in a 3-node cluster, the DNS barclamp should be applied to all the controllers, in the same manner as Designate.

- designate-server role

Installs the designate server packages and configures the mini-dns (mdns) service required by designate.

- designate-worker role

Configures a designate worker on the selected nodes. designate uses the workers to distribute its workload.

designate Sink is an optional service and is not configured as part

of this barclamp.

designate uses pool(s) over which it can distribute zones and records. Pools can have varied configuration. Any misconfiguration can lead to information leakage.

The designate barclamp creates default Bind9 pool out of the box, which can be

modified later as needed. The default Bind9 pool configuration is created by Crowbar

on a node with designate-server role in

/etc/designate/pools.crowbar.yaml. You can copy

this file and edit it according to your requirements. Then provide this

configuration to designate using the command:

tux > designate-manage pool update --file /etc/designate/pools.crowbar.yaml

The dns_domain specified in neutron configuration in [designate] section

is the default Zone where DNS records for neutron resources are created via

neutron-designate integration. If this is desired, you have to create this zone

explicitly using the following command:

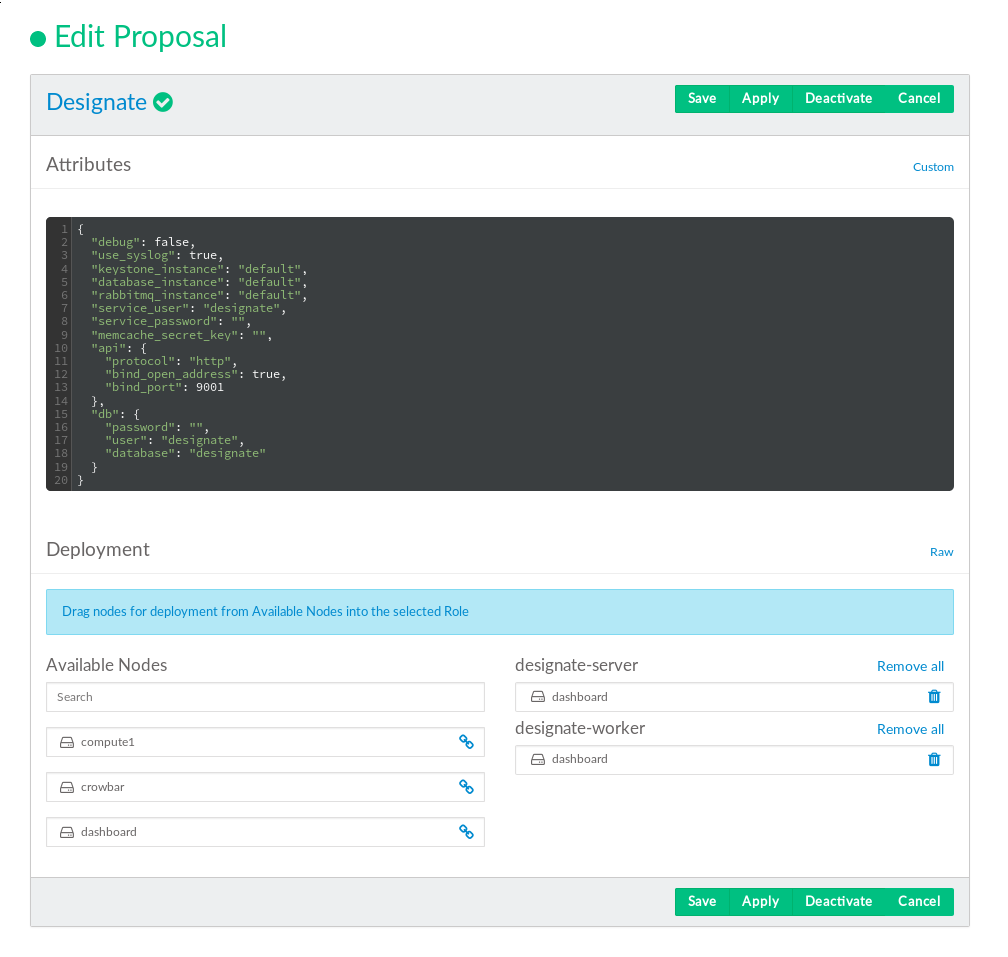

ardana > openstack zone create < email > < dns_domain >Editing the designate proposal:

12.1.1 Using PowerDNS Backend #

Designate uses Bind9 backend by default. It is also possible to use PowerDNS backend in addition to, or as an alternative, to Bind9 backend. To do so PowerDNS must be manually deployed as The designate barclamp currently does not provide any facility to automatically install and configure PowerDNS. This section outlines the steps to deploy PowerDNS backend.

If PowerDNS is already deployed, you may skip the Section 12.1.1.1, “Install PowerDNS” section and jump to the Section 12.1.1.2, “Configure Designate To Use PowerDNS Backend” section.

12.1.1.1 Install PowerDNS #

Follow these steps to install and configure PowerDNS on a Crowbar node. Keep in mind that PowerDNS must be deployed with MySQL backend.

We recommend that PowerDNS are running in a cluster in highly availability deployments where Designate services are running in a cluster. For example, in a typical HA deployment where the controllers are deployed in a 3-node cluster, PowerDNS should be running on all the controllers, in the same manner as Designate.

Install PowerDNS packages.

root #zypper install pdns pdns-backend-mysqlEdit

/etc/pdns/pdns.confand provide these options: (See https://doc.powerdns.com/authoritative/settings.html for a complete reference).- api

Set it to

yesto enable Web service Rest API.- api-key

Static Rest API access key. Use a secure random string here.

- launch

Must set to

gmysqlto use MySQL backend.- gmysql-host

Hostname (i.e. FQDN) or IP address of the MySQL server.

- gmysql-user

MySQL user which have full access to the PowerDNS database.

- gmysql-password

Password for the MySQL user.

- gmysql-dbname

MySQL database name for PowerDNS.

- local-port

Port number where PowerDNS is listening for upcoming requests.

- setgid

The group where the PowerDNS process is running under.

- setuid

The user where the PowerDNS process is running under.

- webserver

Must set to

yesto enable web service RestAPI.- webserver-address

Hostname (FQDN) or IP address of the PowerDNS web service.

- webserver-allow-from

List of IP addresses (IPv4 or IPv6) of the nodes that are permitted to talk to the PowerDNS web service. These must include the IP address of the Designate worker nodes.

For example:

api=yes api-key=Sfw234sDFw90z launch=gmysql gmysql-host=mysql.acme.com gmysql-user=powerdns gmysql-password=SuperSecured123 gmysql-dbname=powerdns local-port=54 setgid=pdns setuid=pdns webserver=yes webserver-address=192.168.124.83 webserver-allow-from=0.0.0.0/0,::/0

Login to MySQL from a Crowbar MySQL node and create the PowerDNS database and the user which has full access to the PowerDNS database. Remember, the database name, username, and password must match

gmysql-dbname,gmysql-user, andgmysql-passwordthat were specified above respectively.For example:

root #mysql Welcome to the MariaDB monitor. Commands end with ; or \g. Your MariaDB connection id is 20075 Server version: 10.2.29-MariaDB-log SUSE package Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others. Type 'help;' or '\h' for help. Type '\c' to clear the current input statement. MariaDB [(none)]> CREATE DATABASE powerdns; Query OK, 1 row affected (0.01 sec) MariaDB [(none)]> GRANT ALL ON powerdns.* TO 'powerdns'@'localhost' IDENTIFIED BY 'SuperSecured123'; Query OK, 0 rows affected (0.00 sec) MariaDB [(none)]> GRANT ALL ON powerdns.* TO 'powerdns'@'192.168.124.83' IDENTIFIED BY 'SuperSecured123'; Query OK, 0 rows affected, 1 warning (0.02 sec) MariaDB [(none)]> FLUSH PRIVILEGES; Query OK, 0 rows affected (0.01 sec) MariaDB [(none)]> exit ByeCreate a MySQL schema file, named

powerdns-schema.sql, with the following content:/* SQL statements to create tables in designate_pdns DB. Note: This file is taken as is from: https://raw.githubusercontent.com/openstack/designate/master/devstack/designate_plugins/backend-pdns4-mysql-db.sql */ CREATE TABLE domains ( id INT AUTO_INCREMENT, name VARCHAR(255) NOT NULL, master VARCHAR(128) DEFAULT NULL, last_check INT DEFAULT NULL, type VARCHAR(6) NOT NULL, notified_serial INT DEFAULT NULL, account VARCHAR(40) DEFAULT NULL, PRIMARY KEY (id) ) Engine=InnoDB; CREATE UNIQUE INDEX name_index ON domains(name); CREATE TABLE records ( id INT AUTO_INCREMENT, domain_id INT DEFAULT NULL, name VARCHAR(255) DEFAULT NULL, type VARCHAR(10) DEFAULT NULL, -- Changed to "TEXT", as VARCHAR(65000) is too big for most MySQL installs content TEXT DEFAULT NULL, ttl INT DEFAULT NULL, prio INT DEFAULT NULL, change_date INT DEFAULT NULL, disabled TINYINT(1) DEFAULT 0, ordername VARCHAR(255) BINARY DEFAULT NULL, auth TINYINT(1) DEFAULT 1, PRIMARY KEY (id) ) Engine=InnoDB; CREATE INDEX nametype_index ON records(name,type); CREATE INDEX domain_id ON records(domain_id); CREATE INDEX recordorder ON records (domain_id, ordername); CREATE TABLE supermasters ( ip VARCHAR(64) NOT NULL, nameserver VARCHAR(255) NOT NULL, account VARCHAR(40) NOT NULL, PRIMARY KEY (ip, nameserver) ) Engine=InnoDB; CREATE TABLE comments ( id INT AUTO_INCREMENT, domain_id INT NOT NULL, name VARCHAR(255) NOT NULL, type VARCHAR(10) NOT NULL, modified_at INT NOT NULL, account VARCHAR(40) NOT NULL, -- Changed to "TEXT", as VARCHAR(65000) is too big for most MySQL installs comment TEXT NOT NULL, PRIMARY KEY (id) ) Engine=InnoDB; CREATE INDEX comments_domain_id_idx ON comments (domain_id); CREATE INDEX comments_name_type_idx ON comments (name, type); CREATE INDEX comments_order_idx ON comments (domain_id, modified_at); CREATE TABLE domainmetadata ( id INT AUTO_INCREMENT, domain_id INT NOT NULL, kind VARCHAR(32), content TEXT, PRIMARY KEY (id) ) Engine=InnoDB; CREATE INDEX domainmetadata_idx ON domainmetadata (domain_id, kind); CREATE TABLE cryptokeys ( id INT AUTO_INCREMENT, domain_id INT NOT NULL, flags INT NOT NULL, active BOOL, content TEXT, PRIMARY KEY(id) ) Engine=InnoDB; CREATE INDEX domainidindex ON cryptokeys(domain_id); CREATE TABLE tsigkeys ( id INT AUTO_INCREMENT, name VARCHAR(255), algorithm VARCHAR(50), secret VARCHAR(255), PRIMARY KEY (id) ) Engine=InnoDB; CREATE UNIQUE INDEX namealgoindex ON tsigkeys(name, algorithm);

Create the PowerDNS schema for the database using

mysqlCLI. For example:root #mysql powerdns < powerdns-schema.sqlEnable

pdnssystemd service.root #systemctl enable pdnsroot #systemctl start pdnsIf

pdnsis successfully running, you should see the following logs by runningjournalctl -u pdnscommand.Feb 07 01:44:12 d52-54-77-77-01-01 systemd[1]: Started PowerDNS Authoritative Server. Feb 07 01:44:12 d52-54-77-77-01-01 pdns_server[21285]: Done launching threads, ready to distribute questions

12.1.1.2 Configure Designate To Use PowerDNS Backend #

Configure Designate to use PowerDNS backend by appending the PowerDNS

servers to /etc/designate/pools.crowbar.yaml file

on a Designate worker node.

If we are replacing Bind9 backend with PowerDNS backend, make sure to

remove the bind9 entries from

/etc/designate/pools.crowbar.yaml.

In HA deployment, there should be multiple PowerDNS entries.

Also, make sure the api_token matches the

api-key that was specified in the

/etc/pdns/pdns.conf file earlier.

Append the PowerDNS entries to the end of

/etc/designate/pools.crowbar.yaml. For example:

---

- name: default-bind

description: Default BIND9 Pool

id: 794ccc2c-d751-44fe-b57f-8894c9f5c842

attributes: {}

ns_records:

- hostname: public-d52-54-77-77-01-01.virtual.cloud.suse.de.

priority: 1

- hostname: public-d52-54-77-77-01-02.virtual.cloud.suse.de.

priority: 1

nameservers:

- host: 192.168.124.83

port: 53

- host: 192.168.124.81

port: 53

also_notifies: []

targets:

- type: bind9

description: BIND9 Server

masters:

- host: 192.168.124.83

port: 5354

- host: 192.168.124.82

port: 5354

- host: 192.168.124.81

port: 5354

options:

host: 192.168.124.83

port: 53

rndc_host: 192.168.124.83

rndc_port: 953

rndc_key_file: "/etc/designate/rndc.key"

- type: bind9

description: BIND9 Server

masters:

- host: 192.168.124.83

port: 5354

- host: 192.168.124.82

port: 5354

- host: 192.168.124.81

port: 5354

options:

host: 192.168.124.81

port: 53

rndc_host: 192.168.124.81

rndc_port: 953

rndc_key_file: "/etc/designate/rndc.key"

- type: pdns4

description: PowerDNS4 DNS Server

masters:

- host: 192.168.124.83

port: 5354

- host: 192.168.124.82

port: 5354

- host: 192.168.124.81

port: 5354

options:

host: 192.168.124.83

port: 54

api_endpoint: http://192.168.124.83:8081

api_token: Sfw234sDFw90z

Update the pools using designate-manage CLI.

tux > designate-manage pool update --file /etc/designate/pools.crowbar.yamlOnce Designate sync up with PowerDNS, you should see the domains in the PowerDNS database which reflects the zones in Designate.

It make take a few minutes for Designate to sync with PowerDNS.

We can verify that the domains are successfully sync up with Designate

by inpsecting the domains table in the database.

For example:

root # mysql powerdns

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 21131

Server version: 10.2.29-MariaDB-log SUSE package

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [powerdns]> select * from domains;

+----+---------+--------------------------------------------------------------+------------+-------+-----------------+---------+

| id | name | master | last_check | type | notified_serial | account |

+----+---------+--------------------------------------------------------------+------------+-------+-----------------+---------+

| 1 | foo.bar | 192.168.124.81:5354 192.168.124.82:5354 192.168.124.83:5354 | NULL | SLAVE | NULL | |

+----+---------+--------------------------------------------------------------+------------+-------+-----------------+---------+

1 row in set (0.00 sec)12.2 Deploying Pacemaker (Optional, HA Setup Only) #

To make the SUSE OpenStack Cloud controller functions and the Compute Nodes highly available, set up one or more clusters by deploying Pacemaker (see Section 2.6, “High Availability” for details). Since it is possible (and recommended) to deploy more than one cluster, a separate proposal needs to be created for each cluster.

Deploying Pacemaker is optional. In case you do not want to deploy it, skip this section and start the node deployment by deploying the database as described in Section 12.3, “Deploying the Database”.

To set up a cluster, at least two nodes are required. See Section 2.6.5, “Cluster Requirements and Recommendations” for more information.

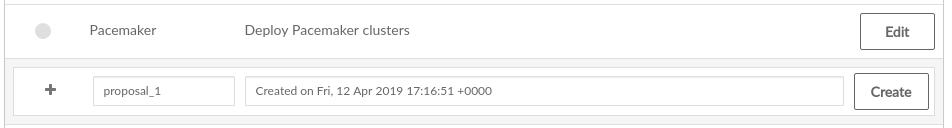

To create a proposal, go to › and click for the Pacemaker barclamp. A drop-down box where you can enter a name and a description for the proposal opens. Click to open the configuration screen for the proposal.

The name you enter for the proposal will be used to generate host names for the virtual IP addresses of HAProxy. By default, the names follow this scheme:

cluster-PROPOSAL_NAME.FQDN

(for the internal name) |

public-cluster-PROPOSAL_NAME.FQDN

(for the public name) |

For example, when PROPOSAL_NAME is set to

data, this results in the following names:

cluster-data.example.com

|

public-cluster-data.example.com

|

For requirements regarding SSL encryption and certificates, see Section 2.3, “SSL Encryption”.

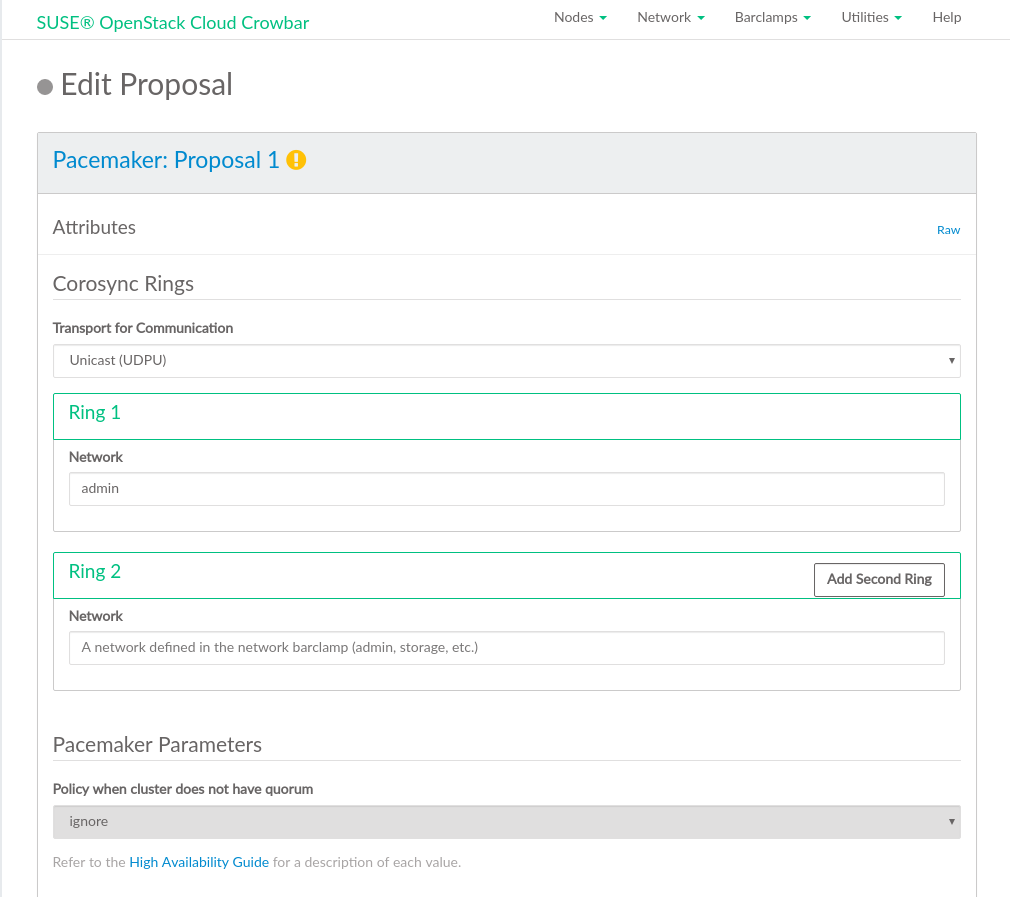

The following options are configurable in the Pacemaker configuration screen:

- Transport for Communication

Choose a technology used for cluster communication. You can choose between , sending a message to multiple destinations, or , sending a message to a single destination. By default unicast is used.

Whenever communication fails between one or more nodes and the rest of the cluster a “cluster partition” occurs. The nodes of a cluster are split in partitions but are still active. They can only communicate with nodes in the same partition and are unaware of the separated nodes. The cluster partition that has the majority of nodes is defined to have “quorum”.

This configuration option defines what to do with the cluster partition(s) that do not have the quorum. See https://documentation.suse.com/sle-ha/15-SP1/single-html/SLE-HA-guide/#sec-conf-hawk2-cluster-config, for details.

The recommended setting is to choose . However, is enforced for two-node clusters to ensure that the remaining node continues to operate normally in case the other node fails. For clusters using shared resources, choosing may be used to ensure that these resources continue to be available.

- STONITH: Configuration mode for STONITH

“Misbehaving” nodes in a cluster are shut down to prevent them from causing trouble. This mechanism is called STONITH (“Shoot the other node in the head”). STONITH can be configured in a variety of ways, refer to https://documentation.suse.com/sle-ha/15-SP1/single-html/SLE-HA-guide/#cha-ha-fencing for details. The following configuration options exist:

STONITH will not be configured when deploying the barclamp. It needs to be configured manually as described in https://documentation.suse.com/sle-ha/15-SP1/single-html/SLE-HA-guide/#cha-ha-fencing. For experts only.

Using this option automatically sets up STONITH with data received from the IPMI barclamp. Being able to use this option requires that IPMI is configured for all cluster nodes. This should be done by default. To check or change the IPMI deployment, go to › › › . Also make sure the option is set to on this barclamp.

Important: STONITH Devices Must Support IPMITo configure STONITH with the IPMI data, all STONITH devices must support IPMI. Problems with this setup may occur with IPMI implementations that are not strictly standards compliant. In this case it is recommended to set up STONITH with STONITH block devices (SBD).

This option requires manually setting up shared storage and a watchdog on the cluster nodes before applying the proposal. To do so, proceed as follows:

Prepare the shared storage. The path to the shared storage device must be persistent and consistent across all nodes in the cluster. The SBD device must not use host-based RAID or cLVM2.

Install the package

sbdon all cluster nodes.Initialize the SBD device with by running the following command. Make sure to replace

/dev/SBDwith the path to the shared storage device.sbd -d /dev/SBD create

Refer to https://documentation.suse.com/sle-ha/15-SP1/single-html/SLE-HA-guide/#sec-ha-storage-protect-test for details.

In , specify the respective kernel module to be used. Find the most commonly used watchdog drivers in the following table:

Hardware Driver HP hpwdtDell, Fujitsu, Lenovo (Intel TCO) iTCO_wdtGeneric softdogIf your hardware is not listed above, either ask your hardware vendor for the right name or check the following directory for a list of choices:

/lib/modules/KERNEL_VERSION/kernel/drivers/watchdog.Alternatively, list the drivers that have been installed with your kernel version:

root #rpm-ql kernel-VERSION |grepwatchdogIf the nodes need different watchdog modules, leave the text box empty.

After the shared storage has been set up, specify the path using the “by-id” notation (

/dev/disk/by-id/DEVICE). It is possible to specify multiple paths as a comma-separated list.Deploying the barclamp will automatically complete the SBD setup on the cluster nodes by starting the SBD daemon and configuring the fencing resource.

All nodes will use the identical configuration. Specify the to use and enter for the agent.

To get a list of STONITH devices which are supported by the High Availability Extension, run the following command on an already installed cluster nodes:

stonith -L. The list of parameters depends on the respective agent. To view a list of parameters use the following command:stonith -t agent -n

All nodes in the cluster use the same , but can be configured with different parameters. This setup is, for example, required when nodes are in different chassis and therefore need different IPMI parameters.

To get a list of STONITH devices which are supported by the High Availability Extension, run the following command on an already installed cluster nodes:

stonith -L. The list of parameters depends on the respective agent. To view a list of parameters use the following command:stonith -t agent -n

Use this setting for completely virtualized test installations. This option is not supported.

- STONITH: Do not start corosync on boot after fencing

With STONITH, Pacemaker clusters with two nodes may sometimes hit an issue known as STONITH deathmatch where each node kills the other one, resulting in both nodes rebooting all the time. Another similar issue in Pacemaker clusters is the fencing loop, where a reboot caused by STONITH will not be enough to fix a node and it will be fenced again and again.

This setting can be used to limit these issues. When set to , a node that has not been properly shut down or rebooted will not start the services for Pacemaker on boot. Instead, the node will wait for action from the SUSE OpenStack Cloud operator. When set to , the services for Pacemaker will always be started on boot. The value is used to have the most appropriate value automatically picked: it will be for two-node clusters (to avoid STONITH deathmatches), and otherwise.

When a node boots but not starts corosync because of this setting, then the node's status is in the is set to "

Problem" (red dot).- Mail Notifications: Enable Mail Notifications

Get notified of cluster node failures via e-mail. If set to , you need to specify which to use, a prefix for the mails' subject and sender and recipient addresses. Note that the SMTP server must be accessible by the cluster nodes.

The public name is the host name that will be used instead of the generated public name (see Important: Proposal Name) for the public virtual IP address of HAProxy. (This is the case when registering public endpoints, for example). Any name specified here needs to be resolved by a name server placed outside of the SUSE OpenStack Cloud network.

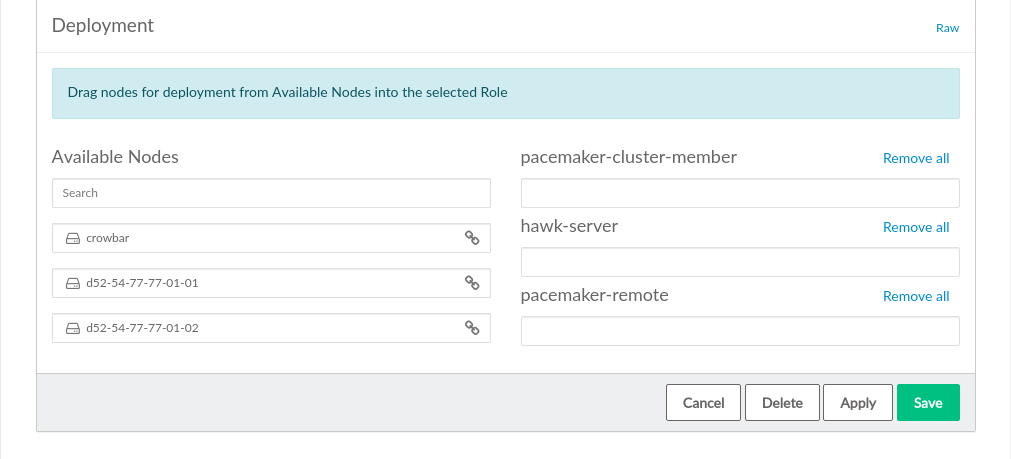

The Pacemaker component consists of the following roles. Deploying the role is optional:

Deploy this role on all nodes that should become member of the cluster.

Deploying this role is optional. If deployed, sets up the Hawk Web interface which lets you monitor the status of the cluster. The Web interface can be accessed via

https://IP-ADDRESS:7630. The default hawk credentials are usernamehacluster, passwordcrowbar.The password is visible and editable in the view of the Pacemaker barclamp, and also in the

"corosync":section of the view.Note that the GUI on SUSE OpenStack Cloud can only be used to monitor the cluster status and not to change its configuration.

may be deployed on at least one cluster node. It is recommended to deploy it on all cluster nodes.

Deploy this role on all nodes that should become members of the Compute Nodes cluster. They will run as Pacemaker remote nodes that are controlled by the cluster, but do not affect quorum. Instead of the complete cluster stack, only the

pacemaker-remotecomponent will be installed on this nodes.

After a cluster has been successfully deployed, it is listed under in the section and can be used for role deployment like a regular node.

When using clusters, roles from other barclamps must never be deployed to single nodes that are already part of a cluster. The only exceptions from this rule are the following roles:

cinder-volume

swift-proxy + swift-dispersion

swift-ring-compute

swift-storage

After a role has been deployed on a cluster, its services are managed by the HA software. You must never manually start or stop an HA-managed service, nor configure it to start on boot. Services may only be started or stopped by using the cluster management tools Hawk or the crm shell. See https://documentation.suse.com/sle-ha/15-SP1/single-html/SLE-HA-guide/#sec-ha-config-basics-resources for more information.

To check whether all cluster resources are running, either use the Hawk

Web interface or run the command crm_mon

-1r. If it is not the case, clean up the respective

resource with crm resource

cleanup RESOURCE , so it gets

respawned.

Also make sure that STONITH correctly works before continuing with the SUSE OpenStack Cloud setup. This is especially important when having chosen a STONITH configuration requiring manual setup. To test if STONITH works, log in to a node on the cluster and run the following command:

pkill -9 corosync

In case STONITH is correctly configured, the node will reboot.

Before testing on a production cluster, plan a maintenance window in case issues should arise.

12.3 Deploying the Database #

The very first service that needs to be deployed is the . The database component is using MariaDB and is used by all other components. It must be installed on a Control Node. The Database can be made highly available by deploying it on a cluster.

The only attribute you may change is the maximum number of database connections (). The default value should usually work—only change it for large deployments in case the log files show database connection failures.

12.3.1 Deploying MariaDB #

Deploying the database requires the use of MariaDB

MariaDB back end features full HA support based on the Galera clustering technology. The HA setup requires an odd number of nodes. The recommended number of nodes is 3.

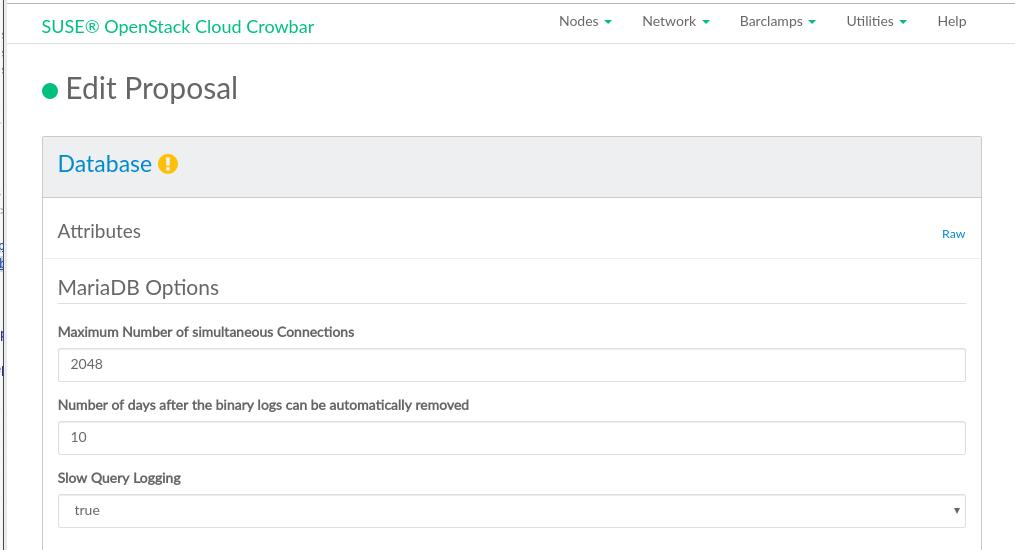

12.3.1.1 SSL Configuration #

SSL can be enabled with either a stand-alone or cluster deployment. The replication traffic between database nodes is not encrypted, whilst traffic between the database server(s) and clients are, so a separate network for the database servers is recommended.

Certificates can be provided, or the barcamp can generate self-signed

certificates. The certificate filenames are configurable in the

barclamp, and the directories /etc/mysql/ssl/certs

and /etc/mysql/ssl/private to use the defaults will

need to be created before the barclamp is applied. The CA certificate

and the certificate for MariaDB to use both go into

/etc/mysql/ssl/certs. The appropriate private key

for the certificate is placed into the

/etc/mysql/ssl/private directory. As long as the

files are readable when the barclamp is deployed, permissions can be

tightened after a successful deployment once the appropriate UNIX

groups exist.

The Common Name (CN) for the SSL certificate must be fully

qualified server name for single host deployments, and

cluster-cluster name.full domain

name for cluster deployments.

If certificate validation errors are causing issues with deploying

other barclamps (for example, when creating databases or users) you

can check the configuration with

mysql --ssl-verify-server-cert which will perform

the same verification that Crowbar does when connecting to the

database server.

If certificates are supplied, the CA certificate and its full trust

chain must be in the ca.pem file. The certificate

must be trusted by the machine (or all cluster members in a cluster

deployment), and it must be available on all client machines —

IE, if the OpenStack services are deployed on separate machines or

cluster members they will all require the CA certificate to be in

/etc/mysql/ssl/certs as well as trusted by the

machine.

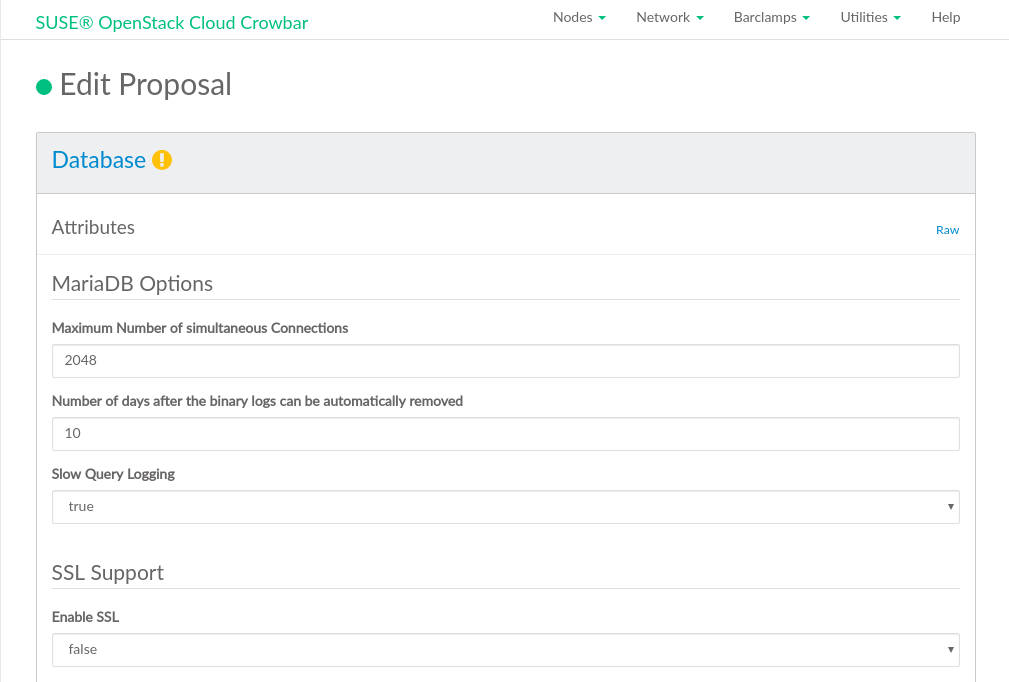

12.3.1.2 MariaDB Configuration Options #

The following configuration settings are available via the barclamp graphical interface:

- Datadir

Path to a directory for storing database data.

- Maximum Number of Simultaneous Connections

The maximum number of simultaneous client connections.

- Number of days after the binary logs can be automatically removed

A period after which the binary logs are removed.

- Slow Query Logging

When enabled, all queries that take longer than usual to execute are logged to a separate log file (by default, it's

/var/log/mysql/mysql_slow.log). This can be useful for debugging.

When MariaDB is used as the database back end, the role cannot be deployed to the node with the role. These two roles cannot coexist due to the fact that monasca uses its own MariaDB instance.

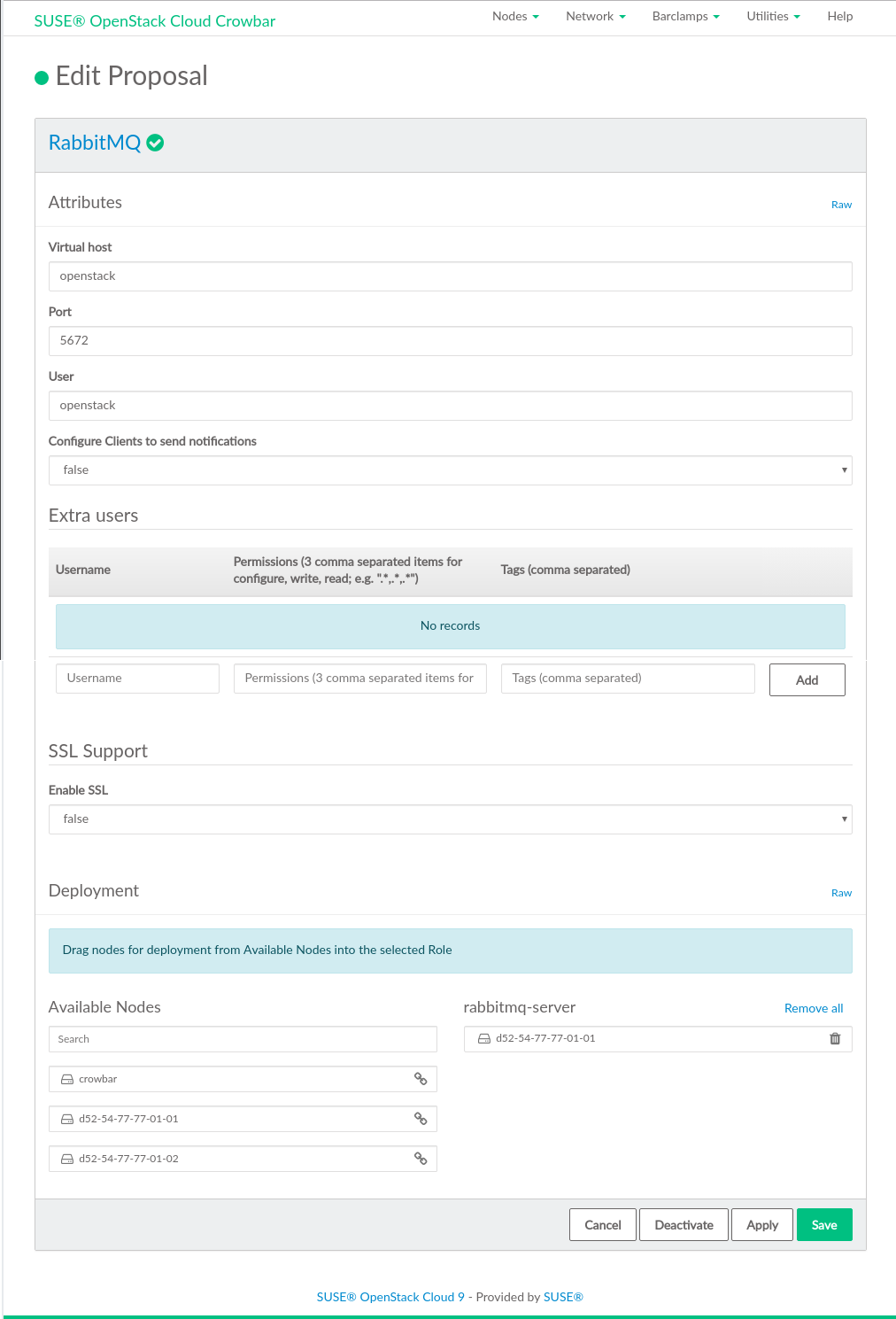

12.4 Deploying RabbitMQ #

The RabbitMQ messaging system enables services to communicate with the other nodes via Advanced Message Queue Protocol (AMQP). Deploying it is mandatory. RabbitMQ needs to be installed on a Control Node. RabbitMQ can be made highly available by deploying it on a cluster. We recommend not changing the default values of the proposal's attributes.

Name of the default virtual host to be created and used by the RabbitMQ server (

default_vhostconfiguration option inrabbitmq.config).- Port

Port the RabbitMQ server listens on (

tcp_listenersconfiguration option inrabbitmq.config).- User

RabbitMQ default user (

default_userconfiguration option inrabbitmq.config).

12.4.1 HA Setup for RabbitMQ #

To make RabbitMQ highly available, deploy it on a cluster instead of a single Control Node. This also requires shared storage for the cluster that hosts the RabbitMQ data. We recommend using a dedicated cluster to deploy RabbitMQ together with the database, since both components require shared storage.

Deploying RabbitMQ on a cluster makes an additional section available in the section of the proposal. Configure the in this section.

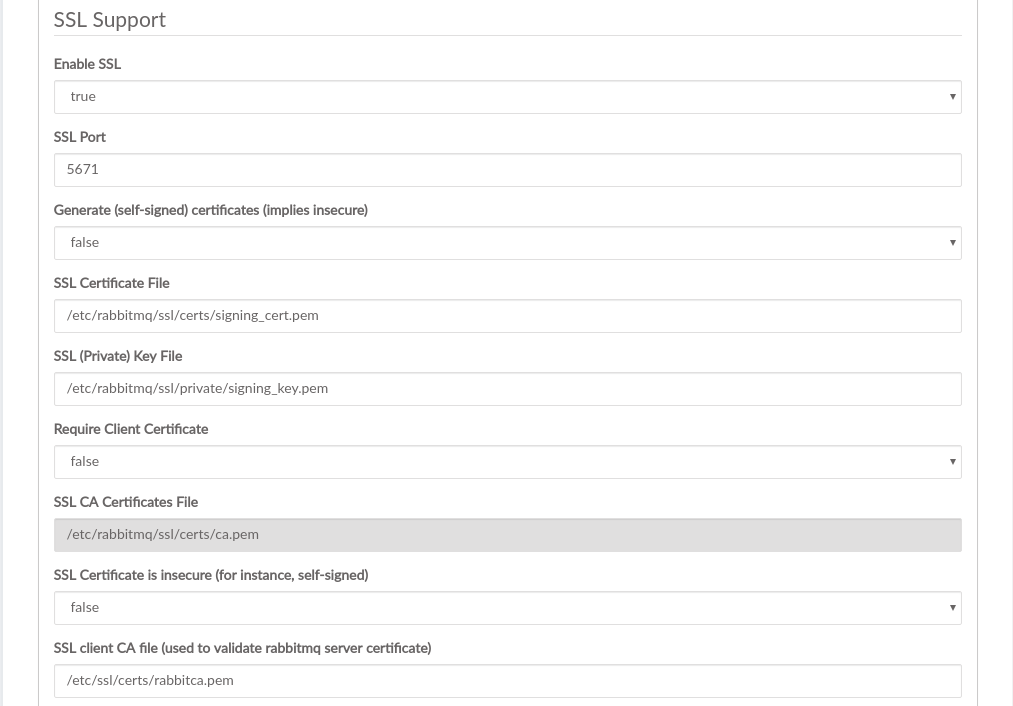

12.4.2 SSL Configuration for RabbitMQ #

The RabbitMQ barclamp supports securing traffic via SSL. This is similar to the SSL support in other barclamps, but with these differences:

RabbitMQ can listen on two ports at the same time, typically port 5672 for unsecured and port 5671 for secured traffic.

The ceilometer pipeline for OpenStack swift cannot be passed SSL-related parameters. When SSL is enabled for RabbitMQ the ceilometer pipeline in swift is turned off, rather than sending it over an unsecured channel.

The following steps are the fastest way to set up and test a new SSL certificate authority (CA).

In the RabbitMQ barclamp set to , and to

true, then apply the barclamp. The barclamp will create a new CA, enter the correct settings in/etc/rabbitmq/rabbitmq.config, and start RabbitMQ.Test your new CA with OpenSSL, substituting the hostname of your control node:

openssl s_client -connect d52-54-00-59-e5-fd:5671 [...] Verify return code: 18 (self signed certificate)

This outputs a lot of information, including a copy of the server's public certificate, protocols, ciphers, and the chain of trust.

The last step is to configure client services to use SSL to access the RabbitMQ service. (See https://www.rabbitmq.com/ssl.html for a complete reference).

It is preferable to set up your own CA. The best practice is to use a commercial certificate authority. You may also deploy your own self-signed certificates, provided that your cloud is not publicly-accessible, and only for your internal use. Follow these steps to enable your own CA in RabbitMQ and deploy it to SUSE OpenStack Cloud:

Configure the RabbitMQ barclamp to use the control node's certificate authority (CA), if it already has one, or create a CA specifically for RabbitMQ and configure the barclamp to use that. (See Section 2.3, “SSL Encryption”, and the RabbitMQ manual has a detailed howto on creating your CA at http://www.rabbitmq.com/ssl.html, with customizations for .NET and Java clients.)

Figure 12.6: SSL Settings for RabbitMQ Barclamp #

The configuration options in the RabbitMQ barclamp allow tailoring the barclamp to your SSL setup.

Set this to to expose all of your configuration options.

RabbitMQ's SSL listening port. The default is 5671.

When this is set to

true, self-signed certificates are automatically generated and copied to the correct locations on the control node, and all other barclamp options are set automatically. This is the fastest way to apply and test the barclamp. Do not use this on production systems. When this is set tofalsethe remaining options are exposed.The location of your public root CA certificate.

The location of your private server key.

This goes with . Set to to require clients to present SSL certificates to RabbitMQ.

Trust client certificates presented by the clients that are signed by other CAs. You'll need to store copies of the CA certificates; see "Trust the Client's Root CA" at http://www.rabbitmq.com/ssl.html.

When this is set to , clients validate the RabbitMQ server certificate with the file.

Tells clients of RabbitMQ where to find the CA bundle that validates the certificate presented by the RabbitMQ server, when is set to .

12.4.3 Configuring Clients to Send Notifications #

RabbitMQ has an option called Configure clients to send

notifications. It defaults to false, which

means no events will be sent. It is required to be set to

true for ceilometer, monasca, and any other services

consuming notifications. When it is set to true,

OpenStack services are configured to submit lifecycle audit events to the

notification RabbitMQ queue.

This option should only be enabled if an active consumer is configured, otherwise events will accumulate on the RabbitMQ server, clogging up CPU, memory, and disk storage.

Any accumulation can be cleared by running:

$ rabbitmqctl -p /openstack purge_queue notifications.info $ rabbitmqctl -p /openstack purge_queue notifications.error

12.5 Deploying keystone #

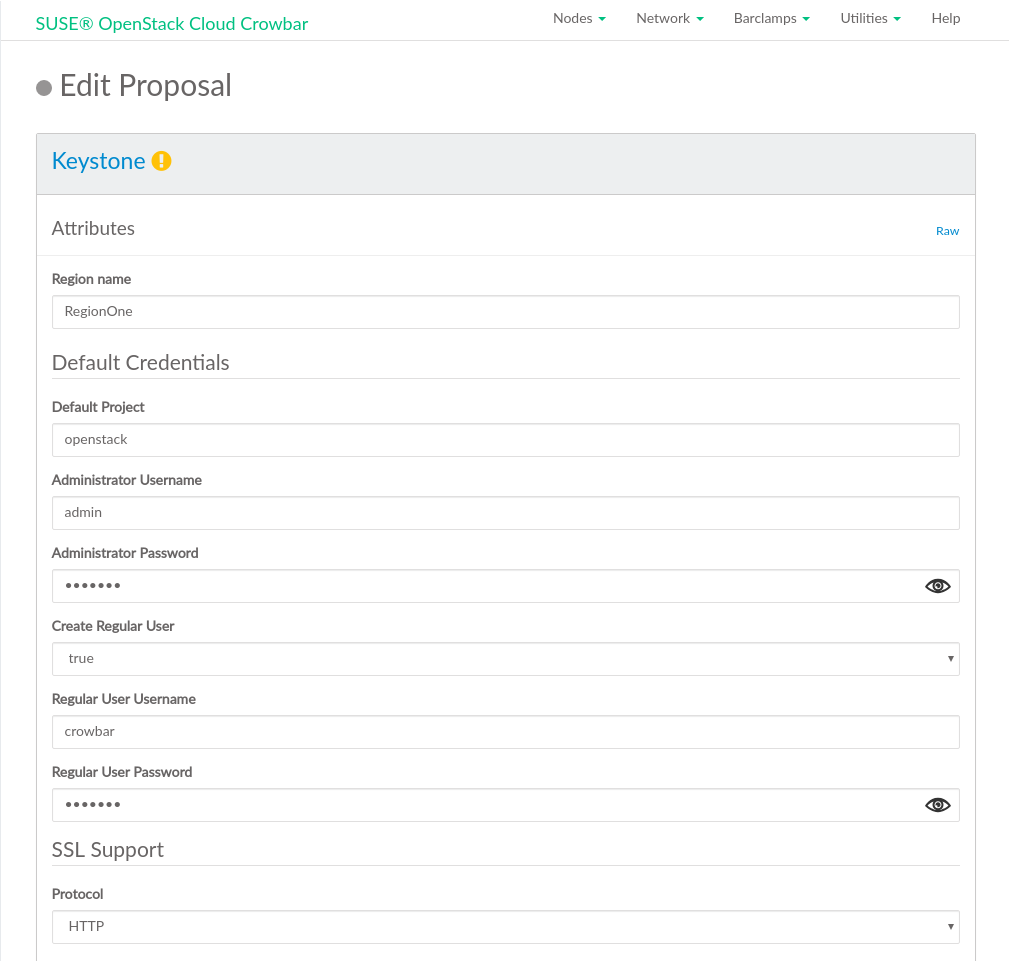

keystone is another core component that is used by all other OpenStack components. It provides authentication and authorization services. keystone needs to be installed on a Control Node. keystone can be made highly available by deploying it on a cluster. You can configure the following parameters of this barclamp:

Set the algorithm used by keystone to generate the tokens. You can choose between

Fernet(the default) orUUID. Note that for performance and security reasons it is strongly recommended to useFernet.Allows customizing the region name that crowbar is going to manage.

Tenant for the users. Do not change the default value of

openstack.User name and password for the administrator.

Specify whether a regular user should be created automatically. Not recommended in most scenarios, especially in an LDAP environment.

User name and password for the regular user. Both the regular user and the administrator accounts can be used to log in to the SUSE OpenStack Cloud Dashboard. However, only the administrator can manage keystone users and access.

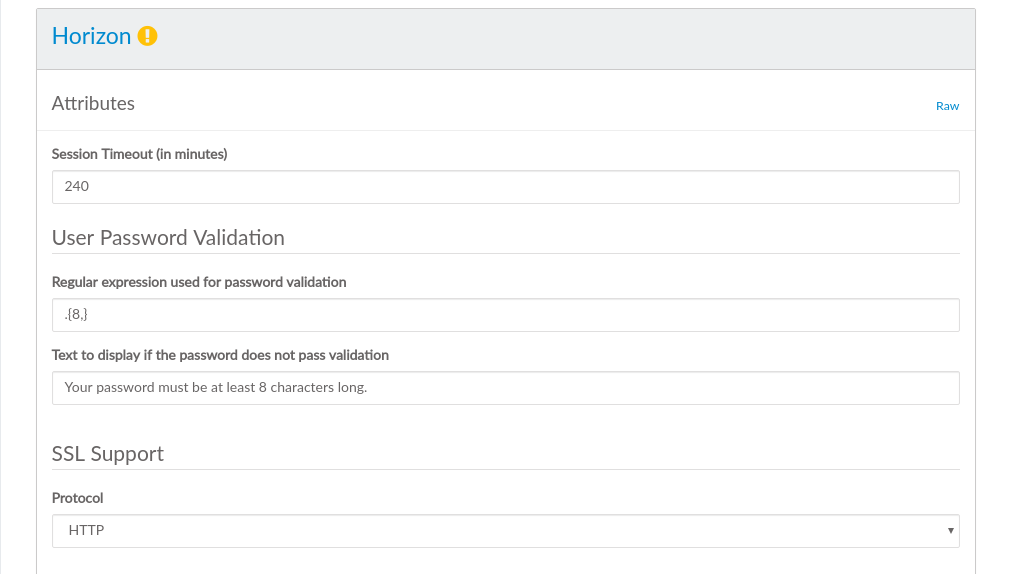

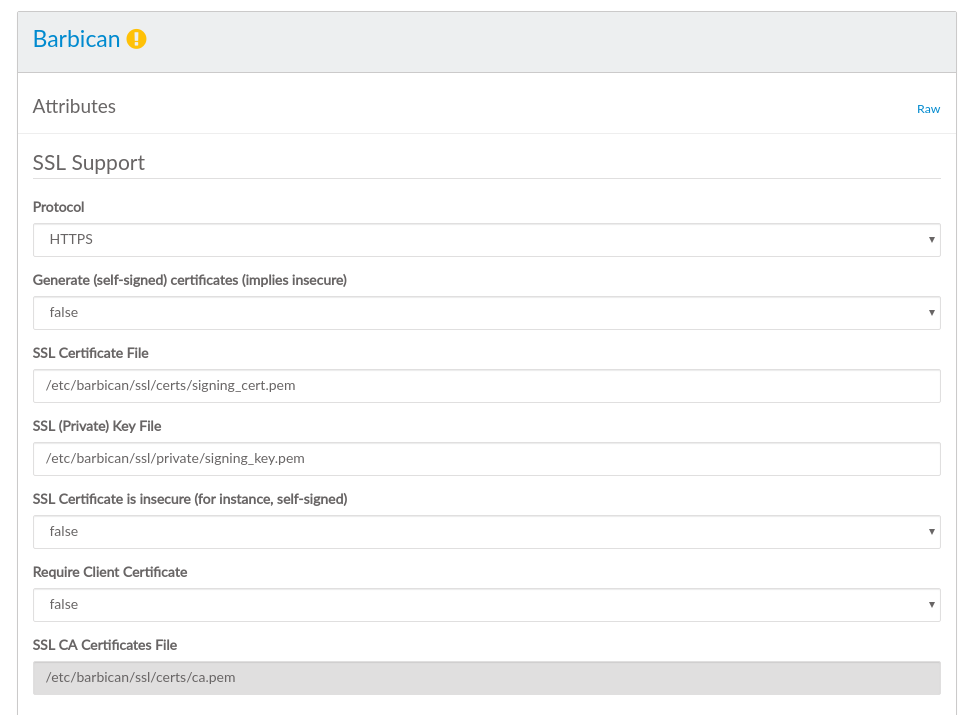

Figure 12.7: The keystone Barclamp #- SSL Support: Protocol

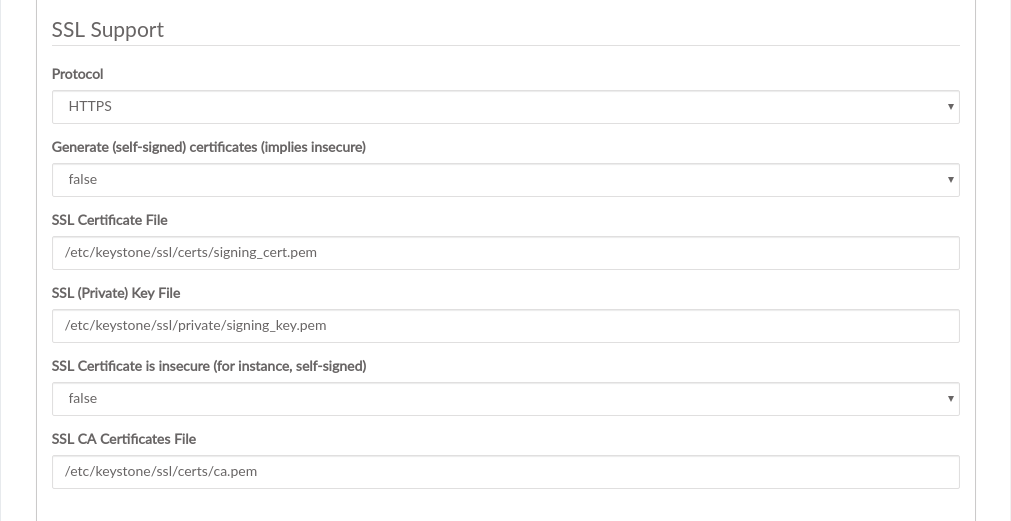

When you use the default value , public communication will not be encrypted. Choose to use SSL for encryption. See Section 2.3, “SSL Encryption” for background information and Section 11.4.6, “Enabling SSL” for installation instructions. The following additional configuration options will become available when choosing :

When set to

true, self-signed certificates are automatically generated and copied to the correct locations. This setting is for testing purposes only and should never be used in production environments!- /

Location of the certificate key pair files.

Set this option to

truewhen using self-signed certificates to disable certificate checks. This setting is for testing purposes only and should never be used in production environments!Specify the absolute path to the CA certificate. This field is mandatory, and leaving it blank will cause the barclamp to fail. To fix this issue, you have to provide the absolute path to the CA certificate, restart the

apache2service, and re-deploy the barclamp.When the certificate is not already trusted by the pre-installed list of trusted root certificate authorities, you need to provide a certificate bundle that includes the root and all intermediate CAs.

Figure 12.8: The SSL Dialog #

12.5.1 Authenticating with LDAP #

keystone has the ability to separate identity back-ends by domains. SUSE OpenStack Cloud 9 uses this method for authenticating users.

The keystone barclamp sets up a MariaDB database by default. Configuring an LDAP back-end is done in the view.

Set

Then in the section configure a map with domain names as keys, and configuration as values. In the default proposal the domain name key is , and the keys are the two required sections for an LDAP-based identity driver configuration, the section which sets the driver, and the section which sets the LDAP connection options. You may configure multiple domains, each with its own configuration.

You may make this available to horizon by setting to in the horizon barclamp.

Users in the LDAP-backed domain have to know the name of the domain in order to authenticate, and must use the keystone v3 API endpoint. (See the OpenStack manuals, Domain-specific Configuration and Integrate Identity with LDAP, for additional details.)

12.5.2 HA Setup for keystone #

Making keystone highly available requires no special configuration—it is sufficient to deploy it on a cluster.

12.5.3 OpenID Connect Setup for keystone #

keystone supports WebSSO by federating with an external identity provider (IdP) using auth_openidc module.

There are two steps to enable this feature:

Configure the

"federation"and"openidc"attributes for the Keystone Barclamp in Crowbar.Create the Identity Provider, Protocol, and Mapping resource in keystone using .

12.5.3.1 keystone Barclamp Configuration #

Configurating OpenID Connect is done in the view, under the section. The global attributes, namely and are not specific to OpenID Connect. Rather, they are designed to help facilitate WebSSO browser redirects with external IdPs in a complex cloud deployment environment.

If the cloud deployment does not have any external proxies or load balancers, where the public keystone and horizon (Dashboard service) endpoints are directly managed by Crowbar, and does not need to be provided. However, in a complex cloud deployment where the public Keystone and Horizon endpoints are handled by external load balancers or proxies, and they are not directly managed by Crowbar, and must be provided and they must correctly reflect the external public endpoints.

To configure OpenID Connect, edit the attributes in the subsection.

Set

Provide the name for the . This must be the same as the identity provider to be created in Keystone using the . For example, if the identity provider is

foo, create the identity provider with the name via Openstack CLI (i.e. ).corresponds to auth_openidc OIDCResponseType. In most cases, it should be

id_token.corresponds to auth_openidc OIDCScope.

corresponds to auth_openidc OIDCProviderMetadataURL.

corresponds to auth_openidc OIDCClientID.

corresponds to auth_openidc OIDCClientSecret.

corresponds to auth_openidc OIDCRedirectURI. In a cloud deployment where all the external endpoints are directly managed by Crowbar, this attribute can be left blank as it will be auto-populated by Crowbar. However, in a complex cloud deployment where the public Keystone endpoint is handled by an external load balancer or proxy, this attribute must reflect the external Keystone auth endpoint for the OpenID Connect IdP. For example,

"https://keystone-public-endpoint.foo.com/v3/OS-FEDERATION/identity_providers/foo/protocols/openid/auth"WarningSome OpenID Connect IdPs such as Google require the hostname in the to be a public FQDN. In that case, the hostname in Keystone public endpoint must also be a public FQDN and must match the one specified in the .

12.5.3.2 Create Identity Provider, Protocol, and Mapping #

To fully enable OpenID Connect, the Identity Provider,

Protocol, and Mapping for the given

IdP must be created in Keystone. This is done by using the

, on a controller node, and

using the Keystone admin credential.

Login to a controller node as

rootuser.Use the Keystone admin credential.

source ~/.openrc

Create the Identity Provider. For example:

openstack identity provider create foo

WarningThe name of the Identity Provider must be exactly the same as the attribute given when configuring Keystone in the previous section.

Next, create the Mapping for the Identity Provider. Prior to creating the Mapping, one must fully grasp the intricacies of Mapping Combinations as it may have profound security implications if done incorrectly. Here's an example of a mapping file.

[ { "local": [ { "user": { "name": "{0}", "email": "{1}", "type": "ephemeral" }, "group": { "domain": { "name": "Default" }, "name": "openidc_demo" } } ], "remote": [ { "type": "REMOTE_USER" }, { "type": "HTTP_OIDC_EMAIL" } ] } ]Once the mapping file is created, now create the mapping resource in Keystone. For example:

openstack mapping create --rule oidc_mapping.json oidc_mapping

Lastly, create the Protocol for the Identity Provider and its mapping. For OpenID Connect, the protocol name must be openid. For example:

openstack federation protocol create --identity-provider google --mapping oidc_mapping openid

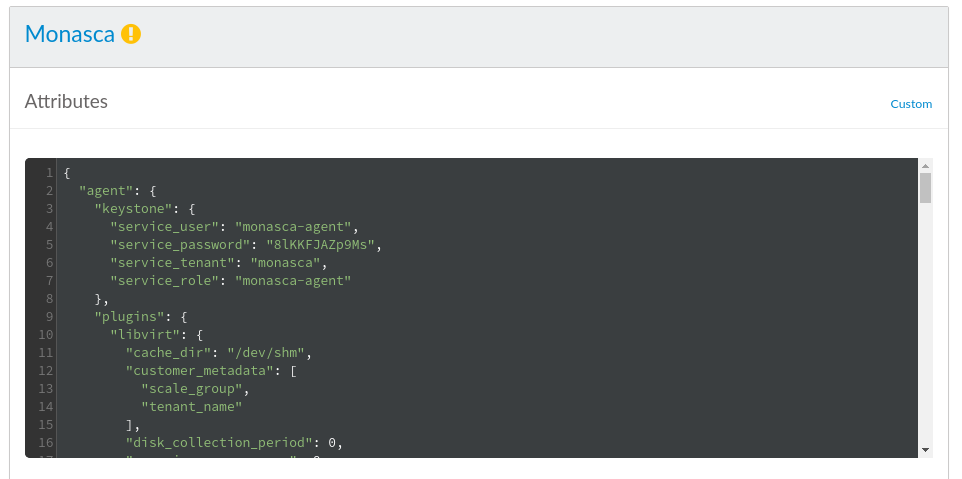

12.6 Deploying monasca (Optional) #

monasca is an open-source monitoring-as-a-service solution that integrates with OpenStack. monasca is designed for scalability, high performance, and fault tolerance.

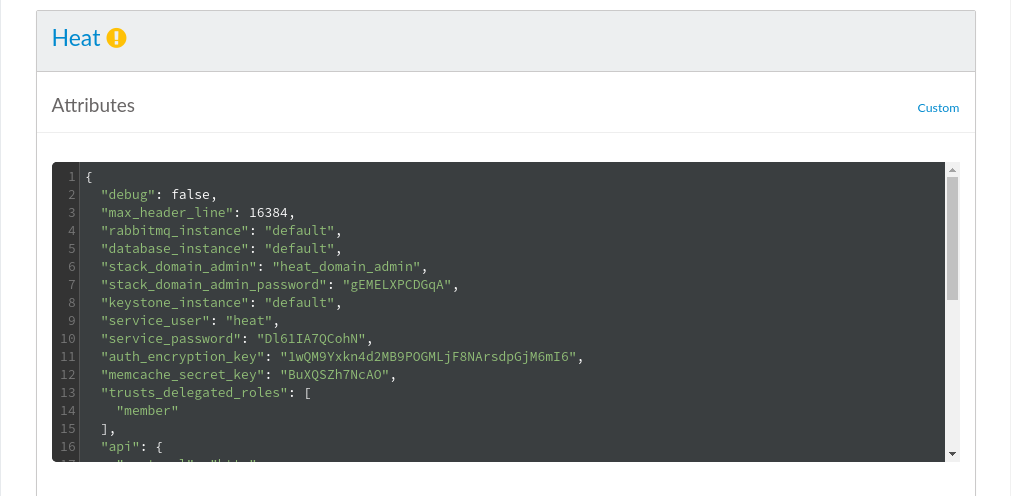

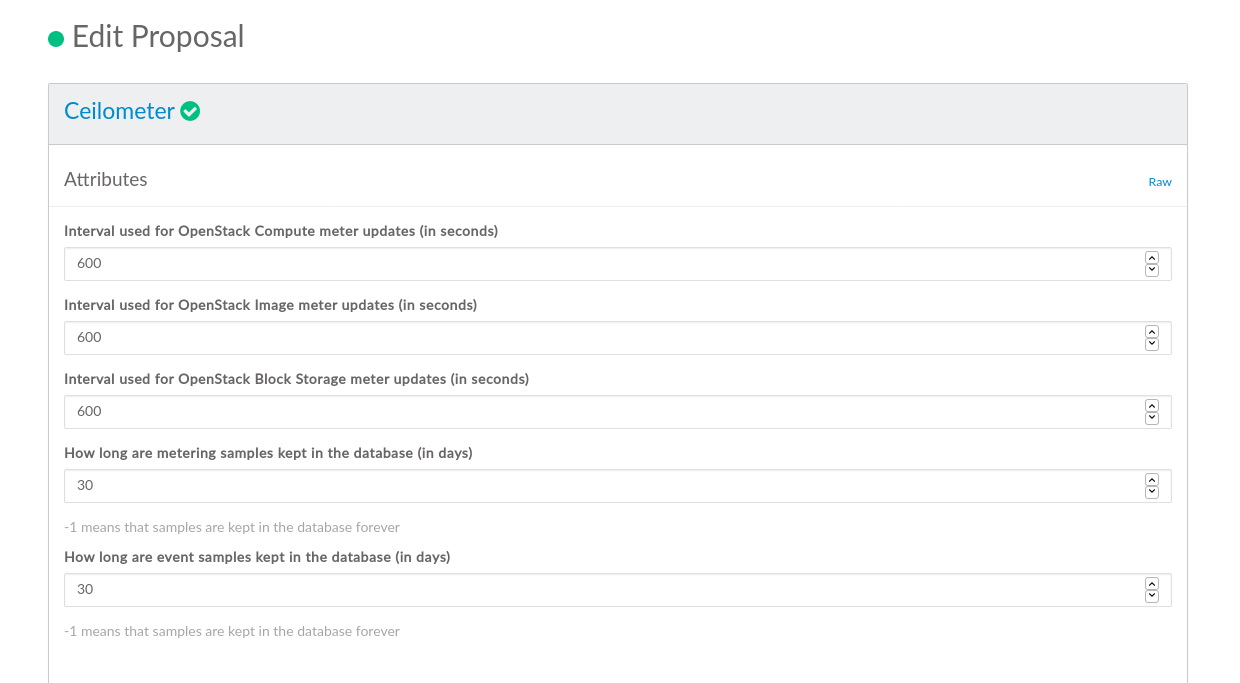

Accessing the interface is not required for day-to-day operation. But as not all monasca settings are exposed in the barclamp graphical interface (for example, various performance tuneables), it is recommended to configure monasca in the mode. Below are the options that can be configured via the interface of the monasca barclamp.

- keystone

Contains keystone credentials that the agents use to send metrics. Do not change these options, as they are configured by Crowbar.

- insecure

Specifies whether SSL certificates are verified when communicating with keystone. If set to

false, theca_fileoption must be specified.- ca_file

Specifies the location of a CA certificate that is used for verifying keystone's SSL certificate.

- log_dir

Path for storing log files. The specified path must exist. Do not change the default

/var/log/monasca-agentpath.- log_level

Agent's log level. Limits log messages to the specified level and above. The following levels are available: Error, Warning, Info (default), and Debug.

- check_frequency

Interval in seconds between running agents' checks.

- num_collector_threads

Number of simultaneous collector threads to run. This refers to the maximum number of different collector plug-ins (for example,

http_check) that are allowed to run simultaneously. The default value1means that plug-ins are run sequentially.- pool_full_max_retries

If a problem with the results from multiple plug-ins results blocks the entire thread pool (as specified by the

num_collector_threadsparameter), the collector exits, so it can be restarted by thesupervisord. The parameterpool_full_max_retriesspecifies when this event occurs. The collector exits when the defined number of consecutive collection cycles have ended with the thread pool completely full.- plugin_collect_time_warn

Upper limit in seconds for any collection plug-in's run time. A warning is logged if a plug-in runs longer than the specified limit.

- max_measurement_buffer_size

Maximum number of measurements to buffer locally if the monasca API is unreachable. Measurements will be dropped in batches, if the API is still unreachable after the specified number of messages are buffered. The default

-1value indicates unlimited buffering. Note that a large buffer increases the agent's memory usage.- backlog_send_rate

Maximum number of measurements to send when the local measurement buffer is flushed.

- amplifier

Number of extra dimensions to add to metrics sent to the monasca API. This option is intended for load testing purposes only. Do not enable the option in production! The default

0value disables the addition of dimensions.

- max_data_size_kb

Maximum payload size in kilobytes for a request sent to the monasca log API.

- num_of_logs

Maximum number of log entries the log agent sends to the monasca log API in a single request. Reducing the number increases performance.

- elapsed_time_sec

Time interval in seconds between sending logs to the monasca log API.

- delay

Interval in seconds for checking whether

elapsed_time_sechas been reached.- keystone

keystone credentials the log agents use to send logs to the monasca log API. Do not change this option manually, as it is configured by Crowbar.

- bind_host

Interfaces

monasca-apilistens on. Do not change this option, as it is configured by Crowbar.- processes

Number of processes to spawn.

- threads

Number of WSGI worker threads to spawn.

- log_level

Log level for

openstack-monasca-api. Limits log messages to the specified level and above. The following levels are available: Critical, Error, Warning, Info (default), Debug, and Trace.

- repo_dir

List of directories for storing Elasticsearch snapshots. Must be created manually and be writeable by the

elasticsearchuser. Must contain at least one entry in order for the snapshot functionality to work.- heap_size

Sets the heap size. We recommend setting heap size at 50% of the available memory, but not more than 31 GB. The default of 4 GB is likely too small and should be increased if possible.

- limit_memlock

The maximum size that may be locked into memory in bytes

- limit_nofile

The maximum number of open file descriptors

- limit_nproc

The maximum number of processes

- vm_max_map_count

The maximum number of memory map areas a process may have.

For instructions on creating an Elasticsearch snapshot, see Section 4.7.4, “Backup and Recovery”.

elasticsearch-curator removes old and large

elasticsearch indices. The settings below determine its behavior.

- delete_after_days

Time threshold for deleting indices. Indices older the specified number of days are deleted. This parameter is unset by default, so indices are kept indefinitely.

- delete_after_size

Maximum size in megabytes of indices. Indices larger than the specified size are deleted. This parameter is unset by default, so indices are kept irrespective of their size.

- delete_exclude_index

List of indices to exclude from

elasticsearch-curatorruns. By default, only the.kibanafiles are excluded.

- log_retention_hours

Number of hours for retaining log segments in Kafka's on-disk log. Messages older than the specified value are dropped.

- log_retention_bytes

Maximum size for Kafka's on-disk log in bytes. If the log grows beyond this size, the oldest log segments are dropped.

- topics

list of topics

metrics

events

alarm-state-transitions

alarm-notifications

retry-notifications

60-seconds-notifications

log

transformed-log

The following are options of every topic:

- replicas

Controls how many servers replicate each message that is written

- partitions

Controls how many logs the topic is sharded into

- config_options

Map of configuration options is described in the Apache Kafka documentation

These parameters only affect first time installations. Parameters may be changed after installation with scripts available from Apache Kafka.

Kafka does not support reducing the number of partitions for a topic.

- email_enabled

Enable or disable email alarm notifications.

- email_smtp_host

SMTP smarthost for sending alarm notifications.

- email_smtp_port

Port for the SMTP smarthost.

- email_smtp_user

User name for authenticating against the smarthost.

- email_smtp_password

Password for authenticating against the smarthost.

- email_smtp_from_address

Sender address for alarm notifications.

- influxdb_retention_policy

Number of days to keep metrics records in influxdb.

For an overview of all supported values, see https://docs.influxdata.com/influxdb/v1.1/query_language/database_management/#create-retention-policies-with-create-retention-policy.

- monitor_libvirt

The global switch for toggling libvirt monitoring. If set to true, libvirt metrics will be gathered on all libvirt based Compute Nodes. This setting is available in the Crowbar UI.

- monitor_ceph

The global switch for toggling Ceph monitoring. If set to true, Ceph metrics will be gathered on all Ceph-based Compute Nodes. This setting is available in Crowbar UI. If the Ceph cluster has been set up independently, Crowbar ignores this setting.

- cache_dir

The directory where monasca-agent will locally cache various metadata about locally running VMs on each Compute Node.

- customer_metadata

Specifies the list of instance metadata keys to be included as dimensions with customer metrics. This is useful for providing more information about an instance.

- disk_collection_period

Specifies a minimum interval in seconds for collecting disk metrics. Increase this value to reduce I/O load. If the value is lower than the global agent collection period (

check_frequency), it will be ignored in favor of the global collection period.- max_ping_concurrency

Specifies the number of ping command processes to run concurrently when determining whether the VM is reachable. This should be set to a value that allows the plugin to finish within the agent's collection period, even if there is a networking issue. For example, if the expected number of VMs per Compute Node is 40 and each VM has one IP address, then the plugin will take at least 40 seconds to do the ping checks in the worst-case scenario where all pings fail (assuming the default timeout of 1 second). Increasing

max_ping_concurrencyallows the plugin to finish faster.- metadata

Specifies the list of nova side instance metadata keys to be included as dimensions with the cross-tenant metrics for the project. This is useful for providing more information about an instance.

- nova_refresh

Specifies the number of seconds between calls to the nova API to refresh the instance cache. This is helpful for updating VM hostname and pruning deleted instances from the cache. By default, it is set to 14,400 seconds (four hours). Set to 0 to refresh every time the Collector runs, or to None to disable regular refreshes entirely. In this case, the instance cache will only be refreshed when a new instance is detected.

- ping_check

Includes the entire ping command (without the IP address, which is automatically appended) to perform a ping check against instances. The

NAMESPACEkeyword is automatically replaced with the appropriate network namespace for the VM being monitored. Set to False to disable ping checks.- vnic_collection_period

Specifies a minimum interval in seconds for collecting disk metrics. Increase this value to reduce I/O load. If the value is lower than the global agent collection period (

check_frequency), it will be ignored in favor of the global collection period.- vm_cpu_check_enable

Toggles the collection of VM CPU metrics. Set to true to enable.

- vm_disks_check_enable

Toggles the collection of VM disk metrics. Set to true to enable.

- vm_extended_disks_check_enable

Toggles the collection of extended disk metrics. Set to true to enable.

- vm_network_check_enable

Toggles the collection of VM network metrics. Set to true to enable.

- vm_ping_check_enable

Toggles ping checks for checking whether a host is alive. Set to true to enable.

- vm_probation

Specifies a period of time (in seconds) in which to suspend metrics from a newly-created VM. This is to prevent quickly-obsolete metrics in an environment with a high amount of instance churn (VMs created and destroyed in rapid succession). The default probation length is 300 seconds (5 minutes). Set to 0 to disable VM probation. In this case, metrics are recorded immediately after a VM is created.

- vnic_collection_period

Specifies a minimum interval in seconds for collecting VM network metrics. Increase this value to reduce I/O load. If the value is lower than the global agent collection period (check_frequency), it will be ignored in favor of the global collection period.

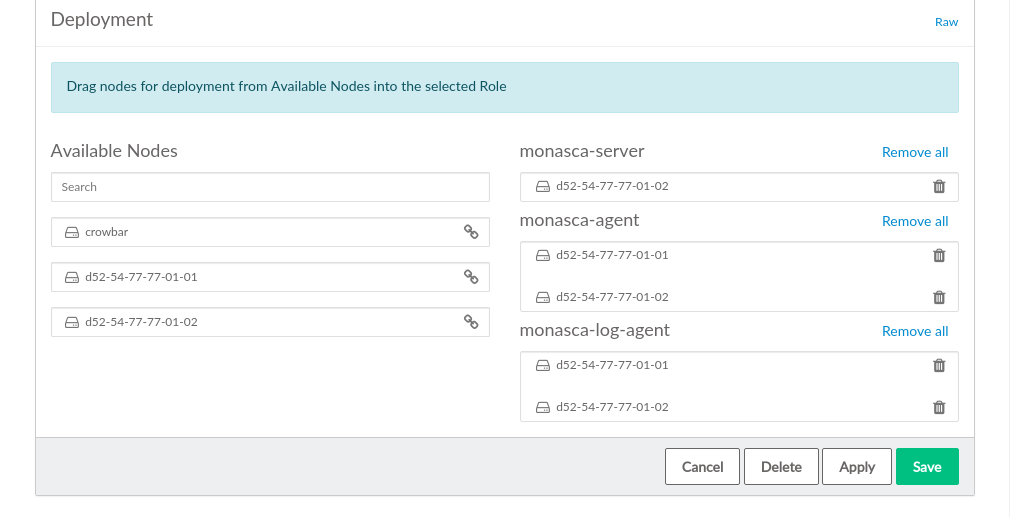

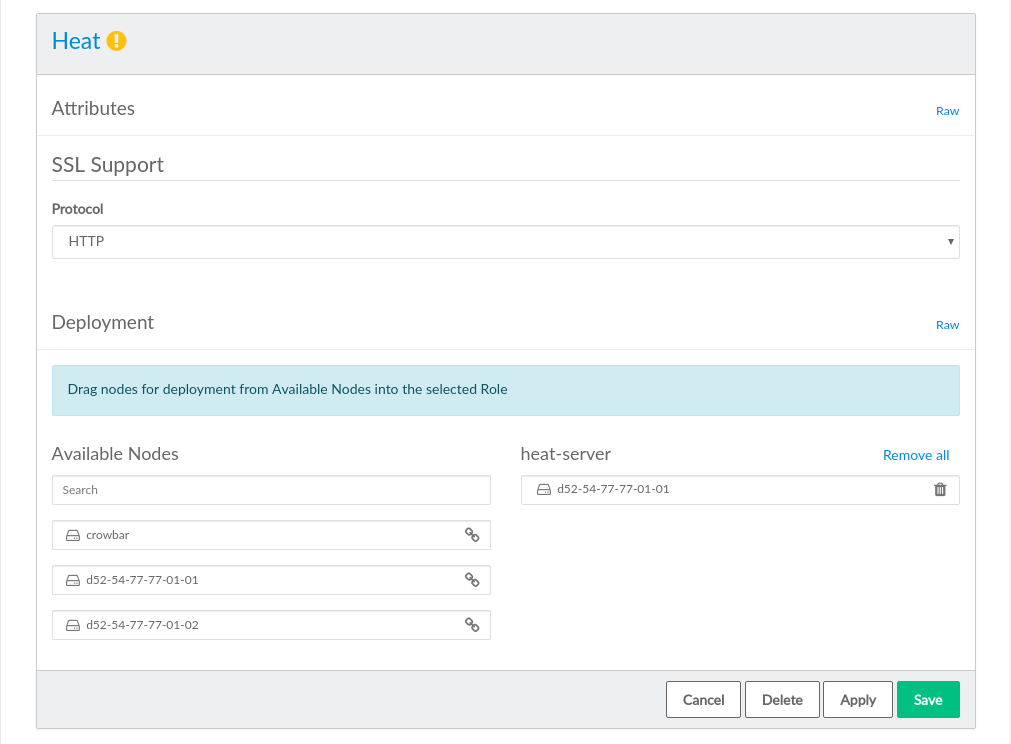

The monasca component consists of following roles:

- monasca-server

monasca server-side components that are deployed by Chef. Currently, this only creates keystone resources required by monasca, such as users, roles, endpoints, etc. The rest is left to the Ansible-based

monasca-installerrun by themonasca-masterrole.- monasca-master

Runs the Ansible-based

monasca-installerfrom the Crowbar node. The installer deploys the monasca server-side components to the node that has themonasca-serverrole assigned to it. These components areopenstack-monasca-api, andopenstack-monasca-log-api, as well as all the back-end services they use.- monasca-agent

Deploys

openstack-monasca-agentthat is responsible for sending metrics tomonasca-apion nodes it is assigned to.- monasca-log-agent

Deploys

openstack-monasca-log-agentresponsible for sending logs tomonasca-log-apion nodes it is assigned to.

12.7 Deploying swift (optional) #

swift adds an object storage service to SUSE OpenStack Cloud for storing single files such as images or snapshots. It offers high data security by storing the data redundantly on a pool of Storage Nodes—therefore swift needs to be installed on at least two dedicated nodes.

To properly configure swift it is important to understand how it places the data. Data is always stored redundantly within the hierarchy. The swift hierarchy in SUSE OpenStack Cloud is formed out of zones, nodes, hard disks, and logical partitions. Zones are physically separated clusters, for example different server rooms each with its own power supply and network segment. A failure of one zone must not affect another zone. The next level in the hierarchy are the individual swift storage nodes (on which has been deployed), followed by the hard disks. Logical partitions come last.

swift automatically places three copies of each object on the highest hierarchy level possible. If three zones are available, then each copy of the object will be placed in a different zone. In a one zone setup with more than two nodes, the object copies will each be stored on a different node. In a one zone setup with two nodes, the copies will be distributed on different hard disks. If no other hierarchy element fits, logical partitions are used.

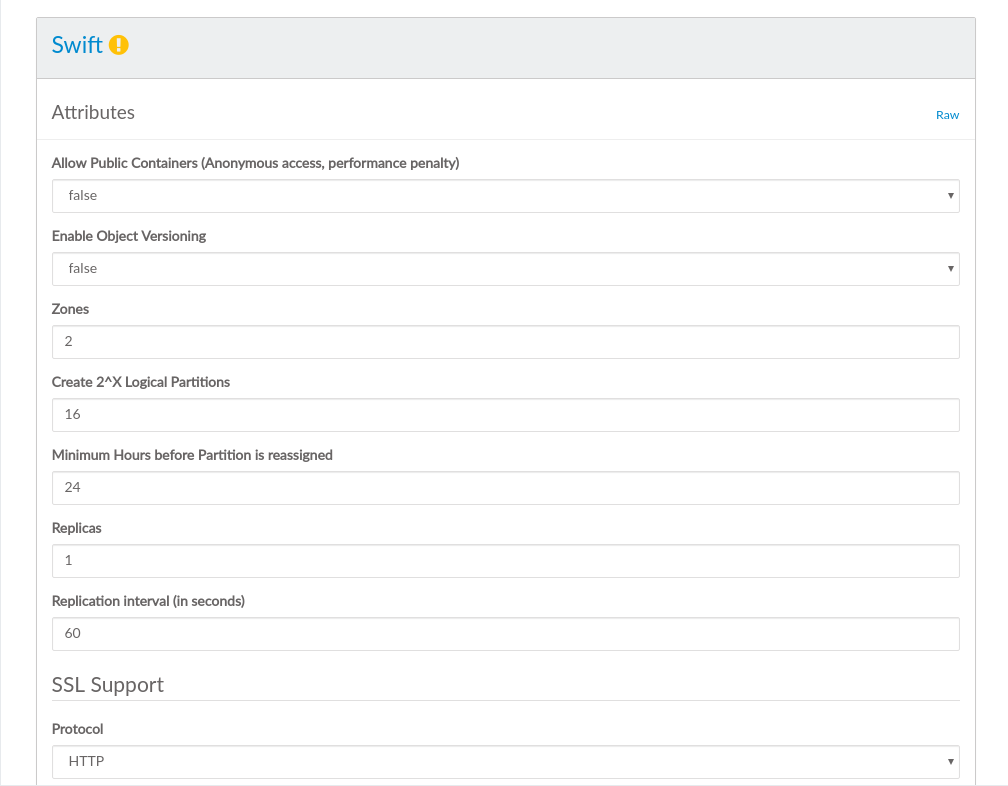

The following attributes can be set to configure swift:

Set to

trueto enable public access to containers.If set to true, a copy of the current version is archived each time an object is updated.

Number of zones (see above). If you do not have different independent installations of storage nodes, set the number of zones to

1.Partition power. The number entered here is used to compute the number of logical partitions to be created in the cluster. The number you enter is used as a power of 2 (2^X).

We recommend using a minimum of 100 partitions per disk. To measure the partition power for your setup, multiply the number of disks from all swift nodes by 100, and then round up to the nearest power of two. Keep in mind that the first disk of each node is not used by swift, but rather for the operating system.

Example: 10 swift nodes with 5 hard disks each. Four hard disks on each node are used for swift, so there is a total of forty disks. 40 x 100 = 4000. The nearest power of two, 4096, equals 2^12. So the partition power that needs to be entered is

12.Important: Value Cannot be Changed After the Proposal Has Been DeployedChanging the number of logical partition after swift has been deployed is not supported. Therefore the value for the partition power should be calculated from the maximum number of partitions this cloud installation is likely going to need at any point in time.

This option sets the number of hours before a logical partition is considered for relocation.

24is the recommended value.The number of copies generated for each object. The number of replicas depends on the number of disks and zones.

Time (in seconds) after which to start a new replication process.

Shows debugging output in the log files when set to

true.Choose whether to encrypt public communication () or not (). If you choose , you have two options. You can either or provide the locations for the certificate key pair files. Using self-signed certificates is for testing purposes only and should never be used in production environments!

Apart from the general configuration described above, the swift barclamp lets you also activate and configure . The features these middlewares provide can be used via the swift command line client only. The Ratelimit and S3 middleware provide for the most interesting features, and we recommend enabling other middleware only for specific use-cases.

Provides an S3 compatible API on top of swift.

Serve container data as a static Web site with an index file and optional file listings. See http://docs.openstack.org/developer/swift/middleware.html#staticweb for details.

This middleware requires setting to

true.Create URLs to provide time-limited access to objects. See http://docs.openstack.org/developer/swift/middleware.html#tempurl for details.

Upload files to a container via Web form. See http://docs.openstack.org/developer/swift/middleware.html#formpost for details.

Extract TAR archives into a swift account, and delete multiple objects or containers with a single request. See http://docs.openstack.org/developer/swift/middleware.html#module-swift.common.middleware.bulk for details.

Interact with the swift API via Flash, Java, and Silverlight from an external network. See http://docs.openstack.org/developer/swift/middleware.html#module-swift.common.middleware.crossdomain for details.

Translates container and account parts of a domain to path parameters that the swift proxy server understands. Can be used to create short URLs that are easy to remember, for example by rewriting

home.tux.example.com/$ROOT/tux/home/myfiletohome.tux.example.com/myfile. See http://docs.openstack.org/developer/swift/middleware.html#module-swift.common.middleware.domain_remap for details.Throttle resources such as requests per minute to provide denial of service protection. See http://docs.openstack.org/developer/swift/middleware.html#module-swift.common.middleware.ratelimit for details.

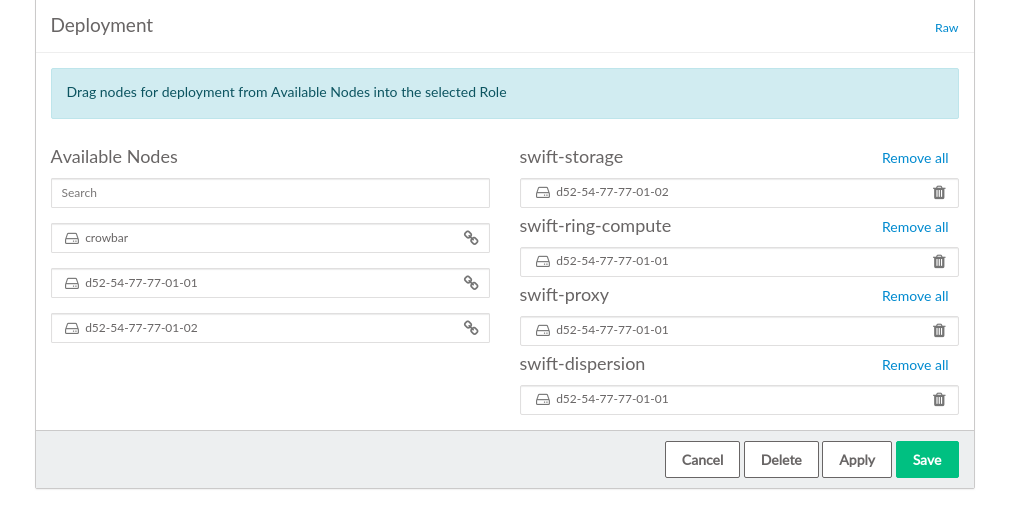

The swift component consists of four different roles. Deploying is optional:

The virtual object storage service. Install this role on all dedicated swift Storage Nodes (at least two), but not on any other node.

Warning: swift-storage Needs Dedicated MachinesNever install the swift-storage service on a node that runs other OpenStack components.

The ring maintains the information about the location of objects, replicas, and devices. It can be compared to an index that is used by various OpenStack components to look up the physical location of objects. must only be installed on a single node, preferably a Control Node.

The swift proxy server takes care of routing requests to swift. Installing a single instance of on a Control Node is recommended. The role can be made highly available by deploying it on a cluster.

Deploying is optional. The swift dispersion tools can be used to test the health of the cluster. It creates a heap of dummy objects (using 1% of the total space available). The state of these objects can be queried using the swift-dispersion-report query. needs to be installed on a Control Node.

12.7.1 HA Setup for swift #

swift replicates by design, so there is no need for a special HA setup. Make sure to fulfill the requirements listed in Section 2.6.4.1, “swift—Avoiding Points of Failure”.

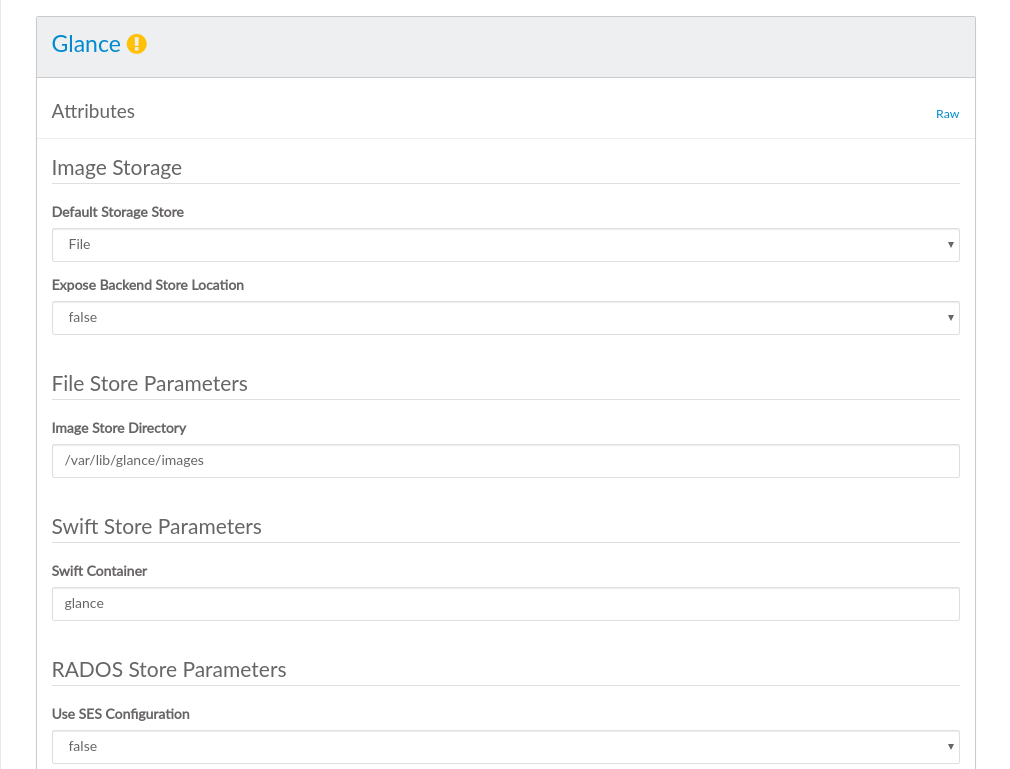

12.8 Deploying glance #

glance provides discovery, registration, and delivery services for virtual disk images. An image is needed to start an instance—it is its pre-installed root-partition. All images you want to use in your cloud to boot instances from, are provided by glance. glance must be deployed onto a Control Node. glance can be made highly available by deploying it on a cluster.

There are a lot of options to configure glance. The most important ones are explained below—for a complete reference refer to https://github.com/crowbar/crowbar-openstack/blob/master/glance.yml.

As of SUSE OpenStack Cloud Crowbar 7, the glance API v1 is no longer enabled by default. Instead, glance API v2 is used by default.

If you need to re-enable API v1 for compatibility reasons:

Switch to the view of the glance barclamp.

Search for the

enable_v1entry and set it totrue:"enable_v1": true

In new installations, this entry is set to

falseby default. When upgrading from an older version of SUSE OpenStack Cloud Crowbar it is set totrueby default.Apply your changes.

. Images are stored in an image file on the Control Node.

. Provides volume block storage to SUSE OpenStack Cloud Crowbar. Use it to store images.

. Provides an object storage service to SUSE OpenStack Cloud Crowbar.

. SUSE Enterprise Storage (based on Ceph) provides block storage service to SUSE OpenStack Cloud Crowbar.

. If you are using VMware as a hypervisor, it is recommended to use for storing images. This will make starting VMware instances much faster.

. If this is set to , the API will communicate the direct URl of the image's back-end location to HTTP clients. Set to by default.

Depending on the storage back-end, there are additional configuration options available:

Only required if is set to .

Specify the directory to host the image file. The directory specified here can also be an NFS share. See Section 11.4.3, “Mounting NFS Shares on a Node” for more information.

Only required if is set to .

Set the name of the container to use for the images in swift.

Only required if is set to .

- RADOS User for CephX Authentication

If you are using an external Ceph cluster, specify the user you have set up for glance (see Section 11.4.4, “Using an Externally Managed Ceph Cluster” for more information).

- RADOS Pool for glance images

If you are using a SUSE OpenStack Cloud internal Ceph setup, the pool you specify here is created if it does not exist. If you are using an external Ceph cluster, specify the pool you have set up for glance (see Section 11.4.4, “Using an Externally Managed Ceph Cluster” for more information).

Only required if is set to .

Name or IP address of the vCenter server.

- /

vCenter login credentials.

A comma-separated list of datastores specified in the format: DATACENTER_NAME:DATASTORE_NAME

Specify an absolute path here.

Choose whether to encrypt public communication () or not (). If you choose , refer to SSL Support: Protocol for configuration details.

Enable and configure image caching in this section. By default, image caching is disabled. You can see this the Raw view of your nova barclamp:

image_cache_manager_interval = -1

This option sets the number of seconds to wait between runs of the image cache manager. Disabling it means that the cache manager will not automatically remove the unused images from the cache, so if you have many glance images and are running out of storage you must manually remove the unused images from the cache. We recommend leaving this option disabled as it is known to cause issues, especially with shared storage. The cache manager may remove images still in use, e.g. when network outages cause synchronization problems with compute nodes.

If you wish to enable caching, re-enable it in a custom nova configuration file, for example

/etc/nova/nova.conf.d/500-nova.conf. This sets the interval to four minutes:image_cache_manager_interval = 2400

See Chapter 14, Configuration Files for OpenStack Services for more information on custom configurations.

Learn more about glance's caching feature at http://docs.openstack.org/developer/glance/cache.html.

Shows debugging output in the log files when set to .

12.8.1 HA Setup for glance #

glance can be made highly available by deploying it on a cluster. We strongly recommended doing this for the image data as well. The recommended way is to use swift or an external Ceph cluster for the image repository. If you are using a directory on the node instead (file storage back-end), you should set up shared storage on the cluster for it.

12.9 Deploying cinder #

cinder, the successor of nova-volume, provides

volume block storage.

It adds persistent storage to an instance that persists until deleted,

contrary to ephemeral volumes that only persist while the instance is

running.

cinder can provide volume storage by using different back-ends such as local file, one or more local disks, Ceph (RADOS), VMware, or network storage solutions from EMC, EqualLogic, Fujitsu, NetApp or Pure Storage. Since SUSE OpenStack Cloud Crowbar 5, cinder supports using several back-ends simultaneously. It is also possible to deploy the same network storage back-end multiple times and therefore use different installations at the same time.

The attributes that can be set to configure cinder depend on the back-end. The only general option is (see SSL Support: Protocol for configuration details).

When first opening the cinder barclamp, the default proposal——is already available for configuration. To optionally add a back-end, go to the section and choose a from the drop-down box. Optionally, specify the . This is recommended when deploying the same volume type more than once. Existing back-end configurations (including the default one) can be deleted by clicking the trashcan icon if no longer needed. Note that you must configure at least one back-end.

(local disks) #

Choose whether to use the disk or disks. “Available disks” are all disks currently not used by the system. Note that one disk (usually

/dev/sda) of every block storage node is already used for the operating system and is not available for cinder.Specify a name for the cinder volume.

(EMC² Storage) #

- /

IP address and Port of the ECOM server.

- /

Login credentials for the ECOM server.

VMAX port groups that expose volumes managed by this back-end.

Unique VMAX array serial number.

Unique pool name within a given array.

Name of the FAST Policy to be used. When specified, volumes managed by this back-end are managed as under FAST control.

For more information on the EMC driver refer to the OpenStack documentation at http://docs.openstack.org/liberty/config-reference/content/emc-vmax-driver.html.

EqualLogic drivers are included as a technology preview and are not supported.

Select the protocol used to connect, either or .

- /

IP address and port of the ETERNUS SMI-S Server.

- /

Login credentials for the ETERNUS SMI-S Server.

Storage pool (RAID group) in which the volumes are created. Make sure that the RAID group on the server has already been created. If a RAID group that does not exist is specified, the RAID group is built from unused disk drives. The RAID level is automatically determined by the ETERNUS DX Disk storage system.

For information on configuring the Hitachi HUSVM back-end, refer to http://docs.openstack.org/ocata/config-reference/block-storage/drivers/hitachi-storage-volume-driver.html.

- /

SUSE OpenStack Cloud can use “Data ONTAP” in , or in . In vFiler will be configured, in vServer will be configured. The can be set to either or . Choose the driver and the protocol your NetApp is licensed for.

The management IP address for the 7-Mode storage controller, or the cluster management IP address for the clustered Data ONTAP.

Transport protocol for communicating with the storage controller or clustered Data ONTAP. Supported protocols are HTTP and HTTPS. Choose the protocol your NetApp is licensed for.

The port to use for communication. Port 80 is usually used for HTTP, 443 for HTTPS.

- /

Login credentials.

The vFiler unit to be used for provisioning of OpenStack volumes. This setting is only available in .

Provide a list of comma-separated volume names to be used for provisioning. This setting is only available when using iSCSI as storage protocol.

A list of available file systems on an NFS server. Enter your NFS mountpoints in the form in this format: host:mountpoint -o options. For example:

host1:/srv/nfs/share1 /mnt/nfs/share1 -o rsize=8192,wsize=8192,timeo=14,intr

IP address of the FlashArray management VIP

API token for access to the FlashArray

Enable or disable iSCSI CHAP authentication

For more information on the Pure Storage FlashArray driver refer to the OpenStack documentation at https://docs.openstack.org/ocata/config-reference/block-storage/drivers/pure-storage-driver.html.

(Ceph) #

Select , if you are using an external Ceph cluster (see Section 11.4.4, “Using an Externally Managed Ceph Cluster” for setup instructions).

Name of the pool used to store the cinder volumes.

Ceph user name.

Host name or IP address of the vCenter server.

- /

vCenter login credentials.

Provide a comma-separated list of cluster names.

Path to the directory used to store the cinder volumes.

Absolute path to the vCenter CA certificate.

Default value:

false(the CA truststore is used for verification). Set this option totruewhen using self-signed certificates to disable certificate checks. This setting is for testing purposes only and must not be used in production environments!

Absolute path to the file to be used for block storage.

Maximum size of the volume file. Make sure not to overcommit the size, since it will result in data loss.

Specify a name for the cinder volume.

Using a file for block storage is not recommended for production systems, because of performance and data security reasons.

Lets you manually pick and configure a driver. Only use this option for testing purposes, as it is not supported.

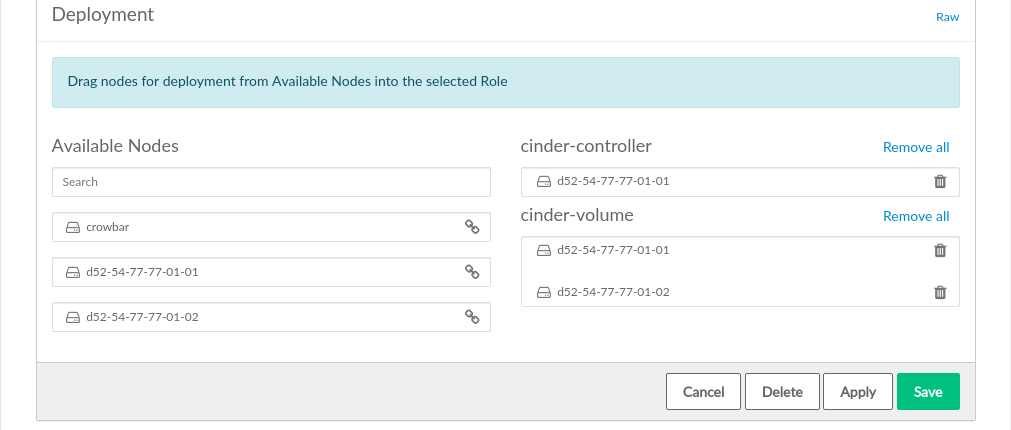

The cinder component consists of two different roles:

The cinder controller provides the scheduler and the API. Installing on a Control Node is recommended.

The virtual block storage service. It can be installed on a Control Node. However, we recommend deploying it on one or more dedicated nodes supplied with sufficient networking capacity to handle the increase in network traffic.

12.9.1 HA Setup for cinder #

Both the and the role can be deployed on a cluster.

If you need to re-deploy role from a single machine to a cluster environment, the following will happen: Volumes that are currently attached to instances will continue to work, but adding volumes to instances will not succeed.

To solve this issue, run the following script once on each node that

belongs to the cluster:

/usr/bin/cinder-migrate-volume-names-to-cluster.

The script is automatically installed by Crowbar on every machine or cluster that has a role applied to it.

In combination with Ceph or a network storage solution, deploying cinder in a cluster minimizes the potential downtime. For to be applicable to a cluster, the role needs all cinder backends to be configured for non-local storage. If you are using local volumes or raw devices in any of your volume backends, you cannot apply to a cluster.

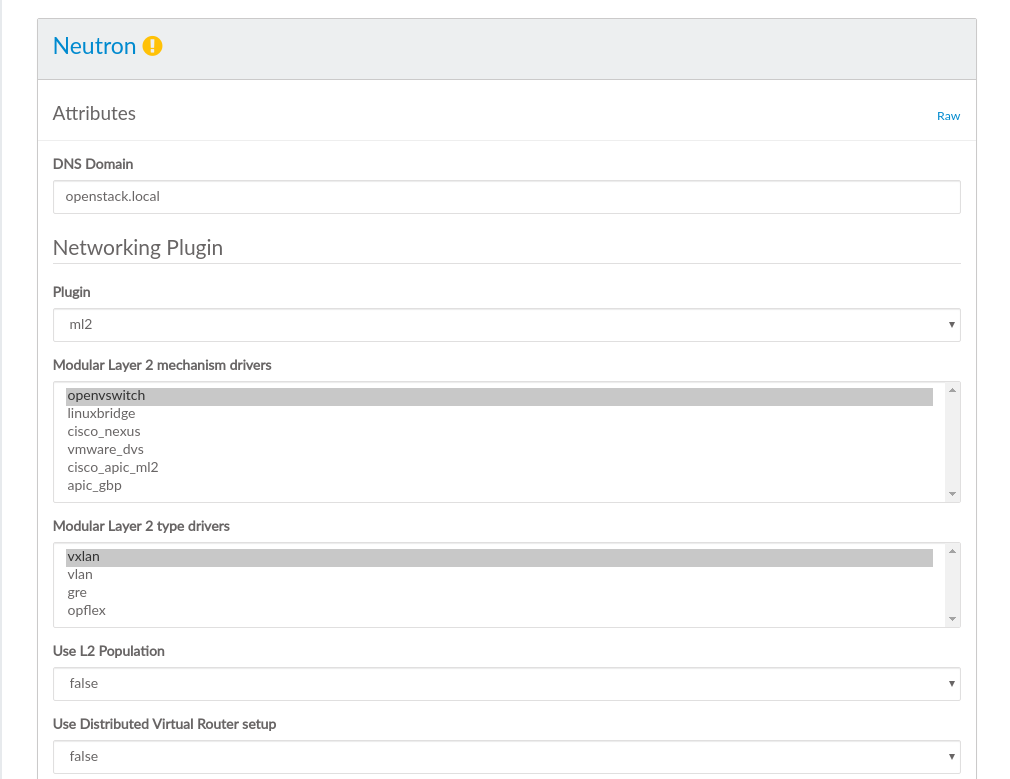

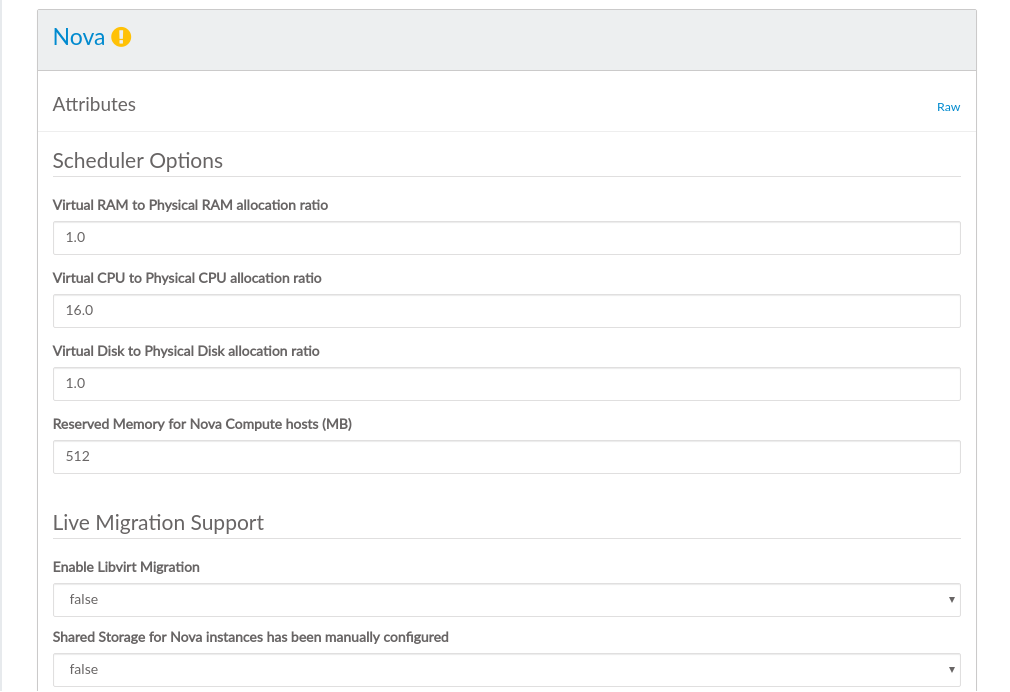

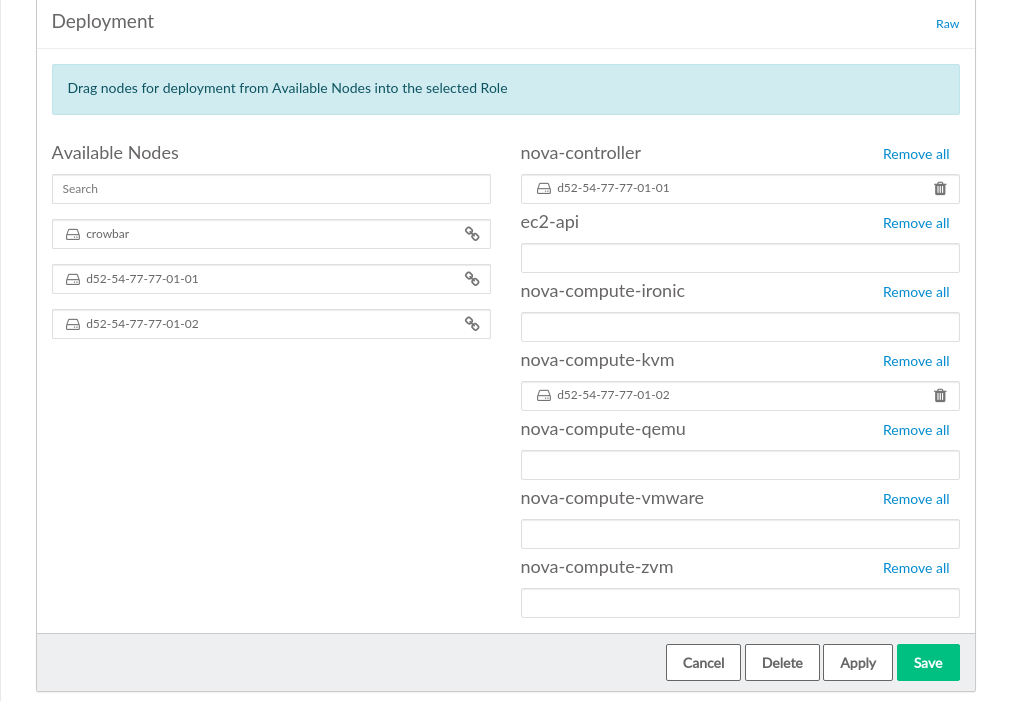

12.10 Deploying neutron #

neutron provides network connectivity between interface devices managed by other OpenStack components (most likely nova). The service works by enabling users to create their own networks and then attach interfaces to them.

neutron must be deployed on a Control Node. You first need to choose a core plug-in— or . Depending on your choice, more configuration options will become available.

The option lets you use an existing VMware NSX installation. Using this plugin is not a prerequisite for the VMware vSphere hypervisor support. However, it is needed when wanting to have security groups supported on VMware compute nodes. For all other scenarios, choose .

The only global option that can be configured is . Choose whether to encrypt public communication () or not (). If choosing , refer to SSL Support: Protocol for configuration details.

(Modular Layer 2) #

Select which mechanism driver(s) shall be enabled for the ml2 plugin. It is possible to select more than one driver by holding the Ctrl key while clicking. Choices are:

. Supports GRE, VLAN and VXLAN networks (to be configured via the setting). VXLAN is the default.

. Supports VLANs only. Requires to specify the .

. Enables neutron to dynamically adjust the VLAN settings of the ports of an existing Cisco Nexus switch when instances are launched. It also requires which will automatically be selected. With , must be added. This option also requires to specify the . See Appendix A, Using Cisco Nexus Switches with neutron for details.

. vmware_dvs driver makes it possible to use neutron for networking in a VMware-based environment. Choosing , automatically selects the required , , and drivers. In the view, it is also possible to configure two additional attributes: (clean up the DVS portgroups on the target vCenter Servers when neutron-server is restarted) and (create DVS portgroups corresponding to networks in advance, rather than when virtual machines are attached to these networks).

With the default setup, all intra-Compute Node traffic flows through the network Control Node. The same is true for all traffic from floating IPs. In large deployments the network Control Node can therefore quickly become a bottleneck. When this option is set to , network agents will be installed on all compute nodes. This will de-centralize the network traffic, since Compute Nodes will be able to directly “talk” to each other. Distributed Virtual Routers (DVR) require the driver and will not work with the driver. For details on DVR refer to https://wiki.openstack.org/wiki/Neutron/DVR.

This option is only available when having chosen the or the mechanism drivers. Options are , and . It is possible to select more than one driver by holding the Ctrl key while clicking.

When multiple type drivers are enabled, you need to select the , that will be used for newly created provider networks. This also includes the

nova_fixednetwork, that will be created when applying the neutron proposal. When manually creating provider networks with theneutroncommand, the default can be overwritten with the--provider:network_type typeswitch. You will also need to set a . It is not possible to change this default when manually creating tenant networks with theneutroncommand. The non-default type driver will only be used as a fallback.Depending on your choice of the type driver, more configuration options become available.

. Having chosen , you also need to specify the start and end of the tunnel ID range.

. The option requires you to specify the .

. Having chosen , you also need to specify the start and end of the VNI range.

neutron must not be deployed with the openvswitch with

gre plug-in.

- xCAT Host/IP Address

Host name or IP address of the xCAT Management Node.

- xCAT Username/Password

xCAT login credentials.

- rdev list for physnet1 vswitch uplink (if available)

List of rdev addresses that should be connected to this vswitch.

- xCAT IP Address on Management Network

IP address of the xCAT management interface.

- Net Mask of Management Network

Net mask of the xCAT management interface.

This plug-in requires to configure access to the VMware NSX service.

Login credentials for the VMware NSX server. The user needs to have administrator permissions on the NSX server.

Enter the IP address and the port number (IP-ADDRESS:PORT) of the controller API endpoint. If the port number is omitted, port 443 will be used. You may also enter multiple API endpoints (comma-separated), provided they all belong to the same controller cluster. When multiple API endpoints are specified, the plugin will load balance requests on the various API endpoints.

The UUIDs for the transport zone and the gateway service can be obtained from the NSX server. They will be used when networks are created.

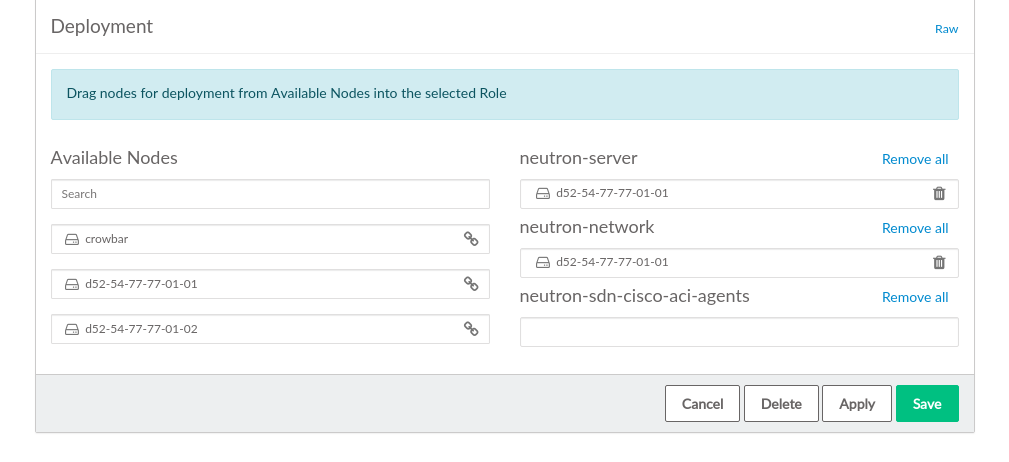

The neutron component consists of two different roles:

provides the scheduler and the API. It needs to be installed on a Control Node.

This service runs the various agents that manage the network traffic of all the cloud instances. It acts as the DHCP and DNS server and as a gateway for all cloud instances. It is recommend to deploy this role on a dedicated node supplied with sufficient network capacity.

12.10.1 Using Infoblox IPAM Plug-in #

In the neutron barclamp, you can enable support for the infoblox IPAM

plug-in and configure it. For configuration, the

infoblox section contains the subsections

grids and grid_defaults.

- grids