10 The Crowbar Web Interface #

The Crowbar Web interface runs on the Administration Server. It provides an overview of the most important deployment details in your cloud. This includes a view of the nodes and which roles are deployed on which nodes, and the barclamp proposals that can be edited and deployed. In addition, the Crowbar Web interface shows details about the networks and switches in your cloud. It also provides graphical access to tools for managing your repositories, backing up or restoring the Administration Server, exporting the Chef configuration, or generating a supportconfig TAR archive with the most important log files.

You can access the Crowbar API documentation from the following static page: http://CROWBAR_SERVER/apidoc.

The documentation describes the Crowbar API endpoints and their parameters, including response examples, possible errors (and their HTTP response codes), parameter validations, and required headers.

10.1 Logging In #

The Crowbar Web interface uses the HTTP protocol and port 80.

On any machine, start a Web browser and make sure that JavaScript and cookies are enabled.

As the URL, enter the IP address of the Administration Server, for example:

http://192.168.124.10/

Log in as user crowbar. If you have not changed the password, it is crowbar by default.
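The access details above (plain HTTP on port 80, plus the static /apidoc page) can be sketched in a few lines of Python. This is an illustrative helper only: the function names `crowbar_url` and `is_reachable` are hypothetical and not part of Crowbar, and the IP address is the example address used in this section.

```python
from urllib.error import HTTPError, URLError
from urllib.parse import urlunsplit
from urllib.request import urlopen


def crowbar_url(host, path="/"):
    """Build a plain-HTTP URL (default port 80) for the Administration Server."""
    if not path.startswith("/"):
        path = "/" + path
    return urlunsplit(("http", host, path, "", ""))


def is_reachable(url, timeout=5.0):
    """Return True if the server answers at all, even with an HTTP error status."""
    try:
        with urlopen(url, timeout=timeout):
            return True
    except HTTPError:
        # The server responded (for example with 401), so it is reachable.
        return True
    except (URLError, OSError):
        # No answer: wrong address, network problem, or service not running.
        return False


if __name__ == "__main__":
    # Example address from this section; replace it with your own server.
    print(crowbar_url("192.168.124.10"))
    print(crowbar_url("192.168.124.10", "apidoc"))
```

Calling `is_reachable(crowbar_url("192.168.124.10"))` from a machine in the admin network is one way to check connectivity before opening the browser.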

To change the default password, proceed as follows:

On the Administration Server, open the following file in a text editor: /etc/crowbarrc. It contains the following:

    [default]
    username=crowbar
    password=crowbar

Change the password entry and save the file.

Alternatively, use the YaST Crowbar module to edit the password as described in Section 7.1.

Manually run chef-client. This step is not needed if the installation has not been completed yet.
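If you prefer to script the file edit instead of using a text editor, the change to the password entry could look roughly like the following sketch. It assumes the file has exactly the INI layout shown above (a `default` section with `username` and `password`); the function name `set_crowbar_password` is hypothetical and not part of Crowbar.

```python
import configparser


def set_crowbar_password(path, new_password):
    """Rewrite the password entry in a crowbarrc-style INI file."""
    # interpolation=None keeps '%' characters in passwords literal.
    parser = configparser.ConfigParser(interpolation=None)
    with open(path) as handle:
        parser.read_file(handle)
    if not parser.has_section("default"):
        raise ValueError(f"{path} has no [default] section")
    parser.set("default", "password", new_password)
    with open(path, "w") as handle:
        # Write key=value without spaces, matching the original layout.
        parser.write(handle, space_around_delimiters=False)


if __name__ == "__main__":
    # Requires write access to the file on the Administration Server.
    set_crowbar_password("/etc/crowbarrc", "a-new-password")
```

The sketch only edits the file. As described in the last step, chef-client still needs to be run manually afterwards if the installation has already been completed.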

10.2 Overview: Main Elements #

After logging in to Crowbar, you will see a navigation bar at the top-level row. Its menus and the respective views are described in the following sections.

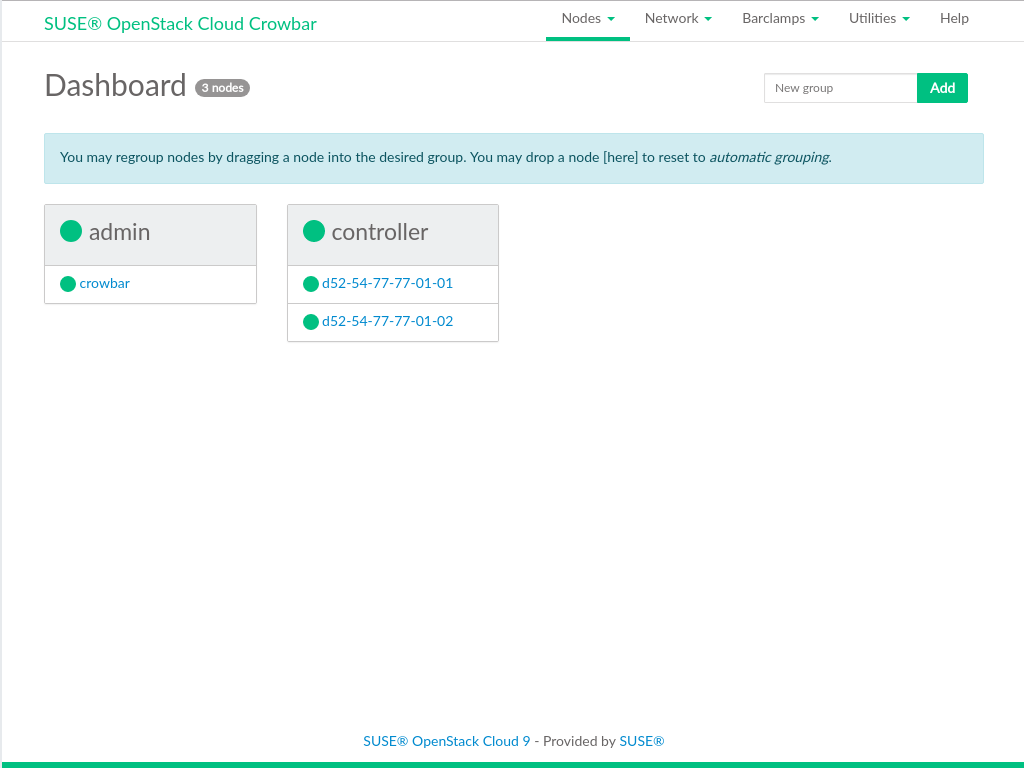

10.2.1 Nodes #

This is the default view after logging in to the Crowbar Web interface. The Dashboard shows the groups (which you can create to arrange nodes according to their purpose), which nodes belong to each group, and which state the nodes and groups are in. In addition, the total number of nodes is displayed in the top-level row.

The color of the dot in front of each node or group indicates the status. If the dot for a group shows more than one color, hover the mouse pointer over the dot to view the total number of nodes and the statuses they are in.

Gray means the node is being discovered by the Administration Server, or that there is no up-to-date information about a deployed node. If the status is shown for a node longer than expected, check if the chef-client is still running on the node.

Yellow means the node has been successfully Discovered. As long as the node has not been allocated the dot will flash. A solid (non-flashing) yellow dot indicates that the node has been allocated, but installation has not yet started.

Flashing from yellow to green means the node has been allocated and is currently being installed.

Solid green means the node is in status Ready.

Red means the node is in status Problem.
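The color scheme above amounts to a small lookup table. The following sketch restates it in Python as a reading aid; the key names (such as `"yellow-green"`) and the function names are made up for this illustration and do not correspond to anything in the Crowbar code base.

```python
from collections import Counter

# (color, flashing) -> meaning, as described in the list above.
DOT_MEANINGS = {
    ("gray", False): "being discovered, or no up-to-date information",
    ("yellow", True): "discovered, not yet allocated",
    ("yellow", False): "allocated, installation not yet started",
    ("yellow-green", True): "allocated, installation in progress",
    ("green", False): "ready",
    ("red", False): "problem",
}


def describe_dot(color, flashing=False):
    """Translate a dot's appearance into the node state it indicates."""
    try:
        return DOT_MEANINGS[(color, flashing)]
    except KeyError:
        raise ValueError(f"unknown dot: color={color!r}, flashing={flashing}")


def summarize_group(dots):
    """Count node states in a group, similar to hovering over a group dot."""
    return Counter(describe_dot(color, flashing) for color, flashing in dots)
```

For example, `summarize_group([("green", False), ("green", False), ("red", False)])` counts two nodes as ready and one as having a problem.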

During the initial state of the setup, the Dashboard only shows one group called sw_unknown into which the Administration Server is automatically sorted. Initially, all nodes (except the Administration Server) are listed with their MAC address as a name. However, we recommend creating an alias for each node. This makes it easier to identify the node in the admin network and on the Dashboard. For details on how to create groups, how to assign nodes to a group, and how to create node aliases, see Section 11.2, “Node Installation”.

This screen allows you to edit multiple nodes at once instead of editing them individually. It lists all nodes, including (in form of the MAC address), configuration, (used within the admin network), (name used outside of the SUSE OpenStack Cloud network), , , (the operating system that is going to be installed on the node), (if available), and allocation status. You can toggle the list view between or nodes.

For details on how to fill in the data for all nodes and how to start the installation process, see Section 11.2, “Node Installation”.

This menu entry only appears if your cloud contains a High Availability setup. The overview shows all clusters in your setup, including the that are members of the respective cluster and the assigned to the cluster. It also shows if a cluster contains and which roles are assigned to the remote nodes.

This overview shows which roles have been deployed on which node(s). The roles are grouped according to the service to which they belong. You cannot edit anything here. To change role deployment, you need to edit and redeploy the appropriate barclamps as described in Chapter 12, Deploying the OpenStack Services.

10.2.2 Barclamps #

This screen shows a list of all available barclamp proposals, including their , , and a short . From here, you can individual barclamp proposals as described in Section 10.3, “Deploying Barclamp Proposals”.

This screen only shows the barclamps that are included with the core Crowbar framework. They contain general recipes for setting up and configuring all nodes. From here, you can individual barclamp proposals.

This screen only shows the barclamps that are dedicated to OpenStack service deployment and configuration. From here, you can individual barclamp proposals.

If barclamps are applied to one or more nodes that are not yet available for deployment (for example, because they are rebooting or have not been fully installed yet), the proposals will be put in a queue. This screen shows the proposals that are or .

10.2.3 Utilities #

The screen allows you to export the Chef configuration and the supportconfig TAR archive. The supportconfig archive contains system information such as the current kernel version being used, the hardware, RPM database, partitions, and the most important log files for analysis of any problems. To access the export options, click . After the export has been successfully finished, the screen will show any files that are available for download.

This screen shows an overview of the mandatory, recommended, and optional repositories for all architectures of SUSE OpenStack Cloud Crowbar. On each reload of the screen the Crowbar Web interface checks the availability and status of the repositories. If a mandatory repository is not present, it is marked red on the screen. Any repositories marked green are usable and available to each node in the cloud. Usually, the available repositories are also shown as in the rightmost column. This means that the managed nodes will automatically be configured to use this repository. If you disable the check box for a repository, managed nodes will not use that repository.

You cannot edit any repositories in this screen. If you need additional, third-party repositories, or want to modify the repository metadata, edit /etc/crowbar/repos.yml. Find an example of a repository definition below:

    suse-12.2:
      x86_64:
        Custom-Repo-12.2:
          url: 'http://example.com/12-SP2:/x86_64/custom-repo/'
          ask_on_error: true # sets the ask_on_error flag in
                             # the autoyast profile for that repo
          priority: 99 # sets the repo priority for zypper

Alternatively, use the YaST Crowbar module to add or edit repositories as described in Section 7.4.
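The repository definition nests four levels: platform, architecture, repository name, and the repository attributes. The following sketch mirrors the example as a plain Python dictionary and walks that structure. The sanity checks are assumptions made for illustration (for instance, that `url` is required while `ask_on_error` and `priority` are optional); they are not Crowbar's own validation rules, and the function names are hypothetical.

```python
# The example definition from above, expressed as nested dictionaries.
REPOS = {
    "suse-12.2": {
        "x86_64": {
            "Custom-Repo-12.2": {
                "url": "http://example.com/12-SP2:/x86_64/custom-repo/",
                "ask_on_error": True,
                "priority": 99,
            }
        }
    }
}


def iter_repositories(config):
    """Yield (platform, arch, name, attributes) for every repository."""
    for platform, arches in config.items():
        for arch, repos in arches.items():
            for name, attrs in repos.items():
                yield platform, arch, name, attrs


def check_repositories(config):
    """Return a list of human-readable problems (empty if none were found)."""
    problems = []
    for platform, arch, name, attrs in iter_repositories(config):
        where = f"{platform}/{arch}/{name}"
        url = attrs.get("url")
        if not isinstance(url, str) or not url:
            problems.append(f"{where}: missing url")
        if not isinstance(attrs.get("ask_on_error", False), bool):
            problems.append(f"{where}: ask_on_error must be true or false")
        priority = attrs.get("priority", 99)
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(priority, bool) or not isinstance(priority, int):
            problems.append(f"{where}: priority must be an integer")
    return problems
```

Running `check_repositories(REPOS)` on the example returns an empty list. A definition without a `url` entry would be reported with its full platform/architecture/name path.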

This screen allows you to run swift-dispersion-report on the node or nodes to which it has been deployed. Use this tool to measure the overall health of the swift cluster. For details, see http://docs.openstack.org/liberty/config-reference/content/object-storage-dispersion.html.

This screen is for creating and downloading a backup of the Administration Server. You can also restore from a backup or upload a backup image from your local file system.

10.2.4 Help #

From this screen you can access HTML and PDF versions of the SUSE OpenStack Cloud Crowbar manuals that are installed on the Administration Server.

10.3 Deploying Barclamp Proposals #

Barclamps are a set of recipes, templates, and installation instructions. They are used to automatically install OpenStack components on the nodes. Each barclamp is configured via a so-called proposal. A proposal contains the configuration of the service(s) associated with the barclamp and a list of machines onto which to deploy the barclamp.

Most barclamps consist of two sections:

For changing the barclamp's configuration, either by editing the respective Web forms ( view) or by switching to the view, which exposes all configuration options for the barclamp. In the view, you directly edit the configuration file.

Important: Saving Your Changes

Before you switch from one view to the other, save your changes. Otherwise they will be lost.

Lets you choose onto which nodes to deploy the barclamp. On the left-hand side, you see a list of . The right-hand side shows a list of roles that belong to the barclamp.

Assign the nodes to the roles that should be deployed on that node. Some barclamps contain roles that can also be deployed to a cluster. If you have deployed the Pacemaker barclamp, the section additionally lists and in this case. The latter are clusters that contain both “normal” nodes and Pacemaker remote nodes. See Section 2.6.3, “High Availability of the Compute Node(s)” for the basic details.

Important: Clusters with Remote Nodes

Clusters (or clusters with remote nodes) cannot be assigned to roles that need to be deployed on individual nodes. If you try to do so, the Crowbar Web interface shows an error message.

If you assign a cluster with remote nodes to a role that can only be applied to “normal” (Corosync) nodes, the role will only be applied to the Corosync nodes of that cluster. The role will not be applied to the remote nodes of the same cluster.
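The assignment rules for clusters can be summarized as a small decision function. The sketch below is a simplified model, not Crowbar code: the `Role` and `Cluster` classes, their field names, and the node names are hypothetical. It also assumes that a role without restrictions is applied to both the Corosync nodes and the remote nodes of a cluster, which the text above does not state explicitly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    name: str
    corosync_nodes: tuple
    remote_nodes: tuple = ()


@dataclass(frozen=True)
class Role:
    name: str
    allows_clusters: bool = True      # False: individual nodes only
    allows_remote_nodes: bool = True  # False: Corosync nodes only


def resolve_targets(role, target):
    """Return the node names that would receive the role."""
    if isinstance(target, str):
        # A single node can always be assigned directly.
        return [target]
    if not role.allows_clusters:
        # Mirrors the error message shown by the Web interface.
        raise ValueError(f"role {role.name} must be deployed on individual nodes")
    nodes = list(target.corosync_nodes)
    if role.allows_remote_nodes:
        # Assumption: unrestricted roles also cover the remote nodes.
        nodes.extend(target.remote_nodes)
    return nodes
```

With a cluster of two Corosync nodes and one remote node, a role that has `allows_remote_nodes=False` resolves to the two Corosync nodes only, matching the behavior described above.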

10.3.1 Creating, Editing and Deploying Barclamp Proposals #

The following procedure shows the general steps for creating, editing, and deploying barclamp proposals. For the description and deployment of the individual barclamps, see Chapter 12, Deploying the OpenStack Services.

Log in to the Crowbar Web interface.

Click and select . Alternatively, filter for categories by selecting either or .

To create a new proposal or edit an existing one, click or next to the appropriate barclamp.

Change the configuration in the section:

Change the available options via the Web form.

To edit the configuration file directly, first save changes made in the Web form. Click to edit the configuration in the editor view.

After you have finished, save your changes. (They are not applied yet.)

Assign nodes to a role in the section of the barclamp. By default, one or more nodes are automatically pre-selected for available roles.

If this pre-selection does not meet your requirements, click the icon next to the role to remove the assignment.

To assign a node or cluster of your choice, select the item you want from the list of nodes or clusters on the left-hand side, then drag and drop the item onto the desired role name on the right.

Note: Do not drop a node or cluster onto the text box. It is used to filter the list of available nodes or clusters.

To save your changes without deploying them yet, click .

Deploy the proposal by clicking .

Warning: Wait Until a Proposal Has Been Deployed

If you deploy a proposal onto a node where a previous one is still active, the new proposal will overwrite the old one.

Deploying a proposal might take some time (up to several minutes). Always wait until you see the message “Successfully applied the proposal” before proceeding to the next proposal.

A proposal that has not been deployed yet can be deleted in the view by clicking . To delete a proposal that has already been deployed, see Section 10.3.3, “Deleting a Proposal That Already Has Been Deployed”.

10.3.2 Barclamp Deployment Failure #

A deployment failure of a barclamp may leave your node in an inconsistent state. If deployment of a barclamp fails:

Fix the cause of the failure.

Re-deploy the barclamp.

For help, see the respective troubleshooting section at Q & A 2, “OpenStack Node Deployment”.

10.3.3 Deleting a Proposal That Already Has Been Deployed #

To delete a proposal that has already been deployed, you first need to deactivate it.

Log in to the Crowbar Web interface.

Click › .

Click to open the editing view.

Click and confirm your choice in the following pop-up.

Deactivating a proposal removes the chef role from the nodes, so the routine that installed and set up the services is not executed anymore.

Click to confirm your choice in the following pop-up.

This removes the barclamp configuration data from the server.

However, deactivating and deleting a barclamp that already had been deployed does not remove packages installed when the barclamp was deployed. Nor does it stop any services that were started during the barclamp deployment. On the affected node, proceed as follows to undo the deployment:

Stop the respective services:

root # systemctl stop service

Disable the respective services:

root # systemctl disable service

Uninstalling the packages should not be necessary.
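When several services need to be stopped and disabled on a node, the two steps above can be scripted. The following sketch only wraps the `systemctl stop` and `systemctl disable` commands shown above; the function names and the service name in the example are placeholders, and the script must run as root on the affected node.

```python
import subprocess


def undo_commands(services):
    """Build the systemctl calls: stop all services first, then disable them."""
    stops = [["systemctl", "stop", service] for service in services]
    disables = [["systemctl", "disable", service] for service in services]
    return stops + disables


def run_undo(services, dry_run=True):
    """Print the commands (dry run) or execute them one after another."""
    for argv in undo_commands(services):
        if dry_run:
            print(" ".join(argv))
        else:
            # check=True aborts on the first failing command.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    # 'example.service' is a placeholder, not a real Crowbar service name.
    run_undo(["example.service"], dry_run=True)
```

The dry-run default prints the commands without executing them, so the list can be reviewed before anything is stopped.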

10.3.4 Queuing/Dequeuing Proposals #

When a proposal is applied to one or more nodes that are not yet available for deployment (for example, because they are rebooting or have not yet been fully installed), the proposal will be put in a queue. After you apply the proposal, a message like the following is shown:

Successfully queued the proposal until the following become ready: d52-54-00-6c-25-44

A new button will also become available. Use it to cancel the deployment of the proposal by removing it from the queue.
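The queuing behavior can be pictured with a small in-memory model. This is a conceptual sketch only and does not reflect how Crowbar implements its queue: the class `ProposalQueue` and its methods are hypothetical, and joining several node names with commas in the message is an assumption. The two message texts are taken from this chapter.

```python
class ProposalQueue:
    """Toy model: proposals wait until all of their nodes are ready."""

    def __init__(self, ready_nodes=()):
        self.ready = set(ready_nodes)
        self.queued = {}  # proposal name -> list of target nodes

    def apply(self, proposal, nodes):
        """Apply immediately if all nodes are ready, otherwise queue."""
        waiting = [node for node in nodes if node not in self.ready]
        if waiting:
            self.queued[proposal] = list(nodes)
            return (
                "Successfully queued the proposal until the following "
                "become ready: " + ", ".join(waiting)
            )
        return "Successfully applied the proposal"

    def dequeue(self, proposal):
        """Cancel a queued deployment; return True if it was queued."""
        return self.queued.pop(proposal, None) is not None

    def node_ready(self, node):
        """Mark a node ready and return the proposals that can now proceed."""
        self.ready.add(node)
        runnable = [
            name
            for name, nodes in self.queued.items()
            if all(n in self.ready for n in nodes)
        ]
        for name in runnable:
            del self.queued[name]
        return runnable
```

In this model, a queued proposal either proceeds once `node_ready` has been called for all of its nodes, or it is removed beforehand with `dequeue`, which corresponds to canceling the deployment from the queue.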