2 Considerations and Requirements

Before deploying SUSE OpenStack Cloud Crowbar, there are some requirements to meet and architectural decisions to make. Read this chapter thoroughly first, as some decisions need to be made before deploying SUSE OpenStack Cloud, and they cannot be changed afterward.

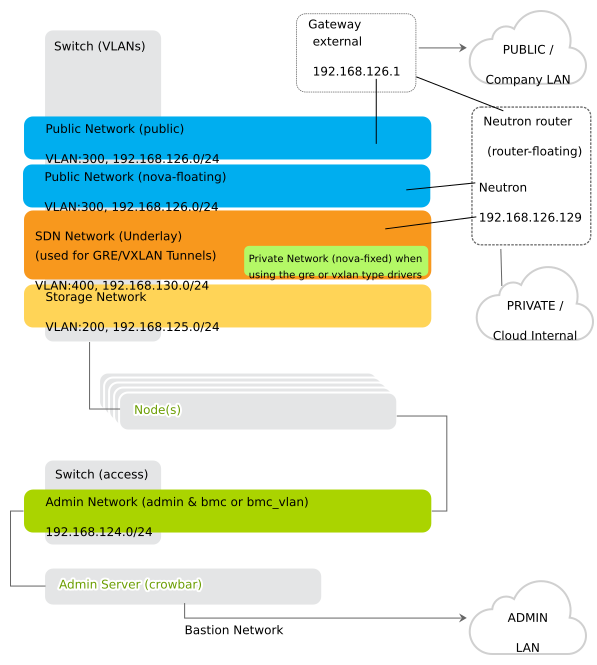

2.1 Network

SUSE OpenStack Cloud Crowbar requires a complex network setup consisting of several networks that are configured during installation. These networks are for exclusive cloud usage. You need a router to access them from an existing network.

The network configuration on the nodes in the SUSE OpenStack Cloud network is entirely controlled by Crowbar. Any network configuration not created with Crowbar (for example, with YaST) will automatically be overwritten. After the cloud is deployed, network settings cannot be changed.

The following networks are pre-defined when setting up SUSE OpenStack Cloud Crowbar. The IP addresses listed are the default addresses and can be changed using the YaST Crowbar module (see Chapter 7, Crowbar Setup). It is also possible to customize the network setup by manually editing the network barclamp template. See Section 7.5, “Custom Network Configuration” for detailed instructions.

- Admin Network (192.168.124/24)

A private network to access the Administration Server and all nodes for administration purposes. The default setup also allows access to the BMC (Baseboard Management Controller) data via IPMI (Intelligent Platform Management Interface) from this network. If required, BMC access can be swapped to a separate network.

You have the following options for controlling access to this network:

Do not allow access from the outside and keep the admin network completely separated.

Allow access to the Administration Server from a single network (for example, your company's administration network) via the “bastion network” option configured on an additional network card with a fixed IP address.

Allow access from one or more networks via a gateway.

- Storage Network (192.168.125/24)

Private SUSE OpenStack Cloud internal virtual network. This network is used by Ceph and swift only. It should not be accessed by users.

- Private Network (nova-fixed, 192.168.123/24)

Private SUSE OpenStack Cloud internal virtual network. This network is used for inter-instance communication and provides access to the outside world for the instances. The required gateway is automatically provided by SUSE OpenStack Cloud.

- Public Network (nova-floating, public, 192.168.126/24)

The only public network provided by SUSE OpenStack Cloud. You can access the nova Dashboard and all instances (provided they have been equipped with floating IP addresses) on this network. This network can only be accessed via a gateway, which must be provided externally. All SUSE OpenStack Cloud users and administrators must have access to the public network.

- Software Defined Network (os_sdn, 192.168.130/24)

Private SUSE OpenStack Cloud internal virtual network. This network is used when neutron is configured to use openvswitch with GRE tunneling for the virtual networks. It should not be accessible to users.

- The monasca Monitoring Network

The monasca monitoring node needs to have an interface on both the admin network and the public network. monasca's back-end services listen on the admin network, while the API services (openstack-monasca-api, openstack-monasca-log-api) listen on all interfaces. openstack-monasca-agent and openstack-monasca-log-agent send their logs and metrics to the monasca-api/monasca-log-api services at the monitoring node's public network IP address.

If you plan to deploy the Octavia barclamp, you also need to configure the Octavia Management Network. Octavia uses a set of instances, called amphorae, that run on one or more Compute Nodes, and the Octavia services need to communicate with the amphorae over a dedicated management network. This network is not pre-defined when setting up SUSE OpenStack Cloud Crowbar. It needs to be explicitly configured as covered in Section 12.20.1.1, “Management network”.

For security reasons, protect the following networks from external access:

- Admin Network
- Storage Network
- Software Defined Network (os_sdn)

In particular, traffic from the cloud instances must not be able to pass through these networks.

As of SUSE OpenStack Cloud Crowbar 8, using a VLAN for the admin network is only supported on a native/untagged VLAN. If you need VLAN support for the admin network, it must be handled at the switch level.

When changing the network configuration with YaST or by editing /etc/crowbar/network.json, you can define VLAN settings for each network. For the networks nova-fixed and nova-floating, however, special rules apply:

- nova-fixed: The VLAN setting will be ignored. However, VLANs will automatically be used if deploying neutron with VLAN support (using the plugins linuxbridge, openvswitch plus VLAN, or cisco plus VLAN). In this case, you need to specify a correct VLAN ID for this network.

- nova-floating: When using a VLAN for nova-floating (which is the default), the VLAN settings (whether a VLAN is used and the VLAN ID) for nova-floating and nova-fixed must be the same. When not using a VLAN for nova-floating, it must have a different physical network interface than the nova_fixed network.
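The two nova-floating rules can be expressed as a small validation check. The sketch below is a hypothetical illustration, assuming each network's VLAN configuration is reduced to a use-VLAN flag, a VLAN ID, and the physical interface it uses; the `NetSettings` class and its fields are invented for this example and are not the keys of /etc/crowbar/network.json.

```python
from dataclasses import dataclass


@dataclass
class NetSettings:
    """Hypothetical VLAN view of one network (not the network.json schema)."""
    use_vlan: bool
    vlan_id: int
    interface: str  # physical network interface carrying this network


def check_floating_vs_fixed(floating: NetSettings, fixed: NetSettings) -> list:
    """Return human-readable violations of the nova-floating rules (empty if OK)."""
    problems = []
    if floating.use_vlan:
        # VLAN on nova-floating: its VLAN settings must match nova-fixed.
        if (floating.use_vlan, floating.vlan_id) != (fixed.use_vlan, fixed.vlan_id):
            problems.append("VLAN settings of nova-floating and nova-fixed differ")
    elif floating.interface == fixed.interface:
        # No VLAN on nova-floating: it needs its own physical interface.
        problems.append("nova-floating shares a physical interface with nova-fixed")
    return problems


# Hypothetical example: both networks tagged with VLAN ID 500 on eth0.
print(check_floating_vs_fixed(NetSettings(True, 500, "eth0"),
                              NetSettings(True, 500, "eth0")))  # []
```

The VLAN IDs and interface names are placeholders; the point is only that the check branches on whether nova-floating uses a VLAN, mirroring the two cases in the text.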

As of SUSE OpenStack Cloud Crowbar 8, IPv6 is not supported. This applies to the cloud internal networks and to the instances. You must use static IPv4 addresses for all network interfaces on the Administration Server and disable IPv6 there before deploying Crowbar.

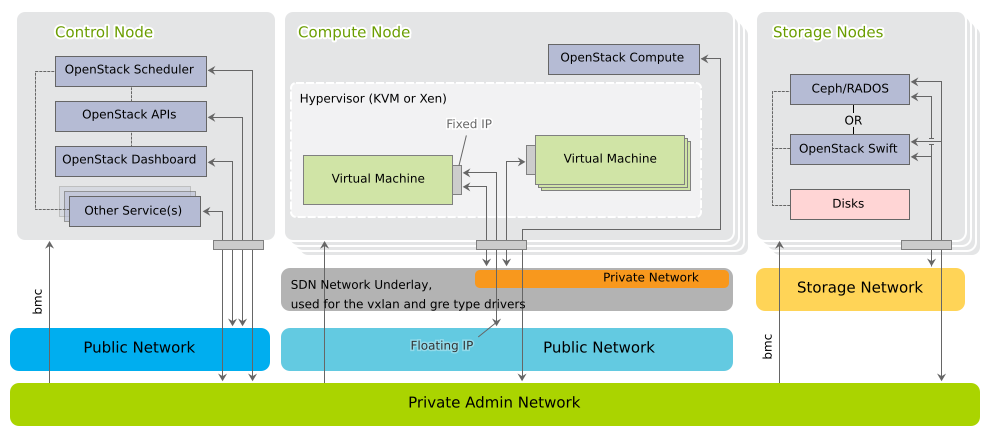

The following diagram shows the pre-defined SUSE OpenStack Cloud network in more detail. It demonstrates how the OpenStack nodes and services use the different networks.

2.1.1 Network Address Allocation

The default networks set up in SUSE OpenStack Cloud are class C networks with 256 IP addresses each. This limits the maximum number of instances that can be started simultaneously. Addresses within the networks are allocated as outlined in the following table. Use the YaST Crowbar module to make customizations (see Chapter 7, Crowbar Setup). The last address in the IP address range of each network is always reserved as the broadcast address. This assignment cannot be changed.
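As a quick sanity check of this address arithmetic, the following Python sketch uses the standard `ipaddress` module to summarize the default networks listed in Section 2.1. It assumes that the shorthand 192.168.124/24 means 192.168.124.0/24, and it counts usable host addresses the conventional IPv4 way, excluding the network and broadcast addresses.

```python
import ipaddress

# Default networks from Section 2.1 ("192.168.124/24" is shorthand for 192.168.124.0/24).
DEFAULT_NETWORKS = {
    "admin": "192.168.124.0/24",
    "storage": "192.168.125.0/24",
    "nova_fixed": "192.168.123.0/24",
    "nova_floating/public": "192.168.126.0/24",
    "os_sdn": "192.168.130.0/24",
}


def summarize(cidr: str) -> dict:
    """Return total, network, broadcast, and usable host counts for one network."""
    net = ipaddress.ip_network(cidr)
    return {
        "total": net.num_addresses,               # 256 for a /24
        "network": str(net.network_address),      # first address of the range
        "broadcast": str(net.broadcast_address),  # last address, always reserved
        "usable": net.num_addresses - 2,          # minus network and broadcast
    }


for name, cidr in DEFAULT_NETWORKS.items():
    info = summarize(cidr)
    print(f"{name:22} {cidr:18} broadcast={info['broadcast']} usable={info['usable']}")
```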

For an overview of the minimum number of IP addresses needed for each of the ranges in the network settings, see Table 2.1, “Minimum Number of IP Addresses for Network Ranges”.

| Network | Required Number of IP addresses |
|---|---|
| Admin Network | |
| Public Network | |
| BMC Network | |
| Software Defined Network | |

The default network proposal as described below limits the maximum number of Compute Nodes to 80, the maximum number of floating IP addresses to 61 and the maximum number of addresses in the nova_fixed network to 204.

To overcome these limitations you need to reconfigure the network setup by using appropriate address ranges. Do this by either using the YaST Crowbar module as described in Chapter 7, Crowbar Setup, or by manually editing the network template file as described in Section 7.5, “Custom Network Configuration”.

192.168.124.0/24 (Admin/BMC) Network Address Allocation

| Function | Address | Remark |
|---|---|---|
| router | | Provided externally. |
| admin | | Fixed addresses reserved for the Administration Server. |
| DHCP | | Address range reserved for node allocation/installation. Determines the maximum number of parallel allocations/installations. |
| host | | Fixed addresses for the OpenStack nodes. Determines the maximum number of OpenStack nodes that can be deployed. |
| bmc vlan host | | Fixed address for the BMC VLAN. Used to generate a VLAN-tagged interface on the Administration Server that can access the BMC network. The BMC VLAN must be in the same ranges as BMC, and BMC must have VLAN enabled. |
| bmc host | | Fixed addresses for the OpenStack nodes. Determines the maximum number of OpenStack nodes that can be deployed. |
| switch | | This range is not used in current releases and might be removed in the future. |

192.168.125/24 (Storage) Network Address Allocation

| Function | Address | Remark |
|---|---|---|
| host | | Each Storage Node will get an address from this range. |

192.168.123/24 (Private Network/nova-fixed) Network Address Allocation

| Function | Address | Remark |
|---|---|---|
| DHCP | | Address range for instances, routers and DHCP/DNS agents. |

192.168.126/24 (Public Network nova-floating, public) Network Address Allocation

| Function | Address | Remark |
|---|---|---|
| router | | Provided externally. |
| public host | | Public address range for external SUSE OpenStack Cloud components such as the OpenStack Dashboard or the API. |
| floating host | | Floating IP address range. Floating IP addresses can be manually assigned to a running instance to allow access to the guest from the outside. Determines the maximum number of instances that can concurrently be accessed from the outside. The nova_floating network is set up with a netmask of 255.255.255.192, allowing a maximum number of 61 IP addresses. This range is pre-allocated by default and managed by neutron. |
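The limit of 61 floating IP addresses follows from the 255.255.255.192 (/26) netmask. The sketch below places the range at 192.168.126.128/26 purely for illustration, and it assumes, without confirmation from this guide, that one of the 62 host addresses in the /26 is reserved for the gateway.

```python
import ipaddress

# Hypothetical placement of the floating range inside the public /24;
# only the netmask 255.255.255.192 comes from the table above.
floating = ipaddress.ip_network("192.168.126.128/255.255.255.192")

total = floating.num_addresses           # 64 addresses in a /26
hosts = len(list(floating.hosts()))      # 62: excludes network and broadcast
ASSUMED_GATEWAY_ADDRESSES = 1            # assumption: one address for the gateway
max_floating = hosts - ASSUMED_GATEWAY_ADDRESSES

print(floating.prefixlen, total, hosts, max_floating)  # 26 64 62 61
```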

192.168.130/24 (Software Defined Network) Network Address Allocation

| Function | Address | Remark |
|---|---|---|
| host | | If neutron is configured with |

Addresses not used in the ranges mentioned above can be used to add additional servers with static addresses to SUSE OpenStack Cloud. Such servers can be used to provide additional services. A SUSE Manager server inside SUSE OpenStack Cloud, for example, must be configured using one of these addresses.

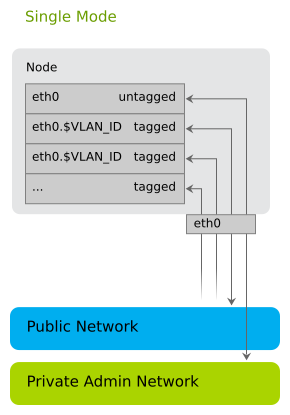

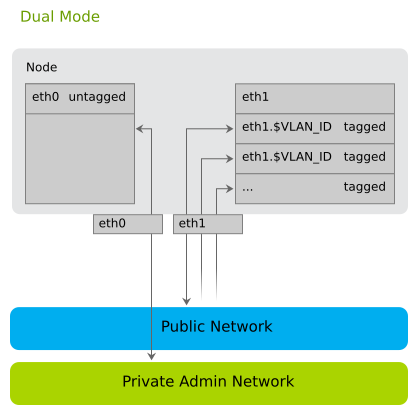

2.1.2 Network Modes

SUSE OpenStack Cloud Crowbar supports different network modes defined in Crowbar: single, dual, and team. As of SUSE OpenStack Cloud Crowbar 8, the networking mode is applied to all nodes and the Administration Server. That means that all machines need to meet the hardware requirements for the chosen mode. The network mode can be configured using the YaST Crowbar module (Chapter 7, Crowbar Setup). The network mode cannot be changed after the cloud is deployed.

Other, more flexible network mode setups can be configured by manually editing the Crowbar network configuration files. See Section 7.5, “Custom Network Configuration” for more information. SUSE or a partner can assist you in creating a custom setup within the scope of a consulting services agreement (see http://www.suse.com/consulting/ for more information on SUSE consulting).

Network device bonding is required for an HA setup of SUSE OpenStack Cloud Crowbar. If you are planning to move your cloud to an HA setup at a later point in time, make sure to use a network mode in the YaST Crowbar module that supports network device bonding. Otherwise, a migration to an HA setup is not supported.

2.1.2.1 Single Network Mode #

In single mode you use one Ethernet card for all the traffic:

2.1.2.2 Dual Network Mode #

Dual mode needs two Ethernet cards (on all nodes but Administration Server) to completely separate traffic between the Admin Network and the public network:

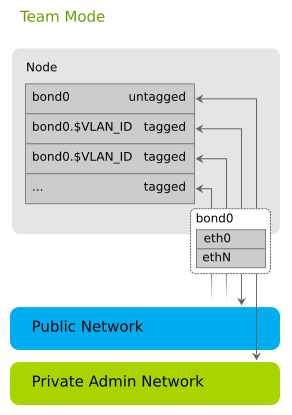

2.1.2.3 Team Network Mode #

Team mode is similar to single mode, except that you combine several Ethernet cards to a “bond” (network device bonding). Team mode needs two or more Ethernet cards.

When using team mode, you must choose a “bonding policy” that defines how to use the combined Ethernet cards. You can either set them up for fault tolerance, performance (load balancing), or a combination of both.

2.1.3 Accessing the Administration Server via a Bastion Network

Enabling access to the Administration Server from another network requires an external gateway. This option offers maximum flexibility, but requires additional hardware and may be less secure than you require. Therefore SUSE OpenStack Cloud offers a second option for accessing the Administration Server: the bastion network. You only need a dedicated Ethernet card and a static IP address from the external network to set it up.

The bastion network setup (see Section 7.3.1, “Setting Up a Bastion Network” for setup instructions) enables logging in to the Administration Server via SSH from the company network. A direct login to other nodes in the cloud is not possible. However, the Administration Server can act as a “jump host”: First log in to the Administration Server via SSH, then log in via SSH to other nodes.
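The two-hop login described above can be collapsed into one command with OpenSSH's -J (ProxyJump) option, available in OpenSSH 7.3 and later. The following sketch only builds the command line; the host names and the root user are placeholders, and it assumes the Administration Server can resolve the node's name, which it normally can as the cloud's name server.

```python
import shlex


def jump_command(admin_server: str, node: str, user: str = "root") -> list:
    """Build an ssh command that reaches `node` through the Administration Server.

    OpenSSH's -J option performs both hops (bastion login, then node login)
    in a single invocation. Host names and user are placeholders.
    """
    return ["ssh", "-J", f"{user}@{admin_server}", f"{user}@{node}"]


cmd = jump_command("admin.cloud.example.com", "d00-00-5e-00-53-00.cloud.example.com")
print(shlex.join(cmd))
```

Running the printed command (or passing `cmd` to `subprocess.run`) is equivalent to logging in to the Administration Server first and then opening a second SSH session from there.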

2.1.4 DNS and Host Names

The Administration Server acts as a name server for all nodes in the cloud. If the Administration Server has access to the outside, then you can add additional name servers that are automatically used to forward requests. If additional name servers are found on your cloud deployment, the name server on the Administration Server is automatically configured to forward requests for non-local records to these servers.

The Administration Server must have a fully qualified host name. The domain name you specify is used for the DNS zone. You must use a sub-domain such as cloud.example.com. The Administration Server must have authority over the domain it is on so that it can create records for discovered nodes. As a result, it will not forward requests for names it cannot resolve in this domain, and thus cannot resolve names in the second-level domain (for example, example.com) other than those of nodes in the cloud.
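To make the sub-domain requirement concrete, the sketch below derives the DNS zone from the Administration Server's fully qualified host name and applies a deliberately simplified check. The function names and the three-label rule are assumptions for illustration only; real domain layouts (for example, country-code suffixes such as co.uk) need a more careful check.

```python
def dns_zone(admin_fqdn: str) -> str:
    """Return the zone part of a fully qualified host name (everything after the first label)."""
    _host, sep, zone = admin_fqdn.rstrip(".").partition(".")
    if not sep or not zone:
        raise ValueError(f"{admin_fqdn!r} is not a fully qualified host name")
    return zone


def looks_like_subdomain(zone: str) -> bool:
    """Simplified heuristic (assumption): at least three labels, e.g. cloud.example.com."""
    return len(zone.split(".")) >= 3


zone = dns_zone("admin.cloud.example.com")   # hypothetical host name
print(zone, looks_like_subdomain(zone))      # cloud.example.com True
```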

This host name must not be changed after SUSE OpenStack Cloud has been deployed. The OpenStack nodes are named after their MAC address by default, but you can provide aliases, which are easier to remember when allocating the nodes. The aliases for the OpenStack nodes can be changed at any time. It is useful to have a list of MAC addresses and the intended use of the corresponding host at hand when deploying the OpenStack nodes.

2.2 Persistent Storage

When talking about “persistent storage” on SUSE OpenStack Cloud Crowbar, there are two completely different aspects to discuss: 1) the block and object storage services SUSE OpenStack Cloud offers, and 2) the hardware-related storage aspects of the different node types.

Block and object storage are persistent storage models where files or images are stored until they are explicitly deleted. SUSE OpenStack Cloud also offers ephemeral storage for images attached to instances. These ephemeral images only exist during the life of an instance and are deleted when the guest is terminated. See Section 2.2.2.3, “Compute Nodes” for more information.

2.2.1 Cloud Storage Services

SUSE OpenStack Cloud offers two different types of services for persistent storage: object and block storage. Object storage lets you upload and download files (similar to an FTP server), whereas block storage provides mountable devices (similar to a hard disk partition). In addition, SUSE OpenStack Cloud provides a repository to store the virtual disk images used to start instances.

- Object Storage with swift

The OpenStack object storage service is called swift. The storage component of swift (swift-storage) must be deployed on dedicated nodes where no other cloud services run. Deploy at least two swift nodes to provide redundant storage. SUSE OpenStack Cloud Crowbar is configured to always use all unused disks on a node for storage.

swift can optionally be used by glance, the service that manages the images used to boot the instances. Offering object storage with swift is optional.

- Block Storage

Block storage on SUSE OpenStack Cloud is provided by cinder. cinder can use a variety of storage back-ends, including network storage solutions like NetApp or EMC. It is also possible to use local disks for block storage. A list of drivers available for cinder and the features supported for each driver is available from the CinderSupportMatrix at https://wiki.openstack.org/wiki/CinderSupportMatrix. SUSE OpenStack Cloud Crowbar 9 ships with OpenStack Rocky.

Alternatively, cinder can use Ceph RBD as a back-end. Ceph offers data security and speed by storing the content on a dedicated Ceph cluster.

- The glance Image Repository

glance provides a catalog and repository for virtual disk images used to start the instances. glance is installed on a Control Node. It uses swift, Ceph, or a directory on the Control Node to store the images. The image directory can either be a local directory or an NFS share.

2.2.2 Storage Hardware Requirements

Each node in SUSE OpenStack Cloud needs sufficient disk space to store both the operating system and additional data. Requirements and recommendations for the various node types are listed below.

The operating system will always be installed on the first hard disk. This is the disk that is listed first in the BIOS, the one from which the machine will boot. Make sure that the hard disk the operating system is installed on will be recognized as the first disk.

2.2.2.1 Administration Server

If you store the update repositories directly on the Administration Server (see Section 2.5.2, “Product and Update Repositories”), we recommend mounting /srv on a separate partition or volume with a minimum of 30 GB of space.

Log files from all nodes in SUSE OpenStack Cloud are stored on the Administration Server under /var/log.

The message service RabbitMQ requires 1 GB of free space in /var (see Section 5.4.1, “On the Administration Server” for a complete list).

2.2.2.2 Control Nodes

Depending on how the services are set up, glance and cinder may require additional disk space on the Control Node on which they are running. glance may be configured to use a local directory, whereas cinder may use a local image file for storage. For performance and scalability reasons this is only recommended for test setups. Make sure there is sufficient free disk space available if you use a local file for storage.

cinder may be configured to use local disks for storage (configuration option raw). If you choose this setup, we recommend deploying the role to one or more dedicated Control Nodes. Those should be equipped with several disks providing sufficient storage space. It may also be necessary to equip this node with two or more bonded network cards, since it will generate heavy network traffic. Bonded network cards require a special setup for this node. For details, refer to Section 7.5, “Custom Network Configuration”.

2.2.2.3 Compute Nodes

Unless an instance is started via “Boot from Volume”, it is started with at least one disk, which is a copy of the image from which it has been started. Depending on the flavor you start, the instance may also have a second, so-called “ephemeral” disk. The size of the root disk depends on the image itself. Ephemeral disks are always created as sparse image files that grow up to a defined size as they are “filled”. By default, ephemeral disks have a size of 10 GB.

Both disks, the root image and the ephemeral disk, are directly bound to the instance and are deleted when the instance is terminated. These disks are bound to the Compute Node on which the instance has been started. The disks are created under /var/lib/nova on the Compute Node. Your Compute Nodes should be equipped with enough disk space to store the root images and ephemeral disks.

Do not confuse ephemeral disks with persistent block storage. In addition to an ephemeral disk, which is automatically provided with most instance flavors, you can optionally add a persistent storage device provided by cinder. Ephemeral disks are deleted when the instance terminates, while persistent storage devices can be reused in another instance.

The maximum disk space required on a compute node depends on the available flavors. A flavor specifies the number of CPUs, RAM, and disk size of an instance. Several flavors ranging from (1 CPU, 512 MB RAM, no ephemeral disk) to (8 CPUs, 8 GB RAM, 10 GB ephemeral disk) are available by default. Adding custom flavors and editing or deleting existing flavors are also supported.

To calculate the minimum disk space needed on a compute node, you need to determine the highest disk-space-to-RAM ratio from your flavors. For example:

| Flavor | RAM | Ephemeral disk | Disk-space-to-RAM ratio |
|---|---|---|---|
| small | 2 GB | 100 GB | 50 GB disk / 1 GB RAM |
| large | 8 GB | 200 GB | 25 GB disk / 1 GB RAM |

So, 50 GB disk /1 GB RAM is the ratio that matters. If you multiply that value by the amount of RAM in GB available on your compute node, you have the minimum disk space required by ephemeral disks. Pad that value with sufficient space for the root disks plus a buffer to leave room for flavors with a higher disk-space-to-RAM ratio in the future.
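The calculation above can be sketched in Python as follows. The two flavors come from the example; the node RAM (64 GB), root-disk reserve (300 GB), and buffer (500 GB) in the usage lines are hypothetical values, since the guide leaves their sizing to you.

```python
# Flavors from the example above: name -> (RAM in GB, ephemeral disk in GB).
FLAVORS = {"small": (2, 100), "large": (8, 200)}


def max_disk_to_ram_ratio(flavors: dict) -> float:
    """Highest GB of ephemeral disk per GB of RAM across all flavors."""
    return max(disk / ram for ram, disk in flavors.values())


def min_compute_disk_gb(node_ram_gb: int, flavors: dict,
                        root_disks_gb: float, buffer_gb: float) -> float:
    """Worst case: all RAM is consumed by the flavor with the highest ratio,
    padded with space for root disks and a buffer for future flavors."""
    ephemeral = max_disk_to_ram_ratio(flavors) * node_ram_gb
    return ephemeral + root_disks_gb + buffer_gb


print(max_disk_to_ram_ratio(FLAVORS))               # 50.0
print(min_compute_disk_gb(64, FLAVORS, 300, 500))   # 4000.0
```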

The scheduler that decides on which node an instance is started does not check for available disk space. If there is no disk space left on a compute node, this will not only cause data loss on the instances, but the compute node itself will also stop operating. Therefore, you must make sure all compute nodes are equipped with enough hard disk space.

2.2.2.4 Storage Nodes (optional)

The block storage service Ceph RBD and the object storage service swift need to be deployed onto dedicated nodes—it is not possible to mix these services. The swift component requires at least two machines (more are recommended) to store data redundantly. For information on hardware requirements for Ceph, see https://documentation.suse.com/ses/5.5/single-html/ses-deployment/#storage-bp-hwreq

Each Ceph/swift Storage Node needs at least two hard disks. The first one will be used for the operating system installation, while the others can be used for storage. We recommend equipping the storage nodes with as many disks as possible.

Using RAID on swift storage nodes is not supported. swift takes care of redundancy and replication on its own. Using RAID with swift would also result in a huge performance penalty.

2.3 SSL Encryption

Whenever non-public data travels over a network it must be encrypted. Encryption protects the integrity and confidentiality of data. Therefore you should enable SSL support when deploying SUSE OpenStack Cloud to production. (SSL is not enabled by default as it requires you to provide certificates.) The following services (and their APIs, if available) can use SSL:

- cinder
- horizon
- glance
- heat
- keystone
- manila
- neutron
- nova
- swift
- VNC
- RabbitMQ
- ironic
- Magnum

You have two options for deploying your SSL certificates. You may use a single shared certificate for all services on each node, or provide individual certificates for each service. The minimum requirement is a single certificate for the Control Node and all services installed on it.

Certificates must be signed by a trusted authority. Refer to https://documentation.suse.com/sles/15-SP1/single-html/SLES-admin/#sec-apache2-ssl for instructions on how to create and sign them.

Each SSL certificate is issued for a certain host name and, optionally, for alternative host names (via the AlternativeName option). Each publicly available node in SUSE OpenStack Cloud has two host names: an internal one and a public one. The SSL certificate needs to be issued for both the internal and the public name.

The internal name has the following scheme:

dMACADDRESS.FQDN

MACADDRESS is the MAC address of the interface used to boot the machine via PXE. All letters are turned lowercase and all colons are replaced with dashes, for example 00-00-5e-00-53-00. FQDN is the fully qualified domain name. An example name looks like this:

d00-00-5e-00-53-00.example.com

Unless you have entered a custom public name for a client (see Section 11.2, “Node Installation” for details), the public name is the same as the internal name prefixed by public-:

public-d00-00-5e-00-53-00.example.com
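The naming scheme can be reproduced with a few lines of Python, which is handy when preparing certificate requests that must cover both names. This is a sketch based only on the rules stated above (lowercase MAC, colons replaced by dashes, a d prefix, and a public- prefix for the default public name); it does not query Crowbar, and a node with a custom public name will differ.

```python
def internal_name(mac: str, domain: str) -> str:
    """dMACADDRESS.FQDN: lowercase MAC, colons replaced by dashes, prefixed by 'd'."""
    return f"d{mac.lower().replace(':', '-')}.{domain}"


def public_name(mac: str, domain: str) -> str:
    """Default public name, used when no custom public name has been set."""
    return f"public-{internal_name(mac, domain)}"


# Both names should be covered by the node's SSL certificate.
mac = "00:00:5E:00:53:00"   # documentation-range example MAC
for name in (internal_name(mac, "example.com"), public_name(mac, "example.com")):
    print(name)
```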

To look up the node names open the Crowbar Web interface and click the name of a node in the . The names are listed as and .

2.4 Hardware Requirements

Precise hardware requirements can only be listed for the Administration Server and the OpenStack Control Node. The requirements of the OpenStack Compute and Storage Nodes depend on the number of concurrent instances and their virtual hardware equipment.

A minimum of three machines are required for a SUSE OpenStack Cloud: one Administration Server, one Control Node, and one Compute Node. You also need a gateway providing access to the public network. Deploying storage requires additional nodes: at least two nodes for swift and a minimum of four nodes for Ceph.

Deploying SUSE OpenStack Cloud functions to virtual machines is only supported for the Administration Server—all other nodes need to be physical hardware. Although the Control Node can be virtualized in test environments, this is not supported for production systems.

SUSE OpenStack Cloud currently only runs on x86_64 hardware.

2.4.1 Administration Server

Architecture: x86_64.

RAM: at least 4 GB, 8 GB recommended. The demand for memory depends on the total number of nodes in SUSE OpenStack Cloud—the higher the number of nodes, the more RAM is needed. A deployment with 50 nodes requires a minimum of 24 GB RAM for each Control Node.

Hard disk: at least 50 GB. We recommend putting /srv on a separate partition with at least 30 GB of additional space. Alternatively, you can mount the update repositories from another server (see Section 2.5.2, “Product and Update Repositories” for details).

Number of network cards: 1 for single and dual mode, 2 or more for team mode. Additional networks such as the bastion network and/or a separate BMC network each need an additional network card. See Section 2.1, “Network” for details.

Can be deployed on physical hardware or a virtual machine.

2.4.2 Control Node

Architecture: x86_64.

RAM: at least 8 GB, 12 GB when deploying a single Control Node, and 32 GB recommended.

Number of network cards: 1 for single mode, 2 for dual mode, 2 or more for team mode. See Section 2.1, “Network” for details.

Hard disk: See Section 2.2.2.2, “Control Nodes”.

2.4.3 Compute Node

The Compute Nodes need to be equipped with a sufficient amount of RAM and CPUs, matching the numbers required by the maximum number of instances running concurrently. An instance started in SUSE OpenStack Cloud cannot share resources from several physical nodes. It uses the resources of the node on which it was started. So if you offer a flavor (see Flavor for a definition) with 8 CPUs and 12 GB RAM, at least one of your nodes should be able to provide these resources. Add 1 GB RAM for every two nodes (including Control Nodes and Storage Nodes) deployed in your cloud.
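A rough per-node RAM estimate combines the instances you expect to run concurrently with the per-node overhead mentioned above. In this sketch the instance sizes and node count are hypothetical, and rounding up for an odd number of nodes is an assumption the guide does not specify.

```python
import math


def compute_node_ram_gb(instance_ram_gb: list, total_cloud_nodes: int) -> float:
    """RAM for concurrently running instances plus 1 GB per two nodes in the cloud."""
    instances = sum(instance_ram_gb)
    # Assumption: round up when the cloud has an odd number of nodes.
    overhead = math.ceil(total_cloud_nodes / 2)
    return instances + overhead


# Hypothetical: four 8 GB instances on this node, 9 nodes in the whole cloud.
print(compute_node_ram_gb([8, 8, 8, 8], 9))  # 37
```

Remember that each individual flavor must still fit on a single node; the sum above does not check that.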

See Section 2.2.2.3, “Compute Nodes” for storage requirements.

2.4.4 Storage Node

Usually a single CPU and a minimum of 4 GB RAM are sufficient for the Storage Nodes. Memory requirements increase depending on the total number of nodes in SUSE OpenStack Cloud—the higher the number of nodes, the more RAM you need. A deployment with 50 nodes requires a minimum of 20 GB for each Storage Node. If you use Ceph as storage, the storage nodes should be equipped with an additional 2 GB RAM per OSD (Ceph object storage daemon).
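For Ceph, the per-OSD rule adds to whatever base amount the node needs. The sketch below takes the base RAM as an input (for example, 20 GB in a 50-node deployment); the OSD count in the usage example is hypothetical.

```python
def ceph_storage_node_ram_gb(base_ram_gb: float, osd_count: int) -> float:
    """Base Storage Node RAM plus an additional 2 GB per Ceph OSD."""
    if base_ram_gb < 4:
        raise ValueError("Storage Nodes need a minimum of 4 GB RAM")
    if osd_count < 0:
        raise ValueError("osd_count cannot be negative")
    return base_ram_gb + 2 * osd_count


# 50-node deployment (20 GB base) with a hypothetical 10 OSDs on this node.
print(ceph_storage_node_ram_gb(20, 10))  # 40
```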

For storage requirements, see Section 2.2.2.4, “Storage Nodes (optional)”.

2.4.5 monasca Node

The monasca Node is a dedicated physical machine that runs the monasca-server role. This node is used for SUSE OpenStack Cloud Crowbar Monitoring. Hardware requirements for the monasca Node are as follows:

Architecture: x86_64

RAM: At least 32 GB, 64 GB or more is recommended

CPU: At least 8 cores, 16 cores or more is recommended

Hard Disk: SSD is strongly recommended

The following formula can be used to calculate the required disk space:

200 GB + ["number of nodes" * "retention period" * ("space for log data/day" + "space for metrics data/day")]

The recommended values for the formula are as follows:

Retention period = 60 days for InfluxDB and Elasticsearch

Space for daily log data = 2 GB

Space for daily metrics data = 50 MB

The formula is based on the following log data assumptions:

Approximately 50 log files per node

Approximately 1 log entry per file per second

200 bytes in size

The formula is based on the following metrics data assumptions:

400 metrics per node

Time interval of 30 seconds

20 bytes in size

The formula provides only a rough estimation of the required disk space. Several factors can affect disk space requirements. These include the exact combination of services that run on your OpenStack nodes, the actual cloud usage pattern, and whether any or all services have debug logging enabled.
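The formula translates directly into code. The sketch below uses the recommended values as defaults (treating 50 MB as 0.05 GB in decimal units) and also recomputes the raw daily volumes implied by the stated assumptions, reading them as 200 bytes per log entry and 20 bytes per metric sample. That gives roughly 0.86 GB of log data and 23 MB of metrics per node per day, below the recommended 2 GB and 50 MB; the recommended values presumably leave headroom for storage overhead, but that interpretation is an assumption.

```python
SECONDS_PER_DAY = 86_400


def monasca_disk_gb(nodes: int, retention_days: int = 60,
                    log_gb_per_day: float = 2.0,
                    metrics_gb_per_day: float = 0.05) -> float:
    """200 GB + nodes * retention * (daily log data + daily metrics data), in GB."""
    return 200 + nodes * retention_days * (log_gb_per_day + metrics_gb_per_day)


def raw_daily_bytes_per_node(log_files: int = 50, entries_per_sec: int = 1,
                             entry_bytes: int = 200, metrics: int = 400,
                             interval_s: int = 30, metric_bytes: int = 20) -> tuple:
    """Daily (log, metrics) bytes per node implied by the stated assumptions."""
    log = log_files * entries_per_sec * entry_bytes * SECONDS_PER_DAY
    samples_per_metric = SECONDS_PER_DAY // interval_s   # 2880 samples per day
    metric = metrics * samples_per_metric * metric_bytes
    return log, metric


print(round(monasca_disk_gb(10)))   # 1430
print(raw_daily_bytes_per_node())   # (864000000, 23040000)
```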

2.5 Software Requirements

All nodes and the Administration Server in SUSE OpenStack Cloud run on SUSE Linux Enterprise Server 12 SP4. Subscriptions for the following components are available as one- or three-year subscriptions including priority support:

SUSE OpenStack Cloud Crowbar Control Node + SUSE OpenStack Cloud Crowbar Administration Server (including entitlements for High Availability and SUSE Linux Enterprise Server 12 SP4)

Additional SUSE OpenStack Cloud Crowbar Control Node (including entitlements for High Availability and SUSE Linux Enterprise Server 12 SP4)

SUSE OpenStack Cloud Crowbar Compute Node (excluding entitlements for High Availability and SUSE Linux Enterprise Server 12 SP4)

SUSE OpenStack Cloud Crowbar swift node (excluding entitlements for High Availability and SUSE Linux Enterprise Server 12 SP4)

SUSE Linux Enterprise Server 12 SP4, HA entitlements for Compute Nodes and swift Storage Nodes, and entitlements for guest operating systems need to be purchased separately. Refer to http://www.suse.com/products/suse-openstack-cloud/how-to-buy/ for more information on licensing and pricing.

Running an external Ceph cluster (optional) with SUSE OpenStack Cloud requires an additional SUSE Enterprise Storage subscription. Refer to https://www.suse.com/products/suse-enterprise-storage/ and https://www.suse.com/products/suse-openstack-cloud/frequently-asked-questions for more information.

A SUSE account is needed for product registration and access to update repositories. If you do not already have one, go to http://www.suse.com/ to create it.

2.5.1 Optional Component: SUSE Enterprise Storage

SUSE OpenStack Cloud Crowbar can be extended by SUSE Enterprise Storage for setting up a Ceph cluster providing block storage services. To store virtual disks for instances, SUSE OpenStack Cloud uses block storage provided by the cinder module. cinder itself needs a back-end providing storage. In production environments this usually is a network storage solution. cinder can use a variety of network storage back-ends, among them solutions from EMC, Fujitsu, or NetApp. In case your organization does not provide a network storage solution that can be used with SUSE OpenStack Cloud, you can set up a Ceph cluster with SUSE Enterprise Storage. SUSE Enterprise Storage provides a reliable and fast distributed storage architecture using commodity hardware platforms.

2.5.2 Product and Update Repositories

You need seven software repositories to deploy SUSE OpenStack Cloud and to keep a running SUSE OpenStack Cloud up-to-date. This includes the static product repositories, which do not change over the product life cycle, and the update repositories, which constantly change. The following repositories are needed:

- SUSE Linux Enterprise Server 12 SP4 Product

The SUSE Linux Enterprise Server 12 SP4 product repository is a copy of the installation media (DVD #1) for SUSE Linux Enterprise Server. As of SUSE OpenStack Cloud Crowbar 8, it is required to have it available locally on the Administration Server. This repository requires approximately 3.5 GB of hard disk space.

- SUSE OpenStack Cloud Crowbar 9 Product

The SUSE OpenStack Cloud Crowbar 9 product repository is a copy of the installation media (DVD #1) for SUSE OpenStack Cloud. It can either be made available remotely via HTTP, or locally on the Administration Server. We recommend the latter since it makes the setup of the Administration Server easier. This repository requires approximately 500 MB of hard disk space.

- PTF

This repository is created automatically on the Administration Server when you install the SUSE OpenStack Cloud add-on product. It serves as a repository for “Program Temporary Fixes” (PTF), which are part of the SUSE support program.

- SLES12-SP4-Pool and SUSE-OpenStack-Cloud-Crowbar-9-Pool

The SUSE Linux Enterprise Server and SUSE OpenStack Cloud Crowbar repositories contain all binary RPMs from the installation media, plus pattern information and support status metadata. These repositories are served from SUSE Customer Center and need to be kept synchronized with their sources. Make them available remotely via an existing SMT or SUSE Manager server. Alternatively, make them available locally on the Administration Server by installing a local SMT server, by mounting or synchronizing a remote directory, or by copying them.

- SLES12-SP4-Updates and SUSE-OpenStack-Cloud-Crowbar-9-Updates

These repositories contain maintenance updates to packages in the corresponding Pool repositories. These repositories are served from SUSE Customer Center and need to be kept synchronized with their sources. Make them available remotely via an existing SMT or SUSE Manager server, or locally on the Administration Server by installing a local SMT server, by mounting or synchronizing a remote directory, or by regularly copying them.

As explained in Section 2.6, “High Availability”, Control Nodes in SUSE OpenStack Cloud can optionally be made highly available with the SUSE Linux Enterprise High Availability Extension. The following repositories are required to deploy SLES High Availability Extension nodes:

- SLE-HA12-SP4-Pool

The pool repositories contain all binary RPMs from the installation media, plus pattern information and support status metadata. These repositories are served from SUSE Customer Center and need to be kept synchronized with their sources. Make them available remotely via an existing SMT or SUSE Manager server. Alternatively, make them available locally on the Administration Server by installing a local SMT server, by mounting or synchronizing a remote directory, or by copying them.

- SLE-HA12-SP4-Updates

These repositories contain maintenance updates to packages in the corresponding pool repositories. These repositories are served from SUSE Customer Center and need to be kept synchronized with their sources. Make them available remotely via an existing SMT or SUSE Manager server, or locally on the Administration Server by installing a local SMT server, by mounting or synchronizing a remote directory, or by regularly copying them.
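The repository set above can be tracked as a simple checklist. The following is a minimal Python sketch, not part of SUSE OpenStack Cloud: the helper `missing_repos` is hypothetical, and it assumes you already have the names of the repositories available to you (for example, from your SMT or SUSE Manager server) as plain strings matching the labels used in this section.

```python
from typing import Iterable, List

# Labels copied from the repository list above. They serve as plain
# identifiers here; the actual repository names or directories on your
# Administration Server, SMT, or SUSE Manager server may differ.
REQUIRED_REPOS = (
    "SUSE Linux Enterprise Server 12 SP4 Product",
    "SUSE OpenStack Cloud Crowbar 9 Product",
    "PTF",
    "SLES12-SP4-Pool",
    "SUSE-OpenStack-Cloud-Crowbar-9-Pool",
    "SLES12-SP4-Updates",
    "SUSE-OpenStack-Cloud-Crowbar-9-Updates",
)

# Additionally required only for highly available Control Nodes.
HA_REPOS = (
    "SLE-HA12-SP4-Pool",
    "SLE-HA12-SP4-Updates",
)


def missing_repos(available: Iterable[str], with_ha: bool = False) -> List[str]:
    """Return the required repositories absent from `available`, in list order."""
    have = set(available)
    wanted = REQUIRED_REPOS + (HA_REPOS if with_ha else ())
    return [name for name in wanted if name not in have]


if __name__ == "__main__":
    # Hypothetical state: product and Pool repositories present, Updates missing.
    print(missing_repos(REQUIRED_REPOS[:5]))
```

Passing `with_ha=True` adds the two High Availability Extension repositories to the check.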

The product repositories for SUSE Linux Enterprise Server 12 SP4 and SUSE OpenStack Cloud Crowbar 9 do not change during the life cycle of a product. Thus, they can be copied to the destination directory from the installation media. However, the pool and update repositories must be kept synchronized with their sources on the SUSE Customer Center. SUSE offers two products that synchronize repositories and make them available within your organization: SUSE Manager (http://www.suse.com/products/suse-manager/) and Subscription Management Tool (which ships with SUSE Linux Enterprise Server 12 SP4).

All repositories must be served via HTTP to be available for SUSE OpenStack Cloud deployment. Repositories that are installed on the Administration Server are made available by the Apache Web server running on the Administration Server. If your organization already uses SUSE Manager or SMT, you can use the repositories provided by these servers.

Making the repositories locally available on the Administration Server has the advantage of a simple network setup within SUSE OpenStack Cloud, and it allows you to seal off the SUSE OpenStack Cloud network from other networks in your organization. Hosting the repositories on a remote server has the advantage of using existing resources and services, and it makes setting up the Administration Server much easier. However, this requires a custom network setup for SUSE OpenStack Cloud, since the Administration Server needs access to the remote server.

- Installing a Subscription Management Tool (SMT) Server on the Administration Server

The SMT server, shipping with SUSE Linux Enterprise Server 12 SP4, regularly synchronizes repository data from SUSE Customer Center with your local host. Installing the SMT server on the Administration Server is recommended if you do not have access to update repositories from elsewhere within your organization. This option requires the Administration Server to have Internet access.

- Using a Remote SMT Server

If you already run an SMT server within your organization, you can use it within SUSE OpenStack Cloud. When using a remote SMT server, update repositories are served directly from the SMT server. Each node is configured with these repositories upon its initial setup.

The SMT server needs to be accessible from the Administration Server and all nodes in SUSE OpenStack Cloud (via one or more gateways). Resolving the server's host name also needs to work.

- Using a SUSE Manager Server

Each client that is managed by SUSE Manager needs to register with the SUSE Manager server. Therefore, SUSE Manager support can only be installed after the nodes have been deployed. SUSE Linux Enterprise Server 12 SP4 must be set up for autoinstallation on the SUSE Manager server in order to use repositories provided by SUSE Manager during node deployment.

The server needs to be accessible from the Administration Server and all nodes in SUSE OpenStack Cloud (via one or more gateways). Resolving the server's host name also needs to work.

- Using Existing Repositories

If the Administration Server can access existing repositories within your company network, you have the following options: mount, synchronize, or manually transfer these repositories to the required locations on the Administration Server.
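Whichever remote option you choose, the server's host name must resolve from the Administration Server and from every node. A minimal Python sketch of that check follows. The helper `resolves` is hypothetical, it only tests name resolution (not HTTP access to the repositories), and it has to be run on each machine you want to verify.

```python
import socket
import sys


def resolves(hostname: str) -> bool:
    """Return True if `hostname` resolves to at least one address on this host."""
    try:
        return len(socket.getaddrinfo(hostname, None)) > 0
    except socket.gaierror:
        return False


if __name__ == "__main__":
    # Pass the host name(s) of your SMT or SUSE Manager server as arguments.
    for name in sys.argv[1:]:
        print(name, "resolves" if resolves(name) else "does NOT resolve")
```

For example, `python3 check_resolve.py smt.example.com`, where both the script name and the server name are placeholders.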

2.6 High Availability #

Several components and services in SUSE OpenStack Cloud Crowbar are potentially single points of failure that may cause system downtime and data loss if they fail.

SUSE OpenStack Cloud Crowbar provides various mechanisms to ensure that the crucial components and services are highly available. The following sections provide an overview of components on each node that can be made highly available. For making the Control Node functions and the Compute Nodes highly available, SUSE OpenStack Cloud Crowbar uses the cluster software SUSE Linux Enterprise High Availability Extension. Make sure to thoroughly read Section 2.6.5, “Cluster Requirements and Recommendations” to learn about additional requirements for high availability deployments.

2.6.1 High Availability of the Administration Server #

The Administration Server provides all services needed to manage and deploy all other nodes in the cloud. If the Administration Server is not available, new cloud nodes cannot be allocated, and you cannot add new roles to cloud nodes.

However, only two services on the Administration Server are single points of failure, without which the cloud cannot continue to run properly: DNS and NTP.

2.6.1.1 Administration Server—Avoiding Points of Failure #

To avoid DNS and NTP as potential points of failure, deploy the roles dns-server and ntp-server to multiple nodes.

If any configured DNS forwarder or NTP external server is not reachable through the admin network from these nodes, allocate an address in the public network for each node that has the dns-server and ntp-server roles:

crowbar network allocate_ip default `hostname -f` public host

Then the nodes can use the public gateway to reach the external servers. The change will only become effective after the next run of chef-client on the affected nodes.
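If several nodes carry the dns-server and ntp-server roles, the command above has to be issued once per node. The following Python sketch only builds (and, if asked, runs) that exact command with `hostname -f` replaced by a node's fully qualified name. It assumes the crowbar client accepts another node's name when run from a single machine, which you should verify for your setup. The helper names are hypothetical, and the sketch does not trigger the chef-client run mentioned above.

```python
import shlex
import subprocess
import sys
from typing import List


def allocate_ip_argv(node_fqdn: str) -> List[str]:
    """Arguments of the allocate_ip command above, with `hostname -f` replaced by node_fqdn."""
    return ["crowbar", "network", "allocate_ip", "default", node_fqdn, "public", "host"]


def allocate_public_ips(node_fqdns: List[str], dry_run: bool = True) -> None:
    """Print (dry run) or execute the allocate_ip command once per node."""
    for fqdn in node_fqdns:
        argv = allocate_ip_argv(fqdn)
        if dry_run:
            print(shlex.join(argv))
        else:
            # check=True stops at the first allocation that fails.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    # Pass the FQDNs of the nodes with the dns-server and ntp-server roles.
    allocate_public_ips(sys.argv[1:], dry_run=True)
```

The dry-run default prints the commands for review before anything is changed.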

2.6.2 High Availability of the Control Node(s) #

The Control Node(s) usually run a variety of services without which the cloud would not be able to run properly.

2.6.2.1 Control Node(s)—Avoiding Points of Failure #

To protect the cloud against avoidable downtime if one or more Control Nodes fail, you can make the following roles highly available:

- database-server (database barclamp)
- keystone-server (keystone barclamp)
- rabbitmq-server (rabbitmq barclamp)
- swift-proxy (swift barclamp)
- glance-server (glance barclamp)
- cinder-controller (cinder barclamp)
- neutron-server (neutron barclamp)
- neutron-network (neutron barclamp)
- nova-controller (nova barclamp)
- nova_dashboard-server (nova_dashboard barclamp)
- ceilometer-server (ceilometer barclamp)
- ceilometer-control (ceilometer barclamp)
- heat-server (heat barclamp)
- octavia-api (octavia barclamp)
- octavia-backend (octavia barclamp)

Instead of assigning these roles to individual cloud nodes, you can assign them to one or several High Availability clusters. SUSE OpenStack Cloud Crowbar will then use the Pacemaker cluster stack (shipped with the SUSE Linux Enterprise High Availability Extension) to manage the services. If one Control Node fails, the services will fail over to another Control Node. For details on the Pacemaker cluster stack and the SUSE Linux Enterprise High Availability Extension, refer to the High Availability Guide, available at https://documentation.suse.com/sle-ha/15-SP1/. Note that although the SUSE Linux Enterprise High Availability Extension includes Linux Virtual Server as a load balancer, SUSE OpenStack Cloud Crowbar uses HAProxy for this purpose (http://haproxy.1wt.eu/).

Though it is possible to use the same cluster for all of the roles above, the recommended setup is to use three clusters and to deploy the roles as follows:

- data cluster: database-server and rabbitmq-server
- network cluster: neutron-network (as the neutron-network role may result in heavy network load and CPU impact)
- services cluster: all other roles listed above (as they are related to API/schedulers)
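To double-check a planned role distribution before editing the barclamp proposals, the role list and the recommended three-cluster layout can be written down as data. This Python sketch is illustrative only: the cluster names `data`, `network`, and `services` follow the text, while `check_layout` is a hypothetical helper that flags roles placed on more than one cluster, roles that are not in the list above, and listed roles that end up on no cluster (and are therefore not highly available).

```python
from typing import Dict, List, Set

# Roles that can be made highly available (list above).
HA_ROLES: Set[str] = {
    "database-server", "keystone-server", "rabbitmq-server", "swift-proxy",
    "glance-server", "cinder-controller", "neutron-server", "neutron-network",
    "nova-controller", "nova_dashboard-server", "ceilometer-server",
    "ceilometer-control", "heat-server", "octavia-api", "octavia-backend",
}

# Recommended three-cluster layout described above.
RECOMMENDED_LAYOUT: Dict[str, Set[str]] = {
    "data": {"database-server", "rabbitmq-server"},
    "network": {"neutron-network"},
}
RECOMMENDED_LAYOUT["services"] = (
    HA_ROLES - RECOMMENDED_LAYOUT["data"] - RECOMMENDED_LAYOUT["network"]
)


def check_layout(layout: Dict[str, Set[str]]) -> List[str]:
    """Report problems with a planned mapping of cluster name -> roles."""
    problems: List[str] = []
    owner: Dict[str, str] = {}
    for cluster in sorted(layout):
        for role in sorted(layout[cluster]):
            if role not in HA_ROLES:
                problems.append(f"{role}: not in the list of roles that can be made highly available")
            elif role in owner:
                problems.append(f"{role}: assigned to both {owner[role]} and {cluster}")
            else:
                owner[role] = cluster
    for role in sorted(HA_ROLES - set(owner)):
        problems.append(f"{role}: not on any cluster, so it is not highly available")
    return problems


if __name__ == "__main__":
    print(check_layout(RECOMMENDED_LAYOUT))
```

An empty list means every listed role is on exactly one cluster; the last kind of message is informational, since assigning a role to an individual node is also allowed.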

For setting up the clusters, some special requirements and recommendations apply. For details, refer to Section 2.6.5, “Cluster Requirements and Recommendations”.

2.6.2.2 Control Node(s)—Recovery #

Recovery of the Control Node(s) is done automatically by the cluster software: if one Control Node fails, Pacemaker will fail over the services to another Control Node. If a failed Control Node is repaired and rebuilt via Crowbar, it will be automatically configured to join the cluster. At this point Pacemaker will have the option to fail back services if required.

2.6.3 High Availability of the Compute Node(s) #

If a Compute Node fails, all VMs running on that node will go down. While it cannot protect against failures of individual VMs, a High Availability setup for Compute Nodes helps to minimize VM downtime caused by Compute Node failures. If the nova-compute service or libvirtd fails on a Compute Node, Pacemaker will try to recover the failed service automatically. If recovery fails, or if the node itself becomes unreachable, the node will be fenced and its VMs will be moved to a different Compute Node.
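The recovery order described above can be summarized as a small decision function. This Python sketch is a conceptual model under simplifying assumptions (a single restart attempt, two watched services). It is not how Pacemaker implements monitoring or fencing, and the names `next_action` and `Action` are hypothetical.

```python
from enum import Enum
from typing import Dict

# The two services named in the text above.
WATCHED_SERVICES = ("nova-compute", "libvirtd")


class Action(Enum):
    NONE = "nothing to do"
    RESTART = "restart the failed service(s) on the node"
    FENCE_AND_EVACUATE = "fence the node and move its VMs to another Compute Node"


def next_action(node_reachable: bool, services_up: Dict[str, bool],
                restart_failed: bool = False) -> Action:
    """Simplified model of the recovery order described above."""
    # An unreachable node or a failed recovery attempt leads to fencing.
    if not node_reachable or restart_failed:
        return Action.FENCE_AND_EVACUATE
    # A service missing from services_up is treated as down.
    if all(services_up.get(name, False) for name in WATCHED_SERVICES):
        return Action.NONE
    return Action.RESTART


if __name__ == "__main__":
    print(next_action(True, {"nova-compute": True, "libvirtd": False}).value)
```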

If you decide to use High Availability for Compute Nodes, your Compute Nodes will be run as Pacemaker remote nodes. With the pacemaker-remote service, High Availability clusters can be extended to control remote nodes without any impact on scalability, and without having to install the full cluster stack (including corosync) on the remote nodes. Instead, each Compute Node only runs the pacemaker-remote service. The service acts as a proxy, allowing the cluster stack on the “normal” cluster nodes to connect to it and to control services remotely. Thus, the node is effectively integrated into the cluster as a remote node. In this way, the services running on the OpenStack Compute Nodes can be controlled from the core Pacemaker cluster in a lightweight, scalable fashion.

Find more information about the pacemaker_remote service in Pacemaker Remote—Extending High Availability into Virtual Nodes, available at http://www.clusterlabs.org/doc/.

To configure High Availability for Compute Nodes, you need to adjust the following barclamp proposals:

Pacemaker—for details, see Section 12.2, “Deploying Pacemaker (Optional, HA Setup Only)”.

nova—for details, see Section 12.11.1, “HA Setup for nova”.

2.6.4 High Availability of the Storage Node(s) #

SUSE OpenStack Cloud Crowbar offers two different types of storage that can be used for the Storage Nodes: object storage (provided by the OpenStack swift component) and block storage (provided by Ceph).

Both are designed with High Availability in mind, so it does not take much effort to make the storage highly available.

2.6.4.1 swift—Avoiding Points of Failure #

The OpenStack Object Storage replicates the data by design, provided the following requirements are met:

- The option in the swift barclamp is set to 3, the tested and recommended value.
- The number of Storage Nodes needs to be greater than the value set in the option.
- To avoid single points of failure, assign the swift-storage role to multiple nodes.
- To make the API highly available, assign the swift-proxy role to a cluster instead of assigning it to a single Control Node. See Section 2.6.2.1, “Control Node(s)—Avoiding Points of Failure”. Other swift roles must not be deployed on a cluster.
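The replica requirement boils down to simple arithmetic: with the recommended value of 3, at least four Storage Nodes are needed. The Python sketch below encodes the two numeric requirements. The option's name is not given in the text above, so the parameter is simply called `replicas` here; `check_swift_plan` and `min_storage_nodes` are hypothetical helpers.

```python
from typing import List

# "the tested and recommended value" according to the requirements above
RECOMMENDED_REPLICAS = 3


def min_storage_nodes(replicas: int = RECOMMENDED_REPLICAS) -> int:
    """Smallest Storage Node count that is strictly greater than the replica setting."""
    return replicas + 1


def check_swift_plan(storage_nodes: int, replicas: int = RECOMMENDED_REPLICAS) -> List[str]:
    """Check a planned swift deployment against the numeric requirements above."""
    problems = []
    if replicas != RECOMMENDED_REPLICAS:
        problems.append(f"replica setting {replicas} differs from the recommended {RECOMMENDED_REPLICAS}")
    if storage_nodes <= replicas:
        problems.append(f"{storage_nodes} Storage Node(s), but more than {replicas} are required")
    return problems


if __name__ == "__main__":
    print(min_storage_nodes())
    print(check_swift_plan(3))
```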

2.6.4.2 Ceph—Avoiding Points of Failure #

Ceph is a distributed storage solution that can provide High Availability. For High Availability, redundant storage and monitors need to be configured in the Ceph cluster. For more information, refer to the SUSE Enterprise Storage documentation at https://documentation.suse.com/ses/5.5/.

2.6.5 Cluster Requirements and Recommendations #

When considering setting up one or more High Availability clusters, refer to the chapter System Requirements in the High Availability Guide for SUSE Linux Enterprise High Availability Extension. The guide is available at https://documentation.suse.com/sle-ha/15-SP1/.

The HA requirements described there also apply to the Control Nodes in SUSE OpenStack Cloud Crowbar. Note that by buying SUSE OpenStack Cloud Crowbar, you automatically get an entitlement for the SUSE Linux Enterprise High Availability Extension.

Especially note the following requirements:

- Number of Cluster Nodes

Each cluster needs to consist of at least three cluster nodes.

Important: Odd Number of Cluster Nodes

The Galera cluster needs an odd number of cluster nodes with a minimum of three nodes.

A cluster needs quorum, that is, a majority of its nodes, to keep services running. A three-node cluster can therefore tolerate the failure of only one node at a time, whereas a five-node cluster can tolerate the failure of two nodes.

- STONITH

The cluster software will shut down “misbehaving” nodes in a cluster to prevent them from causing trouble. This mechanism is called fencing or STONITH.

Important: No Support Without STONITH

A cluster without STONITH is not supported.

For a supported HA setup, ensure the following:

Each node in the High Availability cluster needs to have at least one STONITH device (usually a hardware device). We strongly recommend multiple STONITH devices per node, unless STONITH Block Device (SBD) is used.

The global cluster options stonith-enabled and startup-fencing must be set to true. These options are set automatically when deploying the Pacemaker barclamp. If you change them, your setup is no longer supported.

When deploying the Pacemaker service, select a STONITH: Configuration mode for STONITH that matches your setup. If your STONITH devices support the IPMI protocol, choosing the IPMI option is the easiest way to configure STONITH. Another alternative is SBD. It provides a way to enable STONITH and fencing in clusters without external power switches, but it requires shared storage. For SBD requirements, see http://linux-ha.org/wiki/SBD_Fencing, section Requirements.

For more information, refer to the High Availability Guide, available at https://documentation.suse.com/sle-ha/15-SP1/. Especially read the following chapters: Configuration and Administration Basics, and Fencing and STONITH, Storage Protection.

- Network Configuration

- Important: Redundant Communication Paths

For a supported HA setup, it is required to set up cluster communication via two or more redundant paths. For this purpose, use network device bonding and team network mode in your Crowbar network setup. For details, see Section 2.1.2.3, “Team Network Mode”. At least two Ethernet cards per cluster node are required for network redundancy. We advise using team network mode everywhere (not only between the cluster nodes) to ensure redundancy.

For more information, refer to the High Availability Guide, available at https://documentation.suse.com/sle-ha/15-SP1/. Especially read the following chapter: Network Device Bonding.

Using a second communication channel (ring) in Corosync (as an alternative to network device bonding) is not yet supported in SUSE OpenStack Cloud Crowbar. By default, SUSE OpenStack Cloud Crowbar uses the admin network (typically eth0) for the first Corosync ring.

Important: Dedicated Networks

The corosync network communication layer is crucial to the health of the cluster. corosync traffic always goes over the admin network.

Use redundant communication paths for the corosync network communication layer.

Do not place the corosync network communication layer on interfaces shared with any other networks that could experience heavy load, such as the OpenStack public / private / SDN / storage networks.

Similarly, if SBD over iSCSI is used as a STONITH device (see STONITH), do not place the iSCSI traffic on interfaces that could experience heavy load, because this might disrupt the SBD mechanism.

- Storage Requirements

When using SBD as STONITH device, additional requirements apply for the shared storage. For details, see http://linux-ha.org/wiki/SBD_Fencing, section Requirements.
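The quorum arithmetic and the STONITH settings above can be checked for each planned cluster. In this Python sketch, a cluster of n nodes keeps quorum (a strict majority) as long as at most (n - 1) // 2 nodes fail, which gives one failure for three nodes and two for five, as stated above. The helper names are hypothetical. `options` is assumed to hold the global cluster options as strings, however you obtain them, and `runs_galera` should be set for the cluster that hosts the database (presumably the data cluster).

```python
from typing import Dict, List


def tolerated_failures(nodes: int) -> int:
    """Nodes that may fail while a strict majority (quorum) remains."""
    return (nodes - 1) // 2


def check_cluster(nodes: int, runs_galera: bool, options: Dict[str, str]) -> List[str]:
    """Check one planned cluster against the requirements listed above."""
    problems = []
    if nodes < 3:
        problems.append("each cluster needs at least three nodes")
    if runs_galera and nodes % 2 == 0:
        problems.append("the cluster running Galera needs an odd number of nodes")
    # Conservative: an option that is not explicitly present counts as a problem.
    for name in ("stonith-enabled", "startup-fencing"):
        if options.get(name, "").lower() != "true":
            problems.append(f"global cluster option {name} must be true")
    return problems


if __name__ == "__main__":
    for n in (3, 4, 5):
        print(n, "nodes tolerate", tolerated_failures(n), "failure(s)")
```

The loop shows why a fourth node adds no fault tolerance over three: both tolerate a single failure.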

2.6.6 For More Information #

For a basic understanding and detailed information on the SUSE Linux Enterprise High Availability Extension (including the Pacemaker cluster stack), read the High Availability Guide. It is available at https://documentation.suse.com/sle-ha/15-SP1/.

In addition to the chapters mentioned in Section 2.6.5, “Cluster Requirements and Recommendations”, the following chapters are especially recommended:

Product Overview

Configuration and Administration Basics

The High Availability Guide also provides comprehensive information about the cluster management tools with which you can view and check the cluster status in SUSE OpenStack Cloud Crowbar. They can also be used to look up details like configuration of cluster resources or global cluster options. Read the following chapters for more information:

HA Web Console: Configuring and Managing Cluster Resources (Web Interface)

crmsh: Configuring and Managing Cluster Resources (Command Line)

2.7 Summary: Considerations and Requirements #

As outlined above, there are some important considerations to be made before deploying SUSE OpenStack Cloud. The following briefly summarizes what was discussed in detail in this chapter. Keep in mind that as of SUSE OpenStack Cloud Crowbar 8, some aspects, such as the network setup, cannot be changed once SUSE OpenStack Cloud is deployed!

If you do not want to stick with the default networks and addresses, define custom networks and addresses. You need five different networks. If you need to separate the admin and the BMC network, a sixth network is required. See Section 2.1, “Network” for details. Networks that share interfaces need to be configured as VLANs.

The SUSE OpenStack Cloud networks are completely isolated, so public IP addresses are not required for them. A class C network (/24) as used in this documentation provides only 254 usable host addresses, which may not be enough for a cloud that is supposed to grow. You may alternatively choose addresses from a class B or A network.

Determine how to allocate addresses from your network. Make sure not to allocate IP addresses twice. See Section 2.1.1, “Network Address Allocation” for the default allocation scheme.
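A quick way to compare network sizes and to catch address ranges that were allocated twice is Python's standard `ipaddress` module. In this sketch, the /24 corresponds to the default admin network, the /16 is an arbitrary example of a larger range, and the named ranges in the usage example are hypothetical, not Crowbar's default allocation. `usable_hosts` and `overlapping_ranges` are illustrative helpers.

```python
import ipaddress
from itertools import combinations
from typing import Dict, List, Tuple


def usable_hosts(cidr: str) -> int:
    """Host addresses in an IPv4 network, excluding network and broadcast addresses."""
    net = ipaddress.ip_network(cidr)
    return max(net.num_addresses - 2, 0)


def overlapping_ranges(ranges: Dict[str, Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Return pairs of named address ranges (inclusive start and end) that overlap."""
    spans = {name: (ipaddress.ip_address(start), ipaddress.ip_address(end))
             for name, (start, end) in ranges.items()}
    clashes = []
    for a, b in combinations(sorted(spans), 2):
        (a_lo, a_hi), (b_lo, b_hi) = spans[a], spans[b]
        if a_lo <= b_hi and b_lo <= a_hi:
            clashes.append((a, b))
    return clashes


if __name__ == "__main__":
    print(usable_hosts("192.168.124.0/24"))  # default admin network
    print(usable_hosts("172.16.0.0/16"))     # example of a larger network
    # Hypothetical ranges inside the admin network; "host" overlaps "dhcp".
    plan = {
        "admin": ("192.168.124.10", "192.168.124.11"),
        "dhcp": ("192.168.124.21", "192.168.124.80"),
        "host": ("192.168.124.80", "192.168.124.160"),
    }
    print(overlapping_ranges(plan))
```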

Define which network mode to use. Keep in mind that all machines within the cloud (including the Administration Server) will be set up with the chosen mode and therefore need to meet the hardware requirements. See Section 2.1.2, “Network Modes” for details.

Define how to access the admin and BMC network(s): no access from the outside (no action is required), via an external gateway (gateway needs to be provided), or via bastion network. See Section 2.1.3, “Accessing the Administration Server via a Bastion Network” for details.

Provide a gateway to access the public network (public, nova-floating).

Make sure the Administration Server's host name is correctly configured (hostname -f needs to return a fully qualified host name). If this is not the case, run › › and add a fully qualified host name.

Prepare a list of MAC addresses and the intended use of the corresponding host for all OpenStack nodes.
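Both preparatory items can be checked with a short script. This Python sketch is a heuristic, not an authoritative validator: `looks_fully_qualified` only checks that a name has at least two valid DNS labels, and `check_mac_plan` expects your MAC list as (address, intended use) pairs. The helper names and the script name in the usage note are hypothetical.

```python
import re
import sys
from typing import Dict, List, Tuple

_LABEL = re.compile(r"^(?!-)[A-Za-z0-9-]{1,63}(?<!-)$")
_MAC = re.compile(r"^[0-9A-Fa-f]{2}([:-][0-9A-Fa-f]{2}){5}$")


def looks_fully_qualified(name: str) -> bool:
    """Heuristic: two or more dot-separated labels, each a valid DNS label."""
    name = name.rstrip(".")
    labels = name.split(".")
    return len(name) <= 253 and len(labels) >= 2 and all(_LABEL.match(label) for label in labels)


def check_mac_plan(plan: List[Tuple[str, str]]) -> List[str]:
    """Check (MAC address, intended use) pairs for malformed or duplicate entries."""
    problems = []
    seen: Dict[str, str] = {}
    for mac, use in plan:
        if not _MAC.match(mac):
            problems.append(f"malformed MAC address {mac!r} ({use})")
            continue
        key = mac.lower().replace("-", ":")  # normalize case and separator
        if key in seen:
            problems.append(f"{key} listed for both {seen[key]!r} and {use!r}")
        else:
            seen[key] = use
    return problems


if __name__ == "__main__":
    for name in sys.argv[1:]:
        verdict = "looks fully qualified" if looks_fully_qualified(name) else "is NOT fully qualified"
        print(name, verdict)
```

On the Administration Server, you could run it as `python3 check_plan.py "$(hostname -f)"`, so that the check applies to the exact output of `hostname -f`.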

Depending on your network setup, you have different options for providing up-to-date update repositories for SUSE Linux Enterprise Server and SUSE OpenStack Cloud: using an existing SMT or SUSE Manager server, installing SMT on the Administration Server, synchronizing data with an existing repository, mounting remote repositories, or using physical media. Choose the option that best matches your needs.

Decide whether you want to deploy the object storage service swift. If so, you need to deploy at least two nodes with sufficient disk space exclusively dedicated to swift.

Decide which back-end to use with cinder. If using the back-end (local disks), we strongly recommend using a separate node equipped with several hard disks for deploying cinder-volume. Ceph needs a minimum of four exclusive nodes with sufficient disk space.

Make sure all Compute Nodes are equipped with sufficient hard disk space.

Decide whether to use different SSL certificates for the services and the API, or whether to use a single certificate.

Obtain one or more SSL certificates signed by a trusted third-party certificate authority.

Make sure the hardware requirements for the different node types are met.

Make sure to have all required software at hand.

2.8 Overview of the SUSE OpenStack Cloud Installation #

Deploying and installing SUSE OpenStack Cloud Crowbar is a multi-step process. Start by deploying a basic SUSE Linux Enterprise Server installation and the SUSE OpenStack Cloud Crowbar add-on product to the Administration Server. Then the product and update repositories need to be set up and the SUSE OpenStack Cloud network needs to be configured. Next, complete the Administration Server setup. After the Administration Server is ready, you can start deploying and configuring the OpenStack nodes. The complete node deployment is done automatically via Crowbar and Chef from the Administration Server. All you need to do is to boot the nodes using PXE and to deploy the OpenStack components to them.

Install SUSE Linux Enterprise Server 12 SP4 on the Administration Server with the add-on product SUSE OpenStack Cloud. Optionally select the Subscription Management Tool (SMT) pattern for installation. See Chapter 3, Installing the Administration Server.

Optionally set up and configure the SMT server on the Administration Server. See Chapter 4, Installing and Setting Up an SMT Server on the Administration Server (Optional).

Make all required software repositories available on the Administration Server. See Chapter 5, Software Repository Setup.

Set up the network on the Administration Server. See Chapter 6, Service Configuration: Administration Server Network Configuration.

Perform the Crowbar setup to configure the SUSE OpenStack Cloud network and to make the repository locations known. When the configuration is done, start the SUSE OpenStack Cloud Crowbar installation. See Chapter 7, Crowbar Setup.

Boot all nodes onto which the OpenStack components should be deployed using PXE and allocate them in the Crowbar Web interface to start the automatic SUSE Linux Enterprise Server installation. See Chapter 11, Installing the OpenStack Nodes.

Configure and deploy the OpenStack components via the Crowbar Web interface or command line tools. See Chapter 12, Deploying the OpenStack Services.

When all OpenStack components are up and running, SUSE OpenStack Cloud is ready. The cloud administrator can now upload images to enable users to start deploying instances. See the Administrator Guide and the Supplement to Administrator Guide and User Guide.