1 The SUSE OpenStack Cloud Architecture

SUSE OpenStack Cloud Crowbar is a managed cloud infrastructure solution that provides a full stack of cloud deployment and management services.

SUSE OpenStack Cloud Crowbar 9 provides the following features:

Open source software that is based on the OpenStack Rocky release.

Centralized resource tracking providing insight into activities and capacity of the cloud infrastructure for optimized automated deployment of services.

A self-service portal (horizon) that enables end users to configure and deploy services as necessary and to track resource consumption.

An image repository from which standardized, pre-configured virtual machines are published (glance).

Automated installation processes via Crowbar using pre-defined scripts for configuring and deploying the Control Node(s) and Compute and Storage Nodes.

Multi-tenant, role-based provisioning and access control for multiple departments and users within your organization.

APIs enabling the integration of third-party software, such as identity management and billing solutions.

An optional monitoring-as-a-service solution (SUSE OpenStack Cloud Crowbar Monitoring, monasca) that lets you manage, track, and optimize the cloud infrastructure and the services provided to end users.

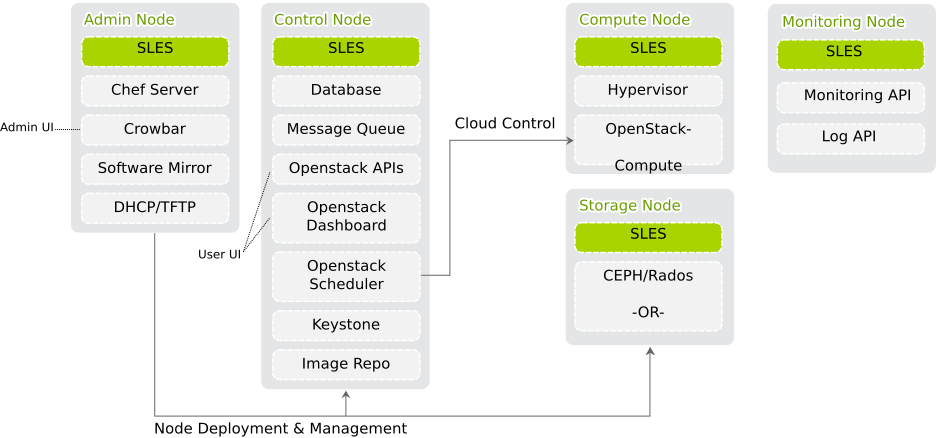

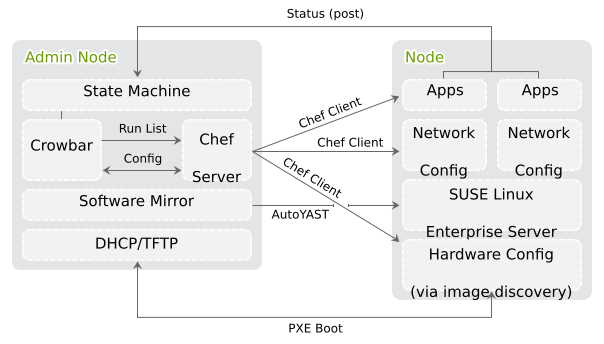

SUSE OpenStack Cloud Crowbar is based on SUSE Linux Enterprise Server, OpenStack, Crowbar, and Chef. SUSE Linux Enterprise Server is the underlying operating system for all cloud infrastructure machines (also called nodes). The cloud management layer, OpenStack, works as the “Cloud Operating System”. Crowbar and Chef automatically deploy and manage the OpenStack nodes from a central Administration Server.

SUSE OpenStack Cloud Crowbar is deployed to four different types of machines:

one Administration Server for node deployment and management

one or more Control Nodes hosting the cloud management services

several Compute Nodes on which the instances are started

one optional Monitoring Node for monitoring services and servers.
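The node types above, together with the limits stated later in this chapter (a single Administration Server, at most one Monitoring Node), can be captured in a small data model. The following Python sketch is purely illustrative: `NodeType`, `Node`, and `validate_layout` are hypothetical names, not part of Crowbar, and "several Compute Nodes" is loosely read as "at least one".

```python
from dataclasses import dataclass
from enum import Enum


class NodeType(Enum):
    # Node types named in this chapter (hypothetical model, not a Crowbar API)
    ADMINISTRATION = "administration"
    CONTROL = "control"
    COMPUTE = "compute"
    MONITORING = "monitoring"


@dataclass
class Node:
    name: str
    node_type: NodeType


def validate_layout(nodes: list[Node]) -> list[str]:
    """Return a list of problems; an empty list means the layout fits the rules."""
    counts = {node_type: 0 for node_type in NodeType}
    for node in nodes:
        counts[node.node_type] += 1

    problems = []
    if counts[NodeType.ADMINISTRATION] != 1:
        problems.append("exactly one Administration Server is required")
    if counts[NodeType.CONTROL] < 1:
        problems.append("at least one Control Node is required")
    if counts[NodeType.COMPUTE] < 1:
        problems.append("at least one Compute Node is needed to run instances")
    if counts[NodeType.MONITORING] > 1:
        problems.append("at most one Monitoring Node is supported")
    return problems


layout = [
    Node("admin", NodeType.ADMINISTRATION),
    Node("ctrl1", NodeType.CONTROL),
    Node("comp1", NodeType.COMPUTE),
    Node("comp2", NodeType.COMPUTE),
]
print(validate_layout(layout))  # → []
```

The Monitoring Node is optional, so a layout without one passes; adding a second one would be reported.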

1.1 The Administration Server

The Administration Server provides all services needed to manage and deploy all other nodes in the cloud. Most of these services are provided by the Crowbar tool that—together with Chef—automates all the required installation and configuration tasks. Among the services provided by the server are DHCP, DNS, NTP, PXE, and TFTP.

The Administration Server also hosts the software repositories for SUSE Linux Enterprise Server and SUSE OpenStack Cloud Crowbar, which are needed for node deployment. If no other sources for the software repositories are available, it can host the Subscription Management Tool (SMT), providing up-to-date repositories with updates and patches for all nodes.

1.2 The Control Node(s)

The Control Node(s) host all OpenStack components needed to orchestrate virtual machines deployed on the Compute Nodes in SUSE OpenStack Cloud. OpenStack on SUSE OpenStack Cloud uses a MariaDB database, which is hosted on the Control Node(s). The following OpenStack components, if deployed, run on the Control Node(s):

MariaDB database.

Image (glance) for managing virtual images.

Identity (keystone), providing authentication and authorization for all OpenStack components.

Networking (neutron), providing “networking as a service” between interface devices managed by other OpenStack services.

Block Storage (cinder), providing block storage.

OpenStack Dashboard (horizon), providing the Dashboard, a user Web interface for the OpenStack components.

Compute (nova) management (nova-controller), including API and scheduler.

Message broker (RabbitMQ).

swift proxy server plus dispersion tools (health monitor) and swift ring (index of objects, replicas, and devices). swift provides object storage.

Hawk, a monitor for a Pacemaker cluster in a High Availability (HA) setup.

heat, an orchestration engine.

designate, providing DNS as a Service (DNSaaS).

ironic, the OpenStack bare metal service for provisioning physical machines.

SUSE OpenStack Cloud Crowbar requires a three-node cluster for any production deployment since it leverages a MariaDB Galera Cluster for high availability.
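Three is the minimum because of how Galera decides which part of a cluster may keep serving: assuming Galera's default of equal node weights, a partition stays primary only while it holds more than half of the nodes. This majority rule is general Galera behavior, not something specific to Crowbar. The hypothetical helpers below compute how many node failures a cluster of a given size survives.

```python
def has_quorum(survivors: int, cluster_size: int) -> bool:
    """A partition keeps quorum only with a strict majority of the nodes (equal weights)."""
    return 2 * survivors > cluster_size


def tolerated_failures(cluster_size: int) -> int:
    """Largest number of node failures after which the survivors still have quorum."""
    # Largest f with has_quorum(cluster_size - f, cluster_size), i.e. f < cluster_size / 2
    return (cluster_size - 1) // 2


for size in range(1, 6):
    print(size, tolerated_failures(size))
# 1 0
# 2 0
# 3 1
# 4 1
# 5 2
```

A two-node cluster tolerates no failures, so three is the smallest size that survives losing a node; a fourth node adds no extra tolerance, which is why odd cluster sizes are usually preferred.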

We recommend deploying certain parts of Networking (neutron) on separate nodes for production clouds. See Section 12.10, “Deploying neutron” for details.

You can separate authentication and authorization services from other cloud services, for stronger security, by hosting Identity (keystone) on a separate node. Hosting Block Storage (cinder, particularly the cinder-volume role) on a separate node when using local disks for storage enables you to customize your storage and network hardware to best meet your requirements.

If you plan to move a service from one Control Node to another, we strongly recommend shutting down or saving all instances before doing so. Restart them after the services have been successfully re-deployed. Moving services also requires stopping them manually on the original Control Node.

1.3 The Compute Nodes

The Compute Nodes are the pool of machines on which your instances are running. These machines need to be equipped with a sufficient number of CPUs and enough RAM to start several instances. They also need to provide sufficient hard disk space; see Section 2.2.2.3, “Compute Nodes” for details. The Control Node distributes instances within the pool of Compute Nodes and provides them with the necessary network resources. The OpenStack component Compute (nova) runs on the Compute Nodes and provides the means for setting up, starting, and stopping virtual machines.

SUSE OpenStack Cloud Crowbar supports several hypervisors, including KVM and VMware vSphere. Each image that is started with an instance is bound to one hypervisor. Each Compute Node can only run one hypervisor at a time. You choose which hypervisor to run on each Compute Node when deploying the nova barclamp.
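Because every Compute Node runs exactly one hypervisor and every image is bound to one, an instance can only be placed on a node whose hypervisor matches its image. The Python sketch below models just that constraint; `ComputeNode`, `Image`, and `eligible_hosts` are hypothetical names, and nova's real scheduler applies its own, more elaborate filtering.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComputeNode:
    name: str
    hypervisor: str  # the single hypervisor this node runs, e.g. "kvm"


@dataclass(frozen=True)
class Image:
    name: str
    hypervisor: str  # the hypervisor this image is bound to


def eligible_hosts(image: Image, nodes: list[ComputeNode]) -> list[str]:
    """Names of Compute Nodes whose hypervisor matches the image (hypothetical filter)."""
    return [node.name for node in nodes if node.hypervisor == image.hypervisor]


pool = [
    ComputeNode("compute1", "kvm"),
    ComputeNode("compute2", "kvm"),
    ComputeNode("compute3", "vmware"),
]
print(eligible_hosts(Image("sles15", "kvm"), pool))      # → ['compute1', 'compute2']
print(eligible_hosts(Image("win2016", "vmware"), pool))  # → ['compute3']
```

Mixing hypervisors in one cloud therefore splits the Compute Node pool: each group can only serve images bound to its own hypervisor.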

1.4 The Storage Nodes

The Storage Nodes are the pool of machines providing object or block storage. Object storage is provided by the OpenStack swift component, while block storage is provided by cinder. The latter supports several back-ends, including Ceph, that are deployed during the installation. Deploying swift and Ceph is optional.

1.5 The Monitoring Node

The Monitoring Node is the node that has the monasca-server role assigned.

It hosts most services needed for SUSE OpenStack Cloud Monitoring, our monasca-based monitoring and logging solution. The following services run on this node:

- Monitoring API

The monasca Web API that is used for sending metrics by monasca agents, and retrieving metrics with the monasca command line client and the monasca Grafana dashboard.

- Message Queue

A Kafka instance used exclusively by SUSE OpenStack Cloud Monitoring.

- Persister

Stores metrics and alarms in InfluxDB.

- Notification Engine

Consumes alarms sent by the Threshold Engine and sends notifications (e.g. via email).

- Threshold Engine

Based on Apache Storm. Computes thresholds on metrics and handles alarming.

- Metrics and Alarms Database

A Cassandra database for storing metrics and alarm history.

- Config Database

A dedicated MariaDB instance used only for monitoring-related data.

- Log API

The monasca Web API that is used for sending log entries by monasca agents, and retrieving log entries with the Kibana Server.

- Log Transformer

Transforms raw log entries sent to the Log API into a format suitable for storage.

- Log Metrics

Sends metrics about high severity log messages to the Monitoring API.

- Log Persister

Stores logs processed by monasca Log Transformer in the Log Database.

- Kibana Server

A graphical web frontend for querying the Log Database.

- Log Database

An Elasticsearch database for storing logs.

- Zookeeper

Cluster synchronization for Kafka and Storm.

Currently there can only be one Monitoring Node. Clustering support is planned for a future release. We strongly recommend using a dedicated physical node without any other services as a Monitoring Node.
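To show how some of the services listed above interact on the metrics path, here is a heavily simplified in-memory model in Python. It assumes the usual monasca flow: the Monitoring API publishes metrics to the message queue, the Persister and the Threshold Engine each consume them independently, and the Notification Engine consumes the alarms that the Threshold Engine publishes. The topic names, the consumer-group handling, the metric names, and the single fixed threshold are assumptions made for illustration; real monasca alarms are driven by alarm definitions, and the message queue and databases are replaced here by plain Python objects.

```python
from collections import defaultdict


class MessageQueue:
    """Stand-in for the Kafka instance: topics with per-consumer-group read offsets."""

    def __init__(self):
        self.topics = defaultdict(list)
        self.offsets = defaultdict(int)  # (group, topic) -> index of next unread message

    def publish(self, topic, message):
        self.topics[topic].append(message)

    def consume(self, group, topic):
        # Each consumer group reads every message once, independently of other groups
        start = self.offsets[(group, topic)]
        messages = self.topics[topic][start:]
        self.offsets[(group, topic)] = len(self.topics[topic])
        return messages


def monitoring_api(queue, metric):
    # Monitoring API: accepts a metric sent by an agent and publishes it
    queue.publish("metrics", metric)


def persister(queue, database):
    # Persister: stores every metric in the metrics database
    database.extend(queue.consume("persister", "metrics"))


def threshold_engine(queue, threshold):
    # Threshold Engine: publishes an alarm for each metric above the threshold
    for metric in queue.consume("threshold", "metrics"):
        if metric["value"] > threshold:
            queue.publish("alarms", {"metric": metric["name"], "state": "ALARM"})


def notification_engine(queue):
    # Notification Engine: turns alarms into notifications (plain strings here)
    return [f"ALARM on {alarm['metric']}" for alarm in queue.consume("notifier", "alarms")]


queue, database = MessageQueue(), []
monitoring_api(queue, {"name": "disk.space_used_perc", "value": 93.0})
monitoring_api(queue, {"name": "cpu.user_perc", "value": 12.0})
persister(queue, database)
threshold_engine(queue, threshold=90.0)
print(len(database))               # → 2
print(notification_engine(queue))  # → ['ALARM on disk.space_used_perc']
```

Both metrics are persisted, but only the one above the threshold produces a notification, because the Persister and the Threshold Engine read the same metrics through separate consumer groups.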

1.6 HA Setup

A failure of components in SUSE OpenStack Cloud Crowbar can lead to system downtime and data loss. To prevent this, set up a High Availability (HA) cluster consisting of several nodes. You can assign certain roles to this cluster instead of assigning them to individual nodes. As of SUSE OpenStack Cloud Crowbar 8, Control Nodes and Compute Nodes can be made highly available.

For all HA-enabled roles, their respective functions are automatically handled by the clustering software SUSE Linux Enterprise High Availability Extension. The High Availability Extension uses the Pacemaker cluster stack with Pacemaker as cluster resource manager, and Corosync as the messaging/infrastructure layer.

View the cluster status and configuration with the cluster management tools HA Web Console (Hawk) or the crm shell.

Use the cluster management tools only for viewing. All of the clustering configuration is done automatically by Crowbar and Chef. If you change anything using the cluster management tools, you risk breaking the cluster. Changes made there may be reverted by the next Chef run anyway.
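Because the cluster tools should only be used for viewing, a script that inspects the cluster can restrict itself to read-only commands. The Python sketch below allows only `crm status` and `crm configure show`, two crmsh commands that display state, and refuses everything else. The allow-list and the helper names are assumptions for illustration, and actually running a command requires the `crm` shell on a cluster node.

```python
import shlex
import subprocess

# Illustrative allow-list of read-only crm invocations (an assumption, not an official list)
READ_ONLY_COMMANDS = {
    ("status",),
    ("configure", "show"),
}


def is_read_only(args: list[str]) -> bool:
    """True if the crm arguments exactly match an allow-listed read-only command."""
    return tuple(args) in READ_ONLY_COMMANDS


def view_cluster(args: list[str]) -> str:
    """Run a read-only crm command and return its output; refuse anything else."""
    if not is_read_only(args):
        raise ValueError(
            f"refusing to run 'crm {shlex.join(args)}': "
            "cluster configuration is managed by Crowbar and Chef"
        )
    result = subprocess.run(["crm", *args], capture_output=True, text=True, check=True)
    return result.stdout
```

For example, `view_cluster(["status"])` returns the output of `crm status`, while `view_cluster(["configure", "edit"])` raises `ValueError` without touching the cluster.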

A failure of the OpenStack infrastructure services (running on the Control Nodes) can be critical and may cause downtime within the cloud. For more information on making those services highly available and avoiding other potential points of failure in your cloud setup, refer to Section 2.6, “High Availability”.