1 Maintenance #

1.1 Keeping the Nodes Up-To-Date #

Keeping the nodes in SUSE OpenStack Cloud Crowbar up-to-date requires an appropriate setup of the update and pool repositories and the deployment of either the barclamp or the SUSE Manager barclamp. For details, see Section 5.2, “Update and Pool Repositories”, Section 11.4.1, “Deploying Node Updates with the Updater Barclamp”, and Section 11.4.2, “Configuring Node Updates with the Barclamp”.

If one of those barclamps is deployed, patches are installed on the nodes. Patches that do not require a reboot will not cause a service interruption. If a patch (for example, a kernel update) requires a reboot after the installation, services running on the machine that is rebooted will not be available within SUSE OpenStack Cloud. Therefore, we strongly recommend installing those patches during a maintenance window.

As of SUSE OpenStack Cloud Crowbar 8, it is not possible to put your entire SUSE OpenStack Cloud into “Maintenance Mode” (such as limiting all users to read-only operations on the control plane), as OpenStack does not support this. However when Pacemaker is deployed to manage HA clusters, it should be used to place services and cluster nodes into “Maintenance Mode” before performing maintenance functions on them. For more information, see SUSE Linux Enterprise High Availability documentation.

- Administration Server

While the Administration Server is offline, it is not possible to deploy new nodes. However, rebooting the Administration Server has no effect on starting instances or on instances already running.

- Control Nodes

The consequences a reboot of a Control Node depend on the services running on that node:

Database, keystone, RabbitMQ, glance, nova: No new instances can be started.

swift: No object storage data is available. If glance uses swift, it will not be possible to start new instances.

cinder, Ceph: No block storage data is available.

neutron: No new instances can be started. On running instances the network will be unavailable.

horizon. horizon will be unavailable. Starting and managing instances can be done with the command line tools.

- Compute Nodes

Whenever a Compute Node is rebooted, all instances running on that particular node will be shut down and must be manually restarted. Therefore it is recommended to “evacuate” the node by migrating instances to another node, before rebooting it.

1.2 Service Order on SUSE OpenStack Cloud Start-up or Shutdown #

In case you need to restart your complete SUSE OpenStack Cloud (after a complete shut down or a power outage), ensure that the external Ceph cluster is started, available and healthy. Then start nodes and services in the order documented below.

Control Node/Cluster on which the Database is deployed

Control Node/Cluster on which RabbitMQ is deployed

Control Node/Cluster on which keystone is deployed

For swift:

Storage Node on which the

swift-storagerole is deployedStorage Node on which the

swift-proxyrole is deployed

Any remaining Control Node/Cluster. The following additional rules apply:

The Control Node/Cluster on which the

neutron-serverrole is deployed needs to be started before starting the node/cluster on which theneutron-l3role is deployed.The Control Node/Cluster on which the

nova-controllerrole is deployed needs to be started before starting the node/cluster on which heat is deployed.

Compute Nodes

If multiple roles are deployed on a single Control Node, the services are automatically started in the correct order on that node. If you have more than one node with multiple roles, make sure they are started as closely as possible to the order listed above.

To shut down SUSE OpenStack Cloud, terminate nodes and services in the order documented below (which is the reverse of the start-up order).

Compute Nodes

Control Node/Cluster on which heat is deployed

Control Node/Cluster on which the

nova-controllerrole is deployedControl Node/Cluster on which the

neutron-l3role is deployedAll Control Node(s)/Cluster(s) on which neither of the following services is deployed: Database, RabbitMQ, and keystone.

For swift:

Storage Node on which the

swift-proxyrole is deployedStorage Node on which the

swift-storagerole is deployed

Control Node/Cluster on which keystone is deployed

Control Node/Cluster on which RabbitMQ is deployed

Control Node/Cluster on which the Database is deployed

If required, gracefully shut down an external Ceph cluster

1.3 Upgrading from SUSE OpenStack Cloud Crowbar 8 to SUSE OpenStack Cloud Crowbar 9 #

Upgrading from SUSE OpenStack Cloud Crowbar 8 to SUSE OpenStack Cloud Crowbar 9 can be done either via a Web interface or from the command line. SUSE OpenStack Cloud Crowbar supports a non-disruptive upgrade which provides a fully-functional SUSE OpenStack Cloud operation during most of the upgrade procedure, if your installation meets the requirements at Non-Disruptive Upgrade Requirements.

If the requirements for a non-disruptive upgrade are not met, the upgrade procedure will be done in normal mode. When live-migration is set up, instances will be migrated to another node before the respective Compute Node is updated to ensure continuous operation.

Make sure that the STONITH mechanism in your cloud does not rely on the state of the Administration Server (for example, no SBD devices are located there, and IPMI is not using the network connection relying on the Administration Server). Otherwise, this may affect the clusters when the Administration Server is rebooted during the upgrade procedure.

1.3.1 Requirements #

When starting the upgrade process, several checks are performed to determine whether the SUSE OpenStack Cloud is in an upgradeable state and whether a non-disruptive update would be supported:

All nodes need to have the latest SUSE OpenStack Cloud Crowbar 8 updates and the latest SLES 12 SP3 updates installed. If this is not the case, refer to Section 11.4.1, “Deploying Node Updates with the Updater Barclamp” for instructions on how to update.

All allocated nodes need to be turned on and have to be in state “ready”.

All barclamp proposals need to have been successfully deployed. If a proposal is in state “failed”, the upgrade procedure will refuse to start. Fix the issue, or remove the proposal, if necessary.

If the Pacemaker barclamp is deployed, all clusters need to be in a healthy state.

The upgrade will not start when Ceph is deployed via Crowbar. Only external Ceph is supported. Documentation for SUSE Enterprise Storage is available at https://documentation.suse.com/ses/5.5/.

The following repositories need to be available on a server that is accessible from the Administration Server. The HA repositories are only needed if you have an HA setup. It is recommended to use the same server that also hosts the respective repositories of the current version.

SUSE-OpenStack-Cloud-Crowbar-9-PoolSUSE-OpenStack-Cloud-Crowbar-9-UpdateSLES12-SP4-PoolSLES12-SP4-UpdateSLE-HA12-SP4-Pool(for HA setups only)SLE-HA12-SP4-Update(for HA setups only)ImportantDo not add repositories to the SUSE OpenStack Cloud repository configuration. This needs to be done during the upgrade procedure.

All Control Nodes need to be set up highly available.

A non-disruptive upgrade is not supported if the cinder has been deployed with the

raw devicesorlocal fileback-end. In this case, you have to perform a regular upgrade, or change the cinder back-end for a non-disruptive upgrade.A non-disruptive upgrade is prevented if the

cinder-volumeservice is placed on Compute Node. For a non-disruptive upgrade,cinder-volumeshould either be HA-enabled or placed on non-compute nodes.A non-disruptive upgrade is prevented if

manila-shareservice is placed on a Compute Node. For more information, see Section 12.15, “Deploying manila”.Live-migration support needs to be configured and enabled for the Compute Nodes. The amount of free resources (CPU and RAM) on the Compute Nodes needs to be sufficient to evacuate the nodes one by one.

In case of a non-disruptive upgrade, glance must be configured as a shared storage if the value in the glance is set to

File.For a non-disruptive upgrade, only KVM-based Compute Nodes with the

nova-compute-kvmrole are allowed in SUSE OpenStack Cloud Crowbar 8.Non-disruptive upgrade is limited to the following cluster configurations:

Single cluster that has all supported controller roles on it

Two clusters where one only has

neutron-networkand the other one has the rest of the controller roles.Two clusters where one only has

neutron-serverplusneutron-networkand the other one has the rest of the controller roles.Two clusters, where one cluster runs the database and RabbitMQ

Three clusters, where one cluster runs database and RabbitMQ, another cluster has the controller roles, and the third cluster has the

neutron-networkrole.

If your cluster configuration is not supported by the non-disruptive upgrade procedure, you can still perform a normal upgrade.

1.3.2 Unsupported configurations #

In SUSE OpenStack Cloud 9, certain configurations and barclamp combinations are no longer supported. See the SUSE OpenStack Cloud 9 release notes for details. The upgrade prechecks fail if they detect an unsupported configuration. Below is a list configurations that cause failure and possible solutions.

- aodh deployed

The aodh barclamp has been removed in SUSE OpenStack Cloud 9. Deactivate and delete the aodh proposal before continuing the upgrade.

- ceilometer deployed without monasca

As of SUSE OpenStack Cloud 9, ceilometer has been reduced to the ceilometer agent, with monasca being used as a storage back-end. Consequently, a standalone ceilometer without monasca present will fail the upgrade prechecks. There are two possible solutions.

Deactivate and delete the ceilometer proposal before the upgrade, and re-enable it after the upgrade.

Deploy the monasca barclamp before the upgrade.

Note: Existing ceilometer DataExisting ceilometer data is not migrated to new monasca storage. It stays in the MariaDB ceilometer database, and it can be accessed directly, if needed. The data is also included in the OpenStack database backup created by upgrade process.

- nova: Xen Compute Nodes present

As of SUSE OpenStack Cloud 9, the Xen hypervisor is no longer supported. On any cloud, where Xen based compute nodes are still present, the following procedure must be followed before it can be upgraded to SUSE OpenStack Cloud 9:

Run

nova service-disablefor all nova Compute Nodes running the Xen hypervisor. In the Crowbar context, these are the Compute Nodes with thenova-compute-xenrole in the nova barclamp. This prevents any new VMs from being launched on these Compute Nodes. It is safe to leave the Compute Nodes in question in this state for the entire duration of the next step.Recreate all nova instances still running on the Xen-based Compute Nodes on non-Xen Compute Nodes or shut them down completely.

With no other instances on the Xen-based Compute Nodes, remove the nova barclamp's

nova-compute-xenrole from these nodes and re-apply the nova barclamp. You may optionally assign a differentnova-computerole, such asnova-compute-kvm, to configure them as Compute Nodes for one of the remaining hypervisors.

- trove deployed

The trove barclamp has been removed in SUSE OpenStack Cloud 9. Deactivate and delete the trove proposal before continuing the upgrade.

1.3.3 Upgrading Using the Web Interface #

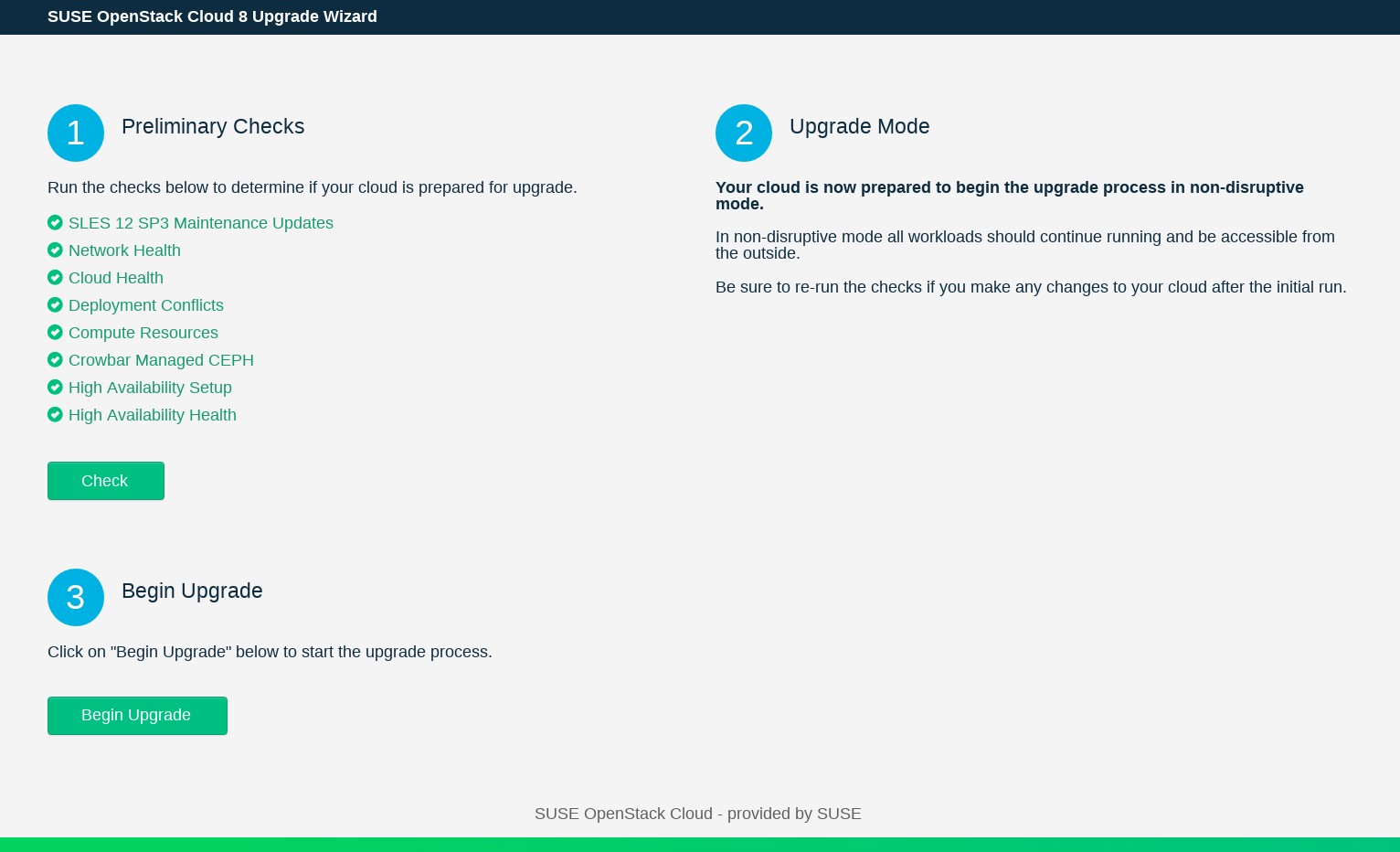

The Web interface features a wizard that guides you through the upgrade procedure.

You can cancel the upgrade process by clicking . The upgrade operation can only be canceled before the Administration Server upgrade is started. When the upgrade has been canceled, the nodes return to the ready state. However, any user modifications must be undone manually. This includes reverting repository configuration.

To start the upgrade procedure, open the Crowbar Web interface on the Administration Server and choose › . Alternatively, point the browser directly to the upgrade wizard on the Administration Server, for example

http://192.168.124.10/upgrade/.On the first screen of the Web interface you will run preliminary checks, get information about the upgrade mode and start the upgrade process.

Perform the preliminary checks to determine whether the upgrade requirements are met by clicking in

Preliminary Checks.The Web interface displays the progress of the checks. You will see green, yellow or red indicator next to each check. Yellow means the upgrade can only be performed in the normal mode. Red indicates an error, which means that you need to fix the problem and run the again.

When all checks in the previous step have passed,

Upgrade Modeshows the result of the upgrade analysis. It will indicate whether the upgrade procedure will continue in non-disruptive or in normal mode.To start the upgrade process, click .

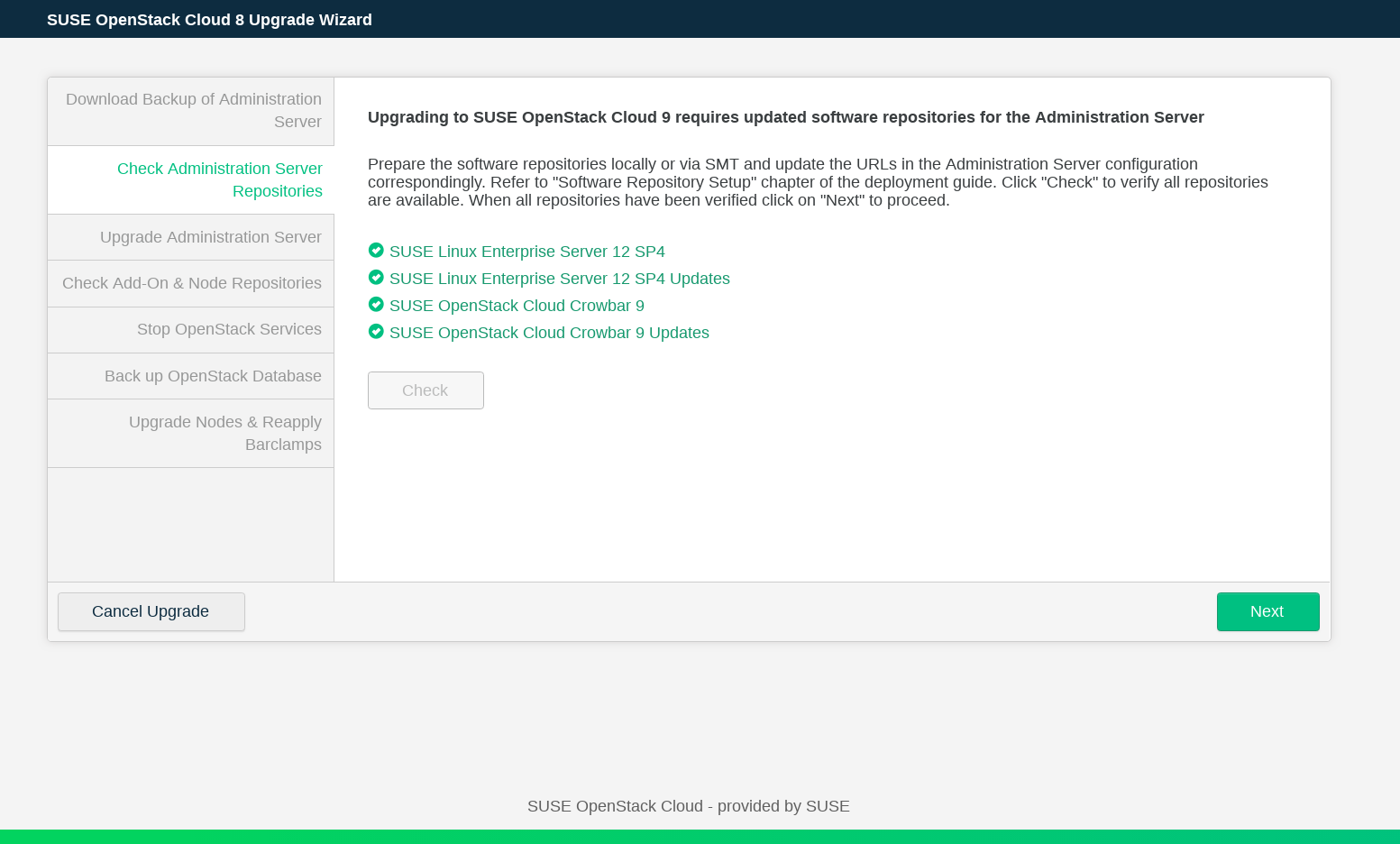

While the upgrade of the Administration Server is prepared, the upgrade wizard prompts you to . When the backup is done, move it to a safe place. If something goes wrong during the upgrade procedure of the Administration Server, you can restore the original state from this backup using the

crowbarctl backup restore NAMEcommand.Check that the repositories required for upgrading the Administration Server are available and updated. To do this, click the button. If the checks fail, add the software repositories as described in Chapter 5, Software Repository Setup. Run the checks again, and click .

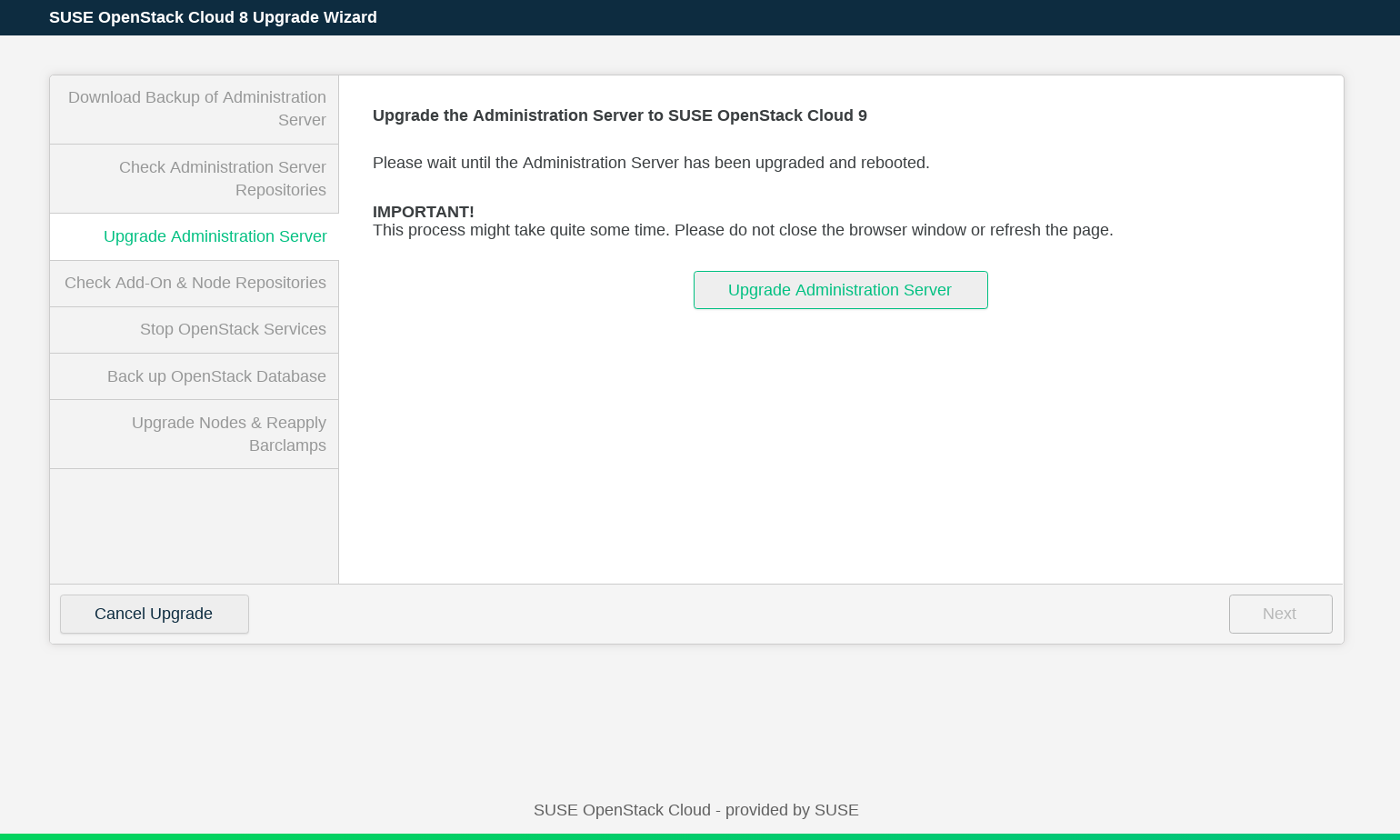

Click to upgrade and reboot the admin node. Note that this operation may take a while. When the Administration Server has been updated, click .

Check that the repositories required for upgrading all nodes are available and updated. To do this click the button. If the check fails, add the software repositories as described in Chapter 5, Software Repository Setup. Run the checks again, and click .

Next, you need to stop OpenStack services. This makes the OpenStack API unavailable until the upgrade of control plane is completed. When you are ready, click . Wait until the services are stopped and click .

Before upgrading the nodes, the wizard prompts you to . The MariaDB database backup will be stored on the Administration Server. It can be used to restore the database in case something goes wrong during the upgrade. To back up the database, click . When the backup operation is finished, click .

If you prefer to upgrade the controller nodes and postpone upgrading Compute Nodes, disable the option. In this case, you can use the button to switch to the Crowbar Web interface to check the current configuration and make changes, as long as they do not affect the Compute Nodes. If you choose to postpone upgrading Compute Nodes, all OpenStack services remain up and running.

When the upgrade is completed, press to return to the Dashboard.

If an error occurs during the upgrade process, the wizard displays a message with a description of the error and a possible solution. After fixing the error, re-run the step where the error occurred.

1.3.4 Upgrading from the Command Line #

The upgrade procedure on the command line is performed by using the program

crowbarctl. For general help, run crowbarctl

help. To get help on a certain subcommand, run

crowbarctl COMMAND help.

To review the process of the upgrade procedure, you may call

crowbarctl upgrade status at any time. Steps may have

three states: pending, running, and

passed.

To start the upgrade procedure from the command line, log in to the Administration Server as

root.Perform the preliminary checks to determine whether the upgrade requirements are met:

root #crowbarctl upgrade prechecksThe command's result is shown in a table. Make sure the column does not contain any entries. If there are errors, fix them and restart the

precheckcommand afterwards. You cannot proceed until the mandatory checks have passed.root #crowbarctl upgrade prechecks +-------------------------------+--------+----------+--------+------+ | Check ID | Passed | Required | Errors | Help | +-------------------------------+--------+----------+--------+------+ | network_checks | true | true | | | | cloud_healthy | true | true | | | | maintenance_updates_installed | true | true | | | | compute_status | true | false | | | | ha_configured | true | false | | | | clusters_healthy | true | true | | | +-------------------------------+--------+----------+--------+------+Depending on the outcome of the checks, it is automatically decided whether the upgrade procedure will continue in non-disruptive or in normal mode. For the non-disruptive mode, all the checks must pass, including those that are not marked in the table as required.

Tip: Forcing Normal Mode UpgradeThe non-disruptive update will take longer than an upgrade in normal mode, because it performs certain tasks sequentially which are done in parallel during the normal upgrade. Live-migrating guests to other Compute Nodes during the non-disruptive upgrade takes additional time.

Therefore, if a non-disruptive upgrade is not a requirement for you, you may want to switch to the normal upgrade mode, even if your setup supports the non-disruptive method. To force the normal upgrade mode, run:

root #crowbarctl upgrade mode normalTo query the current upgrade mode run:

root #crowbarctl upgrade modeTo switch back to the non-disruptive mode run:

root #crowbarctl upgrade mode non_disruptiveIt is possible to call this command at any time during the upgrade process until the

servicesstep is started. After that point the upgrade mode can no longer be changed.Prepare the nodes by transitioning them into the “upgrade” state and stopping the chef daemon:

root #crowbarctl upgrade prepareDepending of the size of your SUSE OpenStack Cloud deployment, this step may take some time. Use the command

crowbarctl upgrade statusto monitor the status of the process namedsteps.prepare.status. It needs to be in statepassedbefore you proceed:root #crowbarctl upgrade status +--------------------------------+----------------+ | Status | Value | +--------------------------------+----------------+ | current_step | backup_crowbar | | current_substep | | | current_substep_status | | | current_nodes | | | current_node_action | | | remaining_nodes | | | upgraded_nodes | | | crowbar_backup | | | openstack_backup | | | suggested_upgrade_mode | non_disruptive | | selected_upgrade_mode | | | compute_nodes_postponed | false | | steps.prechecks.status | passed | | steps.prepare.status | passed | | steps.backup_crowbar.status | pending | | steps.repocheck_crowbar.status | pending | | steps.admin.status | pending | | steps.repocheck_nodes.status | pending | | steps.services.status | pending | | steps.backup_openstack.status | pending | | steps.nodes.status | pending | +--------------------------------+----------------+Create a backup of the existing Administration Server installation. In case something goes wrong during the upgrade procedure of the Administration Server you can restore the original state from this backup with the command

crowbarctl backup restore NAMEroot #crowbarctl upgrade backup crowbarTo list all existing backups including the one you have just created, run the following command:

root #crowbarctl backup list +----------------------------+--------------------------+--------+---------+ | Name | Created | Size | Version | +----------------------------+--------------------------+--------+---------+ | crowbar_upgrade_1534864741 | 2018-08-21T15:19:03.138Z | 219 KB | 4.0 | +----------------------------+--------------------------+--------+---------+This step prepares the upgrade of the Administration Server by checking the availability of the update and pool repositories for SUSE OpenStack Cloud Crowbar 9 and SUSE Linux Enterprise Server 12 SP4. Run the following command:

root #crowbarctl upgrade repocheck crowbar +----------------------------------------+------------------+-----------+ | Repository | Status | Type | +----------------------------------------+------------------+-----------+ | SLES12-SP4-Pool | x86_64 (missing) | os | | SLES12-SP4-Updates | x86_64 (missing) | os | | SUSE-OpenStack-Cloud-Crowbar-9-Pool | available | openstack | | SUSE-OpenStack-Cloud-Crowbar-9-Updates | available | openstack | +----------------------------------------+------------------+-----------+Two of the required repositories are reported as missing, because they have not yet been added to the Crowbar configuration. To add them to the Administration Server proceed as follows.

Note that this step is for setting up the repositories for the Administration Server, not for the nodes in SUSE OpenStack Cloud (this will be done in a subsequent step).

Start

yast repositoriesand proceed with . Replace the repositoriesSLES12-SP3-PoolandSLES12-SP3-Updateswith the respective SP4 repositories.If you prefer to use zypper over YaST, you may alternatively make the change using

zypper mr.Next, replace the

SUSE-OpenStack-Cloud-Crowbar-8update and pool repositories with the respective SUSE OpenStack Cloud Crowbar 9 versions.Check for other (custom) repositories. All SLES SP3 repositories need to be replaced with the respective SLES SP4 version. In case no SP3 version exists, disable the repository—the respective packages from that repository will be deleted during the upgrade.

Note: Administration Server with external SMTIf the Administration Server is configured with external SMT, the system repositories configuration is managed by the system utilities. In this case, skip the above substeps and run the

zypper migration --download-onlycommand.Once the repository configuration on the Administration Server has been updated, run the command to check the repositories again. If the configuration is correct, the result should look like the following:

root #crowbarctl upgrade repocheck crowbar +----------------------------------------+-----------+-----------+ | Repository | Status | Type | +----------------------------------------+-----------+-----------+ | SLES12-SP4-Pool | available | os | | SLES12-SP4-Updates | available | os | | SUSE-OpenStack-Cloud-Crowbar-9-Pool | available | openstack | | SUSE-OpenStack-Cloud-Crowbar-9-Updates | available | openstack | +----------------------------------------+-----------+-----------+Now that the repositories are available, the Administration Server itself will be upgraded. The update will run in the background using

zypper dup. Once all packages have been upgraded, the Administration Server will be rebooted and you will be logged out. To start the upgrade run:root #crowbarctl upgrade adminAfter the Administration Server has been successfully updated, the Control Nodes and Compute Nodes will be upgraded. At first the availability of the repositories used to provide packages for the SUSE OpenStack Cloud nodes is tested.

Note: Correct Metadata in the PTF RepositoryWhen adding new repositories to the nodes, make sure that the new PTF repository also contains correct metadata (even if it is empty). To do this, run the

createrepo-cloud-ptfcommand.Note that the configuration for these repositories differs from the one for the Administration Server that was already done in a previous step. In this step the repository locations are made available to Crowbar rather than to libzypp on the Administration Server. To check the repository configuration run the following command:

root #crowbarctl upgrade repocheck nodes +----------------------------------------+-------------------------------------+-----------+ | Repository | Status | Type | +----------------------------------------+-------------------------------------+-----------+ | SLE12-SP4-HA-Pool | missing (x86_64), inactive (x86_64) | ha | | SLE12-SP4-HA-Updates | missing (x86_64), inactive (x86_64) | ha | | SLE12-SP4-HA-Updates-test | missing (x86_64), inactive (x86_64) | ha | | SLES12-SP4-Pool | missing (x86_64), inactive (x86_64) | os | | SLES12-SP4-Updates | missing (x86_64), inactive (x86_64) | os | | SLES12-SP4-Updates-test | missing (x86_64), inactive (x86_64) | os | | SUSE-OpenStack-Cloud-Crowbar-9-Pool | missing (x86_64), inactive (x86_64) | openstack | | SUSE-OpenStack-Cloud-Crowbar-9-Updates | missing (x86_64), inactive (x86_64) | openstack | +----------------------------------------+-------------------------------------+-----------+To update the locations for the listed repositories, start

yast crowbarand proceed as described in Section 7.4, “”.Once the repository configuration for Crowbar has been updated, run the command to check the repositories again to determine, whether the current configuration is correct.

root #crowbarctl upgrade repocheck nodes +----------------------------------------+-----------+-----------+ | Repository | Status | Type | +----------------------------------------+-----------+-----------+ | SLE12-SP4-HA-Pool | available | ha | | SLE12-SP4-HA-Updates | available | ha | | SLES12-SP4-Pool | available | os | | SLES12-SP4-Updates | available | os | | SUSE-OpenStack-Cloud-Crowbar-9-Pool | available | openstack | | SUSE-OpenStack-Cloud-Crowbar-9-Updates | available | openstack | +----------------------------------------+-----------+-----------+Important: Shut Down Running instances in Normal ModeIf the upgrade is done in the normal mode, you need to shut down or suspend all running instances before performing the next step.

Important: Product Media Repository CopiesTo PXE boot new nodes, an additional SUSE Linux Enterprise Server 12 SP4 repository—a copy of the installation system— is required. Although not required during the upgrade procedure, it is recommended to set up this directory now. Refer to Section 5.1, “Copying the Product Media Repositories” for details. If you had also copied the SUSE OpenStack Cloud Crowbar 8 installation media (optional), you may also want to provide the SUSE OpenStack Cloud Crowbar 9 the same way.

Once the upgrade procedure has been successfully finished, you may delete the previous copies of the installation media in

/srv/tftpboot/suse-12.4/x86_64/installand/srv/tftpboot/suse-12.4/x86_64/repos/Cloud.To ensure the status of the nodes does not change during the upgrade process, the majority of the OpenStack services will be stopped on the nodes. As a result, the OpenStack API will no longer be accessible. In case of the non-disruptive mode, the instances will continue to run and stay accessible. Run the following command:

root #crowbarctl upgrade servicesThis step takes a while to finish. Monitor the process by running

crowbarctl upgrade status. Do not proceed beforesteps.services.statusis set topassed.The last step before upgrading the nodes is to make a backup of the OpenStack database. The database dump will be stored on the Administration Server and can be used to restore the database in case something goes wrong during the upgrade.

root #crowbarctl upgrade backup openstackTo find the location of the database dump, run the

crowbarctl upgrade status.The final step of the upgrade procedure is upgrading the nodes. To start the process, enter:

root #crowbarctl upgrade nodes allThe upgrade process runs in the background and can be queried with

crowbarctl upgrade status. Depending on the size of your SUSE OpenStack Cloud it may take several hours, especially when performing a non-disruptive update. In that case, the Compute Nodes are updated one-by-one after instances have been live-migrated to other nodes.Instead of upgrading all nodes you may also upgrade the Control Nodes first and individual Compute Nodes afterwards. Refer to

crowbarctl upgrade nodes --helpfor details. If you choose this approach, you can use thecrowbarctl upgrade statuscommand to monitor the upgrade process. The output of this command contains the following entries:- current_node_action

The current action applied to the node.

- current_substep

Shows the current substep of the node upgrade step. For example, for the

crowbarctl upgrade nodes controllers, thecurrent_substepentry displays thecontroller_nodesstatus when upgrading controllers.

After the controllers have been upgraded, the

steps.nodes.statusentry in the output displays therunningstatus. Check then the status of thecurrent_substep_statusentry. If it displaysfinished, you can move to the next step of upgrading the Compute Nodes.Postponing the Upgrade

It is possible to stop the upgrade of compute nodes and postpone their upgrade with the command:

root #crowbarctl upgrade nodes postponeAfter the upgrade of compute nodes is postponed, you can go to Crowbar Web interface, check the configuration. You can also apply some changes, provided they do not affect the Compute Nodes. During the postponed upgrade, all OpenStack services should be up and running.

To resume the upgrade, issue the command:

root #crowbarctl upgrade nodes resumeFinish the upgrade with either

crowbarctl upgrade nodes allor upgrade nodes one node by one withcrowbarctl upgrade nodes NODE_NAME.When upgrading individual Compute Nodes using the

crowbarctl upgrade nodesNODE_NAME command, thecurrent_substep_statusentry changes tonode_finishedwhen the upgrade of a single node is done. After all nodes have been upgraded, thecurrent_substep_statusentry displaysfinished.

If an error occurs during the upgrade process, the output of the

crowbarctl upgrade status provides a detailed

description of the failure. In most cases, both the output and the error

message offer enough information for fixing the issue. When the problem has

been solved, run the previously-issued upgrade command to resume the

upgrade process.

1.3.4.1 Simultaneous Upgrade of Multiple Nodes #

It is possible to select more Compute Nodes for selective upgrade instead of just one. Upgrading multiple nodes simultaneously significantly reduces the time required for the upgrade.

To upgrade multiple nodes simultaneously, use the following command:

root # crowbarctl upgrade nodes NODE_NAME_1,NODE_NAME_2,NODE_NAME_3Node names can be separated by comma, semicolon, or space. When using space as separator, put the part containing node names in quotes.

Use the following command to find the names of the nodes that haven't been upgraded:

root # crowbarctl upgrade status nodesSince the simultaneous upgrade is intended to be non-disruptive, all Compute Nodes targeted for a simultaneous upgrade must be cleared of any running instances.

You can check what instances are running on a specific node using the following command:

tux > nova list --all-tenants --host NODE_NAME

This means that it is not possible to pick an arbitrary number of

Compute Nodes for the simultaneous upgrade operation: you have to make sure

that it is possible to live-migrate every instance away from the batch of

nodes that are supposed to be upgraded in parallel. In case of high load

on all Compute Nodes, it might not be possible to upgrade more than one node

at a time. Therefore, it is recommended to perform the following steps for

each node targeted for the simultaneous upgrade prior to running the

crowbarctl upgrade nodes command.

Disable the Compute Node so it's not used as a target during live-evacuation of any other node:

tux >openstack compute service set --disable "NODE_NAME" nova-computeEvacuate all running instances from the node:

tux >nova host-evacuate-live "NODE_NAME"

After completing these steps, you can perform a simultaneous upgrade of the selected nodes.

1.3.5 Troubleshooting Upgrade Issues #

- 1. Upgrade of the admin server has failed.

Check for empty, broken, and not signed repositories in the Administration Server upgrade log file

/var/log/crowbar/admin-server-upgrade.log. Fix the repository setup. Upgrade then remaining packages manually to SUSE Linux Enterprise Server 12 SP4 and SUSE OpenStack Cloud 9 using the commandzypper dup. Reboot the Administration Server.

- 2. An upgrade step repeatedly fails due to timeout.

Timeouts for most upgrade operations can be adjusted in the

/etc/crowbar/upgrade_timeouts.ymlfile. If the file doesn't exist, use the following template, and modify it to your needs::prepare_repositories: 120 :pre_upgrade: 300 :upgrade_os: 1500 :post_upgrade: 600 :shutdown_services: 600 :shutdown_remaining_services: 600 :evacuate_host: 300 :chef_upgraded: 1200 :router_migration: 600 :lbaas_evacuation: 600 :set_network_agents_state: 300 :delete_pacemaker_resources: 600 :delete_cinder_services: 300 :delete_nova_services: 300 :wait_until_compute_started: 60 :reload_nova_services: 120 :online_migrations: 1800The following entries may require higher values (all values are specified in seconds):

upgrade_osTime allowed for upgrading all packages of one node.chef_upgradedTime allowed for initialcrowbar_joinandchef-clientrun on a node that has been upgraded and rebooted.evacuate_hostTime allowed for live migrate all VMs from a host.

- 3. Node upgrade has failed during live migration.

The problem may occur when it is not possible to live migrate certain VMs anywhere. It may be necessary to shut down or suspend other VMs to make room for migration. Note that the Bash shell script that starts the live migration for the Compute Node is executed from the Control Node. An error message generated by the

crowbarctl upgrade statuscommand contains the exact names of both nodes. Check the/var/log/crowbar/node-upgrade.logfile on the Control Node for the information that can help you with troubleshooting. You might also need to check OpenStack logs in/var/log/novaon the Compute Node as well as on the Control Nodes.It is possible that live-migration of a certain VM takes too long. This can happen if instances are very large or network connection between compute hosts is slow or overloaded. If this case, try to raise the global timeout in

/etc/crowbar/upgrade_timeouts.yml.We recommend to perform the live migration manually first. After it is completed successfully, call the

crowbarctl upgradecommand again.The following commands can be helpful for analyzing issues with live migrations:

nova server-migration-list nova server-migration-show nova instance-action-list nova instance-actionNote that these commands require OpenStack administrator privileges.

The following log files may contain useful information:

/var/log/nova/nova-computeon the Compute Nodes that the migration is performed from and to./var/log/nova/*.log(especially log files for the conductor, scheduler and placement services) on the Control Nodes.

It can happen that active instances and instances with heavy loads cannot be live migrated in a reasonable time. In that case, you can abort a running live-migration operation using the

nova live-migration-abort MIGRATION-IDcommand. You can then perform the upgrade of the specific node at a later time.Alternatively, it is possible to force the completion of the live migration by using the

nova live-migration-force-complete MIGRATION-IDcommand. However, this might pause the instances for a prolonged period of time and have a negative impact on the workload running inside the instance.

- 4. Node has failed during OS upgrade.

Possible reasons include an incorrect repository setup or package conflicts. Check the

/var/log/crowbar/node-upgrade.loglog file on the affected node. Check the repositories on node using thezypper lrcommand. Make sure the required repositories are available. To test the setup, install a package manually or run thezypper dupcommand (this command is executed by the upgrade script). Fix the repository setup and run the failed upgrade step again. If custom package versions or version locks are in place, make sure that they don't interfere with thezypper dupcommand.

- 5. Node does not come up after reboot.

In some cases, a node can take too long to reboot causing a timeout. We recommend to check the node manually, make sure it is online, and repeat the step.

- 6.

N number of nodes were provided to compute upgrade using

crowbarctl upgrade nodes node_1,node_2,...,node_N, but less then N were actually upgraded. If the live migration cannot be performed for certain nodes due to a timeout, Crowbar upgrades only the nodes that it was able to live-evacuate in the specified time. Because some nodes have been upgraded, it is possible that more resources will be available for live-migration when you try to run this step again. See also Node upgrade has failed during live migration. .

- 7. Node has failed at the initial chef client run stage.

An unsupported entry in the configuration file may prevent a service from starting. This causes the node to fail at the initial chef client run stage. Checking the

/var/log/crowbar/crowbar_join/chef.*log files on the node is a good starting point.

- 8. I need to change OpenStack configuration during the upgrade but I cannot access Crowbar.

Crowbar Web interface is accessible only when an upgrade is completed or when it is postponed. Postponing the upgrade can be done only after upgrading all Control Nodes using the

crowbarctl upgrade nodes postponecommand. You can then access Crowbar and save your modifications. Before you can continue with the upgrade of rest of the nodes, resume the upgrade using thecrowbarctl upgrade nodes resumecommand.

- 9. Failure occurred when evacuating routers.

Check the

/var/log/crowbar/node-upgrade.logfile on the node that performs the router evacuation (it should be mentioned in the error message). The ID of the router that failed to migrate (or the affected network port) is logged to/var/log/crowbar/node-upgrade.log. Use the OpenStack CLI tools to check the state of the affected router and its ports. Fix manually, if necessary. This can be done by bringing the router or port up and down again. The following commands can be useful for solving the issue:openstack router show ID openstack port list --router ROUTER-ID openstack port show PORT-ID openstack port setResume the upgrade by running the failed upgrade step again to continue with the router migration.

- 10.

Some non-controller nodes were upgraded after performing

crowbarctl upgrade nodes controllers. In the current upgrade implementation, OpenStack nodes are divided into Compute Nodes and other nodes. The

crowbarctl upgrade nodes controllerscommand starts the upgrade of all the nodes that do not host compute services. This includes the controllers.

1.4 Recovering from Compute Node Failure #

The following procedure assumes that there is at least one Compute Node already running. Otherwise, see Section 1.5, “Bootstrapping the Compute Plane”.

If the Compute Node failed, it should have been fenced. Verify that this is the case. Otherwise, check

/var/log/pacemaker.logon the Designated Coordinator to determine why the Compute Node was not fenced. The most likely reason is a problem with STONITH devices.Determine the cause of the Compute Node's failure.

Rectify the root cause.

Boot the Compute Node again.

Check whether the

crowbar_joinscript ran successfully on the Compute Node. If this is not the case, check the log files to find out the reason. Refer to Section 5.4.2, “On All Other Crowbar Nodes” to find the exact location of the log file.If the

chef-clientagent triggered bycrowbar_joinsucceeded, confirm that thepacemaker_remoteservice is up and running.Check whether the remote node is registered and considered healthy by the core cluster. If this is not the case check

/var/log/pacemaker.logon the Designated Coordinator to determine the cause. There should be a remote primitive running on the core cluster (active/passive). This primitive is responsible for establishing a TCP connection to thepacemaker_remoteservice on port 3121 of the Compute Node. Ensure that nothing is preventing this particular TCP connection from being established (for example, problems with NICs, switches, firewalls etc.). One way to do this is to run the following commands:tux >lsof -i tcp:3121tux >tcpdump tcp port 3121If Pacemaker can communicate with the remote node, it should start the

nova-computeservice on it as part of the cloned groupcl-g-nova-computeusing the NovaCompute OCF resource agent. This cloned group will block startup ofnova-evacuateuntil at least one clone is started.A necessary, related but different procedure is described in Section 1.5, “Bootstrapping the Compute Plane”.

It may happen that

novaComputehas been launched correctly on the Compute Node bylrmd, but theopenstack-nova-computeservice is still not running. This usually happens whennova-evacuatedid not run correctly.If

nova-evacuateis not running on one of the core cluster nodes, make sure that the service is marked as started (target-role="Started"). If this is the case, then your cloud does not have any Compute Nodes already running as assumed by this procedure.If

nova-evacuateis started but it is failing, check the Pacemaker logs to determine the cause.If

nova-evacuateis started and functioning correctly, it should call nova'sevacuateAPI to release resources used by the Compute Node and resurrect elsewhere any VMs that died when it failed.If

openstack-nova-computeis running, but VMs are not booted on the node, check that the service is not disabled or forced down using theopenstack compute service listcommand. In case the service is disabled, run theopenstack compute service set –enable SERVICE_IDcommand. If the service is forced down, run the following commands:tux >fence_nova_param () { key="$1" cibadmin -Q -A "//primitive[@id="fence-nova"]//nvpair[@name='$key']" | \ sed -n '/.*value="/{s///;s/".*//;p}' }tux >fence_compute \ --auth-url=`fence_nova_param auth-url` \ --endpoint-type=`fence_nova_param endpoint-type` \ --tenant-name=`fence_nova_param tenant-name` \ --domain=`fence_nova_param domain` \ --username=`fence_nova_param login` \ --password=`fence_nova_param passwd` \ -n COMPUTE_HOSTNAME \ --action=on

The above steps should be performed automatically after the node is booted. If that does not happen, try the following debugging techniques.

Check the evacuate attribute for the Compute Node in the

Pacemaker cluster's attrd service using the

command:

tux > attrd_updater -p -n evacuate -N NODEPossible results are the following:

The attribute is not set. Refer to Step 1 in Procedure 1.1, “Procedure for Recovering from Compute Node Failure”.

The attribute is set to

yes. This means that the Compute Node was fenced, butnova-evacuatenever initiated the recovery procedure by calling nova's evacuate API.The attribute contains a time stamp, in which case the recovery procedure was initiated at the time indicated by the time stamp, but has not completed yet.

If the attribute is set to

no, the recovery procedure recovered successfully and the cloud is ready for the Compute Node to rejoin.

If the attribute is stuck with the wrong value, it can be set to

no using the command:

tux > attrd_updater -n evacuate -U no -N NODE

After standard fencing has been performed, fence agent

fence_compute should activate the secondary

fencing device (fence-nova). It does this by setting

the attribute to yes to mark the node as needing

recovery. The agent also calls nova's

force_down API to notify it that the host is down.

You should be able to see this in

/var/log/nova/fence_compute.log on the node in the core

cluster that was running the fence-nova agent at

the time of fencing. During the recovery, fence_compute

tells nova that the host is up and running again.

1.5 Bootstrapping the Compute Plane #

If the whole compute plane is down, it is not always obvious how to boot it

up, because it can be subject to deadlock if evacuate attributes are set on

every Compute Node. In this case, manual intervention is

required. Specifically, the operator must manually choose one or more

Compute Nodes to bootstrap the compute plane, and then run the

attrd_updater -n evacuate -U no -N NODE

command for each

of those Compute Nodes to indicate that they do not require the resurrection

process and can have their nova-compute start up straight

away. Once these Compute Nodes are up, this breaks the deadlock allowing

nova-evacuate to start. This way, any other nodes that

require resurrection can be processed automatically. If no resurrection is

desired anywhere in the cloud, then the attributes should be set to

no for all nodes.

If Compute Nodes are started too long after the

remote-* resources are started on the control plane,

they are liable to fencing. This should be avoided.

1.6 Bootstrapping the MariaDB Galera Cluster with Pacemaker when a node is missing #

Pacemaker does not promote a node to master until it received from all nodes the latest Sequence number (seqno). That is a problem when one node of the MariaDB Galera Cluster is down (eg. due to hardware or network problems) because the Sequence number can not be received from the unavailable node. To recover a MariaDB Galera Cluster manual steps are needed to select a bootstrap node for MariaDB Galera Cluster and to promote that node with Pacemaker.

Selecting the correct bootstrap node (depending on the highest Sequence number (seqno)) is important. If the wrong node is selected data loss is possible.

To find out which node has the latest Sequence number, call the following command on all MariaDB Galera Cluster nodes and select the node with the highest Sequence number.

mysqld_safe --wsrep-recover tail -5 /var/log/mysql/mysqld.log ... [Note] WSREP: Recovered position: 7a477edc-757d-11e9-a01a-d218e7381711:2490At the end of

/var/log/mysql/mysqld.logthe Sequence number is written (in this example, the sequence number is 2490). After all Sequence numbers are collected from all nodes, the node with the highest Sequence number is selected for bootstrap node. In this example, the node with the highest Sequence number is callednode1.Temporarily mark the galera Pacemaker resource as unmanaged:

crm resource unmanage galera

Mark the node as bootstrap node (call the following commands from the bootstrap node which is

node1in this example):crm_attribute -N node1 -l reboot --name galera-bootstrap -v true crm_attribute -N node1 -l reboot --name master-galera -v 100Promote the bootstrap node:

crm_resource --force-promote -r galera -V

Redetect the current state of the galera resource:

crm resource cleanup galera

Return the control to Pacemaker:

crm resource manage galera crm resource start galera

The MariaDB Galera Cluster is now running and Pacemaker is handling the cluster.

1.7 Updating MariaDB with Galera #

Updating MariaDB with Galera must be done manually. Crowbar does not install updates automatically. Updates can be done with Pacemaker or with the CLI. In particular, manual updating applies to upgrades to MariaDB 10.2.17 or higher from MariaDB 10.2.16 or earlier. See MariaDB 10.2.22 Release Notes - Notable Changes.

In order to run the following update steps, the database cluster needs to be up and healthy.

Using the Pacemaker GUI, update MariaDB with the following procedure:

Put the cluster into maintenance mode. Detailed information about the Pacemaker GUI and its operation is available in the https://documentation.suse.com/sle-ha/15-SP1/single-html/SLE-HA-guide/#cha-conf-hawk2.

Perform a rolling upgrade to MariaDB following the instructions at Upgrading Between Minor Versions with Galera Cluster.

The process involves the following steps:

Stop MariaDB

Uninstall the old versions of MariaDB and the Galera wsrep provider

Install the new versions of MariaDB and the Galera wsrep provider

Change configuration options if necessary

Start MariaDB

Run

mysql_upgradewith the--skip-write-binlogoption

Each node must upgraded individually so that the cluster is always operational.

Using the Pacemaker GUI, take the cluster out of maintenance mode.

When updating with the CLI, the database cluster must be up and healthy. Update MariaDB with the following procedure:

Mark Galera as unmanaged:

crm resource unmanage galera

Or put the whole cluster into maintenance mode:

crm configure property maintenance-mode=true

Pick a node other than the one currently targeted by the load balancer and stop MariaDB on that node:

crm_resource --wait --force-demote -r galera -V

Perform updates with the following steps:

Uninstall the old versions of MariaDB and the Galera wsrep provider.

Install the new versions of MariaDB and the Galera wsrep provider. Select the appropriate instructions at Installing MariaDB with zypper.

Change configuration options if necessary.

Start MariaDB on the node.

crm_resource --wait --force-promote -r galera -V

Run

mysql_upgradewith the--skip-write-binlogoption.On the other nodes, repeat the process detailed above: stop MariaDB, perform updates, start MariaDB, run

mysql_upgrade.Mark Galera as managed:

crm resource manage galera

Or take the cluster out of maintenance mode.

1.8 Load Balancer: Octavia Administration #

1.8.1 Removing load balancers #

The following procedures demonstrate how to delete a load

balancer that is in the ERROR,

PENDING_CREATE, or

PENDING_DELETE state.

Query the Neutron service for the loadbalancer ID:

tux >neutron lbaas-loadbalancer-list neutron CLI is deprecated and will be removed in the future. Use openstack CLI instead. +--------------------------------------+---------+----------------------------------+--------------+---------------------+----------+ | id | name | tenant_id | vip_address | provisioning_status | provider | +--------------------------------------+---------+----------------------------------+--------------+---------------------+----------+ | 7be4e4ab-e9c6-4a57-b767-da9af5ba7405 | test-lb | d62a1510b0f54b5693566fb8afeb5e33 | 192.168.1.10 | ERROR | haproxy | +--------------------------------------+---------+----------------------------------+--------------+---------------------+----------+Connect to the neutron database:

ImportantThe default database name depends on the life cycle manager. Ardana uses

ovs_neutronwhile Crowbar usesneutron.Ardana:

mysql> use ovs_neutron

Crowbar:

mysql> use neutron

Get the pools and healthmonitors associated with the loadbalancer:

mysql> select id, healthmonitor_id, loadbalancer_id from lbaas_pools where loadbalancer_id = '7be4e4ab-e9c6-4a57-b767-da9af5ba7405'; +--------------------------------------+--------------------------------------+--------------------------------------+ | id | healthmonitor_id | loadbalancer_id | +--------------------------------------+--------------------------------------+--------------------------------------+ | 26c0384b-fc76-4943-83e5-9de40dd1c78c | 323a3c4b-8083-41e1-b1d9-04e1fef1a331 | 7be4e4ab-e9c6-4a57-b767-da9af5ba7405 | +--------------------------------------+--------------------------------------+--------------------------------------+

Get the members associated with the pool:

mysql> select id, pool_id from lbaas_members where pool_id = '26c0384b-fc76-4943-83e5-9de40dd1c78c'; +--------------------------------------+--------------------------------------+ | id | pool_id | +--------------------------------------+--------------------------------------+ | 6730f6c1-634c-4371-9df5-1a880662acc9 | 26c0384b-fc76-4943-83e5-9de40dd1c78c | | 06f0cfc9-379a-4e3d-ab31-cdba1580afc2 | 26c0384b-fc76-4943-83e5-9de40dd1c78c | +--------------------------------------+--------------------------------------+

Delete the pool members:

mysql> delete from lbaas_members where id = '6730f6c1-634c-4371-9df5-1a880662acc9'; mysql> delete from lbaas_members where id = '06f0cfc9-379a-4e3d-ab31-cdba1580afc2';

Find and delete the listener associated with the loadbalancer:

mysql> select id, loadbalancer_id, default_pool_id from lbaas_listeners where loadbalancer_id = '7be4e4ab-e9c6-4a57-b767-da9af5ba7405'; +--------------------------------------+--------------------------------------+--------------------------------------+ | id | loadbalancer_id | default_pool_id | +--------------------------------------+--------------------------------------+--------------------------------------+ | 3283f589-8464-43b3-96e0-399377642e0a | 7be4e4ab-e9c6-4a57-b767-da9af5ba7405 | 26c0384b-fc76-4943-83e5-9de40dd1c78c | +--------------------------------------+--------------------------------------+--------------------------------------+ mysql> delete from lbaas_listeners where id = '3283f589-8464-43b3-96e0-399377642e0a';

Delete the pool associated with the loadbalancer:

mysql> delete from lbaas_pools where id = '26c0384b-fc76-4943-83e5-9de40dd1c78c';

Delete the healthmonitor associated with the pool:

mysql> delete from lbaas_healthmonitors where id = '323a3c4b-8083-41e1-b1d9-04e1fef1a331';

Delete the loadbalancer:

mysql> delete from lbaas_loadbalancer_statistics where loadbalancer_id = '7be4e4ab-e9c6-4a57-b767-da9af5ba7405'; mysql> delete from lbaas_loadbalancers where id = '7be4e4ab-e9c6-4a57-b767-da9af5ba7405';

Query the Octavia service for the loadbalancer ID:

tux >openstack loadbalancer list --column id --column name --column provisioning_status +--------------------------------------+---------+---------------------+ | id | name | provisioning_status | +--------------------------------------+---------+---------------------+ | d8ac085d-e077-4af2-b47a-bdec0c162928 | test-lb | ERROR | +--------------------------------------+---------+---------------------+Query the Octavia service for the amphora IDs (in this example we use

ACTIVE/STANDBYtopology with 1 spare Amphora):tux >openstack loadbalancer amphora list +--------------------------------------+--------------------------------------+-----------+--------+---------------+-------------+ | id | loadbalancer_id | status | role | lb_network_ip | ha_ip | +--------------------------------------+--------------------------------------+-----------+--------+---------------+-------------+ | 6dc66d41-e4b6-4c33-945d-563f8b26e675 | d8ac085d-e077-4af2-b47a-bdec0c162928 | ALLOCATED | BACKUP | 172.30.1.7 | 192.168.1.8 | | 1b195602-3b14-4352-b355-5c4a70e200cf | d8ac085d-e077-4af2-b47a-bdec0c162928 | ALLOCATED | MASTER | 172.30.1.6 | 192.168.1.8 | | b2ee14df-8ac6-4bb0-a8d3-3f378dbc2509 | None | READY | None | 172.30.1.20 | None | +--------------------------------------+--------------------------------------+-----------+--------+---------------+-------------+Query the Octavia service for the loadbalancer pools:

tux >openstack loadbalancer pool list +--------------------------------------+-----------+----------------------------------+---------------------+----------+--------------+----------------+ | id | name | project_id | provisioning_status | protocol | lb_algorithm | admin_state_up | +--------------------------------------+-----------+----------------------------------+---------------------+----------+--------------+----------------+ | 39c4c791-6e66-4dd5-9b80-14ea11152bb5 | test-pool | 86fba765e67f430b83437f2f25225b65 | ACTIVE | TCP | ROUND_ROBIN | True | +--------------------------------------+-----------+----------------------------------+---------------------+----------+--------------+----------------+Connect to the octavia database:

mysql> use octavia

Delete any listeners, pools, health monitors, and members from the load balancer:

mysql> delete from listener where load_balancer_id = 'd8ac085d-e077-4af2-b47a-bdec0c162928'; mysql> delete from health_monitor where pool_id = '39c4c791-6e66-4dd5-9b80-14ea11152bb5'; mysql> delete from member where pool_id = '39c4c791-6e66-4dd5-9b80-14ea11152bb5'; mysql> delete from pool where load_balancer_id = 'd8ac085d-e077-4af2-b47a-bdec0c162928';

Delete the amphora entries in the database:

mysql> delete from amphora_health where amphora_id = '6dc66d41-e4b6-4c33-945d-563f8b26e675'; mysql> update amphora set status = 'DELETED' where id = '6dc66d41-e4b6-4c33-945d-563f8b26e675'; mysql> delete from amphora_health where amphora_id = '1b195602-3b14-4352-b355-5c4a70e200cf'; mysql> update amphora set status = 'DELETED' where id = '1b195602-3b14-4352-b355-5c4a70e200cf';

Delete the load balancer instance:

mysql> update load_balancer set provisioning_status = 'DELETED' where id = 'd8ac085d-e077-4af2-b47a-bdec0c162928';

The following script automates the above steps:

#!/bin/bash if (( $# != 1 )); then echo "Please specify a loadbalancer ID" exit 1 fi LB_ID=$1 set -u -e -x readarray -t AMPHORAE < <(openstack loadbalancer amphora list \ --format value \ --column id \ --column loadbalancer_id \ | grep ${LB_ID} \ | cut -d ' ' -f 1) readarray -t POOLS < <(openstack loadbalancer show ${LB_ID} \ --format value \ --column pools) mysql octavia --execute "delete from listener where load_balancer_id = '${LB_ID}';" for p in "${POOLS[@]}"; do mysql octavia --execute "delete from health_monitor where pool_id = '${p}';" mysql octavia --execute "delete from member where pool_id = '${p}';" done mysql octavia --execute "delete from pool where load_balancer_id = '${LB_ID}';" for a in "${AMPHORAE[@]}"; do mysql octavia --execute "delete from amphora_health where amphora_id = '${a}';" mysql octavia --execute "update amphora set status = 'DELETED' where id = '${a}';" done mysql octavia --execute "update load_balancer set provisioning_status = 'DELETED' where id = '${LB_ID}';"

1.9 Periodic OpenStack Maintenance Tasks #

Heat-manage helps manage Heat specific database operations. The associated

database should be periodically purged to save space. The following should

be setup as a cron job on the servers where the heat service is running at

/etc/cron.weekly/local-cleanup-heat

with the following content:

#!/bin/bash su heat -s /bin/bash -c "/usr/bin/heat-manage purge_deleted -g days 14" || :

nova-manage db archive_deleted_rows command will move deleted rows

from production tables to shadow tables. Including

--until-complete will make the command run continuously

until all deleted rows are archived. It is recommended to setup this task

as /etc/cron.weekly/local-cleanup-nova

on the servers where the nova service is running, with the

following content:

#!/bin/bash su nova -s /bin/bash -c "/usr/bin/nova-manage db archive_deleted_rows --until-complete" || :

1.10 Rotating Fernet Tokens #

Fernet tokens should be rotated frequently for security purposes.

It is recommended to setup this task as a cron job in

/etc/cron.weekly/openstack-keystone-fernet

on the keystone server designated as a master node in a highly

available setup with the following content:

#!/bin/bash su keystone -s /bin/bash -c "keystone-manage fernet_rotate" /usr/bin/keystone-fernet-keys-push.sh 192.168.81.168; /usr/bin/keystone-fernet-keys-push.sh 192.168.81.169;

The IP addresses in the above example, i.e. 192.168.81.168 and 192.168.81.169 are the IP addresses of the other two nodes of a three-node cluster. Be sure to use the correct IP addresses when configuring the cron job. Note that if the master node is offline and a new master is elected, the cron job will need to be removed from the previous master node and then re-created on the new master node. Do not run the fernet_rotate cron job on multiple nodes.

For a non-HA setup, the cron job should be configured at

/etc/cron.weekly/openstack-keystone-fernet

on the keystone server as follows:

#!/bin/bash su keystone -s /bin/bash -c "keystone-manage fernet_rotate"