7 Software RAID Configuration #

The purpose of RAID (redundant array of independent disks) is to combine several hard disk partitions into one large virtual hard disk to optimize performance, data security, or both. Most RAID controllers use the SCSI protocol because it can address a larger number of hard disks more effectively than the IDE protocol and is better suited to parallel processing of commands. Some RAID controllers support IDE or SATA hard disks. Software RAID provides the advantages of RAID systems without the additional cost of hardware RAID controllers. However, it consumes CPU time and memory, which makes it unsuitable for true high-performance computers.

Software RAID underneath clustered file systems needs to be set up using a cluster multi-device (Cluster MD). Refer to the High Availability documentation at https://documentation.suse.com/sle-ha/html/SLE-HA-all/cha-ha-cluster-md.html.

SUSE Linux Enterprise offers the option of combining several hard disks into one soft RAID system. RAID comprises several strategies for combining hard disks, each with different goals, advantages, and characteristics. These variations are commonly known as RAID levels.

7.1 Understanding RAID Levels #

This section describes common RAID levels 0, 1, 2, 3, 4, 5, 6, and nested RAID levels.

7.1.1 RAID 0 #

This level improves the performance of your data access by spreading out blocks of each file across multiple disks. Strictly speaking, this is not a RAID, because it provides no redundancy, but the name RAID 0 for this type of system has become the norm. With RAID 0, two or more hard disks are pooled together. The performance is very good, but the RAID system is destroyed and your data lost if even one hard disk fails.

7.1.2 RAID 1 #

This level provides adequate security for your data, because the data is copied 1:1 to another hard disk. This is known as hard disk mirroring. If a disk is destroyed, a copy of its contents is available on another mirrored disk. All disks except one could be damaged without endangering your data. However, if damage is not detected, the damaged data might be mirrored to the intact disk, corrupting the data there as well. Write performance suffers a little from the copying process compared to single disk access (10 to 20 percent slower), but read access is significantly faster than on any one of the physical hard disks, because the data is duplicated and can therefore be read in parallel. RAID 1 generally provides nearly twice the read transaction rate of a single disk and almost the same write transaction rate.

7.1.3 RAID 2 and RAID 3 #

These are not typical RAID implementations. Level 2 stripes data at the bit level rather than the block level. Level 3 provides byte-level striping with a dedicated parity disk and cannot service simultaneous multiple requests. Both levels are rarely used.

7.1.4 RAID 4 #

Level 4 provides block-level striping like Level 0 combined with a dedicated parity disk. If a data disk fails, the parity data is used to reconstruct its contents on a replacement disk. However, the parity disk might create a bottleneck for write access. Nevertheless, Level 4 is sometimes used.

7.1.5 RAID 5 #

RAID 5 is an optimized compromise between Level 0 and Level 1 in terms of performance and redundancy. The usable hard disk space equals that of the number of disks used minus one. The data is distributed over the hard disks as with RAID 0. Parity blocks, distributed across the partitions, provide the redundancy. The data blocks of a stripe are linked with each other by XOR, so the contents of a lost block can be reconstructed from the corresponding parity block and the remaining blocks if a disk fails. With RAID 5, no more than one hard disk can fail at the same time. If one hard disk fails, it must be replaced as soon as possible to avoid the risk of losing data.
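The XOR reconstruction described above can be illustrated with plain shell arithmetic. This is only a sketch: each "block" is reduced to a single byte, and the values are arbitrary assumptions chosen for the example, not anything the md driver exposes.

```shell
# Three data blocks from one stripe, each reduced to one byte for illustration.
d1=$(( 0xA5 ))
d2=$(( 0x3C ))
d3=$(( 0x0F ))

# The parity block is the XOR of all data blocks in the stripe.
parity=$(( d1 ^ d2 ^ d3 ))

# Simulate losing d2: XOR the surviving blocks with the parity to rebuild it.
rebuilt=$(( d1 ^ d3 ^ parity ))

printf 'parity=0x%02X rebuilt=0x%02X\n' "$parity" "$rebuilt"
# prints: parity=0x96 rebuilt=0x3C
```

Because XOR is its own inverse, the same operation serves both to compute the parity and to recover any single missing block.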

7.1.6 RAID 6 #

RAID 6 is essentially an extension of RAID 5 that allows for additional fault tolerance by using a second independent distributed parity scheme (dual parity). Even if two of the hard disks fail during the data recovery process, the system continues to be operational, with no data loss.

RAID 6 provides extremely high data fault tolerance by sustaining multiple simultaneous drive failures. It handles the loss of any two devices without data loss. Accordingly, it requires N+2 drives to store N drives' worth of data. It requires a minimum of four devices.

The performance for RAID 6 is slightly lower but comparable to RAID 5 in normal mode and single disk failure mode. It is very slow in dual disk failure mode. A RAID 6 configuration needs a considerable amount of CPU time and memory for write operations.

| Feature | RAID 5 | RAID 6 |
|---|---|---|
| Number of devices | N+1, minimum of 3 | N+2, minimum of 4 |
| Parity | Distributed, single | Distributed, dual |
| Performance | Medium impact on write and rebuild | More impact on sequential write than RAID 5 |
| Fault-tolerance | Failure of one component device | Failure of two component devices |
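The device counts in the comparison translate directly into usable capacity. The following sketch assumes that all member devices have the same size; the function name `usable_capacity` and the sizes used are hypothetical and serve only as illustration.

```shell
# usable_capacity LEVEL DEVICES SIZE
# Prints the usable capacity in the same unit as SIZE,
# assuming every member device contributes SIZE.
usable_capacity() {
    level=$1; devices=$2; size=$3
    case "$level" in
        0) echo $(( devices * size )) ;;        # striping only, no redundancy
        1) echo "$size" ;;                      # every device holds a full copy
        5) echo $(( (devices - 1) * size )) ;;  # one device's worth of parity
        6) echo $(( (devices - 2) * size )) ;;  # two devices' worth of parity
        *) echo "unsupported RAID level: $level" >&2; return 1 ;;
    esac
}

usable_capacity 5 4 500   # four 500 GiB devices in RAID 5: prints 1500
usable_capacity 6 4 500   # the same devices in RAID 6: prints 1000
```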

7.1.7 Nested and Complex RAID Levels #

Other RAID levels have been developed, such as RAIDn, RAID 10, RAID 0+1, RAID 30, and RAID 50. Some are proprietary implementations created by hardware vendors. Examples for creating RAID 10 configurations can be found in Chapter 9, Creating Software RAID 10 Devices.

7.2 Soft RAID Configuration with YaST #

The YaST soft RAID configuration can be reached from the YaST Expert Partitioner. This partitioning tool also enables you to edit and delete existing partitions and create new ones that should be used with soft RAID. These instructions apply to setting up RAID levels 0, 1, 5, and 6. Setting up RAID 10 configurations is explained in Chapter 9, Creating Software RAID 10 Devices.

Launch YaST and open the Expert Partitioner.

If necessary, create partitions that should be used with your RAID configuration. Do not format them and set the partition type to . When using existing partitions it is not necessary to change their partition type; YaST will do so automatically. Refer to Section 12.1, “Using the YaST Partitioner” for details.

It is strongly recommended to use partitions stored on different hard disks to decrease the risk of losing data if one is defective (RAID 1 and 5) and to optimize the performance of RAID 0.

For RAID 0 at least two partitions are needed. RAID 1 requires exactly two partitions, while at least three partitions are required for RAID 5. A RAID 6 setup requires at least four partitions. It is recommended to use only partitions of the same size because each segment can contribute only the same amount of space as the smallest sized partition.

In the left panel, select .

A list of existing RAID configurations opens in the right panel.

At the lower left of the RAID page, click .

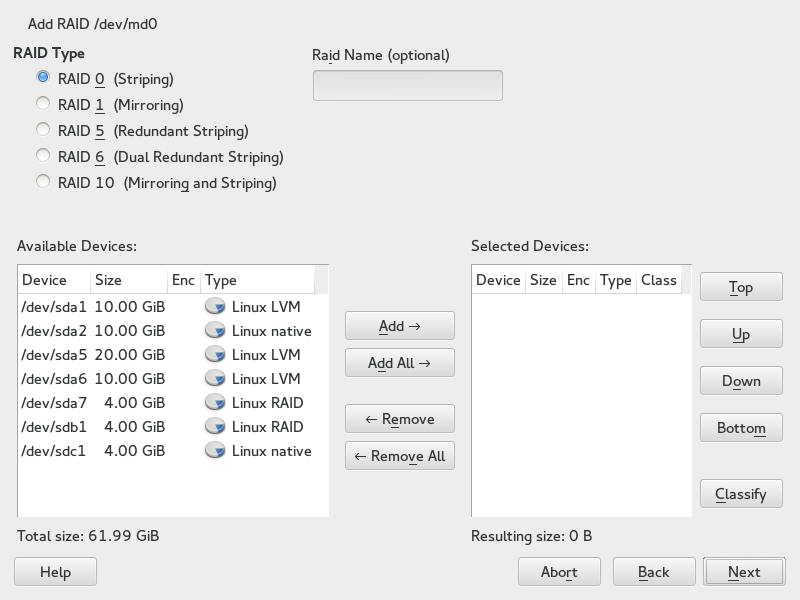

Select a and an appropriate number of partitions from the dialog.

You can optionally assign a to your RAID. It will make it available as

/dev/md/NAME. See Section 7.2.1, “RAID Names” for more information.Figure 7.1: Example RAID 5 Configuration #Proceed with .

Select the chunk size and, if applicable, the parity algorithm. The optimal chunk size depends on the type of data and the type of RAID. See https://raid.wiki.kernel.org/index.php/RAID_setup#Chunk_sizes for more information. More information on parity algorithms can be found with man 8 mdadm when searching for the --layout option. If unsure, stick with the defaults.

Choose a for the volume. Your choice here only affects the default values for the upcoming dialog. They can be changed in the next step. If in doubt, choose .

Under , select , then select the . The content of the menu depends on the file system. Usually there is no need to change the defaults.

Under , select , then select the mount point. Click to add special mounting options for the volume.

Click .

Click , verify that the changes are listed, then click .
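A comparable array can also be created on the command line with mdadm. The sketch below only assembles and prints the command for review instead of running it. The partition names are hypothetical placeholders and must be replaced with partitions that actually exist on your system; the name myRAID is the example name used in Section 7.2.1.

```shell
# Hypothetical values: adjust the name, level, and member partitions.
raid_name="myRAID"
raid_level=5
members="/dev/sdb1 /dev/sdc1 /dev/sdd1"

# Count the member partitions so that --raid-devices matches the list.
set -- $members
count=$#

# Assemble the command and print it for review; nothing is executed here.
cmd="mdadm --create /dev/md/$raid_name --level=$raid_level --raid-devices=$count $members"
echo "$cmd"
```

After checking the printed command, it would need to be run with root privileges. Creating an array overwrites the existing content of the member partitions, so verify the device names carefully first.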

7.2.1 RAID Names #

By default, software RAID devices have numeric names following the pattern mdN, where N is a number. As such they can be accessed as, for example, /dev/md127 and are listed as md127 in /proc/mdstat and /proc/partitions. Working with these names can be clumsy. SUSE Linux Enterprise Server offers two ways to work around this problem:

- Providing a Named Link to the Device

You can optionally specify a name for the RAID device when creating it with YaST or on the command line with mdadm --create '/dev/md/NAME'. The device name will still be mdN, but a link /dev/md/NAME will be created:

    tux > ls -og /dev/md
    total 0
    lrwxrwxrwx 1 8 Dec 9 15:11 myRAID -> ../md127

The device will still be listed as md127 under /proc.

- Providing a Named Device

In case a named link to the device is not sufficient for your setup, add the line CREATE names=yes to /etc/mdadm.conf by running the following command:

    tux > echo "CREATE names=yes" | sudo tee -a /etc/mdadm.conf

It will cause names like myRAID to be used as a “real” device name. The device will not only be accessible at /dev/myRAID, but also be listed as myRAID under /proc. Note that this will only apply to RAIDs configured after the change to the configuration file. Active RAIDs will continue to use the mdN names until they get stopped and re-assembled.

Warning: Incompatible Tools

Not all tools may support named RAID devices. In case a tool expects a RAID device to be named mdN, it will fail to identify the devices.
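When a tool insists on the mdN name, a named link can be resolved back to the underlying device. The helper below is a minimal sketch: the function name `raid_kernel_name` is hypothetical, and the optional second argument exists only so the function can be tried against a directory other than /dev/md.

```shell
# raid_kernel_name NAME [MD_DIR]
# Resolve a named RAID link to the name of the device it points to.
raid_kernel_name() {
    name=$1
    md_dir=${2:-/dev/md}
    # Refuse anything that is not a symbolic link.
    [ -L "$md_dir/$name" ] || return 1
    target=$(readlink -f "$md_dir/$name") || return 1
    basename "$target"
}

# Usage: raid_kernel_name myRAID
# With the link shown in the ls listing, this would print md127.
```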

7.3 Troubleshooting Software RAIDs #

Check the /proc/mdstat file to find out whether a RAID partition has been damaged. If a disk fails, shut down your Linux system and replace the defective hard disk with a new one partitioned the same way. Then restart your system and enter the command mdadm /dev/mdX --add /dev/sdX. Replace X with your particular device identifiers. This integrates the hard disk automatically into the RAID system and fully reconstructs it (for all RAID levels except for RAID 0).

Although you can access all data during the rebuild, you might encounter some performance issues until the RAID has been fully rebuilt.
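Checking /proc/mdstat can be scripted. In that file, each array has a status string such as [UU], where an underscore marks a missing member. The sketch below prints the names of arrays with at least one missing member. The function name `degraded_arrays` is hypothetical, and the sample input is made up for illustration; it is not output from a real system.

```shell
# Read mdstat-formatted text on standard input and print degraded arrays.
degraded_arrays() {
    awk '
        /^md/ { name = $1 }                  # remember the array being described
        match($0, /\[[U_]+\]/) {             # status string such as [UU] or [_U]
            status = substr($0, RSTART, RLENGTH)
            if (index(status, "_") > 0) print name
        }
    '
}

# Illustrative sample input (not from a real system).
degraded_arrays <<'EOF'
Personalities : [raid1]
md127 : active raid1 sdb1[1] sda1[0](F)
      1048576 blocks super 1.2 [2/1] [_U]
md126 : active raid1 sdd1[1] sdc1[0]
      1048576 blocks super 1.2 [2/2] [UU]
unused devices: <none>
EOF
# prints: md127
```

On a running system, the function would be called as degraded_arrays < /proc/mdstat.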

7.3.1 Recovery after Failing Disk is Back Again #

There are several reasons a disk included in a RAID array may fail. Here is a list of the most common ones:

- Problems with the disk media.

- Disk drive controller failure.

- Broken connection to the disk.

In the case of disk media or controller failure, the device needs to be replaced or repaired. If a hot spare was not configured within the RAID, manual intervention is required.

In the last case, the failed device can be automatically re-added by the mdadm command after the connection is repaired (which might be automatic).

Because md/mdadm cannot reliably determine what caused the disk failure, it assumes a serious disk error and treats any failed device as faulty until it is explicitly told that the device is reliable.

Under some circumstances, such as storage devices with an internal RAID array, connection problems are very often the cause of the device failure. In such a case, you can tell mdadm that it is safe to automatically --re-add the device after it appears. You can do this by adding the following line to /etc/mdadm.conf:

POLICY action=re-add

Note that the device will be automatically re-added after re-appearing only if the udev rules cause mdadm -I DISK_DEVICE_NAME to be run on any device that spontaneously appears (default behavior), and if write-intent bitmaps are configured (they are by default).

If you want this policy to apply only to some devices and not to others, add the path= option to the POLICY line in /etc/mdadm.conf to restrict the non-default action to selected devices. Wild cards can be used to identify groups of devices. See man 5 mdadm.conf for more information.
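As a sketch of such a restricted policy, the following /etc/mdadm.conf fragment limits the automatic re-add to devices behind one controller. The path pattern is a hypothetical example modeled on the names found under /dev/disk/by-path and must be adapted to your hardware.

```
# Hypothetical path pattern: re-add only devices attached to this controller.
POLICY path=pci-0000:00:1f.2-* action=re-add
```

Devices that do not match the pattern keep the default behavior and are not re-added automatically.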

7.4 For More Information #

Configuration instructions and more details for soft RAID can be found in the Howtos at:

- The Linux RAID wiki: https://raid.wiki.kernel.org/

- The Software RAID HOWTO in the /usr/share/doc/packages/mdadm/Software-RAID.HOWTO.html file

Linux RAID mailing lists are also available, such as linux-raid at http://marc.info/?l=linux-raid.