19 The systemd daemon

systemd initializes the system. It has the process ID 1. systemd is started directly by the kernel and resists signal 9, which normally terminates processes. All other programs are started directly by systemd or by one of its child processes. systemd is a replacement for the System V init daemon and is fully compatible with System V init (by supporting init scripts).

The main advantage of systemd is that it considerably speeds up boot time by parallelizing service starts. Furthermore, systemd only starts a service when it is really needed: daemons are not started unconditionally at boot time, but when they are required for the first time. systemd also supports Kernel Control Groups (cgroups), creating snapshots, and restoring the system state. For more details, see https://www.freedesktop.org/wiki/Software/systemd/.

systemd inside WSL

Windows Subsystem for Linux (WSL) enables running Linux applications and distributions under the Microsoft Windows operating system. WSL uses its own init process instead of systemd. To enable systemd in SLES running in WSL, install the wsl_systemd pattern, which automates the process:

> sudo zypper in -t pattern wsl_systemd

Alternatively, you can manually edit /etc/wsl.conf and add the following lines:

[boot]
systemd=true

Keep in mind that the support for systemd in WSL is partial: systemd unit files must have reasonable process management behavior.

19.1 The systemd concept

The following section explains the concept behind systemd.

systemd is a system and session manager for Linux, compatible with System V and LSB init scripts. The main features of systemd include:

- parallelization capabilities
- socket and D-Bus activation for starting services
- on-demand starting of daemons
- tracking of processes using Linux cgroups
- creating snapshots and restoring of the system state
- maintaining mount and automount points
- an elaborate transactional dependency-based service control logic

19.1.1 Unit file

A unit configuration file contains information about a service, a socket, a device, a mount point, an automount point, a swap file or partition, a start-up target, a watched file system path, a timer controlled and supervised by systemd, a temporary system state snapshot, a resource management slice, or a group of externally created processes.

“Unit file” is a generic term used by systemd for the following:

- Service. Information about a process (for example, running a daemon); file ends with .service
- Targets. Used for grouping units and as synchronization points during start-up; file ends with .target
- Sockets. Information about an IPC or network socket or a file system FIFO, for socket-based activation (like inetd); file ends with .socket
- Path. Used to trigger other units (for example, running a service when files change); file ends with .path
- Timer. Information about a timer controlled by systemd, for timer-based activation; file ends with .timer
- Mount point. Normally auto-generated by the fstab generator; file ends with .mount
- Automount point. Information about a file system automount point; file ends with .automount
- Swap. Information about a swap device or file for memory paging; file ends with .swap
- Device. Information about a device unit as exposed in the sysfs/udev(7) device tree; file ends with .device
- Scope / slice. A concept for hierarchically managing resources of a group of processes; file ends with .scope/.slice

For more information about systemd unit files, see https://www.freedesktop.org/software/systemd/man/latest/systemd.unit.html
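Because the unit type is encoded in the file name suffix, a script can tell unit types apart without opening the file. The following Python sketch maps the suffixes from the list above to unit types; the function name unit_type is hypothetical and not part of any systemd tooling.

```python
from pathlib import PurePosixPath

# Unit file suffixes from the list above, mapped to the unit type they denote.
UNIT_SUFFIXES = {
    ".service": "service",
    ".target": "target",
    ".socket": "socket",
    ".path": "path",
    ".timer": "timer",
    ".mount": "mount",
    ".automount": "automount",
    ".swap": "swap",
    ".device": "device",
    ".scope": "scope",
    ".slice": "slice",
}


def unit_type(unit_name: str) -> str:
    """Return the unit type implied by the suffix of a unit file name or path."""
    suffix = PurePosixPath(unit_name).suffix
    if suffix not in UNIT_SUFFIXES:
        raise ValueError(f"unknown unit file suffix in {unit_name!r}")
    return UNIT_SUFFIXES[suffix]
```

For example, unit_type("systemd-tmpfiles-clean.timer") returns "timer", and a full path such as /usr/lib/systemd/system/graphical.target works as well.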

19.2 Basic usage

The System V init system uses several commands to handle services: the init scripts, insserv, telinit and others. systemd makes it easier to manage services, because there is only one command to handle most service-related tasks: systemctl. It uses the “command plus subcommand” notation like git or zypper:

systemctl GENERAL OPTIONS SUBCOMMAND SUBCOMMAND OPTIONS

See man 1 systemctl for a complete manual.

If the output goes to a terminal (and not to a pipe or a file, for example), systemd commands send long output to a pager by default. Use the --no-pager option to turn off paging mode.

systemd also supports bash-completion, allowing you to enter the first letters of a subcommand and then press →| (Tab). This feature is only available in the bash shell and requires the installation of the package bash-completion.

19.2.1 Managing services in a running system

Subcommands for managing services are the same as for managing a service with System V init (start, stop, ...). The general syntax for service management commands is as follows:

- systemd
  systemctl reload|restart|start|status|stop|... MY_SERVICE(S)
- System V init
  rcMY_SERVICE(S) reload|restart|start|status|stop|...

systemd allows you to manage several services in one go. Instead of executing init scripts one after the other as with System V init, execute a command like the following:

> sudo systemctl start MY_1ST_SERVICE MY_2ND_SERVICE

To list all services available on the system:

> sudo systemctl list-unit-files --type=service

The following table lists the most important service management commands for systemd and System V init:

| Task | systemd Command | System V init Command |
|---|---|---|
| Starting. | start | start |
| Stopping. | stop | stop |
| Restarting. Shuts down services and starts them afterward. If a service is not yet running, it is started. | restart | restart |
| Restarting conditionally. Restarts services if they are currently running. Does nothing for services that are not running. | try-restart | try-restart |
| Reloading. Tells services to reload their configuration files without interrupting operation. Use case: tell Apache to reload a modified configuration file. | reload | reload |
| Reloading or restarting. Reloads services if reloading is supported, otherwise restarts them. If a service is not yet running, it is started. | reload-or-restart | n/a |
| Reloading or restarting conditionally. Reloads services if reloading is supported, otherwise restarts them if currently running. Does nothing for services that are not running. | reload-or-try-restart | n/a |
| Getting detailed status information. Lists information about the status of services. | status | status |
| Getting short status information. Shows whether services are active or not. | is-active | status |
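The rows in which both systems use the same action name can be expressed as a small lookup. The following Python sketch, built around the hypothetical helper rc_to_systemctl, turns an rcNAME ACTION invocation into the equivalent systemctl argument list. It assumes that the rcNAME shortcut and the systemd unit share the same NAME, which is common on SUSE systems but not guaranteed.

```python
from __future__ import annotations

# Actions that exist under the same name in both columns of the table above.
SHARED_ACTIONS = {"start", "stop", "restart", "try-restart", "reload", "status"}


def rc_to_systemctl(command: str) -> list[str]:
    """Translate 'rcNAME ACTION' into an equivalent systemctl argument list."""
    words = command.split()
    if len(words) != 2 or not words[0].startswith("rc") or len(words[0]) == 2:
        raise ValueError(f"expected 'rcNAME ACTION', got {command!r}")
    script, action = words
    if action not in SHARED_ACTIONS:
        raise ValueError(f"no direct systemctl equivalent listed for {action!r}")
    # Assumption: the unit is named after the part following the 'rc' prefix.
    return ["systemctl", action, script[2:] + ".service"]
```

The resulting list can be passed to subprocess.run(..., check=True). Any action outside the shared set raises ValueError instead of guessing a translation.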

19.2.2 Permanently enabling/disabling services

The service management commands mentioned in the previous section let you manipulate services for the current session. systemd also lets you permanently enable or disable services, so they are automatically started when requested or are always unavailable. You can do this either by using YaST or on the command line.

19.2.2.1 Enabling/disabling services on the command line

The following table lists enabling and disabling commands for systemd and System V init:

| Task | systemd Command | System V init Command |
|---|---|---|
| Enabling. | systemctl enable MY_SERVICE(S) | insserv MY_SERVICE(S) |
| Disabling. | systemctl disable MY_SERVICE(S) | insserv -r MY_SERVICE(S) |
| Checking. Shows whether a service is enabled or not. | systemctl is-enabled MY_SERVICE | chkconfig MY_SERVICE |
| Re-enabling. Similar to restarting a service, this command first disables and then enables a service. Useful to re-enable a service with its defaults. | systemctl reenable MY_SERVICE | n/a |
| Masking. After “disabling” a service, it can still be started manually. To disable a service completely, you need to mask it. Use with care. | systemctl mask MY_SERVICE | n/a |
| Unmasking. A service that has been masked can only be used again after it has been unmasked. | systemctl unmask MY_SERVICE | n/a |

When enabling a service on the command line, it is not started automatically. It is scheduled to be started with the next system start-up or runlevel/target change. To immediately start a service after having enabled it, explicitly run systemctl start MY_SERVICE or rcMY_SERVICE start.

19.3 System start and target management

The entire process of starting the system and shutting it down is maintained by systemd. From this point of view, the kernel can be considered a background process to maintain all other processes and adjust CPU time and hardware access according to requests from other programs.

19.3.1 Targets compared to runlevels

With System V init, the system was booted into a so-called “runlevel”. A runlevel defines how the system is started and what services are available in the running system. Runlevels are numbered; the most commonly known ones are 0 (shutting down the system), 3 (multiuser with network) and 5 (multiuser with network and display manager).

systemd introduces a new concept by using so-called “target units”. However, it remains fully compatible with the runlevel concept. Target units are named rather than numbered and serve specific purposes. For example, the targets local-fs.target and swap.target mount local file systems and swap spaces.

The target graphical.target provides a multiuser system with network and display manager capabilities and is equivalent to runlevel 5. Complex targets, such as graphical.target, act as “meta” targets by combining a subset of other targets. Since systemd makes it easy to create custom targets by combining existing targets, it offers great flexibility.

The following list shows the most important systemd target units. For a full list, refer to man 7 systemd.special.

systemd target units

- default.target: The target that is booted by default. Not a “real” target, but rather a symbolic link to another target like graphical.target. Can be permanently changed via YaST (see Section 19.4, “Managing services with YaST”). To change it for a session, use the kernel parameter systemd.unit=MY_TARGET.target at the boot prompt.
- emergency.target: Starts a minimal emergency root shell on the console. Only use it at the boot prompt as systemd.unit=emergency.target.
- graphical.target: Starts a system with network, multiuser support and a display manager.
- halt.target: Shuts down the system.
- mail-transfer-agent.target: Starts all services necessary for sending and receiving mails.
- multi-user.target: Starts a multiuser system with network.
- reboot.target: Reboots the system.
- rescue.target: Starts a single-user root session without network. Basic tools for system administration are available. The rescue target is suitable for solving multiple system problems, for example, failing logins or fixing issues with a display driver.

To remain compatible with the System V init runlevel system, systemd provides special targets named runlevelX.target, which map to the corresponding runlevels numbered X.

To inspect the default target, use the command systemctl get-default.

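System V runlevels and systemd target units

| System V runlevel | systemd target | Purpose |
|---|---|---|
| 0 | runlevel0.target, halt.target, poweroff.target | System shutdown |
| 1, S | runlevel1.target, rescue.target | Single-user mode |
| 2 | runlevel2.target, multi-user.target | Local multiuser without remote network |
| 3 | runlevel3.target, multi-user.target | Full multiuser with network |
| 4 | runlevel4.target | Unused/User-defined |
| 5 | runlevel5.target, graphical.target | Full multiuser with network and display manager |
| 6 | runlevel6.target, reboot.target | System reboot |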
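The runlevelX.target compatibility targets make it possible to translate a System V telinit RUNLEVEL call into systemctl isolate. The following Python sketch uses the aliases that systemd.special(7) documents for these compatibility targets; runlevel 4 is kept as runlevel4.target, matching its unused/user-defined role. The helper isolate_command is hypothetical.

```python
from __future__ import annotations

# Runlevel -> systemd target, based on the runlevelX.target aliases.
RUNLEVEL_TARGETS = {
    "0": "poweroff.target",
    "1": "rescue.target",
    "S": "rescue.target",
    "2": "multi-user.target",
    "3": "multi-user.target",
    "4": "runlevel4.target",  # no predefined purpose
    "5": "graphical.target",
    "6": "reboot.target",
}


def isolate_command(runlevel: str) -> list[str]:
    """Return the systemctl command corresponding to 'telinit RUNLEVEL'."""
    try:
        target = RUNLEVEL_TARGETS[runlevel.upper()]
    except KeyError:
        raise ValueError(f"unknown runlevel: {runlevel!r}") from None
    return ["systemctl", "isolate", target]
```

The returned list can be handed to subprocess.run(); for example, isolate_command("3") yields the systemd counterpart of telinit 3.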

systemd ignores /etc/inittab

The runlevels in a System V init system are configured in /etc/inittab. systemd does not use this configuration. Refer to Section 19.5.5, “Creating custom targets” for instructions on how to create your own bootable target.

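19.3.1.1 Commands to change targets

Use the following commands to operate with target units:

| Task | systemd Command | System V init Command |
|---|---|---|
| Change the current target/runlevel | systemctl isolate MY_TARGET.target | telinit X |
| Change to the default target/runlevel | systemctl default | n/a |
| Get the current target/runlevel | systemctl list-units --type=target. With systemd, there is usually more than one active target; the command lists all currently active targets. | who -r or runlevel |
| Persistently change the default runlevel | Use the Services Manager or run the following command: systemctl set-default MY_TARGET.target | Use the Services Manager or change the line id:X:initdefault: in /etc/inittab |
| Change the default runlevel for the current boot process | Enter the following option at the boot prompt: systemd.unit=MY_TARGET.target | Enter the desired runlevel number at the boot prompt. |
| Show a target's/runlevel's dependencies | systemctl show -p "Requires" MY_TARGET.target and systemctl show -p "Wants" MY_TARGET.target. “Requires” lists the hard dependencies (the ones that must be resolved), whereas “Wants” lists the soft dependencies (the ones that get resolved if possible). | n/a |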
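To inspect dependencies from a script, both properties can be requested at once, for example with systemctl show -p Requires -p Wants MY_TARGET.target, which prints one KEY=VALUE line per property. The following Python sketch splits that output into lists of unit names; the helper name is hypothetical and the unit names in the test data are illustrative.

```python
from __future__ import annotations


def parse_show_output(output: str) -> dict[str, list[str]]:
    """Split 'systemctl show -p Requires -p Wants UNIT' output into unit lists.

    Each line has the form KEY=VALUE, where VALUE is a space-separated
    (possibly empty) list of unit names.
    """
    dependencies: dict[str, list[str]] = {}
    for line in output.splitlines():
        key, sep, value = line.partition("=")
        if sep:  # ignore lines without a KEY=VALUE shape
            dependencies[key] = value.split()
    return dependencies
```

Comparing the Requires list with the Wants list makes the difference between hard and soft dependencies easy to see in scripts.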

19.3.2 Debugging system start-up

systemd offers the means to analyze the system start-up process. You can review the list of all services and their status (rather than having to parse /var/log/). systemd also allows you to scan the start-up procedure to find out how much time each service start-up consumes.

19.3.2.1 Review start-up of services

To review the complete list of services that have been started since booting the system, enter the command systemctl. It lists all active services as shown below (shortened). To get more information on a specific service, use systemctl status MY_SERVICE.

# systemctl
UNIT                       LOAD   ACTIVE SUB     JOB DESCRIPTION
[...]
iscsi.service              loaded active exited      Login and scanning of iSC+
kmod-static-nodes.service  loaded active exited      Create list of required s+
libvirtd.service           loaded active running     Virtualization daemon
nscd.service               loaded active running     Name Service Cache Daemon
chronyd.service            loaded active running     NTP Server Daemon
polkit.service             loaded active running     Authorization Manager
postfix.service            loaded active running     Postfix Mail Transport Ag+
rc-local.service           loaded active exited      /etc/init.d/boot.local Co+
rsyslog.service            loaded active running     System Logging Service
[...]
LOAD   = Reflects whether the unit definition was properly loaded.
ACTIVE = The high-level unit activation state, i.e. generalization of SUB.
SUB    = The low-level unit activation state, values depend on unit type.

161 loaded units listed. Pass --all to see loaded but inactive units, too.
To show all installed unit files use 'systemctl list-unit-files'.
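The LOAD, ACTIVE and SUB columns described above can also be evaluated in a script. The following Python sketch assumes output produced with systemctl list-units --plain --no-legend (no bullet symbols, no header or legend) and no pending jobs, so that each line holds UNIT LOAD ACTIVE SUB DESCRIPTION; the helper names are hypothetical.

```python
from __future__ import annotations

from typing import NamedTuple


class UnitState(NamedTuple):
    unit: str
    load: str
    active: str
    sub: str
    description: str


def parse_list_units(output: str) -> list[UnitState]:
    """Parse 'systemctl list-units --plain --no-legend' output.

    Assumes each line is UNIT LOAD ACTIVE SUB DESCRIPTION with an empty JOB
    column; the description may contain spaces.
    """
    units = []
    for line in output.splitlines():
        fields = line.split(None, 4)
        if len(fields) < 4:
            continue  # skip blank or unexpected lines
        fields += [""] * (5 - len(fields))  # tolerate a missing description
        units.append(UnitState(*fields))
    return units


def failed_units(output: str) -> list[str]:
    """Return the names of units whose ACTIVE state is 'failed'."""
    return [state.unit for state in parse_list_units(output) if state.active == "failed"]
```

For example, failed_units(subprocess.run(["systemctl", "list-units", "--plain", "--no-legend"], capture_output=True, text=True).stdout) returns the same units that systemctl --failed reports.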

To restrict the output to services that failed to start, use the

--failed option:

# systemctl --failed

UNIT LOAD ACTIVE SUB JOB DESCRIPTION

apache2.service loaded failed failed apache

NetworkManager.service loaded failed failed Network Manager

plymouth-start.service loaded failed failed Show Plymouth Boot Screen

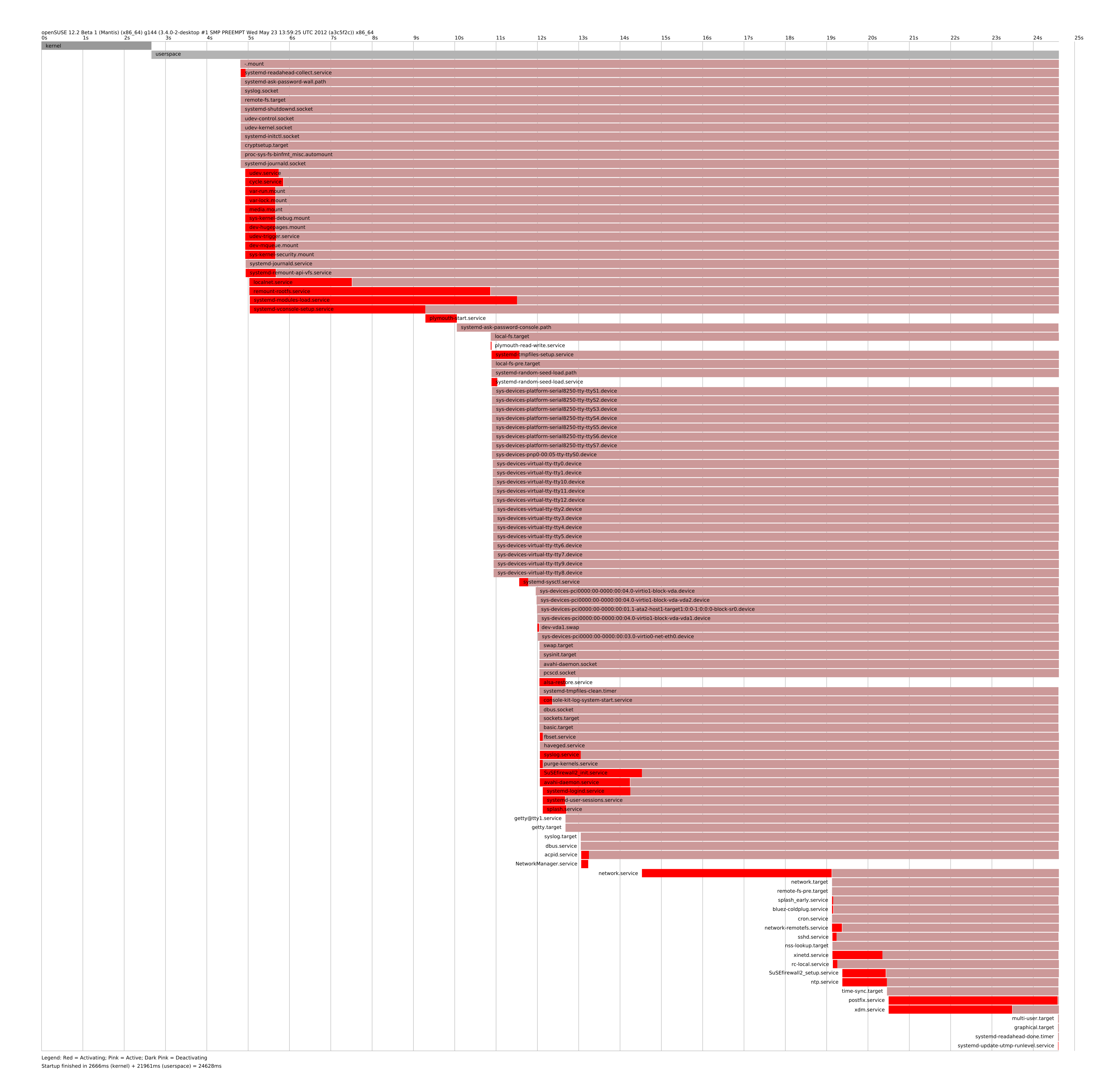

[...]19.3.2.2 Debug start-up time #

To debug system start-up time, systemd offers the systemd-analyze command. It shows the total start-up time and a list of services ordered by start-up time, and it can also generate an SVG graphic showing the time services took to start in relation to the other services.

- Listing the system start-up time

# systemd-analyze
Startup finished in 2666ms (kernel) + 21961ms (userspace) = 24628ms

- Listing the services start-up time

# systemd-analyze blame
    15.000s backup-rpmdb.service
    14.879s mandb.service
     7.646s backup-sysconfig.service
     4.940s postfix.service
     4.921s logrotate.service
     4.640s libvirtd.service
     4.519s display-manager.service
     3.921s btrfsmaintenance-refresh.service
     3.466s lvm2-monitor.service
     2.774s plymouth-quit-wait.service
     2.591s firewalld.service
     2.137s initrd-switch-root.service
     1.954s ModemManager.service
     1.528s rsyslog.service
     1.378s apparmor.service
    [...]

- Services start-up time graphics

# systemd-analyze plot > jupiter.example.com-startup.svg
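The blame output is easy to post-process, for example to sum up start-up times or to compare two boots. The following Python sketch parses lines of the form shown above into (seconds, unit) pairs. It assumes that each duration is printed as one or more tokens such as 1min, 2.345s or 123ms; the function name parse_blame is hypothetical.

```python
from __future__ import annotations

import re

# Assumed duration suffixes in 'systemd-analyze blame' output.
SECONDS_PER_UNIT = {"h": 3600.0, "min": 60.0, "s": 1.0, "ms": 1e-3, "us": 1e-6}
DURATION_TOKEN = re.compile(r"(\d+(?:\.\d+)?)(h|min|ms|us|s)")


def parse_blame(output: str) -> list[tuple[float, str]]:
    """Parse 'systemd-analyze blame' lines into (seconds, unit) pairs."""
    results = []
    for line in output.splitlines():
        words = line.split()
        if len(words) < 2:
            continue  # skip blank lines and elision markers such as "[...]"
        *duration_tokens, unit = words
        seconds = 0.0
        for token in duration_tokens:
            match = DURATION_TOKEN.fullmatch(token)
            if match is None:
                raise ValueError(f"unrecognized duration {token!r} in {line!r}")
            value, suffix = match.groups()
            seconds += float(value) * SECONDS_PER_UNIT[suffix]
        results.append((seconds, unit))
    return results
```

With the pairs in hand, max(pairs) names the slowest unit and sum(s for s, _ in pairs) gives the accumulated (not wall-clock) start-up time, since services start in parallel.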

19.3.2.3 Review the complete start-up process

The commands above list the services that are started and their start-up times. For a more detailed overview, specify the following parameters at the boot prompt to instruct systemd to create a verbose log of the complete start-up procedure:

systemd.log_level=debug systemd.log_target=kmsg

Now systemd writes its log messages into the kernel ring buffer. View that buffer with dmesg:

> dmesg -T | less

19.3.3 System V compatibility

systemd is compatible with System V, allowing you to still use

existing System V init scripts. However, there is at least one known

issue where a System V init script does not work with systemd out of

the box: starting a service as a different user via

su or sudo in init scripts

results in a failure of the script, producing an “Access

denied” error.

When changing the user with su or

sudo, a PAM session is started. This session will be

terminated after the init script is finished. As a consequence, the

service that has been started by the init script is also

terminated. To work around this error, proceed as follows:

Create a service file wrapper with the same name as the init script plus the file name extension

.service:[Unit] Description=DESCRIPTION After=network.target [Service] User=USER Type=forking1 PIDFile=PATH TO PID FILE1 ExecStart=PATH TO INIT SCRIPT start ExecStop=PATH TO INIT SCRIPT stop ExecStopPost=/usr/bin/rm -f PATH TO PID FILE1 [Install] WantedBy=multi-user.target2

Replace all values written in UPPERCASE LETTERS with appropriate values.

Start the daemon with

systemctl start APPLICATION.

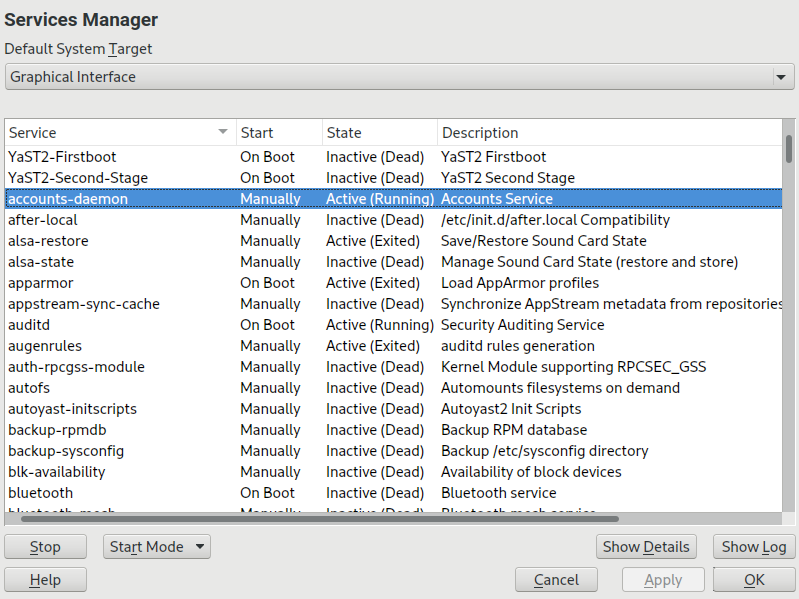

19.4 Managing services with YaST

Basic service management can also be done with the YaST Services Manager module. It supports starting, stopping, enabling and disabling services. It also lets you show a service's status and change the default target. Start the Services Manager module from the YaST Control Center.

- Changing the default target
  To change the target the system boots into, choose a target from the drop-down box. The most often used targets are the graphical interface target (starting a graphical login screen) and the multi-user target (starting the system in command line mode).
- Starting or stopping a service
  Select a service from the table. The state column shows whether it is currently running (active) or not (inactive). Toggle its status by starting or stopping it.
  Starting or stopping a service changes its status for the currently running session. To change its status throughout a reboot, you need to enable or disable it.
- Defining service start-up behavior
  Services can either be started automatically at boot time or manually. Select a service from the table. The start column shows whether it is currently started on boot or manually. Toggle its status by changing its start mode.
  To change a service status in the current session, you need to start or stop it as described above.
- Viewing status messages
  To view the status message of a service, select it from the list and choose to show its details. The output is identical to the one generated by the command systemctl -l status MY_SERVICE.

19.5 Customizing systemd

The following sections describe how to customize systemd unit files.

19.5.1 Where are unit files stored?

systemd unit files shipped by SUSE are stored in /usr/lib/systemd/. Customized unit files and unit file drop-ins are stored in /etc/systemd/.

When customizing systemd, always use the directory /etc/systemd/ instead of /usr/lib/systemd/. Otherwise your changes will be overwritten by the next update of systemd.

19.5.2 Override with drop-in files

Drop-in files (or drop-ins) are partial unit files that override only specific settings of the unit file. Drop-ins take precedence over the main configuration file. The command

systemctl edit SERVICE

starts the default text editor and creates a directory with an empty override.conf file in /etc/systemd/system/NAME.service.d/. The command also ensures that the running systemd process is notified about the changes.

For example, to change the amount of time that the system waits for MariaDB to start, run sudo systemctl edit mariadb.service and edit the opened file to include only the modified lines, together with the header of the section they belong to:

[Service]
# Configures the time to wait for start-up/stop
TimeoutSec=300

Adjust the TimeoutSec value and save the changes. To enable the changes, run sudo systemctl daemon-reload.
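The effect of a drop-in can be modeled as overlaying its settings on those of the unit file, section by section. The following Python sketch is a simplified model, not systemd's actual parser: it only handles single-valued settings such as TimeoutSec (list-valued settings such as ExecStart= accumulate in systemd and are reset by an empty assignment), and it ignores line continuations. It rejects settings that appear before any section header; in a real drop-in, each setting must likewise be placed under its section, for example [Service] for TimeoutSec. The helper names and the unit contents in the test data are hypothetical.

```python
from __future__ import annotations


def parse_unit(text: str) -> dict[str, dict[str, str]]:
    """Parse unit-file text into {section: {key: value}}.

    Blank lines and comment lines (starting with # or ;) are ignored.
    """
    sections: dict[str, dict[str, str]] = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#;":
            continue
        if line.startswith("[") and line.endswith("]"):
            current = sections.setdefault(line[1:-1], {})
        elif current is None:
            raise ValueError(f"setting outside of any section: {line!r}")
        else:
            key, sep, value = line.partition("=")
            if not sep:
                raise ValueError(f"not a KEY=VALUE line: {line!r}")
            current[key.strip()] = value.strip()
    return sections


def apply_dropins(unit_text: str, *dropin_texts: str) -> dict[str, dict[str, str]]:
    """Overlay drop-ins on a unit in the given order; later assignments win."""
    merged = parse_unit(unit_text)
    for dropin in dropin_texts:
        for section, settings in parse_unit(dropin).items():
            merged.setdefault(section, {}).update(settings)
    return merged
```

Settings that the drop-in does not mention, such as Description in the [Unit] section, keep the values from the main unit file.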

For further information, refer to the man page man 1 systemctl.

If you use the --full option in the systemctl edit --full SERVICE command, a copy of the original unit file is created where you can modify specific options. We do not recommend such customization, because when the unit file is updated by SUSE, its changes are overridden by the customized copy in the /etc/systemd/system/ directory. Moreover, if SUSE provides updates to distribution drop-ins, they override the copy of the unit file created with --full. To prevent this confusion and always have your customization valid, use drop-ins.

19.5.3 Creating drop-in files manually

Apart from using the systemctl edit command, you can create drop-ins manually to have more control over their priority. Such drop-ins let you extend both unit and daemon configuration files without having to edit or override the files themselves. They are stored in the following directories:

- /etc/systemd/*.conf.d/, /etc/systemd/system/*.service.d/: Drop-ins added and customized by system administrators.
- /usr/lib/systemd/*.conf.d/, /usr/lib/systemd/system/*.service.d/: Drop-ins installed by customization packages to override upstream settings. For example, SUSE ships systemd-default-settings.

See the man page man 5 systemd.unit for the full list of unit search paths.

For example, to disable the rate limiting that is enforced by the default setting of systemd-journald, follow these steps:

Create a directory called /etc/systemd/journald.conf.d:

> sudo mkdir /etc/systemd/journald.conf.d

Note: The directory name must match the name of the configuration file or unit that you want to patch with the drop-in file, followed by .d (here journald.conf.d for journald.conf).

In that directory, create a file /etc/systemd/journald.conf.d/60-rate-limit.conf with the option that you want to override, placed under its section header, for example:

> cat /etc/systemd/journald.conf.d/60-rate-limit.conf
[Journal]
# Disable rate limiting
RateLimitIntervalSec=0

Save your changes and restart the service of the corresponding systemd daemon:

> sudo systemctl restart systemd-journald

To avoid name conflicts between your drop-ins and files shipped by SUSE, it is recommended to prefix all drop-ins with a two-digit number and a dash, for example, 80-override.conf.

The following ranges are reserved:

- 0-19 is reserved for systemd upstream.
- 20-29 is reserved for systemd shipped by SUSE.
- 30-39 is reserved for SUSE packages other than systemd.
- 40-49 is reserved for third-party packages.
- 50 is reserved for unit drop-in files created with systemctl set-property.

Use a two-digit number above this range to ensure that none of the drop-ins shipped by SUSE can override your own drop-ins.
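The ordering rules behind these recommendations can be sketched as follows. systemd.unit(5) describes drop-ins as being applied in lexicographic order of their file names, regardless of the directory they reside in, with a file in /etc taking precedence over a file of the same name in /run or /usr/lib. The Python sketch below models only those three directory prefixes and the reserved ranges listed above; the helper names and file paths are hypothetical.

```python
from __future__ import annotations

from pathlib import PurePosixPath

# Directory prefixes for drop-ins, highest precedence first (simplified).
DIR_PRECEDENCE = ("/etc/", "/run/", "/usr/lib/")

# Reserved two-digit prefixes, as listed above.
RESERVED_RANGES = (
    (0, 19, "systemd upstream"),
    (20, 29, "systemd shipped by SUSE"),
    (30, 39, "SUSE packages other than systemd"),
    (40, 49, "third-party packages"),
    (50, 50, "systemctl set-property"),
)


def effective_dropins(paths: list[str]) -> list[str]:
    """Return the drop-ins that take effect, in the order they are applied.

    Files are ordered by file name regardless of directory; if a file name
    occurs in several directories, only the highest-precedence copy is used.
    """
    chosen: dict[str, tuple[int, str]] = {}
    for path in paths:
        rank = next(
            (i for i, prefix in enumerate(DIR_PRECEDENCE) if path.startswith(prefix)),
            None,
        )
        if rank is None:
            raise ValueError(f"not in a known drop-in directory: {path}")
        name = PurePosixPath(path).name
        if name not in chosen or rank < chosen[name][0]:
            chosen[name] = (rank, path)
    return [chosen[name][1] for name in sorted(chosen)]


def reserved_for(file_name: str) -> str | None:
    """Return who an NN-name.conf prefix is reserved for, or None if it is free."""
    prefix, dash, _rest = file_name.partition("-")
    if not dash or len(prefix) != 2 or not prefix.isdigit():
        return None
    number = int(prefix)
    for low, high, owner in RESERVED_RANGES:
        if low <= number <= high:
            return owner
    return None
```

Under these rules, a drop-in named 80-override.conf in /etc is applied after any drop-in in the reserved 20-49 range, so its settings win.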

You can use systemctl cat UNIT to list and verify which files are taken into account in the unit's configuration.

Because the configuration of systemd components can be scattered across different places on the file system, it might be hard to get a global overview. To inspect the configuration of a systemd component, use the following commands:

- systemctl cat UNIT_PATTERN prints configuration files related to one or more systemd units, for example:
  > systemctl cat atd.service
- systemd-analyze cat-config DAEMON_NAME_OR_PATH prints the contents of a configuration file and its drop-ins for a systemd daemon, for example:
  > systemd-analyze cat-config systemd/journald.conf

19.5.4 Converting xinetd services to systemd

Since the release of SUSE Linux Enterprise Server 15, the xinetd infrastructure has been removed. This section outlines how to convert existing custom xinetd service files to systemd sockets.

For each xinetd service file, you need at least two systemd unit files: the socket file (*.socket) and an associated service file (*.service). The socket file tells systemd which socket to create, and the service file tells systemd which executable to start.

Consider the following example xinetd service file:

# cat /etc/xinetd.d/example
service example
{
  socket_type = stream
  protocol = tcp
  port = 10085
  wait = no
  user = user
  group = users
  groups = yes
  server = /usr/libexec/example/exampled
  server_args = -auth=bsdtcp exampledump
  disable = no
}

To convert it to systemd, you need the following two matching files:

# cat /usr/lib/systemd/system/example.socket
[Socket]
ListenStream=0.0.0.0:10085
Accept=false

[Install]
WantedBy=sockets.target

# cat /usr/lib/systemd/system/example.service
[Unit]
Description=example

[Service]
ExecStart=/usr/libexec/example/exampled -auth=bsdtcp exampledump
User=user
Group=users
StandardInput=socket

For a complete list of the systemd “socket” and “service” file options, refer to the systemd.socket and systemd.service manual pages (man 5 systemd.socket, man 5 systemd.service).
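Because the conversion above is mechanical for simple services, it can be scripted. The following Python sketch parses xinetd blocks laid out like the example (a service NAME line, braces on their own lines, plain key = value assignments; the += and -= operators are not handled) and renders the two unit files. It mirrors the mapping shown above, including Accept=false, and does not interpret xinetd's wait, groups or disable settings. The helper names are hypothetical; review the generated units before installing them.

```python
from __future__ import annotations


def parse_xinetd(text: str) -> dict[str, dict[str, str]]:
    """Parse simple xinetd service blocks into {service: {key: value}}."""
    services: dict[str, dict[str, str]] = {}
    name = None
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.startswith("service "):
            name = line.split(None, 1)[1]
        elif line == "{":
            current = {}
        elif line == "}":
            if name is None or current is None:
                raise ValueError("closing brace without an open service block")
            services[name] = current
            name = current = None
        else:
            key, sep, value = line.partition("=")  # split at the first '=' only
            if current is None or not sep:
                raise ValueError(f"unexpected line: {line!r}")
            current[key.strip()] = value.strip()
    return services


def to_systemd_units(name: str, cfg: dict[str, str]) -> tuple[str, str]:
    """Render matching .socket and .service unit text for one TCP stream service."""
    if cfg.get("socket_type") != "stream" or cfg.get("protocol") != "tcp":
        raise ValueError("this sketch only handles TCP stream services")
    socket_unit = (
        "[Socket]\n"
        f"ListenStream=0.0.0.0:{cfg['port']}\n"
        "Accept=false\n"
        "\n"
        "[Install]\n"
        "WantedBy=sockets.target\n"
    )
    exec_start = " ".join(p for p in (cfg["server"], cfg.get("server_args", "")) if p)
    service_unit = (
        "[Unit]\n"
        f"Description={name}\n"
        "\n"
        "[Service]\n"
        f"ExecStart={exec_start}\n"
        f"User={cfg['user']}\n"
        f"Group={cfg['group']}\n"
        "StandardInput=socket\n"
    )
    return socket_unit, service_unit
```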

19.5.5 Creating custom targets

On System V init SUSE systems, runlevel 4 is unused to allow administrators to create their own runlevel configuration. systemd allows you to create any number of custom targets. It is suggested to start by adapting an existing target such as graphical.target.

Copy the configuration file /usr/lib/systemd/system/graphical.target to /etc/systemd/system/MY_TARGET.target and adjust it according to your needs.

The configuration file copied in the previous step already covers the required (“hard”) dependencies for the target. To also cover the wanted (“soft”) dependencies, create a directory /etc/systemd/system/MY_TARGET.target.wants.

For each wanted service, create a symbolic link from /usr/lib/systemd/system into /etc/systemd/system/MY_TARGET.target.wants.

When you have finished setting up the target, reload the systemd configuration to make the new target available:

> sudo systemctl daemon-reload
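The .wants steps above can be scripted. The following Python sketch computes the symbolic links for a hypothetical custom target and creates them. The root parameter is an assumption added so the layout can be built under a scratch directory; on a real system it stays "/" and the script must run as root. Copying graphical.target and running daemon-reload are left to the commands shown above.

```python
from __future__ import annotations

import os
from pathlib import Path


def wants_links(target: str, services: list[str], root: str = "/") -> list[tuple[Path, Path]]:
    """Return (link, link_target) pairs for TARGET.wants, following the steps above."""
    base = Path(root)
    wants_dir = base / "etc/systemd/system" / f"{target}.wants"
    vendor_dir = base / "usr/lib/systemd/system"
    return [(wants_dir / service, vendor_dir / service) for service in services]


def create_wants_links(pairs: list[tuple[Path, Path]]) -> None:
    """Create the .wants directory and the symbolic links, skipping existing links."""
    for link, link_target in pairs:
        link.parent.mkdir(parents=True, exist_ok=True)
        if not link.is_symlink():
            os.symlink(link_target, link)
```

Skipping links that already exist keeps the script safe to run repeatedly while the target is being refined.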

19.6 Advanced usage

The following sections cover advanced topics for system administrators. For even more advanced systemd documentation, refer to Lennart Poettering's series about systemd for administrators at https://0pointer.de/blog/projects/.

19.6.1 Cleaning temporary directories

systemd supports cleaning temporary directories regularly. The configuration from the previous system version is automatically migrated and active. tmpfiles.d, which is responsible for managing temporary files, reads its configuration from /etc/tmpfiles.d/*.conf, /run/tmpfiles.d/*.conf, and /usr/lib/tmpfiles.d/*.conf files. Configuration placed in /etc/tmpfiles.d/*.conf overrides related configurations from the other two directories (/usr/lib/tmpfiles.d/*.conf is where packages store their configuration files).

The configuration format is one line per path containing action and path, and optionally mode, ownership, age and argument fields, depending on the action. The following example unlinks the X11 lock files:

# Type Path               Mode UID GID Age Argument
r      /tmp/.X[0-9]*-lock
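To check what a tmpfiles.d line means, it helps to split it into its columns. The following Python sketch maps one line onto the column names of the header shown above, treating - as an omitted field. It is a simplification of the format described in man 5 tmpfiles.d: quoting, specifiers such as %h, and type modifiers are not interpreted, and the function name is hypothetical.

```python
from __future__ import annotations

# Column names from the header line shown above.
FIELDS = ("type", "path", "mode", "uid", "gid", "age", "argument")


def parse_tmpfiles_line(line: str) -> dict[str, str | None] | None:
    """Split one tmpfiles.d line into named fields.

    Returns None for blank and comment lines. Fields that are omitted or
    written as '-' become None; the argument keeps any embedded spaces.
    """
    stripped = line.strip()
    if not stripped or stripped.startswith("#"):
        return None
    values = stripped.split(None, len(FIELDS) - 1)
    entry: dict[str, str | None] = dict.fromkeys(FIELDS)
    for field, value in zip(FIELDS, values):
        entry[field] = None if value == "-" else value
    return entry
```

For the example above, the result has type "r" and path "/tmp/.X[0-9]*-lock", with all other fields set to None.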

To get the status of the tmpfiles timer:

> sudo systemctl status systemd-tmpfiles-clean.timer
systemd-tmpfiles-clean.timer - Daily Cleanup of Temporary Directories
   Loaded: loaded (/usr/lib/systemd/system/systemd-tmpfiles-clean.timer; static)
   Active: active (waiting) since Tue 2018-04-09 15:30:36 CEST; 1 weeks 6 days ago
     Docs: man:tmpfiles.d(5)
           man:systemd-tmpfiles(8)

Apr 09 15:30:36 jupiter systemd[1]: Starting Daily Cleanup of Temporary Directories.
Apr 09 15:30:36 jupiter systemd[1]: Started Daily Cleanup of Temporary Directories.

For more information on temporary files handling, see man 5 tmpfiles.d.

19.6.2 System log

Section 19.6.9, “Debugging services” explains how to view log messages for a given service. However, displaying log messages is not restricted to service logs. You can also access and query the complete set of log messages written by systemd, the so-called “Journal”. Use the command journalctl to display the complete log messages starting with the oldest entries. Refer to man 1 journalctl for options such as applying filters or changing the output format.
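For scripted log analysis, journalctl can print each entry as one JSON object per line (journalctl -o json). The following Python sketch filters such output by unit and priority, using the journal fields _SYSTEMD_UNIT, PRIORITY, MESSAGE and __REALTIME_TIMESTAMP (microseconds since the epoch, serialized as a string). The function name is hypothetical; entries without a PRIORITY field are assumed to be informational (6), and binary MESSAGE values, which journalctl serializes as arrays of numbers, are passed through unchanged.

```python
from __future__ import annotations

import json
from typing import Iterable, Iterator


def journal_entries(
    lines: Iterable[str], unit: str | None = None, max_priority: int | None = None
) -> Iterator[tuple[int, str | None, object]]:
    """Yield (timestamp_usec, unit, message) from 'journalctl -o json' output.

    Priorities range from 0 (emerg) to 7 (debug); max_priority=3 keeps
    errors and more severe entries.
    """
    for line in lines:
        if not line.strip():
            continue
        record = json.loads(line)
        record_unit = record.get("_SYSTEMD_UNIT")
        if unit is not None and record_unit != unit:
            continue
        # Assumption: a missing PRIORITY field is treated as 6 (info).
        priority = int(record.get("PRIORITY", 6))
        if max_priority is not None and priority > max_priority:
            continue
        yield int(record["__REALTIME_TIMESTAMP"]), record_unit, record.get("MESSAGE", "")
```

For example, feeding it the lines of journalctl -o json -b with max_priority=3 lists error-level (and more severe) messages from the current boot.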

19.6.3 Snapshots

You can save the current state of systemd to a named snapshot and later revert to it with the isolate subcommand. This is useful when testing services or custom targets, because it allows you to return to a defined state at any time. A snapshot is only available in the current session and will automatically be deleted on reboot. A snapshot name must end in .snapshot.

- Create a snapshot
  > sudo systemctl snapshot MY_SNAPSHOT.snapshot
- Delete a snapshot
  > sudo systemctl delete MY_SNAPSHOT.snapshot
- View a snapshot
  > sudo systemctl show MY_SNAPSHOT.snapshot
- Activate a snapshot
  > sudo systemctl isolate MY_SNAPSHOT.snapshot

19.6.4 Loading kernel modules

With systemd, kernel modules can automatically be loaded at boot time via a configuration file in /etc/modules-load.d. The file should be named MODULE.conf and have the following content:

# load module MODULE at boot time
MODULE

In case a package installs a configuration file for loading a kernel module, the file gets installed to /usr/lib/modules-load.d. If two configuration files with the same name exist, the one in /etc/modules-load.d takes precedence.

For more information, see the modules-load.d(5) man page.
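The precedence rule above can be sketched in a few lines. Following modules-load.d(5), the Python sketch below also considers /run/modules-load.d, processes files in file-name order, and treats lines starting with # or ; as comments. It works on a mapping from paths to file contents, an assumption made so that it can be tried without touching the system; the function name and module names in the test data are hypothetical.

```python
from __future__ import annotations

from pathlib import PurePosixPath

# Search directories, highest precedence first (simplified; see modules-load.d(5)).
SEARCH_DIRS = ("/etc/modules-load.d", "/run/modules-load.d", "/usr/lib/modules-load.d")


def modules_to_load(files: dict[str, str]) -> list[str]:
    """Return module names in load order from a {path: file content} mapping."""
    chosen: dict[str, str] = {}
    # Walk from lowest to highest precedence so that /etc overwrites /run and /usr/lib.
    for directory in reversed(SEARCH_DIRS):
        for path, content in files.items():
            p = PurePosixPath(path)
            if str(p.parent) == directory and p.suffix == ".conf":
                chosen[p.name] = content
    modules = []
    for name in sorted(chosen):  # files are processed in file-name order
        for raw in chosen[name].splitlines():
            line = raw.strip()
            if line and line[0] not in "#;":
                modules.append(line)
    return modules
```

On a running system, such a mapping could be built by reading every *.conf file from the three directories.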

19.6.5 Performing actions before loading a service

With System V init, actions that need to be performed before loading a service had to be specified in /etc/init.d/before.local. This procedure is no longer supported with systemd. If you need to do actions before starting services, do the following:

- Loading kernel modules
  Create a drop-in file in the /etc/modules-load.d directory (see man modules-load.d for the syntax).
- Creating files or directories, cleaning up directories, changing ownership
  Create a drop-in file in /etc/tmpfiles.d (see man tmpfiles.d for the syntax).
- Other tasks
  Create a system service file, for example, /etc/systemd/system/before.service, from the following template:

[Unit]
Before=NAME OF THE SERVICE YOU WANT THIS SERVICE TO BE STARTED BEFORE

[Service]
Type=oneshot
RemainAfterExit=true
ExecStart=YOUR_COMMAND
# beware, executable is run directly, not through a shell, check the man pages
# systemd.service and systemd.unit for full syntax

[Install]
# target in which to start the service
WantedBy=multi-user.target
#WantedBy=graphical.target

When the service file is created, you should run the following commands (as root):

> sudo systemctl daemon-reload
> sudo systemctl enable before

Every time you modify the service file, you need to run:

> sudo systemctl daemon-reload

19.6.6 Kernel control groups (cgroups)

On a traditional System V init system, it is not always possible to match a process to the service that spawned it. Certain services, such as Apache, spawn a lot of third-party processes (for example, CGI or Java processes), which themselves spawn more processes. This makes a clear assignment difficult or even impossible. Additionally, a service may not finish correctly, leaving certain children alive.

systemd solves this problem by placing each service into its own cgroup. cgroups are a kernel feature that allows aggregating processes and all their children into hierarchically organized groups. systemd names each cgroup after its service. Since a non-privileged process is not allowed to “leave” its cgroup, this provides an effective way to label all processes spawned by a service with the name of the service.

To list all processes belonging to a service, use the command systemd-cgls, for example:

# systemd-cgls --no-pager
├─1 /usr/lib/systemd/systemd --switched-root --system --deserialize 20
├─user.slice
│ └─user-1000.slice
│   ├─session-102.scope
│   │ ├─12426 gdm-session-worker [pam/gdm-password]
│   │ ├─15831 gdm-session-worker [pam/gdm-password]
│   │ ├─15839 gdm-session-worker [pam/gdm-password]
│   │ ├─15858 /usr/lib/gnome-terminal-server
[...]
└─system.slice
  ├─systemd-hostnamed.service
  │ └─17616 /usr/lib/systemd/systemd-hostnamed
  ├─cron.service
  │ └─1689 /usr/sbin/cron -n
  ├─postfix.service
  │ ├─ 1676 /usr/lib/postfix/master -w
  │ ├─ 1679 qmgr -l -t fifo -u
  │ └─15590 pickup -l -t fifo -u
  ├─sshd.service
  │ └─1436 /usr/sbin/sshd -D
[...]

See Chapter 10, Kernel control groups for more information about cgroups.
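The cgroup a process belongs to is visible in /proc/PID/cgroup, one line per hierarchy in the form HIERARCHY-ID:CONTROLLERS:PATH; on a pure cgroup v2 system, there is a single line starting with 0::. The following Python sketch extracts the innermost .service or .scope name from that text, which is how a script can find the unit that spawned a process. The helper names are hypothetical, and the sketch does not treat containers or delegated subtrees specially.

```python
from __future__ import annotations


def cgroup_paths(proc_cgroup_text: str) -> dict[str, str]:
    """Map the controller field of each /proc/PID/cgroup line to its path."""
    paths: dict[str, str] = {}
    for line in proc_cgroup_text.splitlines():
        if not line.strip():
            continue
        _hierarchy_id, controllers, path = line.split(":", 2)
        paths[controllers] = path
    return paths


def owning_unit(proc_cgroup_text: str) -> str | None:
    """Return the innermost .service or .scope unit in a process's cgroup path."""
    paths = cgroup_paths(proc_cgroup_text)
    # Prefer the unified (cgroup v2) entry, whose controller field is empty;
    # fall back to the named systemd hierarchy used with cgroup v1.
    path = paths.get("", paths.get("name=systemd"))
    if path is None:
        return None
    for component in reversed(path.strip("/").split("/")):
        if component.endswith((".service", ".scope")):
            return component
    return None
```

For example, owning_unit(open("/proc/self/cgroup").read()) names the service or session scope of the current process.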

19.6.7 Terminating services (sending signals) #

As explained in Section 19.6.6, “Kernel control groups (cgroups)”, it is not always possible to assign a process to its parent service process in a System V init system. This makes it difficult to stop a service and its children. Child processes that have not been terminated remain as zombie processes.

systemd's concept of confining each service into a cgroup makes it

possible to identify all child processes of a service and therefore

allows you to send a signal to each of these processes. Use

systemctl kill to send signals to services. For a

list of available signals refer to man 7 signals.

- Sending SIGTERM to a service

SIGTERM is the default signal that is sent.

> sudo systemctl kill MY_SERVICE

- Sending SIGNAL to a service

Use the -s option to specify the signal that should be sent.

> sudo systemctl kill -s SIGNAL MY_SERVICE

- Selecting processes

By default, the kill command sends the signal to all processes of the specified cgroup. You can restrict it to the control or the main process. The main process is useful, for example, to force a service to reload its configuration by sending SIGHUP:

> sudo systemctl kill -s SIGHUP --kill-who=main MY_SERVICE
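The three cases above differ only in which signal is sent and which processes of the cgroup receive it. The following is a minimal sketch of a wrapper that makes those two choices explicit. signal_unit is a hypothetical name, and the option spelling --kill-who follows the example above.

```shell
#!/bin/sh
# Hypothetical helper (not part of systemd): wrap `systemctl kill` so that
# the signal defaults to SIGTERM and the target defaults to all processes
# in the unit's cgroup, mirroring the three cases described above.
# Usage: signal_unit UNIT [SIGNAL] [all|main|control]
signal_unit() {
    unit="$1"
    sig="${2:-SIGTERM}"    # SIGTERM is the default signal of systemctl kill
    who="${3:-all}"        # all processes of the cgroup by default
    if [ -z "$unit" ]; then
        echo "usage: signal_unit UNIT [SIGNAL] [all|main|control]" >&2
        return 2
    fi
    case "$who" in
        all|main|control) ;;
        *) echo "signal_unit: invalid target '$who'" >&2; return 2 ;;
    esac
    systemctl kill -s "$sig" --kill-who="$who" "$unit"
}
```

For example, signal_unit MY_SERVICE SIGHUP main runs the same command as the last example. Run it as root, because sending signals to system services requires privileges.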

19.6.8 Important notes on the D-Bus service #

The D-Bus service is the message bus for communication between systemd clients and the systemd manager that is running as PID 1. Even though dbus is a stand-alone daemon, it is an integral part of the init infrastructure.

Stopping or restarting dbus in the running system is similar to an attempt to stop or restart PID 1. It breaks the systemd client/server communication and makes most systemd functions unusable.

Therefore, terminating or restarting dbus is neither recommended nor supported.

Updating the dbus or dbus-related packages requires a reboot. If you are unsure whether a reboot is necessary, run sudo zypper ps -s. If dbus appears among the listed services, you need to reboot the system.

Keep in mind that dbus is updated even when automatic updates are configured to skip the packages that require a reboot.
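You can script the check described above. The following is a minimal sketch that assumes you pipe the output of sudo zypper ps -s into it. dbus_needs_reboot is a hypothetical helper name. Matching on the word dbus, which also matches names such as dbus-daemon, is a deliberately coarse heuristic and not an official zypper interface.

```shell
#!/bin/sh
# Hypothetical helper (not part of zypper): read the output of
# `zypper ps -s` on standard input and report whether dbus is listed.
# grep -w matches "dbus" as a whole word, so names such as dbus-daemon
# also count, because "-" is not a word character.
dbus_needs_reboot() {
    if grep -qw 'dbus'; then
        echo "dbus is listed: reboot the system"
        return 0
    fi
    echo "dbus is not listed"
    return 1
}
```

Usage: sudo zypper ps -s | dbus_needs_reboot. An exit status of 0 means dbus was found and, following this section, a reboot is required.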

19.6.9 Debugging services #

By default, systemd is not overly verbose. If a service was started successfully, no output is produced. In case of a failure, a short error message is displayed. However, systemctl status provides a means to debug the start-up and operation of a service.

systemd comes with its own logging mechanism (“The Journal”) that logs system messages. This allows you to display the service messages together with status messages. The status command works similarly to tail and can also display the log messages in different formats, making it a powerful debugging tool.

- Show service start-up failure

Whenever a service fails to start, use systemctl status MY_SERVICE to get a detailed error message:

# systemctl start apache2
Job failed. See system journal and 'systemctl status' for details.
# systemctl status apache2
   Loaded: loaded (/usr/lib/systemd/system/apache2.service; disabled)
   Active: failed (Result: exit-code) since Mon, 04 Apr 2018 16:52:26 +0200; 29s ago
  Process: 3088 ExecStart=/usr/sbin/start_apache2 -D SYSTEMD -k start (code=exited, status=1/FAILURE)
   CGroup: name=systemd:/system/apache2.service

Apr 04 16:52:26 g144 start_apache2[3088]: httpd2-prefork: Syntax error on line 205 of /etc/apache2/httpd.conf: Syntax error on li...alHost>

- Show last N service messages

The default behavior of the status subcommand is to display the last ten messages a service issued. To change the number of messages to show, use the --lines=N parameter:

> sudo systemctl status chronyd
> sudo systemctl --lines=20 status chronyd

- Show service messages in append mode

To display a “live stream” of service messages, use the --follow option, which works like tail -f:

> sudo systemctl --follow status chronyd

- Messages output format

The --output=MODE parameter allows you to change the output format of service messages. The most important modes available are:

short
The default format. Shows the log messages with a human-readable time stamp.

verbose
Full output with all fields.

cat
Terse output without time stamps.
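The messages that systemctl status shows come from the journal, so you can also read them with journalctl. Its --unit, --lines, --output, and --follow options correspond to the cases listed above. The following is a minimal sketch. unit_log and its argument order are hypothetical and were chosen only for this example.

```shell
#!/bin/sh
# Hypothetical helper (not part of systemd): show the journal entries of a
# unit with journalctl, using the same knobs as the systemctl status
# examples: number of lines, output mode and follow mode.
# Usage: unit_log UNIT [LINES] [MODE] [follow]
unit_log() {
    unit="$1"
    lines="${2:-10}"     # systemctl status also shows ten messages by default
    mode="${3:-short}"   # short, verbose, cat, ...
    follow="${4:-}"
    if [ -z "$unit" ]; then
        echo "usage: unit_log UNIT [LINES] [MODE] [follow]" >&2
        return 2
    fi
    if [ "$follow" = "follow" ]; then
        # Like tail -f: print the last LINES entries, then keep streaming.
        journalctl --unit="$unit" --lines="$lines" --output="$mode" --follow
    else
        journalctl --unit="$unit" --lines="$lines" --output="$mode" --no-pager
    fi
}
```

For example, unit_log chronyd 20 would show the last 20 chronyd messages. unit_log apache2 10 short follow would stream new apache2 messages in the same way as tail -f. Reading the system journal as a regular user typically requires membership in the systemd-journal group. Otherwise, run the command as root.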

19.7 systemd timer units #

Similar to cron, systemd timer units provide a mechanism for scheduling jobs on Linux. Although systemd timer units serve the same purpose as cron, they offer several advantages:

- Jobs scheduled using a timer unit can depend on other systemd services.

- Timer units are treated as regular systemd units, so they can be managed with systemctl.

- Timers can be realtime or monotonic.

- Timer units are logged to the systemd journal, which makes it easier to monitor and troubleshoot them.

systemd timer units are identified by the .timer file name extension.

19.7.1 systemd timer types #

Timer units can use monotonic and realtime timers.

- Similar to cron jobs, realtime timers are triggered on calendar events. Realtime timers are defined using the option OnCalendar.

- Monotonic timers are triggered when a specified amount of time has elapsed since a certain starting point. That starting point can be, for example, a system boot or a unit activation event. There are several options for defining monotonic timers, including OnActiveSec, OnBootSec, OnStartupSec, OnUnitActiveSec, and OnUnitInactiveSec. Monotonic timers are not persistent, and they are reset after each reboot.
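The distinction above is visible directly in a timer file. OnCalendar= makes a timer realtime, and the On...Sec= keys make it monotonic. The following is a minimal sketch that classifies a .timer file by those keys. timer_kind is a hypothetical helper name. It uses the monotonic key names from systemd.timer(5), and it is a plain text check, not a full unit file parser.

```shell
#!/bin/sh
# Hypothetical helper (not part of systemd): report whether a .timer file
# defines a realtime timer (OnCalendar=), a monotonic timer (On...Sec=),
# both, or neither. This is a plain text match, not a unit file parser.
timer_kind() {
    file="$1"
    if [ ! -r "$file" ]; then
        echo "usage: timer_kind FILE.timer" >&2
        return 2
    fi
    has_cal=0
    has_mono=0
    # Realtime: calendar events.
    grep -Eq '^[[:space:]]*OnCalendar[[:space:]]*=' "$file" && has_cal=1
    # Monotonic: time elapsed since a starting point.
    grep -Eq '^[[:space:]]*On(Active|Boot|Startup|UnitActive|UnitInactive)Sec[[:space:]]*=' "$file" && has_mono=1
    case "$has_cal$has_mono" in
        10) echo realtime ;;
        01) echo monotonic ;;
        11) echo both ;;
        *)  echo none ;;
    esac
}
```

Applied to the foo.timer file from the practical example in this chapter, this sketch would print monotonic, because that timer uses only OnBootSec and OnUnitActiveSec.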

19.7.2 systemd timers and service units #

Every timer unit must have a corresponding systemd unit file it controls. In other words, a .timer file activates and manages the corresponding .service file. When used with a timer, the .service file does not require an [Install] section, as the service is managed by the timer.
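A timer can name the unit it activates explicitly with the Unit= option in its [Timer] section. If Unit= is absent, systemd uses the service with the same name as the timer, so foo.timer activates foo.service. The following is a minimal sketch that reports which unit a given .timer file would activate. timer_target is a hypothetical helper name, and it handles only simple key=value lines.

```shell
#!/bin/sh
# Hypothetical helper (not part of systemd): print the unit that a .timer
# file activates. An explicit Unit= in the [Timer] section wins; otherwise
# the default is the service with the same base name (foo.timer ->
# foo.service). If several Unit= lines exist, this sketch takes the last.
timer_target() {
    file="$1"
    if [ ! -r "$file" ]; then
        echo "usage: timer_target FILE.timer" >&2
        return 2
    fi
    # Only look between "[Timer]" and the next section header.
    explicit=$(sed -n '/^\[Timer\]/,/^\[/{s/^[[:space:]]*Unit[[:space:]]*=[[:space:]]*//p;}' "$file" | tail -n 1)
    if [ -n "$explicit" ]; then
        echo "$explicit"
    else
        echo "$(basename "$file" .timer).service"
    fi
}
```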

19.7.3 Practical example #

To understand the basics of systemd timer units, we set up a timer that triggers the foo.sh shell script.

The first step is to create a systemd service unit that controls the shell script. To do this, open a new text file for editing and add the following service unit definition:

[Unit]
Description="Foo shell script"

[Service]
ExecStart=/usr/local/bin/foo.sh

Save the file under the name foo.service in the directory /etc/systemd/system/.

Next, open a new text file for editing and add the following timer definition:

[Unit]
Description="Run foo shell script"

[Timer]
OnBootSec=5min
OnUnitActiveSec=24h
Unit=foo.service

[Install]
WantedBy=multi-user.target

The [Timer] section in the example above specifies what service to trigger (foo.service) and when to trigger it. In this case, the option OnBootSec specifies a monotonic timer that triggers the service five minutes after system boot. The option OnUnitActiveSec triggers the service 24 hours after the service was last activated, so the timer triggers the service once a day. Finally, the option WantedBy specifies that the timer should start when the system has reached the multi-user target.

Instead of a monotonic timer, you can specify a realtime one using the option OnCalendar. The following realtime timer definition triggers the related service unit once a week, every Monday at 00:00.

[Timer]
OnCalendar=weekly
Persistent=true

The option Persistent=true means that the service is triggered immediately after the timer is activated if the timer missed the last start time, for example because the system was powered off.

The option OnCalendar can also be used to define specific dates and times for triggering a service using the following format: DayOfWeek Year-Month-Day Hour:Minute:Second.

The example below triggers a service at 5am every day:

OnCalendar=*-*-* 5:00:00

You can use an asterisk to specify any value, and commas to list possible values. Use two values separated by .. to indicate a contiguous range. The following example triggers a service at 6pm on the first Friday of every month:

OnCalendar=Fri *-*-1..7 18:00:00

To trigger a service at different times, you can specify several OnCalendar entries:

OnCalendar=Mon..Fri 10:00
OnCalendar=Sat,Sun 22:00

In the example above, a service is triggered at 10am on weekdays and at 10pm on weekends.
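Before you put an OnCalendar expression into a timer, you can check it with systemd-analyze calendar. This command normalizes the expression and prints when it will next elapse. Its --iterations option shows several upcoming times. The following is a minimal sketch. preview_calendar and the SYSTEMD_ANALYZE override variable are hypothetical and are used here only so that the wrapper can be tested without systemd.

```shell
#!/bin/sh
# Hypothetical helper (not part of systemd): preview the next N trigger
# times of one or more OnCalendar= expressions.
# SYSTEMD_ANALYZE is a hypothetical override that lets you substitute
# another command (for example, in tests); by default systemd-analyze runs.
# Usage: preview_calendar N EXPRESSION...
preview_calendar() {
    if [ "$#" -lt 2 ]; then
        echo "usage: preview_calendar N EXPRESSION..." >&2
        return 2
    fi
    n="$1"
    case "$n" in
        ''|*[!0-9]*) echo "preview_calendar: N must be a number" >&2; return 2 ;;
    esac
    shift
    # Each expression is passed as a single, quoted argument.
    "${SYSTEMD_ANALYZE:-systemd-analyze}" calendar --iterations="$n" "$@"
}
```

For example, preview_calendar 3 'Fri *-*-1..7 18:00:00' would list the next three first-Friday evenings. Quote each expression, because it contains spaces and asterisks.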

When you are done editing the timer unit file, save it under the name foo.timer in the /etc/systemd/system/ directory. To check the correctness of the created unit files, run the following command:

> sudo systemd-analyze verify /etc/systemd/system/foo.*

If the command returns no output, the files have passed the verification successfully.

To start the timer, use the command sudo systemctl start foo.timer. To enable the timer on boot, run the command sudo systemctl enable foo.timer.
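You can collect the file-creation steps of this example into one script. The following is a minimal sketch that writes the two unit definitions shown above into a directory, by default /etc/systemd/system. create_foo_units is a hypothetical function name, and /usr/local/bin/foo.sh must exist separately.

```shell
#!/bin/sh
# Hypothetical helper: write foo.service and foo.timer, exactly as in the
# example above, into DIR (default: /etc/systemd/system).
# The quoted 'EOF' markers prevent the shell from expanding anything.
create_foo_units() {
    dir="${1:-/etc/systemd/system}"
    mkdir -p "$dir" || return 1

    # Service unit: runs the script. No [Install] section is needed,
    # because the timer manages the service.
    cat > "$dir/foo.service" <<'EOF'
[Unit]
Description="Foo shell script"

[Service]
ExecStart=/usr/local/bin/foo.sh
EOF

    # Timer unit: 5 minutes after boot, then 24 hours after each activation.
    cat > "$dir/foo.timer" <<'EOF'
[Unit]
Description="Run foo shell script"

[Timer]
OnBootSec=5min
OnUnitActiveSec=24h
Unit=foo.service

[Install]
WantedBy=multi-user.target
EOF
}
```

After running create_foo_units as root, check the files with sudo systemd-analyze verify /etc/systemd/system/foo.* and then start and enable foo.timer as described above.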

19.7.4 Managing systemd timers #

Since timers are treated as regular systemd units, you can manage them using systemctl. You can start a timer with systemctl start, enable a timer with systemctl enable, and so on. Additionally, you can list all active timers using the command systemctl list-timers. To list all timers, including inactive ones, run the command systemctl list-timers --all.

19.8 More information #

For more information on systemd, refer to the following online resources:

- Homepage

- systemd for administrators

Lennart Poettering, one of the systemd authors, has written a series of blog entries (13 at the time of writing this chapter). Find them at https://0pointer.de/blog/projects/.