21 OCFS2

Oracle Cluster File System 2 (OCFS2) is a general-purpose journaling file system that has been fully integrated since the Linux 2.6 Kernel. OCFS2 allows you to store application binary files, data files, and databases on devices on shared storage. All nodes in a cluster have concurrent read and write access to the file system. A user space control daemon, managed via a clone resource, provides the integration with the HA stack, in particular with Corosync and the Distributed Lock Manager (DLM).

21.1 Features and benefits

OCFS2 can be used, for example, for the following storage solutions:

General applications and workloads.

Xen image store in a cluster. Xen virtual machines and virtual servers can be stored on OCFS2 volumes that are mounted by cluster servers. This provides quick and easy portability of Xen virtual machines between servers.

LAMP (Linux, Apache, MySQL, and PHP | Perl | Python) stacks.

As a high-performance, symmetric and parallel cluster file system, OCFS2 supports the following functions:

An application's files are available to all nodes in the cluster. Users only need to install the application once on an OCFS2 volume in the cluster.

All nodes can concurrently read and write directly to storage via the standard file system interface, enabling easy management of applications that run across the cluster.

File access is coordinated through DLM. DLM-based coordination works well in most cases, but an application whose design makes it contend with the DLM for file access may not scale well.

Storage backup functionality is available on all back-end storage. An image of the shared application files can be easily created, which can help provide effective disaster recovery.

OCFS2 also provides the following capabilities:

Metadata caching.

Metadata journaling.

Cross-node file data consistency.

Support for multiple block sizes up to 4 KB and cluster sizes up to 1 MB, for a maximum volume size of 4 PB (petabytes).

Support for up to 32 cluster nodes.

Asynchronous and direct I/O support for database files for improved database performance.
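The 4 PB maximum listed above is tied to the 1 MB cluster size. Assuming that OCFS2 addresses at most 2^32 clusters per volume (an assumption not stated in this chapter), the maximum volume size scales linearly with the cluster size. The following bash sketch computes that ceiling for a few cluster sizes:

```shell
#!/usr/bin/env bash
# Sketch: maximum OCFS2 volume size as a function of cluster size.
# Assumption: OCFS2 addresses at most 2^32 clusters per volume.
ocfs2_max_volume_tib() {
  local cluster_kib=$1
  # 2^32 clusters * cluster_kib KiB, converted to TiB (2^30 KiB per TiB)
  echo $(( (1 << 32) * cluster_kib / (1 << 30) ))
}

for c in 4 64 1024; do
  echo "${c} KB clusters: $(ocfs2_max_volume_tib "$c") TB"
done
```

With 1024 KB (1 MB) clusters the result is 4096 TB, which is the 4 PB quoted above; smaller cluster sizes lower the ceiling proportionally.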

OCFS2 is only supported by SUSE when used with the pcmk (Pacemaker) stack, as provided by SUSE Linux Enterprise High Availability. SUSE does not provide support for OCFS2 in combination with the o2cb stack.

21.2 OCFS2 packages and management utilities

The OCFS2 Kernel module (ocfs2) is installed automatically in SUSE Linux Enterprise High Availability 15 SP4. To use OCFS2, make sure the following packages are installed on each node in the cluster: ocfs2-tools and the matching ocfs2-kmp-* packages for your Kernel.
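A quick way to confirm the packages on a node is to query the RPM database. The following bash sketch assumes the default Kernel flavor, so the matching KMP package is assumed to be ocfs2-kmp-default; check_ocfs2_packages is a hypothetical helper name, not part of ocfs2-tools.

```shell
#!/usr/bin/env bash
# Report which OCFS2 packages are missing on this node.
# Assumption: the Kernel uses the "default" flavor, so the KMP package is
# ocfs2-kmp-default; adjust the name for other Kernel flavors.
OCFS2_PACKAGES=(ocfs2-tools ocfs2-kmp-default)

check_ocfs2_packages() {
  local pkg missing=()
  for pkg in "${OCFS2_PACKAGES[@]}"; do
    # rpm -q exits non-zero if the package is not installed
    rpm -q "$pkg" >/dev/null 2>&1 || missing+=("$pkg")
  done
  if [ "${#missing[@]}" -gt 0 ]; then
    echo "missing: ${missing[*]}"
    return 1
  fi
  echo "all OCFS2 packages installed"
}
```

On a node where the check reports missing packages, install them with zypper, for example zypper install ocfs2-tools ocfs2-kmp-default (again assuming the default Kernel flavor), and repeat the check on every cluster node.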

The ocfs2-tools package provides the following utilities for management of OCFS2 volumes. For syntax information, see their man pages.

| OCFS2 Utility | Description |
|---|---|
| debugfs.ocfs2 | Examines the state of the OCFS2 file system for debugging. |
| defragfs.ocfs2 | Reduces fragmentation of the OCFS2 file system. |
| fsck.ocfs2 | Checks the file system for errors and optionally repairs errors. |
| mkfs.ocfs2 | Creates an OCFS2 file system on a device, usually a partition on a shared physical or logical disk. |
| mounted.ocfs2 | Detects and lists all OCFS2 volumes on a clustered system. Detects and lists all nodes on the system that have mounted an OCFS2 device or lists all OCFS2 devices. |
| tunefs.ocfs2 | Changes OCFS2 file system parameters, including the volume label, number of node slots, journal size for all node slots, and volume size. |

21.3 Configuring OCFS2 services and a STONITH resource

Before you can create OCFS2 volumes, you must configure the following resources as services in the cluster: DLM and a STONITH resource.

The following procedure uses the crm shell to configure the cluster resources. Alternatively, you can also use Hawk2 to configure the resources as described in Section 21.6, “Configuring OCFS2 resources with Hawk2”.

You need to configure a fencing device. Without a STONITH mechanism (like external/sbd) in place, the configuration will fail.

Procedure 21.1: Configuring a STONITH resource

1. Start a shell and log in as root or equivalent.
2. Create an SBD partition as described in Procedure 13.3, “Initializing the SBD devices”.
3. Run crm configure.
4. Configure external/sbd as the fencing device:

       crm(live)configure# primitive sbd_stonith stonith:external/sbd \
         params pcmk_delay_max=30 meta target-role="Started"

5. Review your changes with show.
6. If everything is correct, submit your changes with commit and leave the crm live configuration with quit.
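For scripted setups, the same primitive can likely be created without the interactive crm(live)configure# session, because crm also accepts a single configure subcommand on its command line and applies it when it exits. The bash sketch below wraps that in a hypothetical configure_sbd_stonith function; it assumes the SBD partition from Procedure 13.3 already exists and reuses the parameters of the interactive step.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the sbd_stonith primitive non-interactively.
# Assumes the SBD device is already initialized (see Procedure 13.3).
configure_sbd_stonith() {
  # One-shot crm invocation with the same parameters as the interactive step
  crm configure primitive sbd_stonith stonith:external/sbd \
    params pcmk_delay_max=30 \
    meta target-role=Started || return 1
  # Print the stored definition so it can be reviewed
  crm configure show sbd_stonith
}
```

Run configure_sbd_stonith as root on one node only, then check with crm status that sbd_stonith has started.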

For details on configuring the resource for DLM, see Section 20.2, “Configuring DLM cluster resources”.

21.4 Creating OCFS2 volumes

After you have configured a DLM cluster resource as described in Section 21.3, “Configuring OCFS2 services and a STONITH resource”, configure your system to use OCFS2 and create OCFS2 volumes.

We recommend that you generally store application files and data files on different OCFS2 volumes. If your application volumes and data volumes have different requirements for mounting, it is mandatory to store them on different volumes.

Before you begin, prepare the block devices you plan to use for your OCFS2 volumes. Leave the devices as free space.

Then create and format the OCFS2 volume with mkfs.ocfs2 as described in Procedure 21.2, “Creating and formatting an OCFS2 volume”. The most important parameters for the command are listed in Table 21.2, “Important OCFS2 parameters”. For more information and the command syntax, refer to the mkfs.ocfs2 man page.

Table 21.2: Important OCFS2 parameters

| OCFS2 Parameter | Description and Recommendation |
|---|---|
| Volume Label (-L) | A descriptive name for the volume to make it uniquely identifiable when it is mounted on different nodes. Use the tunefs.ocfs2 utility to modify the label as needed. |
| Cluster Size (-C) | Cluster size is the smallest unit of space allocated to a file to hold the data. For the available options and recommendations, refer to the mkfs.ocfs2 man page. |
| Number of Node Slots (-N) | The maximum number of nodes that can concurrently mount a volume. For each of the nodes, OCFS2 creates separate system files, such as the journals. Nodes that access the volume can be a combination of little-endian architectures (such as AMD64/Intel 64) and big-endian architectures (such as S/390x). Node-specific files are called local files; a node slot number is appended to the name of each local file. Set each volume's maximum number of node slots when you create it, according to how many nodes you expect to concurrently mount the volume. Use the tunefs.ocfs2 utility to change the number of node slots later. If -N is not specified, mkfs.ocfs2 chooses the number of node slots itself; for the default value, refer to the mkfs.ocfs2 man page. |
| Block Size (-b) | The smallest unit of space addressable by the file system. Specify the block size when you create the volume. For the available options and recommendations, refer to the mkfs.ocfs2 man page. |
| Specific Features On/Off (--fs-features) | A comma-separated list of feature flags can be provided, and mkfs.ocfs2 tries to create the file system with those features set or cleared accordingly. To turn a feature on, include it in the list; to turn a feature off, prefix its name with no (for example, nosparse). For an overview of all available flags, refer to the mkfs.ocfs2 man page. |
| Pre-Defined Features (--fs-feature-level) | Allows you to choose from a set of predetermined file system features. For the available options, refer to the mkfs.ocfs2 man page. |

If you do not specify any features when creating and formatting the volume with mkfs.ocfs2, the following features are enabled by default: backup-super, sparse, inline-data, unwritten, metaecc, indexed-dirs, and xattr.

Procedure 21.2: Creating and formatting an OCFS2 volume

Execute the following steps only on one of the cluster nodes.

1. Open a terminal window and log in as root.
2. Check if the cluster is online with the command crm status.
3. Create and format the volume using the mkfs.ocfs2 utility. For information about the syntax for this command, refer to the mkfs.ocfs2 man page.

   For example, to create a new OCFS2 file system that supports up to 32 cluster nodes, enter the following command:

       # mkfs.ocfs2 -N 32 /dev/disk/by-id/DEVICE_ID

   Always use a stable device name (for example: /dev/disk/by-id/scsi-ST2000DM001-0123456_Wabcdefg).
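The advice about stable device names can be enforced in a script. The following bash sketch is a hypothetical wrapper, format_ocfs2, that rejects device paths outside /dev/disk/by-id/ and node slot counts above the 32 cluster nodes this chapter lists as supported, then calls mkfs.ocfs2 with the -L (volume label) and -N (node slots) options.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around mkfs.ocfs2 with basic sanity checks.
# Usage: format_ocfs2 DEVICE LABEL SLOTS
format_ocfs2() {
  if [ $# -ne 3 ]; then
    echo "usage: format_ocfs2 DEVICE LABEL SLOTS" >&2
    return 2
  fi
  local device=$1 label=$2 slots=$3
  # Stable names are identical on all nodes and survive reboots
  case $device in
    /dev/disk/by-id/*) ;;
    *) echo "use a stable /dev/disk/by-id/ name instead of $device" >&2; return 2 ;;
  esac
  # SLOTS must be a plain number
  case $slots in
    ''|*[!0-9]*) echo "SLOTS must be a number" >&2; return 2 ;;
  esac
  # This chapter states support for up to 32 cluster nodes
  if [ "$slots" -lt 1 ] || [ "$slots" -gt 32 ]; then
    echo "SLOTS must be between 1 and 32" >&2
    return 2
  fi
  mkfs.ocfs2 -L "$label" -N "$slots" "$device"
}
```

For example, format_ocfs2 /dev/disk/by-id/DEVICE_ID shared-data 32 (with shared-data as a hypothetical label) formats the volume for up to 32 nodes. As with the plain command, run it on one cluster node only.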

21.5 Mounting OCFS2 volumes

You can either mount an OCFS2 volume manually or with the cluster manager, as described in Procedure 21.4, “Mounting an OCFS2 volume with the cluster resource manager”.

To mount multiple OCFS2 volumes, see Procedure 21.5, “Mounting multiple OCFS2 volumes with the cluster resource manager”.

1. Open a terminal window and log in as root.
2. Check if the cluster is online with the command crm status.
3. Mount the volume from the command line, using the mount command.

You can mount an OCFS2 volume on a single node without a fully functional cluster stack, for example, to quickly access the data from a backup. To do so, use the mount command with the -o nocluster option.

This mount method lacks cluster-wide protection. To avoid damaging the file system, you must ensure that it is only mounted on one node.
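Both variants use the same mount invocation with the ocfs2 file system type; only the options differ. The bash sketch below is a hypothetical mount_ocfs2 helper: without options it performs a regular cluster mount, and with nocluster it performs the single-node mount described above, which is only safe if no other node has the volume mounted.

```shell
#!/usr/bin/env bash
# Hypothetical helper: mount an OCFS2 volume, optionally with extra options.
# Usage: mount_ocfs2 DEVICE DIRECTORY [OPTIONS]
mount_ocfs2() {
  if [ $# -lt 2 ] || [ $# -gt 3 ]; then
    echo "usage: mount_ocfs2 DEVICE DIRECTORY [OPTIONS]" >&2
    return 2
  fi
  local device=$1 dir=$2 opts=${3:-}
  # Create the mount point if it does not exist yet
  mkdir -p "$dir" || return 1
  if [ -n "$opts" ]; then
    # For example, opts=nocluster for the single-node mount
    mount -t ocfs2 -o "$opts" "$device" "$dir"
  else
    mount -t ocfs2 "$device" "$dir"
  fi
}
```

Use mount_ocfs2 /dev/disk/by-id/DEVICE_ID /mnt/shared for a regular mount on a node with a running cluster stack, and mount_ocfs2 /dev/disk/by-id/DEVICE_ID /mnt/backup nocluster for the single-node case. Before a nocluster mount, mounted.ocfs2 -f may help to confirm that no other node lists the device as mounted.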

To mount an OCFS2 volume with the High Availability software, configure an OCFS2 file system resource in the cluster. The following procedure uses the crm shell to configure the cluster resources. Alternatively, you can also use Hawk2 to configure the resources as described in Section 21.6, “Configuring OCFS2 resources with Hawk2”.

Procedure 21.4: Mounting an OCFS2 volume with the cluster resource manager

1. Log in to a node as root or equivalent.
2. Run crm configure.
3. Configure Pacemaker to mount the OCFS2 file system on every node in the cluster:

       crm(live)configure# primitive ocfs2-1 ocf:heartbeat:Filesystem \
         params device="/dev/disk/by-id/DEVICE_ID" directory="/mnt/shared" fstype="ocfs2" \
         op monitor interval="20" timeout="40" \
         op start timeout="60" op stop timeout="60" \
         meta target-role="Started"

4. Add the ocfs2-1 primitive to the g-storage group you created in Procedure 20.1, “Configuring a base group for DLM”.

       crm(live)configure# modgroup g-storage add ocfs2-1

   Because of the base group's internal colocation and ordering, the ocfs2-1 resource will only start on nodes that also have a dlm resource already running.

   Important: Do not use a group for multiple OCFS2 resources

   Adding multiple OCFS2 resources to a group creates a dependency between the OCFS2 volumes. For example, if you created a group with crm configure group g-storage dlm ocfs2-1 ocfs2-2, then stopping ocfs2-1 will also stop ocfs2-2, and starting ocfs2-2 will also start ocfs2-1.

   To use multiple OCFS2 resources in the cluster, use colocation and order constraints as described in Procedure 21.5, “Mounting multiple OCFS2 volumes with the cluster resource manager”.

5. Review your changes with show.
6. If everything is correct, submit your changes with commit and leave the crm live configuration with quit.

To mount multiple OCFS2 volumes in the cluster, configure an OCFS2 file system resource for each volume, and colocate them with the dlm resource you created in Procedure 20.2, “Configuring an independent DLM resource”.

Do not add multiple OCFS2 resources to a group with DLM. This creates a dependency between the OCFS2 volumes. For example, if ocfs2-1 and ocfs2-2 are in the same group, then stopping ocfs2-1 will also stop ocfs2-2.

Procedure 21.5: Mounting multiple OCFS2 volumes with the cluster resource manager

1. Log in to a node as root or equivalent.
2. Run crm configure.
3. Create the primitive for the first OCFS2 volume:

       crm(live)configure# primitive ocfs2-1 Filesystem \
         params directory="/srv/ocfs2-1" fstype=ocfs2 device="/dev/disk/by-id/DEVICE_ID1" \
         op monitor interval=20 timeout=40 \
         op start timeout=60 interval=0 \
         op stop timeout=60 interval=0

4. Create the primitive for the second OCFS2 volume:

       crm(live)configure# primitive ocfs2-2 Filesystem \
         params directory="/srv/ocfs2-2" fstype=ocfs2 device="/dev/disk/by-id/DEVICE_ID2" \
         op monitor interval=20 timeout=40 \
         op start timeout=60 interval=0 \
         op stop timeout=60 interval=0

5. Clone the OCFS2 resources so that they can run on all nodes:

       crm(live)configure# clone cl-ocfs2-1 ocfs2-1 meta interleave=true
       crm(live)configure# clone cl-ocfs2-2 ocfs2-2 meta interleave=true

6. Add a colocation constraint for both OCFS2 resources so that they can only run on nodes where DLM is also running:

       crm(live)configure# colocation co-ocfs2-with-dlm inf: ( cl-ocfs2-1 cl-ocfs2-2 ) cl-dlm

7. Add an order constraint for both OCFS2 resources so that they can only start after DLM is already running:

       crm(live)configure# order o-dlm-before-ocfs2 Mandatory: cl-dlm ( cl-ocfs2-1 cl-ocfs2-2 )

8. Review your changes with show.
9. If everything is correct, submit your changes with commit and leave the crm live configuration with quit.
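With more than two volumes the pattern repeats: one primitive and one clone per volume, plus a single colocation and a single order constraint that list all clones. The following bash sketch, a hypothetical helper named ocfs2_crm_config, prints those crm configure lines for any number of volumes. It assumes the DLM clone is called cl-dlm, as in Procedure 20.2, and reuses the operation timeouts from the steps above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print crm configure lines for several OCFS2 volumes.
# Arguments come in triples: NAME DEVICE DIRECTORY.
# Assumes the independent DLM clone is named cl-dlm.
ocfs2_crm_config() {
  if [ $# -eq 0 ] || [ $(( $# % 3 )) -ne 0 ]; then
    echo "usage: ocfs2_crm_config NAME DEVICE DIR [NAME DEVICE DIR ...]" >&2
    return 2
  fi
  local name device dir clones=""
  while [ $# -ge 3 ]; do
    name=$1 device=$2 dir=$3
    shift 3
    # One Filesystem primitive per volume, same operations as in the procedure
    printf 'primitive %s Filesystem params directory="%s" fstype=ocfs2 device="%s" op monitor interval=20 timeout=40 op start timeout=60 interval=0 op stop timeout=60 interval=0\n' \
      "$name" "$dir" "$device"
    # Clone it so that it can run on all nodes
    printf 'clone cl-%s %s meta interleave=true\n' "$name" "$name"
    clones="$clones cl-$name"
  done
  # One colocation and one order constraint covering all clones
  printf 'colocation co-ocfs2-with-dlm inf: (%s ) cl-dlm\n' "$clones"
  printf 'order o-dlm-before-ocfs2 Mandatory: cl-dlm (%s )\n' "$clones"
}
```

Save the output to a file and review it. Then either enter the lines at the crm(live)configure# prompt or load them with crm configure load update FILE, and check the result with crm configure show before relying on it.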

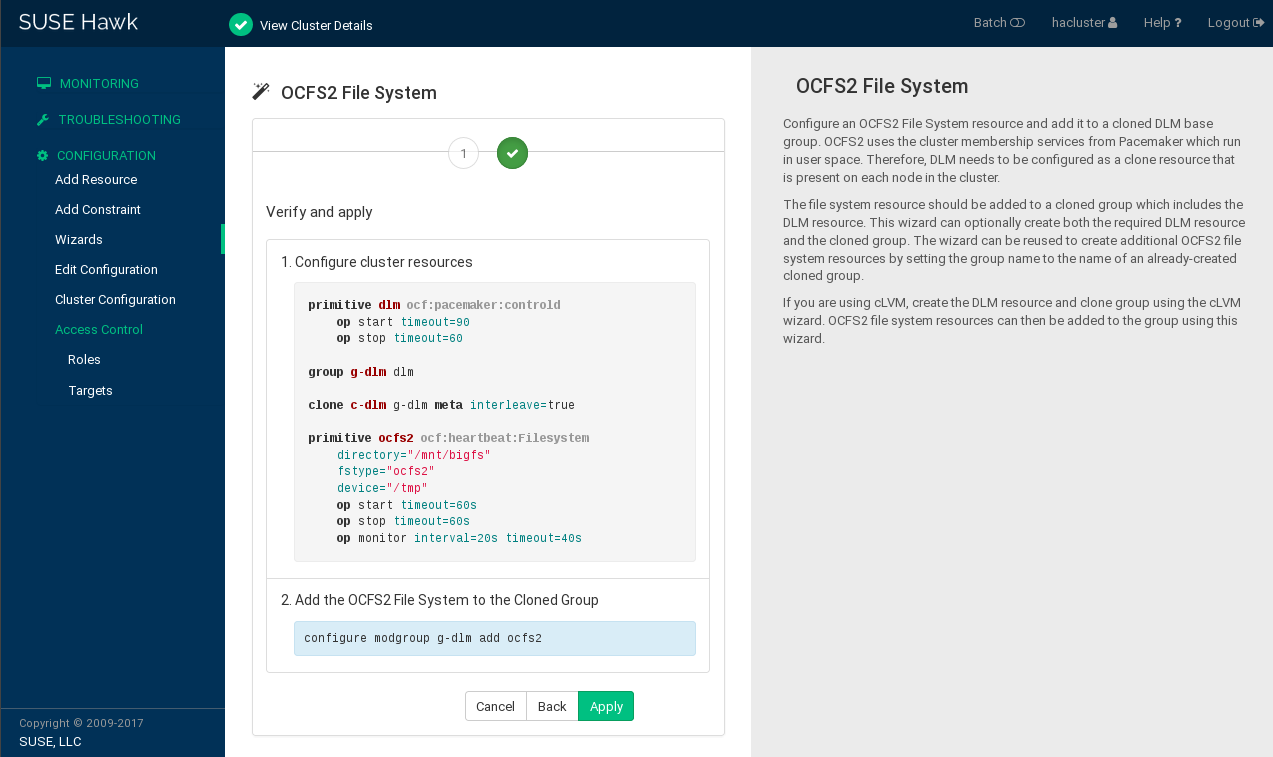

21.6 Configuring OCFS2 resources with Hawk2

Instead of configuring the DLM and the file system resource for OCFS2 manually with the crm shell, you can also use the OCFS2 template in the Hawk2 wizard.

The OCFS2 template in the wizard does not include the configuration of a STONITH resource. If you use the wizard, you still need to create an SBD partition on the shared storage and configure a STONITH resource as described in Procedure 21.1, “Configuring a STONITH resource”.

Using the OCFS2 template in the Hawk2 wizard also leads to a slightly different resource configuration than the manual configuration described in Procedure 20.1, “Configuring a base group for DLM” and Procedure 21.4, “Mounting an OCFS2 volume with the cluster resource manager”.

Log in to Hawk2:

https://HAWKSERVER:7630/

In the left navigation bar, select .

Expand the category and select OCFS2 File System.

Follow the instructions on the screen. If you need information about an option, click it to display a short help text in Hawk2. After the last configuration step, verify the values you have entered.

The wizard displays the configuration snippet that will be applied to the CIB and any additional changes, if required.

Check the proposed changes. If everything is according to your wishes, apply the changes.

A message on the screen shows if the action has been successful.

21.7 Using quotas on OCFS2 file systems

To use quotas on an OCFS2 file system, create and mount the file system with the appropriate quota features or mount options, respectively: usrquota (quota for individual users) or grpquota (quota for groups). These features can also be enabled later on an unmounted file system using tunefs.ocfs2.

When a file system has the appropriate quota feature enabled, it tracks in its metadata how much space and how many files each user (or group) uses. Since OCFS2 treats quota information as file system-internal metadata, you do not need to run the quotacheck(8) program. All functionality is built into fsck.ocfs2 and the file system driver itself.

To enable enforcement of limits imposed on each user or group, run quotaon(8) as you would for any other file system.
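Enabling quotas on an existing volume can be scripted along these lines. The bash sketch below is a hypothetical enable_ocfs2_quotas helper: it refuses to run if the device is mounted on the local node (it cannot see mounts on other nodes, so unmount the volume cluster-wide first) and then adds the usrquota and grpquota features with tunefs.ocfs2 --fs-features.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add user and group quota features to an OCFS2 volume.
# tunefs.ocfs2 can only enable these features on an unmounted file system.
enable_ocfs2_quotas() {
  if [ $# -ne 1 ]; then
    echo "usage: enable_ocfs2_quotas DEVICE" >&2
    return 2
  fi
  local device=$1
  # findmnt only sees local mounts; other nodes must be checked separately
  if findmnt --source "$device" >/dev/null 2>&1; then
    echo "$device is mounted on this node; unmount it on all nodes first" >&2
    return 1
  fi
  tunefs.ocfs2 --fs-features=usrquota,grpquota "$device"
}
```

After the features are enabled, mount the volume with the matching options (for example, mount -t ocfs2 -o usrquota,grpquota /dev/disk/by-id/DEVICE_ID /mnt/shared) and run quotaon /mnt/shared to enforce the limits. For a Pacemaker-managed mount, the mount options would presumably go into the options parameter of the Filesystem resource.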

For performance reasons, each cluster node performs quota accounting locally and synchronizes this information with a common central storage every 10 seconds. This interval is tunable with the tunefs.ocfs2 options usrquota-sync-interval and grpquota-sync-interval. Because accounting is only synchronized periodically, quota information may not be exact at all times, and users or groups can slightly exceed their quota limit when operating on several cluster nodes in parallel.

21.8 For more information

For more information about OCFS2, see the following links:

- https://ocfs2.wiki.kernel.org/

The OCFS2 project home page.

- http://oss.oracle.com/projects/ocfs2/

The former OCFS2 project home page at Oracle.

- http://oss.oracle.com/projects/ocfs2/documentation

The project's former documentation home page.