Deploying and Installing SUSE AI in Air-Gapped Environments #

- WHAT?

This document provides a comprehensive, step-by-step guide for the SUSE AI air-gapped deployment.

- WHY?

To help users successfully complete the air-gapped deployment process.

- EFFORT

Less than one hour of reading and an advanced knowledge of Linux deployment.

- GOAL

To learn enough information to deploy SUSE AI in both testing and production air-gapped environments.

SUSE AI is a versatile product consisting of multiple software layers and components. This document outlines the complete workflow for air-gapped deployment and installation of all SUSE AI dependencies, as well as SUSE AI itself. You can also find references to recommended hardware and software requirements, as well as steps to take after the product installation.

For hardware, software and application-specific requirements, refer to SUSE AI requirements.

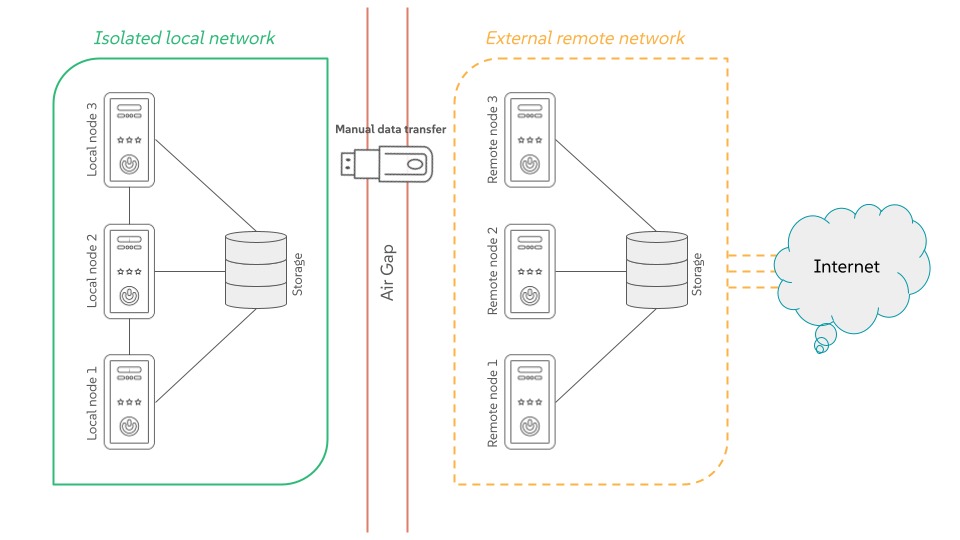

1 Air-gapped environments #

An air-gapped environment is a security measure where a single host or the whole network is isolated from all other networks, such as the public Internet. This "air gap" acts as a physical or logical barrier, preventing any direct connection that could be exploited by cyber threats.

1.1 Why do you need an air-gapped environment? #

The primary goal is to protect highly sensitive data and critical systems from unauthorized access, cyber attacks, malware and ransomware. Air-gapped environments are typically found in situations where security is of the utmost importance, such as:

- Military and government networks handling classified information.
- Industrial control systems (ICS) for critical infrastructure like power plants and water treatment facilities.
- Financial institutions and stock exchanges.
- Systems controlling nuclear power plants or other life-critical operations.

1.2 How do air-gapped environments work? #

There are two types of air gaps:

- Physical air gaps

This is the most secure method, where the system is disconnected from any network. It might even involve placing the system in a shielded room.

- Logical air gaps

This type uses software controls such as firewall rules and network segmentation to create a highly restricted connection. While it offers more convenience, it is not as secure as a physical air gap because the air-gapped system is still technically connected to a network.

1.3 What challenges do air-gapped environments face? #

When working in air-gapped systems, you usually face the following limitations:

- Manual updates

Air-gapped systems cannot automatically receive software or security updates from external networks. You must manually download and install updates, which can be time-consuming and create vulnerabilities if not done regularly.

- Insider threats and physical attacks

An air gap does not protect against threats that gain physical access to the system, such as a malicious insider with a compromised USB drive.

- Limited functionality

The lack of connectivity limits the system’s ability to communicate with other devices or services, making it less efficient for many modern applications.

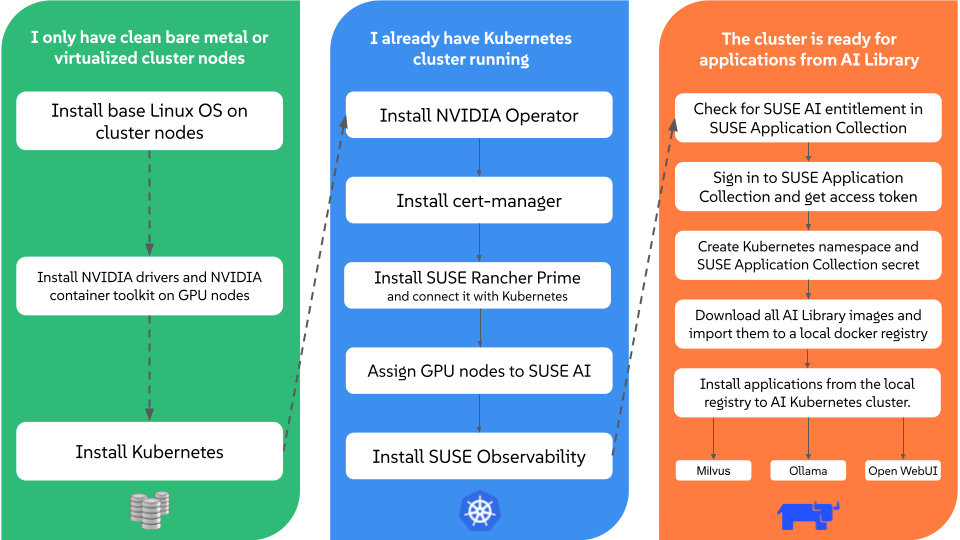

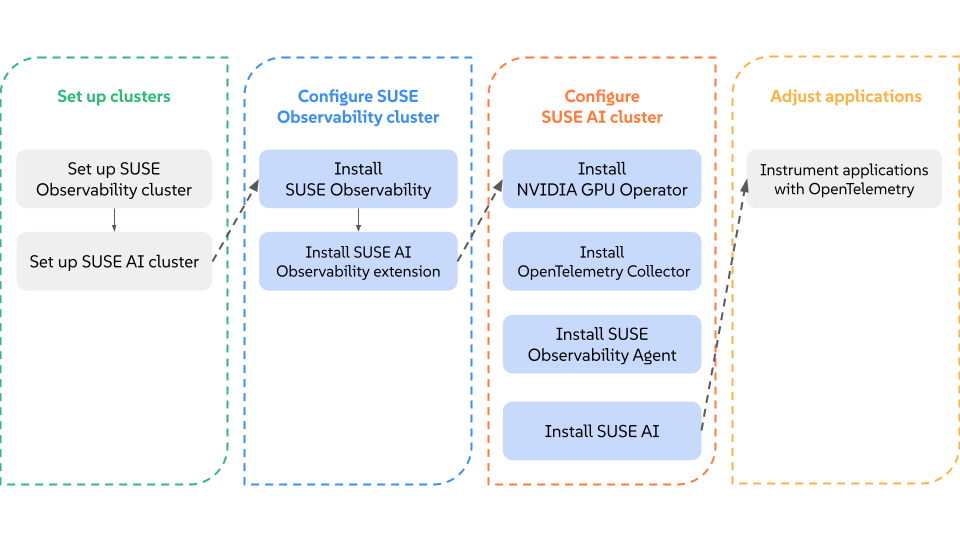

2 Installation overview #

This chart illustrates important steps involved in the installation process of SUSE AI.

The chart focuses on the following possible scenarios:

- You have clean cluster nodes prepared without a supported Linux operating system installed.
- You have a supported Linux operating system and Kubernetes distribution installed on cluster nodes.
- You have SUSE Rancher Prime and all supportive components installed on the Kubernetes cluster and are prepared to install the required applications from the AI Library.

2.1 SUSE AI air-gapped stack #

The air-gapped stack is a set of scripts that ease the successful air-gap installation of certain SUSE AI components. To use them, you need to clone or download them from the stack’s GitHub repository.

2.1.1 What scripts does the stack include? #

The following scripts are included in the air-gapped stack:

- SUSE-AI-mirror-nvidia.sh

Mirrors all RPM packages from the specified Web URLs.

- SUSE-AI-get-images.sh

Downloads Docker images of SUSE AI applications from SUSE Application Collection.

- SUSE-AI-load-images.sh

Loads downloaded Docker images into a custom Docker image registry.

2.1.2 Where do the scripts fit into the air-gap installation? #

The scripts are required in several places during the SUSE AI air-gapped installation. The following simplified workflow outlines the intended usage:

Use SUSE-AI-mirror-nvidia.sh on a remote host to download the required NVIDIA RPM packages. Transfer the downloaded content to an air-gapped local host and add it as a Zypper repository to install NVIDIA drivers on local GPU nodes.

Use SUSE-AI-get-images.sh on a remote host to download Docker images of required SUSE AI components. Transfer them to an air-gapped local host.

Use SUSE-AI-load-images.sh to load the transferred Docker images of SUSE AI components into a custom local Docker image registry.

Install AI Library components on the local Kubernetes cluster from the local custom Docker registry.
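The transfer steps in the workflow above are manual, so it can help to bundle each downloaded directory together with a checksum before moving it across the air gap. The following is a minimal sketch and not part of the air-gapped stack: the function names `bundle_for_transfer` and `verify_and_unpack` and all paths are hypothetical, and it relies only on the standard `tar` and `sha256sum` tools.

```shell
# Sketch only: function names and paths are illustrative assumptions.

# On the remote host: pack SRC_DIR into BUNDLE and write BUNDLE.sha256 next to it.
bundle_for_transfer() {
  src_dir=$1
  bundle=$2
  tar -czf "$bundle" -C "$(dirname "$src_dir")" "$(basename "$src_dir")" || return 1
  # Record the checksum with the bare file name so it verifies from any directory.
  ( cd "$(dirname "$bundle")" &&
    sha256sum "$(basename "$bundle")" > "$(basename "$bundle").sha256" ) || return 1
}

# On the air-gapped host: verify the checksum, then unpack into DEST_DIR.
verify_and_unpack() {
  bundle=$1
  dest_dir=$2
  ( cd "$(dirname "$bundle")" &&
    sha256sum --check --quiet "$(basename "$bundle").sha256" ) || return 1
  mkdir -p "$dest_dir" || return 1
  tar -xzf "$bundle" -C "$dest_dir"
}
```

Both the bundle and its `.sha256` file need to be copied to the transfer medium. A failed verification makes `verify_and_unpack` return a non-zero status before anything is unpacked.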

3 Installing the Linux and Kubernetes distribution #

This procedure includes the steps to install the base Linux operating system and a Kubernetes distribution for users who start deploying on cluster nodes from scratch. If you already have a Kubernetes cluster installed and running, you can skip this procedure and continue with Section 5.1, “Installation procedure”.

Install and register a supported Linux operating system on each cluster node. We recommend using one of the following operating systems:

SUSE Linux Enterprise Server 15 SP6 for a traditional non-transactional operating system. For more information, see Section 3.1, “Installing SUSE Linux Enterprise Server”.

SUSE Linux Micro 6.1 for an immutable transactional operating system. For more information, see SUSE Linux Micro 6.1 documentation.

For a list of supported operating systems, refer to https://www.suse.com/suse-rancher/support-matrix/all-supported-versions/.

Install the NVIDIA GPU driver on cluster nodes with GPUs. Refer to Section 3.2, “Installing NVIDIA GPU drivers” for details.

Install Kubernetes on cluster nodes. We recommend using the supported SUSE Rancher Prime: RKE2 distribution. Refer to Section 3.3, “Installing SUSE Rancher Prime: RKE2 in air-gapped environments” for details. For a list of supported Kubernetes platforms, refer to https://www.suse.com/suse-rancher/support-matrix/all-supported-versions/.

3.1 Installing SUSE Linux Enterprise Server #

Use the following procedures to install SLES on all supported hardware platforms. They assume you have successfully booted into the installation system. For more detailed installation instructions and deployment strategies, refer to SUSE Linux Enterprise Server Deployment Guide.

3.1.1 The Unified Installer #

Starting with SLES 15, the installation medium consists only of the Unified Installer, a minimal system for installing, updating and registering all SLE base products. During the installation, you can add functionality by selecting modules and extensions to be installed on top of the Unified Installer.

3.1.2 Installing offline or without registration #

The default installation medium SLE-15-SP6-Online-ARCH-GM-media1.iso is optimized for size and does not contain any modules and extensions. Therefore, the installation requires network access to register your product and retrieve repository data for the modules and extensions.

For installation without registering the system, use the SLE-15-SP6-Full-ARCH-GM-media1.iso image from https://www.suse.com/download/sles/ and refer to Installing without registration.

Use the following command to copy the contents of the installation image to a removable flash disk.

> sudo dd if=IMAGE of=FLASH_DISK bs=4M && sync

IMAGE needs to be replaced with the path to the SLE-15-SP6-Online-ARCH-GM-media1.iso or SLE-15-SP6-Full-ARCH-GM-media1.iso image file. FLASH_DISK needs to be replaced with the flash device. To identify the device, insert it and run:

# grep -Ff <(hwinfo --disk --short) <(hwinfo --usb --short)

disk:

/dev/sdc General USB Flash Disk

Make sure the size of the device is sufficient for the desired image. You can check the size of the device with:

# fdisk -l /dev/sdc | grep -e "^/dev"

/dev/sdc1 * 2048 31490047 31488000 15G 83 Linux

In this example, the device has a capacity of 15 GB. The command to use for the SLE-15-SP6-Full-ARCH-GM-media1.iso would be:

# dd if=SLE-15-SP6-Full-ARCH-GM-media1.iso of=/dev/sdc bs=4M && sync

The device must not be mounted when running the dd command. Note that all data on the device will be erased.
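Because dd overwrites the target without asking, the two checks described above (sufficient size, device not mounted) can be scripted before writing the image. The following is a minimal sketch under stated assumptions: the helper names `image_fits` and `device_is_mounted` are hypothetical, and the mount check simply looks for the device path at the start of a line in a mounts table such as /proc/mounts.

```shell
# Sketch only: helper names are illustrative assumptions.

# Succeeds if an image of IMAGE_BYTES fits on a device of DEVICE_BYTES.
image_fits() {
  image_bytes=$1
  device_bytes=$2
  [ "$image_bytes" -le "$device_bytes" ]
}

# Succeeds if DEVICE or one of its partitions is listed in the mounts table.
# The second argument defaults to /proc/mounts and exists to ease testing.
device_is_mounted() {
  device=$1
  mounts_file=${2:-/proc/mounts}
  grep -q "^${device}" "$mounts_file"
}

# Possible usage as root, with IMAGE and FLASH_DISK replaced as described above:
#   image_fits "$(stat -c %s IMAGE)" "$(blockdev --getsize64 FLASH_DISK)" \
#     && ! device_is_mounted FLASH_DISK \
#     && dd if=IMAGE of=FLASH_DISK bs=4M && sync
```

The prefix match is deliberately simple: checking `/dev/sdc` also catches a mounted `/dev/sdc1`, which is the case that matters here.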

3.1.3 The installation procedure #

To install SLES, boot or IPL into the installer from the Unified Installer medium and start the installation.

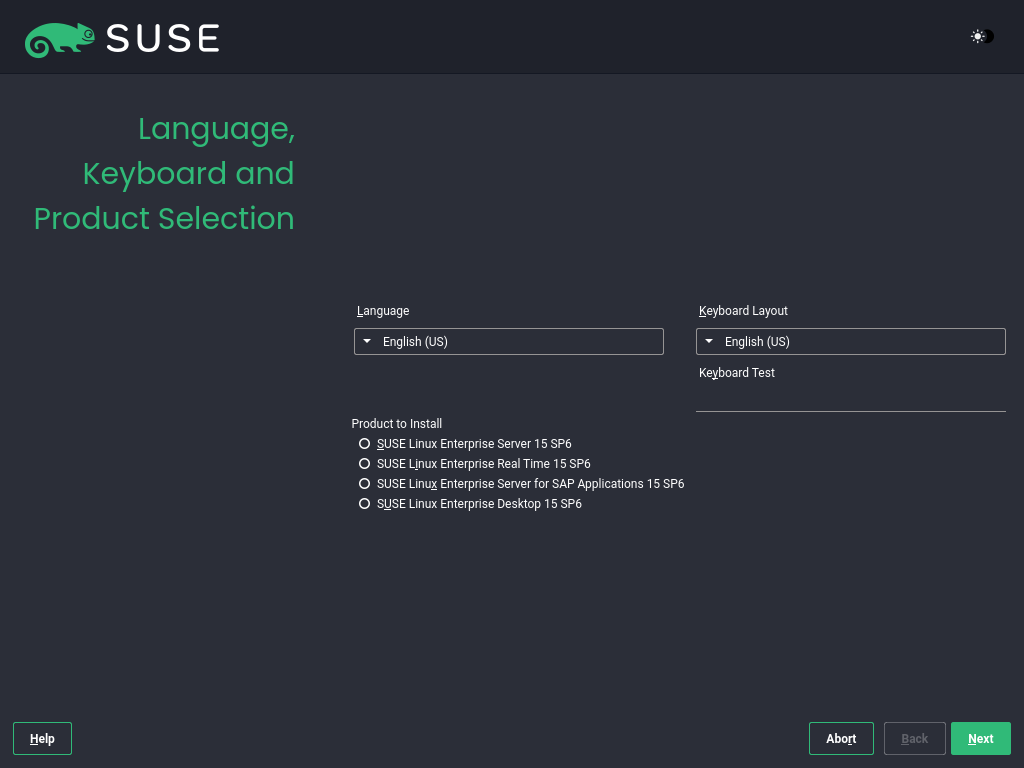

3.1.3.1 Language, keyboard and product selection #

The language and keyboard layout settings are initialized with the language you chose on the boot screen. If you do not change the default, it remains English (US). Change the settings here, if necessary. Use the text box to test the layout.

Select SUSE Linux Enterprise Server 15 SP6 for installation. You need to have a registration code for the product. Proceed with Next.

If you have difficulty reading the labels in the installer, you can change the widget colors and theme.

Click the theme button or press Shift–F3 to open a theme selection dialog. Select a theme from the list and close the dialog.

Shift–F4 switches to the color scheme for vision-impaired users. Press the buttons again to switch back to the default scheme.

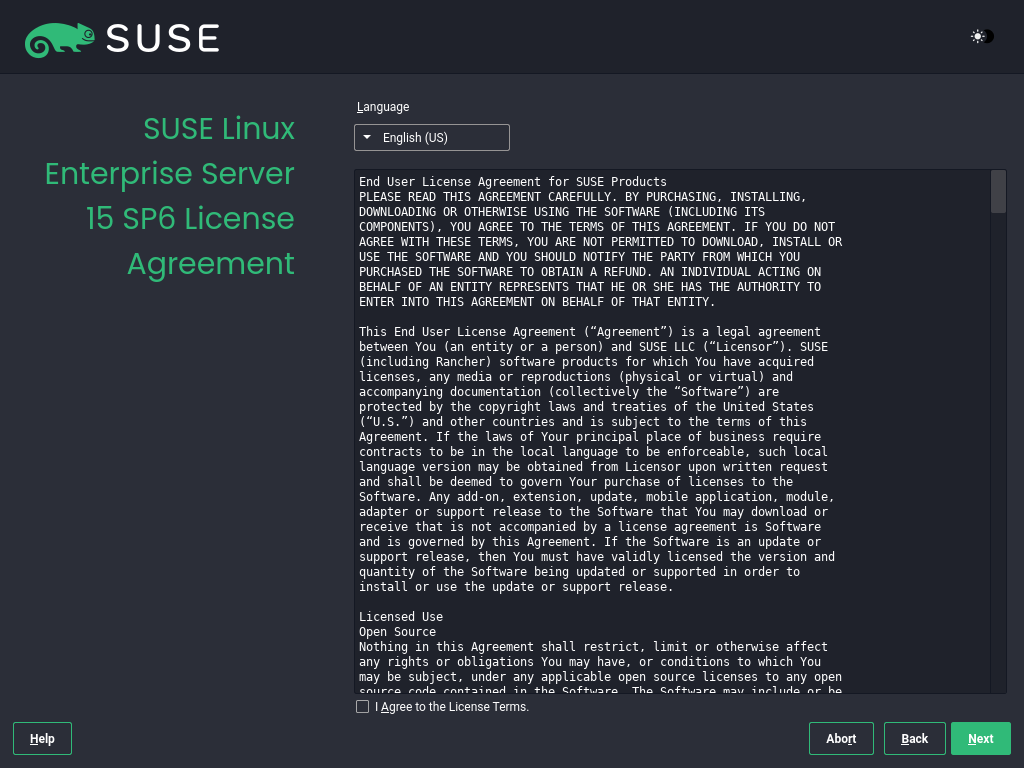

3.1.3.2 License agreement #

Read the License Agreement. It is presented in the language you have chosen on the boot screen. Translations are available via the drop-down list. To install SLES, you need to accept the agreement by selecting the corresponding check box. Proceed with Next.

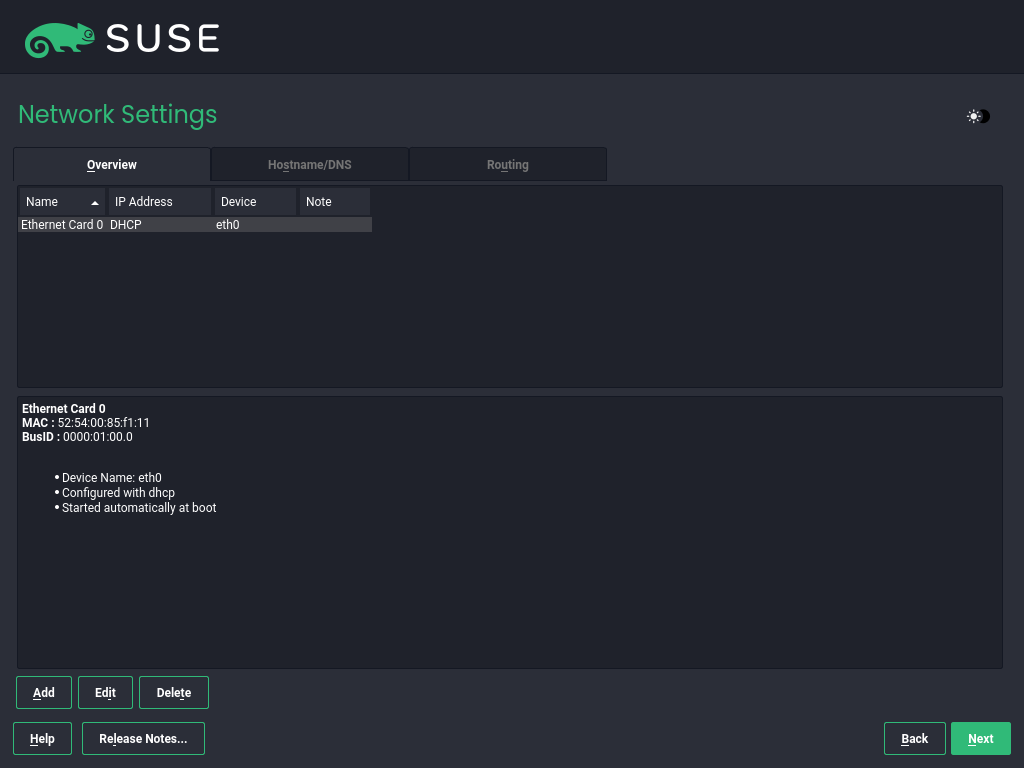

3.1.3.3 Network settings #

A system analysis is performed, where the installer probes for storage devices and tries to find other installed systems. If the network was automatically configured via DHCP during the start of the installation, you are presented with the registration step.

If the network is not yet configured, the network settings dialog opens. Choose a network interface from the list and configure it. Alternatively, add an interface manually. See the sections on installer network settings and configuring a network connection with YaST for more information. If you prefer to do an installation without network access, skip this step without making any changes and proceed with Next.

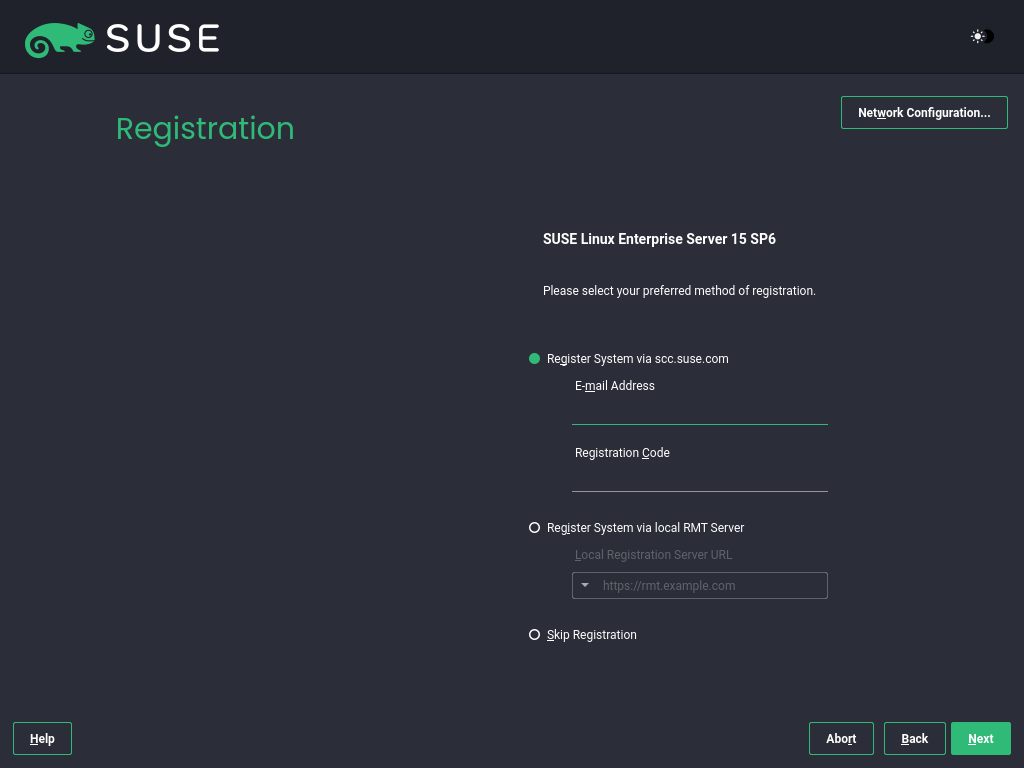

3.1.3.4 Registration #

To get technical support and product updates, you need to register and activate SLES with the SUSE Customer Center or a local registration server. Registering your product at this stage also grants you immediate access to the update repository. This enables you to install the system with the latest updates and patches available.

When registering, repositories and dependencies for modules and extensions are loaded from the registration server.

To register at the SUSE Customer Center, enter the e-mail address associated with your SUSE Customer Center account and the registration code for SLES. Proceed with Next.

If your organization provides a local registration server, you may alternatively register to it. Activate the option to register via a local registration server and either choose a URL from the drop-down list or type in an address. Proceed with Next.

If you are offline or want to skip registration, activate the option to skip the registration. Accept the warning and proceed with Next.

Important: Skipping the registration

Your system and extensions need to be registered to retrieve updates and to be eligible for support. Skipping the registration is only possible when installing from the SLE-15-SP6-Full-ARCH-GM-media1.iso image.

If you do not register during the installation, you can do so at any time later from the running system. To do so, run the YaST product registration module or the command-line tool SUSEConnect.

After SLES has been successfully registered, you are asked whether to install the latest available online updates during the installation. If you confirm, the system will be installed with the most current packages without having to apply the updates after installation. Activating this option is recommended.

By default, the firewall on SUSE AI only blocks incoming connections. If your system is behind another firewall that blocks outgoing traffic, make sure to allow connections to https://scc.suse.com/ and https://updates.suse.com on ports 80 and 443 to receive updates.

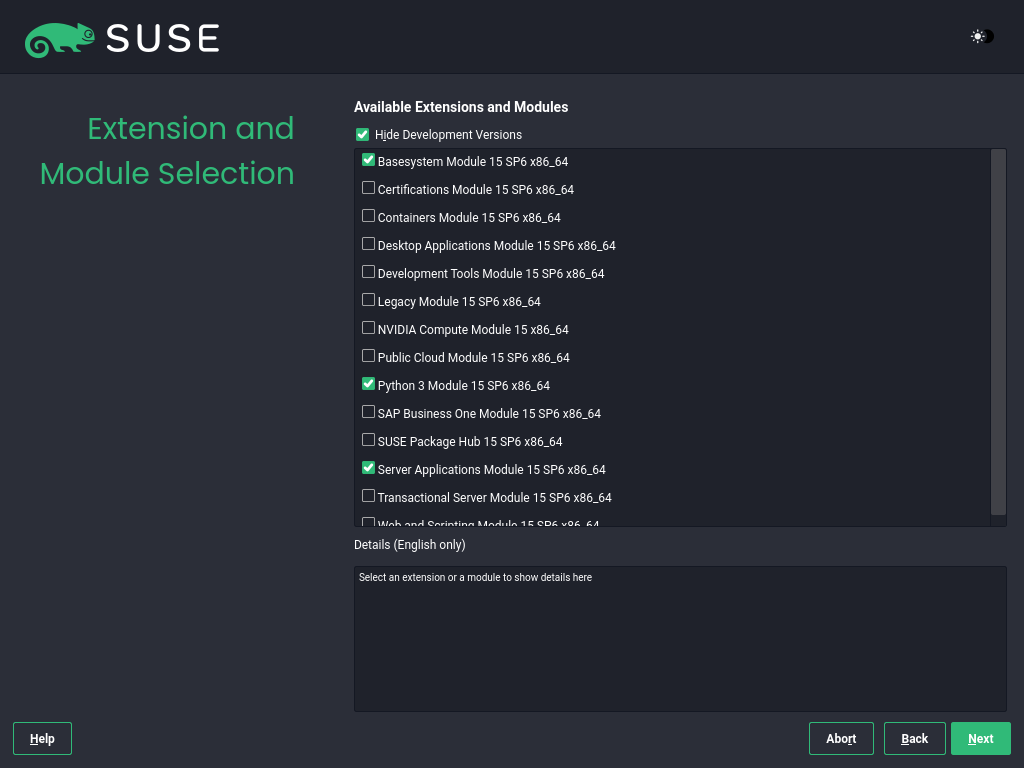

3.1.3.5 Extension and module selection #

After the system is successfully registered, the installer lists modules and extensions that are available for SLES. Modules are components that allow you to customize the product according to your needs. They are included in your SLES subscription. Extensions add functionality to your product. They must be purchased separately.

The availability of certain modules or extensions depends on the product selected in the first step of the installation. For a description of the modules and their lifecycles, select a module to see the accompanying text. More detailed information is available in the Modules and extensions quick start guide.

The selection of modules indirectly affects the scope of the installation, because it defines which software sources (repositories) are available for installation and in the running system.

The following modules and extensions are available for SUSE Linux Enterprise Server:

- Basesystem Module

This module adds a basic system on top of the Unified Installer. It is required by all other modules and extensions. The scope of an installation that only contains the base system is comparable to the installation pattern minimal system of previous SLES versions. This module is selected for installation by default and should not be deselected.

Dependencies: None

- Certifications Module

Contains the FIPS certification packages.

Dependencies: Server Applications

- Confidential Computing Technical Preview

Contains packages related to confidential computing.

Dependencies: Basesystem

- Containers Module

Contains support and tools for containers.

Dependencies: Basesystem

- Desktop Applications Module

Adds a graphical user interface and essential desktop applications to the system.

Dependencies: Basesystem

- Development Tools Module

Contains the compilers (including gcc) and libraries required for compiling and debugging applications. Replaces the former Software Development Kit (SDK).

Dependencies: Basesystem, Desktop Applications

- High Performance Computing (HPC) Module

Provides specific tools commonly used for high performance, numerically intensive workloads.

Dependencies: Basesystem

- Legacy Module

Helps you with migrating applications from earlier versions of SLES and other systems to SLES 15 SP6 by providing packages which are discontinued on SLE. Packages in this module are selected based on the requirements for migration and the level of complexity of configuration.

This module is recommended when migrating from a previous product version.

Dependencies: Basesystem, Server Applications

- NVIDIA Compute Module

Contains the NVIDIA CUDA (Compute Unified Device Architecture) drivers.

The software in this module is provided by NVIDIA under the CUDA End User License Agreement and is not supported by SUSE.

Dependencies: Basesystem

- Public Cloud Module

Contains all tools required to create images for deploying SLES in cloud environments such as Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform, or OpenStack.

Dependencies: Basesystem, Server Applications

- Python 3 Module

This module contains the most recent versions of the selected Python 3 packages.

Dependencies: Basesystem

- SAP Business One Server

This module contains packages and system configurations specific to SAP Business One Server. It is maintained and supported under the SUSE Linux Enterprise Server product subscription.

Dependencies: Basesystem, Server Applications, Desktop Applications, Development Tools

- Server Applications Module

Adds server functionality by providing network services such as DHCP server, name server, or Web server. This module is selected for installation by default. Deselecting it is not recommended.

Dependencies: Basesystem

- SLE High Availability

Adds clustering support for mission-critical setups to SLES. This extension requires a separate license key.

Dependencies: Basesystem, Server Applications

- SLE Live Patching

Adds support for performing critical patching without having to shut down the system. This extension requires a separate license key.

Dependencies: Basesystem, Server Applications

- SUSE Linux Enterprise Workstation Extension

Extends the functionality of SLES with packages from SUSE Linux Enterprise Desktop, like additional desktop applications (office suite, e-mail client, graphical editor, etc.) and libraries. It allows combining both products to create a fully featured workstation. This extension requires a separate license key.

Dependencies: Basesystem, Desktop Applications

- SUSE Package Hub

Provides access to packages for SLES maintained by the openSUSE community. These packages are delivered without L3 support and do not interfere with the supportability of SLES. For more information, refer to https://packagehub.suse.com/.

Dependencies: Basesystem

- Transactional Server Module

Adds support for transactional updates. Updates are either applied to the system as a single transaction or not applied at all. This happens without influencing the running system. If an update fails, or if the successful update is deemed to be incompatible or otherwise incorrect, it can be discarded to immediately return the system to its previous functioning state.

Dependencies: Basesystem

- Web and Scripting Module

Contains packages intended for a running Web server.

Dependencies: Basesystem, Server Applications

Certain modules depend on the installation of other modules. Therefore, when selecting a module, other modules may be selected automatically to fulfill dependencies.

Depending on the product, the registration server can mark modules and extensions as recommended. Recommended modules and extensions are preselected for registration and installation. To avoid installing these recommendations, deselect them manually.

Select the modules and extensions you want to install and proceed with Next. In case you have chosen one or more extensions, you will be prompted to provide the respective registration codes. Depending on your choice, it may also be necessary to accept additional license agreements.

When performing an offline installation from the SLE-15-SP6-Full-ARCH-GM-media1.iso, only the Basesystem Module is selected by default. To install the complete default package set of SUSE Linux Enterprise Server, additionally select the Server Applications Module and the Python 3 Module.

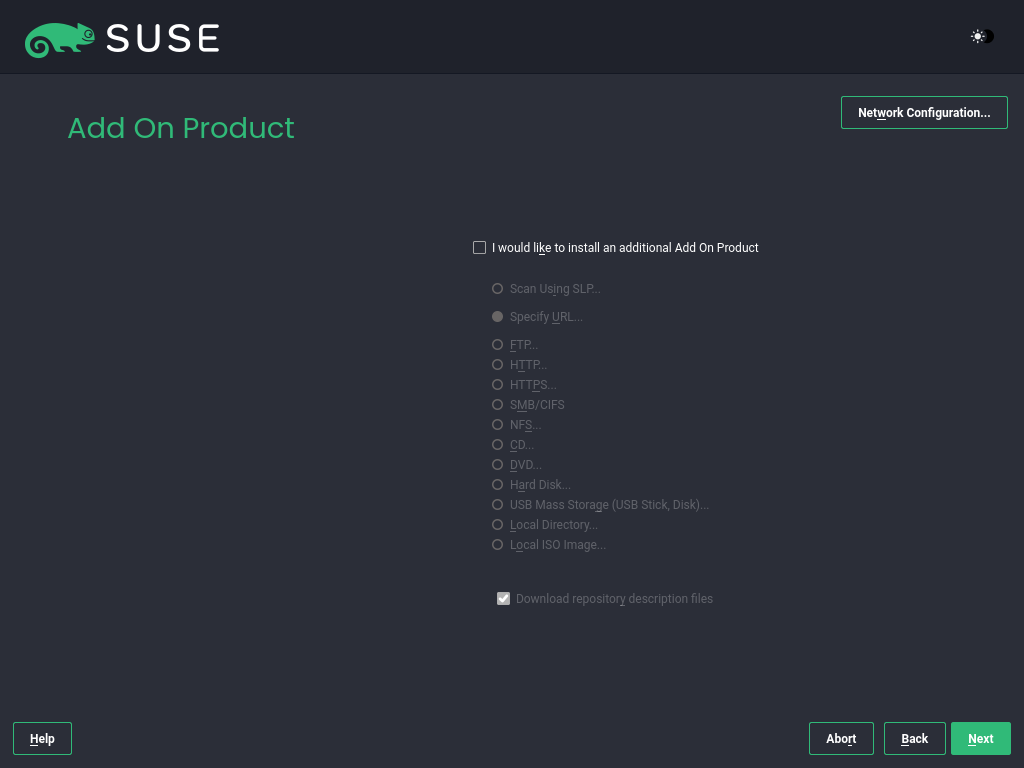

3.1.3.6 Add-on product #

The add-on product dialog allows you to add additional software sources (called "repositories") to SLES that are not provided by the SUSE Customer Center. Add-on products may include third-party products and drivers as well as additional software for your system.

You can also add driver update repositories via this dialog. Driver updates for SLE are provided at https://drivers.suse.com/. These drivers have been created through the SUSE SolidDriver Program.

To skip this step, proceed with Next. Otherwise, activate the option to install an additional add-on product. Specify a media type, a local path, or a network resource hosting the repository and follow the on-screen instructions.

Select the option to download the files describing the repository now. If deactivated, they will be downloaded after the installation has started. Proceed with Next and insert a medium if required. Depending on the content of the product, it may be necessary to accept additional license agreements. Proceed with Next. If you have chosen an add-on product requiring a registration key, you will be asked to enter it before proceeding to the next step.

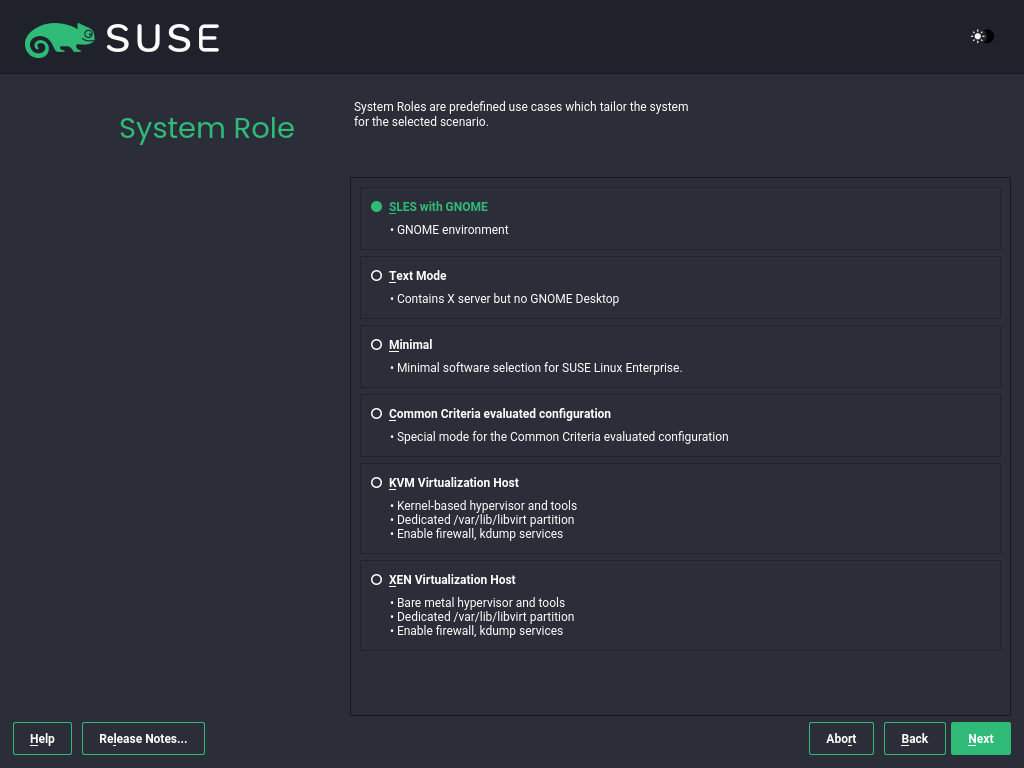

3.1.3.7 System role #

The availability of system roles depends on your selection of modules and extensions. System roles define, for example, the set of software patterns that are preselected for the installation. Refer to the description on the screen to make your choice. Select a role and proceed with Next. If only one role or no role from the enabled modules is suitable for the respective base product, the dialog is omitted.

From this point on, the Release Notes can be viewed from any screen during the installation process by selecting Release Notes.

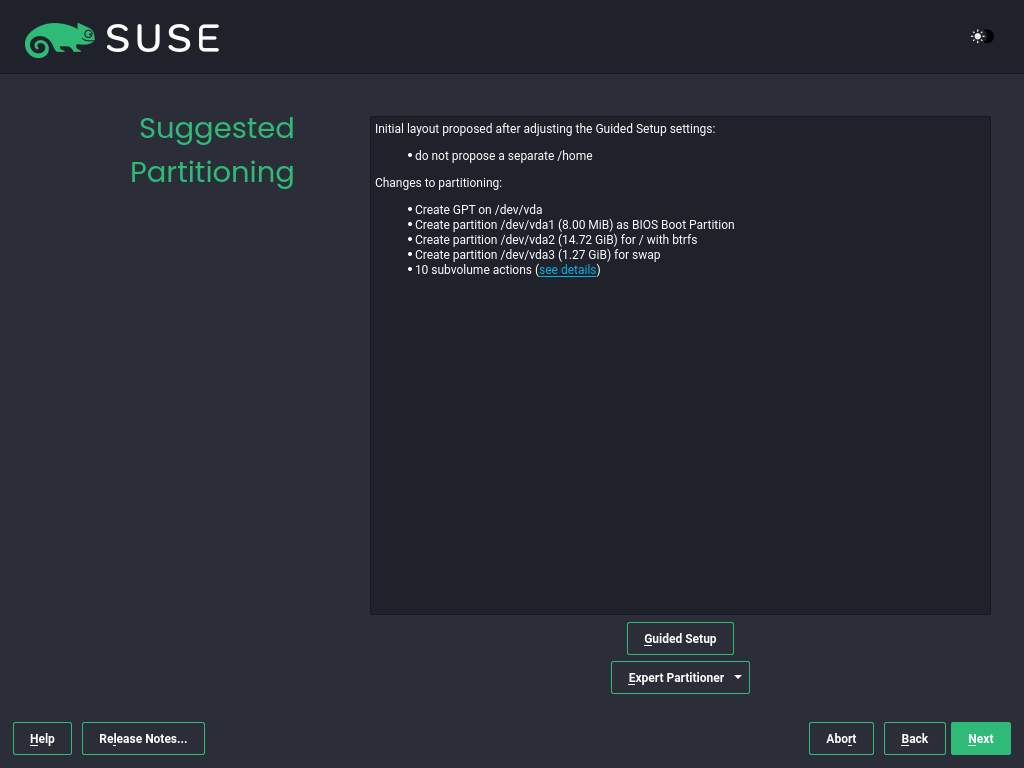

3.1.3.8 Suggested partitioning #

Review the partition setup proposed by the system. If necessary, change it. You have the following options:

- Guided Setup

Starts a wizard that lets you refine the partitioning proposal. The options available here depend on your system setup. If it contains more than a single hard disk, you can choose which disk or disks to use and where to place the root partition. If the disks already contain partitions, decide whether to remove or resize them.

In subsequent steps, you may also add LVM support and disk encryption. You can change the file system for the root partition and decide whether or not to have a separate home partition.

- Expert Partitioner

Opens the Expert Partitioner. This gives you full control over the partitioning setup and lets you create a custom setup. This option is intended for experts. For details, see the Expert Partitioner chapter.

For partitioning purposes, disk space is measured in binary units rather than in decimal units. For example, if you enter sizes of 1GB, 1GiB or 1G, they all signify 1 GiB (Gibibyte), as opposed to 1 GB (Gigabyte).

- Binary

1 GiB = 1,073,741,824 bytes.

- Decimal

1 GB = 1,000,000,000 bytes.

- Difference

1 GiB ≈ 1.07 GB.
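The difference between binary and decimal units grows with the size, which matters when comparing a vendor-specified disk capacity with a partition size entered in the installer. The following sketch reproduces the arithmetic above with shell integer arithmetic; the function names are hypothetical helpers, not installer tools.

```shell
# Sketch only: helper names are illustrative assumptions.

# Convert whole GiB (binary) to bytes: 1 GiB = 1024^3 bytes.
gib_to_bytes() {
  echo $(( $1 * 1024 * 1024 * 1024 ))
}

# Convert whole GB (decimal) to bytes: 1 GB = 1000^3 bytes.
gb_to_bytes() {
  echo $(( $1 * 1000 * 1000 * 1000 ))
}

# Convert bytes to whole GiB, rounded down.
bytes_to_gib() {
  echo $(( $1 / (1024 * 1024 * 1024) ))
}

gib_to_bytes 1                      # prints 1073741824
gb_to_bytes 1                       # prints 1000000000
bytes_to_gib "$(gb_to_bytes 500)"   # prints 465
```

The last call shows that a disk sold as 500 GB holds only 465 full GiB, so a 500G partition request in the installer would not fit on it.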

To accept the proposed setup without any changes, choose Next to proceed.

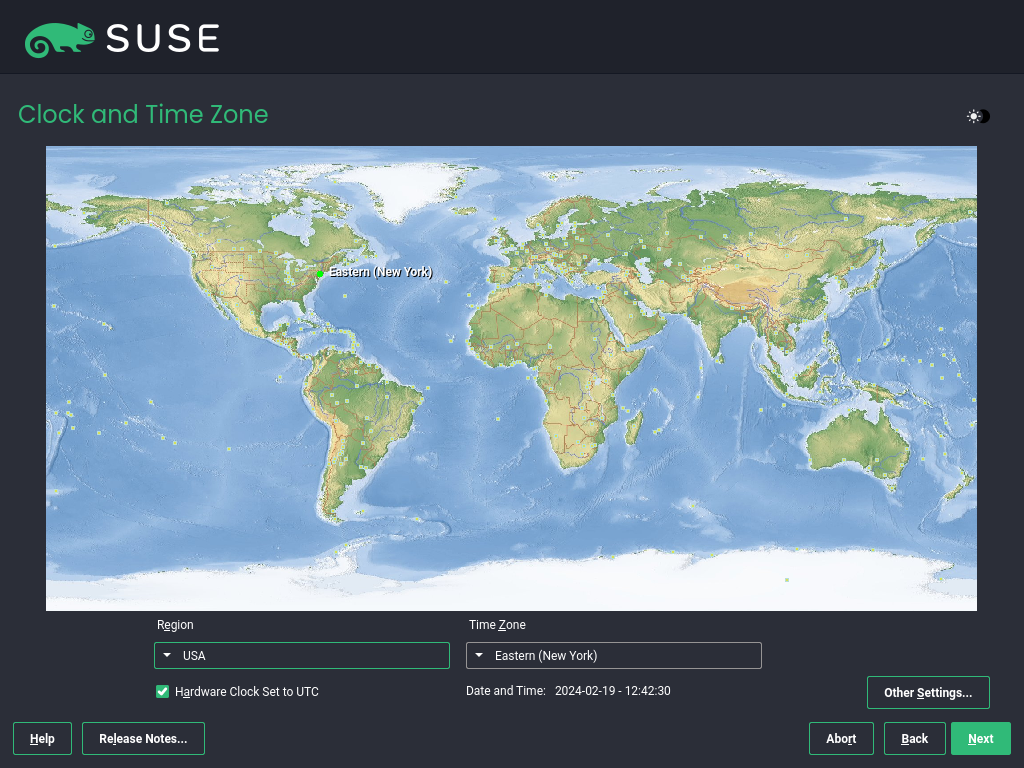

3.1.3.9 Clock and time zone #

Select the clock and time zone to use in your system. To manually adjust the time or to configure an NTP server for time synchronization, open the additional settings. See the section on Clock and Time Zone for detailed information. Proceed with Next.

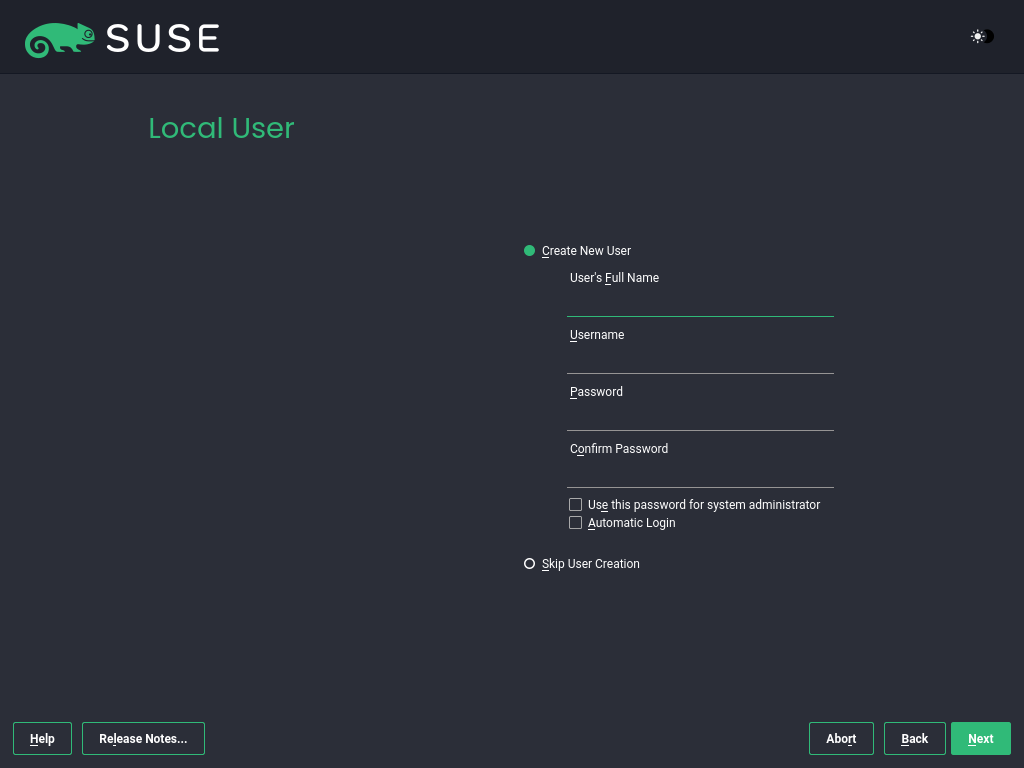

3.1.3.10 Local user #

To create a local user, type the first and last name in the full name field, the login name in the user name field, and the password in the password field.

The password should be at least eight characters long and should contain both uppercase and lowercase letters and numbers. The maximum length for passwords is 72 characters, and passwords are case-sensitive.
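If you generate or pre-validate passwords outside the installer, for example for unattended setups, the guidance above can be expressed as a small check. This is only a sketch of the stated recommendations, not the installer's own validation logic; the function name `password_follows_guidance` is hypothetical, and the length is counted in characters as the shell sees them.

```shell
# Sketch only: mirrors the recommendations in the text, not the installer's checks.
password_follows_guidance() {
  pw=$1
  # At least 8 and at most 72 characters.
  [ "${#pw}" -ge 8 ] || return 1
  [ "${#pw}" -le 72 ] || return 1
  # At least one uppercase letter, one lowercase letter and one digit.
  case $pw in *[[:upper:]]*) ;; *) return 1 ;; esac
  case $pw in *[[:lower:]]*) ;; *) return 1 ;; esac
  case $pw in *[[:digit:]]*) ;; *) return 1 ;; esac
  return 0
}

# Possible usage:
#   password_follows_guidance "$candidate" || echo "Password does not follow the guidance" >&2
```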

For security reasons, it is also strongly recommended not to enable automatic login. You should also not reuse this password for the system administrator but provide a separate root password in the next installation step.

If you install on a system where a previous Linux installation was found, you may import user data from it. Open the list of available user accounts and select one or more users.

In an environment where users are centrally managed (for example, by NIS or LDAP), you can skip the creation of local users. Select the option to skip user creation in this case.

Proceed with Next.

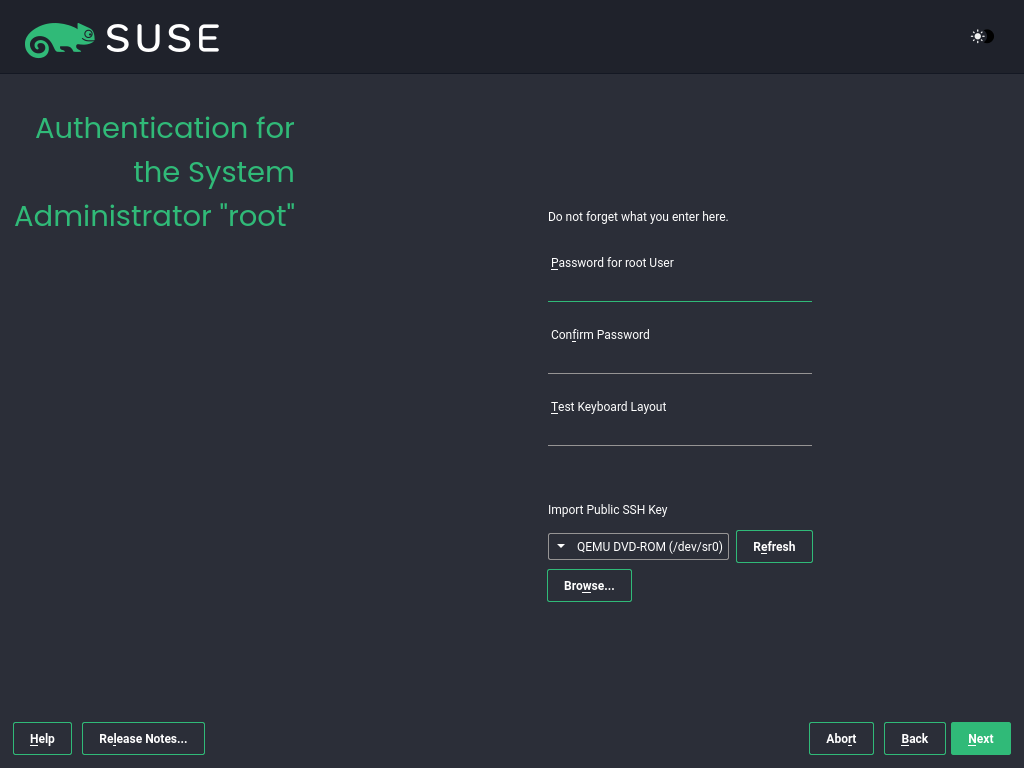

3.1.3.11 Authentication for the system administrator root #

Type a password for the system administrator (called the root user) or provide a public SSH key. If you want, you can use both.

Because the root user is equipped with extensive permissions, the password should be chosen carefully. You should never forget the root password. After you have entered it here, the password cannot be retrieved.

It is recommended to use only US ASCII characters. In the event of a system error or when you need to start your system in rescue mode, the keyboard may not be localized.

To access the system remotely via SSH using a public key, import a key from removable media or an existing partition. See the section on Authentication for the system administrator root for more information.

Proceed with Next.

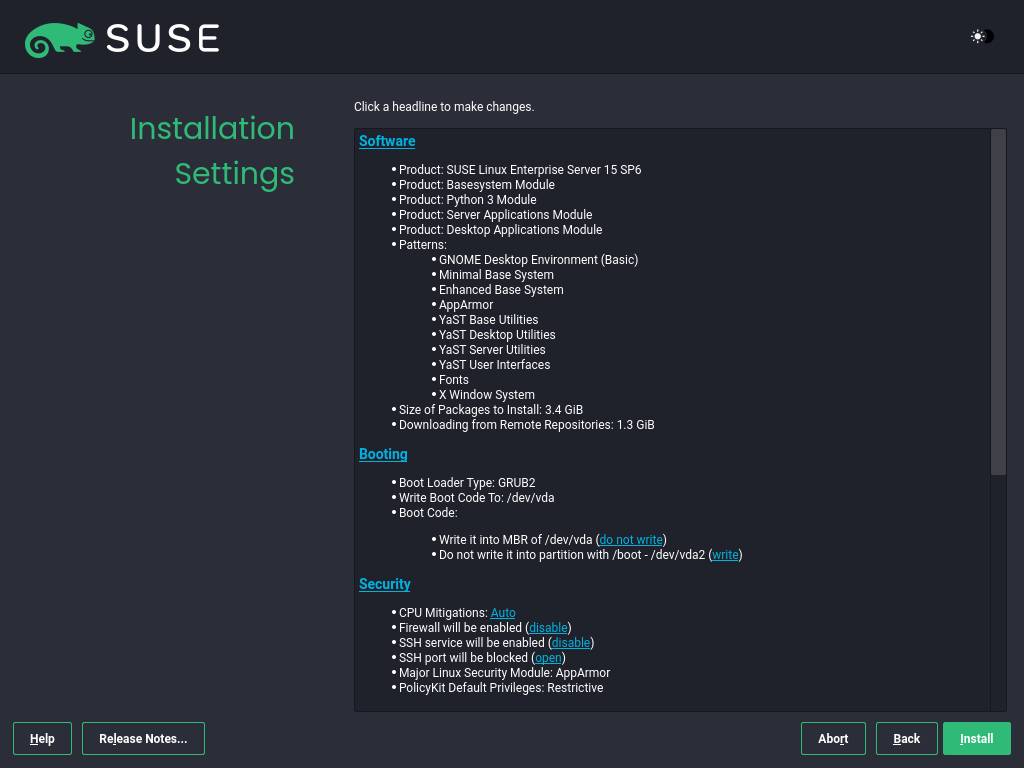

3.1.3.12 Installation settings #

Use the installation settings screen to review and, if necessary, change several proposed installation settings. The current configuration is listed for each setting. To change it, click the headline. Certain settings, such as firewall or SSH, can be changed directly by clicking the respective links.

Changes you can make here can also be made later at any time from the installed system. However, if you need remote access right after the installation, you may need to open the SSH port in the security settings.

- Software

The scope of the installation is defined by the modules and extensions you have chosen for this installation. However, depending on your selection, not all packages available in a module are selected for installation.

Clicking the headline opens the software selection screen, where you can change the software selection by selecting or deselecting patterns. Each pattern contains several software packages needed for specific functions. For a more detailed selection based on software packages to install, switch to the YaST software manager. See Installing or removing software for more information.

- Booting

This section shows the boot loader configuration. Changing the defaults is recommended only if really needed. Refer to The boot loader GRUB 2 for details.

- Security

The CPU mitigations refer to kernel boot command-line parameters for software mitigations that have been deployed to prevent CPU side-channel attacks. Click the selected entry to choose a different option. For details, see the section on CPU Mitigations.

By default, the firewall (firewalld) is enabled on all configured network interfaces. To disable firewalld, click the respective link (not recommended). Refer to the Masquerading and Firewalls chapter for configuration details.

Note: Firewall settings for receiving updates

By default, the firewall on SUSE AI only blocks incoming connections. If your system is behind another firewall that blocks outgoing traffic, make sure to allow connections to https://scc.suse.com/ and https://updates.suse.com on ports 80 and 443 to receive updates.

The SSH service is enabled by default, but its port (22) is closed in the firewall. Click the respective links to open the port or to disable the service. If SSH is disabled, remote logins will not be possible. Refer to Securing network operations with OpenSSH for more information.

The default Linux security module is AppArmor. To disable it, select None as the module in the security settings.

- Security Policies

Click to enable the Defense Information Systems Agency STIG security policy. If any installation settings are incompatible with the policy, you will be prompted to modify them accordingly. Certain settings can be adjusted automatically while others require user input.

Enabling a security profile enables a full SCAP remediation on first boot. You can also perform a scan only or do nothing and manually remediate the system later with OpenSCAP. For more information, refer to the section on Security Profiles.

- Network Configuration

Displays the current network configuration. By default, wicked is used for server installations and NetworkManager for desktop workloads. Click the headline to change the settings. For details, see the section on Configuring a network connection with YaST.

Important: Support for NetworkManager

SUSE only supports NetworkManager for desktop workloads with SLED or the Workstation extension. All server certifications are done with wicked as the network configuration tool, and using NetworkManager may invalidate them. NetworkManager is not supported by SUSE for server workloads.

- Kdump

Kdump saves the memory image ("core dump") to the file system in case the kernel crashes. This enables you to find the cause of the crash by debugging the dump file. Kdump is preconfigured and enabled by default. See the Basic Kdump configuration for more information.

- Default systemd Target

If you have installed the Desktop Applications Module, the system boots into the graphical target, with network, multi-user and display manager support. Switch to the multi-user target if you do not need to log in via a display manager.

- System

View detailed hardware information by clicking the headline. In the resulting screen, you can also change kernel settings. See the section on System Information for more information.

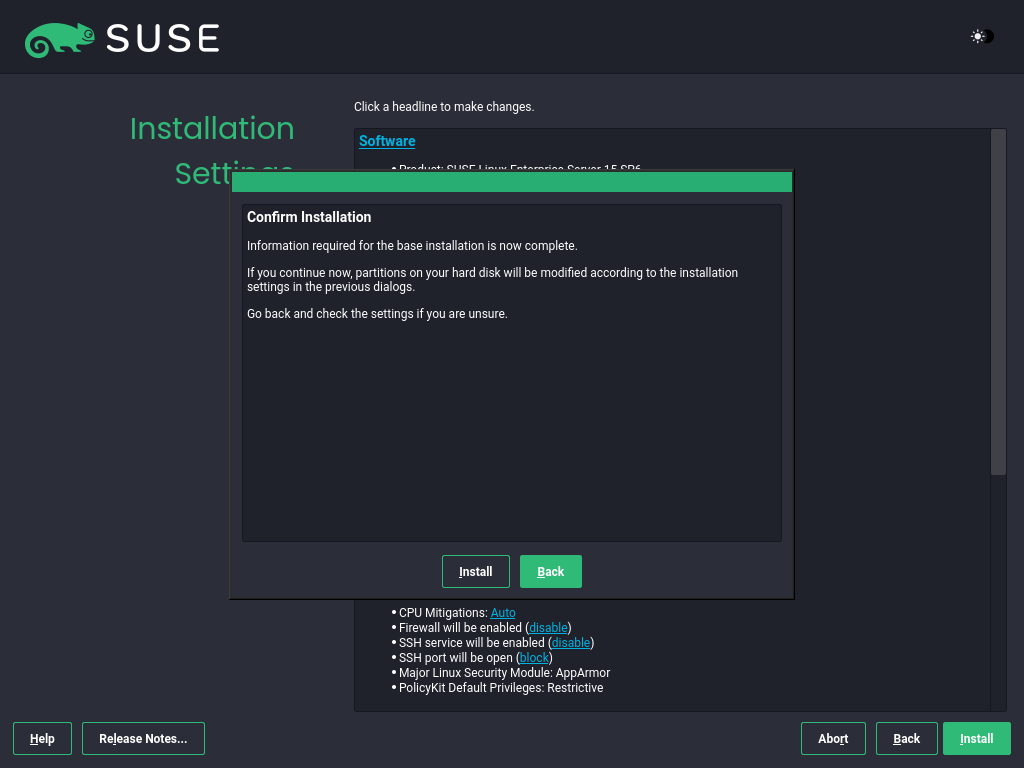

3.1.3.13 Start the installation #

After you have finalized the system configuration on the installation settings screen, click Install. Depending on your software selection, you may need to agree to license agreements before the installation confirmation screen pops up. Up to this point, no changes have been made to your system. After you click Install a second time, the installation process starts.

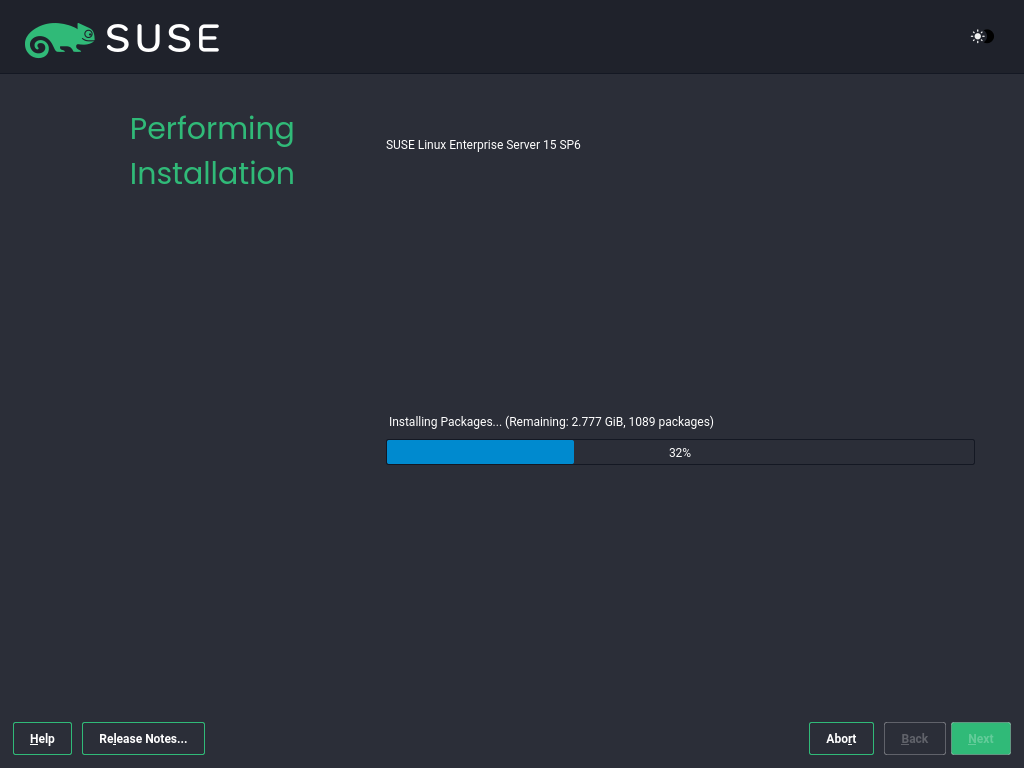

3.1.3.14 The installation process #

During the installation, the progress is shown. After the installation routine has finished, the computer is rebooted into the installed system.

3.2 Installing NVIDIA GPU drivers #

This article demonstrates how to implement host-level NVIDIA GPU support via the open-driver. The `open-driver` is part of the core package repositories. Therefore, there is no need to compile it or download executable packages. This driver is built into the operating system rather than dynamically loaded by the NVIDIA GPU Operator. This configuration is desirable for customers who want to pre-build all artifacts required for deployment into the image, and where the dynamic selection of the driver version via Kubernetes is not a requirement.

3.2.1 Installing NVIDIA GPU drivers on SUSE Linux Enterprise Server #

3.2.1.1 Requirements #

This guide assumes that you have the following already available:

- At least one host with SLES 15 SP6 installed, physical or virtual.
- Your hosts are attached to a subscription, as this is required for package access.
- A compatible NVIDIA GPU installed or fully passed through to the virtual machine in which SLES is running.
- Access to the root user. These instructions assume you are the root user, and not escalating your privileges via sudo.

3.2.1.2 Considerations before the installation #

3.2.1.2.1 Select the driver generation #

You must verify the driver generation for the NVIDIA GPU that your system has. For modern GPUs, the G06 driver is the most common choice. Find more details in the support database.

This section details the installation of the G06 generation of the driver.

3.2.1.2.2 Additional NVIDIA components #

Besides the NVIDIA open-driver provided by SUSE as part of SLES, you might also need additional NVIDIA components. These could include OpenGL libraries, CUDA toolkits, command-line utilities such as nvidia-smi, and container-integration components such as nvidia-container-toolkit. Many of these components are not shipped by SUSE as they are proprietary NVIDIA software. This section describes how to configure additional repositories that give you access to these components and provides examples of using these tools to achieve a fully functional system.

3.2.1.3 The installation procedure #

On the remote host, run the script SUSE-AI-mirror-nvidia.sh from the air-gapped stack (see Section 2.1, “SUSE AI air-gapped stack”) to download all required NVIDIA RPM packages to a local directory, for example:

> SUSE-AI-mirror-nvidia.sh \
  -p /LOCAL_MIRROR_DIRECTORY \
  -l https://nvidia.github.io/libnvidia-container/stable/rpm/x86_64 \
  https://developer.download.nvidia.com/compute/cuda/repos/sles15/x86_64/

After the download is complete, transfer the downloaded directory with all its content to each GPU-enabled local host.

Install the Open Kernel driver KMP and detect the driver version.

# zypper install -y --auto-agree-with-licenses \
  nv-prefer-signed-open-driver

# version=$(rpm -qa --queryformat '%{VERSION}\n' \
  nv-prefer-signed-open-driver | cut -d "_" -f1 | sort -u | tail -n 1)

You can then install the appropriate packages for additional utilities that are useful for testing purposes.

# zypper install -y --auto-agree-with-licenses \
  nvidia-compute-utils-G06=${version} \
  nvidia-persistenced=${version}

Reboot the host to make the changes effective.

# reboot

Log back in and run the nvidia-smi command as root to ensure it correctly detects the GPU and displays the GPU details.

# nvidia-smi

The output of this command should be similar to the following. In the example below, the system has one GPU.

Fri Aug 1 15:32:10 2025
+------------------------------------------------------------------------------+
| NVIDIA-SMI 580.82.07    Driver Version: 580.82.07    CUDA Version: 13.0      |
|------------------------------+------------------------+----------------------+
| GPU Name       Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan Temp Perf  Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                              |                        |               MIG M. |
|==============================+========================+======================|
|   0 Tesla T4             On  |   00000000:00:1E.0 Off |                    0 |
| N/A  33C   P8     13W /  70W |        0MiB / 15360MiB |      0%      Default |
|                              |                        |                  N/A |
+------------------------------+------------------------+----------------------+
+------------------------------------------------------------------------------+
| Processes:                                                                   |
|  GPU   GI   CI        PID   Type   Process name                   GPU Memory |
|        ID   ID                                                    Usage      |
|==============================================================================|
|  No running processes found                                                  |
+------------------------------------------------------------------------------+
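The version-detection pipeline used in the procedure above can be tried on sample data to see what it extracts. In the following sketch, the input lines are made-up version strings in the shape the pipeline expects (driver version, then an underscore and a kernel suffix), and `latest_driver_version` is a hypothetical wrapper around the same `cut`, `sort` and `tail` calls.

```shell
# Sketch only: the sample version strings below are invented for illustration.

# Keep the part before the first underscore, deduplicate, and pick the last entry.
latest_driver_version() {
  cut -d "_" -f1 | sort -u | tail -n 1
}

printf '%s\n' \
  '570.86.16_k6.4.0_150600.21' \
  '580.82.07_k6.4.0_150600.21' \
  '580.82.07_k6.4.0_150600.23' \
  | latest_driver_version
# prints 580.82.07
```

Note that `sort -u` orders the entries as plain text. If several driver versions with a different number of digits could be installed side by side, GNU `sort -V` (version sort) may be a more robust choice; this is a suggestion, not part of the documented procedure.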

3.2.2 Installing NVIDIA GPU drivers on SUSE Linux Enterprise Micro #

3.2.2.1 Requirements #

This guide assumes that you already have the following available:

At least one host with SUSE Linux Enterprise Micro 6.1 installed, physical or virtual.

Your hosts are attached to a subscription as this is required for package access.

A compatible NVIDIA GPU installed or fully passed through to the virtual machine in which SUSE Linux Enterprise Micro is running.

Access to the root user. These instructions assume you are the root user, and not escalating your privileges via sudo.

3.2.2.2 Considerations before the installation #

3.2.2.2.1 Select the driver generation #

You must verify the driver generation for the NVIDIA GPU that your system has. For modern GPUs, the G06 driver is the most common choice. Find more details in the support database.

This section details the installation of the G06 generation of the driver.

3.2.2.2.2 Additional NVIDIA components #

Besides the NVIDIA open-driver provided by SUSE as part of SUSE Linux Enterprise Micro, you might also need additional NVIDIA components. These could include OpenGL libraries, CUDA toolkits, command-line utilities such as nvidia-smi, and container-integration components such as nvidia-container-toolkit. Many of these components are not shipped by SUSE as they are proprietary NVIDIA software. This section describes how to configure additional repositories that give you access to these components and provides examples of using these tools to achieve a fully functional system.

3.2.2.3 The installation procedure #

On the remote host, run the script SUSE-AI-mirror-nvidia.sh from the air-gapped stack (see Section 2.1, “SUSE AI air-gapped stack”) to download all required NVIDIA RPM packages to a local directory, for example:

> SUSE-AI-mirror-nvidia.sh \
  -p /LOCAL_MIRROR_DIRECTORY \
  -l https://nvidia.github.io/libnvidia-container/stable/rpm/x86_64 \
  https://developer.download.nvidia.com/compute/cuda/repos/sles15/x86_64/

After the download is complete, transfer the downloaded directory with all its content to each GPU-enabled local host.

On each (local) GPU-enabled host, open up a transactional-update shell session to create a new read/write snapshot of the underlying operating system so that we can make changes to the immutable platform.

# transactional-update shell

On each GPU-enabled local host in its transactional-update shell session, add a package repository from the safely transferred NVIDIA RPM packages directory. This allows pulling in additional utilities, for example, nvidia-smi.

transactional update # zypper ar --no-gpgcheck \
  file:///LOCAL_MIRROR_DIRECTORY \
  nvidia-local-mirror
transactional update # zypper --gpg-auto-import-keys refresh

Install the Open Kernel driver KMP and detect the driver version.

transactional update # zypper install -y --auto-agree-with-licenses \
  nvidia-open-driver-G06-signed-cuda-kmp-default
transactional update # version=$(rpm -qa --queryformat '%{VERSION}\n' \
  nvidia-open-driver-G06-signed-cuda-kmp-default \
  | cut -d "_" -f1 | sort -u | tail -n 1)

You can then install the appropriate packages for additional utilities that are useful for testing purposes.

transactional update # zypper install -y --auto-agree-with-licenses \
  nvidia-compute-utils-G06=${version} \
  nvidia-persistenced=${version}

Exit the transactional-update session and reboot to the new snapshot that contains the changes you have made.

transactional update # exit
# reboot

After the system has rebooted, log back in and run the nvidia-smi command as root to ensure it correctly detects the GPU and displays the GPU details.

# nvidia-smi

The output should look similar to the following. In this example, the system has one GPU.

Fri Aug 1 14:53:26 2025
+------------------------------------------------------------------------------+
| NVIDIA-SMI 580.82.07    Driver Version: 580.82.07    CUDA Version: 13.0      |
|---------------------------------+---------------------+----------------------+
| GPU  Name          Persistence-M| Bus-Id       Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf    Pwr:Usage/Cap|        Memory-Usage | GPU-Util  Compute M. |
|                                 |                     |               MIG M. |
|=================================+=====================+======================|
|   0  Tesla T4               On  |00000000:00:1E.0 Off |                    0 |
| N/A   34C    P8       10W / 70W |   0MiB / 15360MiB   |      0%      Default |
|                                 |                     |                  N/A |
+---------------------------------+---------------------+----------------------+

+------------------------------------------------------------------------------+
| Processes:                                                                   |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|==============================================================================|
|  No running processes found                                                  |
+------------------------------------------------------------------------------+
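After the reboot, it can be useful to confirm that the driver version reported by nvidia-smi is the one you expected to install. The following is a minimal sketch that extracts the value after `Driver Version:` from the banner line. The helper name `smi_driver_version`, the sample banner and the expected value are assumptions for illustration.

```bash
#!/bin/sh
# Hypothetical helper: print the version that follows "Driver Version:"
# in nvidia-smi banner text read from standard input.
smi_driver_version() {
    sed -n 's/.*Driver Version: *\([0-9][0-9.]*\).*/\1/p' | head -n 1
}

# Sample banner line, copied from the example output in this section.
banner='| NVIDIA-SMI 580.82.07    Driver Version: 580.82.07    CUDA Version: 13.0 |'

expected="580.82.07"   # assumed value; use the version you installed
reported=$(printf '%s\n' "$banner" | smi_driver_version)

if [ "$reported" = "$expected" ]; then
    echo "driver ${reported} matches the expected version"
else
    echo "driver mismatch: expected ${expected}, got ${reported:-nothing}"
fi
```

On a GPU-enabled host, the same helper could read live output instead of the sample, for example `nvidia-smi | smi_driver_version`.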

3.3 Installing SUSE Rancher Prime: RKE2 in air-gapped environments #

SUSE Rancher Prime: RKE2 can be installed in an air-gapped environment with two different methods. You can either deploy via the rke2-airgap-images tarball artifact or by using a private registry.

You can use any RKE2 Prime version listed on the Prime Artifacts URL for the assets mentioned in these steps. To learn more about the Prime Artifacts URL, see our Prime-only documentation. Authentication is required. Use your SUSE Customer Center (SCC) credentials to log in.

3.3.1 Prerequisites #

Ensure you meet the following prerequisites based on your environment before proceeding. All the steps listed on this page must be run as the root user or through sudo.

If your node has NetworkManager installed and enabled, ensure you configure NetworkManager to ignore CNI-managed interfaces.

If running on an air-gapped node with SELinux enabled, you must manually install the necessary SELinux policy RPM before performing these steps. See our RPM Documentation to determine what you need.

If running on an air-gapped node with SELinux enabled, the following are required dependencies for SLES, CentOS, or RHEL 8 when doing an RPM install:

container-selinux
iptables
libnetfilter_conntrack
libnfnetlink
libnftnl
policycoreutils-python-utils
rke2-common
rke2-selinux

If your nodes do not have an interface with a default route, a default route must be configured; even a black-hole route via a dummy interface will suffice. RKE2 requires a default route to auto-detect the node’s primary IP and for kube-proxy ClusterIP routing to function correctly. To add a dummy route, do the following:

ip link add dummy0 type dummy
ip link set dummy0 up
ip addr add 203.0.113.254/31 dev dummy0
ip route add default via 203.0.113.255 dev dummy0 metric 1000
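Before adding a dummy route, you may want to check whether the node already has a default route. The following sketch tests routing table text for a line that starts with `default`. The helper name `has_default_route` and the sample routing table are assumptions for illustration.

```bash
#!/bin/sh
# Hypothetical helper: succeed if the routing table text on standard
# input contains at least one default route.
has_default_route() {
    grep -q '^default '
}

# Illustrative routing table of a host without a default route.
sample_routes='10.0.0.0/24 dev eth0 proto kernel scope link src 10.0.0.5'

if printf '%s\n' "$sample_routes" | has_default_route; then
    echo "default route present"
else
    echo "no default route: configure one before installing RKE2"
fi
```

On a real node, the input would come from the routing table itself, for example `ip route show | has_default_route`.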

3.3.2 Tarball Method #

Download the air-gap images tarballs from the Prime Artifacts URL for the RKE2 version, CNI, and platform you are using.

rke2-images.linux-<ARCH>.tar.zst, or rke2-images-core.linux-<ARCH>.tar.gz. This tarball contains the core container images required for RKE2 Prime to function. Zstandard offers better compression ratios and faster decompression speeds compared to gzip.

rke2-images-<CNI>.linux-<ARCH>.tar.gz: This tarball specifically contains the container images for your Container Network Interface (CNI). The default CNI in RKE2 is Canal.

If you are using the default CNI, Canal (--cni=canal), you can use either the rke2-images legacy archive as described above or the rke2-images-core and rke2-images-canal archives.

If you are using the alternative CNI, Cilium (--cni=cilium), you must download the rke2-images-core and rke2-images-cilium archives.

If using your own CNI (--cni=none), download only the rke2-images-core archive.

If enabling the vSphere CPI/CSI charts (--cloud-provider-name=rancher-vsphere), you must also download the rke2-images-vsphere archive.

Create a directory named /rke2-artifacts on the node and save the previously downloaded files there.
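The archive selection rules above can be summarized in a small lookup. The following sketch prints the archive base names for a given `--cni` value, using only the split `core` plus CNI archives. The helper name `required_archives` is an assumption, and the sketch deliberately leaves out the legacy combined archive and the optional vSphere archive.

```bash
#!/bin/sh
# Hypothetical helper: print the image archive base names (without the
# .linux-<ARCH>.tar.* suffix) needed for a given --cni value.
required_archives() {
    case "$1" in
        canal)  printf '%s\n' rke2-images-core rke2-images-canal ;;
        cilium) printf '%s\n' rke2-images-core rke2-images-cilium ;;
        none)   printf '%s\n' rke2-images-core ;;
        *)      echo "CNI not covered by this sketch: $1" >&2; return 1 ;;
    esac
}

required_archives cilium
```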

3.3.3 Private Registry Method #

Private registry support honors all settings from the containerd registry configuration, including endpoint override, transport protocol (HTTP/HTTPS), authentication, certificate verification, and more.

Add all the required system images to your private registry. A list of images can be obtained from the .txt file corresponding to each tarball referenced above. Alternatively, you can docker load the air-gap image tarballs, then tag and push the loaded images.

Proceed to Section 3.3.4, “Install RKE2” using the system-default-registry parameter, or use the containerd registry configuration to use your registry as a mirror for docker.io.

3.3.4 Install RKE2 #

The following options to install RKE2 should only be performed after completing either the Section 3.3.2, “Tarball Method” or Section 3.3.3, “Private Registry Method”.

RKE2 can be installed either by running the binary (Section 3.3.4.1, “RKE2 Binary Install”) directly or by using the install.sh script (Section 3.3.4.2, “RKE2 Install.sh Script Install”).

3.3.4.1 RKE2 Binary Install #

Obtain the RKE2 binary file rke2.linux-<ARCH>.tar.gz.

Ensure the binary is named rke2 and place it in /usr/local/bin. Ensure it is executable.

Run the binary with the desired parameters.

If you are using the Rancher Prime registry, set the following values in config.yaml:

Set system-default-registry: registry.rancher.com.

If you are not using the default CNI, Canal, set cni: <CNI>.

system-default-registry: registry.rancher.com
cni: <CNI>

If using the Private Registry Method, set the following values in config.yaml:

system-default-registry: "registry.example.com:5000"

Note: The system-default-registry parameter must specify only valid RFC 3986 URI authorities, that is, a host and optional port.
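A common mistake is to put a scheme or a path into system-default-registry. The following sketch shows one way to pre-check a value before writing it to config.yaml. The helper name `is_registry_authority` is an assumption, and the pattern is a simplification of RFC 3986 that accepts only a DNS-style host with an optional numeric port (no IPv6 literals or user information).

```bash
#!/bin/sh
# Hypothetical check: accept "host" or "host:port" and reject values
# that contain a scheme, a path, or whitespace.
is_registry_authority() {
    printf '%s\n' "$1" | grep -Eq '^[A-Za-z0-9.-]+(:[0-9]+)?$'
}

for candidate in \
    "registry.example.com:5000" \
    "https://registry.example.com:5000" \
    "registry.example.com/rancher"
do
    if is_registry_authority "$candidate"; then
        echo "ok:       $candidate"
    else
        echo "rejected: $candidate"
    fi
done
```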

3.3.4.2 RKE2 Install.sh Script Install #

install.sh may be used in an offline mode by setting the INSTALL_RKE2_ARTIFACT_PATH variable to a path containing pre-downloaded artifacts. This will run through a standard install, including creating systemd units.

Download the install script, RKE2 binaries, RKE2 images, and SHA256 checksums archives from the Prime Artifacts URL into the /rke2-artifacts directory, as in the example below:

mkdir /rke2-artifacts && cd /rke2-artifacts/
curl -OLs <PRIME-ARTIFACTS-URL>/rke2/<VERSION>/rke2-images.linux-<ARCH>.tar.zst
curl -OLs <PRIME-ARTIFACTS-URL>/rke2/<VERSION>/rke2.linux-<ARCH>.tar.gz
curl -OLs <PRIME-ARTIFACTS-URL>/rke2/<VERSION>/sha256sum-<ARCH>.txt
curl -sfL https://get.rke2.io --output install.sh

Run install.sh using the directory, as in the example below:

INSTALL_RKE2_ARTIFACT_PATH=/rke2-artifacts sh install.sh

Enable and run the service as outlined here.
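Because the artifacts are carried across the air gap manually, you may want to verify them against the downloaded checksum file before running install.sh. The following sketch assumes the checksum file uses the standard `sha256sum` line format and may list more files than you downloaded, which is why it uses `--ignore-missing`. The helper name `verify_artifacts` and the demo file contents are assumptions for illustration.

```bash
#!/bin/sh
# Hypothetical helper: verify every file in the artifact directory that
# is listed in the checksum file. Files listed but not present are skipped.
verify_artifacts() {
    # $1: artifact directory, $2: checksum file name inside that directory
    ( cd "$1" && sha256sum --check --ignore-missing --quiet "$2" )
}

# Self-contained demo in a temporary directory with a placeholder artifact.
demo_dir=$(mktemp -d)
printf 'placeholder\n' > "${demo_dir}/rke2.linux-amd64.tar.gz"
( cd "$demo_dir" && sha256sum rke2.linux-amd64.tar.gz > sha256sum-amd64.txt )

if verify_artifacts "$demo_dir" sha256sum-amd64.txt; then
    echo "checksums match"
else
    echo "checksum mismatch: do not install from these artifacts"
fi
rm -rf "$demo_dir"
```

On a real node, the call would point at the artifact directory from this section, for example `verify_artifacts /rke2-artifacts sha256sum-<ARCH>.txt`.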

3.4 Installing SUSE Rancher Prime: RKE2 #

This guide will help you quickly launch a cluster with default options.

New to Kubernetes? The official Kubernetes docs already have some great tutorials outlining the basics here.

You can use any RKE2 Prime version listed on the Prime Artifacts URL for the assets mentioned in these steps. To learn more about the Prime Artifacts URL, see our Prime-only documentation. Authentication is required. Use your SUSE Customer Center (SCC) credentials to log in.

3.4.1 Prerequisites #

Make sure your environment fulfills the requirements. If NetworkManager is installed and enabled on your hosts, ensure that it is configured to ignore CNI-managed interfaces.

If the host kernel supports AppArmor, the AppArmor tools (usually available via the apparmor-parser package) must also be present prior to installing RKE2.

The SUSE Rancher Prime: RKE2 installation process must be run as the root user or through sudo.

3.4.2 Server Node Installation #

SUSE Rancher Prime: RKE2 provides an installation script that is a convenient way to install it as a service on systemd based systems. This script is available at https://get.rke2.io. To install SUSE Rancher Prime: RKE2 using this method do the following:

Run the installer, where INSTALL_RKE2_ARTIFACT_URL is the Prime Artifacts URL and INSTALL_RKE2_CHANNEL is a release channel you can subscribe to and defaults to stable. In this example, INSTALL_RKE2_CHANNEL="latest" gives you the latest version of RKE2.

curl -sfL https://get.rke2.io/ | sudo INSTALL_RKE2_ARTIFACT_URL=<PRIME-ARTIFACTS-URL>/rke2 INSTALL_RKE2_CHANNEL="latest" sh -

If you want to specify a version, set the INSTALL_RKE2_VERSION environment variable.

curl -sfL https://get.rke2.io/ | sudo INSTALL_RKE2_ARTIFACT_URL=<PRIME-ARTIFACTS-URL>/rke2 INSTALL_RKE2_VERSION="<VERSION>" sh -

This will install the rke2-server service and the rke2 binary onto your machine. Due to its nature, it will fail unless it runs as the root user or through sudo.

Enable the rke2-server service.

systemctl enable rke2-server.service

To pull images from the Rancher Prime registry, set the following value in /etc/rancher/rke2/config.yaml:

system-default-registry: registry.rancher.com

This configuration tells RKE2 to use registry.rancher.com as the default location for all container images it needs to deploy within the cluster.

Start the service.

systemctl start rke2-server.service

Follow the logs with the following command:

journalctl -u rke2-server -f

After running this installation:

The rke2-server service will be installed. The rke2-server service will be configured to automatically restart after node reboots or if the process crashes or is killed.

Additional utilities will be installed at /var/lib/rancher/rke2/bin/. They include: kubectl, crictl, and ctr. Note that these are not on your path by default.

Two cleanup scripts, rke2-killall.sh and rke2-uninstall.sh, will be installed to the path at:

/usr/local/bin for regular file systems

/opt/rke2/bin for read-only and btrfs file systems

INSTALL_RKE2_TAR_PREFIX/bin if INSTALL_RKE2_TAR_PREFIX is set

A kubeconfig file will be written to /etc/rancher/rke2/rke2.yaml.

A token that can be used to register other server or agent nodes will be created at /var/lib/rancher/rke2/server/node-token.
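Since the bundled utilities are not on the path by default, a short shell snippet can make them available for the current session. The following sketch uses the two paths listed above. The variable name `RKE2_BIN_DIR` is an assumption, and the `kubectl get nodes` call is guarded so that the snippet is harmless on a host where RKE2 is not installed yet.

```bash
#!/bin/sh
# Paths taken from the list above.
RKE2_BIN_DIR=/var/lib/rancher/rke2/bin
KUBECONFIG=/etc/rancher/rke2/rke2.yaml
export KUBECONFIG

# Append the RKE2 utility directory to PATH only if it is not there yet.
case ":${PATH}:" in
    *":${RKE2_BIN_DIR}:"*) ;;
    *) PATH="${PATH}:${RKE2_BIN_DIR}"; export PATH ;;
esac

# Query the cluster only if kubectl is actually available on this host.
if command -v kubectl >/dev/null 2>&1; then
    kubectl get nodes || echo "cluster not reachable from this host yet"
else
    echo "kubectl not found: is RKE2 installed on this host?"
fi
```

To keep the settings across logins, the same lines could be placed in the root user's shell profile.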

If you are adding additional server nodes, you must have an odd number in total. An odd number is needed to maintain quorum. See the High Availability documentation for more details.

3.4.3 Linux Agent (Worker) Node Installation #

The steps in this section require root-level access or sudo.

Run the installer.

curl -sfL https://get.rke2.io | INSTALL_RKE2_ARTIFACT_URL=<PRIME-ARTIFACTS-URL>/rke2 INSTALL_RKE2_CHANNEL="latest" INSTALL_RKE2_TYPE="agent" sh -

If you want to specify a version, set the INSTALL_RKE2_VERSION environment variable.

curl -sfL https://get.rke2.io/ | sudo INSTALL_RKE2_ARTIFACT_URL=<PRIME-ARTIFACTS-URL>/rke2 INSTALL_RKE2_VERSION="<VERSION>" INSTALL_RKE2_TYPE="agent" sh -

This will install the rke2-agent service and the rke2 binary onto your machine. Due to its nature, it will fail unless it runs as the root user or through sudo.

Enable the rke2-agent service.

systemctl enable rke2-agent.service

Configure the rke2-agent service.

mkdir -p /etc/rancher/rke2/
vim /etc/rancher/rke2/config.yaml

Content for config.yaml:

server: https://<server>:9345
token: <token from server node>

Note: The rke2 server process listens on port 9345 for new nodes to register. The Kubernetes API is still served on port 6443, as normal.

Start the service.

systemctl start rke2-agent.service

Follow the logs with the following command:

journalctl -u rke2-agent -f

Each machine must have a unique host name. If your machines do not have unique host names, set the node-name parameter in the config.yaml file and provide a value with a valid and unique host name for each node. To learn more about the config.yaml file, refer to the Configuration Options documentation.
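When you configure many agents, writing config.yaml from a script instead of an editor can reduce typing errors. The following sketch generates the file with the server, token and node-name keys described above. The helper name `write_agent_config` and all values passed to it are placeholders chosen for illustration, and the demo writes into a temporary directory rather than /etc/rancher/rke2.

```bash
#!/bin/sh
# Hypothetical helper: write an agent config.yaml into the given directory.
write_agent_config() {
    # $1: target directory, $2: server URL, $3: join token, $4: node name
    mkdir -p "$1" || return 1
    cat > "$1/config.yaml" <<EOF
server: $2
token: $3
node-name: $4
EOF
}

# Demo with placeholder values in a temporary directory.
demo_dir=$(mktemp -d)
write_agent_config "$demo_dir" "https://rke2-server.example.com:9345" \
    "EXAMPLE_TOKEN" "gpu-worker-01"
cat "$demo_dir/config.yaml"
rm -rf "$demo_dir"
```

On a real agent node, the target directory would be /etc/rancher/rke2 and the token would be the content of the node-token file on the server node.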

3.4.4 Windows Agent (Worker) Node Installation #

Windows Support works with Calico or Flannel as the CNI for the RKE2 cluster.

Prepare the Windows Agent Node.

Note: The Windows Server Containers feature needs to be enabled for the RKE2 agent to work.

Open a new PowerShell window with Administrator privileges.

powershell -Command "Start-Process PowerShell -Verb RunAs"

In the new PowerShell window, run the following command to install the containers feature.

Enable-WindowsOptionalFeature -Online -FeatureName containers -All

This will require a reboot for the Containers feature to properly function.

Download the install script.

Invoke-WebRequest -Uri https://raw.githubusercontent.com/rancher/rke2/master/install.ps1 -Outfile install.ps1

This script will download the rke2.exe Windows binary onto your machine.

Configure the rke2-agent for Windows.

New-Item -Type Directory c:/etc/rancher/rke2 -Force
Set-Content -Path c:/etc/rancher/rke2/config.yaml -Value @"
server: https://<server>:9345
token: <token from server node>
"@

To learn more about the config.yaml file, refer to the Configuration Options documentation.

Configure the PATH.

$env:PATH+=";c:\var\lib\rancher\rke2\bin;c:\usr\local\bin"

[Environment]::SetEnvironmentVariable(
  "Path",
  [Environment]::GetEnvironmentVariable("Path", [EnvironmentVariableTarget]::Machine) + ";c:\var\lib\rancher\rke2\bin;c:\usr\local\bin",
  [EnvironmentVariableTarget]::Machine)

Run the installer.

./install.ps1

Start the Windows RKE2 Service.

rke2.exe agent service --add

Each machine must have a unique host name.

Don’t forget to start the RKE2 service with:

Start-Service rke2

If you would prefer to use CLI parameters only instead, run the binary with the desired parameters.

rke2.exe agent --token <TOKEN> --server <SERVER_URL>

4 Preparing the cluster for AI Library #

This procedure assumes that you already have the base operating system installed on cluster nodes as well as the SUSE Rancher Prime: RKE2 Kubernetes distribution installed and operational. If you are installing from scratch, refer to Section 3, “Installing the Linux and Kubernetes distribution” first.

Install SUSE Rancher Prime (Section 4.1, “Installing SUSE Rancher Prime on a Kubernetes cluster in air-gapped environments”) on the cluster.

Install the NVIDIA GPU Operator on the cluster as described in Section 4.2, “Installing the NVIDIA GPU Operator on the SUSE Rancher Prime: RKE2 cluster”.

Connect the Kubernetes cluster to SUSE Rancher Prime as described in Section 4.3, “Registering existing clusters”.

Configure the GPU-enabled nodes so that the SUSE AI containers are assigned to Pods that run on nodes equipped with NVIDIA GPU hardware. Find more details about assigning Pods to nodes in Section 4.4, “Assigning GPU nodes to applications”.

(Optional) Install SUSE Security as described in Section 4.5, “Installing SUSE Security”. Although this step is not required, we strongly encourage it to ensure data security in the production environment.

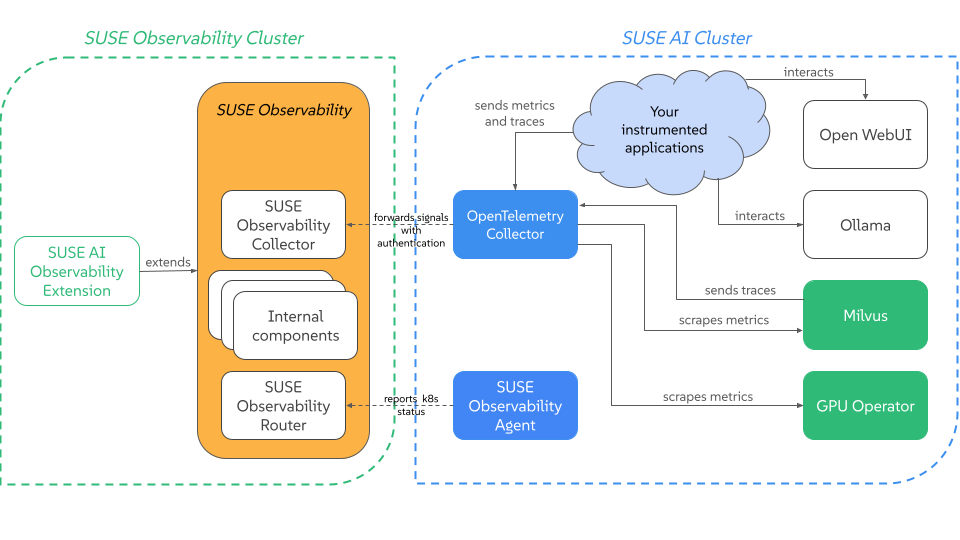

Install and configure SUSE Observability to observe the nodes used for SUSE AI application. Refer to Section 4.6, “Setting up SUSE Observability for SUSE AI” for more details.

4.1 Installing SUSE Rancher Prime on a Kubernetes cluster in air-gapped environments #

This section is about using the Helm CLI to install the Rancher server in an air-gapped environment.

4.1.1 Installation outline #

4.1.2 Set up the infrastructure and a private registry #

In this section, you will provision the underlying infrastructure for your Rancher management server in an air-gapped environment. You will also set up the private container image registry that must be available to your Rancher node(s). The procedures below focus on installing Rancher in the RKE2 cluster. To install the Rancher management server on a high-availability SUSE Rancher Prime: RKE2 cluster, we recommend setting up the following infrastructure:

Three Linux nodes, typically virtual machines, in an infrastructure provider such as Amazon’s EC2, Google Compute Engine or vSphere.

A load balancer to direct front-end traffic to the three nodes.

A DNS record to map a URL to the load balancer. This will become the Rancher server URL, and downstream Kubernetes clusters will need to reach it.

A private image registry to distribute container images to your machines.

These nodes must be in the same region or data center. You may place these servers in separate availability zones.

4.1.2.1 Why three nodes? #

In an RKE2 cluster, the Rancher server data is stored on etcd. This etcd database runs on all three nodes. The etcd database requires an odd number of nodes so that it can always elect a leader with a majority of the etcd cluster. If the etcd database cannot elect a leader, etcd can suffer from split brain, requiring the cluster to be restored from backup. If one of the three etcd nodes fails, the two remaining nodes can elect a leader because they have the majority of the total number of etcd nodes.
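The majority rule behind this recommendation is simple arithmetic: a cluster of n members needs floor(n/2) + 1 members to agree, and can lose the rest. The following sketch prints both values for small clusters. The function names `quorum_size` and `fault_tolerance` are chosen for illustration.

```bash
#!/bin/sh
# Members that must agree to elect a leader in a cluster of $1 members.
quorum_size() {
    echo $(( $1 / 2 + 1 ))
}

# Members that may fail while a majority is still available.
fault_tolerance() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for members in 1 2 3 4 5; do
    echo "members=${members} quorum=$(quorum_size "$members") tolerated_failures=$(fault_tolerance "$members")"
done
```

The output shows that going from three to four members raises the quorum from 2 to 3 without tolerating any additional failure, which is why an even number of members adds no resilience.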

4.1.2.2 Set up Linux nodes #

These hosts will be disconnected from the Internet, but require being able to connect with your private registry. Make sure that your nodes fulfill the general installation requirements for OS, container runtime, hardware and networking. For an example of one way to set up Linux nodes, refer to this tutorial for setting up nodes as instances in Amazon EC2.

4.1.2.3 Set up the load balancer #

You will also need to set up a load balancer to direct traffic to the Rancher replica on each of the three nodes. That will prevent the outage of any single node from taking down communications to the Rancher management server. When Kubernetes gets set up in a later step, the RKE2 tool will deploy an NGINX Ingress controller. This controller will listen on ports 80 and 443 of the worker nodes, answering traffic destined for specific host names. When Rancher is installed (also in a later step), the Rancher system creates an Ingress resource. That Ingress tells the NGINX Ingress controller to listen for traffic destined for the Rancher host name. The NGINX Ingress controller, when receiving traffic destined for the Rancher host name, will forward that traffic to the running Rancher pods in the cluster. For your implementation, consider whether you want or need to use a layer-4 or layer-7 load balancer:

A layer-4 load balancer is the simpler of the two choices, in which you are forwarding TCP traffic to your nodes. We recommend configuring your load balancer as a Layer 4 balancer, forwarding traffic on ports TCP/80 and TCP/443 to the Rancher management cluster nodes. The Ingress controller on the cluster will redirect HTTP traffic to HTTPS and terminate SSL/TLS on port TCP/443. The Ingress controller will forward traffic on port TCP/80 to the Ingress pod in the Rancher deployment.

A layer-7 load balancer is a bit more complicated but can offer features that you may want. For instance, a layer-7 load balancer is capable of handling TLS termination at the load balancer, as opposed to Rancher doing TLS termination itself. This can be beneficial to centralize your TLS termination in your infrastructure. Layer-7 load balancing also allows your load balancer to make decisions based on HTTP attributes such as cookies, which a layer-4 load balancer cannot handle. If you decide to terminate the SSL/TLS traffic on a layer-7 load balancer, you will need to use the --set tls=external option when installing Rancher in a later step. For more information, refer to the Rancher Helm chart options.

For an example showing how to set up an NGINX load balancer, refer to this page. For a how-to guide for setting up an Amazon ELB Network Load Balancer, refer to this page.

Do not use this load balancer (that is, the local cluster Ingress) to load balance applications other than Rancher following installation.

Sharing this Ingress with other applications may result in WebSocket errors to Rancher following Ingress configuration reloads for other apps.

We recommend dedicating the local cluster to Rancher and no other applications.

4.1.2.4 Set up the DNS record #

Once you have set up your load balancer, you will need to create a DNS record to send traffic to this load balancer. Depending on your environment, this may be an A record pointing to the LB IP, or it may be a CNAME pointing to the load balancer host name. In either case, make sure this record matches the host name you want Rancher to respond to. You will need to specify this host name in a later step when you install Rancher, and it is not possible to change it later. Make sure that your decision is final. For a how-to guide for setting up a DNS record to route domain traffic to an Amazon ELB load balancer, refer to the official AWS documentation.

4.1.2.5 Set up a private image registry #

Rancher supports air-gapped installations using a secure private registry. You must have your own private registry or other means of distributing container images to your machines. In a later step, when you set up your RKE2 Kubernetes cluster, you will create a private registries configuration file with details from this registry. If you need to create a private registry, refer to the documentation pages for your respective runtime:

4.1.3 Collect and publish images to your private registry #

This section describes how to set up your private registry so that when you install SUSE Rancher Prime, it will pull all the required images from this registry.

By default, all images used to provision Kubernetes clusters or launch any tools in SUSE Rancher Prime, e.g., monitoring, pipelines or alerts, are pulled from Docker Hub.

In an air-gapped installation of SUSE Rancher Prime, you will need a private registry that is located somewhere accessible by your SUSE Rancher Prime server.

You will then load every image into the registry. Populating the private registry with images is the same process for installing SUSE Rancher Prime with Docker and for installing SUSE Rancher Prime on a Kubernetes cluster.

You must have a private registry available to use.

If the registry has certs, follow this K3s documentation about adding a private registry. The certs and registry configuration files need to be mounted into the SUSE Rancher Prime container.

The following steps populate your private registry.

Find the required assets for your SUSE Rancher Prime version (Section 4.1.3.1, “Find the required assets for your SUSE Rancher Prime version”)

Collect the cert-manager image (Section 4.1.3.2, “Collect the cert-manager image”) (unless you are bringing your own certificates or terminating TLS on a load balancer)

Save the images to your workstation (Section 4.1.3.3, “Save the images to your workstation”)

Populate the private registry (Section 4.1.3.4, “Populate the private registry”)

Prerequisites. These steps expect you to use a Linux workstation that has Internet access, access to your private registry, and at least 20 GB of disk space.

If you use ARM64 hosts, the registry must support manifests. As of April 2020, Amazon Elastic Container Registry does not support manifests.

4.1.3.1 Find the required assets for your SUSE Rancher Prime version #

Go to our releases page, find the SUSE Rancher Prime v2.x.x release that you want to install, and click Assets. Note: Do not use releases marked rc or Pre-release, as they are not stable for production environments.

From the release’s Assets section, download the following files, which are required to install SUSE Rancher Prime in an air-gapped environment:

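| Release File | Description |
|---|---|
| rancher-images.txt | This file contains a list of images needed to install SUSE Rancher Prime, provision clusters and use SUSE Rancher Prime tools. |
| rancher-save-images.sh | This script pulls all the images in the rancher-images.txt list and saves them as rancher-images.tar.gz. |
| rancher-load-images.sh | This script loads images from the rancher-images.tar.gz file and pushes them to your private registry. |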

4.1.3.2 Collect the cert-manager image #

Skip this step if you are using your own certificates, or if you are terminating TLS on an external load balancer.

In a Kubernetes Install, if you elect to use the Rancher default self-signed TLS certificates, you must add the cert-manager image to rancher-images.txt as well.

Fetch the latest cert-manager Helm chart and parse the template for image details:

Note: Recent changes to cert-manager require an upgrade. If you are upgrading SUSE Rancher Prime and using a version of cert-manager older than v0.12.0, please see our upgrade documentation.

helm repo add jetstack https://charts.jetstack.io
helm repo update
helm fetch jetstack/cert-manager
helm template ./cert-manager-version.tgz | \
  awk '$1 ~ /image:/ {print $2}' | sed s/\"//g >> ./rancher-images.txt

Sort the image list and deduplicate it to remove any overlap between the sources:

> sort -u rancher-images.txt -o rancher-images.txt
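To see what the awk and sed stages extract, it helps to run them on a small piece of rendered manifest text. The following sketch applies the same pipeline to a hand-written sample. The helper name `extract_images`, the sample manifest and the `v0.0.0` tag are placeholders for illustration, not output of a real chart.

```bash
#!/bin/sh
# Hypothetical helper: same awk and sed stages as in the step above.
# awk prints the second field of lines whose first field is "image:",
# sed strips double quotes around the image reference.
extract_images() {
    awk '$1 ~ /image:/ {print $2}' | sed 's/"//g'
}

# Hand-written sample; the image tag is a placeholder.
sample_manifest='    spec:
      containers:
        - name: cert-manager-controller
          image: "quay.io/jetstack/cert-manager-controller:v0.0.0"
        - name: second-container
          image: quay.io/jetstack/cert-manager-controller:v0.0.0'

# The quoted and unquoted references collapse into one line after sort -u.
printf '%s\n' "$sample_manifest" | extract_images | LC_ALL=C sort -u
```

Note that the awk pattern only matches lines where `image:` is the first field, so a reference written as `- image: ...` on a single list line would not be picked up by this pipeline.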

4.1.3.3 Save the images to your workstation #

Make rancher-save-images.sh executable:

> chmod +x rancher-save-images.sh

Run rancher-save-images.sh with the rancher-images.txt image list to create a tarball of all the required images:

> ./rancher-save-images.sh --image-list ./rancher-images.txt

Result: Docker begins pulling the images used for an air-gapped install. Be patient, this process takes a few minutes. When it completes, a tarball named rancher-images.tar.gz is created in your current directory. Check that the file is present.

4.1.3.4 Populate the private registry #

Move the images in the rancher-images.tar.gz to your private registry using the scripts to load the images.

Both rancher-images.txt and rancher-images.tar.gz are expected to be on the workstation in the directory from which you run the rancher-load-images.sh script.

(Optional) Log in to your private registry if required:

> docker login REGISTRY.YOURDOMAIN.COM:PORT

Make rancher-load-images.sh executable:

> chmod +x rancher-load-images.sh

Use rancher-load-images.sh to extract, tag and push the images listed in rancher-images.txt and stored in rancher-images.tar.gz to your private registry:

> ./rancher-load-images.sh --image-list ./rancher-images.txt \
  --registry REGISTRY.YOURDOMAIN.COM:PORT
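The "tag and push" part of this step can be pictured as prefixing each image reference with the registry address. The following dry-run sketch only prints the docker commands that such a mapping would produce and does not call Docker. It is not a reproduction of rancher-load-images.sh, whose internal logic may differ; the helper name `print_push_plan` and the sample image reference are assumptions for illustration.

```bash
#!/bin/sh
# Hypothetical dry run: for each image reference on standard input, print
# the tag and push commands that would retarget it to the given registry.
print_push_plan() {
    # $1: registry address in host[:port] form
    while IFS= read -r image; do
        [ -n "$image" ] || continue   # skip empty lines
        echo "docker tag ${image} $1/${image}"
        echo "docker push $1/${image}"
    done
}

# Placeholder image reference and registry address.
printf '%s\n' "rancher/rancher:v2.x.x" | print_push_plan "REGISTRY.YOURDOMAIN.COM:PORT"
```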

4.1.4 Install Kubernetes #

This section describes how to install a Kubernetes cluster according to our best practices for the SUSE Rancher Prime server environment. This cluster should be dedicated to running only the SUSE Rancher Prime server.

Skip this section if you are installing SUSE Rancher Prime on a single node with Docker.

Rancher can be installed on any Kubernetes cluster, including hosted Kubernetes providers.

The steps to set up an air-gapped Kubernetes cluster on RKE2 are shown below.

In this guide, we are assuming you have created your nodes in your air-gapped environment and have a secure Docker private registry on your bastion server.

1. Create RKE2 configuration

Create the config.yaml file at /etc/rancher/rke2/config.yaml. This will contain all the configuration options necessary to create a highly available RKE2 cluster.

On the first server the minimum configuration is:

token: my-shared-secret
tls-san:
  - loadbalancer-dns-domain.com

On each other server the configuration file should contain the same token and tell RKE2 to connect to the existing first server:

server: https://ip-of-first-server:9345
token: my-shared-secret
tls-san:
  - loadbalancer-dns-domain.com

For more information, refer to the RKE2 documentation.

RKE2 additionally provides a resolv-conf option for kubelets, which may help with configuring DNS in air-gap networks.

2. Create Registry YAML

Create the registries.yaml file at /etc/rancher/rke2/registries.yaml. This will tell RKE2 the necessary details to connect to your private registry.

The registries.yaml file should look like this before plugging in the necessary information:

---
mirrors:
  customreg:
    endpoint:
      - "https://ip-to-server:5000"
configs:
  customreg:
    auth:
      username: xxxxxx # this is the registry username
      password: xxxxxx # this is the registry password
    tls:
      cert_file: <path to the cert file used in the registry>
      key_file: <path to the key file used in the registry>
      ca_file: <path to the ca file used in the registry>

For more information on private registries configuration file for RKE2, refer to the RKE2 documentation.
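Because registries.yaml holds registry credentials, it is worth creating it with owner-only permissions. The sketch below renders the file from variables. The function name write_registries_yaml, the demo values and the path /path/to/ca.crt are assumptions made for illustration, and the client certificate entries (cert_file, key_file) are left out for brevity; they can be added in the same way.

```shell
#!/bin/sh
# Sketch: render registries.yaml from variables with restrictive permissions.
# write_registries_yaml is a hypothetical helper; all values are placeholders.

write_registries_yaml() {
    target=$1        # normally /etc/rancher/rke2/registries.yaml
    endpoint=$2      # for example https://ip-to-server:5000
    username=$3      # registry username
    password=$4      # registry password
    ca_file=$5       # path to the CA file used by the registry

    mkdir -p "$(dirname "$target")" || return 1
    # Create the file and restrict it before any credentials are written.
    : > "$target" && chmod 600 "$target" || return 1
    cat > "$target" <<EOF
---
mirrors:
  customreg:
    endpoint:
      - "$endpoint"
configs:
  customreg:
    auth:
      username: $username
      password: $password
    tls:
      ca_file: $ca_file
EOF
}

# Demo run against a scratch directory.
demo_file=$(mktemp -d)/registries.yaml
write_registries_yaml "$demo_file" "https://ip-to-server:5000" xxxxxx xxxxxx /path/to/ca.crt
cat "$demo_file"
```

Passwords containing YAML special characters would need quoting, which this sketch does not handle.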

3. Install RKE2 #

Rancher needs to be installed on a supported Kubernetes version. To find out which versions of Kubernetes are supported for your Rancher version, refer to the SUSE Rancher Support Matrix.

Download the install script, rke2, rke2-images, and sha256sum archives from the release and upload them into a directory on each server:

mkdir /tmp/rke2-artifacts && cd /tmp/rke2-artifacts/
wget https://github.com/rancher/rke2/releases/download/v1.21.5%2Brke2r2/rke2-images.linux-amd64.tar.zst
wget https://github.com/rancher/rke2/releases/download/v1.21.5%2Brke2r2/rke2.linux-amd64.tar.gz
wget https://github.com/rancher/rke2/releases/download/v1.21.5%2Brke2r2/sha256sum-amd64.txt
curl -sfL https://get.rke2.io --output install.sh
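Before copying the artifacts into the air-gapped network, it can help to check them against the downloaded checksum list. The following sketch assumes that sha256sum-amd64.txt uses the standard "hash, two spaces, file name" layout and that GNU coreutils is available; the function name verify_artifacts is hypothetical.

```shell
#!/bin/sh
# Sketch: verify downloaded RKE2 artifacts against the checksum list.
# verify_artifacts is a hypothetical helper name.

verify_artifacts() {
    artifacts_dir=$1
    checksum_file=${2:-sha256sum-amd64.txt}
    # --ignore-missing (GNU coreutils) skips checksum entries for files that
    # were not downloaded; the command still fails if no file could be
    # verified or if any present file does not match.
    ( cd "$artifacts_dir" && sha256sum --check --ignore-missing "$checksum_file" )
}

# Example call, to be run where the artifacts were downloaded:
#   verify_artifacts /tmp/rke2-artifacts
```

A non-zero exit status means at least one archive is corrupt or incomplete and should be downloaded again before the transfer.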

Next, run install.sh using the directory on each server, as in the example below:

INSTALL_RKE2_ARTIFACT_PATH=/tmp/rke2-artifacts sh install.sh

Then enable and start the service on all servers:

systemctl enable rke2-server.service
systemctl start rke2-server.service

For more information, refer to the RKE2 documentation.

4. Save and Start Using the kubeconfig File #

When you installed RKE2 on each Rancher server node, a kubeconfig file was created on the node at /etc/rancher/rke2/rke2.yaml. This file contains credentials for full access to the cluster, and you should save this file in a secure location.

To use this kubeconfig file:

Install kubectl, a Kubernetes command-line tool.

Copy the file at /etc/rancher/rke2/rke2.yaml and save it to the directory ~/.kube/config on your local machine.

In the kubeconfig file, the server directive is defined as localhost. Configure the server as the DNS of your load balancer, referring to port 6443. (The Kubernetes API server will be reached at port 6443, while the Rancher server will be reached at ports 80 and 443.) Here is an example rke2.yaml:

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: [CERTIFICATE-DATA]
    server: [LOAD-BALANCER-DNS]:6443 # Edit this line
  name: default
contexts:
- context:
    cluster: default
    user: default
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: default
  user:
    password: [PASSWORD]
    username: admin

Result: You can now use kubectl to manage your RKE2 cluster. If you have more than one kubeconfig file, you can specify which one you want to use by passing in the path to the file when using kubectl:

kubectl --kubeconfig ~/.kube/config/rke2.yaml get pods --all-namespaces

For more information about the kubeconfig file, refer to the RKE2 documentation or the official Kubernetes documentation about organizing cluster access using kubeconfig files.
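The copy-and-edit step for the kubeconfig file can also be scripted. This is a minimal sketch under the assumption that the server value in the generated file is an https URL with an explicit port; the function name prepare_kubeconfig and the host name in the usage comment are hypothetical.

```shell
#!/bin/sh
# Sketch: copy an RKE2 kubeconfig and point its server line at the load balancer.
# prepare_kubeconfig is a hypothetical helper name.

prepare_kubeconfig() {
    source_file=$1     # copy of /etc/rancher/rke2/rke2.yaml
    target_file=$2     # for example ~/.kube/config/rke2.yaml
    lb_dns=$3          # DNS name of the load balancer

    mkdir -p "$(dirname "$target_file")" || return 1
    # Replace only the host part of the "server:" line; scheme and port stay.
    sed -E "s#^([[:space:]]*server:[[:space:]]*https://)[^:/]+#\\1${lb_dns}#" \
        "$source_file" > "$target_file" || return 1
    # The file grants full cluster access, so keep it readable by the owner only.
    chmod 600 "$target_file"
}

# Example call with a hypothetical load balancer name:
#   prepare_kubeconfig ./rke2.yaml "$HOME/.kube/config/rke2.yaml" rancher-lb.example.com
```

The original file on the server node is left untouched; only the local copy is rewritten.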

Note on Upgrading #

Upgrading an air-gap environment can be accomplished in the following manner:

Download the new air-gap artifacts and install script from the releases page for the version of RKE2 you will be upgrading to.

Run the script again just as you had done in the past with the same environment variables.

Restart the RKE2 service.
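The upgrade steps above can be collected in one function. The sketch below is illustrative only: DRY_RUN is a hypothetical switch introduced for this example, and with its default value of 1 the commands are printed instead of executed. It assumes that the new artifacts and install.sh were already copied to the given directory.

```shell
#!/bin/sh
# Sketch: re-run the installer with the same environment variable, then
# restart the service. DRY_RUN is a hypothetical switch for this example.

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"        # only show what would be executed
    else
        "$@"
    fi
}

upgrade_rke2_server() {
    artifacts_dir=$1   # directory holding the new artifacts and install.sh
    # Run the install script again, as during the initial installation.
    run env INSTALL_RKE2_ARTIFACT_PATH="$artifacts_dir" sh "$artifacts_dir/install.sh" || return 1
    # Restart the service so that the node runs the new version.
    run systemctl restart rke2-server.service
}

upgrade_rke2_server /tmp/rke2-artifacts
```

Setting DRY_RUN=0 runs the commands for real; doing this one server at a time keeps the cluster available during the upgrade.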

4.1.5 Install SUSE Rancher Prime #

This section describes how to deploy SUSE Rancher Prime in an air-gapped environment as a high-availability Kubernetes installation. An air-gapped environment can be one where the SUSE Rancher Prime server is installed offline, behind a firewall, or behind a proxy.

4.1.5.1 Privileged Access for SUSE Rancher Prime #

When the SUSE Rancher Prime server is deployed in a Docker container, a local Kubernetes cluster is installed within the container for SUSE Rancher Prime to use. Because many features of SUSE Rancher Prime run as deployments, and privileged mode is required to run containers within containers, you need to install SUSE Rancher Prime with the --privileged option.

4.1.5.2 Docker Instructions #

If you want to continue the air-gapped installation using Docker commands, skip the rest of this page and follow the instructions on this page.

4.1.5.3 Kubernetes Instructions #

We recommend installing SUSE Rancher Prime on a Kubernetes cluster. A highly available Kubernetes installation consists of three nodes running the SUSE Rancher Prime server components on a Kubernetes cluster. The persistence layer (etcd) is also replicated on these three nodes, providing redundancy and data duplication in case one of the nodes fails.

4.1.5.3.1 1. Add the Helm Chart Repository #

From a system that has access to the internet, fetch the latest Helm chart and copy the resulting manifests to a system that has access to the SUSE Rancher Prime server cluster.

If you haven't already, install helm locally on a workstation that has internet access. Note: Refer to the Helm version requirements to choose a version of Helm to install SUSE Rancher Prime.

Use the helm repo add command to add the Helm chart repository that contains charts to install SUSE Rancher Prime.

helm repo add rancher-prime <helm-chart-repo-url>

Fetch the SUSE Rancher Prime chart. This will pull down the chart and save it in the current directory as a .tgz file.

To fetch the latest version:

helm fetch rancher-prime/rancher

To fetch a specific version:

Check to see which versions of SUSE Rancher Prime are available.

helm search repo --versions rancher-prime

Fetch a specific version by specifying the --version parameter:

helm fetch rancher-prime/rancher --version=<version>

4.1.5.3.2 2. Choose your SSL Configuration #

The SUSE Rancher Prime server is designed to be secure by default and requires SSL/TLS configuration.

When SUSE Rancher Prime is installed on an air gapped Kubernetes cluster, there are two recommended options for the source of the certificate.

| Configuration | Chart option | Description | Requires cert-manager |
|---|---|---|---|
| Rancher Generated Self-Signed Certificates | | Use certificates issued by Rancher's generated CA (self-signed) | yes |
| Certificates from Files | | Use your own certificate files by creating Kubernetes Secret(s). | no |

4.1.5.3.3 Helm Chart Options for Air Gap Installations #

When setting up the Rancher Helm template, there are several options in the Helm chart that are designed specifically for air gap installations.

| Chart Option | Chart Value | Description |
|---|---|---|
| `certmanager.version` | `<CERTMANAGER_VERSION>` | Configure the proper SUSE Rancher Prime TLS issuer depending on the running cert-manager version. |
| `systemDefaultRegistry` | `<REGISTRY.YOURDOMAIN.COM:PORT>` | Configure SUSE Rancher Prime server to always pull from your private registry when provisioning clusters. |
| `useBundledSystemChart` | `true` | Configure SUSE Rancher Prime server to use the packaged copy of Helm system charts. The system charts repository contains all the catalog items required for features such as monitoring, logging, alerting and global DNS. These Helm charts are located in GitHub, but since you are in an air-gapped environment, using the charts that are bundled within SUSE Rancher Prime is much easier than setting up a Git mirror. |

4.1.5.3.4 3. Fetch the Cert-Manager Chart #

Based on the choice you made in Section 4.1.5.3.2, “2. Choose your SSL Configuration”, complete one of the procedures below.

4.1.5.3.4.1 Option A: Default Self-Signed Certificate #

By default, SUSE Rancher Prime generates a CA and uses cert-manager to issue the certificate for access to the SUSE Rancher Prime server interface.

Recent changes to cert-manager require an upgrade. If you are upgrading SUSE Rancher Prime and using a version of cert-manager older than v0.11.0, please see our upgrade cert-manager documentation.

4.1.5.3.4.1.1 1. Add the cert-manager Repo #

From a system connected to the internet, add the cert-manager repo to Helm:

helm repo add jetstack https://charts.jetstack.io

helm repo update

4.1.5.3.4.1.2 2. Fetch the cert-manager Chart #

Fetch the latest cert-manager chart available from the Helm chart repository.

helm fetch jetstack/cert-manager --version v1.11.0

4.1.5.3.4.1.3 3. Retrieve the cert-manager CRDs #

Download the required CRD file for cert-manager:

curl -L -o cert-manager-crd.yaml https://github.com/cert-manager/cert-manager/releases/download/v1.11.0/cert-manager.crds.yaml

4.1.5.3.5 4. Install SUSE Rancher Prime #

Copy the fetched charts to a system that has access to the SUSE Rancher Prime server cluster to complete installation.

4.1.5.3.5.1 1. Install cert-manager #

Install cert-manager with the same options you would use to install the chart. Remember to set the image.repository option to pull the image from your private registry.

To see options on how to customize the cert-manager install (including for cases where your cluster uses PodSecurityPolicies), see the cert-manager docs.

If you are using self-signed certificates, install cert-manager:

Create the namespace for cert-manager.

kubectl create namespace cert-manager

Create the cert-manager CustomResourceDefinitions (CRDs).

kubectl apply -f cert-manager-crd.yaml

Install cert-manager.

helm install cert-manager ./cert-manager-v1.11.0.tgz \
  --namespace cert-manager \
  --set image.repository=<REGISTRY.YOURDOMAIN.COM:PORT>/quay.io/jetstack/cert-manager-controller \
  --set webhook.image.repository=<REGISTRY.YOURDOMAIN.COM:PORT>/quay.io/jetstack/cert-manager-webhook \
  --set cainjector.image.repository=<REGISTRY.YOURDOMAIN.COM:PORT>/quay.io/jetstack/cert-manager-cainjector \
  --set startupapicheck.image.repository=<REGISTRY.YOURDOMAIN.COM:PORT>/quay.io/jetstack/cert-manager-ctl
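A missed image.repository override usually shows up only later as a failed image pull. One way to catch it earlier is to render the chart locally and list every image reference that does not start with the private registry prefix. The sketch below is an illustration under assumptions: the function name images_outside_registry is hypothetical, and it only inspects "image:" keys in the rendered YAML, so images passed as container arguments are not detected.

```shell
#!/bin/sh
# Sketch: list image references in a rendered manifest (read from stdin)
# that do not start with the private registry prefix.
# images_outside_registry is a hypothetical helper name.

images_outside_registry() {
    registry=$1
    # Take the value of every "image:" key, strip quotes, deduplicate,
    # then keep only references that do not begin with "<registry>/".
    sed -n -E 's/^[[:space:]]*(-[[:space:]]*)?image:[[:space:]]*//p' \
        | tr -d "\"'" \
        | sort -u \
        | awk -v prefix="$registry/" 'index($0, prefix) != 1'
}

# Example call (requires helm and the fetched chart archive):
#   helm template cert-manager ./cert-manager-v1.11.0.tgz \
#       --namespace cert-manager \
#       --set image.repository=REGISTRY.YOURDOMAIN.COM:PORT/quay.io/jetstack/cert-manager-controller \
#     | images_outside_registry REGISTRY.YOURDOMAIN.COM:PORT
```

Empty output means every detected image points at the private registry; any printed line names an image that would still be pulled from elsewhere.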

4.1.5.3.5.2 2. Install Rancher #

First, refer to Adding TLS Secrets to publish the certificate files so SUSE Rancher Prime and the ingress controller can use them.

Then, create the namespace for SUSE Rancher Prime using kubectl:

kubectl create namespace cattle-system

Next, install SUSE Rancher Prime, declaring your chosen options. Use the reference table below to replace each placeholder. SUSE Rancher Prime needs to be configured to use the private registry in order to provision any SUSE Rancher Prime launched Kubernetes clusters or SUSE Rancher Prime tools.

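| Placeholder | Description |
|---|---|
| `<VERSION>` | The version number of the output tarball. |
| `<RANCHER.YOURDOMAIN.COM>` | The DNS name you pointed at your load balancer. |
| `<REGISTRY.YOURDOMAIN.COM:PORT>` | The DNS name for your private registry. |
| `<CERTMANAGER_VERSION>` | The cert-manager version running on the Kubernetes cluster. |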

helm install rancher ./rancher-<VERSION>.tgz \
  --namespace cattle-system \
  --set hostname=<RANCHER.YOURDOMAIN.COM> \
  --set certmanager.version=<CERTMANAGER_VERSION> \
  --set rancherImage=<REGISTRY.YOURDOMAIN.COM:PORT>/rancher/rancher \
  --set systemDefaultRegistry=<REGISTRY.YOURDOMAIN.COM:PORT> \
  --set useBundledSystemChart=true

The systemDefaultRegistry option sets a default private registry to be used in Rancher, and useBundledSystemChart=true uses the packaged Rancher system charts.

Optional: To install a specific SUSE Rancher Prime version, set the rancherImageTag value, for example: --set rancherImageTag=v2.5.8
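As an alternative to a long list of --set flags, the same options can be kept in a values file and passed to Helm with -f. The following sketch writes such a file. The function name write_rancher_values and the file name rancher-values.yaml are hypothetical, and the keys simply mirror the --set options used in this section.

```shell
#!/bin/sh
# Sketch: write a Helm values file equivalent to the --set options above.
# write_rancher_values is a hypothetical helper name.

write_rancher_values() {
    values_file=$1            # for example ./rancher-values.yaml
    rancher_host=$2           # DNS name pointed at the load balancer
    registry=$3               # private registry as host:port
    certmanager_version=$4    # cert-manager version running on the cluster

    cat > "$values_file" <<EOF
hostname: $rancher_host
certmanager:
  version: "$certmanager_version"
rancherImage: $registry/rancher/rancher
systemDefaultRegistry: $registry
useBundledSystemChart: true
EOF
}

# Example call, followed by the matching install command:
#   write_rancher_values ./rancher-values.yaml RANCHER.YOURDOMAIN.COM REGISTRY.YOURDOMAIN.COM:PORT CERTMANAGER_VERSION
#   helm install rancher ./rancher-VERSION.tgz --namespace cattle-system -f ./rancher-values.yaml
```

Keeping the file under version control makes it easier to repeat the installation or to review what changed between upgrades.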

4.1.5.3.5.3 Option B: Certificates From Files Using Kubernetes Secrets #

4.1.5.3.5.3.1 1. Create Secrets #

Create Kubernetes secrets from your own certificates for SUSE Rancher Prime to use. The common name of the certificate must match the hostname option in the command below, or the ingress controller will fail to provision the site for SUSE Rancher Prime.

4.1.5.3.5.3.2 2. Install Rancher #

Install SUSE Rancher Prime, declaring your chosen options. Use the reference table below to replace each placeholder. SUSE Rancher Prime needs to be configured to use the private registry in order to provision any SUSE Rancher Prime launched Kubernetes clusters or SUSE Rancher Prime tools.

| Placeholder | Description |
|---|---|
| `<VERSION>` | The version number of the output tarball. |
| `<RANCHER.YOURDOMAIN.COM>` | The DNS name you pointed at your load balancer. |
| `<REGISTRY.YOURDOMAIN.COM:PORT>` | The DNS name for your private registry. |

helm install rancher ./rancher-<VERSION>.tgz \

--namespace cattle-system \

--set hostname=<RANCHER.YOURDOMAIN.COM> \

--set rancherImage=<REGISTRY.YOURDOMAIN.COM:PORT>/rancher/rancher \