2 Remote host onboarding with Elemental #

This section documents the "phone home network provisioning" solution as part of SUSE Edge, where we use Elemental to assist with node onboarding. Elemental is a software stack enabling remote host registration and centralized full cloud-native OS management with Kubernetes. In the SUSE Edge stack we use the registration feature of Elemental to enable remote host onboarding into Rancher so that hosts can be integrated into a centralized management platform and from there, deploy and manage Kubernetes clusters along with layered components, applications, and their lifecycle, all from a common place.

This approach can be useful when the devices that you want to control are not on the same network as the management cluster, or do not have an out-of-band management controller onboard to allow more direct control, and when you are booting many different "unknown" systems at the edge and need to securely onboard and manage them at scale. This is a common scenario in retail, industrial IoT, or other spaces where you have little control over the network your devices are being installed in.

2.1 High-level architecture #

2.2 Resources needed #

The following describes the minimum system and environmental requirements to run through this quickstart:

A host for the centralized management cluster (the one hosting Rancher and Elemental):

Minimum 8 GB RAM and 20 GB disk space for development or testing (see here for production use)

A target node to be provisioned, i.e. the edge device (a virtual machine can be used for demoing or testing purposes)

Minimum 4 GB RAM, 2 CPU cores, and 20 GB disk

A resolvable host name for the management cluster or a static IP address to use with a service like sslip.io

A host to build the installation media via Edge Image Builder

A USB flash drive to boot from (if using physical hardware)

A downloaded copy of the latest SUSE Linux Micro 6.1 SelfInstall ISO image found here.

Existing data found on target machines will be overwritten as part of the process. Make sure you back up any data on any USB storage devices and disks attached to target deployment nodes.

This guide was created using a DigitalOcean droplet to host the upstream cluster and an Intel NUC as the downstream device. For building the installation media, SUSE Linux Enterprise Server is used.

2.3 Build bootstrap cluster #

Start by creating a cluster capable of hosting Rancher and Elemental. This cluster needs to be routable from the network that the downstream nodes are connected to.

2.3.1 Create Kubernetes cluster #

If you are using a hyperscaler (such as Azure, AWS or Google Cloud), the easiest way to set up a cluster is using their built-in tools. For the sake of conciseness in this guide, we do not detail the process of each of these options.

If you are installing onto bare-metal or another hosting service where you need to also provide the Kubernetes distribution itself, we recommend using RKE2.

2.3.2 Set up DNS #

Before continuing, you need to set up access to your cluster. As with the setup of the cluster itself, how you configure DNS will be different depending on where it is being hosted.

If you do not want to handle setting up DNS records (for example, this is just an ephemeral test server), you can use a service like sslip.io instead. With this service, you can resolve any IP address with <address>.sslip.io.
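As a small illustration of the sslip.io naming scheme described above, the following sketch builds a host name from an IP address. The helper name `sslip_host` is hypothetical and `203.0.113.10` is a placeholder address from the documentation range; substitute the public IP address of your own cluster.

```shell
# Build an sslip.io host name from an IPv4 address.
# sslip_host is a hypothetical helper; 203.0.113.10 is a placeholder address.
sslip_host() {
  printf '%s.sslip.io\n' "$1"
}

RANCHER_HOST=$(sslip_host 203.0.113.10)
echo "$RANCHER_HOST"
```

The resulting name can then be used wherever this guide asks for the DNS name of the management cluster.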

2.4 Install Rancher #

To install Rancher, you need to get access to the Kubernetes API of the cluster you just created. This looks different depending on what distribution of Kubernetes is being used.

For RKE2, the kubeconfig file will have been written to /etc/rancher/rke2/rke2.yaml.

Save this file as ~/.kube/config on your local system.

You may need to edit the file to include the correct externally routable IP address or host name.
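The edit mentioned above can also be scripted. The following is a minimal sketch, assuming the copied kubeconfig contains a single `server: https://<host>:<port>` line (on RKE2 this typically points at 127.0.0.1). The helper name `set_kubeconfig_server` and the host name in the example are hypothetical.

```shell
# Point a kubeconfig copied from the server at an externally reachable host.
# set_kubeconfig_server is a hypothetical helper name.
set_kubeconfig_server() {
  kubeconfig=$1   # path to the kubeconfig file
  host=$2         # external IP address or host name
  tmp=$(mktemp) || return 1
  # Replace only the host part of the "server: https://<host>:<port>" line,
  # keeping the indentation and the port unchanged.
  sed "s#^\( *server: https://\)[^:/]*#\1${host}#" "$kubeconfig" > "$tmp" &&
    mv "$tmp" "$kubeconfig"
}

# Example (placeholder host name):
# set_kubeconfig_server ~/.kube/config 203.0.113.10.sslip.io
```

Writing to a temporary file and moving it into place avoids relying on the non-portable `sed -i` flag.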

Install Rancher easily with the commands from the Rancher Documentation:

Install cert-manager:

helm repo add jetstack https://charts.jetstack.io
helm repo update
helm install cert-manager jetstack/cert-manager \
  --namespace cert-manager \
  --create-namespace \
  --set crds.enabled=true

Then install Rancher itself:

helm repo add rancher-prime https://charts.rancher.com/server-charts/prime
helm repo update
helm install rancher rancher-prime/rancher \
  --namespace cattle-system \
  --create-namespace \
  --set hostname=<DNS or sslip from above> \
  --set replicas=1 \
  --set bootstrapPassword=<PASSWORD_FOR_RANCHER_ADMIN> \
  --version 2.12.2

If this is intended to be a production system, please use cert-manager to configure a real certificate (such as one from Let’s Encrypt).
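Before opening the Rancher UI, it can help to wait until the deployment has finished rolling out. The sketch below wraps the standard `kubectl rollout status` command. The helper name `wait_for_rancher` is hypothetical, and the default timeout of 10 minutes is an arbitrary choice rather than a recommended value.

```shell
# Wait until the Rancher deployment in cattle-system has finished rolling out.
# wait_for_rancher is a hypothetical helper; the default timeout is arbitrary.
wait_for_rancher() {
  kubectl -n cattle-system rollout status deploy/rancher --timeout="${1:-10m}"
}

# Example: wait_for_rancher 15m
```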

Browse to the host name you set up and log in to Rancher with the bootstrapPassword you used. You will be guided through a short setup process.

2.5 Install Elemental #

With Rancher installed, you can now install the Elemental operator and the required CRDs. The Helm chart for Elemental is published as an OCI artifact, so the installation is a little simpler than for other charts. It can be installed either from the same shell you used to install Rancher or in the browser from within Rancher’s shell.

helm install --create-namespace -n cattle-elemental-system \
  elemental-operator-crds \
  oci://registry.suse.com/rancher/elemental-operator-crds-chart \
  --version 1.7.3

helm install -n cattle-elemental-system \
  elemental-operator \
  oci://registry.suse.com/rancher/elemental-operator-chart \
  --version 1.7.3

2.5.1 (Optionally) Install the Elemental UI extension #

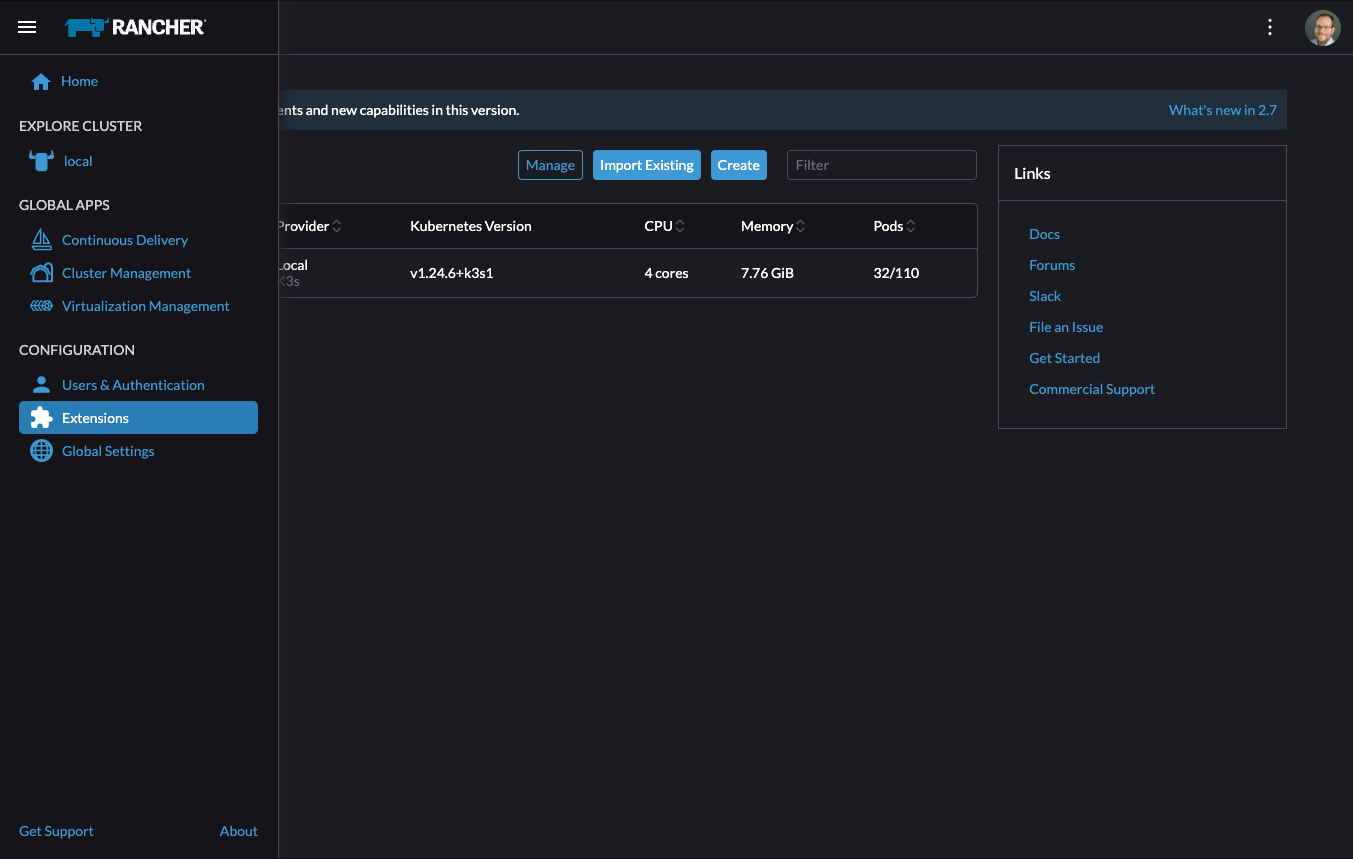

To use the Elemental UI, log in to your Rancher instance and click the three-line menu in the upper left:

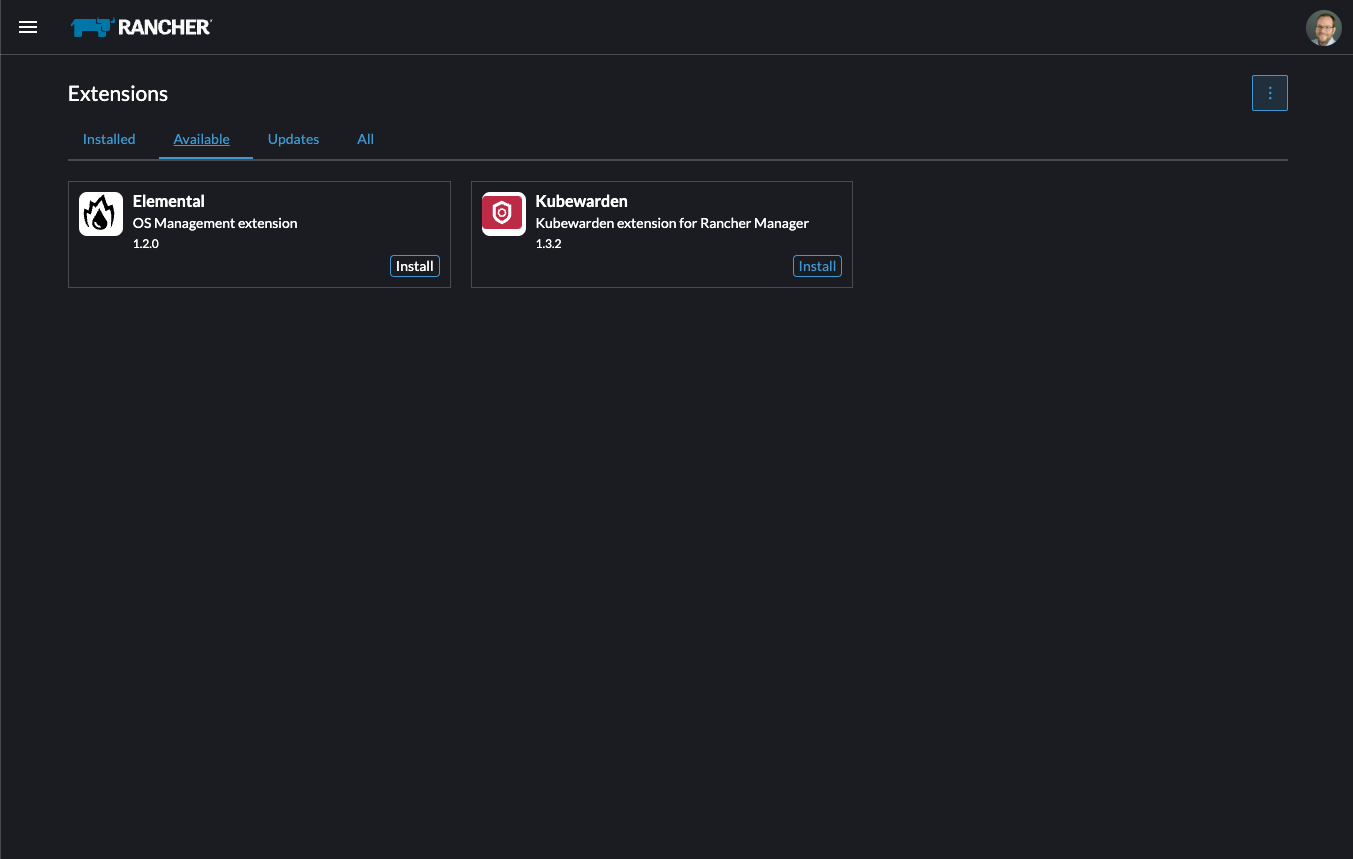

From the "Available" tab on this page, click "Install" on the Elemental card:

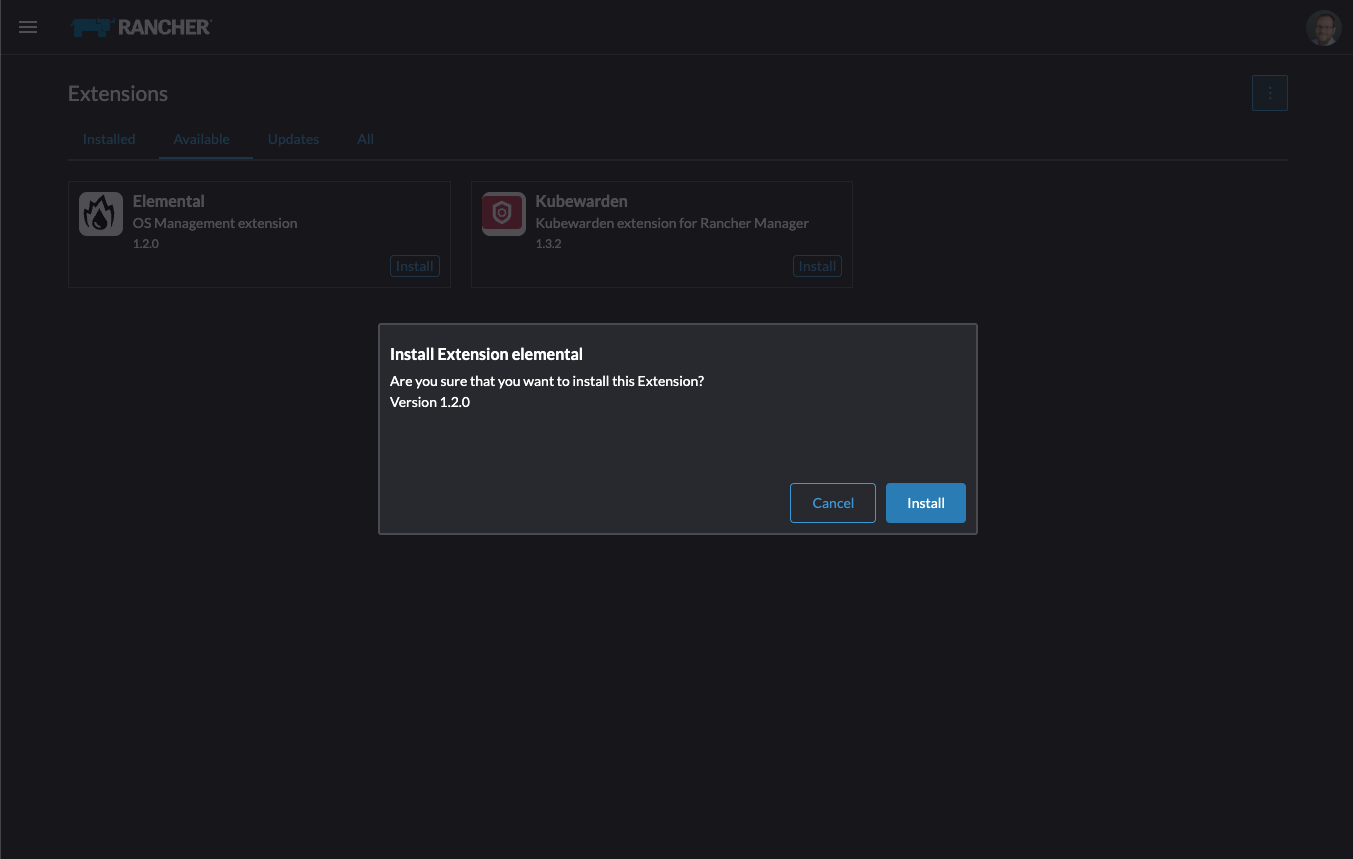

Confirm that you want to install the extension:

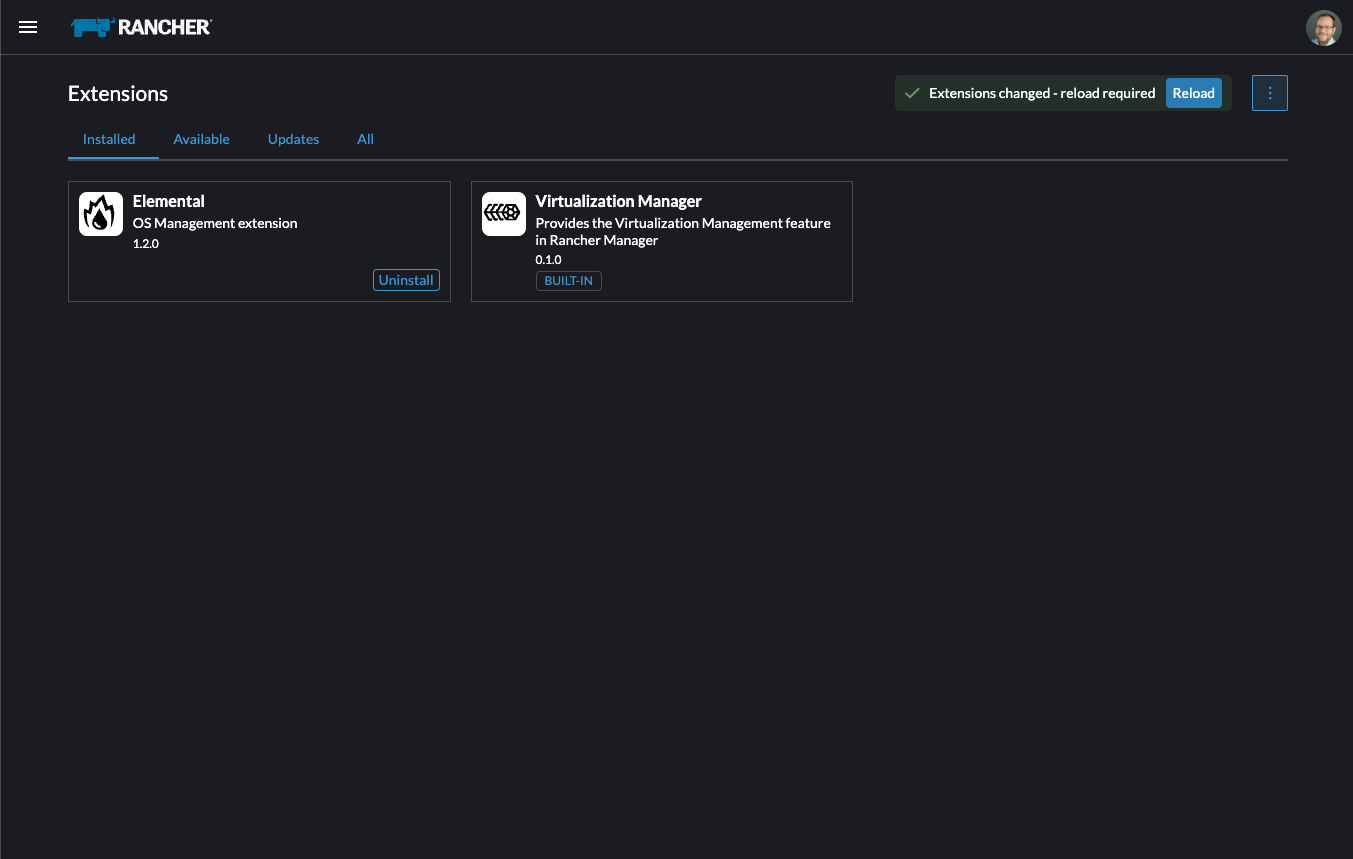

After it installs, you will be prompted to reload the page.

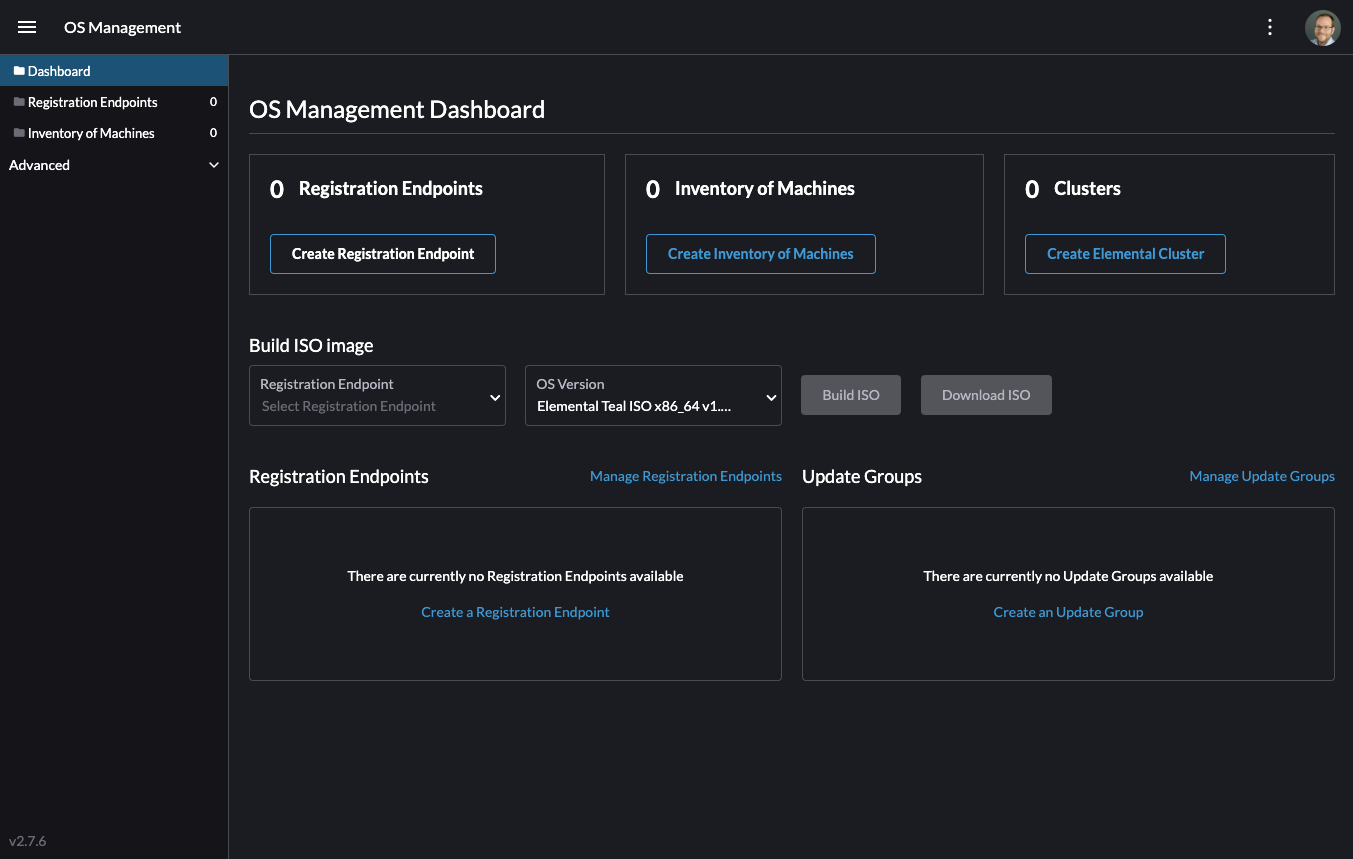

Once you reload, you can access the Elemental extension through the "OS Management" global app.

2.6 Configure Elemental #

For simplicity, we recommend setting the variable $ELEM to the full path of the directory where you want to keep the configuration:

export ELEM=$HOME/elemental
mkdir -p $ELEM

To allow machines to register to Elemental, we need to create a MachineRegistration object in the fleet-default namespace.

Let us create a basic version of this object:

cat << EOF > $ELEM/registration.yaml
apiVersion: elemental.cattle.io/v1beta1
kind: MachineRegistration
metadata:
  name: ele-quickstart-nodes
  namespace: fleet-default
spec:
  machineName: "\${System Information/Manufacturer}-\${System Information/UUID}"
  machineInventoryLabels:
    manufacturer: "\${System Information/Manufacturer}"
    productName: "\${System Information/Product Name}"
EOF

kubectl apply -f $ELEM/registration.yaml

The cat command escapes each $ with a backslash (\) so that Bash does not template them. Remove the backslashes if copying manually.

Once the object is created, find and note the endpoint that gets assigned:

REGISURL=$(kubectl get machineregistration ele-quickstart-nodes -n fleet-default -o jsonpath='{.status.registrationURL}')

Alternatively, this can also be done from the UI.

- UI Extension

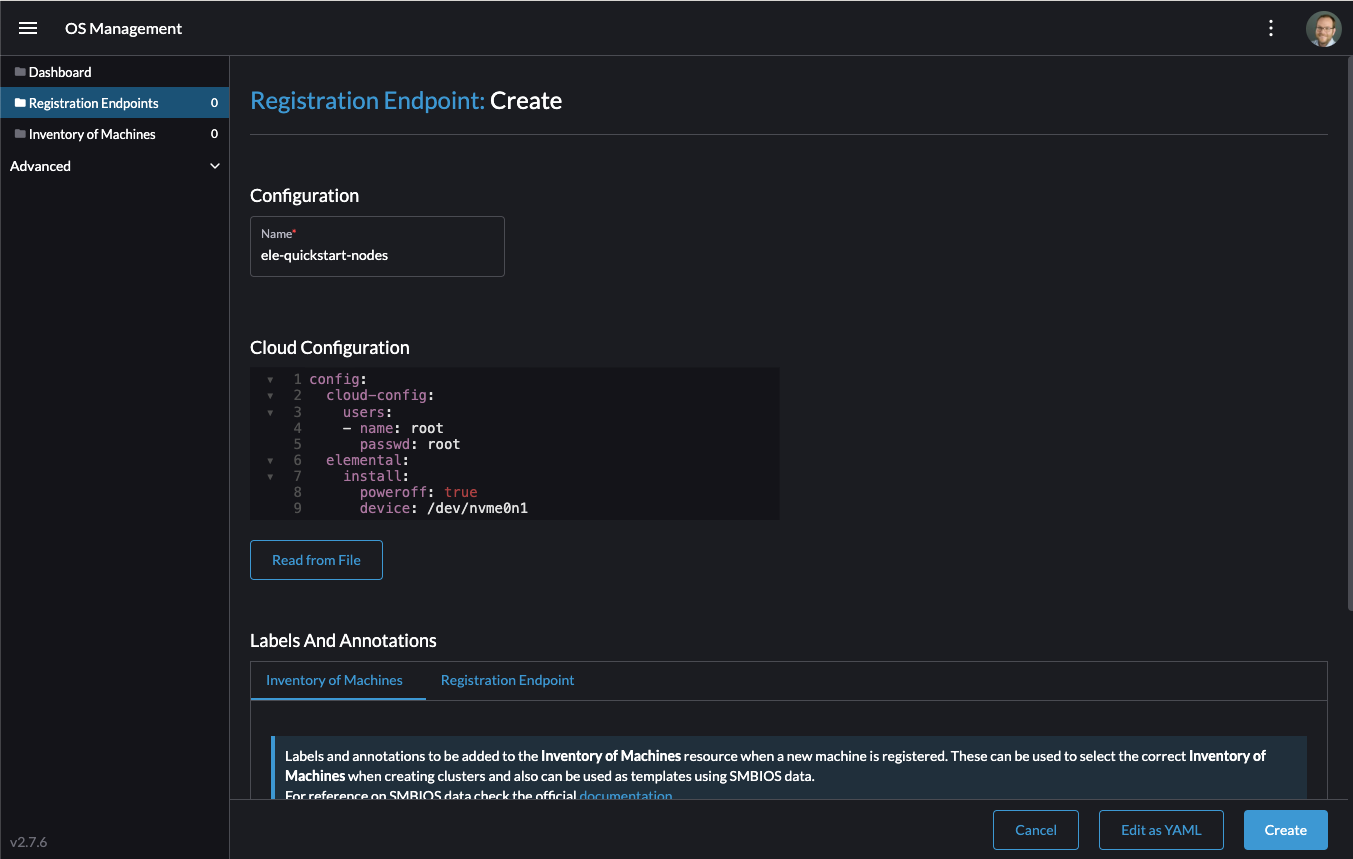

From the OS Management extension, click "Create Registration Endpoint":

Give this configuration a name.

Note: You can ignore the Cloud Configuration field as the data here is overridden by the following steps with Edge Image Builder.

Next, scroll down and click "Add Label" for each label you want to be on the resource that gets created when a machine registers. This is useful for distinguishing machines.

Click "Create" to save the configuration.

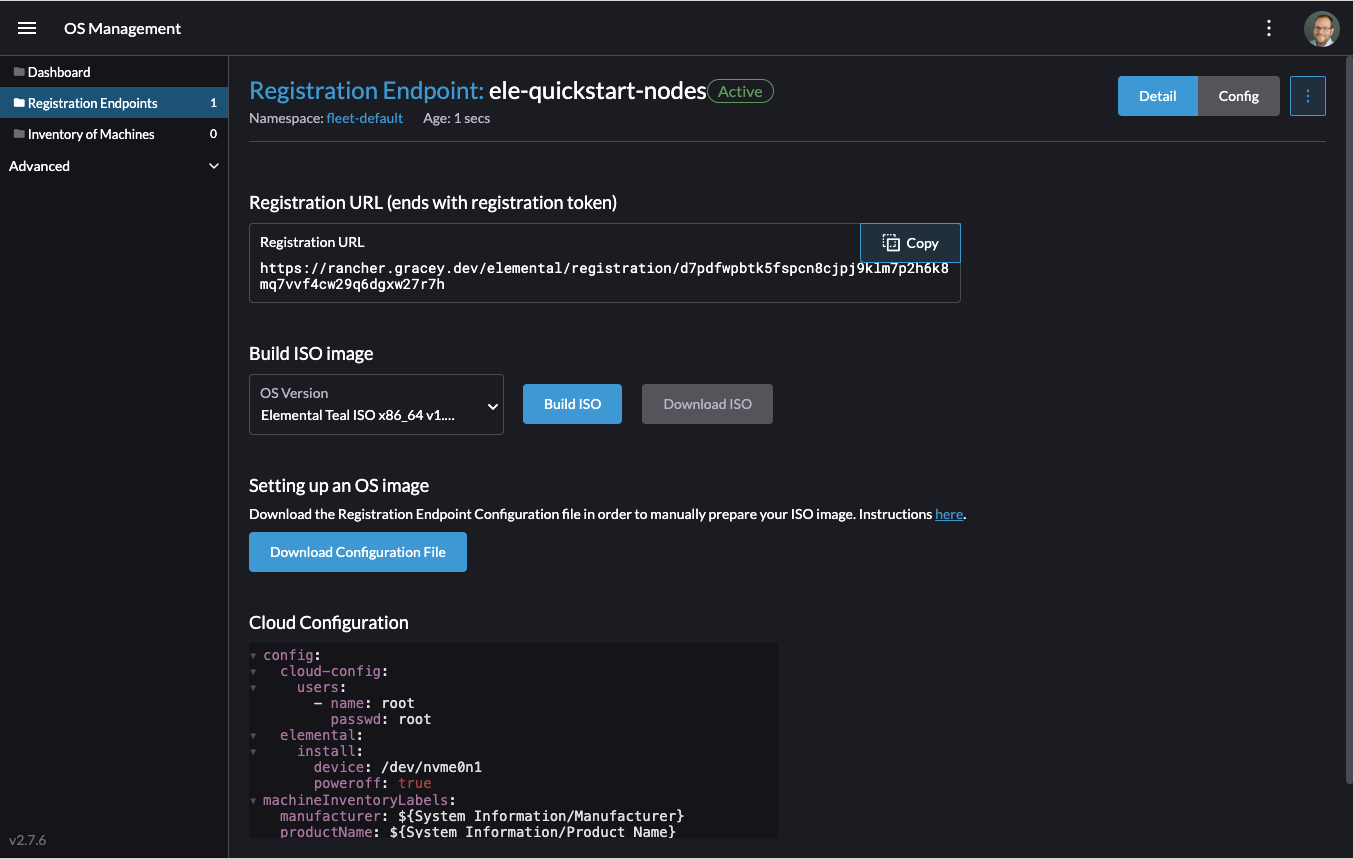

Once the registration is created, you should see the Registration URL listed and can click "Copy" to copy the address:

Tip: If you clicked away from that screen, you can click "Registration Endpoints" in the left menu, then click the name of the endpoint you just created.

This URL is used in the next step.
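If you captured the URL on the command line in $REGISURL, a quick sanity check before building the image can catch an empty or malformed value early. This is a minimal sketch, assuming the registration URL is served over HTTPS by Rancher; the helper name `check_registration_url` is hypothetical.

```shell
# Fail early if the registration URL is empty or does not look like an HTTPS URL.
# check_registration_url is a hypothetical helper name.
check_registration_url() {
  case "$1" in
    https://?*) return 0 ;;
    *) echo "unexpected registration URL: '$1'" >&2; return 1 ;;
  esac
}

# Example: check_registration_url "$REGISURL" || echo "re-run the kubectl query"
```

An empty value usually means the MachineRegistration has not yet been reconciled, in which case querying it again after a short wait is a reasonable first step.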

2.7 Build the image #

While the current version of Elemental has a way to build its own installation media, in SUSE Edge 3.4.1 we do this with Kiwi and Edge Image Builder instead, so the resulting system is built with SUSE Linux Micro as the base operating system.

For more details on Kiwi, follow the Kiwi Image Builder process (Chapter 28, Building Updated SUSE Linux Micro Images with Kiwi) to build fresh images first. For Edge Image Builder, see the Edge Image Builder Getting Started Guide (Chapter 3, Standalone clusters with Edge Image Builder) and the Component Documentation (Chapter 11, Edge Image Builder).

From a Linux system with Podman installed, create the directories and copy in the base image built by Kiwi:

mkdir -p $ELEM/eib_quickstart/base-images
cp /path/to/{micro-base-image-iso} $ELEM/eib_quickstart/base-images/
mkdir -p $ELEM/eib_quickstart/elemental

curl $REGISURL -o $ELEM/eib_quickstart/elemental/elemental_config.yaml

cat << EOF > $ELEM/eib_quickstart/eib-config.yaml
apiVersion: 1.3
image:
  imageType: iso
  arch: x86_64
  baseImage: SL-Micro.x86_64-6.1-Base-SelfInstall-GM.install.iso
  outputImageName: elemental-image.iso
operatingSystem:
  time:
    timezone: Europe/London
    ntp:
      forceWait: true
      pools:
        - 2.suse.pool.ntp.org
      servers:
        - 10.0.0.1
        - 10.0.0.2
  isoConfiguration:
    installDevice: /dev/vda
  users:
    - username: root
      encryptedPassword: \$6\$jHugJNNd3HElGsUZ\$eodjVe4te5ps44SVcWshdfWizrP.xAyd71CVEXazBJ/.v799/WRCBXxfYmunlBO2yp1hm/zb4r8EmnrrNCF.P/
  packages:
    sccRegistrationCode: XXX
EOF

The time section is optional but it is highly recommended to be configured to avoid potential issues with certificates and clock skew. The values provided in this example are for illustrative purposes only. Please adjust them to fit your specific requirements.

The unencoded password is eib.

The sccRegistrationCode is needed to download and install the necessary RPMs from the official sources (alternatively, the elemental-register and elemental-system-agent RPMs can be manually side-loaded instead).

The cat command escapes each $ with a backslash (\) so that Bash does not template them. Remove the backslashes if copying manually.

The installation device will be wiped during the installation.
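To use your own password instead of the example one, you need a hash in the same `$6$` (SHA-512 crypt) format, with each `$` escaped when it is placed inside the unquoted heredoc. The following is a sketch of one way to do that, assuming `openssl` is installed; the helper name `escape_dollars` is hypothetical, and `eib` is just the example password from this guide.

```shell
# Escape every "$" so the hash survives an unquoted heredoc (cat << EOF).
# escape_dollars is a hypothetical helper name.
escape_dollars() {
  printf '%s\n' "$1" | sed 's/\$/\\$/g'
}

# Generate a SHA-512 crypt hash if openssl is available. "eib" is the example
# password from this guide and should be replaced for real use.
if command -v openssl >/dev/null 2>&1; then
  HASH=$(openssl passwd -6 'eib')
  escape_dollars "$HASH"
fi
```

The salt is random, so the printed hash differs on every run and will not match the one shown in the definition file. If you paste the hash into the file by hand rather than through the heredoc, use the unescaped value.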

podman run --privileged --rm -it -v $ELEM/eib_quickstart/:/eib \
  registry.suse.com/edge/3.4/edge-image-builder:1.3.0 \
  build --definition-file eib-config.yaml

If you are booting a physical device, we need to burn the image to a USB flash drive. This can be done with:

sudo dd if=$ELEM/eib_quickstart/elemental-image.iso of=/dev/<PATH_TO_DISK_DEVICE> status=progress

2.8 Boot the downstream nodes #

Now that we have created the installation media, we can boot our downstream nodes with it.

For each of the systems that you want to control with Elemental, add the installation media and boot the device. After installation, it will reboot and register itself.

If you are using the UI extension, you should see your node appear in the "Inventory of Machines."

Do not remove the installation medium until you have seen the login prompt; during first boot, files are still accessed on the USB stick.
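If you are not using the UI extension, registration can also be observed from the command line. The sketch below polls for MachineInventory resources in the fleet-default namespace until at least one appears. The helper name `wait_for_inventory`, the number of attempts, and the delay are all hypothetical choices.

```shell
# Poll until at least one MachineInventory exists in fleet-default.
# wait_for_inventory is a hypothetical helper; attempts and delay are arbitrary.
wait_for_inventory() {
  attempts=${1:-60}   # how many times to check
  delay=${2:-10}      # seconds to sleep between checks
  i=0
  while [ "$i" -lt "$attempts" ]; do
    names=$(kubectl get machineinventory -n fleet-default -o name 2>/dev/null)
    if [ -n "$names" ]; then
      printf '%s\n' "$names"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "no MachineInventory found" >&2
  return 1
}

# Example: wait_for_inventory 30 20
```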

2.9 Create downstream clusters #

There are two objects we need to create when provisioning a new cluster using Elemental.


The first is the MachineInventorySelectorTemplate. This object allows us to specify a mapping between clusters and the machines in the inventory.

Create a selector which will match any machine in the inventory with a label:

cat << EOF > $ELEM/selector.yaml
apiVersion: elemental.cattle.io/v1beta1
kind: MachineInventorySelectorTemplate
metadata:
  name: location-123-selector
  namespace: fleet-default
spec:
  template:
    spec:
      selector:
        matchLabels:
          locationID: '123'
EOF

Apply the resource to the cluster:

kubectl apply -f $ELEM/selector.yaml

Obtain the name of the machine and add the matching label:

MACHINENAME=$(kubectl get MachineInventory -n fleet-default | awk 'NR>1 {print $1}')
kubectl label MachineInventory -n fleet-default \
  $MACHINENAME locationID=123

Create a simple single-node K3s cluster resource and apply it to the cluster:

cat << EOF > $ELEM/cluster.yaml
apiVersion: provisioning.cattle.io/v1
kind: Cluster
metadata:
  name: location-123
  namespace: fleet-default
spec:
  kubernetesVersion: v1.33.5+k3s1
  rkeConfig:
    machinePools:
      - name: pool1
        quantity: 1
        etcdRole: true
        controlPlaneRole: true
        workerRole: true
        machineConfigRef:
          kind: MachineInventorySelectorTemplate
          name: location-123-selector
          apiVersion: elemental.cattle.io/v1beta1
EOF

kubectl apply -f $ELEM/cluster.yaml

After creating these objects, you should see a new Kubernetes cluster spin up on the node you just installed.

2.10 Node Reset (Optional) #

SUSE Rancher Elemental supports a "node reset", which can optionally be triggered when a whole cluster is deleted from Rancher, a single node is deleted from a cluster, or a node is manually deleted from the machine inventory. This is useful when you want to reset and clean up any orphaned resources and automatically bring the cleaned node back into the machine inventory so it can be reused. This is not enabled by default, so any system that is removed will not be cleaned up (that is, data will not be removed, and any Kubernetes cluster resources will continue to operate on the downstream clusters), and manual intervention is required to wipe data and re-register the machine to Rancher via Elemental.

If you wish for this functionality to be enabled by default, you need to make sure that your MachineRegistration explicitly enables this by adding config.elemental.reset.enabled: true, for example:

config:
  elemental:
    registration:
      auth: tpm
    reset:
      enabled: true

Then, all systems registered with this MachineRegistration will automatically receive the elemental.cattle.io/resettable: 'true' annotation in their configuration. If you wish to do this manually on individual nodes, e.g. because you’ve got an existing MachineInventory that doesn’t have this annotation, or you have already deployed nodes, you can modify the MachineInventory and add the resettable configuration, for example:

apiVersion: elemental.cattle.io/v1beta1
kind: MachineInventory
metadata:
  annotations:
    elemental.cattle.io/os.unmanaged: 'true'
    elemental.cattle.io/resettable: 'true'

In SUSE Edge 3.1, the Elemental Operator puts down a marker on the operating system that will trigger the cleanup process automatically; it will stop all Kubernetes services, remove all persistent data, uninstall all Kubernetes services, clean up any remaining Kubernetes/Rancher directories, and force a re-registration to Rancher via the original Elemental MachineRegistration configuration. This happens automatically; there is no need for any manual intervention. The script that gets called can be found in /opt/edge/elemental_node_cleanup.sh and is triggered via systemd.path upon the placement of the marker, so its execution is immediate.

Using the resettable functionality assumes that the desired behavior when removing a node/cluster from Rancher is to wipe data and force a re-registration. Data loss is guaranteed in this situation, so only use this if you’re sure that you want automatic reset to be performed.
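For an existing MachineInventory, the resettable annotation described earlier in this section could also be applied from the command line rather than by editing the resource. This is a minimal sketch using the standard `kubectl annotate` command; the helper name `mark_resettable` is hypothetical, and the same data-loss caveat applies once the annotation is in place.

```shell
# Mark an existing MachineInventory in fleet-default as resettable.
# mark_resettable is a hypothetical helper; pass the inventory name as $1.
mark_resettable() {
  kubectl annotate machineinventory -n fleet-default "$1" \
    elemental.cattle.io/resettable=true --overwrite
}

# Example, reusing the variable set when labeling the machine:
# mark_resettable "$MACHINENAME"
```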

2.11 Next steps #

Here are some recommended resources to research after using this guide:

End-to-end automation in Chapter 8, Fleet

Additional network configuration options in Chapter 12, Edge Networking